
Adaptive Submodularity: A New Approach to Active Learning and Stochastic Optimization

Daniel Golovin and Andreas Krause

California Institute of Technology, Center for the Mathematics of Information

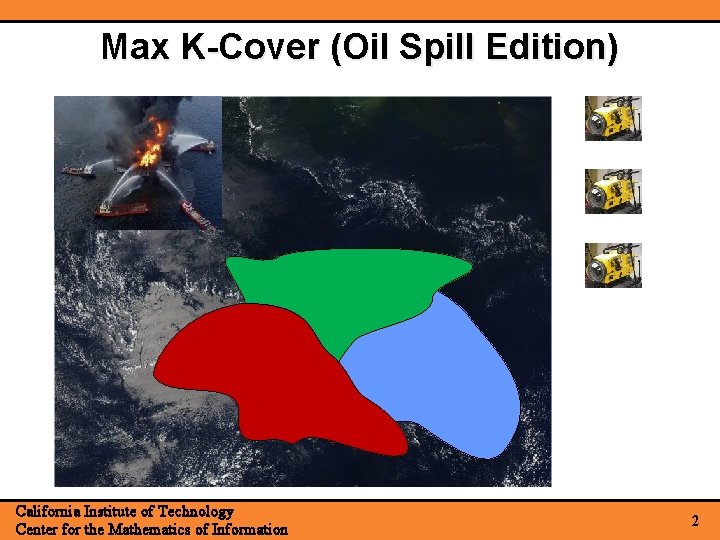

Max K-Cover (Oil Spill Edition)

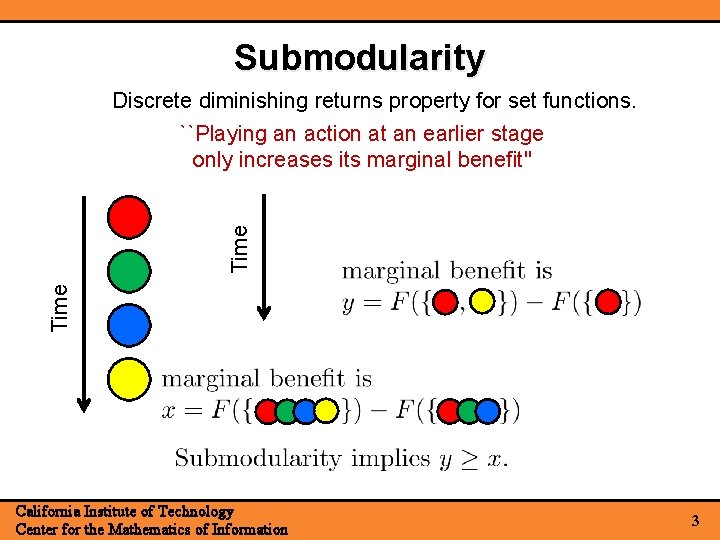

Submodularity

Submodularity is a discrete diminishing-returns property for set functions: "Playing an action at an earlier stage only increases its marginal benefit."
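To make the diminishing-returns property concrete, here is a small sketch on a coverage function, the objective behind Max K-Cover. The instance (`SETS`) and the helper names `coverage`, `marginal`, and `is_submodular` are hypothetical illustrations, not taken from the slides; the check is brute force over all pairs A ⊆ B, so it is only meant for tiny inputs.

```python
from itertools import combinations

# Hypothetical toy instance (not from the slides): each candidate sensor
# location covers a set of grid cells.
SETS = {
    "a": {1, 2, 3},
    "b": {3, 4},
    "c": {4, 5, 6},
    "d": {1, 6},
}

def coverage(selected):
    """f(A): number of distinct cells covered by the sets named in A."""
    return len(set().union(*(SETS[name] for name in selected)))

def marginal(selected, e):
    """Marginal benefit f(A ∪ {e}) - f(A)."""
    return coverage(set(selected) | {e}) - coverage(selected)

def is_submodular(ground):
    """Brute-force check that marginal(A, e) >= marginal(B, e)
    for all A ⊆ B and every e not in B (exponential: tiny inputs only)."""
    items = list(ground)
    subsets = [set(c) for r in range(len(items) + 1) for c in combinations(items, r)]
    return all(
        marginal(A, e) >= marginal(B, e)
        for B in subsets
        for A in subsets
        if A <= B
        for e in items
        if e not in B
    )

print(is_submodular(SETS))  # True: coverage functions are submodular
print(marginal(set(), "b"), marginal({"a"}, "b"), marginal({"a", "c"}, "b"))
# 2 1 0: the gain of "b" shrinks as the base set grows
```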

![The Greedy Algorithm Theorem (Nemhauser et al. '78)](http://slidetodoc.com/presentation_image_h2/88056d7ce6c31f3d58e715b0477527ee/image-4.jpg)

The Greedy Algorithm Theorem [Nemhauser et al. '78]
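The classical statement is that, for a monotone submodular f, the greedy algorithm run for k steps returns a set A_k with f(A_k) ≥ (1 - 1/e) · max over |A| ≤ k of f(A). A minimal sketch of that greedy rule for Max K-Cover, on a hypothetical three-set instance (not from the slides) where greedy is strictly suboptimal yet still within the bound; `greedy_max_cover` and `optimum` are illustrative names.

```python
import math
from itertools import combinations

# Hypothetical toy instance (not from the slides), chosen so greedy is suboptimal.
SETS = {
    "a": {1, 2, 3, 4},
    "b": {1, 2, 5},
    "c": {3, 4, 6},
}

def coverage(selected):
    """Number of distinct elements covered by the named sets."""
    return len(set().union(*(SETS[s] for s in selected)))

def greedy_max_cover(k):
    """Pick k sets, each time adding the one with the largest marginal gain."""
    chosen = []
    for _ in range(k):
        best = max(
            (s for s in SETS if s not in chosen),
            key=lambda s: coverage(chosen + [s]) - coverage(chosen),
        )
        chosen.append(best)
    return chosen

def optimum(k):
    """Brute-force optimum over all k-subsets (tiny instances only)."""
    return max(coverage(combo) for combo in combinations(SETS, k))

k = 2
greedy_value = coverage(greedy_max_cover(k))
best_value = optimum(k)
print(greedy_value, best_value, greedy_value >= (1 - 1 / math.e) * best_value)
# 5 6 True: greedy takes "a" first and then cannot reach the optimum {"b", "c"}
```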

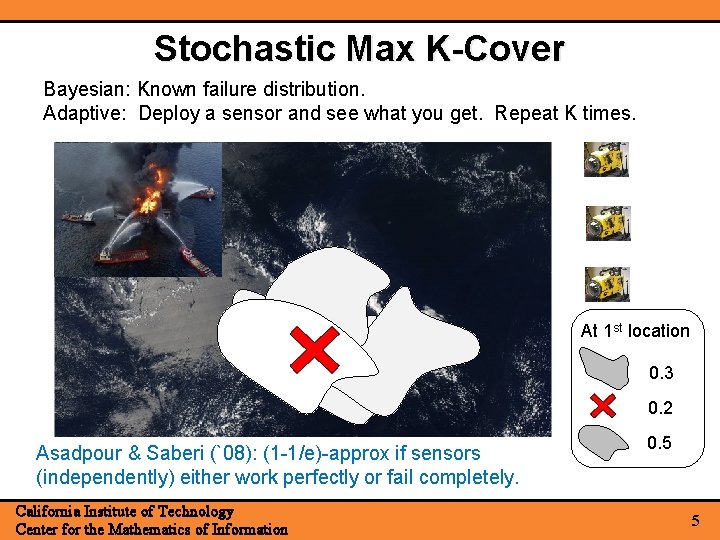

Stochastic Max K-Cover

Bayesian: the failure distribution is known. Adaptive: deploy a sensor and see what you get; repeat K times.

(Figure: possible outcomes at the 1st location, with probabilities 0.3, 0.2, and 0.5.)

Asadpour & Saberi ('08): a (1 - 1/e)-approximation if sensors (independently) either work perfectly or fail completely.

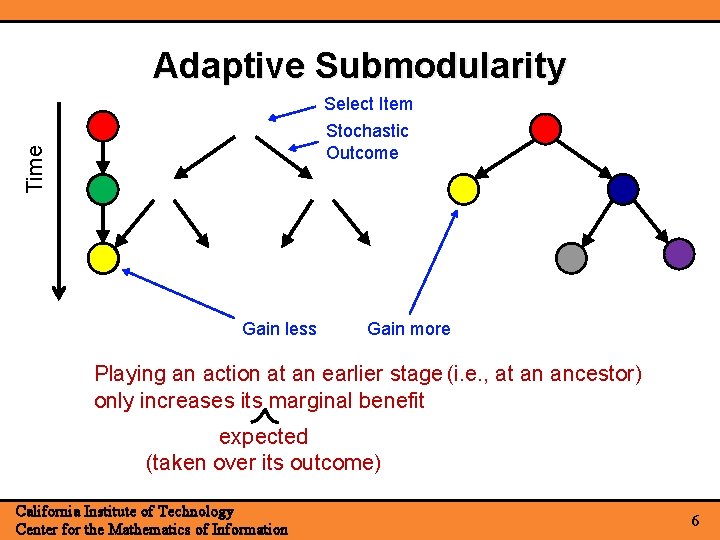

Adaptive Submodularity

(Figure: a decision tree unrolled over time; at each node an item is selected and its stochastic outcome determines the branch taken. The same item gains more when played at an ancestor and less when played at a descendant.)

Playing an action at an earlier stage (i.e., at an ancestor) only increases its expected marginal benefit, where the expectation is taken over the action's outcome.
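One way to write this down formally, in notation we assume here rather than recover from the slide: E is the set of items, the random realization Φ maps each item to its outcome, a partial realization ψ records the outcomes observed so far, dom(ψ) is the set of items already played, and Φ ∼ ψ means Φ agrees with ψ on dom(ψ). The first line defines the conditional expected marginal benefit Δ(e | ψ); the second is the adaptive submodularity condition, where ψ ⊆ ψ' says that ψ' extends ψ (a descendant in the tree).

```latex
\begin{aligned}
\Delta(e \mid \psi) &= \mathbb{E}\big[\, f(\mathrm{dom}(\psi) \cup \{e\}, \Phi) - f(\mathrm{dom}(\psi), \Phi) \,\big|\, \Phi \sim \psi \,\big], \\
\Delta(e \mid \psi) &\ge \Delta(e \mid \psi') \quad \text{whenever } \psi \subseteq \psi' \text{ and } e \in E \setminus \mathrm{dom}(\psi').
\end{aligned}
```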

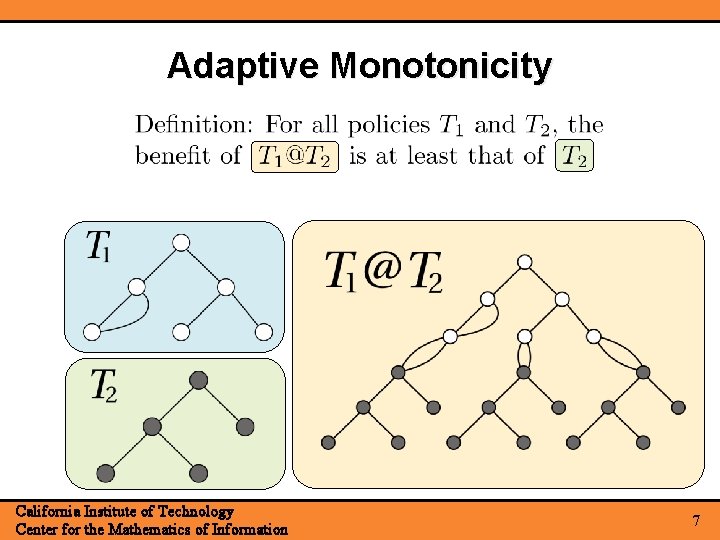

Adaptive Monotonicity
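A hedged formal version, again in notation assumed here: with Δ(e | ψ) denoting the expected marginal benefit of playing item e given the observations ψ (expectation over realizations Φ consistent with ψ), adaptive monotonicity asks that playing an item never hurts in expectation, at any reachable state.

```latex
\Delta(e \mid \psi) \ge 0 \quad \text{for all } e \in E \text{ and all } \psi \text{ with } \mathbb{P}[\Phi \sim \psi] > 0.
```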

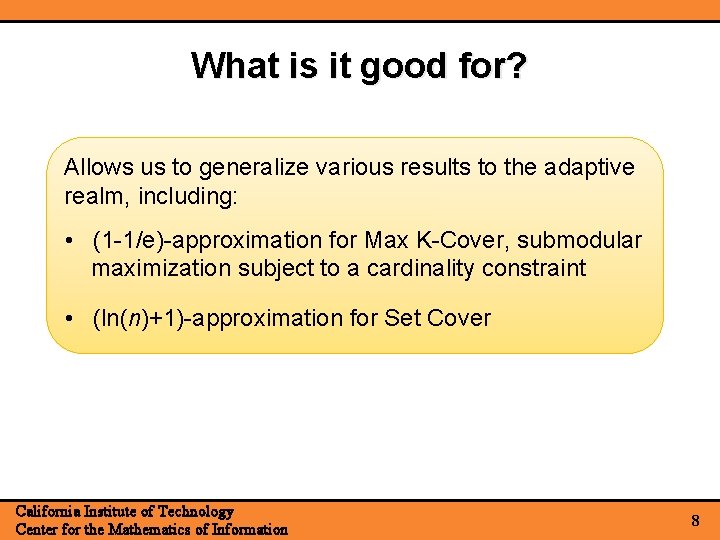

What is it good for?

It allows us to generalize various results to the adaptive realm, including:

- a (1 - 1/e)-approximation for Max K-Cover and submodular maximization subject to a cardinality constraint;
- a (ln(n) + 1)-approximation for Set Cover.
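The (ln(n) + 1) factor in the second bullet is the classical guarantee for greedy Set Cover in the non-adaptive setting. A minimal sketch of that greedy rule on a hypothetical instance (not from the slides), chosen so greedy uses one more set than the optimum; `greedy_set_cover` and the optimal size of 2 are specific to this toy example.

```python
import math

# Hypothetical toy instance (not from the slides): universe {1, ..., 6}.
UNIVERSE = set(range(1, 7))
SETS = {
    "a": {1, 2, 3, 4},
    "b": {1, 2, 5},
    "c": {3, 4, 6},
}

def greedy_set_cover(universe, sets):
    """Repeatedly pick the set that covers the most still-uncovered elements."""
    uncovered = set(universe)
    chosen = []
    while uncovered:
        best = max(sets, key=lambda name: len(sets[name] & uncovered))
        if not sets[best] & uncovered:
            raise ValueError("the sets cannot cover the universe")
        chosen.append(best)
        uncovered -= sets[best]
    return chosen

cover = greedy_set_cover(UNIVERSE, SETS)
optimal_size = 2  # {"b", "c"} covers everything, and no single set does
bound = (math.log(len(UNIVERSE)) + 1) * optimal_size
print(cover, len(cover) <= bound)  # ['a', 'b', 'c'] True
```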

![Recall: The Greedy Algorithm Theorem (Nemhauser et al. '78)](http://slidetodoc.com/presentation_image_h2/88056d7ce6c31f3d58e715b0477527ee/image-9.jpg)

Recall: The Greedy Algorithm Theorem [Nemhauser et al. '78]

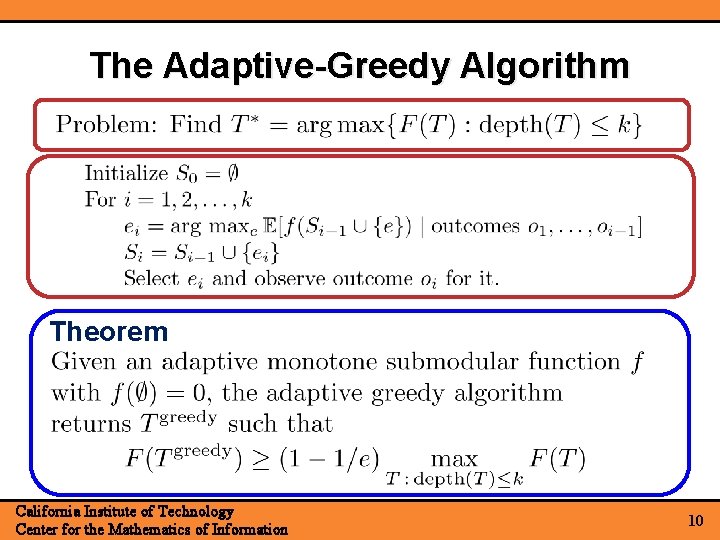

The Adaptive-Greedy Algorithm Theorem
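A hedged rendering of the theorem, in notation we assume here: f_avg(π) = E[f(E(π, Φ), Φ)] is the expected value of policy π, where E(π, Φ) is the set of items π plays under realization Φ; π_greedy^[k] is adapt-greedy stopped after k items (at each step it plays the item maximizing Δ(e | ψ) given the current observations ψ); and π* is any policy that plays at most k items. It mirrors the Nemhauser et al. bound, with sets replaced by policies.

```latex
f \text{ adaptive monotone and adaptive submodular}
\;\Longrightarrow\;
f_{\mathrm{avg}}\big(\pi_{\mathrm{greedy}}^{[k]}\big) \;\ge\; \Big(1 - \frac{1}{e}\Big)\, f_{\mathrm{avg}}(\pi^{*}),
\qquad
f_{\mathrm{avg}}(\pi) = \mathbb{E}\big[f(E(\pi, \Phi), \Phi)\big].
```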

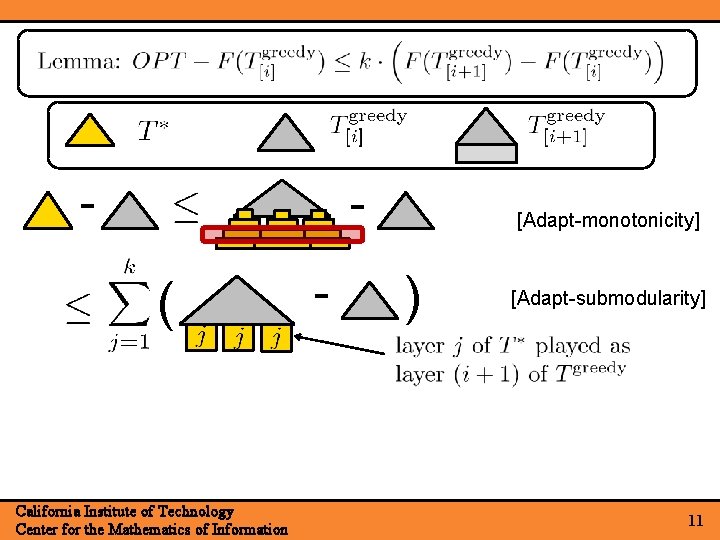

(Proof, first step: a chain of inequalities, justified by adaptive monotonicity and then by adaptive submodularity.)

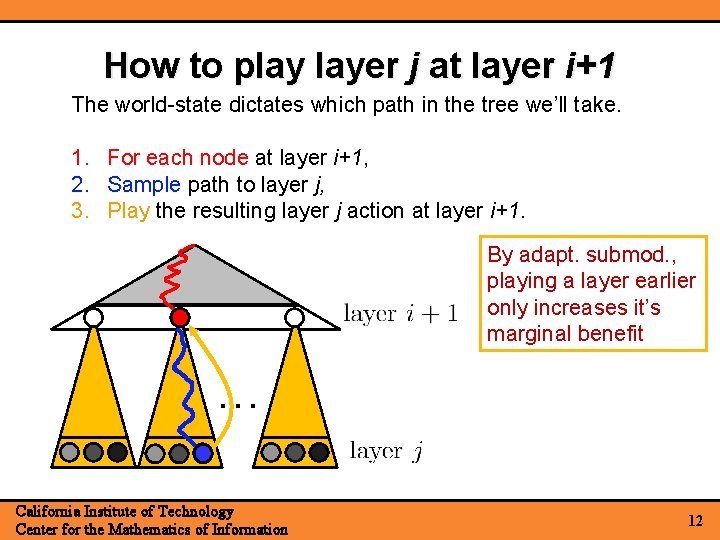

How to play layer j at layer i+1

The world state dictates which path in the tree we'll take.

1. For each node at layer i+1,
2. sample a path to layer j, and
3. play the resulting layer-j action at layer i+1.

By adaptive submodularity, playing a layer earlier only increases its marginal benefit …

![Proof step using adaptive monotonicity, adaptive submodularity, and the definition of adapt-greedy](http://slidetodoc.com/presentation_image_h2/88056d7ce6c31f3d58e715b0477527ee/image-13.jpg)

(Proof, continued: a chain of inequalities, justified by adaptive monotonicity, adaptive submodularity, and the definition of adapt-greedy.)
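A sketch of the standard argument behind these three justifications, as we reconstruct it (not a transcription of the slides). Assumed notation: π_[i] is adapt-greedy truncated after i items, π_[i] @ π* runs π_[i] and then π*, Ψ_i is the random partial realization observed by π_[i], π* plays at most k items, and f is nonnegative. The second step is the one the "play layer j at layer i+1" device supports: each of the at most k items π* adds is worth at most its expected benefit at Ψ_i.

```latex
\begin{aligned}
f_{\mathrm{avg}}(\pi^{*})
  &\le f_{\mathrm{avg}}\big(\pi_{[i]} @ \pi^{*}\big)
  && \text{[adapt-monotonicity]} \\
  &\le f_{\mathrm{avg}}\big(\pi_{[i]}\big) + k\,\mathbb{E}_{\Psi_i}\Big[\max_{e} \Delta(e \mid \Psi_i)\Big]
  && \text{[adapt-submodularity]} \\
  &= f_{\mathrm{avg}}\big(\pi_{[i]}\big) + k\Big(f_{\mathrm{avg}}\big(\pi_{[i+1]}\big) - f_{\mathrm{avg}}\big(\pi_{[i]}\big)\Big)
  && \text{[def. of adapt-greedy]} \\[4pt]
D_i := f_{\mathrm{avg}}(\pi^{*}) - f_{\mathrm{avg}}\big(\pi_{[i]}\big)
  &\;\Longrightarrow\; D_i \le k\,(D_i - D_{i+1})
  \;\Longrightarrow\; D_{i+1} \le \Big(1 - \frac{1}{k}\Big) D_i \\
  &\;\Longrightarrow\; D_k \le \Big(1 - \frac{1}{k}\Big)^{k} D_0 \le e^{-1} f_{\mathrm{avg}}(\pi^{*}).
\end{aligned}
```

Rearranging the last line gives f_avg(π_[k]) ≥ (1 - 1/e) f_avg(π*).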

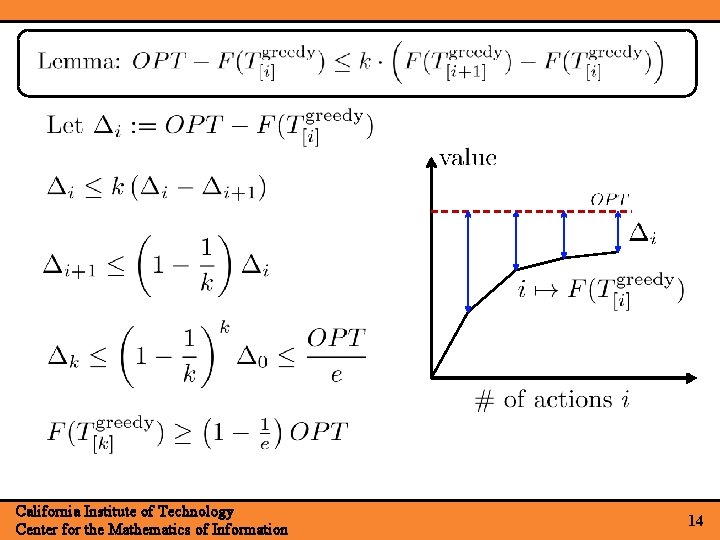


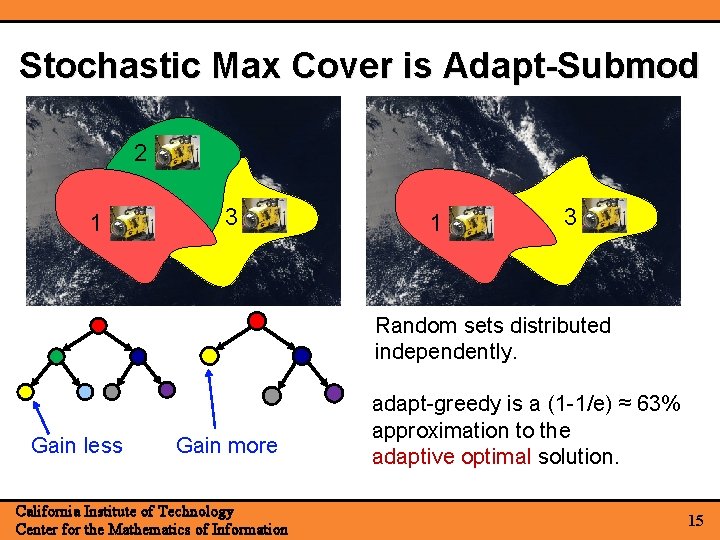

Stochastic Max Cover is Adapt-Submod

(Figure: three random sets, labeled 1, 2, and 3; a set gains more when added earlier and less when added later.)

The random sets are distributed independently. Consequently, adapt-greedy is a (1 - 1/e) ≈ 63% approximation to the adaptive optimal solution.
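A minimal sketch of adapt-greedy for stochastic max cover, assuming each sensor's realized coverage is drawn independently from a known finite distribution. The sensor data and the names `expected_gain`, `greedy_choice`, `sample_outcome`, and `adaptive_greedy` are hypothetical; under independence, conditioning on past observations only changes which cells are already covered, so Δ(e | ψ) reduces to an expected count of newly covered cells.

```python
import random

# Hypothetical instance (not from the slides): each sensor, once deployed,
# covers one of several cell sets, drawn independently with the given probabilities.
SENSORS = {
    "s1": [(0.5, {1, 2, 3}), (0.5, set())],  # works perfectly or fails completely
    "s2": [(0.9, {3, 4}), (0.1, set())],
    "s3": [(0.6, {3, 4, 5}), (0.4, {5})],    # partial failure is also possible
}

def expected_gain(sensor, covered):
    """Delta(e | psi): expected number of newly covered cells. With independent
    sensors, the observations psi matter only through the cells already covered."""
    return sum(p * len(cells - covered) for p, cells in SENSORS[sensor])

def greedy_choice(remaining, covered):
    """Adapt-greedy rule: the unplayed sensor with the largest expected gain."""
    return max(remaining, key=lambda s: expected_gain(s, covered))

def sample_outcome(sensor, rng):
    """Draw the sensor's realized coverage from its outcome distribution."""
    probs, outcomes = zip(*SENSORS[sensor])
    return rng.choices(outcomes, weights=probs)[0]

def adaptive_greedy(k, rng):
    """Deploy k sensors, observing each outcome before choosing the next."""
    covered, played = set(), []
    for _ in range(k):
        remaining = [s for s in SENSORS if s not in played]
        best = greedy_choice(remaining, covered)
        played.append(best)
        covered |= sample_outcome(best, rng)
    return played, covered

played, covered = adaptive_greedy(2, random.Random(0))
print(played, sorted(covered))
```

The second choice depends on what the first deployment revealed: s3 is always played first (expected gain 2.2), and if it covers {3, 4, 5}, s2 has nothing left to add and greedy switches to s1; if s3 only covers {5}, greedy picks s2.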

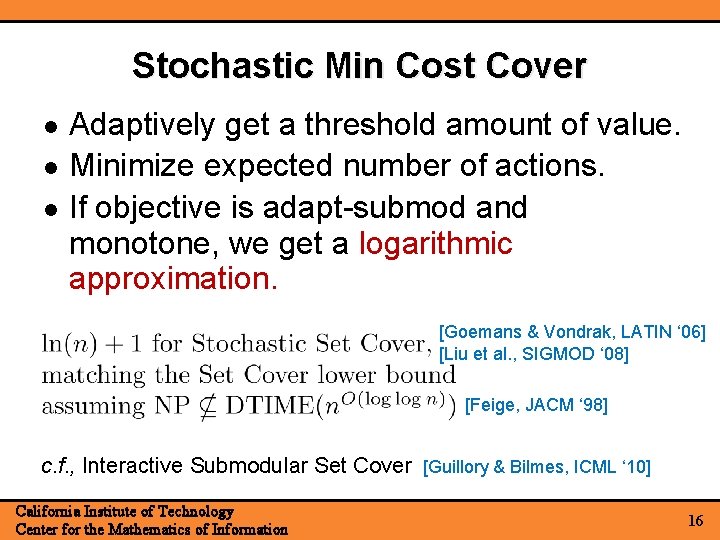

Stochastic Min Cost Cover

Adaptively obtain a threshold amount of value while minimizing the expected number of actions. If the objective is adaptive submodular and monotone, we get a logarithmic approximation.

[Goemans & Vondrak, LATIN '06] [Liu et al., SIGMOD '08] [Feige, JACM '98]; cf. Interactive Submodular Set Cover [Guillory & Bilmes, ICML '10]
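A minimal sketch of the adaptive greedy rule for this covering variant, assuming unit costs and a coverage objective truncated at a quota (`QUOTA`, the sensor data, and all function names are hypothetical). Truncating a monotone submodular function at a constant keeps it monotone submodular; with independent outcomes, greedy keeps playing the sensor with the largest expected truncated gain until the quota is met.

```python
import random

# Hypothetical instance (not from the slides): independent sensors with
# random coverage; the goal is to cover at least QUOTA cells using as few
# deployments as possible in expectation.
SENSORS = {
    "s1": [(0.5, {1, 2, 3}), (0.5, set())],
    "s2": [(0.9, {3, 4}), (0.1, set())],
    "s3": [(0.6, {3, 4, 5}), (0.4, {5})],
    "s4": [(1.0, {6})],
}
QUOTA = 4

def value(covered):
    """Truncated objective min(|covered|, QUOTA): progress past the quota is worthless."""
    return min(len(covered), QUOTA)

def expected_gain(sensor, covered):
    """Expected increase of the truncated objective from deploying `sensor` next."""
    return sum(p * (value(covered | cells) - value(covered)) for p, cells in SENSORS[sensor])

def sample_outcome(sensor, rng):
    """Draw the sensor's realized coverage from its outcome distribution."""
    probs, outcomes = zip(*SENSORS[sensor])
    return rng.choices(outcomes, weights=probs)[0]

def adaptive_greedy_cover(rng):
    """Deploy greedily until the quota is met or no sensors remain."""
    covered, played = set(), []
    while value(covered) < QUOTA and len(played) < len(SENSORS):
        remaining = [s for s in SENSORS if s not in played]
        best = max(remaining, key=lambda s: expected_gain(s, covered))
        played.append(best)
        covered |= sample_outcome(best, rng)
    return played, covered

rng = random.Random(0)
runs = [adaptive_greedy_cover(rng) for _ in range(1000)]
# Monte Carlo estimate of the expected number of deployments.
print(sum(len(p) for p, _ in runs) / len(runs))
```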

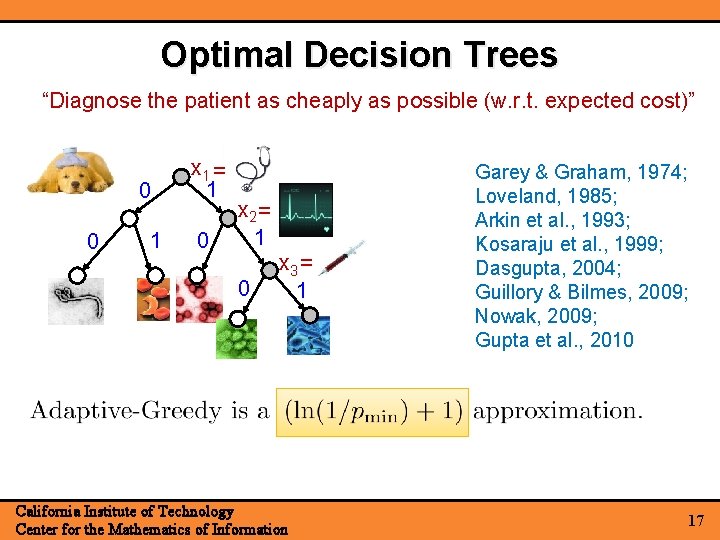

Optimal Decision Trees

"Diagnose the patient as cheaply as possible (w.r.t. expected cost)."

(Figure: a decision tree of binary tests x1, x2, x3, each branching on outcome 0 or 1.)

Garey & Graham, 1974; Loveland, 1985; Arkin et al., 1993; Kosaraju et al., 1999; Dasgupta, 2004; Guillory & Bilmes, 2009; Nowak, 2009; Gupta et al., 2010
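For illustration only, here is a minimal sketch of one classical greedy heuristic for this problem, generalized binary search, which picks the test that splits the remaining prior mass most evenly (the kind of splitting rule analyzed by Dasgupta, 2004, among the works cited above). It is a swapped-in example, not necessarily the objective used in this talk; the hypotheses, priors, tests, and the names `gbs_choice` and `diagnose` are hypothetical.

```python
# Hypothetical instance (not from the slides): three candidate diagnoses with
# prior probabilities, and binary tests whose outcome under each diagnosis is known.
PRIOR = {"flu": 0.5, "cold": 0.3, "allergy": 0.2}
TESTS = {
    "x1": {"flu": 1, "cold": 0, "allergy": 0},
    "x2": {"flu": 0, "cold": 1, "allergy": 0},
    "x3": {"flu": 1, "cold": 1, "allergy": 0},
}

def gbs_choice(candidates, used):
    """Pick the unused test whose outcome splits the remaining prior mass most evenly."""
    total = sum(PRIOR[h] for h in candidates)

    def imbalance(test):
        mass_one = sum(PRIOR[h] for h in candidates if TESTS[test][h] == 1)
        return abs(2 * mass_one - total)

    return min((t for t in TESTS if t not in used), key=imbalance)

def diagnose(true_h):
    """Run greedily chosen tests until the diagnosis is pinned down (or tests run out)."""
    candidates, used = set(PRIOR), []
    while len(candidates) > 1 and len(used) < len(TESTS):
        test = gbs_choice(candidates, used)
        used.append(test)
        outcome = TESTS[test][true_h]
        candidates = {h for h in candidates if TESTS[test][h] == outcome}
    return candidates, used

# Expected number of tests under the prior (the cost the tree should minimize).
expected_tests = sum(PRIOR[h] * len(diagnose(h)[1]) for h in PRIOR)
print(expected_tests)
```

Here x1 separates half of the prior mass at the root, so "flu" is identified after one test and the other two diagnoses after two.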

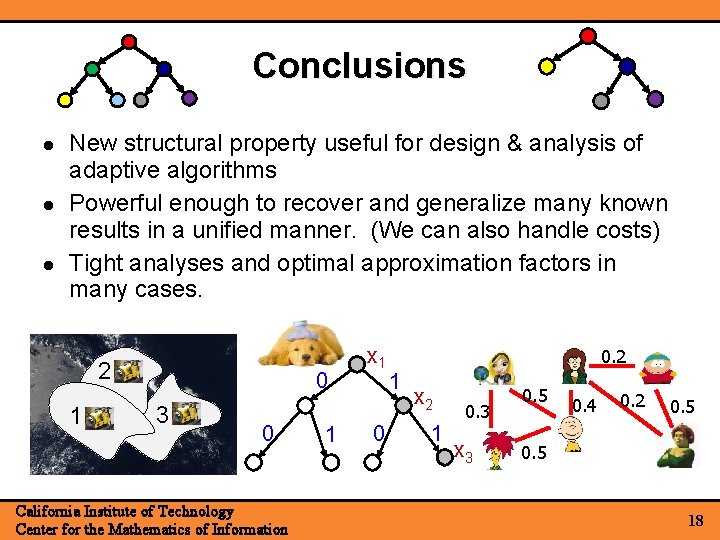

Conclusions

- A new structural property, useful for the design and analysis of adaptive algorithms.
- Powerful enough to recover and generalize many known results in a unified manner. (We can also handle costs.)
- Tight analyses and optimal approximation factors in many cases.
