Acoustic Model Adaptation Based On Pronunciation Variability Analysis

Acoustic Model Adaptation Based On Pronunciation Variability Analysis For Non-Native Speech Recognition
Yoo Rhee Oh, Jae Sam Yoon, and Hong Kook Kim
Dept. of Information and Communications, Gwangju Institute of Science and Technology (GIST), Gwangju 500-712, Korea
ICASSP 2006, SLP-L6: Advances in LVCSR Algorithms
Presenter: Ting-Wei Hsu

Reference
• John J. Morgan, "Making a Speech Recognizer Tolerate Non-native Speech through Gaussian Mixture Merging," Proceedings of InSTIL/ICALL 2004 – NLP and Speech Technologies in Advanced Language Learning Systems – Venice, 17-19 June 2004

Outline
1. Introduction
2. Effect of non-native speech
3. Acoustic model adaptation for non-native speech recognition
4. Experiments
5. Conclusion

1. Introduction
• Internationalization gives people many occasions to use a language other than their mother tongue.
• Demand is also growing for automatic systems that use speech recognition.
  – Ex: computer-assisted language learning (CALL) …
• However, an automatic speech recognition (ASR) system performs significantly worse on non-native speech than on native speech.
• The main reason: the target language and the speaker's mother tongue have different pronunciation spaces for vowel and consonant sounds.
  – Target language: the language on which the speech recognition system has already been trained
  – Mother tongue: the native language of the non-native speaker

1. Introduction (cont.)
• An ASR system for non-native speech requires some kind of adaptation to compensate for this mismatch.
  – Pronunciation modeling
    • Extending the recognizer so that each word includes the pronunciation variants produced by non-native speakers
  – Acoustic modeling
    • Adapting the acoustic models with an adaptation method such as MLLR or MAP adaptation
  – Language modeling
    • Adapting the language model
• These approaches can be combined for further improvement.

1. Introduction (cont.)
• In this paper, pronunciation variability is investigated first, and acoustic model adaptation is then performed on the phonetic units.
• Pronunciation variability
  – Modeled by a phoneme confusion matrix of pronunciations from native to non-native speech.
  – The states of the target-language acoustic models are clustered.
• Acoustic model adaptation
  – The states of the variant units are tied.
  – The number of mixtures in each acoustic model is increased.

3. Acoustic model adaptation for non-native speech recognition
[Diagram: overview of the acoustic models, labeled "English, single mixture (AM0)", "(AM1)", and "English, Korean (AM2)".]

2. Effect of non-native speech
• 2.1. English baseline ASR
  – Training set
    • Wall Street Journal database (WSJ0): 7,138 utterances, recorded with a microphone and sampled at 16 kHz
  – Recognition features
    • 12 mel-frequency cepstral coefficients (MFCCs) with logarithmic energy for every 10 ms analysis frame, forming a 39-dimensional feature vector; cepstral mean normalization and energy normalization are applied to the feature vectors.
  – Acoustic models
    • 3-state, left-to-right, context-dependent, 4-mixture, cross-word triphone models, trained with the HTK version 3.2 toolkit
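
The feature description above can be sketched in a few lines. This is only an illustration, not the presenters' front end: the slide gives 12 MFCCs plus log energy and a 39-dimensional vector, and the sketch assumes the usual reading that the 13 static values are extended with delta and delta-delta coefficients (13 × 3 = 39). The regression window of 2 frames, the max-shift energy normalization, and all function names are assumptions made for the example.

```python
from typing import List

Frames = List[List[float]]


def cepstral_mean_normalize(cepstra: Frames) -> Frames:
    """Subtract the per-utterance mean from every cepstral coefficient."""
    n, dim = len(cepstra), len(cepstra[0])
    means = [sum(frame[d] for frame in cepstra) / n for d in range(dim)]
    return [[frame[d] - means[d] for d in range(dim)] for frame in cepstra]


def normalize_energy(log_energy: List[float]) -> List[float]:
    """Assumed scheme: shift so the loudest frame of the utterance is 1.0."""
    peak = max(log_energy)
    return [e - peak + 1.0 for e in log_energy]


def deltas(frames: Frames, window: int = 2) -> Frames:
    """Regression-based time derivatives; edge frames are replicated."""
    n, dim = len(frames), len(frames[0])
    denom = 2 * sum(k * k for k in range(1, window + 1))
    out = []
    for t in range(n):
        row = []
        for d in range(dim):
            num = sum(
                k * (frames[min(t + k, n - 1)][d] - frames[max(t - k, 0)][d])
                for k in range(1, window + 1)
            )
            row.append(num / denom)
        out.append(row)
    return out


def build_features(mfcc12: Frames, log_energy: List[float]) -> Frames:
    """Return one 39-dimensional vector per frame: static + delta + delta-delta."""
    cep = cepstral_mean_normalize(mfcc12)
    energy = normalize_energy(log_energy)
    static = [c + [e] for c, e in zip(cep, energy)]  # 13 static values
    d1 = deltas(static)  # 13 delta values
    d2 = deltas(d1)      # 13 delta-delta values
    return [s + a + b for s, a, b in zip(static, d1, d2)]
```

Here `mfcc12` holds one 12-element list per 10 ms frame and `log_energy` one value per frame; computing the MFCCs themselves is left out.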

2. Effect of non-native speech (cont.)
• 2.2. Speech database for English spoken by Koreans
  – Korean-Spoken English Corpus (K-SEC)
    • Development set (Korean)
    • Evaluation set 1 (Korean)
    • Evaluation set 2 (English)
• 2.3. Effect of native and non-native speech on the baseline ASR
  – The lexicon is taken only from the text of the test set (CMU Pronouncing Dictionary).
  – A back-off bigram is used as the language model.

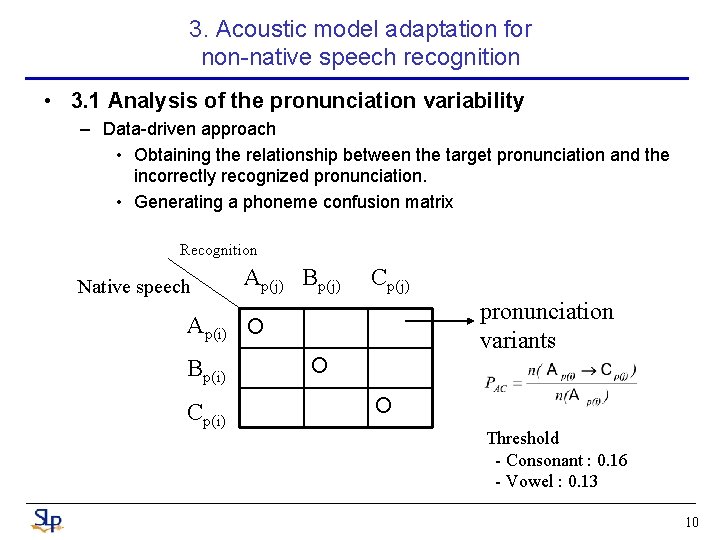

3. Acoustic model adaptation for non-native speech recognition
• 3.1. Analysis of the pronunciation variability
  – Data-driven approach
    • Obtaining the relationship between the target pronunciation and the incorrectly recognized pronunciation.
    • Generating a phoneme confusion matrix
[Figure: phoneme confusion matrix between native-speech pronunciations and recognition results, with axes labeled Ap(i), Bp(i), Cp(i) and Ap(j), Bp(j), Cp(j); cells marked O are pronunciation variants.]
  – Threshold
    • Consonant: 0.16
    • Vowel: 0.13
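
The data-driven analysis above can be illustrated with a small sketch. It assumes the target and recognized phoneme sequences have already been aligned into (target, recognized) pairs, that each matrix row is normalized to relative frequencies, and that an off-diagonal entry counts as a pronunciation variant when it exceeds the slide's threshold (0.16 for consonants, 0.13 for vowels). The slide does not spell out these details, so the normalization, the comparison rule, the vowel list, and the function names are all assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

# Assumed vowel inventory (ARPAbet-style symbols); everything else is a consonant.
VOWELS = {"aa", "ae", "ah", "ao", "aw", "ay", "eh", "er",
          "ey", "ih", "iy", "ow", "oy", "uh", "uw"}
THRESHOLDS = {"consonant": 0.16, "vowel": 0.13}  # values from the slide


def confusion_matrix(pairs: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, float]]:
    """Row-normalized confusion matrix from aligned (target, recognized) pairs."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for target, recognized in pairs:
        counts[target][recognized] += 1
    matrix = {}
    for target, row in counts.items():
        total = sum(row.values())
        matrix[target] = {rec: c / total for rec, c in row.items()}
    return matrix


def select_variants(matrix: Dict[str, Dict[str, float]]) -> List[Tuple[str, str, float]]:
    """Off-diagonal entries above the class threshold, most frequent first."""
    variants = []
    for target, row in matrix.items():
        kind = "vowel" if target in VOWELS else "consonant"
        for recognized, prob in row.items():
            if recognized != target and prob > THRESHOLDS[kind]:
                variants.append((target, recognized, prob))
    return sorted(variants, key=lambda v: v[2], reverse=True)
```

With toy counts in which /iy/ is recognized as /ih/ in 3 of 10 cases and /p/ as /b/ in 1 of 10, only the /iy/→/ih/ pair passes its threshold.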

3. Acoustic model adaptation for non-native speech recognition (cont.)
• 3.2. Proposed acoustic model adaptation for non-native speech recognition
  – State tying: /p/, /iy/→/ih/
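
As a rough illustration of what tying states means for a variant pair such as /iy/→/ih/, the sketch below makes corresponding states of two phone models refer to one shared parameter set, so that later training updates both models together. It is not the presenters' procedure: the `State` and `PhoneHMM` classes, the one-dimensional Gaussians, and the choice to keep the target model's parameters as the shared starting point are assumptions for the example, and the retraining and mixture-increase steps are omitted.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class State:
    # Gaussian mixture as (weight, mean, variance); 1-D to keep the sketch short.
    components: List[Tuple[float, float, float]]


@dataclass
class PhoneHMM:
    name: str
    states: List[State]  # e.g. 3 emitting states, left to right


def tie_states(models: Dict[str, PhoneHMM], target: str, variant: str) -> None:
    """Make each state of `variant` share parameters with the same state of `target`.

    Assumption: the target's current parameters seed the shared state; in a
    real system the tied state would then be re-estimated on pooled data.
    """
    a, b = models[target], models[variant]
    if len(a.states) != len(b.states):
        raise ValueError("models must have the same number of states")
    for i, shared in enumerate(a.states):
        b.states[i] = shared  # both models now reference the same object


models = {
    "iy": PhoneHMM("iy", [State([(1.0, 0.0, 1.0)]) for _ in range(3)]),
    "ih": PhoneHMM("ih", [State([(1.0, 2.0, 1.0)]) for _ in range(3)]),
}
tie_states(models, "iy", "ih")
```

After the call, updating a state of `models["iy"]` is visible through `models["ih"]` as well, which is the effect tying is meant to have.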

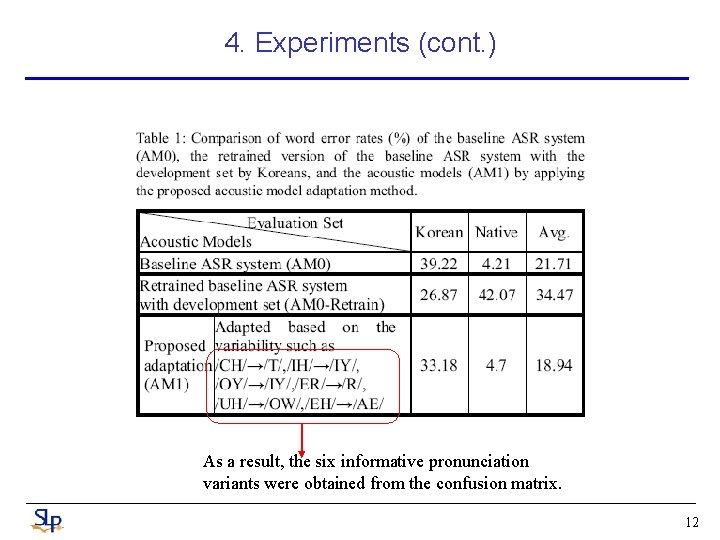

4. Experiments (cont.)
As a result, six informative pronunciation variants were obtained from the confusion matrix.

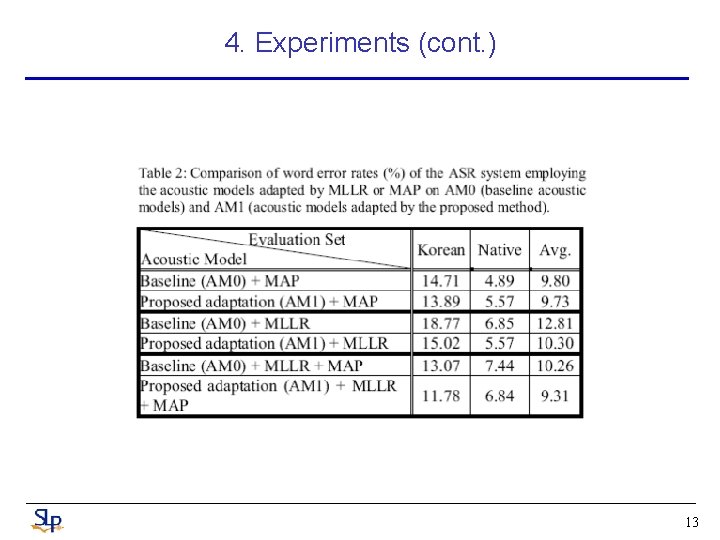

4. Experiments (cont.)

5. Conclusion
• We proposed an acoustic model adaptation method for non-native speech recognition.
• The proposed method, a data-driven approach, first ranks the phonetic units that show the most informative pronunciation variability by recognizing non-native speech with acoustic models trained on native speech.

Another way…
• Koreans speaking English
  – English model → English model for Koreans
    • State tying based on a phoneme confusion matrix
• Americans speaking Arabic
  – Arabic model → Arabic model for Americans
    • Hidden Markov model (HMM) phone sets are trained for English and Arabic, and the English phones are then merged into the Arabic phones to make a new Arabic system.
    • The phone-level transcriptions of the training data had to be relabeled with English phones.
    • Model merging (MM) adaptation based on a phoneme confusion matrix
