Achieving Non-Inclusive Cache Performance with Inclusive Caches

Achieving Non-Inclusive Cache Performance with Inclusive Caches
Aamer Jaleel, Eric Borch, Malini Bhandaru, Simon Steely Jr., Joel Emer
Intel Corporation, VSSAD
Aamer.Jaleel@intel.com
IEEE/ACM International Symposium on Microarchitecture (MICRO 2010)

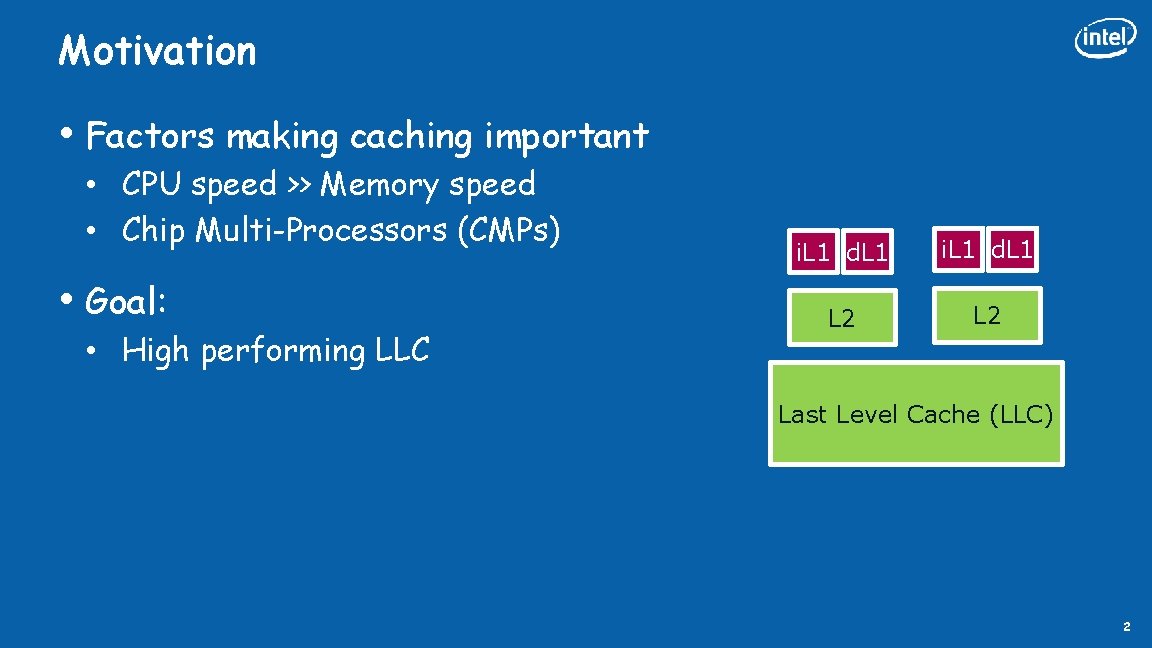

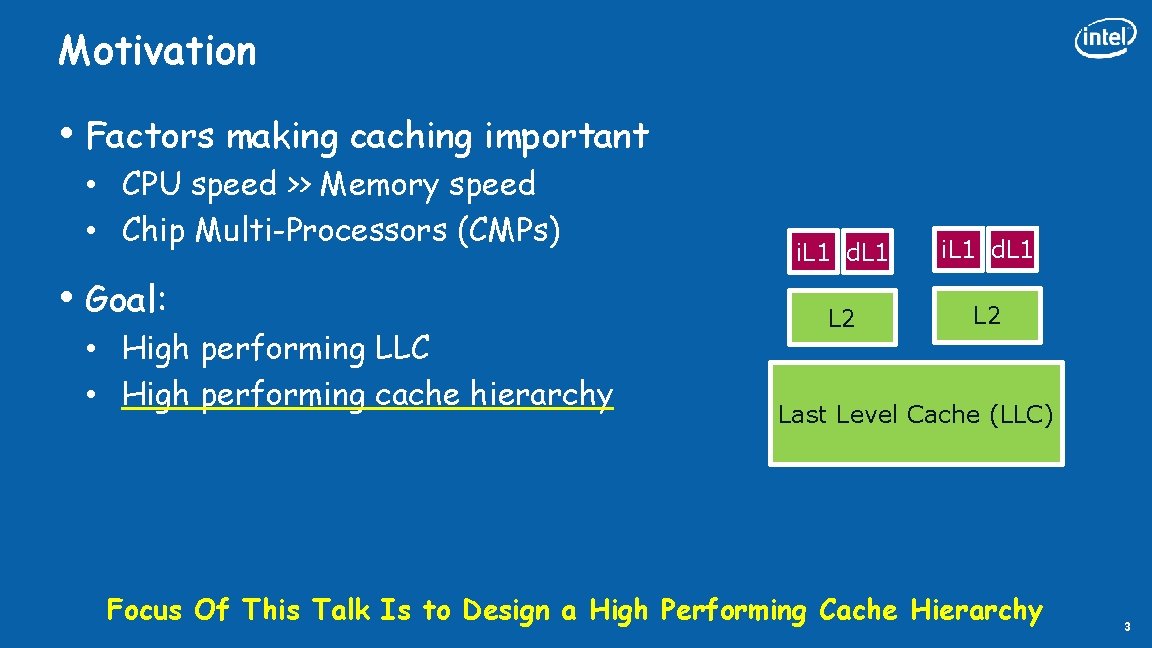

Motivation
• Factors making caching important:
  – CPU speed >> memory speed
  – Chip Multi-Processors (CMPs)
• Goal:
  – High-performing LLC
  – High-performing cache hierarchy
[Figure: cache hierarchy with iL1, dL1, L2, and a Last Level Cache (LLC)]
The focus of this talk is to design a high-performing cache hierarchy.

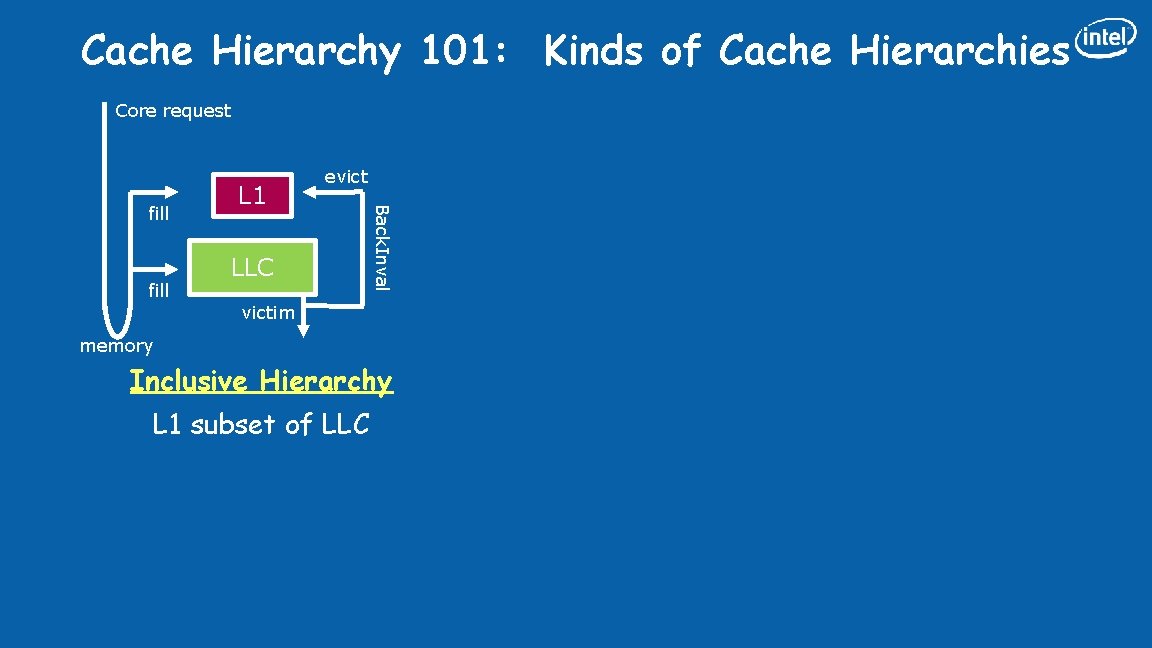

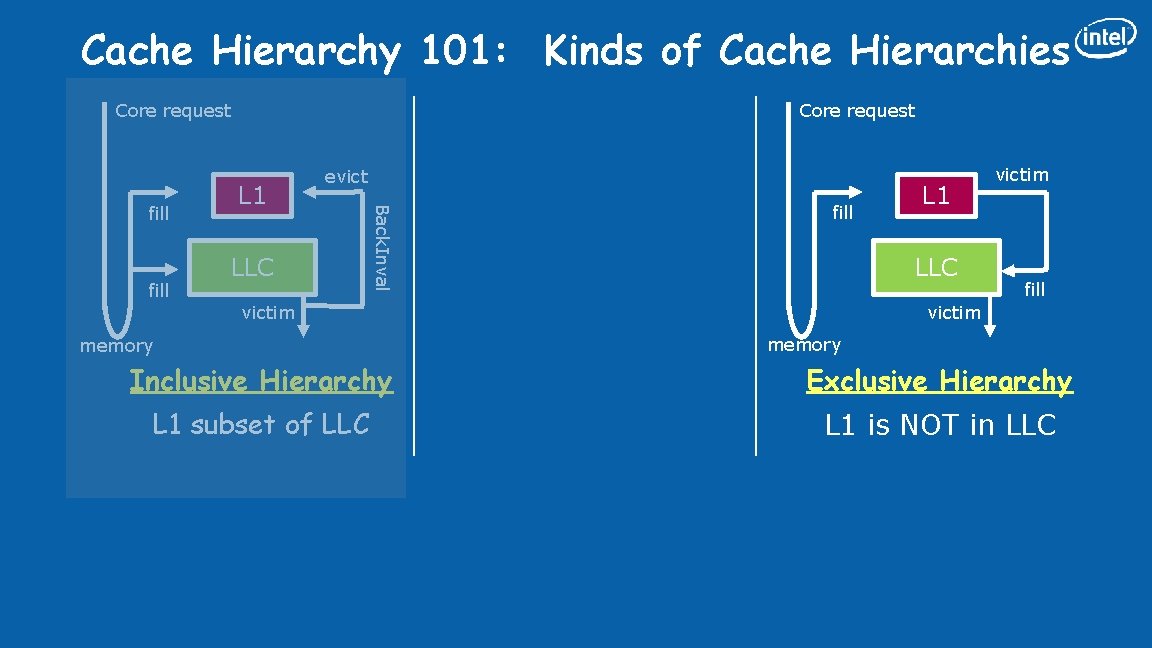

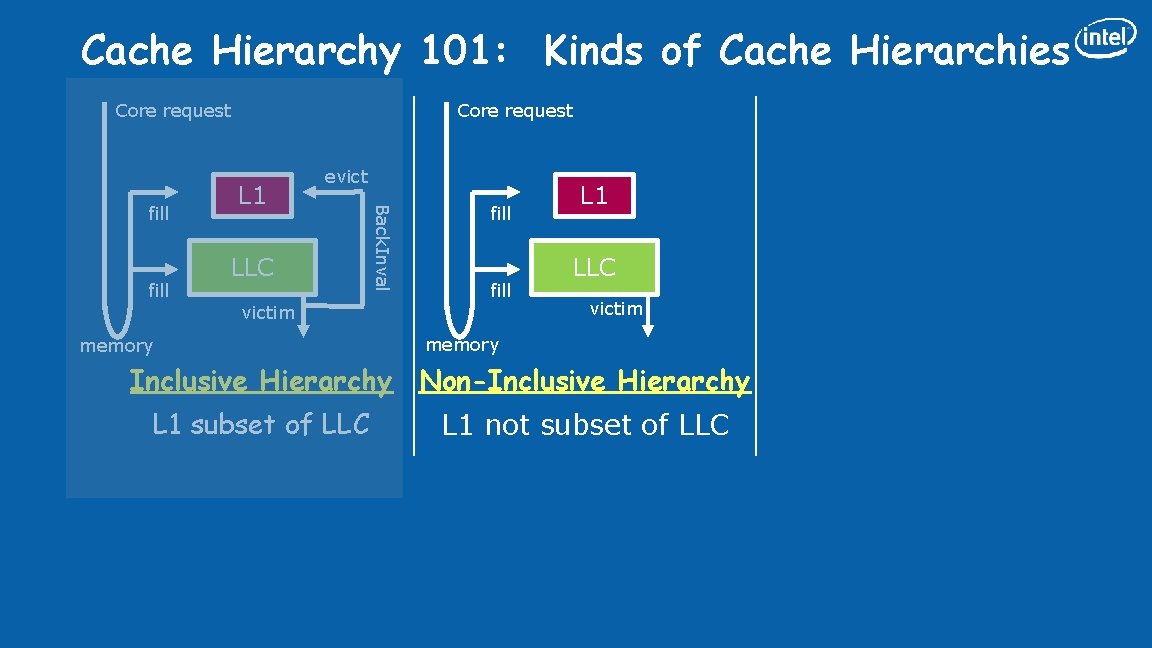

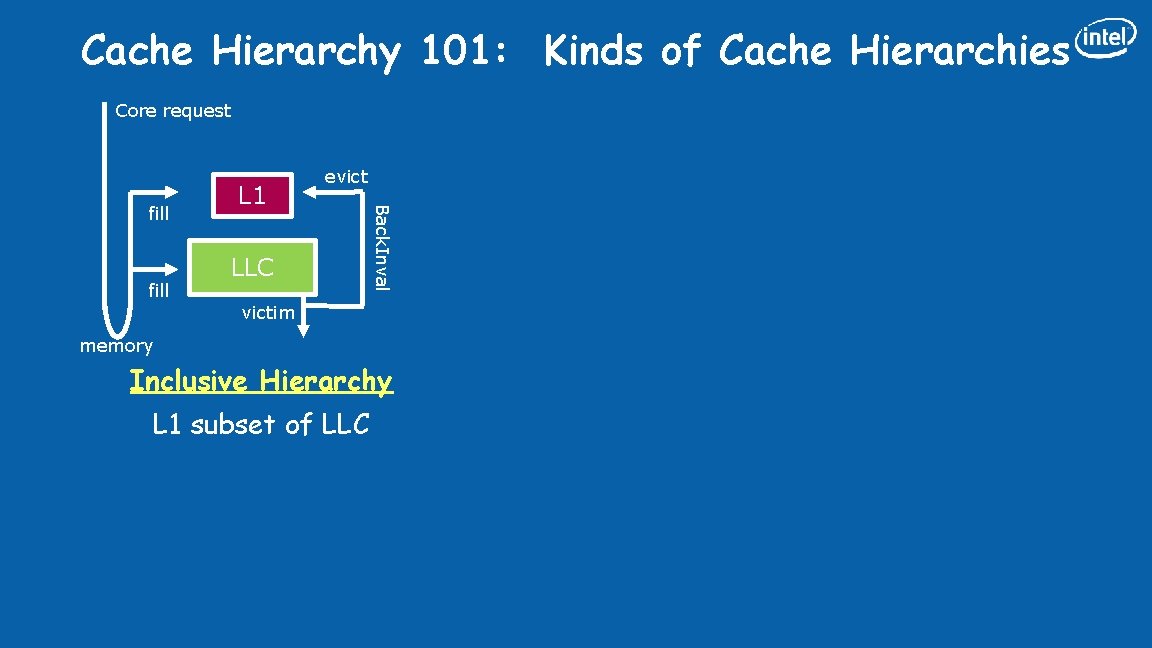

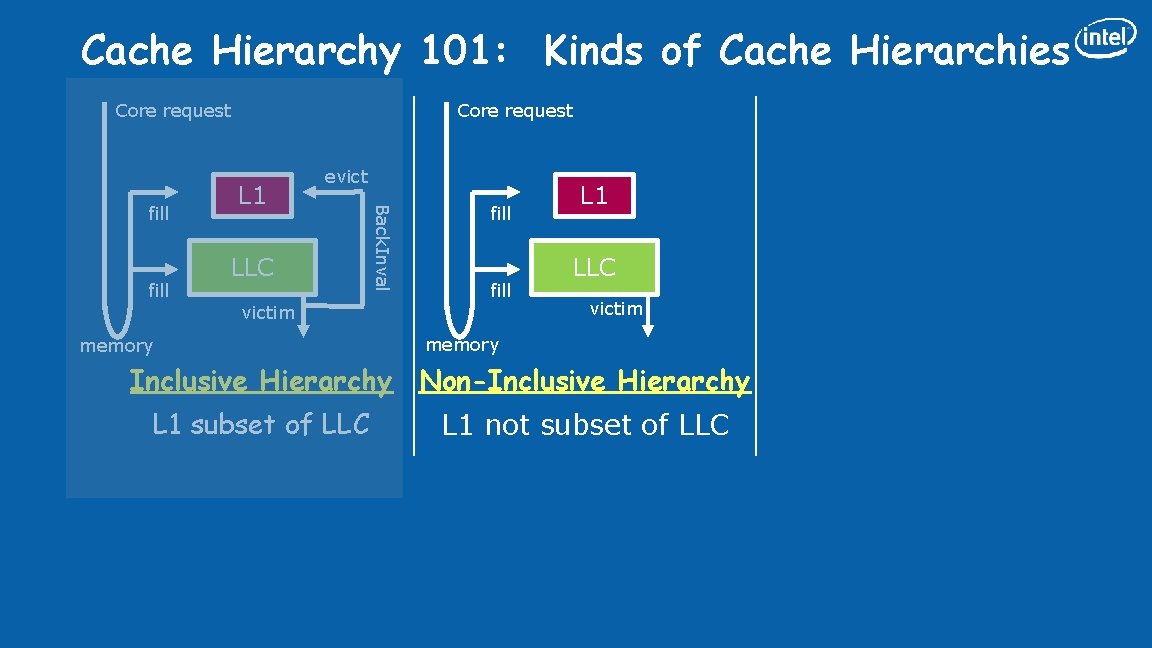

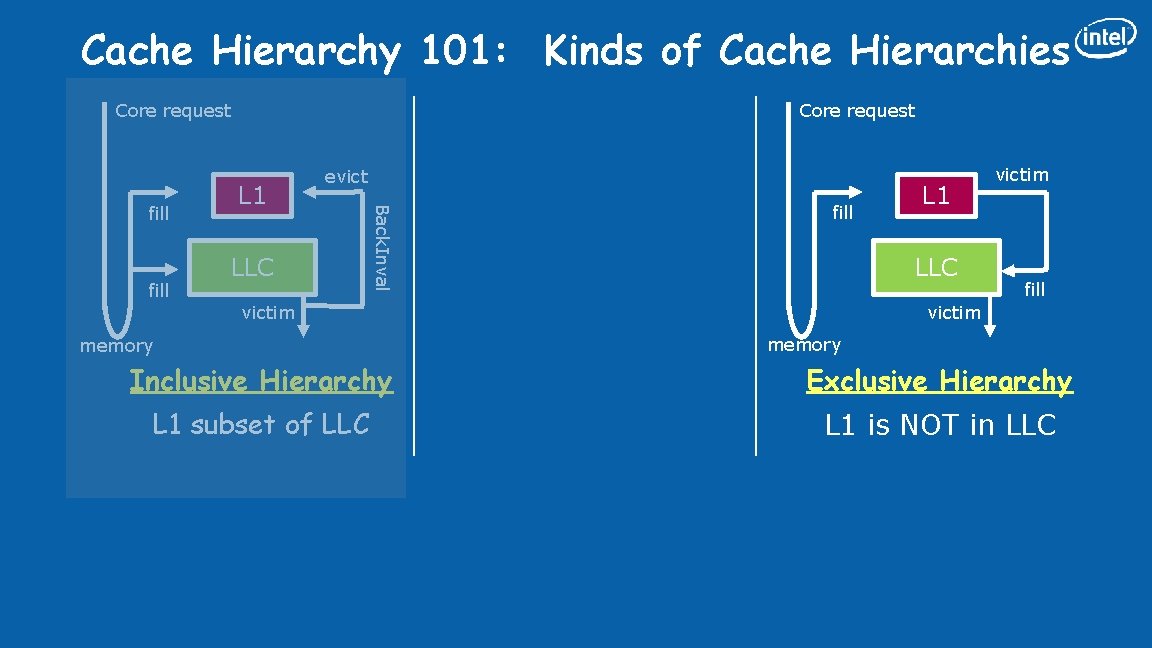

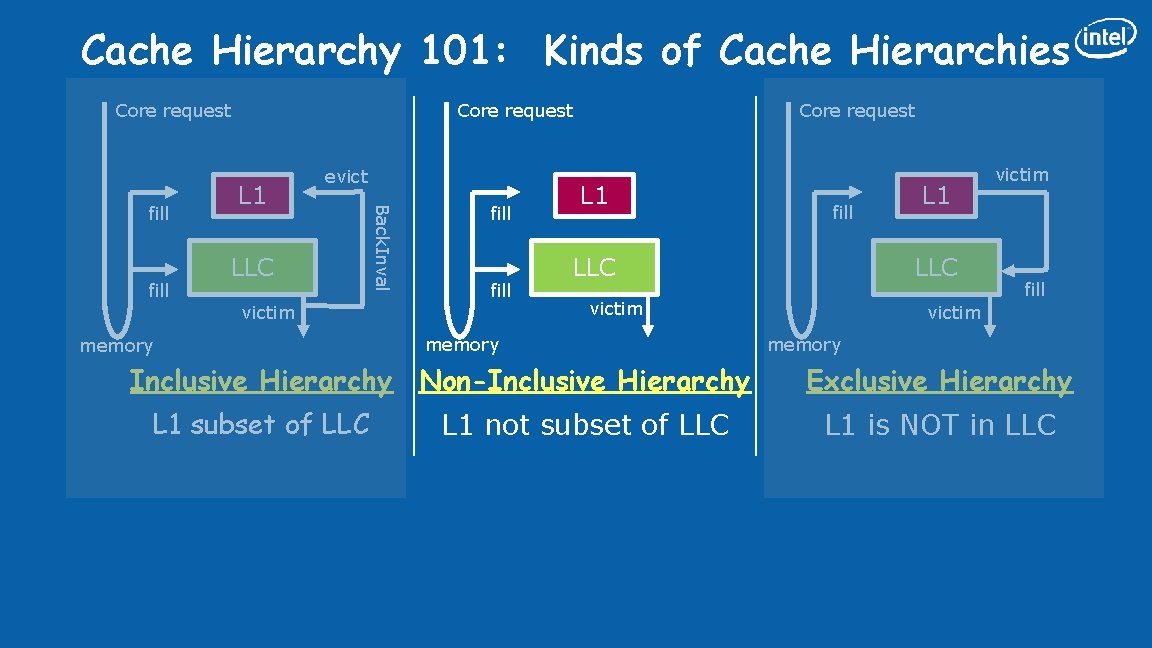

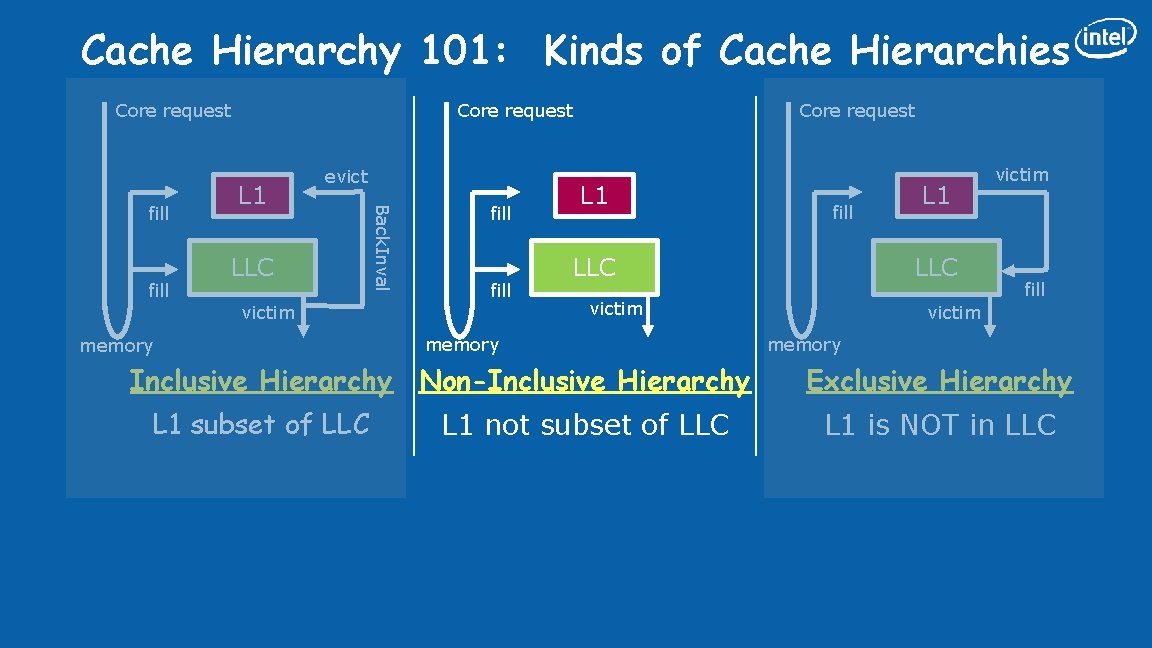

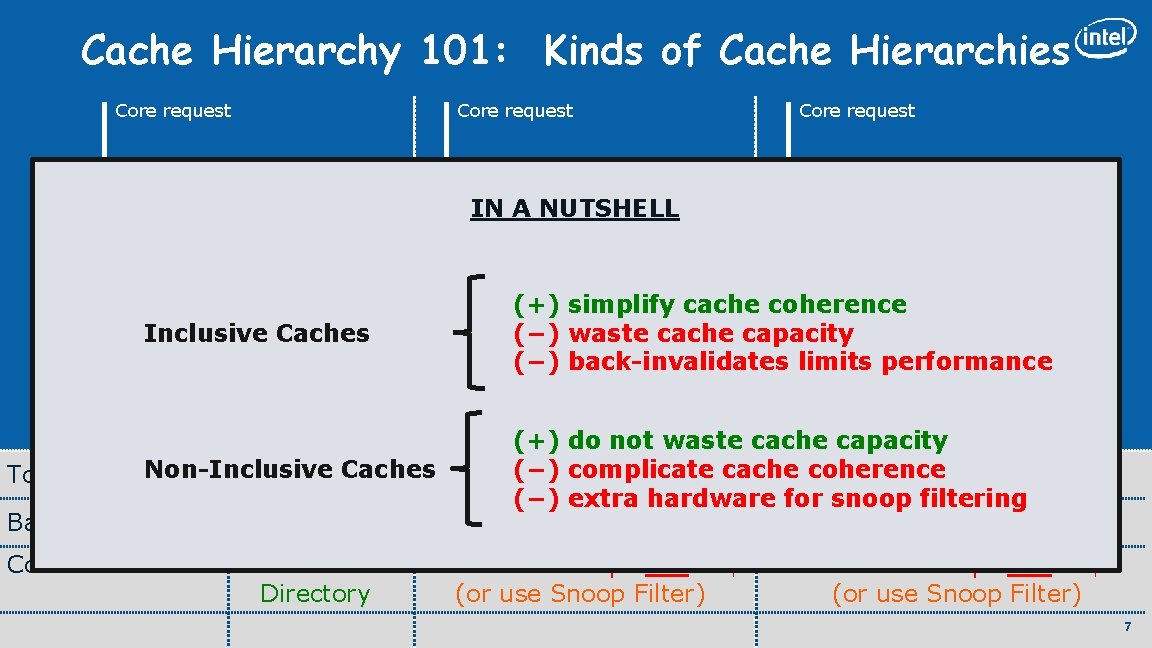

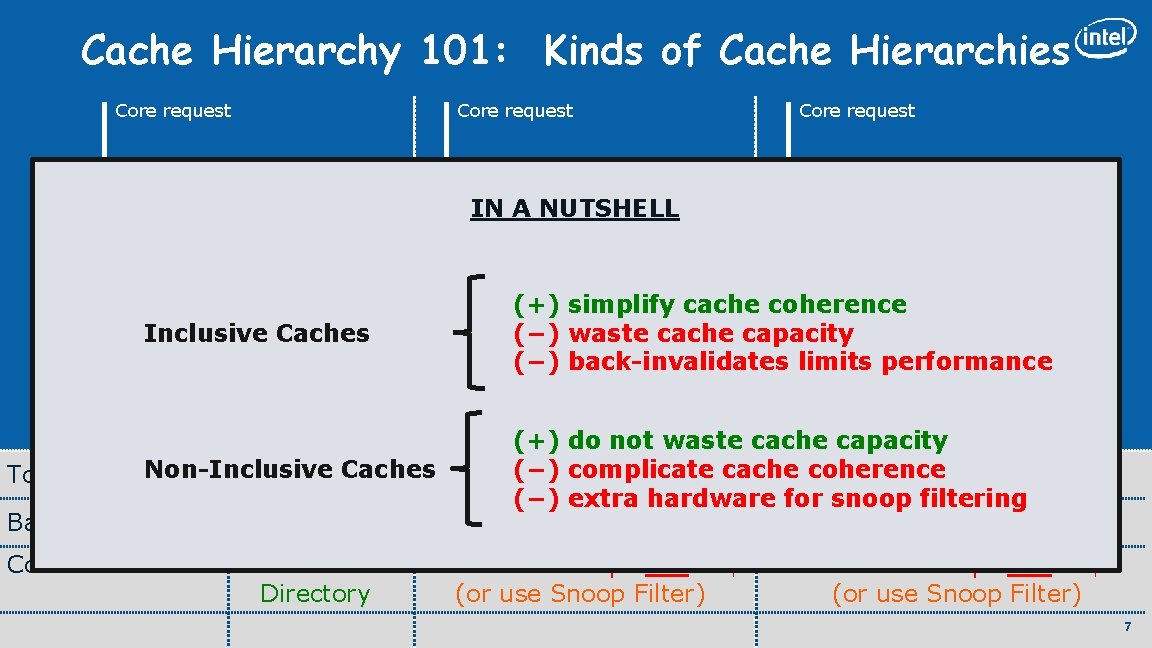

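Cache Hierarchy 101: Kinds of Cache Hierarchies
[Figure: fill, eviction, and back-invalidate flows between the core, L1, LLC, and memory for each hierarchy]
• Inclusive hierarchy (L1 is a subset of the LLC): a core request fills both the LLC and the L1; an LLC victim is back-invalidated from the L1
• Non-inclusive hierarchy (L1 is not necessarily a subset of the LLC): a core request fills both the L1 and the LLC; LLC victims are not back-invalidated
• Exclusive hierarchy (L1 contents are NOT in the LLC): a core request fills only the L1; L1 victims fill the LLC, and LLC victims go to memory

| | Inclusive | Non-Inclusive | Exclusive |
|---|---|---|---|
| Total capacity | LLC | ≥ LLC and ≤ (L1 + LLC) | L1 + LLC |
| Back-invalidate | YES | NO | NO |
| Coherence | LLC acts as directory | LLC miss snoops ALL L1$ (or use a snoop filter) | LLC miss snoops ALL L1$ (or use a snoop filter) |

In a nutshell:
• Inclusive caches: (+) simplify cache coherence; (−) waste cache capacity; (−) back-invalidates limit performance
• Non-inclusive caches: (+) do not waste cache capacity; (−) complicate cache coherence; (−) extra hardware for snoop filtering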
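
The "Total capacity" row reduces to simple arithmetic. The Python sketch below is a hypothetical helper, not something from the talk: `core_kb` stands for the combined capacity of all private core caches and `llc_kb` for the LLC, both in KB, and it ignores line size and set conflicts.

```python
from typing import Tuple


def distinct_capacity_kb(policy: str, core_kb: int, llc_kb: int) -> Tuple[int, int]:
    """Return the (min, max) amount of distinct data a full hierarchy can hold.

    Hypothetical helper: core_kb is the total private (core) cache capacity,
    llc_kb is the LLC capacity.
    """
    if policy == "inclusive":
        # Every core-cache line is duplicated in the LLC.
        return (llc_kb, llc_kb)
    if policy == "non-inclusive":
        # Duplication is allowed but not required.
        return (llc_kb, core_kb + llc_kb)
    if policy == "exclusive":
        # A line is never in both the core caches and the LLC.
        return (core_kb + llc_kb, core_kb + llc_kb)
    raise ValueError(f"unknown policy: {policy!r}")


# 2 cores, each with a 32 KB L1 (treated as one cache) and a 256 KB L2;
# the 2048 KB LLC is an assumed size for illustration.
core_kb = 2 * (32 + 256)
for policy in ("inclusive", "non-inclusive", "exclusive"):
    print(policy, distinct_capacity_kb(policy, core_kb, llc_kb=2048))
# inclusive (2048, 2048)
# non-inclusive (2048, 2624)
# exclusive (2624, 2624)
```

The capacity an exclusive hierarchy adds over an inclusive one is exactly the core-cache capacity, so its relative benefit shrinks as the LLC grows relative to the core caches.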

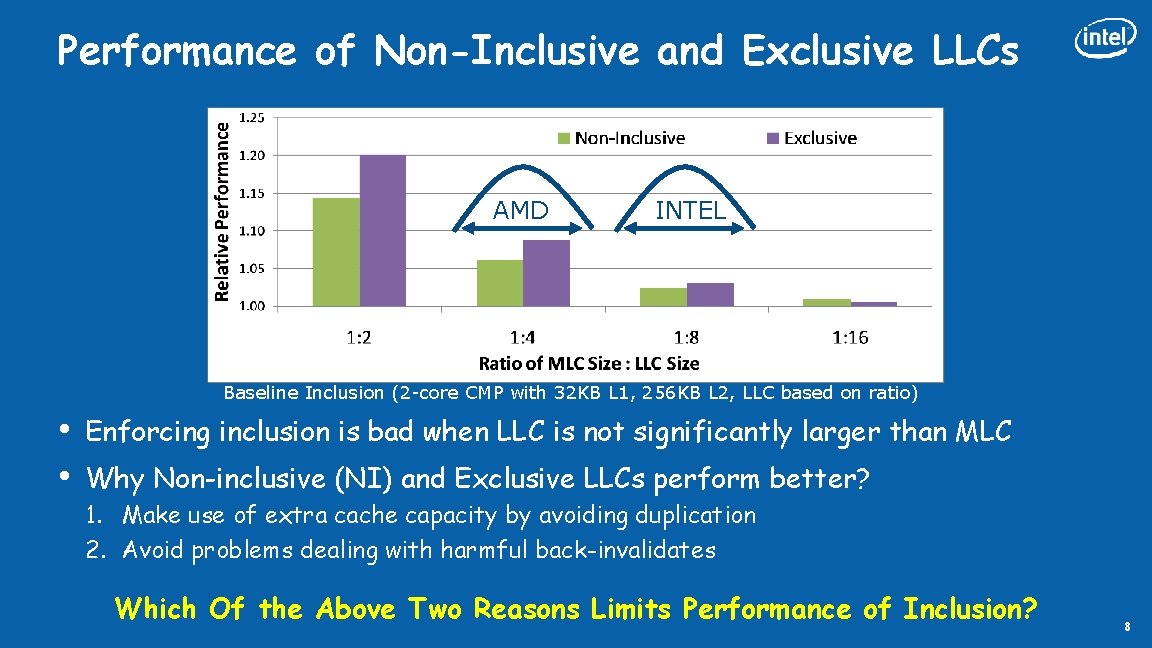

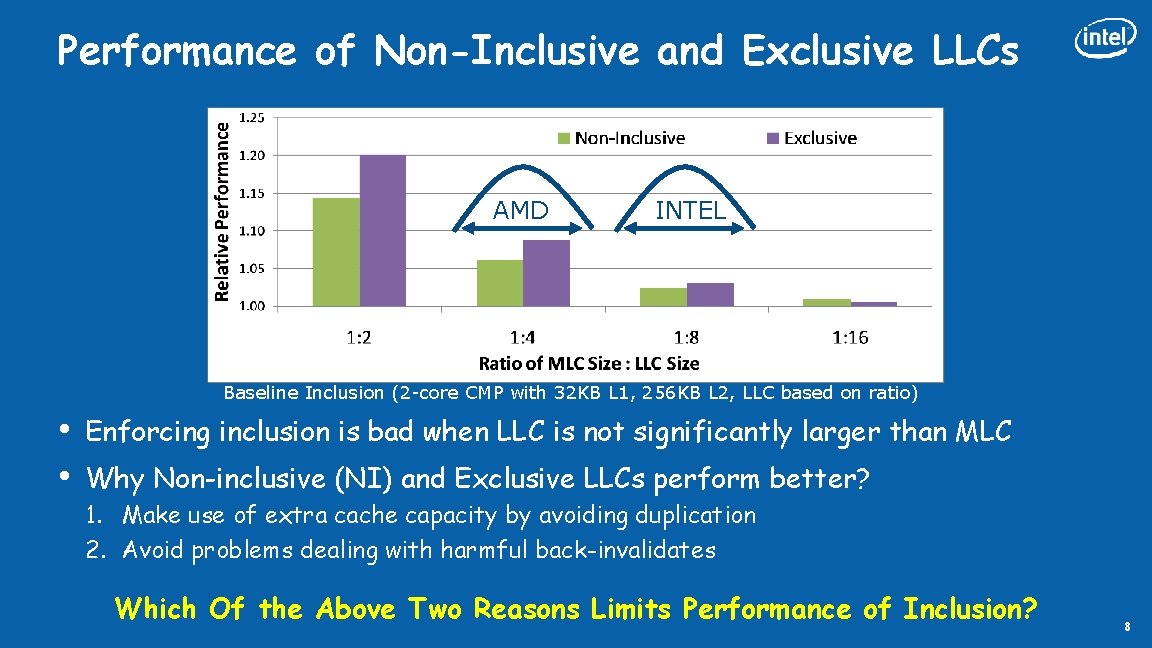

Performance of Non-Inclusive and Exclusive LLCs
[Figure: performance relative to baseline inclusion for a 2-core CMP with 32 KB L1, 256 KB L2, and an LLC sized by the hierarchy ratio; chart labels: AMD, Intel]
• Enforcing inclusion is bad when the LLC is not significantly larger than the mid-level cache (MLC)
• Why do non-inclusive (NI) and exclusive LLCs perform better?
  1. They make use of the extra cache capacity gained by avoiding duplication
  2. They avoid the problems caused by harmful back-invalidates
Which of the above two reasons limits the performance of inclusion?

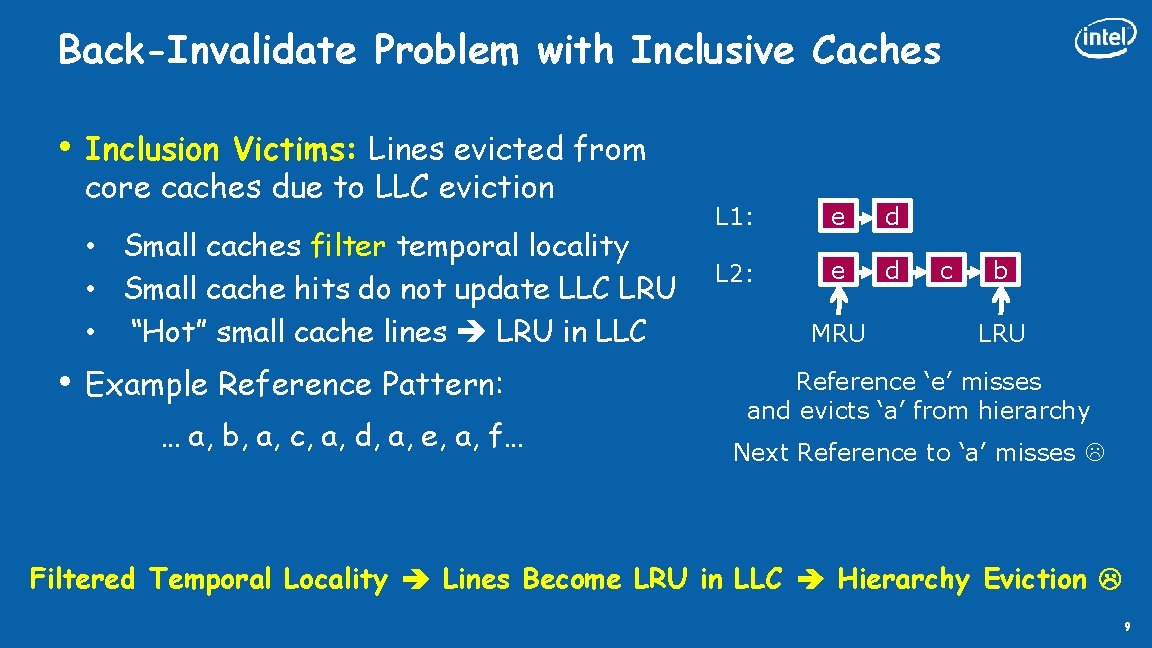

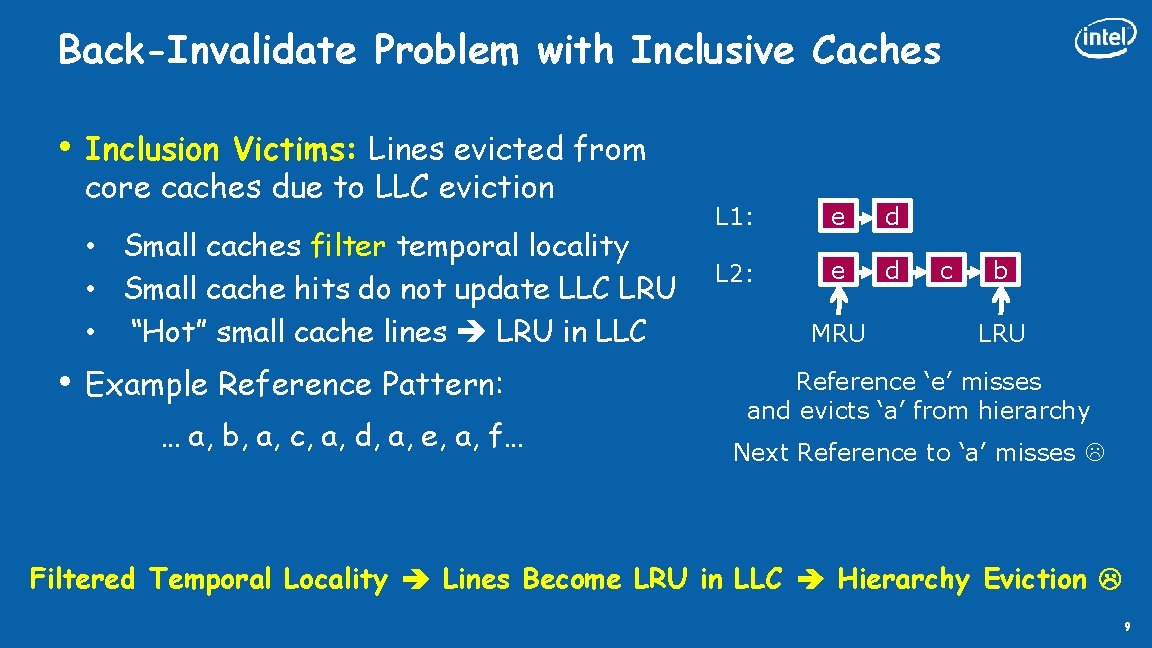

Back-Invalidate Problem with Inclusive Caches
• Inclusion victims: lines evicted from the core caches because of an LLC eviction
• Small caches filter temporal locality
  – Small-cache hits do not update the LLC's LRU state
  – "Hot" small-cache lines therefore become LRU in the LLC
• Example reference pattern: … a, b, a, c, a, d, a, e, a, f …
  – Reference 'e' misses and evicts 'a' from the entire hierarchy
  – The next reference to 'a' misses
[Figure: L1 and L2 contents, with the L2's MRU-to-LRU order, for the example reference pattern]
Filtered temporal locality: lines become LRU in the LLC, which leads to hierarchy eviction.
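
The example pattern can be replayed in a few lines of Python. This is a minimal sketch, assuming a 2-line core cache over a 4-line inclusive LLC, both fully associative with true LRU; the sizes and the function name `simulate_inclusive` are illustrative choices, not parameters from the talk. Because L1 hits never reach the LLC, 'a' drifts to the LLC's LRU position and is back-invalidated when 'e' misses.

```python
from collections import OrderedDict


def simulate_inclusive(trace, l1_size=2, llc_size=4):
    """Inclusive two-level hierarchy of fully associative LRU caches.

    Returns (outcomes, inclusion_victims). The LLC's LRU state changes only
    when the LLC itself is accessed, i.e. on an L1 miss.
    """
    l1, llc = OrderedDict(), OrderedDict()  # keys run from LRU (first) to MRU (last)
    outcomes, inclusion_victims = [], []
    for addr in trace:
        if addr in l1:
            l1.move_to_end(addr)            # L1 hit: invisible to the LLC
            outcomes.append("L1")
            continue
        if addr in llc:
            llc.move_to_end(addr)           # LLC hit: promote to MRU in the LLC
            outcomes.append("LLC")
        else:
            outcomes.append("MEM")
            if len(llc) >= llc_size:
                victim, _ = llc.popitem(last=False)  # evict the LLC's LRU line
                if victim in l1:                     # back-invalidate to keep inclusion
                    del l1[victim]
                    inclusion_victims.append(victim)
            llc[addr] = None
        if len(l1) >= l1_size:
            l1.popitem(last=False)          # silent L1 eviction (clean lines assumed)
        l1[addr] = None
    return outcomes, inclusion_victims


outcomes, victims = simulate_inclusive("a b a c a d a e a f".split())
print(outcomes)  # ['MEM', 'MEM', 'L1', 'MEM', 'L1', 'MEM', 'L1', 'MEM', 'MEM', 'MEM']
print(victims)   # ['a']
```

The ninth reference ('a') goes all the way to memory even though 'a' was the most frequently used line in the trace.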

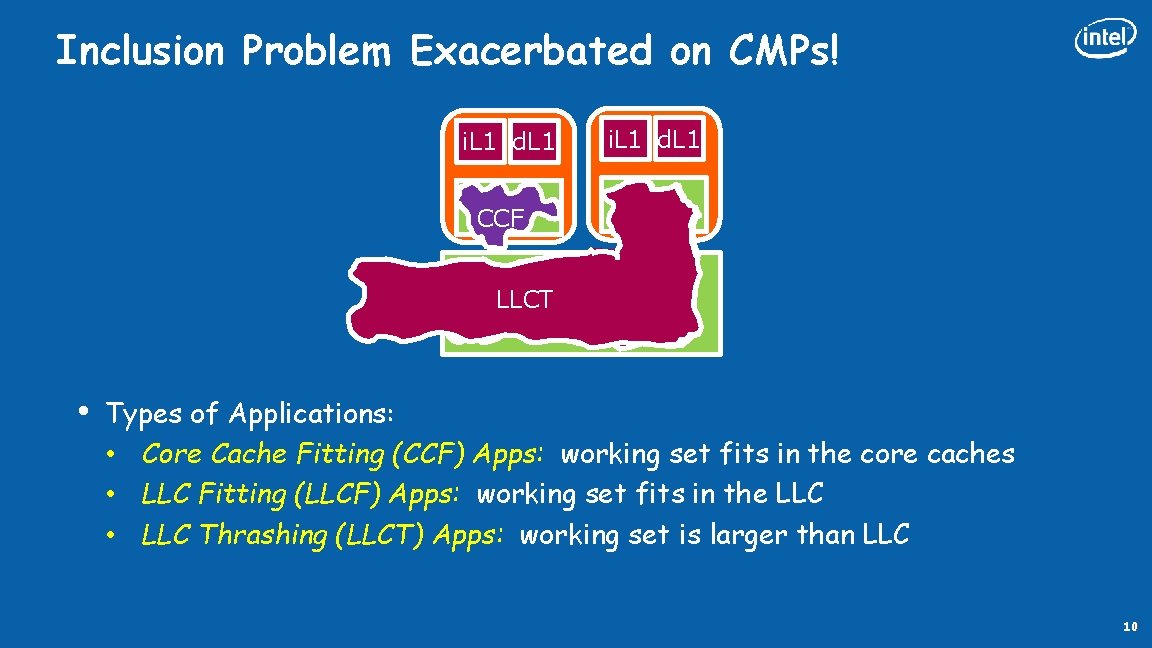

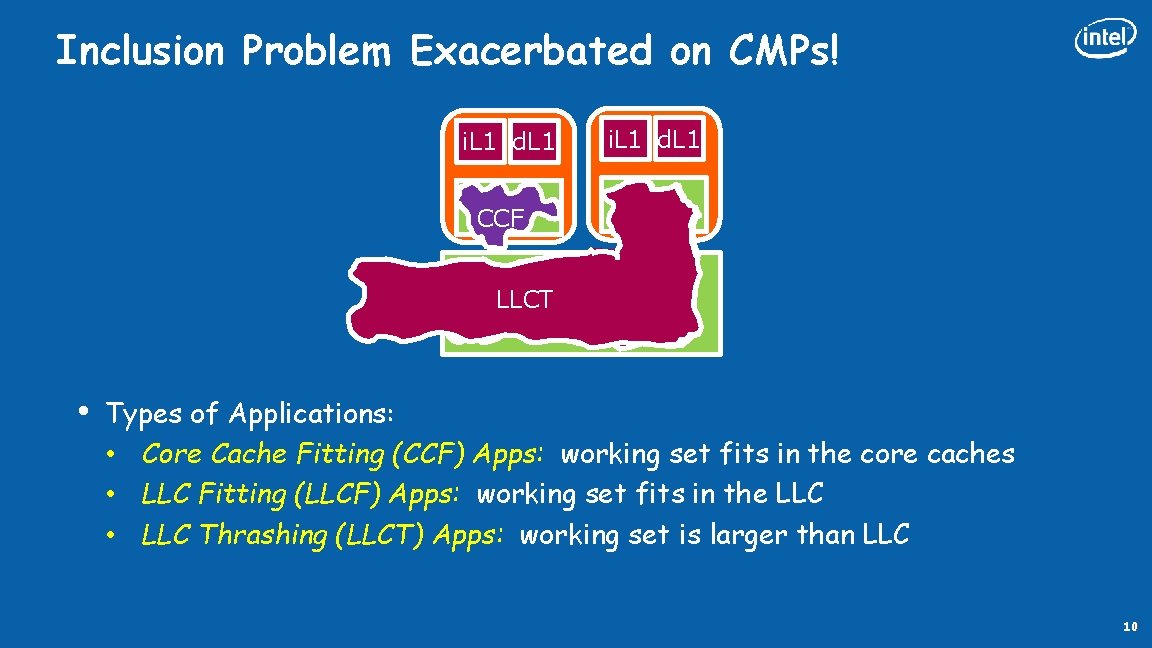

Inclusion Problem Exacerbated on CMPs!
[Figure: two cores, each with a private iL1, dL1, and L2, sharing an LLC; CCF, LLCF, and LLCT apps]
• Types of applications:
  – Core Cache Fitting (CCF) apps: working set fits in the core caches
  – LLC Fitting (LLCF) apps: working set fits in the LLC
  – LLC Thrashing (LLCT) apps: working set is larger than the LLC

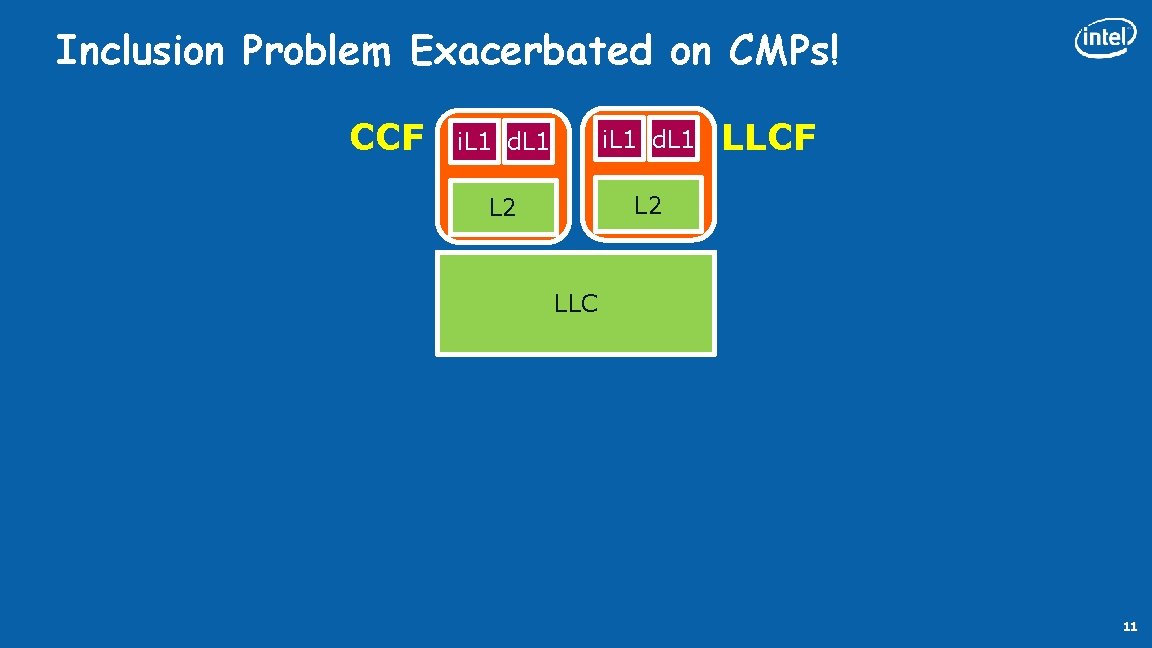

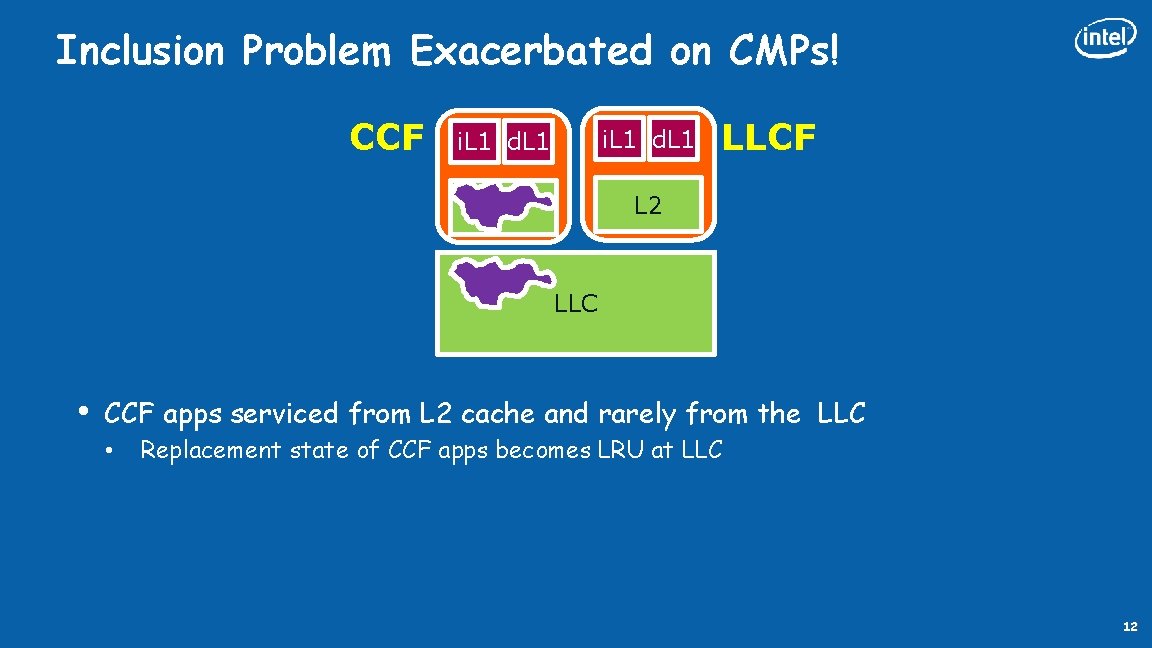

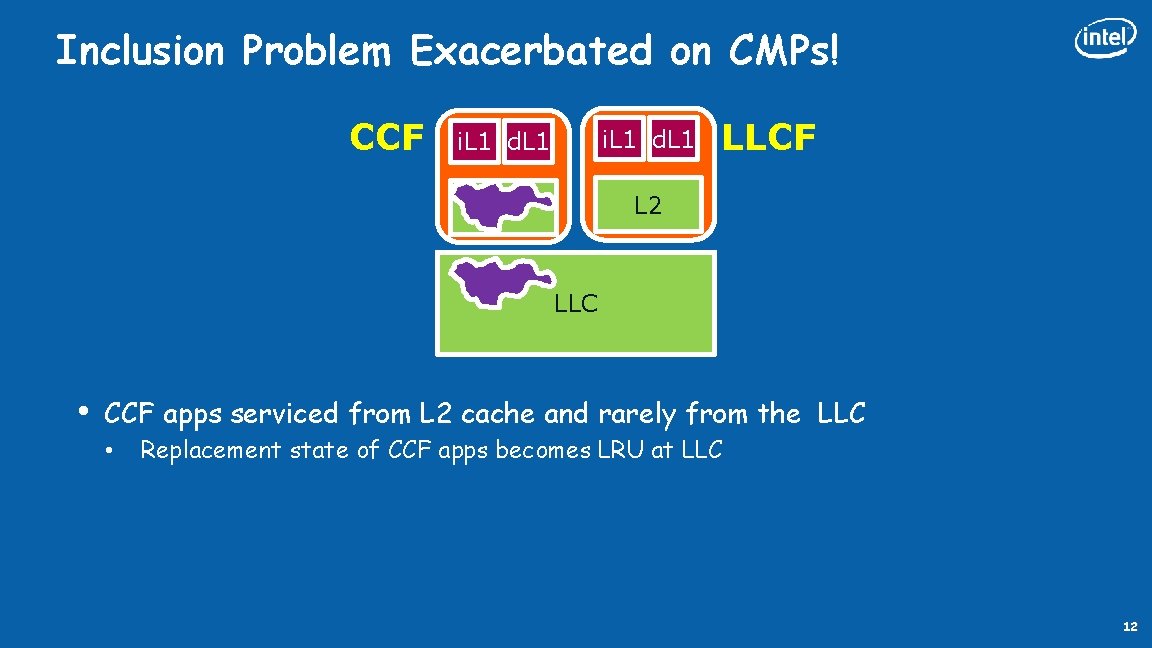

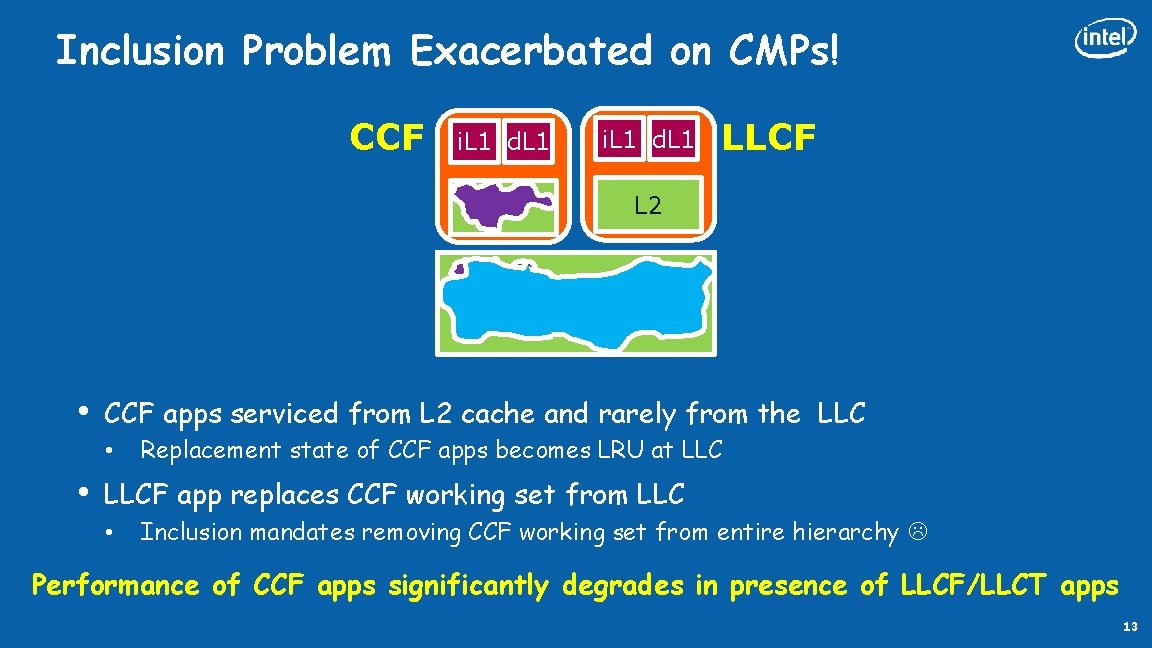

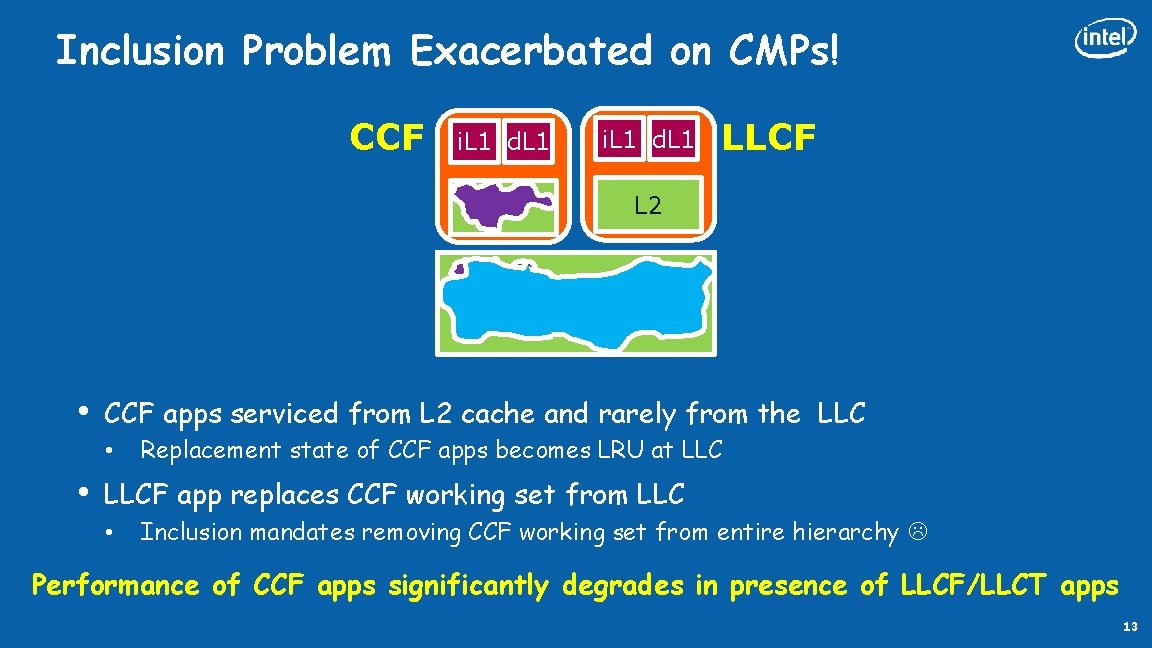

Inclusion Problem Exacerbated on CMPs!
[Figure: a CCF app and an LLCF app, each on its own core with iL1, dL1, and L2, sharing the LLC]
• CCF apps are serviced from the L2 cache and rarely from the LLC
  – The replacement state of CCF lines therefore becomes LRU at the LLC
• The LLCF app replaces the CCF working set in the LLC
  – Inclusion mandates removing the CCF working set from the entire hierarchy
Performance of CCF apps degrades significantly in the presence of LLCF/LLCT apps.
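
A two-core version of the same effect, as a hedged sketch: core 0 runs a hypothetical CCF loop over two lines that fit in its private cache, and core 1 runs a hypothetical LLCF loop over three lines that fit in a 4-line shared LLC but not in its own 2-line private cache. All sizes, names (`LRUCache`, `ccf_misses`), and the round-robin interleaving are assumptions for illustration. The `inclusive` flag toggles back-invalidation, so the same run also approximates a non-inclusive LLC.

```python
from collections import OrderedDict
from typing import Optional


class LRUCache:
    """Fully associative LRU cache; keys run from LRU (first) to MRU (last)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.lines = OrderedDict()

    def hit(self, addr: str) -> bool:
        if addr in self.lines:
            self.lines.move_to_end(addr)  # promote to MRU
            return True
        return False

    def fill(self, addr: str) -> Optional[str]:
        """Insert addr at MRU and return the evicted LRU line, if any."""
        victim = None
        if len(self.lines) >= self.capacity:
            victim, _ = self.lines.popitem(last=False)
        self.lines[addr] = None
        return victim

    def invalidate(self, addr: str) -> None:
        self.lines.pop(addr, None)


def ccf_misses(inclusive: bool, rounds: int = 12) -> int:
    """Run a CCF loop on core 0 next to an LLCF loop on core 1; return core 0's misses."""
    private = [LRUCache(2), LRUCache(2)]  # per-core private caches (hypothetical size)
    llc = LRUCache(4)                     # shared LLC (hypothetical size)
    ccf = ["x0", "x1"]                    # fits in core 0's private cache
    llcf = ["y0", "y1", "y2"]             # fits in the LLC, not in core 1's private cache
    misses = 0
    for i in range(rounds):
        for core, addr in ((0, ccf[i % 2]), (1, llcf[i % 3])):
            if private[core].hit(addr):
                continue                  # private hit: the LLC never sees it
            if core == 0:
                misses += 1
            if not llc.hit(addr):
                victim = llc.fill(addr)
                if inclusive and victim is not None:
                    for cache in private:  # back-invalidate to preserve inclusion
                        cache.invalidate(victim)
            private[core].fill(addr)      # private victims dropped (clean lines assumed)
    return misses


print(ccf_misses(inclusive=False))    # 2
print(ccf_misses(inclusive=True) > 2)  # True
```

Without back-invalidates, core 0 takes only its two compulsory misses; with inclusion enabled, the LLCF loop keeps pushing core 0's lines to the LLC's LRU position, and core 0 misses repeatedly on lines it never stopped using.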

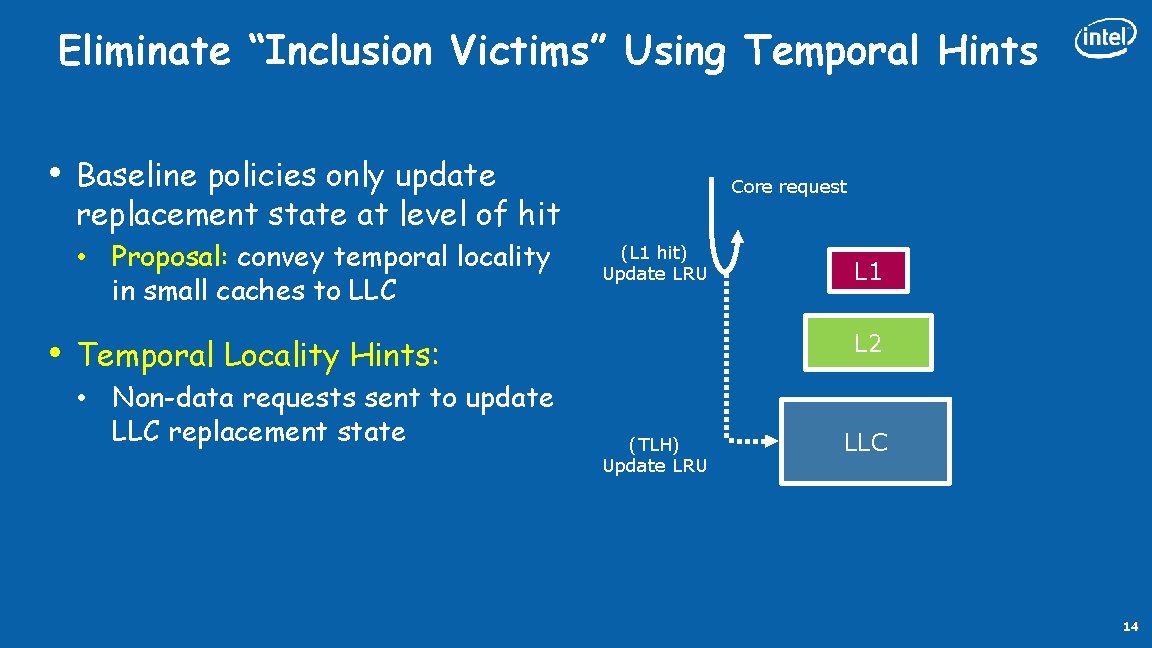

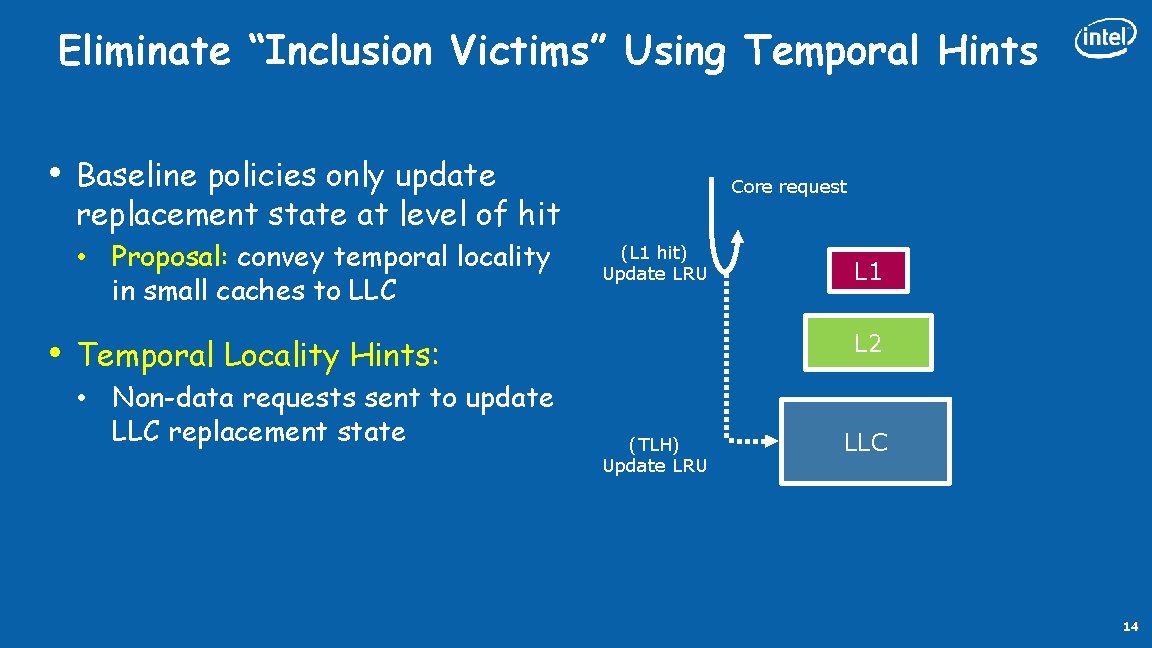

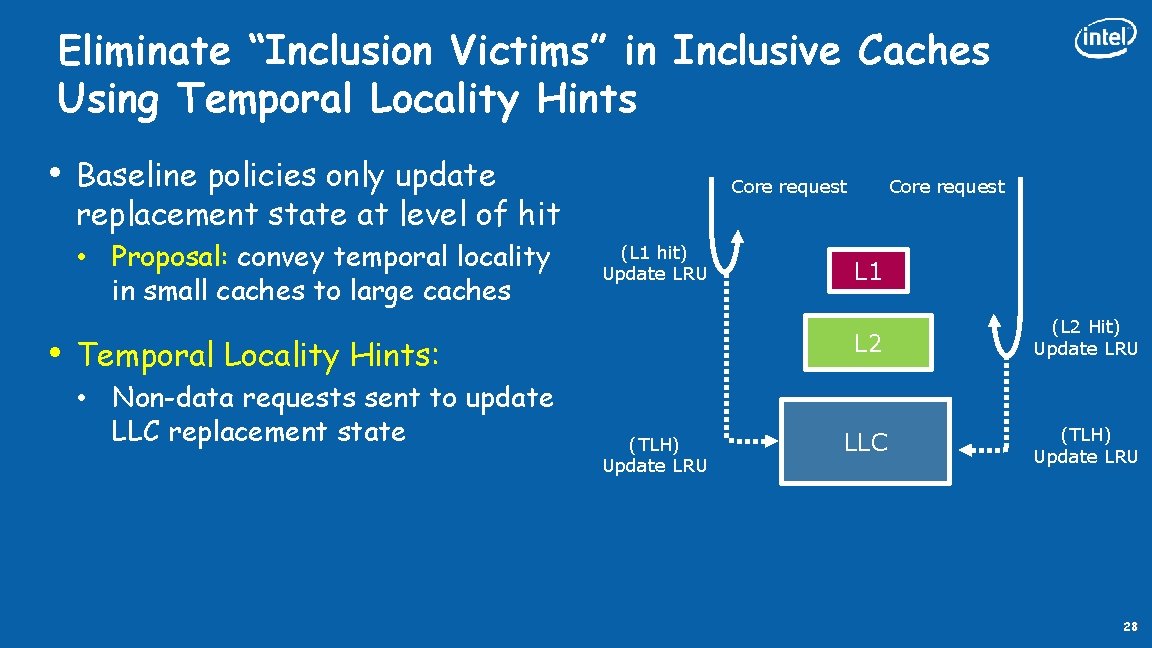

Eliminate "Inclusion Victims" Using Temporal Hints
• Baseline policies only update replacement state at the level of the hit
• Proposal: convey the temporal locality seen in small caches to the LLC
• Temporal Locality Hints (TLH): non-data requests sent to update the LLC replacement state
[Figure: a core request that hits in the L1 updates the L1 LRU state and sends a TLH that updates the LLC LRU state]
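
Temporal locality hints can be sketched as one extra step on the L1-hit path of an inclusive hierarchy: on every L1 hit, a non-data message promotes the line in the LLC. This is an illustrative model only, assuming a hypothetical 2-line L1 over a 4-line inclusive LLC with true LRU; `simulate_tlh` and `send_hints` are made-up names. Counting the hints also exposes the cost the talk points out: one message per L1 hit.

```python
from collections import OrderedDict


def simulate_tlh(trace, l1_size=2, llc_size=4, send_hints=True):
    """Inclusive two-level LRU hierarchy with optional L1 temporal locality hints.

    Returns (outcomes, inclusion_victims, hint_messages).
    """
    l1, llc = OrderedDict(), OrderedDict()  # keys run from LRU (first) to MRU (last)
    outcomes, inclusion_victims, hints = [], [], 0
    for addr in trace:
        if addr in l1:
            l1.move_to_end(addr)
            outcomes.append("L1")
            if send_hints:
                llc.move_to_end(addr)       # TLH: non-data message, only updates LLC LRU
                hints += 1                  # one message per L1 hit
            continue
        if addr in llc:
            llc.move_to_end(addr)
            outcomes.append("LLC")
        else:
            outcomes.append("MEM")
            if len(llc) >= llc_size:
                victim, _ = llc.popitem(last=False)
                if victim in l1:            # back-invalidate to keep inclusion
                    del l1[victim]
                    inclusion_victims.append(victim)
            llc[addr] = None
        if len(l1) >= l1_size:
            l1.popitem(last=False)          # silent L1 eviction (clean lines assumed)
        l1[addr] = None
    return outcomes, inclusion_victims, hints


pattern = "a b a c a d a e a f".split()
print(simulate_tlh(pattern, send_hints=False)[1])  # ['a']
print(simulate_tlh(pattern, send_hints=True)[1:])  # ([], 4)
```

On the pattern … a, b, a, c, a, d, a, e, a, f …, the hints keep 'a' away from the LLC's LRU position, so 'e' evicts 'b' instead and the ninth reference ('a') hits in the L1; the price is four hint messages, one per L1 hit.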

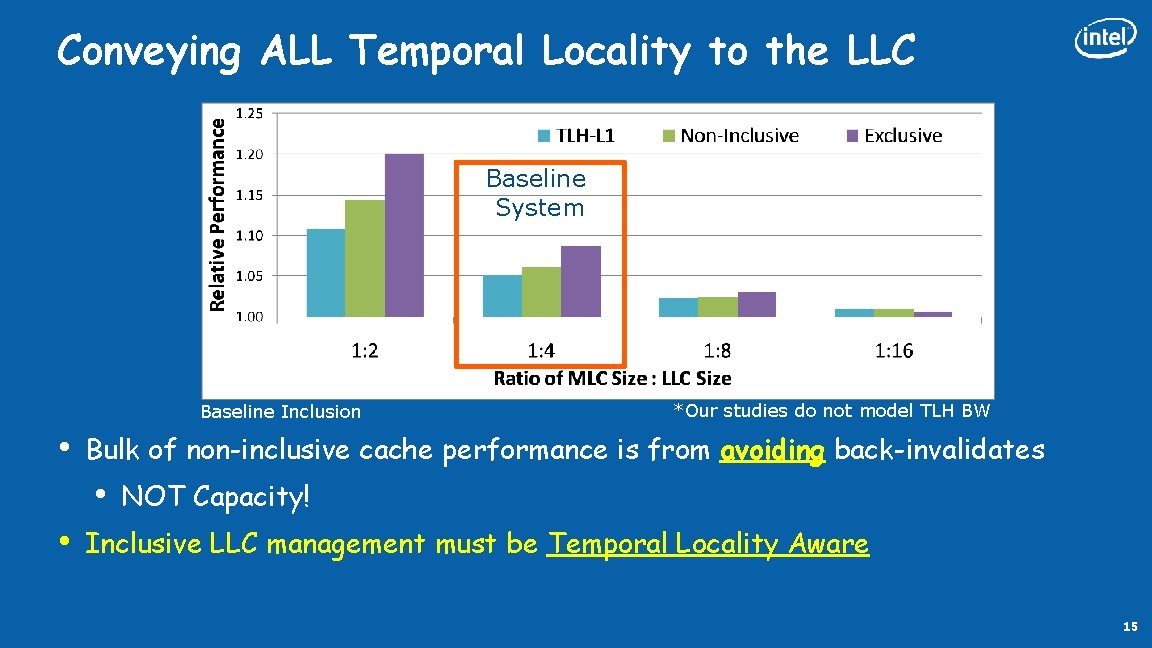

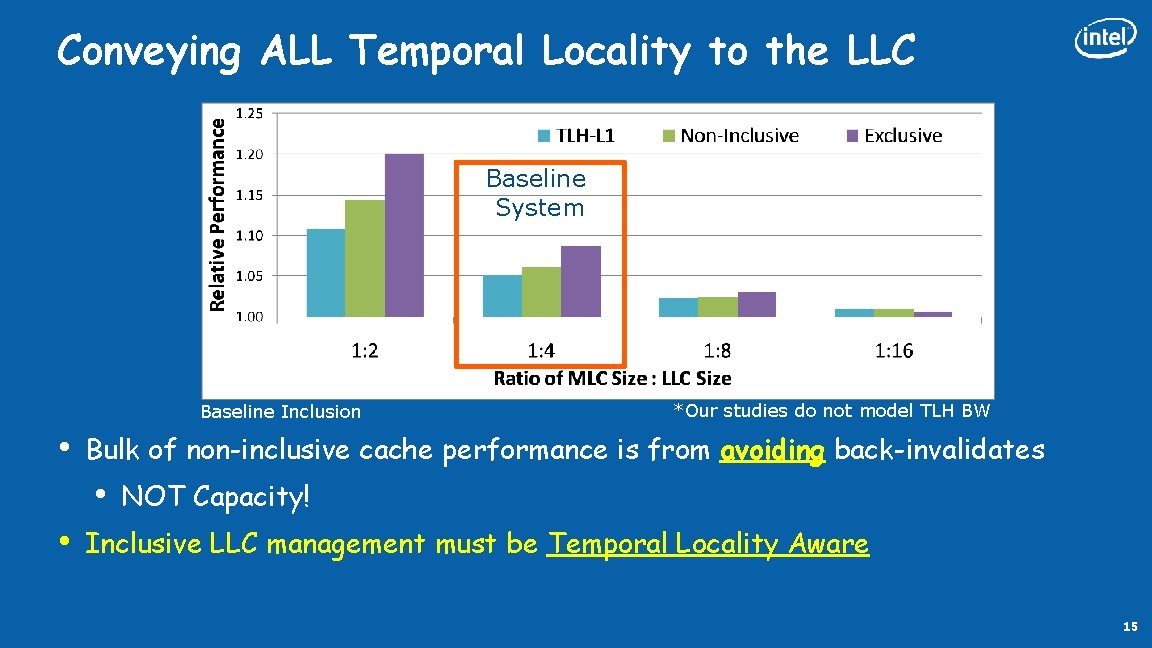

Conveying ALL Temporal Locality to the LLC
[Figure: performance of the baseline system with temporal locality hints, relative to baseline inclusion]
• The bulk of non-inclusive cache performance comes from avoiding back-invalidates, NOT from extra capacity!
• Inclusive LLC management must be temporal locality aware
*Our studies do not model TLH bandwidth.

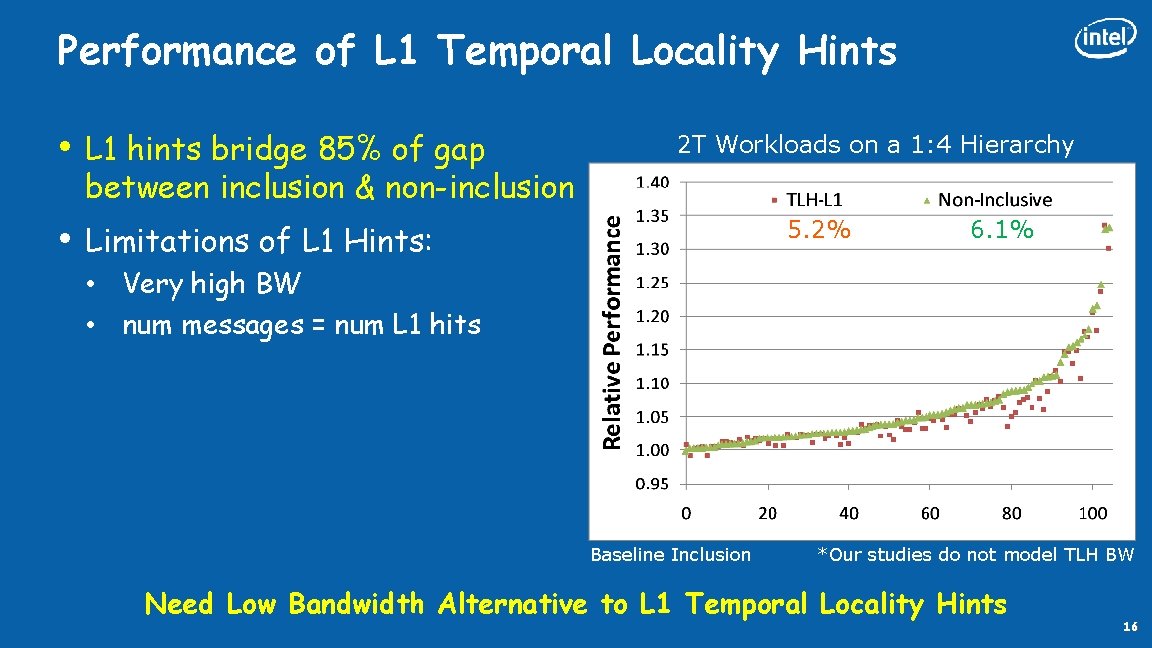

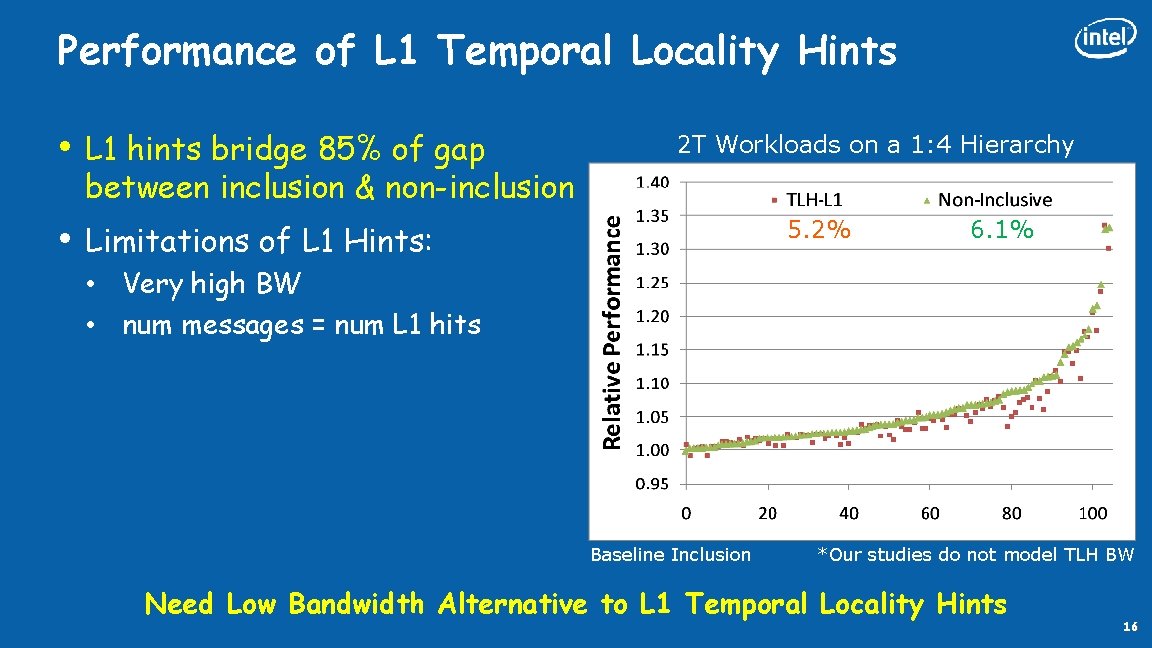

Performance of L1 Temporal Locality Hints
[Figure: 2T workloads on a 1:4 hierarchy, performance relative to baseline inclusion: L1 hints 5.2%, non-inclusion 6.1%]
• L1 hints bridge 85% of the gap between inclusion and non-inclusion
• Limitations of L1 hints:
  – Very high bandwidth: number of messages = number of L1 hits
*Our studies do not model TLH bandwidth.
Need a low-bandwidth alternative to L1 temporal locality hints.

Improving Inclusive Cache Performance
• Eliminate back-invalidates (i.e., build non-inclusive caches)
  – Increases coherence complexity
• Goal: retain the benefits of inclusion yet avoid inclusion victims
• Solution: Temporal Locality Aware (TLA) cache management
  – Ensure the LLC DOES NOT evict "hot" lines from the core caches
  – Must identify LLC lines that have high temporal locality in the core caches

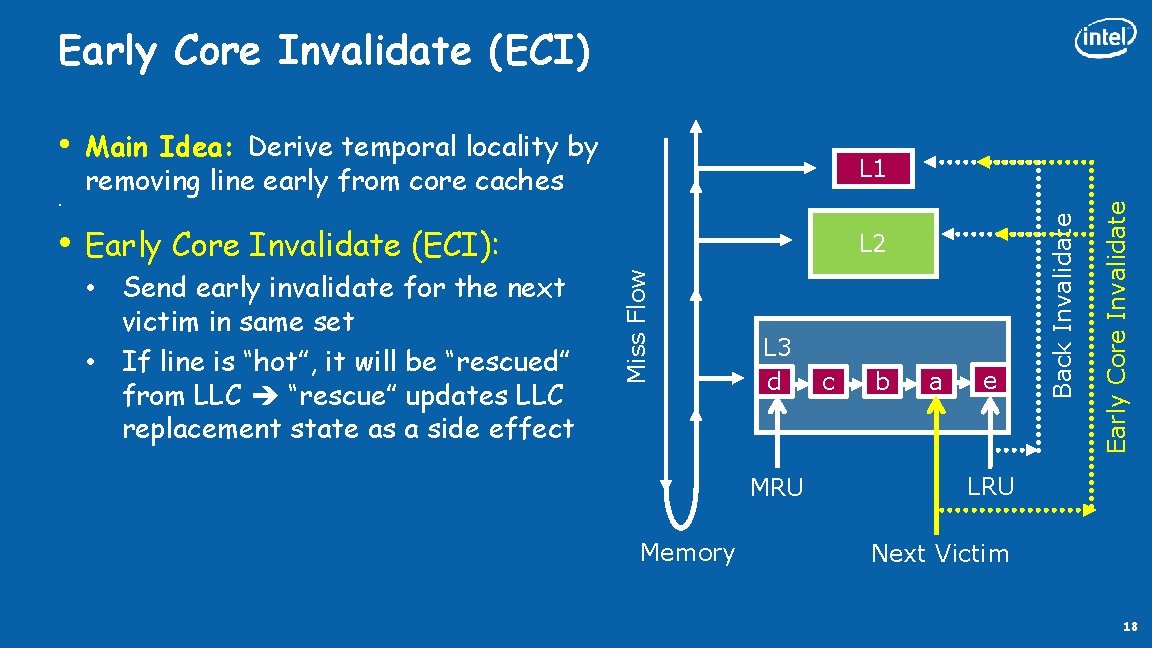

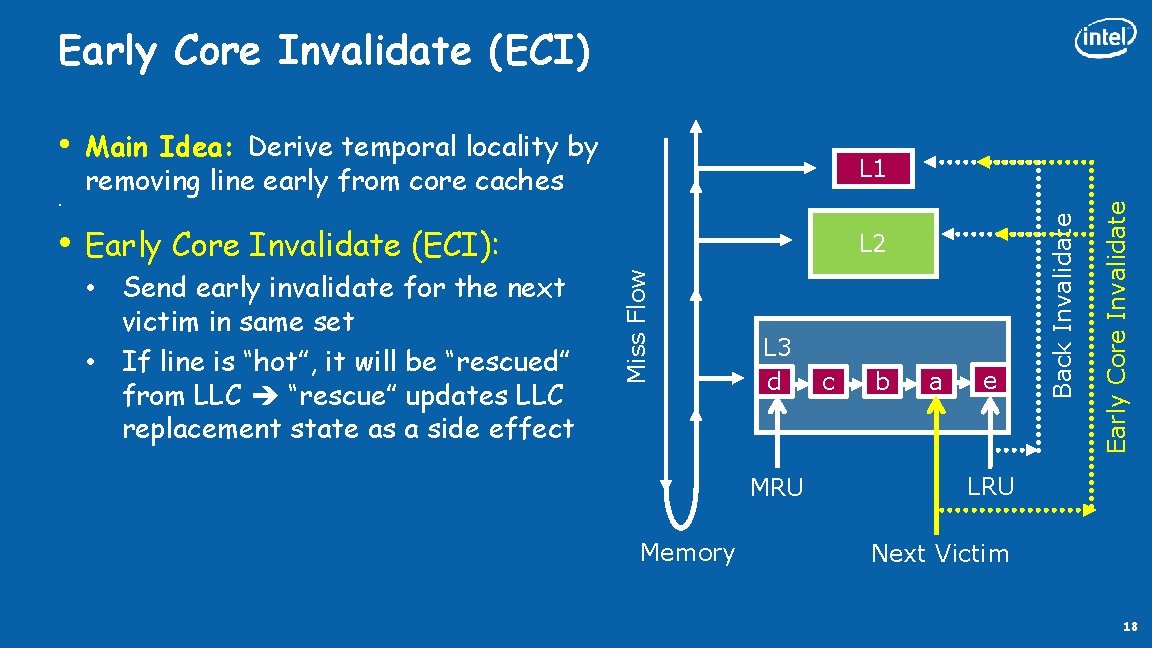

Early Core Invalidate (ECI)
• Main idea: derive temporal locality by removing a line early from the core caches
• Early Core Invalidate (ECI):
  – Send an early invalidate for the next victim in the same set
  – If the line is "hot", it will be "rescued" from the LLC: the core's next access misses in its own caches but hits in the LLC
  – The "rescue" updates the LLC replacement state as a side effect
[Figure: on a miss to e, the L3's LRU line is back-invalidated as usual (miss flow), and the next victim in the set is early-invalidated from the L1/L2 (ECI)]
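
A sketch of ECI on a toy model, assuming a hypothetical 2-line L1 over a 4-line inclusive LLC with true LRU; `simulate_eci` and its `eci` flag are illustrative names. After each LLC eviction, the new LRU line of the LLC (the next victim) is invalidated from the L1 but kept in the LLC. If the core touches it again before the next miss, it hits in the LLC and is promoted to MRU, which is the "rescue".

```python
from collections import OrderedDict


def simulate_eci(trace, l1_size=2, llc_size=4, eci=True):
    """Inclusive two-level LRU hierarchy with optional Early Core Invalidate.

    Returns (outcomes, inclusion_victims, rescues).
    """
    l1, llc = OrderedDict(), OrderedDict()  # keys run from LRU (first) to MRU (last)
    outcomes, inclusion_victims, rescues = [], [], 0
    early_invalidated = set()               # lines removed from the L1 by ECI
    for addr in trace:
        if addr in l1:
            l1.move_to_end(addr)
            outcomes.append("L1")
            continue
        if addr in llc:
            llc.move_to_end(addr)           # LLC hit updates LLC replacement state
            outcomes.append("LLC")
            if addr in early_invalidated:
                rescues += 1                # "rescue": hot line re-fetched from the LLC
        else:
            outcomes.append("MEM")
            full = len(llc) >= llc_size
            if full:
                victim, _ = llc.popitem(last=False)
                if victim in l1:            # normal back-invalidate
                    del l1[victim]
                    inclusion_victims.append(victim)
            llc[addr] = None
            if eci and full:
                next_victim = next(iter(llc))  # new LRU line = next victim in this set
                if next_victim in l1:
                    del l1[next_victim]        # early invalidate; line stays in the LLC
                    early_invalidated.add(next_victim)
        early_invalidated.discard(addr)     # addr is about to be back in the L1
        if len(l1) >= l1_size:
            l1.popitem(last=False)          # silent L1 eviction (clean lines assumed)
        l1[addr] = None
    return outcomes, inclusion_victims, rescues


trace = "a b a c a d a e a f a g a h a i a".split()
print(simulate_eci(trace, eci=False)[1:])  # (['a', 'a'], 0)
print(simulate_eci(trace, eci=True)[1:])   # (['a'], 1)
```

On this longer pattern, the baseline back-invalidates 'a' twice and the final reference misses. With ECI, 'a' is rescued once and the final reference hits in the L1; the first loss of 'a' still happens because 'a' is already the LLC's LRU line at the very first eviction, and ECI only acts on the next victim.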

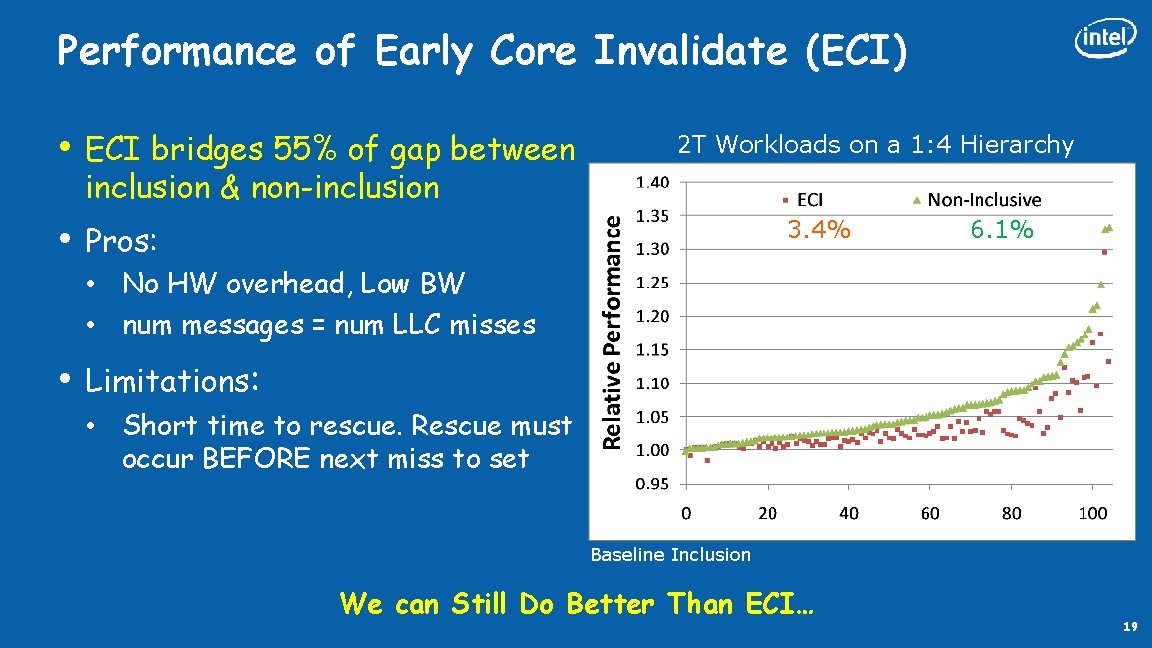

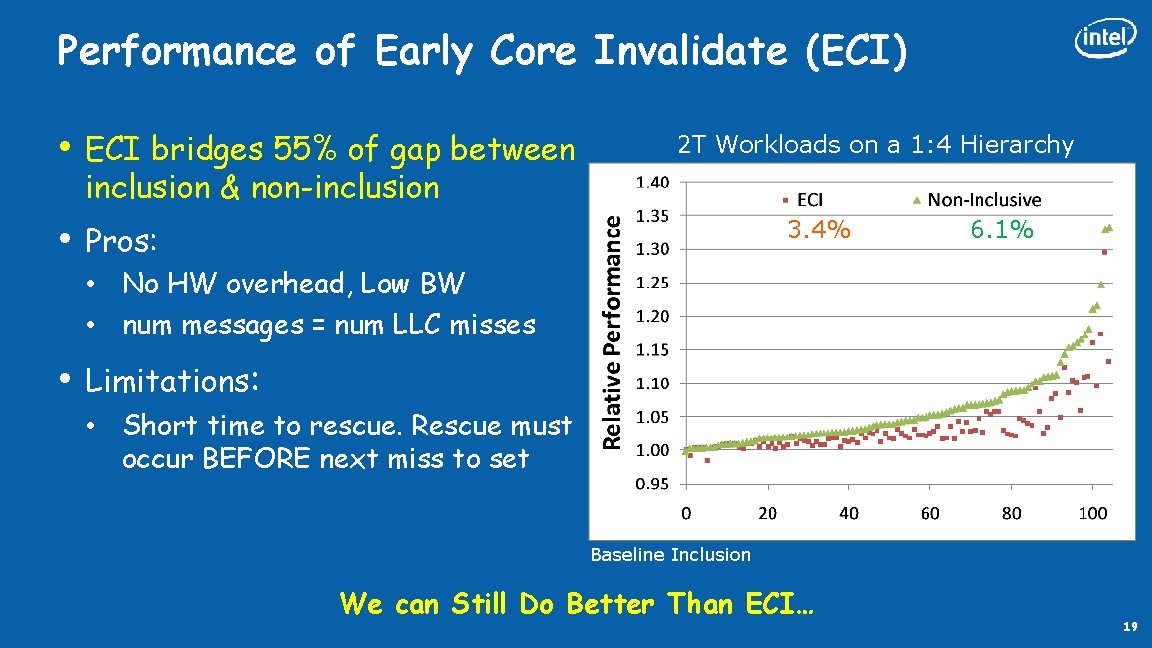

Performance of Early Core Invalidate (ECI)
[Figure: 2T workloads on a 1:4 hierarchy, performance relative to baseline inclusion: ECI 3.4%, non-inclusion 6.1%]
• ECI bridges 55% of the gap between inclusion and non-inclusion
• Pros:
  – No hardware overhead, low bandwidth: number of messages = number of LLC misses
• Limitations:
  – Short time to rescue: the rescue must occur BEFORE the next miss to the set
We can still do better than ECI…

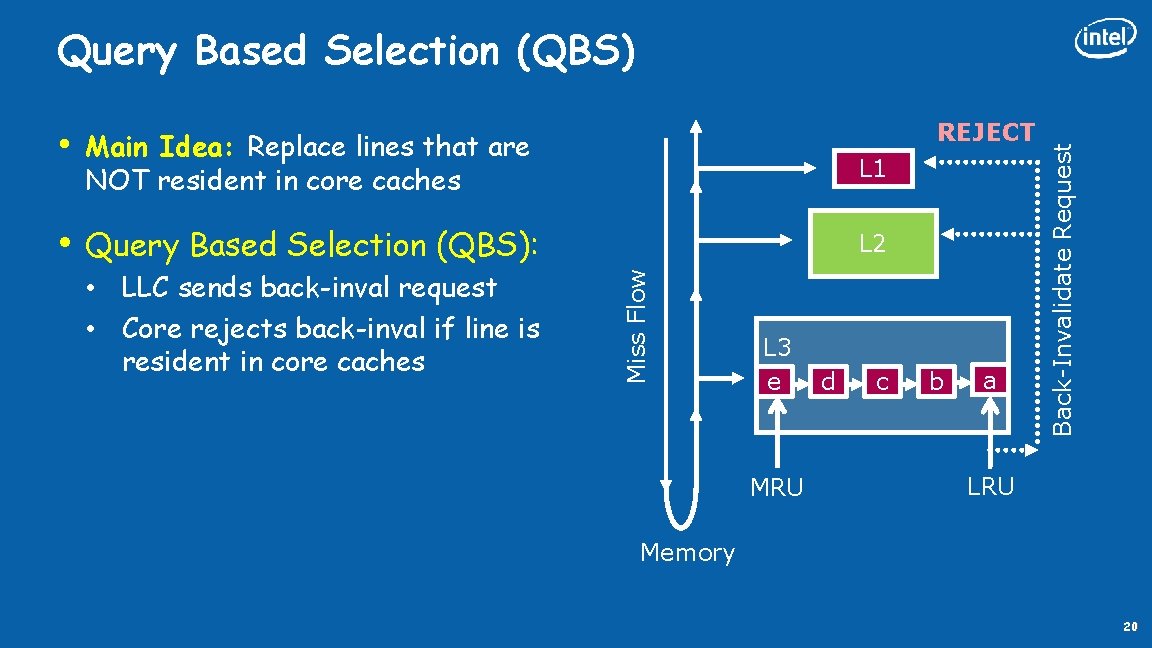

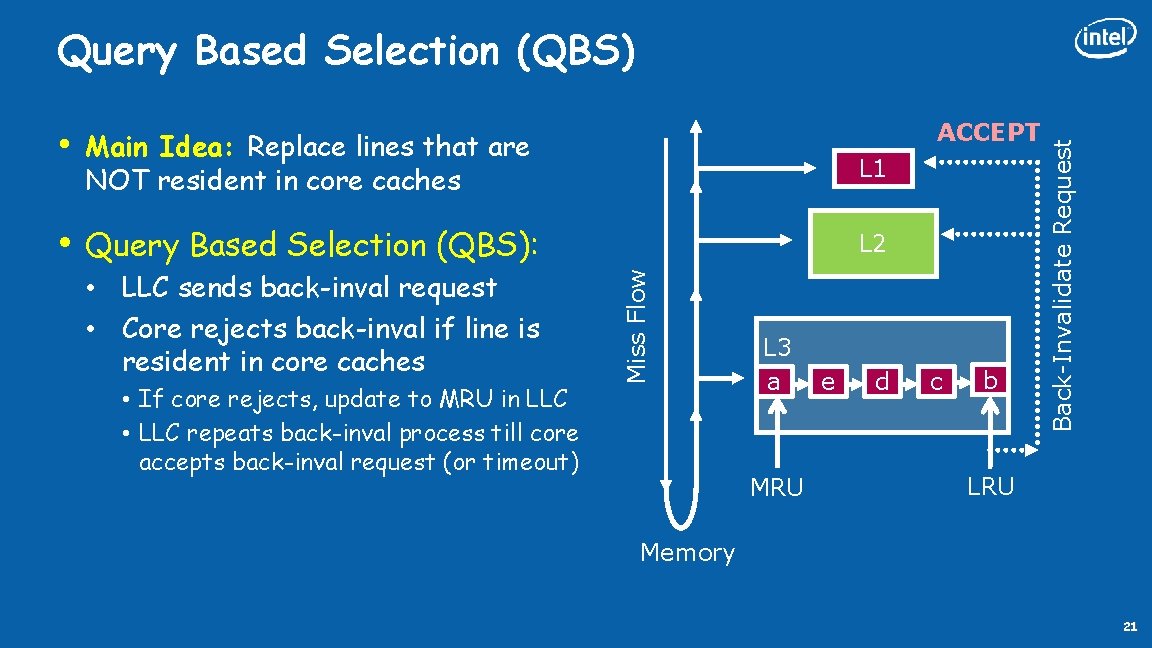

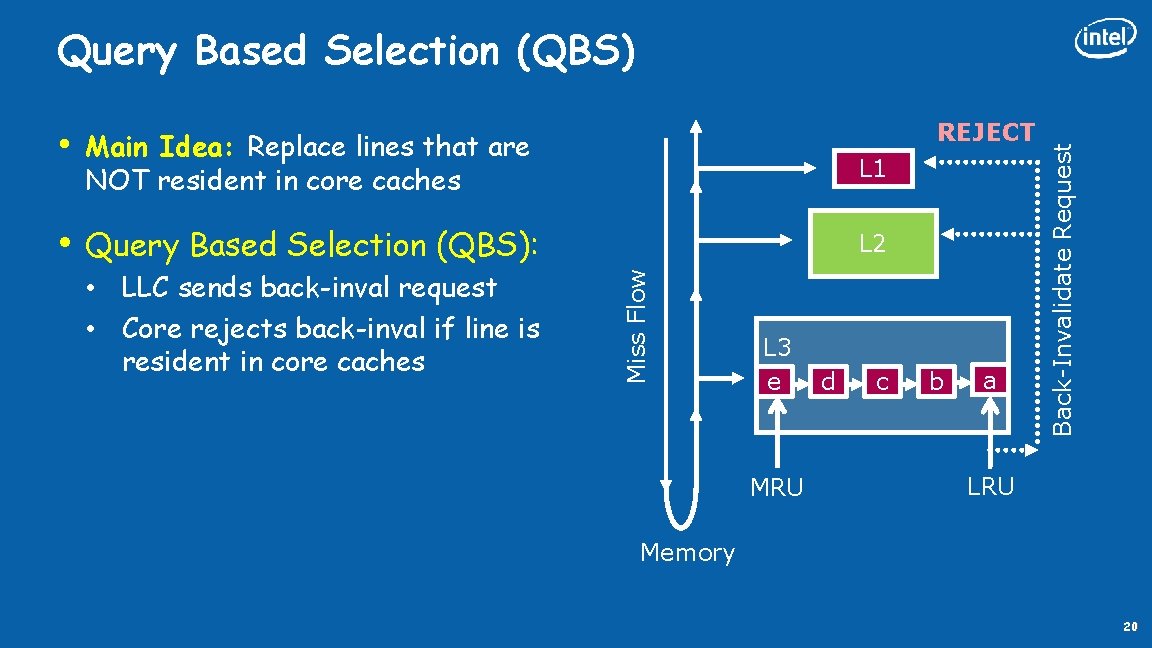

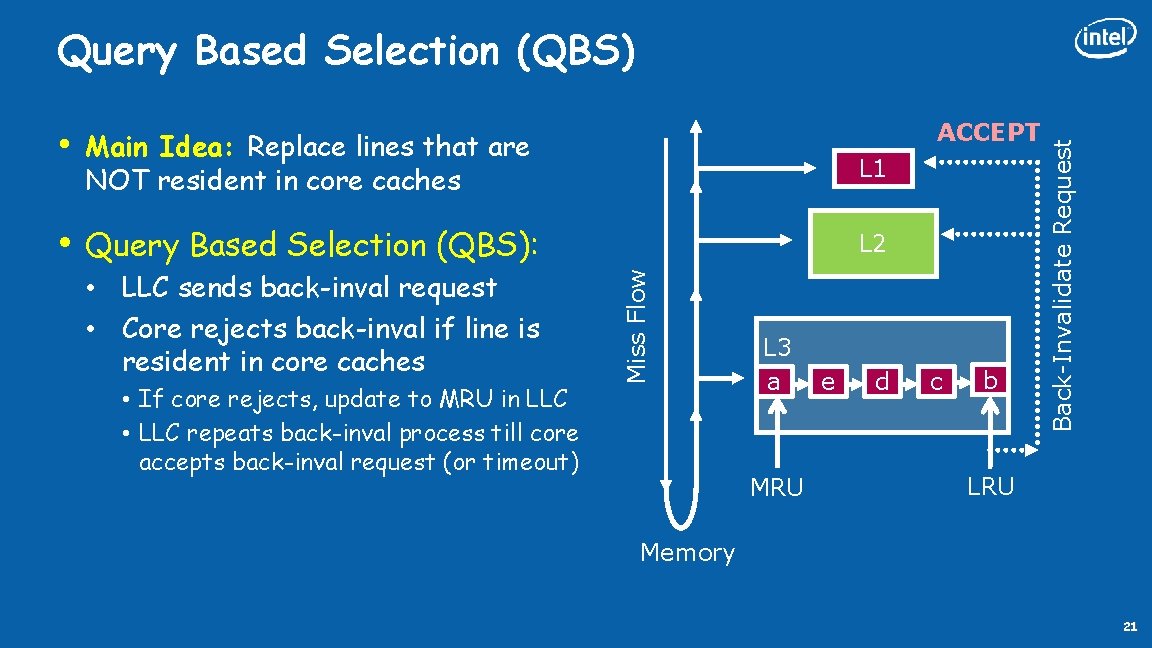

Query Based Selection (QBS)
• Main idea: replace lines that are NOT resident in the core caches
• Query Based Selection (QBS):
  – The LLC sends a back-invalidate request
  – The core rejects the back-invalidate if the line is resident in the core caches
  – If the core rejects, the line is updated to MRU in the LLC
  – The LLC repeats the back-invalidate process until the core accepts the request (or a timeout occurs)
[Figure: on a miss, the LLC's LRU line a is still resident in the core caches, so the core REJECTs the back-invalidate and a moves to MRU; a later back-invalidate request is ACCEPTed]
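
QBS victim selection fits in a small helper. This is a hedged sketch on a toy model (hypothetical 2-line L1 over a 4-line inclusive LLC, true LRU); `qbs_select_victim`, `simulate_qbs`, and `max_tries` are illustrative names. The default of two tries follows the talk's finding that a maximum of two tries is sufficient, and evicting the current LRU line anyway after a timeout is an assumption, since the slide does not say what happens then.

```python
from collections import OrderedDict


def qbs_select_victim(llc, core_lines, max_tries=2):
    """Return (line to evict, number of rejected back-invalidate queries)."""
    rejections = 0
    for _ in range(max_tries):
        candidate = next(iter(llc))       # current LRU line in the LLC
        if candidate not in core_lines:   # core ACCEPTS the back-invalidate
            return candidate, rejections
        llc.move_to_end(candidate)        # core REJECTS: promote to MRU, query again
        rejections += 1
    return next(iter(llc)), rejections    # timeout: evict the LRU line anyway (assumed)


def simulate_qbs(trace, l1_size=2, llc_size=4, max_tries=2):
    """Inclusive two-level LRU hierarchy with QBS victim selection in the LLC."""
    l1, llc = OrderedDict(), OrderedDict()  # keys run from LRU (first) to MRU (last)
    outcomes, inclusion_victims, total_rejections = [], [], 0
    for addr in trace:
        if addr in l1:
            l1.move_to_end(addr)
            outcomes.append("L1")
            continue
        if addr in llc:
            llc.move_to_end(addr)
            outcomes.append("LLC")
        else:
            outcomes.append("MEM")
            if len(llc) >= llc_size:
                victim, rejected = qbs_select_victim(llc, l1, max_tries)
                total_rejections += rejected
                del llc[victim]
                if victim in l1:            # only possible after a timeout
                    del l1[victim]
                    inclusion_victims.append(victim)
            llc[addr] = None
        if len(l1) >= l1_size:
            l1.popitem(last=False)          # silent L1 eviction (clean lines assumed)
        l1[addr] = None
    return outcomes, inclusion_victims, total_rejections


out, victims, rejections = simulate_qbs("a b a c a d a e a f".split())
print(out[8], victims, rejections)  # L1 [] 1
```

On … a, b, a, c, a, d, a, e, a, f …, the core rejects the back-invalidate of 'a' once, 'b' is evicted instead, and the next reference to 'a' hits in the L1. Messages are sent only on LLC misses, which is why QBS keeps bandwidth low.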

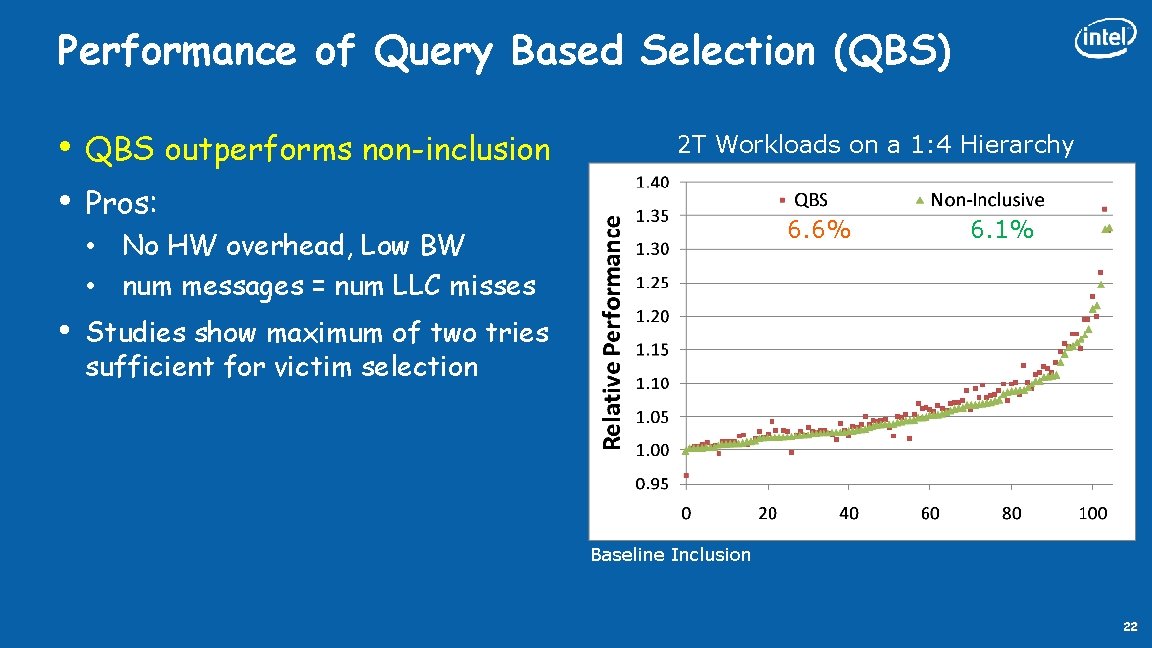

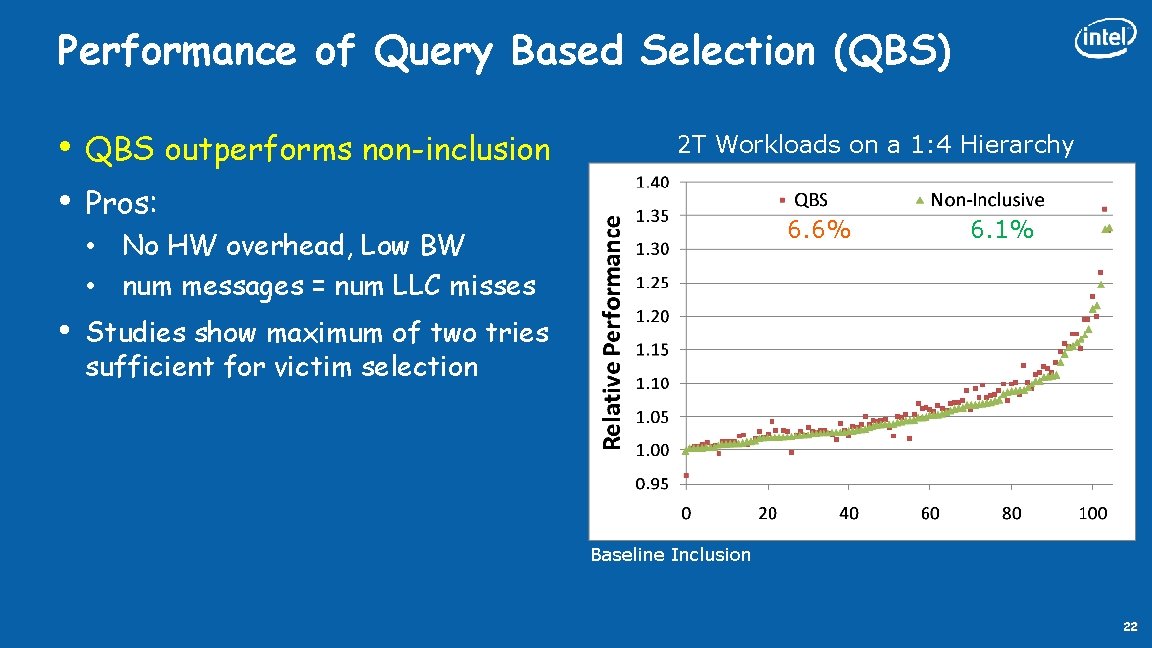

Performance of Query Based Selection (QBS)
[Figure: 2T workloads on a 1:4 hierarchy, performance relative to baseline inclusion: QBS 6.6%, non-inclusion 6.1%]
• QBS outperforms non-inclusion
• Pros:
  – No hardware overhead, low bandwidth: number of messages = number of LLC misses
• Studies show that a maximum of two tries is sufficient for victim selection

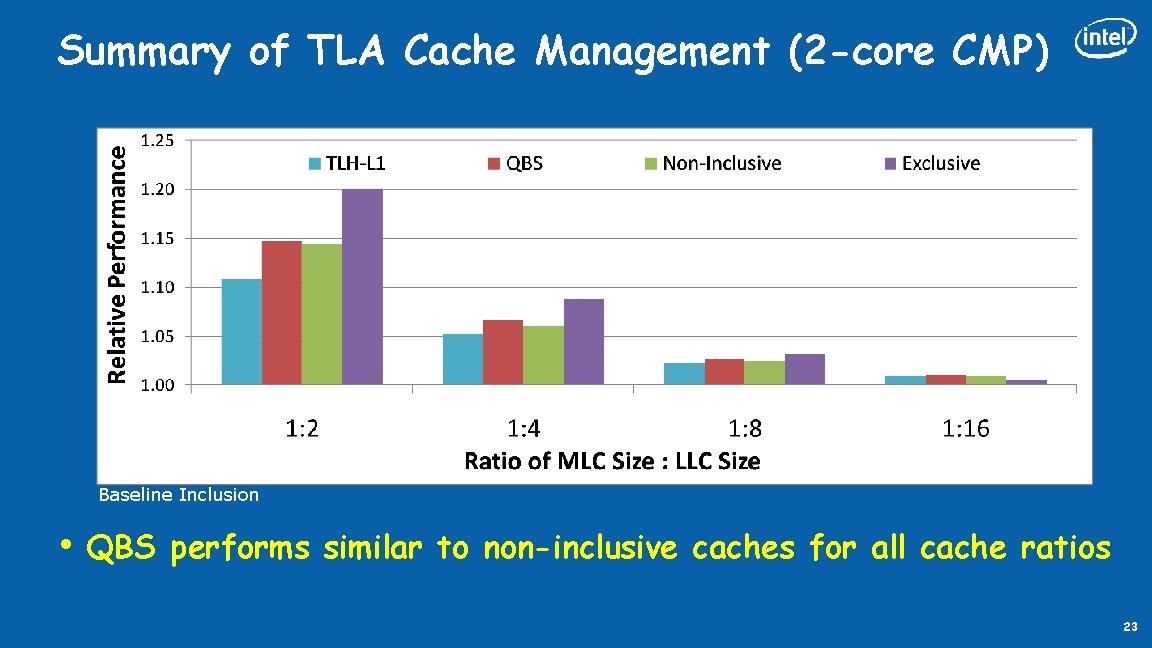

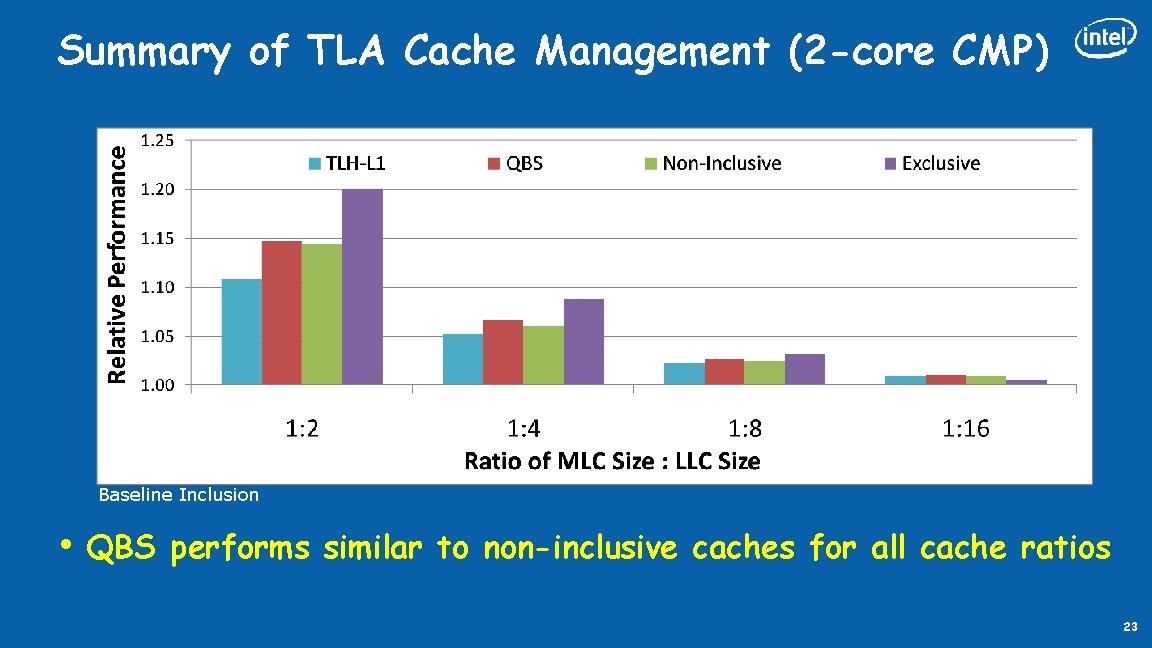

Summary of TLA Cache Management (2-core CMP)
[Figure: performance relative to baseline inclusion across cache ratios]
• QBS performs similarly to non-inclusive caches for all cache ratios

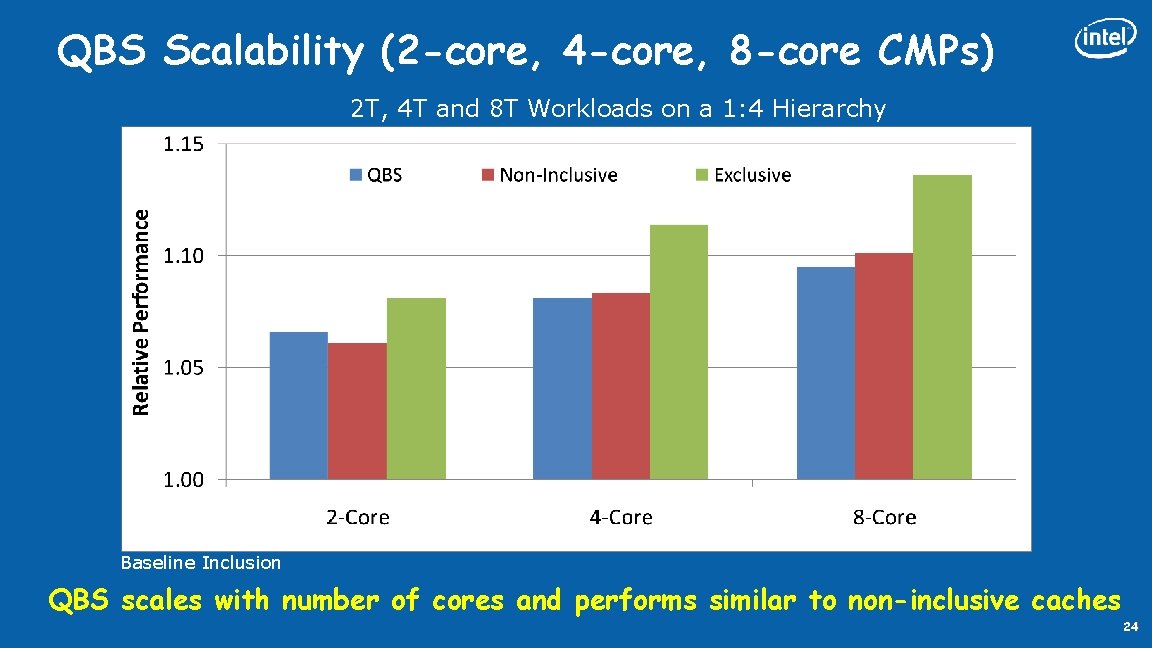

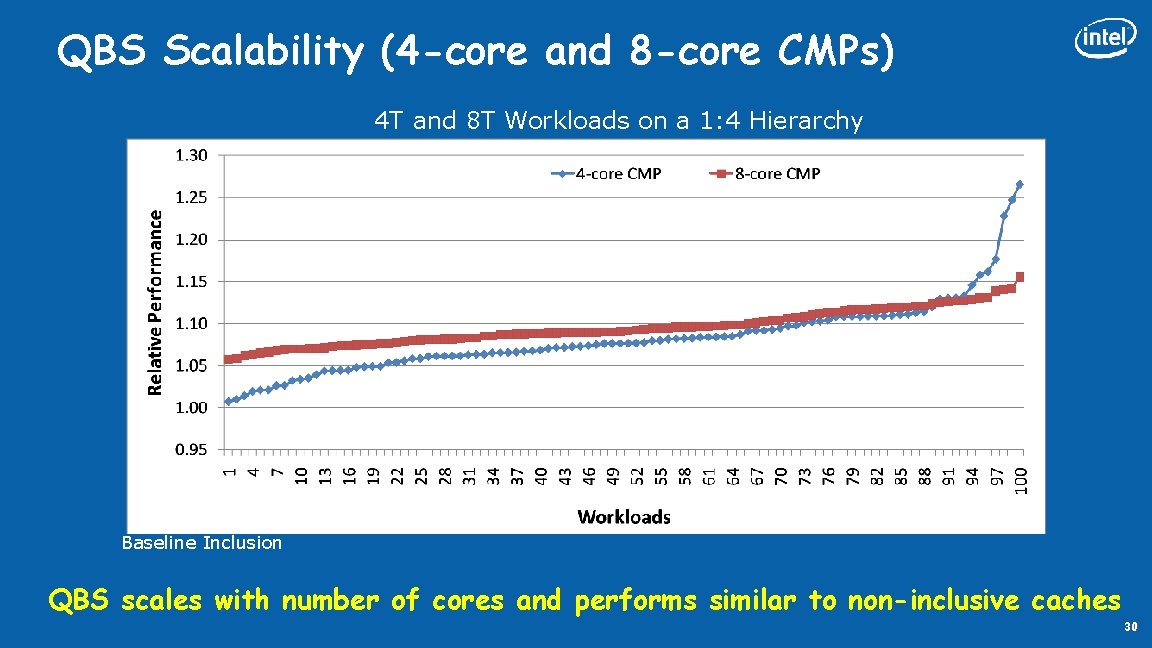

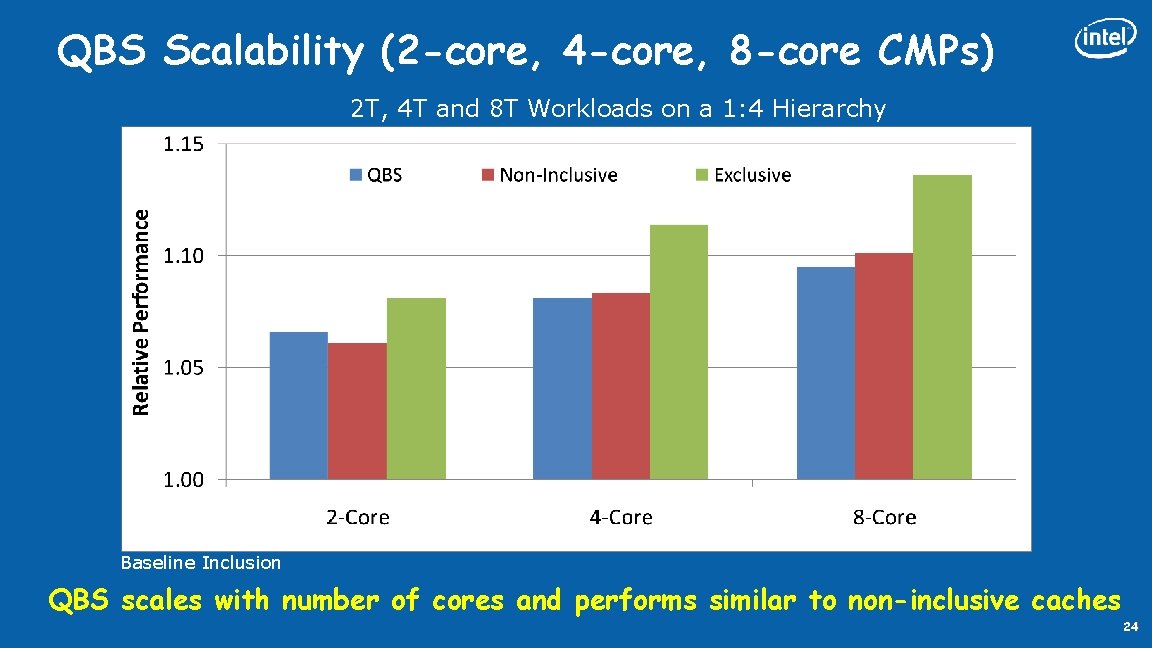

QBS Scalability (2-core, 4-core, 8-core CMPs)
[Figure: 2T, 4T, and 8T workloads on a 1:4 hierarchy, performance relative to baseline inclusion]
QBS scales with the number of cores and performs similarly to non-inclusive caches.

Summary
• Problem: the inclusive cache problem becomes WORSE on CMPs
  – e.g., core-cache-fitting apps running alongside LLC-fitting/thrashing apps
• Conventional wisdom: the primary benefit of non-inclusive caches is their higher capacity
• We show: the primary benefit is NOT capacity but avoiding back-invalidates
• Proposal: Temporal Locality Aware (TLA) cache management
  – Retains the benefits of inclusion while minimizing the back-invalidate problem
  – A TLA-managed inclusive cache matches the performance of a non-inclusive cache

Q&A

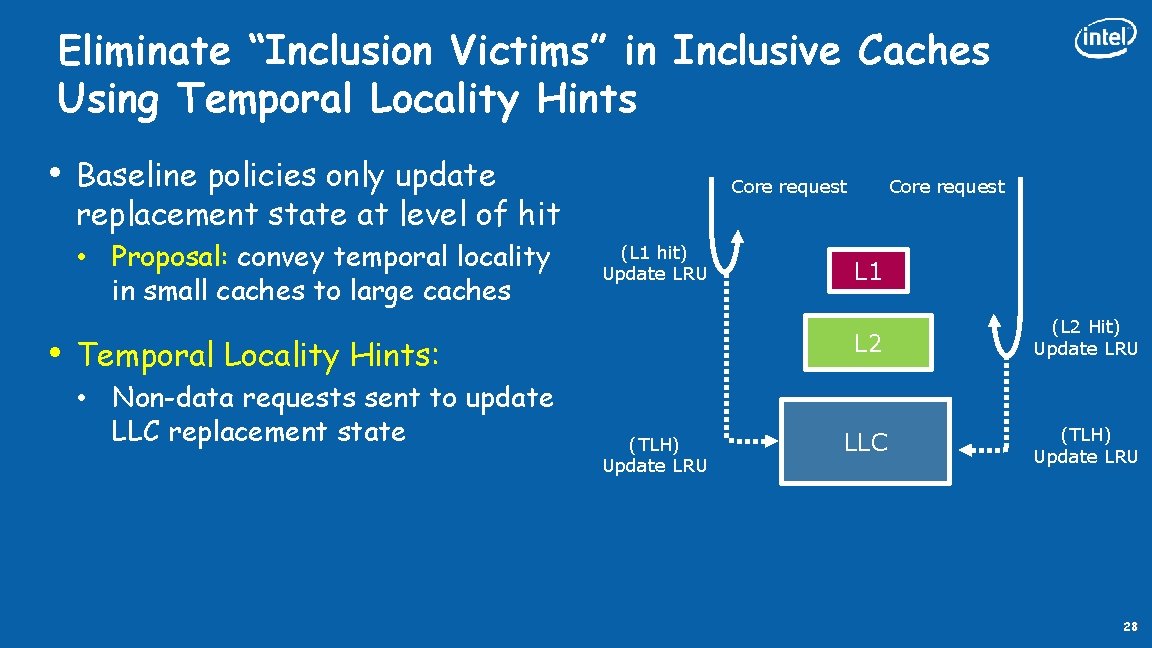

Eliminate "Inclusion Victims" in Inclusive Caches Using Temporal Locality Hints
• Baseline policies only update replacement state at the level of the hit
• Proposal: convey the temporal locality seen in small caches to large caches
• Temporal Locality Hints (TLH): non-data requests sent to update the LLC replacement state
[Figure: an L1 hit updates the L1 LRU state and sends a TLH to update the LLC; an L2 hit updates the L2 LRU state and sends a TLH to update the LLC]

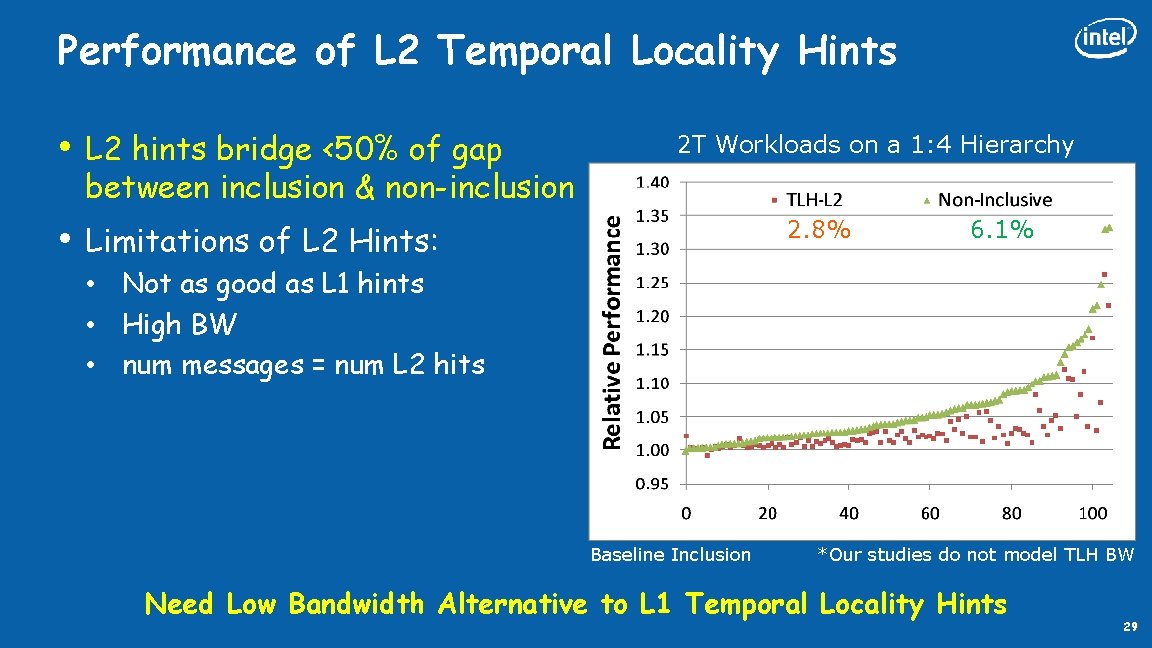

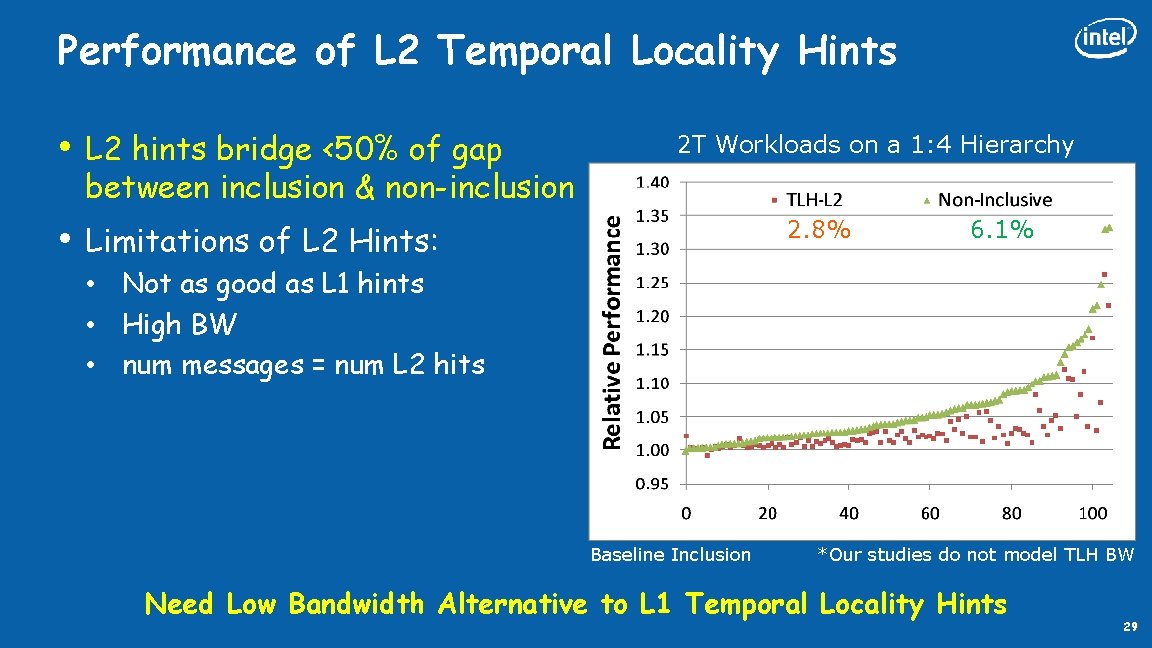

Performance of L2 Temporal Locality Hints
[Figure: 2T workloads on a 1:4 hierarchy, performance relative to baseline inclusion: L2 hints 2.8%, non-inclusion 6.1%]
• L2 hints bridge <50% of the gap between inclusion and non-inclusion
• Limitations of L2 hints:
  – Not as good as L1 hints
  – High bandwidth: number of messages = number of L2 hits
*Our studies do not model TLH bandwidth.
Need a low-bandwidth alternative to L1 temporal locality hints.

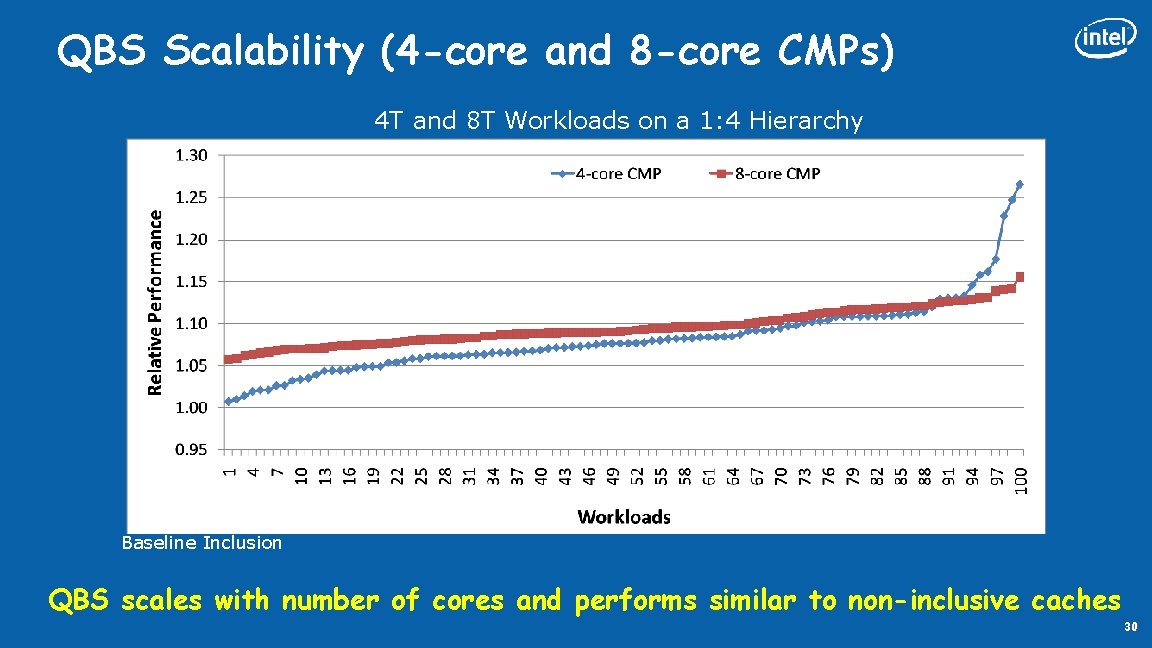

QBS Scalability (4-core and 8-core CMPs)
[Figure: 4T and 8T workloads on a 1:4 hierarchy, performance relative to baseline inclusion]
QBS scales with the number of cores and performs similarly to non-inclusive caches.