Accessing files with NLTK; Regular Expressions


- Slides: 60


Accessing additional files

- Python has tools for accessing files from the local directories and also for obtaining files from the web.
  - We have seen the tools for reading any file from a local directory.
  - Now, let's see how to obtain files from the web.

![Reminder, file access](https://slidetodoc.com/presentation_image_h/a66255602993143b65f735968ec79de1/image-3.jpg)

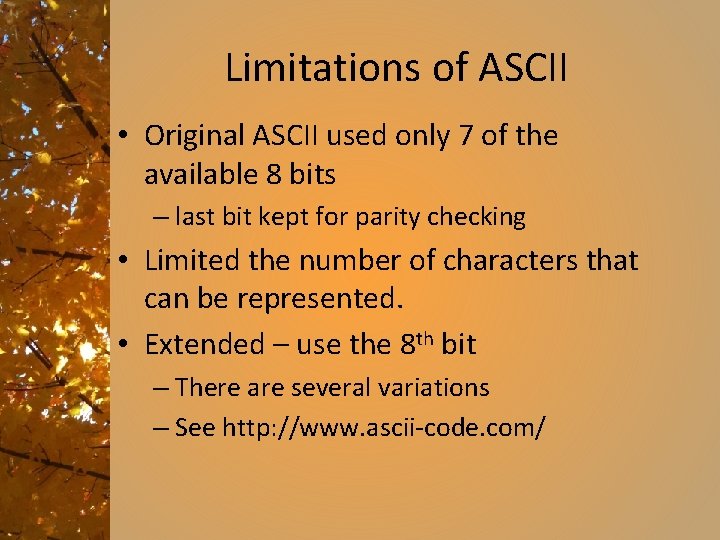

Reminder, file access

- `file(filename[, mode])` opens the file and returns a file object (Python 2; `open()` takes the same arguments). Mode is 'r' for read only, 'w' for write only, 'r+' for read and write, 'a' for append.
- In the methods below, `f` is the internal name of the file object returned by `file()`, not the name of the file on disk.
- `f.close()`: the file is no longer available.
- `f.fileno()`: returns the file descriptor; not usually needed.
- `f.read([size])`: read at most size bytes. If size is not specified, read to end of file.
- `f.readline([size])`: read one line. If size is provided, read at most that many bytes. An empty string is returned if EOF is encountered immediately.
- `f.readlines([sizehint])`: return a list of lines. If sizehint is present, read whole lines totalling approximately sizehint bytes, possibly rounding up to fill an internal buffer.
- `f.write(string)`: write string to the file.
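A minimal Python 3 sketch of the same file methods, for comparison. Python 3 has no `file()` builtin, so it uses `open()`, which takes the same filename and mode arguments; in text mode, `read(size)` counts characters rather than bytes. The file name `sample.txt` and its contents are arbitrary, and the file lives in a throwaway temporary directory.

```python
import os
import tempfile

# Scratch file in a temporary directory (the name is arbitrary).
path = os.path.join(tempfile.mkdtemp(), "sample.txt")

f = open(path, "w")             # 'w': write only (creates or truncates the file)
f.write("first line\nsecond line\nthird line\n")
f.close()                       # after close(), f can no longer be used

f = open(path, "r")             # 'r': read only (also the default mode)
first = f.readline()            # one line, including its trailing newline
rest = f.readlines()            # list of the remaining lines
f.close()

with open(path, "a") as f:      # 'a': append; 'with' closes the file automatically
    f.write("fourth line\n")

with open(path) as f:
    head = f.read(5)            # at most 5 characters
    tail = f.read()             # everything up to end of file

print(repr(first))   # 'first line\n'
print(rest)          # ['second line\n', 'third line\n']
print(repr(head))    # 'first'
```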

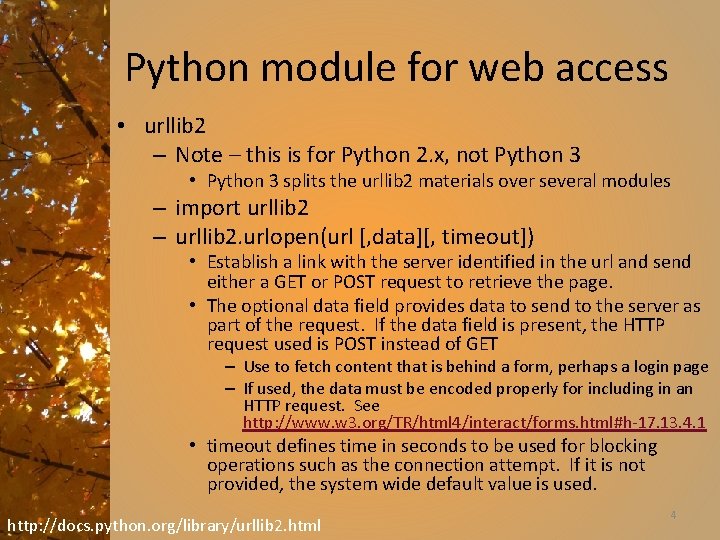

Python module for web access: urllib2

- Note: this is for Python 2.x, not Python 3. Python 3 splits the urllib2 material over several modules (such as urllib.request and urllib.error).
- `import urllib2`
- `urllib2.urlopen(url[, data][, timeout])`
  - Establishes a link with the server identified in the url and sends either a GET or a POST request to retrieve the page.
  - The optional data field provides data to send to the server as part of the request. If the data field is present, the HTTP request used is POST instead of GET.
    - Use it to fetch content that is behind a form, perhaps a login page.
    - If used, the data must be encoded properly for inclusion in an HTTP request. See http://www.w3.org/TR/html4/interact/forms.html#h-17.13.4.1
  - timeout defines the time in seconds to be used for blocking operations such as the connection attempt. If it is not provided, the system-wide default value is used.
- Reference: http://docs.python.org/library/urllib2.html
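In Python 3, `urlopen()` lives in `urllib.request` and form encoding in `urllib.parse`. The sketch below only builds requests, without contacting a server, to show that supplying data switches the method from GET to POST. The URL and the form field names are hypothetical placeholders.

```python
from urllib.parse import urlencode
from urllib.request import Request

url = "http://www.example.com/login"      # hypothetical form URL

# Without data, urlopen() would send a GET request.
get_req = Request(url)

# Form fields must be URL-encoded and, in Python 3, passed as bytes.
form = {"user": "alice", "lang": "en"}    # hypothetical field names
data = urlencode(form).encode("ascii")
post_req = Request(url, data=data)

print(get_req.get_method())    # GET
print(post_req.get_method())   # POST
print(data)                    # b'user=alice&lang=en'

# Sending it would be: urllib.request.urlopen(post_req, timeout=10)
```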

URL fetch and use

- urlopen returns a file-like object with these methods:
  - Same as for files: read(), readlines(), fileno(), close()
  - New for this class:
    - info(): returns meta information about the document at the URL
    - getcode(): returns the HTTP status code sent with the response (e.g. 200, 404)
    - geturl(): returns the URL of the page, which may be different from the URL requested if the server redirected the request

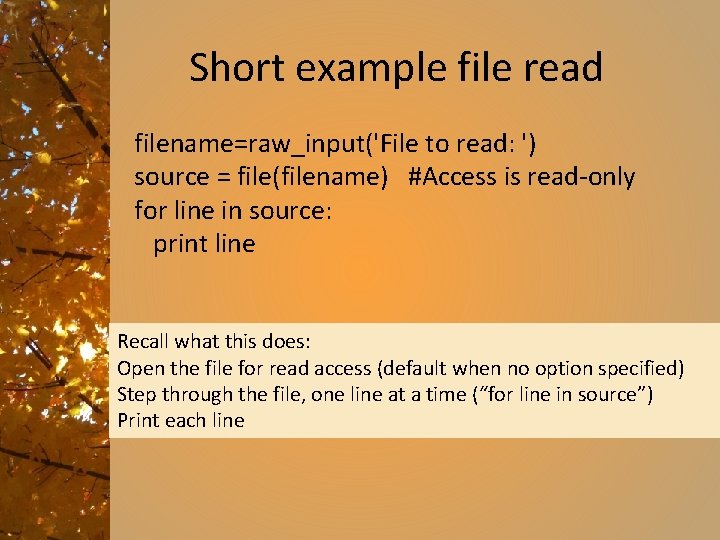

Short example: file read

```python
filename = raw_input('File to read: ')
source = file(filename)   # Access is read-only
for line in source:
    print line,           # trailing comma: each line already ends in a newline
source.close()
```

Recall what this does:

- Open the file for read access (the default when no mode is specified).
- Step through the file, one line at a time ("for line in source").
- Print each line.

URL fetch

```python
import urllib2

url = raw_input("Enter the URL of the page to fetch: ")
if not url.startswith(("http://", "https://")):   # add a scheme only if none was typed
    url = "http://" + url
print "Attempting to open ", url
try:
    linecount = 0
    page = urllib2.urlopen(url)
    result = page.getcode()
    if result == 200:
        for line in page:
            print line,          # trailing comma: the line already ends in a newline
            linecount += 1
    print "Page Information \n", page.info()
    print "Result code = ", page.getcode()
    print "Page contains ", linecount, " lines."
except Exception:
    print "\nCould not open URL: ", url
```
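A rough Python 3 counterpart of the script above, written as functions rather than a top-level prompt. It assumes the page is UTF-8 (real pages declare their charset in the Content-Type header), and `normalize_url` and `fetch_and_count` are hypothetical helper names. Exceptions are left to the caller instead of being swallowed by a bare `except`.

```python
import urllib.request


def normalize_url(url):
    """Prepend http:// when the user typed a bare host name such as www.example.com."""
    if not url.startswith(("http://", "https://")):
        url = "http://" + url
    return url


def fetch_and_count(url, timeout=10):
    """Fetch a page, print its lines, and return (status, headers, line count)."""
    url = normalize_url(url)
    print("Attempting to open", url)
    linecount = 0
    with urllib.request.urlopen(url, timeout=timeout) as page:
        status = page.getcode()
        if status == 200:
            for line in page:   # lines arrive as bytes
                print(line.decode("utf-8", errors="replace"), end="")
                linecount += 1
        return status, page.info(), linecount
```

Usage (needs network access): `status, headers, count = fetch_and_count("www.example.com")`. `urlopen()` raises `urllib.error.HTTPError`, a subclass of `urllib.error.URLError`, for error statuses such as 404, so a caller would typically catch `URLError`.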

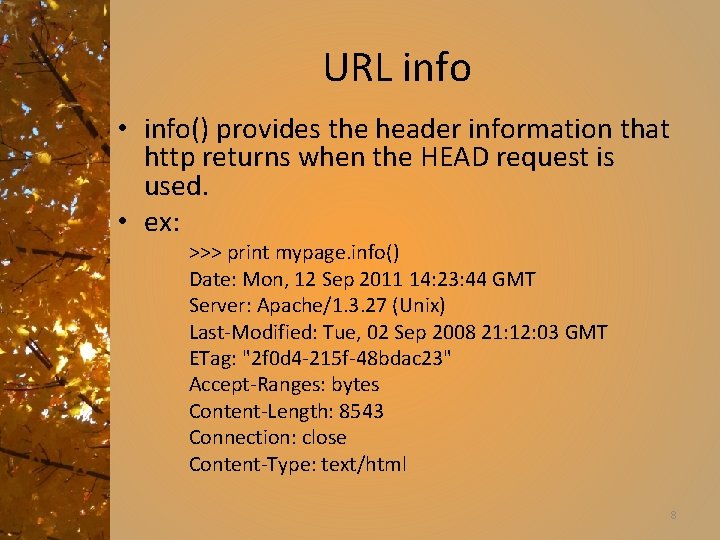

URL info

- info() returns the header information that the server sends with the response (the same headers that HTTP returns when the HEAD request is used).
- Example:

```
>>> print mypage.info()
Date: Mon, 12 Sep 2011 14:23:44 GMT
Server: Apache/1.3.27 (Unix)
Last-Modified: Tue, 02 Sep 2008 21:12:03 GMT
ETag: "2f0d4-215f-48bdac23"
Accept-Ranges: bytes
Content-Length: 8543
Connection: close
Content-Type: text/html
```

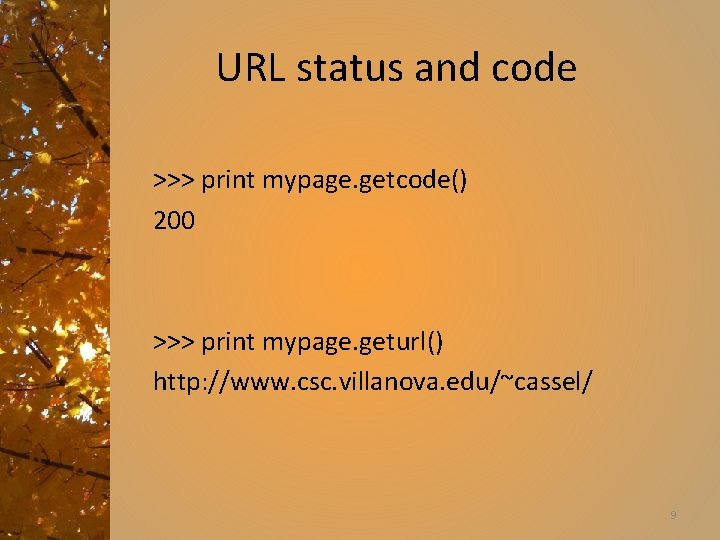

URL status and code

```python
>>> print mypage.getcode()
200
>>> print mypage.geturl()
http://www.csc.villanova.edu/~cassel/
```

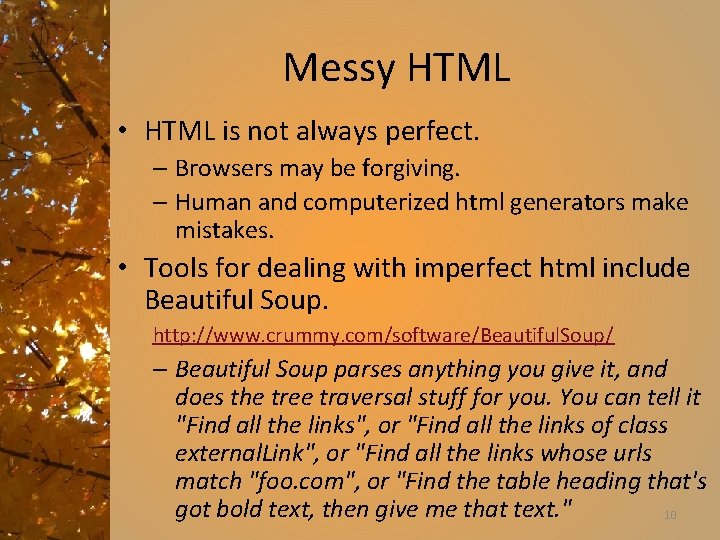

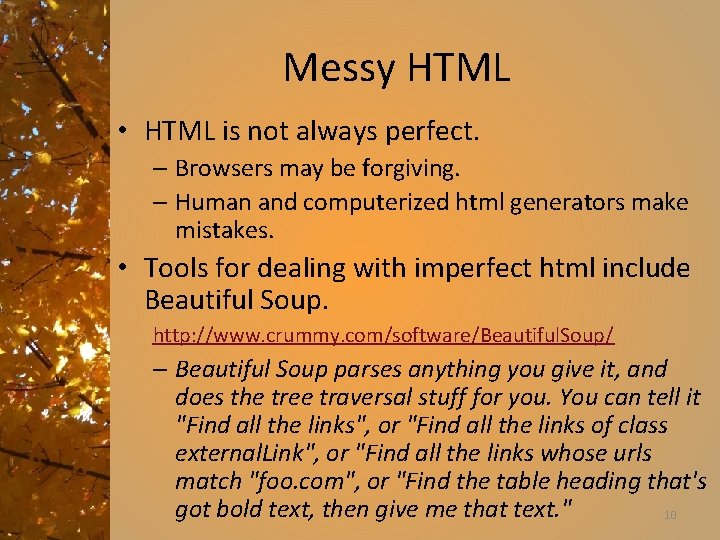

Messy HTML

- HTML is not always perfect.
  - Browsers may be forgiving.
  - Human and computerized HTML generators make mistakes.
- Tools for dealing with imperfect HTML include Beautiful Soup: http://www.crummy.com/software/BeautifulSoup/
  - Beautiful Soup parses anything you give it, and does the tree traversal stuff for you. You can tell it "Find all the links", or "Find all the links of class externalLink", or "Find all the links whose urls match 'foo.com'", or "Find the table heading that's got bold text, then give me that text."

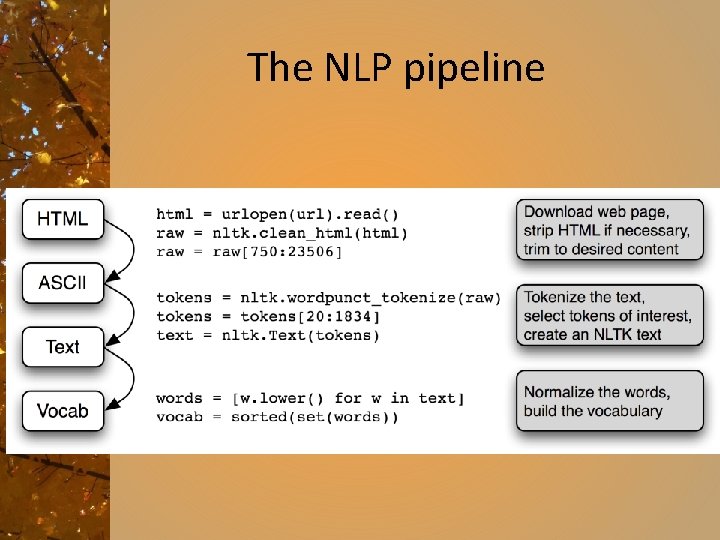

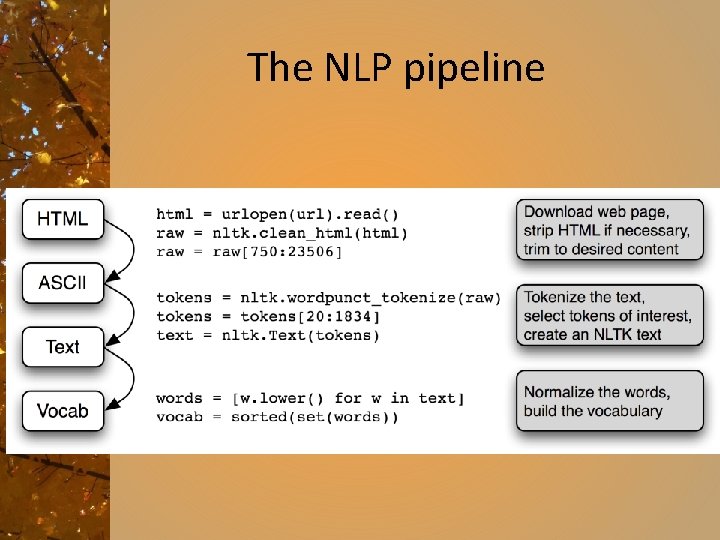

The NLP pipeline

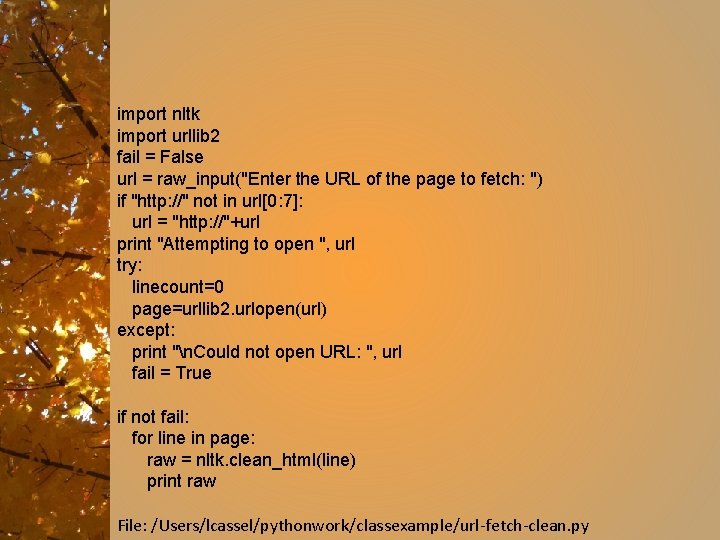

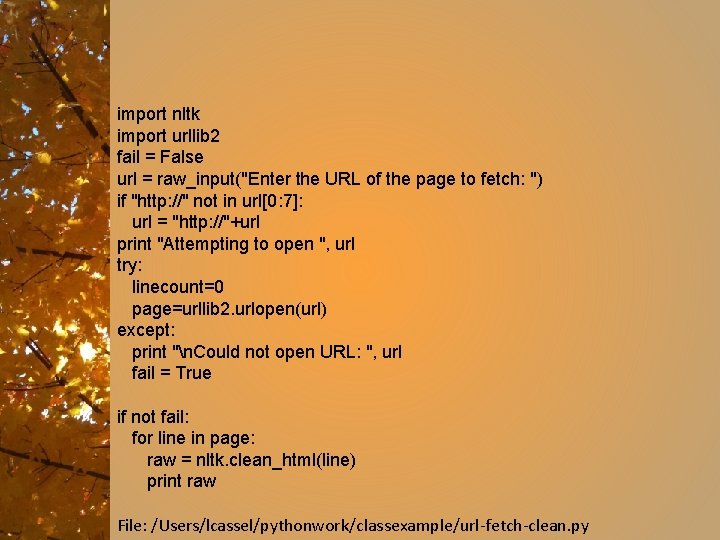

```python
import nltk
import urllib2

fail = False
url = raw_input("Enter the URL of the page to fetch: ")
if not url.startswith("http://"):
    url = "http://" + url
print "Attempting to open ", url
try:
    page = urllib2.urlopen(url)
except Exception:
    print "\nCould not open URL: ", url
    fail = True
if not fail:
    for line in page:
        raw = nltk.clean_html(line)   # strip HTML markup (NLTK 2.x only)
        print raw
```

File: /Users/lcassel/pythonwork/classexample/url-fetch-clean.py
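`nltk.clean_html()` is not available in NLTK 3, and Beautiful Soup is a separate install. As a dependency-free sketch that works in Python 3, the standard `html.parser` module can collect the text between tags. `TextExtractor` and `clean_html` below are hypothetical names; the code skips `<script>` and `<style>` contents and joins text chunks with spaces, which keeps block elements apart but can split a word that is broken up by inline markup.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect the text content of an HTML document, skipping <script> and <style>."""

    def __init__(self):
        super().__init__()          # convert_charrefs=True: &amp; arrives as &
        self.chunks = []
        self._skip = 0              # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def clean_html(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    # Join the pieces, then collapse runs of whitespace to single spaces.
    return " ".join(" ".join(parser.chunks).split())


page = ("<html><head><style>p {color: red}</style></head>"
        "<body><h1>Title</h1><p>Some <b>bold</b> text &amp; more.</p></body></html>")
print(clean_html(page))   # Title Some bold text & more.
```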

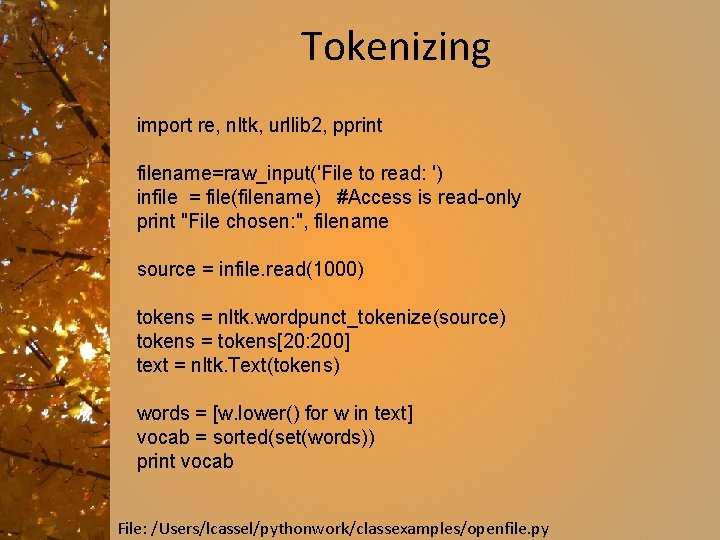

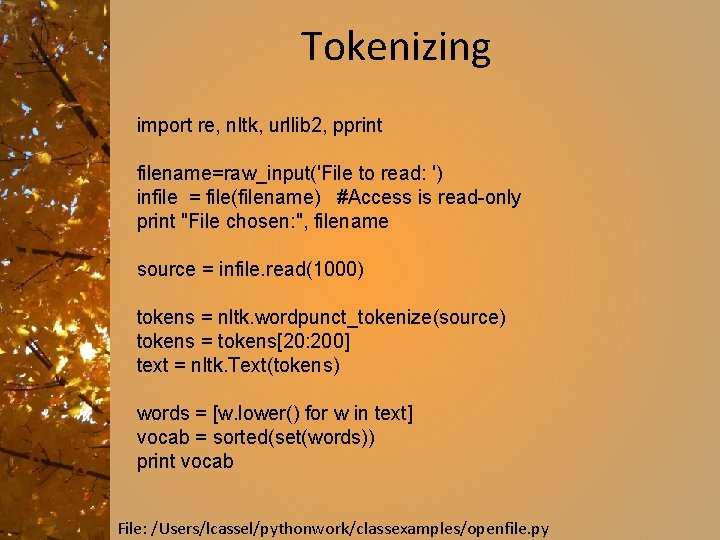

Tokenizing

```python
import re, nltk, urllib2, pprint

filename = raw_input('File to read: ')
infile = file(filename)              # Access is read-only
print "File chosen: ", filename
source = infile.read(1000)           # only the first 1000 bytes
tokens = nltk.wordpunct_tokenize(source)
tokens = tokens[20:200]
text = nltk.Text(tokens)
words = [w.lower() for w in text]
vocab = sorted(set(words))
print vocab
```

File: /Users/lcassel/pythonwork/classexamples/openfile.py
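For readers without NLTK installed, a minimal sketch of the same vocabulary-building steps using only `re`. It assumes that `nltk.wordpunct_tokenize` splits text into runs of word characters and runs of other non-space characters (the pattern `\w+|[^\w\s]+`); `vocabulary()` is a hypothetical helper and the sample sentence is made up.

```python
import re

# Assumed equivalent of nltk.wordpunct_tokenize: word runs or punctuation runs.
WORDPUNCT = re.compile(r"\w+|[^\w\s]+")


def vocabulary(text):
    tokens = WORDPUNCT.findall(text)
    words = [t.lower() for t in tokens]
    return sorted(set(words))


sample = "Crime and Punishment, by Fyodor Dostoevsky *** Crime!"
print(vocabulary(sample))
# ['!', '***', ',', 'and', 'by', 'crime', 'dostoevsky', 'fyodor', 'punishment']
```

As in the output below, punctuation tokens sort before letters because their character codes are smaller.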

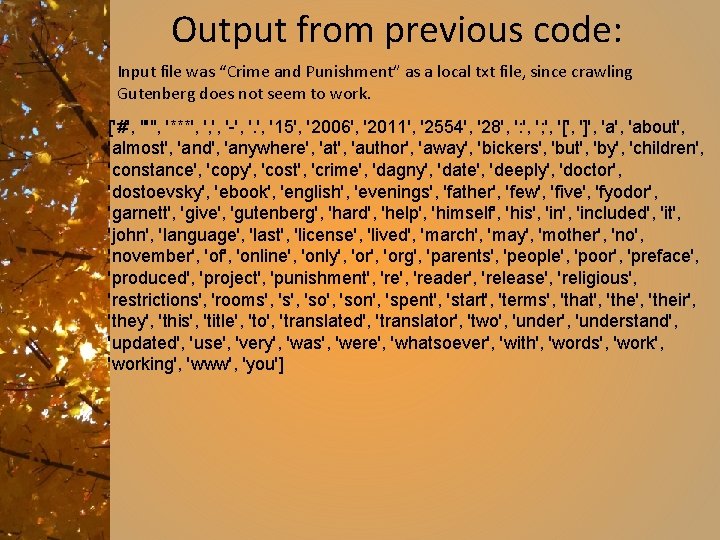

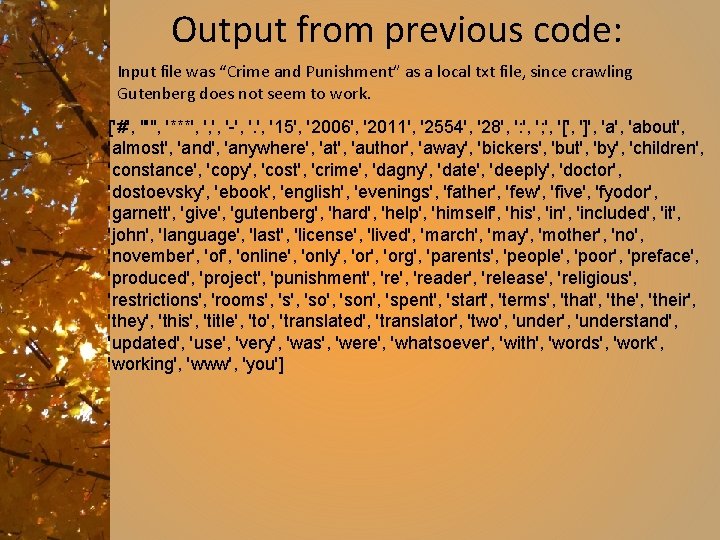

Output from the previous code. The input file was "Crime and Punishment" as a local .txt file, since crawling Gutenberg did not seem to work:

```
['#', "'", '***', ',', '-', '15', '2006', '2011', '2554', '28', ':', ';', '[', ']', 'about', 'almost', 'and', 'anywhere', 'at', 'author', 'away', 'bickers', 'but', 'by', 'children', 'constance', 'copy', 'cost', 'crime', 'dagny', 'date', 'deeply', 'doctor', 'dostoevsky', 'ebook', 'english', 'evenings', 'father', 'few', 'five', 'fyodor', 'garnett', 'give', 'gutenberg', 'hard', 'help', 'himself', 'his', 'included', 'it', 'john', 'language', 'last', 'license', 'lived', 'march', 'may', 'mother', 'november', 'of', 'online', 'only', 'org', 'parents', 'people', 'poor', 'preface', 'produced', 'project', 'punishment', 'reader', 'release', 'religious', 'restrictions', 'rooms', 'so', 'son', 'spent', 'start', 'terms', 'that', 'their', 'they', 'this', 'title', 'to', 'translated', 'translator', 'two', 'understand', 'updated', 'use', 'very', 'was', 'were', 'whatsoever', 'with', 'words', 'working', 'www', 'you']
```

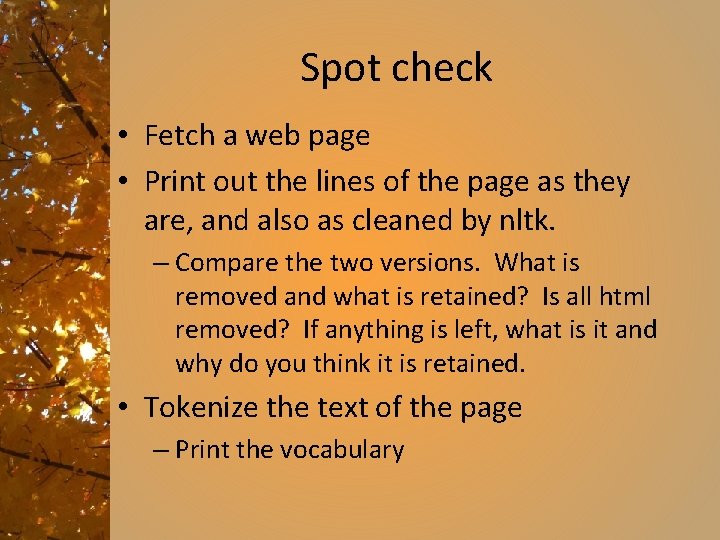

Spot check

- Fetch a web page.
- Print out the lines of the page as they are, and also as cleaned by nltk.
  - Compare the two versions. What is removed and what is retained? Is all HTML removed? If anything is left, what is it, and why do you think it is retained?
- Tokenize the text of the page.
  - Print the vocabulary.

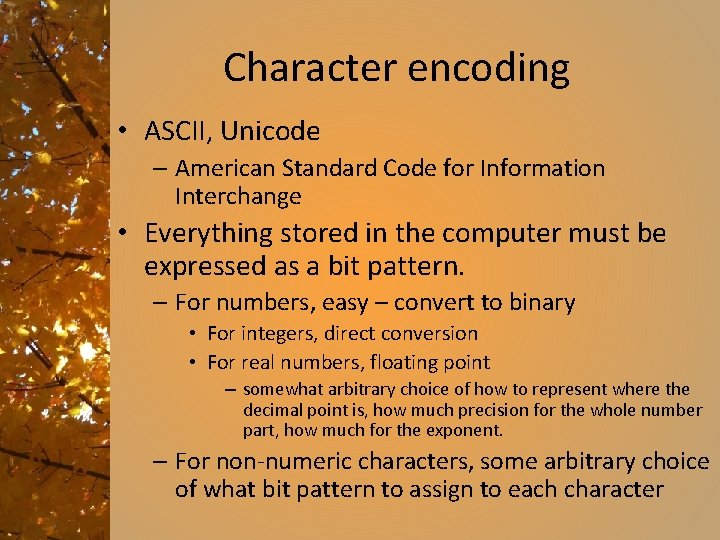

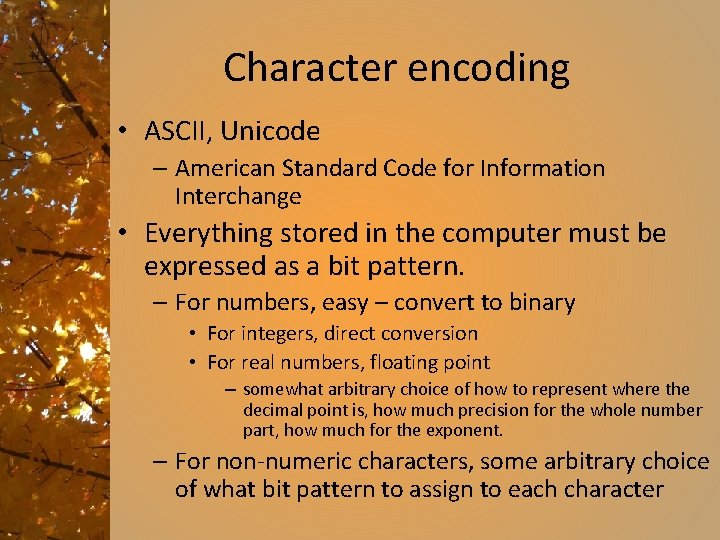

Character encoding

- ASCII (American Standard Code for Information Interchange), Unicode
- Everything stored in the computer must be expressed as a bit pattern.
  - For numbers, this is easy: convert to binary.
    - For integers, direct conversion.
    - For real numbers, floating point: a somewhat arbitrary choice of how to represent where the decimal point is, that is, how many bits to give the significant digits and how many to give the exponent.
  - For non-numeric characters, some arbitrary choice of what bit pattern to assign to each character.

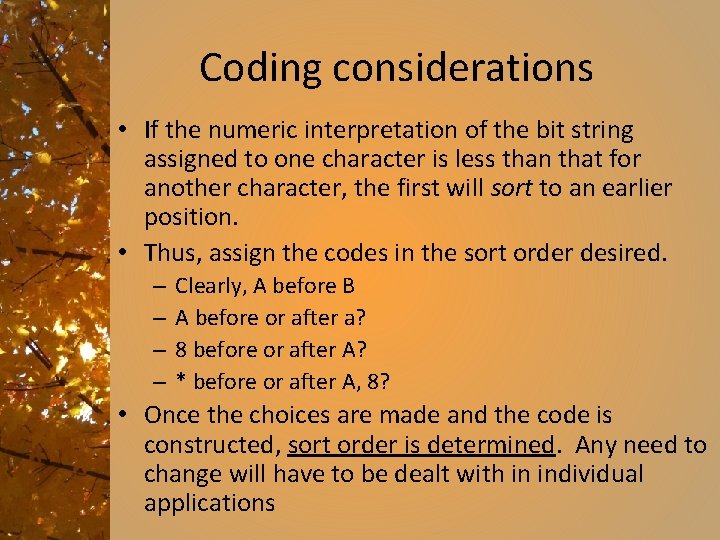

Coding considerations

- If the numeric interpretation of the bit string assigned to one character is less than that for another character, the first will sort to an earlier position.
- Thus, assign the codes in the sort order desired.
  - Clearly, A before B.
  - A before or after a?
  - 8 before or after A?
  - `*` before or after A, 8?
- Once the choices are made and the code is constructed, sort order is determined. Any need to change it will have to be dealt with in individual applications.
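Python compares strings character by character using code points, which for these characters are their ASCII codes, so the sort-order questions above can be checked directly. Case-insensitive ordering has to be requested by the application, here with `key=str.lower`.

```python
chars = ["a", "B", "8", "*", "A"]
codes = {c: ord(c) for c in chars}
print(codes)            # {'a': 97, 'B': 66, '8': 56, '*': 42, 'A': 65}

by_code = sorted(chars)
print(by_code)          # ['*', '8', 'A', 'B', 'a']

fruit = ["apple", "Banana", "cherry"]
plain = sorted(fruit)                      # uppercase B sorts before lowercase a
folded = sorted(fruit, key=str.lower)      # application-level fix: ignore case
print(plain)            # ['Banana', 'apple', 'cherry']
print(folded)           # ['apple', 'Banana', 'cherry']
```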

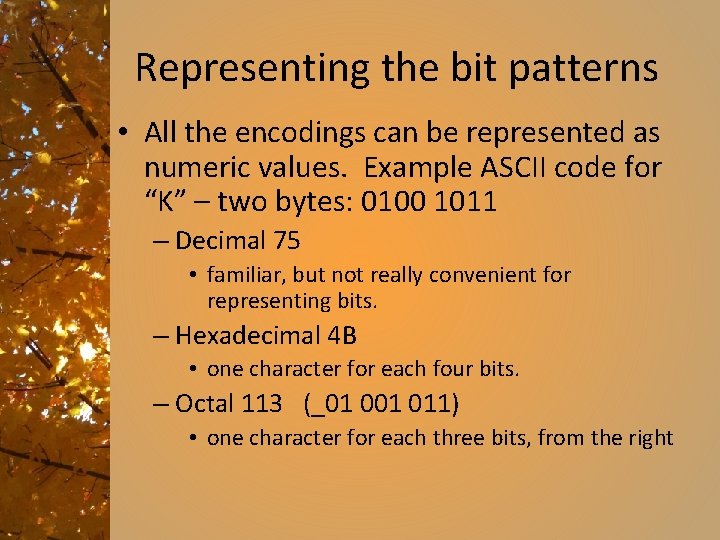

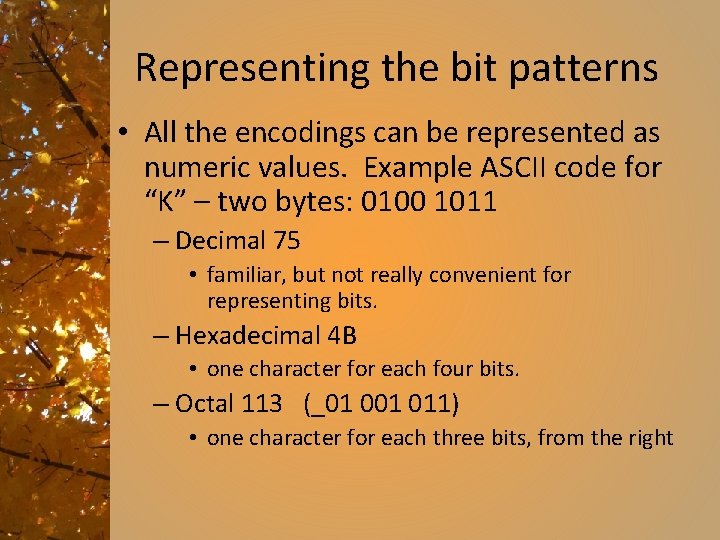

Representing the bit patterns

- All the encodings can be represented as numeric values. Example: the ASCII code for "K"
  - Binary, one byte written as two groups of four bits: 0100 1011
  - Decimal 75
    - familiar, but not really convenient for representing bits.
  - Hexadecimal 4B
    - one character for each four bits.
  - Octal 113 (01 001 011)
    - one character for each three bits, from the right.
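The same representations of "K" can be checked with Python 3's built-in `ord()`, `chr()`, and `format()`:

```python
code = ord("K")
print(code)                    # 75
print(format(code, "08b"))     # 01001011  (binary, padded to one byte)
print(format(code, "X"))       # 4B        (hexadecimal)
print(format(code, "o"))       # 113       (octal)

# Going back from each notation to the character:
print(chr(0b01001011), chr(0x4B), chr(0o113))   # K K K
```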

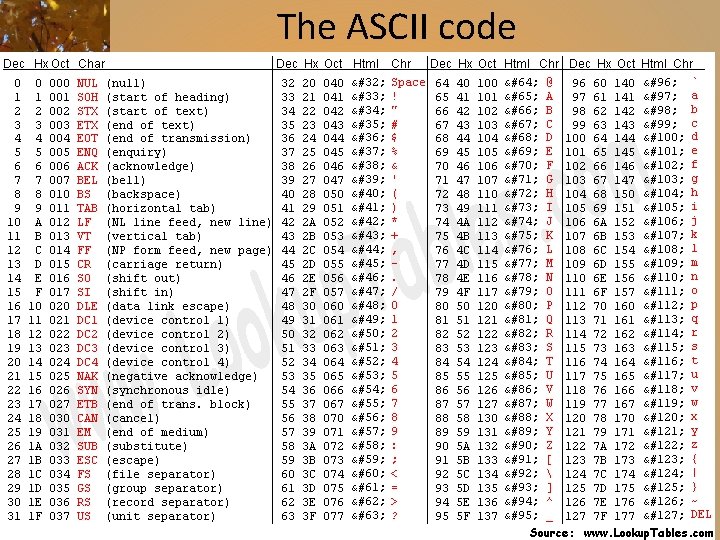

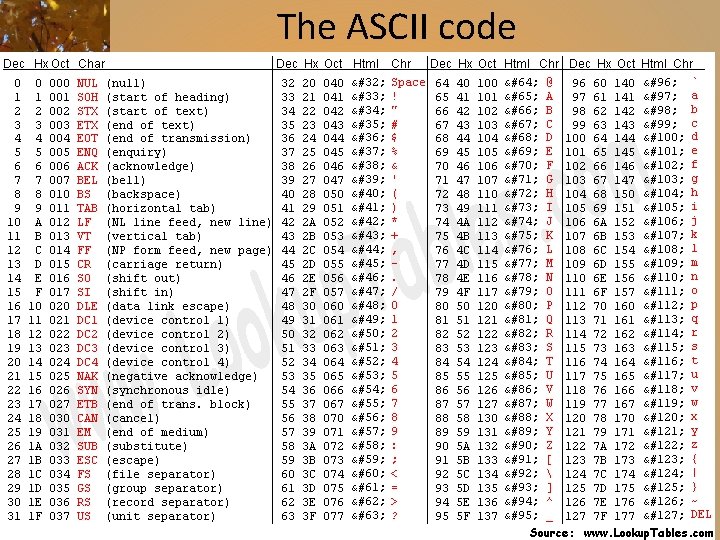

The ASCII code

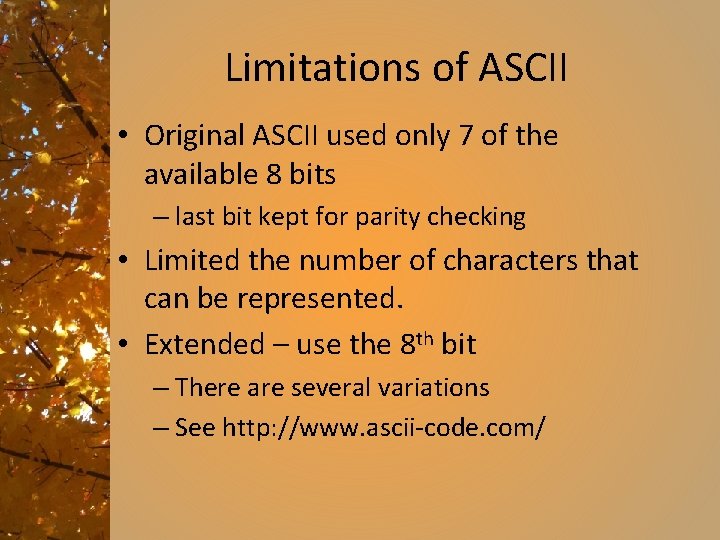

Limitations of ASCII

- Original ASCII used only 7 of the available 8 bits; the eighth bit was kept for parity checking.
- This limited the number of characters that can be represented to 128.
- Extended ASCII uses the 8th bit as well, giving 256 codes.
  - There are several variations.
  - See http://www.ascii-code.com/

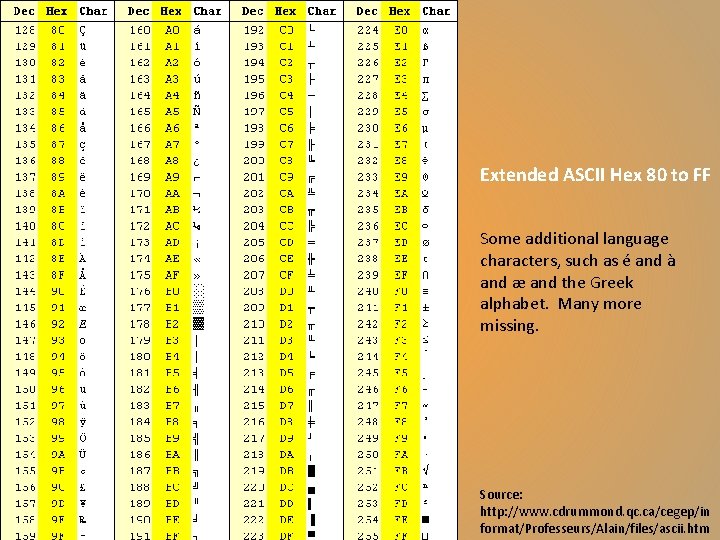

Extended ASCII, hex 80 to FF: some additional language characters, such as é, à, and æ, and some Greek letters. Many more are missing. Source: http://www.cdrummond.qc.ca/cegep/informat/Professeurs/Alain/files/ascii.htm

Unicode

- ASCII is just one encoding example.
- ASCII, even extended, does not have enough space for all needed encodings.
- Different schemes in use present potential conflicts if you deal with more than one scheme: different codes for the same symbol, or different symbols with the same code.
- Enter Unicode. See unicode.org

From unicode.org:

> Unicode provides a unique number for every character, no matter what the platform, no matter what the program, no matter what the language. The Unicode Standard has been adopted by such industry leaders as Apple, HP, IBM, JustSystems, Microsoft, Oracle, SAP, Sun, Sybase, Unisys and many others. Unicode is required by modern standards such as XML, Java, ECMAScript (JavaScript), LDAP, CORBA 3.0, WML, etc., and is the official way to implement ISO/IEC 10646. It is supported in many operating systems, all modern browsers, and many other products. The emergence of the Unicode Standard, and the availability of tools supporting it, are among the most significant recent global software technology trends.

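Unicode

- There are three encoding forms, based on 8-, 16-, and 32-bit units:
  - UTF-8 includes the ASCII codes: ASCII characters keep their one-byte codes, and other characters take two to four bytes.
  - UTF-16 covers all commonly used symbols in one 16-bit unit; other symbols are available as pairs of 16-bit units.
  - UTF-32 is for when size is not an issue: every symbol is a single 32-bit string of bits.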
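A quick way to see the three encoding forms is to count the bytes each one uses for a few characters with Python 3's `str.encode()`. The `-le` codec variants are used so that no byte-order mark is added to the UTF-16 and UTF-32 output. The characters are arbitrary examples; the last one lies outside the 16-bit range, so UTF-16 needs a pair of units for it.

```python
# A, e-acute, euro sign, musical G clef (U+1D11E, beyond 16 bits)
samples = ["A", "\u00e9", "\u20ac", "\U0001D11E"]

sizes = {}
for ch in samples:
    sizes[ch] = (len(ch.encode("utf-8")),
                 len(ch.encode("utf-16-le")),   # -le: no byte-order mark
                 len(ch.encode("utf-32-le")))
    print("U+%04X  UTF-8: %d  UTF-16: %d  UTF-32: %d" % ((ord(ch),) + sizes[ch]))

# ASCII text is byte-for-byte identical in UTF-8.
print("Hello".encode("utf-8") == "Hello".encode("ascii"))   # True
```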

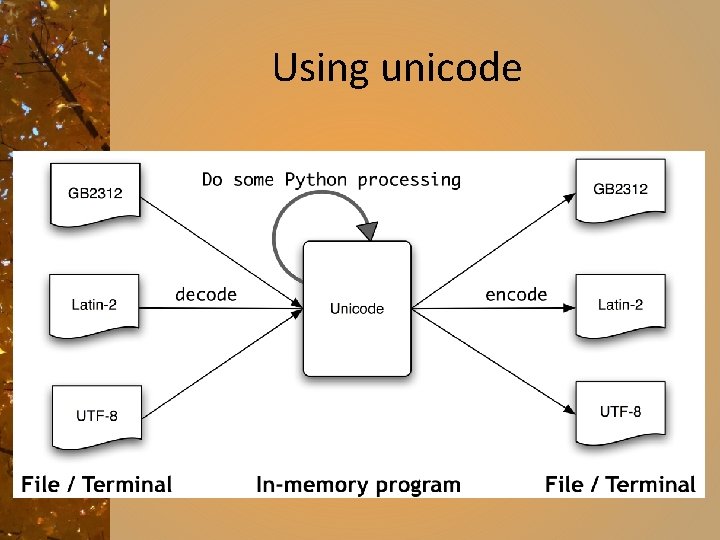

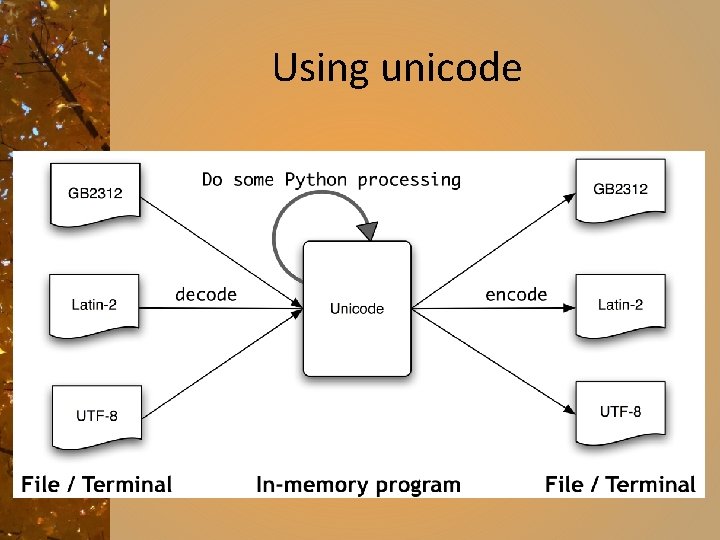

Using unicode

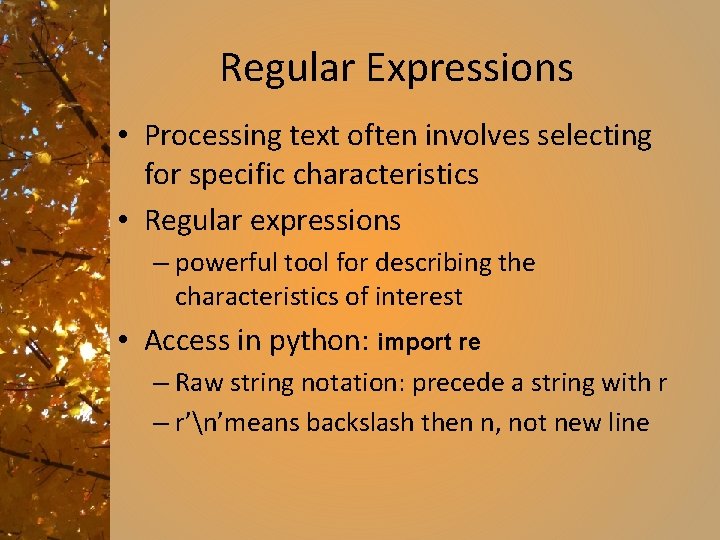

Regular Expressions

- Processing text often involves selecting for specific characteristics.
- Regular expressions are a powerful tool for describing the characteristics of interest.
- Access in Python: `import re`
  - Raw string notation: precede a string with r.
  - `r'\n'` means a backslash followed by n, not a newline.
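A short Python 3 illustration of why raw strings matter for regular expressions: in an ordinary string literal, `\b` is the backspace character, whereas in a raw string it reaches `re` unchanged and means "word boundary".

```python
import re

print(len("\n"), len(r"\n"))   # 1 2
print(r"\n" == "\\n")          # True: raw string keeps the backslash

text = "cat concat cat."
with_raw = re.findall(r"\bcat\b", text)    # word boundaries: whole word "cat" only
without_raw = re.findall("\bcat\b", text)  # pattern contains two backspace characters
print(with_raw)      # ['cat', 'cat']
print(without_raw)   # []
```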

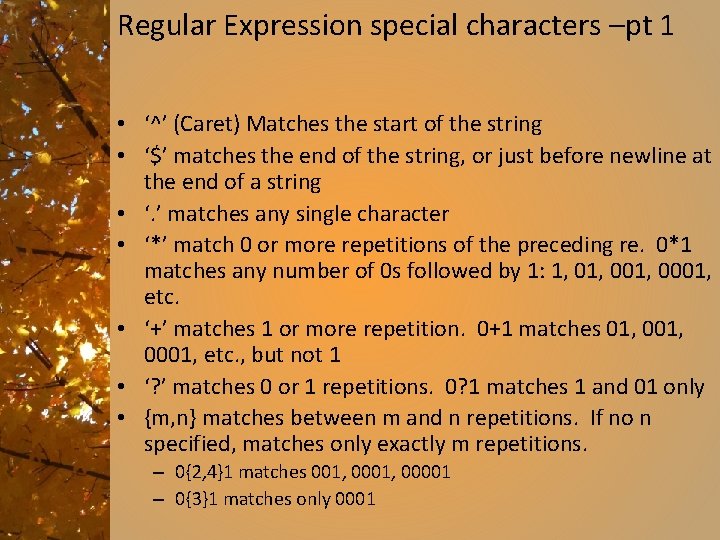

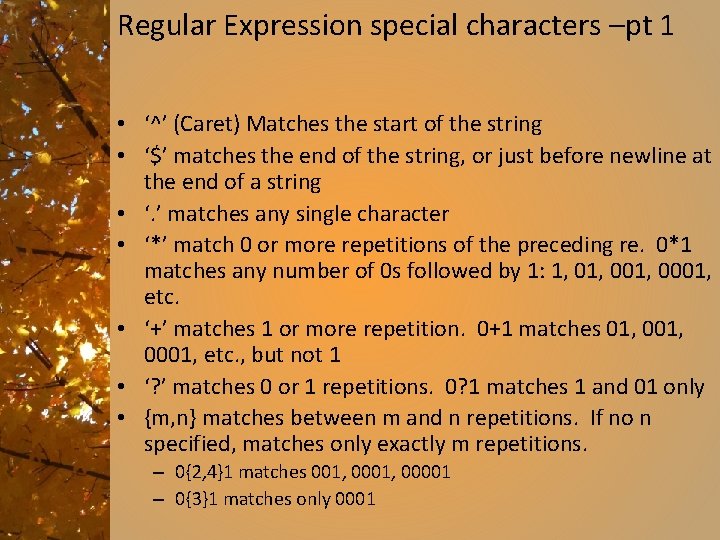

Regular Expression special characters, pt 1

- `^` (caret) matches the start of the string.
- `$` matches the end of the string, or just before the newline at the end of the string.
- `.` matches any single character except a newline.
- `*` matches 0 or more repetitions of the preceding RE. `0*1` matches any number of 0s followed by 1: 1, 01, 001, 0001, etc.
- `+` matches 1 or more repetitions. `0+1` matches 01, 001, 0001, etc., but not 1.
- `?` matches 0 or 1 repetitions. `0?1` matches 1 and 01 only.
- `{m,n}` matches between m and n repetitions. `{m}` matches exactly m repetitions, and `{m,}` (no n) matches m or more.
  - `0{2,4}1` matches 001, 0001, 00001
  - `0{3}1` matches only 0001
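The examples above can be checked with a small Python 3 helper. `full()` is a hypothetical name; it uses `re.fullmatch()`, which requires the pattern to match the whole string, the same effect as wrapping the pattern in `^` and `$`.

```python
import re


def full(pattern, candidates):
    """Return the candidates that the pattern matches in their entirety."""
    return [s for s in candidates if re.fullmatch(pattern, s)]


strings = ["1", "01", "001", "0001", "00001", "000001"]
print(full(r"0*1", strings))       # all six strings
print(full(r"0+1", strings))       # everything except '1'
print(full(r"0?1", strings))       # ['1', '01']
print(full(r"0{2,4}1", strings))   # ['001', '0001', '00001']
print(full(r"0{3}1", strings))     # ['0001']
print(full(r"0{3,}1", strings))    # ['0001', '00001', '000001']
print(re.fullmatch(r".", "\n"))    # None: '.' does not match a newline by default
```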

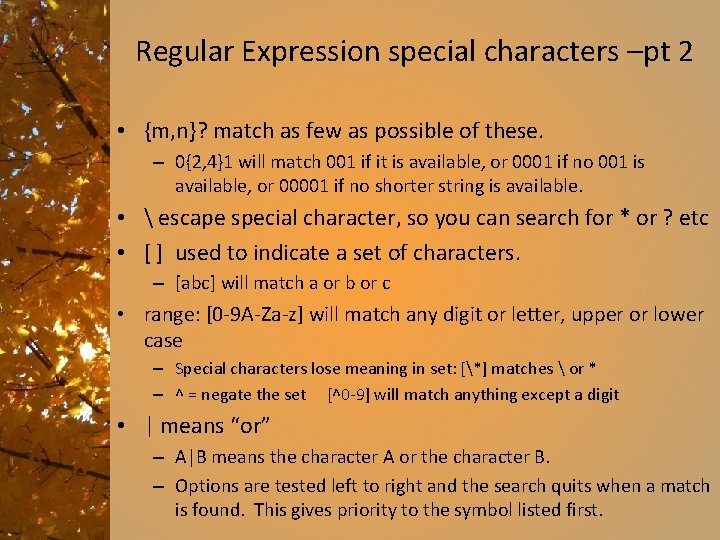

Regular Expression special characters, pt 2

- `{m,n}?` matches as few repetitions as possible (non-greedy). At a given starting position, `0{2,4}?1` tries 001 first, then 0001, then 00001.
- `\` escapes a special character, so you can search for `*` or `?` etc.
- `[ ]` is used to indicate a set of characters.
  - `[abc]` will match a or b or c.
  - Range: `[0-9A-Za-z]` will match any digit or letter, upper or lower case.
  - Special characters lose their meaning in a set: `[*]` matches a literal `*`.
  - `^` at the start negates the set: `[^0-9]` will match anything except a digit.
- `|` means "or": `A|B` matches either the expression A or the expression B.
  - Options are tested left to right, and the search quits when a match is found. This gives priority to the option listed first.
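A few one-line checks of these points in Python 3. Greedy versus non-greedy only changes how many repetitions are taken at a given starting position; `re.search()` still returns the leftmost match.

```python
import re

greedy = re.match(r"0{2,4}", "00000").group()     # as many as possible
lazy = re.match(r"0{2,4}?", "00000").group()      # as few as possible
print(greedy, lazy)                                # 0000 00

print(re.findall(r"\*", "a*b*c"))                  # ['*', '*']  backslash escapes *
print(re.findall(r"[*?]", "a*b?c"))                # ['*', '?']  no escaping needed in a set
print(re.findall(r"[^0-9]", "a1b2"))               # ['a', 'b']  negated set

print(re.match(r"a|ab", "ab").group())             # a   left alternative wins
print(re.match(r"ab|a", "ab").group())             # ab
```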

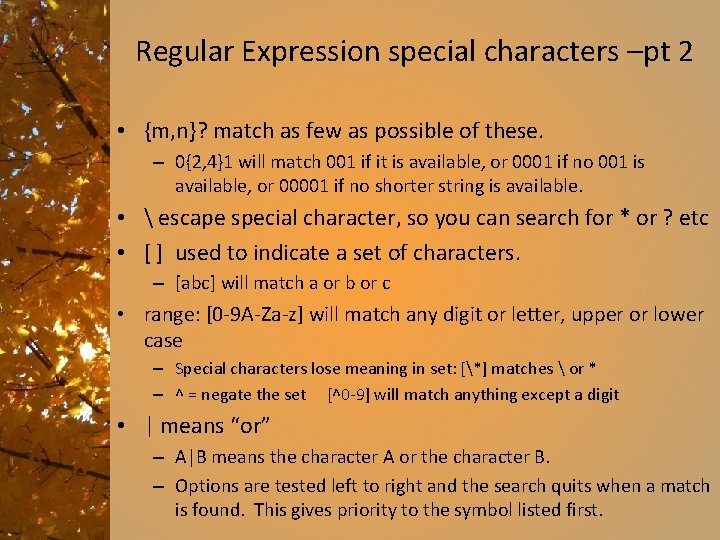

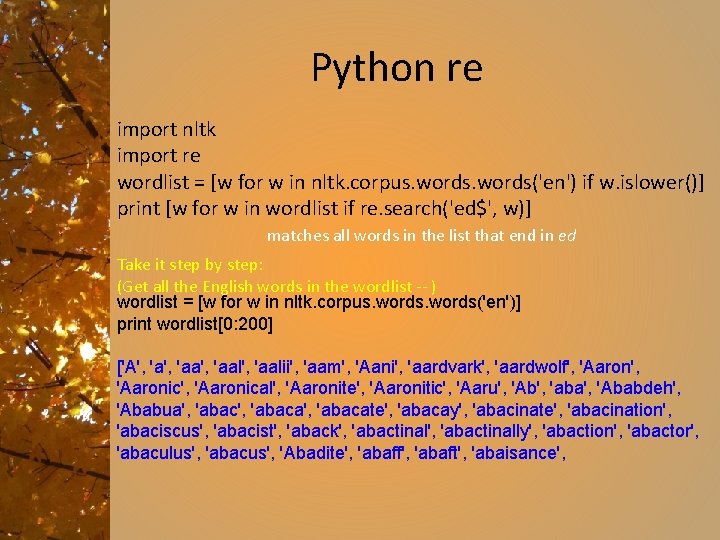

Python re

```python
import nltk
import re

wordlist = [w for w in nltk.corpus.words.words('en') if w.islower()]
print [w for w in wordlist if re.search('ed$', w)]
```

This matches all words in the list that end in "ed".

Take it step by step. First, get all the English words in the wordlist:

```python
wordlist = [w for w in nltk.corpus.words.words('en')]
print wordlist[0:200]
```

['A', 'aa', 'aalii', 'aam', 'Aani', 'aardvark', 'aardwolf', 'Aaronic', 'Aaronical', 'Aaronite', 'Aaronitic', 'Aaru', 'Ab', 'aba', 'Ababdeh', 'Ababua', 'abaca', 'abacate', 'abacay', 'abacinate', 'abacination', 'abaciscus', 'abacist', 'aback', 'abactinally', 'abaction', 'abactor', 'abaculus', 'abacus', 'Abadite', 'abaff', 'abaft', 'abaisance', …]

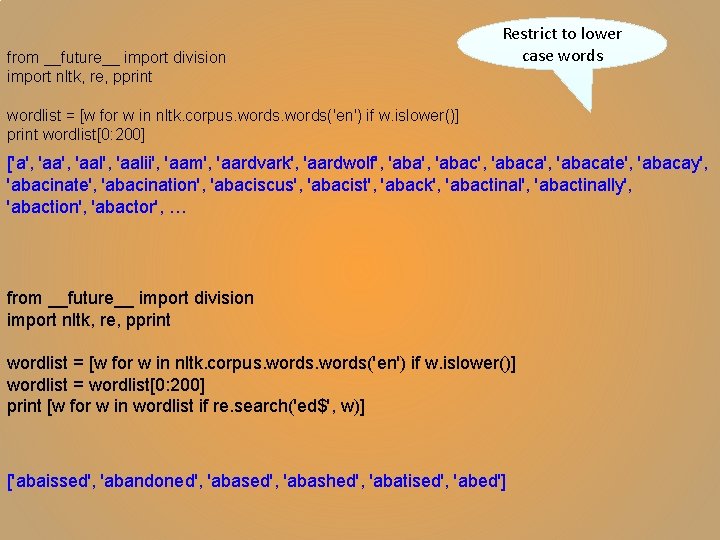

Restrict to lowercase words:

```python
from __future__ import division
import nltk, re, pprint

wordlist = [w for w in nltk.corpus.words.words('en') if w.islower()]
print wordlist[0:200]
```

['a', 'aal', 'aalii', 'aam', 'aardvark', 'aardwolf', 'abac', 'abacate', 'abacay', 'abacinate', 'abacination', 'abaciscus', 'abacist', 'aback', 'abactinally', 'abaction', 'abactor', …]

```python
from __future__ import division
import nltk, re, pprint

wordlist = [w for w in nltk.corpus.words.words('en') if w.islower()]
wordlist = wordlist[0:200]
print [w for w in wordlist if re.search('ed$', w)]
```

['abaissed', 'abandoned', 'abashed', 'abatised', 'abed']
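To try the `'ed$'` filter without downloading the NLTK words corpus, a handful of lowercase words can stand in for the word list (the list below is an arbitrary sample, mostly taken from the output above).

```python
import re

# Stand-in for nltk.corpus.words.words('en'); an arbitrary handful of words.
wordlist = ["aback", "abaissed", "abandoned", "abacus", "abashed", "abed", "bedding"]

ed_words = [w for w in wordlist if re.search(r"ed$", w)]
print(ed_words)   # ['abaissed', 'abandoned', 'abashed', 'abed']

# 'bedding' contains "ed" but not at the end, so it is excluded.
# For a fixed suffix, str.endswith gives the same answer.
print(ed_words == [w for w in wordlist if w.endswith("ed")])   # True
```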

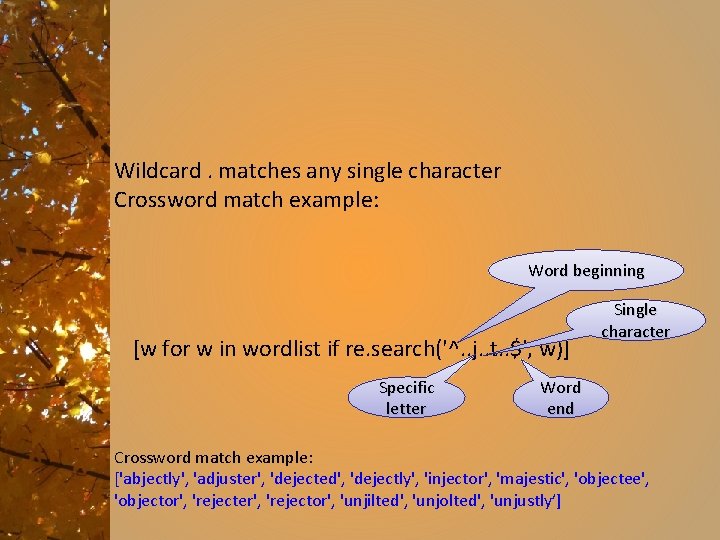

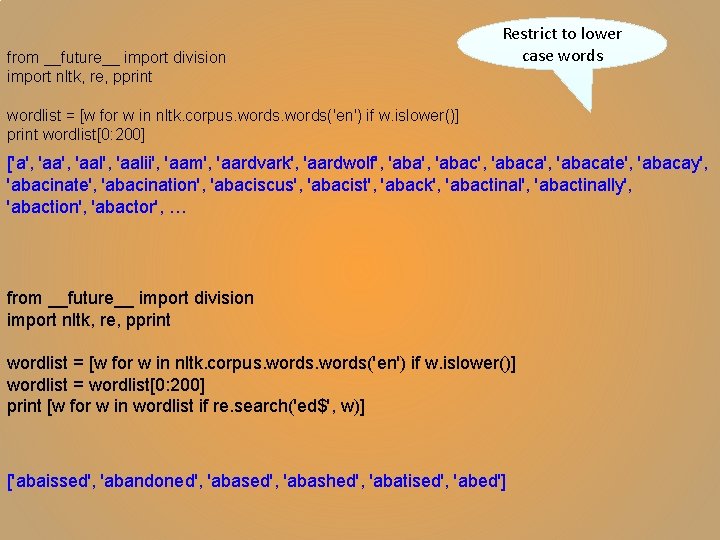

Wildcard: `.` matches any single character.

Crossword match example:

```python
[w for w in wordlist if re.search('^..j..t..$', w)]
```

Here `^` marks the word beginning, each `.` is a single (wildcard) character, j and t are specific letters, and `$` marks the word end.

['abjectly', 'adjuster', 'dejected', 'dejectly', 'injector', 'majestic', 'objectee', 'objector', 'rejecter', 'rejector', 'unjilted', 'unjolted', 'unjustly']

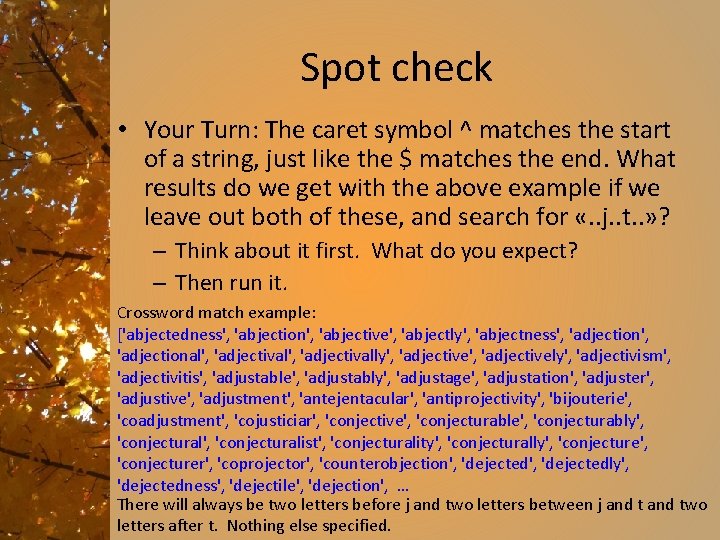

Spot check

- Your turn: the caret symbol `^` matches the start of a string, just as `$` matches the end. What results do we get with the above example if we leave out both of these and search for `..j..t..`?
  - Think about it first. What do you expect?
  - Then run it.

Crossword match example: ['abjectedness', 'abjection', 'abjective', 'abjectly', 'abjectness', 'adjectional', 'adjectivally', 'adjectively', 'adjectivism', 'adjectivitis', 'adjustable', 'adjustably', 'adjustage', 'adjustation', 'adjuster', 'adjustive', 'adjustment', 'antejentacular', 'antiprojectivity', 'bijouterie', 'coadjustment', 'cojusticiar', 'conjective', 'conjecturably', 'conjecturalist', 'conjecturality', 'conjecturally', 'conjecturer', 'coprojector', 'counterobjection', 'dejectedly', 'dejectedness', 'dejectile', 'dejection', …]

There will always be at least two letters before the j, exactly two letters between the j and the t, and at least two letters after the t. Nothing else is specified.
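The effect of the anchors can be reproduced on a small made-up word list in Python 3: the anchored pattern accepts only eight-letter words, while the unanchored one accepts any word that contains the pattern somewhere inside it.

```python
import re

words = ["abjectly", "adjuster", "majestic", "abjection", "object", "adjustable"]

anchored = [w for w in words if re.search(r"^..j..t..$", w)]
unanchored = [w for w in words if re.search(r"..j..t..", w)]

print(anchored)     # ['abjectly', 'adjuster', 'majestic']
print(unanchored)   # ['abjectly', 'adjuster', 'majestic', 'abjection', 'adjustable']
# 'object' fails both: it has no two letters after the t.
```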

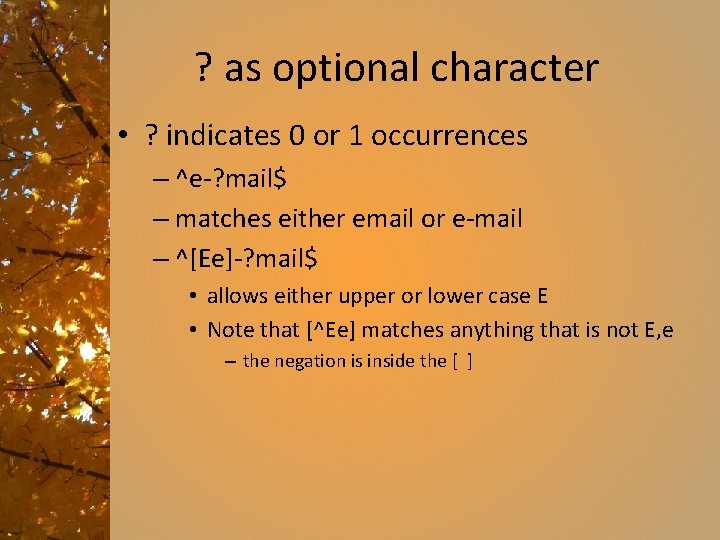

`?` as optional character

- `?` indicates 0 or 1 occurrences.
  - `^e-?mail$` matches either email or e-mail.
  - `^[Ee]-?mail$` allows either upper or lower case E.
- Note that `[^Ee]` matches anything that is not E or e: the negation is inside the `[ ]`.

![Texting example](https://slidetodoc.com/presentation_image_h/a66255602993143b65f735968ec79de1/image-34.jpg)

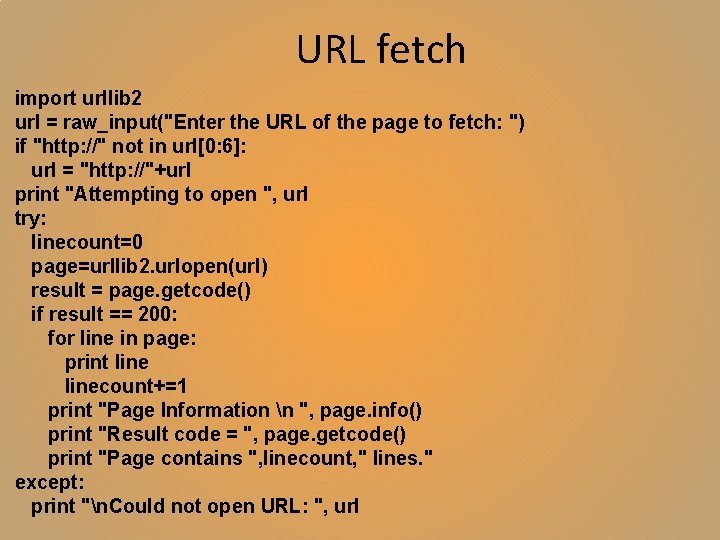

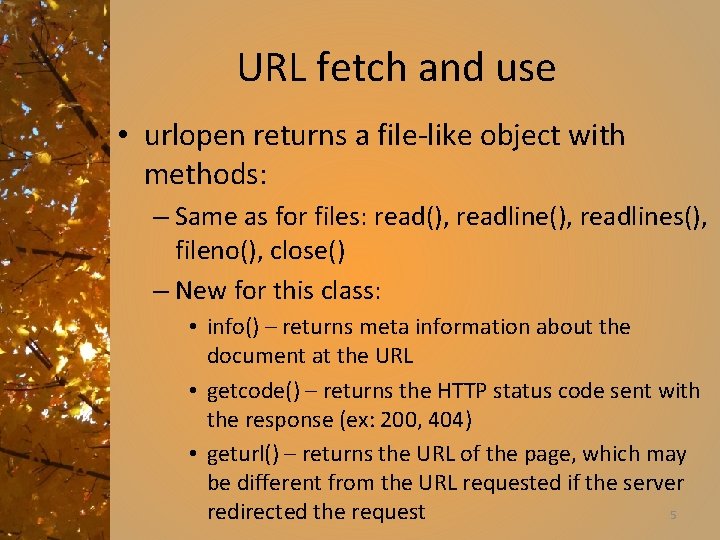

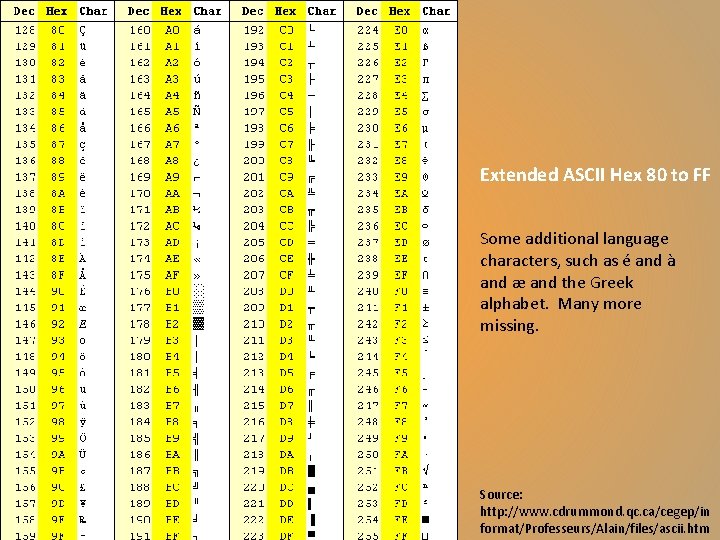

Texting example

```python
[w for w in wordlist if re.search('^[ghi][mno][jlk][def]$', w)]
```

- First letter from ghi, second from mno, then jlk, then def: ['gold', 'golf', 'hold', 'hole']
- Take away the `^` and `$`, and any word that contains such a four-letter sequence matches: [… 'tinkerlike', 'tinkerly', 'tinkershire', 'tinkershue', 'tinkerwise', 'tinlet', 'titleholder', 'toolholding', 'touchhole', 'trainless', 'traphole', 'trinkerman', 'trinketer', 'trinketry', 'trinkety', 'trioleate', 'triolefin', 'trioleic', …]

![Python use of re](https://slidetodoc.com/presentation_image_h/a66255602993143b65f735968ec79de1/image-35.jpg)

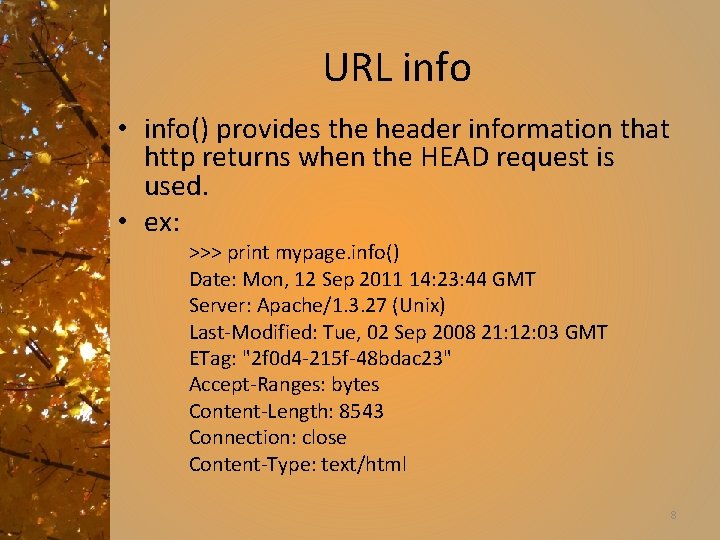

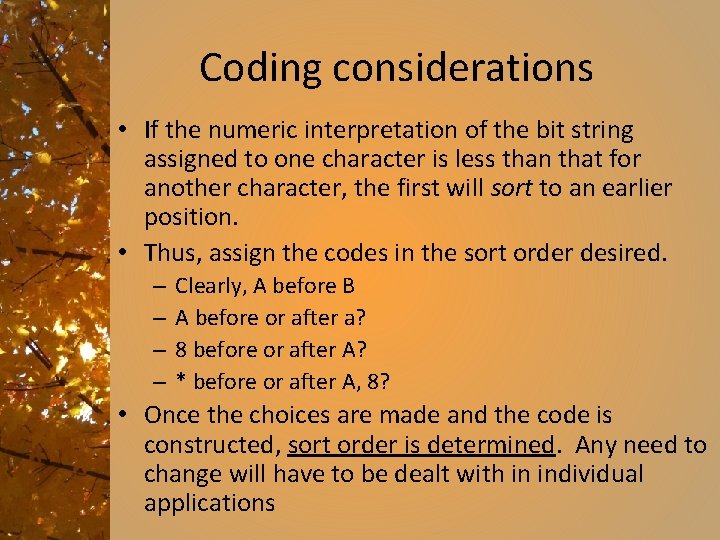

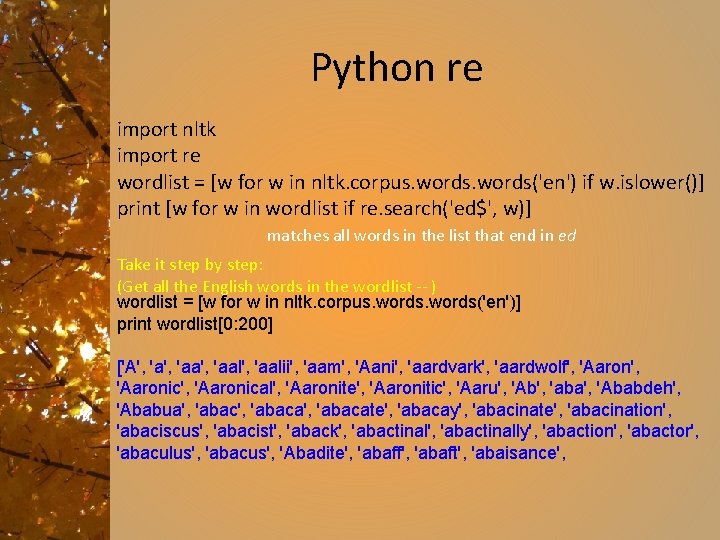

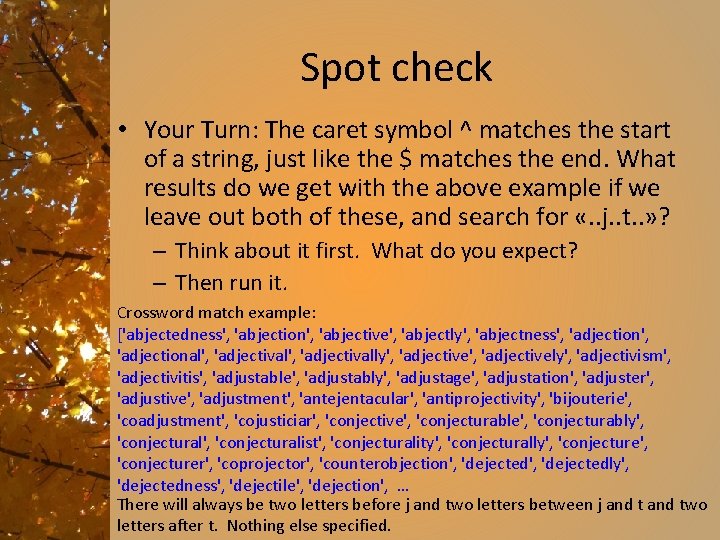

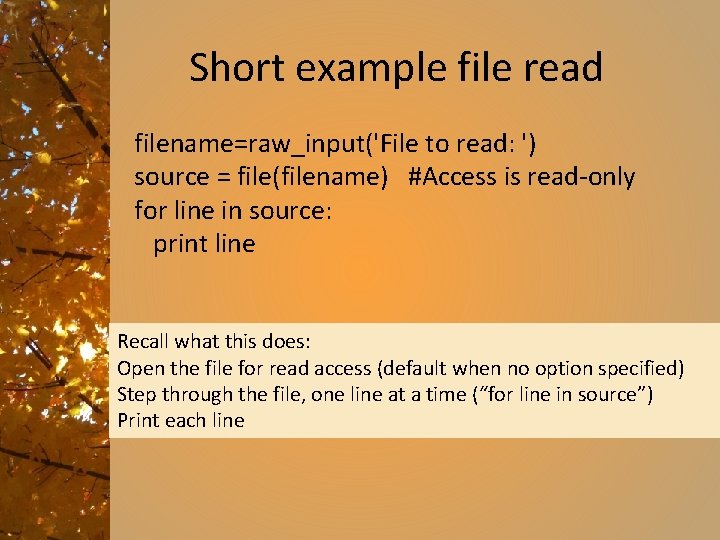

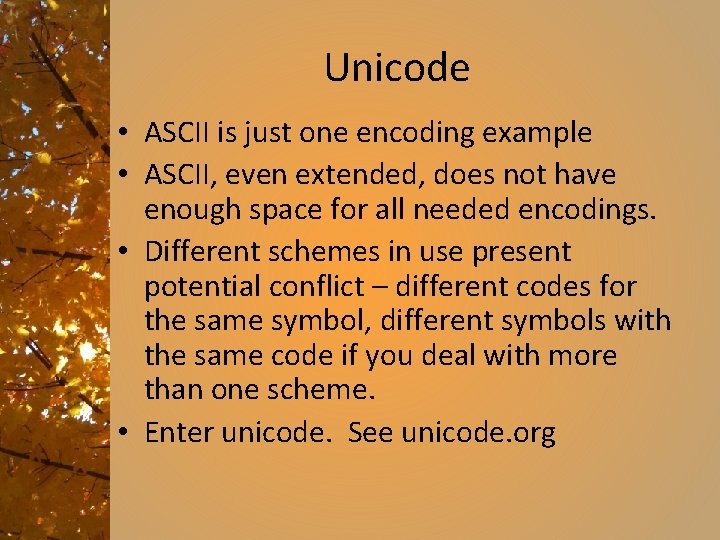

Python use of re

- `re.search(pattern, string[, flags])` scans through string looking for pattern. It returns a match object if the pattern is found, and None if not.
- `re.match(pattern, string)`: if zero or more characters at the beginning of string match the RE pattern, return a corresponding MatchObject instance. Return None if the string does not match the pattern.
- `re.split(pattern, string)` splits string by occurrences of pattern.

From http://docs.python.org/library/re.html (some options not included).
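A small Python 3 illustration of the difference between `re.search()` and `re.match()`; the sample sentence is arbitrary.

```python
import re

s = "The cat sat on the mat"

print(re.search(r"cat", s))          # a match object: 'cat' found at index 4
print(re.match(r"cat", s))           # None: match() only tries the start of s
print(re.match(r"The", s).group())   # The
print(re.search(r"dog", s))          # None: not found anywhere

m = re.search(r"[cm]at", s)          # first (leftmost) match only
print(m.group(), m.span())           # cat (4, 7)

print(re.split(r" ", s))             # ['The', 'cat', 'sat', 'on', 'the', 'mat']
```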

![Some shortened forms](https://slidetodoc.com/presentation_image_h/a66255602993143b65f735968ec79de1/image-36.jpg)

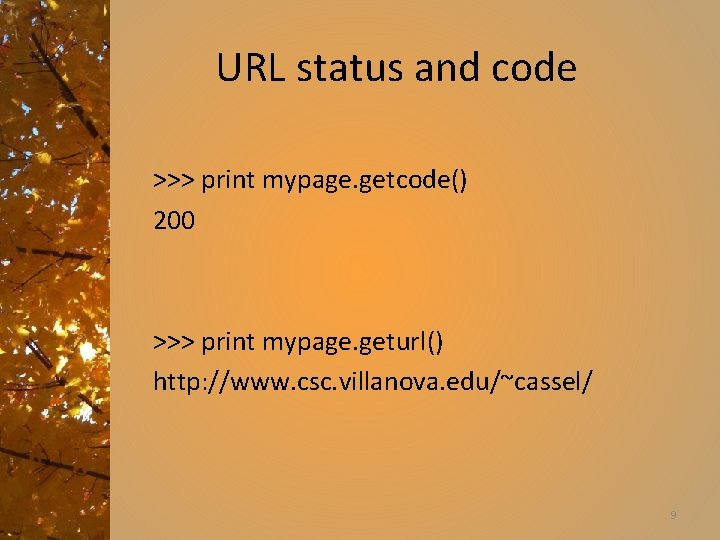

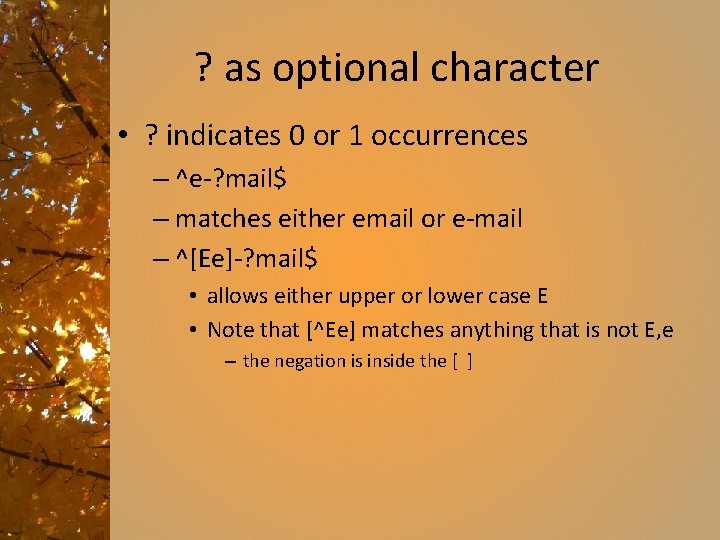

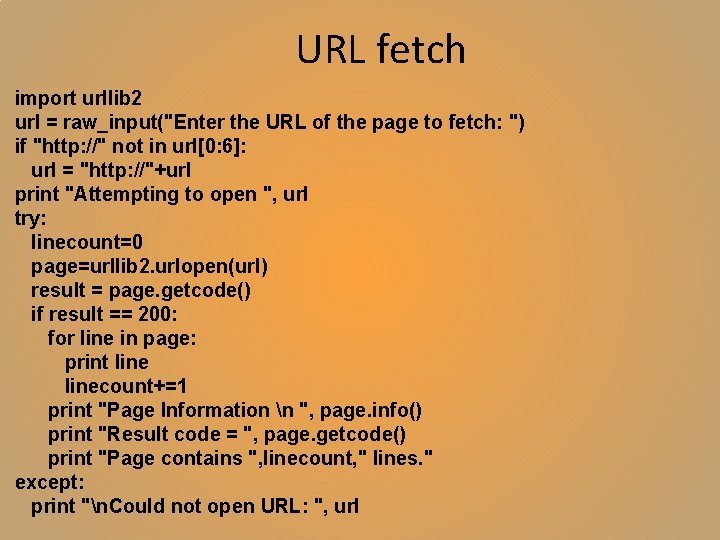

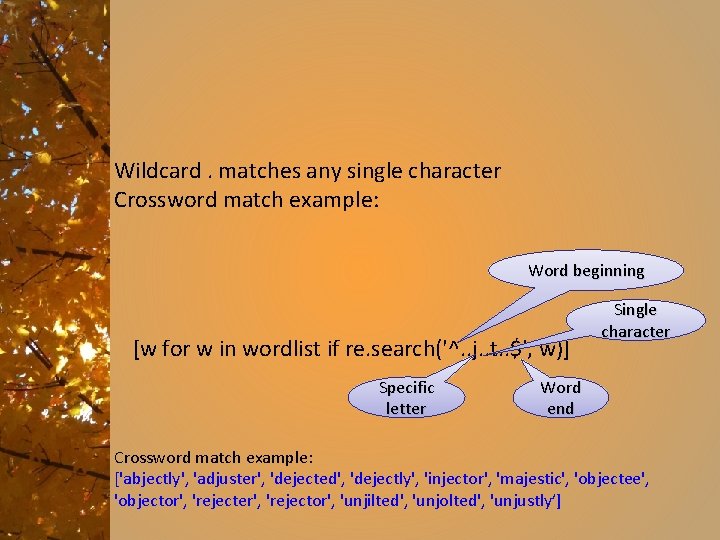

Some shortened forms

- `\w` = word class: equivalent to `[a-zA-Z0-9_]`
- `\W` = complement of `\w`: all characters other than letters, digits, and underscore

```python
>>> re.split(r'\W+', 'Words, words, words.')
['Words', 'words', 'words', '']
>>> re.split(r'(\W+)', 'Words, words, words.')
['Words', ', ', 'words', ', ', 'words', '.', '']
>>> re.split(r'\W+', 'Words, words, words.', maxsplit=1)
['Words', 'words, words.']
```

"If capturing parentheses are used in pattern, then the text of all groups in the pattern are also returned as part of the resulting list." Thus, the split is on the non-alphanumeric characters, but those characters are included in the resulting list.

```python
>>> re.split('[a-f]+', '0a3B9', flags=re.IGNORECASE)
['0', '3', '9']
```

Ref: http://docs.python.org/library/re.html

![re.findall](https://slidetodoc.com/presentation_image_h/a66255602993143b65f735968ec79de1/image-37.jpg)

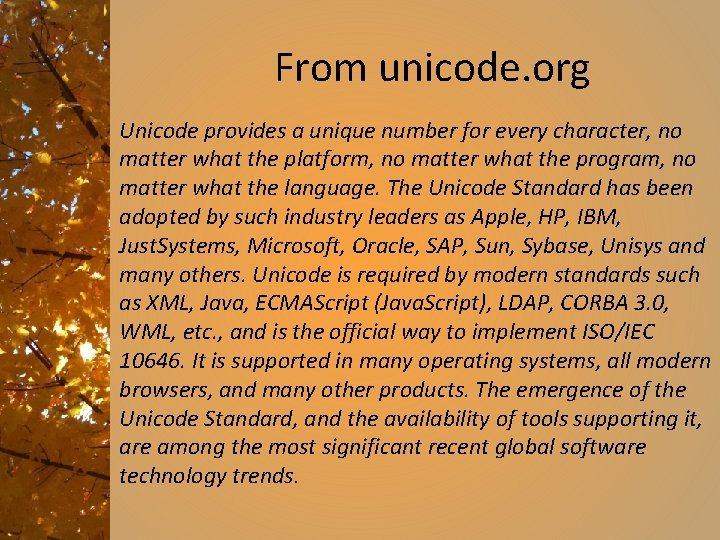

- `re.findall(pattern, string[, flags])` returns all non-overlapping matches of pattern in string, as a list of strings. The string is scanned left to right, and matches are returned in the order found.
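One detail worth knowing before the stemming examples later on: when the pattern contains groups, `re.findall()` returns the groups instead of the whole match (one tuple per match when there are several groups). The phone-number-like strings below are made up.

```python
import re

text = "call 555-1234 or 555-9876"

whole = re.findall(r"\d{3}-\d{4}", text)          # no groups: whole matches
pairs = re.findall(r"(\d{3})-(\d{4})", text)      # two groups: tuples
prefixes = re.findall(r"(\d{3})-\d{4}", text)     # one group: just that group

print(whole)      # ['555-1234', '555-9876']
print(pairs)      # [('555', '1234'), ('555', '9876')]
print(prefixes)   # ['555', '555']
print(re.findall(r"aa", "aaaa"))   # ['aa', 'aa']  matches do not overlap
```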

![Applications of re](https://slidetodoc.com/presentation_image_h/a66255602993143b65f735968ec79de1/image-38.jpg)

Applications of re

- Extract word pieces:

```python
>>> word = 'supercalifragilisticexpialidocious'
>>> re.findall(r'[aeiou]', word)
['u', 'e', 'a', 'i', 'a', 'i', 'i', 'i', 'e', 'i', 'a', 'i', 'o', 'i', 'o', 'u']
>>> len(re.findall(r'[aeiou]', word))
16
```

- Another: count the sequences of two or more vowels in the Wall Street Journal sample of the Penn Treebank:

```python
>>> wsj = sorted(set(nltk.corpus.treebank.words()))
>>> fd = nltk.FreqDist(vs for word in wsj
...                    for vs in re.findall(r'[aeiou]{2,}', word))
>>> fd.items()   # sorted by frequency in NLTK 2; use fd.most_common() in NLTK 3
```

Output when run as a script (re2.py):

[('io', 549), ('ea', 476), ('ie', 331), ('ou', 329), ('ai', 261), ('ia', 253), ('ee', 217), ('oo', 174), ('ua', 109), ('au', 106), ('ue', 105), ('ui', 95), ('ei', 86), ('oi', 65), ('oa', 59), ('eo', 39), ('iou', 27), ('eu', 18), ('oe', 15), ('iu', 14), ('ae', 11), ('eau', 10), ('uo', 8), ('ao', 6), ('oui', 6), ('eou', 5), ('uee', 4), ('aa', 3), ('ieu', 3), ('uie', 3), ('eei', 2), ('aia', 1), ('aiia', 1), ('eea', 1), ('iai', 1), ('iao', 1), ('ioa', 1), ('oei', 1), ('ooi', 1), ('ueui', 1), ('uu', 1)]
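Without NLTK, `collections.Counter` can play the role of `FreqDist`. This sketch counts runs of two or more vowels in a few arbitrary words rather than the treebank vocabulary, so its counts are illustrative only.

```python
import re
from collections import Counter

word = "supercalifragilisticexpialidocious"
vowels = re.findall(r"[aeiou]", word)
print(len(vowels))   # 16

# Stand-in vocabulary instead of nltk.corpus.treebank.words().
words = ["audio", "ratio", "beautiful", "queue", "book", "obvious"]
fd = Counter(vs for w in words for vs in re.findall(r"[aeiou]{2,}", w))
print(fd.most_common())   # vowel sequences, most frequent first
```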

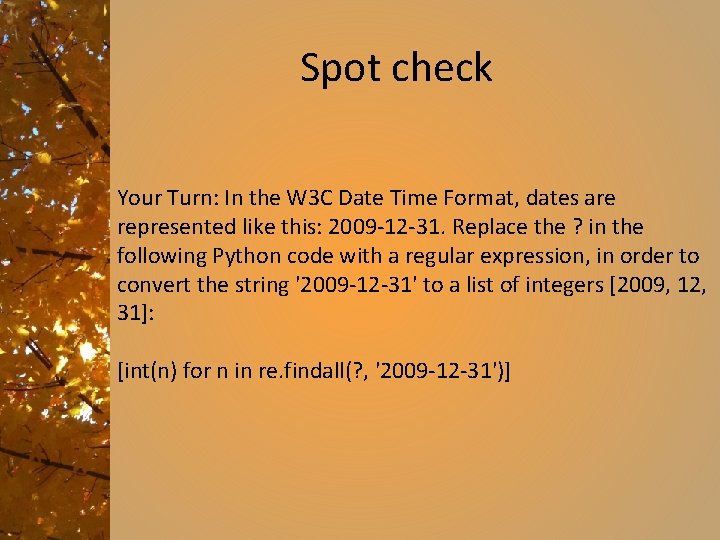

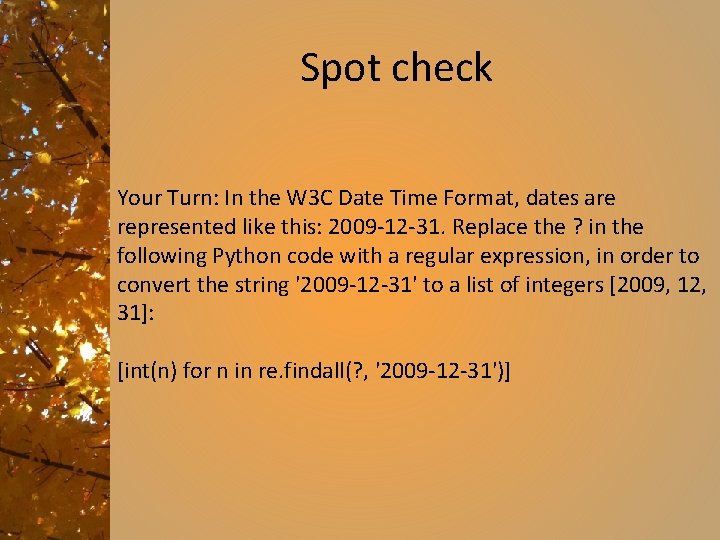

Spot check

Your turn: in the W3C Date Time Format, dates are represented like this: 2009-12-31. Replace the ? in the following Python code with a regular expression, in order to convert the string '2009-12-31' to a list of integers [2009, 12, 31]:

```python
[int(n) for n in re.findall(?, '2009-12-31')]
```

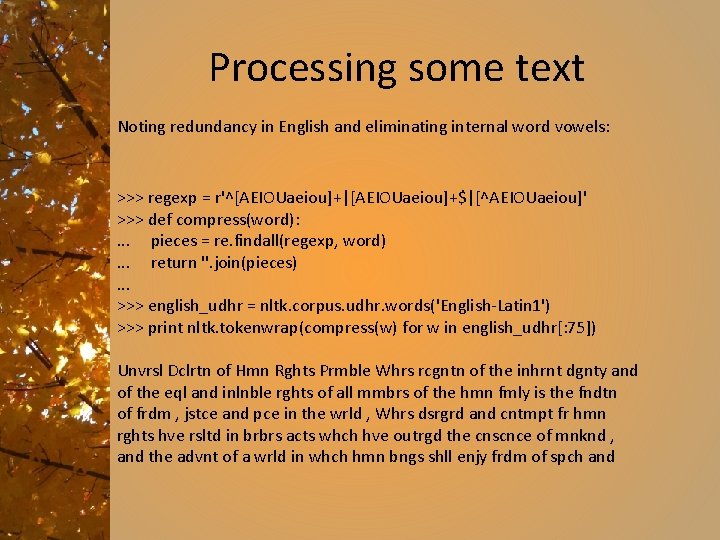

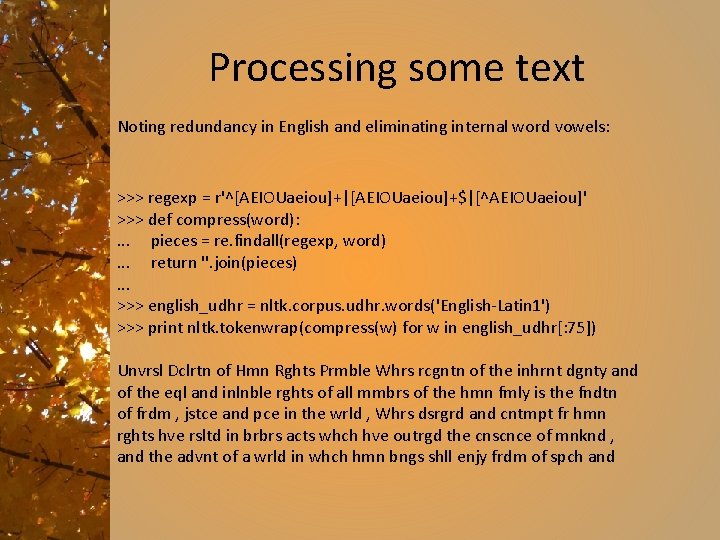

Processing some text

Noting redundancy in English and eliminating word-internal vowels:

```python
>>> regexp = r'^[AEIOUaeiou]+|[AEIOUaeiou]+$|[^AEIOUaeiou]'
>>> def compress(word):
...     pieces = re.findall(regexp, word)
...     return ''.join(pieces)
...
>>> english_udhr = nltk.corpus.udhr.words('English-Latin1')
>>> print nltk.tokenwrap(compress(w) for w in english_udhr[:75])
Unvrsl Dclrtn of Hmn Rghts Prmble Whrs rcgntn of the inhrnt dgnty and of the eql and inlnble rghts of all mmbrs of the hmn fmly is the fndtn of frdm , jstce and pce in the wrld , Whrs dsrgrd and cntmpt fr hmn rghts hve rsltd in brbrs acts whch hve outrgd the cnscnce of mnknd , and the advnt of a wrld in whch hmn bngs shll enjy frdm of spch and
```
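A self-contained Python 3 version of `compress()`, applied to a short phrase instead of the UDHR corpus. Vowels survive only at the start or end of a word, because only the first two alternatives can match them.

```python
import re

# Initial vowels | final vowels | any non-vowel; other vowels are skipped.
regexp = r"^[AEIOUaeiou]+|[AEIOUaeiou]+$|[^AEIOUaeiou]"


def compress(word):
    pieces = re.findall(regexp, word)
    return "".join(pieces)


phrase = "Universal Declaration of Human Rights"
result = " ".join(compress(w) for w in phrase.split())
print(result)   # Unvrsl Dclrtn of Hmn Rghts
```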

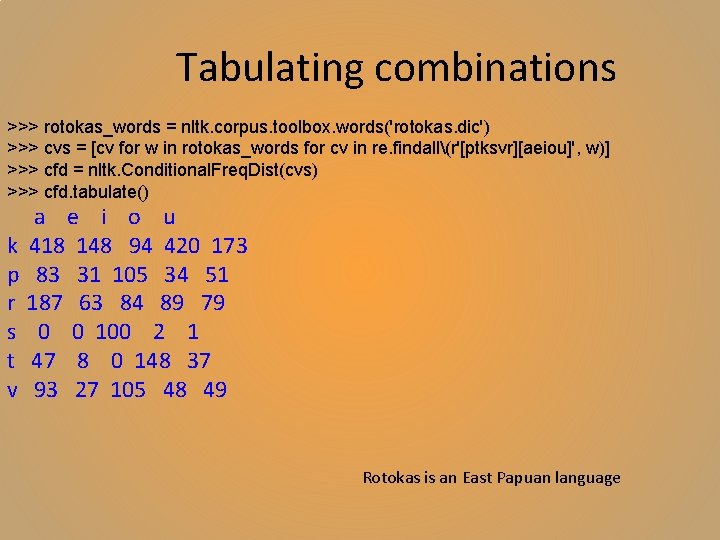

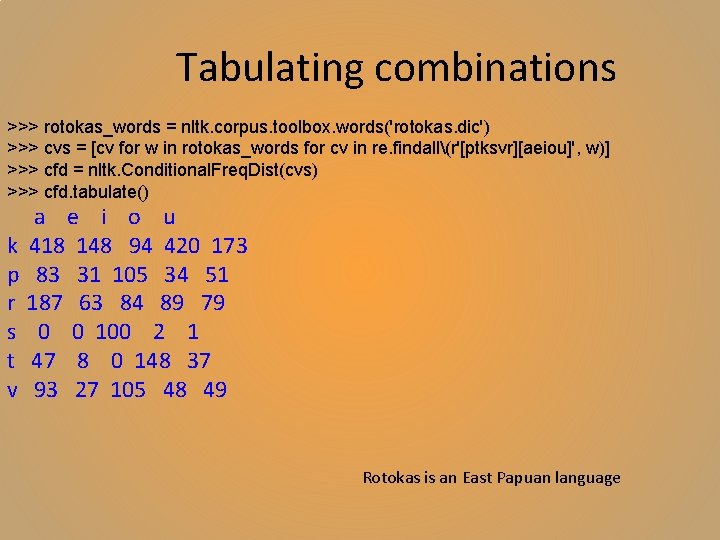

Tabulating combinations

```python
>>> rotokas_words = nltk.corpus.toolbox.words('rotokas.dic')
>>> cvs = [cv for w in rotokas_words for cv in re.findall(r'[ptksvr][aeiou]', w)]
>>> cfd = nltk.ConditionalFreqDist(cvs)
>>> cfd.tabulate()
     a    e    i    o    u
k  418  148   94  420  173
p   83   31  105   34   51
r  187   63   84   89   79
s    0    0  100    2    1
t   47    8    0  148   37
v   93   27  105   48   49
```

Rotokas is an East Papuan language.
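The same consonant-vowel tabulation can be sketched with `collections.Counter` in place of `ConditionalFreqDist`. The five words are taken from the index listing in this section, so the counts describe only this small sample, not the Rotokas dictionary.

```python
import re
from collections import Counter

words = ["kasuari", "kaapo", "kapoa", "kepoi", "kaporo"]
pairs = Counter(cv for w in words for cv in re.findall(r"[ptksvr][aeiou]", w))

consonants, vowels = "kprstv", "aeiou"
print("   " + "".join("%5s" % v for v in vowels))
for c in consonants:
    # Counter returns 0 for pairs that never occurred.
    print("%-3s" % c + "".join("%5d" % pairs[c + v] for v in vowels))
```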

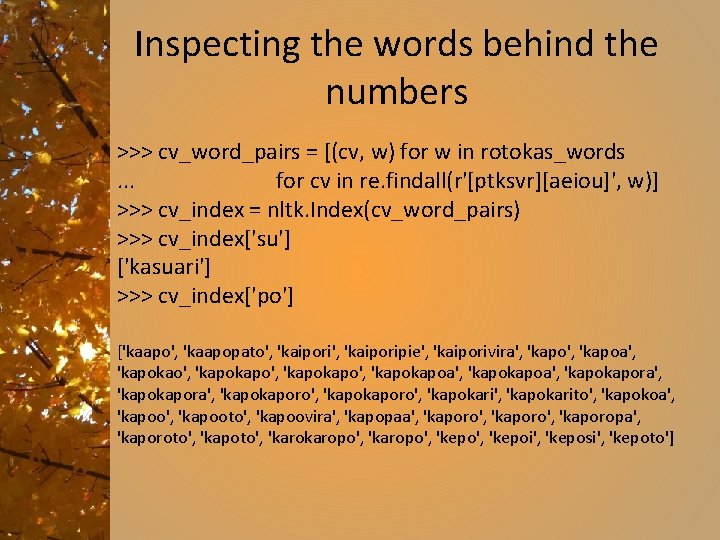

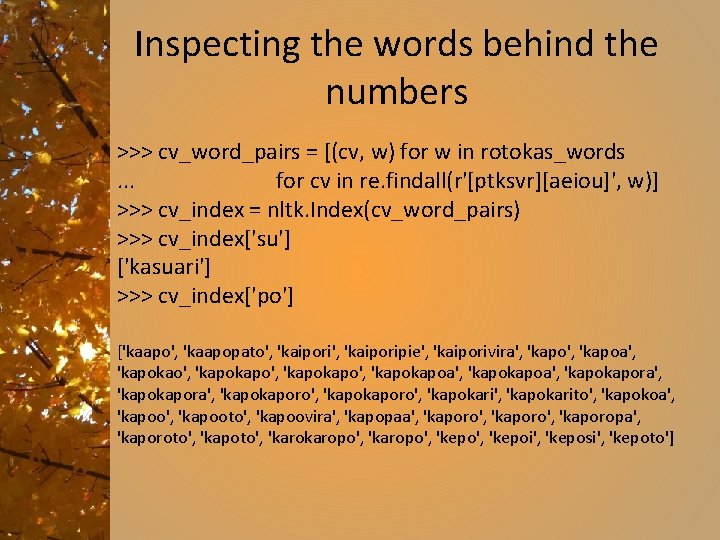

Inspecting the words behind the numbers

```python
>>> cv_word_pairs = [(cv, w) for w in rotokas_words
...                  for cv in re.findall(r'[ptksvr][aeiou]', w)]
>>> cv_index = nltk.Index(cv_word_pairs)
>>> cv_index['su']
['kasuari']
>>> cv_index['po']
['kaapo', 'kaapopato', 'kaiporipie', 'kaiporivira', 'kapoa', 'kapokao', 'kapokapo', 'kapokapoa', 'kapokapora', 'kapokaporo', 'kapokarito', 'kapokoa', 'kapooto', 'kapoovira', 'kapopaa', 'kaporo', 'kaporopa', 'kaporoto', 'kapoto', 'karopo', 'kepoi', 'keposi', 'kepoto']
```
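`nltk.Index` appears to behave like a `defaultdict(list)` filled from (key, value) pairs. A plain-Python sketch of the same idea, on a few words taken from the listing above:

```python
import re
from collections import defaultdict

words = ["kasuari", "kaapo", "kapoa", "kepoi", "kaporo"]

cv_index = defaultdict(list)          # missing keys start as empty lists
for w in words:
    for cv in re.findall(r"[ptksvr][aeiou]", w):
        cv_index[cv].append(w)

print(cv_index["su"])   # ['kasuari']
print(cv_index["po"])   # ['kaapo', 'kapoa', 'kepoi', 'kaporo']
```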

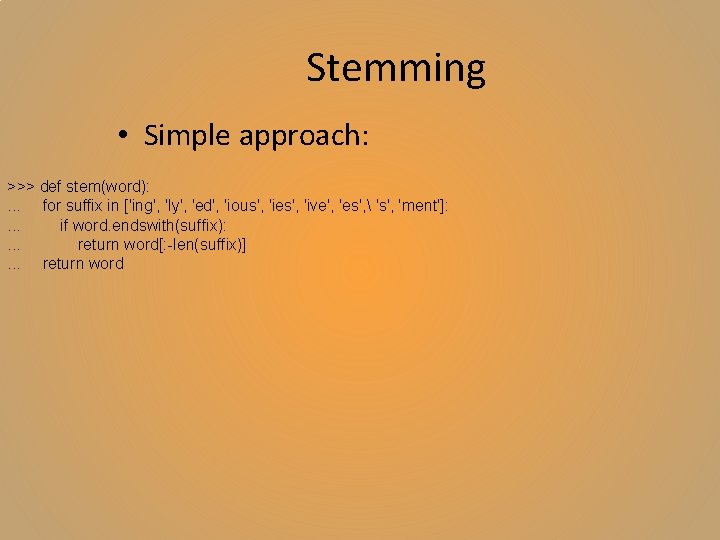

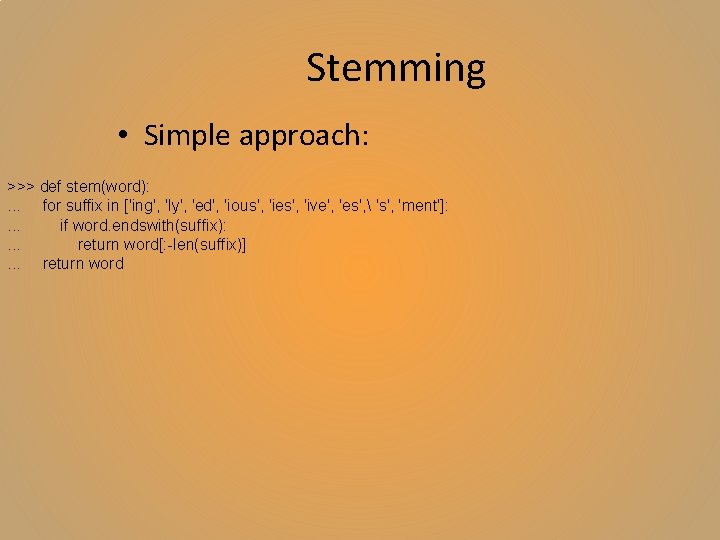

Stemming

- Simple approach:

```python
>>> def stem(word):
...     for suffix in ['ing', 'ly', 'ed', 'ious', 'ies', 'ive', 'es', 's', 'ment']:
...         if word.endswith(suffix):
...             return word[:-len(suffix)]
...     return word
```

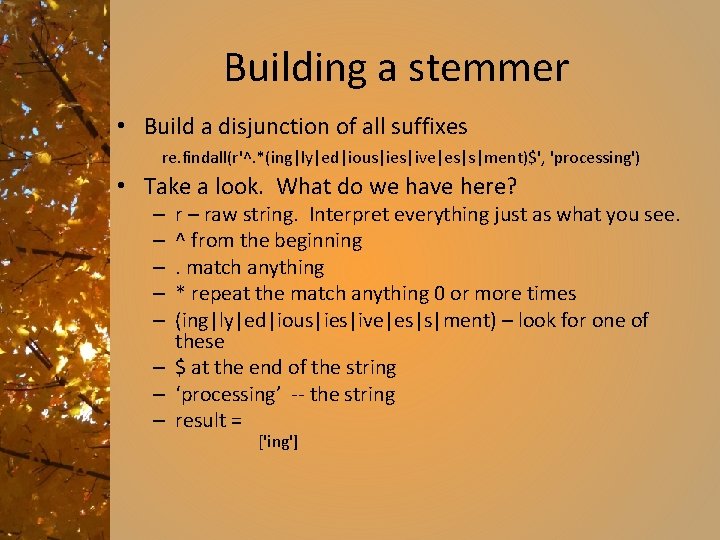

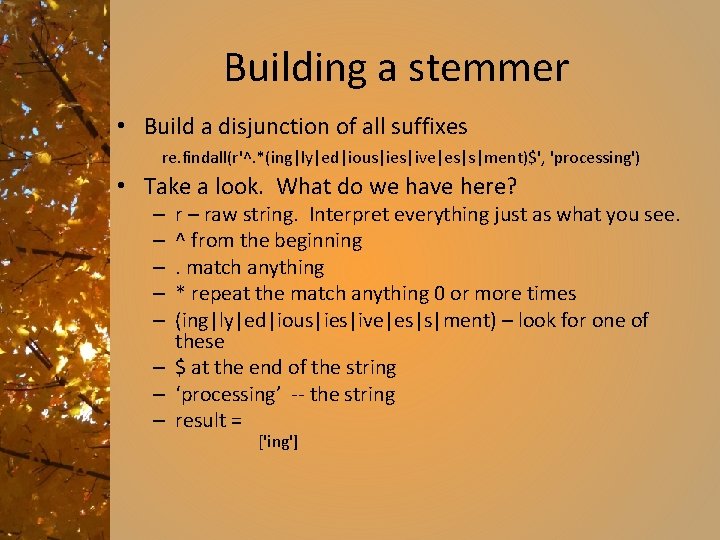

Building a stemmer

- Build a disjunction of all suffixes:

```python
>>> re.findall(r'^.*(ing|ly|ed|ious|ies|ive|es|s|ment)$', 'processing')
['ing']
```

- Take a look. What do we have here?
  - `r`: raw string. Interpret everything just as you see it.
  - `^`: from the beginning
  - `.`: match any character
  - `*`: repeat the match-anything 0 or more times
  - `(ing|ly|ed|ious|ies|ive|es|s|ment)`: look for one of these
  - `$`: at the end of the string
  - `'processing'`: the string
  - Result: `['ing']`. Because the pattern contains a group, findall returns only the part matched by the group, not the whole word.

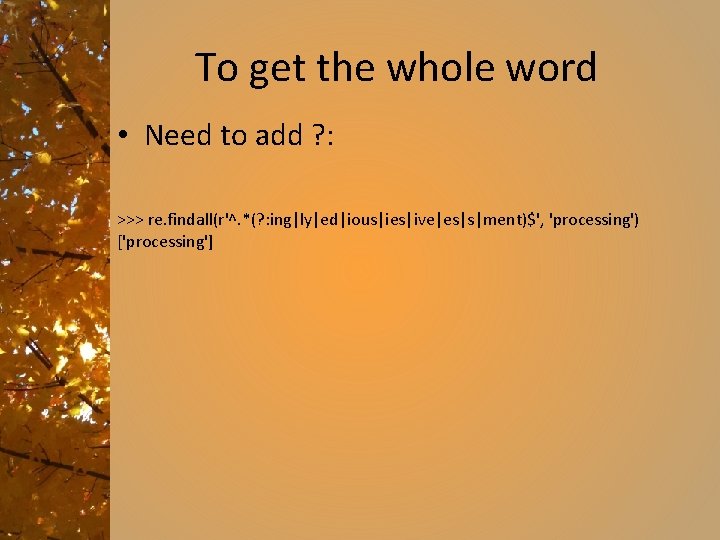

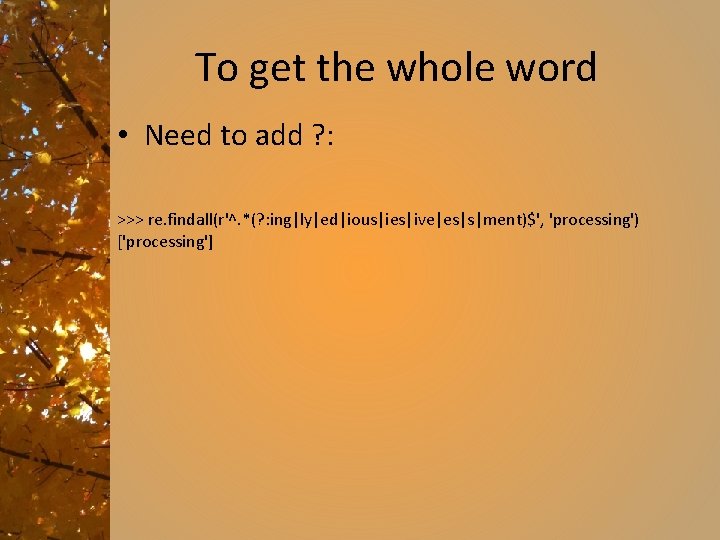

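To get the whole word

- Add `?:` at the start of the group. This makes it a non-capturing group, so findall returns the whole match:

```python
>>> re.findall(r'^.*(?:ing|ly|ed|ious|ies|ive|es|s|ment)$', 'processing')
['processing']
```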

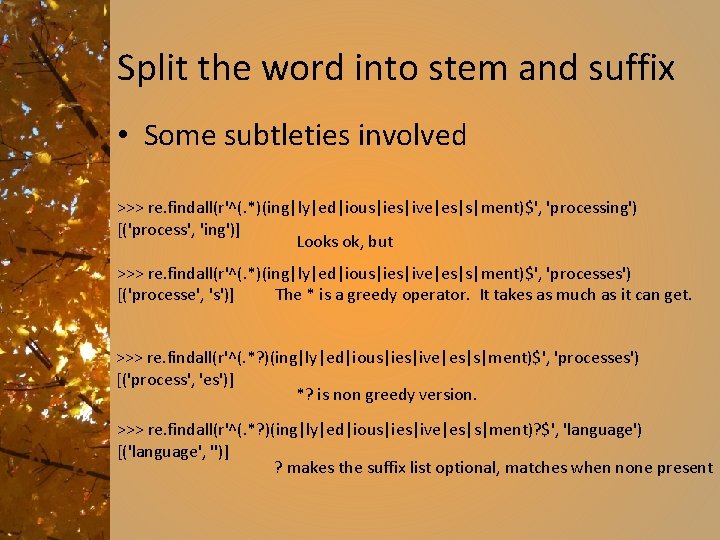

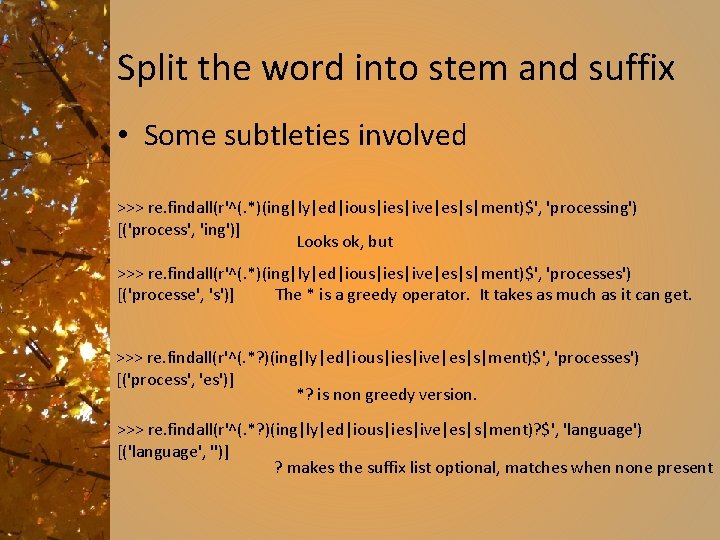

Split the word into stem and suffix

- Some subtleties are involved.

```python
>>> re.findall(r'^(.*)(ing|ly|ed|ious|ies|ive|es|s|ment)$', 'processing')
[('process', 'ing')]
```

Looks OK, but:

```python
>>> re.findall(r'^(.*)(ing|ly|ed|ious|ies|ive|es|s|ment)$', 'processes')
[('processe', 's')]
```

The `*` is a greedy operator: it takes as much as it can get. `*?` is the non-greedy version:

```python
>>> re.findall(r'^(.*?)(ing|ly|ed|ious|ies|ive|es|s|ment)$', 'processes')
[('process', 'es')]
```

A `?` after the group makes the suffix list optional, so the pattern also matches when no suffix is present:

```python
>>> re.findall(r'^(.*?)(ing|ly|ed|ious|ies|ive|es|s|ment)?$', 'language')
[('language', '')]
```

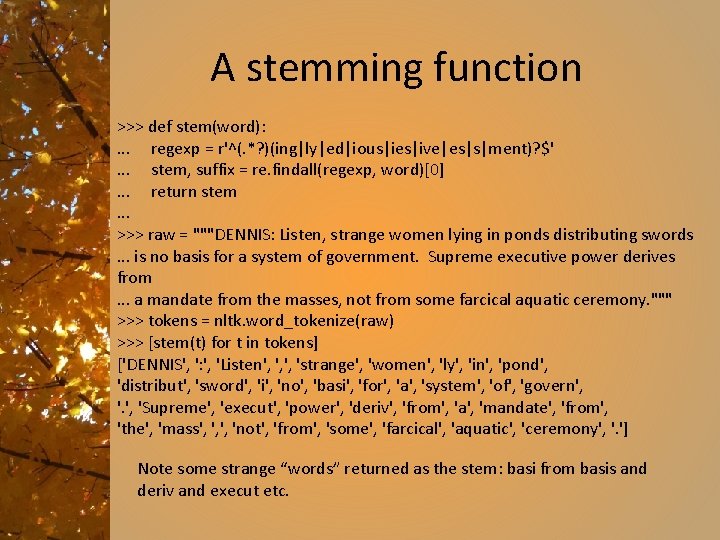

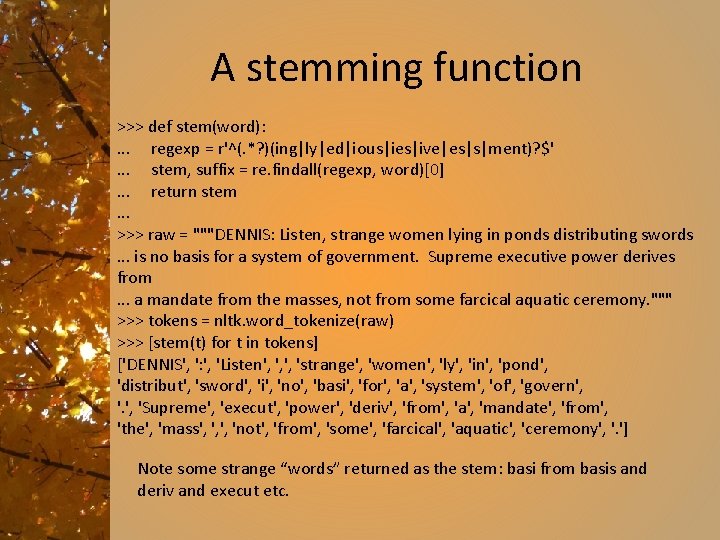

A stemming function

```python
>>> def stem(word):
...     regexp = r'^(.*?)(ing|ly|ed|ious|ies|ive|es|s|ment)?$'
...     stem, suffix = re.findall(regexp, word)[0]
...     return stem
...
>>> raw = """DENNIS: Listen, strange women lying in ponds distributing swords
... is no basis for a system of government.  Supreme executive power derives from
... a mandate from the masses, not from some farcical aquatic ceremony."""
>>> tokens = nltk.word_tokenize(raw)
>>> [stem(t) for t in tokens]
['DENNIS', ':', 'Listen', ',', 'strange', 'women', 'ly', 'in', 'pond', 'distribut', 'sword', 'i', 'no', 'basi', 'for', 'a', 'system', 'of', 'govern', 'Supreme', 'execut', 'power', 'deriv', 'from', 'a', 'mandate', 'from', 'the', 'mass', ',', 'not', 'from', 'some', 'farcical', 'aquatic', 'ceremony', '.']
```

Note some strange "words" returned as the stem: basi from basis, and deriv, execut, etc.

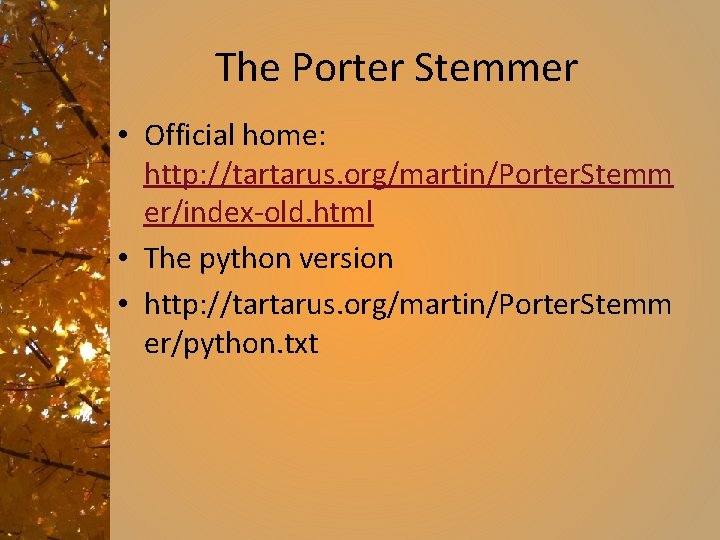

The Porter Stemmer

- Official home: http://tartarus.org/martin/PorterStemmer/index-old.html
- The Python version: http://tartarus.org/martin/PorterStemmer/python.txt

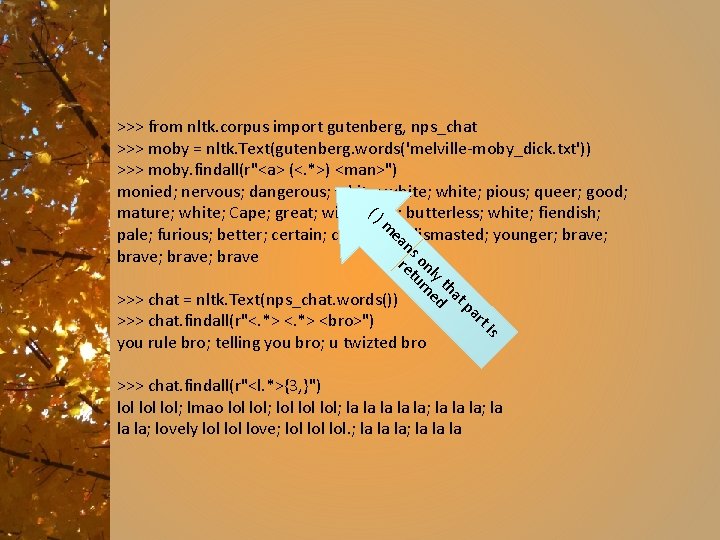

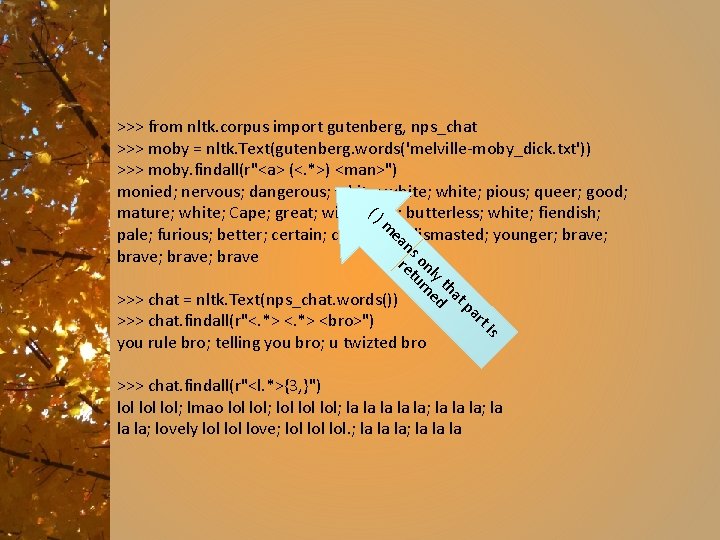

```python
>>> from nltk.corpus import gutenberg, nps_chat
>>> moby = nltk.Text(gutenberg.words('melville-moby_dick.txt'))
>>> moby.findall(r"<a> (<.*>) <man>")
monied; nervous; dangerous; white; pious; queer; good; mature; white; Cape; great; wise; butterless; white; fiendish; dismasted; younger; brave; pale; furious; better; certain; complete; brave; brave
>>> chat = nltk.Text(nps_chat.words())
>>> chat.findall(r"<.*> <.*> <bro>")
you rule bro; telling you bro; u twizted bro
>>> chat.findall(r"<l.*>{3,}")
lol lol; lmao lol; lol lol; la la la; lovely lol love; lol lol.; la la la
```

The parentheses in the first pattern mean that only the part inside them (the word between "a" and "man") is returned.
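`nltk.Text.findall()` searches over tokens rather than characters. One way to see how that can work with plain `re`: wrap each token in angle brackets, then rewrite the pattern so that each `<...>` stays inside a single token and `.` cannot run past a `>`. This is a simplified, hypothetical re-implementation (`token_findall` is our own name, not NLTK's code), and it assumes the pattern has at most one group.

```python
import re


def token_findall(tokens, pattern):
    """Find token sequences matching a <...>-style pattern; at most one group."""
    raw = "".join("<%s>" % t for t in tokens)       # e.g. '<a><brave><man>'
    regexp = re.sub(r"\s", "", pattern)              # spaces between <...> are ignored
    regexp = re.sub(r"<", "(?:<(?:", regexp)         # each <...> becomes a group ...
    regexp = re.sub(r">", ")>)", regexp)             # ... matching one whole token
    regexp = re.sub(r"(?<!\\)\.", "[^>]", regexp)    # '.' must not cross a token end
    hits = re.findall(regexp, raw)
    return [h[1:-1].split("><") for h in hits]       # '<x><y>' -> ['x', 'y']


tokens = "a brave man and a good man met a whale".split()
print(token_findall(tokens, "<a> (<.*>) <man>"))          # [['brave'], ['good']]
print(token_findall("lol lol lmao hi".split(), "<l.*>{3,}"))  # [['lol', 'lol', 'lmao']]
```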

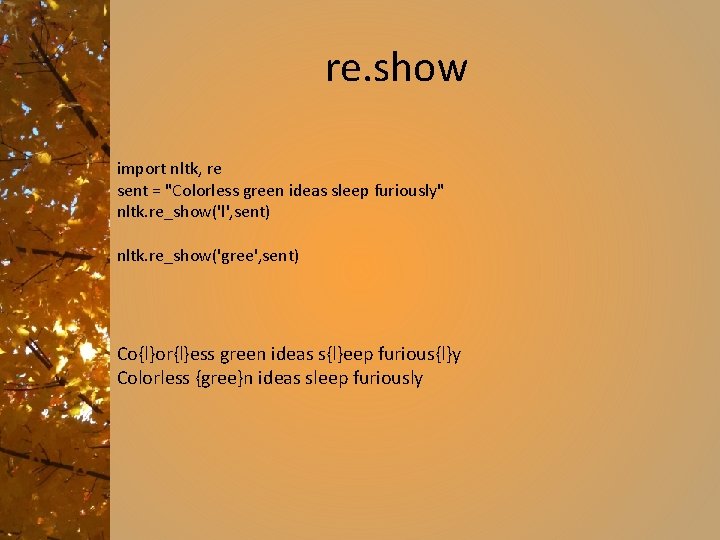

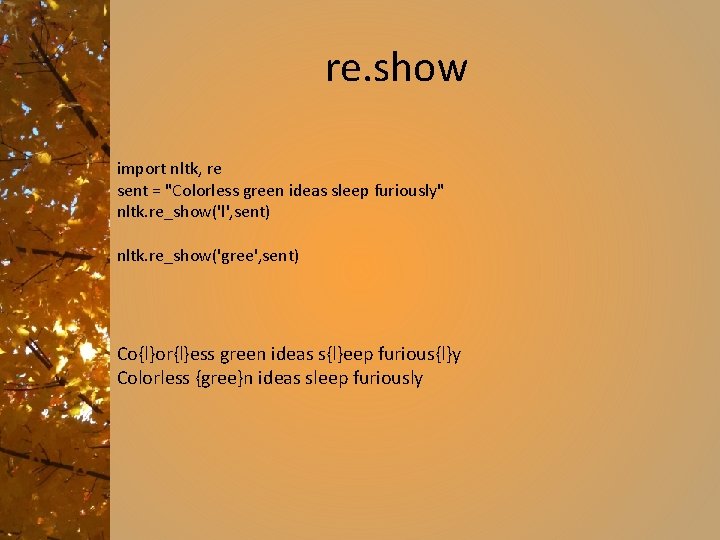

re_show

```python
import nltk, re
sent = "Colorless green ideas sleep furiously"
nltk.re_show('l', sent)
nltk.re_show('gree', sent)
```

Output:

```
Co{l}or{l}ess green ideas s{l}eep furious{l}y
Colorless {gree}n ideas sleep furiously
```
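`nltk.re_show()` prints the string with each match wrapped in braces. A stand-in can be written with `re.sub()` and a replacement function; `re_show` here is a hypothetical helper that returns the marked-up string instead of printing it.

```python
import re


def re_show(pattern, string, left="{", right="}"):
    """Return string with every match of pattern wrapped in braces."""
    return re.sub(pattern, lambda m: left + m.group() + right, string)


sent = "Colorless green ideas sleep furiously"
print(re_show("l", sent))      # Co{l}or{l}ess green ideas s{l}eep furious{l}y
print(re_show("gree", sent))   # Colorless {gree}n ideas sleep furiously
```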

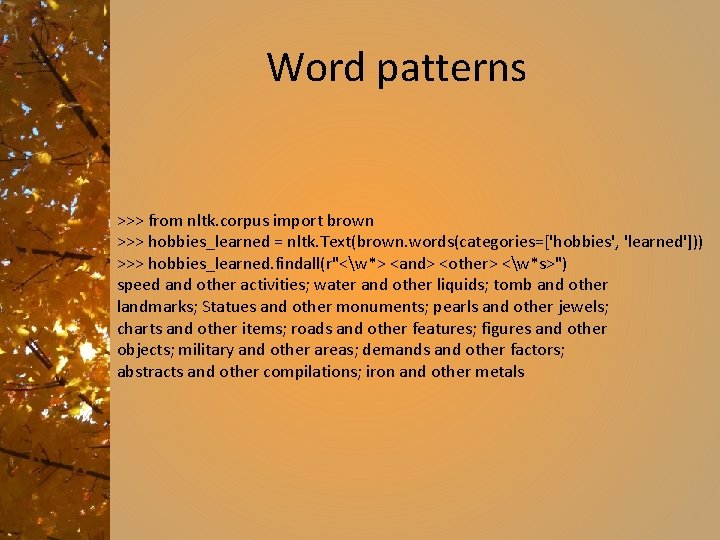

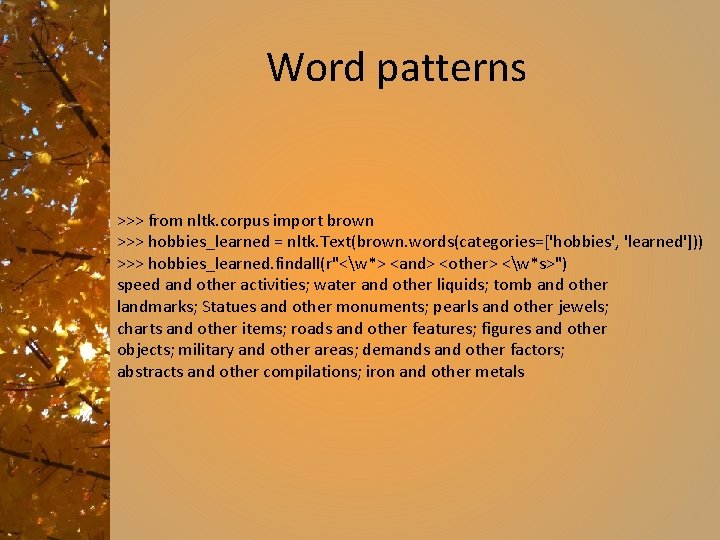

Word patterns

```python
>>> from nltk.corpus import brown
>>> hobbies_learned = nltk.Text(brown.words(categories=['hobbies', 'learned']))
>>> hobbies_learned.findall(r"<\w*> <and> <other> <\w*s>")
speed and other activities; water and other liquids; tomb and other landmarks; Statues and other monuments; pearls and other jewels; charts and other items; roads and other features; figures and other objects; military and other areas; demands and other factors; abstracts and other compilations; iron and other metals
```

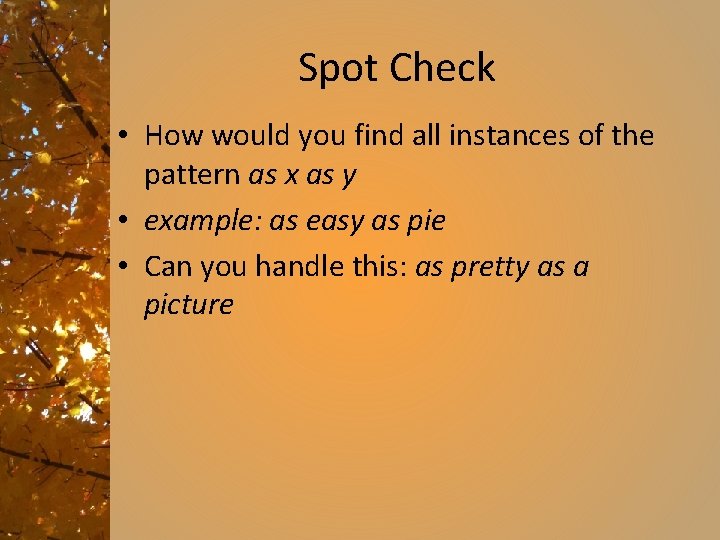

Spot Check

- How would you find all instances of the pattern "as x as y"?
  - Example: as easy as pie
- Can you handle this: as pretty as a picture?

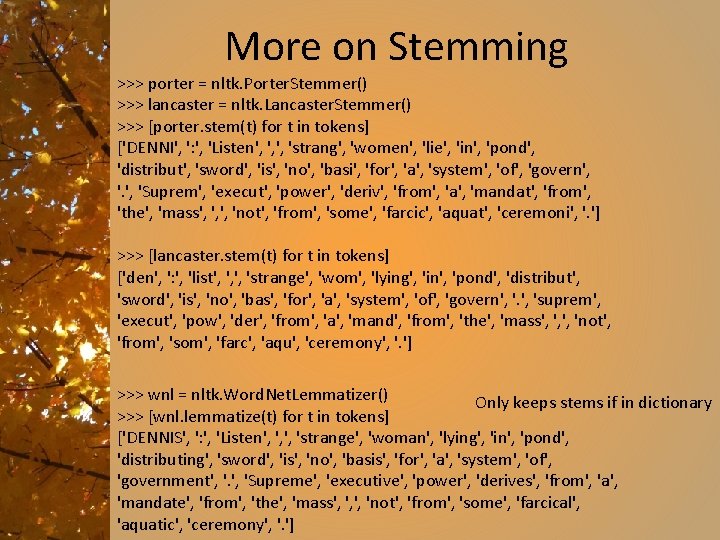

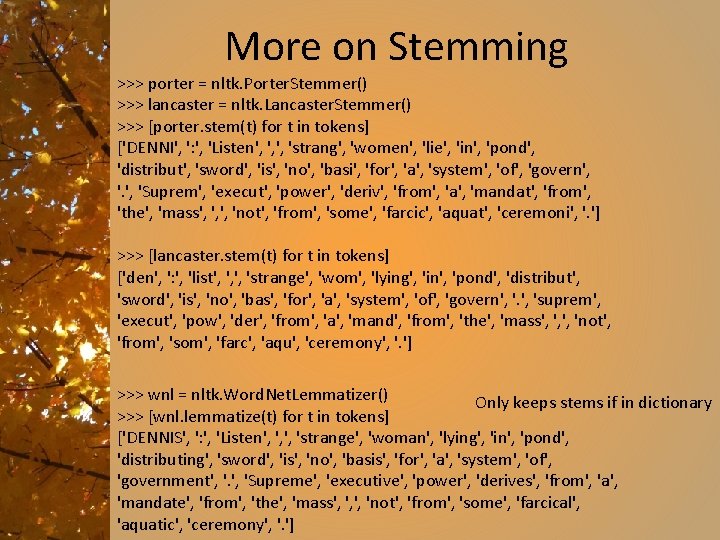

More on Stemming

```python
>>> porter = nltk.PorterStemmer()
>>> lancaster = nltk.LancasterStemmer()
>>> [porter.stem(t) for t in tokens]
['DENNI', ':', 'Listen', ',', 'strang', 'women', 'lie', 'in', 'pond', 'distribut', 'sword', 'is', 'no', 'basi', 'for', 'a', 'system', 'of', 'govern', 'Suprem', 'execut', 'power', 'deriv', 'from', 'a', 'mandat', 'from', 'the', 'mass', ',', 'not', 'from', 'some', 'farcic', 'aquat', 'ceremoni', '.']
>>> [lancaster.stem(t) for t in tokens]
['den', ':', 'list', ',', 'strange', 'wom', 'lying', 'in', 'pond', 'distribut', 'sword', 'is', 'no', 'bas', 'for', 'a', 'system', 'of', 'govern', 'suprem', 'execut', 'pow', 'der', 'from', 'a', 'mand', 'from', 'the', 'mass', ',', 'not', 'from', 'som', 'farc', 'aqu', 'ceremony', '.']
```

The WordNet lemmatizer only removes affixes if the resulting word is in its dictionary:

```python
>>> wnl = nltk.WordNetLemmatizer()
>>> [wnl.lemmatize(t) for t in tokens]
['DENNIS', ':', 'Listen', ',', 'strange', 'woman', 'lying', 'in', 'pond', 'distributing', 'sword', 'is', 'no', 'basis', 'for', 'a', 'system', 'of', 'government', 'Supreme', 'executive', 'power', 'derives', 'from', 'a', 'mandate', 'from', 'the', 'mass', ',', 'not', 'from', 'some', 'farcical', 'aquatic', 'ceremony', '.']
```

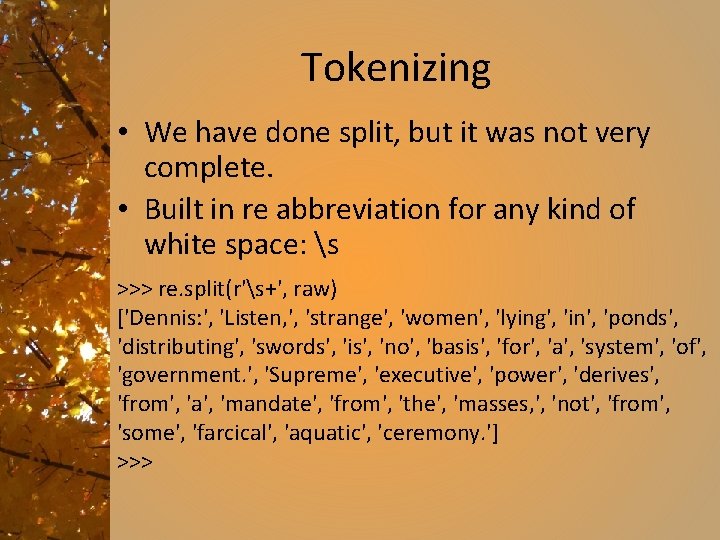

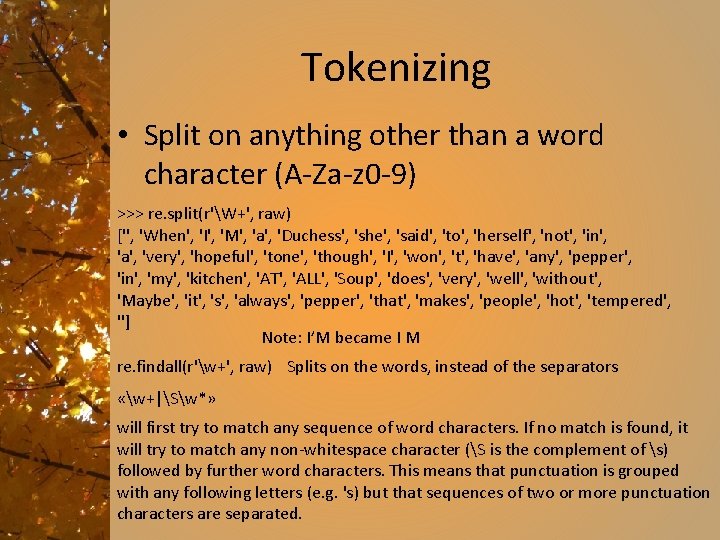

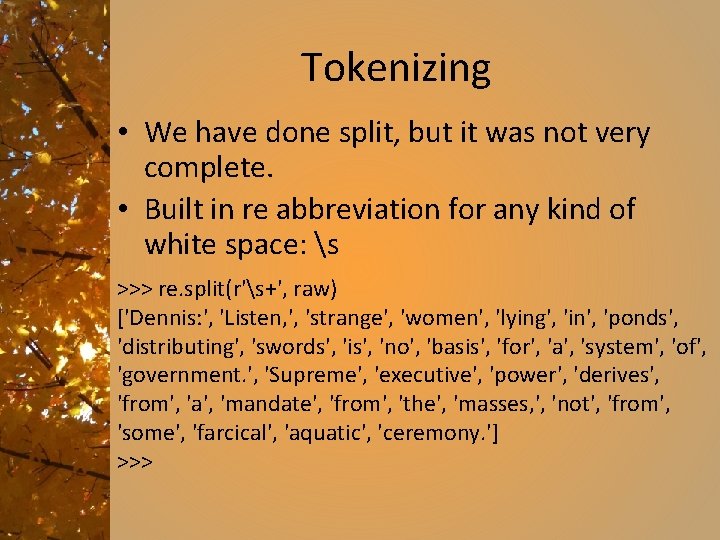

Tokenizing

- We have done split, but it was not very complete.
- Built-in re abbreviation for any kind of white space: `\s`

```python
>>> re.split(r'\s+', raw)
['Dennis:', 'Listen,', 'strange', 'women', 'lying', 'in', 'ponds', 'distributing', 'swords', 'is', 'no', 'basis', 'for', 'a', 'system', 'of', 'government.', 'Supreme', 'executive', 'power', 'derives', 'from', 'a', 'mandate', 'from', 'the', 'masses,', 'not', 'from', 'some', 'farcical', 'aquatic', 'ceremony.']
```

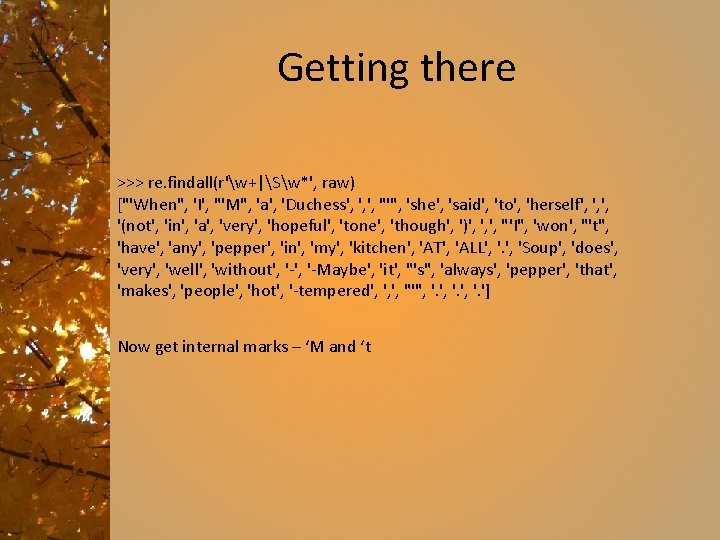

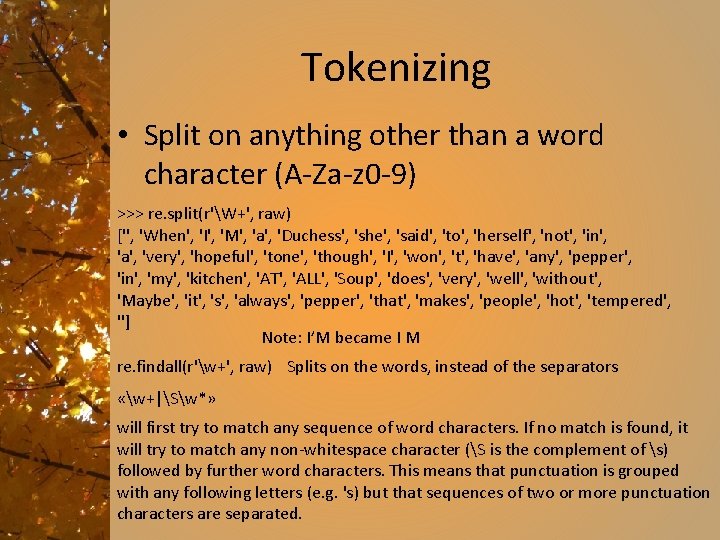

Tokenizing

- Split on anything other than a word character (`[A-Za-z0-9_]`):

```python
>>> re.split(r'\W+', raw)
['', 'When', 'I', 'M', 'a', 'Duchess', 'she', 'said', 'to', 'herself', 'not', 'in', 'a', 'very', 'hopeful', 'tone', 'though', 'I', 'won', 't', 'have', 'any', 'pepper', 'in', 'my', 'kitchen', 'AT', 'ALL', 'Soup', 'does', 'very', 'well', 'without', 'Maybe', 'it', 's', 'always', 'pepper', 'that', 'makes', 'people', 'hot', 'tempered', '']
```

Note: I'M became 'I', 'M'.

- `re.findall(r'\w+', raw)` matches the words instead of the separators.
- `\w+|\S\w*` will first try to match any sequence of word characters. If no match is found, it will try to match any non-whitespace character (`\S` is the complement of `\s`) followed by further word characters. This means that punctuation is grouped with any following letters (e.g. 's) but that sequences of two or more punctuation characters are separated.

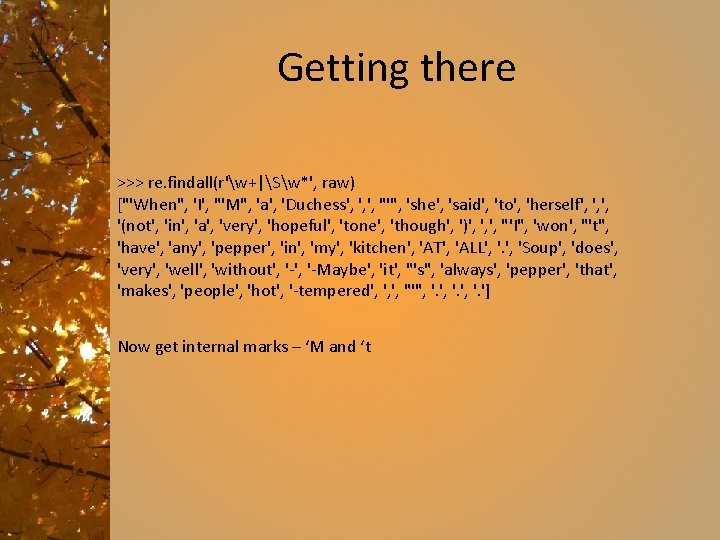

Getting there

```python
>>> re.findall(r'\w+|\S\w*', raw)
["'When", 'I', "'M", 'a', 'Duchess', ',', "'", 'she', 'said', 'to', 'herself', ',', '(not', 'in', 'a', 'very', 'hopeful', 'tone', 'though', ')', ',', "'I", 'won', "'t", 'have', 'any', 'pepper', 'in', 'my', 'kitchen', 'AT', 'ALL', 'Soup', 'does', 'very', 'well', 'without', '-Maybe', 'it', "'s", 'always', 'pepper', 'that', 'makes', 'people', 'hot', '-tempered', ',', "'", '.', '.']
```

Now get the internal marks: 'M and 't.
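The four approaches from these slides can be compared side by side on a short made-up sentence in Python 3:

```python
import re

raw = "'I'M a Duchess,' she said (not hopefully)."

on_space = re.split(r"\s+", raw)          # keeps punctuation attached to words
on_nonword = re.split(r"\W+", raw)        # drops punctuation, splits I'M into I, M
words_only = re.findall(r"\w+", raw)      # same words, without the empty strings
mixed = re.findall(r"\w+|\S\w*", raw)     # punctuation kept as (or with) tokens

for result in (on_space, on_nonword, words_only, mixed):
    print(result)
```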

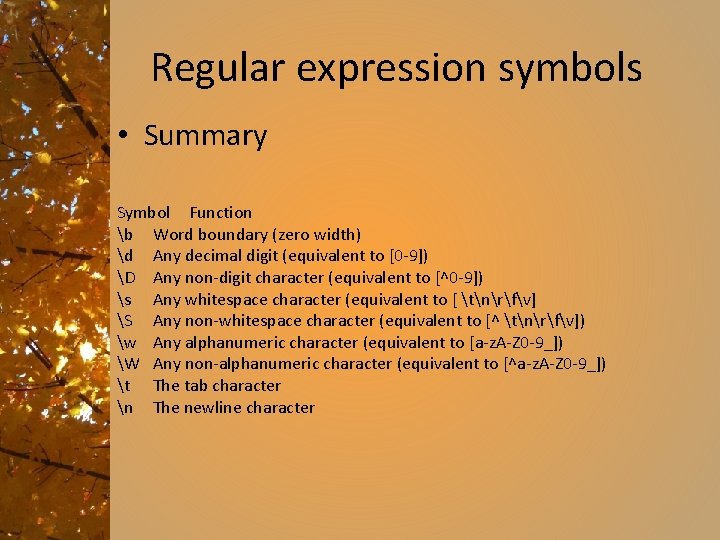

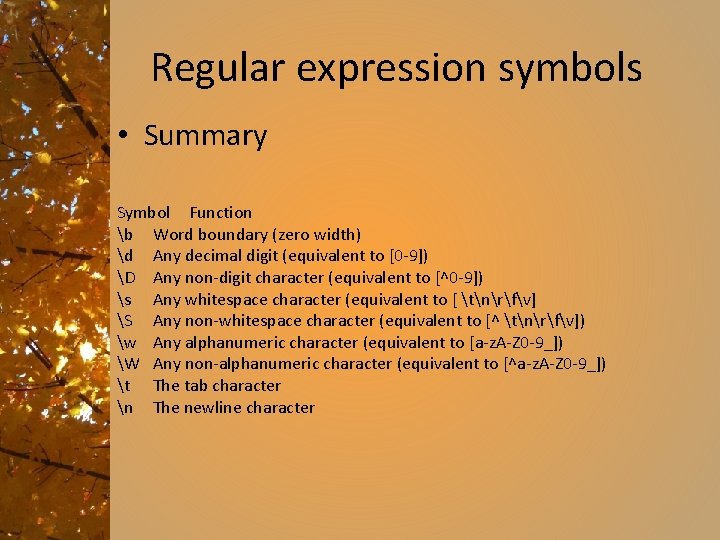

Regular expression symbols: summary

| Symbol | Function |
|---|---|
| `\b` | Word boundary (zero width) |
| `\d` | Any decimal digit (equivalent to `[0-9]`) |
| `\D` | Any non-digit character (equivalent to `[^0-9]`) |
| `\s` | Any whitespace character (equivalent to `[ \t\n\r\f\v]`) |
| `\S` | Any non-whitespace character (equivalent to `[^ \t\n\r\f\v]`) |
| `\w` | Any word character: letter, digit, or underscore (equivalent to `[a-zA-Z0-9_]`) |
| `\W` | Any non-word character (equivalent to `[^a-zA-Z0-9_]`) |
| `\t` | The tab character |
| `\n` | The newline character |

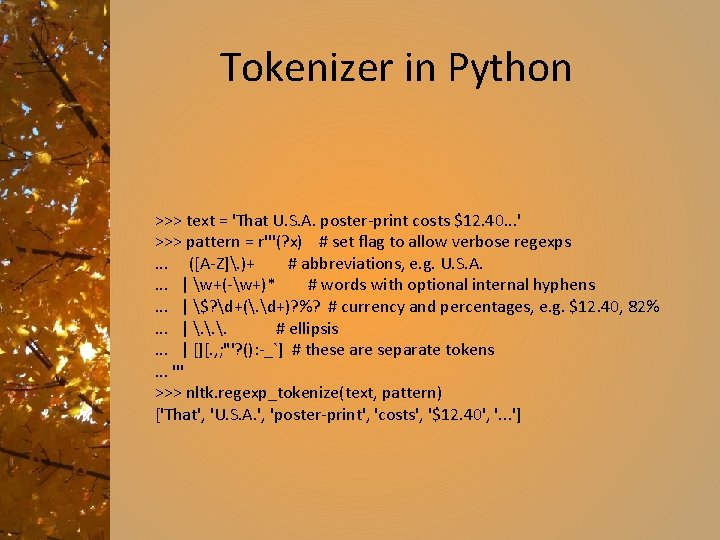

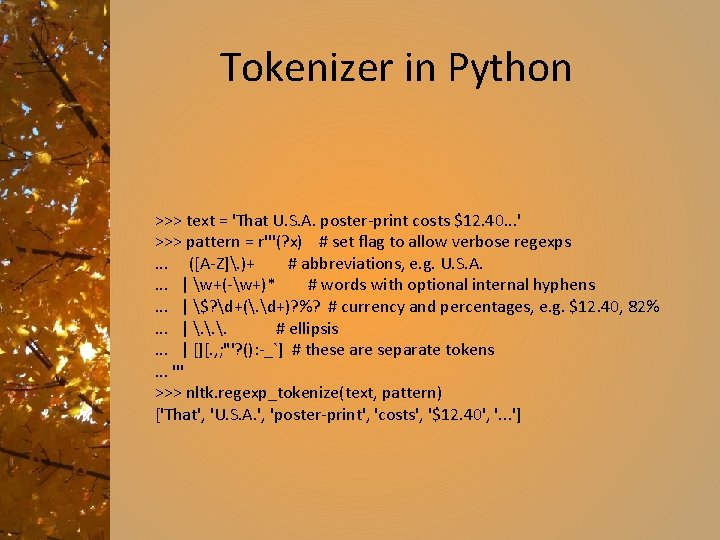

Tokenizer in Python

```python
>>> text = 'That U.S.A. poster-print costs $12.40...'
>>> pattern = r'''(?x)      # set flag to allow verbose regexps
...     (?:[A-Z]\.)+        # abbreviations, e.g. U.S.A.
...   | \w+(?:-\w+)*        # words with optional internal hyphens
...   | \$?\d+(?:\.\d+)?%?  # currency and percentages, e.g. $12.40, 82%
...   | \.\.\.              # ellipsis
...   | [][.,;"'?():_`-]    # these are separate tokens
... '''
>>> nltk.regexp_tokenize(text, pattern)
['That', 'U.S.A.', 'poster-print', 'costs', '$12.40', '...']
```

The groups are written as non-capturing `(?:...)` so that whole tokens, not group contents, are returned, and `-` is placed last in the final set so that it is not read as a character range.
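The same pattern can be tried with plain `re.findall()`, which should give the same tokens as `nltk.regexp_tokenize()` for this pattern. Because alternatives are tried left to right, `\w+` claims a bare number such as 82 before the currency and percentage branch is reached, so `82%` comes out as `'82'` and the `%` is dropped; only numbers with a leading `$` reach the third branch.

```python
import re

pattern = r'''(?x)          # verbose mode: whitespace and comments are ignored
      (?:[A-Z]\.)+          # abbreviations, e.g. U.S.A.
    | \w+(?:-\w+)*          # words with optional internal hyphens
    | \$?\d+(?:\.\d+)?%?    # currency and percentages, e.g. $12.40, 82%
    | \.\.\.                # ellipsis
    | [\[\].,;"'?():_`-]    # these are separate tokens
'''

text = 'That U.S.A. poster-print costs $12.40...'
tokens = re.findall(pattern, text)
print(tokens)   # ['That', 'U.S.A.', 'poster-print', 'costs', '$12.40', '...']

print(re.findall(pattern, "82% of $5"))   # ['82', 'of', '$5']
```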

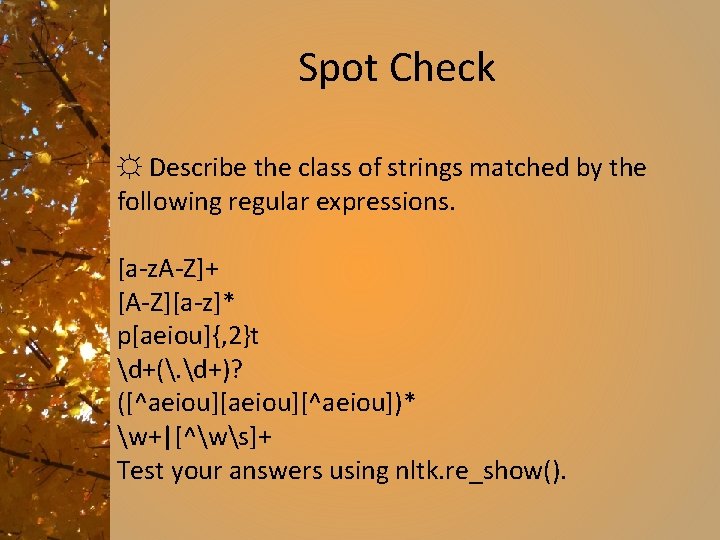

Spot Check

☼ Describe the class of strings matched by the following regular expressions:

- `[a-zA-Z]+`
- `[A-Z][a-z]*`
- `p[aeiou]{,2}t`
- `\d+(\.\d+)?`
- `([^aeiou][^aeiou])*`
- `\w+|[^\w\s]+`

Test your answers using nltk.re_show().

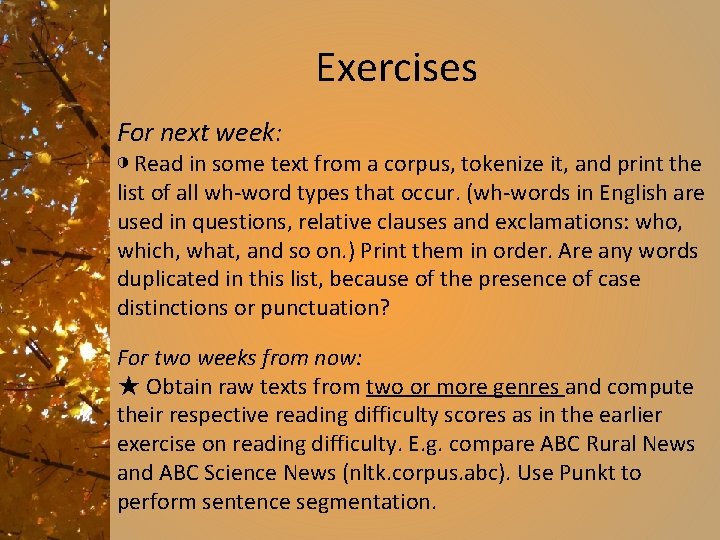

Exercises

For next week:

- ◑ Read in some text from a corpus, tokenize it, and print the list of all wh-word types that occur. (wh-words in English are used in questions, relative clauses, and exclamations: who, which, what, and so on.) Print them in order. Are any words duplicated in this list, because of the presence of case distinctions or punctuation?

For two weeks from now:

- ★ Obtain raw texts from two or more genres and compute their respective reading difficulty scores as in the earlier exercise on reading difficulty. E.g. compare ABC Rural News and ABC Science News (nltk.corpus.abc). Use Punkt to perform sentence segmentation.