Access Map Pattern Matching Prefetch: Optimization Friendly Method


Access Map Pattern Matching Prefetch: Optimization Friendly Method

Yasuo Ishii (1), Mary Inaba (2), and Kei Hiraki (2)

(1) NEC Corporation, (2) The University of Tokyo

Background

- The speed gap between processor and memory has increased.
- Many techniques have been proposed to hide long memory latency.
  - The importance of hardware data prefetching has increased.
- Many hardware prefetchers have been proposed.

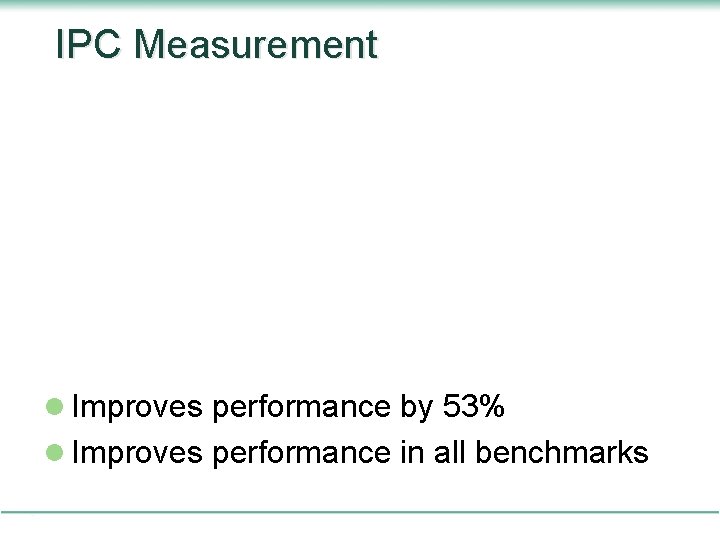

Conventional Methods

- Prefetchers use:
  1. Instruction address
  2. Memory access order
  3. Memory address
- Optimizations scramble this information:
  - Out-of-order memory access
  - Loop unrolling

![Limitation of Stride Prefetch [Chen+95]](https://slidetodoc.com/presentation_image/1ebe311f2c7f7b010fbcd8a073b22230/image-4.jpg)

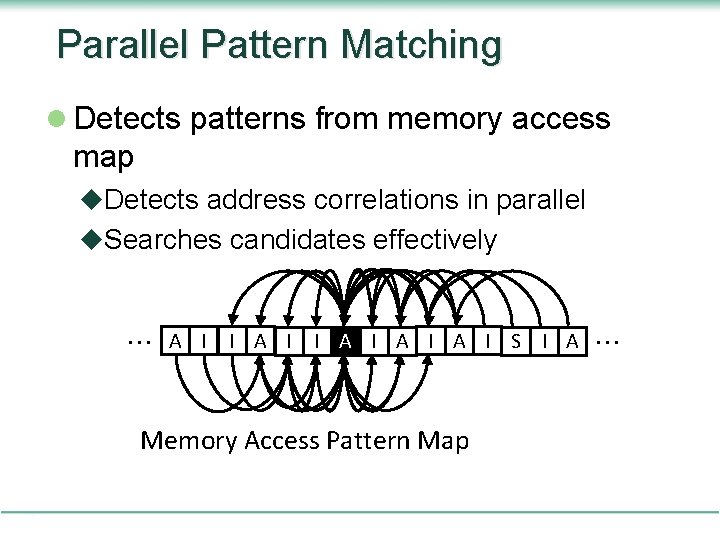

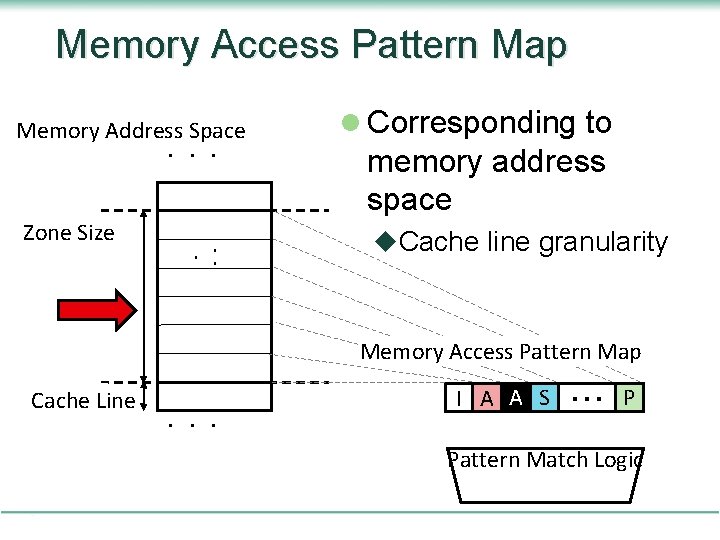

Limitation of Stride Prefetch [Chen+95]

- Example loop, where (A) labels the load instruction: `for (int i = 0; i < N; i++) { load A[2*i]; ... }`
- Stride table entry for (A): Tag = A, Address = 0xAB04, Stride = 2, State = steady
- Memory address space at cache-line granularity (0xAAFF ... 0xABFF): Accesses 1 to 4 touch lines between 0xAB00 and 0xAB06.
- With out-of-order memory access, Access 3 arrives out of order, and the prefetcher cannot detect the stride.
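To make the limitation concrete, here is a minimal sketch of a single-entry stride detector. The function name `steady_predictions`, the rule "steady after the same non-zero stride is seen twice in a row", and the line numbers are illustrative assumptions, not details taken from the slides.

```python
def steady_predictions(lines):
    """Count accesses at which a simple stride detector is 'steady'.

    Assumption: the entry is steady when the stride between the last two
    accesses equals the previously recorded non-zero stride.
    """
    last = None      # last line touched by this load instruction
    stride = None    # stride recorded at the previous access
    steady = 0
    for line in lines:
        if last is not None:
            new_stride = line - last
            if new_stride == stride and new_stride != 0:
                steady += 1
            stride = new_stride
        last = line
    return steady


in_order = [0, 2, 4, 6, 8, 10]       # A[2*i] in program order
out_of_order = [2, 0, 6, 4, 10, 8]   # same lines, neighbours swapped

print(steady_predictions(in_order))      # 4
print(steady_predictions(out_of_order))  # 0
```

Both sequences touch exactly the same cache lines; only the arrival order differs, which is enough to keep this order-sensitive detector from ever reaching the steady state.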

Weakness of Conventional Methods

- Out-of-order memory access
  - Scrambles the memory access order
  - The prefetcher cannot detect address correlations
- Loop unrolling
  - Requires additional table entries
  - Each entry is trained slowly

An optimization-friendly prefetcher is required.

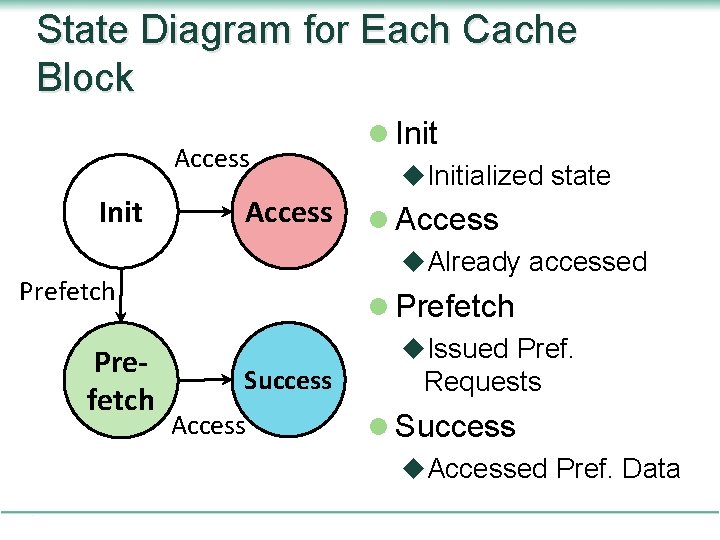

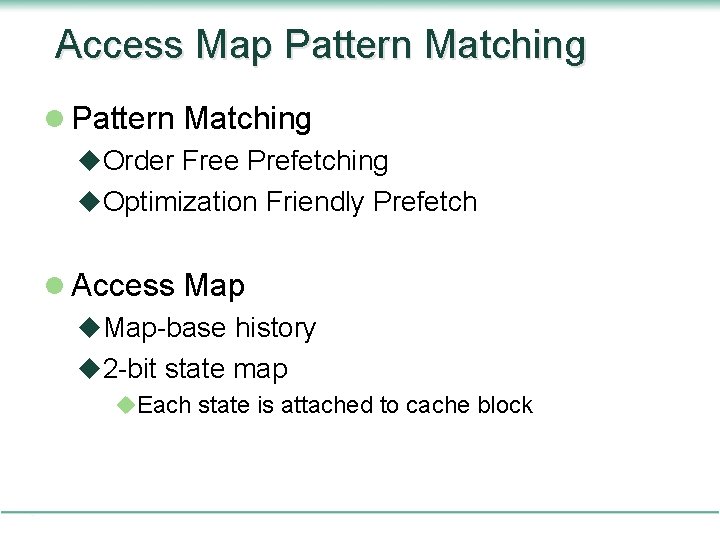

Access Map Pattern Matching

- Pattern matching
  - Order-free prefetching
  - Optimization-friendly prefetching
- Access map
  - Map-based history
  - 2-bit state map
  - Each state is attached to a cache block

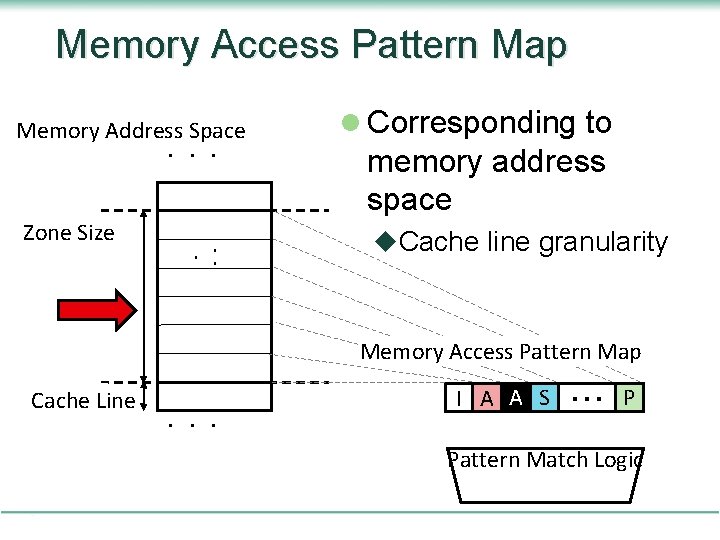

State Diagram for Each Cache Block

- Init: initialized state
- Access: already accessed
- Prefetch: prefetch request issued
- Success: prefetched data was accessed

(Figure: state diagram over the four states; edge labels include "Access".)
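The four states can be sketched as a small state machine. The transitions below are an assumption inferred from the state descriptions (the diagram itself did not survive in this transcript), and the names `State`, `on_access`, and `on_prefetch` are hypothetical.

```python
from enum import Enum


class State(Enum):
    INIT = 0       # initialized, never touched
    ACCESS = 1     # already accessed by a demand request
    PREFETCH = 2   # a prefetch request was issued for this block
    SUCCESS = 3    # prefetched data was later accessed


def on_access(state):
    """Assumed transition when a demand access hits this cache block."""
    if state is State.INIT:
        return State.ACCESS
    if state is State.PREFETCH:
        return State.SUCCESS
    return state  # ACCESS and SUCCESS stay where they are


def on_prefetch(state):
    """Assumed transition when a prefetch is issued for this cache block."""
    return State.PREFETCH if state is State.INIT else state
```

Four states fit in two bits, which matches the 2-bit state map mentioned in the slides.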

Memory Access Pattern Map

- Corresponds to the memory address space, which is split into zones of a fixed zone size
  - Cache-line granularity
- Each cache line in a zone has one state in the map (I, A, P, or S), and the map feeds the pattern match logic
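As a rough sketch of how an address could be mapped to a zone and a position inside that zone's map: the 64-byte line size is an assumption, 256 lines per zone follows the "256 states / map" figure on the configuration slide, and the function name `locate` is hypothetical.

```python
LINE_BYTES = 64        # assumed cache line size (not stated in the slides)
LINES_PER_ZONE = 256   # from "256 states / map" on the configuration slide


def locate(addr):
    """Return (zone number, line index inside the zone) for a byte address."""
    line = addr // LINE_BYTES
    return line // LINES_PER_ZONE, line % LINES_PER_ZONE


print(locate(0))                    # (0, 0)
print(locate(64 * 256))             # (1, 0)
print(locate(64 * 257 + 5))         # (1, 1)
```

With these assumed sizes one zone covers 16 KiB of the address space.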

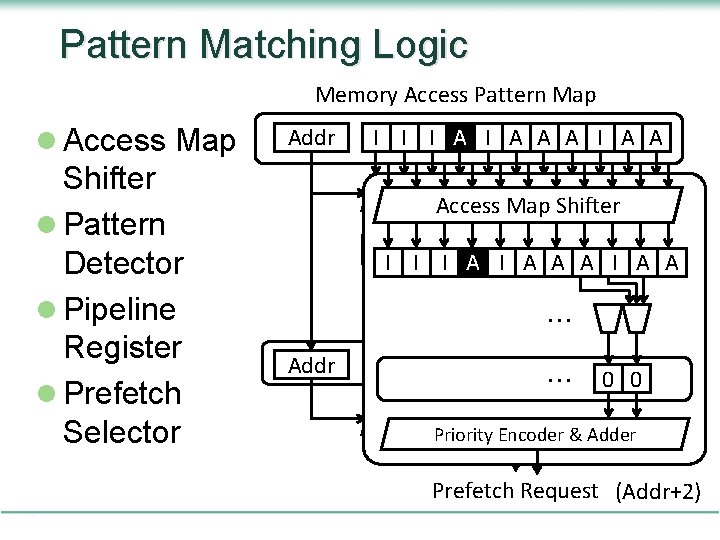

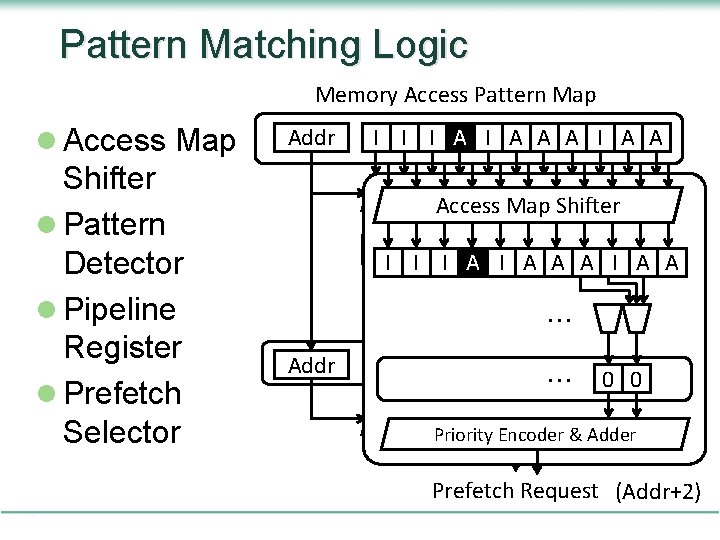

Pattern Matching Logic

- Access Map Shifter
- Pattern Detector
- Pipeline Register
- Prefetch Selector

(Figure: the memory access pattern map, for example `I I I A I A A ...`, is aligned to the accessed address Addr by the access map shifter; the detector outputs pass through the pipeline register with a feedback path, and a priority encoder and adder produce the prefetch request, Addr+2 in the example.)
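The matching rule can be sketched in a few lines. This assumes the rule is "if lines t-k and t-2k were accessed, line t+k is a prefetch candidate for the current access t", which is how the Addr+2 example reads; the boolean map, the forward-only search, and the name `prefetch_candidates` are simplifications introduced here, not the authors' implementation.

```python
def prefetch_candidates(accessed, t):
    """Return candidate line indexes for a demand access at index t.

    accessed: list of booleans, one per cache line in the zone.
    A stride k matches when lines t-k and t-2k were both accessed;
    the candidate is then line t+k, unless it was already accessed.
    """
    n = len(accessed)
    candidates = []
    k = 1
    while t - 2 * k >= 0 and t + k < n:
        if accessed[t - k] and accessed[t - 2 * k] and not accessed[t + k]:
            candidates.append(t + k)
        k += 1
    return candidates


zone = [False] * 10
for line in (3, 1):   # arrival order does not matter, only the map contents
    zone[line] = True
zone[5] = True        # the current demand access
print(prefetch_candidates(zone, 5))   # [7]
```

Because the map records which lines were touched and not when, the same candidates come out for any arrival order of lines 1 and 3.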

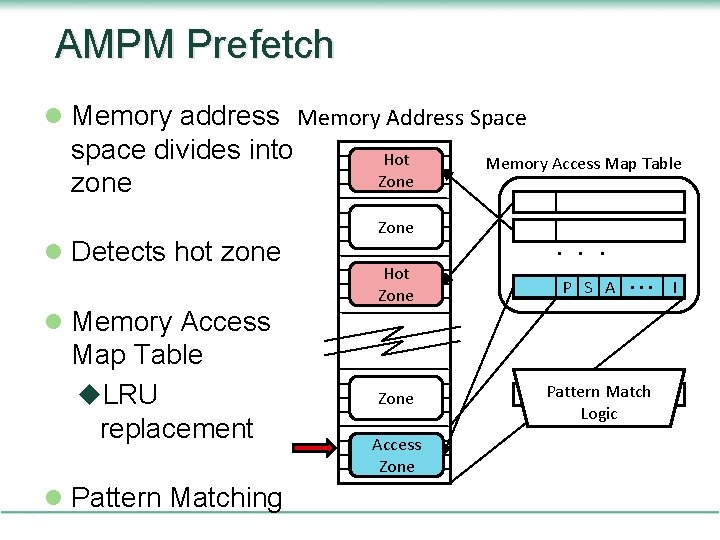

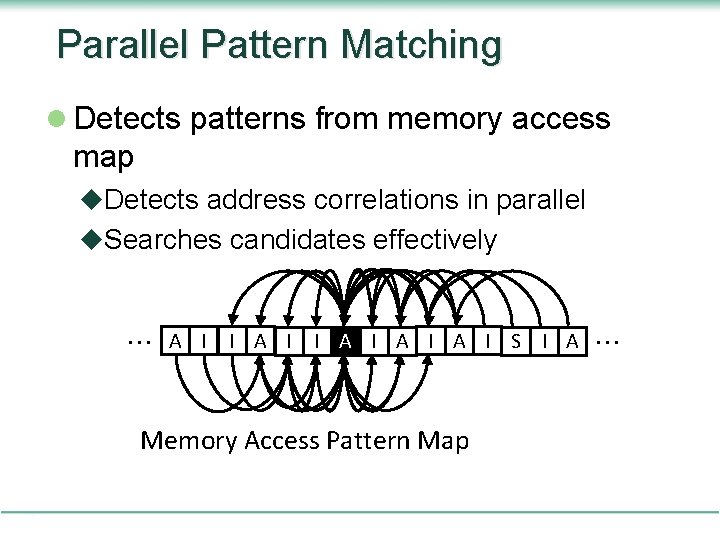

Parallel Pattern Matching

- Detects patterns from the memory access map
  - Detects address correlations in parallel
  - Searches candidates effectively

(Figure: memory access pattern map, for example `... A I I A I A I S I A ...`)
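Since each stride check is independent of the others, all strides can be tested at once. The sketch below mimics that with Python integers used as bit vectors: a shift aligns the map to the current access, and a single AND tests every stride. The loops stand in for fixed wiring in hardware, and the whole layout (names, bit order, `max_stride`) is an assumption made for illustration.

```python
def match_all_strides(bitmap, t, max_stride):
    """Return an int whose bit k is set when stride k matches at index t.

    bitmap: int, bit i is set when line i of the zone was accessed.
    A stride k matches when lines t-k and t-2k were both accessed.
    """
    # Shifter: bit j of `behind` is line t-j (bit 0 is the current access).
    behind = 0
    for j in range(t + 1):
        if (bitmap >> (t - j)) & 1:
            behind |= 1 << j
    # Wiring: bit k of `far` is line t-2k; `near` is `behind` itself.
    far = 0
    for k in range(1, max_stride + 1):
        if (behind >> (2 * k)) & 1:
            far |= 1 << k
    # Detector: one AND covers every stride from 1 to max_stride.
    return behind & far


match = match_all_strides(0b101010, 5, 2)    # lines 1, 3, 5 accessed
print(bin(match))                            # 0b100, i.e. stride 2
lowest = (match & -match).bit_length() - 1   # priority encoder: lowest stride
print(5 + lowest)                            # 7
```

The last two lines play the role of a priority encoder and adder: they pick the smallest matching stride and add it to the current line index.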

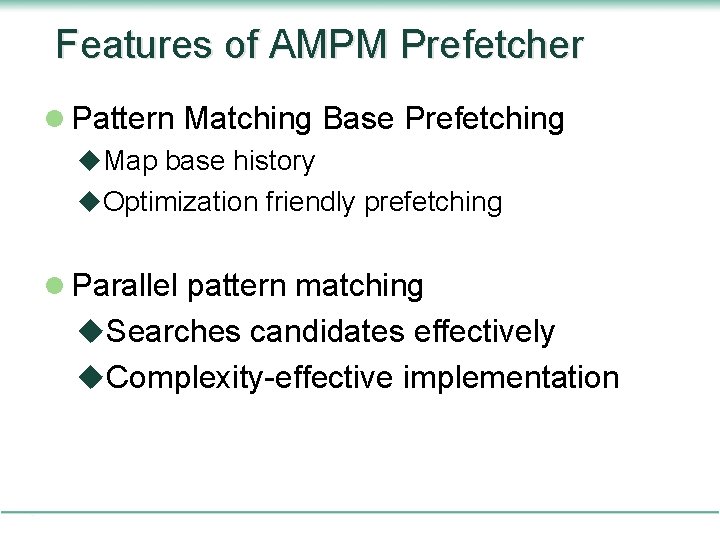

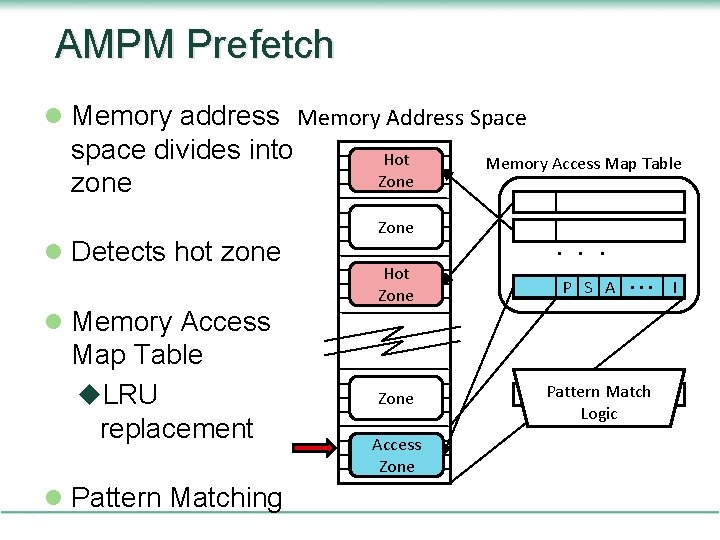

AMPM Prefetch

- The memory address space is divided into zones
- Detects hot zones
- Memory Access Map Table
  - LRU replacement
- Pattern matching

(Figure: accesses to hot zones in the memory address space update the Memory Access Map Table, whose maps hold states such as P, S, A, ..., I; the pattern match logic reads a map and issues prefetch requests.)
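A minimal sketch of a map table with LRU replacement, assuming one boolean per cache line instead of the 2-bit state. The class name `MapTable` and its method are hypothetical; the default sizes come from the configuration slide (52 maps, 256 states per map).

```python
from collections import OrderedDict


class MapTable:
    """Fully associative table of per-zone access maps with LRU replacement."""

    def __init__(self, capacity=52, lines_per_zone=256):
        self.capacity = capacity
        self.lines_per_zone = lines_per_zone
        self.maps = OrderedDict()   # zone number -> list of accessed flags

    def touch(self, zone, index):
        """Record an access and return the (possibly new) map for the zone."""
        if zone in self.maps:
            self.maps.move_to_end(zone)          # mark as most recently used
        else:
            if len(self.maps) >= self.capacity:
                self.maps.popitem(last=False)    # evict least recently used
            self.maps[zone] = [False] * self.lines_per_zone
        self.maps[zone][index] = True
        return self.maps[zone]


table = MapTable(capacity=2, lines_per_zone=4)
table.touch(0, 1)
table.touch(1, 0)
table.touch(0, 2)      # zone 0 becomes most recently used
table.touch(2, 3)      # evicts zone 1
print(list(table.maps))   # [0, 2]
```

Zones that keep receiving accesses stay in the table, which is one simple way to read "detects hot zones".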

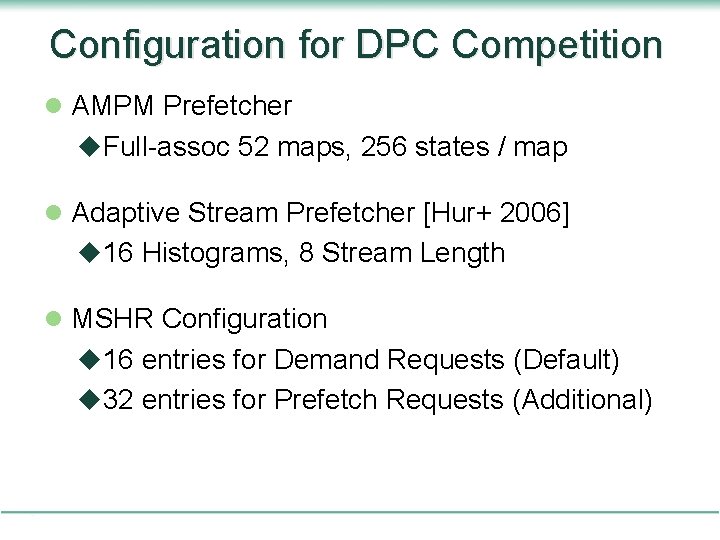

Features of AMPM Prefetcher

- Pattern-matching-based prefetching
  - Map-based history
  - Optimization-friendly prefetching
- Parallel pattern matching
  - Searches candidates effectively
  - Complexity-effective implementation

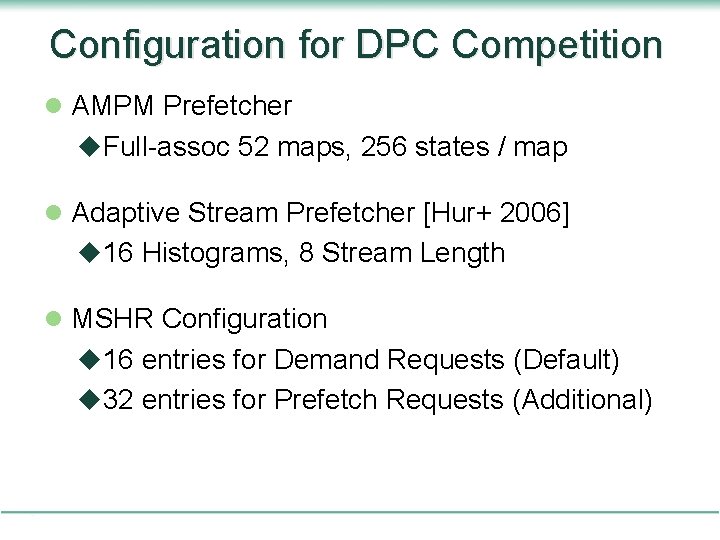

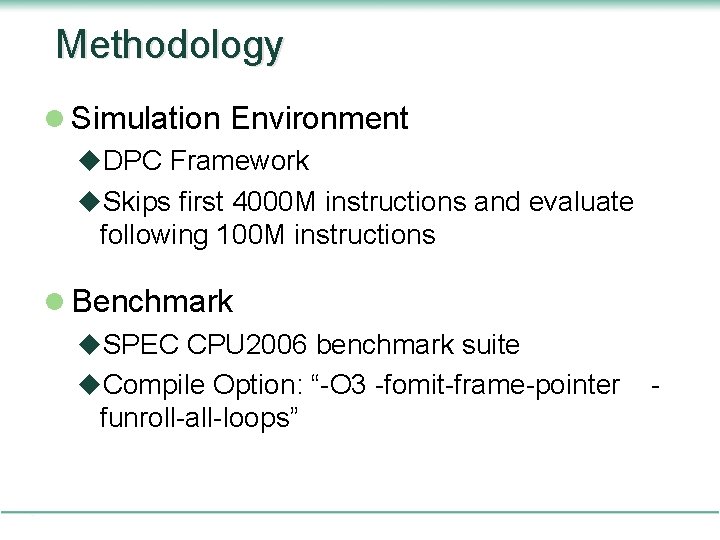

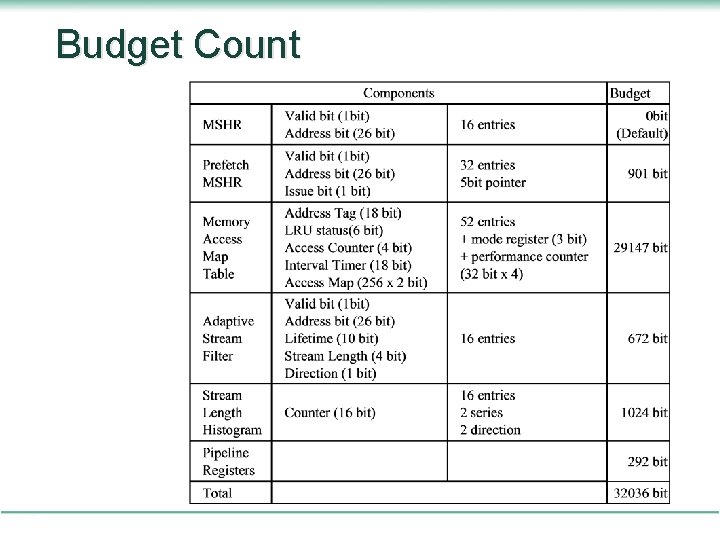

Configuration for DPC Competition

- AMPM prefetcher
  - Fully associative, 52 maps, 256 states per map
- Adaptive stream prefetcher [Hur+ 2006]
  - 16 histograms, 8 stream length
- MSHR configuration
  - 16 entries for demand requests (default)
  - 32 entries for prefetch requests (additional)

Budget Count
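The contents of this slide did not survive in the transcript. As a partial check, the storage for the access-map states alone can be computed from figures given elsewhere in the deck (52 maps, 256 states per map, 2 bits per state). Tags, LRU bits, the adaptive stream prefetcher, and the MSHR entries are not covered, because their sizes are not given here; the function name is hypothetical.

```python
def state_storage_bits(maps, states_per_map, bits_per_state):
    """Bits needed for the state maps only (no tags or replacement bits)."""
    return maps * states_per_map * bits_per_state


bits = state_storage_bits(maps=52, states_per_map=256, bits_per_state=2)
print(bits)        # 26624
print(bits // 8)   # 3328 bytes
```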

Methodology

- Simulation environment
  - DPC framework
  - Skips the first 4000 M instructions and evaluates the following 100 M instructions
- Benchmark
  - SPEC CPU2006 benchmark suite
  - Compile options: `-O3 -fomit-frame-pointer -funroll-all-loops`

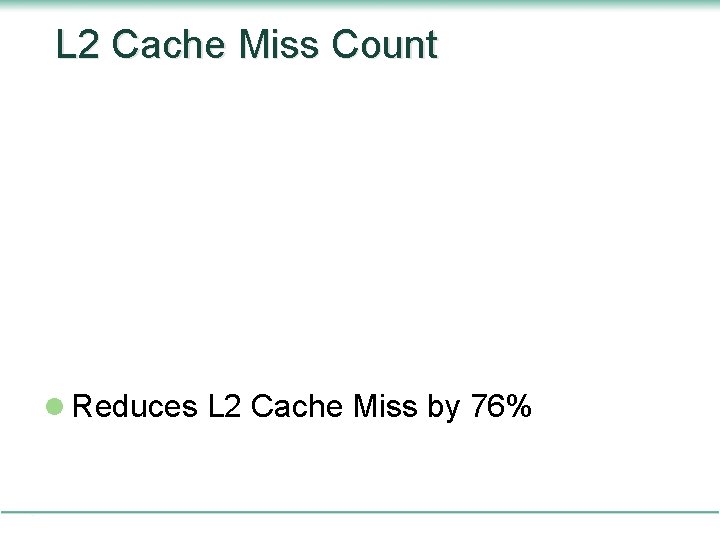

IPC Measurement

- Improves performance by 53%
- Improves performance in all benchmarks

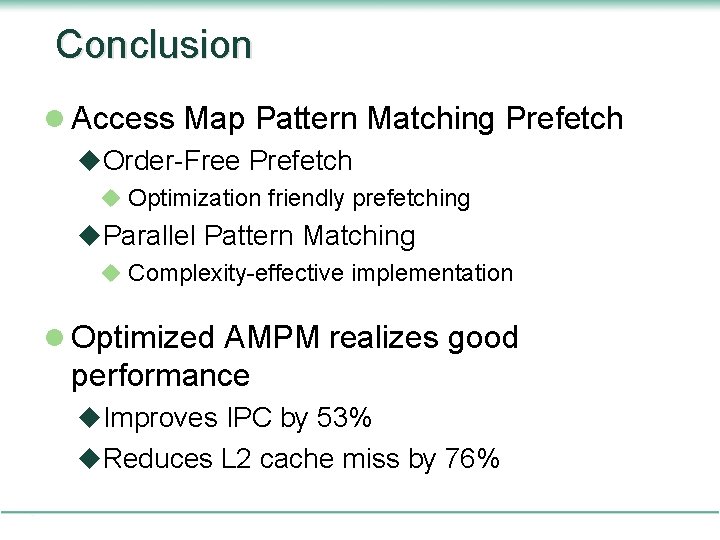

L2 Cache Miss Count

- Reduces L2 cache misses by 76%

![Related Works](https://slidetodoc.com/presentation_image/1ebe311f2c7f7b010fbcd8a073b22230/image-18.jpg)

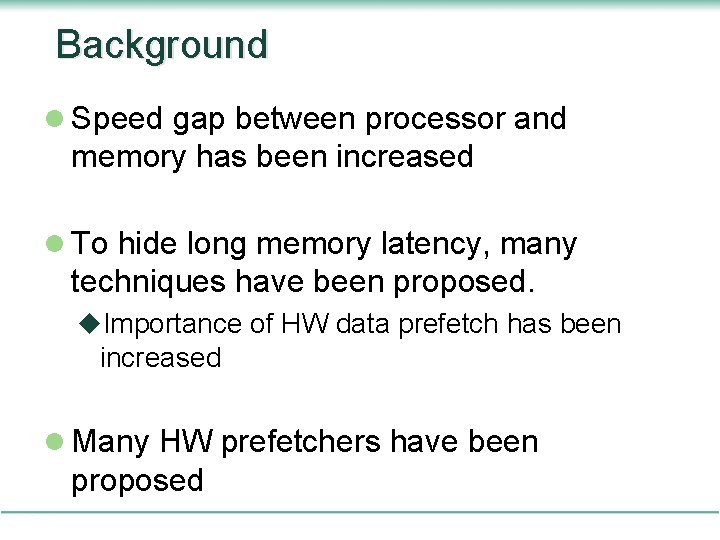

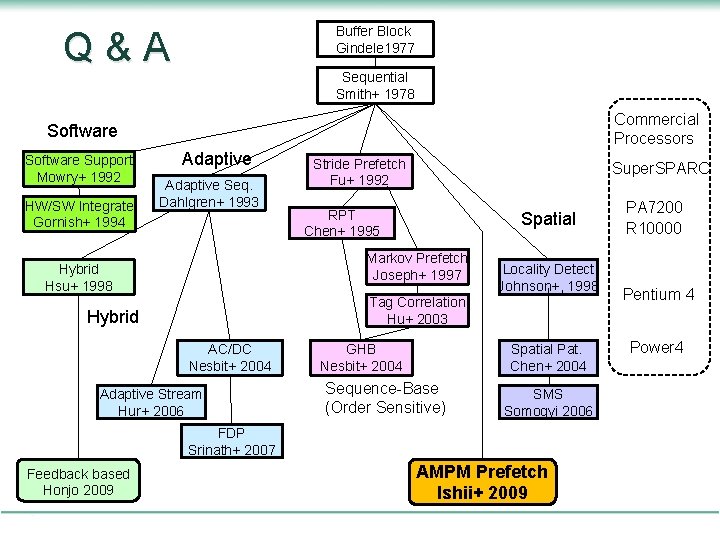

Related Works

- Sequence-based prefetching
  - Sequential Prefetch [Smith+ 1978]
  - Stride Prefetching Table [Fu+ 1992]
  - Markov Predictor [Joseph+ 1997]
  - Global History Buffer [Nesbit+ 2004]
- Adaptive prefetching
  - AC/DC [Nesbit+ 2004]
  - Feedback Directed Prefetch [Srinath+ 2007]
  - Focus Prefetching [Manikantan+ 2008]

Conclusion

- Access Map Pattern Matching Prefetch
  - Order-free prefetching
  - Optimization-friendly prefetching
  - Parallel pattern matching
  - Complexity-effective implementation
- The optimized AMPM achieves good performance
  - Improves IPC by 53%
  - Reduces L2 cache misses by 76%

Q&A

(Figure: overview of prior prefetching work. Entries recoverable from the diagram, in the order they appear:)

- Buffer Block [Gindele 1977]
- Sequential [Smith+ 1978]
- Software Support [Mowry+ 1992]
- HW/SW Integrate [Gornish+ 1994]
- Adaptive Seq. [Dahlgren+ 1993]
- Stride Prefetch [Fu+ 1992]
- RPT [Chen+ 1995]
- Markov Prefetch [Joseph+ 1997]
- Hybrid [Hsu+ 1998]
- Locality Detect [Johnson+ 1998]
- Tag Correlation [Hu+ 2003]
- AC/DC [Nesbit+ 2004]
- Adaptive Stream [Hur+ 2006]
- GHB [Nesbit+ 2004]
- Spatial Pat. [Chen+ 2004]
- SMS [Somogyi 2006]
- FDP [Srinath+ 2007]
- Feedback based [Honjo 2009]
- AMPM Prefetch [Ishii+ 2009]

Group labels in the diagram: Spatial, Hybrid, Sequence-Base (Order Sensitive).

Commercial processors shown: SuperSPARC, PA 7200, R10000, Pentium 4, Power 4.