Accelerator-level Parallelism, Mark D. Hill (Wisconsin) & Vijay Janapa Reddi


Accelerator-level Parallelism, Mark D. Hill (Wisconsin) & Vijay Janapa Reddi (Harvard), @ Microsoft, February 2020. Aspects of this work on Mobile SoCs and Gables were developed while the authors were “interns” with Google’s Mobile Silicon Group. Thanks!

COMPUTING COMMUNITY CONSORTIUM (CCC): CATALYZING I.T.’S VIRTUOUS CYCLE. Citizens, Industry, Government, Academia. Get involved w/ white papers, workshops, & advocating I.T. research.

Accelerator-level Parallelism Call to Action: • Future apps demand much more computing • Standard tech scaling & architecture NOT sufficient • Mobile SoCs show a promising approach: ALP = parallelism among workload components concurrently executing on multiple accelerators (IPs) • Call to action to develop a “science” for ubiquitous ALP

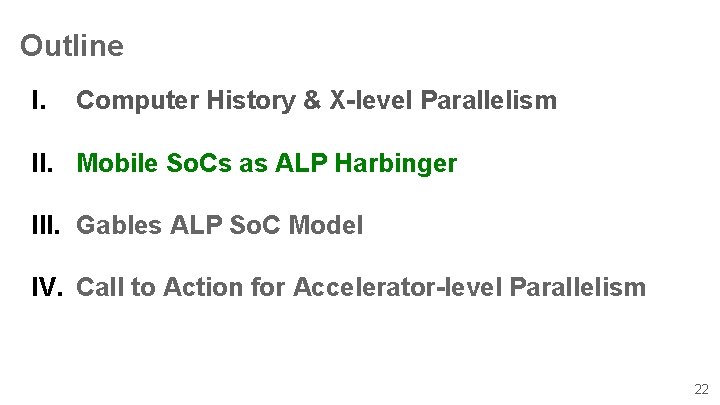

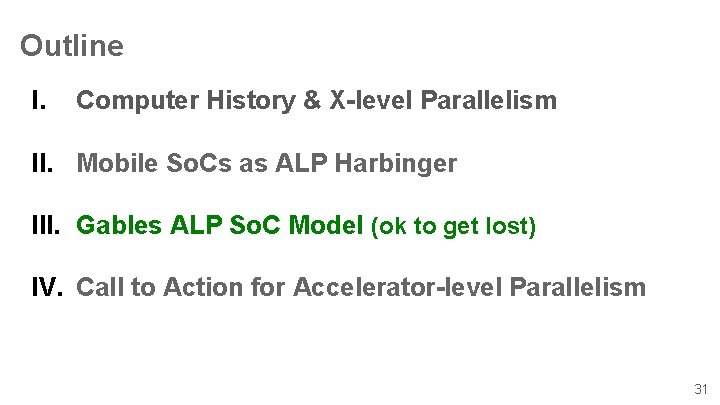

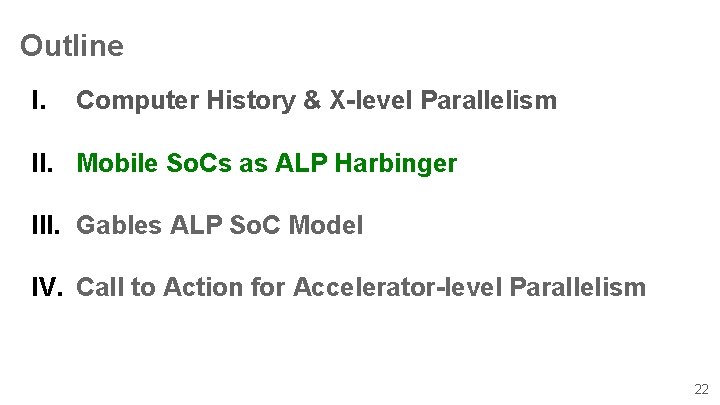

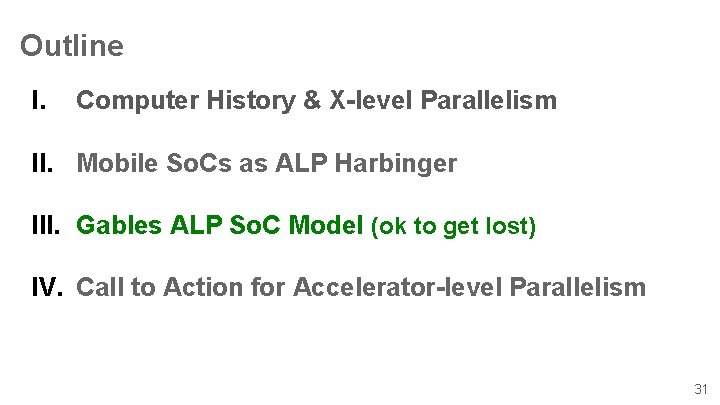

Outline: I. Computer History & X-level Parallelism; II. Mobile SoCs as ALP Harbinger; III. Gables ALP SoC Model; IV. Call to Action for Accelerator-level Parallelism

20th Century Information & Communication Technology Has Changed Our World • <long list omitted> Required innovations in algorithms, applications, programming languages, … , & system software. Key (invisible) enablers of (cost-)performance gains: • Semiconductor technology (“Moore’s Law”) • Computer architecture (~80× per Danowitz et al.)

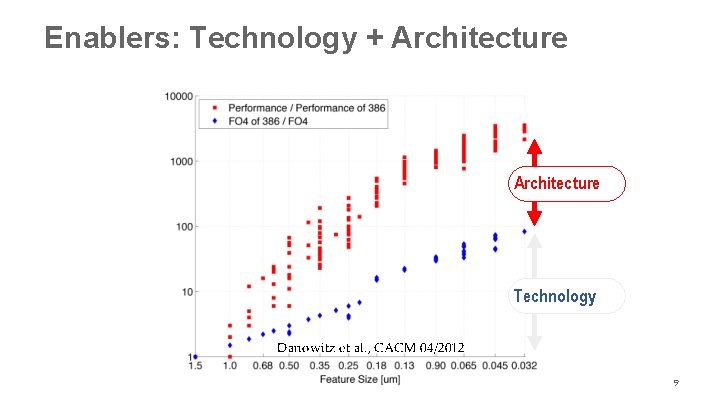

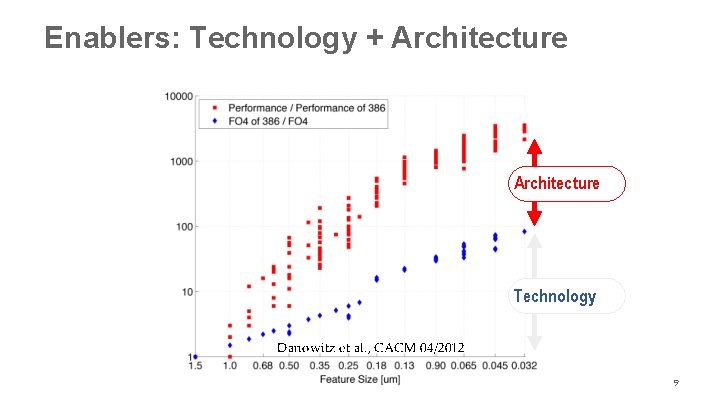

Enablers: Technology + Architecture (figure: Danowitz et al., CACM 04/2012)

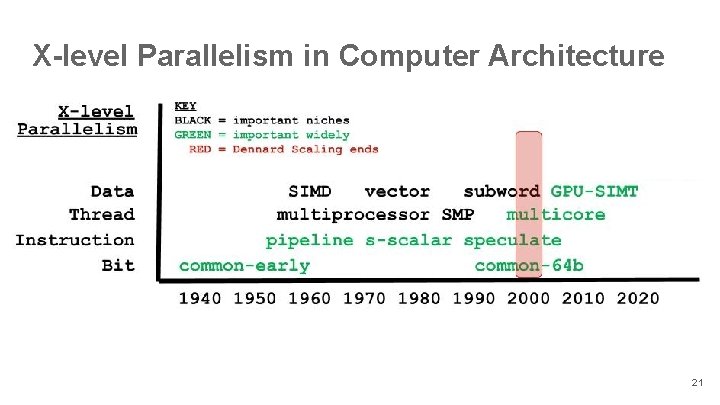

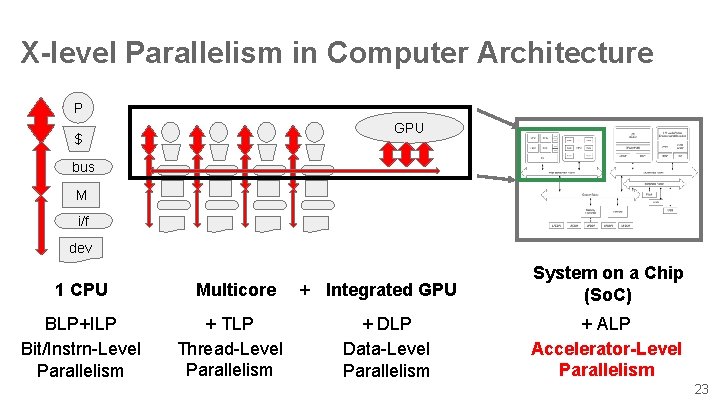

How did Architecture Exploit Moore’s Law? MORE (& faster) transistors → even faster computers. Memory: transistors in parallel • Vast semiconductor memory (DRAM) • Cache hierarchy for fast memory illusion. Processing: transistors in parallel • Bit-, Instruction-, Thread-, & Data-level Parallelism • Now Accelerator-level Parallelism

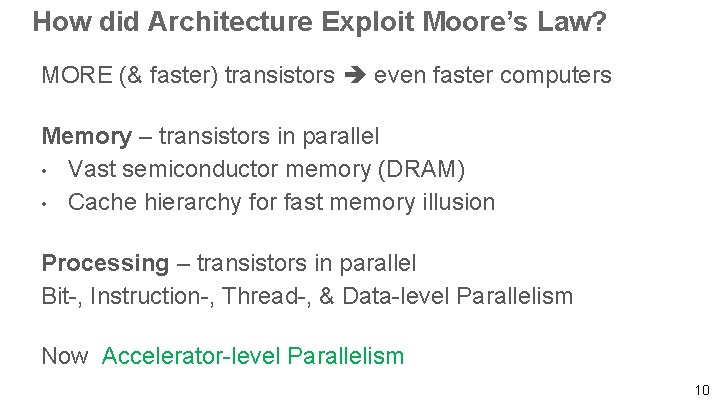

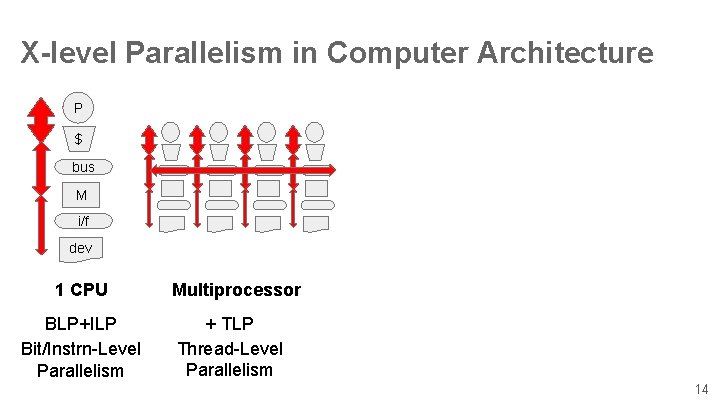

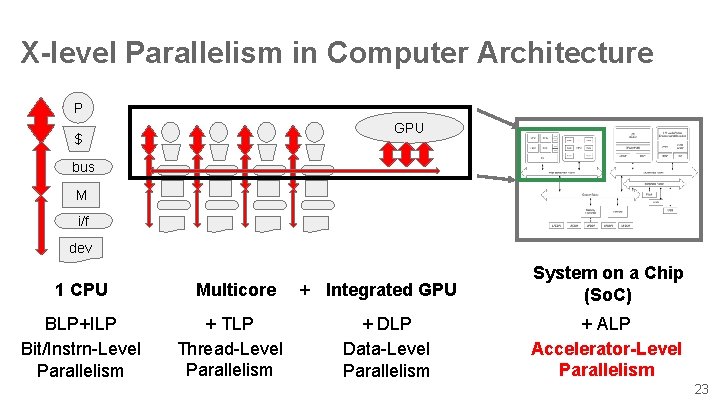

X-level Parallelism in Computer Architecture: 1 CPU → BLP + ILP (Bit/Instruction-Level Parallelism). [Diagram: processor (P), cache ($), bus, memory (M), I/O interface (i/f) to devices]

Bit-level Parallelism (BLP): Early computers had few switches (transistors) • compute a result in many steps • e.g., 1 multiplication partial product per cycle. Bit-level parallelism • More transistors compute more in parallel • e.g., Wallace Tree multiplier (right). Larger words help: 8b → 16b → 32b → 64b. Important: Easy for software. NEW: Smaller word sizes, e.g., machine learning inference accelerators
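To make the contrast concrete, here is a minimal Python sketch, assuming unsigned operands and counting one loop iteration of the sequential version as one cycle. The carry-save reduction is a simplified, integer-level model of a Wallace-tree-style multiplier (each "level" stands for one layer of 3:2 compressors), not a gate-accurate design; the function names are hypothetical.

```python
def shift_add_multiply(a: int, b: int, width: int = 8) -> tuple[int, int]:
    """Sequential multiply: add at most one partial product per 'cycle'."""
    product = 0
    cycles = 0
    for i in range(width):
        if (b >> i) & 1:          # bit i of the multiplier selects a shifted copy of a
            product += a << i
        cycles += 1               # one step per multiplier bit
    return product, cycles


def carry_save_add(x: int, y: int, z: int) -> tuple[int, int]:
    """3:2 compressor applied to every bit position at once: x + y + z == s + c."""
    s = x ^ y ^ z
    c = ((x & y) | (x & z) | (y & z)) << 1
    return s, c


def tree_multiply(a: int, b: int, width: int = 8) -> tuple[int, int]:
    """Wallace-style: form all partial products at once, then reduce them in levels."""
    rows = [(a << i) if (b >> i) & 1 else 0 for i in range(width)]
    levels = 0
    while len(rows) > 2:
        full = len(rows) - len(rows) % 3   # rows that form complete groups of three
        next_rows = []
        for j in range(0, full, 3):
            next_rows.extend(carry_save_add(rows[j], rows[j + 1], rows[j + 2]))
        next_rows.extend(rows[full:])      # leftovers pass through to the next level
        rows = next_rows
        levels += 1
    return sum(rows), levels               # final carry-propagate addition


print(shift_add_multiply(200, 123))  # (24600, 8)
print(tree_multiply(200, 123))       # (24600, 4)
```

With 8-bit operands the sequential version takes 8 steps while the tree needs 4 compressor levels plus one final addition; the gap widens with word size (10 levels for 64 bits), which is the sense in which more transistors buy more bit-level parallelism.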

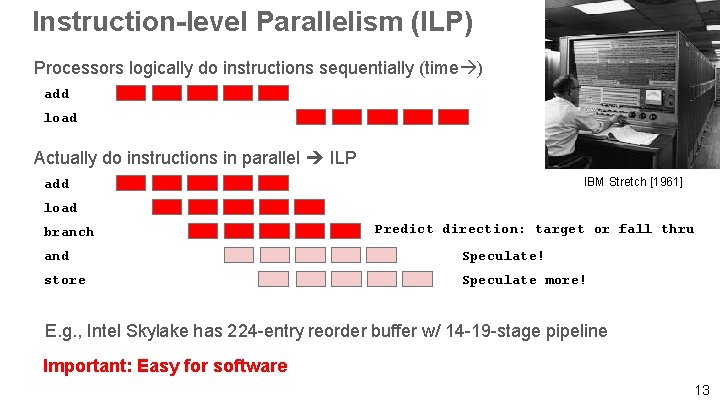

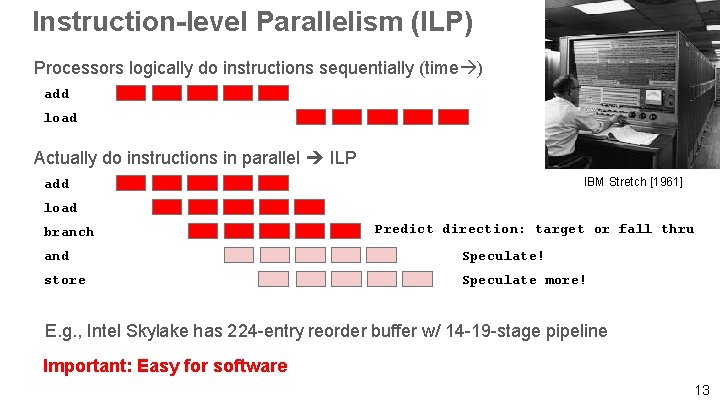

Instruction-level Parallelism (ILP): Processors logically do instructions sequentially (time →) but actually do instructions in parallel → ILP (IBM Stretch [1961]). Predict branch direction (target or fall through) and speculate! Speculate more! E.g., Intel Skylake has a 224-entry reorder buffer w/ a 14-19-stage pipeline. Important: Easy for software. [Figure: add, load, branch, and store instructions overlapping in time]
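A rough way to see how much ILP a short instruction sequence holds is to schedule each instruction as soon as its inputs are ready. The Python sketch below does that under idealized, assumed conditions (listed in its docstring); the four-instruction program and the function name are hypothetical and only loosely mirror the add/load/store example on the slide. Real out-of-order cores such as Skylake approximate this within the limits of their reorder buffer, issue width, and branch predictor.

```python
def dataflow_schedule(program):
    """Earliest completion cycle of each instruction under idealized ILP.

    Assumptions: unit latency, unlimited issue width, perfect branch
    prediction, and register renaming, so only true (read-after-write)
    dependences constrain the order.
    """
    ready = {}      # register -> cycle at which its latest value is available
    done_at = []
    for text, dest, srcs in program:
        start = max((ready.get(r, 0) for r in srcs), default=0)
        finish = start + 1
        if dest is not None:
            ready[dest] = finish
        done_at.append((text, finish))
    return done_at


program = [
    ("add   r1 <- r2, r3", "r1", ["r2", "r3"]),
    ("load  r4 <- [r5]",   "r4", ["r5"]),
    ("add   r6 <- r1, r4", "r6", ["r1", "r4"]),
    ("store [r7] <- r6",   None, ["r6", "r7"]),
]

schedule = dataflow_schedule(program)
cycles = max(finish for _, finish in schedule)
for text, finish in schedule:
    print(f"{text:20s} completes in cycle {finish}")
print(f"sequential: {len(program)} cycles, dataflow: {cycles} cycles, "
      f"ILP = {len(program) / cycles:.2f}")
```

The first add and the load are independent, so they finish together; the dependent add and store follow, giving 4 instructions in 3 cycles.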

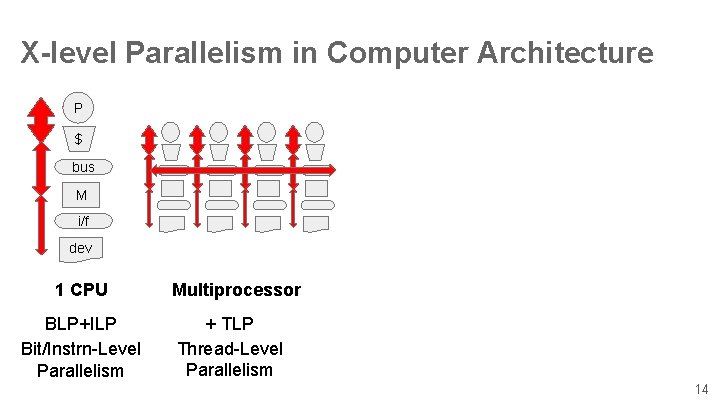

X-level Parallelism in Computer Architecture: 1 CPU → BLP + ILP (Bit/Instruction-Level Parallelism); Multiprocessor → + TLP (Thread-Level Parallelism). [Diagram: processors (P), cache ($), bus, memory (M), I/O interface (i/f) to devices]

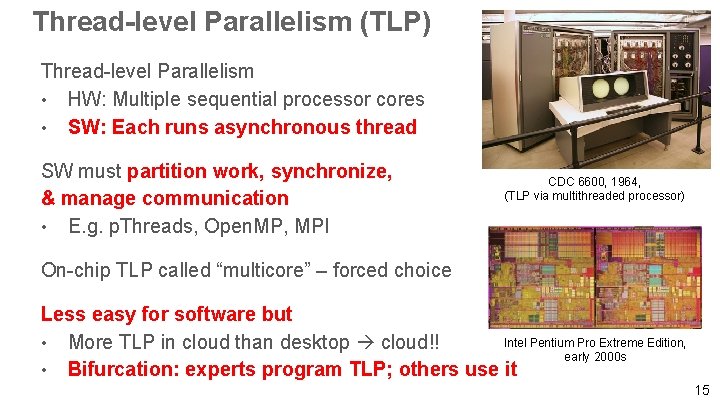

Thread-level Parallelism (TLP) • HW: Multiple sequential processor cores • SW: Each runs an asynchronous thread. SW must partition work, synchronize, & manage communication • e.g., Pthreads, OpenMP, MPI. On-chip TLP is called “multicore”: a forced choice. Less easy for software, but • More TLP in cloud than desktop → cloud!! • Bifurcation: experts program TLP; others use it. [Images: CDC 6600, 1964 (TLP via multithreaded processor); Intel Pentium Pro Extreme Edition, early 2000s]
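The software burden listed above (partition, synchronize, communicate) shows up even in a toy reduction. This Python sketch uses the standard `threading` module in the Pthreads style; the function name is hypothetical, and it illustrates the structure only, since CPython's global interpreter lock keeps these threads from speeding up pure-Python arithmetic.

```python
import threading


def parallel_sum(data, num_threads=4):
    """Sum `data` with explicit TLP: partition, run threads, synchronize, combine."""
    chunk = (len(data) + num_threads - 1) // num_threads   # partition the work
    total = 0
    lock = threading.Lock()                               # protects `total`

    def worker(lo, hi):
        nonlocal total
        partial = sum(data[lo:hi])     # private work, no sharing needed
        with lock:                     # communicate the result under mutual exclusion
            total += partial

    threads = [
        threading.Thread(target=worker, args=(i * chunk, min((i + 1) * chunk, len(data))))
        for i in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()                       # synchronize: wait for every thread to finish
    return total


print(parallel_sum(list(range(1_000))))  # 499500
```

Even here, a missing lock or join would produce a wrong answer only intermittently, one reason TLP is "less easy for software" than BLP or ILP.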

X-level Parallelism in Computer Architecture: 1 CPU → BLP + ILP (Bit/Instruction-Level Parallelism); Multicore → + TLP (Thread-Level Parallelism). [Diagram: cores (P), cache ($), bus, memory (M), I/O interface (i/f) to devices]

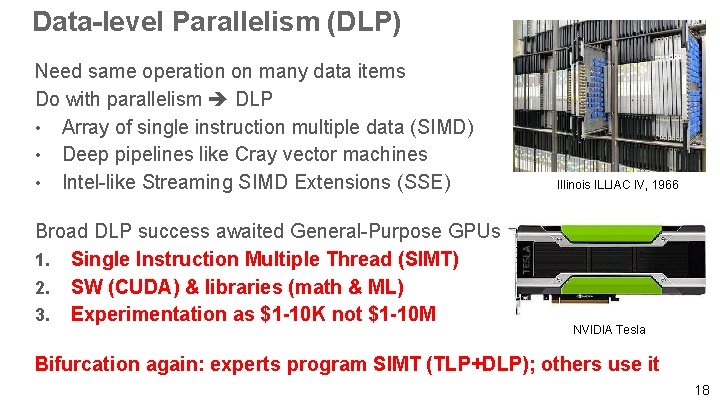

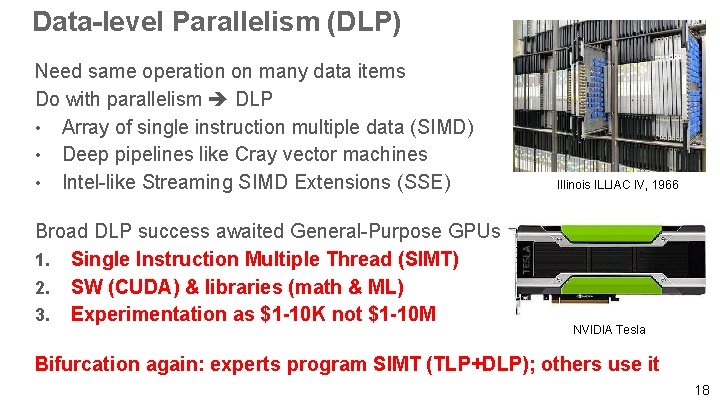

Data-level Parallelism (DLP): Need the same operation on many data items → do it with parallelism → DLP • Array of single instruction multiple data (SIMD) • Deep pipelines like Cray vector machines • Intel-like Streaming SIMD Extensions (SSE). Broad DLP success awaited General-Purpose GPUs: 1. Single Instruction Multiple Thread (SIMT) 2. SW (CUDA) & libraries (math & ML) 3. Experimentation at \$1-10K, not \$1-10M. Bifurcation again: experts program SIMT (TLP+DLP); others use it. [Images: Illinois ILLIAC IV, 1966; NVIDIA Tesla]
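The difference between the SIMD and SIMT styles named above is mostly about what the programmer writes. In this Python sketch both styles compute SAXPY (out = a·x + y): the SIMD version is written in vector-width chunks, while the SIMT version is scalar code for one thread, launched over a thread index in the spirit of a CUDA kernel. The lane width of 4, the function names, and the launcher are hypothetical, and everything runs sequentially here.

```python
def simd_saxpy(a, x, y, width=4):
    """SIMD style: each step is one 'vector instruction' over `width` lanes."""
    out = []
    for i in range(0, len(x), width):
        lanes_x, lanes_y = x[i:i + width], y[i:i + width]
        out.extend(a * xv + yv for xv, yv in zip(lanes_x, lanes_y))
    return out


def saxpy_kernel(tid, a, x, y, out):
    """SIMT style: scalar code for a single thread, identified by `tid`."""
    if tid < len(x):                  # bounds guard, as in a typical CUDA kernel
        out[tid] = a * x[tid] + y[tid]


def launch(kernel, num_threads, *args):
    """Stand-in for a GPU launch: on hardware, threads run in lockstep groups."""
    for tid in range(num_threads):
        kernel(tid, *args)


x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [10.0, 20.0, 30.0, 40.0, 50.0, 60.0]
out = [0.0] * len(x)
launch(saxpy_kernel, 8, 2.0, x, y, out)   # 8 threads > 6 elements, like a rounded-up grid
print(out)                                # [12.0, 24.0, 36.0, 48.0, 60.0, 72.0]
```

SIMT lets the programmer write the per-element code once and leave the grouping into lanes to hardware, which is part of why it broadened DLP beyond experts writing explicit vector code.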

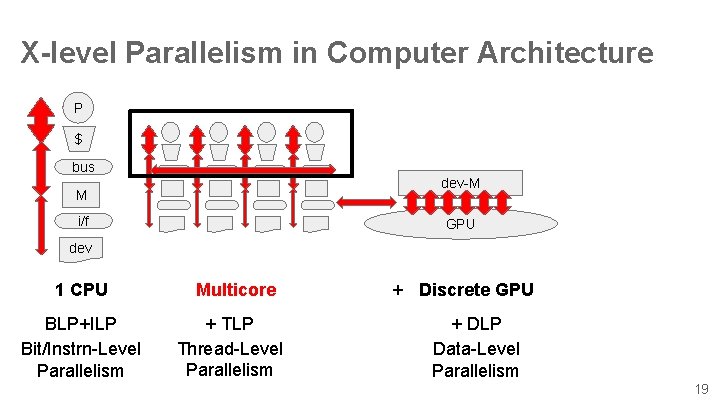

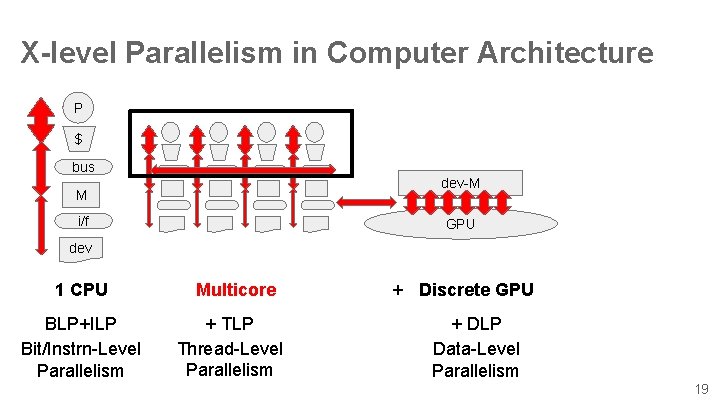

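X-level Parallelism in Computer Architecture: 1 CPU → BLP + ILP (Bit/Instruction-Level Parallelism); Multicore → + TLP (Thread-Level Parallelism); + Discrete GPU → + DLP (Data-Level Parallelism). [Diagram: cores (P), cache ($), bus, memory (M), I/O interface (i/f), and a GPU device with its own device memory (dev-M)]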

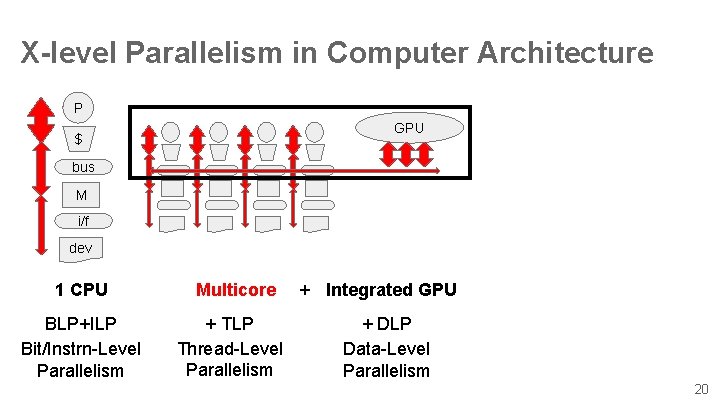

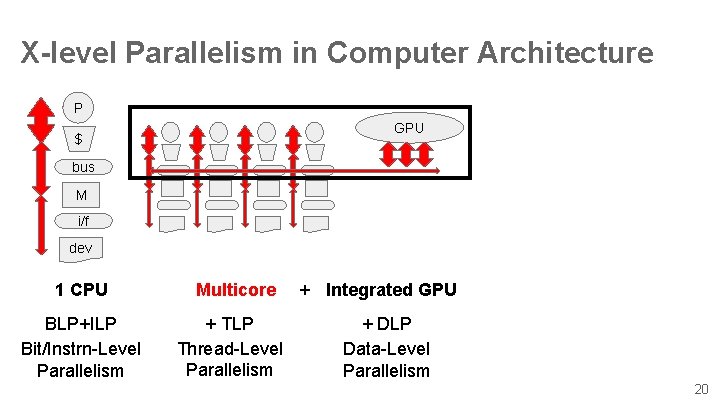

X-level Parallelism in Computer Architecture: 1 CPU → BLP + ILP (Bit/Instruction-Level Parallelism); Multicore → + TLP (Thread-Level Parallelism); + Integrated GPU → + DLP (Data-Level Parallelism). [Diagram: cores (P) and GPU on chip, cache ($), bus, memory (M), I/O interface (i/f) to devices]

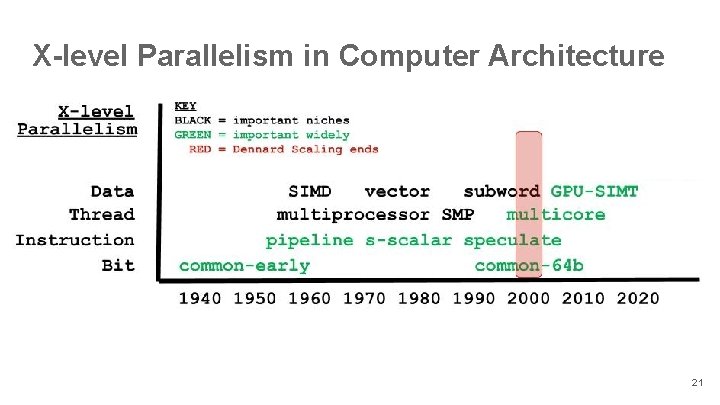

X-level Parallelism in Computer Architecture

Outline: I. Computer History & X-level Parallelism; II. Mobile SoCs as ALP Harbinger; III. Gables ALP SoC Model; IV. Call to Action for Accelerator-level Parallelism

X-level Parallelism in Computer Architecture: 1 CPU → BLP + ILP (Bit/Instruction-Level Parallelism); Multicore → + TLP (Thread-Level Parallelism); + Integrated GPU → + DLP (Data-Level Parallelism); System on a Chip (SoC) → + ALP (Accelerator-Level Parallelism). [Diagram: cores (P), GPU, cache ($), bus, memory (M), I/O interface (i/f) to devices]

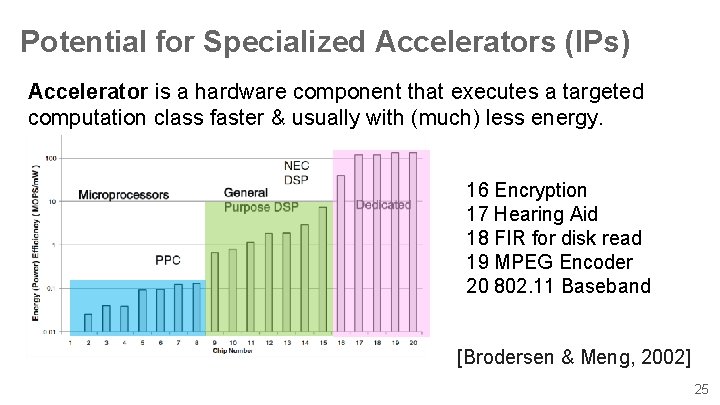

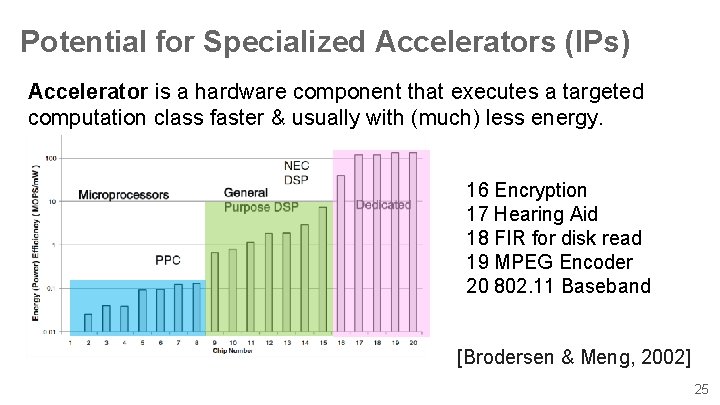

Potential for Specialized Accelerators (IPs): An accelerator is a hardware component that executes a targeted computation class faster & usually with (much) less energy. [Figure: chip comparison from Brodersen & Meng, 2002, including 16 Encryption, 17 Hearing Aid, 18 FIR for disk read, 19 MPEG Encoder, 20 802.11 Baseband]

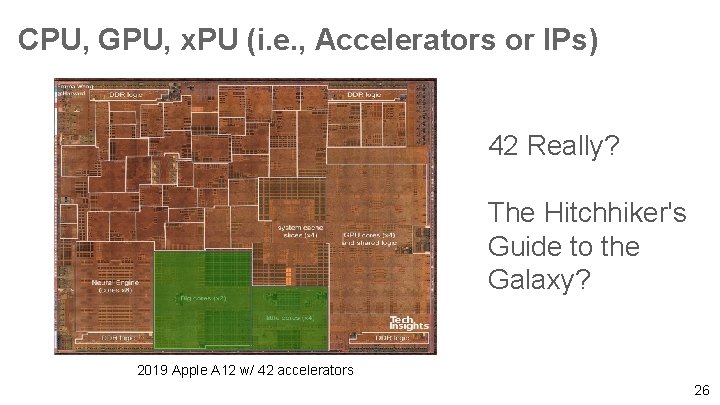

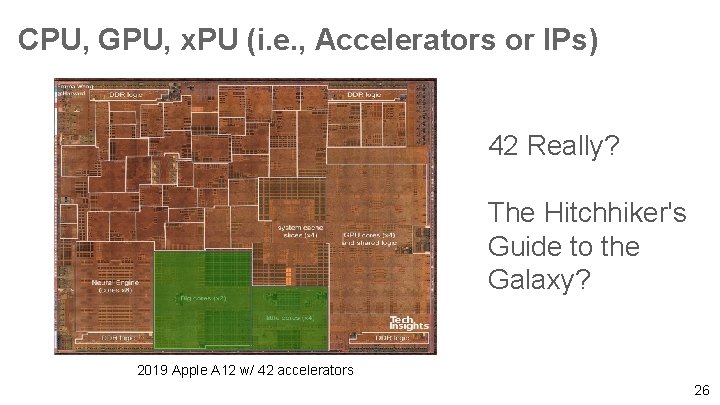

CPU, GPU, xPU (i.e., accelerators or IPs): 42. Really? As in The Hitchhiker's Guide to the Galaxy? 2019 Apple A12 w/ 42 accelerators

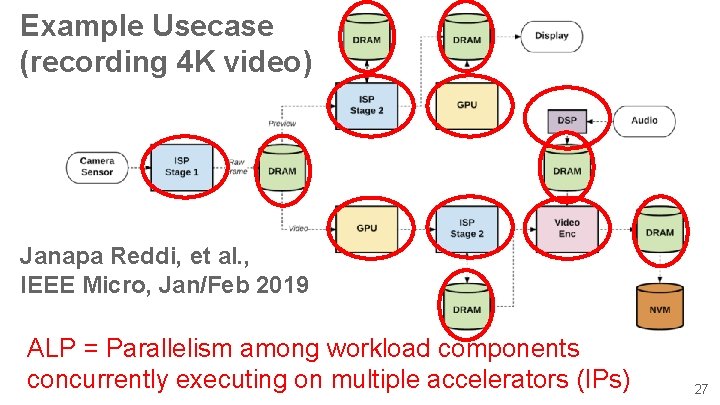

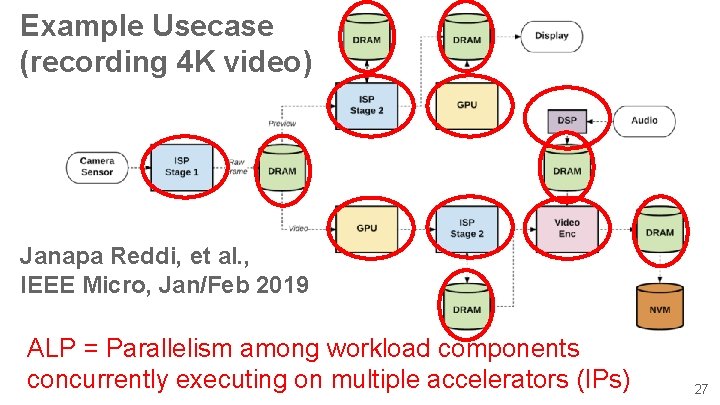

Example Usecase (recording 4K video): Janapa Reddi et al., IEEE Micro, Jan/Feb 2019. ALP = parallelism among workload components concurrently executing on multiple accelerators (IPs)

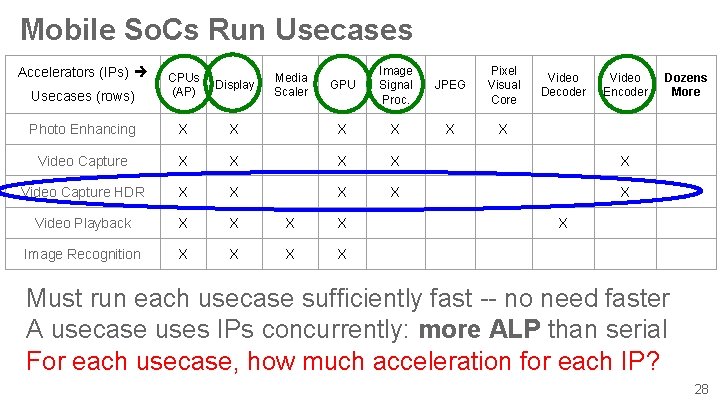

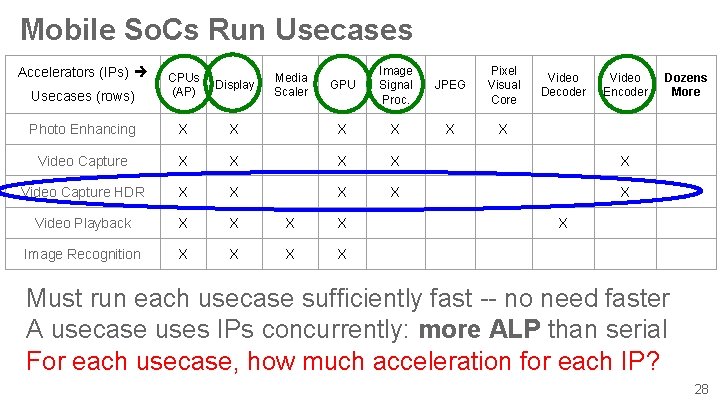

Mobile SoCs Run Usecases. [Table: usecases (rows: Photo Enhancing, Video Capture, Video Capture HDR, Video Playback, Image Recognition) vs. accelerators/IPs (columns: CPUs (AP), Display, Media Scaler, GPU, Image Signal Proc., JPEG, Pixel Visual Core, Video Decoder, Video Encoder, dozens more); an X marks each IP a usecase uses] • Must run each usecase sufficiently fast; no need to run it faster • A usecase uses IPs concurrently: more ALP than serial • For each usecase, how much acceleration does each IP need?
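To see why concurrent IP use matters and why a usecase only needs to be "sufficiently fast," consider a toy throughput model in Python. The IP names echo the table columns, but the per-frame busy times, the 30 fps budget, and the function name are made-up assumptions, and real usecases have dependences this sketch ignores.

```python
FRAME_BUDGET_MS = 1000 / 30   # hypothetical 30 frames-per-second target


def frame_interval_ms(ip_busy_ms, concurrent=True):
    """Time between output frames for one usecase.

    Assumes each IP's per-frame busy time is fixed and, when `concurrent`,
    that IPs work on different frames at once (a pipeline), so the slowest
    IP sets the pace; otherwise the IPs take turns and their times add up.
    """
    times = ip_busy_ms.values()
    return max(times) if concurrent else sum(times)


# Hypothetical per-frame busy times for a video-capture usecase.
video_capture = {"ISP": 12.0, "VideoEncoder": 15.0, "CPU": 6.0, "Display": 4.0}

for concurrent in (False, True):
    t = frame_interval_ms(video_capture, concurrent)
    verdict = "meets" if t <= FRAME_BUDGET_MS else "misses"
    print(f"concurrent={concurrent}: {t:.1f} ms/frame, {verdict} {FRAME_BUDGET_MS:.1f} ms budget")
```

Serially the IPs need 37 ms per frame and miss the budget; concurrently the video encoder sets the pace at 15 ms. Once the budget is met, further accelerating any single IP buys nothing for this usecase, which is one way to frame how much acceleration each IP needs.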

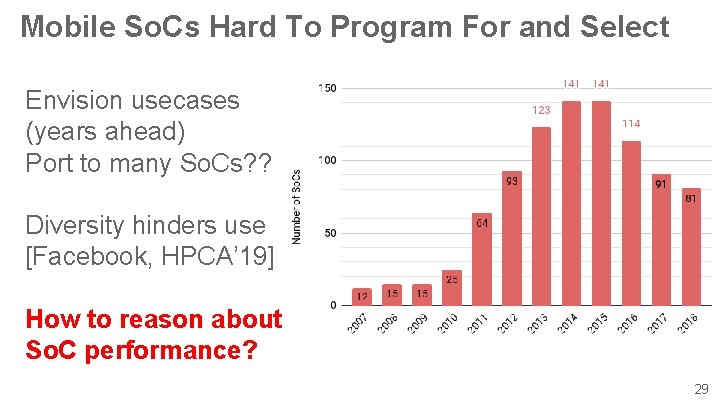

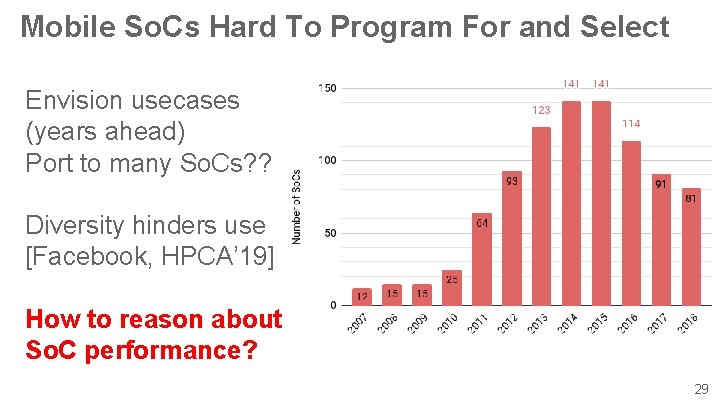

Mobile SoCs Hard To Program For and Select: Envision usecases (years ahead). Port to many SoCs?? Diversity hinders use [Facebook, HPCA’19]. How to reason about SoC performance?

Mobile SoCs Hard To Design: Envision usecases (2-3 years ahead) → Select IPs → Size IPs → Design Uncore. Which accelerators? How big? How to even start?

Outline: I. Computer History & X-level Parallelism; II. Mobile SoCs as ALP Harbinger; III. Gables ALP SoC Model (ok to get lost); IV. Call to Action for Accelerator-level Parallelism

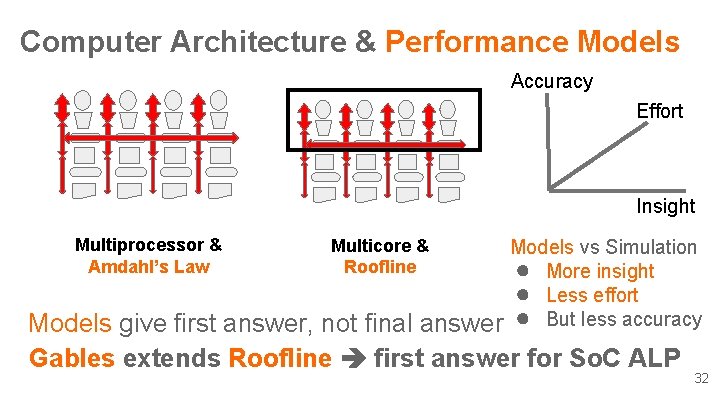

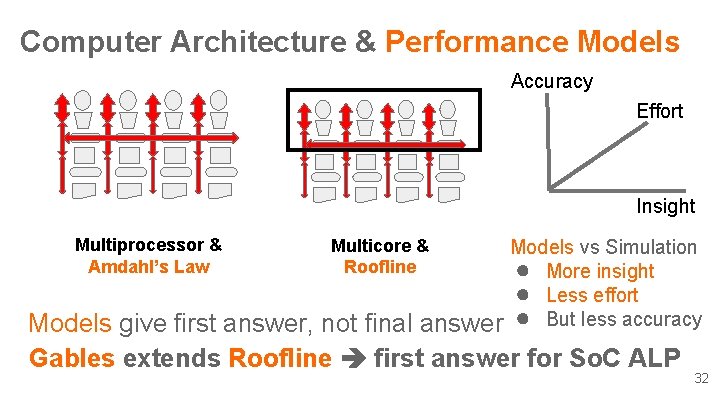

Computer Architecture & Performance Models: Multiprocessor & Amdahl’s Law; Multicore & Roofline. [Figure: models vs. simulation compared on accuracy, effort, and insight] Models vs. simulation: ● More insight ● Less effort ● But less accuracy. Models give a first answer, not the final answer. Gables extends Roofline to give a first answer for SoC ALP.
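As a reminder of how little effort such a model takes, Amdahl's Law fits in a few lines of Python. This is the standard textbook form; the function name is hypothetical.

```python
def amdahl_speedup(parallel_fraction, n):
    """Amdahl's Law: overall speedup when `parallel_fraction` of the work runs n times faster."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n)


for n in (2, 8, 64, 1_000_000):
    print(n, round(amdahl_speedup(0.9, n), 2))
```

With 90% of the work parallelized, speedup approaches but never exceeds 10 regardless of core count: the kind of first answer, obtained without simulation, that these models provide.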

Roofline for Multicore Chips, 2009. Multicore HW • $P_{peak}$ = peak perf of all cores • $B_{peak}$ = peak off-chip bandwidth. Multicore SW • $I$ = operational intensity = #operations / #off-chip bytes • e.g., 2 ops / 16 bytes → $I = 1/8$. Output: $P_{att}$ = upper bound on attainable performance

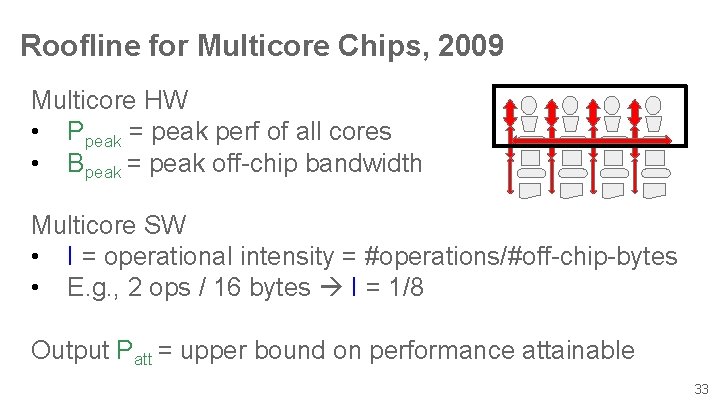

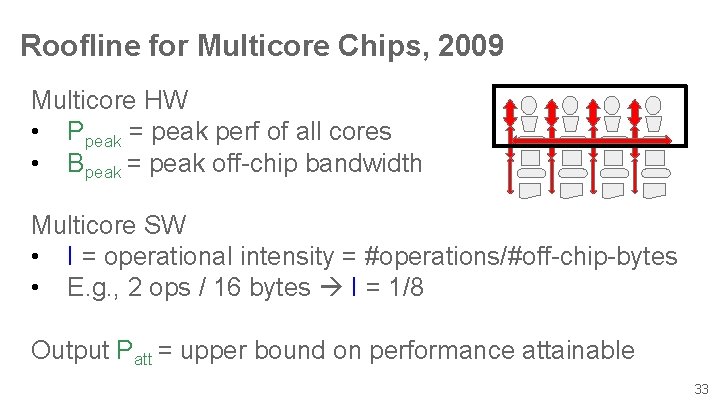

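Roofline for Multicore Chips, 2009. [Figure: naive Roofline model; attainable performance $P_{att}$ (y-axis) vs. operational intensity $I$ (x-axis), bounded by the slope $B_{peak} \cdot I$ and the flat roof $P_{peak}$. Source: https://commons.wikimedia.org/wiki/File:Example_of_a_naive_Roofline_model.svg] Compute v. Communication: Operational Intensity ($I$) = #operations / #off-chip bytes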
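The roofline itself is just the minimum of the two roofs. In this Python sketch, Ppeak = 40 and Bpeak = 10 are illustrative values only, and the function names are hypothetical; with I = 1/8 (the 2 ops / 16 bytes example), the chip is bandwidth-bound at Patt = Bpeak × I = 1.25, far below Ppeak.

```python
def roofline(p_peak, b_peak, intensity):
    """Attainable performance Patt = min(Ppeak, Bpeak * I).

    p_peak: peak compute (operations/s), b_peak: peak off-chip bandwidth (bytes/s),
    intensity: operations per off-chip byte.
    """
    return min(p_peak, b_peak * intensity)


def ridge_point(p_peak, b_peak):
    """Operational intensity where the bandwidth slope meets the compute roof."""
    return p_peak / b_peak


P_PEAK, B_PEAK = 40.0, 10.0          # illustrative units, not from a real chip
for i in (1 / 8, 1.0, 4.0, 8.0):
    bound = "bandwidth" if i < ridge_point(P_PEAK, B_PEAK) else "compute"
    print(f"I = {i:5.3f}: Patt = {roofline(P_PEAK, B_PEAK, i):5.2f} ({bound}-bound)")
```

Below the ridge point (I = 4 here), more caching or reuse raises performance; above it, only more compute does.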

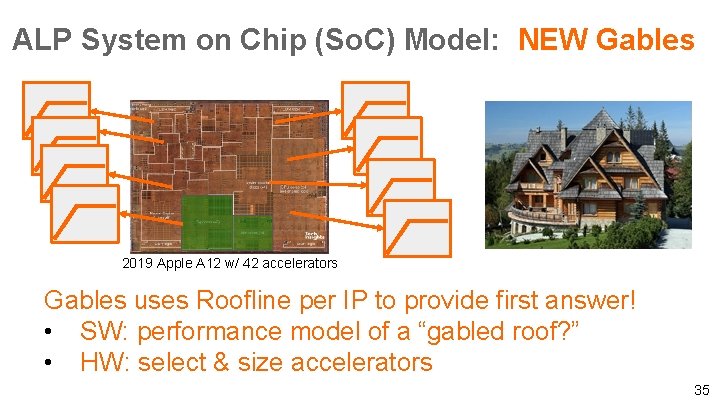

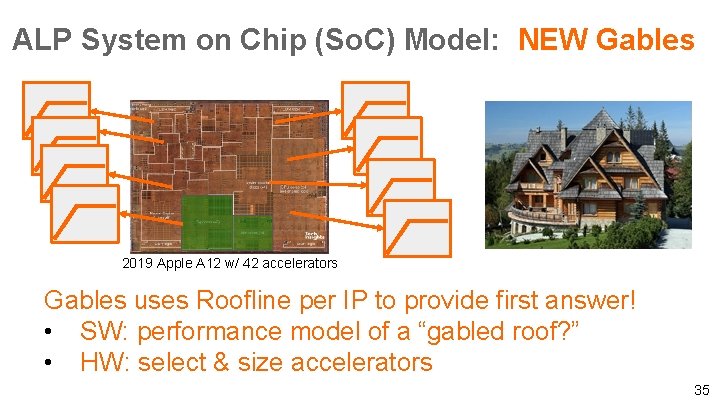

ALP System on Chip (SoC) Model: NEW Gables. 2019 Apple A12 w/ 42 accelerators. Gables uses Roofline per IP to provide a first answer! • SW: performance model of a “gabled roof?” • HW: select & size accelerators

![Gables for N-IP SoC: CPUs IP[0], A0 = 1, A0*Ppeak](https://slidetodoc.com/presentation_image/275083c289d518db1973f6eccca38529/image-31.jpg)

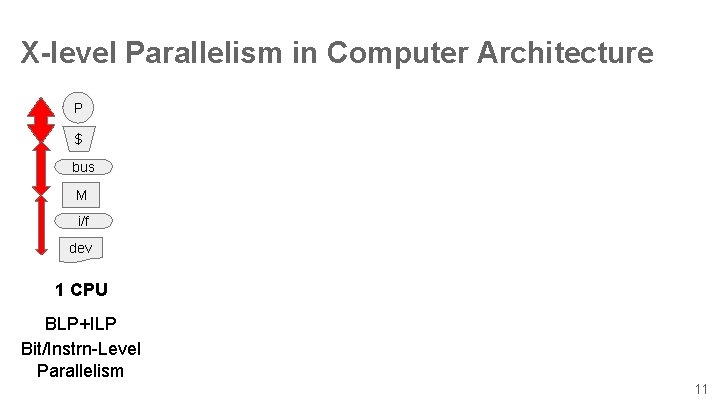

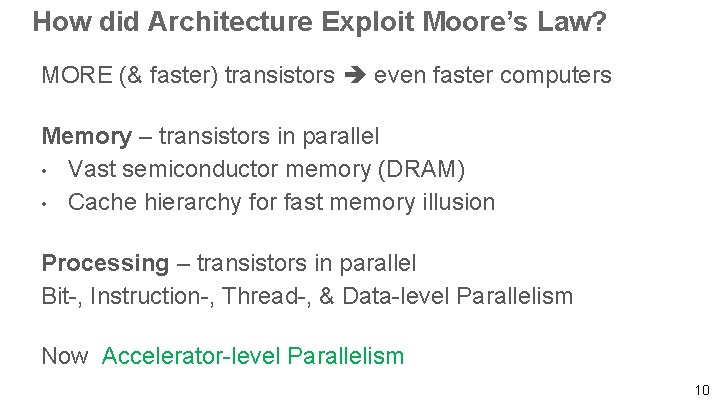

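Gables for N-IP SoC: CPUs IP[0] with $A_0 = 1$ (peak $A_0 \cdot P_{peak}$, bandwidth $B_0$); IP[1] (peak $A_1 \cdot P_{peak}$, bandwidth $B_1$); …; IP[N-1] (peak $A_{N-1} \cdot P_{peak}$, bandwidth $B_{N-1}$); all IPs share off-chip $B_{peak}$. Usecase at each IP[i] • Operational intensity $I_i$ operations/byte • Non-negative work $f_i$ (the $f_i$ sum to 1) w/ IPs in parallel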
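Written out, one reading of how Gables combines the per-IP rooflines is as follows (a sketch based on the Gables paper's formulation; the paper is the authoritative version). Each IP[i] is limited by its own roofline, the shared off-chip link is limited by the total bytes moved, and the usecase finishes when the slowest of these does:

```latex
\begin{aligned}
T_i &= \frac{f_i}{\min\left(B_i \, I_i,\; A_i \, P_{peak}\right)}, \qquad i = 0, \dots, N-1 \\
T_{mem} &= \frac{1}{B_{peak}} \sum_{i=0}^{N-1} \frac{f_i}{I_i} \\
P_{att} &= \frac{1}{\max\left(T_0, \dots, T_{N-1}, T_{mem}\right)}
\end{aligned}
```

An IP with $f_i = 0$ contributes $T_i = 0$, which is why an unused IP adds no roofline.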

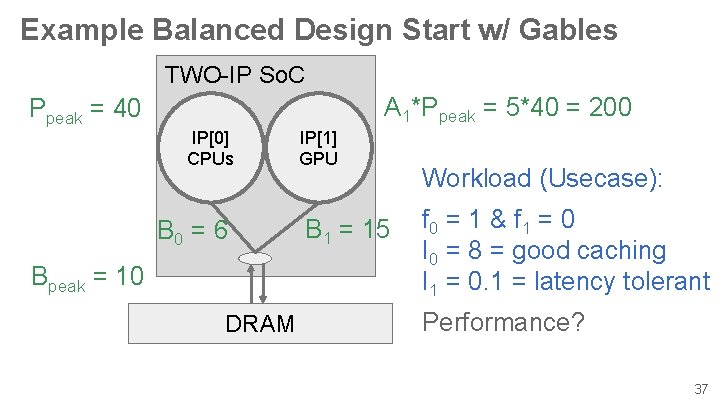

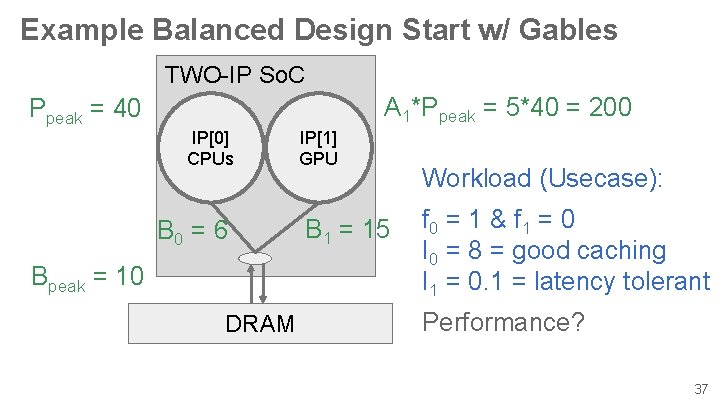

Example Balanced Design, start w/ Gables TWO-IP SoC: IP[0] CPUs with $P_{peak} = 40$, $B_0 = 6$; IP[1] GPU with $A_1 \cdot P_{peak} = 5 \cdot 40 = 200$, $B_1 = 15$; DRAM $B_{peak} = 10$. Workload (usecase): $f_0 = 1$ & $f_1 = 0$; $I_0 = 8$ = good caching; $I_1 = 0.1$ = latency tolerant. Performance?

![Perf limited by IP[0] at I0 = 8; IP[1] not used, no roofline](https://slidetodoc.com/presentation_image/275083c289d518db1973f6eccca38529/image-33.jpg)

Perf limited by IP[0] at $I_0 = 8$; IP[1] not used → no roofline. Let’s assign IP[1] work: $f_1 = 0 \to 0.75$. Parameters: $P_{peak} = 40$, $B_{peak} = 10$, $A_1 = 5$, $B_0 = 6$, $B_1 = 15$, $f_1 = 0$, $I_0 = 8$, $I_1 = 0.1$

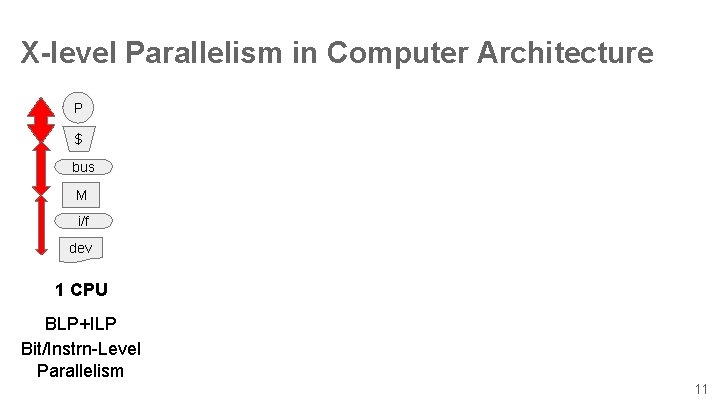

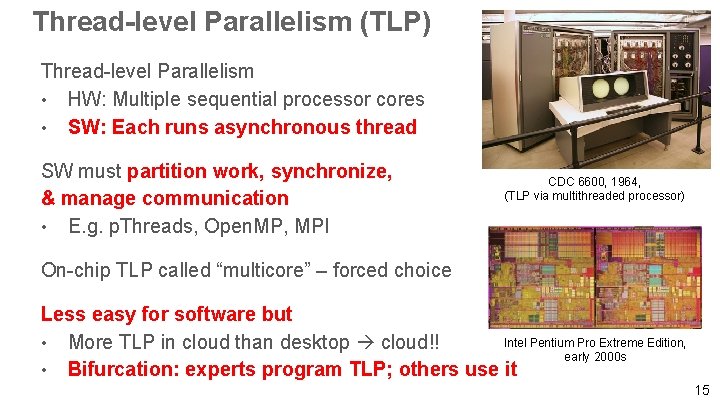

![IP[1] present but Perf drops to 1! Why? I1 = 0.1, memory bottleneck](https://slidetodoc.com/presentation_image/275083c289d518db1973f6eccca38529/image-34.jpg)

IP[1] present but Perf drops to 1! Why? $I_1 = 0.1$ → memory bottleneck. Enhance $B_{peak} = 10 \to 30$ (at a cost). Parameters: $P_{peak} = 40$, $B_{peak} = 10$, $A_1 = 5$, $B_0 = 6$, $B_1 = 15$, $f_1 = 0.75$, $I_0 = 8$, $I_1 = 0.1$

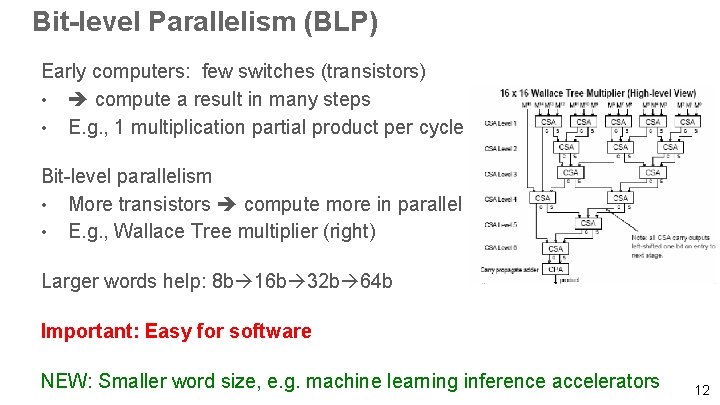

![Perf only 2 with IP[1] bottleneck; IP[1] SRAM/reuse: I1 = 0.1 → 8](https://slidetodoc.com/presentation_image/275083c289d518db1973f6eccca38529/image-35.jpg)

Perf only 2 with IP[1] bottleneck. IP[1] SRAM/reuse: $I_1 = 0.1 \to 8$. Reduce overkill: $B_{peak} = 30 \to 20$. Parameters: $P_{peak} = 40$, $B_{peak} = 30$, $A_1 = 5$, $B_0 = 6$, $B_1 = 15$, $f_1 = 0.75$, $I_0 = 8$, $I_1 = 0.1$

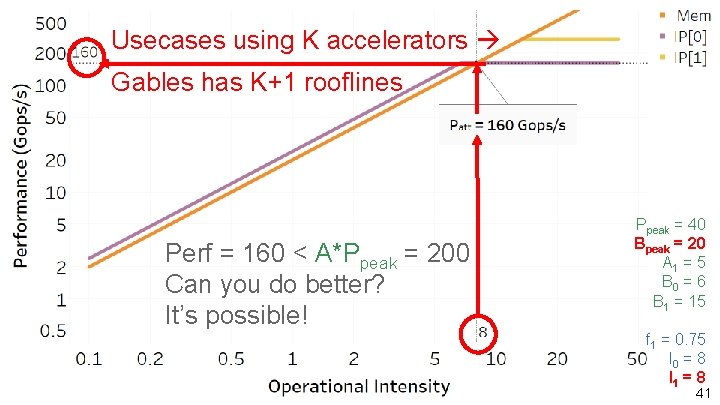

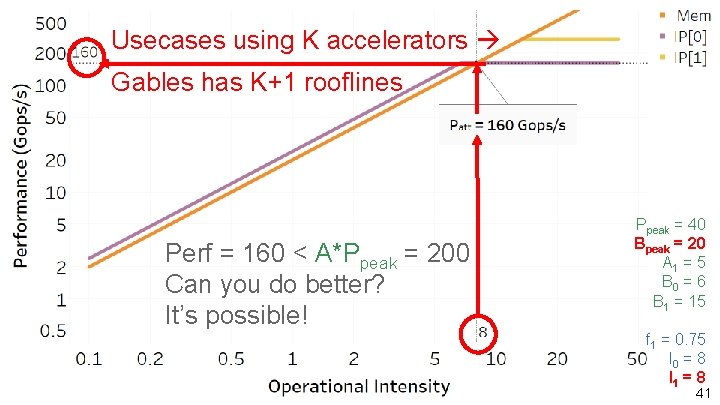

Usecases using K accelerators → Gables has K+1 rooflines. Perf = 160 < $A_1 \cdot P_{peak}$ = 200. Can you do better? It’s possible! Parameters: $P_{peak} = 40$, $B_{peak} = 20$, $A_1 = 5$, $B_0 = 6$, $B_1 = 15$, $f_1 = 0.75$, $I_0 = 8$, $I_1 = 8$
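The four-step walkthrough above can be replayed with a short Python sketch of the Gables-style bound: per-IP roofline times plus a shared-memory time, with the slowest one setting performance. The function and parameter names are hypothetical, and the formulation is one reading of the Gables model rather than the authors' released code. With the slide parameters it gives 40 for the first step (limited by IP[0]), about 1.33 for the second (the slide says it "drops to 1"), 2 for the third, and 160 for the fourth, matching "Perf only 2" and "Perf = 160."

```python
def gables(p_peak, b_peak, accel, bw, work, intensity):
    """Attainable SoC performance under a Gables-style model (sketch).

    accel[i]: acceleration A_i of IP[i] relative to p_peak (A_0 = 1 for the CPUs)
    bw[i]: bandwidth B_i between IP[i] and the shared memory system
    work[i]: fraction f_i of the usecase's work on IP[i] (fractions sum to 1)
    intensity[i]: operational intensity I_i of IP[i]'s work (ops/byte)
    """
    ip_times = [
        f / min(b * op_int, a * p_peak) if f > 0 else 0.0   # per-IP roofline; idle IPs cost nothing
        for a, b, f, op_int in zip(accel, bw, work, intensity)
    ]
    # Total off-chip bytes per unit of work, moved over the shared Bpeak link.
    memory_time = sum(f / op_int for f, op_int in zip(work, intensity) if f > 0) / b_peak
    return 1.0 / max(ip_times + [memory_time])


# Two-IP SoC from the slides: CPUs (IP[0]) and GPU (IP[1]).
P_PEAK, ACCEL, BW = 40, [1, 5], [6, 15]
steps = [
    ("f1 = 0, Bpeak = 10",            10, [1.0, 0.0],   [8, 0.1]),
    ("f1 = 0.75, Bpeak = 10",         10, [0.25, 0.75], [8, 0.1]),
    ("f1 = 0.75, Bpeak = 30",         30, [0.25, 0.75], [8, 0.1]),
    ("f1 = 0.75, Bpeak = 20, I1 = 8", 20, [0.25, 0.75], [8, 8]),
]
for label, b_peak, work, intensity in steps:
    print(f"{label:32s} Patt = {gables(P_PEAK, b_peak, ACCEL, BW, work, intensity):.2f}")
```

In the last step IP[0], IP[1], and the memory system all take the same time (0.00625 per unit of work), so no single resource is over-provisioned: the balanced design the slides arrive at.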

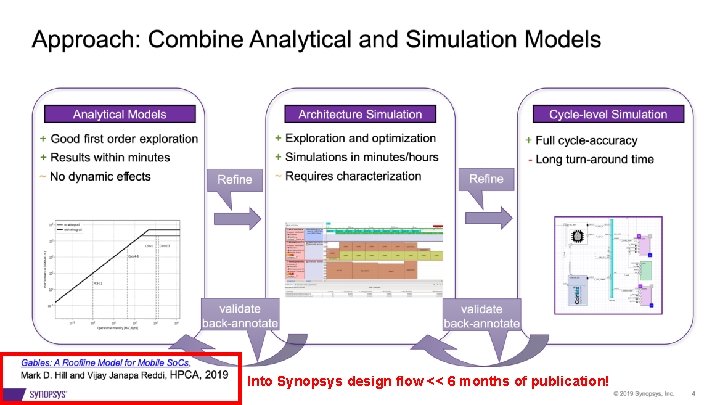

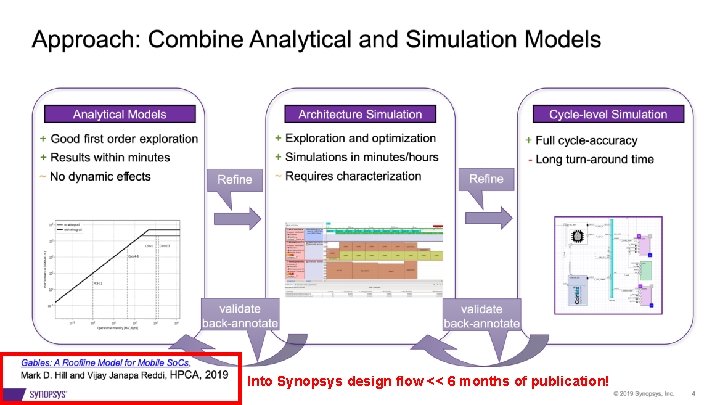

Into the Synopsys design flow well within 6 months of publication!

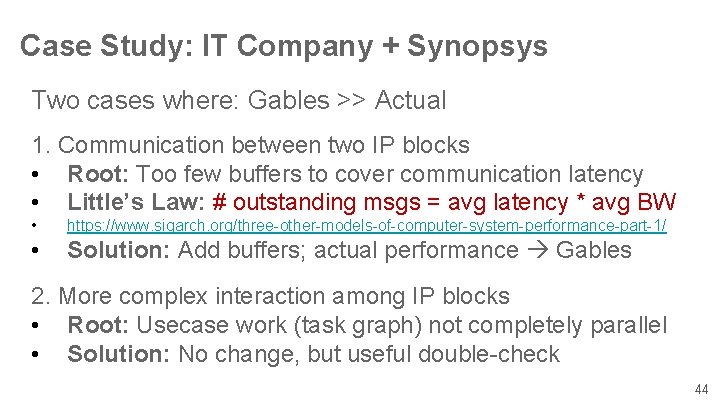

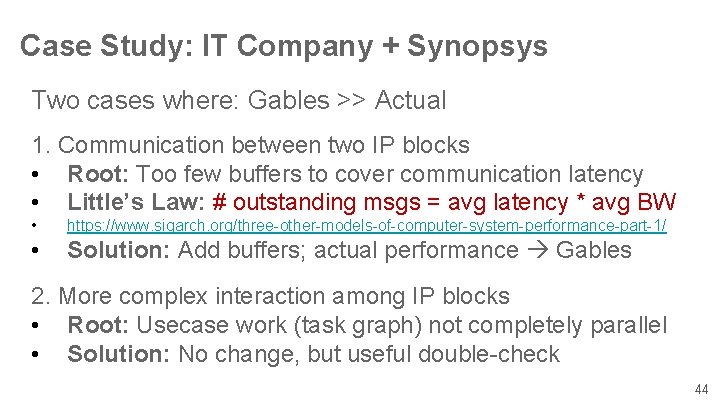

Case Study: IT Company + Synopsys. Two cases where Gables >> Actual: 1. Communication between two IP blocks • Root cause: too few buffers to cover communication latency • Little’s Law: # outstanding msgs = avg latency × avg BW • https://www.sigarch.org/three-other-models-of-computer-system-performance-part-1/ • Solution: add buffers; actual performance then approaches Gables. 2. More complex interaction among IP blocks • Root cause: usecase work (task graph) not completely parallel • Solution: no change, but a useful double-check
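The first root cause above is easy to check with Little's Law. This Python sketch sizes the buffers an IP needs to keep a link busy; the latency, bandwidth, message size, and function names are hypothetical, and the latency is assumed to be the time a buffer stays occupied per message.

```python
import math


def buffers_needed(latency_ns, bandwidth_bytes_per_ns, msg_bytes):
    """Little's Law: outstanding messages = average latency * average throughput."""
    in_flight_bytes = latency_ns * bandwidth_bytes_per_ns
    return math.ceil(in_flight_bytes / msg_bytes)


def achievable_bandwidth(buffers, latency_ns, msg_bytes, link_bytes_per_ns):
    """Throughput is capped by the link or by how many messages can be in flight."""
    return min(link_bytes_per_ns, buffers * msg_bytes / latency_ns)


# Hypothetical IP-to-IP link: 200 ns latency, 16 bytes/ns (16 GB/s), 64-byte messages.
LATENCY, LINK_BW, MSG = 200, 16, 64
need = buffers_needed(LATENCY, LINK_BW, MSG)
print(f"buffers needed to cover latency: {need}")
for n in (8, 25, need):
    bw = achievable_bandwidth(n, LATENCY, MSG, LINK_BW)
    print(f"{n:3d} buffers -> {bw:5.2f} bytes/ns")
```

Here 50 buffers are needed; with only 8, the link delivers 2.56 of its 16 bytes/ns, so measured performance falls well below a Gables bound that assumes full bandwidth.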

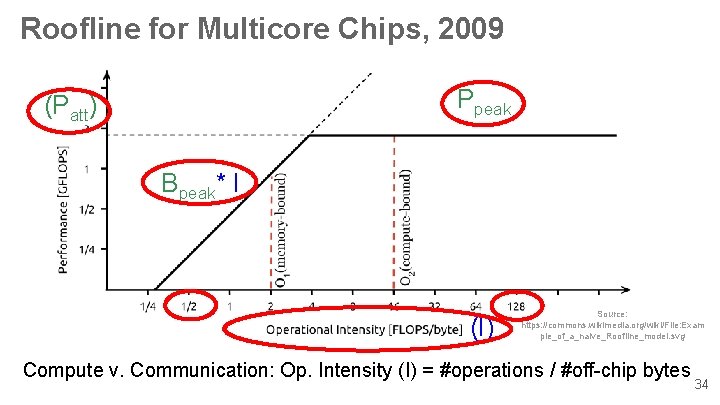

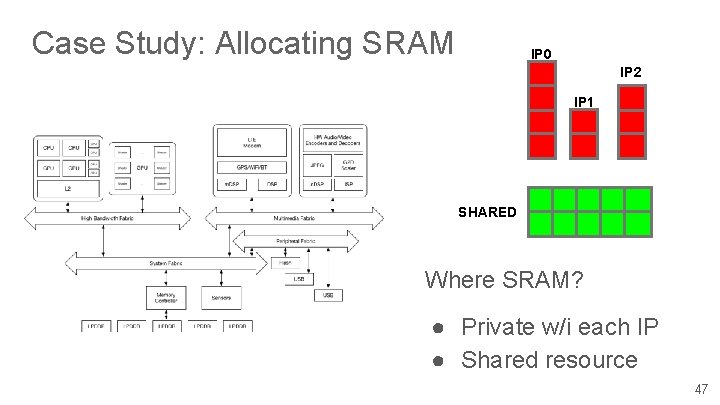

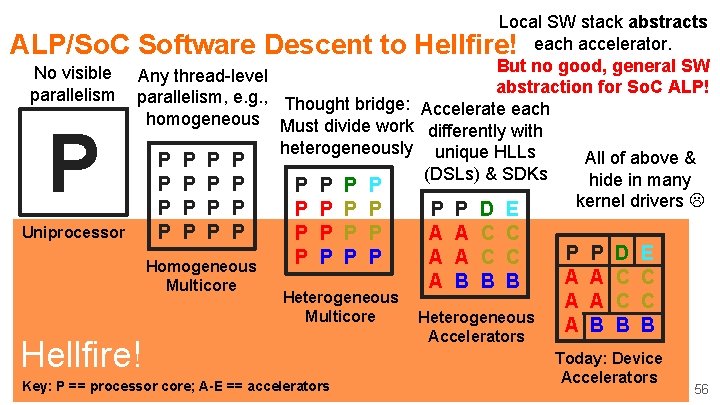

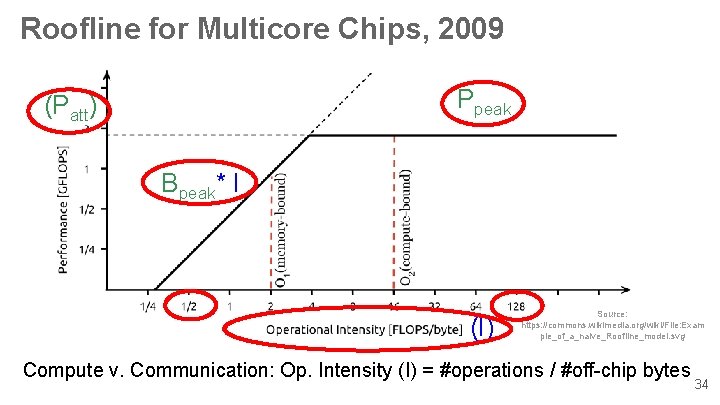

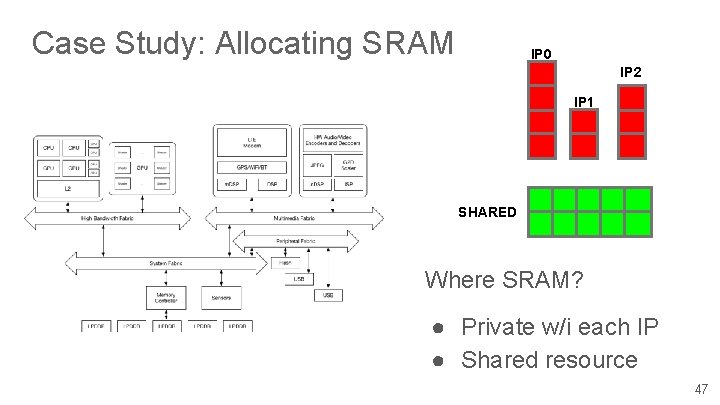

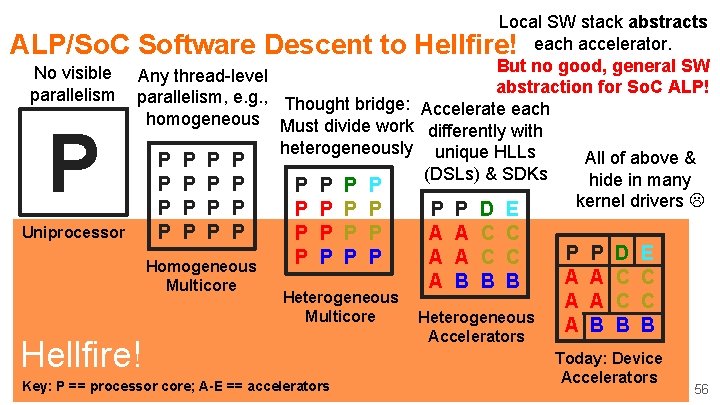

Case Study: Allocating SRAM. [Figure: IP 0, IP 1, IP 2, and a SHARED SRAM] Where should SRAM go? ● Private w/i each IP ● Shared resource

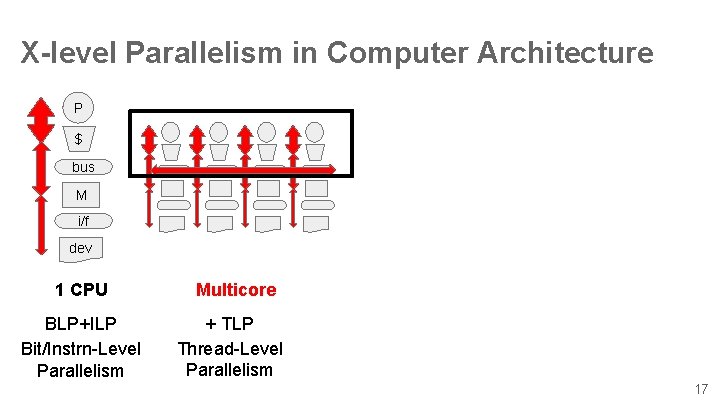

![Does more IP[i] SRAM help Op. Intensity (Ii)? Compute v. Communication: Op. Intensity (I)](https://slidetodoc.com/presentation_image/275083c289d518db1973f6eccca38529/image-40.jpg)

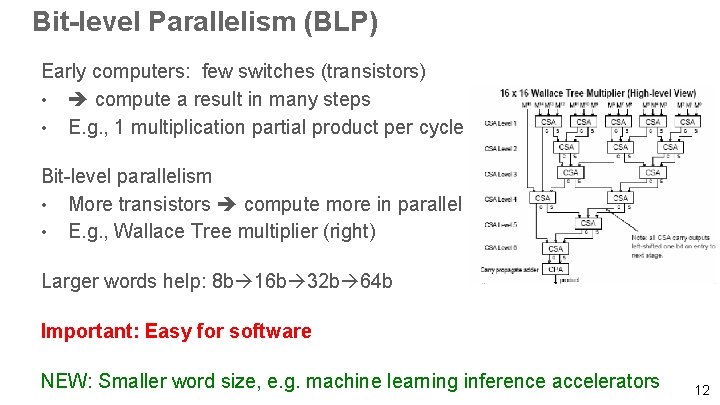

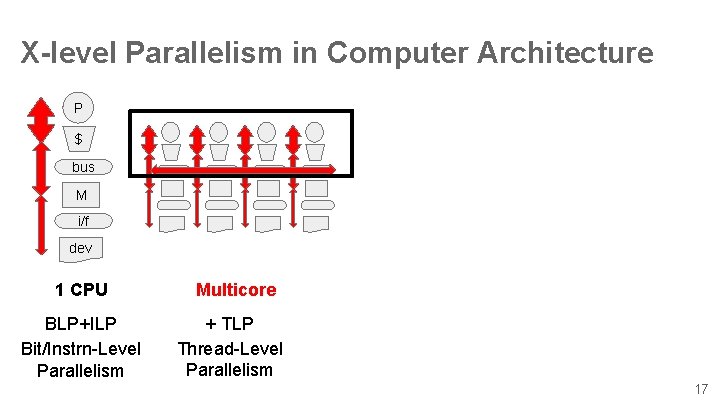

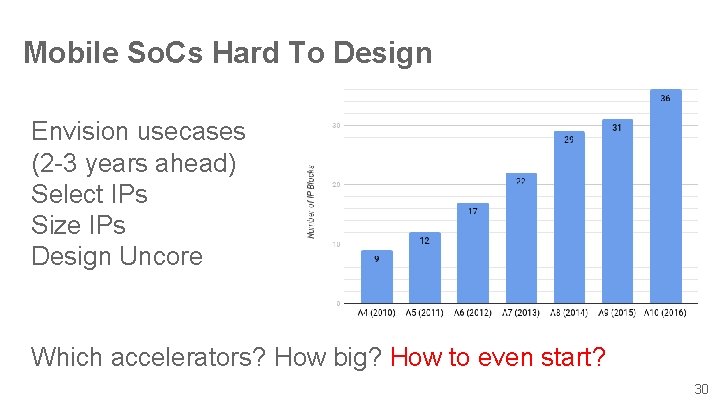

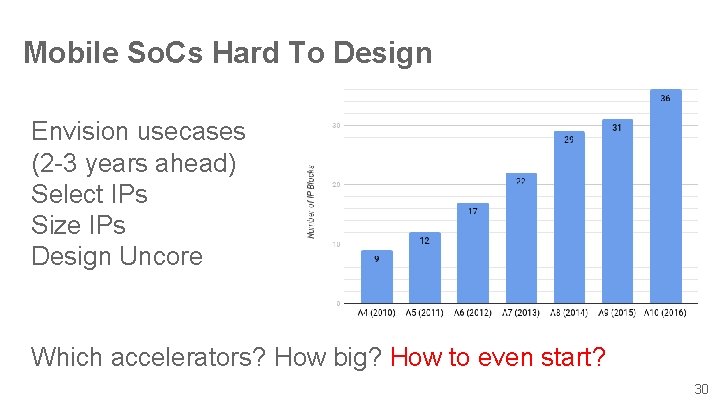

Does more IP[i] SRAM help Op. Intensity ($I_i$)? Compute v. Communication: Op. Intensity ($I$) = #operations / #off-chip bytes. [Figure: $I_i$ (y-axis) vs. IP[i] SRAM (x-axis), with regions labeled “Not much fits,” “Small W/S fits,” “Med. W/S fits,” and “Large W/S fits” (W/S = working set)] $I_i$ is a non-linear function of IP[i] SRAM that increases when a new footprint/working set fits. Consider these plots when sizing IP[i] SRAM. Later evaluation can use simulated performance on the y-axis.
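Because $I_i$ rises only when another working set fits, a simple lookup captures the shape of these plots. In this Python sketch the footprints, intensities, and function name are hypothetical; in practice they would come from profiling or simulation of the IP's workload.

```python
from bisect import bisect_right


def intensity_for_sram(sram_bytes, working_sets):
    """Operational intensity I_i as a step function of IP[i] SRAM size.

    working_sets: (footprint_bytes, intensity_if_it_fits) pairs, sorted by
    footprint; `intensity_if_it_fits` should grow with the footprint.
    The first entry (footprint 0) is the intensity when nothing useful fits.
    """
    footprints = [fp for fp, _ in working_sets]
    idx = bisect_right(footprints, sram_bytes) - 1   # largest working set that fits
    return working_sets[idx][1]


# Hypothetical working sets for one IP's kernel.
KIB = 1024
curve = [(0, 0.5), (64 * KIB, 2.0), (512 * KIB, 8.0), (4096 * KIB, 32.0)]

for size_kib in (32, 64, 256, 511, 512, 2048, 8192):
    print(f"{size_kib:5d} KiB SRAM -> I = {intensity_for_sram(size_kib * KIB, curve)}")
```

Growing SRAM from 64 KiB to 511 KiB buys nothing here, while the step at 512 KiB quadruples $I_i$; in a Gables-style bound, that jump can move an IP off its bandwidth roof.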

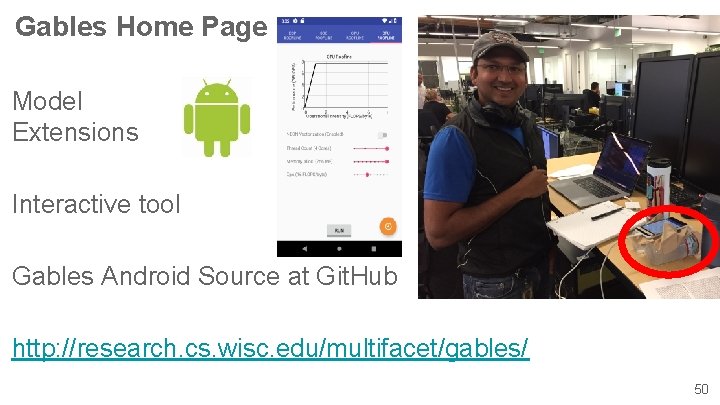

Gables Home Page: Model, Extensions, Interactive tool, Gables Android Source at GitHub. http://research.cs.wisc.edu/multifacet/gables/

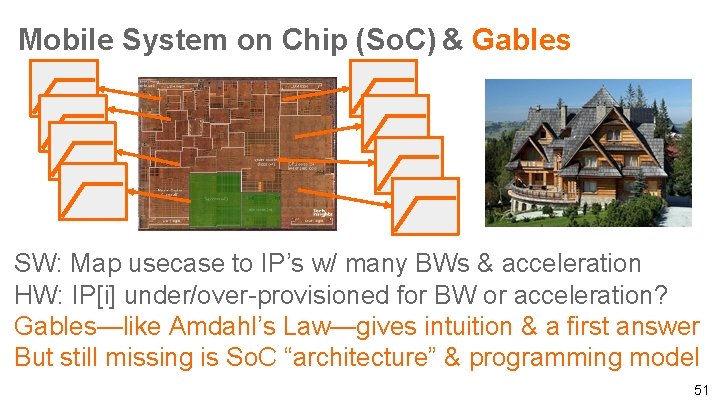

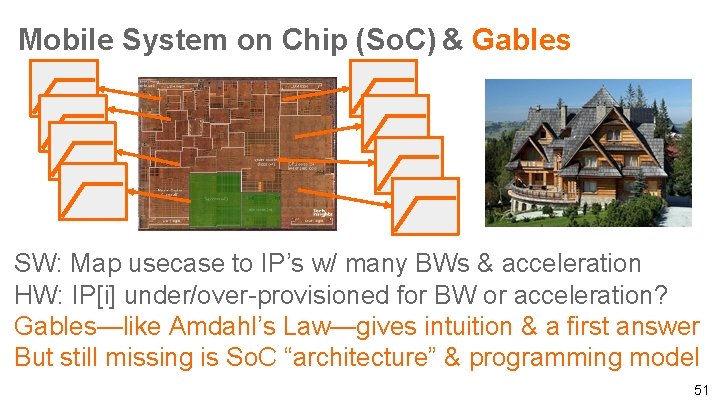

Mobile System on Chip (SoC) & Gables • SW: Map usecase to IPs w/ many BWs & acceleration • HW: Is IP[i] under- or over-provisioned for BW or acceleration? Gables, like Amdahl’s Law, gives intuition & a first answer. But still missing: SoC “architecture” & programming model

Outline: I. Computer History & X-level Parallelism; II. Mobile SoCs as ALP Harbinger; III. Gables ALP SoC Model; IV. Call to Action for Accelerator-level Parallelism

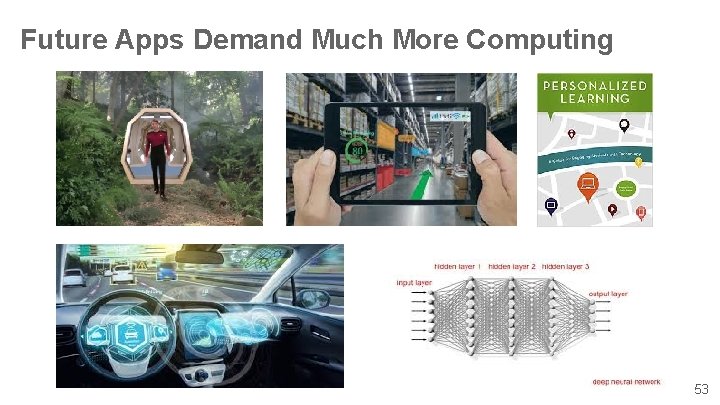

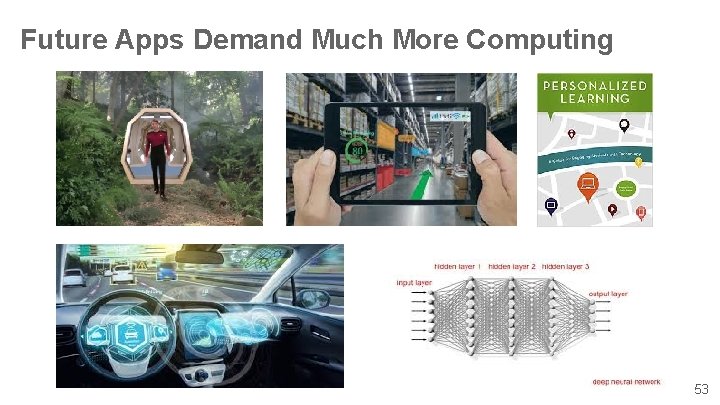

Future Apps Demand Much More Computing

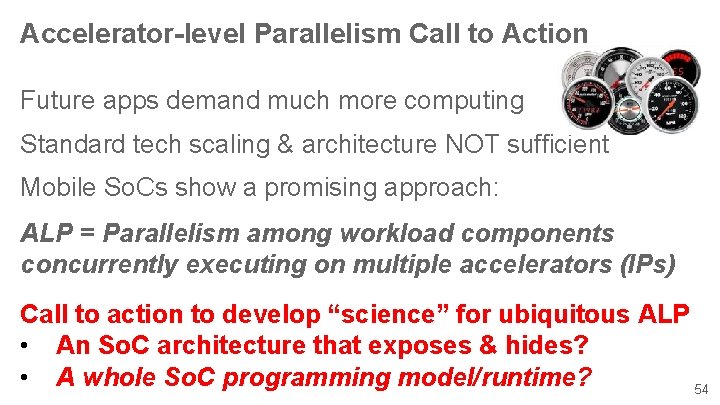

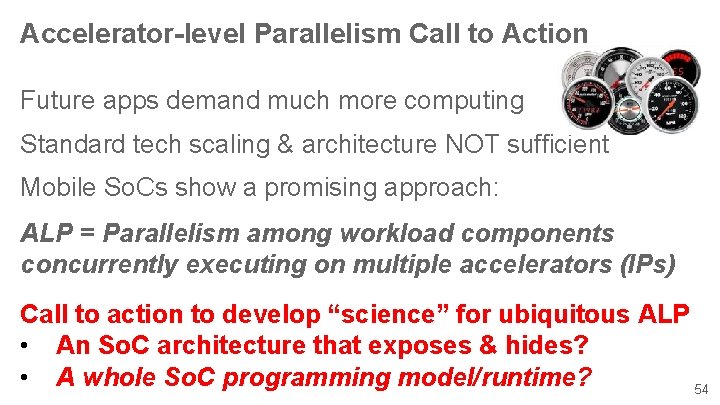

Accelerator-level Parallelism Call to Action: • Future apps demand much more computing • Standard tech scaling & architecture NOT sufficient • Mobile SoCs show a promising approach: ALP = parallelism among workload components concurrently executing on multiple accelerators (IPs) • Call to action to develop a “science” for ubiquitous ALP: • An SoC architecture that exposes & hides? • A whole SoC programming model/runtime?

Software Descent to Hellfire! [Figure: a descending staircase of system types; Key: P == processor core; A-E == accelerators] • Uniprocessor: no visible parallelism • Homogeneous Multicore: any thread-level parallelism, e.g., homogeneous • Heterogeneous Multicore: must divide work differently with heterogeneity • Today, Device Accelerators (heterogeneous accelerators): all of the above & hide in many kernel drivers • ALP/SoC: thought bridge: accelerate each with unique HLLs (DSLs) & SDKs; a local SW stack abstracts each accelerator, but there is no good, general SW abstraction for SoC ALP! → Hellfire!

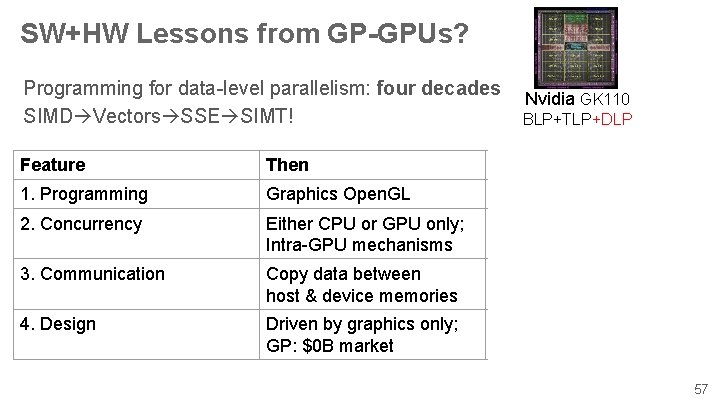

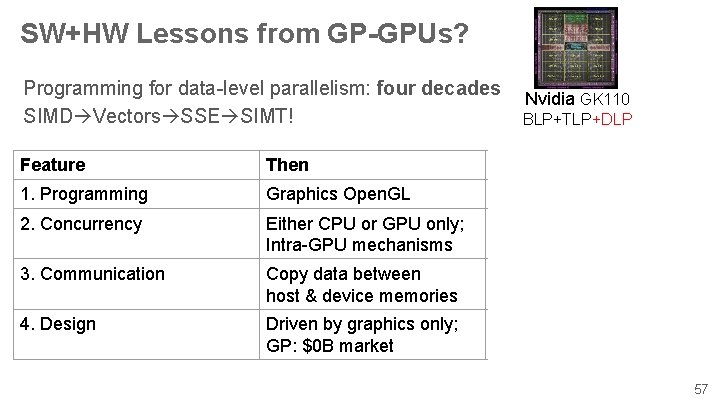

SW+HW Lessons from GP-GPUs? Programming for data-level parallelism took four decades: SIMD → Vectors → SSE → SIMT! [Image: Nvidia GK110, BLP+TLP+DLP]

| Feature | Then | Now |
|---|---|---|
| 1. Programming | Graphics OpenGL | SIMT (CUDA/OpenCL/HIP) |
| 2. Concurrency | Either CPU or GPU only; intra-GPU mechanisms | Finer-grain interaction; intra-GPU mechanisms |
| 3. Communication | Copy data between host & device memories | Maybe shared memory, sometimes coherence |
| 4. Design | Driven by graphics only; GP: \$0B market | GP major player, e.g., deep neural networks |

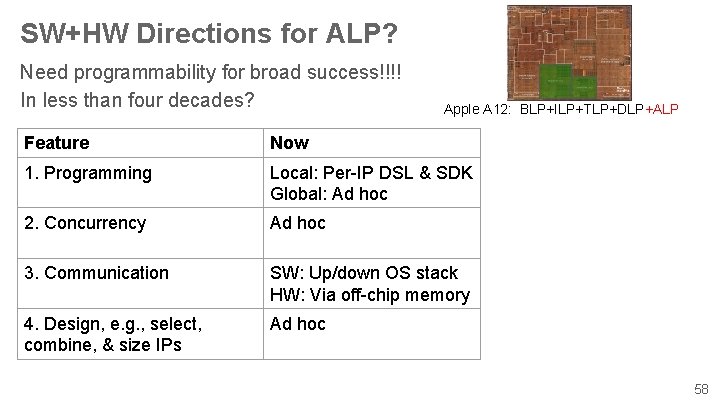

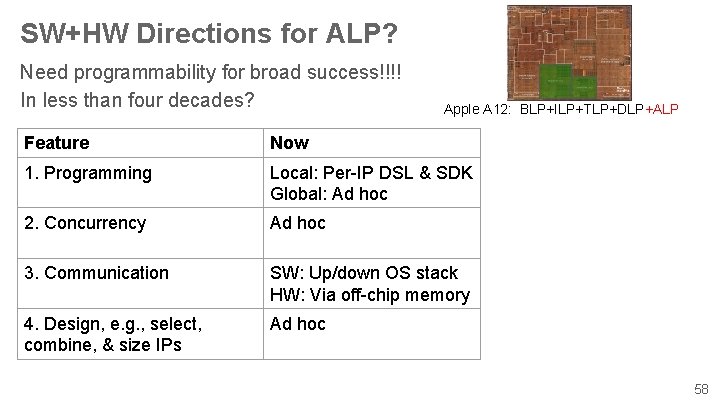

SW+HW Directions for ALP? Need programmability for broad success!!!! In less than four decades? Apple A12: BLP+ILP+TLP+DLP+ALP

| Feature | Now | Future? |
|---|---|---|
| 1. Programming | Local: per-IP DSL & SDK; Global: ad hoc | Abstract ALP/SoC like SIMT does for GP-GPUs |
| 2. Concurrency | Ad hoc | GP-GPU-like scheduling? Virtualize/partition IP? |
| 3. Communication | SW: up/down OS stack; HW: via off-chip memory | SW+HW for queue pairs? Want control/data planes |
| 4. Design, e.g., select, combine, & size IPs | Ad hoc | Make a “science.” Speed with tools/frameworks |

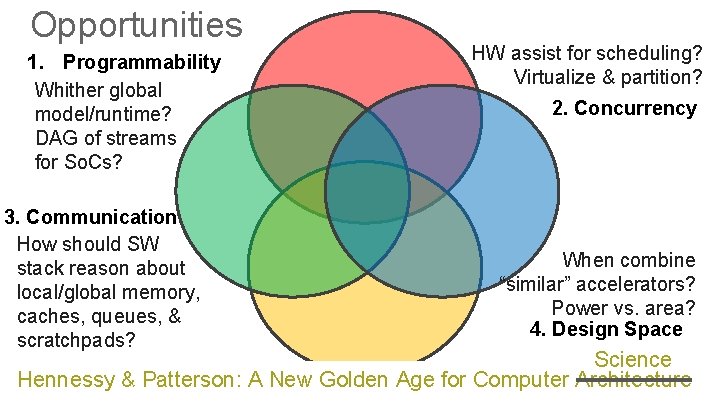

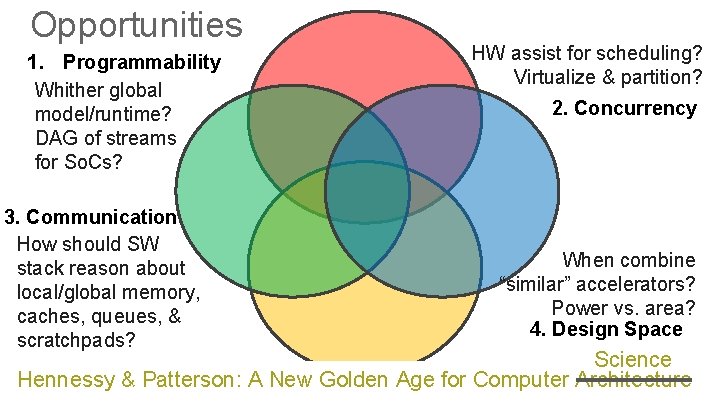

Opportunities & Challenges: 1. Programmability: Whither a global model/runtime? DAG of streams for SoCs? 2. Concurrency: HW assist for scheduling? Virtualize & partition? 3. Communication: How should the SW stack reason about local/global memory, caches, queues, & scratchpads? 4. Design Space “Science”: When to combine “similar” accelerators? Power vs. area? [Image: Hennessy & Patterson, “A New Golden Age for Computer Architecture”]

New Feb 2020!