Accelerating Pointer Chasing in 3D-Stacked Memory: Challenges, Mechanisms, Evaluation


- Slides: 34

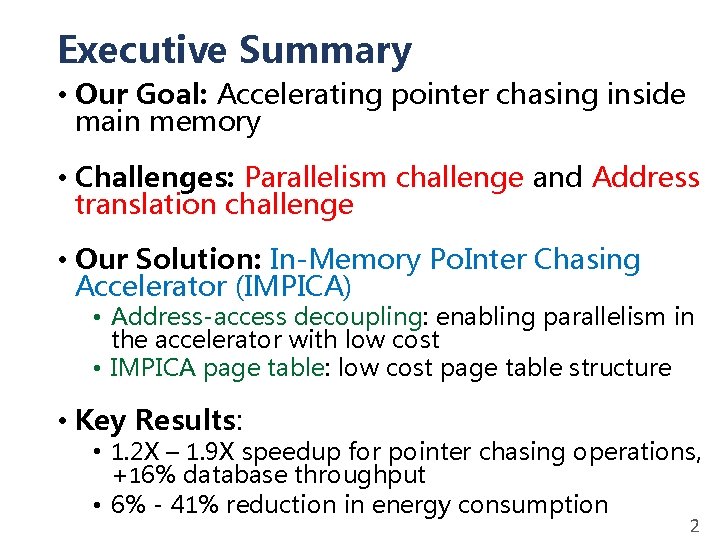

Accelerating Pointer Chasing in 3D-Stacked Memory: Challenges, Mechanisms, Evaluation

Kevin Hsieh, Samira Khan, Nandita Vijaykumar, Kevin K. Chang, Amirali Boroumand, Saugata Ghose, Onur Mutlu

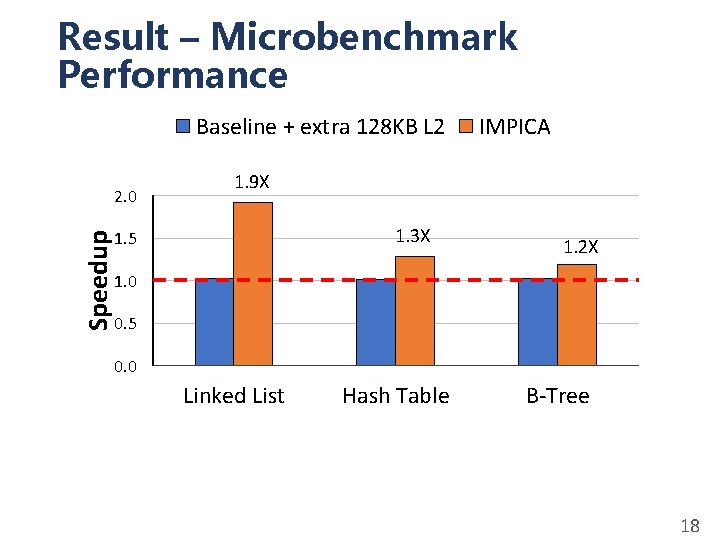

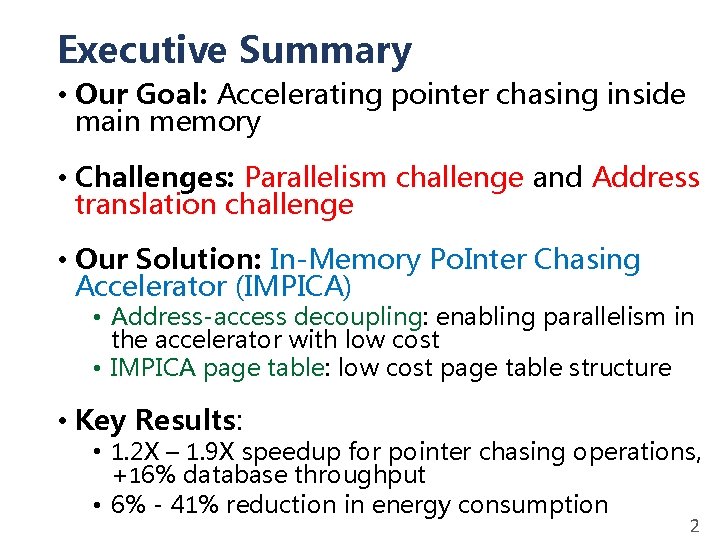

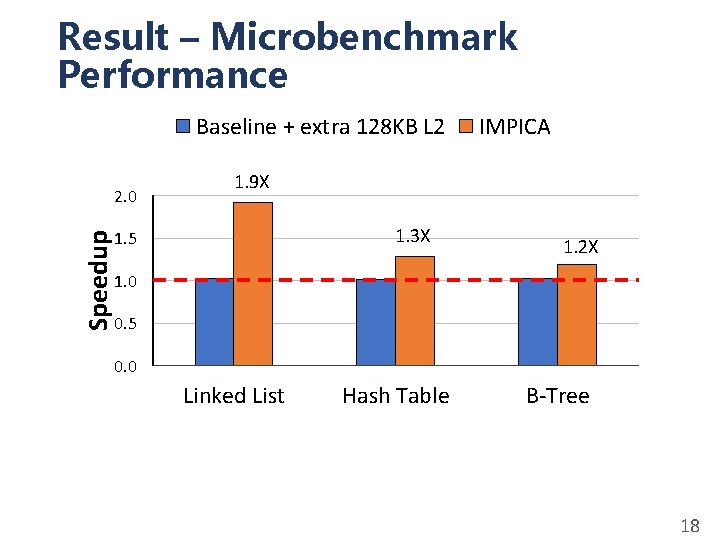

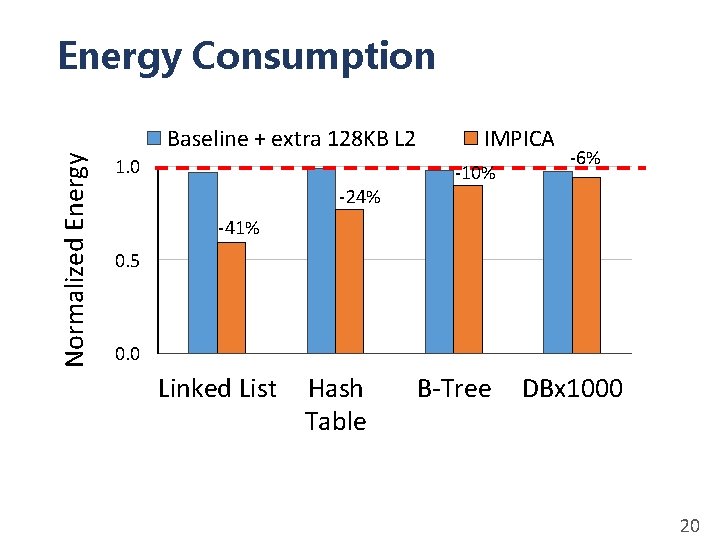

Executive Summary

- Our goal: accelerating pointer chasing inside main memory
- Challenges: the parallelism challenge and the address translation challenge
- Our solution: In-Memory PoInter Chasing Accelerator (IMPICA)
  - Address-access decoupling: enabling parallelism in the accelerator at low cost
  - IMPICA page table: a low-cost page table structure
- Key results:
  - 1.2X-1.9X speedup for pointer chasing operations, +16% database throughput
  - 6%-41% reduction in energy consumption

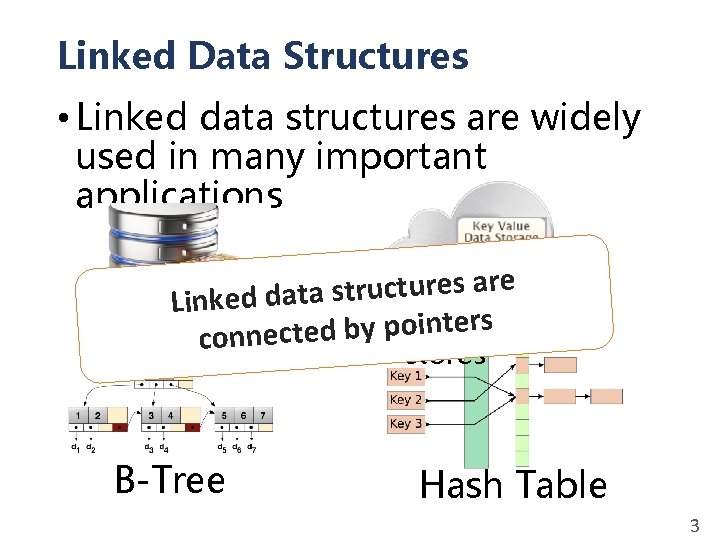

Linked Data Structures

- Linked data structures are widely used in many important applications, such as databases (B-tree) and key-value stores (hash table)
- Linked data structures are connected by pointers

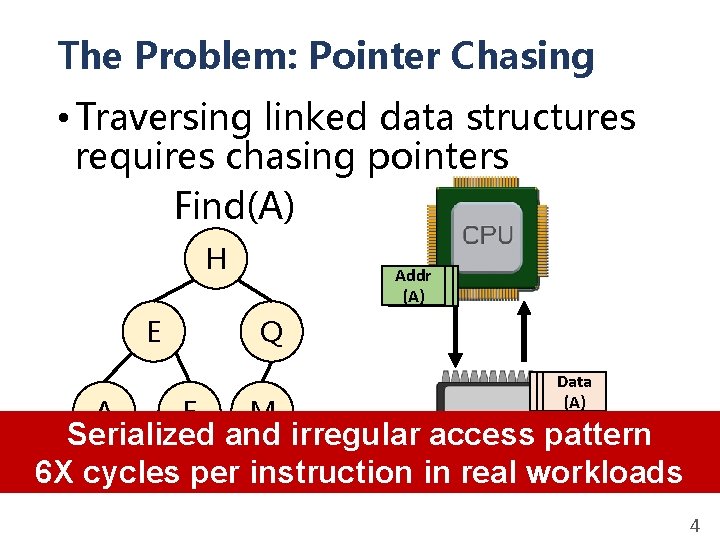

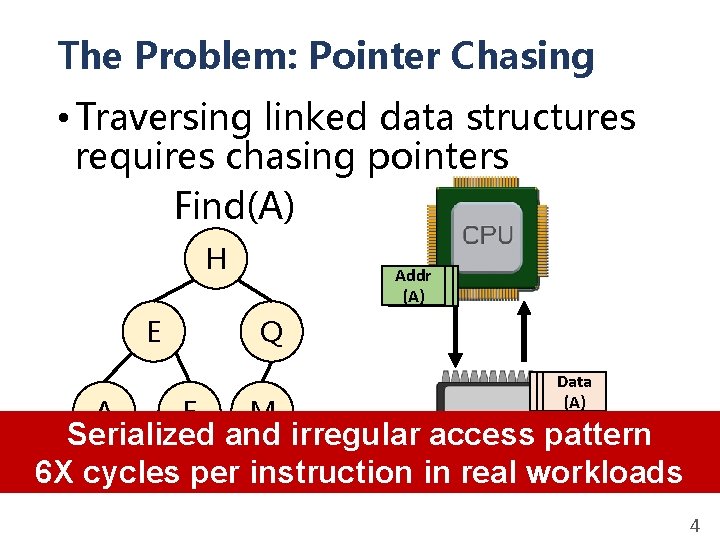

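The Problem: Pointer Chasing

- Traversing linked data structures requires chasing pointers
- Example: Find(A) visits nodes H, E, and A in turn. The address of each node is known only after the previous node's data arrives, so memory sees Addr(H), Data(H), Addr(E), Data(E), Addr(A), Data(A)
- The resulting access pattern is serialized and irregular
- 6X cycles per instruction in real workloads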
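The following minimal Python sketch makes the serialization concrete. It runs Find(A) on a binary search tree and records each node it dereferences. The tree shape is our guess at the slide's figure: H at the root, E and Q as its children, A and F under E, and M under Q. `Node` and `find` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    key: str
    data: str
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def find(root: Optional[Node], key: str) -> tuple[Optional[str], list[str]]:
    """BST lookup that records every node it dereferences.

    Each iteration must load the current node before it knows which pointer
    to follow next, so load k+1 depends on load k: the accesses are serialized.
    """
    visited = []
    node = root
    while node is not None:
        visited.append(node.key)  # one dependent memory access
        if key == node.key:
            return node.data, visited
        node = node.left if key < node.key else node.right
    return None, visited


# Assumed tree layout for the slide's example nodes H, E, A, Q, F, M.
tree = Node("H", "Data(H)",
            left=Node("E", "Data(E)", Node("A", "Data(A)"), Node("F", "Data(F)")),
            right=Node("Q", "Data(Q)", Node("M", "Data(M)")))

print(find(tree, "A"))  # ('Data(A)', ['H', 'E', 'A'])
```

A CPU cannot prefetch E before it has loaded H, which is why each hop exposes the full memory latency.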

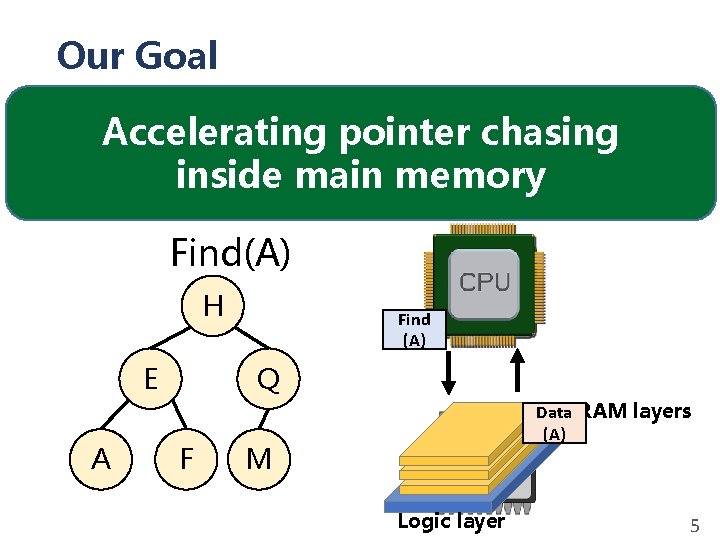

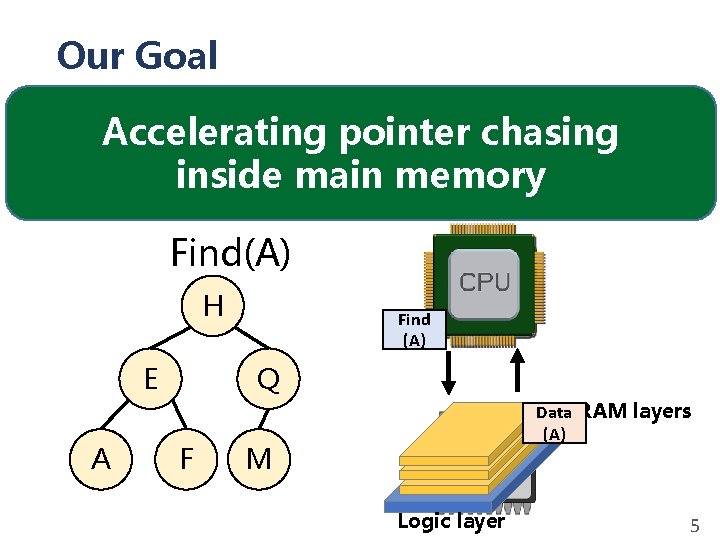

Our Goal: Accelerating pointer chasing inside main memory

(Figure: Find(A) is sent to the logic layer of the 3D-stacked memory, beneath the DRAM layers. The traversal of H, E, and A happens there, and only Data(A) returns.)

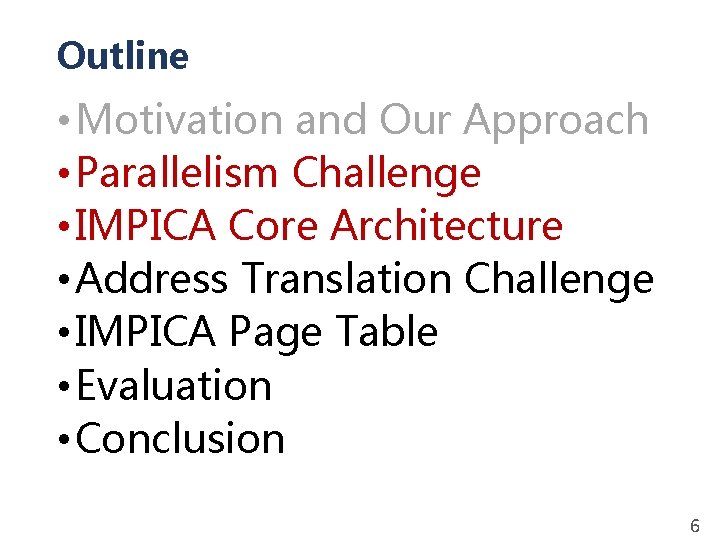

Outline

- Motivation and Our Approach
- Parallelism Challenge
- IMPICA Core Architecture
- Address Translation Challenge
- IMPICA Page Table
- Evaluation
- Conclusion

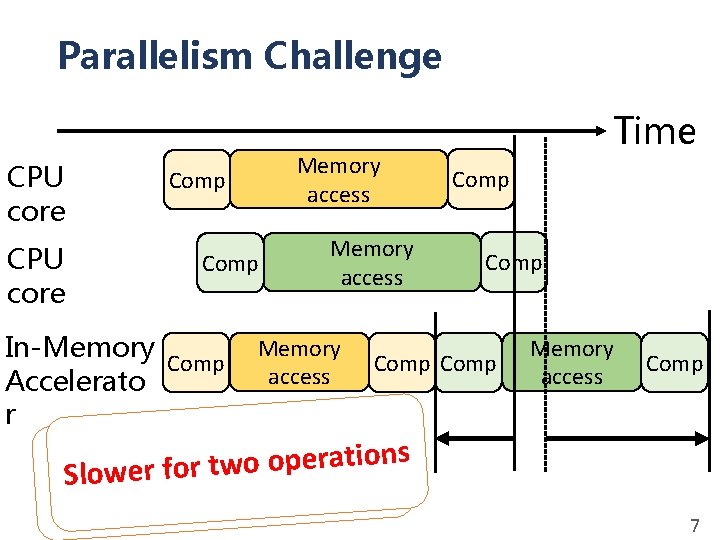

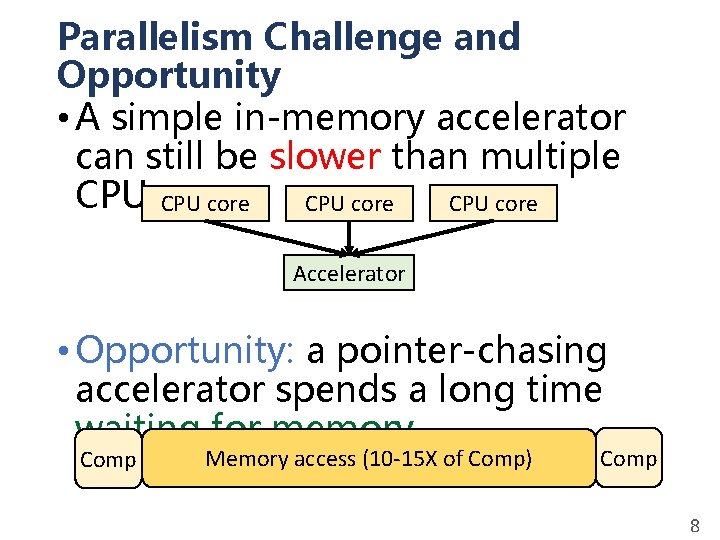

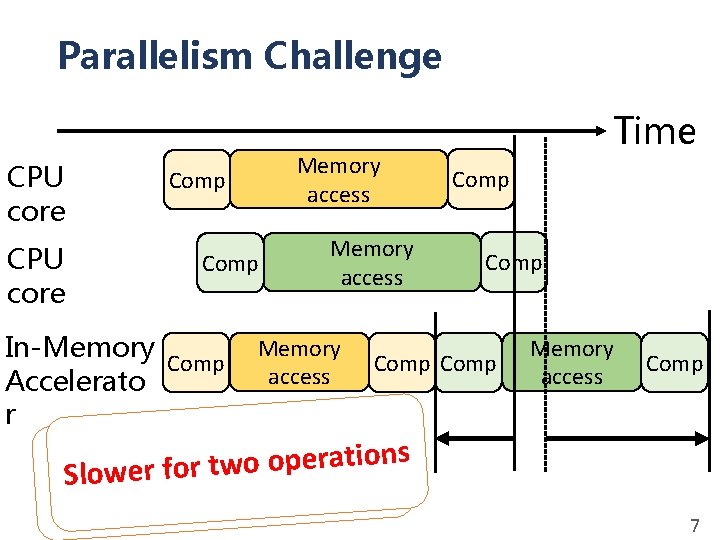

Parallelism Challenge

(Figure: timelines of computation (Comp) and memory access on a CPU core and on an in-memory accelerator. The accelerator is faster for one operation but slower for two operations.)

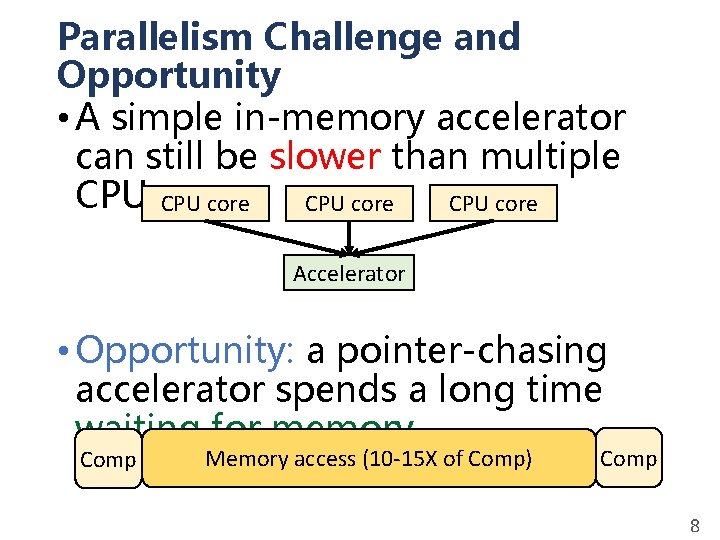

Parallelism Challenge and Opportunity

- Challenge: a simple in-memory accelerator can still be slower than multiple CPU cores
- Opportunity: a pointer-chasing accelerator spends a long time waiting for memory, because each memory access takes 10-15X as long as the computation (Comp) around it

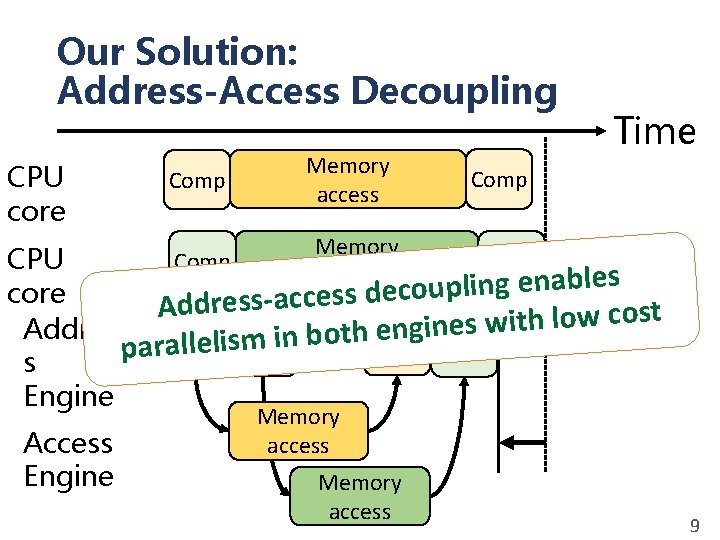

Our Solution: Address-Access Decoupling

- Address-access decoupling enables parallelism in both engines at low cost

(Figure: a CPU core alternates computation and memory access. IMPICA splits this work between an address engine, which performs the computation, and an access engine, which performs the memory accesses.)
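The benefit of decoupling can be illustrated with a toy timing model. This is a sketch under stated assumptions, not IMPICA's actual pipeline. Every traversal step costs `comp` cycles in the address engine and then `mem` cycles of memory access. In the coupled case, one engine stalls on each access. In the decoupled case, the address engine switches to whichever traversal's data arrives first. We assume the access engine can keep any number of requests in flight. The 1:10 ratio in the example comes from the slide's "10-15X of Comp".

```python
import heapq


def coupled_time(n_traversals: int, steps: int, comp: int, mem: int) -> int:
    """One engine computes, then stalls on its own memory access, every step."""
    return n_traversals * steps * (comp + mem)


def decoupled_time(n_traversals: int, steps: int, comp: int, mem: int) -> int:
    """Address engine computes; the access engine overlaps outstanding accesses.

    After issuing a traversal's memory request, the address engine picks the
    traversal whose data is ready earliest instead of waiting.
    Assumes unlimited outstanding requests in the access engine.
    """
    # Heap entries: (time the traversal's data is ready, traversal id, steps left).
    ready = [(0, tid, steps) for tid in range(n_traversals)]
    heapq.heapify(ready)
    engine_free = 0  # when the address engine can start its next computation
    finish = 0
    while ready:
        data_ready, tid, left = heapq.heappop(ready)
        start = max(engine_free, data_ready)
        engine_free = start + comp  # address computation in the address engine
        done = engine_free + mem    # memory access handled by the access engine
        if left > 1:
            heapq.heappush(ready, (done, tid, left - 1))
        else:
            finish = max(finish, done)
    return finish


print(coupled_time(4, 3, 1, 10), decoupled_time(4, 3, 1, 10))  # 132 36
```

With a single traversal, both models take 33 cycles. Decoupling only pays off when several independent pointer-chasing requests are available to interleave, which is exactly the multi-request case where a simple accelerator loses to multiple CPU cores.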

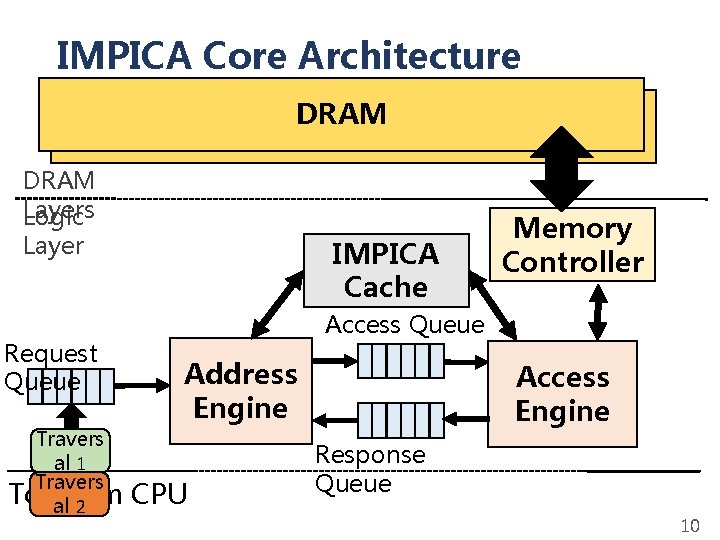

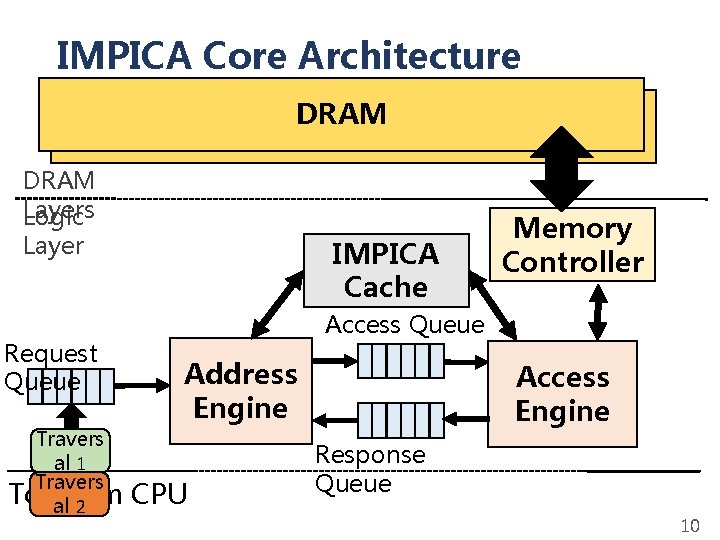

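IMPICA Core Architecture

(Figure: the IMPICA core sits in the logic layer beneath the DRAM layers. Its components are a request queue to/from the CPU, an address engine that interleaves multiple traversals (Traversal 1, Traversal 2), an access queue, an access engine, a response queue, the IMPICA cache, and the memory controller.)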

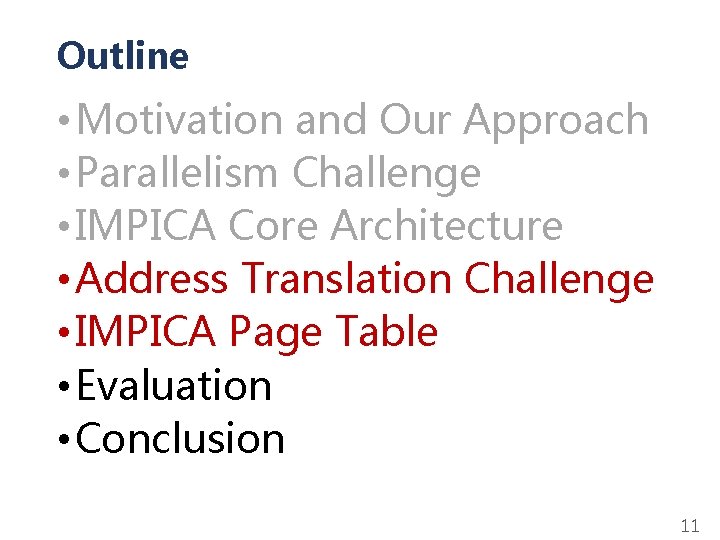


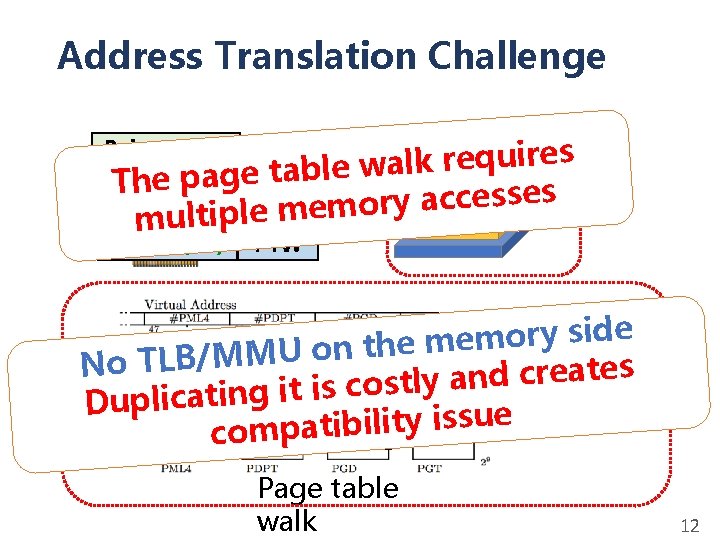

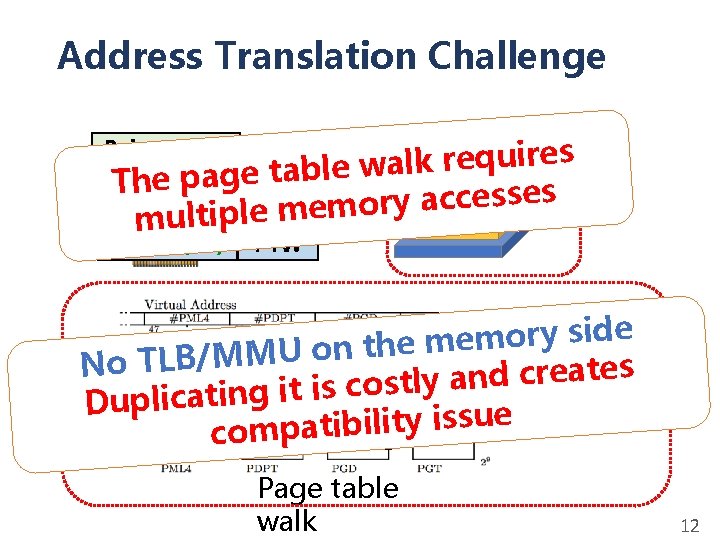

Address Translation Challenge

- The page table walk (PTW) requires multiple memory accesses
- There is no TLB/MMU on the memory side
- Duplicating the TLB/MMU on the memory side is costly and creates compatibility issues

(Figure: a pointer's virtual address (VA) must be translated to a physical address (PA) by the TLB/MMU, which performs page table walks.)
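To show why a page table walk costs several memory accesses, here is a toy radix page table in Python. We assume an x86-64-style four-level table with 4 KB pages and 512-entry tables; the slide does not name an ISA. Memory is modeled as a dict keyed by (table base, index). The helper names and the page-table physical addresses are hypothetical.

```python
from itertools import count

PAGE_SHIFT = 12  # 4 KB pages
LEVEL_BITS = 9   # 512 entries per table
LEVELS = 4       # assumed x86-64-style four-level radix page table


def index_at(vaddr: int, level: int) -> int:
    """Table index that vaddr uses at a given level (3 = root, 0 = leaf)."""
    return (vaddr >> (PAGE_SHIFT + LEVEL_BITS * level)) & ((1 << LEVEL_BITS) - 1)


def map_page(memory: dict, root: int, vaddr: int, frame: int, alloc) -> None:
    """Install vaddr -> frame, allocating intermediate tables on demand."""
    table = root
    for level in range(LEVELS - 1, 0, -1):
        key = (table, index_at(vaddr, level))
        if key not in memory:
            memory[key] = next(alloc)
        table = memory[key]
    memory[(table, index_at(vaddr, 0))] = frame


def walk(memory: dict, root: int, vaddr: int) -> tuple[int, int]:
    """Translate vaddr; return (physical address, page-table memory accesses)."""
    table, accesses = root, 0
    for level in range(LEVELS - 1, -1, -1):
        table = memory[(table, index_at(vaddr, level))]  # one memory access per level
        accesses += 1
    return table + (vaddr & ((1 << PAGE_SHIFT) - 1)), accesses


memory: dict = {}
tables = count(0x1000, 0x1000)  # hypothetical physical addresses of page-table pages
map_page(memory, 0, 0x7F1234567000, 0x42000, tables)
paddr, accesses = walk(memory, 0, 0x7F1234567ABC)
print(hex(paddr), accesses)  # 0x42abc 4
```

In this model, a pointer-chasing traversal that misses in the TLB on every hop pays four extra dependent accesses per node. This is the cost an in-memory accelerator without its own translation hardware would face.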

Our Solution: IMPICA Page Table

- Completely decouple the page table of IMPICA from the page table of the CPUs
- Map linked data structures into IMPICA regions
- The IMPICA page table is a partial-to-any mapping

(Figure: the CPU page table maps virtual pages across the whole virtual address space to physical pages. The IMPICA page table maps only the virtual pages inside IMPICA regions to physical pages.)

![IMPICA Page Table Mechanism Virtual Address Bit 47 41 Region Table Bit 40 21 IMPICA Page Table: Mechanism Virtual Address Bit [47: 41] Region Table Bit [40: 21]](https://slidetodoc.com/presentation_image_h/cf2bb5d65a1f6773a68cd7833486ce61/image-14.jpg)

IMPICA Page Table: Mechanism

- Virtual address bits [47:41] index the region table
- Bits [40:21] index a flat page table, each of whose entries covers 2 MB
- Bits [20:12] index a small page table (4 KB)
- Bits [11:0] are added to the result to form the physical address
- The flat page table saves one memory access
- The tiny region table is almost always in the cache
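The bit-field walk above can be sketched in Python as follows. This is an illustrative model under stated assumptions, not IMPICA's hardware. We assume the region table is cache-resident, as the slide suggests, so only the flat-table and small-table lookups count as memory accesses. We also assume every table is a plain indexed array, modeled here as dicts. `ImpicaPageTable`, `map`, and `translate` are hypothetical names.

```python
def bits(value: int, hi: int, lo: int) -> int:
    """Extract the inclusive bit field value[hi:lo]."""
    return (value >> lo) & ((1 << (hi - lo + 1)) - 1)


class ImpicaPageTable:
    """Toy model of the region -> flat -> small table walk (hypothetical names)."""

    def __init__(self) -> None:
        # Region id (bits [47:41]) -> flat table -> small table -> physical frame.
        self.region_table: dict[int, dict[int, dict[int, int]]] = {}

    def map(self, vaddr: int, frame: int) -> None:
        flat = self.region_table.setdefault(bits(vaddr, 47, 41), {})
        small = flat.setdefault(bits(vaddr, 40, 21), {})  # one flat entry covers 2 MB
        small[bits(vaddr, 20, 12)] = frame                # one small entry maps a 4 KB page

    def translate(self, vaddr: int) -> tuple[int, int]:
        """Return (physical address, page-table memory accesses)."""
        accesses = 0
        flat = self.region_table[bits(vaddr, 47, 41)]  # tiny region table: assumed cache hit
        small = flat[bits(vaddr, 40, 21)]              # memory access: flat page table
        accesses += 1
        frame = small[bits(vaddr, 20, 12)]             # memory access: small page table
        accesses += 1
        return frame + bits(vaddr, 11, 0), accesses


pt = ImpicaPageTable()
pt.map(0x7F1234567000, 0x42000)
paddr, accesses = pt.translate(0x7F1234567ABC)
print(hex(paddr), accesses)  # 0x42abc 2
```

A conventional four-level radix walk needs four dependent accesses. Here, one flat level replaces two radix levels by indexing 20 bits at once, and the region lookup usually hits in the cache.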


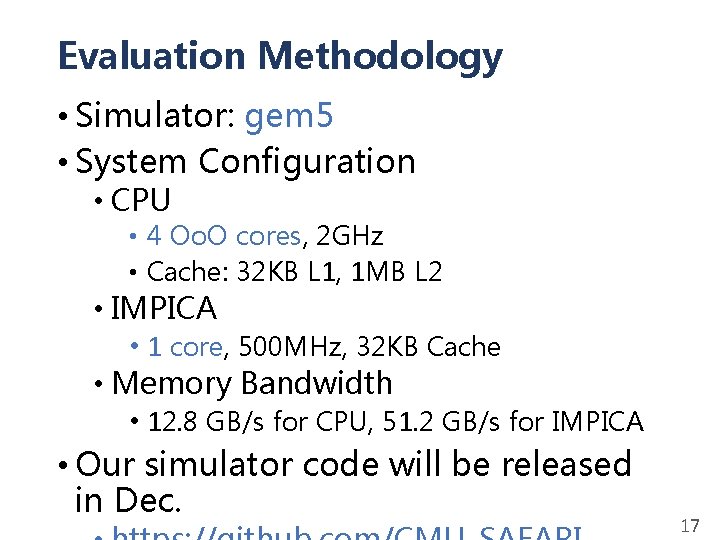

Evaluated Workloads

- Microbenchmarks
  - Linked list (from the Olden benchmark)
  - Hash table (from Memcached)
  - B-tree (from DBx1000)
- Application
  - DBx1000 (with the TPC-C benchmark)

Evaluation Methodology

- Simulator: gem5
- System configuration
  - CPU: 4 OoO cores, 2 GHz; caches: 32 KB L1, 1 MB L2
  - IMPICA: 1 core, 500 MHz, 32 KB cache
  - Memory bandwidth: 12.8 GB/s for the CPU, 51.2 GB/s for IMPICA
- Our simulator code will be released in Dec.

Result: Microbenchmark Performance

(Figure: speedup of Baseline + extra 128 KB L2 and of IMPICA on Linked List, Hash Table, and B-Tree. IMPICA's speedups are 1.9X, 1.3X, and 1.2X, respectively.)

Result: Database Performance

| Configuration | Database throughput | Database latency |
|---|---|---|
| Baseline + extra 128 KB L2 | +2% | -0% |
| Baseline + extra 1 MB L2 | +5% | -4% |
| IMPICA | +16% | -13% |

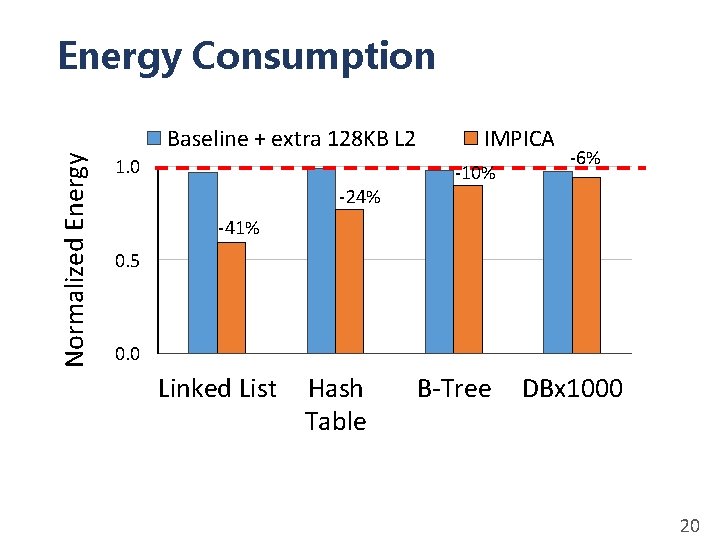

Energy Consumption

(Figure: normalized energy of Baseline + extra 128 KB L2 and of IMPICA on Linked List, Hash Table, B-Tree, and DBx1000. The labeled IMPICA reductions are -24%, -10%, -6%, and -41%.)

More in the Paper

- Interface and design considerations
  - CPU interface and programming model
  - Page table management
  - Cache coherence
- Area and power overhead analysis
- Sensitivity to IMPICA page table

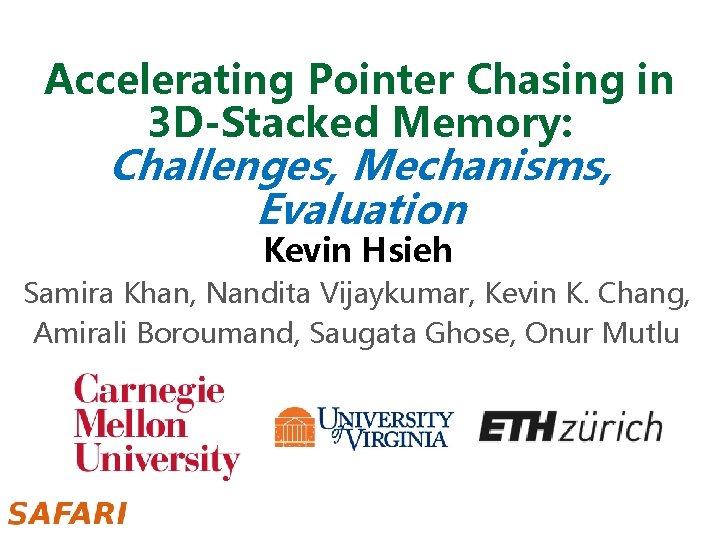

Conclusion

- Performing pointer chasing inside main memory can greatly speed up the traversal of linked data structures
- Challenges: the parallelism challenge and the address translation challenge
- Our solution: In-Memory PoInter Chasing Accelerator (IMPICA)
  - Address-access decoupling: enabling parallelism at low cost
  - IMPICA page table: a low-cost page table structure
- Key results:
  - 1.2X-1.9X speedup for pointer chasing operations, +16% database throughput
  - 6%-41% reduction in energy consumption


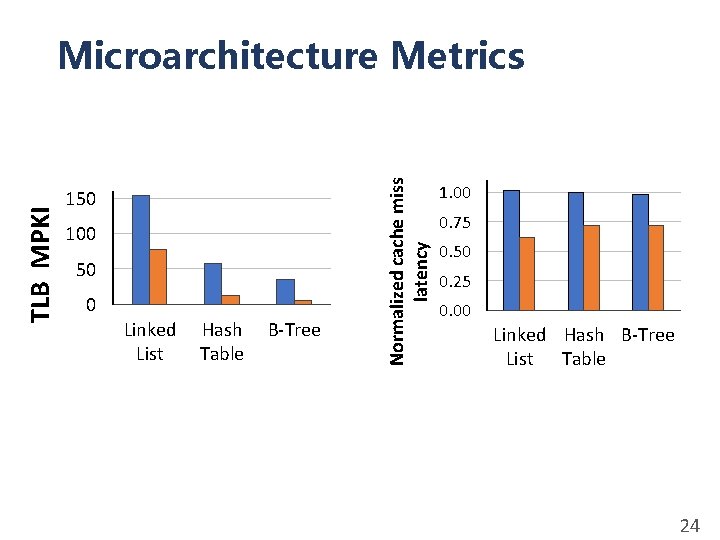

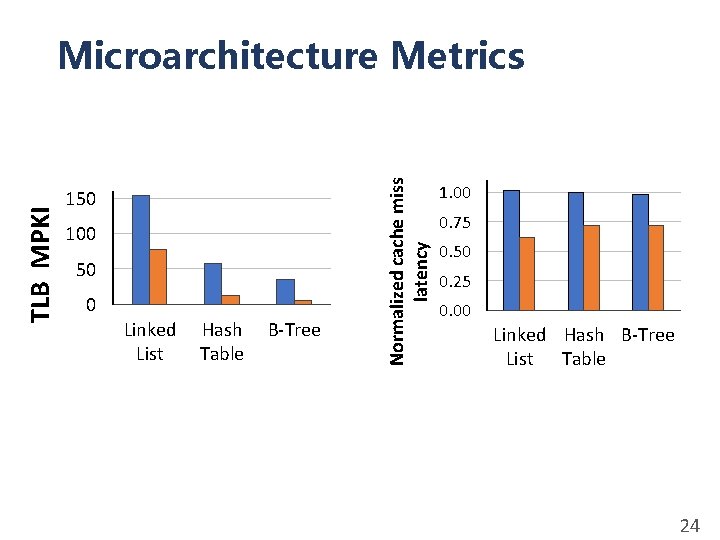

Microarchitecture Metrics

(Figure: TLB MPKI and normalized cache miss latency for Linked List, Hash Table, and B-Tree.)

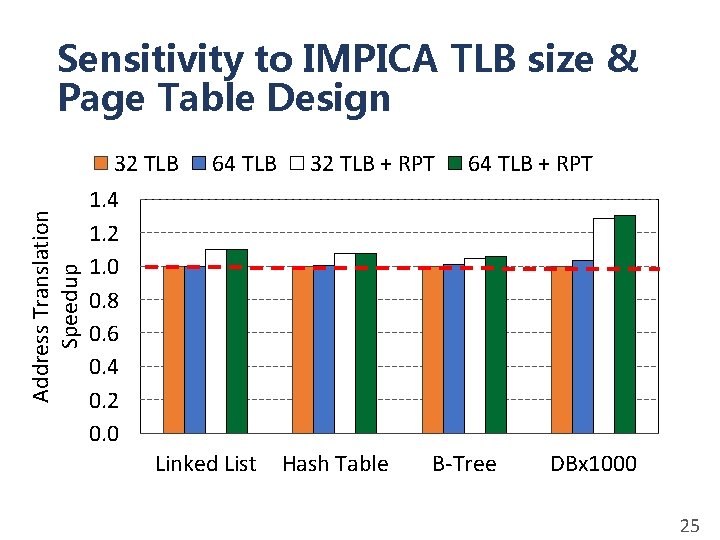

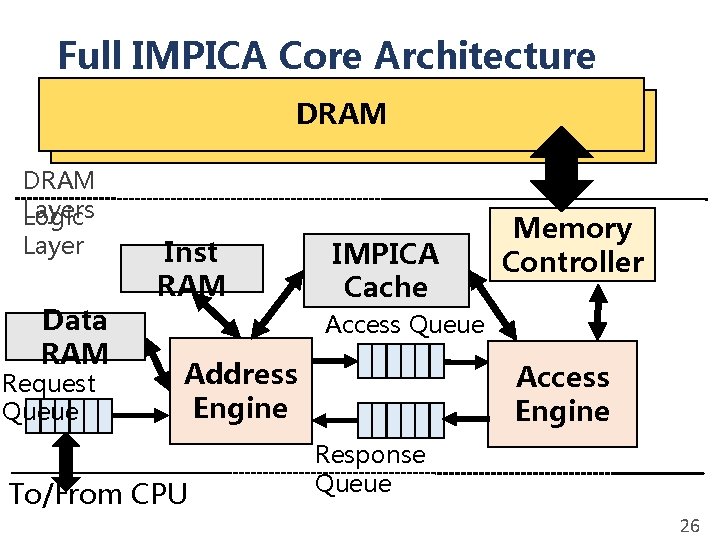

Sensitivity to IMPICA TLB Size and Page Table Design

(Figure: address translation speedup for Linked List, Hash Table, B-Tree, and DBx1000 under four configurations: 32 TLB, 64 TLB, 32 TLB + RPT, and 64 TLB + RPT.)

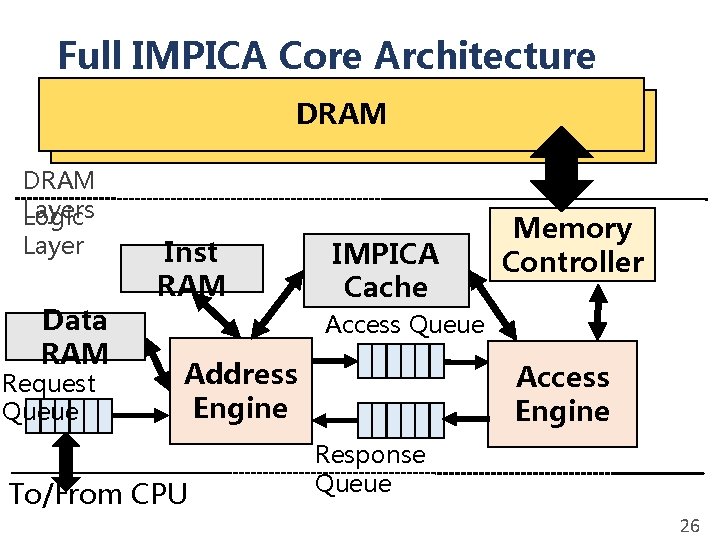

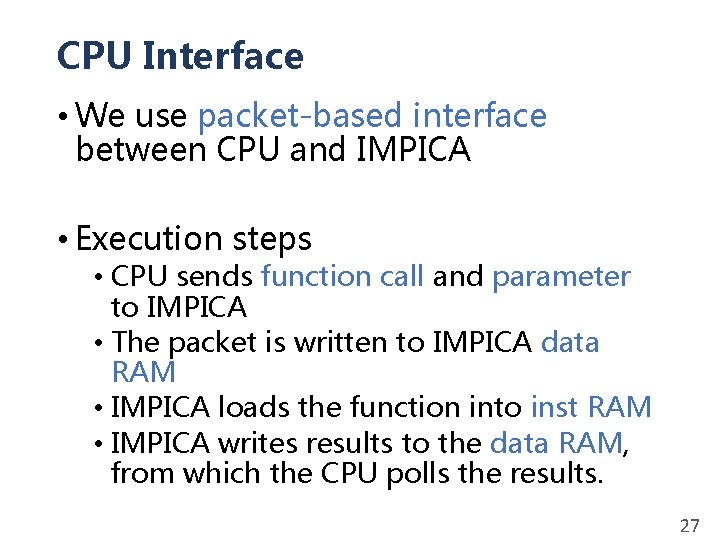

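Full IMPICA Core Architecture

(Figure: the full IMPICA core in the logic layer beneath the DRAM layers. It adds a data RAM and an instruction RAM to the request queue, address engine, access queue, access engine, response queue, IMPICA cache, and memory controller, and it connects to/from the CPU.)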

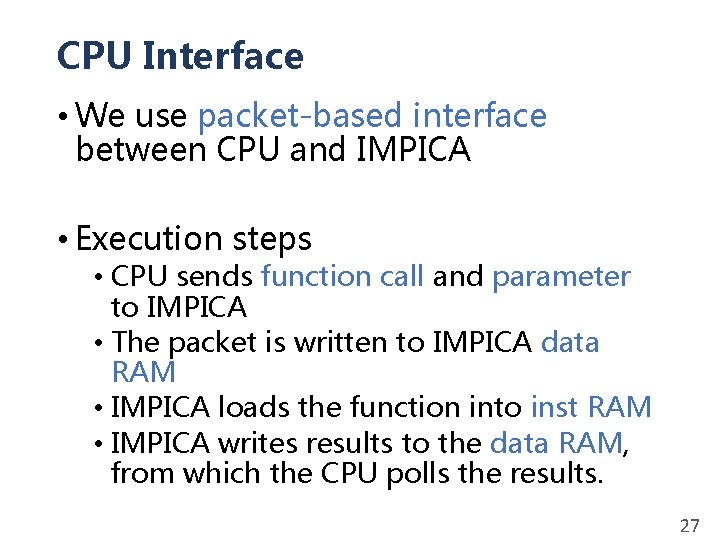

CPU Interface

- We use a packet-based interface between the CPU and IMPICA
- Execution steps:
  - The CPU sends the function call and its parameters to IMPICA
  - The packet is written to the IMPICA data RAM
  - IMPICA loads the function into the instruction RAM
  - IMPICA writes the results to the data RAM, from which the CPU polls them
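The four execution steps can be traced with a single-threaded Python model of the handshake. Everything here is hypothetical: the `Packet` fields, the `ImpicaModel` class, and the `program_store` dictionary are stand-ins for hardware. Function loading is modeled as looking up a Python callable by name.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class Packet:
    func_name: str  # which offloaded function to run (hypothetical field)
    args: tuple     # call parameters


@dataclass
class ImpicaModel:
    """Toy model of the packet-based CPU/IMPICA handshake; all names are hypothetical."""
    program_store: dict[str, Callable[..., Any]]  # functions IMPICA can load
    data_ram: dict[str, Any] = field(default_factory=dict)
    inst_ram: Optional[Callable[..., Any]] = None

    def receive(self, packet: Packet) -> None:
        # The CPU's packet (function call + parameters) is written to data RAM.
        self.data_ram["request"] = packet
        self.data_ram["done"] = False

    def run(self) -> None:
        packet = self.data_ram.get("request")
        if packet is None or self.data_ram["done"]:
            return
        # Load the requested function into instruction RAM.
        self.inst_ram = self.program_store[packet.func_name]
        # Execute, then write the result back into data RAM.
        self.data_ram["result"] = self.inst_ram(*packet.args)
        self.data_ram["done"] = True

    def poll(self) -> Optional[Any]:
        """What the CPU sees when it polls data RAM: None until the result is ready."""
        return self.data_ram["result"] if self.data_ram.get("done") else None


def list_length(head) -> int:
    """Pointer-chasing kernel to offload; list nodes are (value, next) tuples."""
    n = 0
    while head is not None:
        n, head = n + 1, head[1]
    return n


impica = ImpicaModel(program_store={"list_length": list_length})
impica.receive(Packet("list_length", ((1, (2, (3, None))),)))
print(impica.poll())  # None
impica.run()
print(impica.poll())  # 3
```

Polling keeps the CPU side simple: the CPU only reads a done flag and a result slot in data RAM, while IMPICA runs the traversal next to memory.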

Programming Model

- An IMPICA program is written as a function in the application code and marked with a compiler directive
- The compiler compiles these functions into IMPICA instructions and wraps the function calls with communication code

Page Table Management

- The application allocates memory for its linked data structures with a special API
- The OS reserves a portion of the virtual address space as IMPICA regions
- The OS keeps the CPU page table and the IMPICA page table coherent in the page fault handler
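The division of labor between the allocation API, the reserved region, and the page fault handler can be sketched with a toy OS model in Python. The class `ToyOS`, the bump allocator `impica_alloc`, the region base address, and the frame numbering are all hypothetical, and unmapping/remapping is not modeled. The key point is that a fault on a page inside the IMPICA region updates both page tables. Pages outside the region appear only in the CPU page table, which is the "partial" half of the partial-to-any mapping.

```python
PAGE = 4096


class ToyOS:
    """Hypothetical OS model that keeps the CPU and IMPICA page tables consistent."""

    def __init__(self, region_base: int, region_size: int) -> None:
        self.region = range(region_base, region_base + region_size)  # reserved IMPICA region
        self.next_free = region_base
        self.next_frame = 0x100000           # hypothetical first free physical frame
        self.cpu_pt: dict[int, int] = {}     # virtual page -> physical page
        self.impica_pt: dict[int, int] = {}  # only pages inside the IMPICA region

    def impica_alloc(self, size: int) -> int:
        """Stand-in for the special allocation API: bump-allocate inside the region."""
        if self.next_free + size > self.region.stop:
            raise MemoryError("IMPICA region exhausted")
        addr, self.next_free = self.next_free, self.next_free + size
        return addr

    def page_fault(self, vaddr: int) -> None:
        """Map the faulting page; mirror the mapping if it lies in the IMPICA region."""
        vpage = vaddr // PAGE * PAGE
        frame, self.next_frame = self.next_frame, self.next_frame + PAGE
        self.cpu_pt[vpage] = frame
        if vaddr in self.region:  # keep the IMPICA page table coherent
            self.impica_pt[vpage] = frame


os_ = ToyOS(region_base=0x400000000000, region_size=1 << 30)
node = os_.impica_alloc(64)  # a linked-data-structure node
os_.page_fault(node)
os_.page_fault(0x1000)       # ordinary page outside the region
print(len(os_.cpu_pt), len(os_.impica_pt))  # 2 1
```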

IMPICA Page Table Size

- Region table: 4 entries (covers a 2 TB memory range), 68 B
- Flat page table (each): 2^20 entries, 8 MB
- Small page table (each): 2^9 entries, 4 KB
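The flat and small table sizes follow from the virtual-address bit fields used to index them. The short Python check below assumes 8-byte (64-bit) page-table entries, which the slide does not state, and the helper name `table_bytes` is hypothetical. It also computes the 2 MB span covered by one flat-table entry, which corresponds to bits [20:0].

```python
ENTRY_BYTES = 8  # assumption: 64-bit page-table entries (not stated on the slide)


def table_bytes(index_hi: int, index_lo: int, entry_bytes: int = ENTRY_BYTES) -> int:
    """Size of a table indexed directly by virtual-address bits [index_hi:index_lo]."""
    entries = 1 << (index_hi - index_lo + 1)
    return entries * entry_bytes


flat = table_bytes(40, 21)   # 2**20 entries
small = table_bytes(20, 12)  # 2**9 entries
flat_entry_span = 1 << 21    # bytes covered by one flat entry (bits [20:0])
print(flat // 2**20, "MB;", small // 2**10, "KB")  # 8 MB; 4 KB
print(flat_entry_span // 2**20, "MB per flat entry")  # 2 MB per flat entry
```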

Handling of Multiple Memory Stacks

- The OS knows the IMPICA regions because of our page table management
- The OS always maps the IMPICA regions of the same application, including the corresponding IMPICA page table, into the same memory stack

Cache Coherence

- We execute every function that operates on the IMPICA regions in the accelerator
- This can be extended with more advanced cache coherence mechanisms

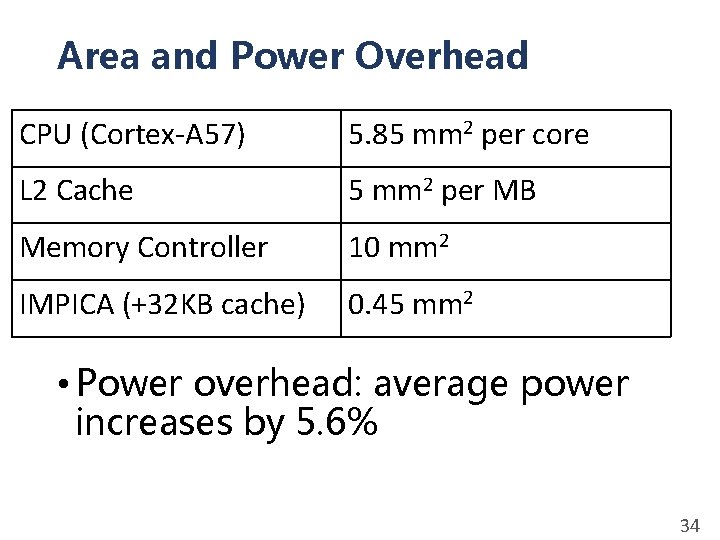

Limit of Parallelism

- The parallelism of IMPICA is limited by:
  - Data RAM size (for call stacks)
  - Memory access time vs. address computation time
  - The size of the queues
- Each IMPICA core can easily parallelize 10-15 pointer chasing requests
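A back-of-the-envelope Python sketch combines the three limits by taking their minimum. It rests on simplifying assumptions: each traversal needs `comp` cycles of address computation followed by `mem` cycles of memory access, and each in-flight traversal owns one call stack in data RAM. The queue size, data RAM size, and per-call stack size in the example are hypothetical values, not IMPICA's configuration.

```python
import math


def max_useful_parallelism(comp: int, mem: int, queue_entries: int,
                           data_ram_bytes: int, stack_bytes_per_call: int) -> int:
    """Upper bound on traversals one core can usefully keep in flight.

    latency_bound: traversals needed so the address engine never idles while
    each traversal waits `mem` cycles after every `comp` cycles of work.
    """
    latency_bound = math.ceil((comp + mem) / comp)
    ram_bound = data_ram_bytes // stack_bytes_per_call  # one call stack per traversal
    return min(latency_bound, queue_entries, ram_bound)


print(max_useful_parallelism(1, 10, 16, 4096, 256))  # 11
print(max_useful_parallelism(1, 15, 8, 4096, 256))   # 8 (queue-limited)
```

With a memory-to-compute ratio of 10-15X, the latency bound in this model is roughly 11-16 traversals, in line with the 10-15 requests quoted above. Beyond that point, extra requests only wait in the queues.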

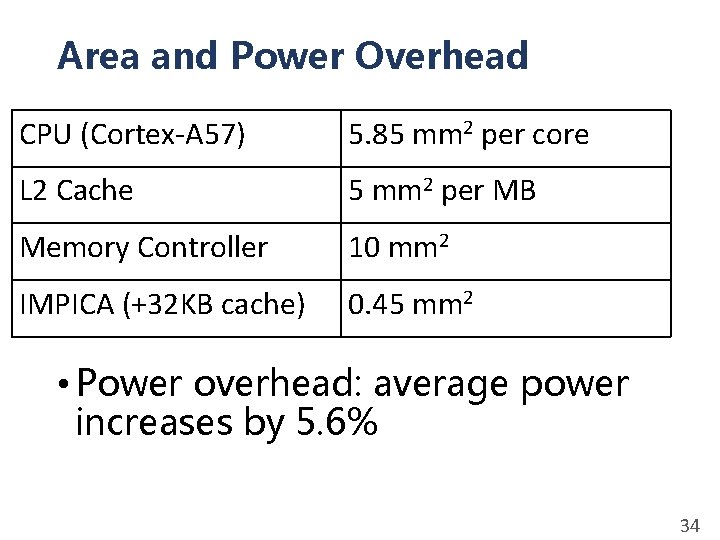

Area and Power Overhead

| Component | Area |
|---|---|
| CPU (Cortex-A57) | 5.85 mm² per core |
| L2 cache | 5 mm² per MB |
| Memory controller | 10 mm² |
| IMPICA (+32 KB cache) | 0.45 mm² |

- Power overhead: average power increases by 5.6%