Accelerating Machine Learning Applications on Graphics Processors

- Slides: 22

Accelerating Machine Learning Applications on Graphics Processors
Narayanan Sundaram and Bryan Catanzaro
Presented by Narayanan Sundaram

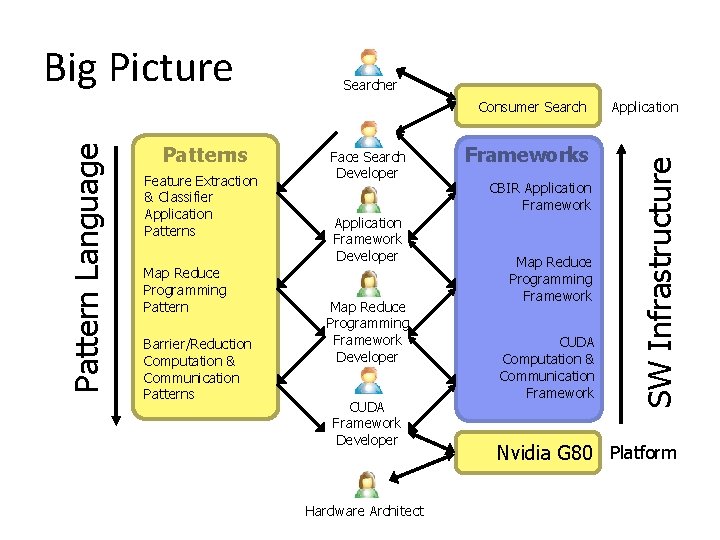

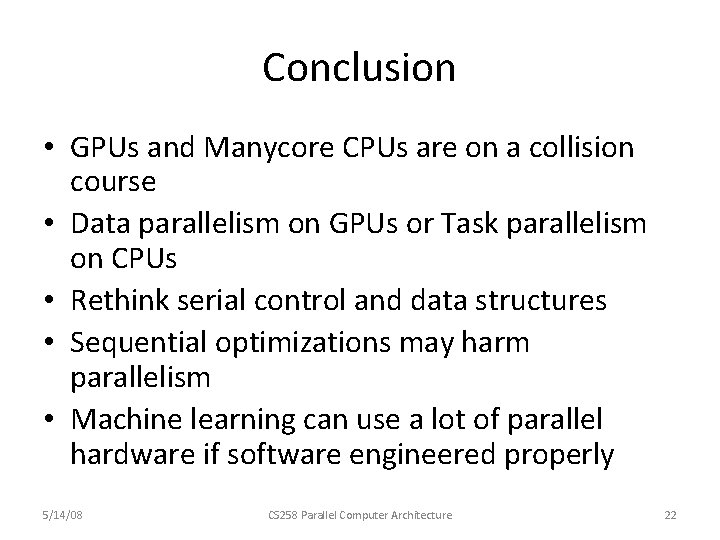

Big Picture (diagram). Pattern language: search patterns; feature extraction & classifier application patterns; Map Reduce programming pattern; barrier/reduction computation & communication patterns. Frameworks (application SW infrastructure): CBIR application framework, Map Reduce programming framework, CUDA computation & communication framework. Application: Face Search. Roles: searcher/consumer, developer, application framework developer, Map Reduce programming framework developer, CUDA framework developer, hardware architect. Platform: NVIDIA G80.

GPUs as proxy for manycore
• GPUs are interesting architectures to program
• They are transitioning from highly specialized pipelines to general-purpose processors
• The only way to get performance from GPUs is through parallelism (no caching, branch prediction, prefetching, etc.)
• A single call can launch millions of threads
5/14/08 CS 258 Parallel Computer Architecture

GPUs are not for everyone
• Memory coalescing is really important
• Irregular memory accesses, even to the local store, are discouraged: local-memory bank conflicts cost up to 30% in performance on some apps
• You cannot forget that it is a SIMD machine
• Memory consistency is non-existent, and there is no synchronization between SMs
• Threads are scheduled by hardware
• Each kernel call carries about 20 µs of overhead (20,000 instructions at 1 GHz)
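The bank-conflict penalty above can be estimated with a simple model. This minimal sketch assumes a G80-style local store with 16 banks of 32-bit words and conflicts resolved per half-warp of 16 threads (both parameters are our assumption, not stated on the slide), and treats threads reading the identical word as a free broadcast, which simplifies the real hardware rules. The names `conflict_degree` and `strided_pattern` are hypothetical.

```python
NUM_BANKS = 16   # assumed G80 value: 16 banks of 32-bit words
HALF_WARP = 16   # assumed: conflicts are resolved per half-warp of 16 threads


def conflict_degree(word_indices):
    """Number of serialized passes one half-warp's local-store access needs.

    word_indices[t] is the 32-bit word that thread t reads. Threads that hit
    the same bank at different words serialize; threads reading the same
    word are treated as a broadcast and cost nothing extra (a simplification).
    """
    words_per_bank = {}
    for w in word_indices:
        words_per_bank.setdefault(w % NUM_BANKS, set()).add(w)
    return max(len(words) for words in words_per_bank.values())


def strided_pattern(stride):
    """Thread t reads word t * stride, e.g. walking a column of a row-major tile."""
    return [t * stride for t in range(HALF_WARP)]


for stride in (1, 2, 4, 16, 17):
    print(f"stride {stride:2d}: {conflict_degree(strided_pattern(stride))}-way")
```

Under this model a stride of 16 serializes all 16 threads, while an odd stride (for instance, padding a 16-wide tile to 17 columns) is conflict-free.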

NVIDIA G80 Architecture

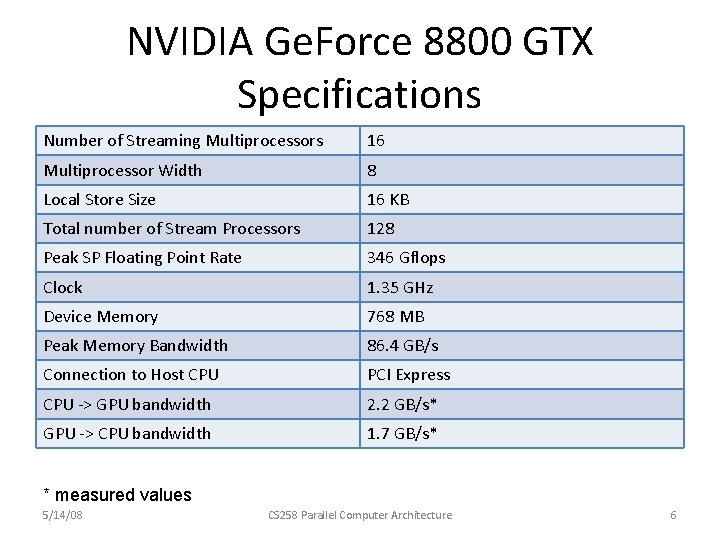

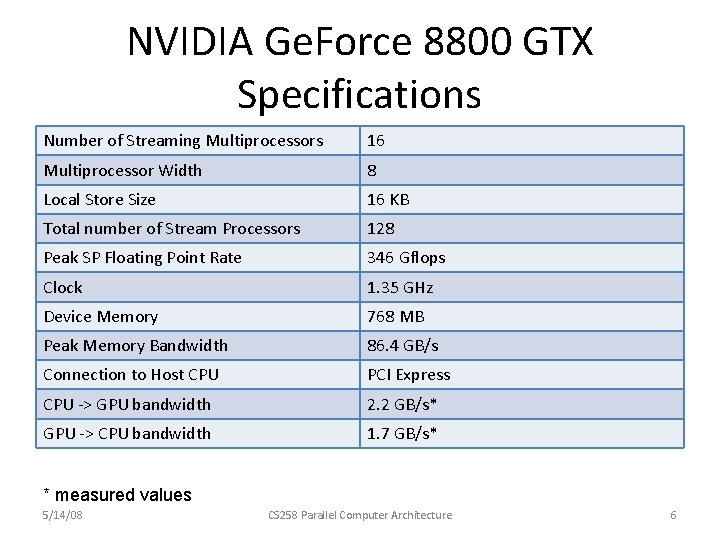

NVIDIA GeForce 8800 GTX Specifications

| Specification | Value |
|---|---|
| Number of Streaming Multiprocessors | 16 |
| Multiprocessor Width | 8 |
| Local Store Size | 16 KB |
| Total number of Stream Processors | 128 |
| Peak SP Floating Point Rate | 346 Gflops |
| Clock | 1.35 GHz |
| Device Memory | 768 MB |
| Peak Memory Bandwidth | 86.4 GB/s |
| Connection to Host CPU | PCI Express |
| CPU -> GPU bandwidth | 2.2 GB/s (measured) |
| GPU -> CPU bandwidth | 1.7 GB/s (measured) |

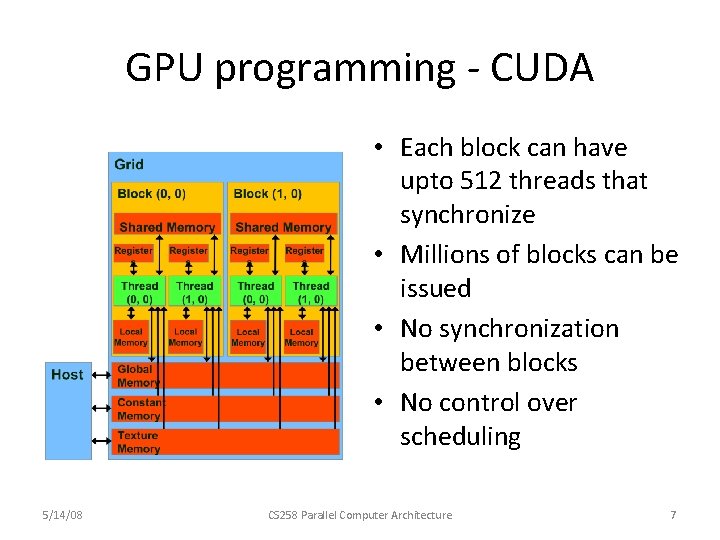

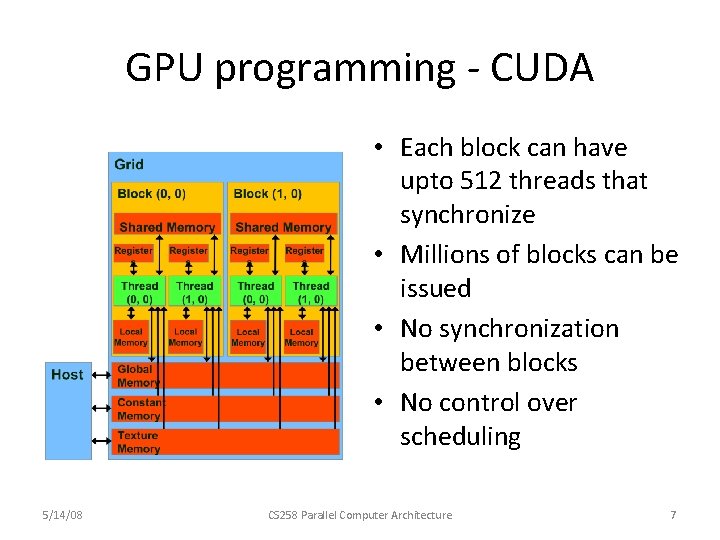
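The numbers in the specification table are consistent with each other, and two derived ratios are useful later when asking whether a kernel is compute or memory bound. This sketch assumes each stream processor completes one multiply-add (2 flops) per cycle; that assumption is ours, chosen because it reproduces the table's 346 Gflops.

```python
# Figures taken from the GeForce 8800 GTX specification table.
NUM_SMS = 16
SM_WIDTH = 8                 # stream processors per multiprocessor
CLOCK_GHZ = 1.35
FLOPS_PER_SP_PER_CYCLE = 2   # assumed: one multiply-add per cycle
PEAK_MEM_BW_GBS = 86.4
HOST_TO_GPU_GBS = 2.2        # measured CPU -> GPU bandwidth


def peak_gflops():
    """Peak single-precision rate: 128 stream processors at 1.35 GHz."""
    stream_processors = NUM_SMS * SM_WIDTH
    return stream_processors * CLOCK_GHZ * FLOPS_PER_SP_PER_CYCLE


def machine_balance():
    """Flops the GPU can perform per byte read from device memory at peak."""
    return peak_gflops() / PEAK_MEM_BW_GBS


def transfer_penalty():
    """How many times slower host-to-GPU copies are than device memory reads."""
    return PEAK_MEM_BW_GBS / HOST_TO_GPU_GBS


print(f"{peak_gflops():.1f} Gflops, {machine_balance():.1f} flops/byte, "
      f"PCIe {transfer_penalty():.0f}x slower than device memory")
```

A kernel therefore needs roughly 4 flops per byte of device-memory traffic before it becomes compute bound, and every byte that crosses PCI Express costs about 39 times as much time as an on-board read, which is why data should stay resident on the GPU.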

GPU programming - CUDA
• Each block can have up to 512 threads, which can synchronize with each other
• Millions of blocks can be issued
• There is no synchronization between blocks
• There is no control over scheduling

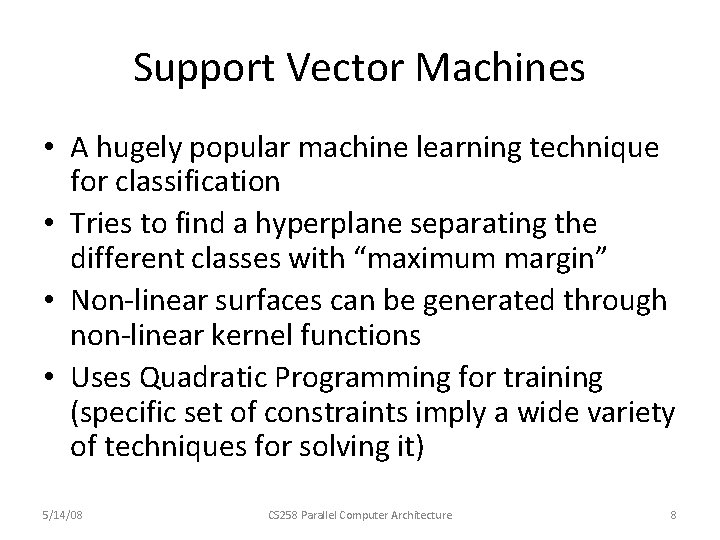
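The block/thread model above can be sketched in a few lines. This Python model is illustrative only: `launch_config` and `global_ids` are hypothetical helpers, the default of 256 threads per block is an arbitrary choice under the 512-thread limit, and the index formula mirrors the usual CUDA idiom `blockIdx.x * blockDim.x + threadIdx.x`.

```python
MAX_THREADS_PER_BLOCK = 512  # per-block limit from the slide


def launch_config(n, threads_per_block=256):
    """Return (blocks, threads_per_block) so that each of n items gets a thread."""
    if not 0 < threads_per_block <= MAX_THREADS_PER_BLOCK:
        raise ValueError("threads_per_block must be between 1 and 512")
    blocks = (n + threads_per_block - 1) // threads_per_block  # ceiling division
    return blocks, threads_per_block


def global_ids(blocks, threads_per_block):
    """Yield the index each thread computes (blockIdx * blockDim + threadIdx).

    Blocks may run in any order and cannot synchronize with each other, so no
    block may depend on another block's output within the same kernel call.
    Threads whose index is >= n must simply do nothing.
    """
    for block_idx in range(blocks):
        for thread_idx in range(threads_per_block):
            yield block_idx * threads_per_block + thread_idx


blocks, tpb = launch_config(1_000_000)
print(blocks, tpb)
```

Covering a million items this way takes 3,907 blocks of 256 threads; the last block has 192 threads past the end of the data that must be masked off with a bounds check.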

Support Vector Machines
• A hugely popular machine learning technique for classification
• Tries to find a hyperplane that separates the classes with "maximum margin"
• Non-linear decision surfaces can be generated through non-linear kernel functions
• Training is a Quadratic Programming problem; its specific set of constraints admits a wide variety of solution techniques

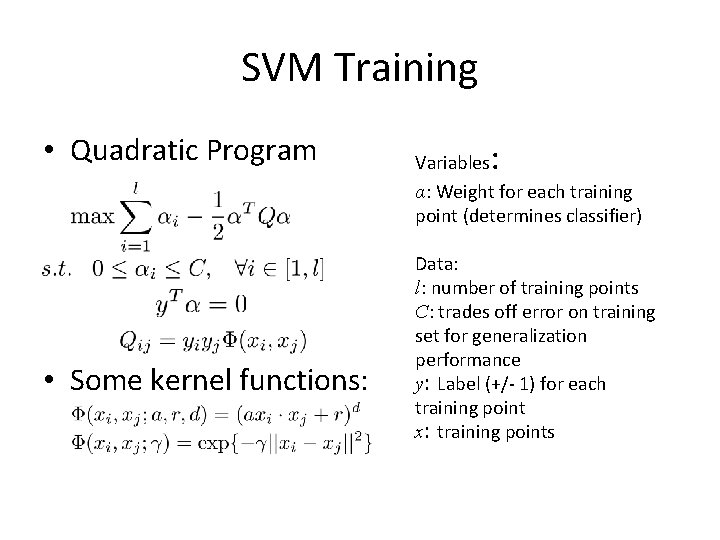

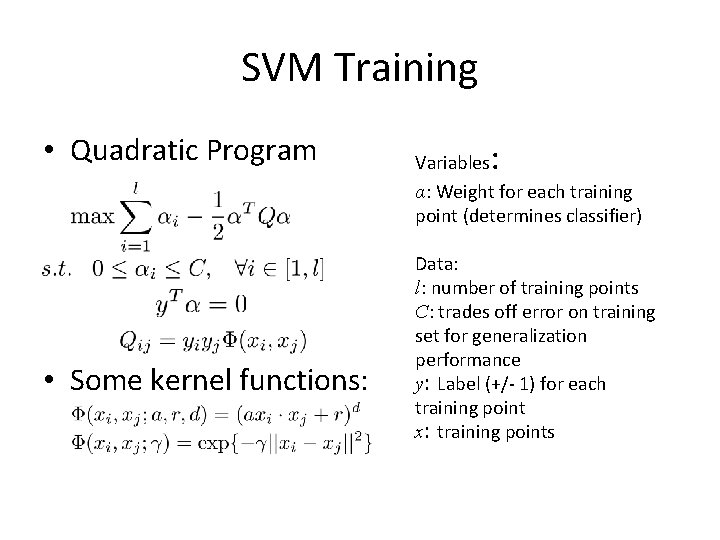

SVM Training
• Quadratic Program
• Some kernel functions
Variables:
• α: weight for each training point (determines the classifier)
Data:
• l: number of training points
• C: trades off error on the training set against generalization performance
• y: label (±1) for each training point
• x: training points

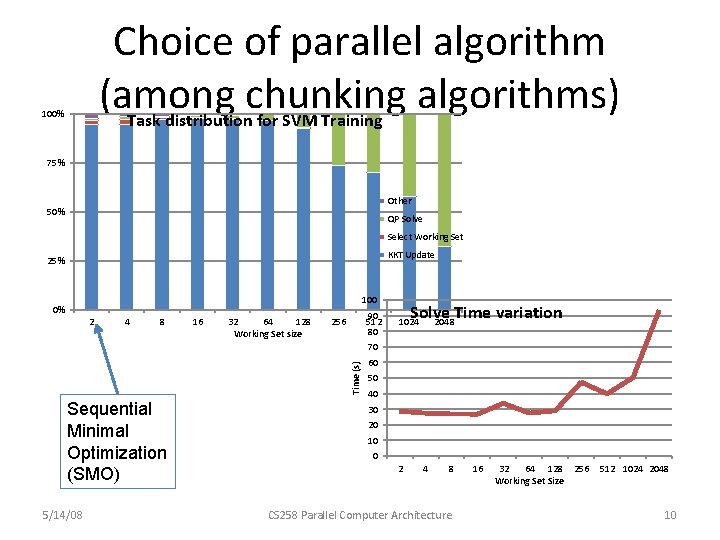

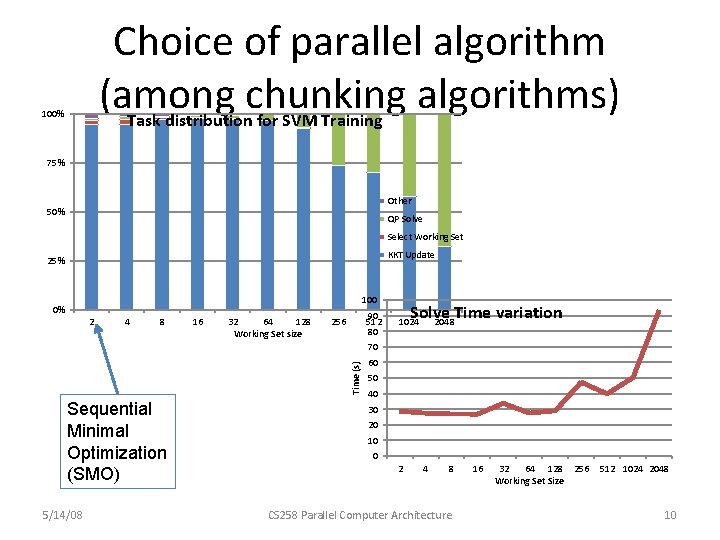
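For reference, the variables and data listed above match the standard soft-margin SVM dual quadratic program, which we assume is the QP shown on the slide. The kernels are common choices in the literature; the kernel parameters a, r, d and γ are introduced here for illustration and are not defined on the slide.

```latex
\begin{aligned}
\max_{\alpha}\quad & F(\alpha) = \sum_{i=1}^{l} \alpha_i
  - \frac{1}{2} \sum_{i=1}^{l} \sum_{j=1}^{l} y_i y_j \alpha_i \alpha_j \, \Phi(x_i, x_j) \\
\text{subject to}\quad & 0 \le \alpha_i \le C, \qquad i = 1, \dots, l \\
 & \sum_{i=1}^{l} y_i \alpha_i = 0 \\[6pt]
\text{Linear:}\quad & \Phi(x_i, x_j) = x_i \cdot x_j \\
\text{Polynomial:}\quad & \Phi(x_i, x_j) = (a \, x_i \cdot x_j + r)^{d} \\
\text{Gaussian:}\quad & \Phi(x_i, x_j) = \exp\left(-\gamma \lVert x_i - x_j \rVert^2\right) \\
\text{Sigmoid:}\quad & \Phi(x_i, x_j) = \tanh(a \, x_i \cdot x_j + r)
\end{aligned}
```

The box constraint is where C enters: a larger C lets individual α_i grow, fitting the training set more tightly at the expense of generalization.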

Choice of parallel algorithm (among chunking algorithms): Sequential Minimal Optimization (SMO)
(Charts: task distribution for SVM training, share of time in KKT Update, Select Working Set, QP Solve and Other vs. working set size; solve time variation, time (s) vs. working set size from 2 to 2048)

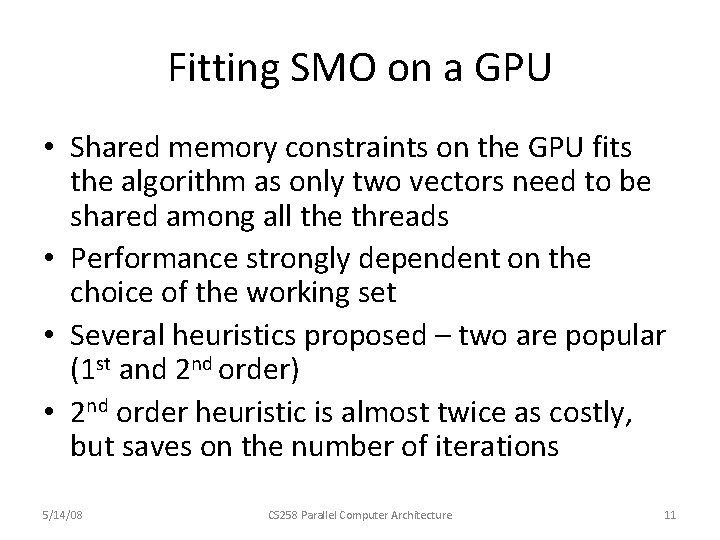

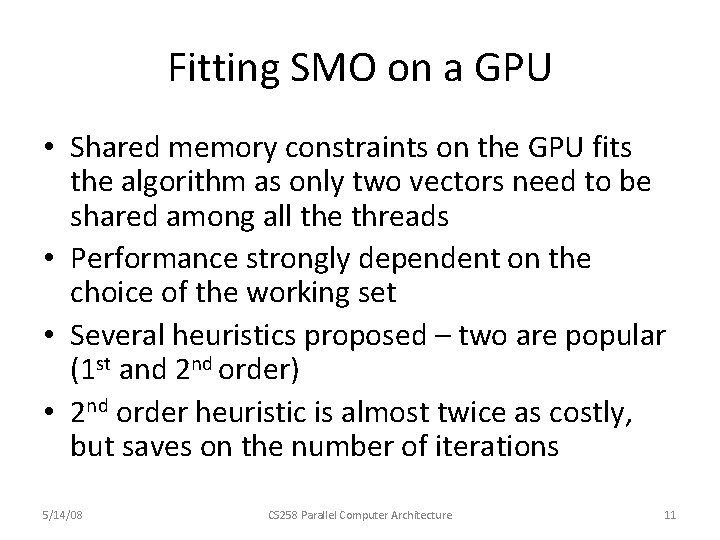

Fitting SMO on a GPU
• The GPU's shared memory constraints fit the algorithm, since only two vectors need to be shared among all the threads
• Performance strongly depends on the choice of the working set
• Several heuristics have been proposed; two are popular (1st and 2nd order)
• The 2nd-order heuristic is almost twice as costly per iteration, but saves on the number of iterations

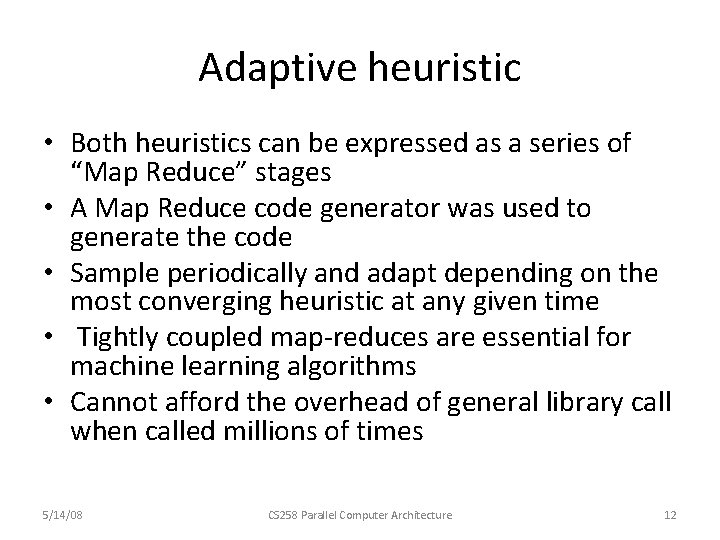

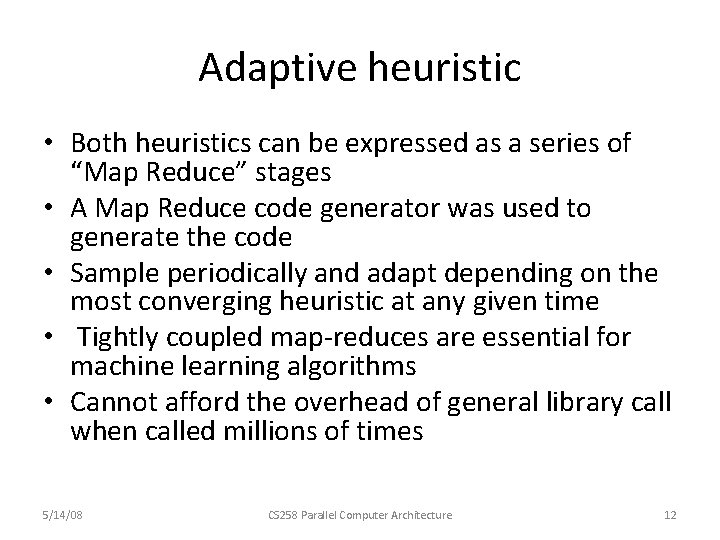
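To make the structure of SMO concrete, here is a minimal sequential sketch with the first-order working-set heuristic. It follows a common formulation with optimality indicators f_i and thresholds b_high/b_low (our choice of formulation, not taken from the slides). The min/max selection is the reduction step and the per-point update of f is the map step that a GPU would run with one thread per training point. `smo_train`, `decision`, `rbf` and the defaults C=10, tau=1e-3, gamma=0.5 are hypothetical; labels are assumed to be ±1 with both classes present.

```python
import math


def rbf(x, z, gamma=0.5):
    """Gaussian kernel exp(-gamma * ||x - z||^2); gamma is an illustrative default."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def smo_train(X, y, C=10.0, tau=1e-3, kernel=rbf, max_iter=10_000):
    """Sequential sketch of SMO with the first-order working-set heuristic.

    Returns (alpha, b) for the decision function
    sign(sum_j alpha_j * y_j * K(x_j, z) + b).
    """
    n = len(X)
    alpha = [0.0] * n
    f = [-float(yi) for yi in y]   # f_i = sum_j alpha_j y_j K(x_i, x_j) - y_i

    def snap(a):                   # absorb round-off at the box bounds [0, C]
        return 0.0 if a < 1e-12 else C if a > C - 1e-12 else a

    b_high = b_low = 0.0
    for _ in range(max_iter):
        # Reduce: extreme f values over the two index sets (first-order heuristic).
        high = [i for i in range(n)
                if (y[i] > 0 and alpha[i] < C) or (y[i] < 0 and alpha[i] > 0)]
        low = [i for i in range(n)
               if (y[i] > 0 and alpha[i] > 0) or (y[i] < 0 and alpha[i] < C)]
        i_hi = min(high, key=lambda i: f[i])
        i_lo = max(low, key=lambda i: f[i])
        b_high, b_low = f[i_hi], f[i_lo]
        if b_low <= b_high + 2 * tau:
            break                  # optimality conditions hold to within tau
        # Analytic update of the two chosen weights, clipped to the feasible segment.
        eta = max(kernel(X[i_hi], X[i_hi]) + kernel(X[i_lo], X[i_lo])
                  - 2 * kernel(X[i_hi], X[i_lo]), 1e-12)
        a_hi, a_lo = alpha[i_hi], alpha[i_lo]
        if y[i_hi] != y[i_lo]:
            lower, upper = max(0.0, a_lo - a_hi), min(C, C + a_lo - a_hi)
        else:
            lower, upper = max(0.0, a_hi + a_lo - C), min(C, a_hi + a_lo)
        new_lo = snap(min(max(a_lo + y[i_lo] * (b_high - b_low) / eta, lower), upper))
        new_hi = snap(a_hi + y[i_lo] * y[i_hi] * (a_lo - new_lo))
        alpha[i_hi], alpha[i_lo] = new_hi, new_lo
        # Map: each f_i is updated independently (one GPU thread per point).
        d_hi = (new_hi - a_hi) * y[i_hi]
        d_lo = (new_lo - a_lo) * y[i_lo]
        for i in range(n):
            f[i] += d_hi * kernel(X[i_hi], X[i]) + d_lo * kernel(X[i_lo], X[i])
    return alpha, -(b_high + b_low) / 2


def decision(X, y, alpha, b, z, kernel=rbf):
    """Signed decision value for point z; its sign is the predicted label."""
    return sum(a * yi * kernel(xi, z) for a, yi, xi in zip(alpha, y, X)) + b
```

Each iteration touches only two weights, which is why only two kernel rows (the "two vectors" on the slide) have to be shared among threads.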

Adaptive heuristic
• Both heuristics can be expressed as a series of "Map Reduce" stages
• A Map Reduce code generator was used to generate the code
• Periodically sample both heuristics and adapt to whichever is converging fastest at the time
• Tightly coupled map-reduces are essential for machine learning algorithms
• We cannot afford the overhead of a general library call when it is made millions of times

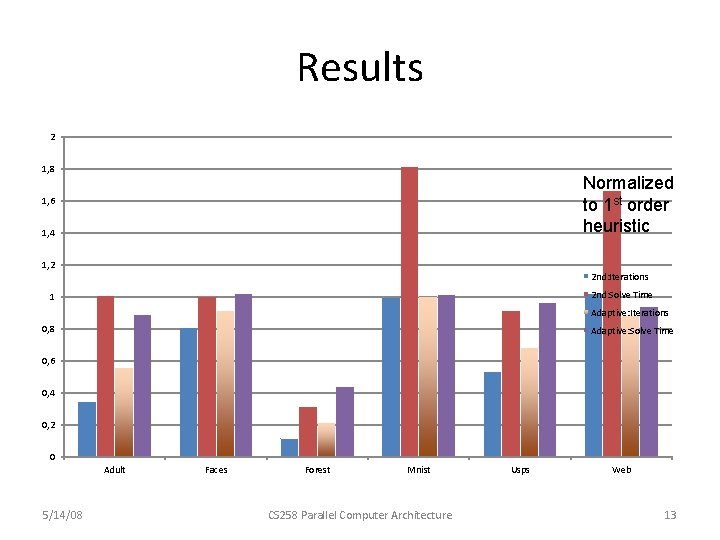

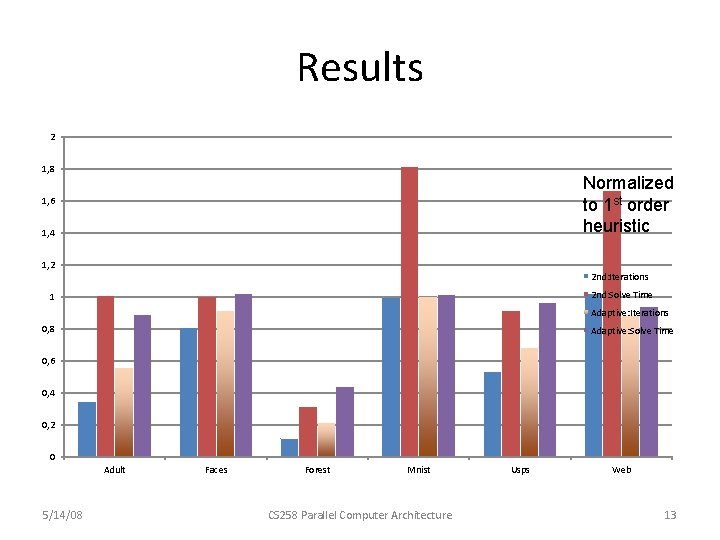
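The claim that both heuristics are series of map-reduce stages can be sketched as follows. The second-order rule shown (keep i_high, then pick the i_low that maximizes (b_high - f_j)^2 / eta_j) is one published variant that we assume here; the helper names and the precomputed kernel matrix `K` are hypothetical, and on a GPU each `map_reduce` would be a fused kernel rather than a Python loop.

```python
def map_reduce(n, map_fn, reduce_fn):
    """Apply map_fn to every index 0..n-1 (one GPU thread each) and combine the
    non-None results with reduce_fn (a tree reduction on a GPU, a fold here).
    Returns None if every mapped value was None."""
    result = None
    for i in range(n):
        value = map_fn(i)
        if value is not None:
            result = value if result is None else reduce_fn(result, value)
    return result


def keep_min(a, b):
    return a if a[0] <= b[0] else b


def keep_max(a, b):
    return a if a[0] >= b[0] else b


def in_high(y_i, a_i, C):
    return (y_i > 0 and a_i < C) or (y_i < 0 and a_i > 0)


def in_low(y_i, a_i, C):
    return (y_i > 0 and a_i > 0) or (y_i < 0 and a_i < C)


def select_high(f, y, alpha, C):
    """Shared first stage: (b_high, i_high) = minimum of f over the 'high' set."""
    return map_reduce(len(f),
                      lambda i: (f[i], i) if in_high(y[i], alpha[i], C) else None,
                      keep_min)


def first_order(f, y, alpha, C):
    """Maximal violating pair: two map-reduce stages over f."""
    _, i_high = select_high(f, y, alpha, C)
    _, i_low = map_reduce(len(f),
                          lambda i: (f[i], i) if in_low(y[i], alpha[i], C) else None,
                          keep_max)
    return i_high, i_low


def second_order(f, y, alpha, C, K):
    """Keep i_high, then choose i_low maximizing (b_high - f_j)^2 / eta_j.

    The extra stage reads row i_high of the kernel matrix K, which is where
    the additional cost per iteration comes from.
    """
    b_high, i_high = select_high(f, y, alpha, C)

    def gain(j):
        if not in_low(y[j], alpha[j], C) or f[j] <= b_high:
            return None
        eta = max(K[i_high][i_high] + K[j][j] - 2 * K[i_high][j], 1e-12)
        return ((b_high - f[j]) ** 2 / eta, j)

    best = map_reduce(len(f), gain, keep_max)
    return i_high, (best[1] if best is not None else None)
```

An adaptive scheme in the spirit of the slide could run each selector for a sample of iterations, measure progress per unit time, and keep the faster one; that sampling policy is an assumption on our part.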

Results (chart): iterations and solve time for the 2nd-order and adaptive heuristics, normalized to the 1st-order heuristic, on the Adult, Faces, Forest, MNIST, USPS and Web datasets

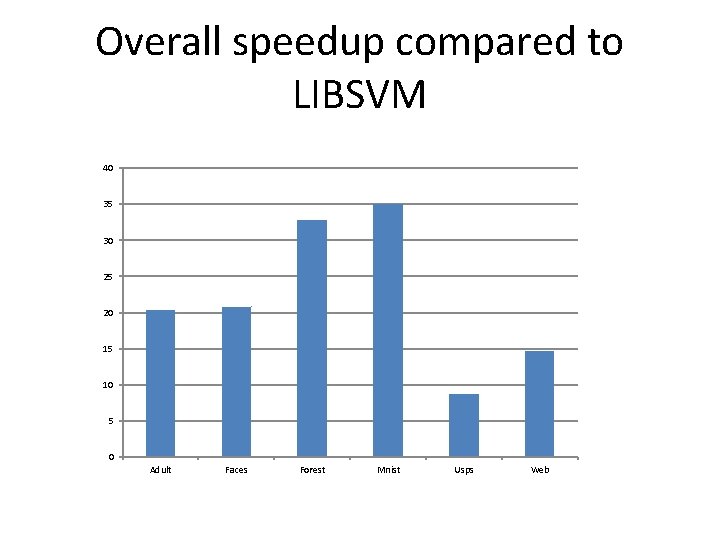

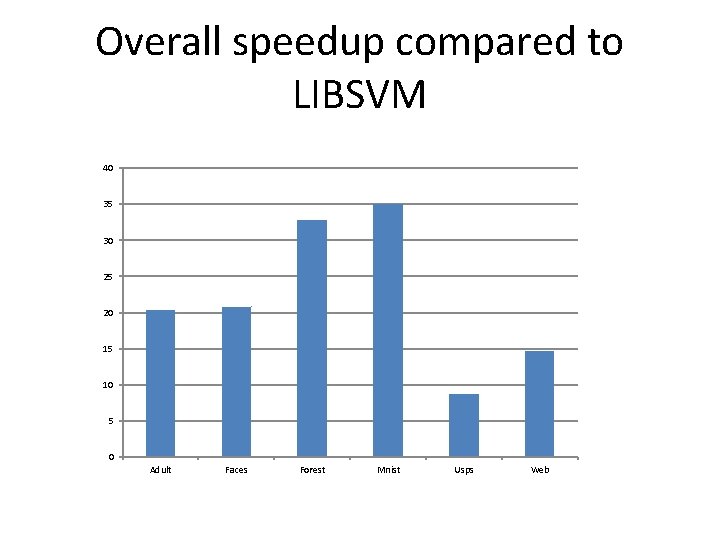

Overall speedup compared to LIBSVM (chart) on the Adult, Faces, Forest, MNIST, USPS and Web datasets

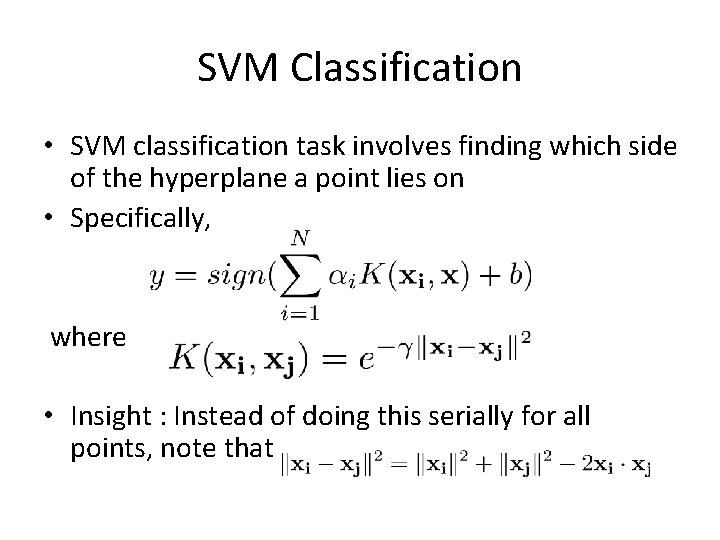

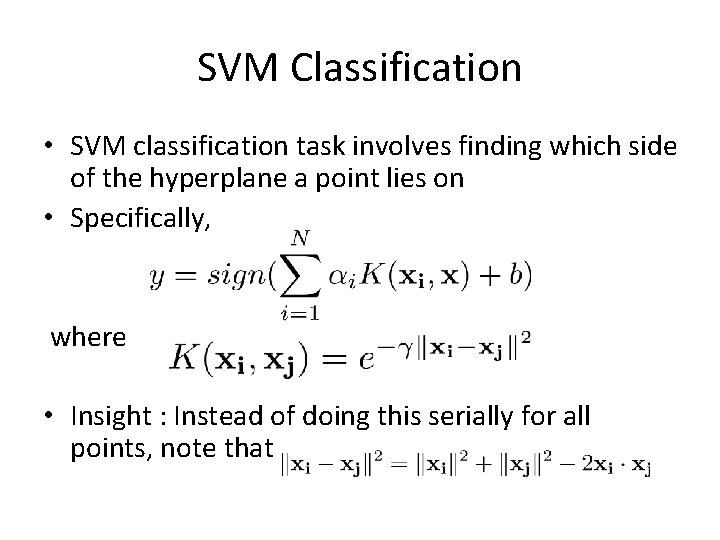

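SVM Classification
• SVM classification involves finding which side of the hyperplane a point lies on
• Specifically, a point z is classified as $\hat{z} = \operatorname{sgn}\left(b + \sum_{i=1}^{l} y_i \alpha_i \Phi(x_i, z)\right)$, where b is the hyperplane offset and Φ is the kernel function
• Insight: instead of doing this serially for each point, note that the computation can be restructured to process all test points together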

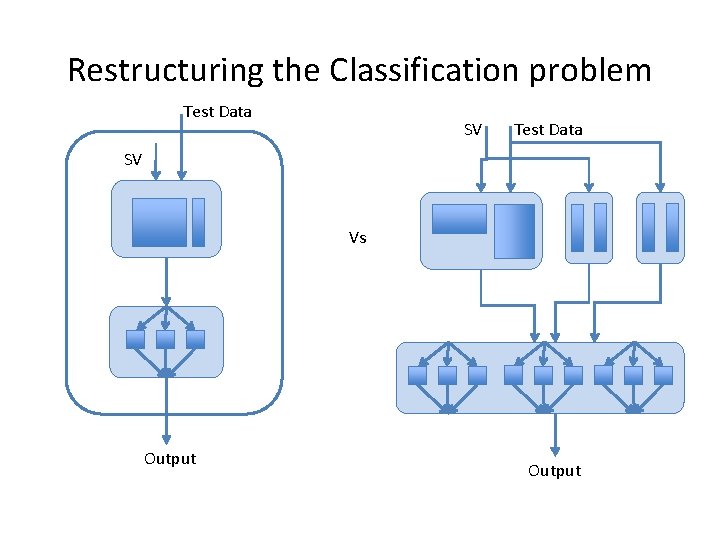

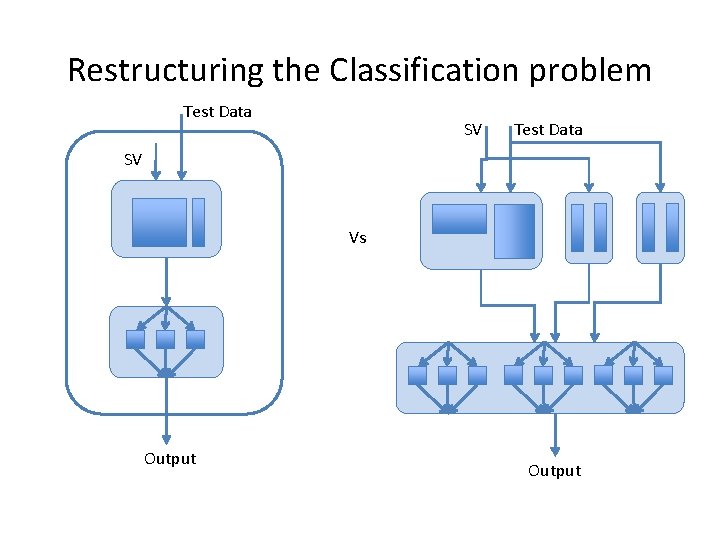

Restructuring the Classification problem (diagram: Test Data, SVs, Output)

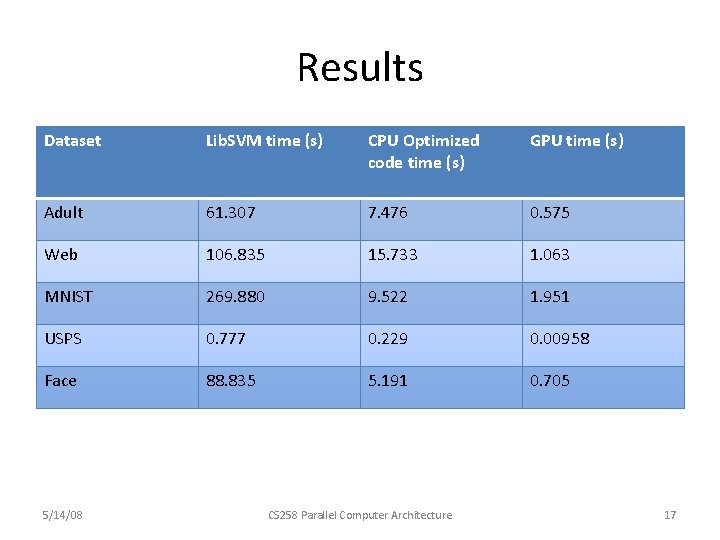

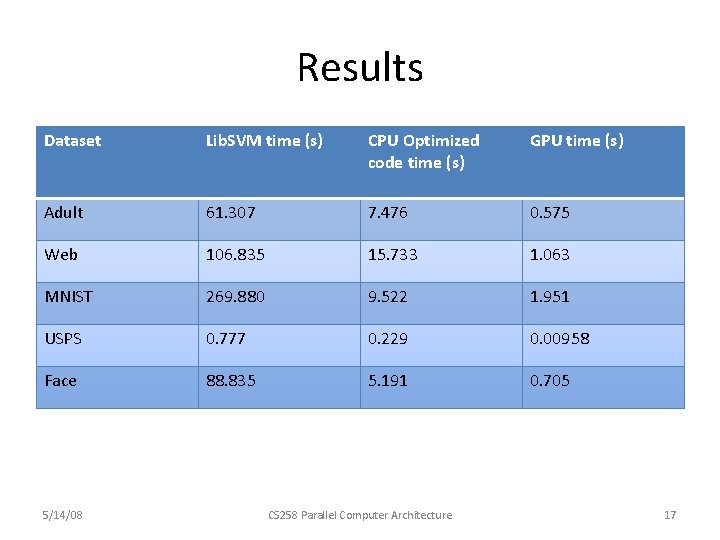
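One way to restructure classification for a Gaussian kernel is to expand ||z - x||^2 = z·z + x·x - 2 z·x, so that the only work proportional to (test points × support vectors × dimensions) becomes a single matrix product between the test data and the support vectors, which maps onto one SGEMM call. We assume this is the restructuring in the diagram; the function names below are hypothetical, and the pure-Python product stands in for a BLAS SGEMM.

```python
import math


def matmul_transpose(A, B):
    """Return A @ B^T for row-major lists of lists (the SGEMM step)."""
    return [[sum(a * b for a, b in zip(row_a, row_b)) for row_b in B] for row_a in A]


def classify_batched(Z, SV, coef, b, gamma):
    """Decision values for every test point in Z at once.

    coef[j] = alpha_j * y_j for support vector SV[j]. Uses
    ||z - x||^2 = z.z + x.x - 2 z.x, so the bulk of the work is one matrix product.
    """
    dots = matmul_transpose(Z, SV)                   # m x n dot products
    z_norms = [sum(v * v for v in z) for z in Z]
    x_norms = [sum(v * v for v in x) for x in SV]
    out = []
    for i, row in enumerate(dots):
        s = 0.0
        for j, d in enumerate(row):
            dist2 = max(z_norms[i] + x_norms[j] - 2.0 * d, 0.0)  # clamp round-off
            s += coef[j] * math.exp(-gamma * dist2)
        out.append(s + b)
    return out


def classify_serial(Z, SV, coef, b, gamma):
    """Reference: evaluate the kernel sum point by point."""
    return [sum(c * math.exp(-gamma * sum((u - v) ** 2 for u, v in zip(z, x)))
                for c, x in zip(coef, SV)) + b
            for z in Z]
```

Both functions compute the same decision values; the batched form simply moves the expensive part into a dense, regular operation that GPUs execute efficiently.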

Results

| Dataset | LIBSVM time (s) | CPU optimized code time (s) | GPU time (s) |
|---|---|---|---|
| Adult | 61.307 | 7.476 | 0.575 |
| Web | 106.835 | 15.733 | 1.063 |
| MNIST | 269.880 | 9.522 | 1.951 |
| USPS | 0.777 | 0.229 | 0.00958 |
| Face | 88.835 | 5.191 | 0.705 |

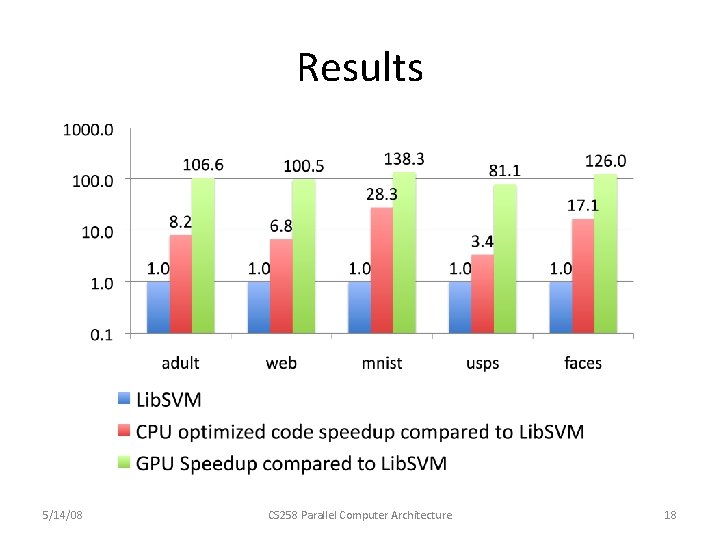

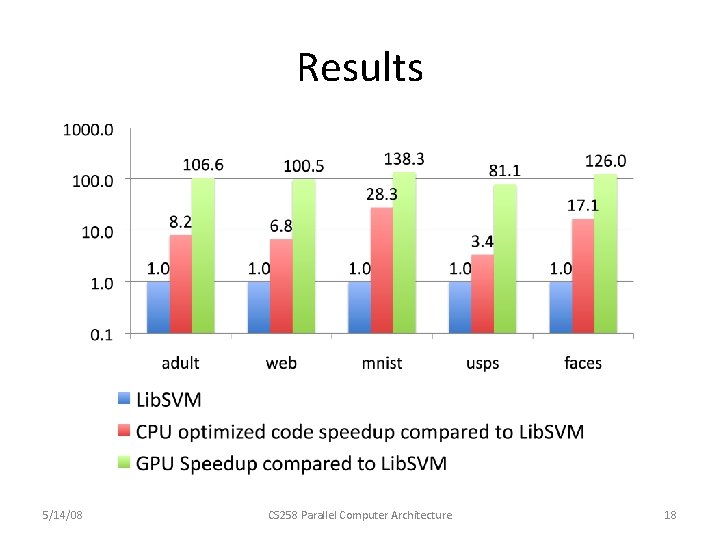
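The speedups implied by the timing table can be computed directly; this is plain arithmetic on the reported times, with `TIMES` and `speedups` as hypothetical names.

```python
# Classification times in seconds, copied from the results table.
TIMES = {
    "Adult": (61.307, 7.476, 0.575),
    "Web": (106.835, 15.733, 1.063),
    "MNIST": (269.880, 9.522, 1.951),
    "USPS": (0.777, 0.229, 0.00958),
    "Face": (88.835, 5.191, 0.705),
}


def speedups(times=TIMES):
    """Return {dataset: (GPU speedup over LIBSVM, GPU speedup over optimized CPU code)}."""
    return {name: (libsvm / gpu, cpu / gpu)
            for name, (libsvm, cpu, gpu) in times.items()}


for name, (vs_libsvm, vs_cpu) in speedups().items():
    print(f"{name:6s} {vs_libsvm:6.1f}x vs LIBSVM, {vs_cpu:5.1f}x vs optimized CPU")
```

On these datasets the GPU is roughly 81 to 138 times faster than LIBSVM and roughly 5 to 24 times faster than the optimized CPU code.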

Results

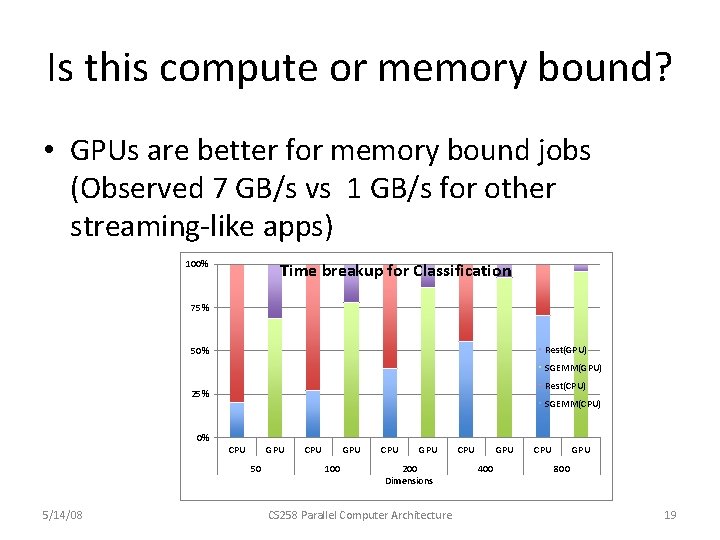

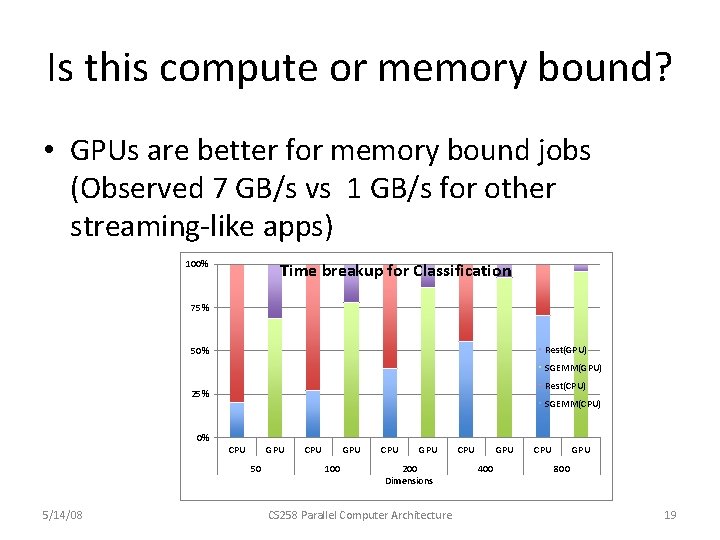

Is this compute or memory bound?
• GPUs are better for memory-bound jobs (observed 7 GB/s vs. 1 GB/s for other streaming-like apps)
(Chart: time breakup for classification into SGEMM and the rest, on CPU and GPU, for 50, 100, 200, 400 and 800 dimensions)

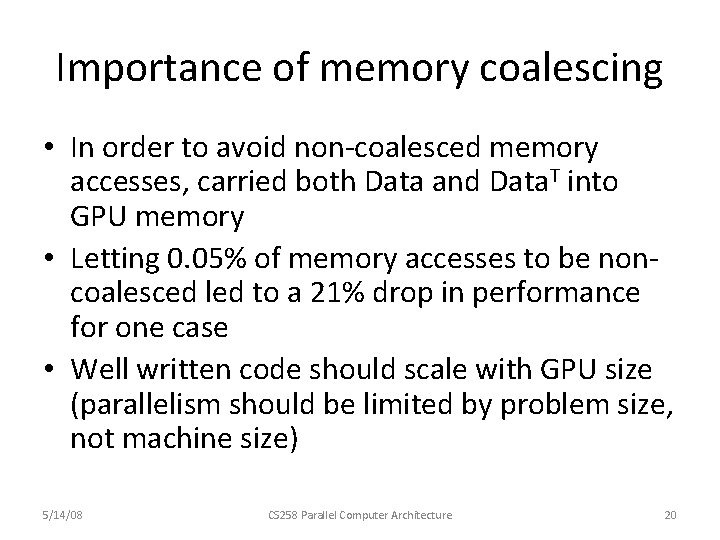
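A back-of-the-envelope roofline estimate helps frame the question in the slide title. This sketch compares the arithmetic intensity of the classification matrix product with the 8800 GTX machine balance (peak Gflops divided by peak bandwidth, from the specification table). It assumes each matrix crosses device memory exactly once, which is a best case; the matrix sizes in the example are hypothetical.

```python
PEAK_GFLOPS = 346.0    # from the GeForce 8800 GTX specification table
PEAK_BW_GBS = 86.4
MACHINE_BALANCE = PEAK_GFLOPS / PEAK_BW_GBS   # about 4 flops per byte


def sgemm_intensity(m, n, d, bytes_per_elem=4):
    """Flops per byte for C (m x n) = A (m x d) @ B (d x n) in single precision,
    assuming each matrix is read or written from device memory exactly once."""
    flops = 2.0 * m * n * d
    bytes_moved = bytes_per_elem * (m * d + d * n + m * n)
    return flops / bytes_moved


def likely_bound(m, n, d):
    """Classify a product as compute or memory bound against the machine balance."""
    return "compute" if sgemm_intensity(m, n, d) > MACHINE_BALANCE else "memory"


# Hypothetical sizes: 10,000 test points, 1,000 support vectors, 50 dimensions.
print(f"{sgemm_intensity(10_000, 1_000, 50):.1f} flops/byte ->",
      likely_bound(10_000, 1_000, 50))
```

With many points and support vectors the intensity approaches d/2 flops per byte, so the SGEMM is compute bound even at modest dimensionality, while the element-wise work on the m x n result (the rest of classification) stays memory bound.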

Importance of memory coalescing
• To avoid non-coalesced memory accesses, we carried both Data and Data^T (its transpose) into GPU memory
• Letting just 0.05% of memory accesses be non-coalesced led to a 21% drop in performance in one case
• Well-written code should scale with GPU size (parallelism should be limited by problem size, not machine size)
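Why keeping a transposed copy helps can be seen from the addresses a half-warp generates. This sketch uses our reading of the strict coalescing rule for compute capability 1.0/1.1 hardware such as the G80 (one transaction only if thread k reads word k of a single aligned 64-byte segment, otherwise one transaction per thread) and ignores inactive threads and wider word sizes. The layout helpers are hypothetical: in both, thread t reads feature k of training point first_point + t.

```python
HALF_WARP = 16
WORD_BYTES = 4
SEGMENT_BYTES = HALF_WARP * WORD_BYTES   # 64-byte aligned segment


def transactions(addresses):
    """Memory transactions one half-warp needs under the assumed strict rule."""
    base = addresses[0]
    coalesced = base % SEGMENT_BYTES == 0 and all(
        addr == base + k * WORD_BYTES for k, addr in enumerate(addresses))
    return 1 if coalesced else len(addresses)


def row_major_addresses(first_point, k, d):
    """Data (points x features): neighboring threads are d words apart."""
    return [((first_point + t) * d + k) * WORD_BYTES for t in range(HALF_WARP)]


def transposed_addresses(first_point, k, n):
    """Data^T (features x points): the same reads become consecutive words."""
    return [(k * n + first_point + t) * WORD_BYTES for t in range(HALF_WARP)]


print(transactions(row_major_addresses(0, 3, 50)),
      transactions(transposed_addresses(0, 3, 1024)))
```

With 1,024 points of 50 features, the row-major layout costs 16 transactions per half-warp where the transposed layout costs one, which is the kind of gap that makes even a tiny fraction of non-coalesced accesses noticeable.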

Is SIMD becoming ubiquitous?
• SIMD is already important for performance on uniprocessor systems
• Task vs. data parallelism
• Intel's new GPU has wide SIMD
• CUDA lesson: runtime SIMD binding is easier for programmers
• Non-SIMD code leads to a performance penalty, not incorrect programs; this prevents premature optimization and keeps code flexible
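The last point can be illustrated with a toy model of how a SIMD group handles a data-dependent branch: every lane still computes the right answer, but when lanes disagree both paths are issued with a lane mask, so divergence costs time rather than correctness. This is a simplified model with no nested branches or reconvergence stack, and `simd_execute` is a hypothetical name.

```python
def simd_execute(values, predicate, then_fn, else_fn):
    """Run one if/else over a SIMD group with lane masks.

    Returns (results, issued_paths): issued_paths is 1 when all lanes agree
    and 2 when they diverge, a stand-in for the time penalty.
    """
    mask = [predicate(v) for v in values]
    results = list(values)
    issued = 0
    if any(mask):        # 'then' path, lanes with a false predicate masked off
        issued += 1
        results = [then_fn(v) if m else r for v, m, r in zip(values, mask, results)]
    if not all(mask):    # 'else' path for the remaining lanes
        issued += 1
        results = [else_fn(v) if not m else r for v, m, r in zip(values, mask, results)]
    return results, issued


even = lambda v: v % 2 == 0
print(simd_execute(list(range(8)), even, lambda v: v * 10, lambda v: -v))
```

A programmer can write the branch naturally and later restructure data so that lanes agree, which is the flexibility the slide attributes to runtime SIMD binding.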

Conclusion
• GPUs and manycore CPUs are on a collision course
• Data parallelism on GPUs or task parallelism on CPUs
• Rethink serial control and data structures
• Sequential optimizations may harm parallelism
• Machine learning can use a lot of parallel hardware if the software is engineered properly