Accelerating Astronomy & Astrophysics in the New Era of Parallel Computing: GPUs, Phi and Cloud Computing
Eric Ford
Penn State Department of Astronomy & Astrophysics; Institute for CyberScience; Center for Astrostatistics; Center for Exoplanets & Habitable Worlds
IAU General Assembly, Division B, August 7, 2015
Background image: NASA

Why should astronomers care about trends in computing?
• Theory:
  – Increase resolution/number of particles in simulations
  – Add more physics to models
• Observation:
  – Analyze massive datasets
  – Rapidly analyze time-domain data
• Comparing theory & observations:
  – Explore high-dimensional parameter spaces
  – Characterize the statistics of rare events
Image credit: LSST Corp.

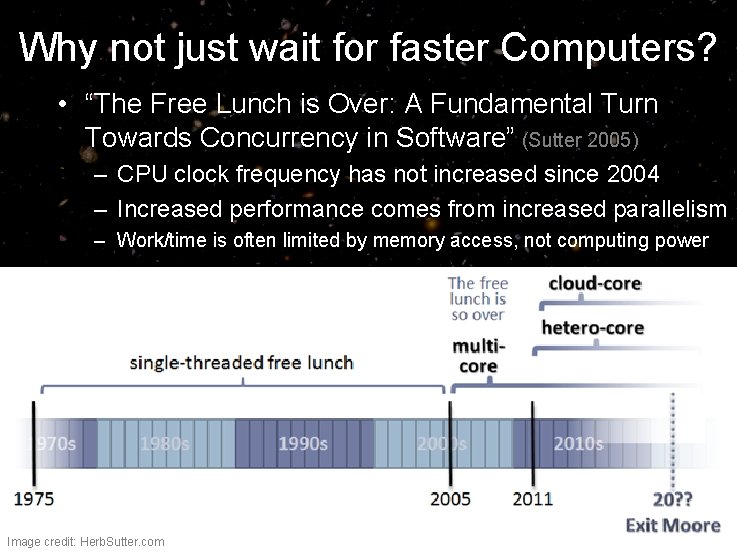

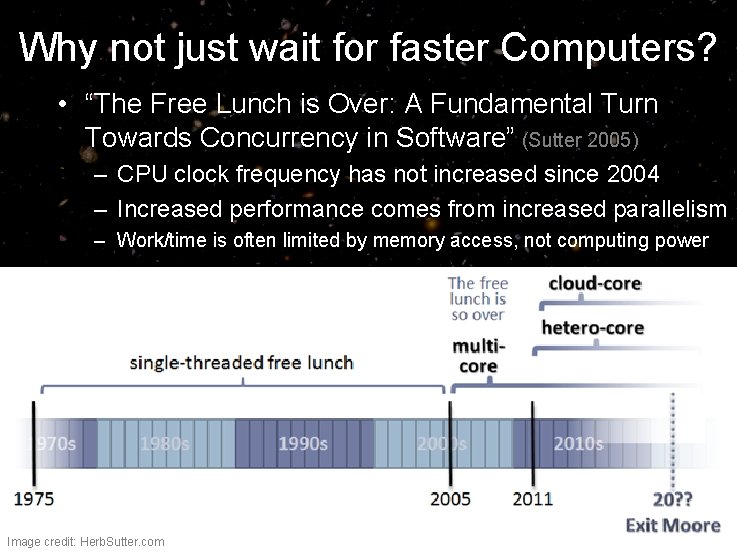

Why not just wait for faster computers?
• “The Free Lunch Is Over: A Fundamental Turn Toward Concurrency in Software” (Sutter 2005)
  – CPU clock frequency has not increased substantially since ~2004
  – Increased performance comes from increased parallelism
  – Work/time is often limited by memory access, not computing power
Figures: CPU clock frequency vs. year (image credit: HerbSutter.com); performance gap between processor and DRAM memory (Hennessy & Patterson 2012)

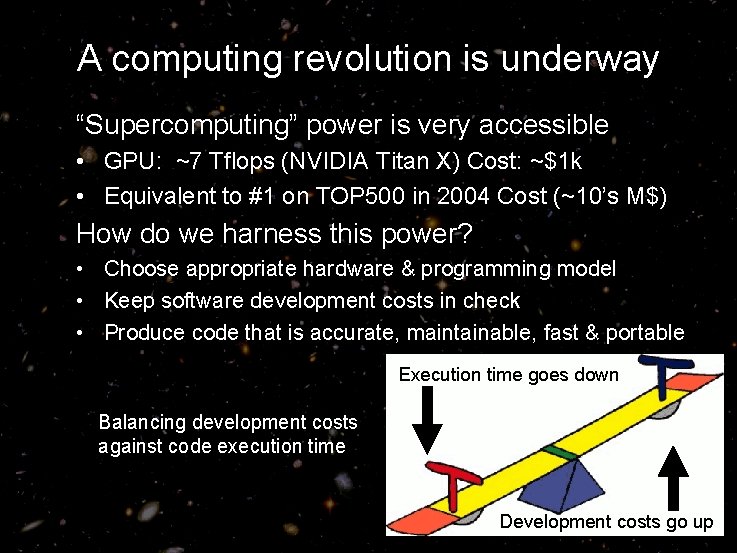

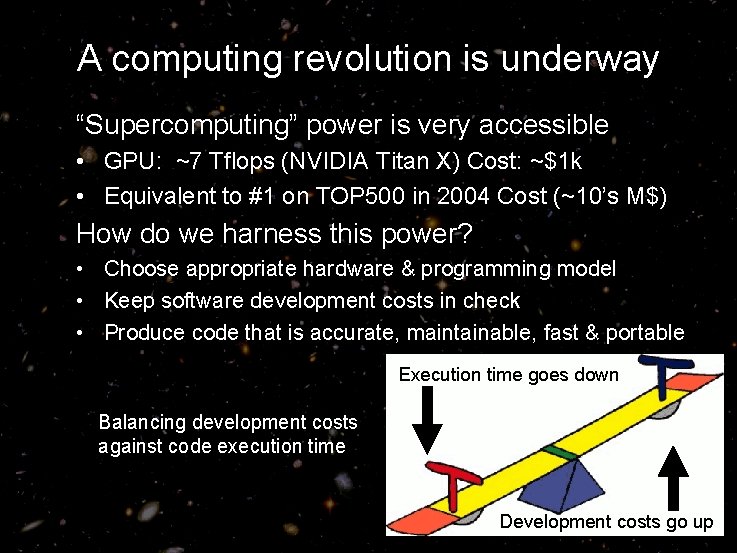

A computing revolution is underway
“Supercomputing” power is very accessible:
• GPU: ~7 TFLOPS single-precision peak (NVIDIA Titan X), cost ~$1k
• Comparable to the #1 system on the TOP500 list in 2004, which cost tens of millions of dollars
How do we harness this power?
• Choose appropriate hardware & programming model
• Keep software development costs in check
• Produce code that is accurate, maintainable, fast & portable
Figure: balancing development costs against code execution time (as development costs go up, execution time goes down)

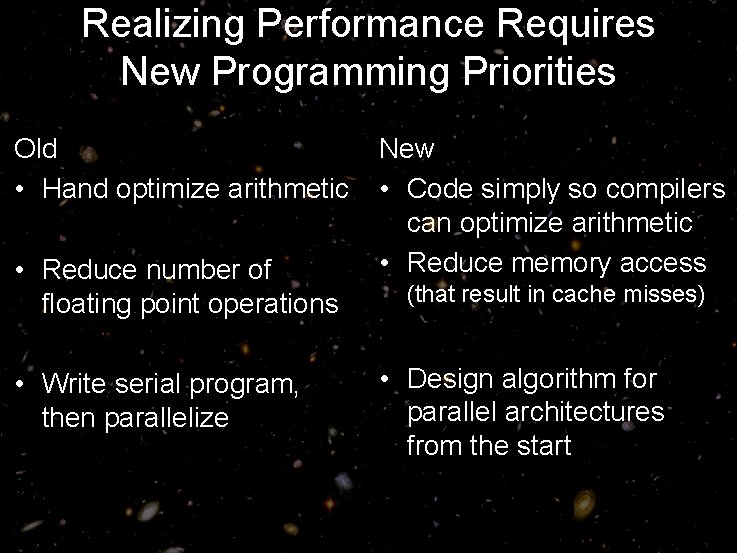

Realizing Performance Requires New Programming Priorities
Old:
• Hand-optimize arithmetic
• Reduce the number of floating-point operations
• Write a serial program, then parallelize
New:
• Code simply so compilers can optimize arithmetic
• Reduce memory accesses that result in cache misses
• Design algorithms for parallel architectures from the start
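
To make “reduce memory accesses that result in cache misses” concrete, here is a minimal sketch that counts misses in a toy direct-mapped cache when a row-major 2-D array of doubles is traversed row by row versus column by column. The cache geometry (64-byte lines, 64 lines) and the helper names `count_misses` and `traversal` are hypothetical, chosen only for illustration; real caches are set-associative and prefetch, so only the trend carries over.

```python
from typing import Iterable, Iterator, List, Optional

def count_misses(addresses: Iterable[int], line_bytes: int = 64, n_lines: int = 64) -> int:
    """Count misses in a toy direct-mapped cache (hypothetical geometry)."""
    tags: List[Optional[int]] = [None] * n_lines  # which memory block each slot holds
    misses = 0
    for addr in addresses:
        block = addr // line_bytes   # memory block (cache line) containing this byte
        slot = block % n_lines       # direct-mapped: each block has exactly one slot
        if tags[slot] != block:
            misses += 1
            tags[slot] = block       # load the block, evicting whatever was there
    return misses

def traversal(nrows: int, ncols: int, row_by_row: bool, elem_bytes: int = 8) -> Iterator[int]:
    """Yield byte offsets visited when looping over a row-major nrows x ncols array."""
    if row_by_row:
        order = ((i, j) for i in range(nrows) for j in range(ncols))
    else:
        order = ((i, j) for j in range(ncols) for i in range(nrows))
    for i, j in order:
        yield (i * ncols + j) * elem_bytes  # row-major: row i starts at i * ncols

row_misses = count_misses(traversal(256, 256, row_by_row=True))
col_misses = count_misses(traversal(256, 256, row_by_row=False))
```

With these toy parameters the row-by-row loop misses once per 64-byte line (8,192 misses for 256×256 doubles), while the column-by-column loop misses on every one of its 65,536 accesses, even though both perform exactly the same arithmetic.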

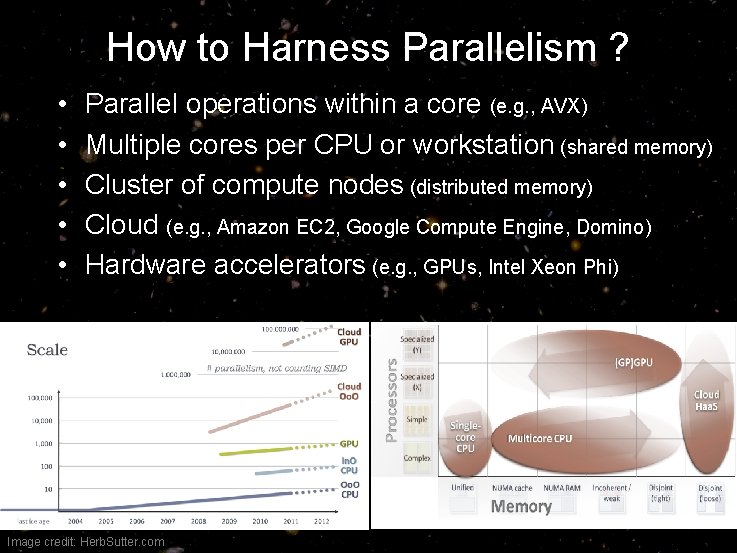

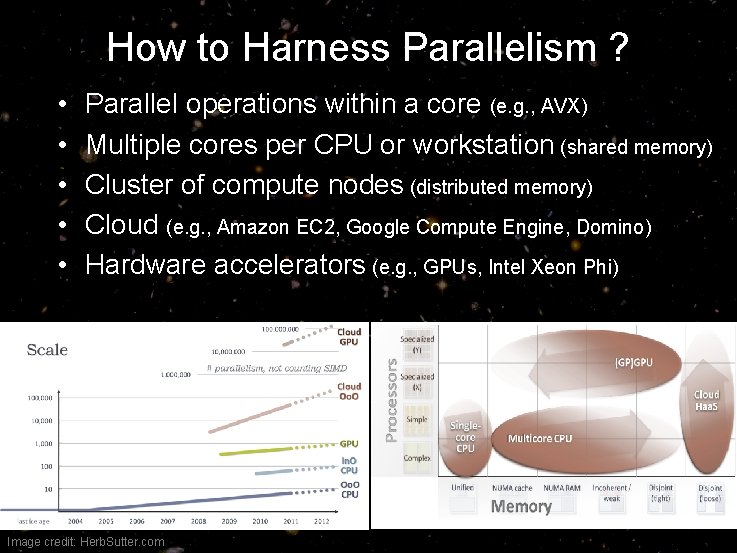

How to Harness Parallelism?
• Parallel operations within a core (e.g., AVX)
• Multiple cores per CPU or workstation (shared memory)
• Cluster of compute nodes (distributed memory)
• Cloud (e.g., Amazon EC2, Google Compute Engine, Domino)
• Hardware accelerators (e.g., GPUs, Intel Xeon Phi)
Image credit: HerbSutter.com

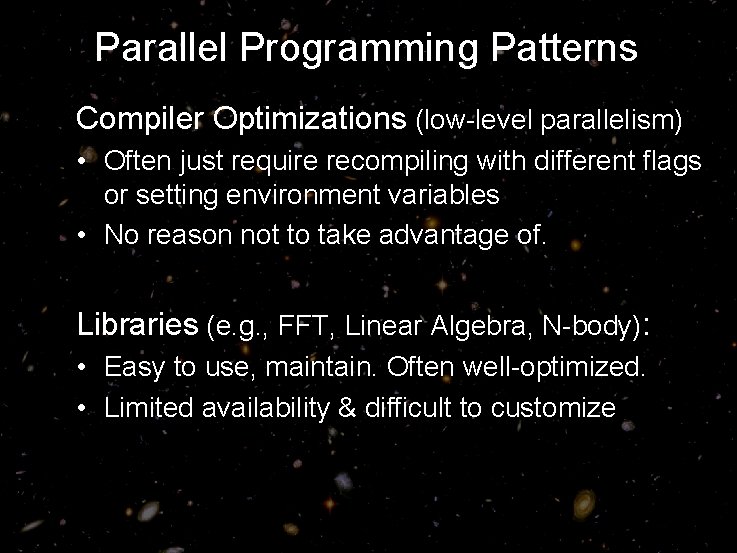

Parallel Programming Patterns
Compiler optimizations (low-level parallelism):
• Often require only recompiling with different flags or setting environment variables
• No reason not to take advantage of them
Libraries (e.g., FFT, linear algebra, N-body):
• Easy to use and maintain; often well optimized
• Limited availability & difficult to customize

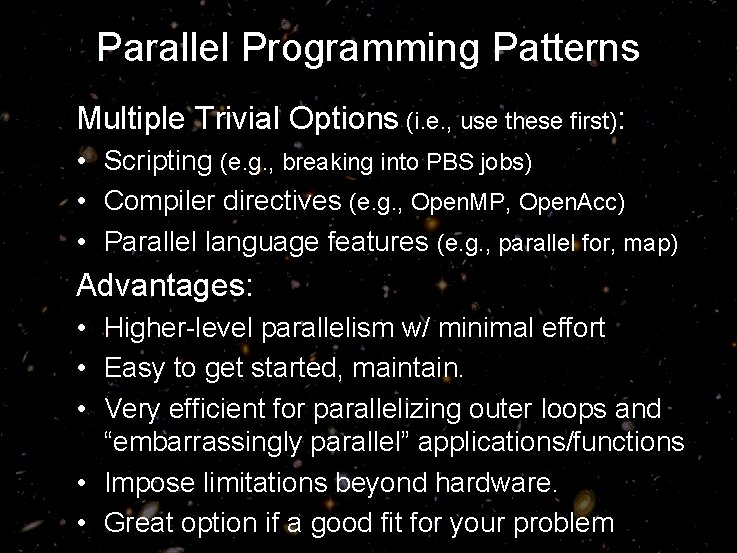

Parallel Programming Patterns
Multiple trivial options (i.e., use these first):
• Scripting (e.g., breaking work into PBS jobs)
• Compiler directives (e.g., OpenMP, OpenACC)
• Parallel language features (e.g., parallel for, map)
Advantages:
• Higher-level parallelism with minimal effort
• Easy to get started and to maintain
• Very efficient for parallelizing outer loops and “embarrassingly parallel” applications/functions
Limitations:
• Impose limitations beyond those of the hardware
Bottom line: a great option if it is a good fit for your problem
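
The “scripting” option often means splitting a list of independent tasks across a batch-system job array. A minimal sketch, assuming each array job learns its index from an environment variable (Torque sets `PBS_ARRAYID`; PBS Pro uses `PBS_ARRAY_INDEX`; check your scheduler's documentation) and that a hypothetical helper `tasks_for_job` decides which task indices that job runs:

```python
import os

def tasks_for_job(n_tasks: int, n_jobs: int, job_index: int) -> range:
    """Return the contiguous block of task indices assigned to one job.

    Tasks are split as evenly as possible: the first (n_tasks % n_jobs)
    jobs each get one extra task.
    """
    if not 0 <= job_index < n_jobs:
        raise ValueError("job_index must be in [0, n_jobs)")
    base, extra = divmod(n_tasks, n_jobs)
    start = job_index * base + min(job_index, extra)
    stop = start + base + (1 if job_index < extra else 0)
    return range(start, stop)

def current_job_index(var: str = "PBS_ARRAYID") -> int:
    """Read this job's array index from the environment.

    The variable name is scheduler-specific (assumption: Torque's PBS_ARRAYID);
    falls back to 0 when the variable is not set, e.g. for interactive testing.
    """
    return int(os.environ.get(var, "0"))
```

Each array job would loop over `tasks_for_job(n_tasks, n_jobs, current_job_index())` and run its tasks serially; if your scheduler numbers array jobs from 1, subtract 1 first. No communication between jobs is needed, which is what makes the workload embarrassingly parallel.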

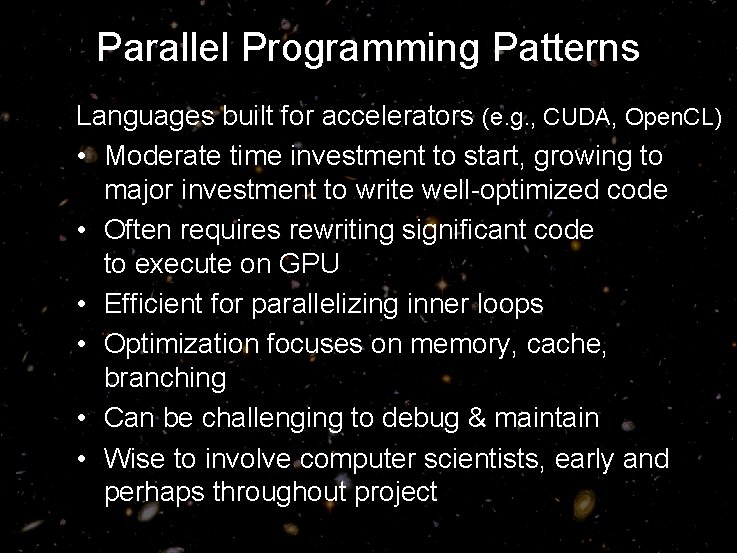

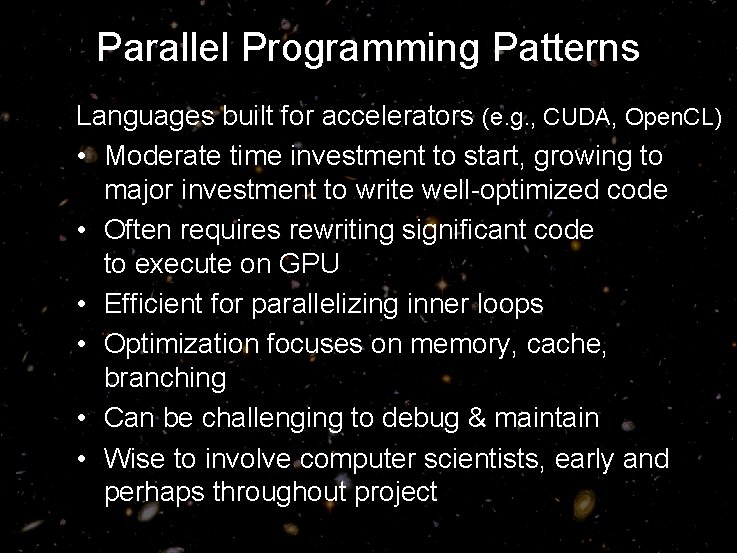

Parallel Programming Patterns
Languages built for accelerators (e.g., CUDA, OpenCL):
• Moderate time investment to start, growing to a major investment to write well-optimized code
• Often requires rewriting significant portions of code to execute on the GPU
• Efficient for parallelizing inner loops
• Optimization focuses on memory, cache, and branching
• Can be challenging to debug & maintain
• Wise to involve computer scientists early, and perhaps throughout the project

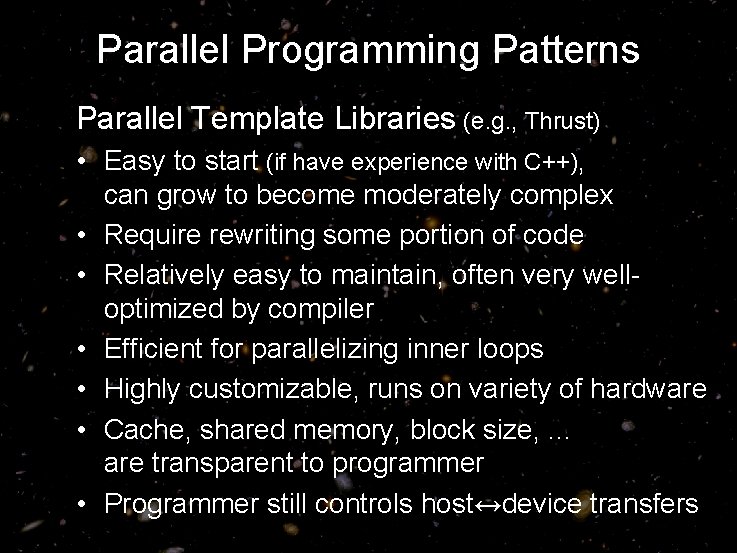

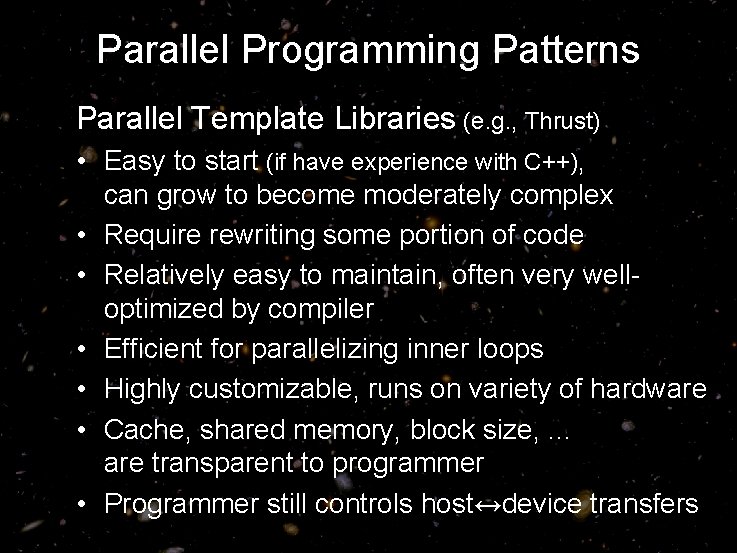

Parallel Programming Patterns
Parallel template libraries (e.g., Thrust):
• Easy to start (if you have experience with C++); can grow to become moderately complex
• Require rewriting some portion of code
• Relatively easy to maintain; often very well optimized by the compiler
• Efficient for parallelizing inner loops
• Highly customizable; runs on a variety of hardware
• Cache, shared memory, block size, ... are transparent to the programmer
• Programmer still controls host↔device transfers

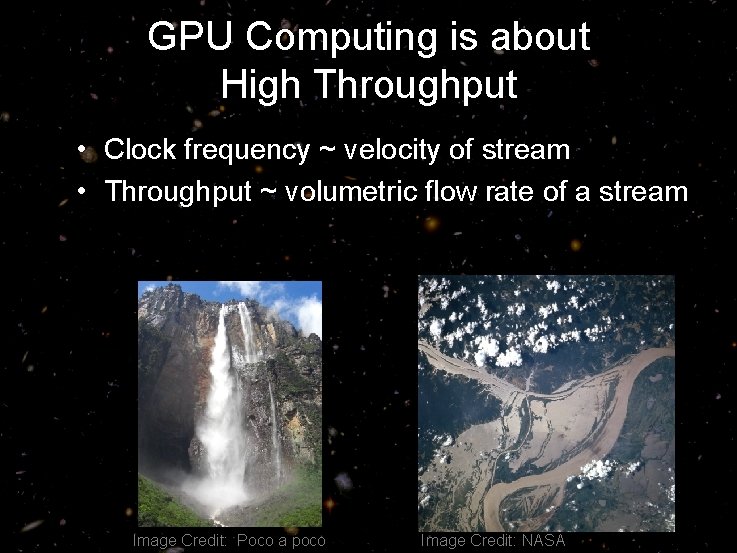

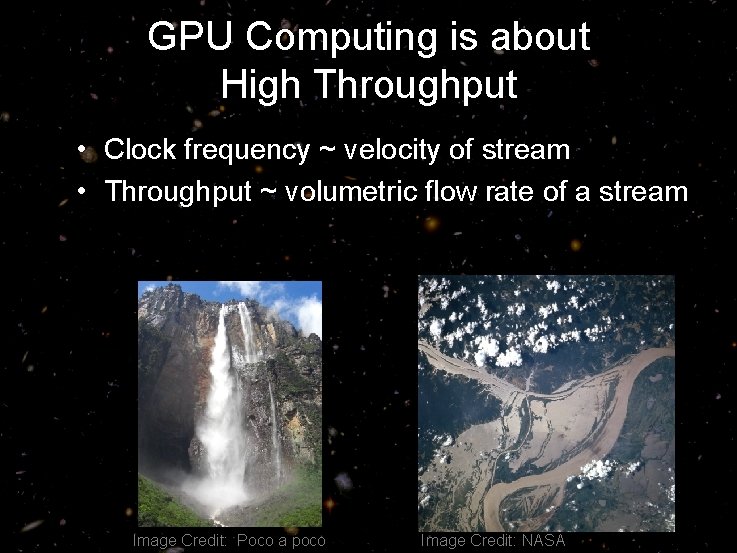

GPU Computing is about High Throughput
• Clock frequency ~ velocity of a stream
• Throughput ~ volumetric flow rate of a stream
CPU image credit: Poco a poco; GPU image credit: NASA

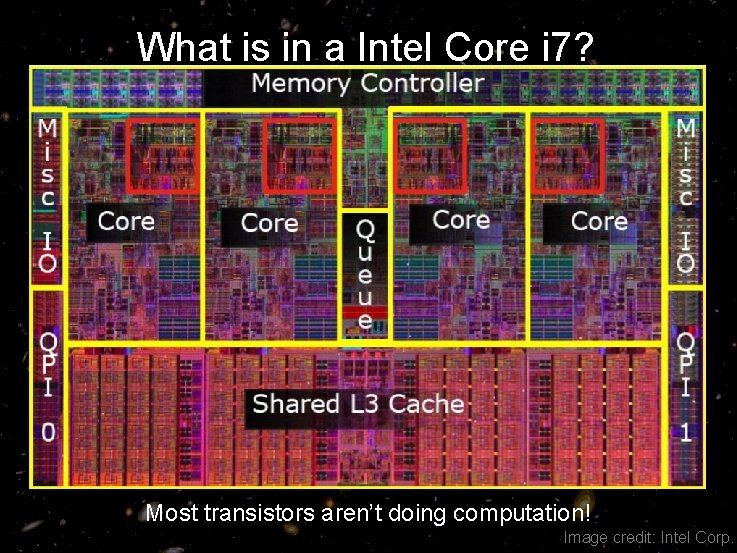

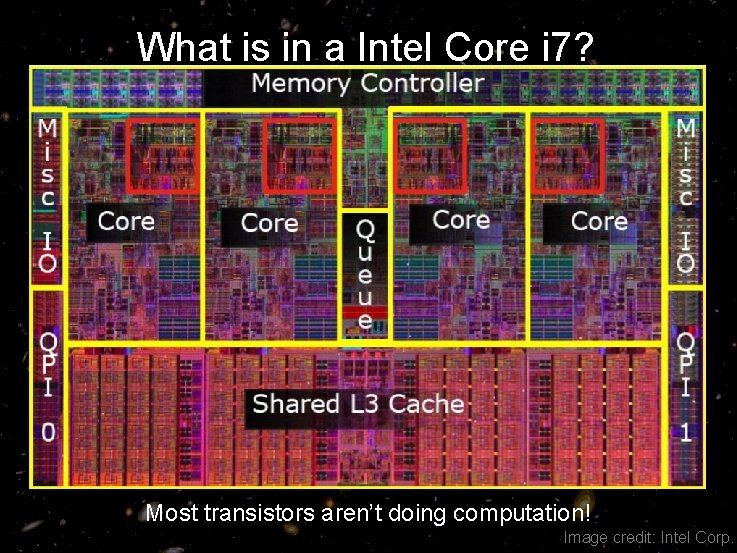

What is in an Intel Core i7?
• Most transistors aren’t doing computation!
Image credit: Intel Corp.

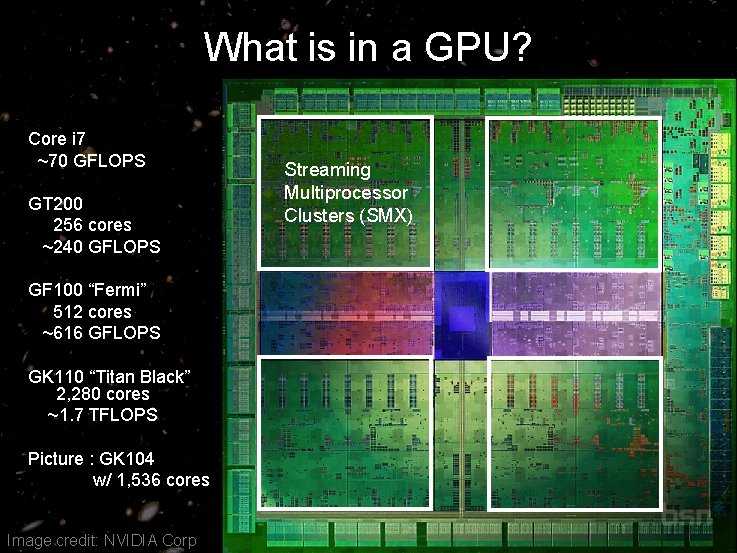

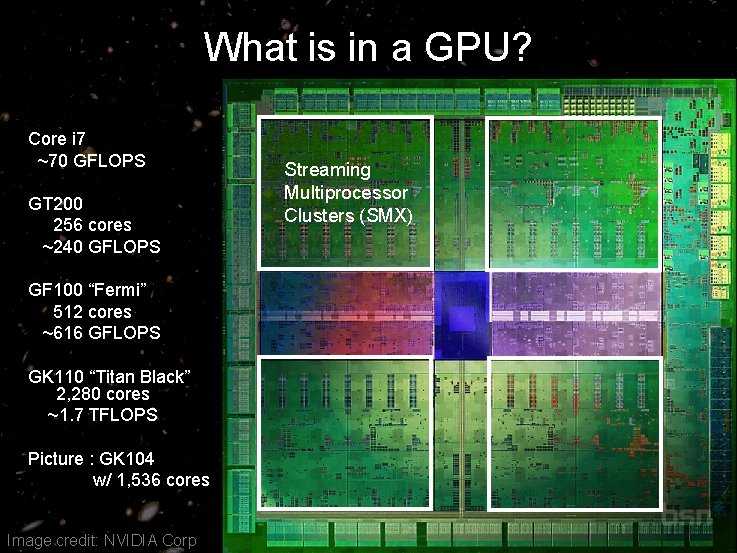

What is in a GPU?
• Core i7: ~70 GFLOPS
• GT200: 256 cores, ~240 GFLOPS
• GF100 “Fermi”: 512 cores, ~616 GFLOPS
• GK110 “Titan Black”: 2,880 cores, ~1.7 TFLOPS
Picture: GK104 with 1,536 cores, organized into streaming multiprocessor clusters (SMX)
Image credit: NVIDIA Corp.
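
Peak figures like these come from multiplying core count, clock rate, and floating-point operations per core per cycle. A minimal sketch, assuming each core retires one fused multiply-add (2 FLOPs) per cycle; the example uses the GTX 680 (a GK104 board with 1,536 cores at a 1,006 MHz base clock) in single precision. The figures quoted on the slide may refer to a different precision or clock, so this does not try to reproduce them, and sustained performance on real codes is usually well below peak.

```python
def peak_gflops(cores: int, clock_ghz: float, flops_per_cycle: int = 2) -> float:
    """Theoretical peak in GFLOPS: cores x clock (GHz) x FLOPs per core per cycle.

    flops_per_cycle=2 assumes one fused multiply-add per core per cycle.
    """
    return cores * clock_ghz * flops_per_cycle

# GK104 as shipped on the GTX 680: 1,536 cores at a 1.006 GHz base clock (single precision).
gk104 = peak_gflops(1536, 1.006)
print(f"GK104 single-precision peak: {gk104:.0f} GFLOPS")
```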

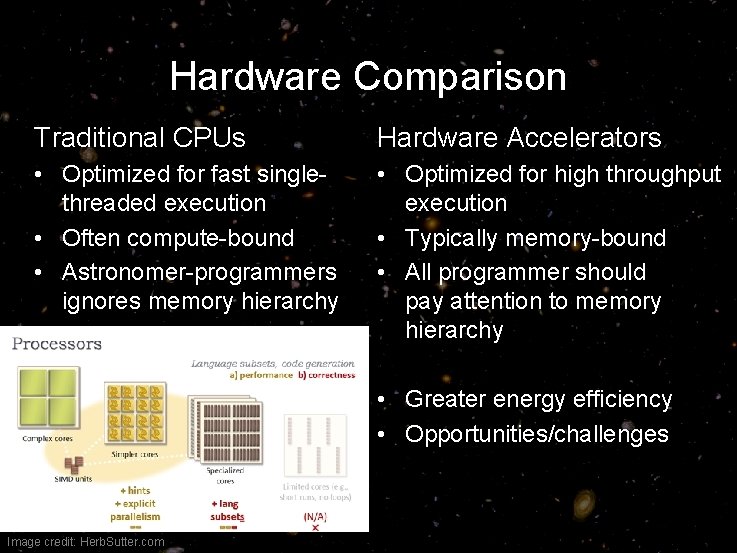

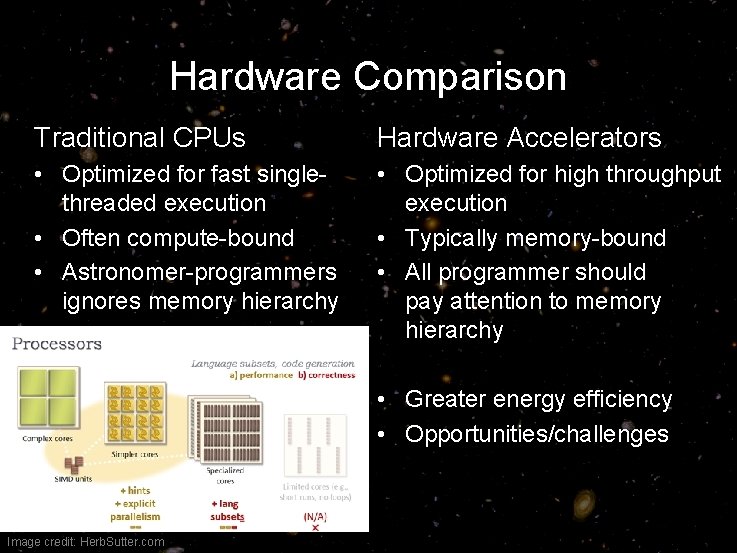

Hardware Comparison
Traditional CPUs:
• Optimized for fast single-threaded execution
• Often compute-bound
• Astronomer-programmers often ignore the memory hierarchy
Hardware accelerators:
• Optimized for high-throughput execution
• Typically memory-bound
• All programmers should pay attention to the memory hierarchy
• Greater energy efficiency
• Opportunities/challenges
Image credit: HerbSutter.com
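
One common way to reason about compute-bound versus memory-bound code is the roofline model: attainable throughput is the smaller of the peak arithmetic rate and the arithmetic intensity (FLOPs per byte moved to or from memory) times the memory bandwidth. The sketch below uses hypothetical hardware numbers (1,000 GFLOPS peak, 200 GB/s bandwidth) purely for illustration.

```python
def attainable_gflops(intensity_flop_per_byte: float,
                      peak_gflops: float,
                      bandwidth_gb_per_s: float) -> float:
    """Roofline estimate: min(peak compute, intensity x memory bandwidth)."""
    return min(peak_gflops, intensity_flop_per_byte * bandwidth_gb_per_s)

def ridge_point(peak_gflops: float, bandwidth_gb_per_s: float) -> float:
    """Intensity (FLOP/byte) above which a kernel becomes compute-bound."""
    return peak_gflops / bandwidth_gb_per_s

PEAK, BW = 1000.0, 200.0  # hypothetical accelerator: GFLOPS and GB/s

# Single-precision vector add c = a + b: 1 FLOP per 12 bytes (two 4-byte loads, one 4-byte store).
vector_add = attainable_gflops(1 / 12, PEAK, BW)
ridge = ridge_point(PEAK, BW)
```

A vector add sits far to the left of the ridge point (5 FLOP/byte with these numbers), so a faster arithmetic unit would not help it at all; only moving fewer bytes would. Kernels that do many operations per number loaded, such as all-pairs force sums, can reach the compute roof.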

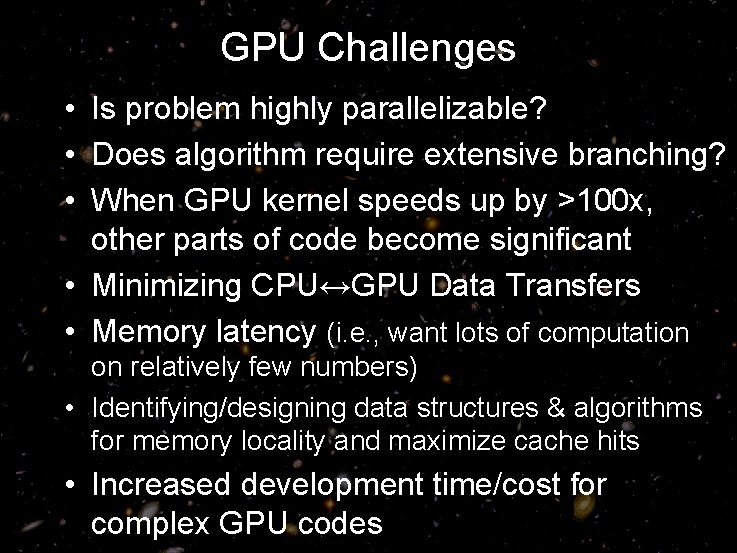

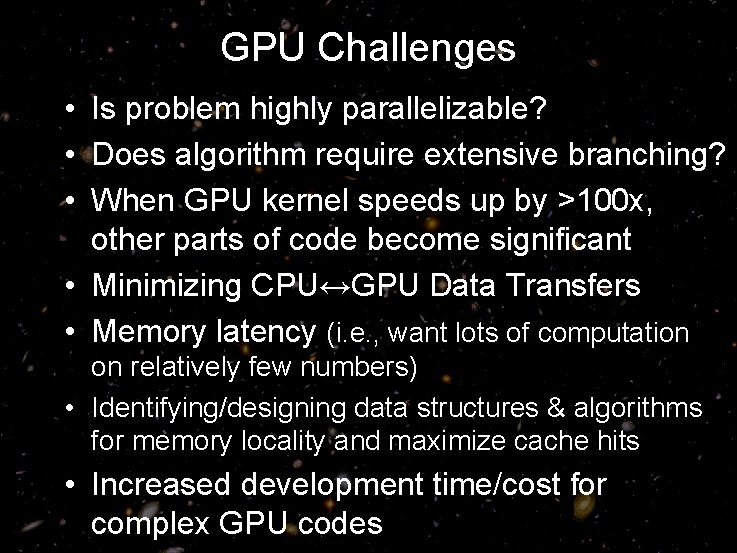

GPU Challenges
• Is the problem highly parallelizable?
• Does the algorithm require extensive branching?
• When a GPU kernel speeds up by >100×, other parts of the code become significant
• Minimizing CPU↔GPU data transfers
• Memory latency (i.e., want lots of computation on relatively few numbers)
• Identifying/designing data structures & algorithms for memory locality, to maximize cache hits
• Increased development time/cost for complex GPU codes
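
The point that a >100× kernel speed-up makes the rest of the code significant is Amdahl's law: if a fraction p of the original run time is accelerated by a factor s, the overall speed-up is 1 / ((1 − p) + p/s). A small sketch with illustrative numbers (a kernel that takes 95% of the run time is assumed to become 100× faster):

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Overall speed-up when a fraction p of run time is sped up by factor s."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    return 1.0 / ((1.0 - p) + p / s)

# Illustrative: the accelerated kernel accounts for 95% of the original run time.
overall = amdahl_speedup(0.95, 100.0)
# An infinitely fast kernel: p / inf == 0.0, so this reduces to 1 / (1 - p).
limit = amdahl_speedup(0.95, float("inf"))
```

Here the overall gain is only about 17×, and even an infinitely fast kernel is capped at 20× by the remaining 5%, which is why CPU↔GPU transfers, set-up code, and output handling deserve attention once the kernel itself is fast.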

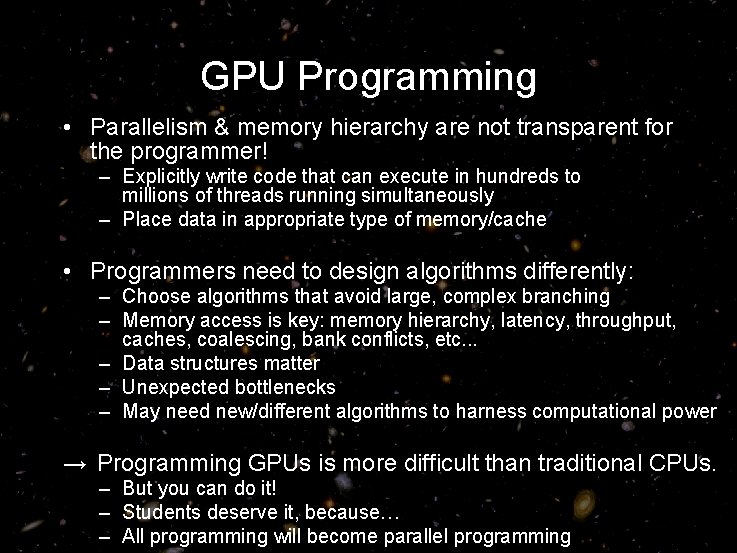

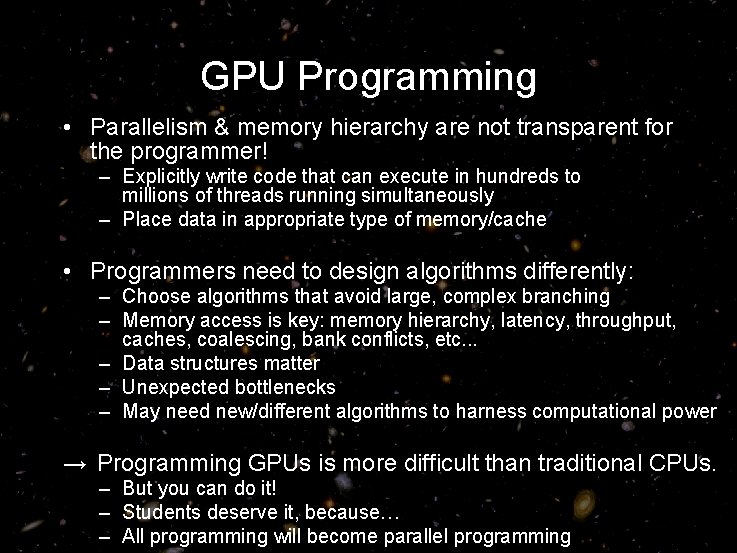

GPU Programming
• Parallelism & memory hierarchy are not transparent to the programmer!
  – Explicitly write code that can execute in hundreds to millions of threads running simultaneously
  – Place data in the appropriate type of memory/cache
• Programmers need to design algorithms differently:
  – Choose algorithms that avoid large, complex branching
  – Memory access is key: memory hierarchy, latency, throughput, caches, coalescing, bank conflicts, etc.
  – Data structures matter
  – Unexpected bottlenecks
  – May need new/different algorithms to harness computational power
→ Programming GPUs is more difficult than programming traditional CPUs.
  – But you can do it!
  – Students deserve it, because…
  – All programming will become parallel programming
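
“Data structures matter” largely because of coalescing: on NVIDIA GPUs the 32 threads of a warp issue their loads together, and the hardware combines addresses that fall in the same aligned memory segment. The simplified sketch below assumes 128-byte segments and 8-byte doubles (the real rules vary by architecture and cache configuration) and counts how many segments one warp touches when each thread reads the x-coordinate of its own body from an array-of-structures (AoS) versus a structure-of-arrays (SoA) layout. The body record layout is hypothetical.

```python
WARP_SIZE = 32        # threads per warp on NVIDIA GPUs
SEGMENT_BYTES = 128   # assumed size of one aligned memory transaction
DOUBLE_BYTES = 8
FIELDS = ("x", "y", "z", "vx", "vy", "vz", "mass")  # hypothetical body record

def aos_address(body: int, field: str) -> int:
    """Byte offset of one field when bodies are stored as an array of structs."""
    return (body * len(FIELDS) + FIELDS.index(field)) * DOUBLE_BYTES

def soa_address(body: int, field: str, n_bodies: int) -> int:
    """Byte offset when each field is stored in its own contiguous array."""
    return (FIELDS.index(field) * n_bodies + body) * DOUBLE_BYTES

def segments_touched(addresses) -> int:
    """Number of distinct aligned segments that a warp's loads fall into."""
    return len({addr // SEGMENT_BYTES for addr in addresses})

n_bodies = 1024
warp = range(WARP_SIZE)  # thread t of the warp handles body t
aos = segments_touched(aos_address(t, "x") for t in warp)
soa = segments_touched(soa_address(t, "x", n_bodies) for t in warp)
```

Under these assumptions the warp needs only 256 useful bytes. The SoA layout delivers them in 2 segments, while the AoS layout touches 14 segments (1,792 bytes), so most of the fetched bandwidth is wasted.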

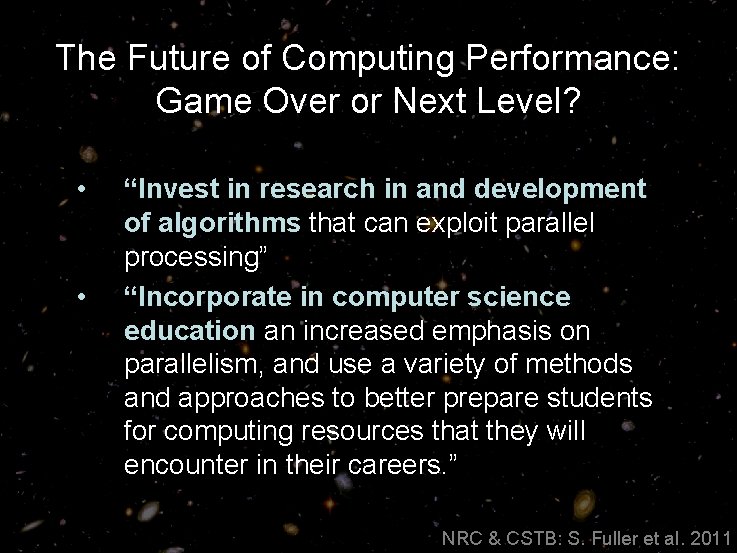

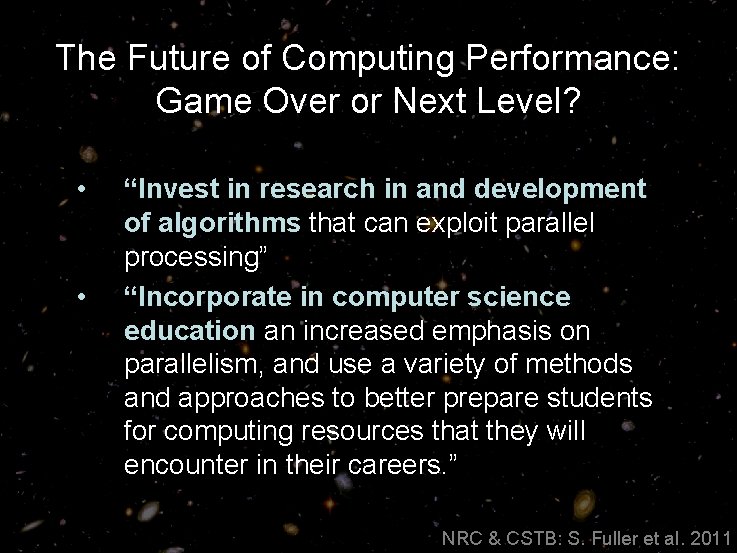

The Future of Computing Performance: Game Over or Next Level?
• “Invest in research in and development of algorithms that can exploit parallel processing”
• “Incorporate in computer science education an increased emphasis on parallelism, and use a variety of methods and approaches to better prepare students for computing resources that they will encounter in their careers.”
NRC & CSTB: S. Fuller et al. 2011

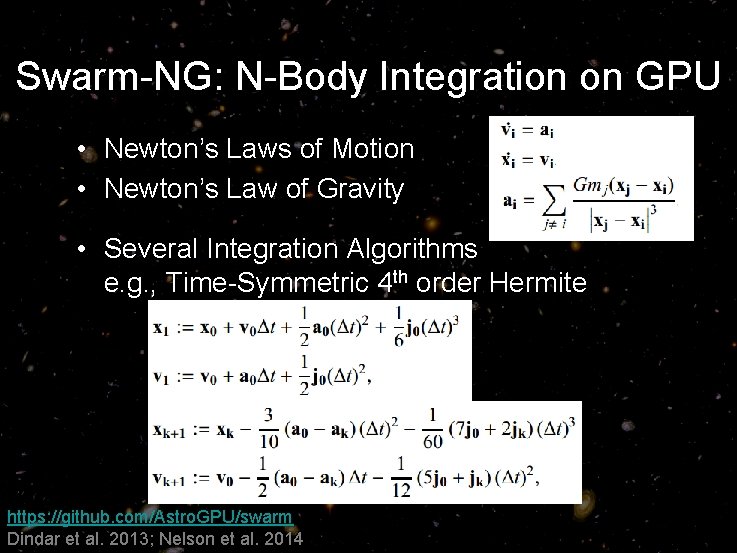

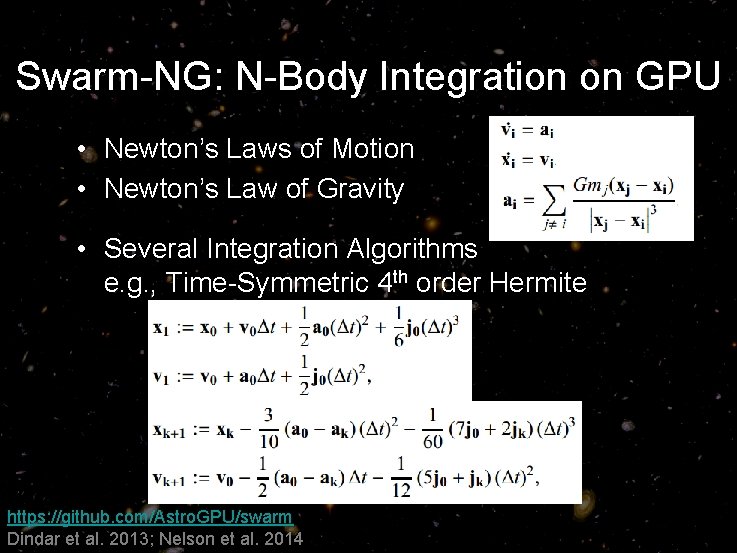

Swarm-NG: N-Body Integration on GPU
• Newton’s laws of motion
• Newton’s law of gravity
• Several integration algorithms, e.g., time-symmetric 4th-order Hermite
https://github.com/AstroGPU/swarm
Dindar et al. 2013; Nelson et al. 2014
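
For reference, the standard textbook forms of the quantities named above. With G the gravitational constant, r_ij = x_j − x_i and v_ij = v_j − v_i, body i's acceleration and jerk (the time derivative of acceleration) follow from Newton's law of gravity; a 4th-order Hermite step predicts positions and velocities from a Taylor series, re-evaluates a and j at the predicted state (subscript 1), and corrects. The time-symmetric variant iterates the corrector. This is a generic summary, not necessarily the exact formulation implemented in Swarm-NG.

```latex
\[
\mathbf{a}_i = \sum_{j \ne i} G m_j \frac{\mathbf{r}_{ij}}{|\mathbf{r}_{ij}|^{3}},
\qquad
\mathbf{j}_i = \sum_{j \ne i} G m_j \left[ \frac{\mathbf{v}_{ij}}{|\mathbf{r}_{ij}|^{3}}
  - \frac{3\,(\mathbf{r}_{ij}\cdot\mathbf{v}_{ij})\,\mathbf{r}_{ij}}{|\mathbf{r}_{ij}|^{5}} \right]
\]
\[
\mathbf{x}_p = \mathbf{x}_0 + \mathbf{v}_0\,\Delta t + \tfrac{1}{2}\mathbf{a}_0\,\Delta t^{2} + \tfrac{1}{6}\mathbf{j}_0\,\Delta t^{3},
\qquad
\mathbf{v}_p = \mathbf{v}_0 + \mathbf{a}_0\,\Delta t + \tfrac{1}{2}\mathbf{j}_0\,\Delta t^{2}
\]
\[
\mathbf{v}_1 = \mathbf{v}_0 + \tfrac{1}{2}(\mathbf{a}_0 + \mathbf{a}_1)\,\Delta t + \tfrac{1}{12}(\mathbf{j}_0 - \mathbf{j}_1)\,\Delta t^{2},
\qquad
\mathbf{x}_1 = \mathbf{x}_0 + \tfrac{1}{2}(\mathbf{v}_0 + \mathbf{v}_1)\,\Delta t + \tfrac{1}{12}(\mathbf{a}_0 - \mathbf{a}_1)\,\Delta t^{2}
\]
```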

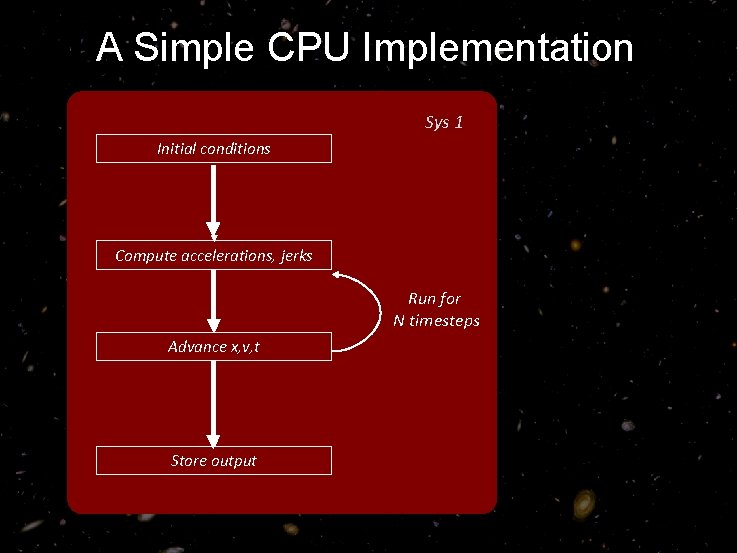

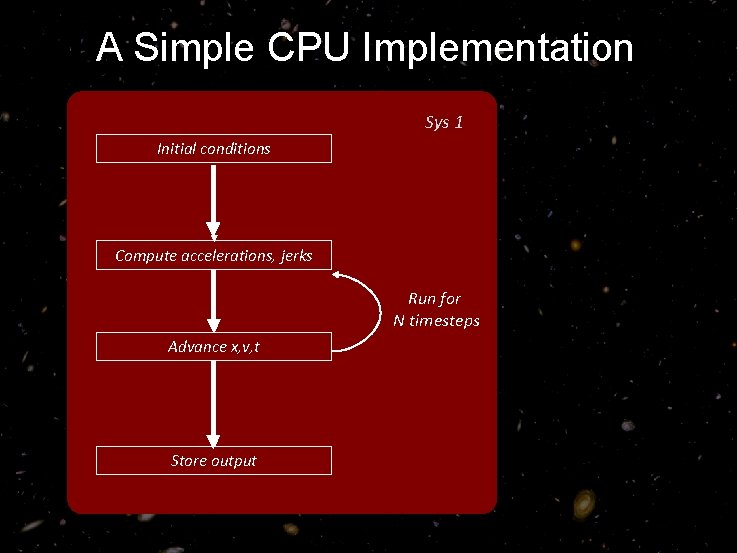

A Simple CPU Implementation
Flowchart (Sys 1): Initial conditions → Run for N timesteps [Compute accelerations, jerks → Advance x, v, t] → Store output
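
A minimal serial Python sketch of this flowchart for one system, using the non-iterated 4th-order Hermite predictor-corrector (a time-symmetric variant would repeat the correction). Units with G = 1, a fixed time step, and the function names are assumptions made for illustration; this is not Swarm-NG's code.

```python
from typing import List, Tuple

Vec = List[float]

def acc_jerk(x: List[Vec], v: List[Vec], m: List[float]) -> Tuple[List[Vec], List[Vec]]:
    """Accelerations and jerks from Newtonian gravity (G = 1 assumed), O(n^2) pair sum."""
    n = len(m)
    a = [[0.0] * 3 for _ in range(n)]
    j = [[0.0] * 3 for _ in range(n)]
    for i in range(n):
        for k in range(n):
            if i == k:
                continue
            r = [x[k][d] - x[i][d] for d in range(3)]
            w = [v[k][d] - v[i][d] for d in range(3)]
            r2 = sum(c * c for c in r)
            r3 = r2 ** 1.5
            rv = sum(r[d] * w[d] for d in range(3))
            for d in range(3):
                a[i][d] += m[k] * r[d] / r3
                j[i][d] += m[k] * (w[d] / r3 - 3.0 * rv * r[d] / (r3 * r2))
    return a, j

def hermite_step(x, v, m, dt):
    """One 4th-order Hermite predictor-corrector step; returns new (x, v)."""
    n = len(m)
    a0, j0 = acc_jerk(x, v, m)
    # Predict positions and velocities with a Taylor series.
    xp = [[x[i][d] + v[i][d] * dt + a0[i][d] * dt**2 / 2 + j0[i][d] * dt**3 / 6
           for d in range(3)] for i in range(n)]
    vp = [[v[i][d] + a0[i][d] * dt + j0[i][d] * dt**2 / 2
           for d in range(3)] for i in range(n)]
    # Re-evaluate forces at the predicted state, then correct.
    a1, j1 = acc_jerk(xp, vp, m)
    v1 = [[v[i][d] + (a0[i][d] + a1[i][d]) * dt / 2 + (j0[i][d] - j1[i][d]) * dt**2 / 12
           for d in range(3)] for i in range(n)]
    x1 = [[x[i][d] + (v[i][d] + v1[i][d]) * dt / 2 + (a0[i][d] - a1[i][d]) * dt**2 / 12
           for d in range(3)] for i in range(n)]
    return x1, v1

def energy(x, v, m):
    """Total kinetic + potential energy (G = 1)."""
    ke = sum(0.5 * m[i] * sum(c * c for c in v[i]) for i in range(len(m)))
    pe = 0.0
    for i in range(len(m)):
        for k in range(i + 1, len(m)):
            pe -= m[i] * m[k] / sum((x[k][d] - x[i][d]) ** 2 for d in range(3)) ** 0.5
    return ke + pe

def integrate(x, v, m, dt, n_steps):
    """Initial conditions -> run for n_steps (forces, advance) -> stored output."""
    output = []
    for _ in range(n_steps):
        x, v = hermite_step(x, v, m, dt)
        output.append((x, v))  # store a snapshot after each step
    return output
```

For example, an equal-mass circular binary (masses 0.5, separation 1, relative speed 1) integrated with `dt = 0.01` for 628 steps completes roughly one orbit while conserving energy to better than one part in a million.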

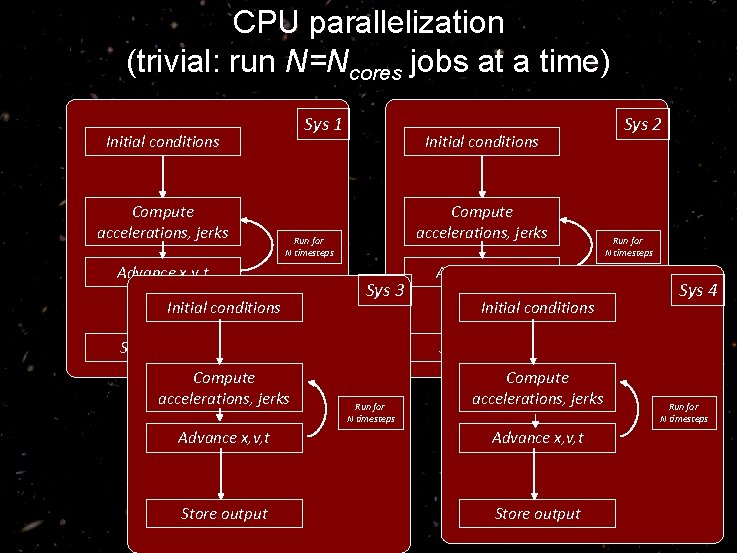

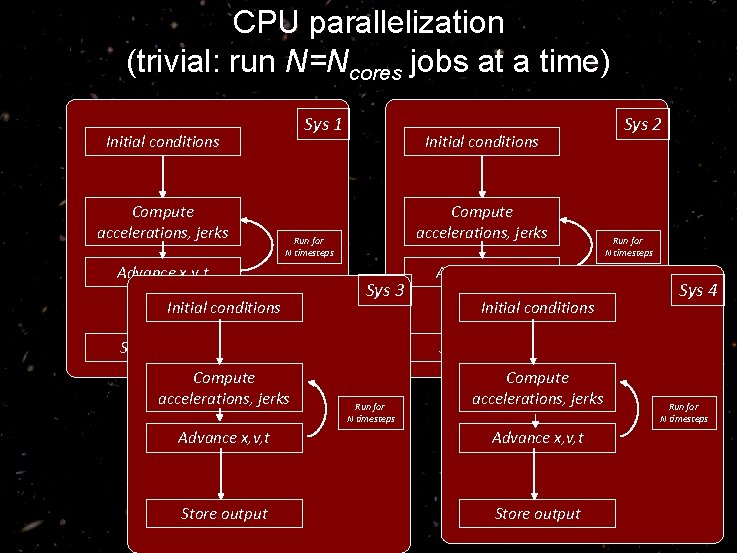

CPU Parallelization (trivial: run N = Ncores jobs at a time)
Flowchart: Sys 1, Sys 2, Sys 3, and Sys 4 each run independently: Initial conditions → Run for N timesteps [Compute accelerations, jerks → Advance x, v, t] → Store output
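
Because the systems never interact, one whole system per worker process is enough. A minimal sketch using Python's `concurrent.futures.ProcessPoolExecutor`; the workload `simulate_system` (a kick-drift-kick leapfrog Kepler orbit with GM = 1) is a hypothetical stand-in for a real integrator, and passing the mapping function in as `map_fn` lets the same code run serially for testing.

```python
from concurrent.futures import ProcessPoolExecutor
from typing import Callable, Iterable, List, Tuple

System = Tuple[float, float, float, float]  # hypothetical state: (x, y, vx, vy), GM = 1

def simulate_system(state: System, dt: float = 1e-3, n_steps: int = 1000) -> System:
    """Stand-in workload: kick-drift-kick leapfrog for one Kepler orbit."""
    x, y, vx, vy = state

    def accel(px: float, py: float) -> Tuple[float, float]:
        r3 = (px * px + py * py) ** 1.5
        return -px / r3, -py / r3

    ax, ay = accel(x, y)
    for _ in range(n_steps):
        vx += 0.5 * dt * ax  # half kick
        vy += 0.5 * dt * ay
        x += dt * vx         # drift
        y += dt * vy
        ax, ay = accel(x, y)
        vx += 0.5 * dt * ax  # half kick
        vy += 0.5 * dt * ay
    return x, y, vx, vy

def run_ensemble(systems: Iterable[System], map_fn: Callable = map) -> List[System]:
    """Run independent systems; map_fn decides serial vs parallel execution."""
    return list(map_fn(simulate_system, systems))

def run_ensemble_parallel(systems: Iterable[System], workers: int) -> List[System]:
    """One worker process per core, each integrating whole systems independently."""
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return run_ensemble(systems, map_fn=pool.map)
```

On platforms that start workers with `spawn` or `forkserver`, call `run_ensemble_parallel` from under an `if __name__ == "__main__":` guard. The speed-up approaches the number of cores as long as each system takes much longer than shipping its state to a worker.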

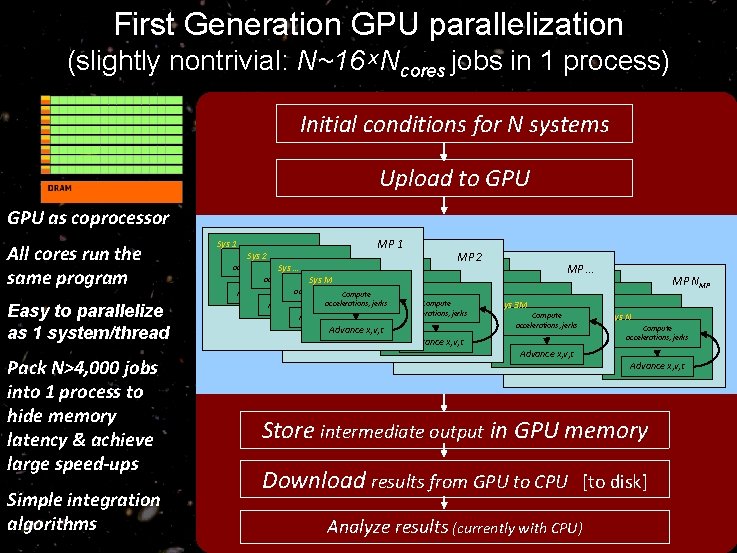

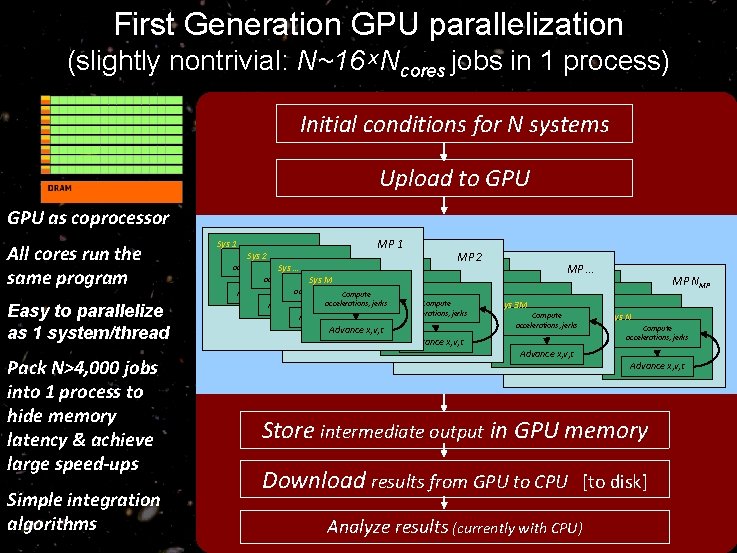

First-Generation GPU Parallelization (slightly nontrivial: N ~ 16 Ncores jobs in 1 process)
• Initial conditions for N systems
• Upload to GPU as coprocessor
• All cores run the same program
• Easy to parallelize as 1 system/thread
• Pack N > 4,000 jobs into 1 process to hide memory latency & achieve large speed-ups
• Simple integration algorithms
Flowchart: systems Sys 1 … Sys N are distributed across multiprocessors MP 1 … MP NMP, one system per thread; each thread repeats Compute accelerations, jerks → Advance x, v, t
→ Store intermediate output in GPU memory → Download results from GPU to CPU [to disk] → Analyze results (currently with CPU)
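
The “1 system/thread” mapping can be mimicked on the CPU to show its structure. In this sketch, a kernel function computes a global thread index from hypothetical block and thread indices (as in CUDA's `blockIdx.x * blockDim.x + threadIdx.x`), skips indices past the last system, and updates only its own system, stored as a structure of arrays. The Python "threads" run one after another, so this illustrates the mapping, not the performance. A leapfrog harmonic oscillator (x'' = −x) stands in for a real integrator.

```python
import math
from typing import List

def kernel(block_idx: int, block_dim: int, thread_idx: int,
           x: List[float], v: List[float], dt: float, n_steps: int) -> None:
    """Body of a hypothetical one-system-per-thread kernel."""
    tid = block_idx * block_dim + thread_idx  # global thread index
    if tid >= len(x):                         # the last block may be partly empty
        return
    xi, vi = x[tid], v[tid]                   # load this thread's system (SoA storage)
    for _ in range(n_steps):                  # every thread runs the same program
        vi -= 0.5 * dt * xi                   # leapfrog for x'' = -x: half kick
        xi += dt * vi                         # drift
        vi -= 0.5 * dt * xi                   # half kick
    x[tid], v[tid] = xi, vi                   # write back (global memory on a real GPU)

def launch(n_blocks: int, block_dim: int, *args) -> None:
    """Serial stand-in for a kernel launch over n_blocks x block_dim threads."""
    for b in range(n_blocks):
        for t in range(block_dim):
            kernel(b, block_dim, t, *args)

n_systems, block_dim = 1000, 128
n_blocks = math.ceil(n_systems / block_dim)   # enough blocks to cover every system
x = [1.0 + 0.001 * i for i in range(n_systems)]
v = [0.0] * n_systems
launch(n_blocks, block_dim, x, v, 0.01, 100)
```

Here 8 blocks of 128 threads cover the 1,000 systems, and the 24 surplus threads exit immediately. On a GPU, having many more threads than cores is what lets the hardware switch to other threads while some wait on memory.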

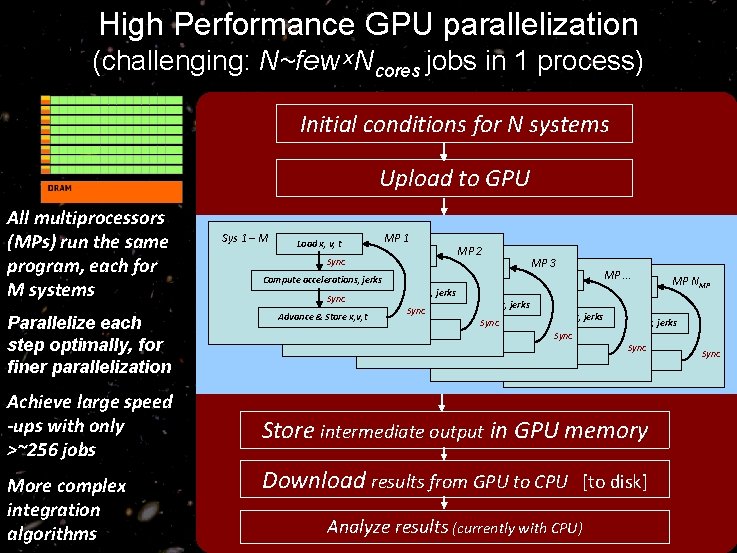

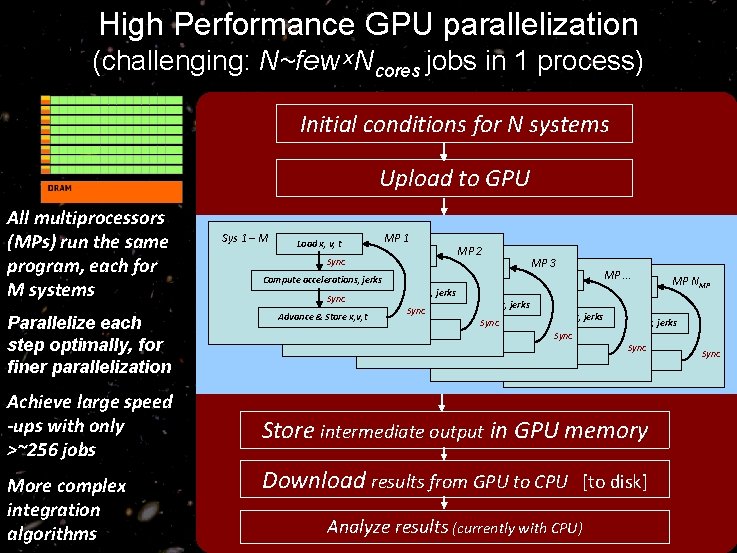

High-Performance GPU Parallelization (challenging: N ~ few Ncores jobs in 1 process)
• Initial conditions for N systems
• Upload to GPU
• All multiprocessors (MPs) run the same program, each for M systems
• Parallelize each step optimally, for finer-grained parallelization
• Achieve large speed-ups with only ≳256 jobs
• More complex integration algorithms
Flowchart: on each multiprocessor (MP 1 … MP NMP), the threads assigned to a system repeat Load x, v, t → Sync → Compute accelerations, jerks → Sync → Advance x, v, t → Sync, then store x, v, t
→ Store intermediate output in GPU memory → Download results from GPU to CPU [to disk] → Analyze results (currently with CPU)
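
In the finer-grained scheme, several threads cooperate on one system and must synchronize between phases: no thread may advance positions until every thread has finished reading them to compute accelerations. A minimal sketch of that pattern with Python's `threading.Barrier`, one thread per body, standing in for a GPU block barrier. It mirrors the structure (compute, sync, advance, sync) rather than GPU performance, and it uses a simple kick-drift (symplectic Euler) update instead of Hermite for brevity. The function name is hypothetical.

```python
import threading
from typing import List

def cooperative_integrate(x: List[List[float]], v: List[List[float]],
                          m: List[float], dt: float, n_steps: int) -> None:
    """Integrate one N-body system in place (G = 1 assumed), one thread per body."""
    n = len(m)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    barrier = threading.Barrier(n)  # plays the role of a block-wide "Sync"

    def worker(i: int) -> None:
        for _ in range(n_steps):
            # Phase 1: compute the acceleration on body i from current positions.
            a = [0.0, 0.0, 0.0]
            for k in range(n):
                if k != i:
                    r = [x[k][d] - x[i][d] for d in range(3)]
                    r3 = sum(c * c for c in r) ** 1.5
                    for d in range(3):
                        a[d] += m[k] * r[d] / r3
            acc[i] = a
            barrier.wait()  # Sync: every thread has finished reading x
            # Phase 2: advance body i (kick, then drift).
            for d in range(3):
                v[i][d] += dt * acc[i][d]
                x[i][d] += dt * v[i][d]
            barrier.wait()  # Sync: all positions updated before the next step

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
```

Removing either `barrier.wait()` call would let a fast thread read a half-updated set of positions, which is exactly the kind of race the Sync steps in the flowchart prevent.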

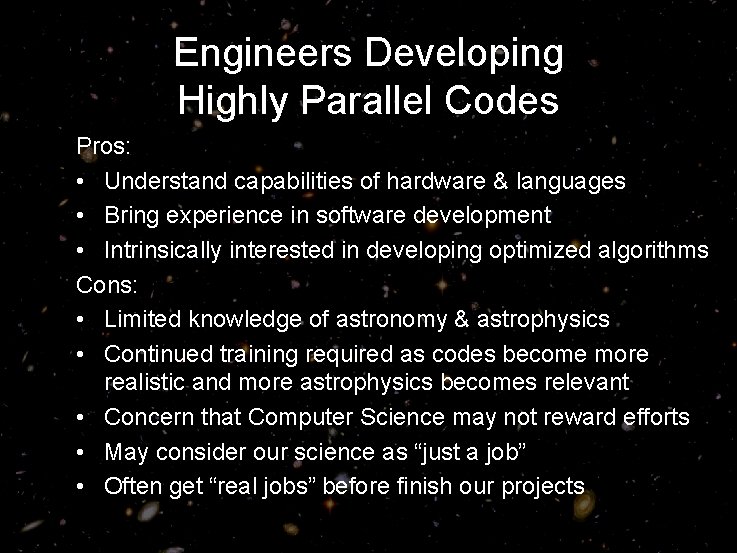

Engineers Developing Highly Parallel Codes
Pros:
• Understand capabilities of hardware & languages
• Bring experience in software development
• Intrinsically interested in developing optimized algorithms
Cons:
• Limited knowledge of astronomy & astrophysics
• Continued training required as codes become more realistic and more astrophysics becomes relevant
• Concern that computer science may not reward their efforts
• May consider our science as “just a job”
• Often get “real jobs” before finishing our projects

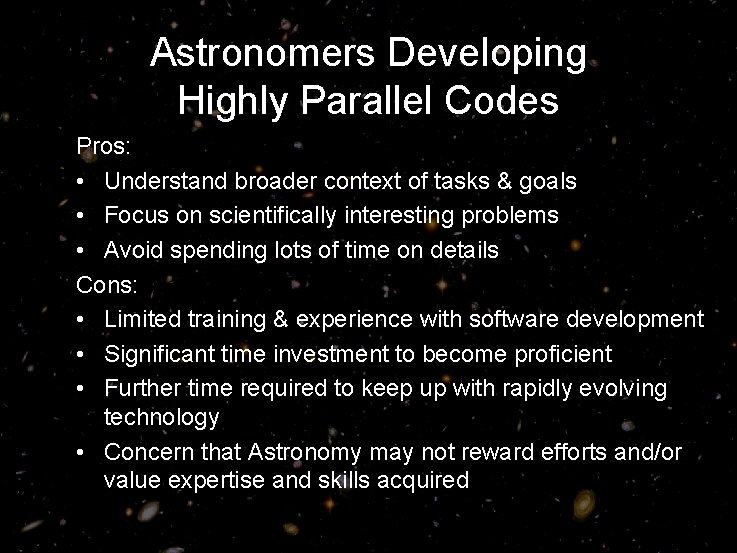

Astronomers Developing Highly Parallel Codes
Pros:
• Understand broader context of tasks & goals
• Focus on scientifically interesting problems
• Avoid spending lots of time on details
Cons:
• Limited training & experience with software development
• Significant time investment to become proficient
• Further time required to keep up with rapidly evolving technology
• Concern that astronomy may not reward their efforts and/or value the expertise and skills acquired

GPU Applications to Astrophysics

Questions?
Illustration credit: Lynette Cook