Accelerated Erasure Coding: The New Frontier of Software Defined Storage

Dineshkumar Bhaskaran, Aricent Technologies

2018 Storage Developer Conference India © All Rights Reserved.

Introduction to Data Resiliency

- Traditional RAID and mirroring
  - Multiple disks are used for data placement, improving performance and resiliency
  - High storage overhead; long rebuild times
  - Difficult to recover from correlated disk failures
- Erasure coding
  - Erasure coding is a data protection method in which data is encoded into data blocks and parity blocks, which are then stored across locations or storage nodes
  - Compute intensive
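The storage-overhead trade-off can be made concrete with a little arithmetic. The sketch below compares 3-way mirroring with an erasure code that stores m parity blocks for every k data blocks; both configurations shown tolerate the loss of any two copies or blocks. The function names are illustrative only.

```python
def replication_overhead(copies: int) -> float:
    """Extra raw capacity per byte of user data for n-way mirroring."""
    return float(copies - 1)


def erasure_overhead(k: int, m: int) -> float:
    """Extra raw capacity per byte of user data for a code with k data and m parity blocks."""
    return m / k


# 3-way mirroring survives two lost copies; a (4, 2) MDS code survives two lost blocks.
print(f"3-way mirroring: {replication_overhead(3):.0%} extra capacity")  # 200%
print(f"(4, 2) erasure:  {erasure_overhead(4, 2):.0%} extra capacity")   # 50%
```

The capacity saving is paid for in computation on every write and every rebuild, which is the "compute intensive" cost noted above.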

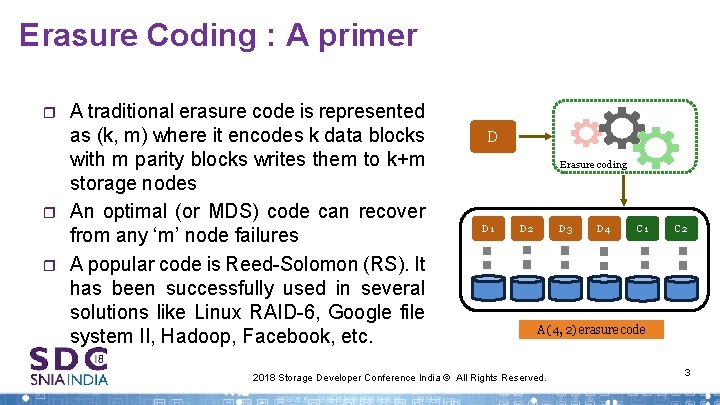

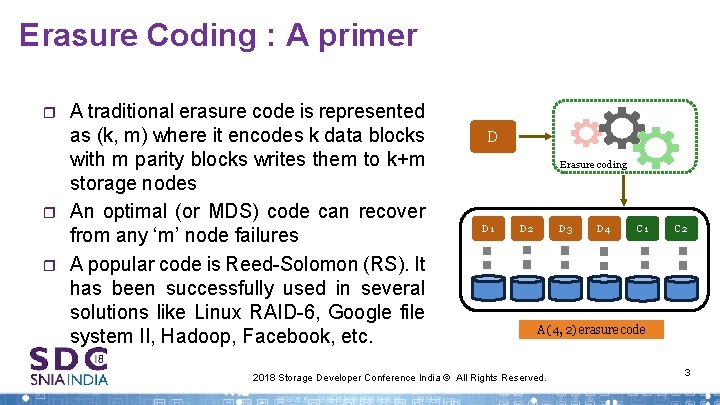

Erasure Coding: A primer

- A traditional erasure code is represented as (k, m): it encodes k data blocks into m parity blocks and writes all k+m blocks to k+m storage nodes.
- An optimal, or MDS (maximum distance separable), code can recover from any m node failures.
- A popular code is Reed-Solomon (RS). It has been used successfully in several systems, such as Linux RAID-6, Google File System II, Hadoop, and Facebook.

Figure: A (4, 2) erasure code. Data D passes through erasure coding to produce data chunks D1 to D4 and parity chunks C1 and C2.
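To illustrate the (k, m) and "any m failures" properties, here is a minimal sketch of a systematic Reed-Solomon-style (4, 2) code over GF(2^8). It assumes the reduction polynomial 0x11D and builds the parity rows from a Cauchy matrix, which is one standard way to obtain an MDS generator; it is not necessarily how Jerasure, ISA-L or CEPH construct theirs. All function names are hypothetical.

```python
# GF(2^8) log/antilog tables for the primitive polynomial x^8 + x^4 + x^3 + x^2 + 1 (0x11D).
EXP, LOG = [0] * 512, [0] * 256
_x = 1
for _i in range(255):
    EXP[_i], LOG[_x] = _x, _i
    _x <<= 1
    if _x & 0x100:
        _x ^= 0x11D
for _i in range(255, 512):
    EXP[_i] = EXP[_i - 255]


def gf_mul(a: int, b: int) -> int:
    return 0 if a == 0 or b == 0 else EXP[LOG[a] + LOG[b]]


def gf_inv(a: int) -> int:
    return EXP[255 - LOG[a]]


def generator(k: int, m: int) -> list[list[int]]:
    """(k+m) x k systematic generator: identity on top, an m x k Cauchy matrix below."""
    identity = [[int(i == j) for j in range(k)] for i in range(k)]
    # Cauchy entries 1 / (x_i + y_j) with x_i = k + i, y_j = j: all distinct, so never 1/0.
    cauchy = [[gf_inv((k + i) ^ j) for j in range(k)] for i in range(m)]
    return identity + cauchy


def combine(coeffs: list[int], chunks: list[bytes]) -> bytes:
    """Byte-wise sum of coeffs[j] * chunks[j] over GF(2^8); addition is XOR."""
    out = bytearray(len(chunks[0]))
    for c, chunk in zip(coeffs, chunks):
        for i, byte in enumerate(chunk):
            out[i] ^= gf_mul(c, byte)
    return bytes(out)


def gf_mat_inv(mat: list[list[int]]) -> list[list[int]]:
    """Gauss-Jordan inversion over GF(2^8)."""
    n = len(mat)
    a = [row[:] + [int(i == j) for j in range(n)] for i, row in enumerate(mat)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if a[r][col])
        a[col], a[pivot] = a[pivot], a[col]
        scale = gf_inv(a[col][col])
        a[col] = [gf_mul(scale, v) for v in a[col]]
        for r in range(n):
            if r != col and a[r][col]:
                f = a[r][col]
                a[r] = [v ^ gf_mul(f, p) for v, p in zip(a[r], a[col])]
    return [row[n:] for row in a]


def encode(data: list[bytes], m: int) -> list[bytes]:
    """Return the k data chunks followed by the m parity chunks."""
    return [combine(row, data) for row in generator(len(data), m)]


def decode(available: dict[int, bytes], k: int, m: int) -> list[bytes]:
    """Rebuild the k data chunks from any k surviving chunks given as {index: chunk}."""
    g = generator(k, m)
    idx = sorted(available)[:k]
    inv = gf_mat_inv([g[i] for i in idx])
    return [combine(row, [available[i] for i in idx]) for row in inv]


chunks = encode([b"D1D1", b"D2D2", b"D3D3", b"D4D4"], m=2)           # D1..D4, C1, C2
survivors = {i: c for i, c in enumerate(chunks) if i not in (0, 4)}  # lose D1 and C1
assert decode(survivors, k=4, m=2) == chunks[:4]
```

Because every 4 x 4 submatrix of the generator is invertible, any two of the six chunks can be lost; the decoder simply inverts the rows belonging to the survivors.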

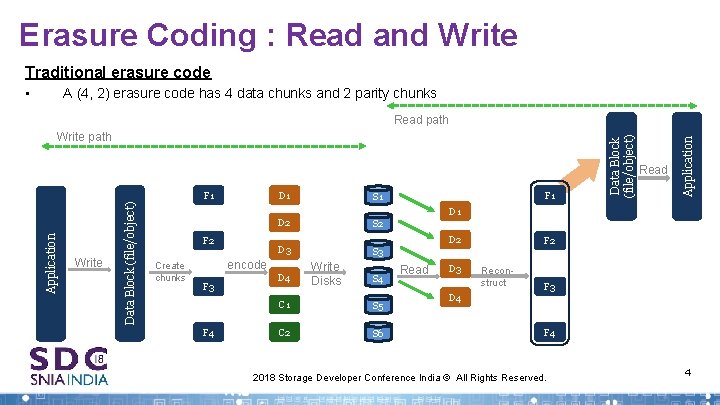

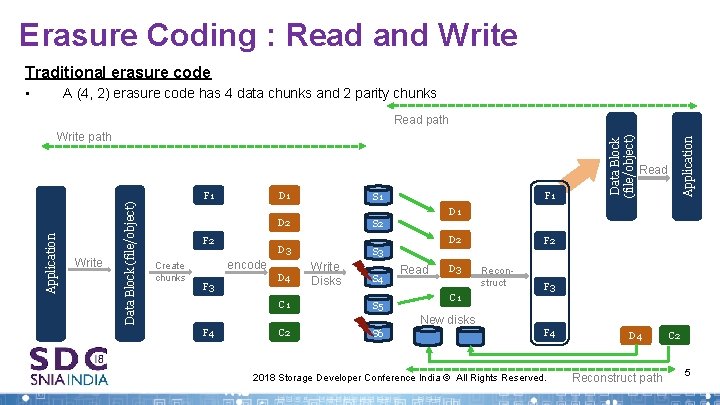

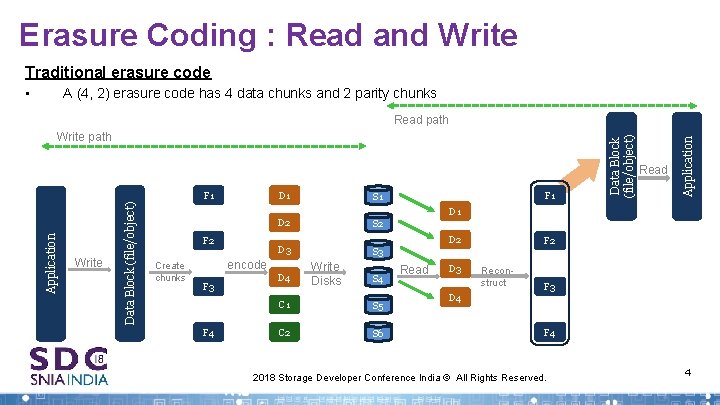

Erasure Coding: Read and Write (Traditional Erasure Code)

- A (4, 2) erasure code has 4 data chunks and 2 parity chunks.

Figure: Write path: the application's data block (file/object) is split into chunks F1 to F4, which are encoded into data chunks D1 to D4 and parity chunks C1 and C2 and written to disks S1 to S6. Read path: D1 to D4 are read from the disks and reconstructed into F1 to F4, giving back the data block.
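The "create chunks", "write disks" and failure-free read steps of the figure can be sketched as follows. This is a simplified illustration: the function names are hypothetical, the parity chunks are zero placeholders because the encoder is not shown, and the original block size is assumed to be kept as metadata so the padding can be stripped on read.

```python
import math


def split_into_chunks(block: bytes, k: int) -> list[bytes]:
    """Write path, 'create chunks': cut a block into k equal chunks, zero-padding the tail."""
    size = max(1, math.ceil(len(block) / k))
    padded = block.ljust(size * k, b"\0")
    return [padded[i * size:(i + 1) * size] for i in range(k)]


def place(chunks: list[bytes], nodes: list[str]) -> dict[str, bytes]:
    """Write path, 'write disks': chunk i is stored on node i (data chunks first, then parity)."""
    if len(chunks) != len(nodes):
        raise ValueError("need exactly one storage node per chunk")
    return dict(zip(nodes, chunks))


def read_block(store: dict[str, bytes], data_nodes: list[str], size: int) -> bytes:
    """Read path with no failures: concatenate the k data chunks and drop the padding."""
    return b"".join(store[node] for node in data_nodes)[:size]


block = b"hello erasure coding"          # 20 bytes -> four 5-byte chunks
data = split_into_chunks(block, 4)       # D1..D4
parity = [bytes(len(data[0]))] * 2       # placeholders for C1, C2 (encoder not shown)
store = place(data + parity, ["S1", "S2", "S3", "S4", "S5", "S6"])
assert read_block(store, ["S1", "S2", "S3", "S4"], len(block)) == block
```

With a systematic code, a read without failures touches only the data chunks; parity is consulted only on the reconstruct path.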

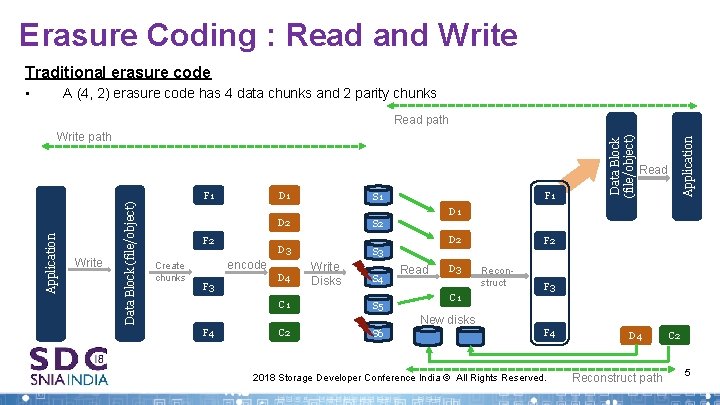

Erasure Coding: Read and Write (Traditional Erasure Code)

- A (4, 2) erasure code has 4 data chunks and 2 parity chunks.

Figure: The same write and read paths, plus a reconstruct path: after a disk failure, surviving data and parity chunks are read, the missing chunks are reconstructed, and the rebuilt chunks are written to new disks.
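Before the reconstruct path can decode anything, it has to choose which k surviving chunks to read. A minimal planning helper is sketched below; it is hypothetical, assumes chunk indices 0..k-1 are data and k..k+m-1 are parity, and prefers surviving data chunks because they need no decoding.

```python
def chunk_name(i: int, k: int) -> str:
    """Chunks 0..k-1 are data (D1..Dk); chunks k..k+m-1 are parity (C1..Cm)."""
    return f"D{i + 1}" if i < k else f"C{i - k + 1}"


def plan_reconstruct(k: int, m: int, failed: set[int]) -> list[int]:
    """Choose the k surviving chunks to read before decoding a (k, m) MDS stripe.

    Surviving data chunks come first in index order, so parity is read only
    when it is needed to make up the count of k.
    """
    if len(failed) > m:
        raise ValueError(f"{len(failed)} failures exceed the {m} that a ({k}, {m}) code tolerates")
    survivors = [i for i in range(k + m) if i not in failed]
    return survivors[:k]


reads = plan_reconstruct(4, 2, failed={0})   # the disk holding D1 is lost
print([chunk_name(i, 4) for i in reads])     # ['D2', 'D3', 'D4', 'C1']
```

The decoder then inverts the generator-matrix rows of these k chunks, and only the lost chunks are written to the new disks.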

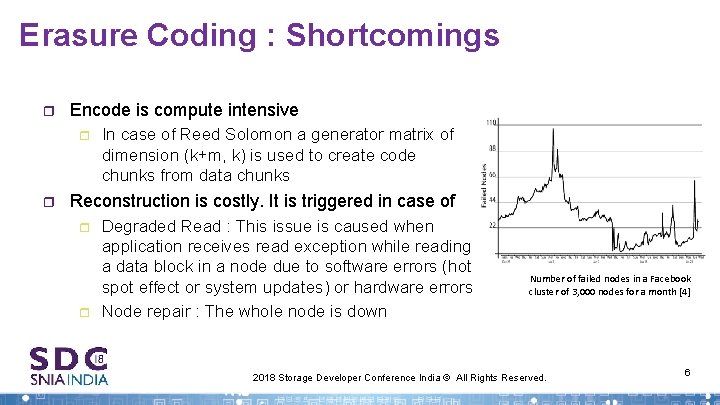

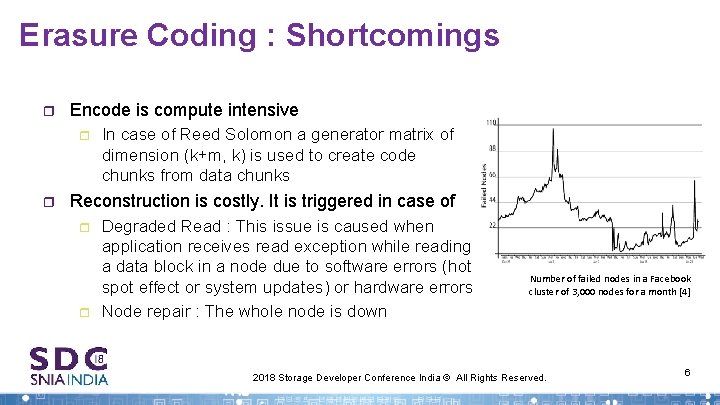

Erasure Coding: Shortcomings

- Encoding is compute intensive
  - For Reed-Solomon, a generator matrix of dimension (k+m) × k is used to compute code chunks from data chunks
- Reconstruction is costly. It is triggered by:
  - Degraded read: the application receives a read exception while reading a data block from a node, caused by software errors (hot-spot effects or system updates) or hardware errors
  - Node repair: the whole node is down

Figure: Number of failed nodes over a month in a Facebook cluster of 3,000 nodes [4].

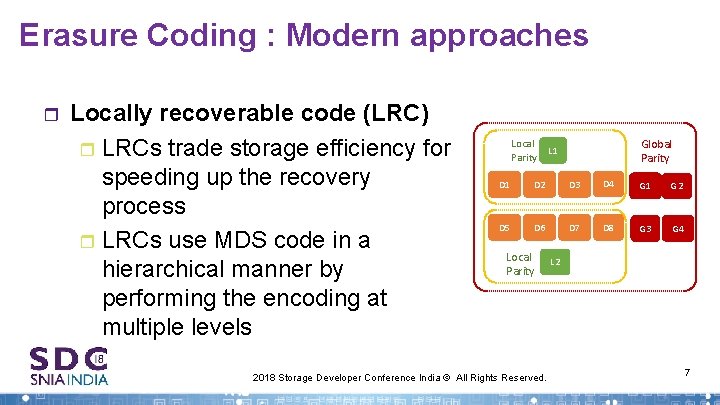

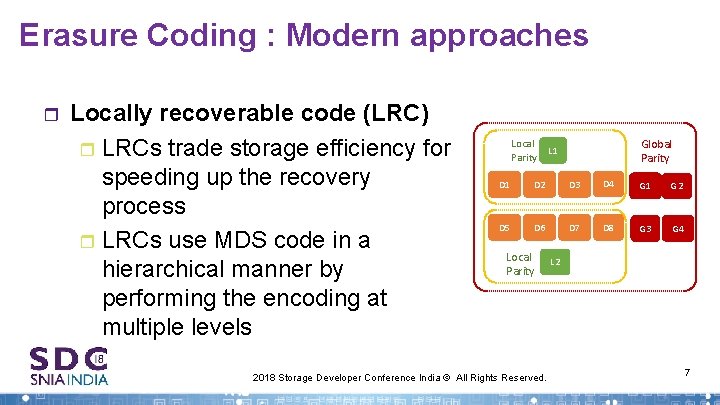

Erasure Coding: Modern approaches

- Locally recoverable code (LRC)
  - LRCs trade storage efficiency for faster recovery
  - LRCs apply MDS codes hierarchically, performing the encoding at multiple levels

Figure: LRC layout with data chunks D1 to D8, local parities L1 and L2, and global parities G1 to G4.
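The recovery speed-up of an LRC comes from repairing a chunk inside its local group. The sketch below assumes, for illustration only, that each local parity is the plain XOR of a group of four data chunks (L1 over D1 to D4, L2 over D5 to D8, as in the figure); the global parities G1 to G4 would come from an MDS code such as RS and are omitted. Function names are hypothetical.

```python
from functools import reduce


def xor_chunks(chunks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length chunks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


def local_parities(data: list[bytes], group_size: int) -> list[bytes]:
    """One XOR local parity per group: L1 covers D1..D4 and L2 covers D5..D8 for group_size 4."""
    return [xor_chunks(data[i:i + group_size]) for i in range(0, len(data), group_size)]


def local_repair(data: list, local: list[bytes], lost: int, group_size: int) -> bytes:
    """Rebuild data chunk `lost` from the rest of its group plus that group's local parity."""
    g = lost // group_size
    helpers = [data[i] for i in range(g * group_size, (g + 1) * group_size) if i != lost]
    return xor_chunks(helpers + [local[g]])  # group_size reads instead of k


data = [bytes([i] * 4) for i in range(1, 9)]   # D1..D8
local = local_parities(data, 4)                # L1, L2
damaged = data[:2] + [None] + data[3:]         # D3 is lost and never read
assert local_repair(damaged, local, lost=2, group_size=4) == data[2]
```

Repairing D3 reads four chunks (D1, D2, D4, L1) instead of the eight a flat (8, m) RS code would need; the extra local parities are the storage efficiency being traded away.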

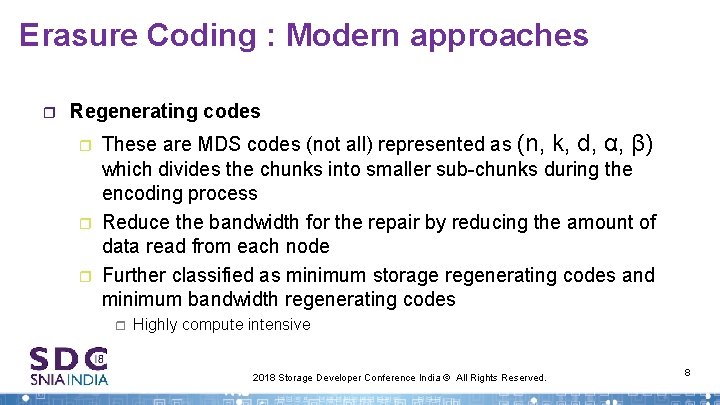

Erasure Coding: Modern approaches

- Regenerating codes
  - Represented as (n, k, d, α, β): data is stored on n nodes, each holding α symbols, any k of which suffice to recover the data, and a failed node is repaired by downloading β symbols from each of d helper nodes. Many, though not all, regenerating codes are MDS. Encoding divides each chunk into smaller sub-chunks
  - They reduce repair bandwidth by reducing the amount of data read from each node
  - They are further classified as minimum storage regenerating (MSR) codes and minimum bandwidth regenerating (MBR) codes
- Highly compute intensive
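The bandwidth saving follows from the standard cut-set analysis: at the MSR point each helper sends β = α / (d - k + 1) symbols, so a repair downloads dβ symbols instead of the kα an RS-style repair reads. The small calculation below uses hypothetical function names and the example n = 6, k = 4, d = 5 (all surviving nodes help) with α = 8 sub-chunks per node.

```python
from fractions import Fraction


def rs_repair_traffic(k: int, alpha: int) -> int:
    """Classic MDS repair (e.g. RS): download k whole chunks of alpha symbols each."""
    return k * alpha


def msr_repair_traffic(k: int, d: int, alpha: int) -> Fraction:
    """MSR point: each of d helpers sends beta = alpha / (d - k + 1) symbols."""
    if d < k:
        raise ValueError("need at least k helpers")
    beta = Fraction(alpha, d - k + 1)
    return d * beta


print(rs_repair_traffic(4, 8))       # 32 sub-chunks
print(msr_repair_traffic(4, 5, 8))   # 20 sub-chunks, i.e. 5/8 of the RS traffic
```

The saving grows as d - k + 1 grows, at the cost of splitting each chunk into many sub-chunks, which is one reason these codes are compute intensive.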

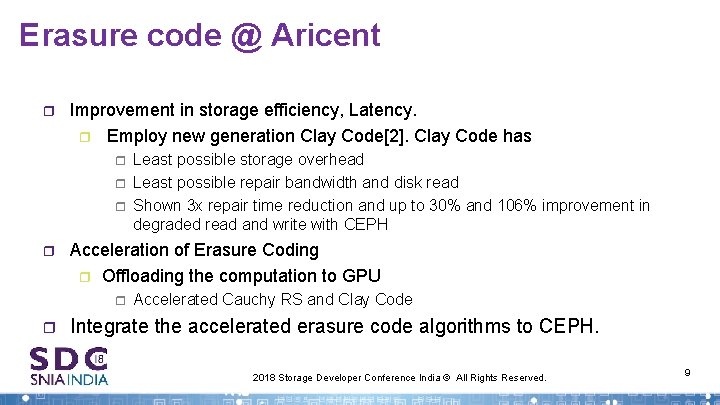

Erasure code @ Aricent

- Improvement in storage efficiency and latency
- Employ the new-generation Clay Code [2]. Clay Code has:
  - The least possible storage overhead
  - The least possible repair bandwidth and disk read
  - Shown a 3× reduction in repair time and up to 30% and 106% improvement in degraded read and write with CEPH
- Acceleration of erasure coding
  - Offloading the computation to the GPU
  - Accelerated Cauchy RS and Clay Code
  - Integrating the accelerated erasure code algorithms into CEPH

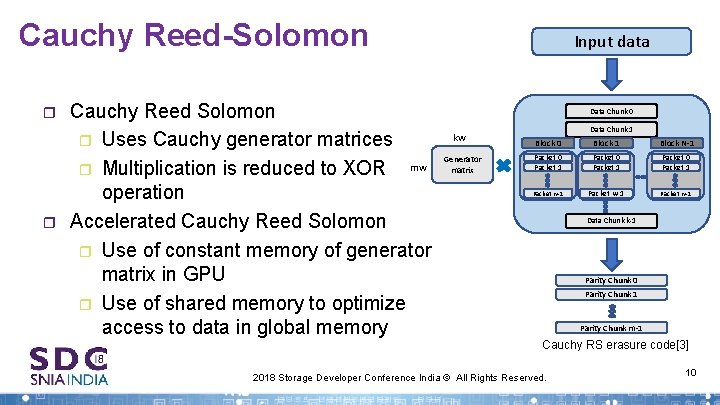

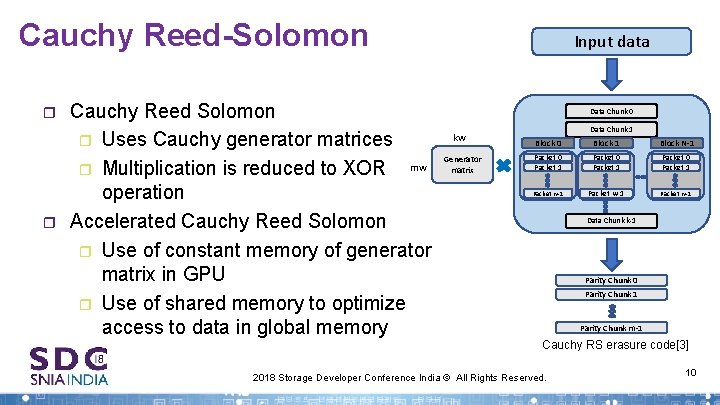

Cauchy Reed-Solomon

- Cauchy Reed-Solomon
  - Uses Cauchy generator matrices
  - Multiplication is reduced to XOR operations
- Accelerated Cauchy Reed-Solomon
  - The generator matrix is kept in GPU constant memory
  - Shared memory is used to optimize access to data in global memory

Figure: Cauchy RS erasure code [3]. Labels: input data (data chunks 0 to k-1), blocks 0 to N-1, packets 0 to w-1, an mw × kw generator matrix, and parity chunks 0 to m-1.
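The "multiplication is reduced to XOR" point can be checked on a single generator element. In Cauchy RS, each element c of the m × k Cauchy matrix is expanded into a w × w binary matrix, which yields the mw × kw bit matrix in the figure; a chunk is then handled as w packets, one per bit position, so multiplying by c becomes XORs of whole packets. The sketch assumes w = 8 and the polynomial 0x11D (Jerasure and G-CRS choose their own parameters), and packs each packet into a Python int; all names are hypothetical.

```python
W = 8
POLY = 0x11D  # x^8 + x^4 + x^3 + x^2 + 1 (assumed reduction polynomial)


def gf_mul(a: int, b: int) -> int:
    """Reference shift-and-add multiplication in GF(2^8)."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        b >>= 1
        a <<= 1
        if a & 0x100:
            a ^= POLY
    return result


def bitmatrix(c: int) -> list[list[int]]:
    """w x w matrix over GF(2) whose column j is c * x^j, so c*v equals M times the bits of v."""
    cols = [gf_mul(c, 1 << j) for j in range(W)]
    return [[(cols[j] >> r) & 1 for j in range(W)] for r in range(W)]


def to_packets(words: list[int]) -> list[int]:
    """Bit-slice: bit t of packet j is bit j of words[t]."""
    return [sum(((v >> j) & 1) << t for t, v in enumerate(words)) for j in range(W)]


def from_packets(packets: list[int], count: int) -> list[int]:
    """Inverse of to_packets."""
    return [sum(((packets[j] >> t) & 1) << j for j in range(W)) for t in range(count)]


def mul_packets(c: int, packets: list[int]) -> list[int]:
    """Multiply every word by c using only XORs of whole packets."""
    m = bitmatrix(c)
    out = []
    for r in range(W):
        acc = 0
        for j in range(W):
            if m[r][j]:
                acc ^= packets[j]  # one XOR processes bit j of all words at once
        out.append(acc)
    return out


words = list(range(256))                 # 256 eight-bit words of one data chunk
packets = to_packets(words)              # w = 8 packets, one per bit position
scaled = from_packets(mul_packets(0x53, packets), len(words))
assert scaled == [gf_mul(0x53, v) for v in words]
```

On a GPU, each packet XOR maps naturally onto wide, coalesced memory operations, which is what the constant and shared memory optimizations above aim to exploit.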

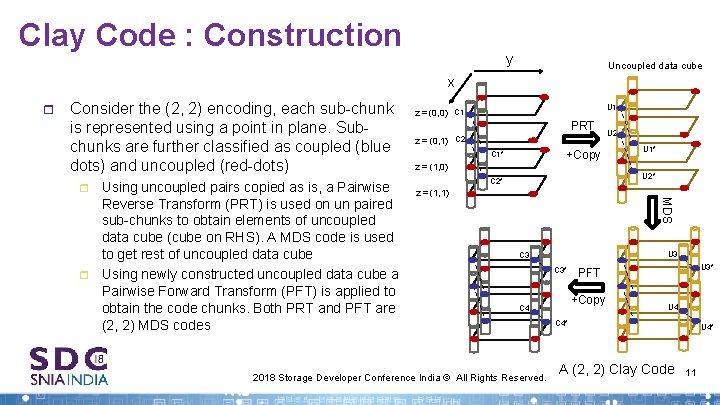

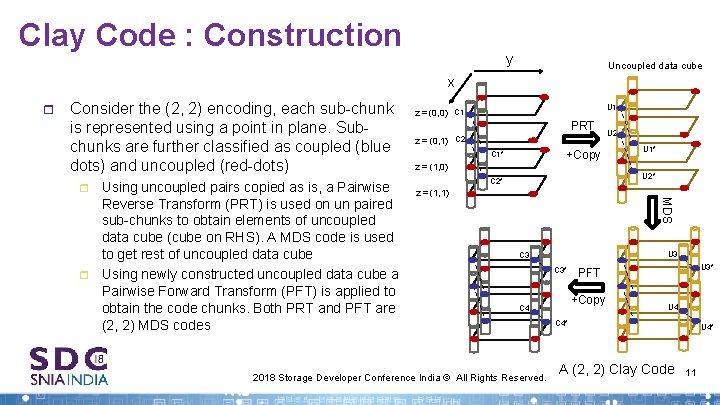

Clay Code: Construction

- Consider the (2, 2) encoding. Each sub-chunk is represented as a point in one of the planes of the data cube. Sub-chunks are further classified as coupled (blue dots) and uncoupled (red dots).
- Uncoupled sub-chunks are copied as is, and a Pairwise Reverse Transform (PRT) is applied to each pair of coupled sub-chunks to obtain elements of the uncoupled data cube (the cube on the right). An MDS code is used to compute the rest of the uncoupled data cube.
- From the newly constructed uncoupled data cube, a Pairwise Forward Transform (PFT) is applied to obtain the code chunks. Both PRT and PFT are (2, 2) MDS codes.

Figure: A (2, 2) Clay Code. The coupled data cube (sub-chunks C1 to C4 and C1* to C4*, axes x and y, planes z = (0, 0), (0, 1), (1, 0), (1, 1)) is mapped by PRT + copy to the uncoupled data cube (U1 to U4 and U1* to U4*), which is completed by MDS and mapped back by PFT + copy.
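Which sub-chunks are coupled can be enumerated directly. The helper below follows our reading of the indexing in the Clay Codes paper [2]: nodes are labelled (x, y) with x in range(q) and y in range(t), the α = q^t planes are t-tuples z, the sub-chunk of node (x, y) in plane z is uncoupled when z[y] == x, and otherwise it is coupled with node (z[y], y) in the plane where z[y] is replaced by x. For the (2, 2) example, q = t = 2, giving the four planes in the figure. It is a hypothetical sketch, not the CEPH Clay plugin code.

```python
from itertools import product


def clay_layout(q: int, t: int) -> dict:
    """Map each sub-chunk (x, y, z) to its coupled partner, or None if it is uncoupled."""
    layout = {}
    for z in product(range(q), repeat=t):
        for y in range(t):
            for x in range(q):
                if z[y] == x:
                    layout[(x, y, z)] = None                  # red dot: copied as is
                else:
                    z_partner = z[:y] + (x,) + z[y + 1:]
                    layout[(x, y, z)] = (z[y], y, z_partner)  # blue dot: goes through PRT/PFT
    return layout


layout = clay_layout(q=2, t=2)       # the (2, 2) example: 4 nodes, 4 planes
unpaired = [s for s, p in layout.items() if p is None]
print(len(layout), len(unpaired))    # 16 8
```

Each plane has exactly one uncoupled sub-chunk per column y, and coupling is symmetric, so every coupled sub-chunk has a single partner for the pairwise transforms.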

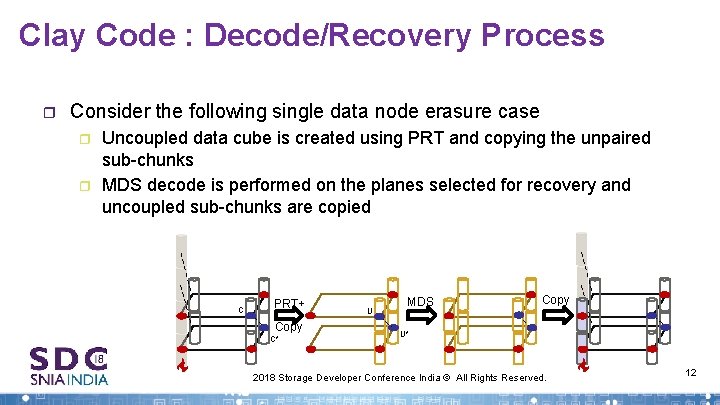

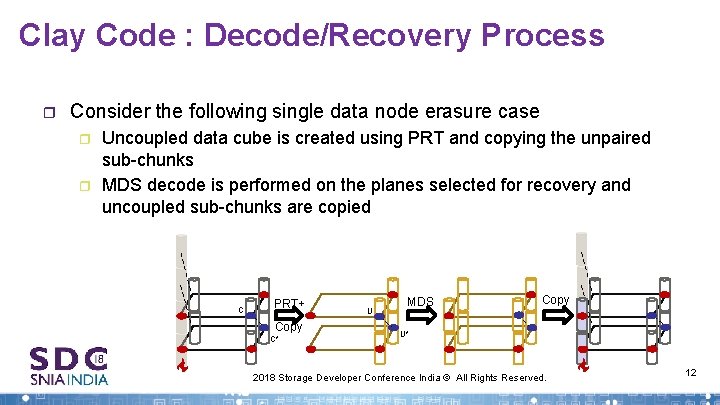

Clay Code: Decode/Recovery Process

- Consider the following single data node erasure case:
  - The uncoupled data cube is created using PRT and by copying the unpaired sub-chunks
  - MDS decode is performed on the planes selected for recovery, and the uncoupled sub-chunks are copied

Figure labels: C, C*, U, U*; PRT + copy; MDS; copy.
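The "planes selected for recovery" can be computed from the failed node's position. Assuming the Clay paper's indexing as we understand it (node (x0, y0), planes z), a single-node repair only needs the planes where the failed node's sub-chunk is uncoupled, that is z[y0] == x0, which is α / q of them; each of the d = n - 1 helpers sends just those planes. Function names are hypothetical.

```python
from itertools import product


def repair_planes(q: int, t: int, failed: tuple) -> list:
    """Planes read when node (x0, y0) fails: the alpha / q planes with z[y0] == x0."""
    x0, y0 = failed
    return [z for z in product(range(q), repeat=t) if z[y0] == x0]


def repair_reads(q: int, t: int, k: int, failed: tuple) -> tuple:
    """Sub-chunks downloaded for one repair: Clay with d = n - 1 helpers vs. an RS-style repair."""
    n, alpha = q * t, q ** t
    clay = (n - 1) * len(repair_planes(q, t, failed))  # each helper sends only the selected planes
    rs = k * alpha                                      # RS reads k whole chunks
    return clay, rs


print(repair_planes(2, 2, (0, 0)))     # [(0, 0), (0, 1)]: half of the four planes
print(repair_reads(2, 2, 2, (0, 0)))   # (6, 8) for the (2, 2) example
```

For a (4, 2) Clay code (q = 2, t = 3) the same calculation gives 20 sub-chunks instead of 32.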

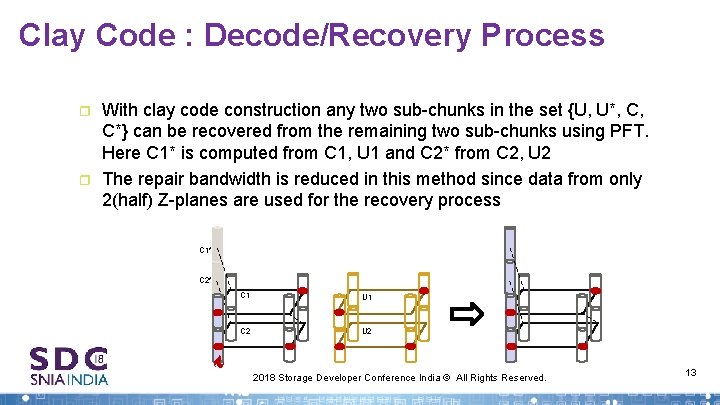

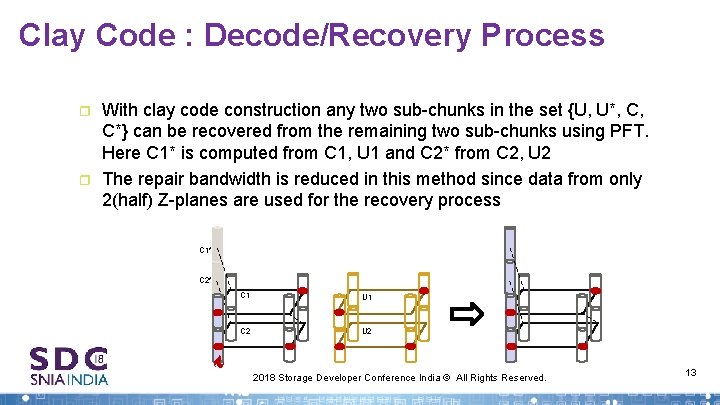

Clay Code: Decode/Recovery Process

- With the Clay code construction, any two sub-chunks in the set {U, U*, C, C*} can be recovered from the remaining two using the PFT, which is a (2, 2) MDS code. Here C1* is computed from C1 and U1, and C2* from C2 and U2.
- The repair bandwidth is reduced because data from only 2 (half) of the z-planes is used in the recovery process.

Figure: C1* and C2* recovered from C1, U1 and C2, U2.
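The "any two of four" property can be checked on single bytes. The sketch assumes the pairwise transform has the form C = U + γU*, C* = γU + U* over GF(2^8), which is our reading of the Clay Codes paper [2]; GAMMA = 2 and the polynomial 0x11D are arbitrary choices, and any γ outside {0, 1} keeps all six 2 × 2 determinants nonzero. Sub-chunks would be processed byte by byte in the same way. Names are hypothetical.

```python
POLY = 0x11D   # GF(2^8) reduction polynomial (assumed)
GAMMA = 2      # hypothetical coupling coefficient; any value outside {0, 1} works

# Each symbol of a coupled pair written as coefficients on (U, U*): the PFT maps U, U* to C, C*.
ROWS = {"U": (1, 0), "U*": (0, 1), "C": (1, GAMMA), "C*": (GAMMA, 1)}


def gf_mul(a: int, b: int) -> int:
    result = 0
    while b:
        if b & 1:
            result ^= a
        b >>= 1
        a <<= 1
        if a & 0x100:
            a ^= POLY
    return result


def gf_inv(a: int) -> int:
    """a^254 is the inverse of a nonzero a, since a^255 == 1 in GF(2^8)."""
    result, base, e = 1, a, 254
    while e:
        if e & 1:
            result = gf_mul(result, base)
        base = gf_mul(base, base)
        e >>= 1
    return result


def recover(known: dict) -> dict:
    """Given any two of U, U*, C, C* (one byte each), return all four."""
    (n1, v1), (n2, v2) = known.items()
    (a, b), (c, d) = ROWS[n1], ROWS[n2]
    det = gf_mul(a, d) ^ gf_mul(b, c)  # nonzero for every pair because GAMMA**2 != 1
    inv = gf_inv(det)
    # Cramer's rule; in characteristic 2, subtraction is XOR.
    u = gf_mul(inv, gf_mul(d, v1) ^ gf_mul(b, v2))
    u_star = gf_mul(inv, gf_mul(a, v2) ^ gf_mul(c, v1))
    return {name: gf_mul(r0, u) ^ gf_mul(r1, u_star) for name, (r0, r1) in ROWS.items()}


# Slide example: C1* computed from C1 and U1 (byte values are arbitrary).
pair = recover({"U": 0x3A, "U*": 0xC5})
assert recover({"C": pair["C"], "U": pair["U"]})["C*"] == pair["C*"]
```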

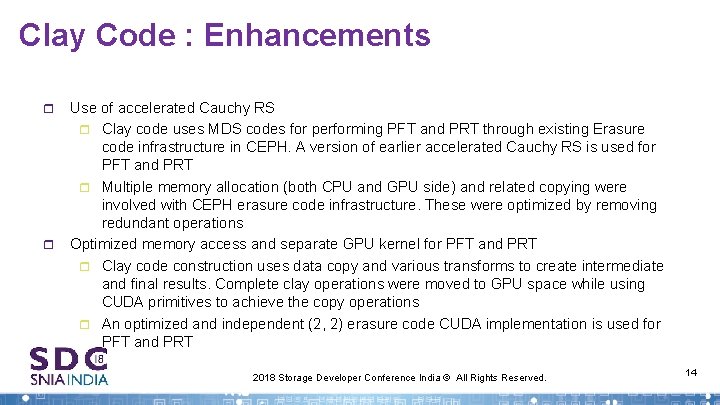

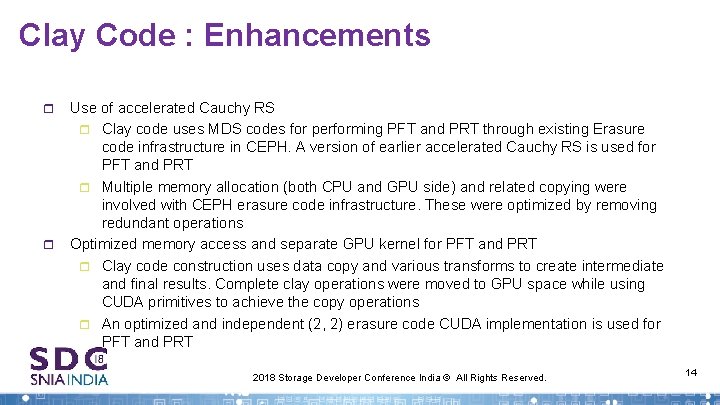

Clay Code: Enhancements

- Use of accelerated Cauchy RS
  - Clay code uses MDS codes to perform PFT and PRT through the existing erasure code infrastructure in CEPH. A version of the earlier accelerated Cauchy RS is used for PFT and PRT.
  - The CEPH erasure code infrastructure involved multiple memory allocations (on both the CPU and GPU side) and related copies. These were optimized by removing redundant operations.
- Optimized memory access and a separate GPU kernel for PFT and PRT
  - Clay code construction uses data copies and various transforms to create intermediate and final results. All Clay operations were moved to GPU space, using CUDA primitives for the copy operations.
  - An optimized, independent (2, 2) erasure code CUDA implementation is used for PFT and PRT.

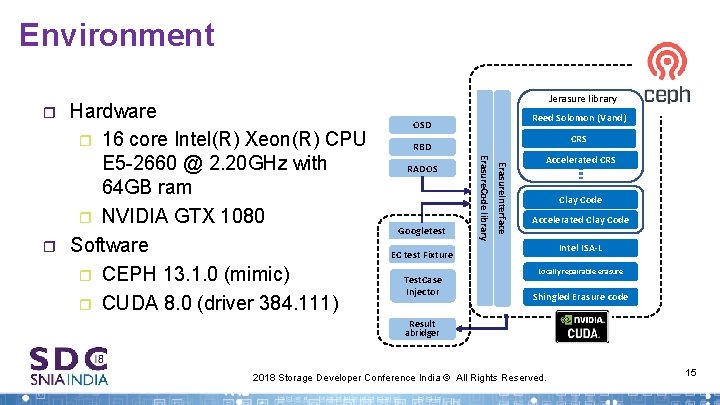

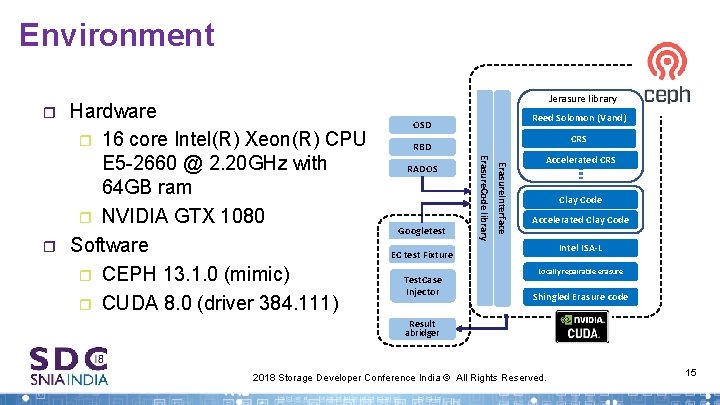

Environment

- Hardware
  - 16-core Intel(R) Xeon(R) CPU E5-2660 @ 2.20 GHz with 64 GB RAM
  - NVIDIA GTX 1080
- Software
  - CEPH 13.1.0 (mimic)
  - CUDA 8.0 (driver 384.111)

Figure: test setup. Components shown: OSD, RBD, RADOS, Erasure Interface, Erasure Code library, Jerasure library, Reed-Solomon (Vand), CRS, Accelerated CRS, Clay Code, Accelerated Clay Code, Intel ISA-L, locally repairable erasure code, shingled erasure code, and a Googletest-based EC test fixture with a test case injector and result abridger.

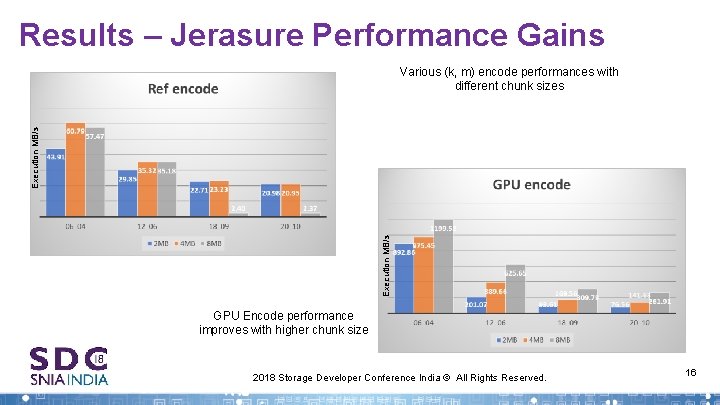

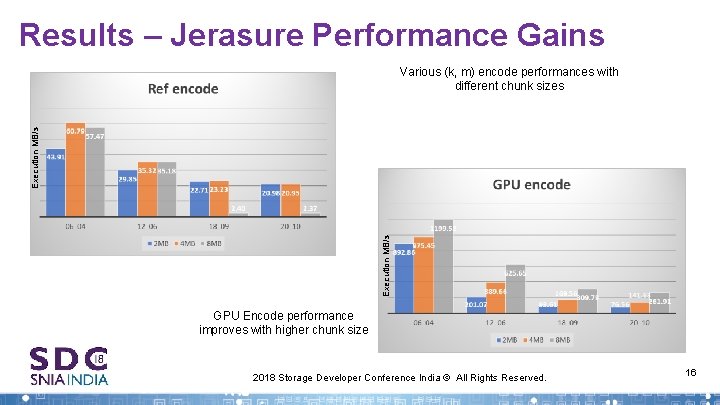

Results – Jerasure Performance Gains

Figure: execution throughput (MB/s) for encoding with various (k, m) values and chunk sizes.

- GPU encode performance improves with larger chunk sizes.

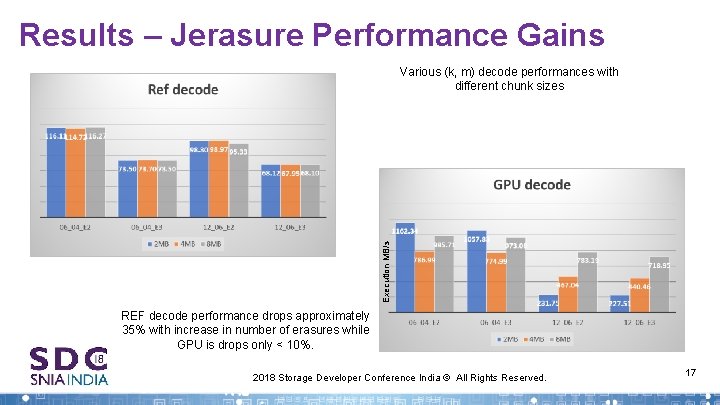

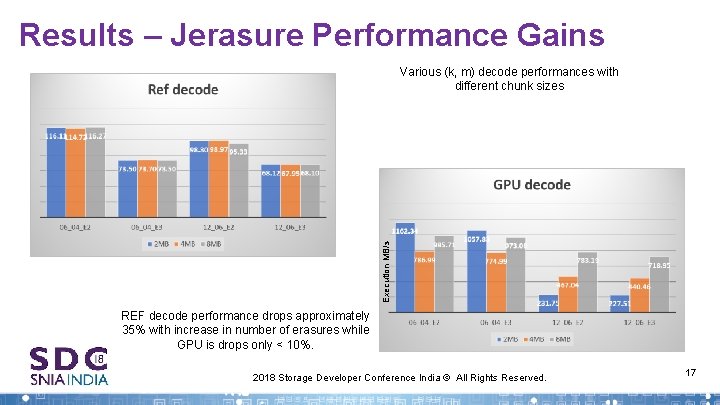

Results – Jerasure Performance Gains

Figure: execution throughput (MB/s) for decoding with various (k, m) values and chunk sizes.

- REF (CPU reference) decode performance drops by approximately 35% as the number of erasures increases, while GPU decode performance drops by less than 10%.

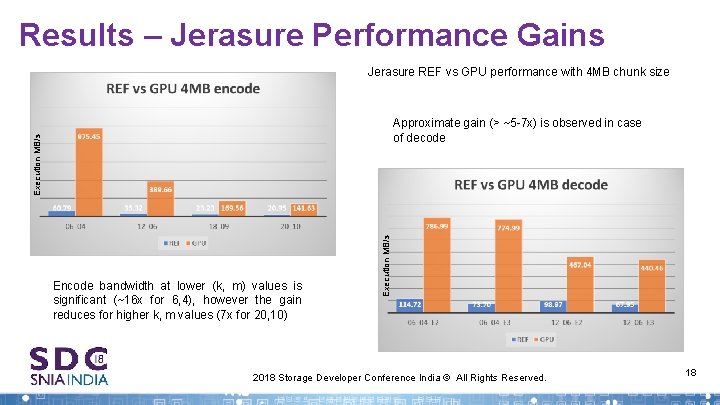

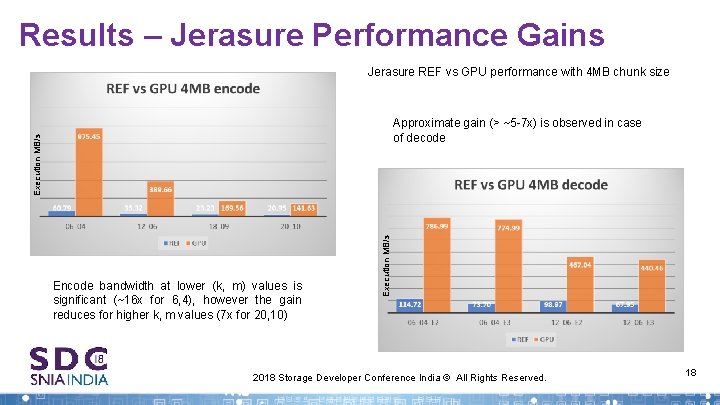

Results – Jerasure Performance Gains

Figure: Jerasure REF vs GPU throughput (MB/s) with a 4 MB chunk size.

- The encode gain is significant at lower (k, m) values (~16× for (6, 4)), but it shrinks for higher values (7× for (20, 10)).
- A gain of about 5-7× or more is observed for decode.

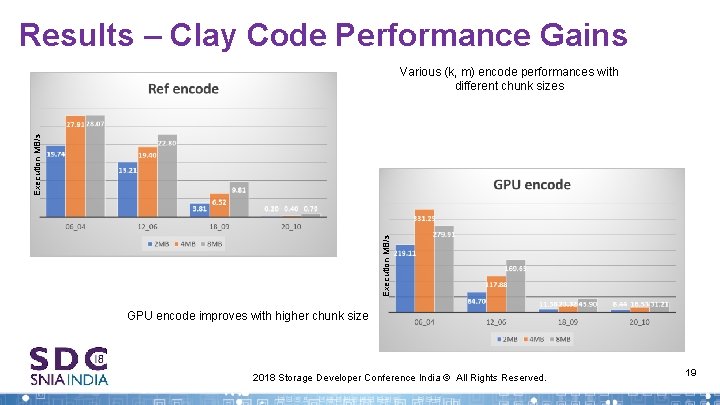

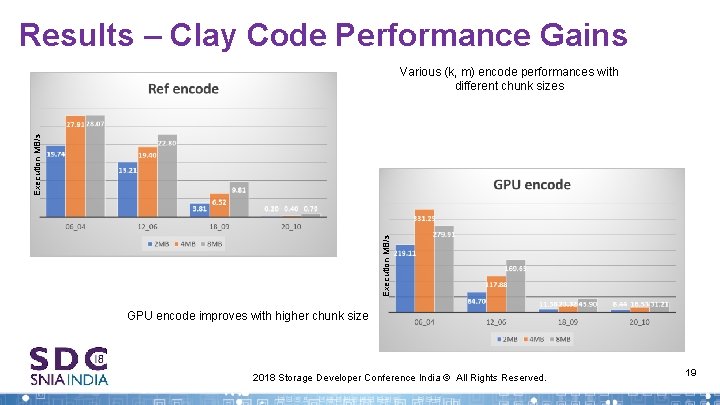

Results – Clay Code Performance Gains

Figure: execution throughput (MB/s) for encoding with various (k, m) values and chunk sizes.

- GPU encode performance improves with larger chunk sizes.

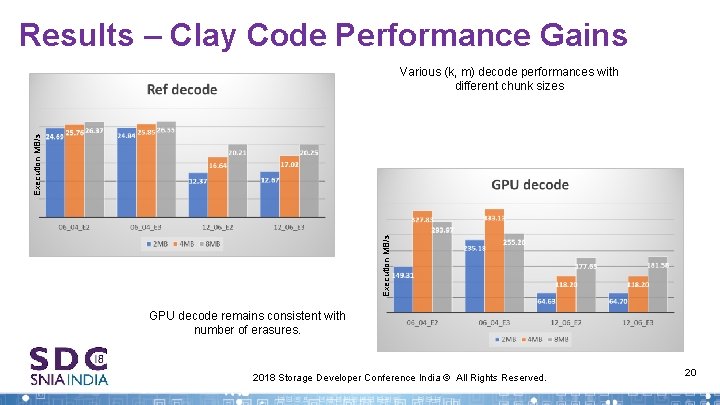

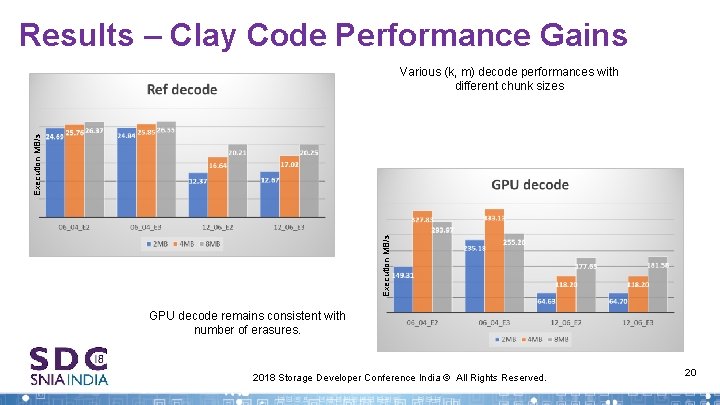

Results – Clay Code Performance Gains

Figure: execution throughput (MB/s) for decoding with various (k, m) values and chunk sizes.

- GPU decode performance remains consistent as the number of erasures increases.

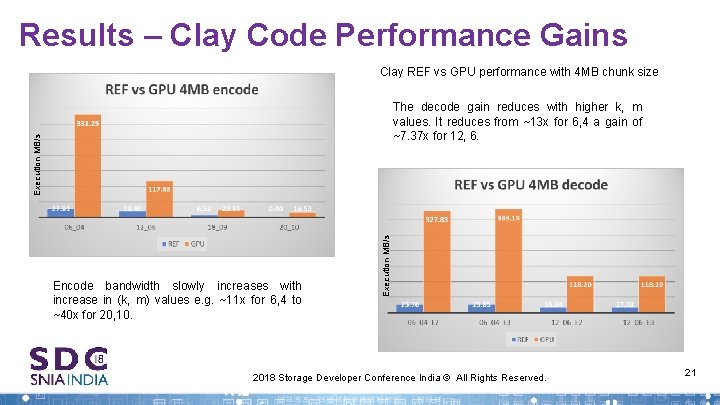

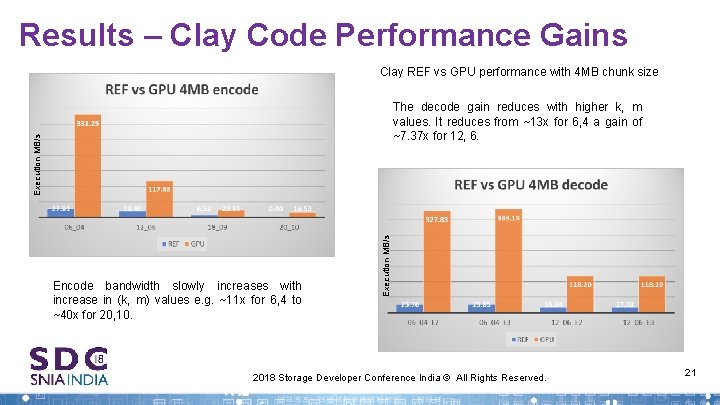

Results – Clay Code Performance Gains

Figure: Clay REF vs GPU throughput (MB/s) with a 4 MB chunk size.

- The encode gain increases gradually with (k, m), e.g. from ~11× for (6, 4) to ~40× for (20, 10).
- The decode gain decreases at higher (k, m) values, from ~13× for (6, 4) to ~7.37× for (12, 6).

Summary

- Accelerated Cauchy Reed-Solomon and Clay Code show good performance gains over the corresponding CPU versions.
- Jerasure shows improvements of up to 16.03× for encode and 10.6× for decode.
- Clay code shows improvements of up to 41.32× for encode and 13.08× for decode across different (k, m) values.

We are continuing work on:

- Enhancing Clay code on GPU
- Testing Clay code on a CEPH cluster comprising four server machines (16-core Intel Xeon CPU E5-2660 @ 2.20 GHz, 64 GB RAM, NVIDIA GTX 1080 card) and a 60 TB storage array

Erasure code: Future possibilities

- Erasure coding use cases
  - Application-workload-dependent resiliency
  - Storage-technology-dependent resiliency
  - Integration of EC with file systems
  - Data migration for resiliency optimization

References

1. Mingyuan Xia, Mohit Saxena, Mario Blaum, and David A. Pease. A Tale of Two Erasure Codes in HDFS. USENIX Conference on File and Storage Technologies (FAST), 2015.
2. Myna Vajha, Vinayak Ramkumar, Bhagyashree Puranik, Ganesh Kini, Elita Lobo, Birenjith Sasidharan, P. Vijay Kumar, Alexander Barg, Min Ye, Srinivasan Narayanamurthy, Syed Hussain, and Siddhartha Nandi. Clay Codes: Moulding MDS Codes to Yield an MSR Code. USENIX Conference on File and Storage Technologies (FAST), 2018.
3. Chengjian Liu, Qiang Wang, Xiaowen Chu, and Yiu-Wing Leung. G-CRS: GPU Accelerated Cauchy Reed-Solomon Coding. IEEE Transactions on Parallel and Distributed Systems, 2018.
4. Maheswaran Sathiamoorthy, Alexandros G. Dimakis, Megasthenis Asteris, Ramkumar Vadali, Dhruba Borthakur, Dimitris Papailiopoulos, and Scott Chen. XORing Elephants: Novel Erasure Codes for Big Data. Proceedings of the VLDB Endowment, 2013.

Thank you