ACAT 07 Highlights, NIKHEF, Amsterdam, 23-27 April 2007

The Workshop
For more information: http://agenda.nikhef.nl/conferenceDisplay.py?confId=55
- Plenary talks: 9
- Parallel sessions: 3
  - Computer Technology for Physics Research (24 talks)
  - Data Analysis – Algorithms and Tools (28 talks)
  - Methodology of Computations in Theoretical Physics (28 talks)
- Round-table discussions
- Plenary panel discussion
- Summary talks
- 114 worldwide participants

Plenary Talks
- Jos Engelen (CERN): Exploration of the Terascale Challenges
- Markus Schulz (CERN): Bootstrapping a Grid Infrastructure (advice by Jeff Templon)

Plenary talk by Ronald van Driel
Providing HPC Resources for Philips Research and Partners

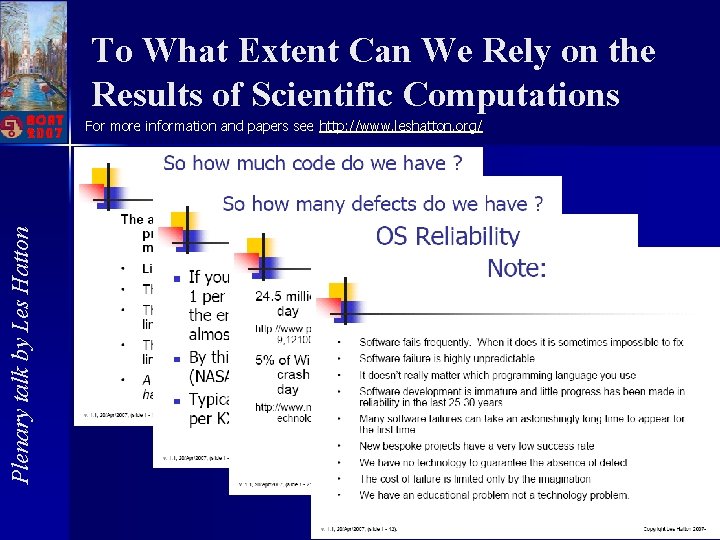

To What Extent Can We Rely on the Results of Scientific Computations?
Plenary talk by Les Hatton
For more information and papers see http://www.leshatton.org/

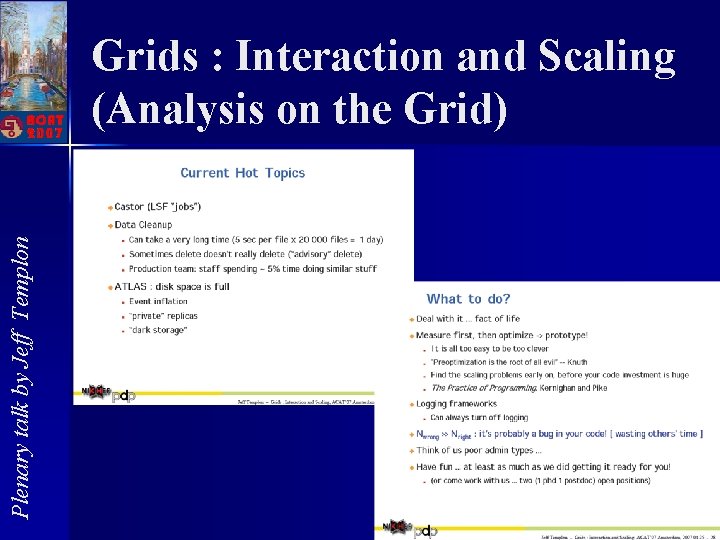

Plenary talk by Jeff Templon
Grids: Interaction and Scaling (Analysis on the Grid)

Panel Discussion: Critical Issues of Distributed Computing
- Bruce Allen: Einstein@Home and BOINC
- Fons: Interactive Parallel Distributed Data Analysis Using PROOF

Session 1: Computing Technology for Physics Research
- 7 sessions, 24 talks
  - 12 Grid
  - 5 Monitoring/online
  - 3 Math packages
  - 2 GUIs
  - 1 PROOF
  - 1 Simulation

Grid

Grid Middleware
- All experiments make heavy use of existing middleware (MW), but all have developed private solutions to complement it

Grid Ganga

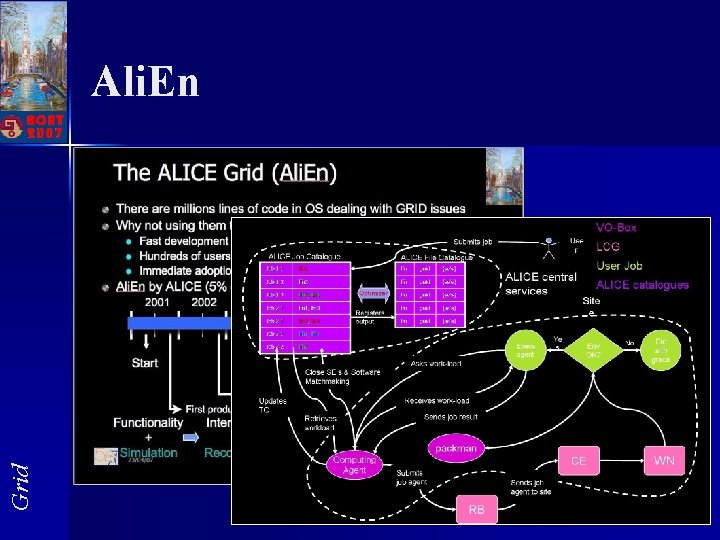

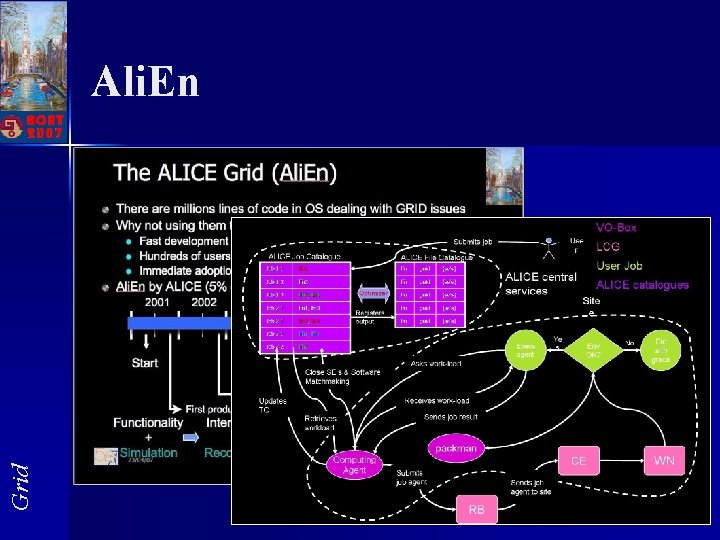

Grid AliEn

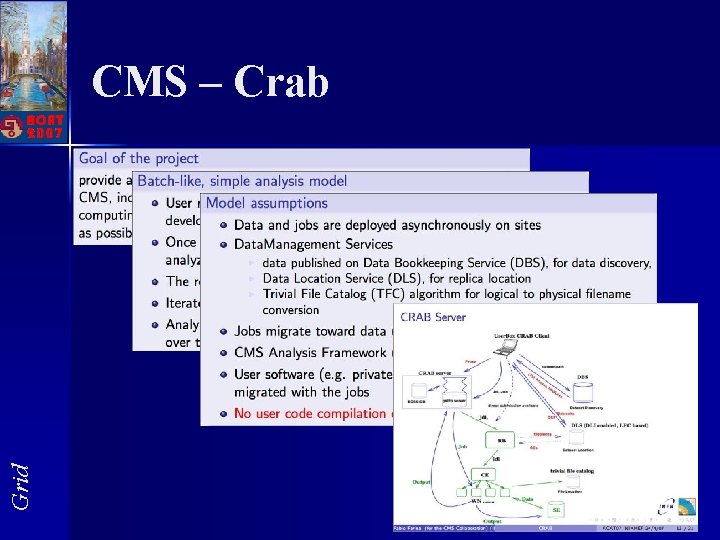

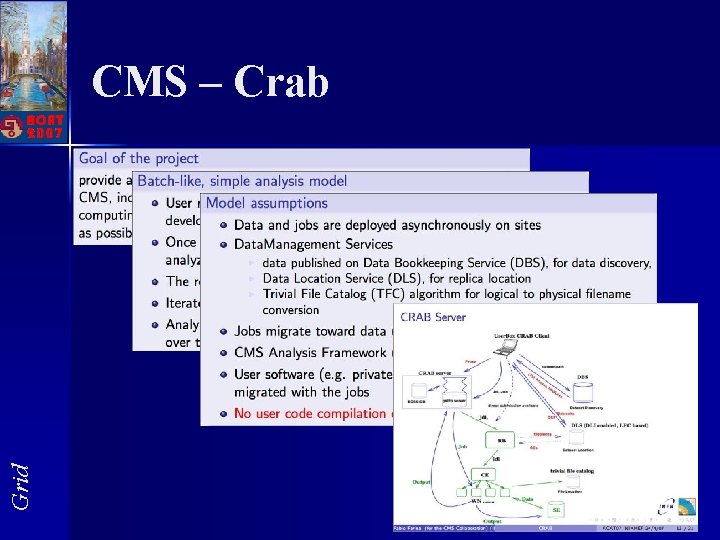

Grid CMS – Crab

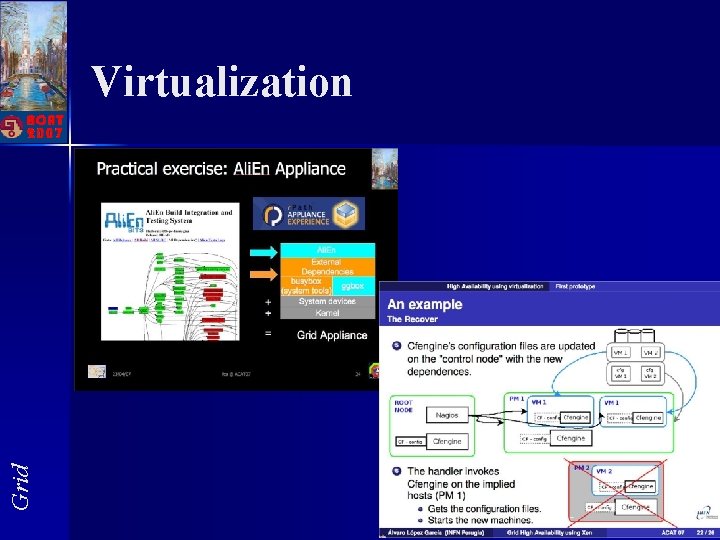

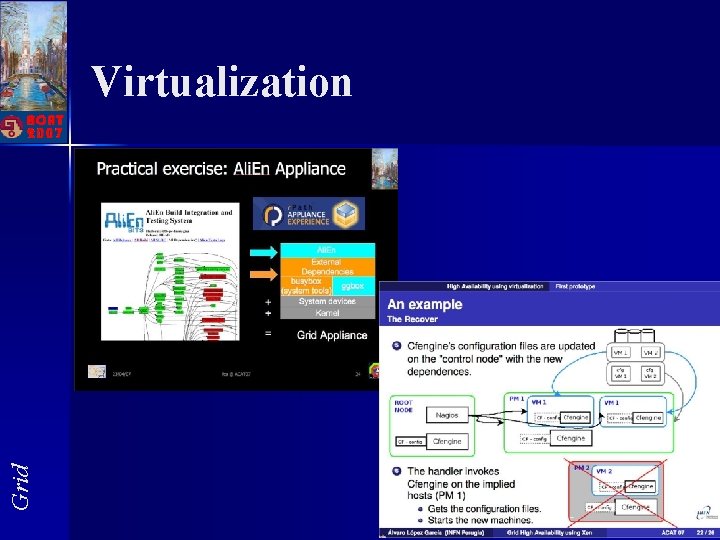

Grid Virtualization

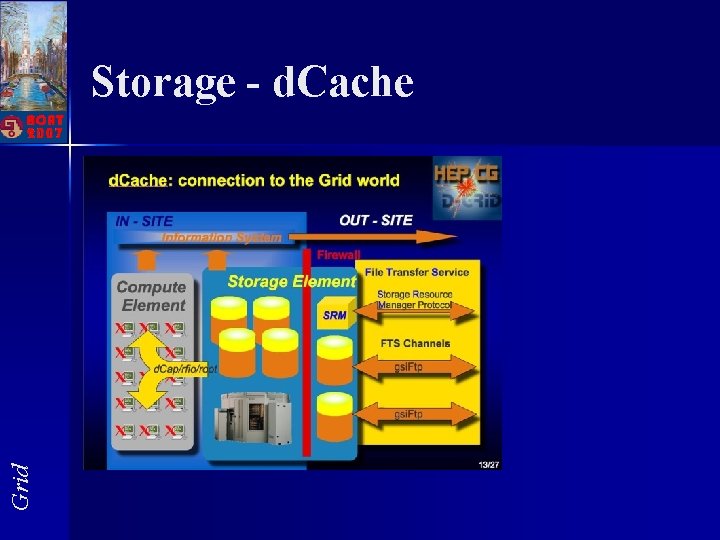

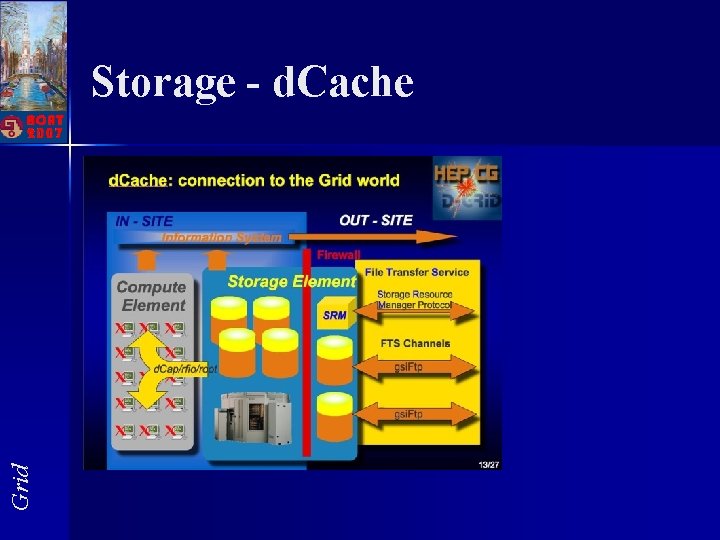

Grid Storage - dCache

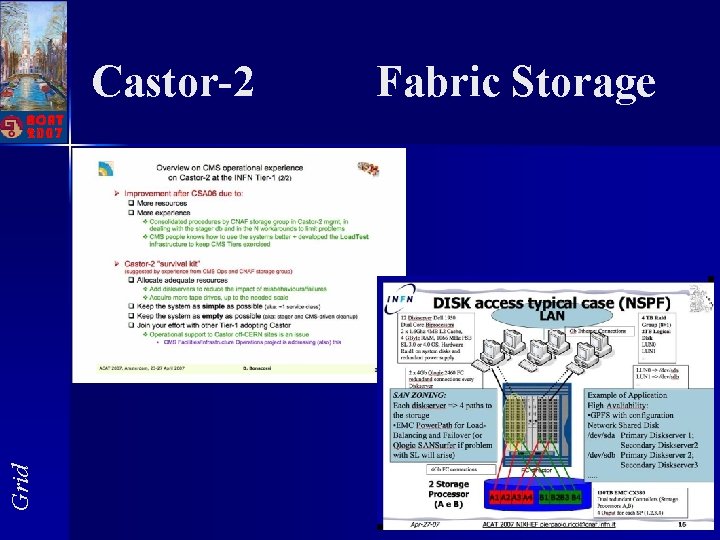

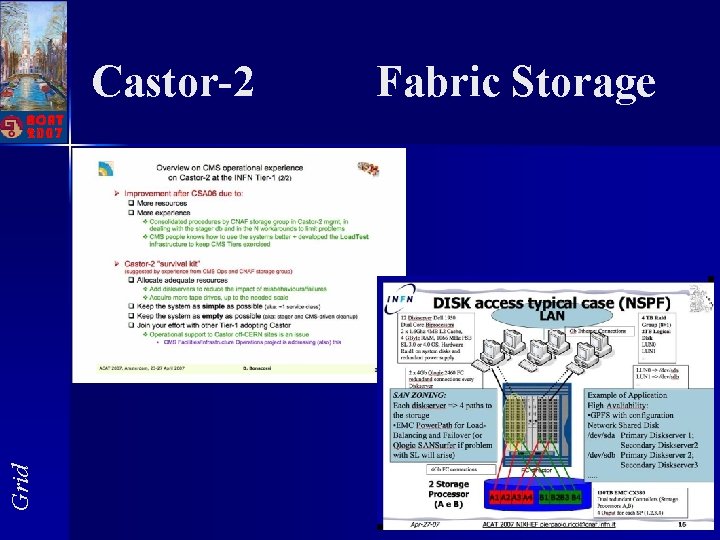

Grid Castor-2 Fabric Storage

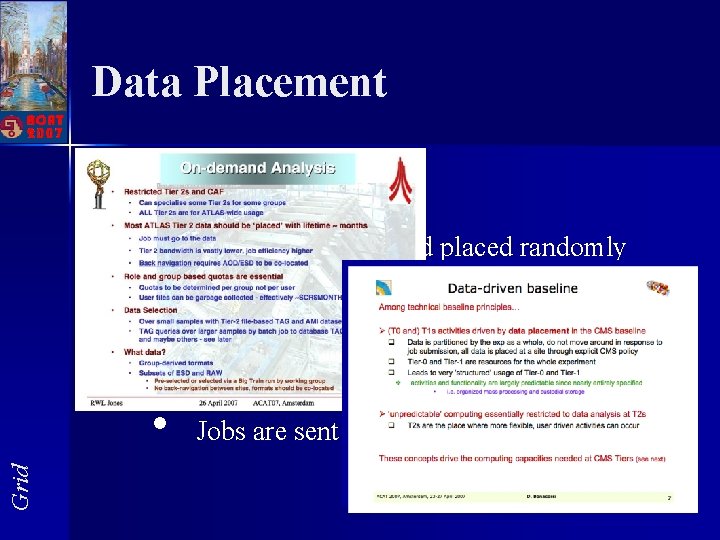

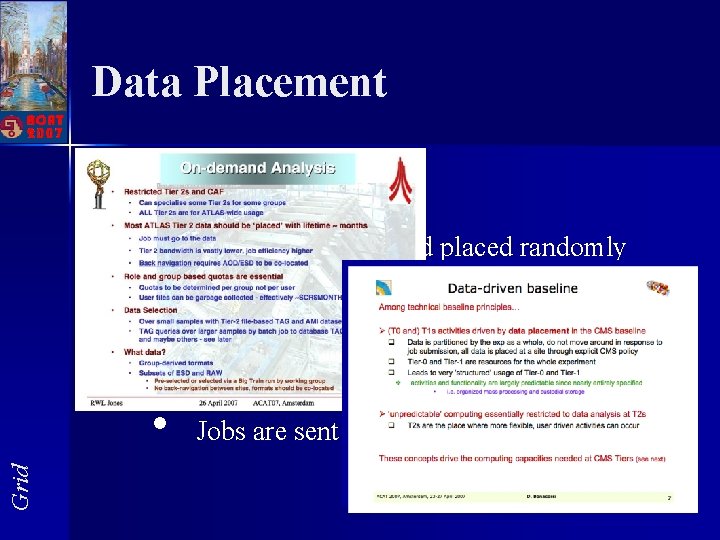

Grid Data Placement
- Ideally...
  - Data is produced and placed randomly
  - Data replication or job placement at data location are optimised by the Grid
- In reality
  - Data is placed in a given location
  - Jobs are sent to this location
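
The "in reality" policy above, where jobs follow the data, can be sketched as a tiny broker. This is only an illustration under stated assumptions, not any experiment's actual middleware: the catalogue layout, the site names and the `place_job` helper are all hypothetical.

```python
# Hypothetical replica catalogue: dataset name -> sites holding a copy.
REPLICA_CATALOGUE = {
    "run1234.raw": ["SITE_A"],
    "run1234.aod": ["SITE_B", "SITE_C"],
}


def place_job(dataset, queue_length):
    """Return the site a job reading `dataset` is sent to.

    The job goes where the data already is; among the sites holding a
    replica, the one with the shortest queue is chosen.
    """
    sites = REPLICA_CATALOGUE.get(dataset)
    if not sites:
        raise LookupError(f"no replica registered for {dataset!r}")
    return min(sites, key=lambda site: queue_length.get(site, 0))


if __name__ == "__main__":
    queues = {"SITE_A": 40, "SITE_B": 12, "SITE_C": 3}
    print(place_job("run1234.raw", queues))
    print(place_job("run1234.aod", queues))
```

A dataset with a single replica pins its jobs to that site regardless of the queue there, which is the limitation the slide points at.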

Grid Analysis

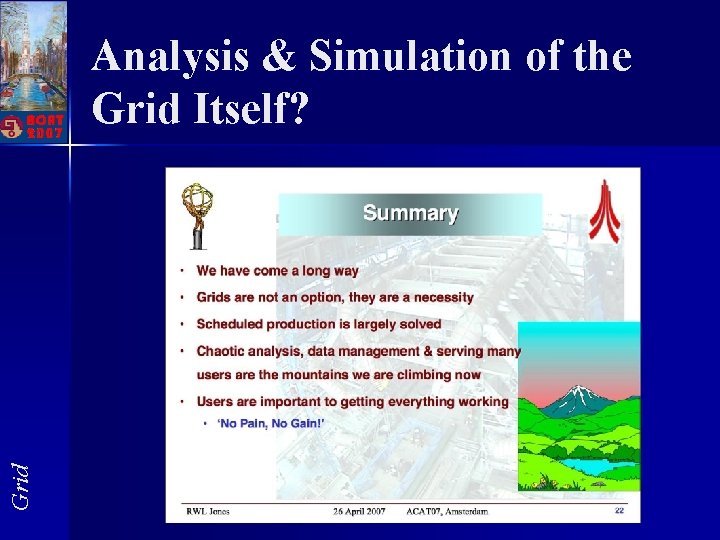

Grid Analysis & Simulation of the Grid Itself?

Online Monitoring

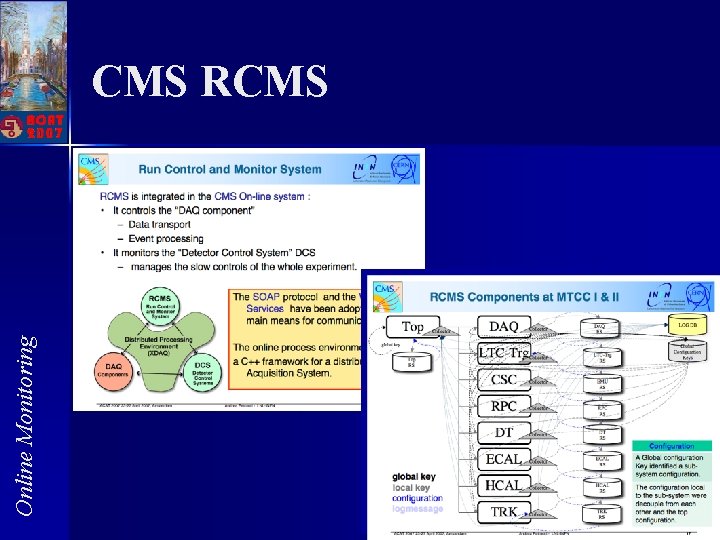

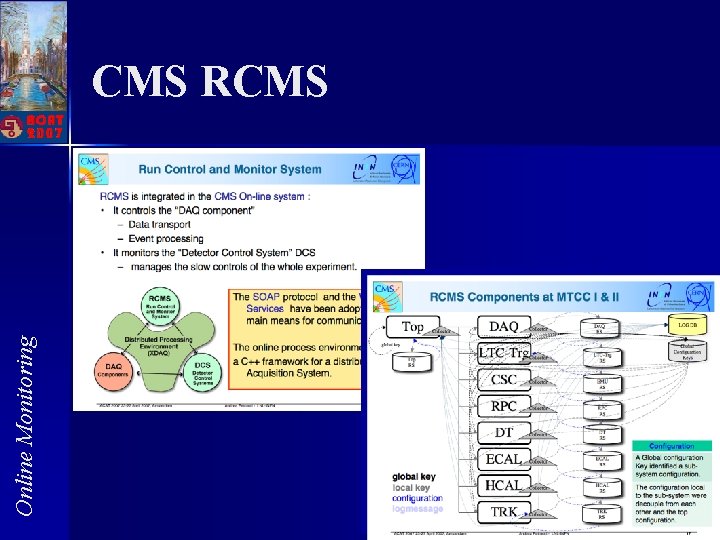

Online Monitoring CMS RCMS

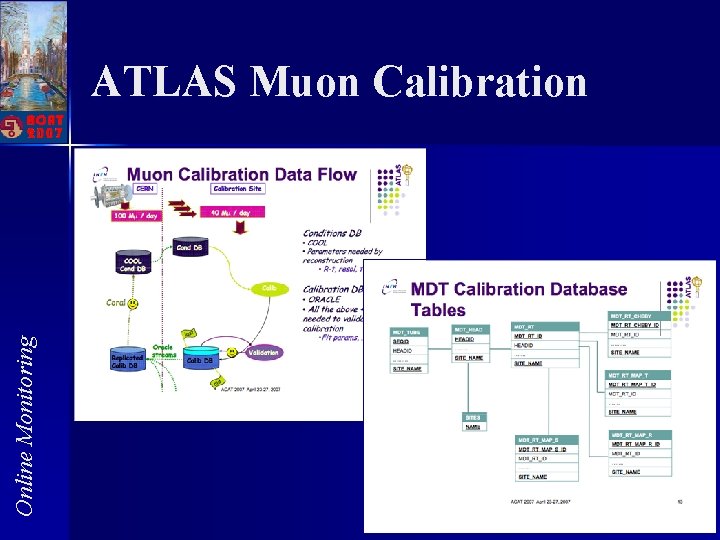

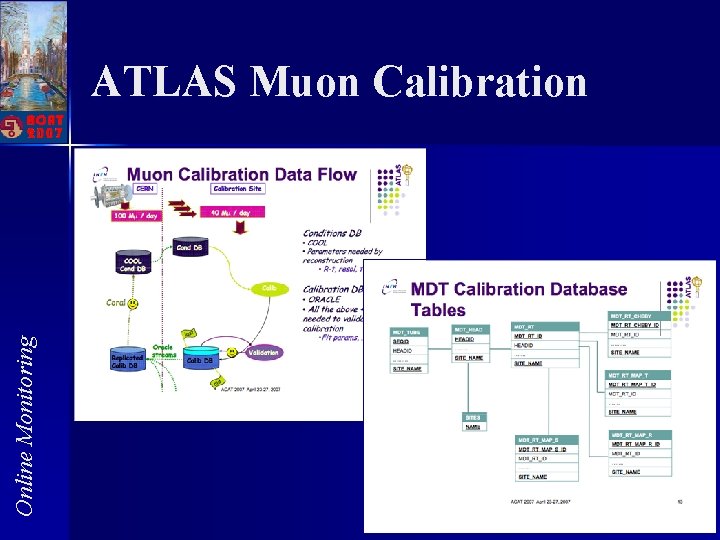

Online Monitoring ATLAS Muon Calibration

Online Monitoring DZero

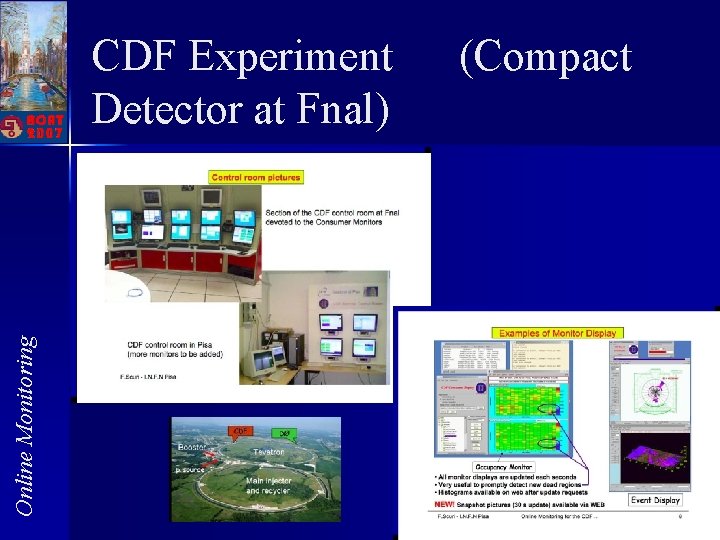

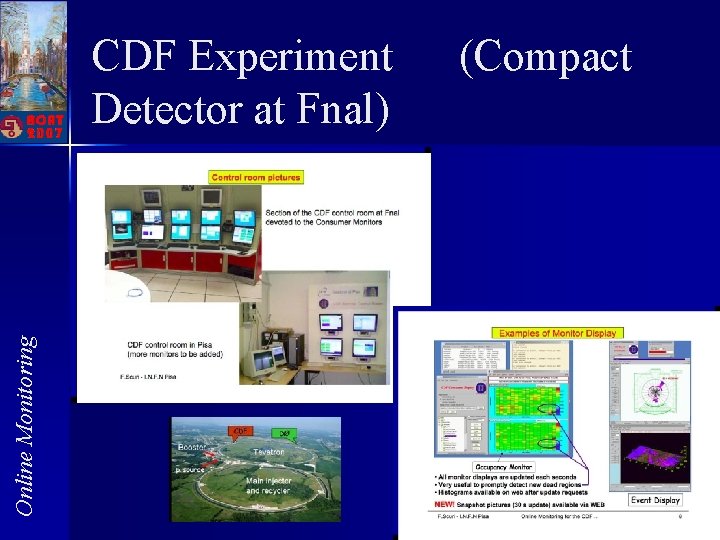

Online Monitoring CDF Experiment (Collider Detector at Fermilab)

Simulation

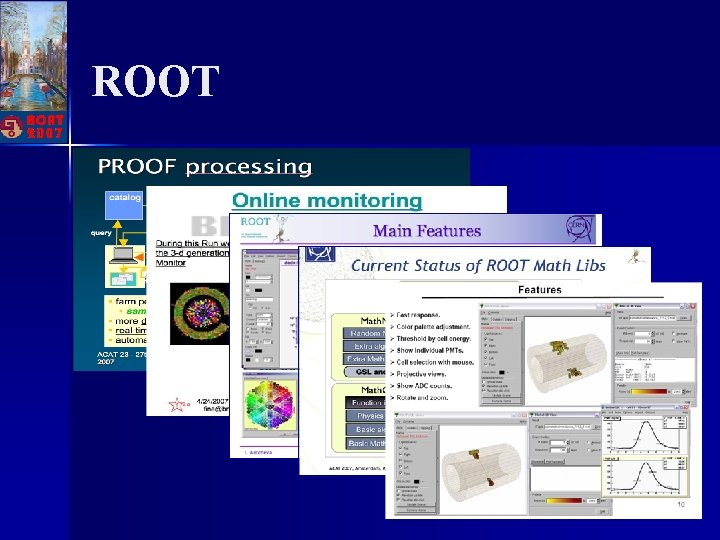

ROOT

Summary of Session 2
- Session on Data Analysis, Algorithms and Tools:
  - Neural Networks and Other Pattern Recognition Techniques
  - Evolutionary Algorithms
  - Advanced Data Analysis Environments
  - Statistical Methods
  - Detector and Accelerator Simulations
  - Reconstruction Algorithms
  - Visualization Techniques

Multi-Variate Analysis Methods
- Large fraction of the talks on MVA:
  - General purpose implementation (e.g. TMVA, SPR)
  - New methods (self-organizing maps (SOM))
  - Varied usage:
    - Running experiments (Tevatron)
    - New experiments (LHC, BESIII)
    - Cosmic Ray experiments
    - Trigger
    - Reconstruction (Track, vertex, e/γ, b-, τ-tagging)
    - Data analysis, event selection

MultiVariate Methods Usage

Discussion on Usage of Multivariate Methods
P. Bhat: Multivariate Methods in HEP
- Sociological Issues
  - We have been conservative in the use of MV methods for discovery
  - We have been more aggressive in the use of MV methods for setting limits
  - But discovery is more important and needs all the power you can muster!
  - This is expected to change at LHC (?)
- Multivariate Analysis Issues
  - Dimensionality Reduction: optimal choice of variables without losing information
  - Choosing the right method for the problem
  - Controlling Model Complexity
  - Testing Convergence
  - Validation
  - Computational Efficiency
  - Correctness of modeling
  - Worries about hidden bias
  - Worries about underestimating errors
Thomas Speer

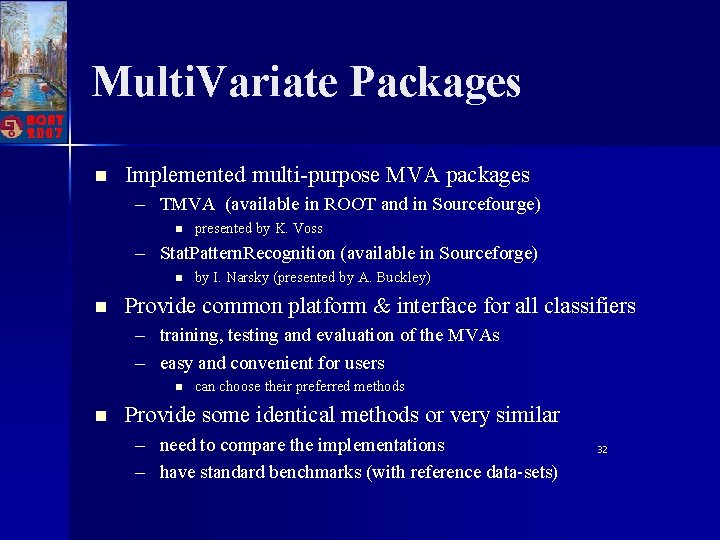

MultiVariate Packages
- Implemented multi-purpose MVA packages
  - TMVA (available in ROOT and on SourceForge), presented by K. Voss
  - StatPatternRecognition (available on SourceForge), by I. Narsky (presented by A. Buckley)
- Provide common platform & interface for all classifiers
  - training, testing and evaluation of the MVAs
  - easy and convenient for users, who can choose their preferred methods
- Provide some identical or very similar methods
  - need to compare the implementations
  - have standard benchmarks (with reference data-sets)
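
The idea of a common interface for all classifiers can be sketched as follows. This is a minimal illustration, not the API of TMVA or StatPatternRecognition: the `Classifier` base class, the toy `MidpointCut` method and the `evaluate` helper are hypothetical names introduced here.

```python
from abc import ABC, abstractmethod


class Classifier(ABC):
    """Hypothetical common interface: every method trains and responds alike."""

    @abstractmethod
    def train(self, signal, background):
        """Fit the method on lists of signal and background values."""

    @abstractmethod
    def response(self, x):
        """Return a score; a larger score means more signal-like."""


class MidpointCut(Classifier):
    """Toy 1-D method: signed distance from the midpoint of the class means."""

    def train(self, signal, background):
        mean_s = sum(signal) / len(signal)
        mean_b = sum(background) / len(background)
        self.threshold = 0.5 * (mean_s + mean_b)
        self.sign = 1.0 if mean_s >= mean_b else -1.0

    def response(self, x):
        return self.sign * (x - self.threshold)


def evaluate(classifier, signal, background):
    """Signal efficiency and background rejection for the selection score > 0."""
    efficiency = sum(classifier.response(x) > 0 for x in signal) / len(signal)
    rejection = sum(classifier.response(x) <= 0 for x in background) / len(background)
    return efficiency, rejection
```

Because `evaluate` only relies on the shared interface, any two implementations can be compared on the same reference data set, which is the benchmarking point made on the slide.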

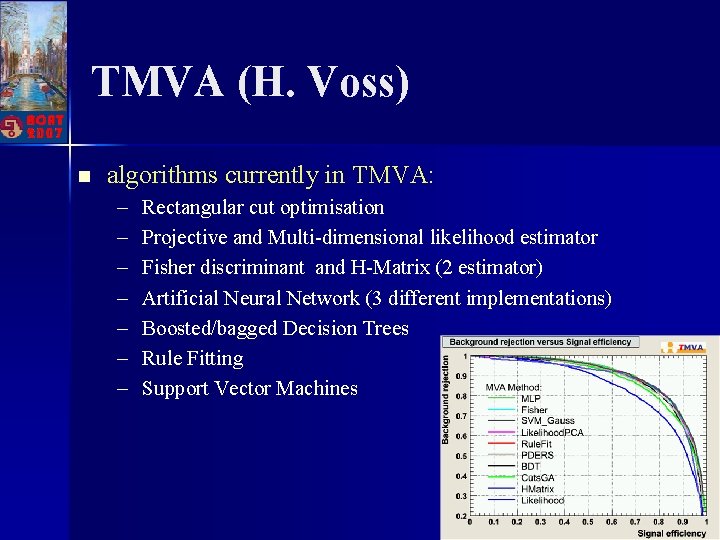

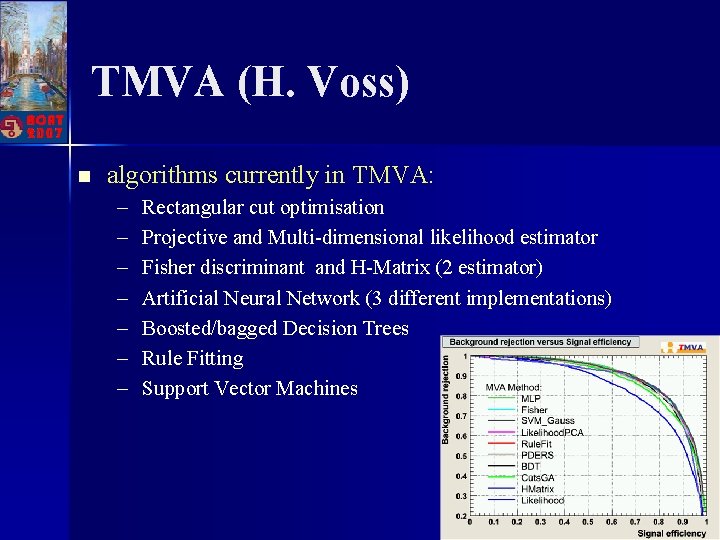

TMVA (H. Voss)
- algorithms currently in TMVA:
  - Rectangular cut optimisation
  - Projective and Multi-dimensional likelihood estimator
  - Fisher discriminant and H-Matrix (χ² estimator)
  - Artificial Neural Network (3 different implementations)
  - Boosted/bagged Decision Trees
  - Rule Fitting
  - Support Vector Machines
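
As an illustration of the first item, a cut optimisation on a single variable can be sketched as a plain scan. This is not TMVA's implementation; the figure of merit S / sqrt(S + B) and the `best_cut` helper are assumptions chosen for the example.

```python
import math


def best_cut(signal, background):
    """Scan cuts x >= c on one variable and return (c, figure_of_merit).

    The figure of merit is S / sqrt(S + B), where S and B are the numbers
    of signal and background events passing the cut. Candidate values of c
    are the observed values themselves.
    """
    best = (None, 0.0)
    for c in sorted(set(signal) | set(background)):
        s = sum(x >= c for x in signal)
        b = sum(x >= c for x in background)
        if s + b == 0:
            continue
        fom = s / math.sqrt(s + b)
        if fom > best[1]:
            best = (c, fom)
    return best


if __name__ == "__main__":
    print(best_cut([3, 4, 5, 6], [1, 2, 3, 4]))
```

A rectangular cut in several variables repeats this idea with one lower and one upper bound per variable, at which point a brute-force scan becomes expensive and a smarter search is needed.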

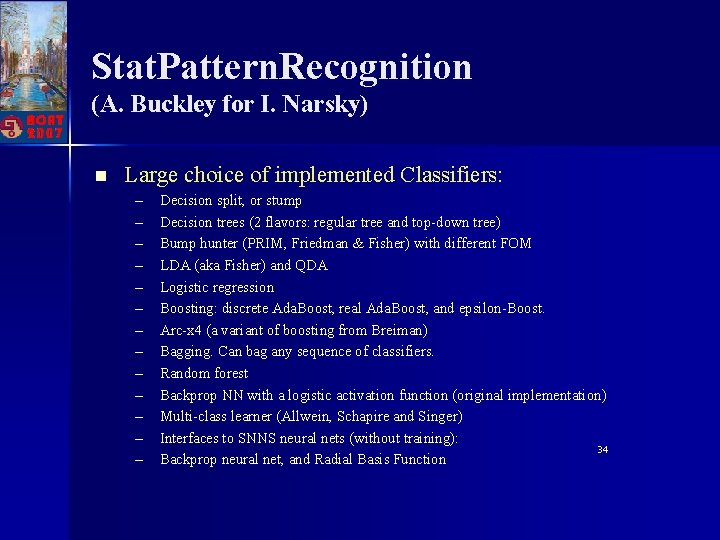

StatPatternRecognition (A. Buckley for I. Narsky)
- Large choice of implemented Classifiers:
  - Decision split, or stump
  - Decision trees (2 flavors: regular tree and top-down tree)
  - Bump hunter (PRIM, Friedman & Fisher) with different FOM
  - LDA (aka Fisher) and QDA
  - Logistic regression
  - Boosting: discrete AdaBoost, real AdaBoost, and epsilon-Boost
  - Arc-x4 (a variant of boosting from Breiman)
  - Bagging. Can bag any sequence of classifiers.
  - Random forest
  - Backprop NN with a logistic activation function (original implementation)
  - Multi-class learner (Allwein, Schapire and Singer)
  - Interfaces to SNNS neural nets (without training): Backprop neural net, and Radial Basis Function
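
Two of the listed ingredients, decision stumps and discrete AdaBoost, combine naturally. The sketch below is a textbook version on one-dimensional data, not code from StatPatternRecognition; the function names are hypothetical.

```python
import math


def train_stump(xs, ys, weights):
    """Best weighted decision stump: returns (error, threshold, polarity)."""
    best = None
    for thr in sorted(set(xs)):
        for pol in (+1, -1):
            # The stump predicts `pol` when x >= thr and `-pol` otherwise.
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if (pol if x >= thr else -pol) != y)
            if best is None or err < best[0]:
                best = (err, thr, pol)
    return best


def adaboost(xs, ys, rounds=5):
    """Discrete AdaBoost with stumps; labels in ys must be +1 or -1."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []  # list of (alpha, threshold, polarity)
    for _ in range(rounds):
        err, thr, pol = train_stump(xs, ys, weights)
        err = min(max(err, 1e-12), 1 - 1e-12)  # guard the logarithm
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, thr, pol))
        # Raise the weight of misclassified events, lower the others.
        weights = [w * math.exp(-alpha * y * (pol if x >= thr else -pol))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble


def predict(ensemble, x):
    """Sign of the alpha-weighted vote of all stumps."""
    score = sum(a * (p if x >= t else -p) for a, t, p in ensemble)
    return 1 if score >= 0 else -1
```

Each round reweights the training events so that the next stump concentrates on the ones the current ensemble gets wrong.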

StatPatternRecognition

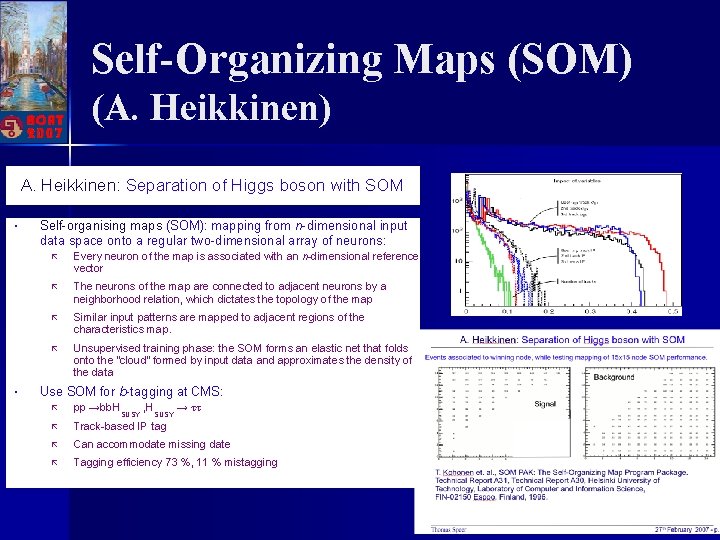

Various Usage of MVA
- Presentations showing usage of MVA:
  - P. Bhat: Usage in HEP (at the Tevatron), Bayesian NN
  - A. Heikkinen: Separation of Higgs boson with SOM
  - M. Wolter: Optimization of tau identification in ATLAS, comparison of TMVA algorithms
  - R. C. Torres: Online electron/jet-identification in ATLAS using NN
  - J. Seixas: Online electron/jet-identification in ATLAS using SOM
  - S. Riggi: NN for high energy cosmic rays mass identification
  - S. Khatchadourian: NN Level 2 Trigger in Gamma Ray Astronomy

Bayesian Neural Networks

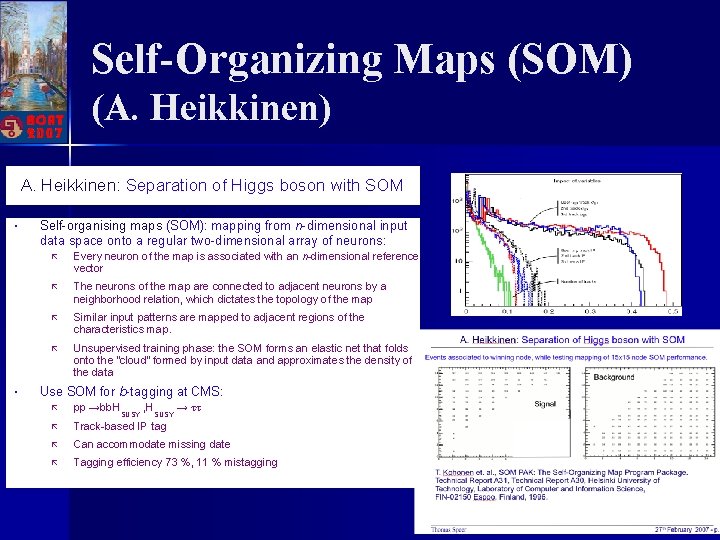

Self-Organizing Maps (SOM)
A. Heikkinen: Separation of Higgs boson with SOM
- Self-organising maps (SOM): mapping from n-dimensional input data space onto a regular two-dimensional array of neurons:
  - Every neuron of the map is associated with an n-dimensional reference vector
  - The neurons of the map are connected to adjacent neurons by a neighborhood relation, which dictates the topology of the map
  - Similar input patterns are mapped to adjacent regions of the characteristics map
  - Unsupervised training phase: the SOM forms an elastic net that folds onto the "cloud" formed by input data and approximates the density of the data
- Use SOM for b-tagging at CMS:
  - pp → bbH_SUSY, H_SUSY → ττ
  - Track-based IP tag
  - Can accommodate missing data
  - Tagging efficiency 73 %, 11 % mistagging
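
The training phase described above can be sketched in a few lines. This is a generic textbook SOM, not the code used in the talk; the grid size, the linear decay schedules and the Gaussian neighbourhood are assumptions made for the example.

```python
import math
import random


def best_matching_unit(som, x):
    """Grid position of the neuron whose reference vector is closest to x."""
    return min(som, key=lambda pos: sum((a - b) ** 2 for a, b in zip(som[pos], x)))


def train_som(data, rows=3, cols=3, epochs=50, seed=0):
    """Train a rows x cols map; returns {(row, col): reference vector}."""
    rng = random.Random(seed)
    dim = len(data[0])
    # Start every reference vector at a randomly chosen data point.
    som = {(r, c): list(rng.choice(data)) for r in range(rows) for c in range(cols)}
    for epoch in range(epochs):
        frac = 1.0 - epoch / epochs
        rate = 0.5 * frac                              # learning rate decays
        radius = 0.5 + max(rows, cols) / 2.0 * frac    # neighbourhood shrinks
        for x in rng.sample(data, len(data)):
            bmu = best_matching_unit(som, x)
            for (r, c), w in som.items():
                # Neighbours of the winner on the grid are pulled along with it.
                grid_d2 = (r - bmu[0]) ** 2 + (c - bmu[1]) ** 2
                h = math.exp(-grid_d2 / (2.0 * radius ** 2))
                for i in range(dim):
                    w[i] += rate * h * (x[i] - w[i])
    return som
```

After training, `best_matching_unit(som, x)` maps a new input to a cell of the grid, and cells can then be labelled (for example as b-like or not) from the training events that land in them.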

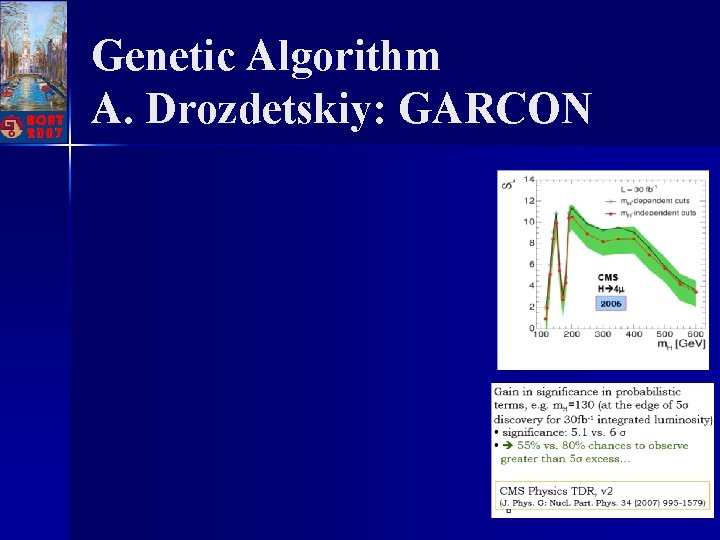

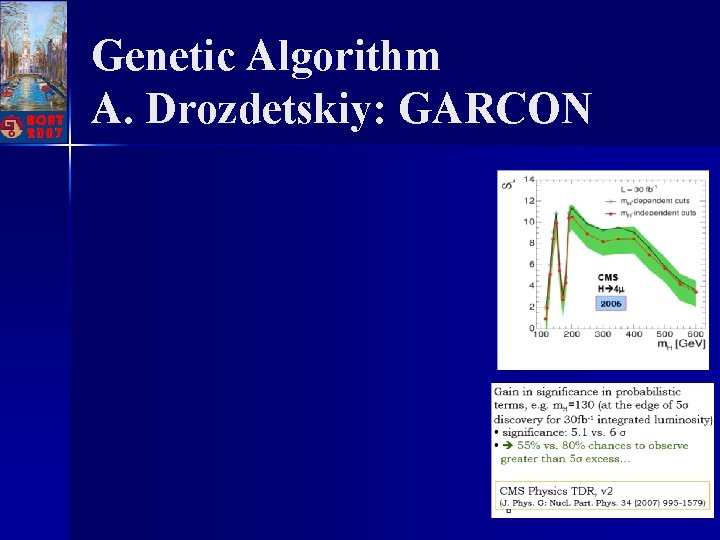

Evolutionary Algorithms
- Natural evolution: generate a population of individuals with increasing fitness to environment
- Evolutionary computation simulates the natural evolution on a computer
  - Process leading to maintenance or increase of a population
  - Ability to survive and reproduce in a specific environment
  - Quantitatively measured by evolutionary fitness
- Goal of evolutionary computation: to generate a set of solutions (to a problem) of increasing quality
  - Genetic Algorithms (GA) (J. H. Holland, 1975): A. Drozdetskiy - GARCON
  - Genetic Programming (GP) (J. R. Koza, 1992)
  - Gene Expression Programming (GEP) (C. Ferreira, 2001): L. Teodorescu
- Main differences:
  - Encoding method
  - Reproduction method
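
A basic genetic algorithm makes the encoding and reproduction steps concrete. The sketch below is a generic illustration and not the GARCON algorithm: the bit-string encoding, tournament selection, one-point crossover and mutation rate are all assumptions chosen for the example.

```python
import random


def genetic_algorithm(fitness, n_bits=16, pop_size=30, generations=40, seed=1):
    """Maximise `fitness` over bit strings and return the best individual."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(population, key=fitness)

    def select():
        # Tournament selection: the fitter of two random individuals.
        a, b = rng.sample(population, 2)
        return a if fitness(a) >= fitness(b) else b

    for _ in range(generations):
        children = [best[:]]                    # elitism: keep the best so far
        while len(children) < pop_size:
            mother, father = select(), select()
            cut = rng.randrange(1, n_bits)      # one-point crossover
            child = mother[:cut] + father[cut:]
            # Mutation: flip each bit with a small probability.
            child = [bit ^ 1 if rng.random() < 1.0 / n_bits else bit for bit in child]
            children.append(child)
        population = children
        best = max(population, key=fitness)
    return best


if __name__ == "__main__":
    # Toy problem: the fitness is the number of bits set to 1.
    print(genetic_algorithm(sum))
```

Genetic programming and gene expression programming keep the same loop but change what an individual encodes (a program or expression tree rather than a fixed bit string) and how reproduction acts on it.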

Genetic Algorithm A. Drozdetskiy: GARCON

Session 3
- Methodology of Computations in Theoretical Physics
  - Loop technology
    - FORM and parallel version (ParFORM)
  - Generators and Automators (automatic computation systems)
    - from physics processes to event generators
    - advances in algorithms and systems
  - optimize FFT with max in-cache operations (J. Raynolds)
  - Error-free algorithms to solve systems of linear equations (M. Morhac)
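
To show what "error-free" can mean for a linear system, the sketch below solves one exactly with rational arithmetic. This is a swapped-in illustration using Python's `fractions` module and Gauss-Jordan elimination; the slides do not describe the method presented in the talk, and `solve_exact` is a hypothetical helper.

```python
from fractions import Fraction


def solve_exact(a, b):
    """Solve a x = b exactly over the rationals (Gauss-Jordan elimination)."""
    n = len(a)
    # Augmented matrix with exact rational entries.
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        m[col] = [v / m[col][col] for v in m[col]]
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return [row[n] for row in m]


if __name__ == "__main__":
    # 2x + y = 3 and x + 3y = 5
    print(solve_exact([[2, 1], [1, 3]], [3, 5]))
```

No rounding occurs at any step, at the price of numerators and denominators that can grow quickly for large systems.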

Statistical Algorithms
- Bayesian approach for upper limits and Confidence Levels (Zhu, Bitykov)
- Chi2 for comparison of weighted and unweighted histograms (N. Gagunashvili)
  - algorithm introduced in ROOT by D. Hertl last summer
- Machine learning approach to unfolding (N. Gagunashvili)
- Two dimensional goodness of fit testing (R. Lopes)

Plenary Sessions
- J. Schmidhuber: Recent Progress in Machine Learning
  - Recurrent Neural Networks
    - new feedback network: Long Short-Term Memory (LSTM)
    - various applications: robotics, speech recognition, time-series prediction, etc.
  - no slides posted, see his Web-site (google Schmidhuber or Recurrent Neural Networks)

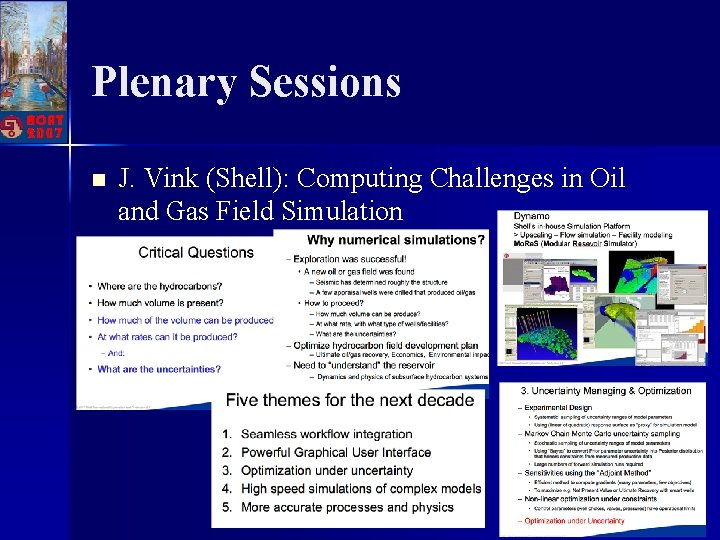

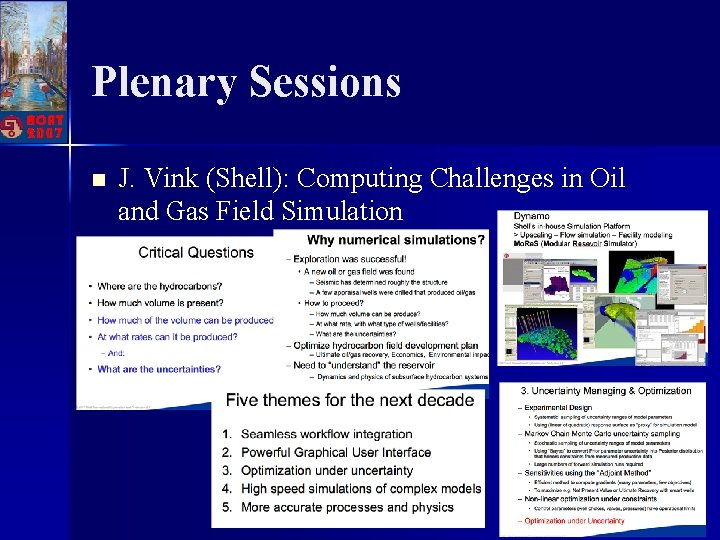

Plenary Sessions
- J. Vink (Shell): Computing Challenges in Oil and Gas Field Simulation

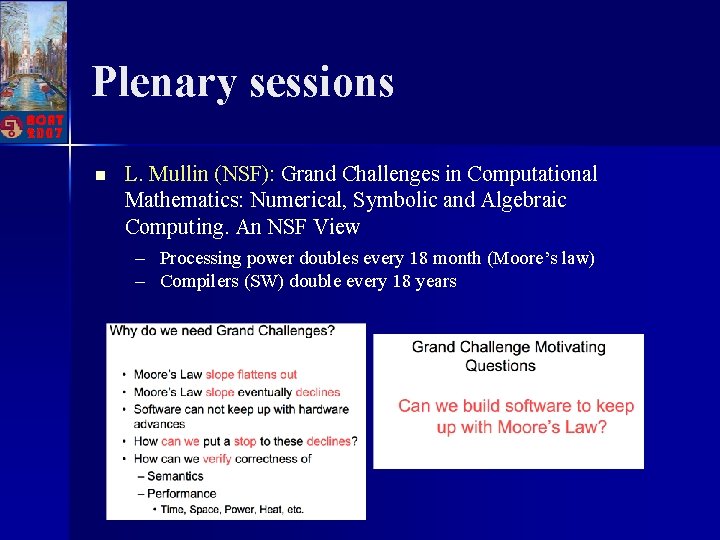

Plenary Sessions
- L. Mullin (NSF): Grand Challenges in Computational Mathematics: Numerical, Symbolic and Algebraic Computing. An NSF View
  - Processing power doubles every 18 months (Moore's law)
  - Compilers (SW) double every 18 years
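
The gap between the two doubling times is easier to appreciate as arithmetic. The snippet below simply compounds both rates over the same 18 years; `growth_factor` is a hypothetical helper and the two doubling times are taken from the slide.

```python
def growth_factor(years, doubling_time_years):
    """Factor gained after `years` if a quantity doubles every `doubling_time_years`."""
    return 2.0 ** (years / doubling_time_years)


hardware = growth_factor(18, 1.5)   # doubles every 18 months
software = growth_factor(18, 18)    # doubles every 18 years
print(hardware, software, hardware / software)
```

Over 18 years the hardware doubles 12 times (a factor of 4096) while the compilers double once, so the hardware gains a factor of 2048 more than the software does.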

Conclusion
- Proceedings on DVD with an ISBN number
- It was a very nice conference
  - Many interesting talks on various subjects
  - Useful discussions
- Well organized … with nice weather

Non-acat

Non-acat Amsterdam 27 april

Amsterdam 27 april Eric laenen

Eric laenen Nikhef

Nikhef Nikhef webmail

Nikhef webmail Acat roma

Acat roma Jrcptb msf

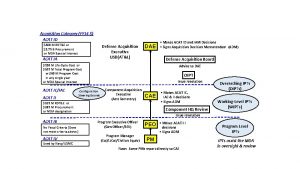

Jrcptb msf Acat id

Acat id Acat imt

Acat imt Highlights from the book of isaiah

Highlights from the book of isaiah The passage highlights……

The passage highlights…… Proposal highlights

Proposal highlights Highlights from the book of isaiah

Highlights from the book of isaiah Elements and principles of art

Elements and principles of art Highlights memorandum

Highlights memorandum Investment highlights

Investment highlights Sample of reflection in work immersion

Sample of reflection in work immersion Feature highlights

Feature highlights Life in new amsterdam

Life in new amsterdam Vwtv

Vwtv Economa amsterdam

Economa amsterdam Singel 425 amsterdam

Singel 425 amsterdam Eduarte rocva

Eduarte rocva Amsterdam bildirgesi

Amsterdam bildirgesi Leegstandverordening amsterdam

Leegstandverordening amsterdam Clint harman new amsterdam

Clint harman new amsterdam Citown

Citown Amsterdam opera house

Amsterdam opera house Messiaanse gemeente amsterdam

Messiaanse gemeente amsterdam Fcc amsterdam

Fcc amsterdam Carl jung amsterdam

Carl jung amsterdam Open universiteit amsterdam

Open universiteit amsterdam Debt agency amsterdam

Debt agency amsterdam Wapla

Wapla Hollanda corpus müzesi

Hollanda corpus müzesi Roz amsterdam

Roz amsterdam Amsterdam office market

Amsterdam office market Wetenschap en techniek amsterdam

Wetenschap en techniek amsterdam Bioinformatica amsterdam

Bioinformatica amsterdam Amsterdam news circulation

Amsterdam news circulation Fast net mon

Fast net mon Studentenwoningweb

Studentenwoningweb Stayz phillip island

Stayz phillip island What's happening these days

What's happening these days Walraven van hall monumento

Walraven van hall monumento Open library amsterdam

Open library amsterdam School trip amsterdam

School trip amsterdam