ACADEMIC IMPRESSIONS Online Course Design EVALUATION Jennifer Freeman

- Slides: 33


Evaluation: Session Goals
• Understand the difference between assessment and evaluation
• Define formative, summative and confirmative evaluation and understand the importance of each
• Explore theories and methods of evaluation
• Design a basic revision plan

Evaluation vs. Assessment
• Evaluation: measuring the quality and effectiveness of learning materials and activities
• Assessment: measuring students’ learning and achievement of goals and objectives

Course Evaluation: Why?
• Fix things that are broken
• Ensure learning outcomes are being achieved
• Discover causes of failures…proactively fix other problems
• Discover potential usability/accessibility issues
• What works in theory doesn’t always work in practice
• Constant maintenance and improvement of content and strategies
• Dynamic nature of online learning

What Do We Evaluate?
• Instructional materials’ alignment with objectives and the effectiveness of testing instruments
• Quality of instructional materials
• Quality of external resources
• Effectiveness of instructional strategies
• Usability of tools and technology
• Effectiveness of teaching skills
• Evaluation chart

Formative Evaluation of Instructional Materials
• Why?
  – Uncover problems early on; fix broken stuff (hopefully before students find it)
  – Identify potential usability/accessibility issues
  – Examine effectiveness and improve functionality
  – Dynamic nature of online learning
• What? When?
  – An ongoing process, usually done both during development and while the course is being taught
  – Asks the question, “How are we doing?”

Formative Evaluation of Instructional Materials
• Who will use this evaluation information?
  – Course development team
  – Instructor
• How? What should be evaluated?
  – Instructional materials
  – Instructional strategies
  – Use of tools and technology

Formative Evaluation: Questions to Ask
• Do learning activities and assessments align with the learning objectives?
• Do learning materials meet quality standards?
  – Are learning materials error-free?
  – Are learning materials accessible?
  – Are learning materials usable?
• Are the technology tools appropriate and working properly?

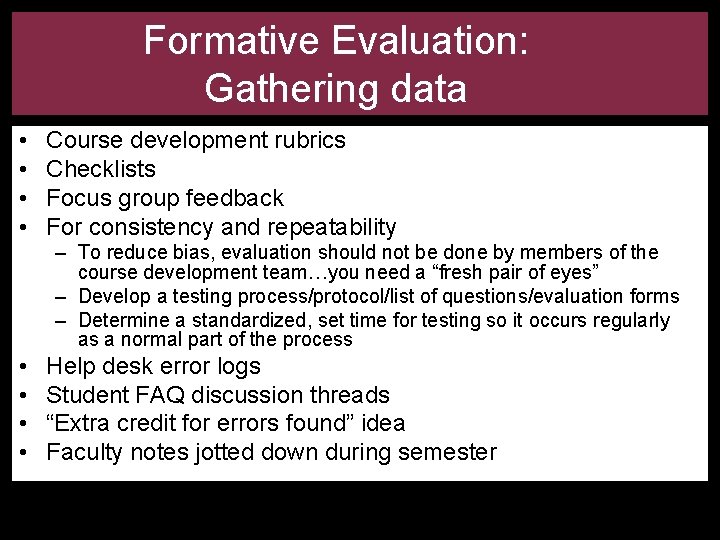

Formative Evaluation: Gathering data
• Course development rubrics
• Checklists
• Focus group feedback
• For consistency and repeatability:
  – To reduce bias, evaluation should not be done by members of the course development team…you need a “fresh pair of eyes”
  – Develop a testing process/protocol/list of questions/evaluation forms
  – Set a standard, regular time for testing so it becomes a normal part of the process
• Help desk error logs
• Student FAQ discussion threads
• “Extra credit for errors found” idea
• Faculty notes jotted down during the semester


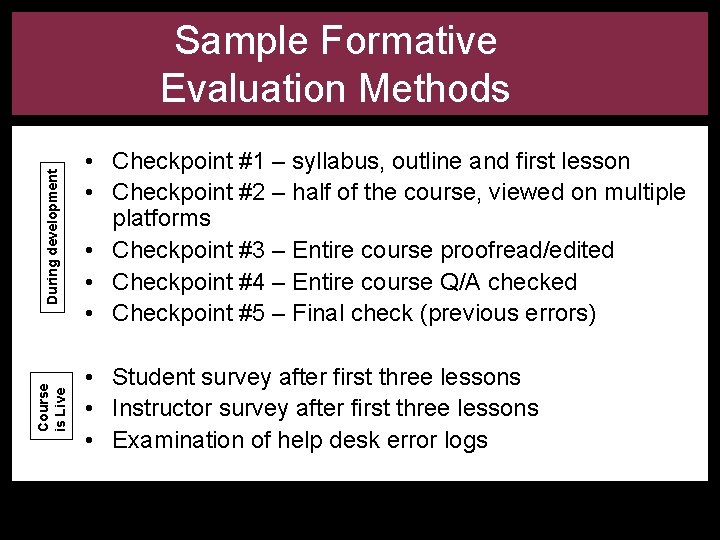

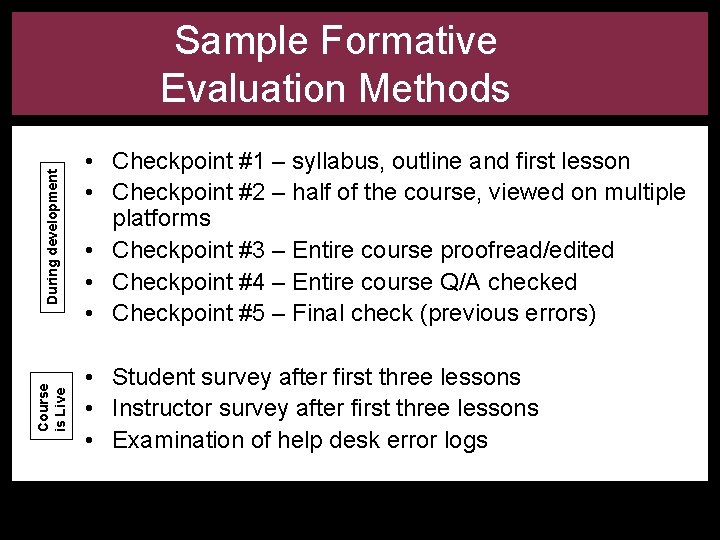

Sample Formative Evaluation Methods
• During development
  – Checkpoint #1: syllabus, outline and first lesson
  – Checkpoint #2: half of the course, viewed on multiple platforms
  – Checkpoint #3: entire course proofread/edited
  – Checkpoint #4: entire course quality-assurance (QA) checked
  – Checkpoint #5: final check of previously found errors
• Course is live
  – Student survey after the first three lessons
  – Instructor survey after the first three lessons
  – Examination of help desk error logs

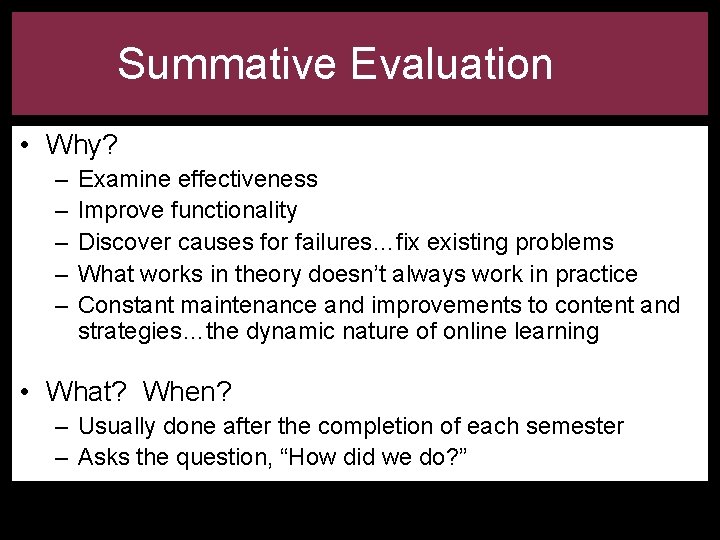

Summative Evaluation
• Why?
  – Examine effectiveness
  – Improve functionality
  – Discover causes of failures…fix existing problems
  – What works in theory doesn’t always work in practice
  – Constant maintenance and improvements to content and strategies…the dynamic nature of online learning
• What? When?
  – Usually done after the completion of each semester
  – Asks the question, “How did we do?”

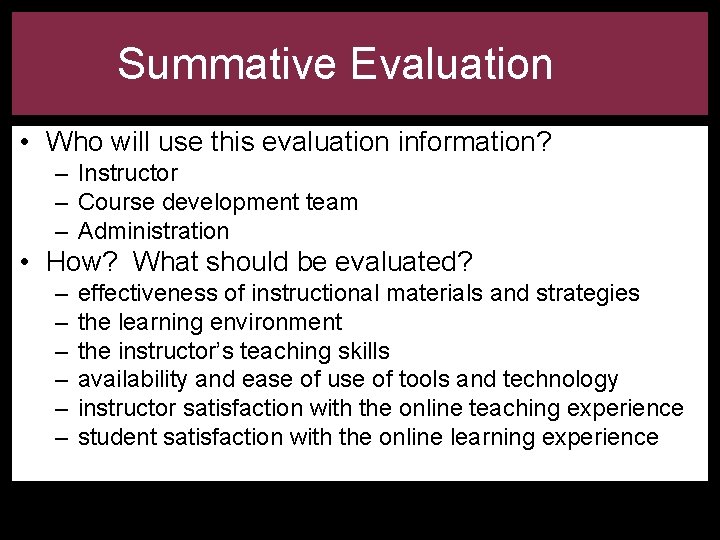

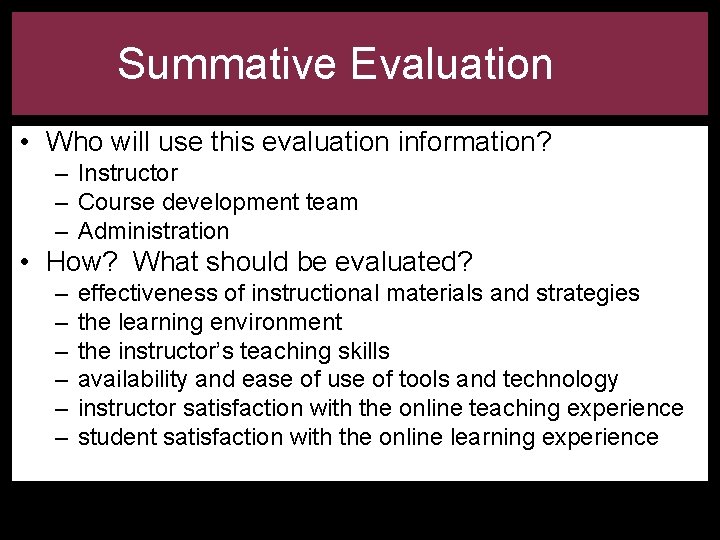

Summative Evaluation
• Who will use this evaluation information?
  – Instructor
  – Course development team
  – Administration
• How? What should be evaluated?
  – Effectiveness of instructional materials and strategies
  – The learning environment
  – The instructor’s teaching skills
  – Availability and ease of use of tools and technology
  – Instructor satisfaction with the online teaching experience
  – Student satisfaction with the online learning experience

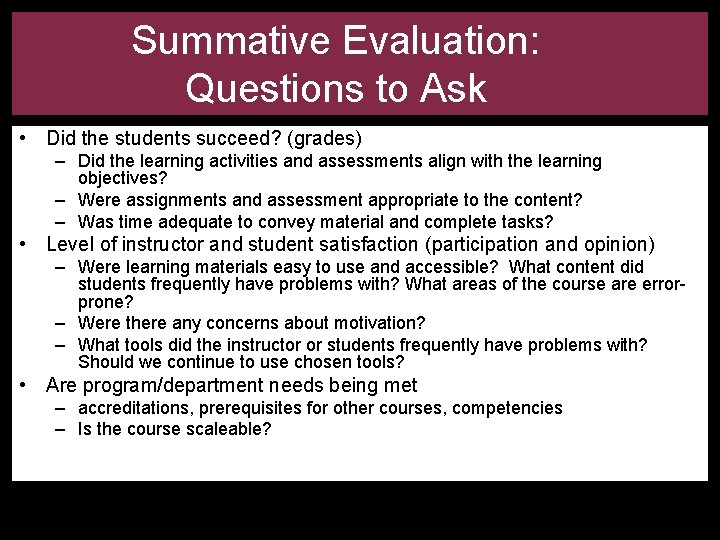

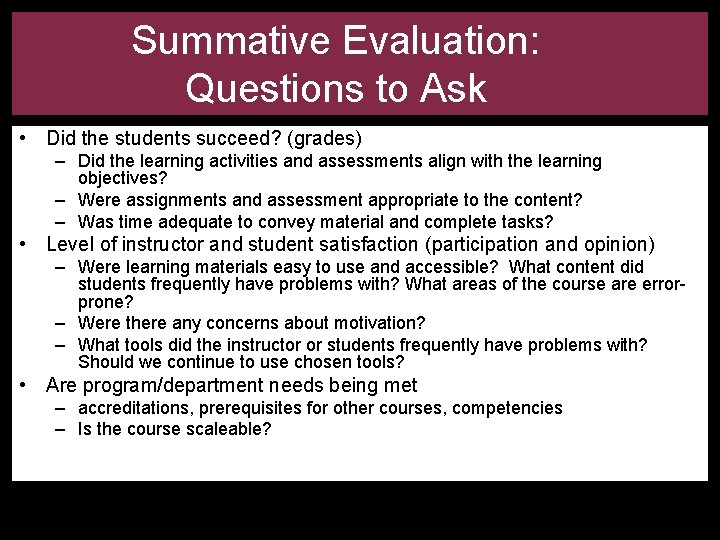

Summative Evaluation: Questions to Ask
• Did the students succeed? (grades)
  – Did the learning activities and assessments align with the learning objectives?
  – Were assignments and assessments appropriate to the content?
  – Was there adequate time to convey the material and complete tasks?
• Level of instructor and student satisfaction (participation and opinion)
  – Were learning materials easy to use and accessible? What content did students frequently have problems with? What areas of the course are error-prone?
  – Were there any concerns about motivation?
  – What tools did the instructor or students frequently have problems with? Should we continue to use the chosen tools?
• Are program/department needs being met (accreditations, prerequisites for other courses, competencies)?
  – Is the course scalable?

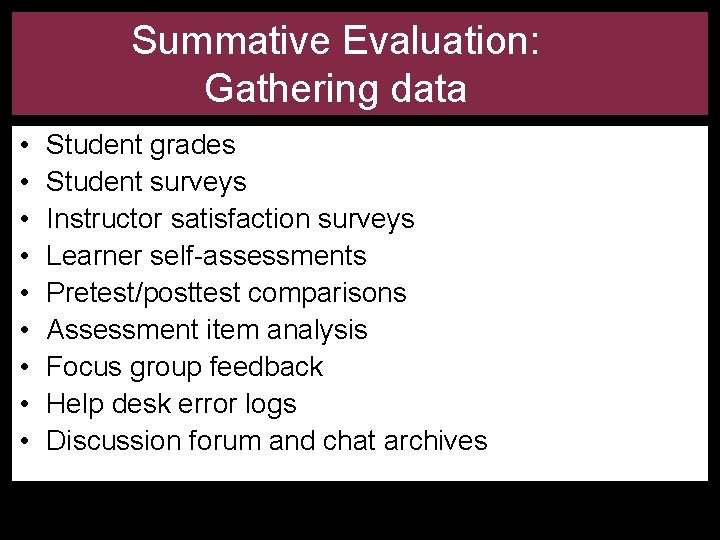

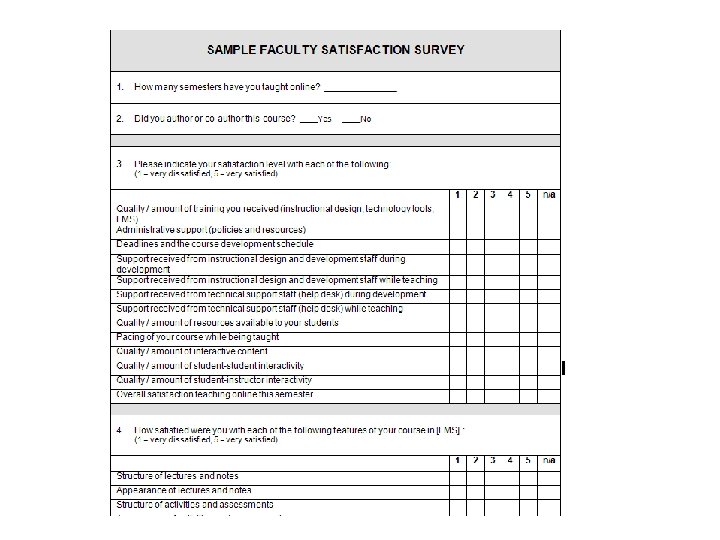

Summative Evaluation: Gathering data
• Student grades
• Student surveys
• Instructor satisfaction surveys
• Learner self-assessments
• Pretest/posttest comparisons
• Assessment item analysis
• Focus group feedback
• Help desk error logs
• Discussion forum and chat archives
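Two of these data sources, pretest/posttest comparisons and assessment item analysis, come down to simple arithmetic. The sketch below is a minimal Python illustration, not a prescribed method. It assumes item responses are scored 1 (correct) or 0 (incorrect). It uses three common conventions that the slides do not specify: the difficulty index (the share of students answering an item correctly), the discrimination index (upper-group minus lower-group proportion correct, with the top and bottom 27% of scorers as the groups), and the normalized gain ((post − pre) / (max − pre)). The function names and sample data are hypothetical.

```python
def difficulty_index(item: list[int]) -> float:
    """Proportion of students who answered the item correctly (1 = correct, 0 = incorrect)."""
    return sum(item) / len(item)


def discrimination_index(item: list[int], totals: list[float], fraction: float = 0.27) -> float:
    """Upper-group minus lower-group proportion correct on one item.

    Students are ranked by total test score; the top and bottom `fraction`
    of students form the upper and lower groups (27% is a common convention).
    """
    n = len(totals)
    k = max(1, round(n * fraction))
    ranked = sorted(range(n), key=lambda i: totals[i], reverse=True)
    upper = [item[i] for i in ranked[:k]]
    lower = [item[i] for i in ranked[-k:]]
    return sum(upper) / k - sum(lower) / k


def normalized_gain(pre: float, post: float, max_score: float = 100.0) -> float:
    """Fraction of the possible improvement actually achieved: (post - pre) / (max - pre)."""
    if pre >= max_score:
        raise ValueError("pretest score is already at the maximum")
    return (post - pre) / (max_score - pre)


if __name__ == "__main__":
    # Hypothetical data for ten students: 1/0 on one quiz item, plus total test scores.
    item = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
    totals = [95, 90, 88, 85, 80, 70, 65, 60, 55, 40]
    print(difficulty_index(item))                        # 0.5
    print(round(discrimination_index(item, totals), 2))  # 0.67
    print(normalized_gain(pre=40, post=70))              # 0.5
```

An item with a discrimination index near zero or below deserves a closer look. Strong students miss it as often as weaker ones, which often points to an ambiguous question or a faulty answer key.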

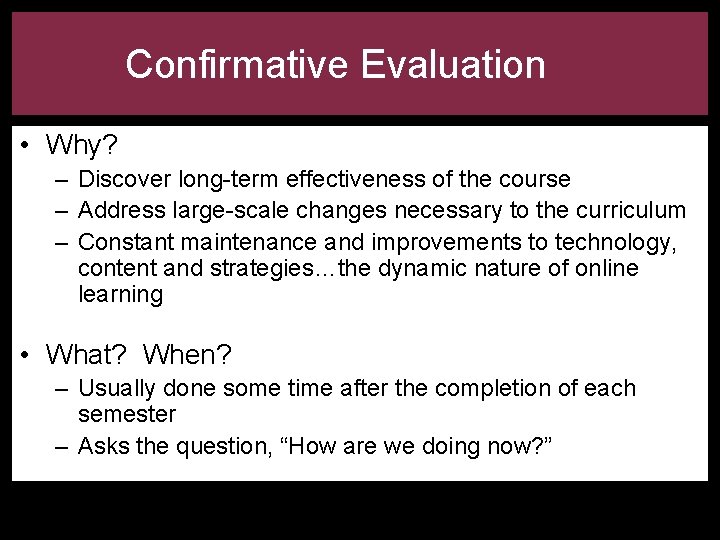

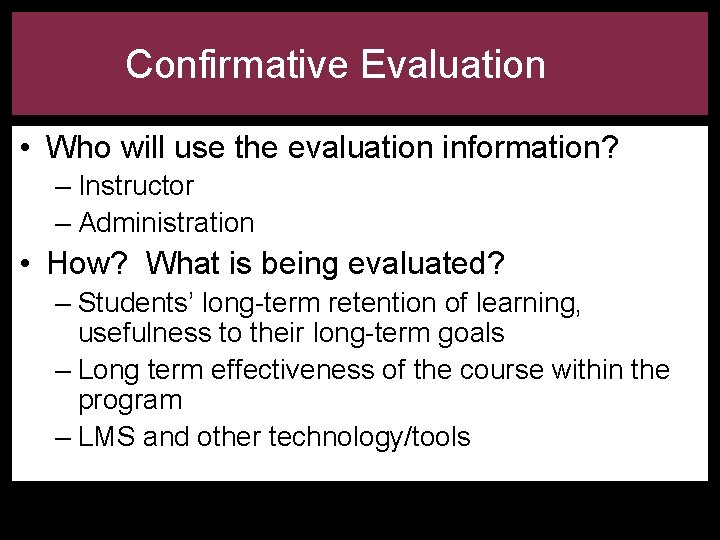

Confirmative Evaluation
• Why?
  – Discover long-term effectiveness of the course
  – Address large-scale changes necessary to the curriculum
  – Constant maintenance and improvements to technology, content and strategies…the dynamic nature of online learning
• What? When?
  – Usually done some time after the completion of each semester
  – Asks the question, “How are we doing now?”

Confirmative Evaluation
• Who will use the evaluation information?
  – Instructor
  – Administration
• How? What is being evaluated?
  – Students’ long-term retention of learning and the course’s usefulness to their long-term goals
  – Long-term effectiveness of the course within the program
  – LMS and other technology/tools

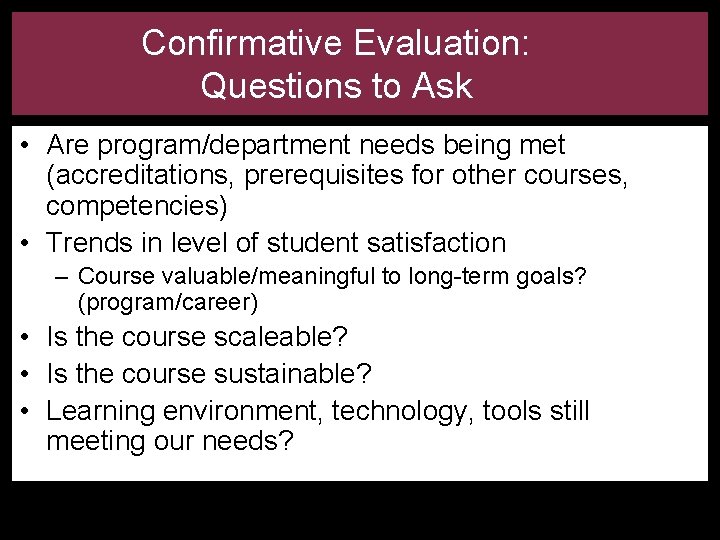

Confirmative Evaluation: Questions to Ask
• Are program/department needs being met (accreditations, prerequisites for other courses, competencies)?
• Trends in level of student satisfaction
  – Is the course valuable/meaningful to students’ long-term goals (program/career)?
• Is the course scalable?
• Is the course sustainable?
• Are the learning environment, technology and tools still meeting our needs?

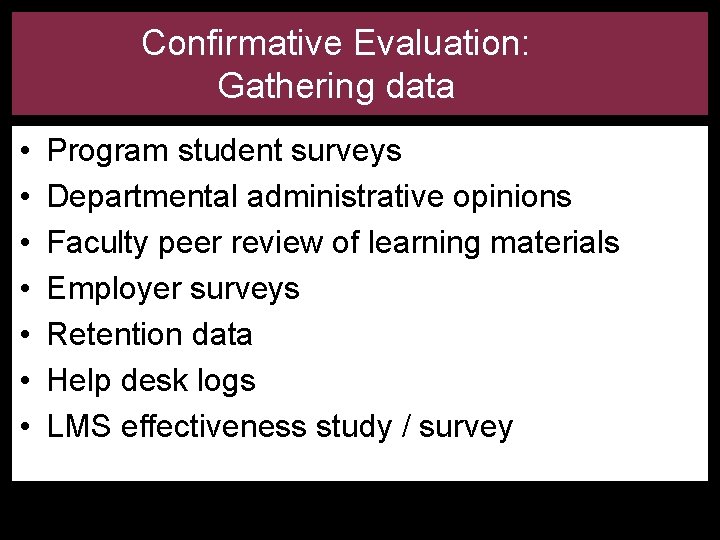

Confirmative Evaluation: Gathering data
• Program student surveys
• Departmental administrative opinions
• Faculty peer review of learning materials
• Employer surveys
• Retention data
• Help desk logs
• LMS effectiveness study / survey

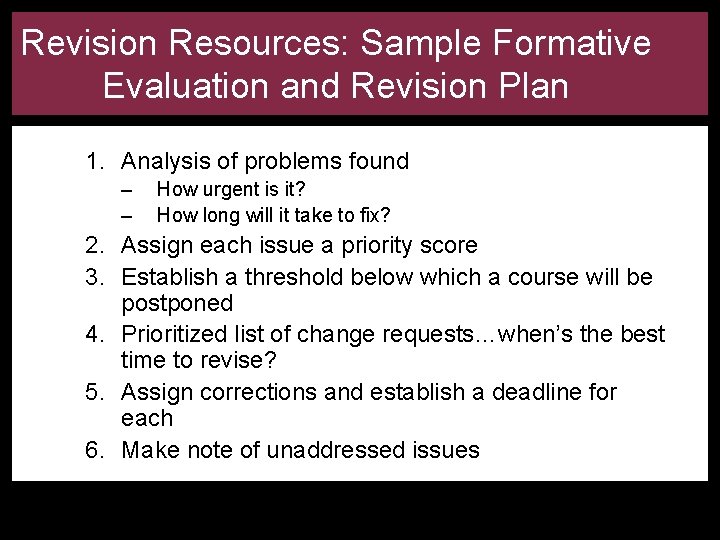

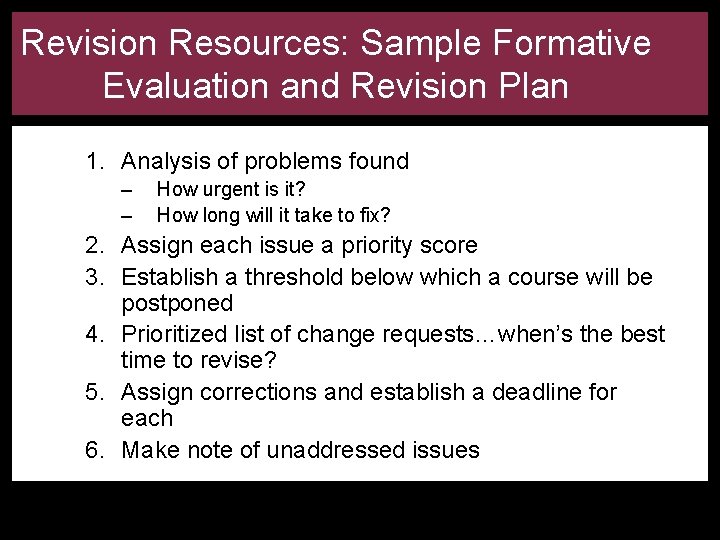

Revision Resources: Sample Formative Evaluation and Revision Plan
1. Analysis of problems found
   – How urgent is it?
   – How long will it take to fix?
2. Assign each issue a priority score
3. Establish a threshold below which a course will be postponed
4. Prioritized list of change requests…when’s the best time to revise?
5. Assign corrections and establish a deadline for each
6. Make note of unaddressed issues
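Steps 1 through 4 of this plan can be sketched in a few lines of Python. This is a minimal illustration under assumptions that are entirely hypothetical and not taken from the slides: urgency is rated on a 1–3 scale, effort is estimated in hours, the priority score lets urgency dominate while quicker fixes rank first within the same urgency, and "the threshold below which a course will be postponed" is read as a readiness score that starts at 100 and loses a fixed penalty for each open issue. The names `Issue`, `priority_score`, `readiness` and `revision_plan` are hypothetical as well.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    description: str
    urgency: int         # hypothetical scale: 1 = cosmetic, 2 = confusing, 3 = blocks learning
    hours_to_fix: float  # estimated effort


def priority_score(issue: Issue) -> float:
    # Hypothetical weighting: urgency dominates (steps of 10); effort, capped
    # at 9 hours, only breaks ties so quick fixes rank first within a level.
    return issue.urgency * 10 - min(issue.hours_to_fix, 9)


def readiness(open_issues: list[Issue]) -> int:
    # Hypothetical readiness score: start at 100, subtract a penalty per open issue.
    penalty = {1: 1, 2: 5, 3: 20}
    return 100 - sum(penalty[issue.urgency] for issue in open_issues)


def revision_plan(issues: list[Issue], threshold: int = 80) -> tuple[list[Issue], bool]:
    """Return the prioritized change list and whether the course should be postponed."""
    ranked = sorted(issues, key=priority_score, reverse=True)
    postpone = readiness(issues) < threshold
    return ranked, postpone


if __name__ == "__main__":
    issues = [
        Issue("Typo in Module 2 lecture notes", 1, 0.25),
        Issue("Broken audio in Module 4 presentation", 3, 2),
        Issue("Wrong answer key on Quiz 3", 3, 0.5),
        Issue("Unclear project instructions", 2, 1),
    ]
    ranked, postpone = revision_plan(issues)
    for issue in ranked:
        print(f"{priority_score(issue):5.2f}  {issue.description}")
    print("Postpone course:", postpone)
```

With this sample list, the two urgency-3 issues come first, and the quicker answer-key fix ranks ahead of the audio repair. The readiness score of 54 falls below the threshold of 80, so under these assumptions the course would be postponed. The weights and penalties are placeholders that each team would set for itself.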

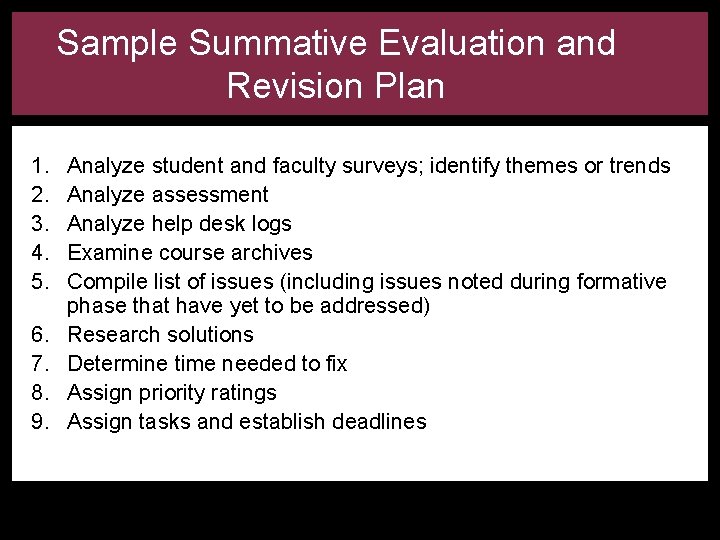

Sample Summative Evaluation and Revision Plan
1. Analyze student and faculty surveys; identify themes or trends
2. Analyze assessments
3. Analyze help desk logs
4. Examine course archives
5. Compile a list of issues (including issues noted during the formative phase that have yet to be addressed)
6. Research solutions
7. Determine the time needed to fix each issue
8. Assign priority ratings
9. Assign tasks and establish deadlines

Revision Activity: Music Appreciation
• Ten modules, each including lecture notes, one or more PowerPoint presentations with audio, a graded listening quiz and a synchronous chat requirement
• Project: “My Favorite Composer” biography assignment, submitted to the instructor as a Word document
• Midterm: multiple-choice exam
• Final: multiple-choice exam

Common LMS Evaluation Criteria
• Are costs rising at a reasonable rate?
• Are server space and maintenance needs being met?
• Are the vendor and software in compliance with required standards?
• How reliable has the system been?
• Have there been any security concerns?
• What level of customization is possible within the system?
• Satisfied with the structure and presentation of courses?
• Satisfied with the authoring tools provided?
• Satisfied with the tracking capabilities of the system?
• Satisfied with the testing engine and/or assessment tools available in the system?

Common LMS Evaluation Criteria
• Satisfied with the collaboration tools (discussion areas, journaling, help desk, whiteboard) provided through the system?
• Satisfied with the productivity tools (calendar, help files, search engine) provided through the system?
• Is student/faculty/staff documentation or training sufficient?
• How usable do students, faculty and staff find the tools?
• What is the vendor’s reputation in the industry?
• What is the vendor’s position in the industry?

What We’ve Learned
• The difference between assessment and evaluation
• Definitions of formative, summative and confirmative evaluation and the importance of each
• Methods of evaluation
• Sample evaluation and revision plans

Jennifer Freeman
jfreeman@utsystem.edu
