AASPI Software Computational Environment
Tim Kwiatkowski
Welcome Consortium Members
November 10, 2009

Overview
• Hardware
  • Clusters
  • Multiprocessor / Multi-core
• Software
  • Computational Environment
  • Compilers
  • Libraries
  • Graphics
  • Software Design
  • Directory Layout
• The Future

Hardware − Clusters
• The AASPI software was originally designed to run on UNIX/Linux clusters using MPI (Message Passing Interface).
• Large granularity: each process does a lot of computation per message exchanged, so there is no need for expensive interconnects. Gigabit Ethernet is sufficient.
• Depending on its size, a cluster can be difficult to administer.
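To illustrate what large granularity can look like in practice, here is a minimal C++ sketch. It assumes the work is a list of independent items (for example, seismic lines) split into one contiguous block per MPI rank. The helper `block_range` is hypothetical and is not taken from the AASPI source. No MPI calls are made; the sketch shows only the index arithmetic a rank might do once it knows its rank and the total number of ranks.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>

// Half-open range [first, last) of work items owned by one rank.
// Hypothetical helper for illustration; not part of the AASPI code base.
std::pair<std::size_t, std::size_t> block_range(std::size_t n_items,
                                                int rank, int n_ranks) {
    assert(n_ranks > 0 && rank >= 0 && rank < n_ranks);
    const std::size_t p = static_cast<std::size_t>(n_ranks);
    const std::size_t r = static_cast<std::size_t>(rank);
    const std::size_t base = n_items / p;   // every rank gets at least this many
    const std::size_t extra = n_items % p;  // the first `extra` ranks get one more
    const std::size_t first = r * base + (r < extra ? r : extra);
    const std::size_t count = base + (r < extra ? 1 : 0);
    return {first, first + count};
}
```

With 10 items on 4 ranks, the ranks own [0, 3), [3, 6), [6, 8) and [8, 10). Each rank can then process its block without talking to the others until results are gathered, which is why a modest interconnect can be enough.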

Hardware − Multiprocessor / Multi-core
• Newer multi-core processors have become available
• Currently no explicit multi-threading
• MPI using “Loopback” communication
• Simpler to administer
• Can be grown into a cluster

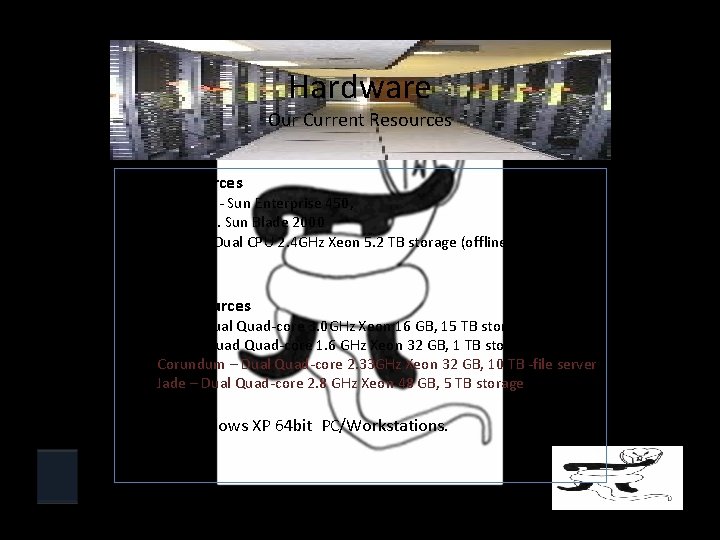

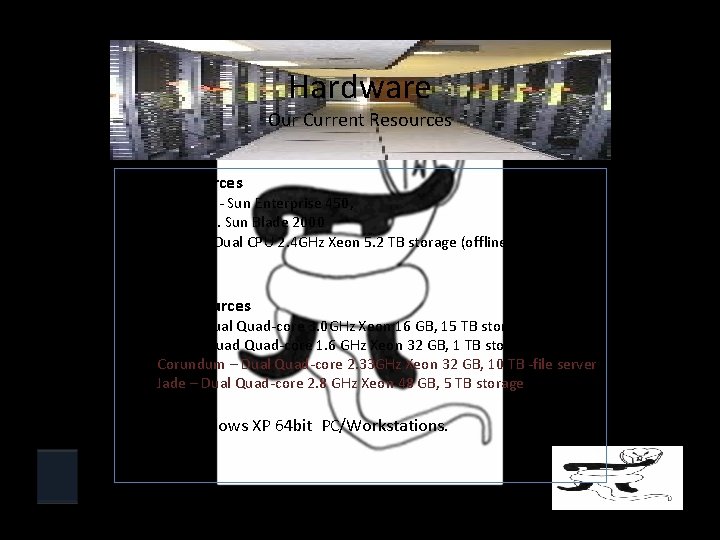

Hardware − Our Current Resources
Older Resources
• diamond - Sun Enterprise 450, 2 ea. Sun Blade 2000
• fluorite - Dual CPU 2.4 GHz Xeon, 5.2 TB storage (offline)
Newer Resources
• Opal - Dual Quad-core 3.0 GHz Xeon, 16 GB, 15 TB storage
• Ruby - Quad-core 1.6 GHz Xeon, 32 GB, 1 TB storage
• Corundum - Dual Quad-core 2.33 GHz Xeon, 32 GB, 10 TB (file server)
• Jade - Dual Quad-core 2.8 GHz Xeon, 48 GB, 5 TB storage
• 22 Windows XP 64-bit PCs/workstations

Hardware − Our Current Resources – Cluster Resources
Muntu
• 1 management node, 1 head node, 16 compute nodes
• Each node: 3.06 GHz dual processor, 4 GB RAM
• Total disk storage: ~2 TB
OSCER (Oklahoma Supercomputing Center for Education & Research), www.oscer.ou.edu
• As a whole: 531 user-accessible nodes, 120 TB fast scratch storage, 34450 GFlop peak, 28030 GFlop sustained
• Our own dedicated OSCER nodes / storage:
  • Dual Quad-core (3 ea. 2.33 GHz, 3 ea. 2.66 GHz), 16 GB RAM
  • Storage node - Dual Quad-core 2.33 GHz, 16 GB RAM, 18 TB disk storage

Hardware − Recommendations
What type of hardware do I need to run the AASPI software?
The short answer: It depends.
Entry-level suggestion:
• Dual or Quad-core, 2.5 GHz+
• 2 GB/core
• >2 TB disk capacity
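The per-core memory figure turns into a machine total with one multiplication. The C++ sketch below shows that arithmetic; the function name `suggested_ram_gb` is made up for illustration, and the 2 GB default is just the entry-level figure from the slide, not a hard requirement.

```cpp
#include <cassert>

// RAM (in GB) implied by a "GB per core" rule of thumb.
// Hypothetical helper; the 2 GB default is the entry-level suggestion above.
double suggested_ram_gb(int cores, double gb_per_core = 2.0) {
    assert(cores > 0 && gb_per_core > 0.0);
    return cores * gb_per_core;
}
```

For example, a quad-core machine comes out at 8 GB and a dual quad-core machine (8 cores) at 16 GB, which matches the 16 GB configuration listed for Opal among the current resources.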

Software Environment − OS
Operating System
• As shipped, the AASPI software is pre-compiled. The binaries should work on most Red Hat 4 Release 4 and higher installations.
• Some needed packages: blas, lapack, libf2c, bzip2-libs, zlib, the X11 packages for running the GUI, Mesa-libGLU

Software Environment − 64 vs. 32 bit
• SEPlib is compiled as 32-bit code, so all of the AASPI computational code is compiled as 32-bit as well.
• The AASPI GUI code is currently compiled as a 64-bit executable. This is not necessary, but it is what is included in our release.
• We would like to support both architectures, with a preference for 64-bit.
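One practical consequence of a 32-bit build is the size of the address space a single process can use. The C++ sketch below is illustrative only: both function names are invented here, and the result is a theoretical upper bound (operating systems typically leave a 32-bit process less than the full 4 GiB).

```cpp
#include <cassert>
#include <climits>
#include <cstdint>

// Pointer width, in bits, of the build this code is compiled into.
unsigned pointer_bits() {
    return static_cast<unsigned>(sizeof(void*) * CHAR_BIT);
}

// Upper bound on bytes addressable with flat pointers of the given width.
// Restricted to widths below 64 so the shift cannot overflow.
std::uint64_t address_space_bytes(unsigned bits) {
    assert(bits < 64);
    return std::uint64_t{1} << bits;
}
```

`address_space_bytes(32)` is 4294967296 bytes (4 GiB), so a 32-bit computational code cannot hold a larger volume in memory at once no matter how much RAM the machine has. Calling `pointer_bits()` in a test program is a quick way to confirm which kind of build a given compiler setup produces.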

Software Environment − Compilers
We have chosen to pre-compile the AASPI software to make your life easier. However, IF you are compiling on your own…
• Required: A good Fortran 90 compiler such as the Portland Group Fortran compiler or the Intel Fortran 90/95 compiler. We use the Intel Fortran compiler.
• Required: A good C/C++ compiler. GCC is fine.
• Required: Patience! Most of the compiling issues come from the 3rd-party packages!

Software Environment − Libraries
The software depends on several external libraries:
• Seismic Unix (Center for Wave Phenomena - Colorado School of Mines)
• SEPlib (Stanford Exploration Project)
• OpenMPI (we have used MPICH in the past)
• FFTW (mostly version 2 at present; migrating to version 3)
• LAPACK & BLAS (the Intel Math Kernel Library could be used as a substitute)
• The FOX Toolkit (GUI and seismic data display)

Software Environment − Graphics
Now we have a GUI. It’s X-Windows based. How do we use it?
Some Solutions
• Use a desktop Linux workstation
• Use a Mac
• ThinAnywhere
• VNC
• Hummingbird Exceed
• Xming
• Cygwin

Software Design Practices/Goals
• Use modern programming languages
  • Fortran 90/95
  • C/C++
• Modular Design
  • Maximize code re-use
  • Use Fortran 90/95 modules/interfaces
  • Use C++ classes/template programming
• Libraries
  • Organize processes/functions into logical, reusable libraries

Software Layout
• Precompiled binaries
• Non-AASPI package compiled libraries
• Non-AASPI RPMs
• Non-AASPI packages - source
• AASPI include files (along with others)
• AASPI libraries & other shared libraries
• AASPI man pages
• AASPI source code
• Scripts - program wrappers and utilities

The Future
Replacing SEPlib - You’ve heard this before.
• We were planning to move to Madagascar (RSF).
• SEPlib continues to limit our flexibility.
• For the time being, we will continue to use the SEP tools (especially visualization).
• Initial plans will modify the SEG-Y import/export and internal file access.
• We still plan to support the SEP (RSF) format, but use our own core libraries.
MS Windows? - Perhaps…
• All of our core code should be multiplatform.
• MPI is available on Windows platforms via cluster services.
• The main issues are with our dependencies: Seismic Unix and SEPlib.

Future Computing − GPU Research
• We are experimenting with the newest generation of equipment: GPUs (Graphics Processing Units).
• Currently, we are working with CUDA (Compute Unified Device Architecture, from nVidia).
• The software development target for GPU processing will be the desktop PC, most likely as plug-ins for Petrel.
• Certain codes may lend themselves more naturally to GPU processing than others.

Special Thanks
Ha T. Mai
For the last year, Ha has been responsible for most of the code packaging and releases as well as the GUI development.

AASPI Software Computational Environment
Tim Kwiatkowski
Thank You! Questions?