A User Driven Dynamic Circuit Network Implementation

- Slides: 21

A User Driven Dynamic Circuit Network Implementation
Evangelos Chaniotakis
Network Engineering Group, Energy Sciences Network
Lawrence Berkeley National Laboratory
DANMS 2008, November 30 2008
Networking for the Future of Science

Contents
• Introduction
• ESnet Network Architecture
• Virtual Circuit Implementation
• User-Driven VCs
• Layer 2 and 3 support
• Path Computation
• Authentication and Authorization
• Oversubscription and soft reservations
• Collaboration
• Network use
• Future work
• Acknowledgments
• Questions

Introduction
• ESnet's mission: provide the network infrastructure for DOE researchers
• Rapid growth in scientific computing
• Highly distributed collaboration reaching the global scale
  – LHC, e-VLBI
• Distribution of large data sets becoming more and more common (40 Tb/day projected for LHC)
• ESnet must reliably and economically accommodate large flows and regular Internet traffic
• But: large flows don't work too well with TCP/IP
• Our solution: isolate large flows into VCs
• Provides predictable bandwidth, allows impolite protocols without disruption to other traffic

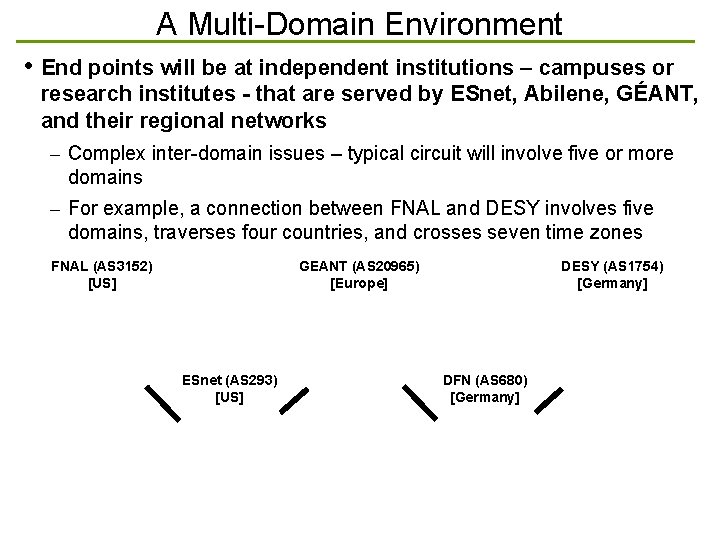

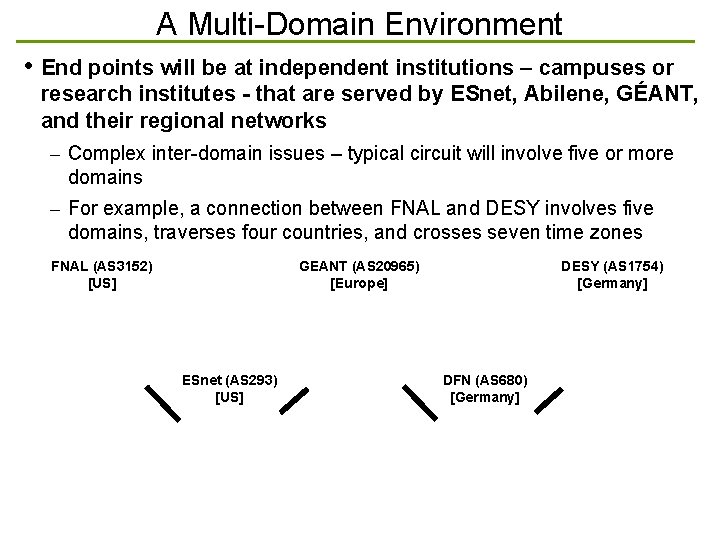

A Multi-Domain Environment
• End points will be at independent institutions (campuses or research institutes) that are served by ESnet, Abilene, GÉANT, and their regional networks
  – Complex inter-domain issues: a typical circuit will involve five or more domains
  – For example, a connection between FNAL and DESY involves five domains, traverses four countries, and crosses seven time zones
• Domains in the FNAL to DESY example: FNAL (AS 3152) [US], ESnet (AS 293) [US], GEANT (AS 20965) [Europe], DFN (AS 680) [Germany], DESY (AS 1754) [Germany]

ESnet Network Architecture
• A core 10G best-effort IP network
• A logically distinct Science Data Network (SDN)
• Virtual circuits are generally engineered and provisioned only on SDN links
• Engineered OSPF metrics ensure that best-effort traffic uses the IP core and avoids the SDN
• In case of IP network bifurcation, the SDN will be used by best-effort traffic
• QoS is used to engineer this backup mechanism

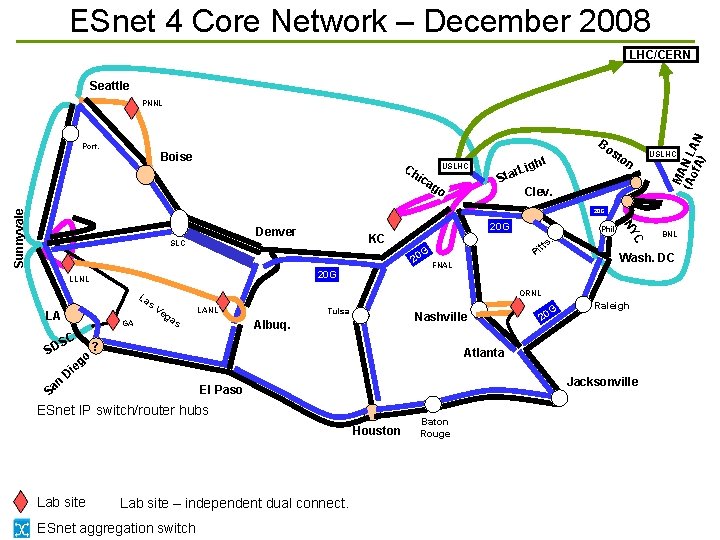

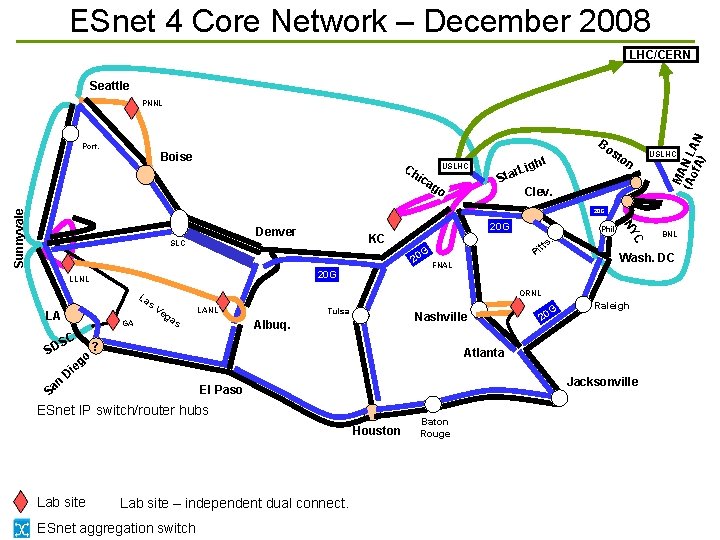

ESnet4 Core Network – December 2008
[Network map. Legend: ESnet IP switch/router hubs; ESnet aggregation switch; Lab site; Lab site with independent dual connect. Several core links are labeled 20G. Locations shown include Seattle, Boise, Chicago, Boston, Denver, SLC, KC, Sunnyvale, LA, San Diego, Las Vegas, Albuquerque, Tulsa, Nashville, El Paso, Houston, Baton Rouge, Atlanta, Jacksonville, Raleigh, Wash. DC, and the PNNL, LLNL, LANL, FNAL, ORNL and BNL lab sites, with USLHC and LHC/CERN connections.]

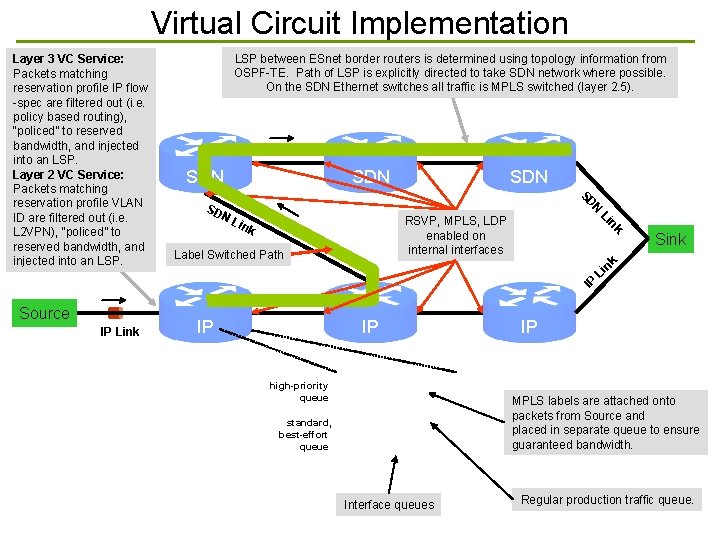

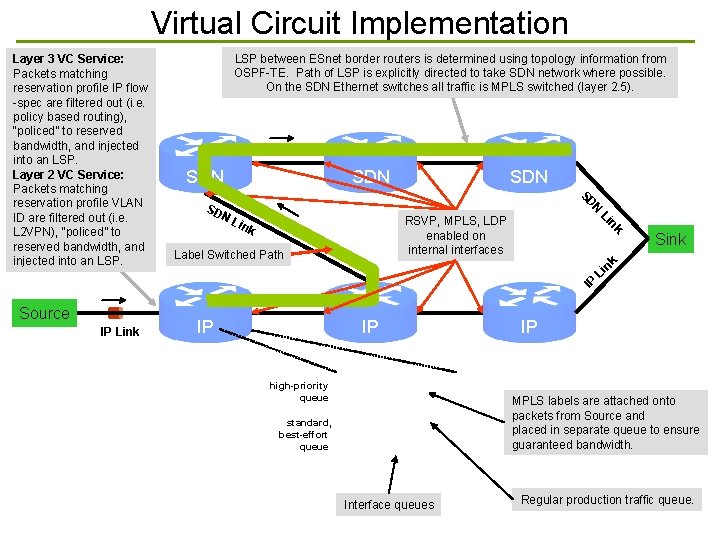

Virtual Circuit Implementation
• The LSP between ESnet border routers is determined using topology information from OSPF-TE. The path of the LSP is explicitly directed to take the SDN network where possible.
• On the SDN Ethernet switches all traffic is MPLS switched (layer 2.5).
• RSVP, MPLS, LDP enabled on internal interfaces
• Layer 3 VC Service: packets matching the reservation profile IP flow-spec are filtered out (i.e. policy based routing), “policed” to reserved bandwidth, and injected into an LSP.
• Layer 2 VC Service: packets matching the reservation profile VLAN ID are filtered out (i.e. L2VPN), “policed” to reserved bandwidth, and injected into an LSP.
• MPLS labels are attached onto packets from Source and placed in a separate queue to ensure guaranteed bandwidth.
• Interface queues:
  – high-priority queue
  – standard, best-effort queue (regular production traffic)
[Diagram: Label Switched Path from Source to Sink across IP links and SDN links]

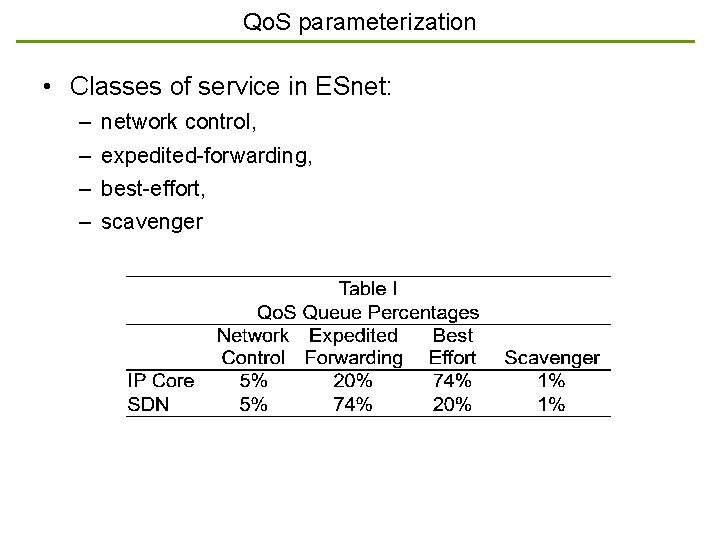

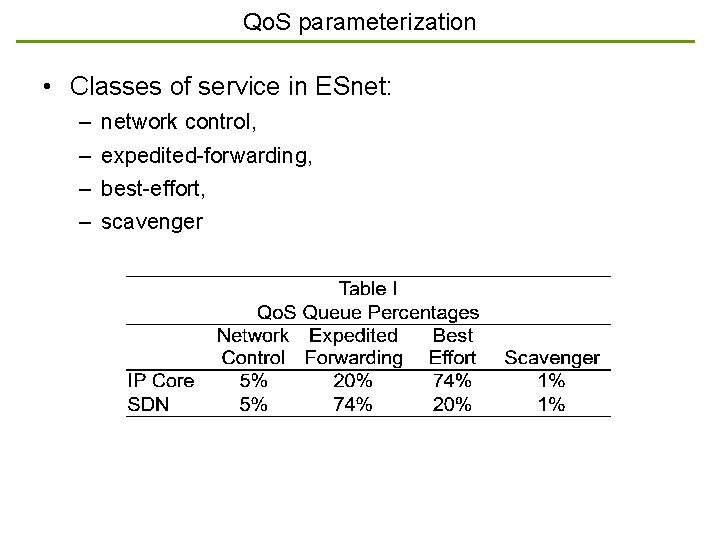

QoS parameterization
• Classes of service in ESnet:
  – network control
  – expedited-forwarding
  – best-effort
  – scavenger

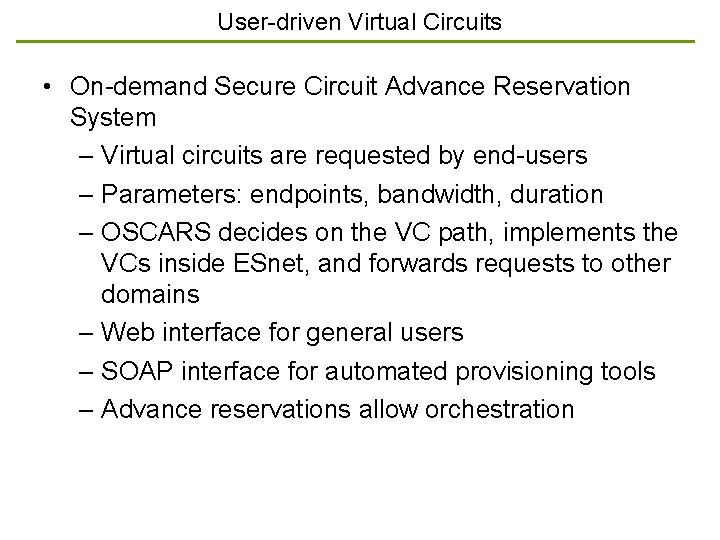

User-driven Virtual Circuits
• On-demand Secure Circuit Advance Reservation System (OSCARS)
  – Virtual circuits are requested by end-users
  – Parameters: endpoints, bandwidth, duration
  – OSCARS decides on the VC path, implements the VCs inside ESnet, and forwards requests to other domains
  – Web interface for general users
  – SOAP interface for automated provisioning tools
  – Advance reservations allow orchestration

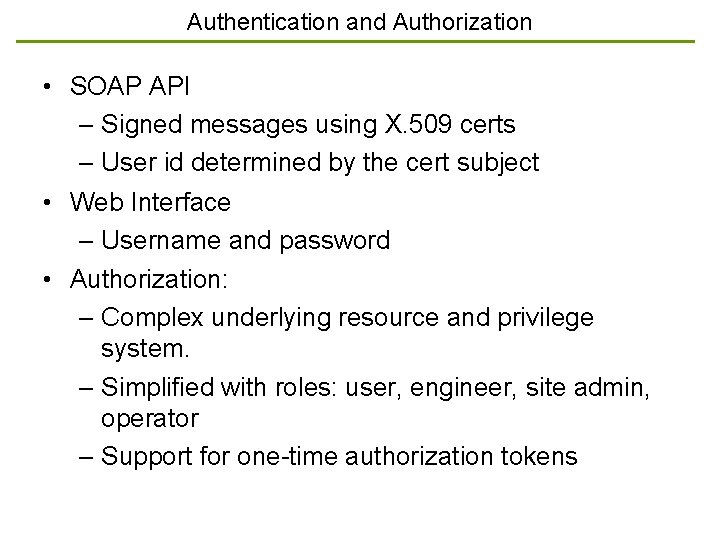

Authentication and Authorization
• SOAP API
  – Signed messages using X.509 certs
  – User id determined by the cert subject
• Web Interface
  – Username and password
• Authorization:
  – Complex underlying resource and privilege system
  – Simplified with roles: user, engineer, site admin, operator
  – Support for one-time authorization tokens

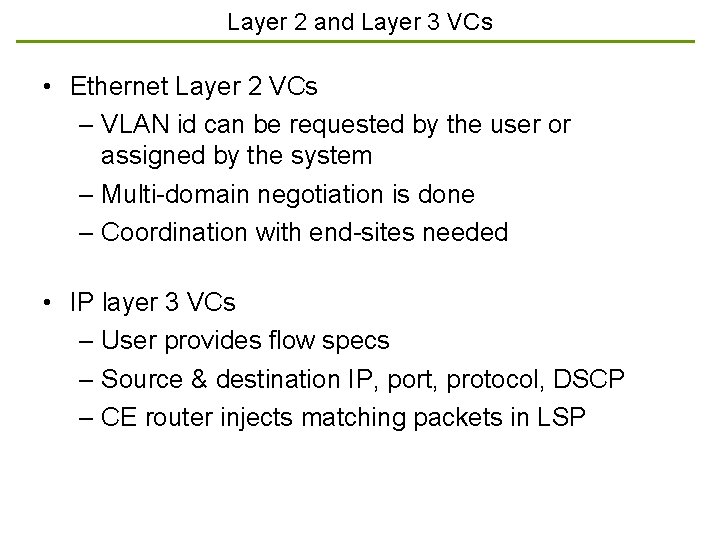

Layer 2 and Layer 3 VCs
• Ethernet Layer 2 VCs
  – VLAN id can be requested by the user or assigned by the system
  – Multi-domain negotiation is done
  – Coordination with end-sites needed
• IP layer 3 VCs
  – User provides flow specs
  – Source & destination IP, port, protocol, DSCP
  – CE router injects matching packets in LSP
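The layer 3 flow-spec matching described on this slide can be sketched in a few lines. This is only an illustration, not OSCARS or router code: the `FlowSpec` class, its field names, and the dictionary packet representation are all assumptions made for the example, and the addresses come from documentation ranges.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class FlowSpec:
    """Hypothetical flow spec: source/destination prefixes plus optional filters."""
    src: str                        # source prefix in CIDR notation
    dst: str                        # destination prefix in CIDR notation
    protocol: Optional[str] = None  # e.g. "tcp"; None means "any"
    src_port: Optional[int] = None
    dst_port: Optional[int] = None
    dscp: Optional[int] = None

    def matches(self, pkt: dict) -> bool:
        """Return True if the packet should be injected into the VC's LSP."""
        if ipaddress.ip_address(pkt["src"]) not in ipaddress.ip_network(self.src):
            return False
        if ipaddress.ip_address(pkt["dst"]) not in ipaddress.ip_network(self.dst):
            return False
        # Optional fields only constrain the match when they are set.
        for name in ("protocol", "src_port", "dst_port", "dscp"):
            wanted = getattr(self, name)
            if wanted is not None and pkt.get(name) != wanted:
                return False
        return True


spec = FlowSpec(src="192.0.2.0/24", dst="198.51.100.10/32",
                protocol="tcp", dst_port=5001)
in_profile = {"src": "192.0.2.7", "dst": "198.51.100.10",
              "protocol": "tcp", "src_port": 40000, "dst_port": 5001}
other = dict(in_profile, dst_port=22)

print(spec.matches(in_profile))  # True: goes into the LSP
print(spec.matches(other))       # False: stays on the best-effort path
```

Packets that do not match are simply left to normal IP routing, which is why a reservation does not disturb regular traffic from the same hosts.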

Path Computation
• OSCARS periodically harvests full topology information for ESnet
• When a path needs to be computed for a new VC request, a topology graph is populated from that data as well as all concurrent VCs
• Then, all links that cannot satisfy the new VC are pruned
• Finally, a Dijkstra shortest-path algorithm is run on the pruned graph
• The base graph currently stands at ~1000 nodes and 1500 edges
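The prune-then-Dijkstra procedure above can be sketched as follows. This is a minimal illustration under stated assumptions, not the OSCARS implementation: the data layout (undirected links keyed by a sorted node pair, reservations as dictionaries) and the function name `compute_path` are invented for the example. It also sums all reservations that overlap the requested window, which is conservative.

```python
import heapq


def compute_path(links, reservations, src, dst, bandwidth, start, end):
    """links: {(a, b): (capacity, metric)} with a < b; returns a node list or None."""
    # Step 1: bandwidth already committed per link by VCs concurrent
    # with the requested [start, end) window.
    committed = {}
    for r in reservations:
        if r["start"] < end and start < r["end"]:
            for link in r["links"]:
                committed[link] = committed.get(link, 0) + r["bandwidth"]

    # Step 2: prune links that cannot carry the new VC.
    graph = {}
    for (a, b), (capacity, metric) in links.items():
        if capacity - committed.get((a, b), 0) >= bandwidth:
            graph.setdefault(a, []).append((b, metric))
            graph.setdefault(b, []).append((a, metric))

    # Step 3: Dijkstra shortest path on the pruned graph.
    dist, prev, heap = {src: 0}, {}, [(0, src)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == dst:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale heap entry
        for nbr, metric in graph.get(node, []):
            nd = d + metric
            if nd < dist.get(nbr, float("inf")):
                dist[nbr], prev[nbr] = nd, node
                heapq.heappush(heap, (nd, nbr))
    if dst not in dist:
        return None
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


# Capacities in Mbps; A-B-C is the short path, A-D-C the detour.
links = {("A", "B"): (10000, 1), ("B", "C"): (10000, 1),
         ("A", "D"): (10000, 2), ("C", "D"): (10000, 2)}
reservations = [{"links": [("A", "B")], "bandwidth": 8000, "start": 0, "end": 100}]

print(compute_path(links, reservations, "A", "C", 5000, 50, 150))   # ['A', 'D', 'C']
print(compute_path(links, reservations, "A", "C", 5000, 100, 200))  # ['A', 'B', 'C']
```

In the first request the existing VC leaves only 2000 Mbps on A-B, so that link is pruned and the detour is chosen. The second request starts after the existing VC ends, so the short path is available again.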

Automated Device Configuration
• After a VC has been reserved the network devices must be configured
• Cisco and Juniper platforms are supported
• Users can use the SOAP API to signal VC setup and teardown
• OSCARS has a scheduler component that periodically checks for pending configuration tasks
• A platform-specific configuration template is filled out and pushed to the routers
• Currently 10-100 seconds are needed to instantiate a circuit in this manner
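The scheduler and template steps above might look roughly like the sketch below. Everything in it is hypothetical: the platform keys `vendor-a` and `vendor-b`, the template text (which is deliberately not real Cisco or Juniper syntax), the task fields, and the `push` callback are assumptions chosen only to show the shape of the mechanism.

```python
from string import Template

# Hypothetical per-platform templates; NOT real router configuration syntax.
TEMPLATES = {
    "vendor-a": Template("create-circuit name=$name bandwidth=${mbps}m path=$path\n"),
    "vendor-b": Template("circuit $name\n bandwidth $mbps\n explicit-path $path\n"),
}


def render_config(platform, circuit):
    """Fill out the platform-specific template for one circuit."""
    return TEMPLATES[platform].substitute(
        name=circuit["name"],
        mbps=circuit["mbps"],
        path=" ".join(circuit["path"]),
    )


def run_pending(tasks, now, push):
    """One scheduler pass: configure every pending task whose start time has come.

    `push(router, config_text)` stands in for whatever transport delivers
    the configuration to the device.
    """
    completed = []
    for task in tasks:
        if task["status"] == "PENDING" and task["start"] <= now:
            push(task["router"], render_config(task["platform"], task["circuit"]))
            task["status"] = "ACTIVE"
            completed.append(task)
    return completed


tasks = [{
    "status": "PENDING", "start": 100, "router": "router-1", "platform": "vendor-a",
    "circuit": {"name": "vc-42", "mbps": 1000, "path": ["hop-1", "hop-2"]},
}]
run_pending(tasks, now=120, push=lambda router, text: print(router, text, end=""))
```

Calling `run_pending` on a timer gives the periodic check described on the slide; a task that is not yet due is left in the `PENDING` state for a later pass.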

Over-subscription and Soft Reservations
• Original concept did not allow for any kind of oversubscription or over-booking
• Emerging user requirements:
  – User-managed load-balancing
  – Redundant VCs
• We decided to allow users to oversubscribe their VCs
• Packets below reserved bandwidth are marked expedited-forwarding (normal VC traffic)
• Any packets exceeding that are marked as scavenger
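The marking rule for soft reservations can be illustrated with a small token-bucket meter. The slides do not say which metering algorithm the routers use, so the token bucket, the `burst_bytes` parameter, and the function name `mark_packets` are assumptions made for this sketch.

```python
def mark_packets(packets, reserved_bps, burst_bytes):
    """Mark each (timestamp_seconds, size_bytes) packet, given in time order.

    Packets that fit within the reserved rate are marked
    "expedited-forwarding"; the excess is marked "scavenger"
    instead of being dropped.
    """
    tokens = burst_bytes  # bucket starts full
    last_ts = None
    marks = []
    for ts, size in packets:
        if last_ts is not None:
            # Refill at the reserved rate (bits/s converted to bytes/s).
            tokens = min(burst_bytes, tokens + (ts - last_ts) * reserved_bps / 8)
        last_ts = ts
        if size <= tokens:
            tokens -= size
            marks.append("expedited-forwarding")
        else:
            marks.append("scavenger")
    return marks


# Reserved 8000 bit/s (1000 bytes/s) with a 1500-byte burst allowance.
packets = [(0.0, 1000), (0.0, 1000), (1.0, 1000), (1.0, 1000)]
print(mark_packets(packets, reserved_bps=8000, burst_bytes=1500))
# ['expedited-forwarding', 'scavenger', 'expedited-forwarding', 'scavenger']
```

Because excess packets are only re-marked, an oversubscribed VC can still use spare capacity, and its excess traffic is the first to give way under congestion.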

Collaboration
• DICE: DANTE, Internet2, Caltech/USLHCNet, ESnet
• Close partnership with Internet2
• Interoperability with AutoBAHN, Phosphorus
• Automated provisioning with TeraPaths, LambdaStation and Phoebus
• Standardization efforts:
  – OGF: NSI WG, NML WG, NM WG
  – GLIF: GNI API WG

Network Use
• Currently in pre-production
• 16 long-term VCs, total ~40 Gbps reserved
  – Almost all related to LHC T0-T1 and T1-T2
  – Almost all are “soft” reservations
• Primary users: Fermilab, Brookhaven
• Our users consistently demand production-quality availability for LHC T0-T1 and T1-T2 VCs
• Cross-domain VCs with Internet2 using LambdaStation and TeraPaths
• Demos at SC07, SC08, multiple Joint Techs and I2 Member Meetings
• VCs minimally disrupted during full replacement of network gear in two of our PoPs

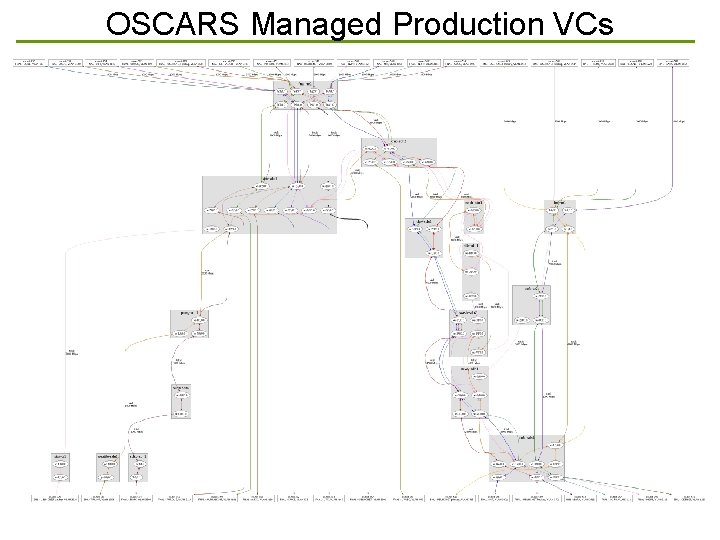

OSCARS Managed Production VCs

Future work
• Outage management
  – Automated VC rerouting based on network management system data, and scheduled or unscheduled outages
• Multi-layer VCs
  – Integrated solution for services provisioned across multiple layers
  – i.e. an L3 service over an L2 circuit over an L1 lightpath
• Optimizations
  – Support for short-lived just-in-time VCs (<15 min)
  – Provisioning and instantiation speed-up

Acknowledgments
• Tom Lehman, ISI East
• John Vollbrecht, Internet2
• Andrew Lake, Internet2
• Afrodite Sevasti, AutoBAHN project
• Guy Roberts, DANTE
• Radek Krzywania, PSNC

Thank you!
• Questions?

Authors
• Chin P. Guok, ESnet, chin@es.net
• David W. Robertson, LBNL, dwrobertson@lbl.gov
• Evangelos Chaniotakis, ESnet, haniotak@es.net
• Mary R. Thompson, LBNL, mrthompson@lbl.gov
• William E. Johnston, ESnet, wej@es.net
• Brian Tierney, ESnet, tierney@es.net