A Survey of Parallel Computer Architectures

- Slides: 37

A Survey of Parallel Computer Architectures
- CSC 521 Advanced Computer Architecture
- Dr. Craig Reinhart
- The General and Logical Theory of Automata
- Lin, Shu-Hsien (ANDY)
- 4/24/2008

2/24/2021

What are parallel computer architectures?
- Instruction pipelining
- Multiple CPU functional units
- Separate CPU and I/O processors

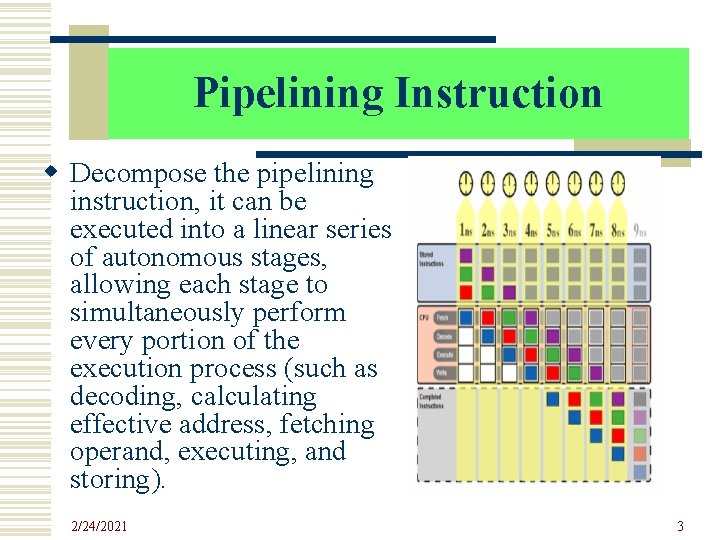

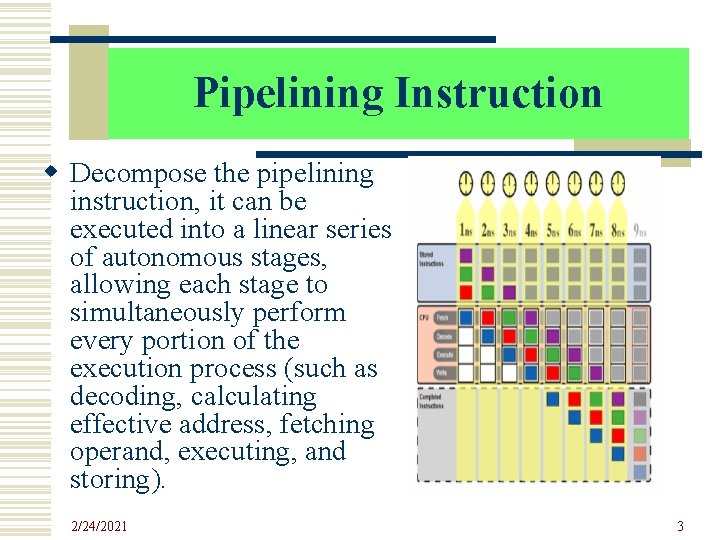

Instruction Pipelining
- Pipelining decomposes instruction execution into a linear series of autonomous stages, so that every stage can work simultaneously, each on its own portion of the execution process (decoding, calculating the effective address, fetching operands, executing, and storing) for a different instruction.
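A minimal sketch of the idea, assuming an idealized pipeline that uses the five stages named above, completes one stage per cycle, and never stalls: instruction i occupies stage s during cycle i + s, so once the pipeline fills, every stage is busy with a different instruction. The function name is hypothetical.

```python
STAGES = ["decode", "address", "fetch", "execute", "store"]

def pipeline_chart(n_instructions, stages=STAGES):
    """Return {cycle: {stage: instruction index}} for an ideal, stall-free pipeline."""
    chart = {}
    for i in range(n_instructions):
        for s, stage in enumerate(stages):
            # Instruction i occupies stage s during cycle i + s.
            chart.setdefault(i + s, {})[stage] = i
    return chart

chart = pipeline_chart(6)
for cycle in sorted(chart):
    row = [f"I{chart[cycle][st]}" if st in chart[cycle] else "--" for st in STAGES]
    print(cycle, " ".join(f"{x:>3}" for x in row))
```

In cycles 4 and 5 of this chart all five stages hold different instructions, which is where the speedup over a non-pipelined design comes from.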

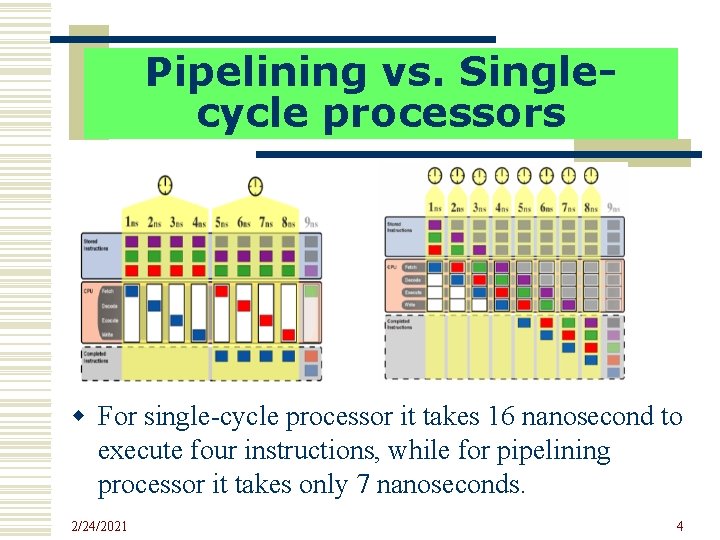

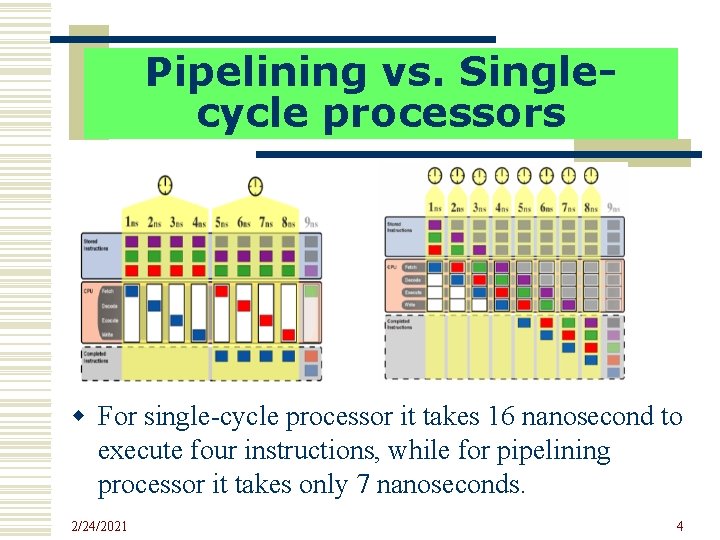

Pipelining vs. Single-Cycle Processors
- A single-cycle processor takes 16 nanoseconds to execute four instructions, while a pipelined processor takes only 7 nanoseconds.
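The two totals are consistent with a four-stage pipeline whose stages each take 1 ns, compared against a single-cycle design whose 4 ns cycle covers a whole instruction. These stage counts and times are inferred from the quoted totals, not stated on the slide. Under that assumption, n instructions take n·T on the single-cycle machine and (k + n - 1)·t on a k-stage pipeline:

```python
def single_cycle_time(n_instructions, cycle_ns):
    # Each instruction occupies the whole datapath for one long cycle.
    return n_instructions * cycle_ns

def pipelined_time(n_instructions, n_stages, stage_ns):
    # The first instruction needs n_stages cycles; each later one
    # completes one stage time after its predecessor.
    return (n_stages + n_instructions - 1) * stage_ns

print(single_cycle_time(4, 4))  # 16
print(pipelined_time(4, 4, 1))  # 7
```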

Multiple CPU Functional Units (1)
- A CPU with multiple functional units provides independent units for arithmetic and Boolean operations that execute concurrently.

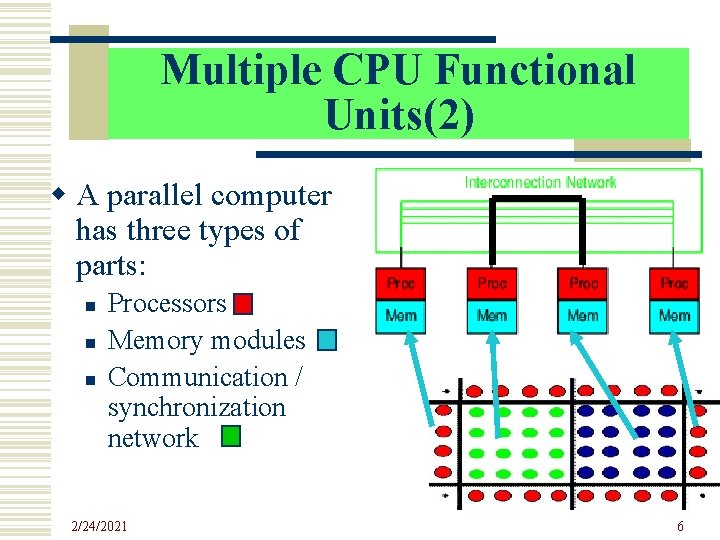

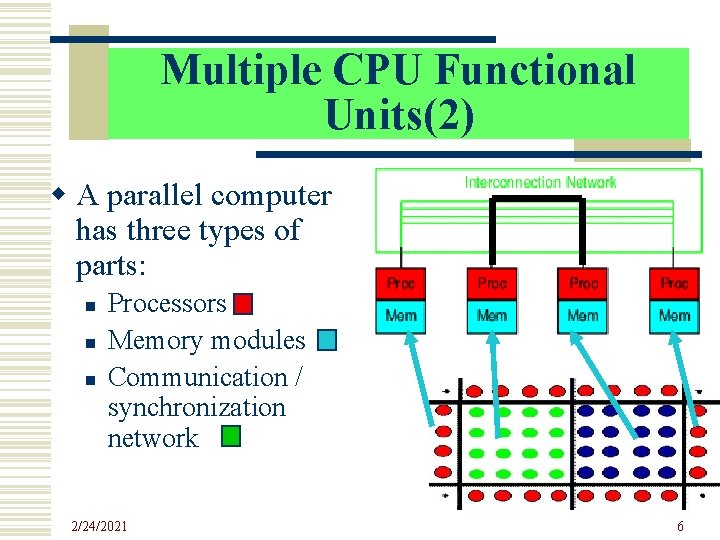

Multiple CPU Functional Units (2)
- A parallel computer has three types of parts:
  - Processors
  - Memory modules
  - A communication/synchronization network

Single Processor vs. Multi-Processor Energy-Efficient Performance
The two figures compare the performance of a single processor and of multiple processors:
- The upper figure shows the single-processor case: increasing the clock frequency of a single processor by 20% delivers a 13% performance gain but requires 73% more power.
- The lower figure shows that adding a second processor while underclocking both delivers about 73% more performance.
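The 73% power figure matches a common rule of thumb: dynamic power scales roughly as C·V²·f, and if supply voltage is raised or lowered in proportion to frequency, power per core scales with the cube of the clock. The sketch below applies that assumed cubic model. It further assumes that a 20% underclock costs the same 13% performance that a 20% overclock gains, and that two cores scale perfectly. These are simplifying assumptions, not measurements from the slide.

```python
def relative_power(freq_scale, cores=1):
    # Assumed model: P ~ C * V^2 * f with V proportional to f, so P ~ f^3 per core.
    return cores * freq_scale ** 3

def relative_performance(perf_per_core, cores=1):
    # Assumes perfect parallel scaling across cores (no serial fraction).
    return cores * perf_per_core

over = (relative_performance(1.13), relative_power(1.2))
dual = (relative_performance(0.87, cores=2), relative_power(0.8, cores=2))
print(f"one core at +20%:  perf {over[0]:.2f}x, power {over[1]:.2f}x")
print(f"two cores at -20%: perf {dual[0]:.2f}x, power {dual[1]:.2f}x")
```

Under these assumptions the overclocked core costs about 1.73x power for 1.13x performance, while the two underclocked cores give about 1.74x performance for roughly 1.02x power.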

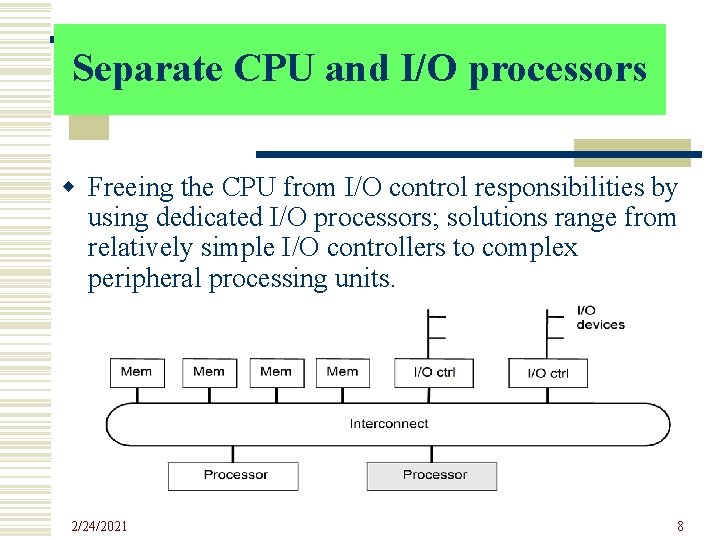

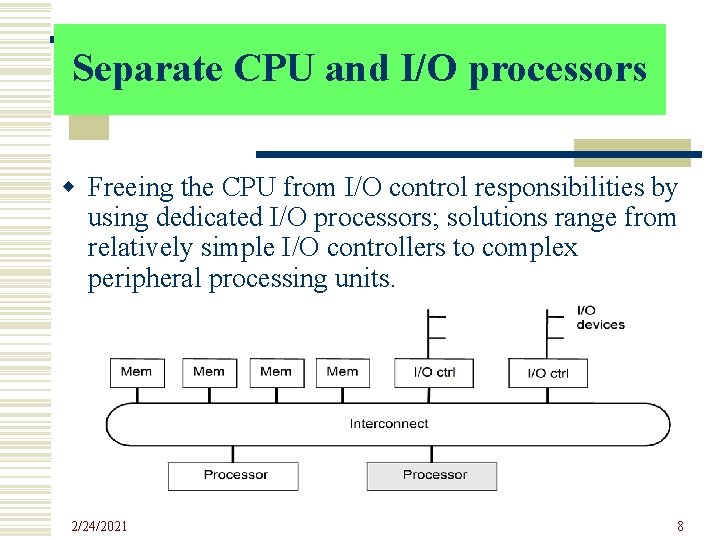

Separate CPU and I/O Processors
- Dedicated I/O processors free the CPU from I/O control responsibilities; solutions range from relatively simple I/O controllers to complex peripheral processing units.

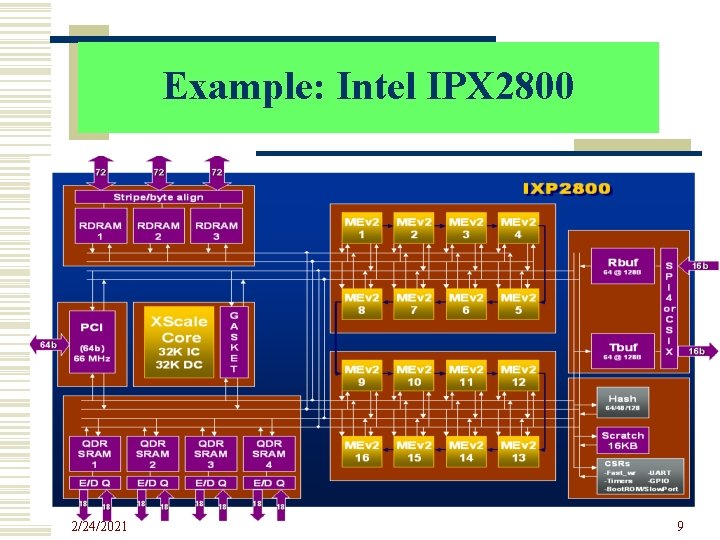

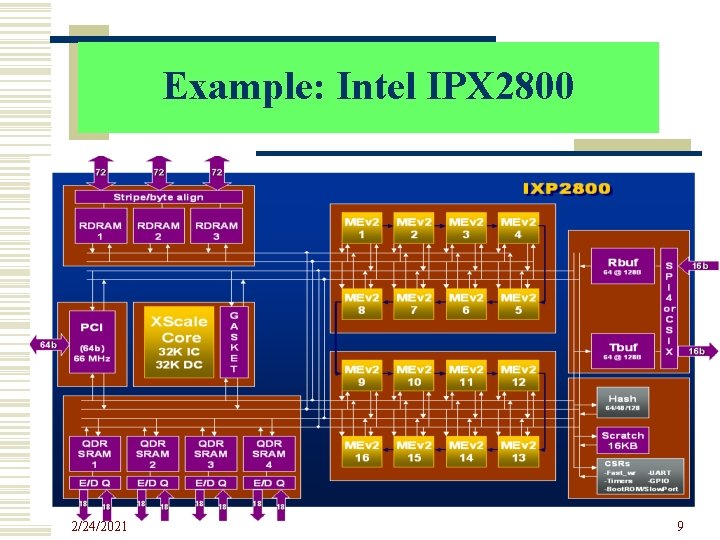

Example: Intel IXP2800

High-Level Taxonomy of Parallel Computer Architectures
- A parallel architecture provides an explicit, high-level framework for parallel programming solutions by providing multiple processors, whether simple or complex, that cooperate to solve problems through concurrent execution.

Flynn’s Taxonomy Classifies Architectures (1)
- SISD (single instruction, single data stream)
  - Defines serial computers
  - An ordinary computer
- MISD (multiple instruction, single data stream)
  - Would involve multiple processors applying different instructions to a single datum; this hypothetical possibility is generally deemed impractical.

Flynn’s Taxonomy Classifies Architectures (2)
- SIMD (single instruction, multiple data streams)
  - Involves multiple processors simultaneously executing the same instruction on different data
  - Massively parallel "army-of-ants" approach: processors execute the same sequence of instructions (or else a NO-OP) in lockstep (e.g., TMC CM-2)
- MIMD (multiple instruction, multiple data streams)
  - Involves multiple processors autonomously executing diverse instructions on diverse data
  - True, symmetric parallel computing (e.g., Sun Enterprise)

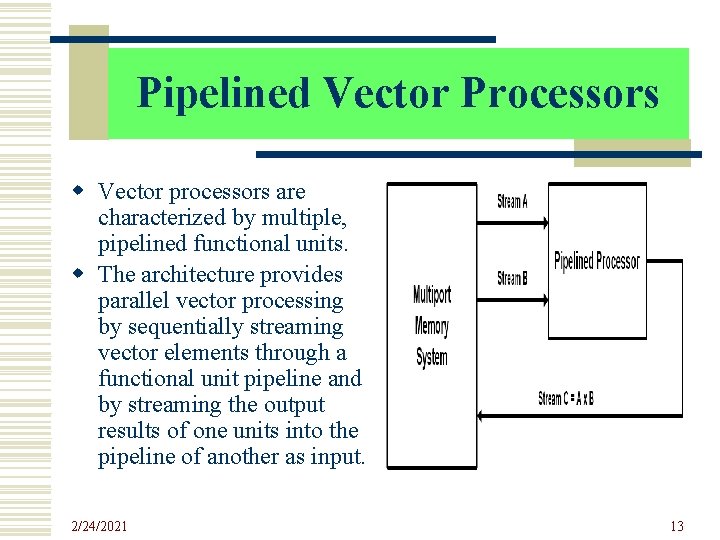

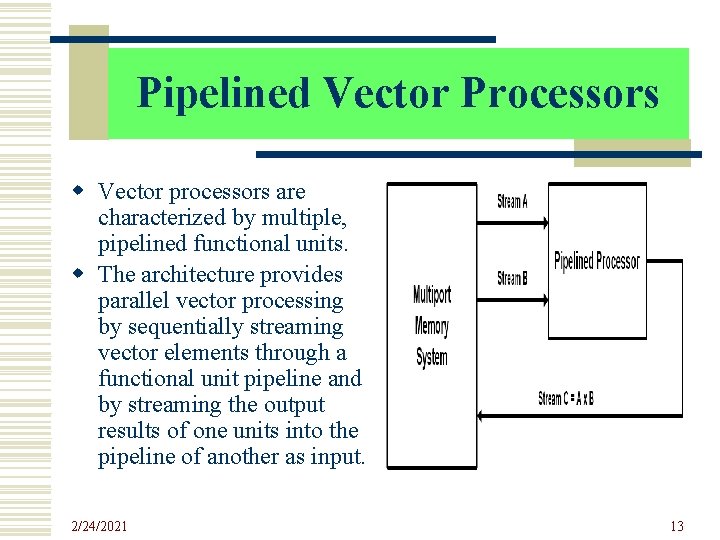

Pipelined Vector Processors
- Vector processors are characterized by multiple, pipelined functional units.
- The architecture provides parallel vector processing by sequentially streaming vector elements through a functional unit pipeline and by streaming the output results of one unit into the pipeline of another as input (a technique known as chaining).

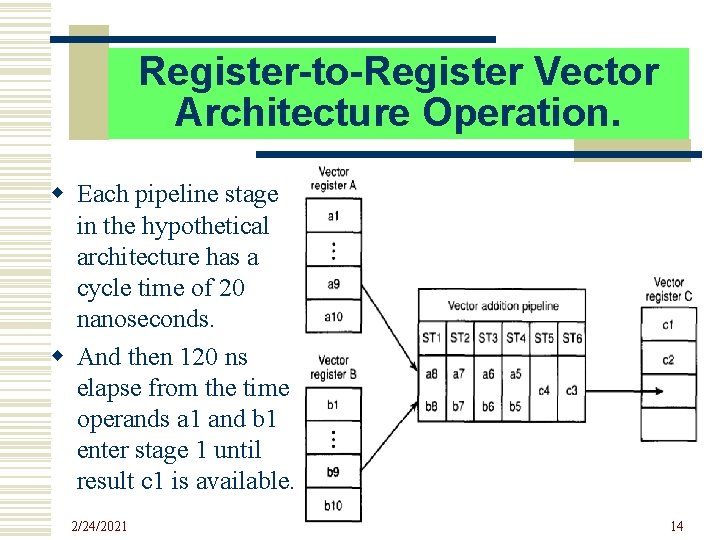

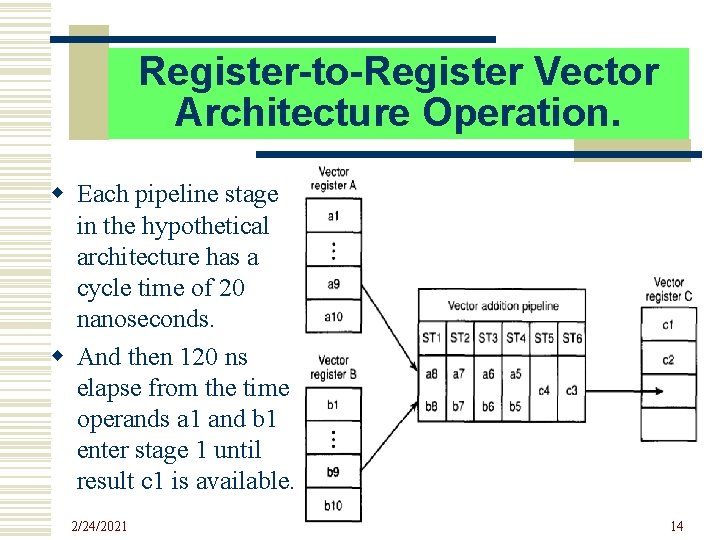

Register-to-Register Vector Architecture Operation
- Each pipeline stage in the hypothetical architecture has a cycle time of 20 nanoseconds.
- Therefore, 120 ns (six stages × 20 ns) elapse from the time operands a1 and b1 enter stage 1 until result c1 is available.
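With a 20 ns stage time and six stages (the 120 ns latency divided by 20 ns), the first result c1 appears after 120 ns and, assuming a new operand pair enters every cycle, each later result follows 20 ns after the previous one. A sketch of that timing for an n-element vector operation, where the 64-element vector length is a hypothetical choice:

```python
STAGE_NS = 20
N_STAGES = 120 // STAGE_NS  # six stages, derived from the 120 ns latency

def result_times(n_elements, stage_ns=STAGE_NS, n_stages=N_STAGES):
    """Time (ns) at which each result c1..cn leaves the pipeline."""
    # Element k (1-based) enters stage 1 at (k - 1) * stage_ns and needs n_stages cycles.
    return [(k - 1 + n_stages) * stage_ns for k in range(1, n_elements + 1)]

times = result_times(64)
print(times[0], times[1], times[-1])  # 120 140 1380
```

The total for n elements is (6 + n - 1) × 20 ns, so the 120 ns start-up cost is amortized as vectors get longer.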

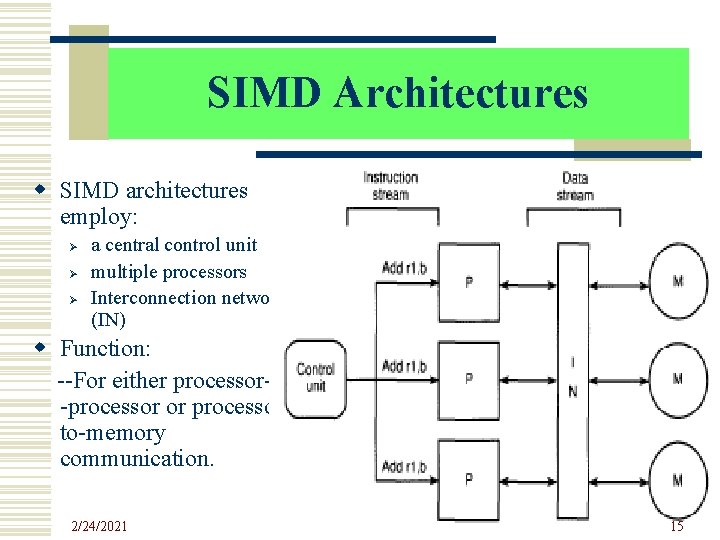

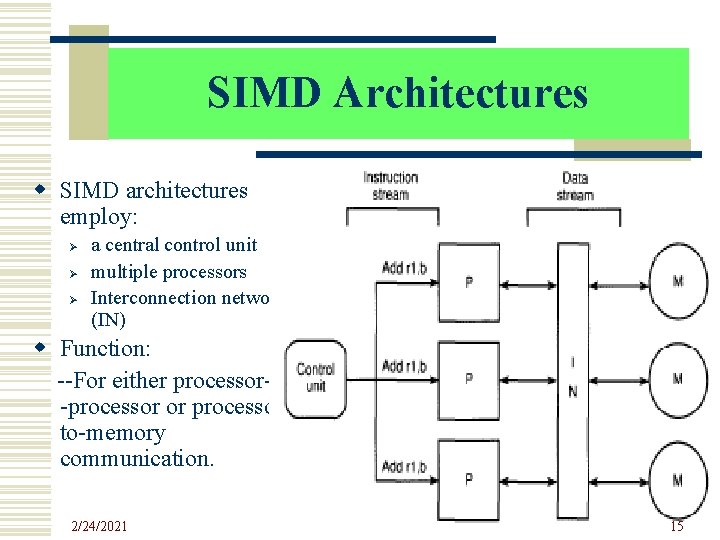

SIMD Architectures
- SIMD architectures employ:
  - a central control unit
  - multiple processors
  - an interconnection network (IN)
- Function of the IN: either processor-to-processor or processor-to-memory communication.

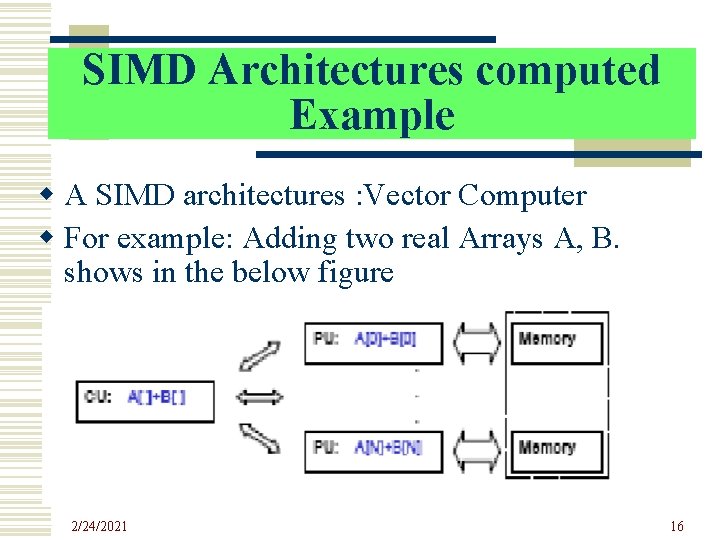

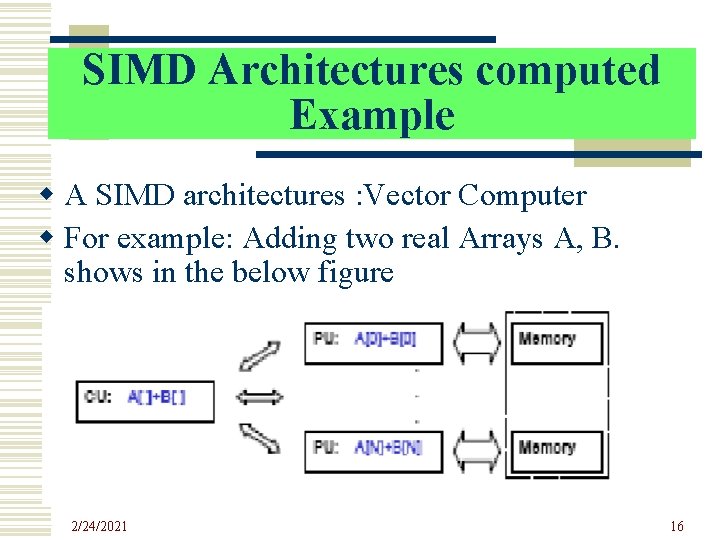

SIMD Architecture Computation Example
- A SIMD architecture: the vector computer
- Example: adding two real arrays, A and B, as shown in the figure below.
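A minimal sketch of the array-addition example, assuming one processing element (PE) per array element: the central control unit broadcasts each instruction once, and every PE applies it to its own local data. The `PE` class, the instruction names, and the data values are hypothetical; the Python loop inside `broadcast` stands in for PEs that would execute in lockstep.

```python
from dataclasses import dataclass

@dataclass
class PE:
    a: float          # local element of A
    b: float          # local element of B
    acc: float = 0.0  # local accumulator
    c: float = 0.0    # local element of the result C

def broadcast(pes, instruction):
    # The control unit issues one instruction; every PE executes it on local data.
    for pe in pes:
        if instruction == "LOAD_A":
            pe.acc = pe.a
        elif instruction == "ADD_B":
            pe.acc += pe.b
        elif instruction == "STORE_C":
            pe.c = pe.acc

A = [1.5, 2.0, 3.25, 4.0]
B = [0.5, 1.0, 0.75, 2.0]
pes = [PE(a, b) for a, b in zip(A, B)]
for instr in ["LOAD_A", "ADD_B", "STORE_C"]:
    broadcast(pes, instr)
print([pe.c for pe in pes])  # [2.0, 3.0, 4.0, 6.0]
```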

SIMD Architecture Problems
Some SIMD problems, e.g.:
- SIMD cannot use commodity processors
- SIMD cannot support multiple users
- SIMD is less efficient in conditionally executed parallel code
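The last point follows from lockstep execution: when PEs take different branches of an if/else, the controller must broadcast both branches, and each PE idles (a masked NO-OP) during the branch it did not take. A sketch that counts PE utilization, assuming each branch costs one instruction slot and using a hypothetical per-element rule (halve even values, compute 3x + 1 for odd values):

```python
def simd_if_else(data, cond, then_fn, else_fn):
    """Execute an if/else in lockstep with an activity mask; return results and utilization."""
    mask = [cond(x) for x in data]
    out = list(data)
    busy = 0
    # Pass 1: the controller broadcasts the THEN branch; masked-off PEs do a NO-OP.
    for i, active in enumerate(mask):
        if active:
            out[i] = then_fn(out[i])
            busy += 1
    # Pass 2: the mask is inverted and the ELSE branch is broadcast.
    for i, active in enumerate(mask):
        if not active:
            out[i] = else_fn(out[i])
            busy += 1
    slots = 2 * len(data)  # every PE spends one slot in each pass
    return out, busy / slots

out, util = simd_if_else([1, 2, 3, 4, 5, 6, 7, 8],
                         cond=lambda x: x % 2 == 0,
                         then_fn=lambda x: x // 2,
                         else_fn=lambda x: 3 * x + 1)
print(out, util)  # [4, 1, 10, 2, 16, 3, 22, 4] 0.5
```

With an even split between branches, half of the PE time is spent on NO-OPs.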

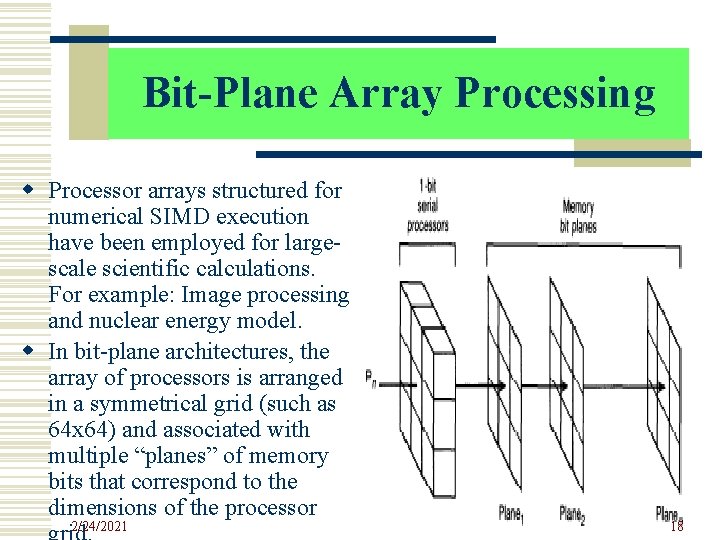

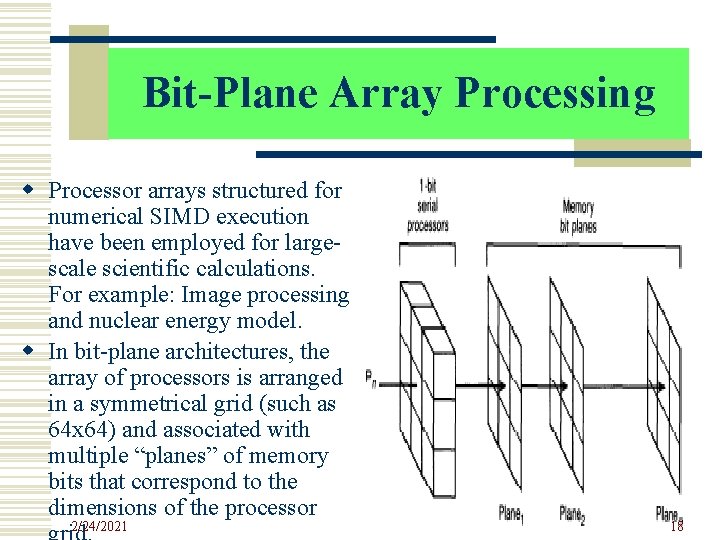

Bit-Plane Array Processing
- Processor arrays structured for numerical SIMD execution have been employed for large-scale scientific calculations, for example image processing and nuclear energy modeling.
- In bit-plane architectures, the array of processors is arranged in a symmetrical grid (such as 64 × 64) and associated with multiple "planes" of memory bits that correspond to the dimensions of the processor grid.
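In a bit-plane machine, plane k holds bit k of every processor's word, so arithmetic proceeds bit-serially: in each step all PEs read one plane of each operand plus a carry plane and write one plane of the result. A minimal sketch of bit-serial addition, assuming a 4 × 4 grid instead of 64 × 64 and 8-bit words (both sizes, and the function names, are illustrative assumptions):

```python
N, BITS = 4, 8  # illustrative grid size and word width

def to_planes(grid):
    """Split an N x N grid of integers into BITS bit planes (plane k = bit k of every word)."""
    return [[[(grid[r][c] >> k) & 1 for c in range(N)] for r in range(N)] for k in range(BITS)]

def from_planes(planes):
    return [[sum(planes[k][r][c] << k for k in range(BITS)) for c in range(N)] for r in range(N)]

def bit_serial_add(x_planes, y_planes):
    carry = [[0] * N for _ in range(N)]
    result = []
    for k in range(BITS):  # one step per bit plane, least significant first
        x, y = x_planes[k], y_planes[k]
        # Every PE computes its sum bit and new carry bit at once (modeled by comprehensions).
        s = [[x[r][c] ^ y[r][c] ^ carry[r][c] for c in range(N)] for r in range(N)]
        carry = [[(x[r][c] & y[r][c]) | (carry[r][c] & (x[r][c] ^ y[r][c]))
                  for c in range(N)] for r in range(N)]
        result.append(s)
    return result  # sums wrap modulo 2**BITS

X = [[r * N + c for c in range(N)] for r in range(N)]
Y = [[100] * N for _ in range(N)]
S = from_planes(bit_serial_add(to_planes(X), to_planes(Y)))
print(S[0][:3], S[3][3])  # [100, 101, 102] 115
```

An 8-bit add takes eight steps regardless of grid size, because each step processes one bit of every word in parallel.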

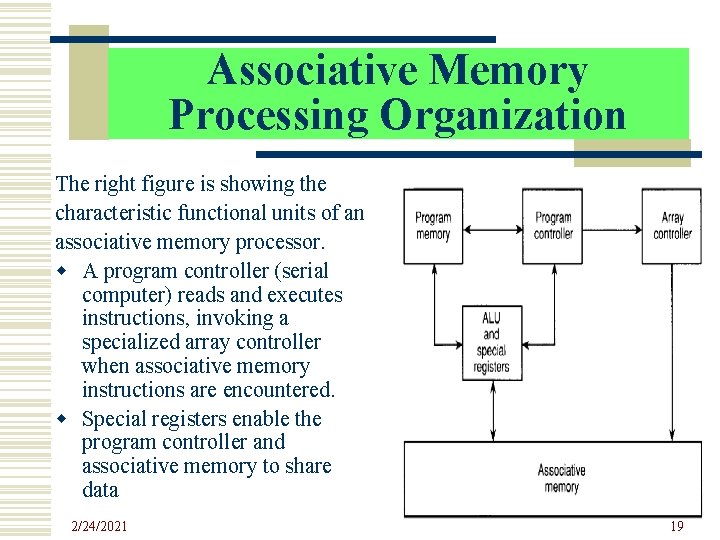

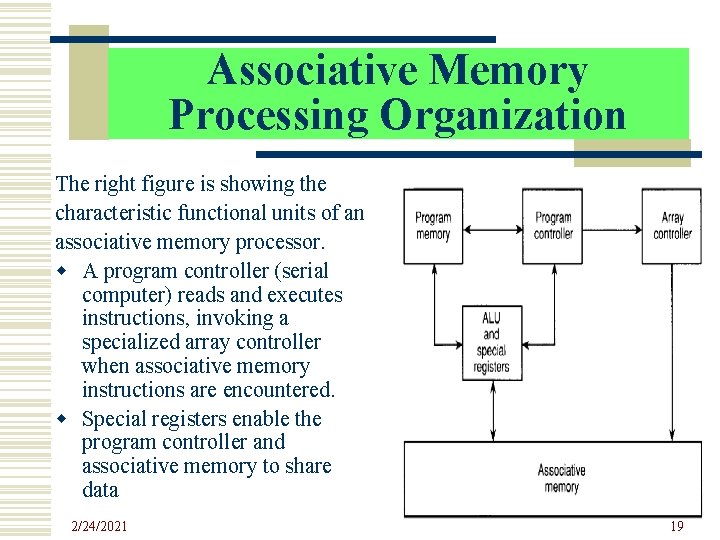

Associative Memory Processing Organization
The figure on the right shows the characteristic functional units of an associative memory processor.
- A program controller (a serial computer) reads and executes instructions, invoking a specialized array controller when associative memory instructions are encountered.
- Special registers enable the program controller and the associative memory to share data.

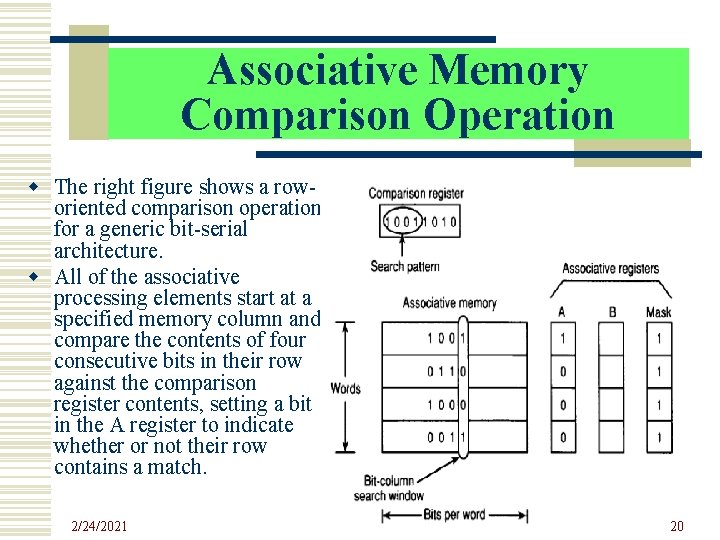

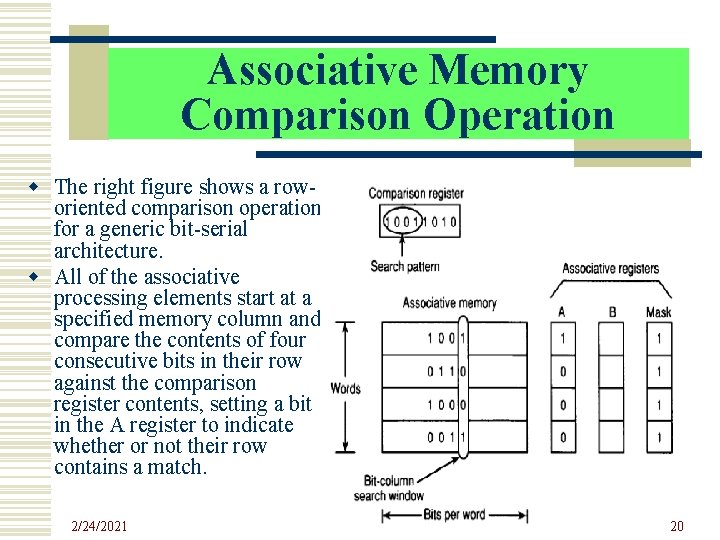

Associative Memory Comparison Operation
- The figure on the right shows a row-oriented comparison operation for a generic bit-serial architecture.
- All of the associative processing elements start at a specified memory column and compare the contents of four consecutive bits in their row against the comparison register contents, setting a bit in the A register to indicate whether or not their row contains a match.
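A sketch of the row-oriented comparison, modeling the associative memory as a list of bit rows (one per processing element) and using a 4-bit comparand as on the slide. The memory contents, starting column, and function name are hypothetical; in hardware every row is compared at once, and the list comprehension stands in for that single parallel step.

```python
def associative_compare(memory, start_col, comparand):
    """A[row] = 1 if memory[row][start_col : start_col + len(comparand)] equals the comparand."""
    width = len(comparand)
    return [1 if row[start_col:start_col + width] == comparand else 0 for row in memory]

memory = [
    [0, 1, 1, 0, 1, 0, 0, 1],
    [1, 1, 0, 1, 0, 0, 1, 1],
    [0, 0, 1, 0, 1, 1, 1, 0],
    [1, 0, 1, 0, 1, 0, 0, 0],
]
comparison_register = [1, 0, 1, 0]
A = associative_compare(memory, 2, comparison_register)
print(A)  # [1, 0, 0, 1]
```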

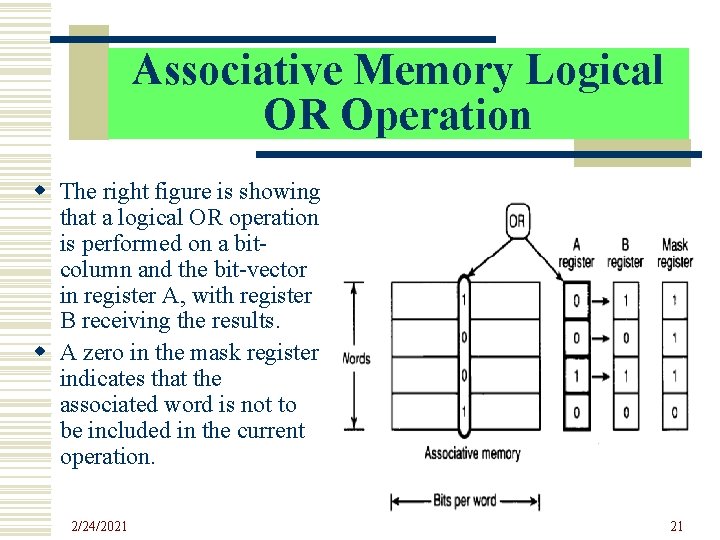

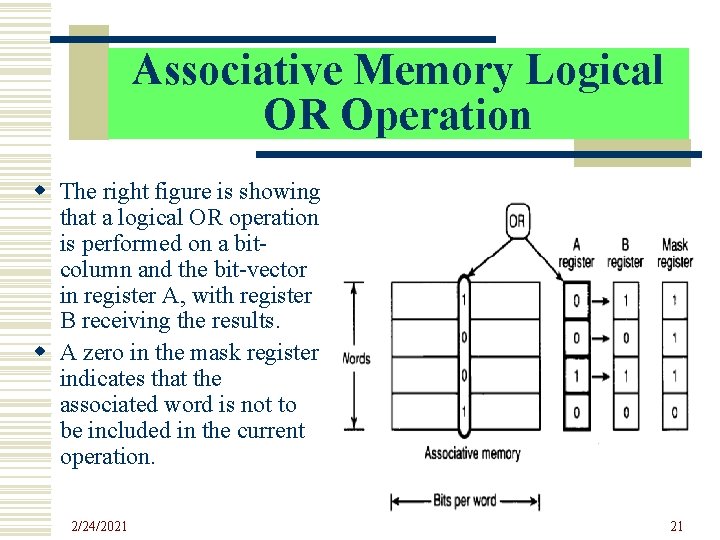

Associative Memory Logical OR Operation
- The figure on the right shows a logical OR operation performed on a bit column and the bit vector in register A, with register B receiving the results.
- A zero in the mask register indicates that the associated word is not to be included in the current operation.
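A sketch of the masked OR, assuming that a word excluded by the mask simply keeps its previous value in register B (the slide says only that such words are not included). The register contents and function name are hypothetical.

```python
def masked_or(column, reg_a, mask, reg_b):
    """B[i] = column[i] OR A[i] where mask[i] is 1; masked-off words keep their old B value."""
    return [(c | a) if m else b for c, a, m, b in zip(column, reg_a, mask, reg_b)]

bit_column = [0, 1, 0, 1]
A = [1, 0, 0, 1]
mask = [1, 1, 1, 0]
B = masked_or(bit_column, A, mask, [0, 0, 0, 0])
print(B)  # [1, 1, 0, 0]
```

The last word would produce 1 | 1 = 1, but its mask bit is 0, so B keeps its old 0.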

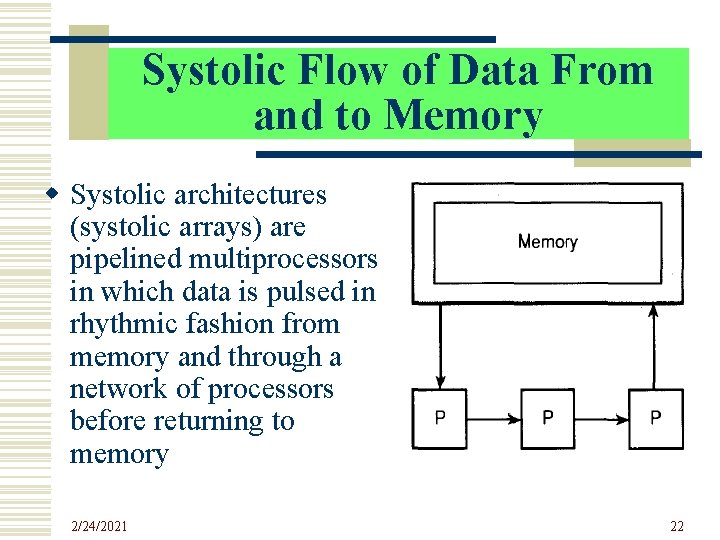

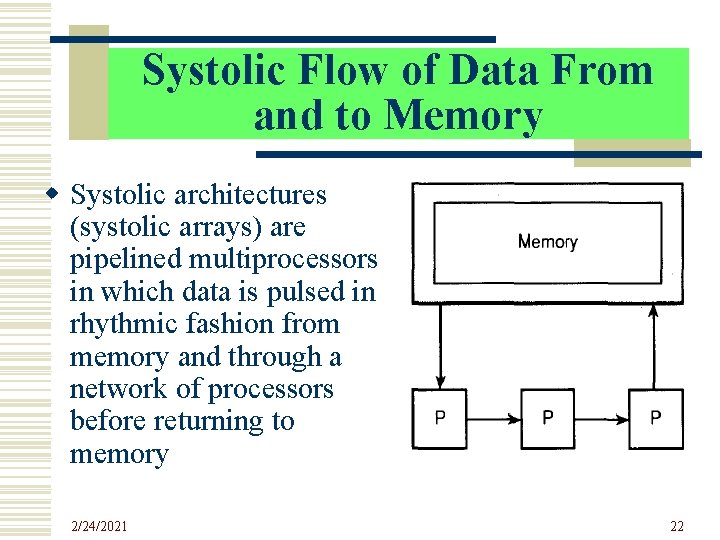

Systolic Flow of Data From and to Memory
- Systolic architectures (systolic arrays) are pipelined multiprocessors in which data is pulsed in rhythmic fashion from memory and through a network of processors before returning to memory.

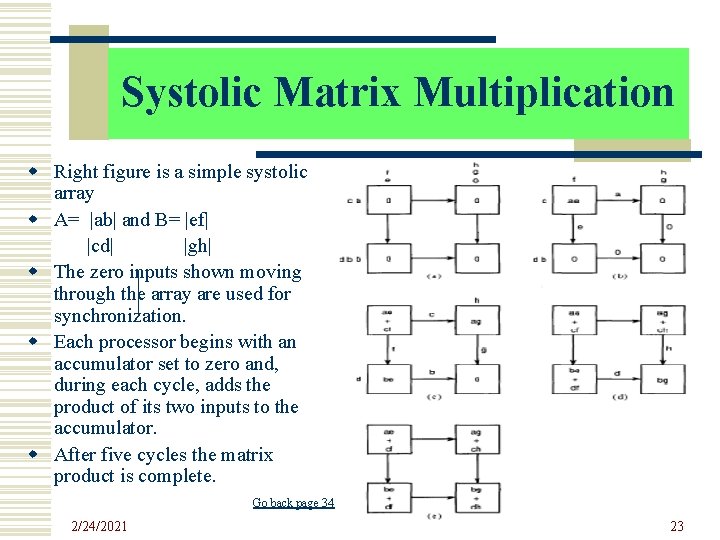

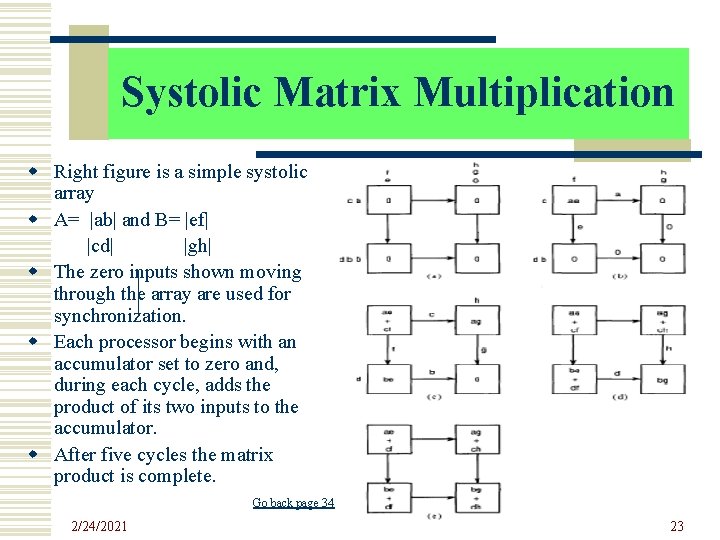

Systolic Matrix Multiplication
- The figure on the right shows a simple systolic array that computes the product of
  $$A = \begin{pmatrix} a & b \\ c & d \end{pmatrix} \quad\text{and}\quad B = \begin{pmatrix} e & f \\ g & h \end{pmatrix}.$$
- The zero inputs shown moving through the array are used for synchronization.
- Each processor begins with an accumulator set to zero and, during each cycle, adds the product of its two inputs to the accumulator.
- After five cycles the matrix product is complete.
- This example is revisited in the Wavefront Array Matrix Multiplication slides.
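A minimal sketch of an output-stationary systolic array, where PE(i, j) keeps the accumulator for entry (i, j) of the product. Rows of A enter from the left and columns of B from the top, each skewed by one cycle per row or column, with zeros padding the gaps so that matching operands meet in the right PE. Numeric matrices stand in for a..h, and the skew scheme is an assumption. In this arrangement an n × n product takes 3n - 2 cycles (four for 2 × 2); cycle counts depend on how inputs are skewed and clocked, so this need not match the figure's five.

```python
def systolic_matmul(A, B):
    n = len(A)
    acc = [[0] * n for _ in range(n)]    # one accumulator per PE, initially zero
    a_reg = [[0] * n for _ in range(n)]  # operand each PE received from the left
    b_reg = [[0] * n for _ in range(n)]  # operand each PE received from above
    cycles = 3 * n - 2
    for t in range(cycles):
        # Pulse operands one PE to the right and one PE down (far end first).
        for i in range(n):
            for j in range(n - 1, 0, -1):
                a_reg[i][j] = a_reg[i][j - 1]
        for j in range(n):
            for i in range(n - 1, 0, -1):
                b_reg[i][j] = b_reg[i - 1][j]
        # Feed skewed inputs at the boundary; zeros keep the streams synchronized.
        for i in range(n):
            k = t - i
            a_reg[i][0] = A[i][k] if 0 <= k < n else 0
        for j in range(n):
            k = t - j
            b_reg[0][j] = B[k][j] if 0 <= k < n else 0
        # Every PE adds the product of its two inputs to its accumulator.
        for i in range(n):
            for j in range(n):
                acc[i][j] += a_reg[i][j] * b_reg[i][j]
    return acc, cycles

print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # ([[19, 22], [43, 50]], 4)
```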

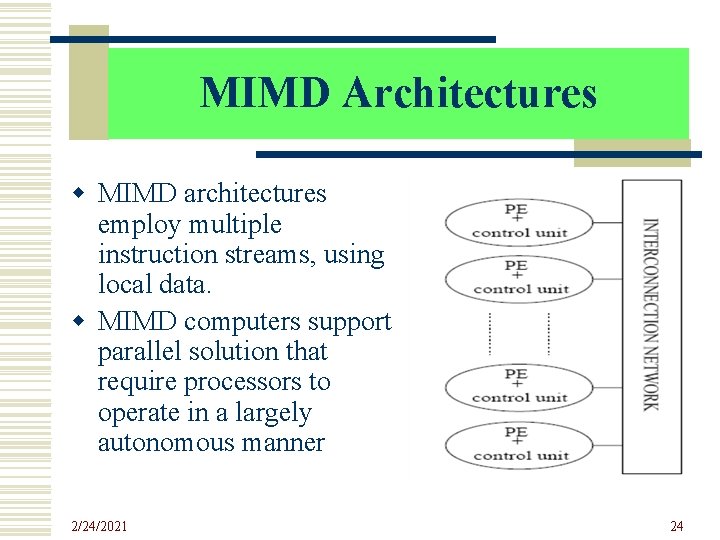

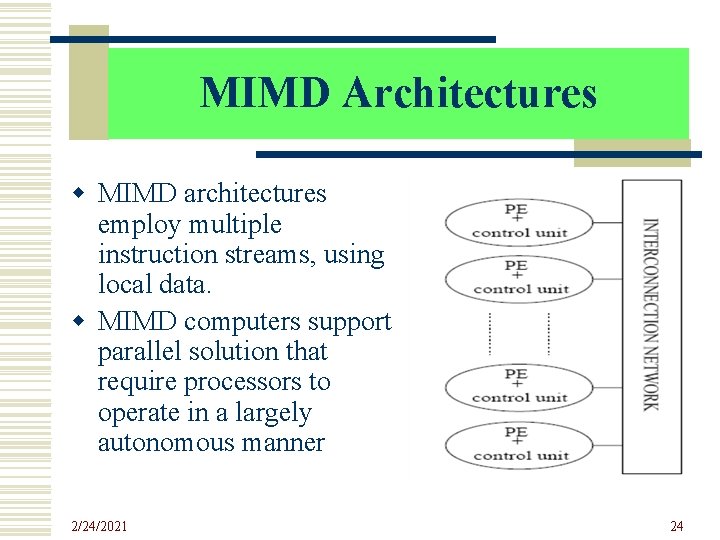

MIMD Architectures
- MIMD architectures employ multiple instruction streams, using local data.
- MIMD computers support parallel solutions that require processors to operate in a largely autonomous manner.

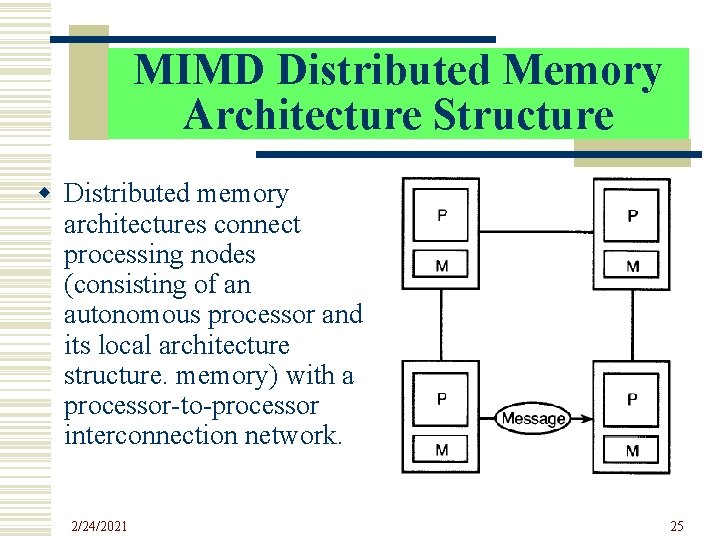

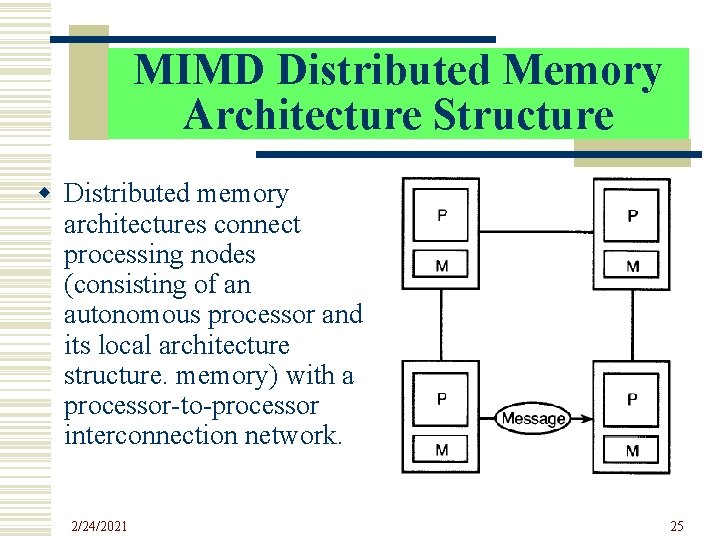

MIMD Distributed Memory Architecture Structure
- Distributed memory architectures connect processing nodes (each consisting of an autonomous processor and its local memory) with a processor-to-processor interconnection network.

Example: Distributed Memory Architectures
- The figure on the right shows the IBM RS/6000 SP, a distributed memory machine.

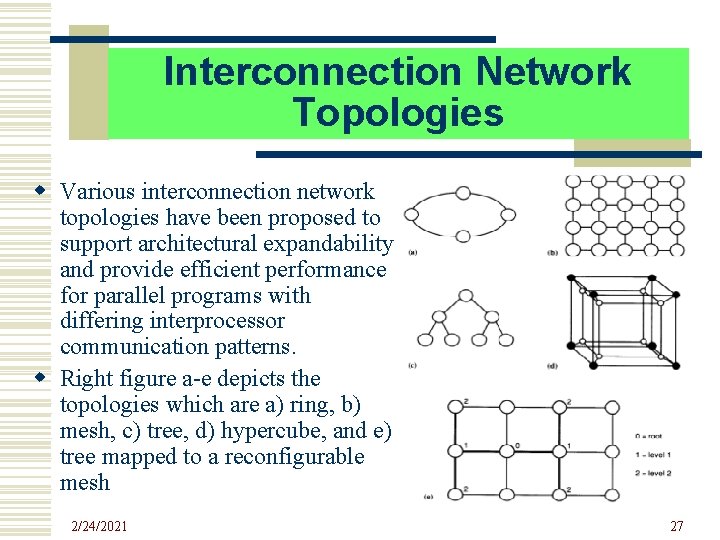

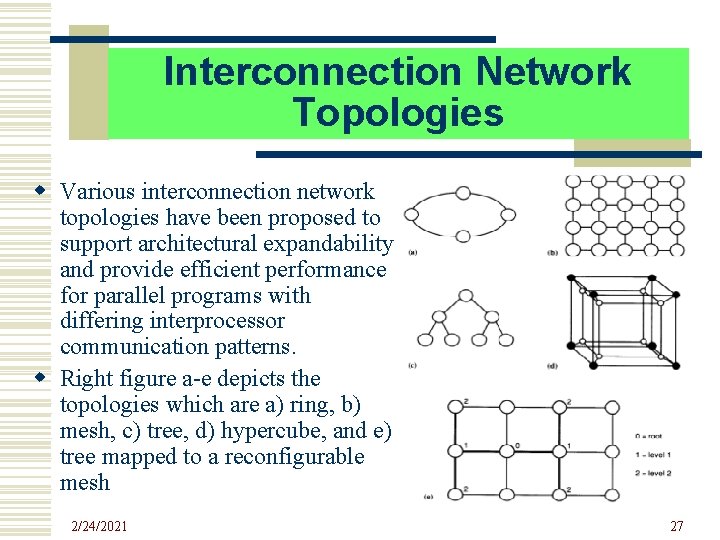

Interconnection Network Topologies
- Various interconnection network topologies have been proposed to support architectural expandability and to provide efficient performance for parallel programs with differing interprocessor communication patterns.
- Figures a-e on the right depict the following topologies: (a) ring, (b) mesh, (c) tree, (d) hypercube, and (e) tree mapped to a reconfigurable mesh.
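One way to compare topologies is by link count (a cost measure) and diameter (the largest number of hops between any two nodes, a latency measure). A sketch for three of the topologies above, each with 16 nodes; the node count, the non-wraparound mesh, and the chosen metrics are illustrative assumptions.

```python
from collections import deque

def ring(n):
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}

def mesh(rows, cols):
    nbrs = {}
    for r in range(rows):
        for c in range(cols):
            cand = [(r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)]
            nbrs[(r, c)] = [(a, b) for a, b in cand if 0 <= a < rows and 0 <= b < cols]
    return nbrs

def hypercube(dim):
    # Nodes are dim-bit labels; neighbors differ in exactly one bit.
    return {i: [i ^ (1 << b) for b in range(dim)] for i in range(1 << dim)}

def diameter(graph):
    """Longest shortest-path hop count, found by BFS from every node."""
    best = 0
    for src in graph:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            u = queue.popleft()
            for v in graph[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        best = max(best, max(dist.values()))
    return best

for name, g in [("ring", ring(16)), ("mesh", mesh(4, 4)), ("hypercube", hypercube(4))]:
    links = sum(len(v) for v in g.values()) // 2
    print(name, len(g), "nodes,", links, "links, diameter", diameter(g))
```

For 16 nodes this gives 16, 24, and 32 links with diameters 8, 6, and 4: the hypercube buys lower latency with more links.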

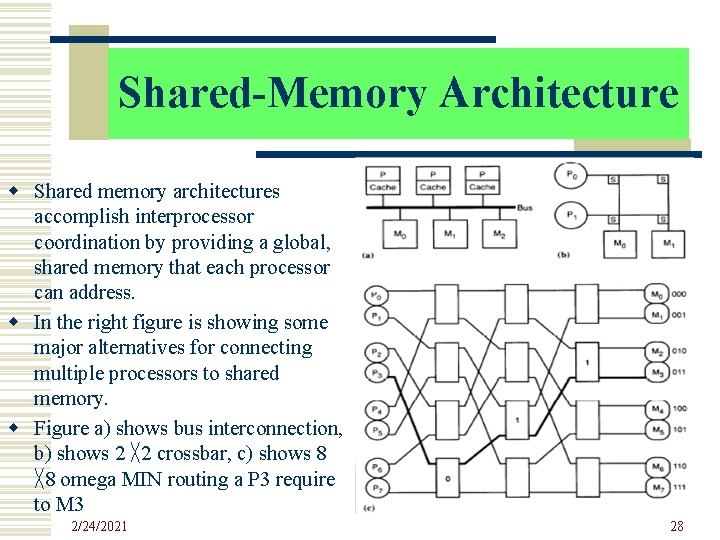

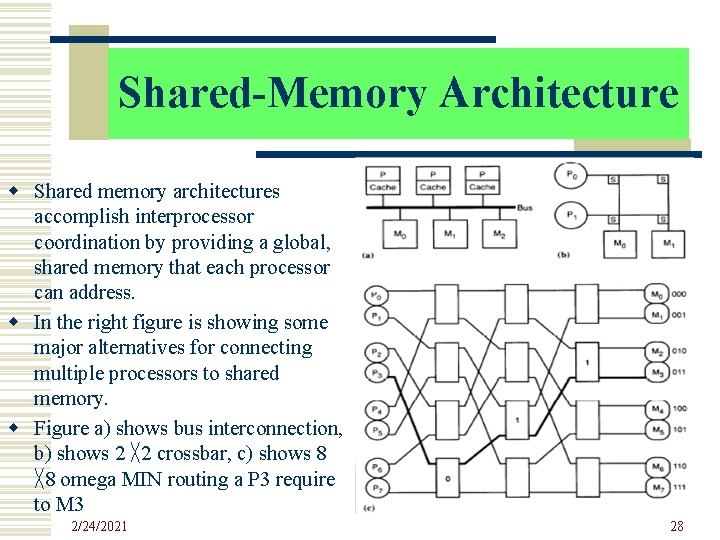

Shared-Memory Architecture
- Shared memory architectures accomplish interprocessor coordination by providing a global, shared memory that each processor can address.
- The figure on the right shows some major alternatives for connecting multiple processors to shared memory:
  - (a) bus interconnection
  - (b) 2 × 2 crossbar
  - (c) 8 × 8 omega MIN routing a P3 request to M3
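Omega networks are commonly routed with destination-tag routing: an 8 × 8 network has log2 8 = 3 stages, each a perfect shuffle followed by a column of 2 × 2 switches, and at each stage the switch sends the request to its upper or lower output according to the next bit of the destination address, most significant bit first. A sketch of that scheme applied to the slide's P3-to-M3 request; the function name and the switch numbering are hypothetical.

```python
def omega_route(src, dst, n=8):
    """Destination-tag routing through an n x n omega network (n a power of two)."""
    k = n.bit_length() - 1          # number of stages, log2(n)
    mask = n - 1
    pos, path = src, []
    for stage in range(k):
        pos = ((pos << 1) | (pos >> (k - 1))) & mask  # perfect shuffle: rotate address left
        bit = (dst >> (k - 1 - stage)) & 1            # routing bit, MSB first
        pos = (pos & ~1) | bit                        # 2 x 2 switch: 0 = upper, 1 = lower output
        path.append((stage, pos >> 1, "lower" if bit else "upper"))
    return pos, path

end, path = omega_route(3, 3)
print(end)  # 3
for stage, switch, port in path:
    print(f"stage {stage}: switch {switch}, {port} output")
```

After k stages every source address bit has been shuffled out and replaced by a destination bit, which is why the request always arrives at the requested memory module.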

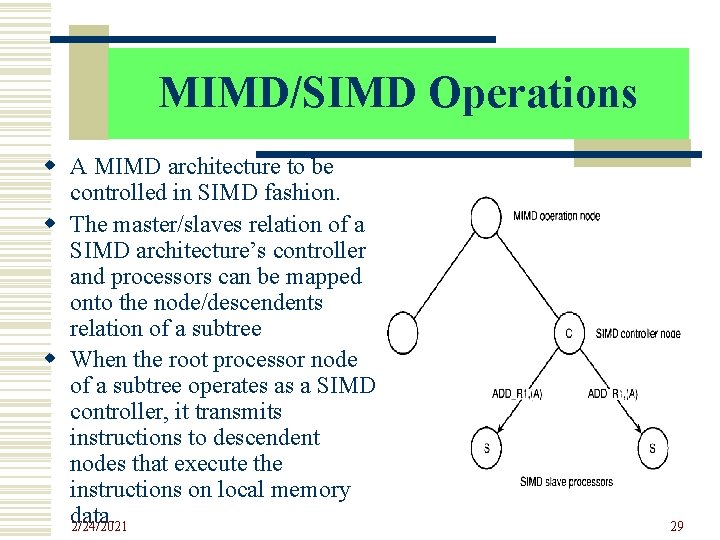

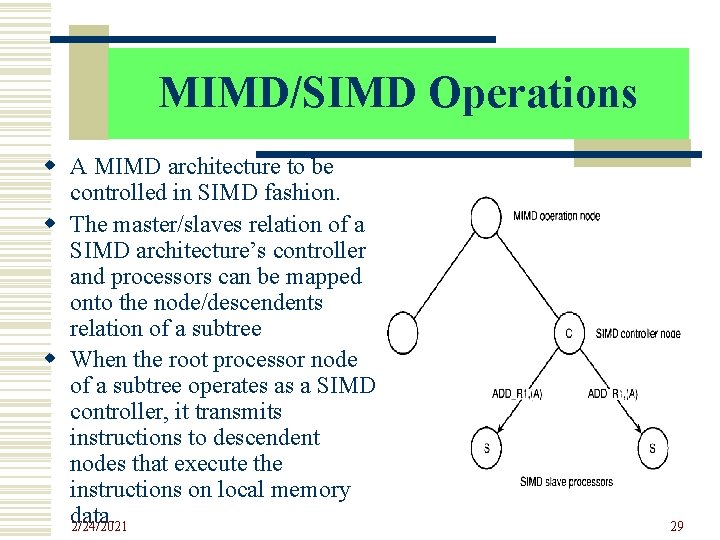

MIMD/SIMD Operations
- Part of a MIMD architecture can be controlled in SIMD fashion.
- The master/slave relation of a SIMD architecture’s controller and processors can be mapped onto the node/descendant relation of a subtree.
- When the root processor node of a subtree operates as a SIMD controller, it transmits instructions to descendant nodes that execute the instructions on local memory data.
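A sketch of the subtree mapping, assuming a hypothetical seven-node binary tree in which each node's local memory is a single value: the root of the left subtree acts as a SIMD controller and broadcasts one instruction to its descendants, while the right subtree is untouched and remains free to run its own program (MIMD). The `Node` class and method names are hypothetical.

```python
class Node:
    def __init__(self, local, children=()):
        self.local = local  # this processor's local memory (a single value here)
        self.children = list(children)

    def descendants(self):
        for child in self.children:
            yield child
            yield from child.descendants()

    def act_as_simd_controller(self, instruction):
        """Broadcast one instruction; every descendant applies it to its own local data."""
        for node in self.descendants():
            node.local = instruction(node.local)

left = Node(0, [Node(1), Node(2)])
right = Node(0, [Node(3), Node(4)])
root = Node(0, [left, right])
left.act_as_simd_controller(lambda x: x * 10)
print([n.local for n in root.descendants()])  # [0, 10, 20, 0, 3, 4]
```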

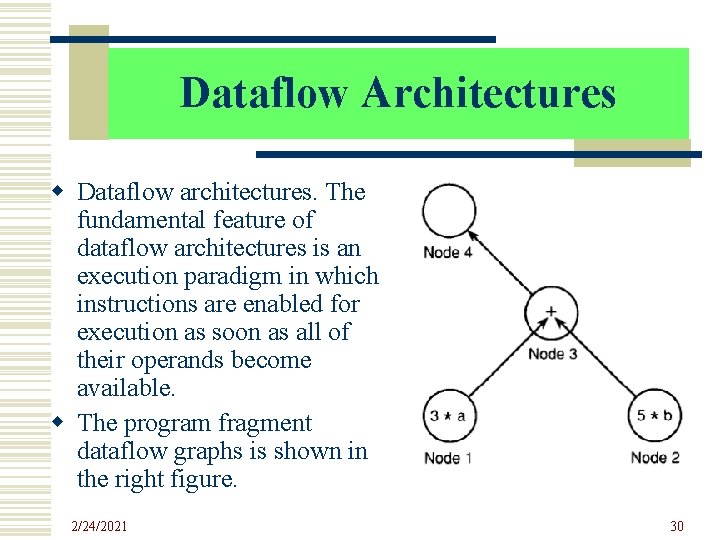

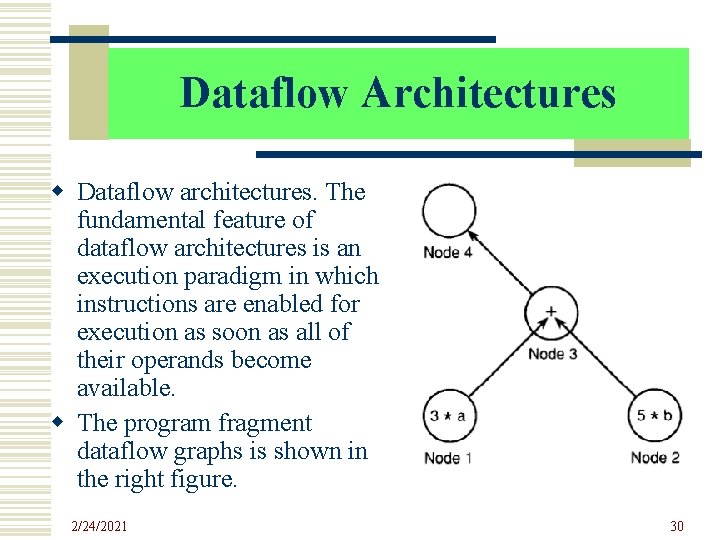

Dataflow Architectures
- The fundamental feature of dataflow architectures is an execution paradigm in which instructions are enabled for execution as soon as all of their operands become available.
- The figure on the right shows the dataflow graph of a program fragment.

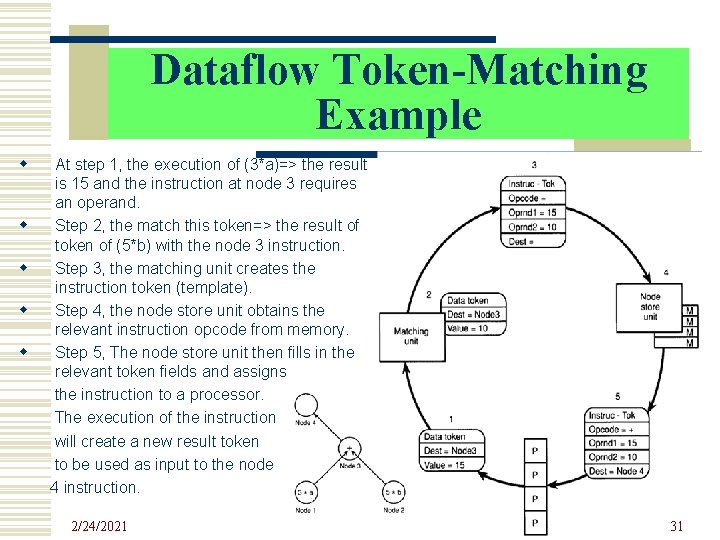

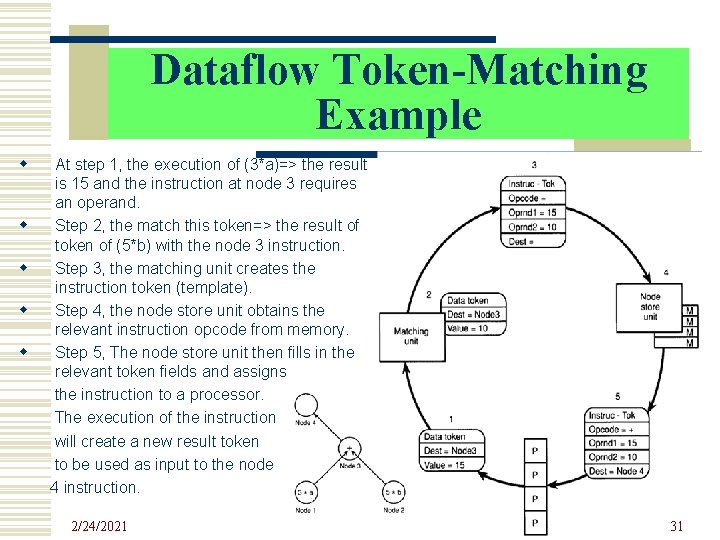

Dataflow Token-Matching Example
- Step 1: executing (3*a) produces a token containing the result, 15, and the instruction at node 3 requires this value as an operand.
- Step 2: the matching unit matches this token with the result token of (5*b), the other operand of the node 3 instruction.
- Step 3: the matching unit creates the instruction token (template).
- Step 4: the node store unit obtains the relevant instruction opcode from memory.
- Step 5: the node store unit then fills in the relevant token fields and assigns the instruction to a processor. Executing the instruction creates a new result token to be used as input to the node 4 instruction.
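A minimal sketch of token-driven firing on a hypothetical four-node graph consistent with the example: node 1 computes 3*a (with a = 5, giving 15), node 2 computes 5*b, node 3 combines the two results, and node 4 consumes node 3's output. The operations at nodes 3 and 4 and the value of b are not given on the slide; addition, negation, and b = 2 are illustrative assumptions. The `waiting` dictionary plays the role of the matching unit and `GRAPH` the role of the node store.

```python
import operator

# Node store: node -> (opcode, number of operands, list of (destination node, port)).
GRAPH = {
    1: (operator.mul, 2, [(3, 0)]),  # 3 * a
    2: (operator.mul, 2, [(3, 1)]),  # 5 * b
    3: (operator.add, 2, [(4, 0)]),  # assumed: (3*a) + (5*b)
    4: (operator.neg, 1, []),        # assumed consumer of node 3's result
}

def run(initial_tokens):
    tokens = list(initial_tokens)  # each token: (destination node, port, value)
    waiting = {}                   # matching unit: node -> {port: value}
    results = {}
    while tokens:
        node, port, value = tokens.pop(0)
        slots = waiting.setdefault(node, {})
        slots[port] = value
        op, arity, dests = GRAPH[node]
        if len(slots) == arity:    # all operands matched: the instruction is enabled
            args = [slots[p] for p in range(arity)]
            del waiting[node]
            result = op(*args)
            results[node] = result
            tokens.extend((d, p, result) for d, p in dests)  # new result tokens
    return results

a, b = 5, 2
print(run([(1, 0, 3), (1, 1, a), (2, 0, 5), (2, 1, b)]))  # {1: 15, 2: 10, 3: 25, 4: -25}
```

No program counter appears anywhere: an instruction runs only when its last operand token arrives.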

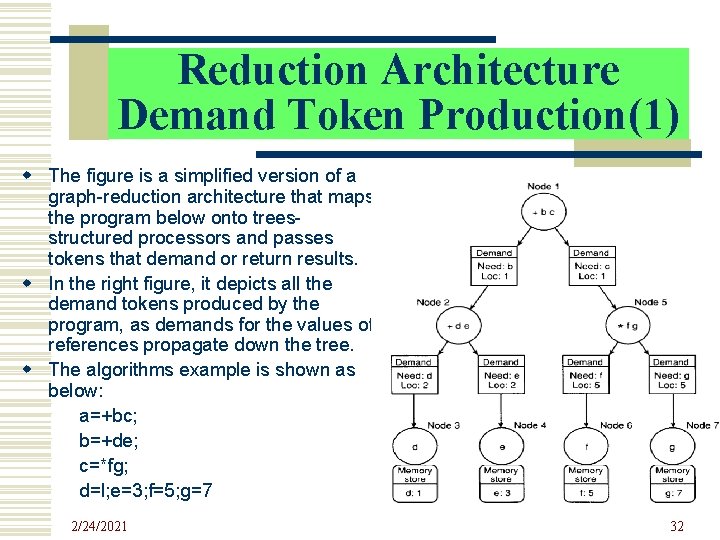

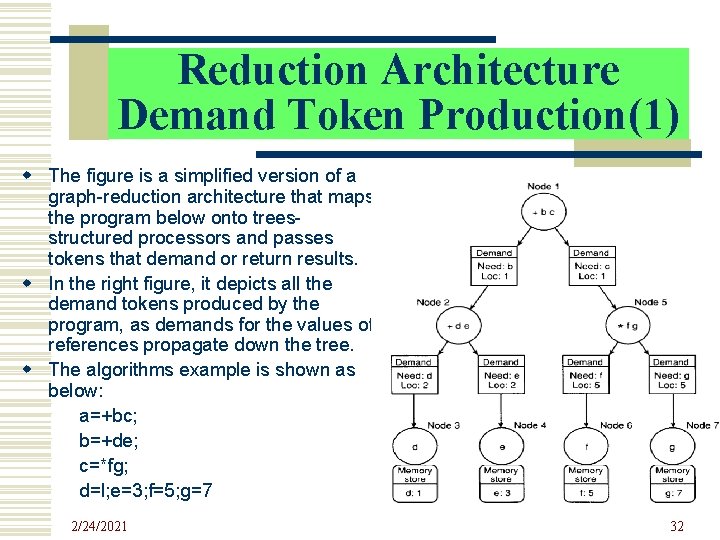

Reduction Architecture Demand Token Production (1)
- The figure is a simplified version of a graph-reduction architecture that maps the program below onto tree-structured processors and passes tokens that demand or return results.
- The figure on the right depicts all the demand tokens produced by the program, as demands for the values of references propagate down the tree.
- The example program is: a=+bc; b=+de; c=*fg; d=1; e=3; f=5; g=7

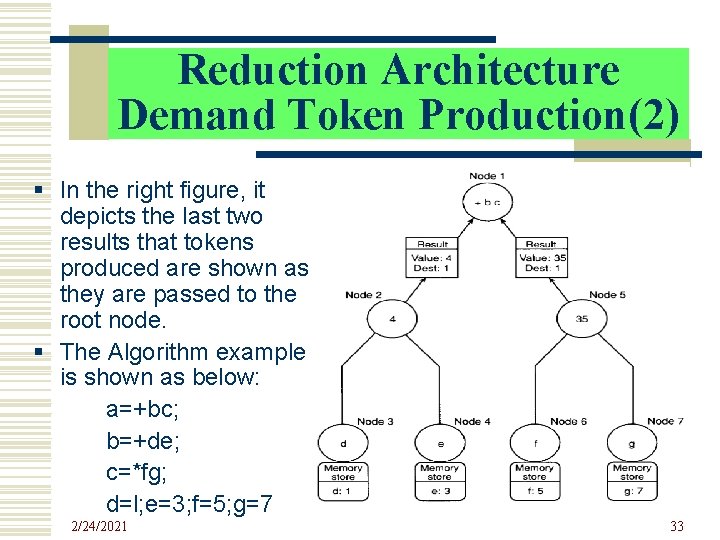

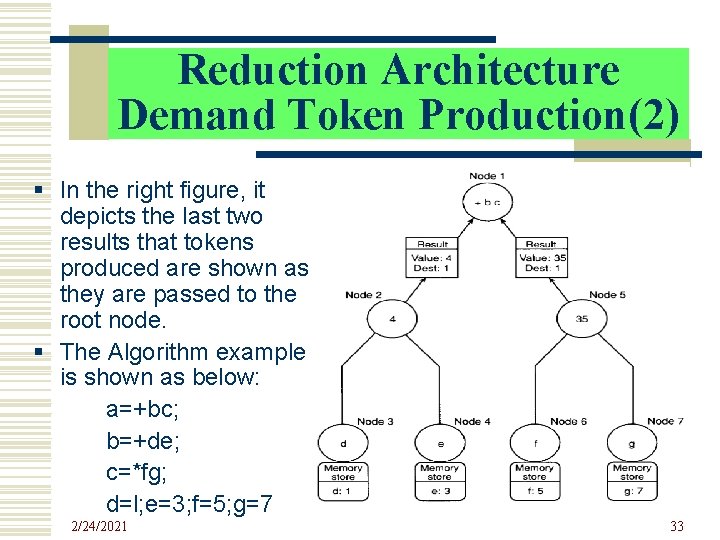

Reduction Architecture Demand Token Production (2)
- The figure on the right depicts the last two result tokens produced as they are passed to the root node.
- The example program is: a=+bc; b=+de; c=*fg; d=1; e=3; f=5; g=7
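A sketch of demand-driven evaluation of the example program, reading "d=l" as d = 1. A demand for `a` propagates down the tree as demand tokens for `b` and `c`, and so on, and result tokens flow back up. The dictionary encoding and function name are hypothetical, and the recursion evaluates depth-first one demand at a time, whereas a reduction machine would service independent demands in parallel.

```python
# Program from the slide, in prefix form: name -> (operator, left, right) or a constant.
PROGRAM = {
    "a": ("+", "b", "c"),
    "b": ("+", "d", "e"),
    "c": ("*", "f", "g"),
    "d": 1, "e": 3, "f": 5, "g": 7,
}
OPS = {"+": lambda x, y: x + y, "*": lambda x, y: x * y}

def demand(name, log, depth=0):
    """Send a demand token for `name` down the tree and return its result token's value."""
    log.append(("demand", name, depth, None))
    definition = PROGRAM[name]
    if isinstance(definition, tuple):
        op, left, right = definition
        value = OPS[op](demand(left, log, depth + 1), demand(right, log, depth + 1))
    else:
        value = definition
    log.append(("result", name, depth, value))
    return value

log = []
print(demand("a", log))  # 39
# The last two result tokens passed to the root: b = 1 + 3 and c = 5 * 7.
print([(n, v) for kind, n, d, v in log if kind == "result" and d == 1])  # [('b', 4), ('c', 35)]
```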

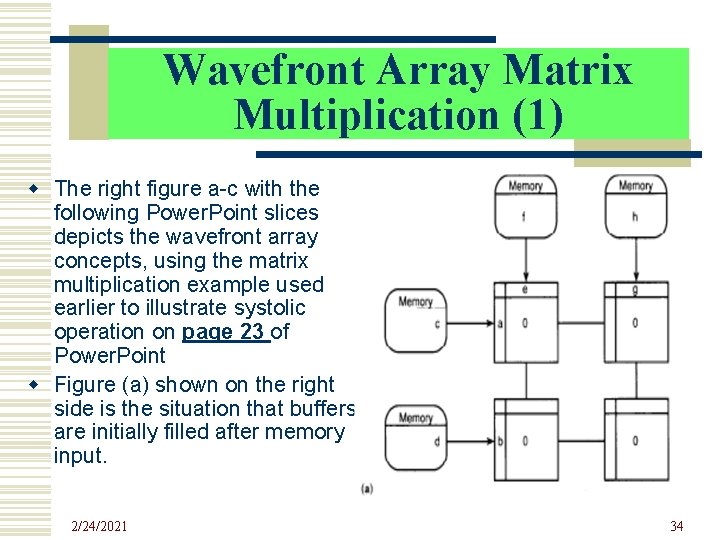

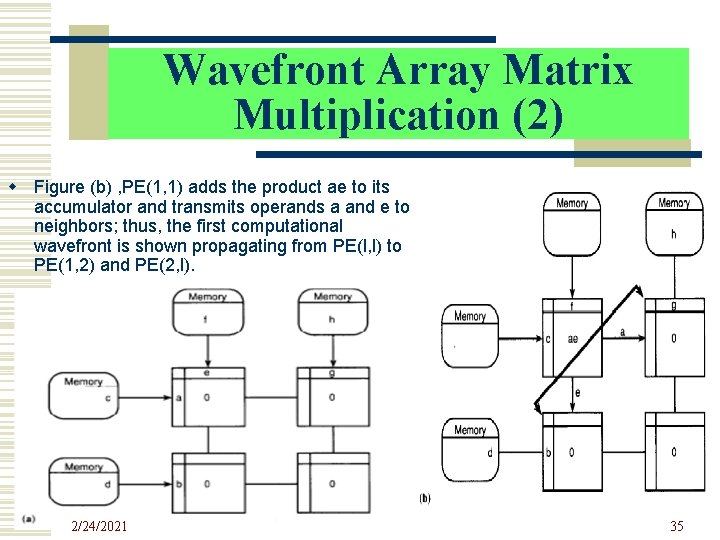

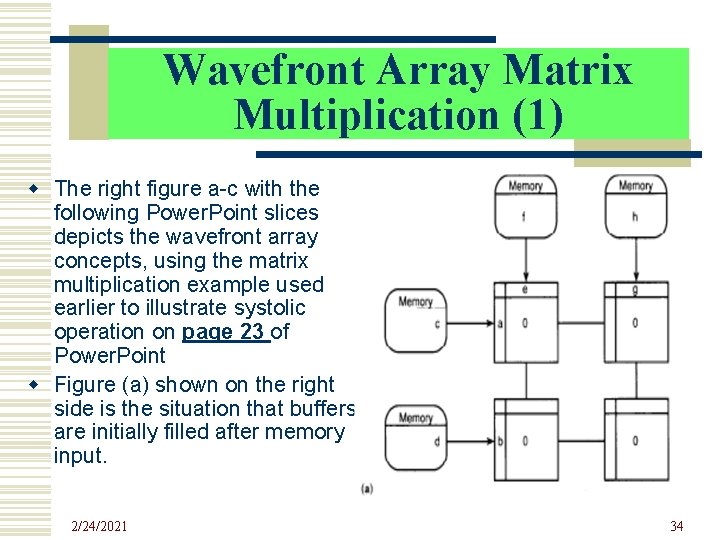

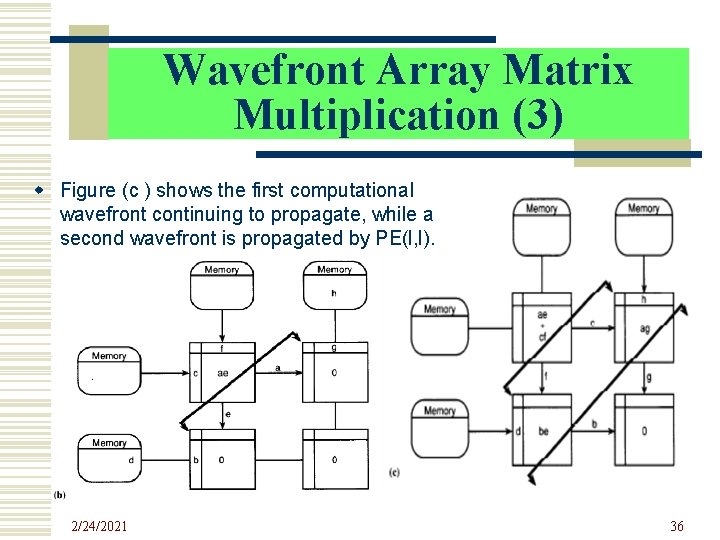

Wavefront Array Matrix Multiplication (1)
- Figures a-c on this and the following slides depict wavefront array concepts, using the matrix multiplication example shown earlier to illustrate systolic operation (see the Systolic Matrix Multiplication slide).
- Figure (a) on the right shows the situation after memory input has initially filled the buffers.

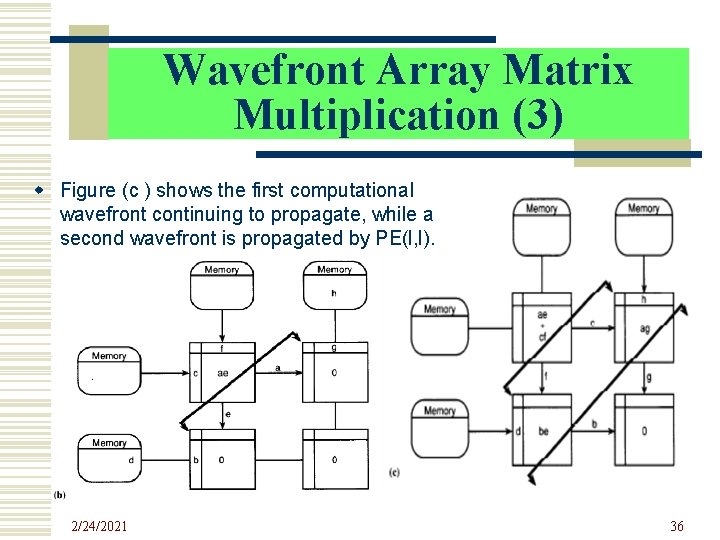

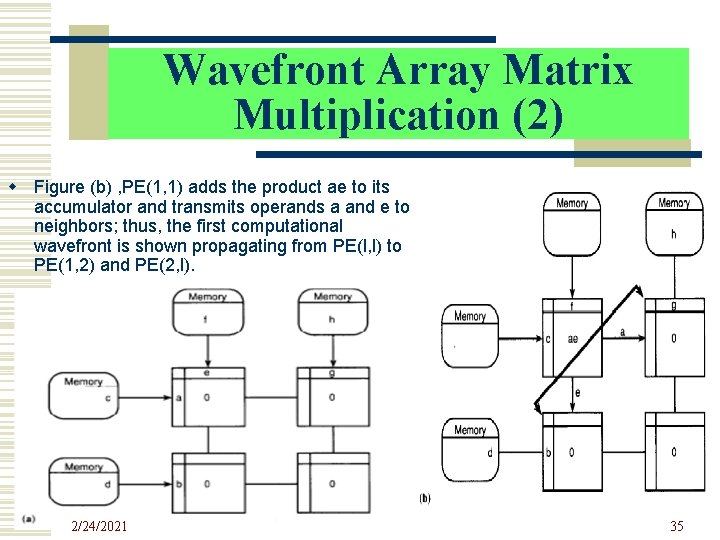

Wavefront Array Matrix Multiplication (2)
- In figure (b), PE(1, 1) adds the product ae to its accumulator and transmits operands a and e to its neighbors; thus, the first computational wavefront is shown propagating from PE(1, 1) to PE(1, 2) and PE(2, 1).

Wavefront Array Matrix Multiplication (3)
- Figure (c) shows the first computational wavefront continuing to propagate, while a second wavefront is propagated by PE(1, 1).
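A sketch of the wavefront idea, assuming unbounded input buffers and modeling asynchronous handshakes as rounds in which every PE holding operands in both buffers fires at once. The function name and buffer layout are hypothetical, and indices are 0-based, so PE(0, 0) here is the slide's PE(1, 1). Unlike a clocked systolic array, no input skew or synchronizing zeros are needed; each PE simply waits for data. The per-round lists trace the wavefronts: first only PE(0, 0) fires, then the first wavefront reaches PE(0, 1) and PE(1, 0) while PE(0, 0) launches the second.

```python
from collections import deque

def wavefront_matmul(A, B):
    """Data-driven array: a PE fires whenever both of its input buffers hold an operand."""
    n = len(A)
    left = [[deque() for _ in range(n)] for _ in range(n)]
    top = [[deque() for _ in range(n)] for _ in range(n)]
    acc = [[0] * n for _ in range(n)]
    # Memory input initially fills the boundary buffers: row i of A, column j of B.
    for i in range(n):
        left[i][0].extend(A[i])
    for j in range(n):
        top[0][j].extend(B[k][j] for k in range(n))
    rounds = []
    while True:
        ready = [(i, j) for i in range(n) for j in range(n) if left[i][j] and top[i][j]]
        if not ready:
            break
        rounds.append(ready)
        for i, j in ready:
            a, b = left[i][j].popleft(), top[i][j].popleft()
            acc[i][j] += a * b
            if j + 1 < n:
                left[i][j + 1].append(a)  # pass a to the right neighbor
            if i + 1 < n:
                top[i + 1][j].append(b)   # pass b to the neighbor below
    return acc, rounds

acc, rounds = wavefront_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(acc)  # [[19, 22], [43, 50]]
for r, fired in enumerate(rounds):
    print(r, fired)
```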

Conclusion
- What are parallel computer architectures? In R. W. Hockney's words: "a confusing menagerie of computer designs."