A SURVEY OF APPROACHES TO AUTOMATIC SCHEMA MATCHING

- Slides: 34

Sushant Vemparala, Gaurang Telang

Motivating Example: Assume UTA needs to integrate 40 databases from its different schools, with a total of 27,000 elements. Integrating them manually would take approximately 12 person-years. How would you reduce the manual burden?

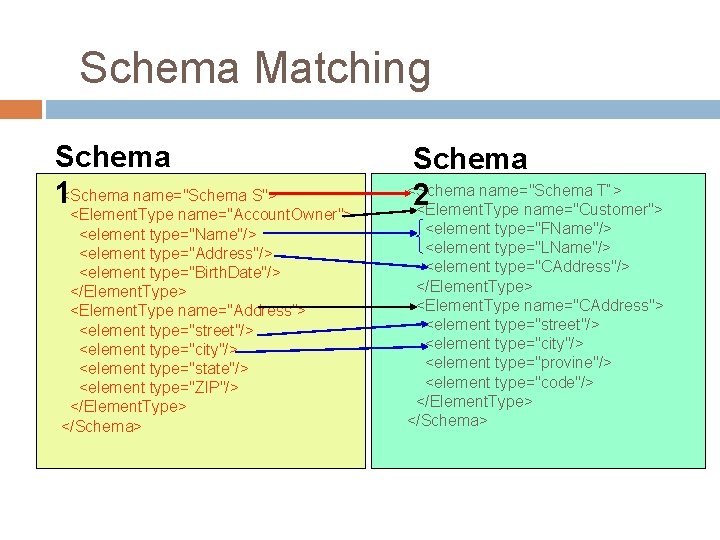

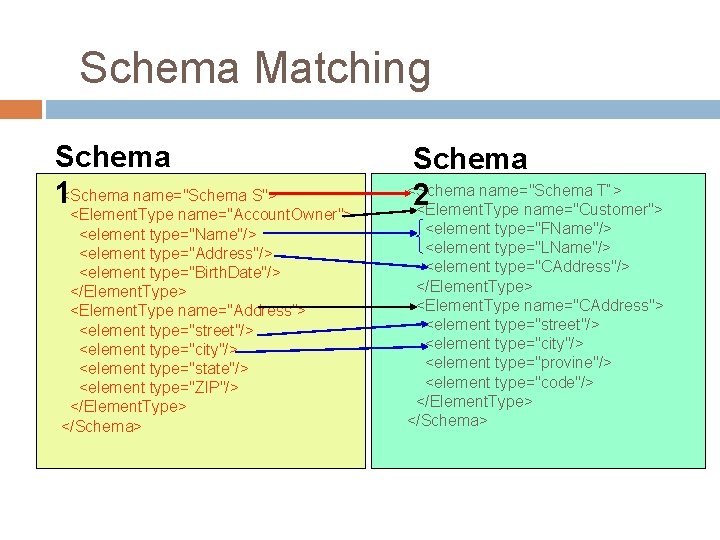

Schema Matching

Schema 1:

```xml
<Schema name="Schema S">
  <ElementType name="AccountOwner">
    <element type="Name"/>
    <element type="Address"/>
    <element type="BirthDate"/>
  </ElementType>
  <ElementType name="Address">
    <element type="street"/>
    <element type="city"/>
    <element type="state"/>
    <element type="ZIP"/>
  </ElementType>
</Schema>
```

Schema 2:

```xml
<Schema name="Schema T">
  <ElementType name="Customer">
    <element type="FName"/>
    <element type="LName"/>
    <element type="CAddress"/>
  </ElementType>
  <ElementType name="CAddress">
    <element type="street"/>
    <element type="city"/>
    <element type="province"/>
    <element type="code"/>
  </ElementType>
</Schema>
```

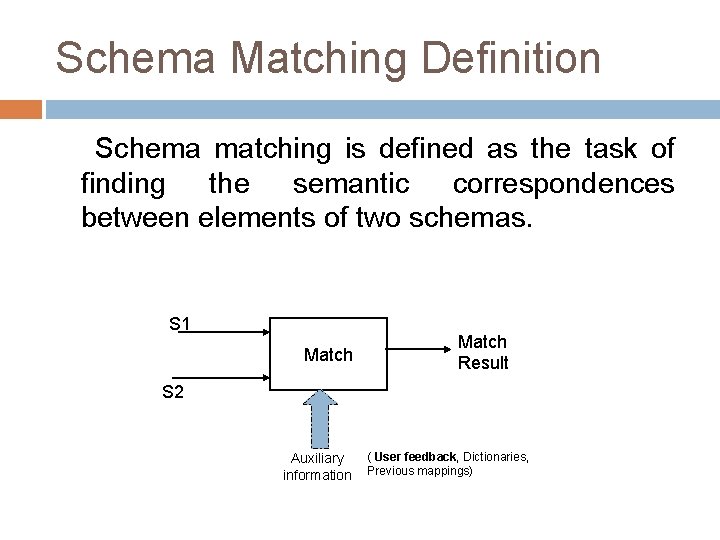

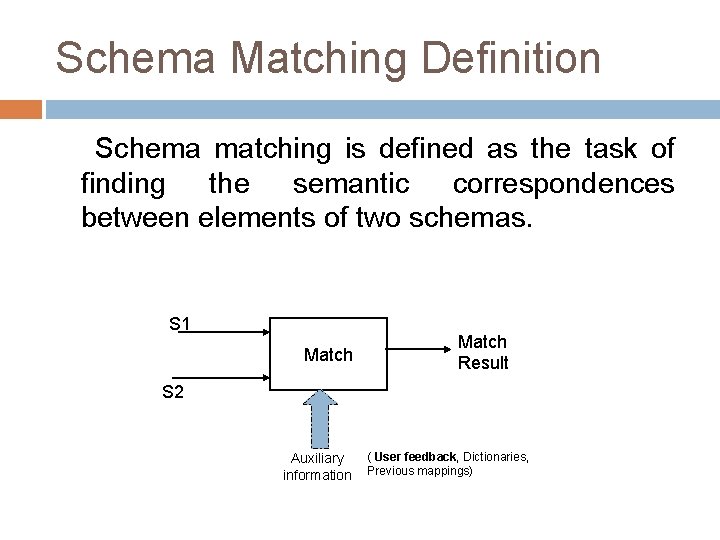

Schema Matching Definition: Schema matching is the task of finding semantic correspondences between elements of two schemas. The Match operation takes two schemas, S1 and S2, together with auxiliary information (user feedback, dictionaries, previous mappings), and produces a match result.
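The Match operation just defined can be sketched as a function from two schemas plus auxiliary information to a list of scored correspondences. This is a minimal illustration, not a real matcher: it assumes each schema is a flat list of element names, models the auxiliary information as a hypothetical user-supplied dictionary of known correspondences, and proposes only pairs with equal names or a dictionary entry.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Correspondence:
    source: str        # element of S1
    target: str        # element of S2
    similarity: float  # confidence in [0, 1]


def match(s1: List[str], s2: List[str],
          aux: Optional[Dict[str, str]] = None) -> List[Correspondence]:
    """Toy Match operator: case-insensitive name equality plus a user dictionary."""
    known = {k.lower(): v.lower() for k, v in (aux or {}).items()}
    result = []
    for a in s1:
        for b in s2:
            if a.lower() == b.lower() or known.get(a.lower()) == b.lower():
                result.append(Correspondence(a, b, 1.0))
    return result


# Element names from Schema S and Schema T in the example.
s1 = ["Name", "Address", "BirthDate", "street", "city", "state", "ZIP"]
s2 = ["FName", "LName", "CAddress", "street", "city", "province", "code"]
user_dictionary = {"Address": "CAddress", "state": "province", "ZIP": "code"}  # hypothetical
for c in match(s1, s2, user_dictionary):
    print(c.source, "->", c.target)
```

Name stays unmatched because it corresponds to two target elements (FName and LName); pairwise equality cannot express such a 1:n correspondence.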

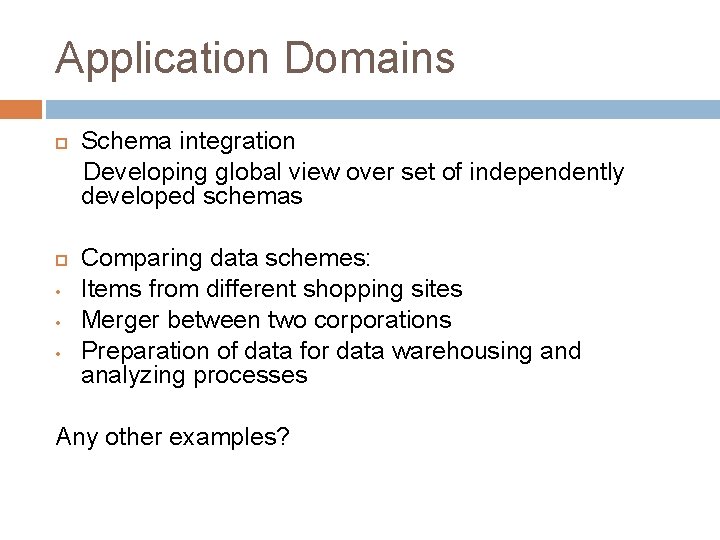

Application Domains
• Schema integration: developing a global view over a set of independently developed schemas
• Comparing data schemas: e.g., items from different shopping sites, or a merger between two corporations
• Preparation of data for data warehousing and analysis processes
Any other examples?

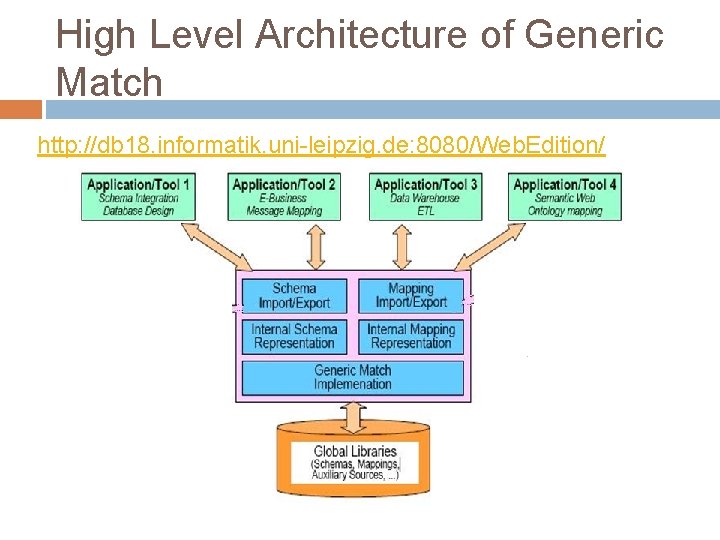

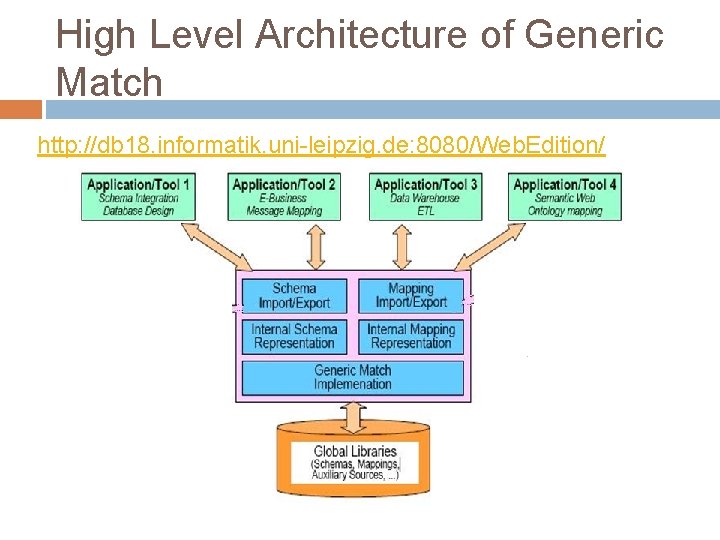

High-Level Architecture of Generic Match: http://db18.informatik.uni-leipzig.de:8080/WebEdition/

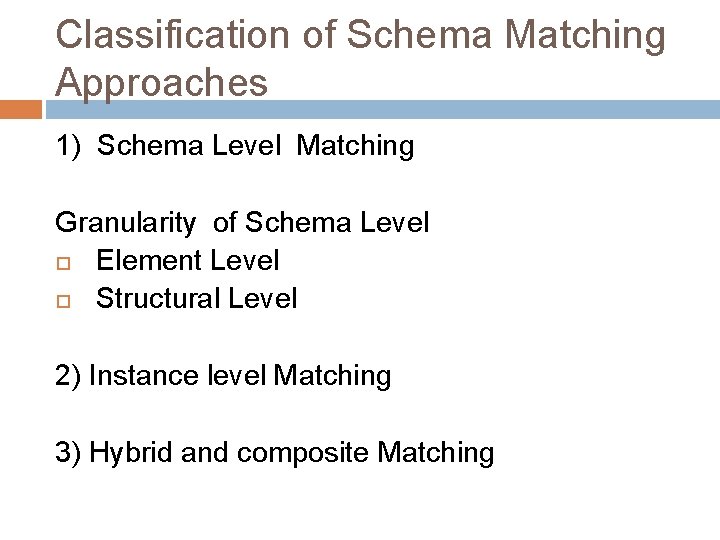

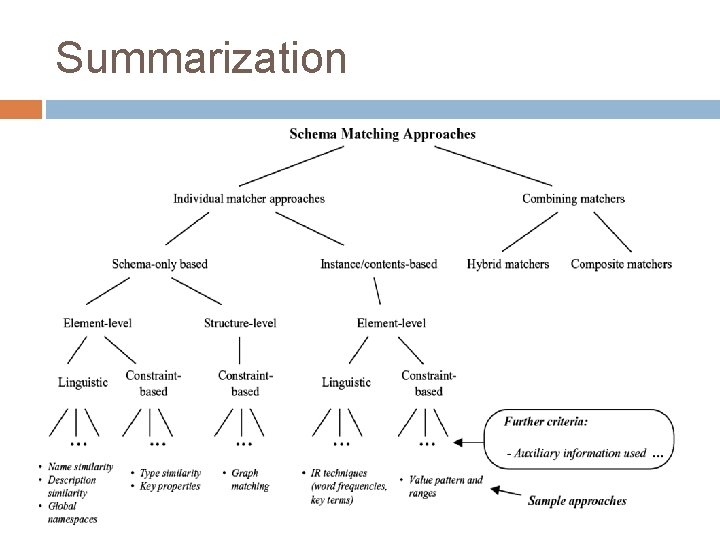

Classification of Schema Matching Approaches
1) Schema-level matching, at two granularities: element level and structure level
2) Instance-level matching
3) Hybrid and composite matching

Schema-Level Matching: Uses only schema-level information (no data content). Which properties? Name, description, data type, is-a/part-of relationships, constraints and structure. Match finds match candidates, each with a similarity value.

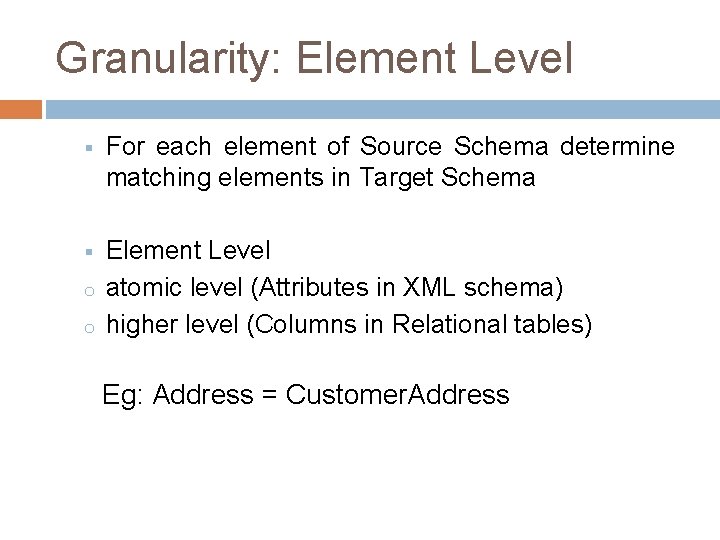

Granularity: Element Level
• For each element of the source schema, determine the matching elements in the target schema.
• Element level: atomic level (e.g., attributes in an XML schema or columns in relational tables) or higher, non-atomic levels. E.g., Address = Customer.Address
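Element-level matching can be sketched as scoring every (source, target) pair of element names and keeping, for each source element, the targets above a threshold. In this sketch difflib's `SequenceMatcher.ratio()` stands in for a real name matcher, and the 0.6 threshold is an arbitrary assumption.

```python
from difflib import SequenceMatcher


def name_similarity(a: str, b: str) -> float:
    """Similarity of two element names in [0, 1] (difflib's ratio)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def element_level_match(source, target, threshold=0.6):
    """For each source element, list target elements scoring at least
    `threshold`, best candidate first."""
    candidates = {}
    for s in source:
        scored = [(t, round(name_similarity(s, t), 2)) for t in target]
        kept = [(t, score) for t, score in scored if score >= threshold]
        candidates[s] = sorted(kept, key=lambda pair: pair[1], reverse=True)
    return candidates


print(element_level_match(["Address", "BirthDate", "ZIP"],
                          ["CAddress", "LName", "code"]))
```

Address finds CAddress (score about 0.93), while ZIP finds nothing: string similarity alone cannot tell that ZIP and code correspond, so other techniques are needed for such pairs.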

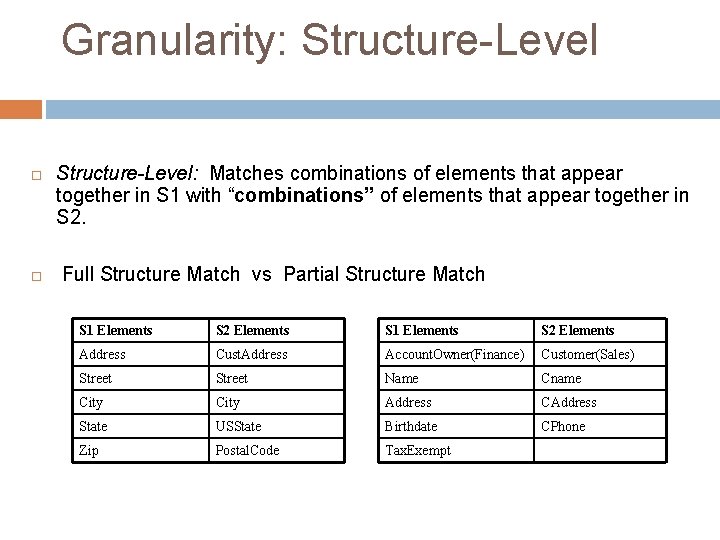

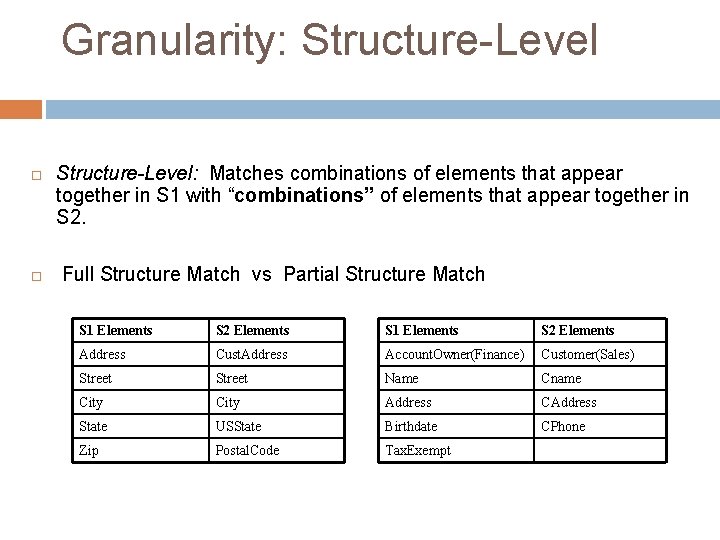

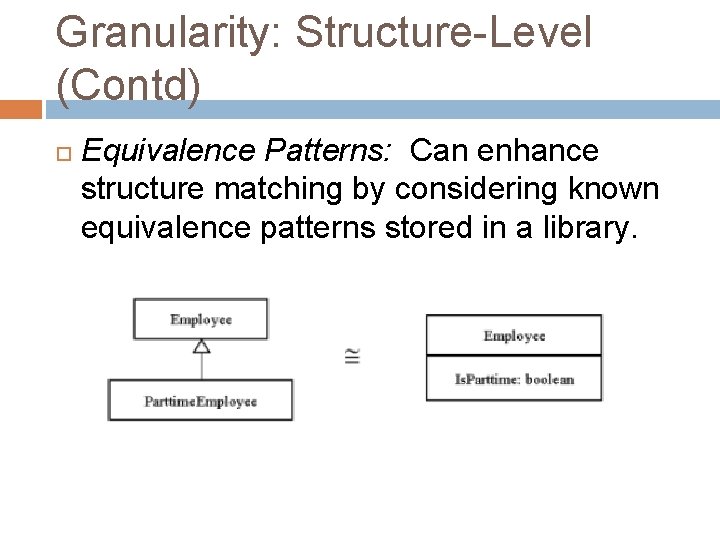

Granularity: Structure Level: Matches combinations of elements that appear together in S1 with combinations of elements that appear together in S2. A structure match can be full (all components match) or partial.

| S1 elements | S2 elements |
|---|---|
| Address: Street, City, State, Zip | CustAddress: Street, City, USState, PostalCode |
| AccountOwner (Finance): Name, Address, Birthdate, TaxExempt | Customer (Sales): CName, CAddress, CPhone |
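One simple way to sketch a structure-level comparison is to score two non-leaf elements by how many of their children have a partner on the other side, given leaf matches from an element-level matcher. The scoring rule here (fraction of matched children) and the hard-coded leaf matches are assumptions for illustration, not the method from the slides.

```python
def structure_match(children1, children2, leaf_matches):
    """Compare two non-leaf elements by their children.

    leaf_matches: set of (child1, child2) pairs found by an element-level matcher.
    Returns (similarity, kind): similarity is the fraction of children on both
    sides that have a partner; kind is "full", "partial" or "none".
    """
    total = len(children1) + len(children2)
    if total == 0:
        return 0.0, "none"
    matched1 = {c for c in children1 if any((c, d) in leaf_matches for d in children2)}
    matched2 = {d for d in children2 if any((c, d) in leaf_matches for c in children1)}
    similarity = (len(matched1) + len(matched2)) / total
    if len(matched1) == len(set(children1)) and len(matched2) == len(set(children2)):
        kind = "full"      # every component has a counterpart
    elif matched1:
        kind = "partial"   # only some components match
    else:
        kind = "none"
    return similarity, kind


# Hypothetical leaf-level matches for the S1/S2 elements of the example.
leaves = {("Street", "Street"), ("City", "City"), ("State", "USState"),
          ("Zip", "PostalCode"), ("Name", "CName"), ("Address", "CAddress")}
print(structure_match(["Street", "City", "State", "Zip"],
                      ["Street", "City", "USState", "PostalCode"], leaves))  # (1.0, 'full')
print(structure_match(["Name", "Address", "Birthdate", "TaxExempt"],
                      ["CName", "CAddress", "CPhone"], leaves)[1])  # partial
```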

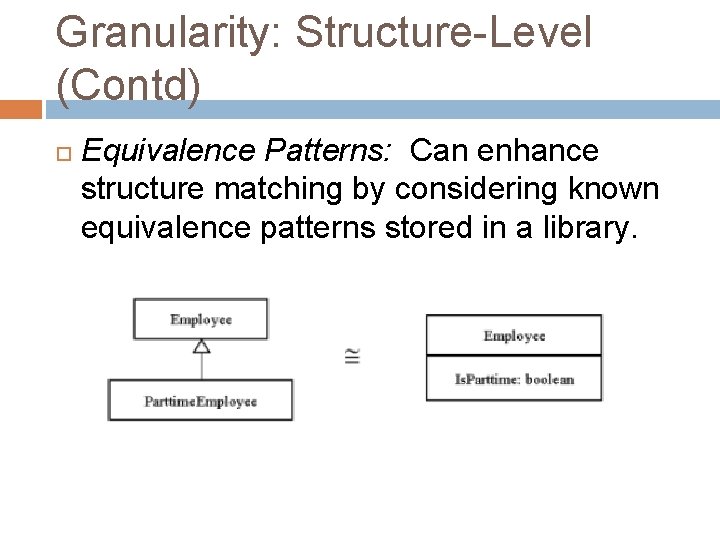

Granularity: Structure Level (contd.): Equivalence Patterns. Structure matching can be enhanced by considering known equivalence patterns stored in a library.

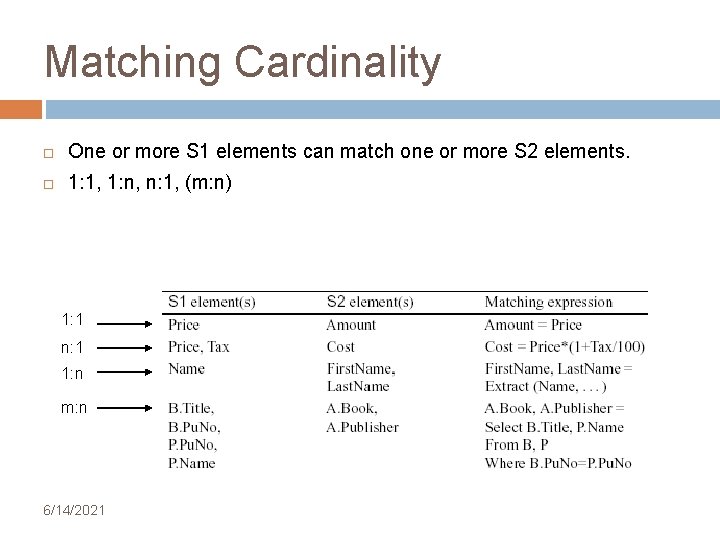

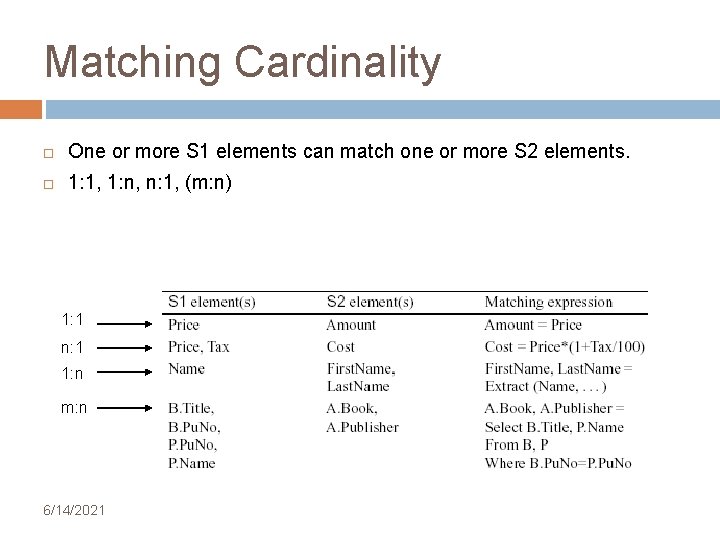

Matching Cardinality: One or more S1 elements can match one or more S2 elements, giving 1:1, 1:n, n:1 and m:n matches.
6/14/2021
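The cardinality of a match can be read off by treating correspondences as edges of a bipartite graph and grouping connected elements. This is a sketch under the assumption that every connected group forms one match element; real matchers may split or merge groups differently.

```python
from collections import defaultdict


def cardinalities(pairs):
    """Group (s1_element, s2_element) pairs into connected match groups and
    label each group 1:1, 1:n, n:1 or m:n."""
    adjacency = defaultdict(set)
    for s, t in pairs:
        adjacency[("S1", s)].add(("S2", t))
        adjacency[("S2", t)].add(("S1", s))
    seen, groups = set(), []
    for start in adjacency:
        if start in seen:
            continue
        # Depth-first search collects one connected component.
        stack, component = [start], set()
        while stack:
            node = stack.pop()
            if node not in component:
                component.add(node)
                stack.extend(adjacency[node] - component)
        seen |= component
        left = sorted(name for side, name in component if side == "S1")
        right = sorted(name for side, name in component if side == "S2")
        label = {(True, True): "1:1", (True, False): "1:n",
                 (False, True): "n:1"}.get((len(left) == 1, len(right) == 1), "m:n")
        groups.append((left, right, label))
    return groups


for group in cardinalities([("Name", "FName"), ("Name", "LName"), ("Address", "CAddress")]):
    print(group)
# (['Name'], ['FName', 'LName'], '1:n')
# (['Address'], ['CAddress'], '1:1')
```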

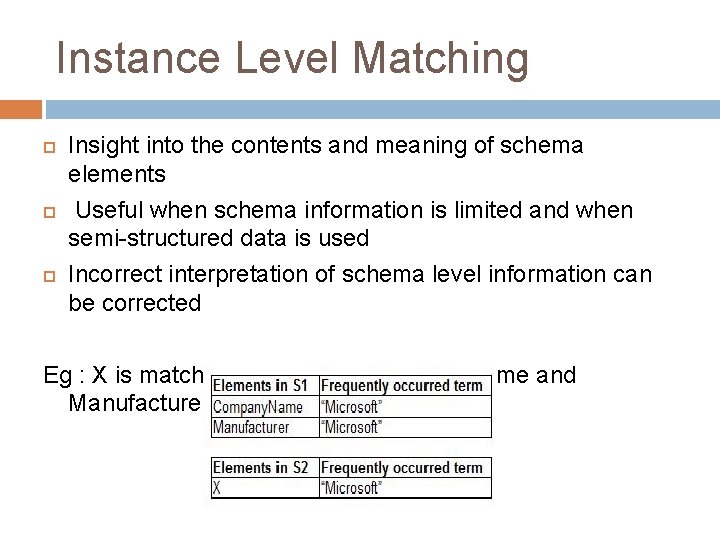

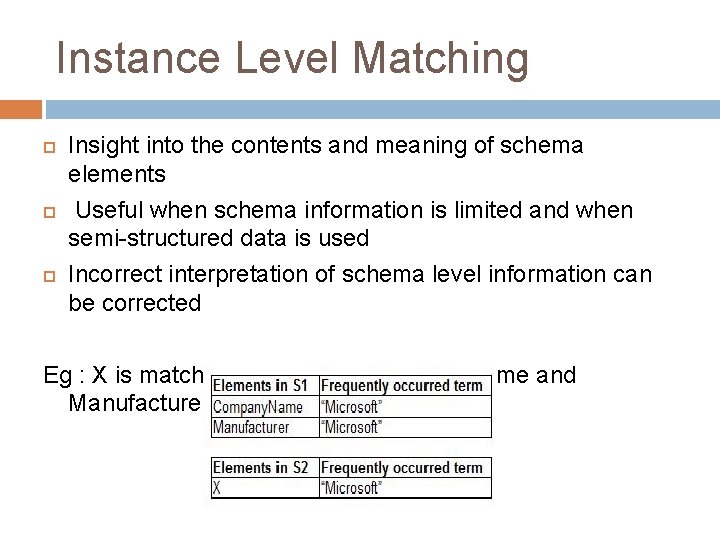

Instance-Level Matching: Provides insight into the contents and meaning of schema elements. It is useful when schema information is limited and when semi-structured data is used, and it can correct incorrect interpretations of schema-level information, e.g., when X is a match candidate for both Company.Name and Manufacturer.

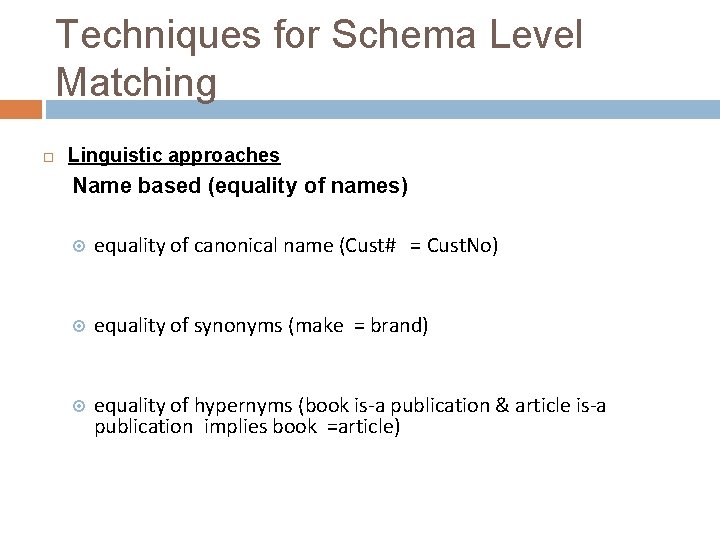

Techniques for Schema-Level Matching: Linguistic Approaches. Name-based matching (equality of names):
• equality of canonical names (Cust# = CustNo)
• equality of synonyms (make = brand)
• equality of hypernyms (book is-a publication and article is-a publication imply book = article)
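The three name-based checks can be sketched as canonicalization followed by dictionary lookups. The abbreviation table, synonym groups and is-a table are hypothetical stand-ins for real thesauri or dictionaries.

```python
import re

# Hypothetical linguistic resources, for illustration only.
ABBREVIATIONS = {"#": "no", "num": "no", "cust": "customer"}
SYNONYMS = [{"make", "brand"}]
HYPERNYMS = {"book": "publication", "article": "publication"}


def canonical(name):
    """Split camelCase, lowercase, tokenize and expand abbreviations."""
    name = name.replace("#", " # ")
    name = re.sub(r"([a-z])([A-Z])", r"\1 \2", name)
    tokens = re.findall(r"[a-z]+|[0-9]+|#", name.lower())
    return " ".join(ABBREVIATIONS.get(t, t) for t in tokens)


def name_match(a, b):
    """Return the kind of name-based match, or None."""
    ca, cb = canonical(a), canonical(b)
    if ca == cb:
        return "canonical name"
    if any(ca in group and cb in group for group in SYNONYMS):
        return "synonym"
    if ca in HYPERNYMS and HYPERNYMS.get(ca) == HYPERNYMS.get(cb):
        return "common hypernym"
    return None


print(name_match("Cust#", "CustNo"), name_match("make", "brand"), name_match("book", "article"))
```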

Techniques for Schema-Level Matching: Name Matching (contd.)
• Similarity based on pronunciation or Soundex (ship2 = ShipTo)
• User-provided name matches (issue = bug)
• Not limited to 1:1 matches (phone = {homePhone, officePhone})
• Context-based: in a payroll application salary = income, whereas in a tax-reporting application salary != income
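Pronunciation-based matching can be sketched with American Soundex, which codes a name as its first letter plus three digits. Spelling out digits as words (so that "2" becomes "two") is a hypothetical preprocessing step added here to handle names like ship2; it is not part of Soundex.

```python
CODES = {**dict.fromkeys("bfpv", "1"), **dict.fromkeys("cgjkqsxz", "2"),
         **dict.fromkeys("dt", "3"), "l": "4", **dict.fromkeys("mn", "5"), "r": "6"}
DIGIT_WORDS = {"2": "two", "4": "four", "8": "eight"}  # hypothetical preprocessing


def soundex(name):
    """American Soundex: first letter plus three digits."""
    letters = [c for c in name.lower() if c.isalpha()]
    if not letters:
        return ""
    code, prev = letters[0].upper(), CODES.get(letters[0], "")
    for c in letters[1:]:
        digit = CODES.get(c, "")
        if digit and digit != prev:
            code += digit
        if c not in "hw":  # h and w do not separate letters with equal codes
            prev = digit
    return (code + "000")[:4]


def spell_digits(name):
    """Replace digits that stand for words, e.g. ship2 -> shiptwo."""
    return "".join(DIGIT_WORDS.get(ch, ch) for ch in name)


def sounds_alike(a, b):
    return soundex(spell_digits(a)) == soundex(spell_digits(b))


print(soundex("ShipTo"), sounds_alike("Ship2", "ShipTo"))  # S130 True
```

Soundex was designed for English surnames and conflates many unrelated names, so it works best as one weak signal among several.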

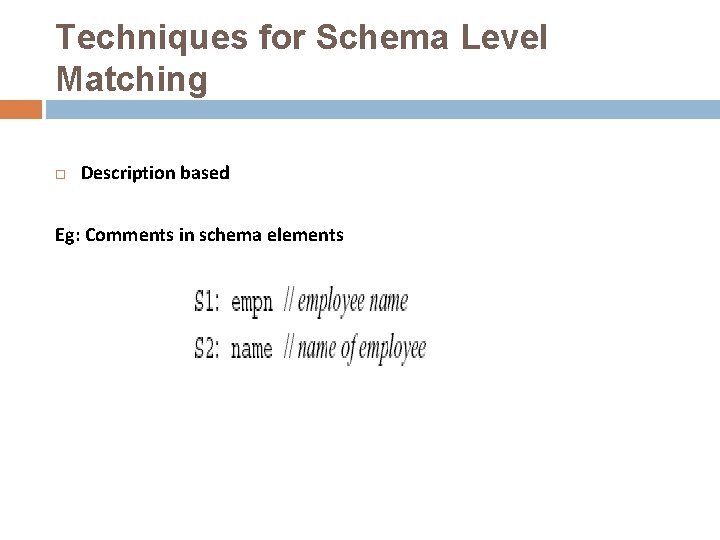

Techniques for Schema-Level Matching: Description-Based. Compares textual descriptions, e.g., comments in schema elements.
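A description-based matcher can be sketched by comparing element comments as bag-of-words vectors with cosine similarity. The comments are hypothetical, and a practical version would at least remove stop words and stem terms.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(text1, text2):
    """Cosine similarity of the word-count vectors of two descriptions."""
    v1, v2 = bag_of_words(text1), bag_of_words(text2)
    dot = sum(v1[w] * v2[w] for w in v1)
    norm = math.sqrt(sum(c * c for c in v1.values())) * math.sqrt(sum(c * c for c in v2.values()))
    return dot / norm if norm else 0.0


# Hypothetical comments attached to schema elements.
emp_no = "unique identification number of an employee"
eid = "employee identification number"
dept_no = "number of the department"
print(round(cosine(emp_no, eid), 2), round(cosine(emp_no, dept_no), 2))  # 0.71 0.41
```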

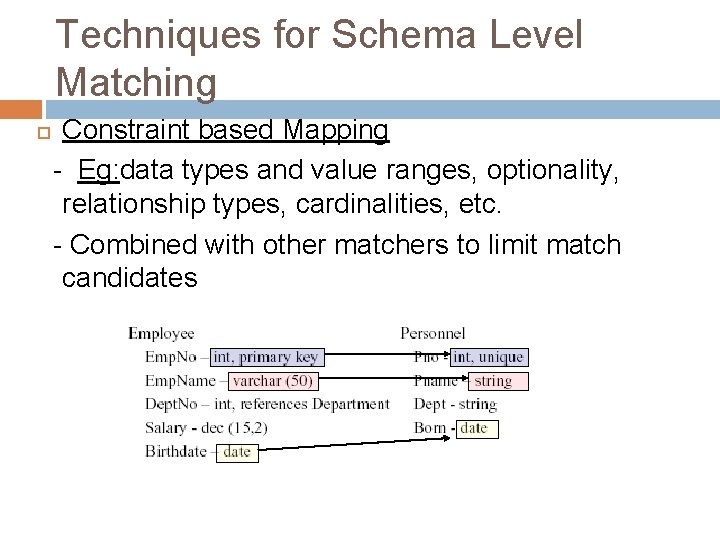

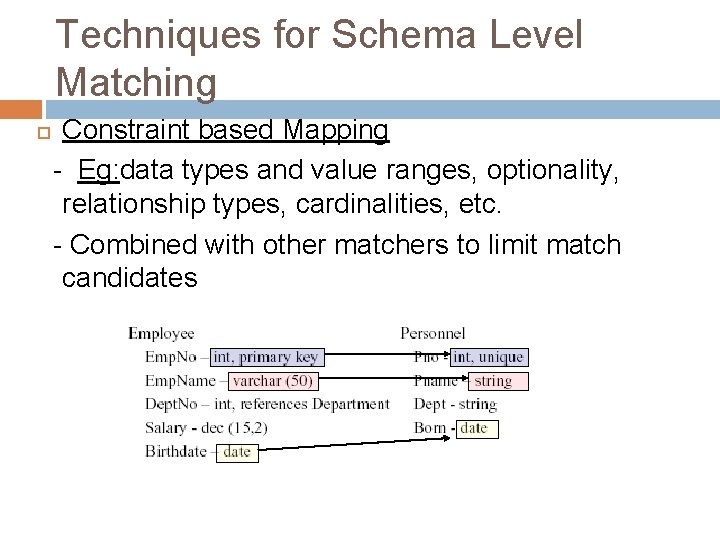

Techniques for Schema-Level Matching: Constraint-Based Mapping
- Uses constraints such as data types and value ranges, optionality, relationship types and cardinalities
- Combined with other matchers to limit the match candidates
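Constraints are typically used to prune candidates proposed by other matchers. In this sketch the type-compatibility table and the "keys only map to keys" rule are illustrative assumptions.

```python
# Hypothetical table of data types considered compatible (in either order).
COMPATIBLE = {("int", "int"), ("int", "decimal"), ("decimal", "decimal"),
              ("varchar", "varchar"), ("varchar", "char"), ("char", "char"),
              ("date", "date")}


def compatible(c1, c2):
    """True if two element descriptions do not violate each other's constraints."""
    types_ok = (c1["type"], c2["type"]) in COMPATIBLE or (c2["type"], c1["type"]) in COMPATIBLE
    keys_ok = c1.get("key", False) == c2.get("key", False)  # keys only map to keys
    return types_ok and keys_ok


def prune(candidates, s1, s2):
    """Drop candidate pairs whose constraints are incompatible."""
    return [(a, b) for a, b in candidates if compatible(s1[a], s2[b])]


s1 = {"EmpNo": {"type": "int", "key": True}, "BirthDate": {"type": "date"}}
s2 = {"Eid": {"type": "int", "key": True}, "HireDate": {"type": "date"},
      "Name": {"type": "varchar"}}
candidates = [(a, b) for a in s1 for b in s2]
print(prune(candidates, s1, s2))  # [('EmpNo', 'Eid'), ('BirthDate', 'HireDate')]
```

BirthDate/HireDate survives even though it is wrong: constraints narrow the candidate set but cannot decide on their own, which is why they are combined with other matchers.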

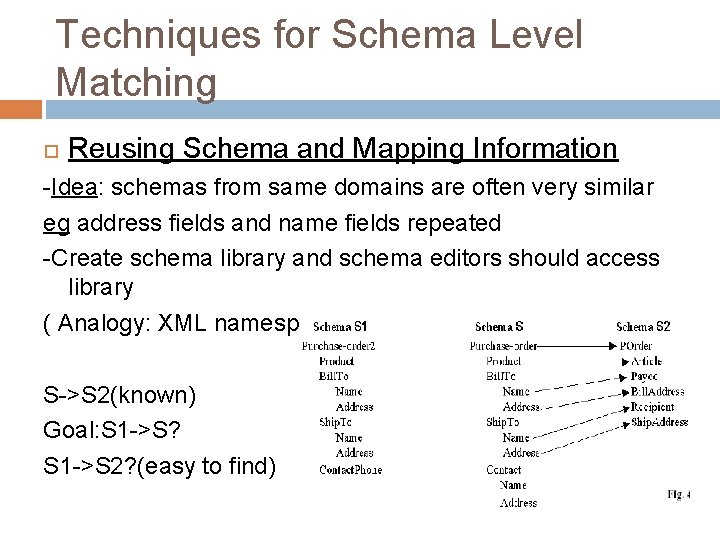

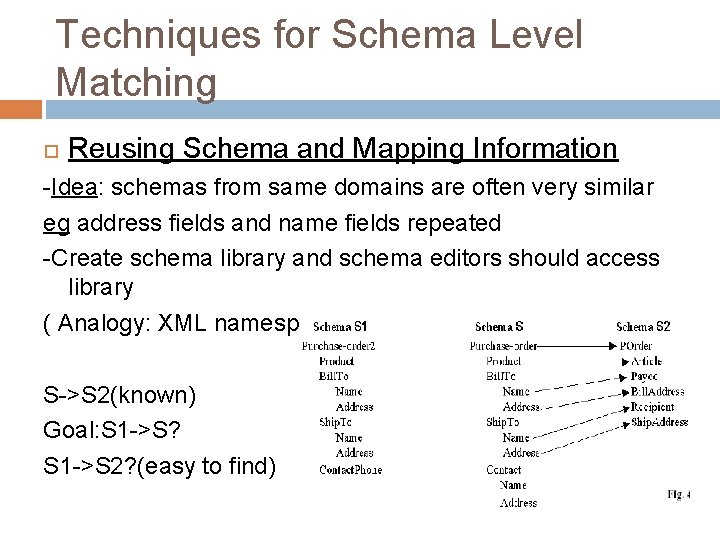

Techniques for Schema-Level Matching: Reusing Schema and Mapping Information
- Idea: schemas from the same domain are often very similar, e.g., address and name fields recur.
- Create a schema library that schema editors can access (analogy: XML namespaces).
- If the mapping S→S2 is already known, finding S1→S2 reduces to finding S1→S (which is often easy) and composing it with S→S2.
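Reusing a known mapping can be sketched as composing two 1:1 mappings represented as dictionaries. The element names are hypothetical, and real match results carry similarity values and may be n:m, in which case composition also needs a rule for combining scores; this sketch omits that.

```python
def compose(s1_to_s, s_to_s2):
    """Derive S1 -> S2 from S1 -> S and S -> S2 (1:1 mappings as dicts).
    Elements of S1 whose image in S has no counterpart in S2 are dropped."""
    return {a: s_to_s2[b] for a, b in s1_to_s.items() if b in s_to_s2}


# Hypothetical mappings for illustration.
s1_to_s = {"CustName": "Name", "CustAddr": "Address", "Fax": "FaxNo"}
s_to_s2 = {"Name": "CName", "Address": "CAddress"}
print(compose(s1_to_s, s_to_s2))  # {'CustName': 'CName', 'CustAddr': 'CAddress'}
```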

Techniques for Instance-Level Matching
- IR techniques (measures such as the Jaccard coefficient)
- Constraint-based characterization (e.g., the value range of EmpNo vs. that of DeptNo)
- Auxiliary information and learning (e.g., evaluate S1's contents to build Characterization 1, then evaluate S2's contents against Characterization 1)
Drawback of instance-based matching?
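Two of these instance-level signals can be sketched directly on column values: the Jaccard coefficient of the distinct values, and the overlap of numeric value ranges as a simple constraint-based characterization. The sample values are hypothetical.

```python
def jaccard(values1, values2):
    """|A ∩ B| / |A ∪ B| over the distinct instance values of two columns."""
    a, b = set(values1), set(values2)
    return len(a & b) / len(a | b) if a | b else 0.0


def range_overlap(values1, values2):
    """Overlap of the numeric ranges [min, max] of two columns, relative to their union."""
    lo1, hi1, lo2, hi2 = min(values1), max(values1), min(values2), max(values2)
    intersection = max(0, min(hi1, hi2) - max(lo1, lo2))
    union = max(hi1, hi2) - min(lo1, lo2)
    return intersection / union if union else 1.0


# Hypothetical instance data.
emp_no = [1001, 1002, 1050, 1200]
staff_id = [1002, 1050, 1300]
dept_no = [10, 20, 30]
print(jaccard(emp_no, staff_id), jaccard(emp_no, dept_no))  # 0.4 0.0
print(round(range_overlap(emp_no, staff_id), 2), range_overlap(emp_no, dept_no))  # 0.66 0.0
```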

Combining Matchers: Hybrid Matcher. Integrates multiple matching criteria into a single matcher, e.g., one matcher that uses both name matching and constraint-based matching. It works in a single pass, and the combination of criteria is hard-wired.
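A hybrid matcher can be sketched as one scoring function in which the criteria are fixed in code: here a data-type check acts as a filter and difflib's name similarity supplies the score. The element descriptions and the 0.5 threshold are assumptions.

```python
from difflib import SequenceMatcher


def hybrid_score(e1, e2):
    """Single pass with hard-wired criteria: a data-type constraint acts as a
    filter, and name similarity supplies the score."""
    if e1["type"] != e2["type"]:
        return 0.0
    return SequenceMatcher(None, e1["name"].lower(), e2["name"].lower()).ratio()


def hybrid_match(s1, s2, threshold=0.5):
    return [(e1["name"], e2["name"])
            for e1 in s1 for e2 in s2 if hybrid_score(e1, e2) >= threshold]


s1 = [{"name": "Address", "type": "string"}, {"name": "BirthDate", "type": "date"}]
s2 = [{"name": "CAddress", "type": "string"}, {"name": "Birthplace", "type": "string"}]
print(hybrid_match(s1, s2))  # [('Address', 'CAddress')]
```

BirthDate and Birthplace have similar names but are rejected by the type check; changing how the criteria interact means editing `hybrid_score` itself.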

Combining Matchers: Composite Matcher. Combines the results of several independently executed matchers. It can be iterative (the match result of the 1st matcher is consumed by the 2nd matcher), and the matchers can be ordered flexibly. Which is more efficient: hybrid or composite?
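A composite matcher can be sketched as running independent matchers over all pairs and aggregating their scores afterwards. The weighted average, the weights and the threshold are illustrative choices; other aggregations (max, min) would only change `composite_match`.

```python
from difflib import SequenceMatcher


def name_matcher(a, b):
    return SequenceMatcher(None, a["name"].lower(), b["name"].lower()).ratio()


def type_matcher(a, b):
    return 1.0 if a["type"] == b["type"] else 0.0


def composite_match(s1, s2, matchers, weights, threshold=0.6):
    """Run each matcher independently over all pairs, then aggregate the
    per-matcher scores with a weighted average and keep pairs above threshold."""
    pairs = [(a, b) for a in s1 for b in s2]
    results = [[m(a, b) for a, b in pairs] for m in matchers]  # one row per matcher
    matches = {}
    for i, (a, b) in enumerate(pairs):
        score = sum(w * row[i] for w, row in zip(weights, results)) / sum(weights)
        if score >= threshold:
            matches[(a["name"], b["name"])] = round(score, 2)
    return matches


s1 = [{"name": "Address", "type": "string"}, {"name": "BirthDate", "type": "date"}]
s2 = [{"name": "CAddress", "type": "string"}, {"name": "Birthplace", "type": "string"}]
print(composite_match(s1, s2, [name_matcher, type_matcher], weights=[0.7, 0.3]))
```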

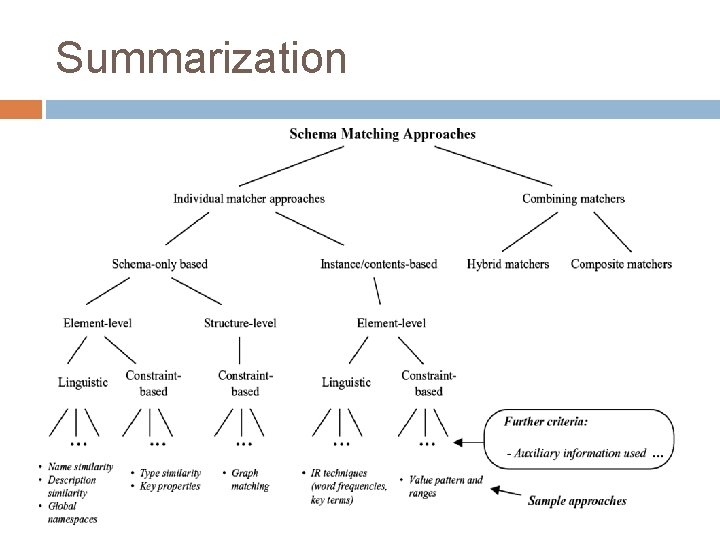

Summarization

How Good Is a Match? Assessing match quality is difficult, and human verification and tuning of the matching are often required. A useful metric would measure the amount of human work required to reach the perfect match. Recall: how many of the good matches did we show? Precision: how many of the matches we showed are good?
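Precision and recall can be computed by comparing proposed correspondences with a manually determined correct set. The effort-oriented measure in `overall` (sometimes called Overall in schema-matching evaluations) equals recall × (2 − 1/precision), written here as (hits − false positives) / |correct| to avoid dividing by zero; the example matches are hypothetical.

```python
def precision_recall(proposed, correct):
    """Precision = |proposed & correct| / |proposed|; recall = |proposed & correct| / |correct|."""
    proposed, correct = set(proposed), set(correct)
    hits = len(proposed & correct)
    precision = hits / len(proposed) if proposed else 0.0
    recall = hits / len(correct) if correct else 0.0
    return precision, recall


def overall(proposed, correct):
    """Rough post-match effort: correct matches found minus wrong ones to delete,
    relative to the number of correct matches (can be negative)."""
    proposed, correct = set(proposed), set(correct)
    hits = len(proposed & correct)
    return (hits - (len(proposed) - hits)) / len(correct) if correct else 0.0


correct = {("Address", "CAddress"), ("street", "street"), ("city", "city"),
           ("state", "province"), ("ZIP", "code")}
proposed = {("Address", "CAddress"), ("street", "street"), ("city", "city"),
            ("Name", "LName")}
print(precision_recall(proposed, correct), overall(proposed, correct))  # (0.75, 0.6) 0.4
```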

Current Work: LSD, SKAT, Similarity Flooding

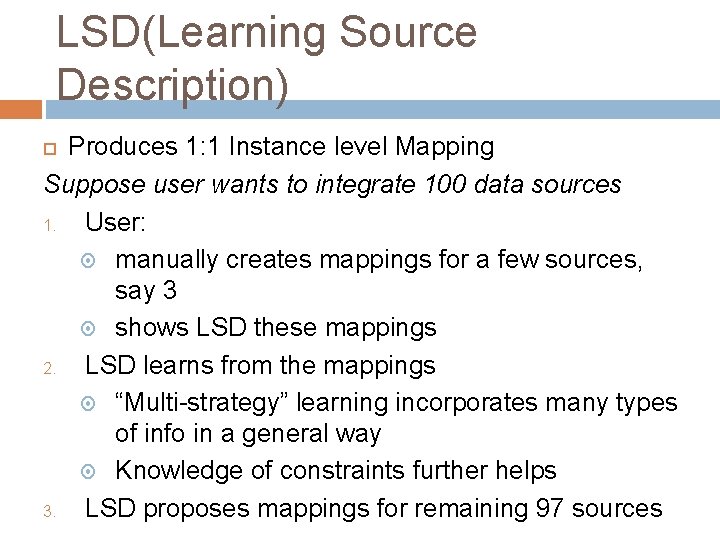

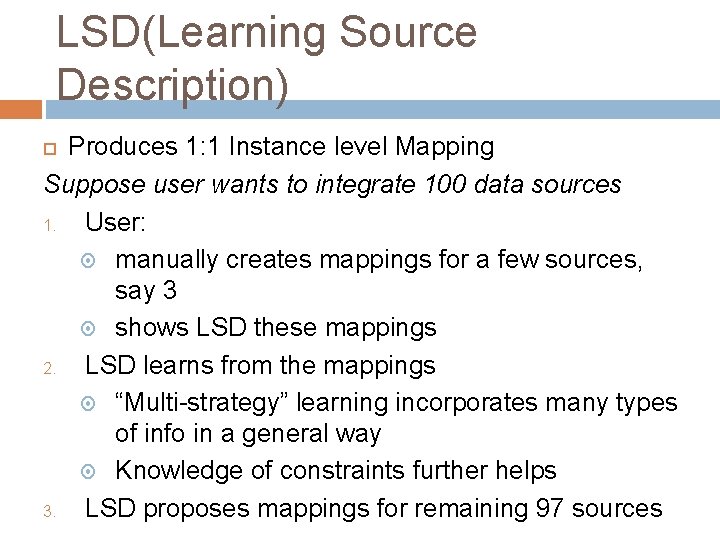

LSD (Learning Source Descriptions): produces 1:1, instance-level mappings. Suppose a user wants to integrate 100 data sources:
1. The user manually creates mappings for a few sources, say 3, and shows LSD these mappings.
2. LSD learns from the mappings. "Multi-strategy" learning incorporates many types of information in a general way, and knowledge of constraints helps further.
3. LSD proposes mappings for the remaining 97 sources.

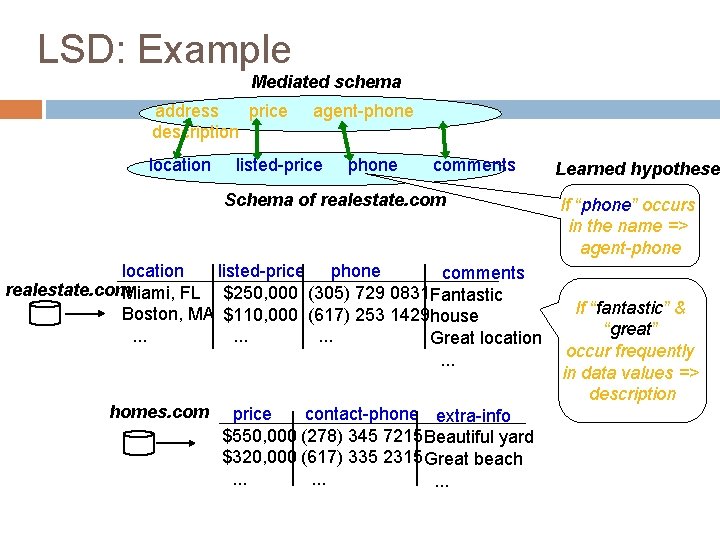

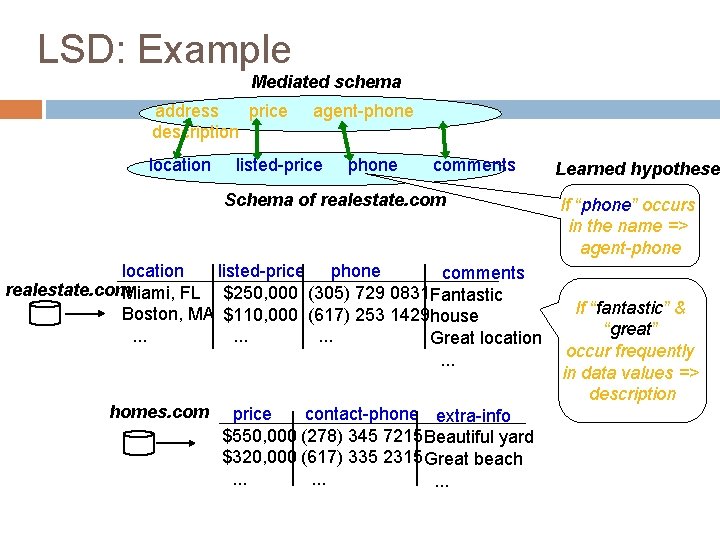

LSD: Example
Mediated schema: address, price, agent-phone, description
Schema of realestate.com: location, listed-price, phone, comments

| location | listed-price | phone | comments |
|---|---|---|---|
| Miami, FL | $250,000 | (305) 729 0831 | Fantastic house... |
| Boston, MA | $110,000 | (617) 253 1429 | Great location... |

Schema of homes.com: price, contact-phone, extra-info

| price | contact-phone | extra-info |
|---|---|---|
| $550,000 | (278) 345 7215 | Beautiful yard |
| $320,000 | (617) 335 2315 | Great beach... |

Learned hypotheses:
- If "phone" occurs in the name => agent-phone
- If "fantastic" and "great" occur frequently in data values => description

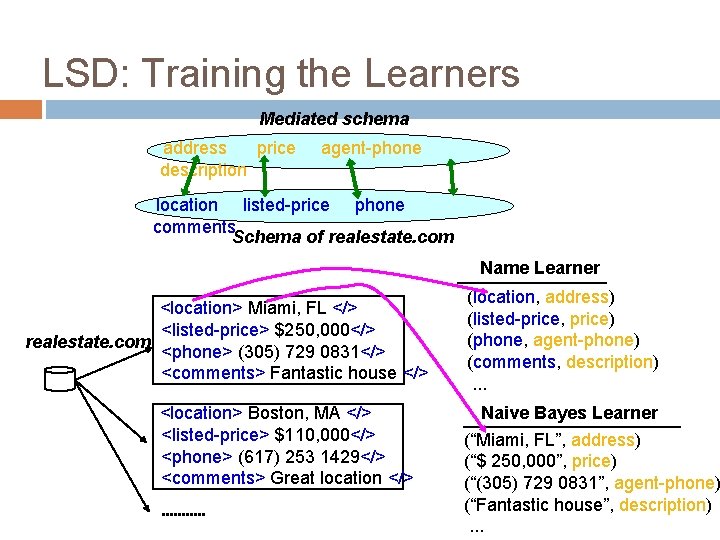

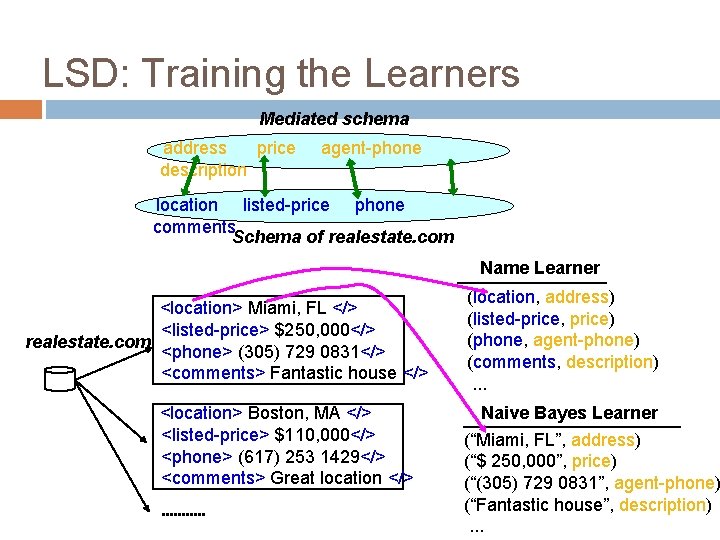

LSD: Training the Learners
Mediated schema: address, price, agent-phone, description
Schema of realestate.com: location, listed-price, phone, comments
realestate.com instances:
<location> Miami, FL </> <listed-price> $250,000</> <phone> (305) 729 0831</> <comments> Fantastic house </>
<location> Boston, MA </> <listed-price> $110,000</> <phone> (617) 253 1429</> <comments> Great location </>
Name Learner training examples: (location, address), (listed-price, price), (phone, agent-phone), (comments, description), ...
Naive Bayes Learner training examples: ("Miami, FL", address), ("$250,000", price), ("(305) 729 0831", agent-phone), ("Fantastic house", description), ...
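A Naive Bayes learner of the kind trained here can be sketched as a multinomial Naive Bayes classifier over the tokens of instance values, with add-one smoothing. This is a generic textbook version, not LSD's code; the tokenizer (which rewrites digit runs as shapes such as "ddd" so that unseen phone numbers and prices still generalize) is a hypothetical choice.

```python
import math
import re
from collections import Counter, defaultdict


def tokens(value):
    """Hypothetical tokenizer: words, digit runs as shapes ('305' -> 'ddd'), and $ ( ) ,"""
    return [re.sub(r"\d", "d", t) for t in re.findall(r"[a-z]+|\d+|[$(),]", value.lower())]


class NaiveBayesLearner:
    """Multinomial Naive Bayes over instance tokens, with add-one smoothing."""

    def fit(self, examples):
        self.label_counts = Counter(label for _, label in examples)
        self.token_counts = defaultdict(Counter)
        for value, label in examples:
            self.token_counts[label].update(tokens(value))
        self.vocab = {t for counts in self.token_counts.values() for t in counts}
        return self

    def predict(self, value):
        """Return {label: probability} for a single instance value."""
        total = sum(self.label_counts.values())
        log_scores = {}
        for label, n in self.label_counts.items():
            counts = self.token_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            log_scores[label] = math.log(n / total) + sum(
                math.log((counts[t] + 1) / denom) for t in tokens(value))
        top = max(log_scores.values())
        unnorm = {label: math.exp(s - top) for label, s in log_scores.items()}
        z = sum(unnorm.values())
        return {label: u / z for label, u in unnorm.items()}


# Training pairs built from the realestate.com instances listed on the slide.
train = [("Miami, FL", "address"), ("Boston, MA", "address"),
         ("$250,000", "price"), ("$110,000", "price"),
         ("(305) 729 0831", "agent-phone"), ("(617) 253 1429", "agent-phone"),
         ("Fantastic house", "description"), ("Great location", "description")]
learner = NaiveBayesLearner().fit(train)
for value in ["(278) 345 7215", "$550,000", "Great beach"]:
    scores = learner.predict(value)
    print(value, "->", max(scores, key=scores.get))
```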

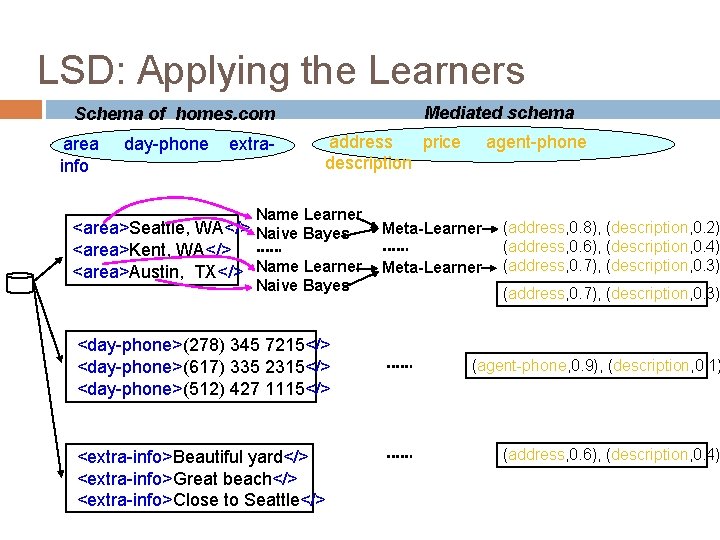

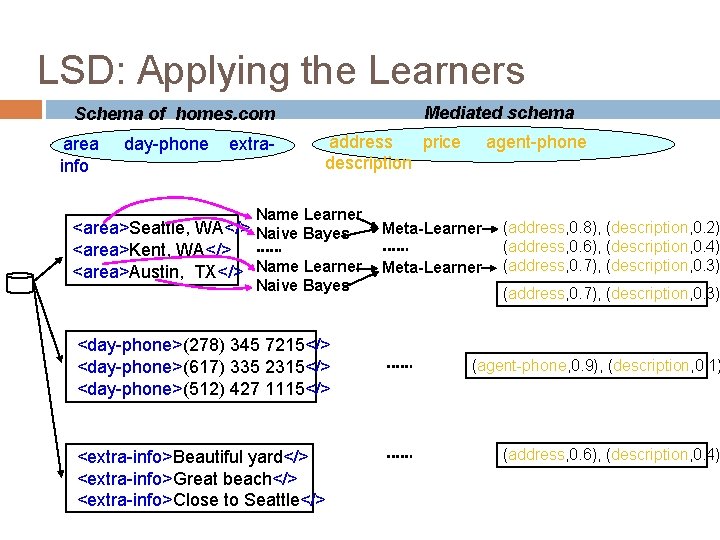

LSD: Applying the Learners
Mediated schema: address, price, agent-phone, description
Schema of homes.com: area, day-phone, extra-info
• area instances: <area>Seattle, WA</>, <area>Kent, WA</>, <area>Austin, TX</>
• day-phone instances: <day-phone>(278) 345 7215</>, <day-phone>(617) 335 2315</>, <day-phone>(512) 427 1115</>
• extra-info instances: <extra-info>Beautiful yard</>, <extra-info>Great beach</>, <extra-info>Close to Seattle</>
The Name Learner and the Naive Bayes learner make predictions for each element, and the Meta-Learner combines them into scored predictions such as (address, 0.8), (description, 0.2); (address, 0.6), (description, 0.4); (address, 0.7), (description, 0.3); (agent-phone, 0.9), (description, 0.1); (address, 0.6), (description, 0.4).
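The combination step can be sketched as a weighted sum of base-learner scores per label, followed by averaging the per-instance results of one source element. LSD learns the weights by stacking; here they are fixed, hypothetical numbers, and simple averaging is an assumed stand-in for LSD's prediction combiner.

```python
from collections import defaultdict


def meta_combine(predictions, weights):
    """Combine base-learner outputs for one instance.

    predictions: {learner: {label: score}}
    weights: {learner: {label: weight}} (hypothetical; LSD learns these).
    """
    combined = defaultdict(float)
    for learner, scores in predictions.items():
        for label, p in scores.items():
            combined[label] += weights[learner].get(label, 0.0) * p
    z = sum(combined.values())
    return {label: s / z for label, s in combined.items()} if z else dict(combined)


def column_prediction(instance_predictions):
    """Average the per-instance predictions of one source element and pick the best label."""
    totals = defaultdict(float)
    for pred in instance_predictions:
        for label, p in pred.items():
            totals[label] += p / len(instance_predictions)
    return max(totals, key=totals.get), dict(totals)


weights = {"name": {"address": 0.3, "description": 0.4},
           "bayes": {"address": 0.7, "description": 0.6}}
instance = {"name": {"address": 0.5, "description": 0.5},
            "bayes": {"address": 0.9, "description": 0.1}}
print(meta_combine(instance, weights))

# The first three value pairs listed on the slide, treated as one element's instances.
area = [{"address": 0.8, "description": 0.2},
        {"address": 0.6, "description": 0.4},
        {"address": 0.7, "description": 0.3}]
print(column_prediction(area)[0])  # address
```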

SKAT (Semantic Knowledge Articulation Tool): An expert supplies SKAT with a few initial rules, e.g., 1) Match: US.president, US.chancellor; 2) Mismatch: human.nail, factory.nail. SKAT generates an articulation from the supplied matching rules, and the expert approves or rejects the proposals. Correct rules are then created and an updated articulation is computed; the knowledge gained from irrelevant and rejected rules is stored.

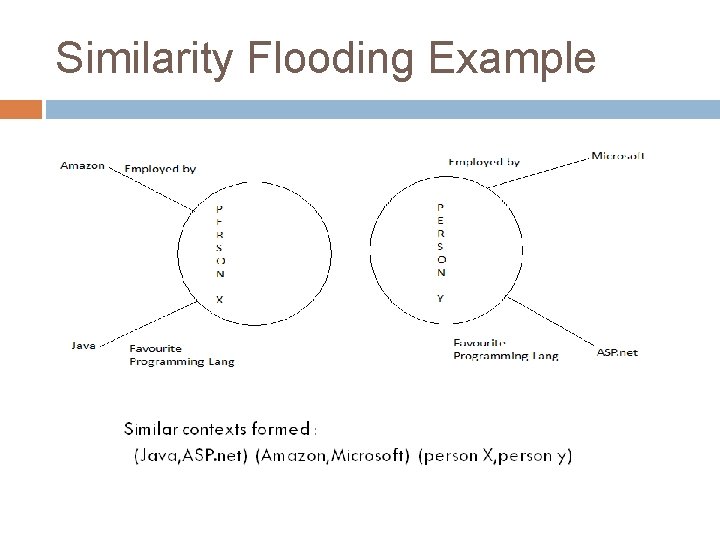

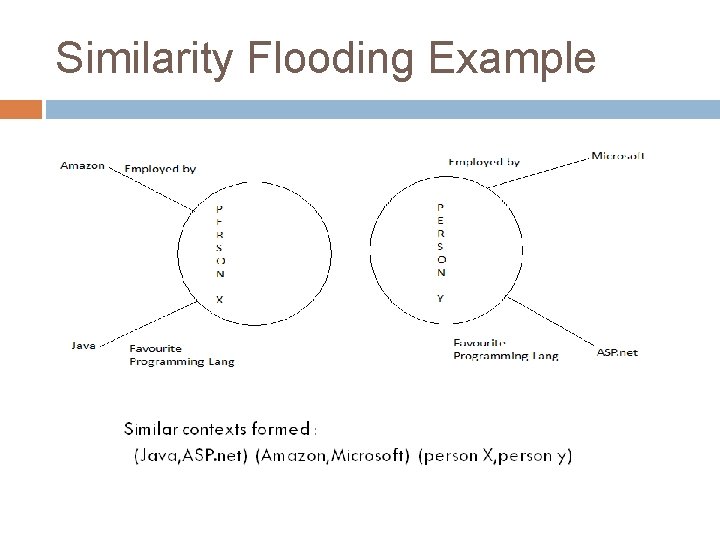

Similarity Flooding. Intuition: whenever two elements in graphs G1 and G2 are similar, their neighbors tend to be similar as well. The schemas are first transformed into directed labeled graphs.
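The flooding idea can be sketched as a fixpoint computation over pairs of nodes: each pair passes its similarity to neighboring pairs reachable over equally labeled edges, and scores are renormalized by the maximum after every round. This is a simplified sketch of Similarity Flooding: it uses only the basic update rule, splits propagation evenly among same-labeled edges, runs a fixed number of iterations instead of testing convergence, and omits the final filtering step. The graph encoding (parent "child" edges) and initial scores are hypothetical.

```python
from collections import Counter, defaultdict


def similarity_flooding(g1, g2, initial, iterations=10):
    """Simplified Similarity Flooding.

    g1, g2: lists of (source, label, target) edges.
    initial: {(node_of_g1, node_of_g2): similarity}, e.g. from a name matcher.
    """
    # Pairwise connectivity graph: (a, b) -l-> (a2, b2) iff a -l-> a2 and b -l-> b2.
    pcg = [((a, b), l1, (a2, b2))
           for a, l1, a2 in g1 for b, l2, b2 in g2 if l1 == l2]
    # Propagation coefficients: a pair's similarity is split evenly over its
    # edges with the same label (separately for forward and backward flow).
    out_deg = Counter((p, l) for p, l, _ in pcg)
    in_deg = Counter((q, l) for _, l, q in pcg)
    sigma = dict(initial)
    for _ in range(iterations):
        flow = defaultdict(float)
        for p, l, q in pcg:
            flow[q] += sigma.get(p, 0.0) / out_deg[(p, l)]  # forward
            flow[p] += sigma.get(q, 0.0) / in_deg[(q, l)]   # backward
        raw = {n: sigma.get(n, 0.0) + flow.get(n, 0.0) for n in set(sigma) | set(flow)}
        top = max(raw.values(), default=0.0) or 1.0
        sigma = {n: v / top for n, v in raw.items()}  # normalize by the maximum
    return sigma


g1 = [("AccountOwner", "child", "Name"), ("AccountOwner", "child", "Address")]
g2 = [("Customer", "child", "CName"), ("Customer", "child", "CAddress")]
initial = {("AccountOwner", "Customer"): 0.1, ("Name", "CName"): 0.8,
           ("Address", "CAddress"): 0.9, ("AccountOwner", "CAddress"): 0.3}
result = similarity_flooding(g1, g2, initial)
for pair, score in sorted(result.items(), key=lambda kv: -kv[1])[:3]:
    print(pair, round(score, 2))
```

AccountOwner/Customer starts with the lowest score but ends highest because its children match well, while the unsupported AccountOwner/CAddress pair fades. A filter, for example keeping each element's best-scoring partner, would then turn these scores into a match result.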

Similarity Flooding Example

Conclusion
• User feedback and interaction: minimize user input but maximize the impact of the feedback. If we require user acceptance for our matches, what happens if our matcher returns hundreds or thousands of matches? The more configurable the matcher, the better.
• Problems with schema representation and data: dealing with inconsistent data values for a schema element; independence of schema representation.
• Mapping maintenance: what happens when you map between two schemas and then one of them changes?
• Sophisticated techniques are required for n:m matches (current work is based on 1:1).

Conclusion (contd.): Areas that need more attention: 1) reuse opportunities; 2) learning from user feedback. Any other issues to address?

THANK YOU!