A Study of Partitioning Policies for Graph Analytics


- Slides: 26

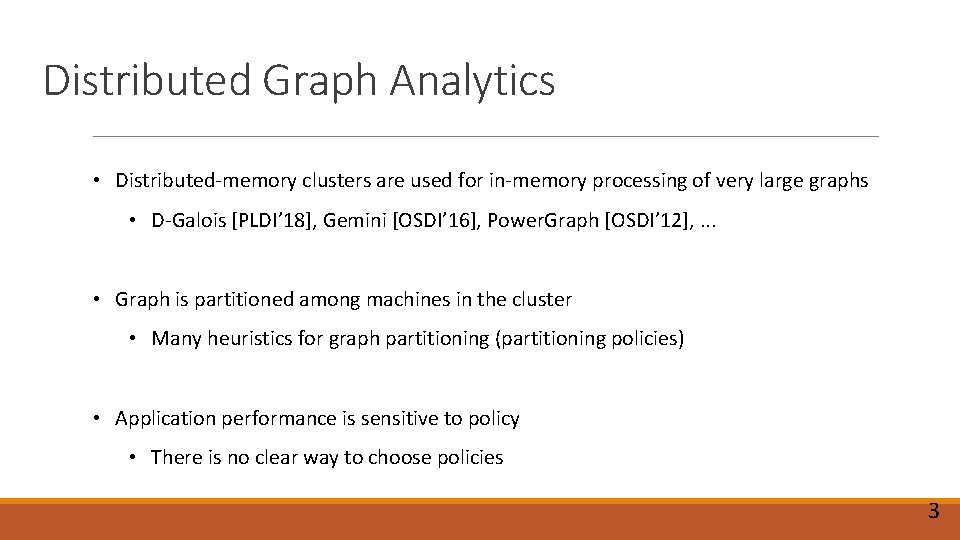

A Study of Partitioning Policies for Graph Analytics on Large-Scale Distributed Platforms
Gurbinder Gill, Roshan Dathathri, Loc Hoang, Keshav Pingali

Graph Analytics
• Applications: machine learning and network analysis
• Datasets: unstructured graphs
• Need TBs of memory
Credits: Wikipedia, SFL Scientific, MakeUseOf, Sentinel Visualizer

Distributed Graph Analytics
• Distributed-memory clusters are used for in-memory processing of very large graphs
  • D-Galois [PLDI’18], Gemini [OSDI’16], PowerGraph [OSDI’12], ...
• The graph is partitioned among the machines in the cluster
• There are many heuristics for graph partitioning (partitioning policies)
• Application performance is sensitive to the policy
• There is no clear way to choose a policy

Existing Partitioning Studies
• Performed on small graphs and clusters
• Only considered metrics such as edges cut, average number of replicas, etc.
• Did not consider work-efficient data-driven algorithms
  • Only topology-driven algorithms were evaluated
• Used frameworks that apply the same communication pattern to all partitioning policies
  • This puts some partitioning policies at a disadvantage
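To illustrate the difference between topology-driven and work-efficient data-driven algorithms, the following minimal Python sketch runs BFS both ways on one machine and counts edge visits. It is a hypothetical illustration (the function names are ours, not D-Galois code): the topology-driven version sweeps every vertex each round until nothing changes, while the data-driven version processes only vertices that were put on a worklist because their label changed.

```python
from collections import deque

INF = float("inf")


def bfs_topology_driven(adj, source):
    """Topology-driven BFS: each round sweeps all vertices until no label changes."""
    dist = {v: INF for v in adj}
    dist[source] = 0
    edges_visited = 0
    changed = True
    while changed:
        changed = False
        for u in adj:                      # every vertex is visited every round
            if dist[u] == INF:
                continue
            for v in adj[u]:
                edges_visited += 1
                if dist[u] + 1 < dist[v]:
                    dist[v] = dist[u] + 1
                    changed = True
    return dist, edges_visited


def bfs_data_driven(adj, source):
    """Data-driven BFS: only vertices whose label changed are pushed on the worklist."""
    dist = {v: INF for v in adj}
    dist[source] = 0
    edges_visited = 0
    worklist = deque([source])
    while worklist:
        u = worklist.popleft()
        for v in adj[u]:
            edges_visited += 1
            if dist[u] + 1 < dist[v]:
                dist[v] = dist[u] + 1
                worklist.append(v)         # v becomes active work
    return dist, edges_visited
```

On the four-vertex graph `{"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": []}` with source `"A"`, both functions return the same distances, but the topology-driven version visits 8 edges while the data-driven version visits 4, because the final "nothing changed" sweep is pure overhead.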

Contributions
• Experimental study of partitioning strategies for work-efficient graph analytics applications:
  • Largest publicly available web crawls, such as wdc12 (~1 TB)
  • Large KNL and Skylake clusters with up to 256 machines (~69K threads)
  • Uses the state-of-the-art graph analytics system D-Galois [PLDI’18]
• Evaluates various kinds of partitioning policies
• Analyzes partitioning policies using an analytical model and micro-benchmarking
• Presents a decision tree for selecting the best partitioning policy

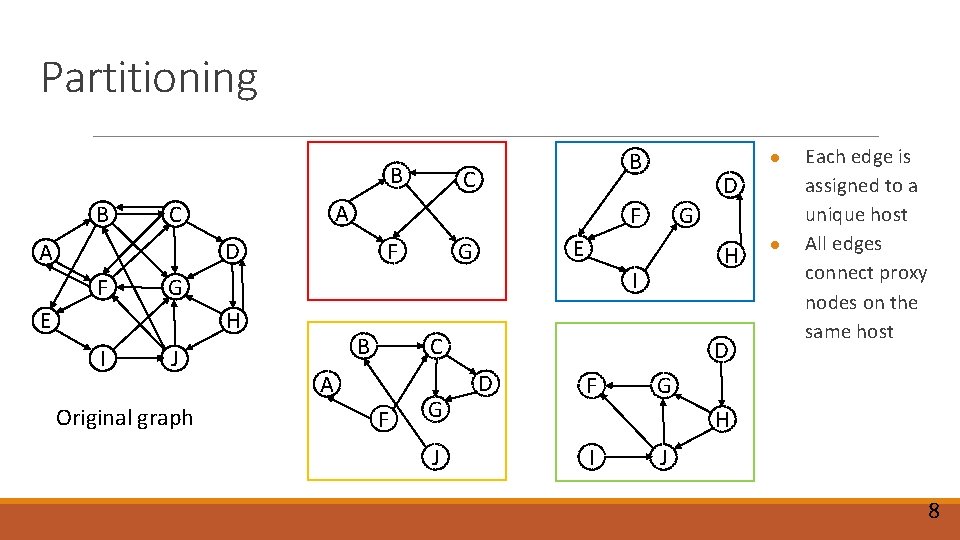

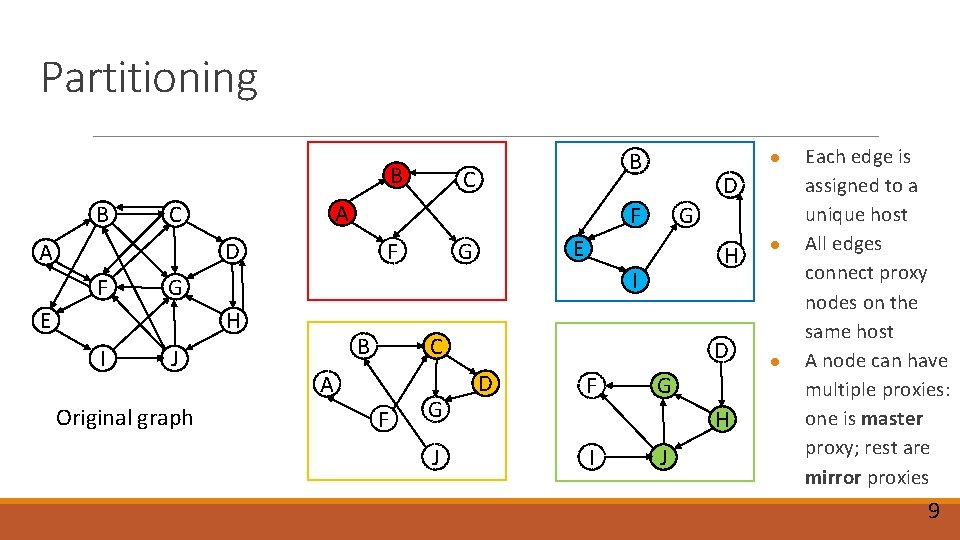

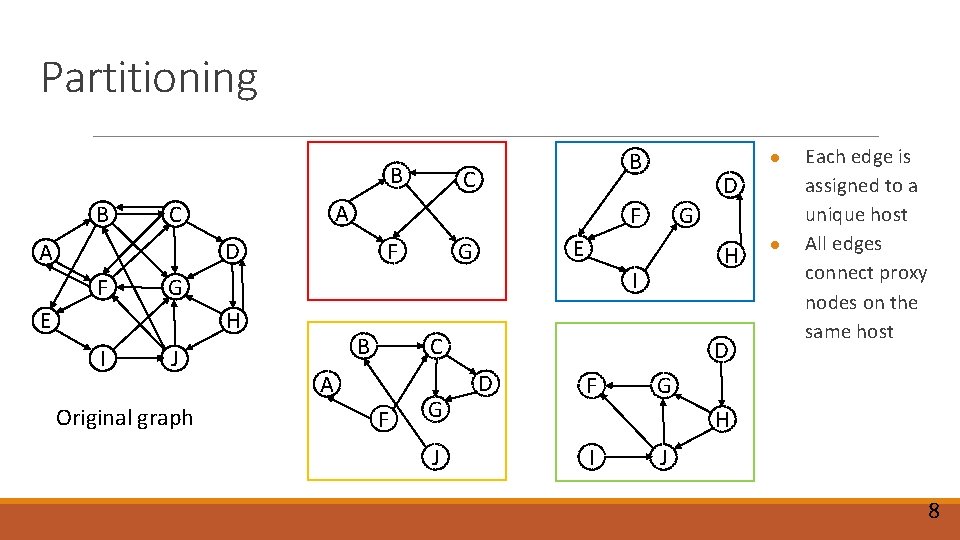

Partitioning
[Figure: original graph with vertices A through J]

Partitioning
● Each edge is assigned to a unique host
[Figure: original graph with vertices A through J]

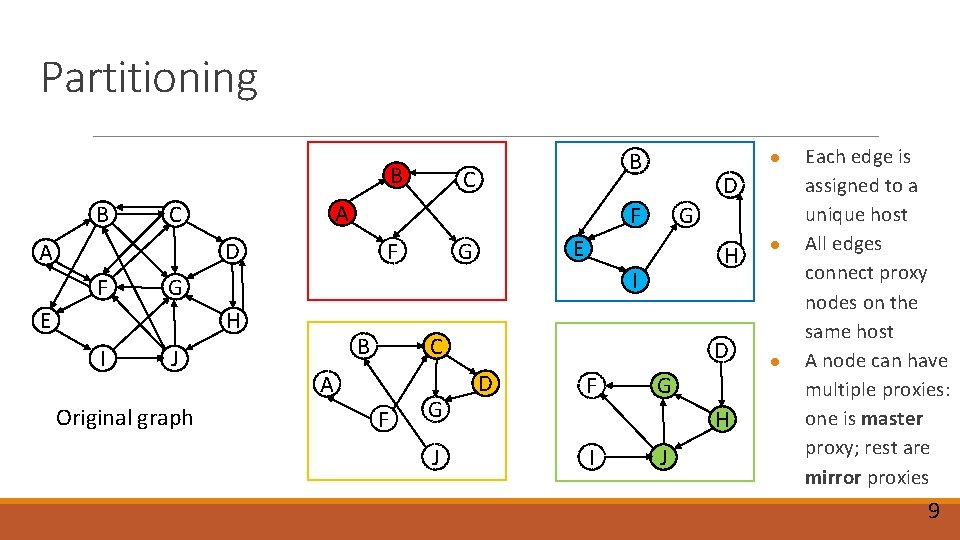

Partitioning
● Each edge is assigned to a unique host
● All edges connect proxy nodes on the same host
[Figure: original graph and its edges distributed across hosts, with proxy nodes on each host]

Partitioning
● Each edge is assigned to a unique host
● All edges connect proxy nodes on the same host
● A node can have multiple proxies: one is the master proxy; the rest are mirror proxies
[Figure: original graph and its edges distributed across hosts, with master and mirror proxies]
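The proxy bookkeeping described above can be sketched in a few lines of Python. This is a hypothetical illustration, not CuSP code: it takes an edge-to-host assignment, creates a proxy on a host for each endpoint of that host's edges, picks one master per vertex (here, by assumption, the lowest-numbered host holding a proxy; real policies choose masters differently), and computes the replication factor, i.e. the average number of proxies per vertex.

```python
from collections import defaultdict


def build_proxies(edge_to_host):
    """Return host -> set of vertices that have a proxy on that host.

    A host gets a proxy for both endpoints of every edge assigned to it,
    so all of its edges connect local proxy nodes.
    """
    proxies = defaultdict(set)
    for (src, dst), host in edge_to_host.items():
        proxies[host].update((src, dst))
    return proxies


def choose_masters(proxies):
    """Pick one master proxy per vertex; every other proxy is a mirror.

    Assumption for illustration: the master lives on the lowest-numbered
    host that holds a proxy of the vertex.
    """
    master = {}
    for host in sorted(proxies):
        for v in proxies[host]:
            master.setdefault(v, host)
    mirrors = {host: {v for v in vs if master[v] != host}
               for host, vs in proxies.items()}
    return master, mirrors


def replication_factor(proxies):
    """Average number of proxies per vertex (1.0 means no replication)."""
    total_proxies = sum(len(vs) for vs in proxies.values())
    num_vertices = len(set().union(*proxies.values()))
    return total_proxies / num_vertices
```

For example, assigning edges `A→B` and `A→D` to host 0 and `B→C` and `C→D` to host 1 creates proxies `{A, B, D}` on host 0 and `{B, C, D}` on host 1; B and D get mirrors on host 1, and the replication factor is 6 / 4 = 1.5.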

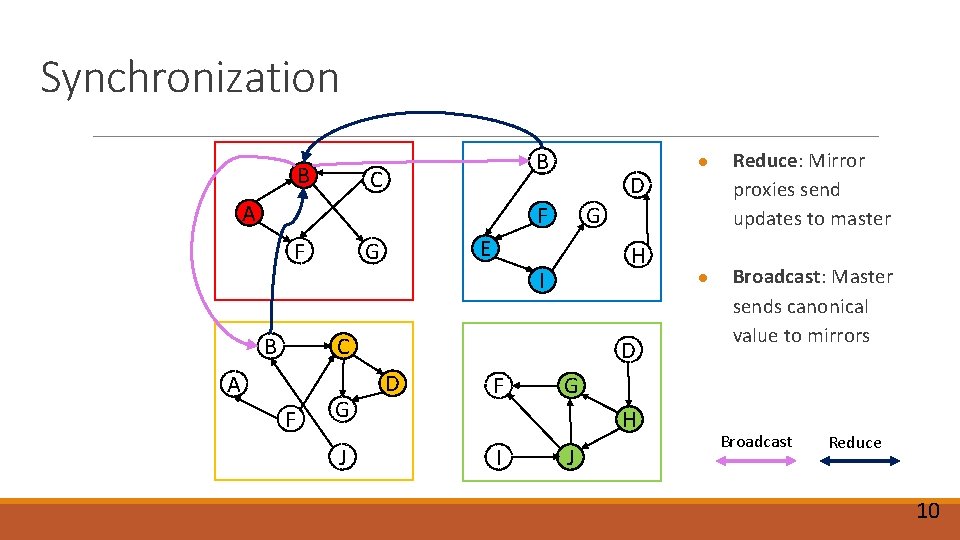

Synchronization
● Broadcast: the master sends the canonical value to its mirrors
● Reduce: mirror proxies send updates to the master
[Figure: broadcast and reduce between master and mirror proxies on different hosts]
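One reduce-then-broadcast round can be simulated in a single process. The sketch below is an assumption-laden illustration (the function name and data layout are ours, not Gluon's API). It uses `min` as the reduction, which suits labels such as bfs or sssp distances: mirrors first send their values to the master, which combines them, and the master then sends the canonical value back to every mirror.

```python
def synchronize(local_values, master, reduce_op=min):
    """Simulate one reduce + broadcast round for a single vertex field.

    local_values: host -> {vertex: value} for every proxy on that host.
    master:       vertex -> host holding the vertex's master proxy.
    Updates local_values in place and returns it.
    """
    # Reduce: every mirror sends its value to the master, which combines them.
    for host, values in local_values.items():
        for v, val in values.items():
            m = master[v]
            if m != host:
                local_values[m][v] = reduce_op(local_values[m][v], val)
    # Broadcast: the master's canonical value overwrites every proxy's value.
    for host, values in local_values.items():
        for v in values:
            values[v] = local_values[master[v]][v]
    return local_values
```

With `local_values = {0: {"B": 5, "D": 3}, 1: {"B": 2, "D": 7}}` and masters `{"B": 0, "D": 1}`, both hosts end with `{"B": 2, "D": 3}`.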

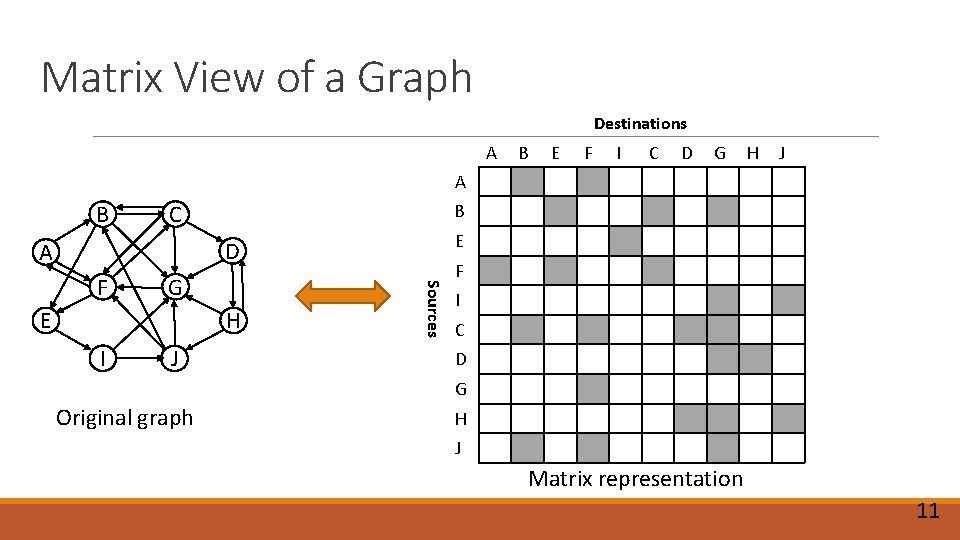

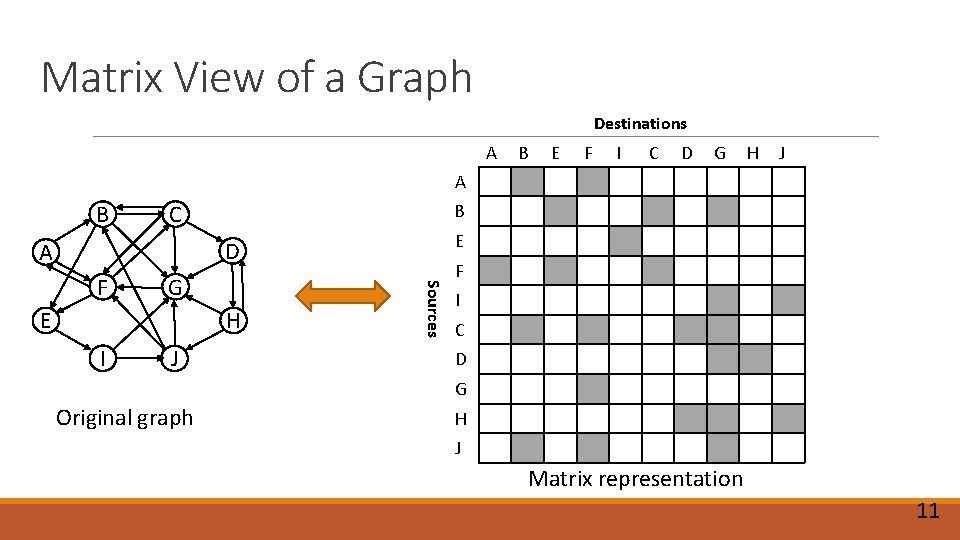

Matrix View of a Graph
[Figure: original graph and its matrix representation, with sources as rows and destinations as columns]
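The matrix view maps each edge (source, destination) to a nonzero entry in row "source" and column "destination". A minimal Python sketch (a dense matrix for illustration only; real systems store sparse formats) follows; the order of `vertices` decides the row and column order, which is why a slide can list the vertices in a different order than their names.

```python
def adjacency_matrix(vertices, edges):
    """Dense 0/1 adjacency matrix: row = source, column = destination."""
    index = {v: i for i, v in enumerate(vertices)}
    n = len(vertices)
    matrix = [[0] * n for _ in range(n)]
    for src, dst in edges:
        matrix[index[src]][index[dst]] = 1
    return matrix
```

For the cycle A→B→C→A, `adjacency_matrix(["A", "B", "C"], [("A", "B"), ("B", "C"), ("C", "A")])` gives `[[0, 1, 0], [0, 0, 1], [1, 0, 0]]`.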

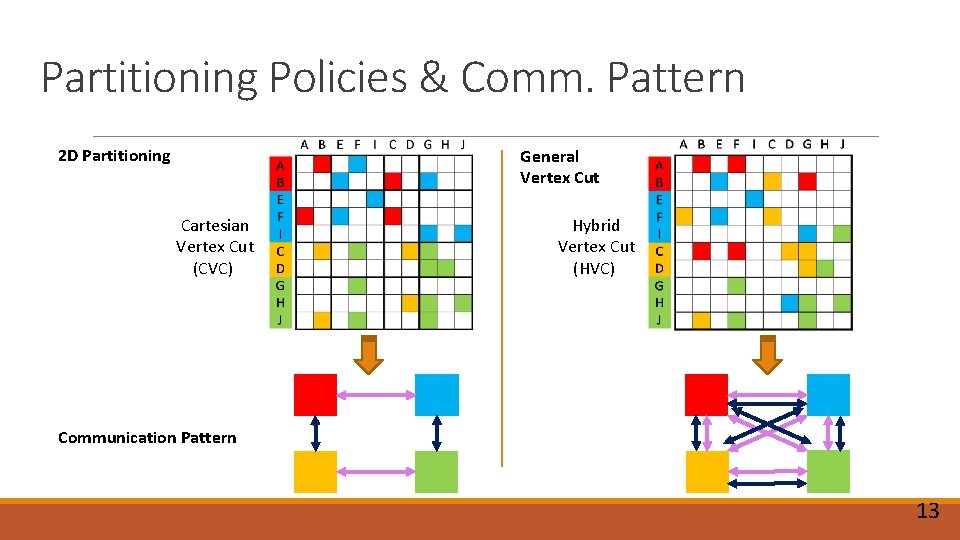

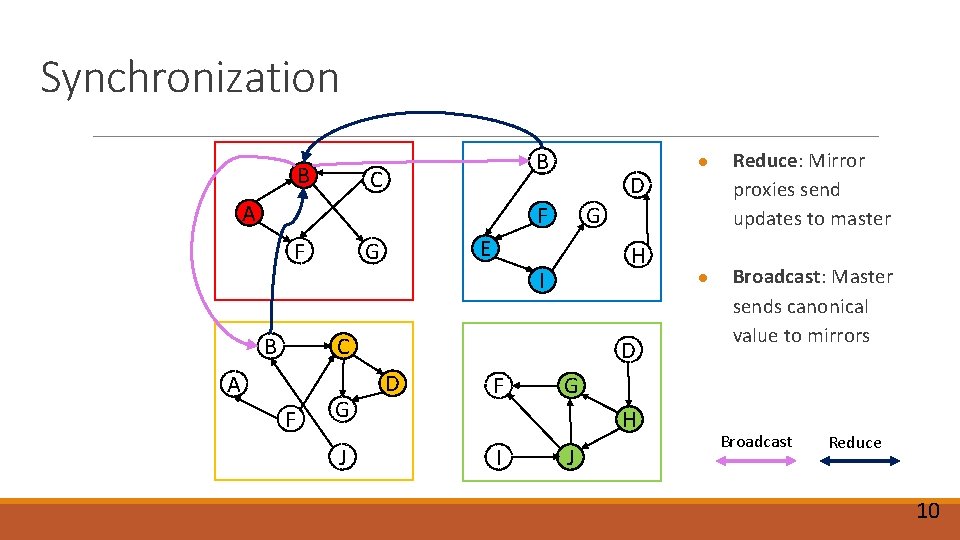

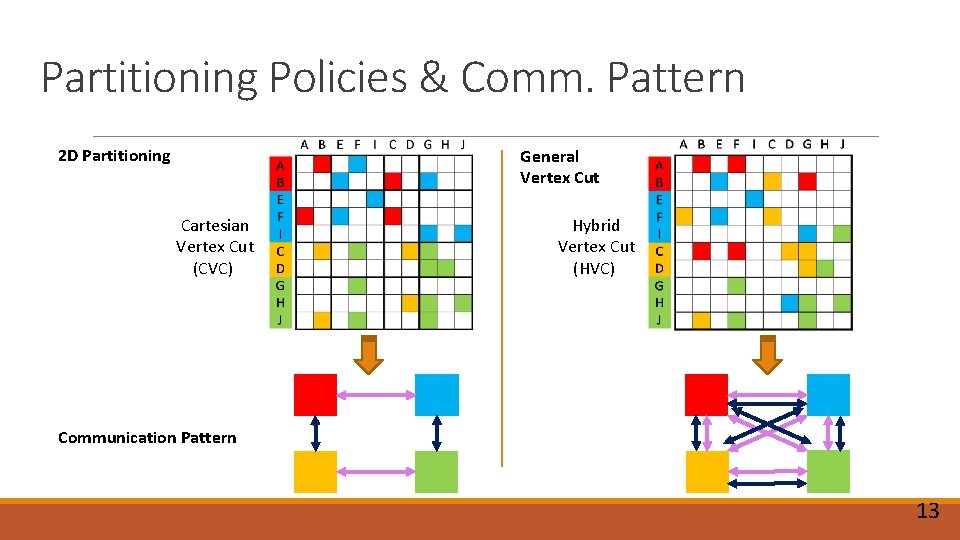

Partitioning Policies & Communication Pattern
● 1D partitioning: Incoming Edge Cut (IEC), Outgoing Edge Cut (OEC)
[Figure: how each policy partitions the adjacency matrix, and the resulting communication pattern]
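In the matrix view, the two 1D policies differ only in which endpoint decides where an edge goes. The Python sketch below assumes, for illustration, that vertex IDs 0..n−1 are split into contiguous equal-sized blocks, one per host; actual partitioners may balance blocks differently. Under OEC each host holds a block of rows (all out-edges of its vertices); under IEC it holds a block of columns (all in-edges).

```python
def block_owner(v, num_vertices, num_blocks):
    """Block index of vertex v when IDs 0..num_vertices-1 are cut into contiguous ranges."""
    size = -(-num_vertices // num_blocks)   # ceiling division
    return v // size


def oec_host(src, dst, num_vertices, num_hosts):
    """Outgoing Edge Cut: the edge goes to the host owning its source (a row block)."""
    return block_owner(src, num_vertices, num_hosts)


def iec_host(src, dst, num_vertices, num_hosts):
    """Incoming Edge Cut: the edge goes to the host owning its destination (a column block)."""
    return block_owner(dst, num_vertices, num_hosts)
```

With 8 vertices on 2 hosts, edge 1→6 lands on host 0 under OEC and on host 1 under IEC. Under OEC, a proxy of a vertex on a non-owning host can only appear as the destination of some edge.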

Partitioning Policies & Communication Pattern
● 2D partitioning: Cartesian Vertex Cut (CVC)
● General vertex cut: Hybrid Vertex Cut (HVC)
[Figure: how each policy partitions the adjacency matrix, and the resulting communication pattern]
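The two vertex-cut policies can also be expressed as edge-to-host rules. The Python sketch below is hypothetical: the CVC rule is a generic 2D (checkerboard) blocking over a `rows × cols` host grid, and the HVC rule follows a PowerLyra-style hybrid cut with an illustrative `threshold` parameter. CuSP's exact mappings may differ.

```python
def block_owner(v, num_vertices, num_blocks):
    """Block index of vertex v when IDs 0..num_vertices-1 are cut into contiguous ranges."""
    size = -(-num_vertices // num_blocks)   # ceiling division
    return v // size


def cvc_host(src, dst, num_vertices, rows, cols):
    """2D Cartesian cut: hosts form a rows x cols grid.

    The source selects the grid row and the destination selects the grid column,
    so each vertex's proxies stay within one grid row and one grid column.
    """
    r = block_owner(src, num_vertices, rows)
    c = block_owner(dst, num_vertices, cols)
    return r * cols + c


def hvc_host(src, dst, in_degree, threshold, num_vertices, num_hosts):
    """Hybrid cut (assumed PowerLyra-style rule): incoming edges of low in-degree
    vertices stay with the destination's host; edges into high in-degree
    vertices are spread out by following the source."""
    owner = dst if in_degree[dst] <= threshold else src
    return block_owner(owner, num_vertices, num_hosts)
```

With 8 vertices on a 2 × 2 grid, every out-edge of vertex 1 lands on hosts 0 or 1 (grid row 0), and every in-edge of vertex 6 lands on hosts 1 or 3 (grid column 1). A vertex therefore synchronizes with at most `rows + cols − 1` hosts, instead of potentially all of them.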

![Implementation: CuSP partitioner and D-Galois processing system](https://slidetodoc.com/presentation_image_h/09e207ded86e3f9d9c3c0bb71af1ec1f/image-14.jpg)

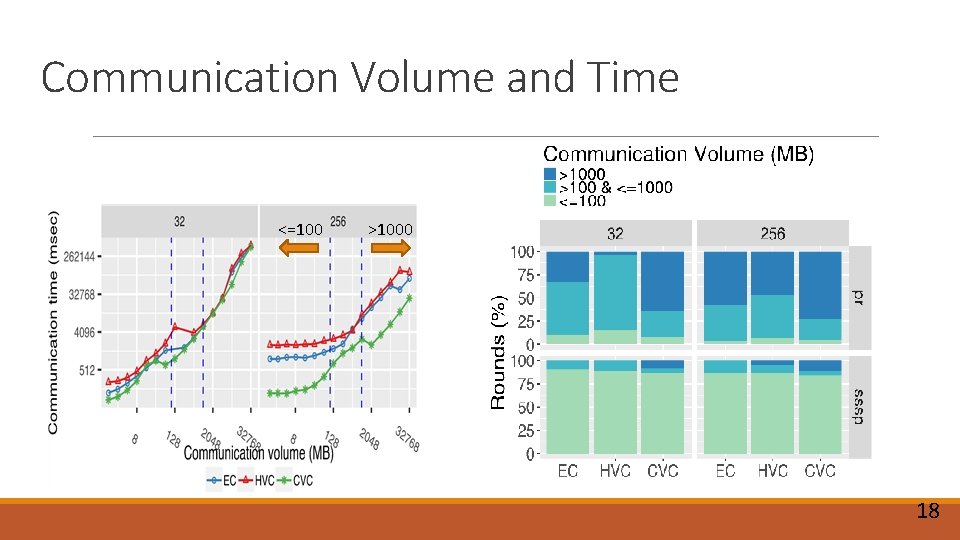

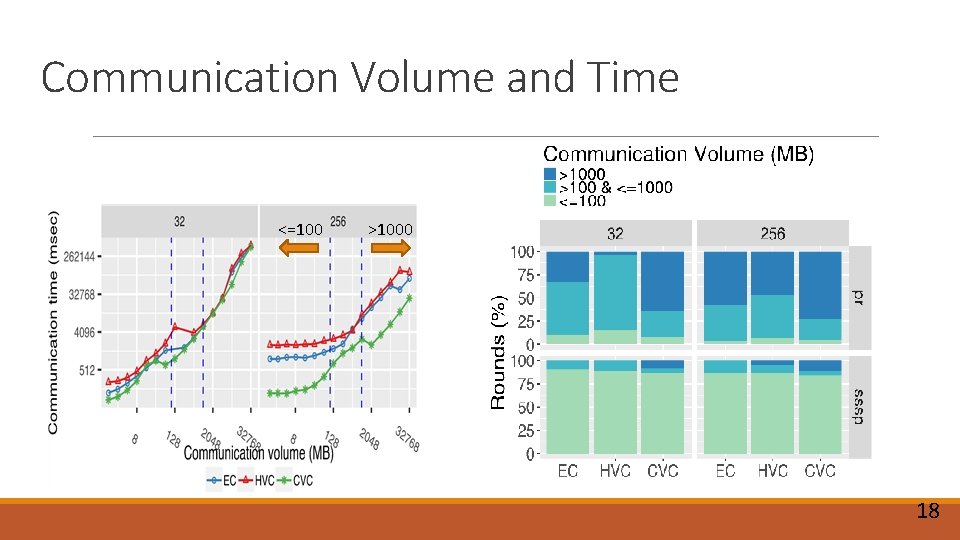

Implementation:
● Partitioning: state-of-the-art graph partitioner CuSP [IPDPS’19]
● Processing: state-of-the-art distributed graph analytics system D-Galois [PLDI’18]
  ○ Bulk synchronous parallel (BSP) execution
  ○ Uses Gluon [PLDI’18] as the communication substrate:
    ■ Uses a partition-specific communication pattern
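To make bulk-synchronous execution concrete, here is a small single-process simulation of BSP rounds for data-driven BFS over an outgoing-edge-cut partition. In each round, every host relaxes the out-edges of its local frontier (compute phase). At the barrier, candidate distances are reduced with `min` at each vertex's master (communication phase), and a global check ends the run when no host has work. The function name and data layout are hypothetical; D-Galois and Gluon implement this with real message passing and many optimizations.

```python
def bsp_bfs(owned_edges, owner, source):
    """Simulated BSP BFS over an outgoing edge cut.

    owned_edges: host -> {vertex: [out-neighbors]} for vertices mastered on that host
                 (every vertex must appear, even with no out-edges).
    owner:       vertex -> host holding the vertex's master proxy.
    Returns (distances, number of BSP rounds).
    """
    hosts = list(owned_edges)
    dist = {h: {v: float("inf") for v in owned_edges[h]} for h in hosts}
    dist[owner[source]][source] = 0
    frontier = {h: set() for h in hosts}
    frontier[owner[source]].add(source)
    rounds = 0
    while any(frontier[h] for h in hosts):        # global termination check
        rounds += 1
        outbox = {h: {} for h in hosts}            # master host -> {vertex: candidate}
        # Compute phase: each host works only on its own frontier.
        for h in hosts:
            for u in frontier[h]:
                for v in owned_edges[h][u]:
                    cand = dist[h][u] + 1
                    box = outbox[owner[v]]
                    box[v] = min(box.get(v, cand), cand)
        # Communication phase (after the barrier): masters apply reduced candidates.
        next_frontier = {h: set() for h in hosts}
        for h in hosts:
            for v, cand in outbox[h].items():
                if cand < dist[h][v]:
                    dist[h][v] = cand
                    next_frontier[h].add(v)
        frontier = next_frontier
    merged = {v: d for h in hosts for v, d in dist[h].items()}
    return merged, rounds
```

For the graph 0→1, 0→2, 1→3, 2→3, with vertices 0 and 1 mastered on host 0 and vertices 2 and 3 on host 1, `bsp_bfs` returns distances `{0: 0, 1: 1, 2: 1, 3: 2}` after 3 rounds. The last round finds no new work and ends the run.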

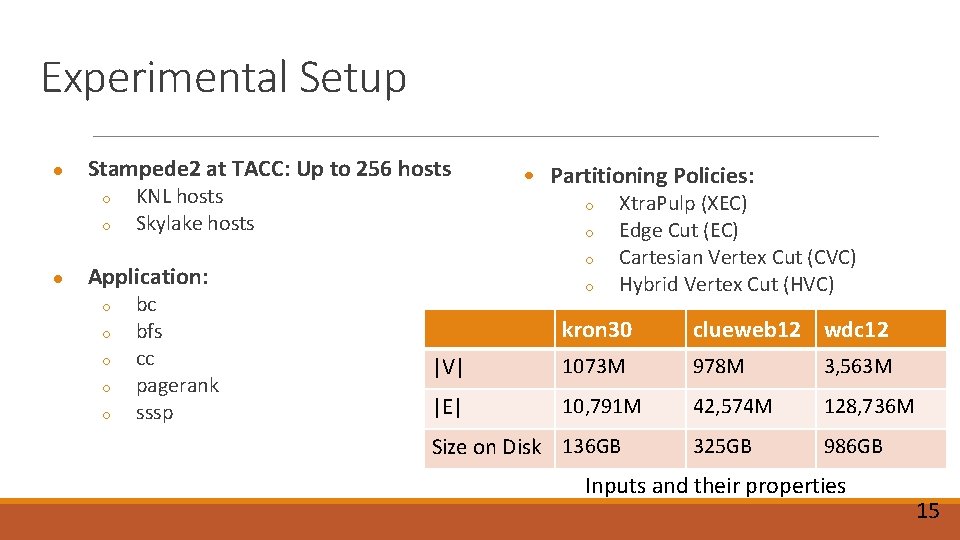

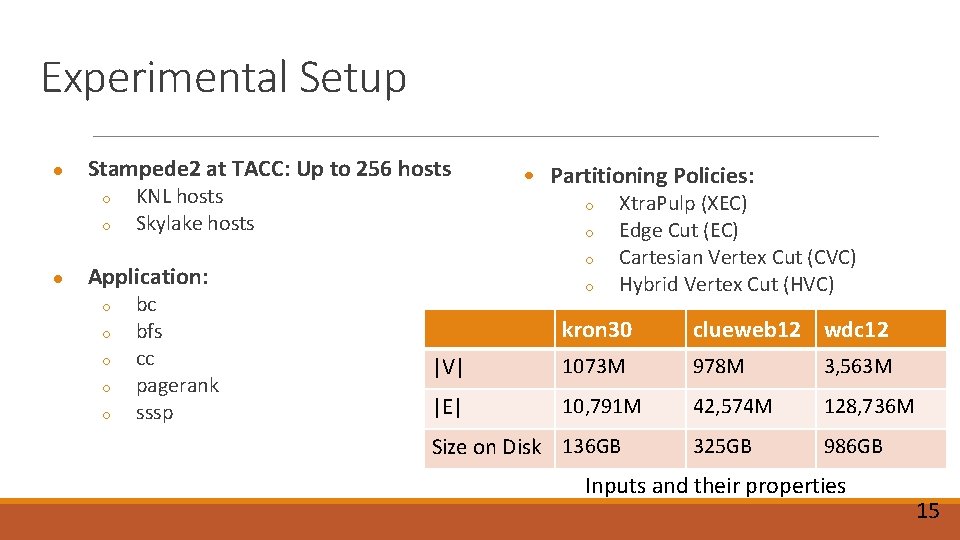

Experimental Setup
● Stampede2 at TACC: up to 256 hosts
  ○ KNL hosts
  ○ Skylake hosts
● Applications:
  ○ bc
  ○ bfs
  ○ cc
  ○ pagerank
  ○ sssp
● Partitioning policies:
  ○ XtraPulp (XEC)
  ○ Edge Cut (EC)
  ○ Cartesian Vertex Cut (CVC)
  ○ Hybrid Vertex Cut (HVC)

Inputs and their properties:

| | kron30 | clueweb12 | wdc12 |
|---|---|---|---|
| Vertices | 1,073 M | 978 M | 3,563 M |
| Edges | 10,791 M | 42,574 M | 128,736 M |
| Size on disk | 136 GB | 325 GB | 986 GB |
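The vertex and edge counts in the "Inputs and their properties" table give a quick structural statistic. The Python snippet below is a simple illustration, not part of the study. It computes the average degree |E|/|V| for each input and shows that the two web crawls are several times denser than kron30.

```python
# Vertex and edge counts (in millions) from the inputs table.
INPUTS = {
    "kron30": (1073, 10791),
    "clueweb12": (978, 42574),
    "wdc12": (3563, 128736),
}


def average_degree(num_vertices, num_edges):
    """Average out-degree |E| / |V| (both counts in the same units)."""
    return num_edges / num_vertices


for name, (v, e) in INPUTS.items():
    print(f"{name}: average degree {average_degree(v, e):.1f}")
# kron30: average degree 10.1
# clueweb12: average degree 43.5
# wdc12: average degree 36.1
```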

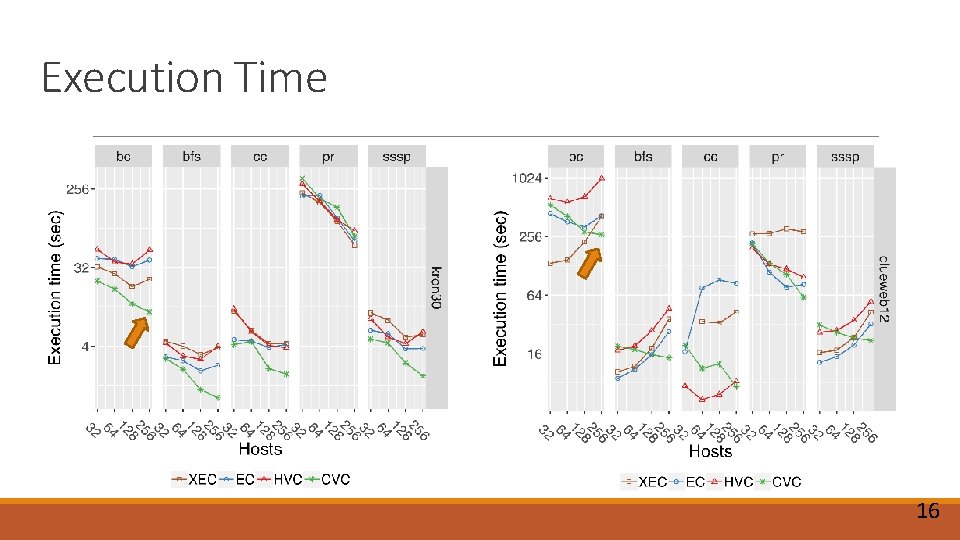

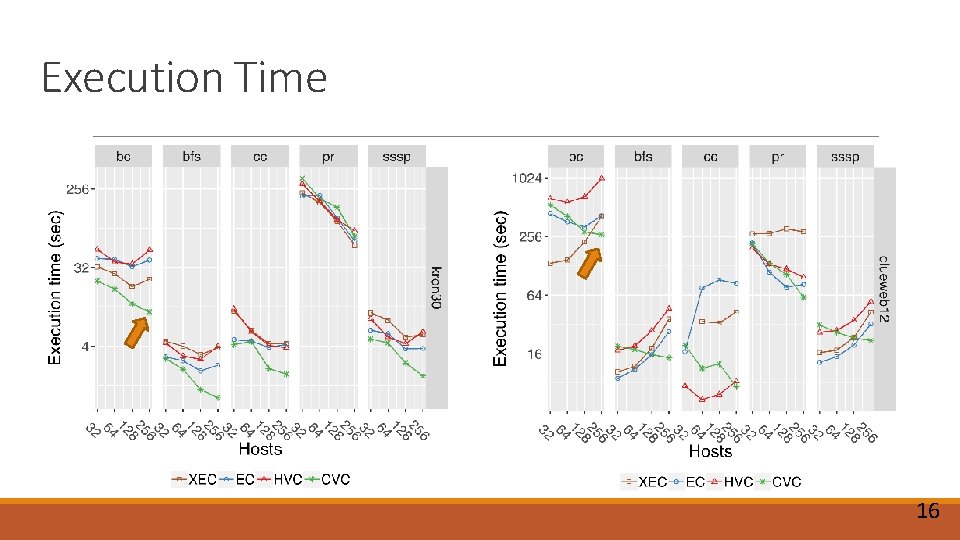

Execution Time

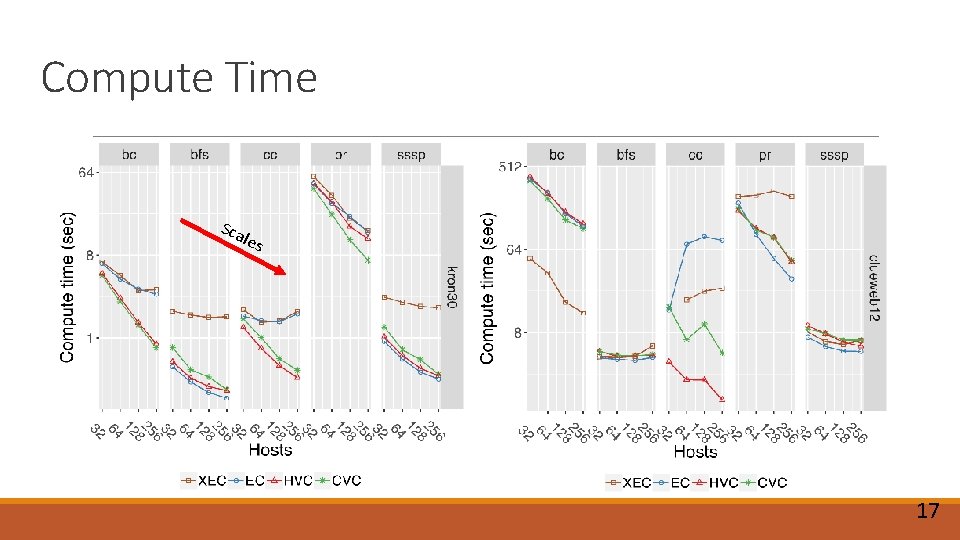

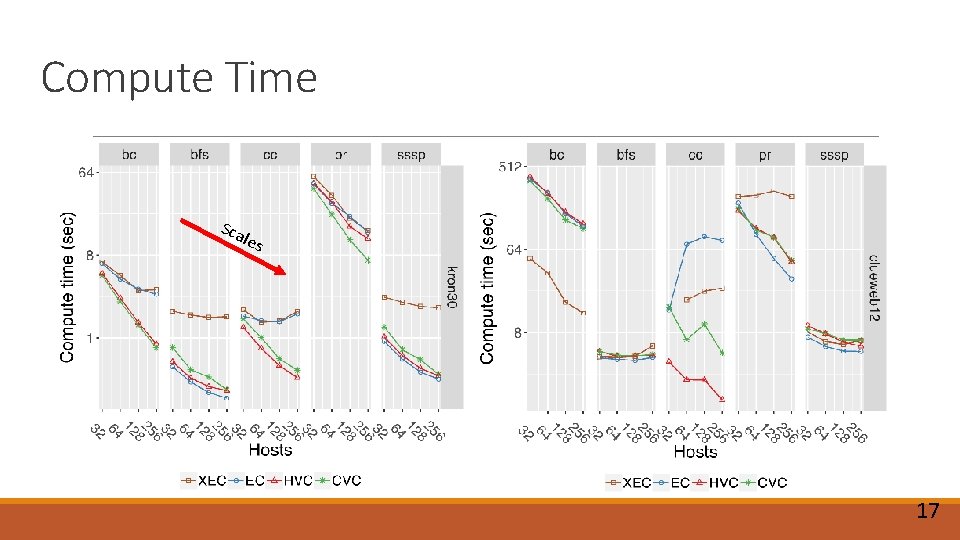

Compute Time Scales

Communication Volume and Time
[Figure: communication volume and communication time for each partitioning policy]

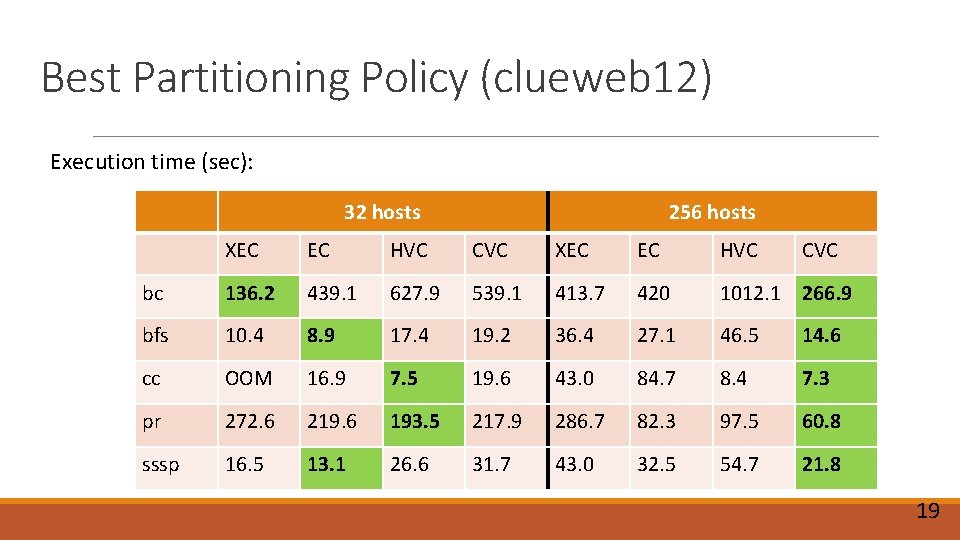

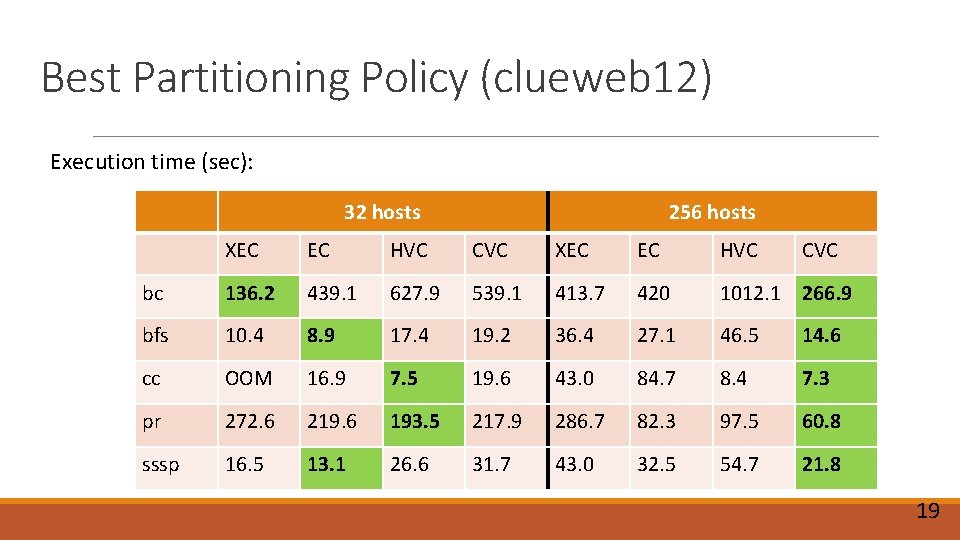

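Best Partitioning Policy (clueweb12)

Execution time (sec); the number in parentheses is the host count:

| App | XEC (32) | EC (32) | HVC (32) | CVC (32) | XEC (256) | EC (256) | HVC (256) | CVC (256) |
|---|---|---|---|---|---|---|---|---|
| bc | 136.2 | 439.1 | 627.9 | 539.1 | 413.7 | 420 | 1012.1 | 266.9 |
| bfs | 10.4 | 8.9 | 17.4 | 19.2 | 36.4 | 27.1 | 46.5 | 14.6 |
| cc | OOM | 16.9 | 7.5 | 19.6 | 43.0 | 84.7 | 8.4 | 7.3 |
| pr | 272.6 | 219.6 | 193.5 | 217.9 | 286.7 | 82.3 | 97.5 | 60.8 |
| sssp | 16.5 | 13.1 | 26.6 | 31.7 | 43.0 | 32.5 | 54.7 | 21.8 |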
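Picking the winner for each application and scale from these clueweb12 times is a simple argmin. The Python sketch below copies the slide's numbers (policy order XEC, EC, HVC, CVC; `None` marks the OOM run) and skips out-of-memory entries.

```python
POLICIES = ["XEC", "EC", "HVC", "CVC"]

# clueweb12 execution times (sec) from the slide; None marks an OOM run.
TIMES = {
    32: {
        "bc": [136.2, 439.1, 627.9, 539.1],
        "bfs": [10.4, 8.9, 17.4, 19.2],
        "cc": [None, 16.9, 7.5, 19.6],
        "pr": [272.6, 219.6, 193.5, 217.9],
        "sssp": [16.5, 13.1, 26.6, 31.7],
    },
    256: {
        "bc": [413.7, 420.0, 1012.1, 266.9],
        "bfs": [36.4, 27.1, 46.5, 14.6],
        "cc": [43.0, 84.7, 8.4, 7.3],
        "pr": [286.7, 82.3, 97.5, 60.8],
        "sssp": [43.0, 32.5, 54.7, 21.8],
    },
}


def best_policy(times):
    """Return the policy with the lowest execution time, ignoring OOM runs."""
    candidates = [(t, p) for p, t in zip(POLICIES, times) if t is not None]
    return min(candidates)[1]


for hosts, apps in TIMES.items():
    print(hosts, {app: best_policy(t) for app, t in apps.items()})
# 32 {'bc': 'XEC', 'bfs': 'EC', 'cc': 'HVC', 'pr': 'HVC', 'sssp': 'EC'}
# 256 {'bc': 'CVC', 'bfs': 'CVC', 'cc': 'CVC', 'pr': 'CVC', 'sssp': 'CVC'}
```

The output shows that the winner varies by application at 32 hosts, while CVC is fastest for every application at 256 hosts.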

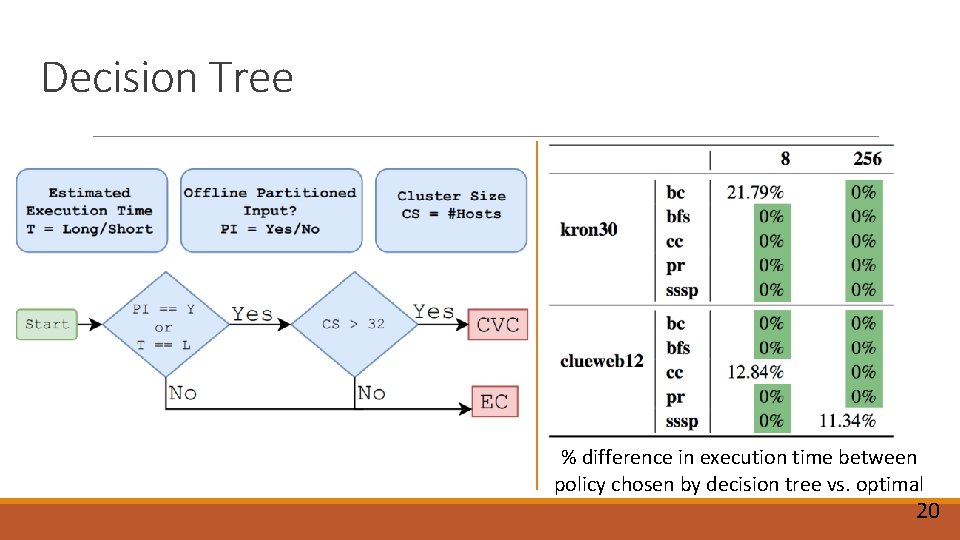

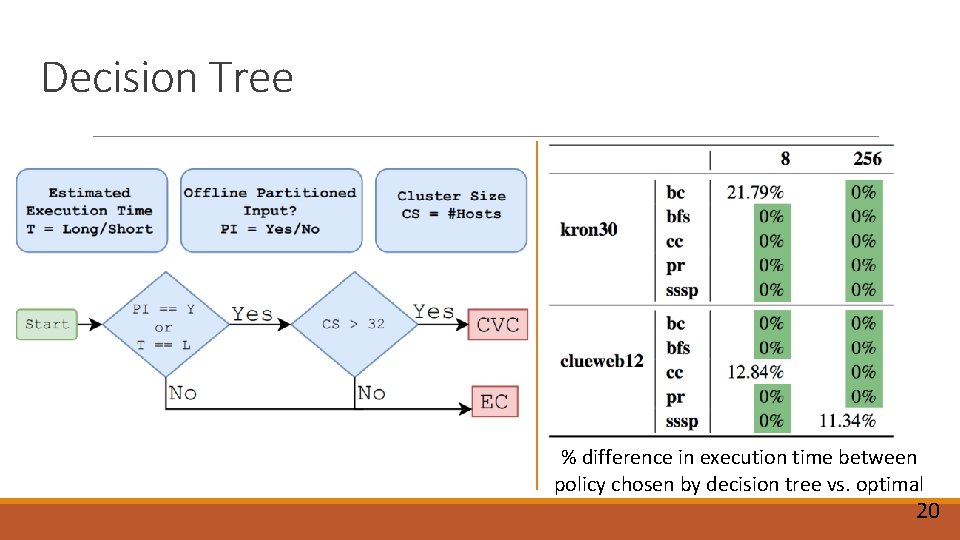

Decision Tree
[Figure: % difference in execution time between the policy chosen by the decision tree and the optimal policy]
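The comparison metric can be written as a one-line formula. The Python sketch below assumes the difference is measured relative to the optimal policy's time (the slide does not spell out the denominator). For illustration, it evaluates a hypothetical choice of EC for clueweb12 pagerank on 32 hosts, where HVC is fastest.

```python
def percent_difference(chosen_time, optimal_time):
    """Slowdown of the chosen policy relative to the optimal one, in percent.

    Assumption: the optimal policy's execution time is the baseline.
    """
    return (chosen_time - optimal_time) / optimal_time * 100.0


# Hypothetical choice: EC (219.6 s) when HVC (193.5 s) is optimal.
print(f"{percent_difference(219.6, 193.5):.1f}%")  # 13.5%
```

A value of 0% means the chosen policy was the optimal one.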

Key Lessons
● The best-performing policy depends on:
  ○ Application
  ○ Input
  ○ Number of hosts (scale)
● EC performs well at small scale, but CVC wins at large scale
● Graph analytics systems must support:
  ○ Various partitioning policies
  ○ Partition-specific communication patterns

Source Code
● Frameworks used for the study:
  ○ Graph partitioner: CuSP [IPDPS’19]
  ○ Communication substrate: Gluon [PLDI’18]
  ○ Distributed graph analytics framework: D-Galois [PLDI’18]
https://github.com/IntelligentSoftwareSystems/Galois
~ Thank you ~


![Partitioning Time, CuSP (IPDPS’19)](https://slidetodoc.com/presentation_image_h/09e207ded86e3f9d9c3c0bb71af1ec1f/image-24.jpg)

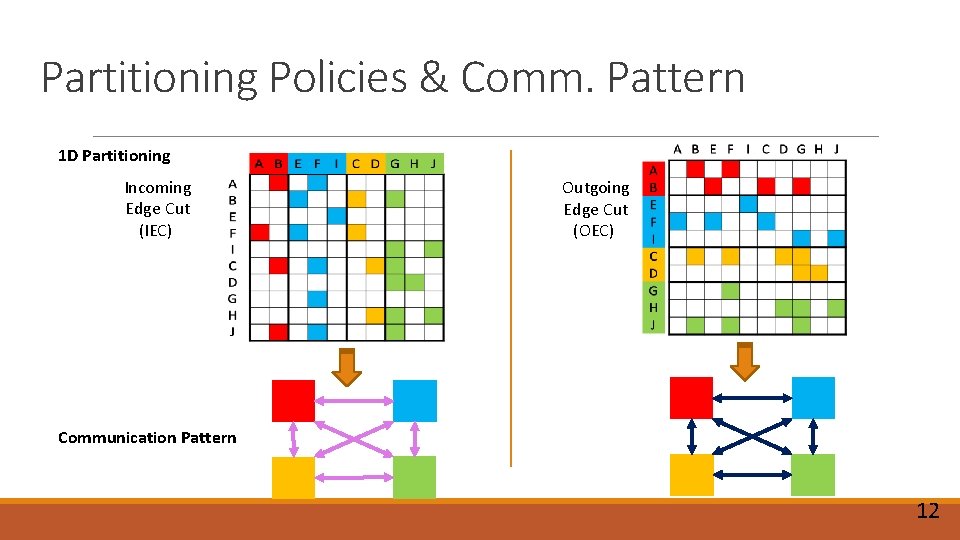

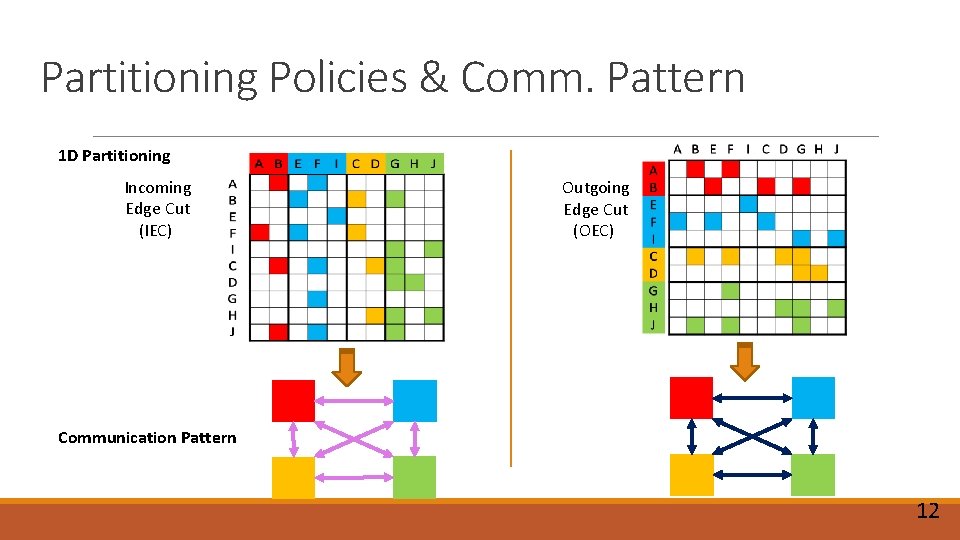

Partitioning Time: CuSP [IPDPS’19]

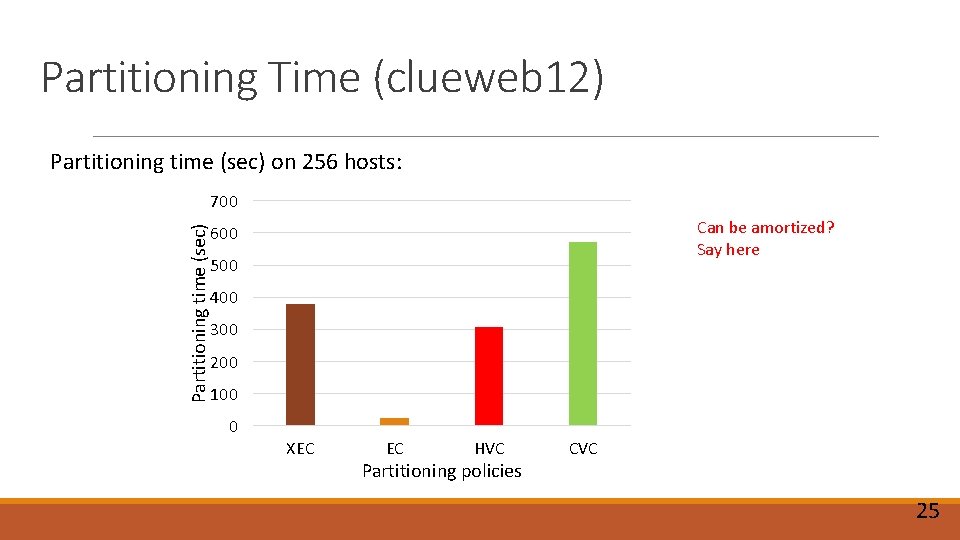

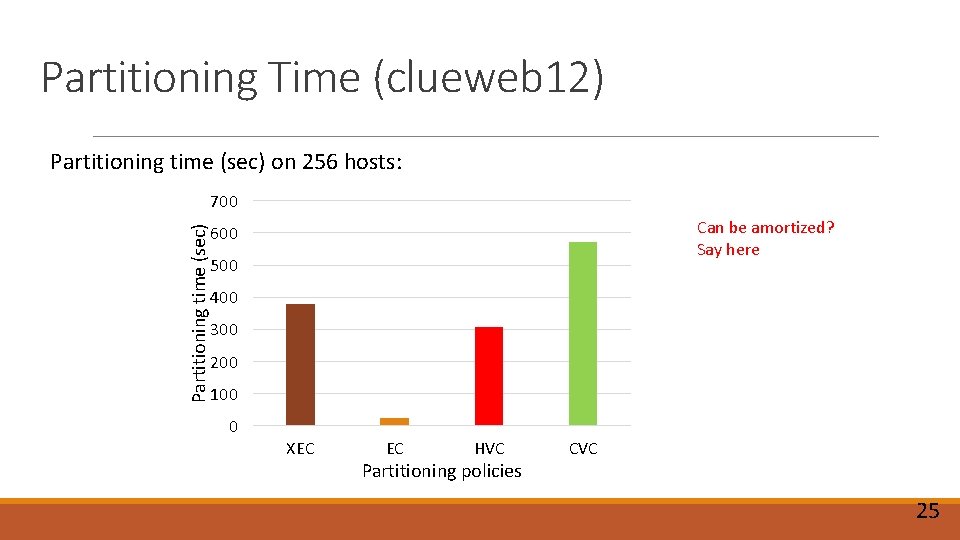

Partitioning Time (clueweb12)
[Figure: partitioning time (sec) on 256 hosts for the XEC, EC, HVC, and CVC partitioning policies]

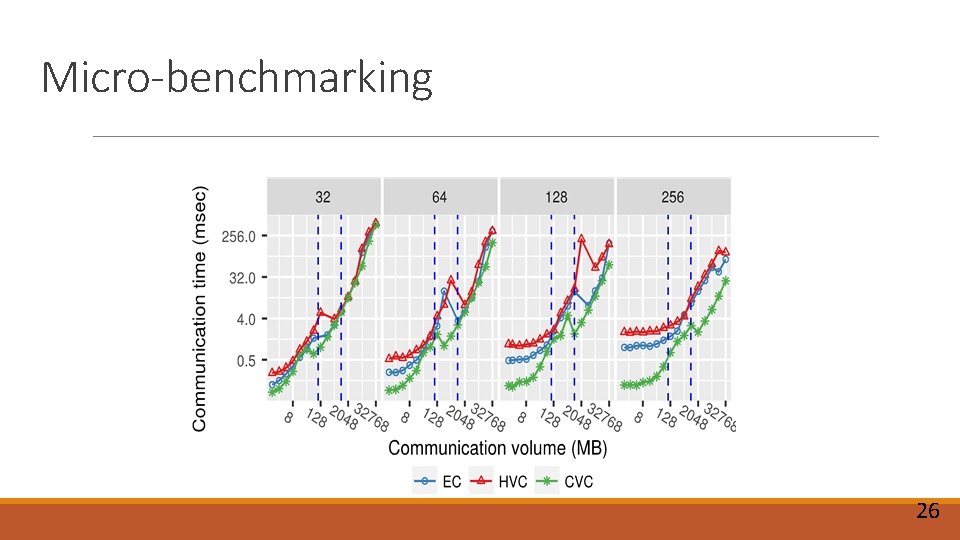

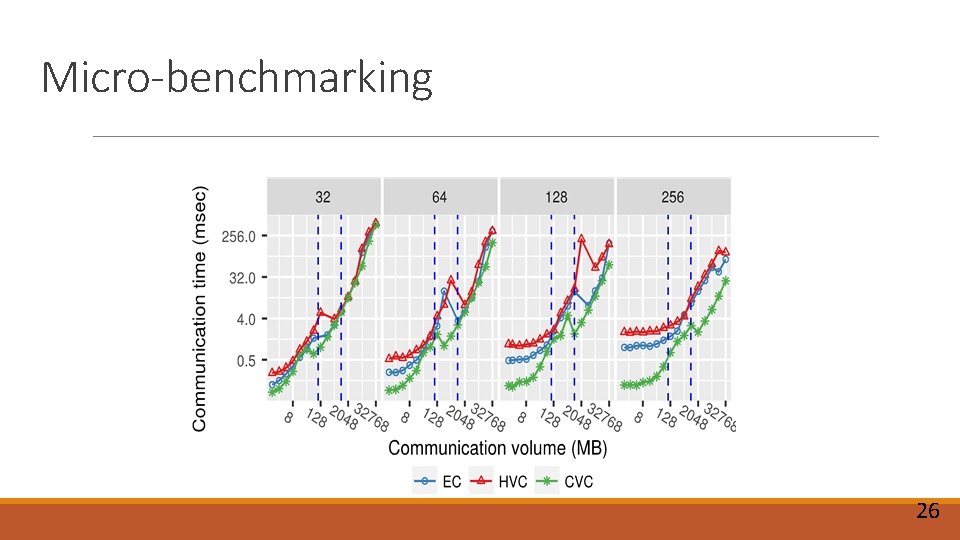

Micro-benchmarking