A Standard for Shared Memory Parallel Programming 1997


A Standard for Shared Memory Parallel Programming © 1997-98 Silicon Graphics Inc. All rights reserved.

Using OpenMP

- Using iterative worksharing constructs
- Analysis of loop level parallelism
- Reducing overhead in the loop level model
- Domain decomposition
  - Writing scalable programs in OpenMP
- Comparisons with message passing
- Performance and scalability considerations

![DO Worksharing directive n DO directive COMP DO clause clause DO Worksharing directive n DO directive: – C$OMP DO [clause[[, ] clause]… ] –](https://slidetodoc.com/presentation_image/1e9560e09a6153591a60c9ba73b4c30e/image-3.jpg)

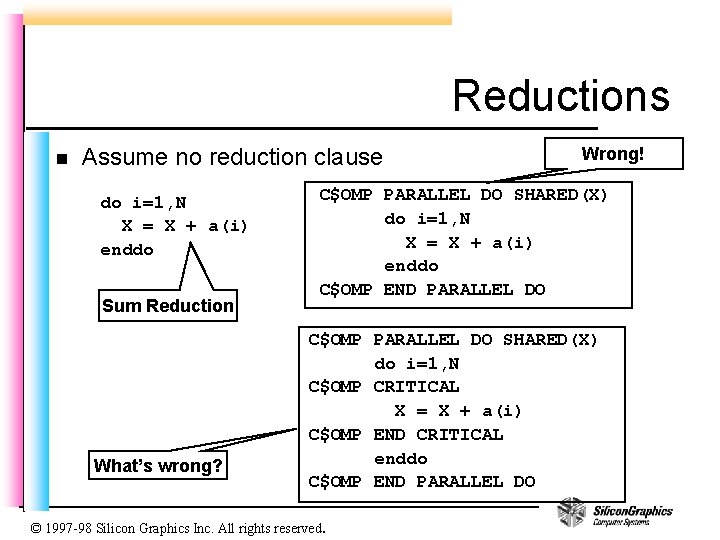

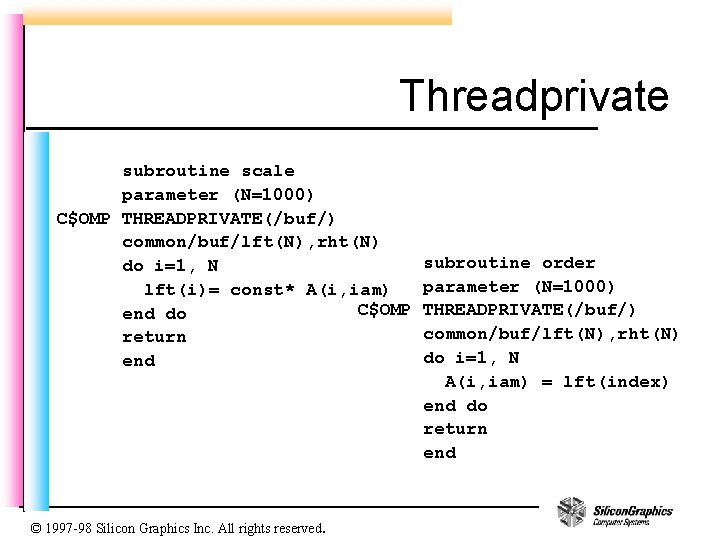

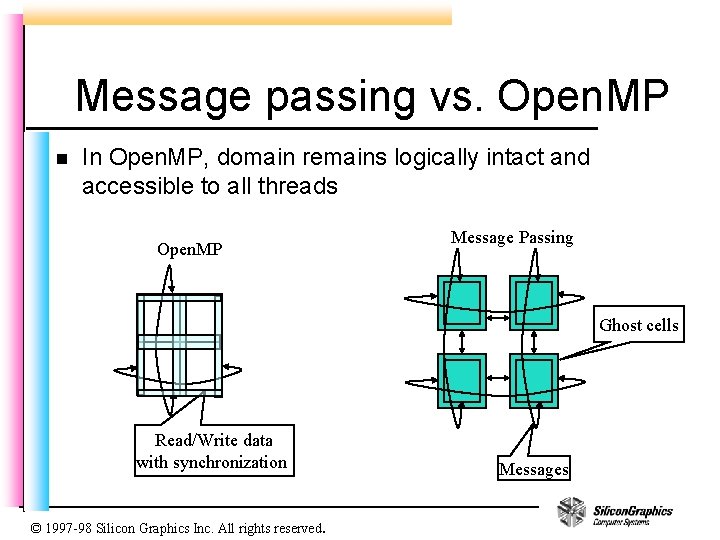

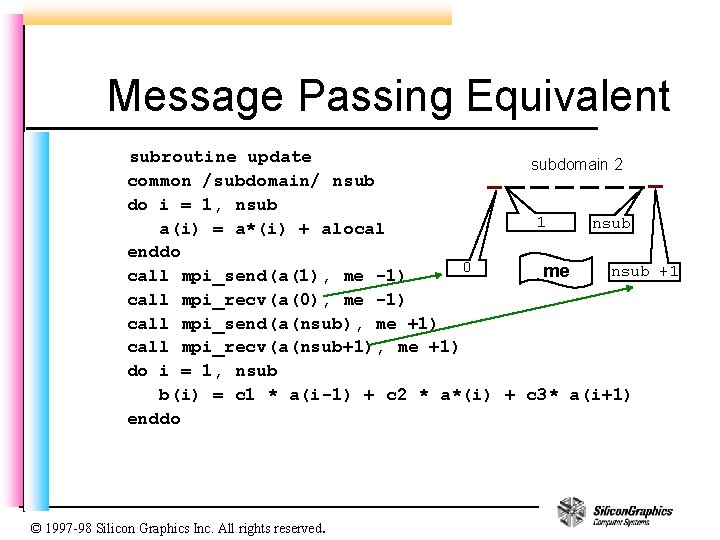

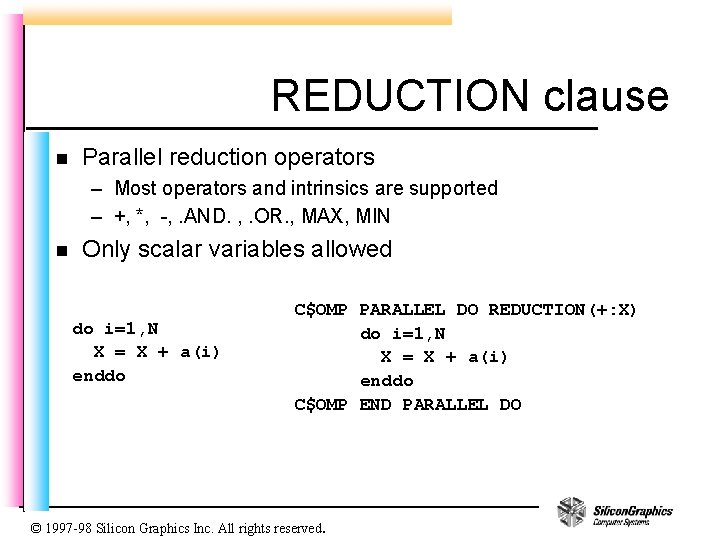

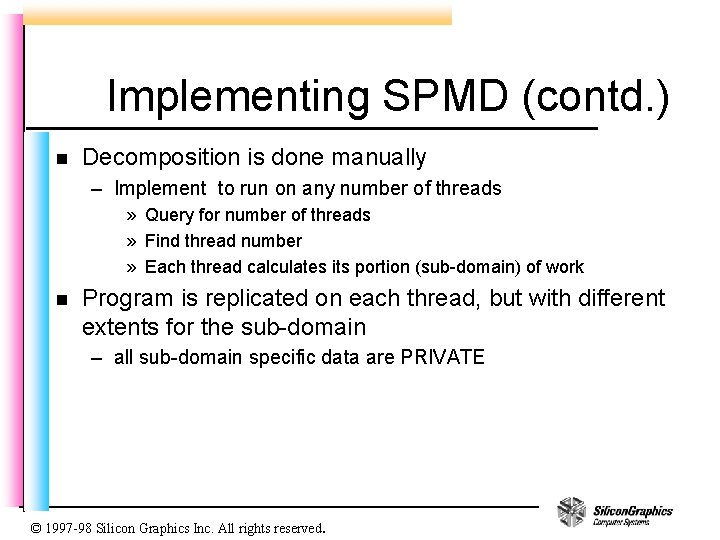

DO Worksharing directive

- DO directive:
  - `C$OMP DO [clause[[,] clause]...]`
  - `C$OMP END DO [NOWAIT]`
- Clauses:
  - PRIVATE(list)
  - FIRSTPRIVATE(list)
  - LASTPRIVATE(list)
  - REDUCTION({op|intrinsic}: list)
  - ORDERED
  - SCHEDULE(TYPE[, chunk])

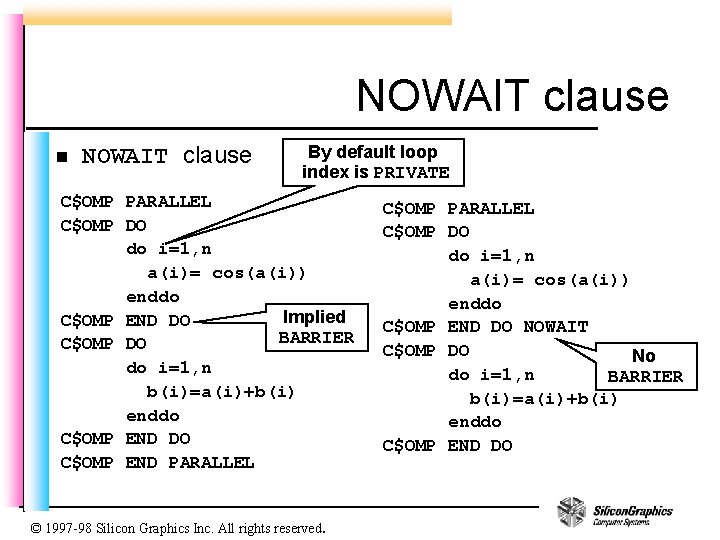

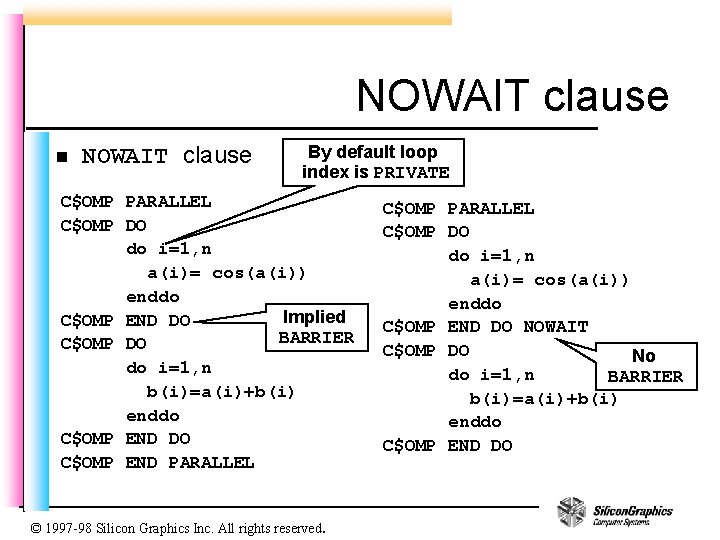

NOWAIT clause

- By default the loop index is PRIVATE
- Without NOWAIT, END DO implies a BARRIER:

```fortran
C$OMP PARALLEL
C$OMP DO
      do i=1, n
         a(i) = cos(a(i))
      enddo
C$OMP END DO
C     implied BARRIER here
C$OMP DO
      do i=1, n
         b(i) = a(i) + b(i)
      enddo
C$OMP END DO
C$OMP END PARALLEL
```

- With NOWAIT on the first END DO, there is no BARRIER between the loops:

```fortran
C$OMP PARALLEL
C$OMP DO
      do i=1, n
         a(i) = cos(a(i))
      enddo
C$OMP END DO NOWAIT
C     no BARRIER here
C$OMP DO
      do i=1, n
         b(i) = a(i) + b(i)
      enddo
C$OMP END DO
C$OMP END PARALLEL
```
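The NOWAIT version is only safe when each thread, in the second loop, reads exactly the a(i) values it wrote in the first loop. Below is a minimal free-form Fortran sketch. It assumes an OpenMP 3.0 or later compiler. That specification guarantees the same iteration-to-thread assignment for two loops in one parallel region only when both use SCHEDULE(STATIC) with the same bounds and chunk size. The sketch therefore spells the schedule out instead of relying on the implementation-defined default. The module and routine names are hypothetical.

```fortran
module nowait_demo
  implicit none
contains
  ! Hypothetical routine: a(i) = cos(a(i)), then b(i) = a(i) + b(i),
  ! with the barrier between the two loops removed by NOWAIT.
  subroutine cos_then_add(n, a, b)
    integer, intent(in) :: n
    double precision, intent(inout) :: a(n), b(n)
    integer :: i

    !$omp parallel shared(a, b)
    ! Same bounds and SCHEDULE(STATIC) in both loops: each thread gets the
    ! same iterations i twice, so it only reads a(i) values it wrote itself.
    !$omp do schedule(static)
    do i = 1, n
      a(i) = cos(a(i))
    end do
    !$omp end do nowait
    !$omp do schedule(static)
    do i = 1, n
      b(i) = a(i) + b(i)
    end do
    !$omp end do
    !$omp end parallel
  end subroutine cos_then_add
end module nowait_demo
```

If the two loops had different bounds or schedules, the NOWAIT would have to be dropped and the implied barrier restored.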

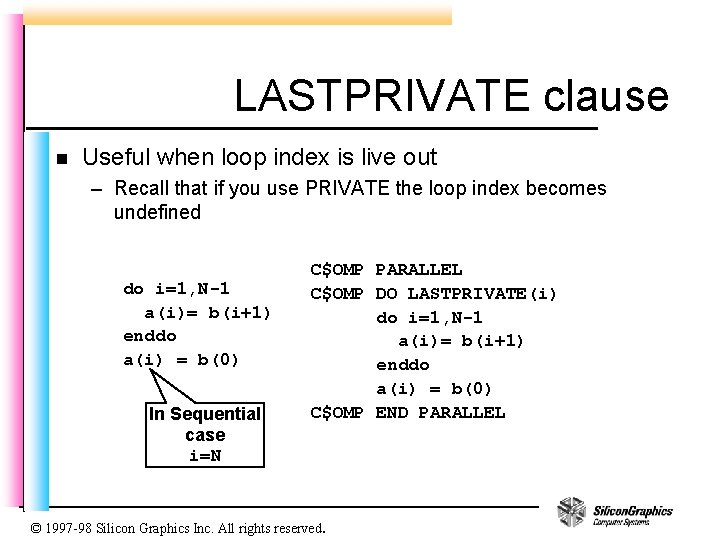

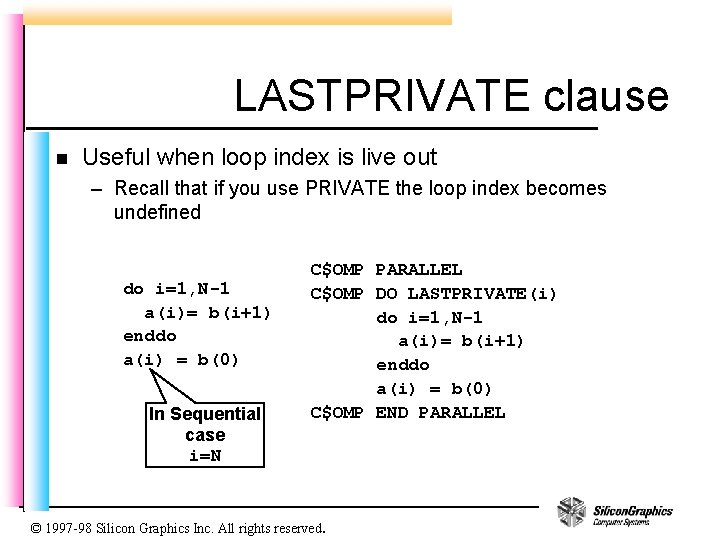

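LASTPRIVATE clause

- Useful when the loop index is live out
  - Recall that if you use PRIVATE, the loop index becomes undefined after the loop
- In the sequential case, i = N after the loop:

```fortran
      do i=1, N-1
         a(i) = b(i+1)
      enddo
      a(i) = b(0)
```

- With LASTPRIVATE(i), i again equals N after the loop. The statement that uses i runs once, after the parallel loop, instead of being repeated by every thread inside the parallel region:

```fortran
C$OMP PARALLEL DO LASTPRIVATE(i)
      do i=1, N-1
         a(i) = b(i+1)
      enddo
C$OMP END PARALLEL DO
      a(i) = b(0)
```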
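Here is a runnable version of the parallel case as a minimal free-form Fortran sketch. The statement that uses i sits after the parallel loop, and the routine also returns i so that the value LASTPRIVATE leaves behind can be checked. The module and routine names are hypothetical. b is declared b(0:n) to match the slide's use of b(0).

```fortran
module lastprivate_demo
  implicit none
contains
  ! Hypothetical routine mirroring the slide: shift b into a, then use the
  ! loop index after the loop.
  subroutine shift_left(n, a, b, last_index)
    integer, intent(in) :: n
    double precision, intent(out) :: a(n)
    double precision, intent(in) :: b(0:n)
    integer, intent(out) :: last_index
    integer :: i

    !$omp parallel do lastprivate(i)
    do i = 1, n - 1
      a(i) = b(i + 1)
    end do
    !$omp end parallel do

    ! LASTPRIVATE gives i the value sequential execution would leave: n.
    a(i) = b(0)
    last_index = i
  end subroutine shift_left
end module lastprivate_demo
```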

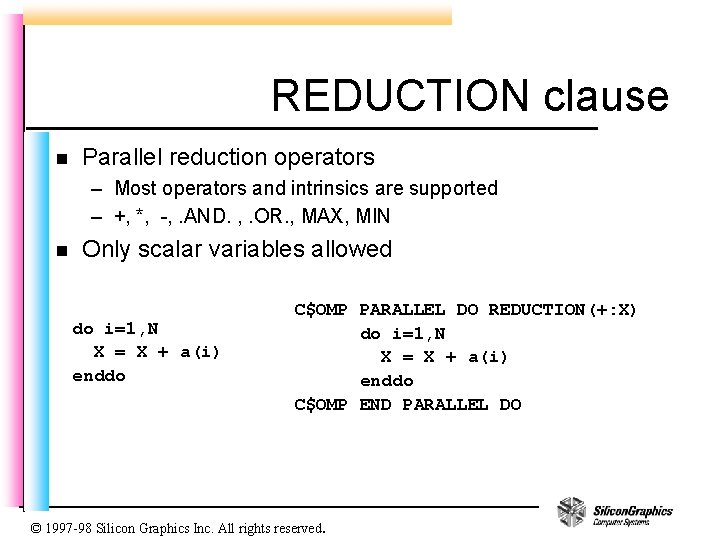

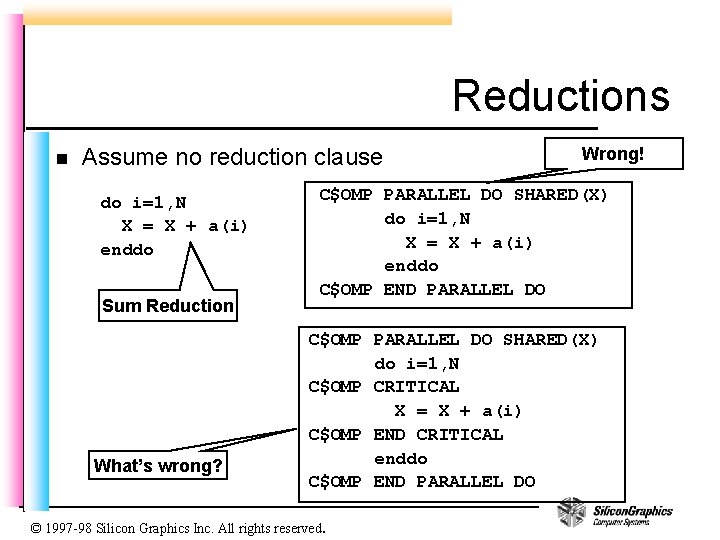

Reductions

- Assume there is no REDUCTION clause. Sequential sum reduction:

```fortran
      do i=1, N
         X = X + a(i)
      enddo
```

- Wrong: all threads read and update the shared X concurrently (a race), so updates can be lost:

```fortran
C$OMP PARALLEL DO SHARED(X)
      do i=1, N
         X = X + a(i)
      enddo
C$OMP END PARALLEL DO
```

- Correct with CRITICAL, but every update is serialized:

```fortran
C$OMP PARALLEL DO SHARED(X)
      do i=1, N
C$OMP CRITICAL
         X = X + a(i)
C$OMP END CRITICAL
      enddo
C$OMP END PARALLEL DO
```

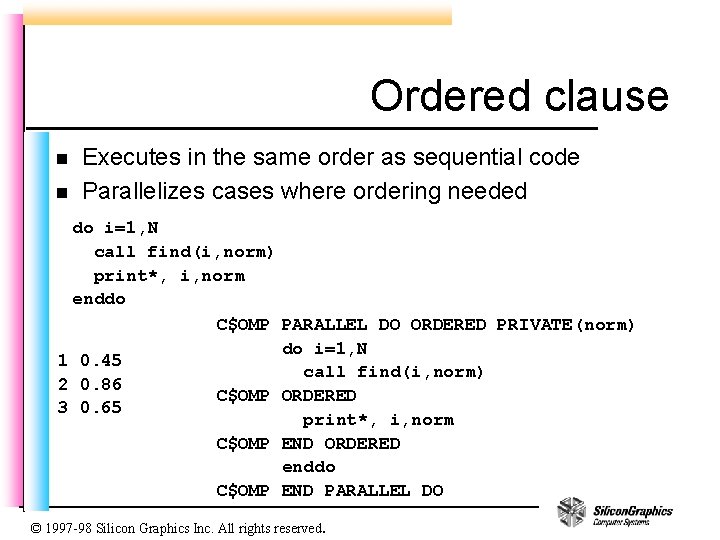

REDUCTION clause

- Parallel reduction operators
  - Most operators and intrinsics are supported
  - `+`, `*`, `-`, `.AND.`, `.OR.`, `MAX`, `MIN`
- Only scalar variables allowed

Sequential:

```fortran
      do i=1, N
         X = X + a(i)
      enddo
```

Parallel:

```fortran
C$OMP PARALLEL DO REDUCTION(+: X)
      do i=1, N
         X = X + a(i)
      enddo
C$OMP END PARALLEL DO
```
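The following minimal free-form Fortran sketch contrasts the REDUCTION clause with the CRITICAL version from the previous slide. Both functions return the same sum. The module and function names are hypothetical.

```fortran
module reduction_demo
  implicit none
contains
  ! REDUCTION(+: s): each thread accumulates a private partial sum, and
  ! the partial sums are combined into s at the end of the loop.
  function reduction_sum(n, a) result(x)
    integer, intent(in) :: n
    double precision, intent(in) :: a(n)
    double precision :: x, s
    integer :: i

    s = 0d0
    !$omp parallel do reduction(+: s)
    do i = 1, n
      s = s + a(i)
    end do
    !$omp end parallel do
    x = s
  end function reduction_sum

  ! CRITICAL version for comparison: also correct, but only one thread
  ! at a time can perform the update.
  function critical_sum(n, a) result(x)
    integer, intent(in) :: n
    double precision, intent(in) :: a(n)
    double precision :: x, s
    integer :: i

    s = 0d0
    !$omp parallel do shared(s)
    do i = 1, n
      !$omp critical
      s = s + a(i)
      !$omp end critical
    end do
    !$omp end parallel do
    x = s
  end function critical_sum
end module reduction_demo
```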

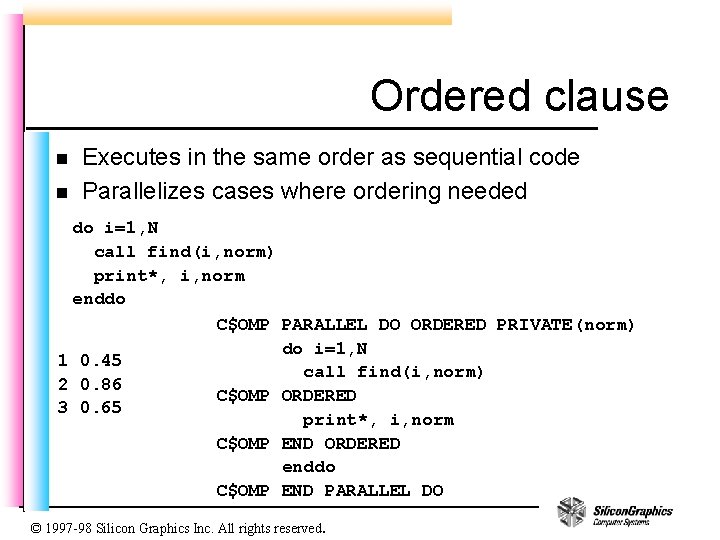

Ordered clause

- Executes in the same order as sequential code
- Parallelizes cases where ordering is needed

Sequential:

```fortran
      do i=1, N
         call find(i, norm)
         print*, i, norm
      enddo
```

Parallel:

```fortran
C$OMP PARALLEL DO ORDERED PRIVATE(norm)
      do i=1, N
         call find(i, norm)
C$OMP ORDERED
         print*, i, norm
C$OMP END ORDERED
      enddo
C$OMP END PARALLEL DO
```

Output, in sequential order:

```
1 0.45
2 0.86
3 0.65
```
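Below is a minimal free-form Fortran sketch of the ORDERED pattern. Printed output is hard to check, so this sketch records (i, norm) into arrays inside the ORDERED section instead of printing. find is a hypothetical stand-in for the slide's routine, and all names are illustrative.

```fortran
module ordered_demo
  implicit none
contains
  ! Hypothetical stand-in for the slide's find(i, norm).
  pure subroutine find(i, norm)
    integer, intent(in) :: i
    double precision, intent(out) :: norm
    norm = sqrt(dble(i))
  end subroutine find

  ! order(k) is the index of the k-th record written; norms(k) its value.
  subroutine ordered_records(n, order, norms)
    integer, intent(in) :: n
    integer, intent(out) :: order(n)
    double precision, intent(out) :: norms(n)
    double precision :: norm
    integer :: i, k

    k = 0
    !$omp parallel do ordered private(norm) shared(k, order, norms)
    do i = 1, n
      call find(i, norm)        ! runs in parallel, in any order
      !$omp ordered
      k = k + 1                 ! one iteration at a time, i = 1, 2, 3, ...
      order(k) = i
      norms(k) = norm
      !$omp end ordered
    end do
    !$omp end parallel do
  end subroutine ordered_records
end module ordered_demo
```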

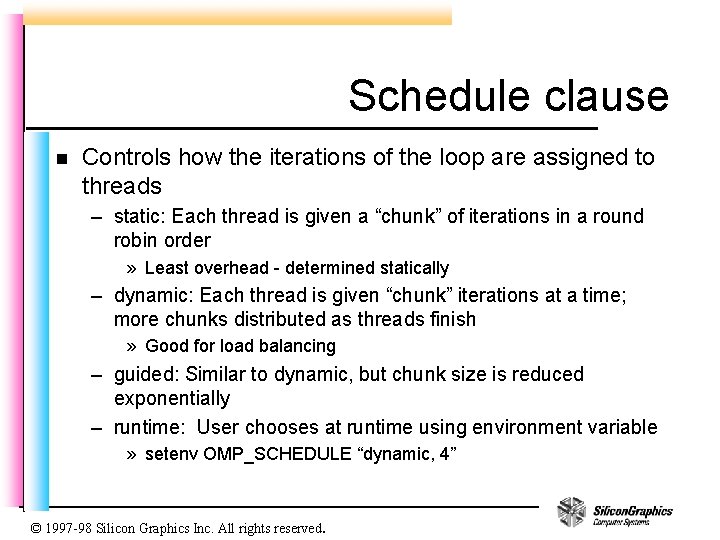

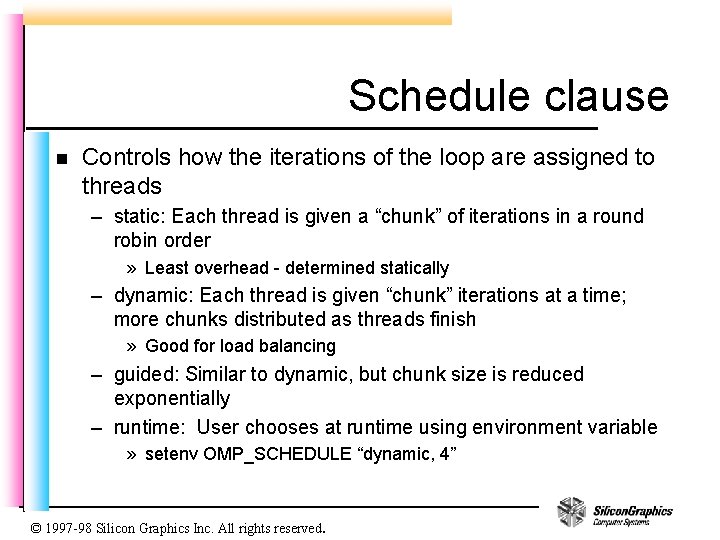

Schedule clause

- Controls how the iterations of the loop are assigned to threads
  - static: Each thread is given a "chunk" of iterations in round-robin order
    - Least overhead: determined statically
  - dynamic: Each thread is given "chunk" iterations at a time; more chunks are distributed as threads finish
    - Good for load balancing
  - guided: Similar to dynamic, but the chunk size is reduced exponentially
  - runtime: The user chooses at run time using an environment variable
    - `setenv OMP_SCHEDULE "dynamic, 4"`
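This minimal free-form Fortran sketch shows a loop where the schedule matters. The outer iterations of a triangular loop nest have very different costs. The module and function names are hypothetical. The chunk size of 4 mirrors the OMP_SCHEDULE example above.

```fortran
module schedule_demo
  implicit none
  integer, parameter :: i8 = selected_int_kind(18)
contains
  ! Outer iteration i does i units of work, so equal static blocks would
  ! give the thread holding the last rows the most work. SCHEDULE(DYNAMIC, 4)
  ! hands out 4 outer iterations at a time to whichever thread is idle.
  function triangle_sum(n) result(total)
    integer, intent(in) :: n
    integer(i8) :: total, s
    integer :: i, j

    s = 0_i8
    !$omp parallel do schedule(dynamic, 4) private(j) reduction(+: s)
    do i = 1, n
      do j = 1, i
        s = s + j
      end do
    end do
    !$omp end parallel do
    total = s
  end function triangle_sum
end module schedule_demo
```

Writing SCHEDULE(RUNTIME) instead of SCHEDULE(DYNAMIC, 4) would defer the choice to the OMP_SCHEDULE environment variable without recompiling. The result is the same for every schedule; only the distribution of work changes.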

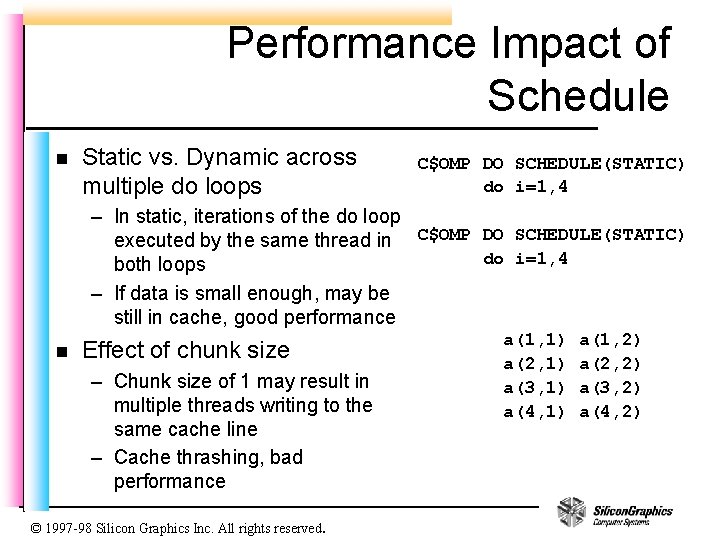

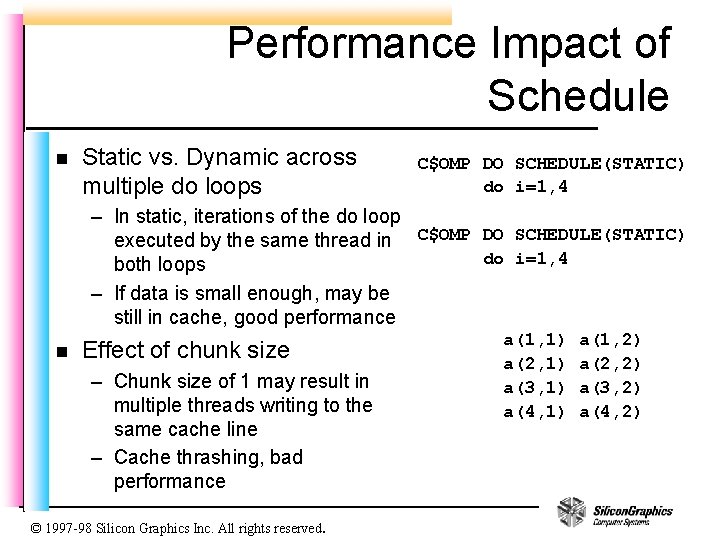

Performance Impact of Schedule

- Static vs. dynamic across multiple DO loops
  - With static, the same iterations of the DO loop are executed by the same thread in both loops
  - If the data is small enough, it may still be in cache: good performance

```fortran
C$OMP DO SCHEDULE(STATIC)
      do i=1, 4
         ...
      enddo
C$OMP DO SCHEDULE(STATIC)
      do i=1, 4
         ...
      enddo
```

- Effect of chunk size
  - A chunk size of 1 may result in multiple threads writing to the same cache line
  - Cache thrashing: bad performance

[Figure: array elements a(1,1), a(2,1), a(3,1), a(4,1), a(1,2), a(2,2), a(3,2), a(4,2) stored contiguously in memory]

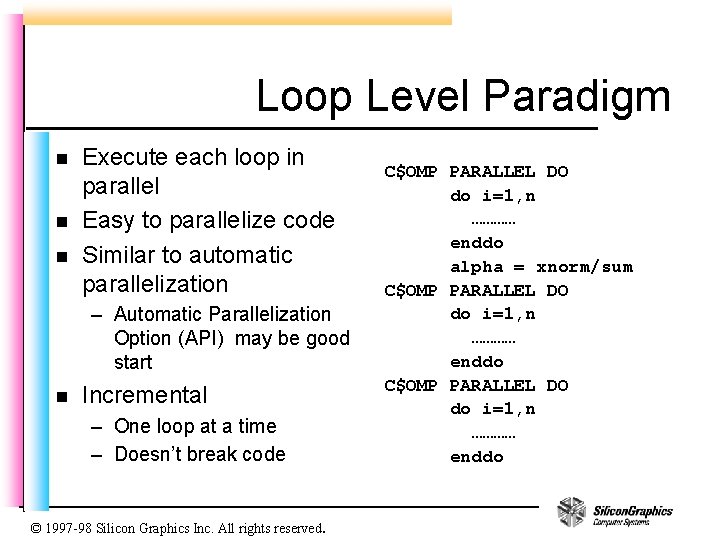

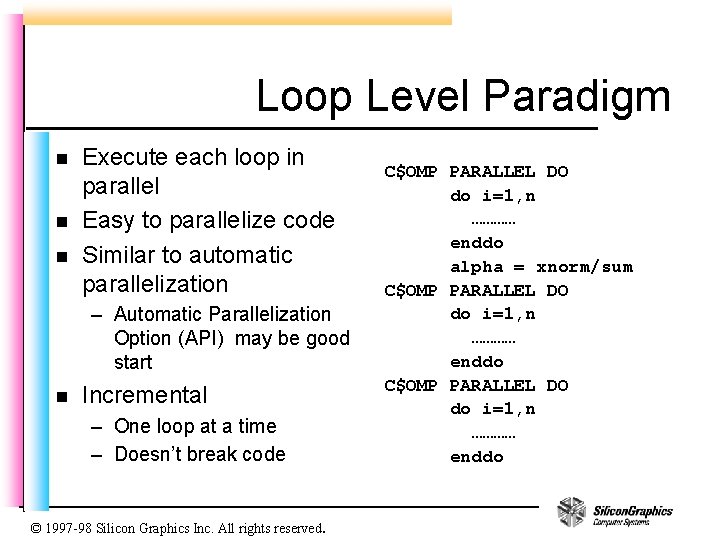

Loop Level Paradigm

- Execute each loop in parallel
- Easy to parallelize code
- Similar to automatic parallelization
  - The Auto-Parallelizing Option (APO) may be a good start
- Incremental
  - One loop at a time
  - Doesn't break code

```fortran
C$OMP PARALLEL DO
      do i=1, n
         ...
      enddo
      alpha = xnorm/sum
C$OMP PARALLEL DO
      do i=1, n
         ...
      enddo
```

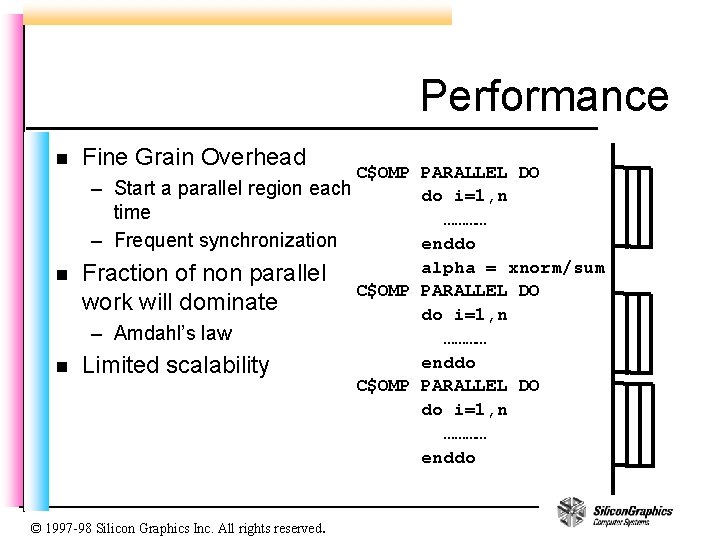

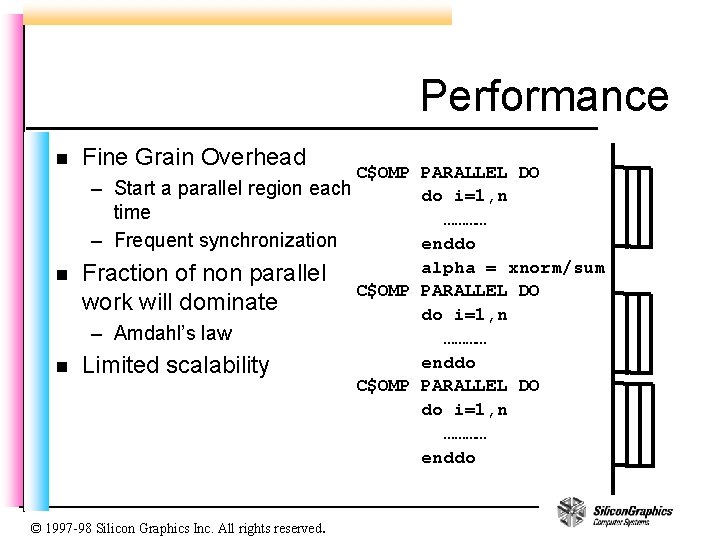

Performance

- Fine grain overhead
  - Start a parallel region each time
  - Frequent synchronization
- Fraction of non-parallel work will dominate
  - Amdahl's law
- Limited scalability

```fortran
C$OMP PARALLEL DO
      do i=1, n
         ...
      enddo
      alpha = xnorm/sum
C$OMP PARALLEL DO
      do i=1, n
         ...
      enddo
C$OMP PARALLEL DO
      do i=1, n
         ...
      enddo
```
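Amdahl's law makes the last two points quantitative. Let $f$ be the fraction of the sequential run time spent in the parallelized loops, and let $p$ threads share that work perfectly ($f$ and $p$ are symbols introduced here). The speedup is then at most

$$
S(p) = \frac{1}{(1-f) + f/p} \le \frac{1}{1-f}.
$$

For example, with $f = 0.9$ on 16 threads,

$$
S(16) = \frac{1}{0.1 + 0.9/16} = \frac{1}{0.15625} = 6.4,
$$

and no number of threads can push the speedup past 10. The start-up and synchronization costs of each parallel region only lower these figures.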

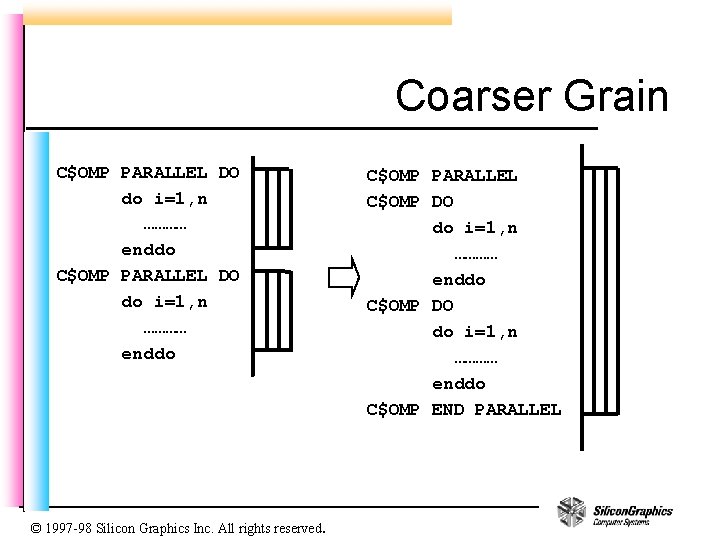

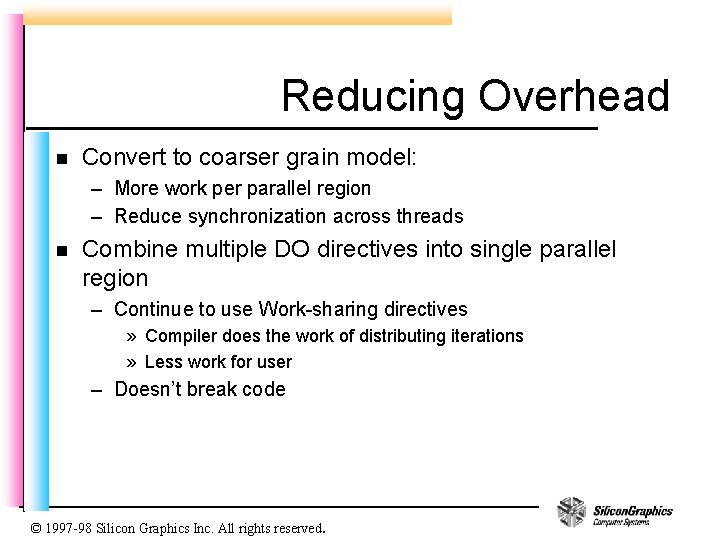

Reducing Overhead

- Convert to a coarser grain model:
  - More work per parallel region
  - Reduce synchronization across threads
- Combine multiple DO directives into a single parallel region
  - Continue to use work-sharing directives
    - The compiler does the work of distributing iterations
    - Less work for the user
  - Doesn't break code

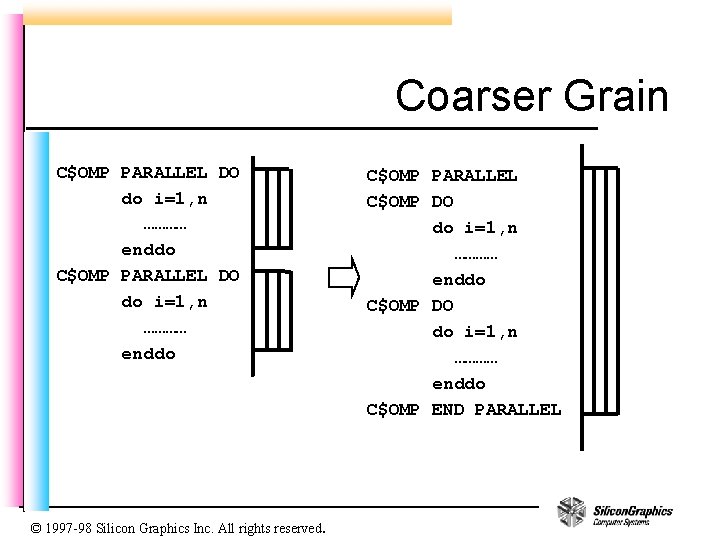

Coarser Grain

- Fine grain: one combined parallel region per loop

```fortran
C$OMP PARALLEL DO
      do i=1, n
         ...
      enddo
```

- Coarser grain: a work-sharing DO inside an explicit parallel region

```fortran
C$OMP PARALLEL
C$OMP DO
      do i=1, n
         ...
      enddo
C$OMP END PARALLEL
```

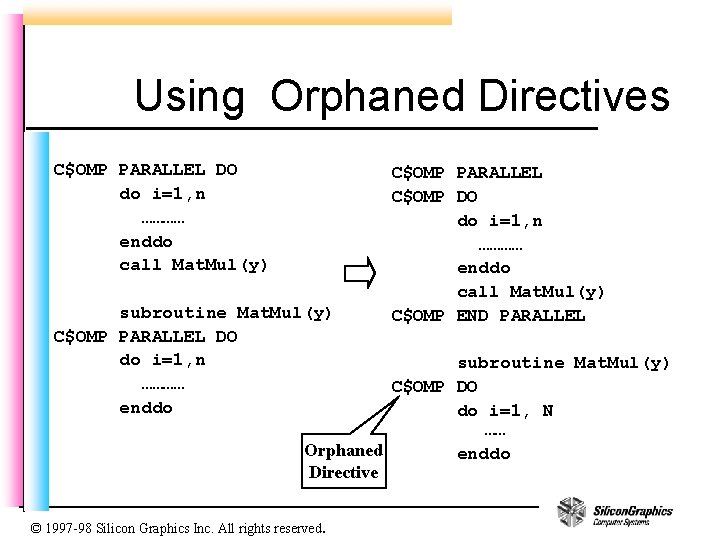

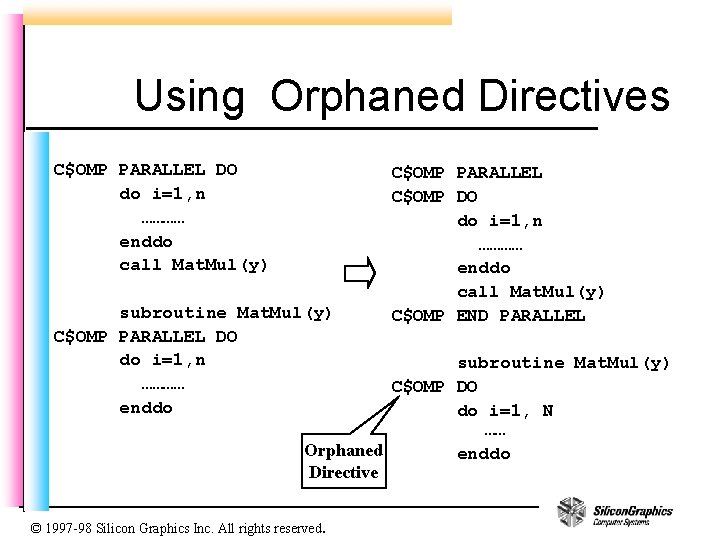

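Using Orphaned Directives

- Before: separate parallel regions in the caller and in the subroutine

```fortran
C$OMP PARALLEL DO
      do i=1, n
         ...
      enddo
      call MatMul(y)

      subroutine MatMul(y)
C$OMP PARALLEL DO
      do i=1, n
         ...
      enddo
```

- After: one parallel region. The DO directive in MatMul is orphaned (it is not lexically inside a PARALLEL construct) and binds to the caller's parallel region

```fortran
C$OMP PARALLEL
C$OMP DO
      do i=1, n
         ...
      enddo
      call MatMul(y)
C$OMP END PARALLEL

      subroutine MatMul(y)
C     orphaned directive
C$OMP DO
      do i=1, N
         ...
      enddo
```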
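Below is a minimal free-form Fortran sketch of an orphaned DO. MatMul is replaced by a hypothetical scale_vector that scales y in place, and all names are illustrative. Called from inside a parallel region, the orphaned DO shares its iterations among the team. Called outside any parallel region, the same routine simply runs on one thread.

```fortran
module orphan_demo
  implicit none
contains
  ! The DO directive below is orphaned: no PARALLEL construct encloses it
  ! lexically. It binds to whatever parallel region is active at run time.
  subroutine scale_vector(n, y, alpha)
    integer, intent(in) :: n
    double precision, intent(inout) :: y(n)
    double precision, intent(in) :: alpha
    integer :: i

    !$omp do
    do i = 1, n
      y(i) = alpha * y(i)
    end do
    !$omp end do
  end subroutine scale_vector

  ! One parallel region around both loops, as in the "after" version.
  subroutine coarse_grain(n, x, y)
    integer, intent(in) :: n
    double precision, intent(inout) :: x(n), y(n)
    integer :: i

    !$omp parallel shared(x, y)
    !$omp do
    do i = 1, n
      x(i) = x(i) + 1d0
    end do
    !$omp end do
    call scale_vector(n, y, 2d0)   ! every thread calls; iterations are shared
    !$omp end parallel
  end subroutine coarse_grain
end module orphan_demo
```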

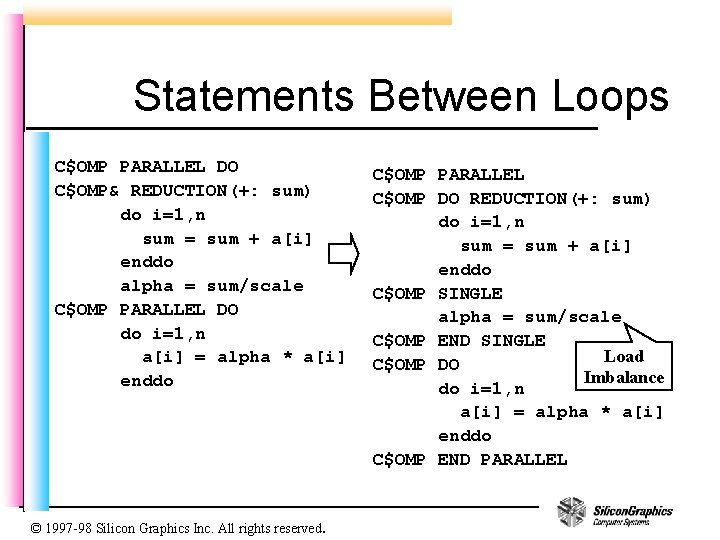

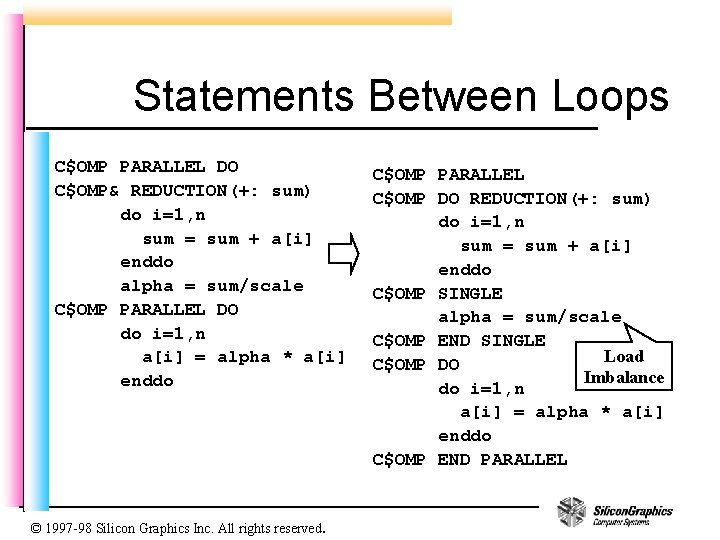

Statements Between Loops

- Before: the statement between the loops runs serially, between two parallel regions

```fortran
C$OMP PARALLEL DO
C$OMP& REDUCTION(+: sum)
      do i=1, n
         sum = sum + a(i)
      enddo
      alpha = sum/scale
C$OMP PARALLEL DO
      do i=1, n
         a(i) = alpha * a(i)
      enddo
```

- After: one parallel region, with the statement in a SINGLE section. One thread executes it while the others wait at the end of SINGLE, which causes load imbalance

```fortran
C$OMP PARALLEL
C$OMP DO REDUCTION(+: sum)
      do i=1, n
         sum = sum + a(i)
      enddo
C$OMP SINGLE
      alpha = sum/scale
C$OMP END SINGLE
C$OMP DO
      do i=1, n
         a(i) = alpha * a(i)
      enddo
C$OMP END PARALLEL
```

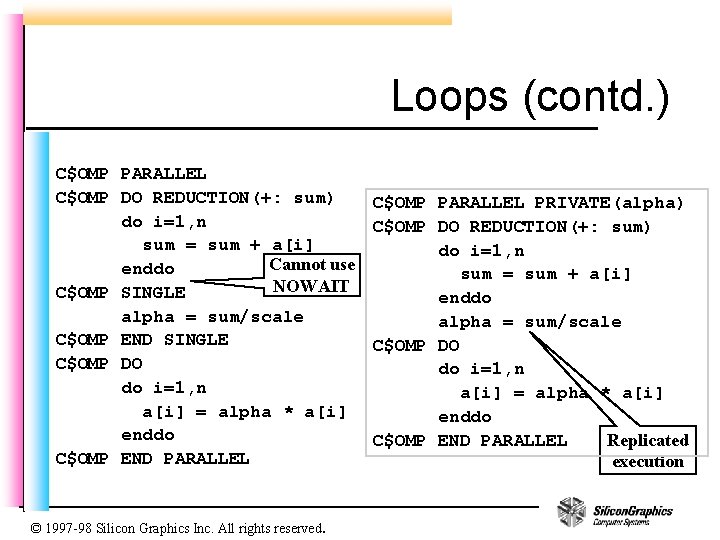

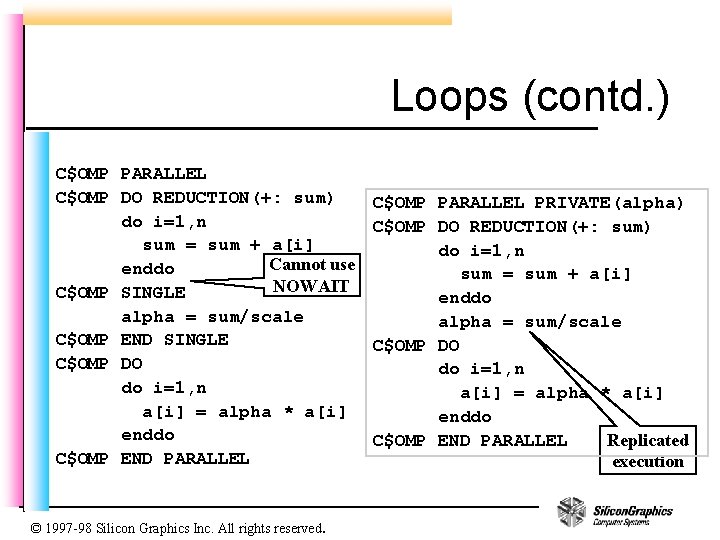

Loops (contd.)

- In the SINGLE version, NOWAIT cannot be used on the reduction loop: sum must be complete before alpha is computed
- Alternative: replicated execution. alpha is PRIVATE and every thread computes its own copy, so no SINGLE is needed

```fortran
C$OMP PARALLEL PRIVATE(alpha)
C$OMP DO REDUCTION(+: sum)
      do i=1, n
         sum = sum + a(i)
      enddo
      alpha = sum/scale
C$OMP DO
      do i=1, n
         a(i) = alpha * a(i)
      enddo
C$OMP END PARALLEL
```
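Here is a minimal free-form Fortran sketch of the replicated-execution version. The routine name is hypothetical. The slide's sum and scale are renamed total and scale_factor here because SUM and SCALE are also Fortran intrinsic names.

```fortran
module replicated_demo
  implicit none
contains
  ! a(i) = (sum(a)/scale_factor) * a(i) inside a single parallel region.
  subroutine normalize(n, a, scale_factor)
    integer, intent(in) :: n
    double precision, intent(inout) :: a(n)
    double precision, intent(in) :: scale_factor
    double precision :: total, alpha
    integer :: i

    total = 0d0
    !$omp parallel shared(a, total) private(alpha)
    !$omp do reduction(+: total)
    do i = 1, n
      total = total + a(i)
    end do
    !$omp end do
    ! END DO without NOWAIT implies a barrier, so total is complete here.
    alpha = total / scale_factor      ! replicated: every thread computes it
    !$omp do
    do i = 1, n
      a(i) = alpha * a(i)
    end do
    !$omp end do
    !$omp end parallel
  end subroutine normalize
end module replicated_demo
```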

Coarse Grain Work-sharing

- Reduced overhead by increasing the work per parallel region
- But...
  - Work-sharing constructs still need to compute loop bounds at each construct
  - Work between loops is not always parallelizable
  - Synchronization at the end of a directive is not always avoidable

Domain Decomposition

- We could have computed loop bounds once and used them for all loops
  - i.e., compute the loop decomposition a priori
- Enter domain decomposition
  - A more general approach to loop decomposition
  - Decompose work into the number of threads
  - Each thread gets to work on a sub-domain

Domain Decomposition (contd.)

- Transfers the onus of decomposition from the compiler (work-sharing) to the user
- Results in a coarse grain program
  - Typically one parallel region for the whole program
  - Reduced overhead: good scalability
- Domain decomposition results in a model of programming called SPMD

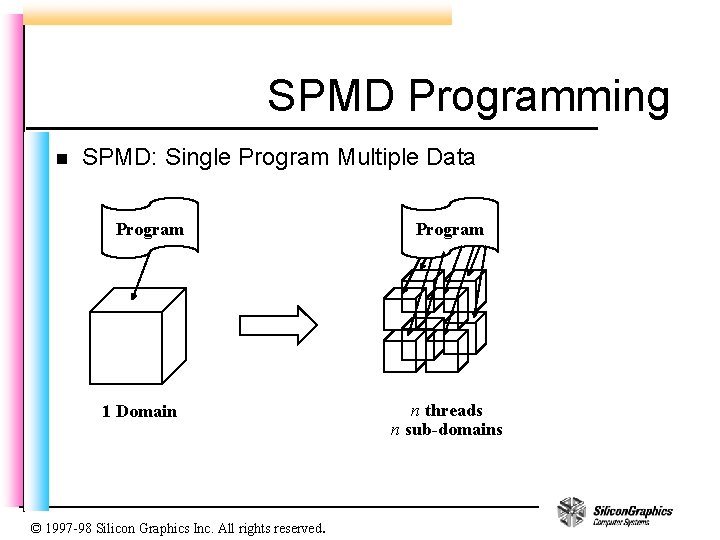

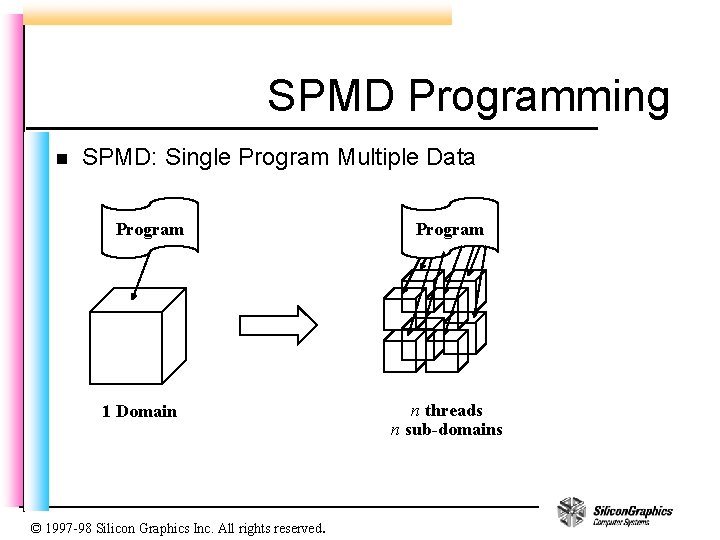

SPMD Programming

- SPMD: Single Program Multiple Data

[Figure: one program working on one domain, versus the same program run by n threads on n sub-domains]

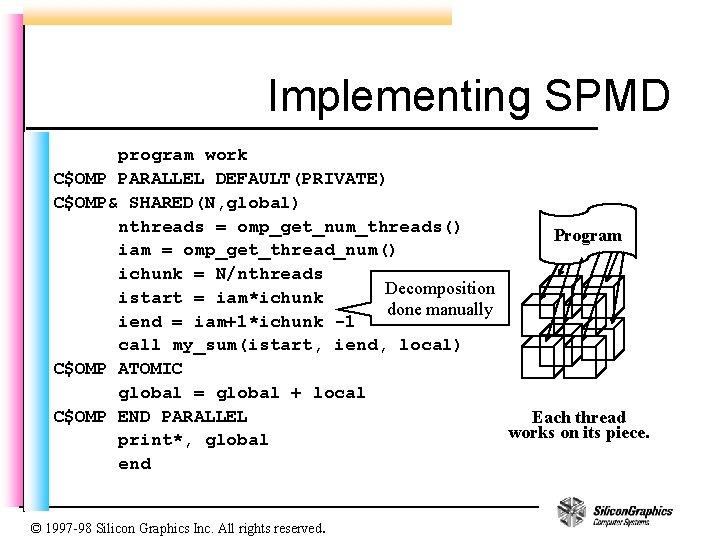

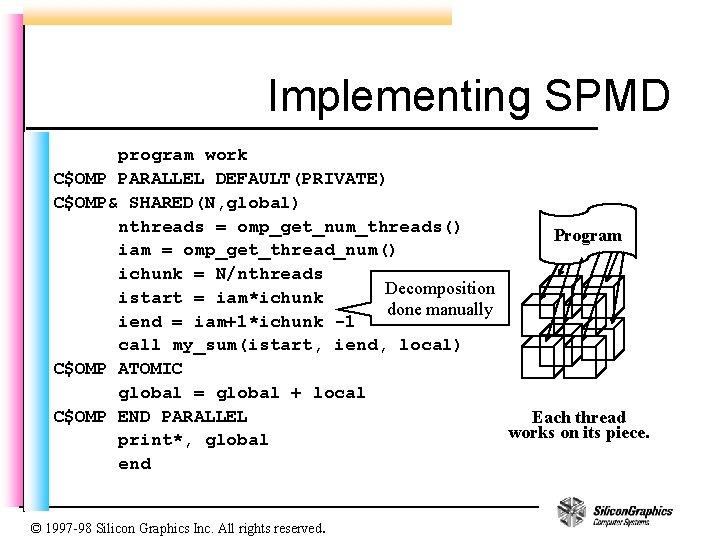

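Implementing SPMD

- The program is replicated on every thread; the decomposition is done manually, and each thread works on its piece

```fortran
      program work
      integer omp_get_num_threads, omp_get_thread_num
C$OMP PARALLEL DEFAULT(PRIVATE)
C$OMP& SHARED(N, global)
      nthreads = omp_get_num_threads()
      iam = omp_get_thread_num()
C     decomposition done manually
      ichunk = N/nthreads
      istart = iam*ichunk
      iend = (iam+1)*ichunk - 1
C     the last thread also takes the remainder if N is not a multiple of nthreads
      if (iam .eq. nthreads-1) iend = N - 1
C     each thread works on its piece
      call my_sum(istart, iend, local)
C$OMP ATOMIC
      global = global + local
C$OMP END PARALLEL
      print*, global
      end
```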

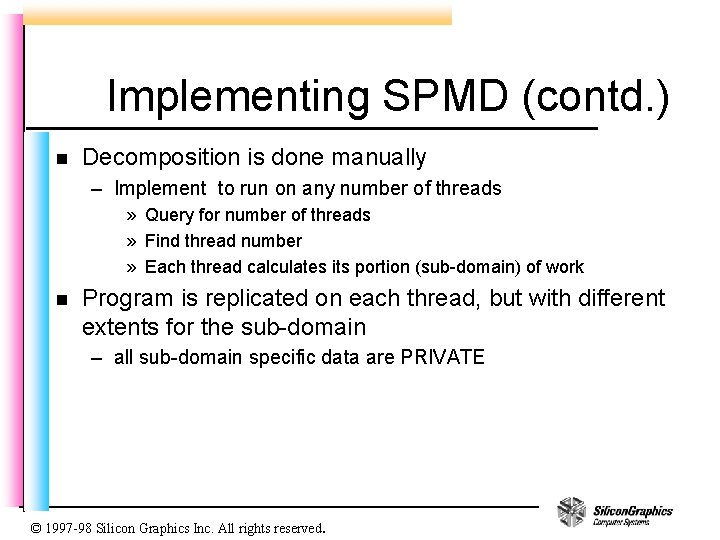

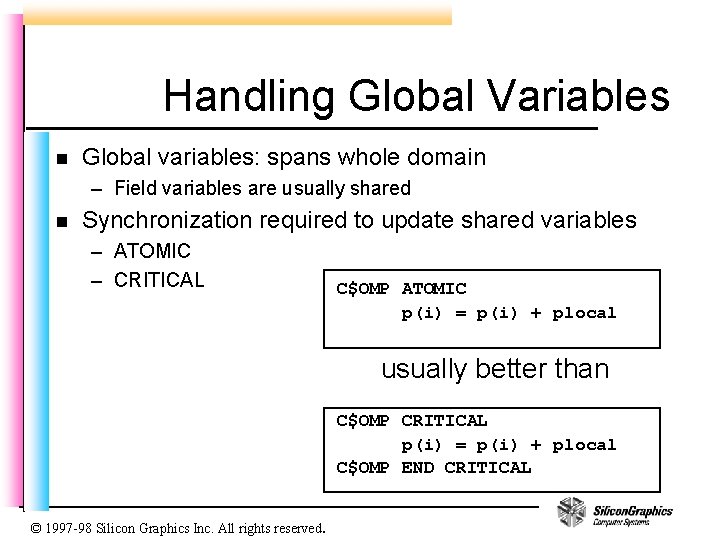

Implementing SPMD (contd.)

- Decomposition is done manually
  - Implement it to run on any number of threads
    - Query for the number of threads
    - Find the thread number
    - Each thread calculates its portion (sub-domain) of the work
- The program is replicated on each thread, but with different extents for the sub-domain
  - All sub-domain specific data are PRIVATE
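The steps above can be sketched in free-form Fortran. This illustrates the idea under stated assumptions rather than reproducing the slide's program. chunk_bounds and spmd_sum are hypothetical names, and indices run from 0 as on the slide. The remainder of n/nthreads is spread over the first threads. The `!$` conditional-compilation lines let the same source build without OpenMP, in which case it runs on one thread.

```fortran
module spmd_demo
  implicit none
contains
  ! Bounds of sub-domain iam when indices 0..n-1 are split over nthreads
  ! threads; the first mod(n, nthreads) threads get one extra element.
  pure subroutine chunk_bounds(n, nthreads, iam, istart, iend)
    integer, intent(in) :: n, nthreads, iam
    integer, intent(out) :: istart, iend
    integer :: ichunk, irem
    ichunk = n / nthreads
    irem = mod(n, nthreads)
    istart = iam * ichunk + min(iam, irem)
    iend = istart + ichunk - 1
    if (iam < irem) iend = iend + 1
  end subroutine chunk_bounds

  ! SPMD sum: one parallel region, manual decomposition, ATOMIC update of
  ! the shared result (the slide's my_sum / global pattern).
  function spmd_sum(n, x) result(global)
    !$ use omp_lib
    integer, intent(in) :: n
    double precision, intent(in) :: x(0:n-1)
    double precision :: global, g, local
    integer :: nthreads, iam, istart, iend, i

    g = 0d0
    !$omp parallel default(private) shared(n, x, g)
    nthreads = 1                      ! serial fallback without OpenMP
    iam = 0
    !$ nthreads = omp_get_num_threads()
    !$ iam = omp_get_thread_num()
    call chunk_bounds(n, nthreads, iam, istart, iend)
    local = 0d0
    do i = istart, iend
      local = local + x(i)
    end do
    !$omp atomic
    g = g + local
    !$omp end parallel
    global = g
  end function spmd_sum
end module spmd_demo
```

Spreading the remainder over several threads keeps the sub-domains within one element of each other in size, whereas giving the whole remainder to the last thread can leave it with up to nthreads-1 extra elements.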

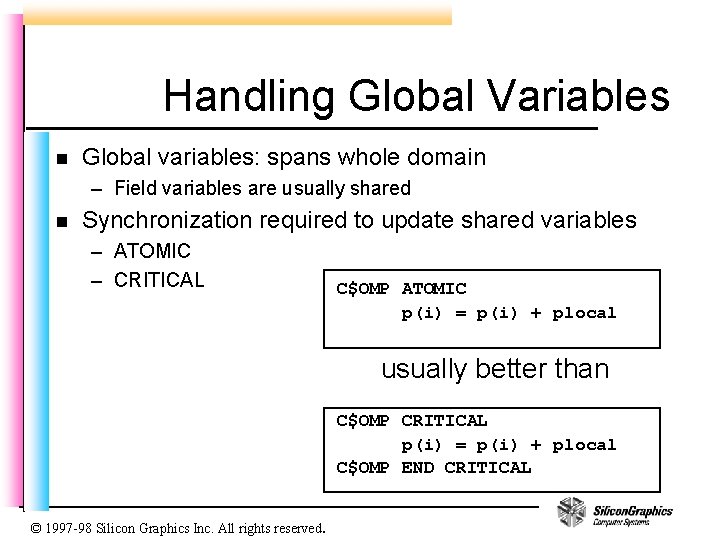

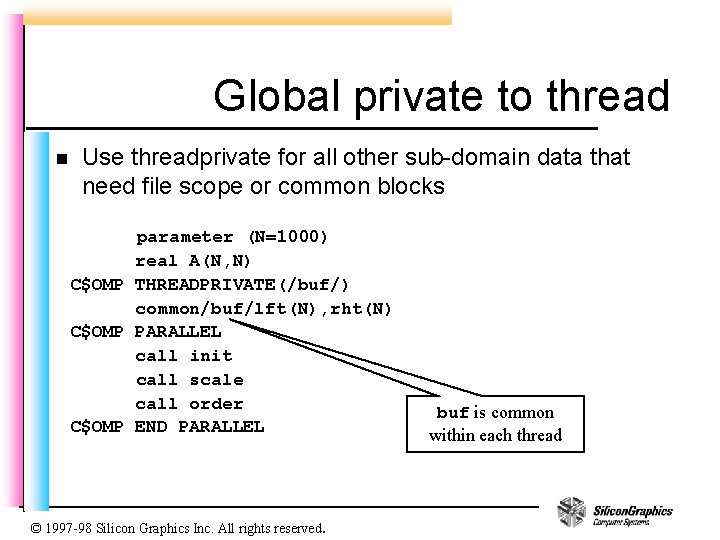

Handling Global Variables

- Global variables span the whole domain
  - Field variables are usually shared
- Synchronization is required to update shared variables
  - ATOMIC
  - CRITICAL

```fortran
C$OMP ATOMIC
      p(i) = p(i) + plocal
```

is usually better than

```fortran
C$OMP CRITICAL
      p(i) = p(i) + plocal
C$OMP END CRITICAL
```
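This minimal free-form Fortran sketch shows an ATOMIC update to shared data: a histogram in which different iterations may increment the same bin. The names are hypothetical, and keys are assumed non-negative. ATOMIC protects only the single update statement, which lets the implementation use a hardware atomic operation where one is available. CRITICAL, by contrast, serializes an arbitrary block.

```fortran
module atomic_demo
  implicit none
contains
  subroutine histogram(n, keys, nbins, counts)
    integer, intent(in) :: n, nbins
    integer, intent(in) :: keys(n)
    integer, intent(out) :: counts(nbins)
    integer :: i, b

    counts = 0
    !$omp parallel do private(b) shared(keys, counts)
    do i = 1, n
      b = mod(keys(i), nbins) + 1   ! two iterations may pick the same bin
      !$omp atomic
      counts(b) = counts(b) + 1
    end do
    !$omp end parallel do
  end subroutine histogram
end module atomic_demo
```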

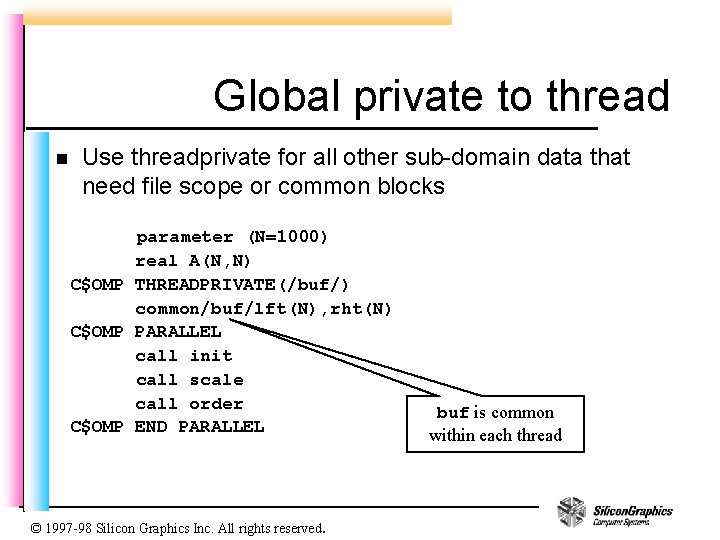

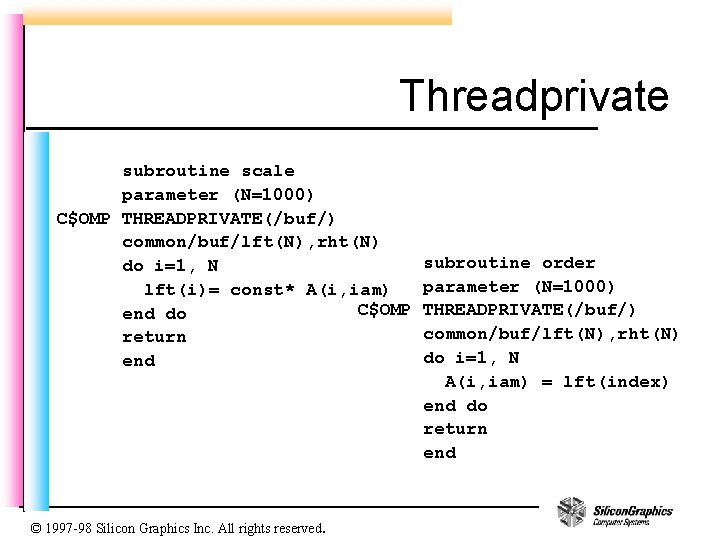

Global private to thread

- Use THREADPRIVATE for all other sub-domain data that need file scope or common blocks

```fortran
      parameter (N=1000)
      real A(N, N)
      common /buf/ lft(N), rht(N)
C$OMP THREADPRIVATE(/buf/)
C$OMP PARALLEL
      call init
      call scale
      call order
C$OMP END PARALLEL
```

- buf is common within each thread

Threadprivate

- The THREADPRIVATE directive must appear in every routine that declares the common block, after its COMMON statement

```fortran
      subroutine scale
      parameter (N=1000)
      common /buf/ lft(N), rht(N)
C$OMP THREADPRIVATE(/buf/)
      do i=1, N
         lft(i) = const*A(i, iam)
      end do
      return
      end

      subroutine order
      parameter (N=1000)
      common /buf/ lft(N), rht(N)
C$OMP THREADPRIVATE(/buf/)
      do i=1, N
         A(i, iam) = lft(index)
      end do
      return
      end
```
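Below is a minimal free-form Fortran sketch of the same idea. It uses a module variable instead of the slide's common block /buf/; OpenMP accepts both. As with init, scale, and order, two separate calls inside one parallel region communicate through the calling thread's own copy of the buffer. All names are hypothetical.

```fortran
module threadprivate_demo
  implicit none
  integer, parameter :: nbuf = 8
  ! Module-level scratch buffer; THREADPRIVATE gives every thread its own
  ! copy, which persists across the routines called inside a parallel region.
  double precision :: lft(nbuf)
  !$omp threadprivate(lft)
contains
  subroutine fill(base)                ! writes the calling thread's copy
    double precision, intent(in) :: base
    integer :: i
    do i = 1, nbuf
      lft(i) = base + i
    end do
  end subroutine fill

  function buffer_total() result(t)    ! reads the calling thread's copy
    double precision :: t
    t = sum(lft)
  end function buffer_total

  ! totals(iam) = sum of thread iam's buffer after fill(100*iam).
  subroutine per_thread_totals(maxt, totals, nused)
    !$ use omp_lib
    integer, intent(in) :: maxt
    double precision, intent(out) :: totals(0:maxt-1)
    integer, intent(out) :: nused
    integer :: iam

    totals = -1d0
    nused = 1
    !$omp parallel private(iam) shared(totals, nused)
    iam = 0
    !$ iam = omp_get_thread_num()
    !$omp single
    !$ nused = omp_get_num_threads()
    !$omp end single
    if (iam < maxt) then
      call fill(dble(100 * iam))
      totals(iam) = buffer_total()
    end if
    !$omp end parallel
  end subroutine per_thread_totals
end module threadprivate_demo
```

If lft were shared instead, concurrent calls to fill would overwrite each other's data between the two calls.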

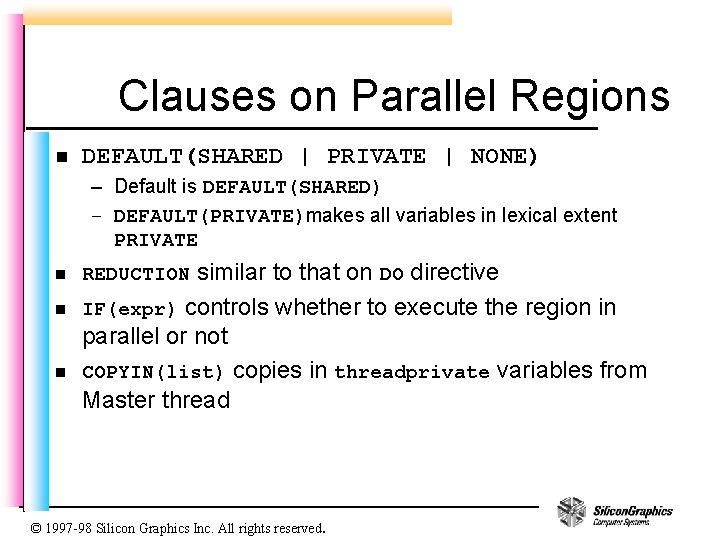

Clauses on Parallel Regions

- DEFAULT(SHARED | PRIVATE | NONE)
  - The default is DEFAULT(SHARED)
  - DEFAULT(PRIVATE) makes all variables in the lexical extent PRIVATE
- IF(expr) controls whether the region is executed in parallel or not
- COPYIN(list) copies threadprivate variables in from the master thread
- REDUCTION: similar to that on the DO directive
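This minimal free-form Fortran sketch uses two of these clauses on a simple y = y + alpha*x loop. DEFAULT(NONE) forces every variable used in the region to be listed. IF(n > threshold) keeps small problems on one thread. The routine name, the threshold argument, and the nthreads_used output are assumptions added for the example.

```fortran
module region_clauses_demo
  implicit none
contains
  ! DEFAULT(NONE): a variable missing from SHARED/PRIVATE is a compile-time
  !   error instead of a silent race.
  ! IF(n > threshold): for small n the region runs on one thread, since the
  !   cost of starting a parallel region may exceed the loop itself.
  subroutine axpy(n, alpha, x, y, threshold, nthreads_used)
    !$ use omp_lib
    integer, intent(in) :: n, threshold
    double precision, intent(in) :: alpha, x(n)
    double precision, intent(inout) :: y(n)
    integer, intent(out) :: nthreads_used
    integer :: i

    nthreads_used = 1
    !$omp parallel if(n > threshold) default(none) &
    !$omp shared(n, alpha, x, y, nthreads_used) private(i)
    !$omp single
    !$ nthreads_used = omp_get_num_threads()
    !$omp end single
    !$omp do
    do i = 1, n
      y(i) = y(i) + alpha * x(i)
    end do
    !$omp end do
    !$omp end parallel
  end subroutine axpy
end module region_clauses_demo
```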

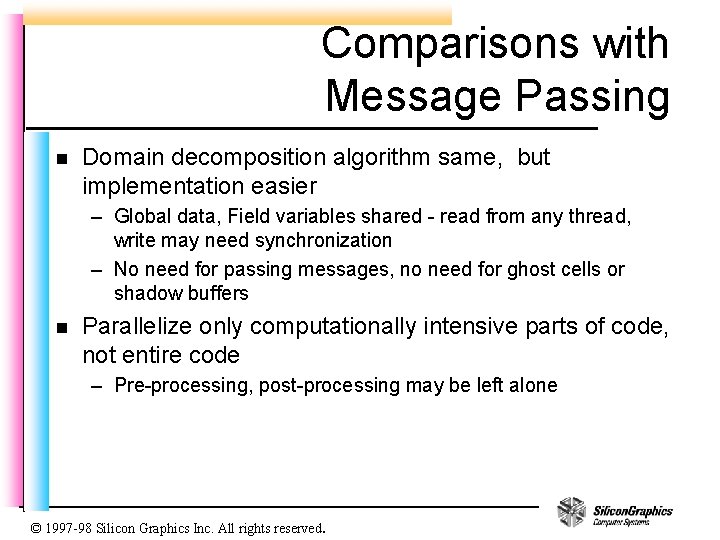

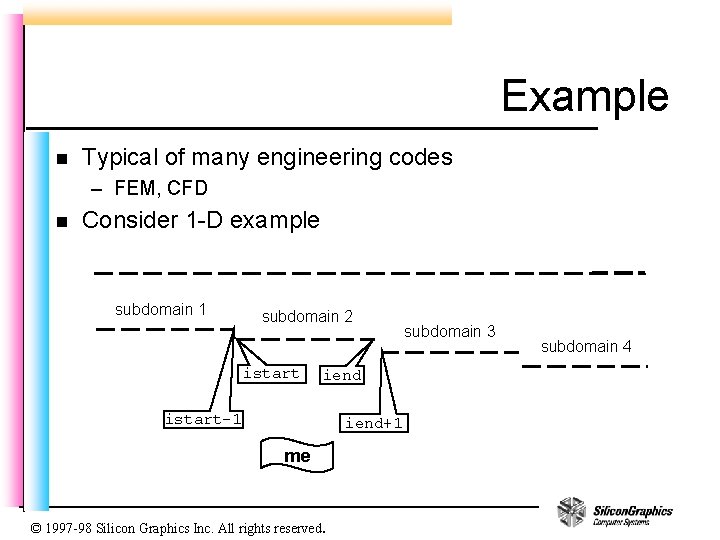

Comparisons with Message Passing

- The domain decomposition algorithm is the same, but the implementation is easier
  - Global data and field variables are shared: any thread can read them; writes may need synchronization
  - No need for passing messages, and no need for ghost cells or shadow buffers
- Parallelize only the computationally intensive parts of the code, not the entire code
  - Pre-processing and post-processing may be left alone

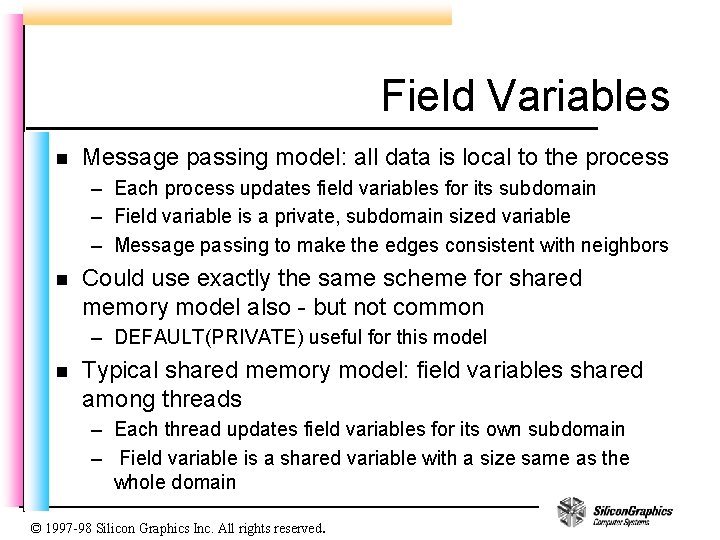

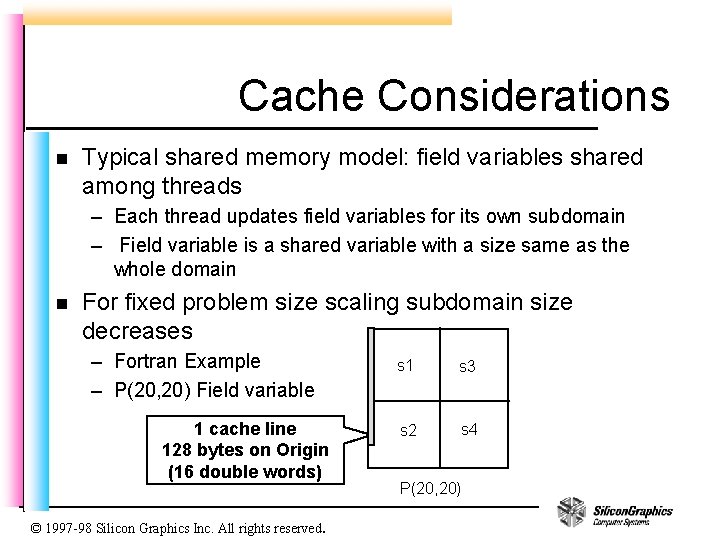

Field Variables

- Message passing model: all data is local to the process
  - Each process updates field variables for its subdomain
  - A field variable is a private, subdomain-sized variable
  - Message passing makes the edges consistent with neighbors
- Could use exactly the same scheme for the shared memory model too, but it is not common
  - DEFAULT(PRIVATE) is useful for this model
- Typical shared memory model: field variables shared among threads
  - Each thread updates field variables for its own subdomain
  - A field variable is a shared variable with the same size as the whole domain

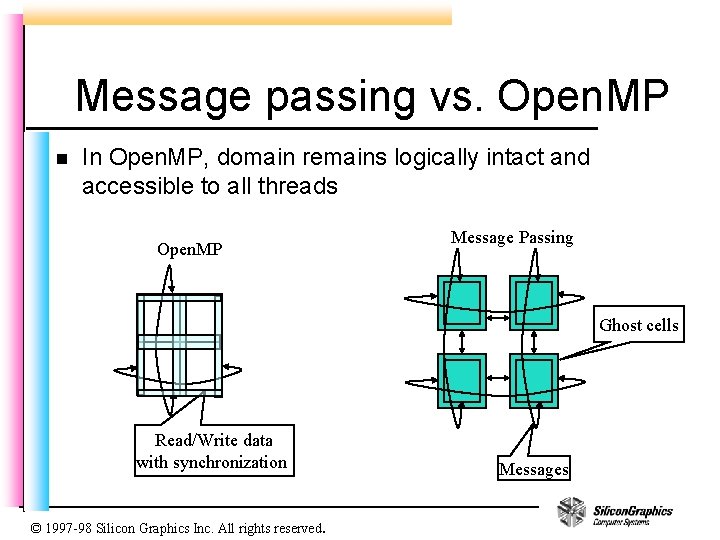

Message passing vs. OpenMP

- In OpenMP, the domain remains logically intact and accessible to all threads

[Figure: OpenMP: threads read and write the shared domain, with synchronization. Message passing: each process holds a subdomain with ghost cells, updated through messages.]

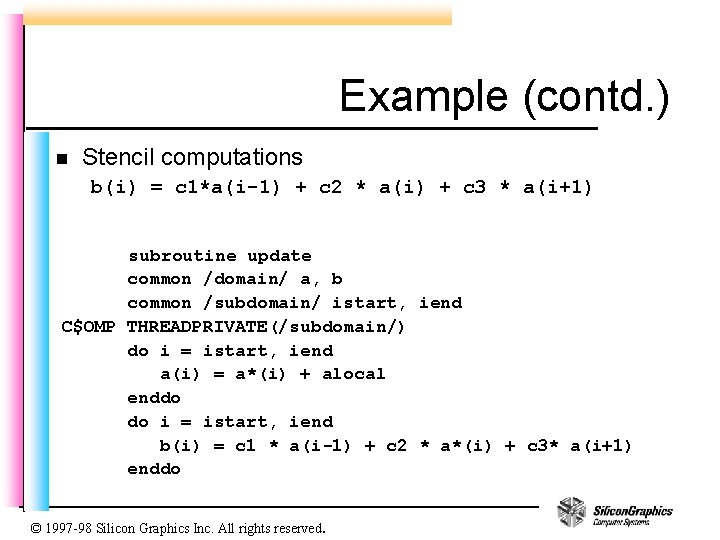

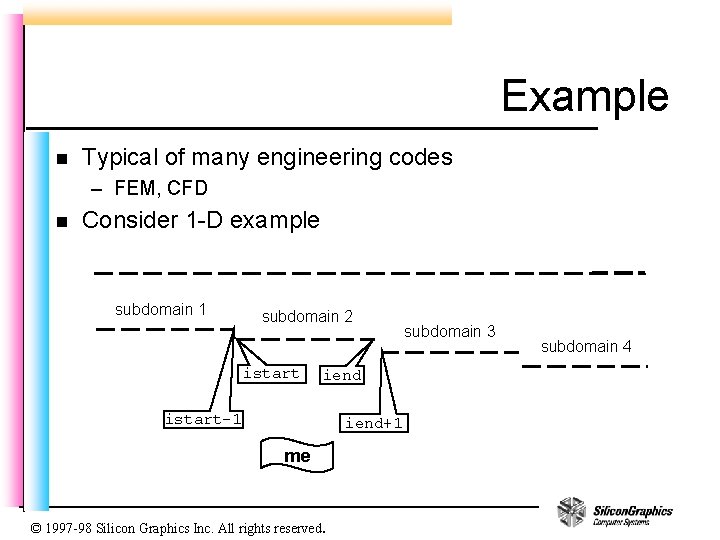

Example

- Typical of many engineering codes: FEM, CFD
- Consider a 1-D example

[Figure: a 1-D domain split into subdomains 1 to 4; the current thread (me) owns istart..iend and also reads istart-1 and iend+1]

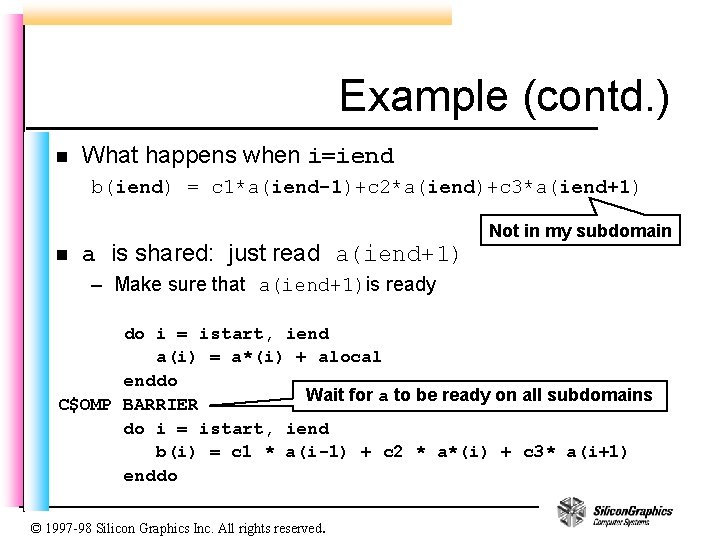

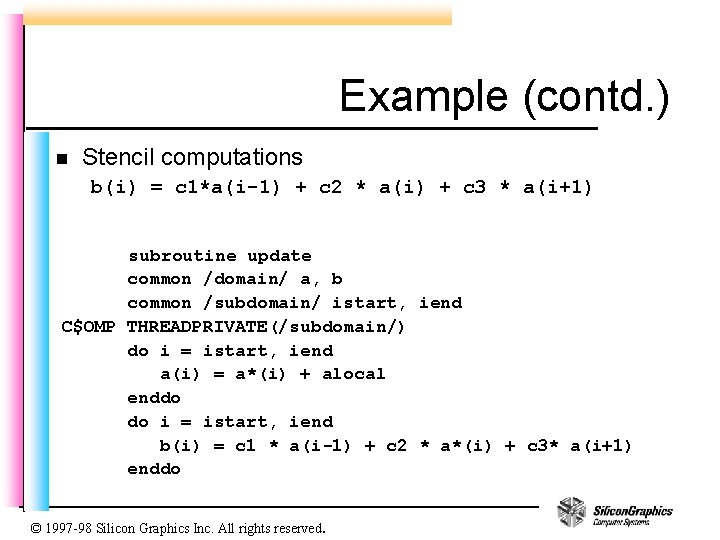

Example (contd.)

- Stencil computations: b(i) = c1*a(i-1) + c2*a(i) + c3*a(i+1)

```fortran
      subroutine update
      common /domain/ a, b
      common /subdomain/ istart, iend
C$OMP THREADPRIVATE(/subdomain/)
      do i = istart, iend
         a(i) = a(i) + alocal
      enddo
      do i = istart, iend
         b(i) = c1*a(i-1) + c2*a(i) + c3*a(i+1)
      enddo
```

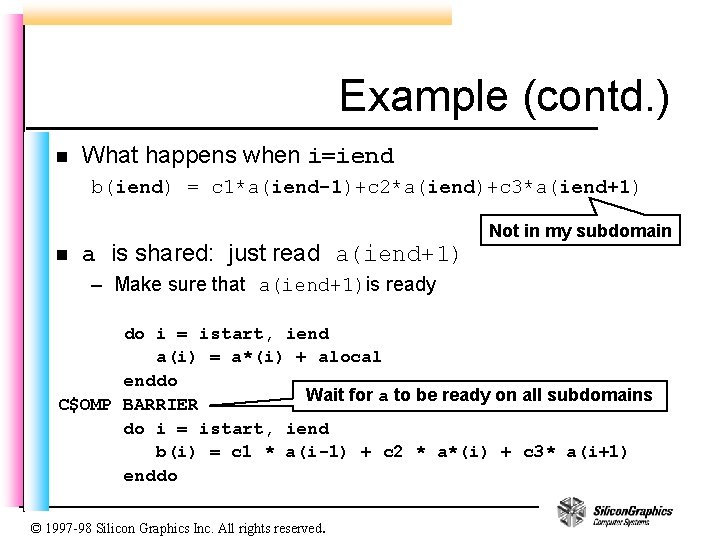

Example (contd.)

- What happens when i = iend?
  - b(iend) = c1*a(iend-1) + c2*a(iend) + c3*a(iend+1)
- a is shared: just read a(iend+1), even though it is not in my subdomain
  - Make sure that a(iend+1) is ready

```fortran
      do i = istart, iend
         a(i) = a(i) + alocal
      enddo
C     wait for a to be ready on all subdomains
C$OMP BARRIER
      do i = istart, iend
         b(i) = c1*a(i-1) + c2*a(i) + c3*a(i+1)
      enddo
```
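Combining the update routine with a manual decomposition gives a small runnable SPMD stencil in free-form Fortran. This is a sketch under assumptions: the routine signature, the fixed boundary values a(0) and a(n+1), and the block decomposition are choices made for the example, not taken from the slides.

```fortran
module stencil_demo
  implicit none
contains
  ! Each thread owns a(istart:iend) and b(istart:iend). The values just
  ! outside its block, a(istart-1) and a(iend+1), are read directly from the
  ! shared array after the BARRIER guarantees the neighbors are done.
  subroutine update(n, a, b, alocal, c1, c2, c3)
    !$ use omp_lib
    integer, intent(in) :: n
    double precision, intent(inout) :: a(0:n+1)   ! a(0), a(n+1): fixed boundary
    double precision, intent(out) :: b(n)
    double precision, intent(in) :: alocal, c1, c2, c3
    integer :: nthreads, iam, ichunk, irem, istart, iend, i

    !$omp parallel default(none) shared(n, a, b, alocal, c1, c2, c3) &
    !$omp private(nthreads, iam, ichunk, irem, istart, iend, i)
    nthreads = 1                       ! serial fallback without OpenMP
    iam = 0
    !$ nthreads = omp_get_num_threads()
    !$ iam = omp_get_thread_num()
    ! Block decomposition of 1..n, remainder spread over the first threads.
    ichunk = n / nthreads
    irem = mod(n, nthreads)
    istart = 1 + iam * ichunk + min(iam, irem)
    iend = istart + ichunk - 1
    if (iam < irem) iend = iend + 1

    do i = istart, iend
      a(i) = a(i) + alocal
    end do
    !$omp barrier                      ! wait for a to be ready on all subdomains
    do i = istart, iend
      b(i) = c1 * a(i-1) + c2 * a(i) + c3 * a(i+1)
    end do
    !$omp end parallel
  end subroutine update
end module stencil_demo
```

Removing the BARRIER would let a thread read a(iend+1) before its neighbor had added alocal to it, giving results that depend on timing.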

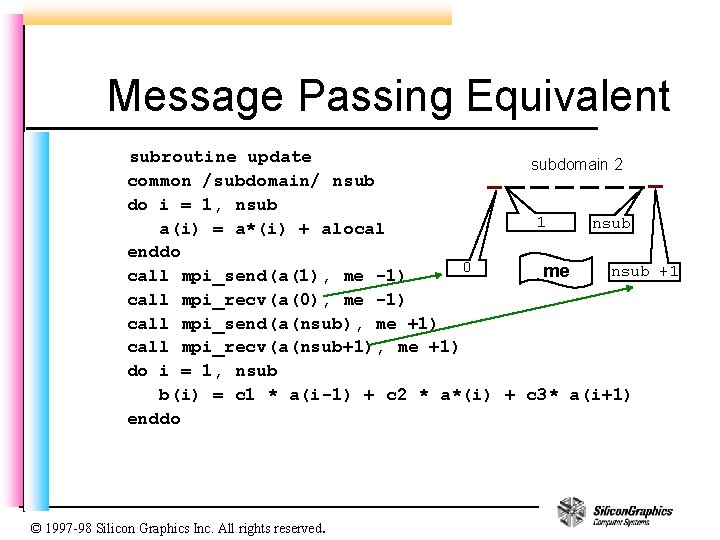

Message Passing Equivalent

[Figure: process me stores its subdomain locally as a(1:nsub), with ghost cells a(0) and a(nsub+1) holding the neighbors' edge values]

```fortran
      subroutine update
      common /subdomain/ nsub
      do i = 1, nsub
         a(i) = a(i) + alocal
      enddo
C     schematic calls: the full MPI argument lists are omitted
      call mpi_send(a(1), me-1)
      call mpi_recv(a(0), me-1)
      call mpi_send(a(nsub), me+1)
      call mpi_recv(a(nsub+1), me+1)
      do i = 1, nsub
         b(i) = c1*a(i-1) + c2*a(i) + c3*a(i+1)
      enddo
```

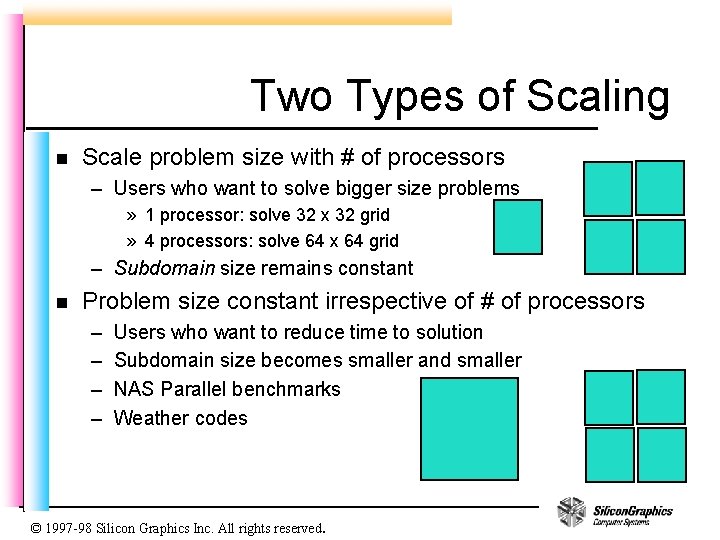

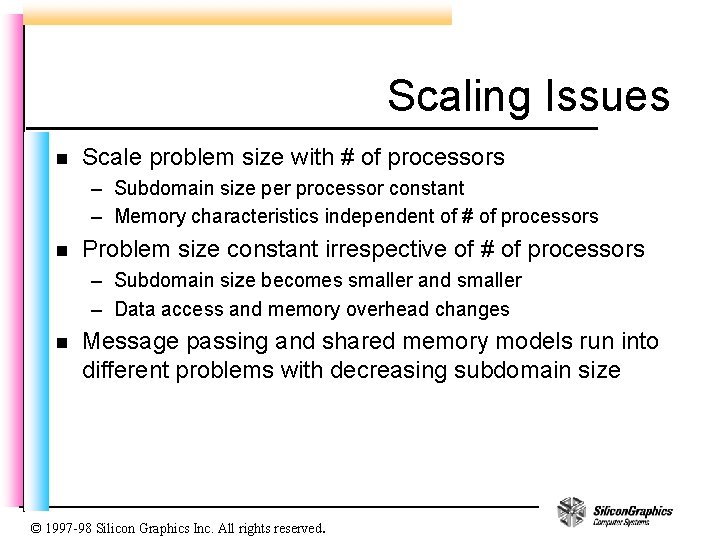

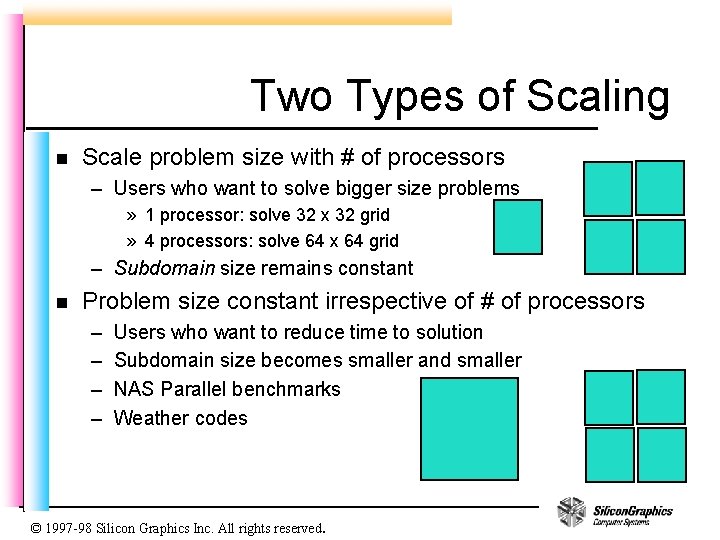

Two Types of Scaling

- Scale problem size with # of processors
  - Users who want to solve bigger problems
    - 1 processor: solve a 32 x 32 grid
    - 4 processors: solve a 64 x 64 grid
  - Subdomain size remains constant
- Problem size constant irrespective of # of processors
  - Users who want to reduce time to solution
  - Subdomain size becomes smaller and smaller
  - Examples: NAS Parallel Benchmarks, weather codes

Scaling Issues

- Scale problem size with # of processors
  - Subdomain size per processor is constant
  - Memory characteristics are independent of # of processors
- Problem size constant irrespective of # of processors
  - Subdomain size becomes smaller and smaller
  - Data access and memory overhead change
- Message passing and shared memory models run into different problems with decreasing subdomain size

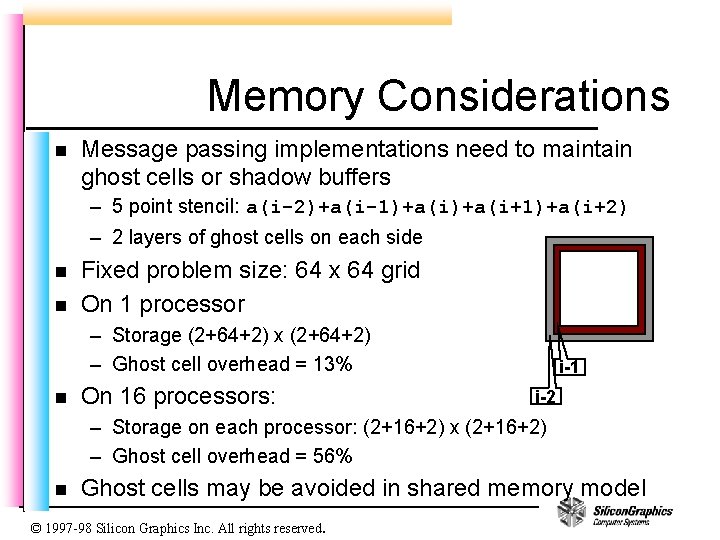

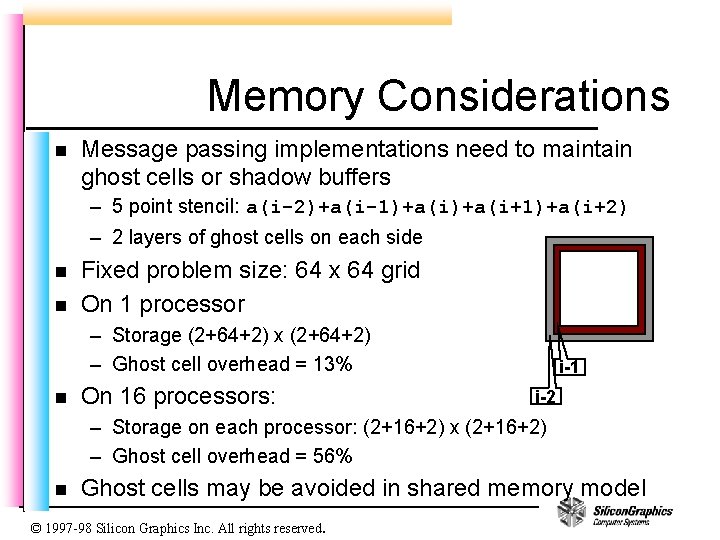

Memory Considerations

- Message passing implementations need to maintain ghost cells or shadow buffers
  - 5-point stencil: a(i-2)+a(i-1)+a(i)+a(i+1)+a(i+2)
  - 2 layers of ghost cells on each side
- Fixed problem size: 64 x 64 grid
- On 1 processor
  - Storage: (2+64+2) x (2+64+2)
  - Ghost cell overhead = 13%
- On 16 processors
  - Storage on each processor: (2+16+2) x (2+16+2)
  - Ghost cell overhead = 56%
- Ghost cells may be avoided in the shared memory model
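The two overhead figures can be checked by comparing ghost cells with interior points. Take an $m \times m$ subdomain with two ghost layers on each side ($m$ is a symbol introduced here):

$$
\text{overhead}(m) = \frac{(m+4)^2 - m^2}{m^2}
$$

$$
\text{overhead}(64) = \frac{68^2 - 64^2}{64^2} = \frac{4624 - 4096}{4096} = \frac{528}{4096} \approx 12.9\%
$$

$$
\text{overhead}(16) = \frac{20^2 - 16^2}{16^2} = \frac{400 - 256}{256} = \frac{144}{256} \approx 56.3\%
$$

On 16 processors each processor holds a 16 x 16 piece of the 64 x 64 grid (a 4 x 4 processor layout). The same two ghost layers therefore cost more than four times as much relative to useful data.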

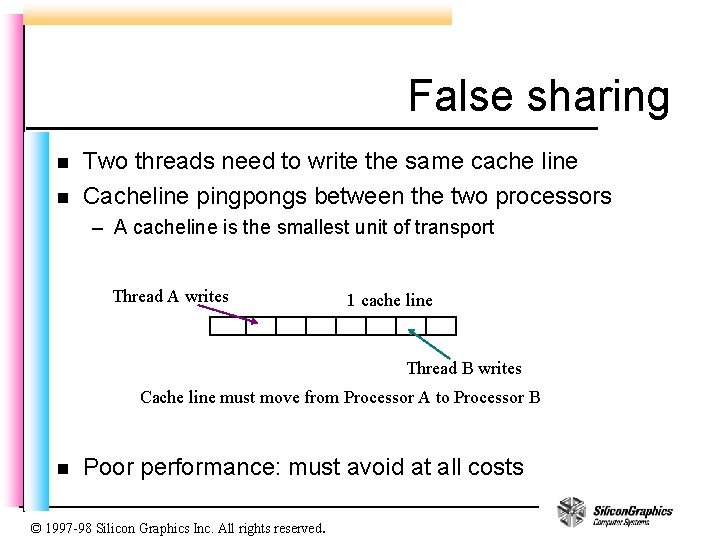

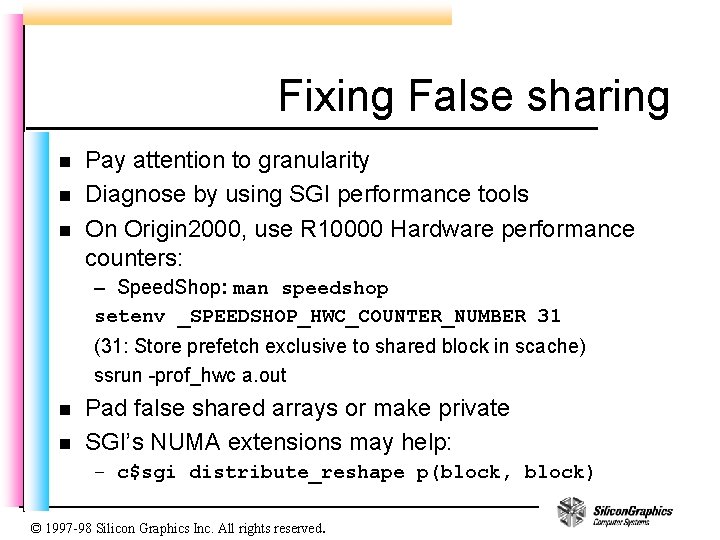

Cache Considerations

- Typical shared memory model: field variables shared among threads
  - Each thread updates field variables for its own subdomain
  - A field variable is a shared variable with the same size as the whole domain
- For fixed problem size scaling, the subdomain size decreases
  - Fortran example: field variable P(20, 20)
  - 1 cache line = 128 bytes on Origin (16 double words)

[Figure: P(20, 20) divided into a 2 x 2 grid of subdomains: s1 and s3 in the top row, s2 and s4 in the bottom row]
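A short calculation shows why P(20, 20) is a problem on Origin. Assume P holds 8-byte (double precision) elements, consistent with the slide's 16 double words per 128-byte line, and use the 2 x 2 decomposition from the figure. Each column of P is then split between two subdomains:

$$
\underbrace{20 \times 8\ \text{B}}_{\text{one column}} = 160\ \text{B},
\qquad
\underbrace{10 \times 8\ \text{B}}_{\text{one subdomain's share of a column}} = 80\ \text{B} < 128\ \text{B}.
$$

Fortran stores P column by column, so memory alternates between 80-byte runs owned by different subdomains. A 128-byte line is longer than any such run. Every cache line of P therefore holds elements written by at least two threads, wherever the array starts, and the whole array (3200 B, 25 lines) is exposed to false sharing.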

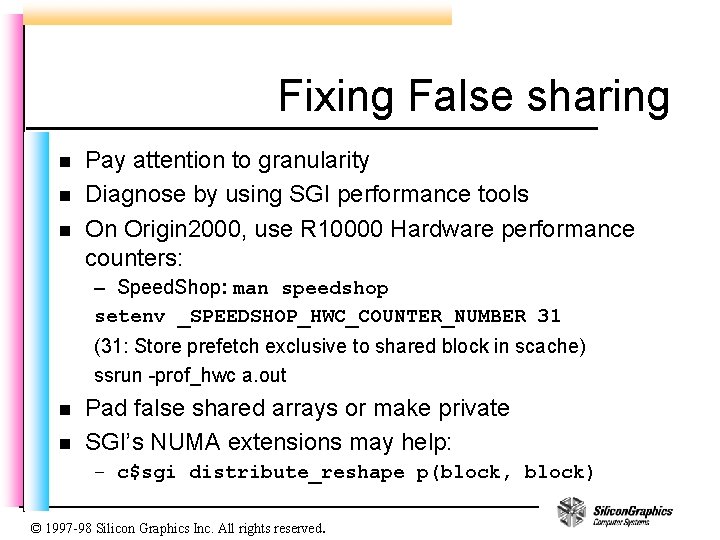

False sharing

- Two threads need to write the same cache line
- The cache line ping-pongs between the two processors
  - A cache line is the smallest unit of transport

[Figure: thread A and thread B write the same cache line, which must move from processor A to processor B]

- Poor performance: must avoid at all costs

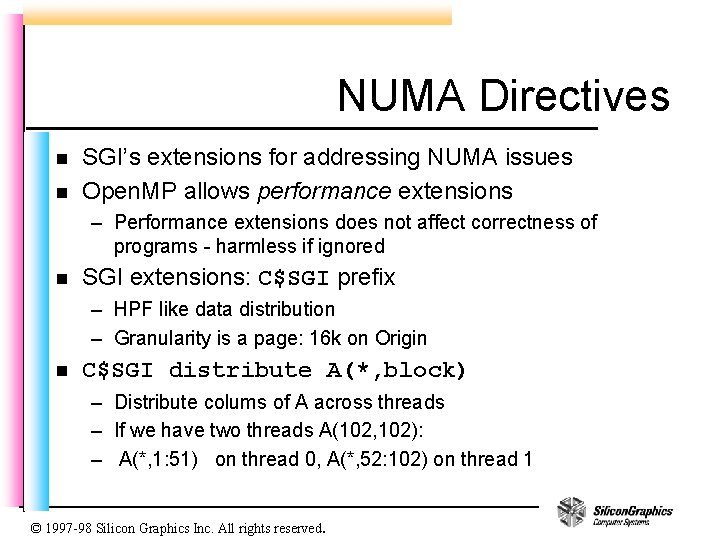

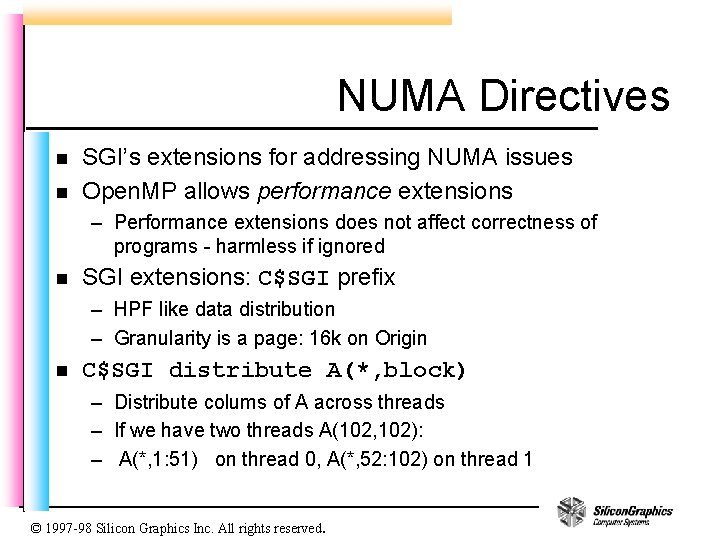

Fixing False sharing

- Pay attention to granularity
- Diagnose by using SGI performance tools
- On Origin 2000, use the R10000 hardware performance counters with SpeedShop (`man speedshop`). Counter 31 counts "store prefetch exclusive to shared block in scache":

```
setenv _SPEEDSHOP_HWC_COUNTER_NUMBER 31
ssrun -prof_hwc a.out
```

- Pad falsely shared arrays or make them private
- SGI's NUMA extensions may help:
  - `c$sgi distribute_reshape p(block, block)`
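This minimal free-form Fortran sketch shows the padding fix. Each thread's partial sum lives in a shared array, and each thread gets its own column of 16 double words, the Origin cache-line size quoted above. Two slots 128 bytes apart can never fall into the same 128-byte line. The names are hypothetical. In practice a REDUCTION clause would be the simpler way to write this particular sum; the sketch only illustrates the padding idea.

```fortran
module padding_demo
  implicit none
  integer, parameter :: line_dwords = 16   ! 128-byte line / 8-byte words
contains
  function padded_sum(n, x) result(total)
    !$ use omp_lib
    integer, intent(in) :: n
    double precision, intent(in) :: x(n)
    double precision :: total
    ! partial(1, t) is thread t's accumulator; partial(2:16, t) is padding,
    ! so consecutive accumulators are one full cache line apart.
    double precision, allocatable :: partial(:, :)
    integer :: nthreads, iam, i

    nthreads = 1
    !$ nthreads = omp_get_max_threads()
    allocate(partial(line_dwords, 0:nthreads-1))
    partial = 0d0
    !$omp parallel num_threads(nthreads) private(iam) shared(partial, x, n)
    iam = 0
    !$ iam = omp_get_thread_num()
    !$omp do
    do i = 1, n
      partial(1, iam) = partial(1, iam) + x(i)
    end do
    !$omp end do
    !$omp end parallel
    total = sum(partial(1, :))
  end function padded_sum
end module padding_demo
```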

NUMA Directives

- SGI's extensions for addressing NUMA issues
- OpenMP allows performance extensions
  - Performance extensions do not affect the correctness of programs: harmless if ignored
- SGI extensions: C$SGI prefix
  - HPF-like data distribution
  - Granularity is a page: 16 KB on Origin
- `C$SGI distribute A(*, block)`
  - Distributes the columns of A across threads
  - With two threads and A(102, 102): A(*, 1:51) on thread 0, A(*, 52:102) on thread 1

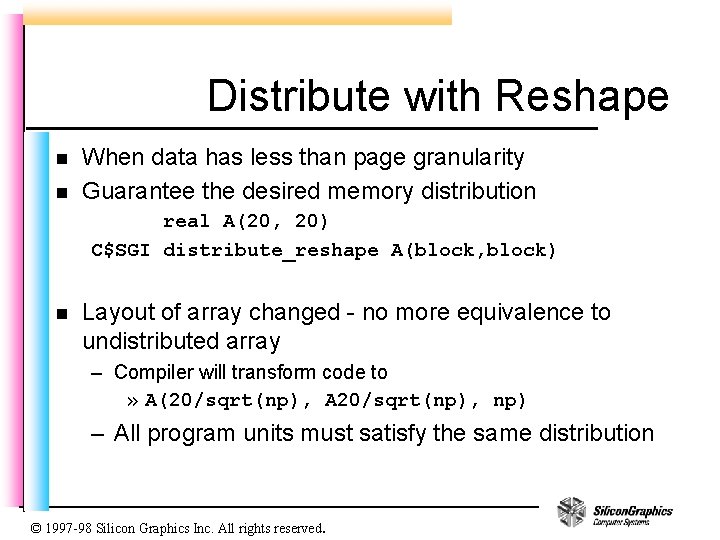

Distribute with Reshape

- When data has less than page granularity
- Guarantees the desired memory distribution

```fortran
      real A(20, 20)
C$SGI distribute_reshape A(block, block)
```

- The layout of the array is changed: no more equivalence to the undistributed array
  - The compiler will transform the code to A(20/sqrt(np), 20/sqrt(np), np)
  - All program units must satisfy the same distribution

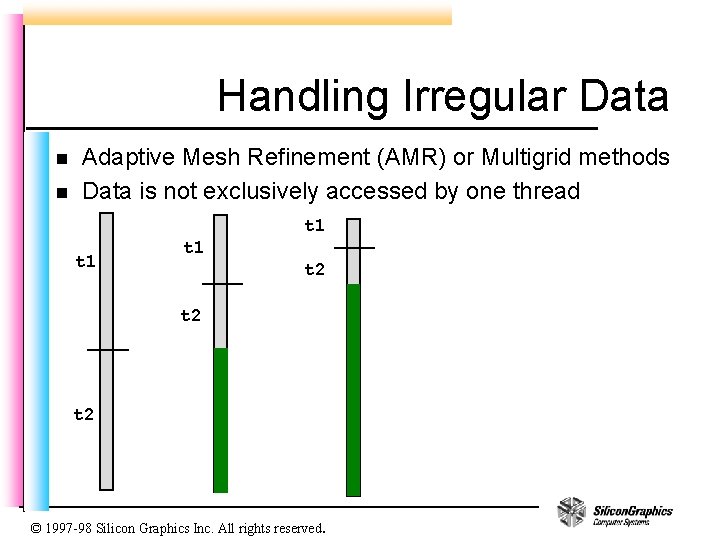

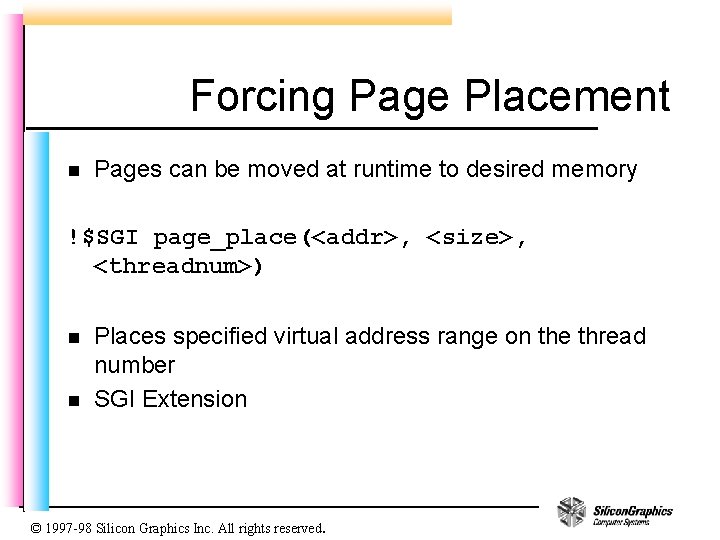

Handling Irregular Data

- Adaptive Mesh Refinement (AMR) or multigrid methods
- Data is not exclusively accessed by one thread

[Figure: parts of the mesh accessed by threads t1 and t2]

Forcing Page Placement

- Pages can be moved at run time to the desired memory
  - `!$SGI page_place(<addr>, <size>, <threadnum>)`
- Places the specified virtual address range on the given thread number
- SGI extension

Reducing Barriers

- A common issue when converting from the message passing model
  - One process sends data, another receives data
    - Usually the received data is filled into the ghost cells around the subdomain
    - The field variable is "ready" for use after the receive: the ghost cells are consistent with neighboring subdomains
  - The receive is an implicit synchronization point
- This synchronization is very often done using a barrier
  - But BARRIER synchronizes all threads in the region
  - BARRIERs have high overhead at large processor counts
  - Synchronizing all threads makes load imbalance worse
- Reduce BARRIERs to the minimum

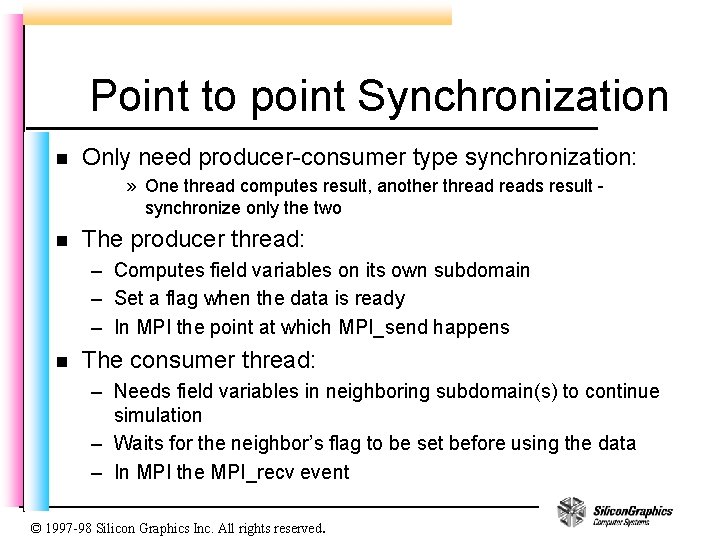

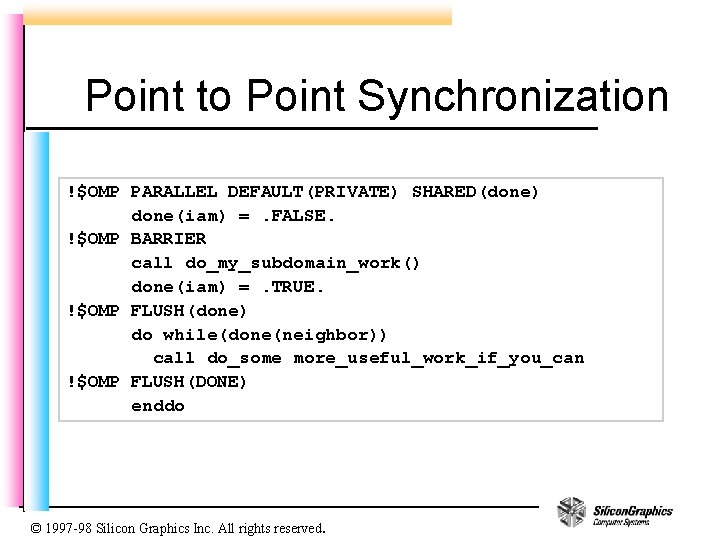

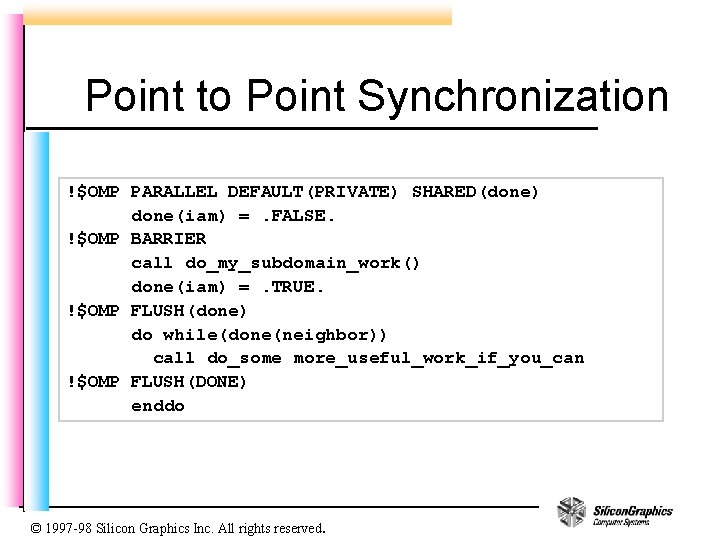

Point to point Synchronization

- Only producer-consumer type synchronization is needed:
  - One thread computes a result, another thread uses it: synchronize only those two
- The producer thread:
  - Computes field variables on its own subdomain
  - Sets a flag when the data is ready
  - In MPI, the point at which MPI_send happens
- The consumer thread:
  - Needs field variables in neighboring subdomain(s) to continue the simulation
  - Waits for the neighbor's flag to be set before using the data
  - In MPI, the MPI_recv event

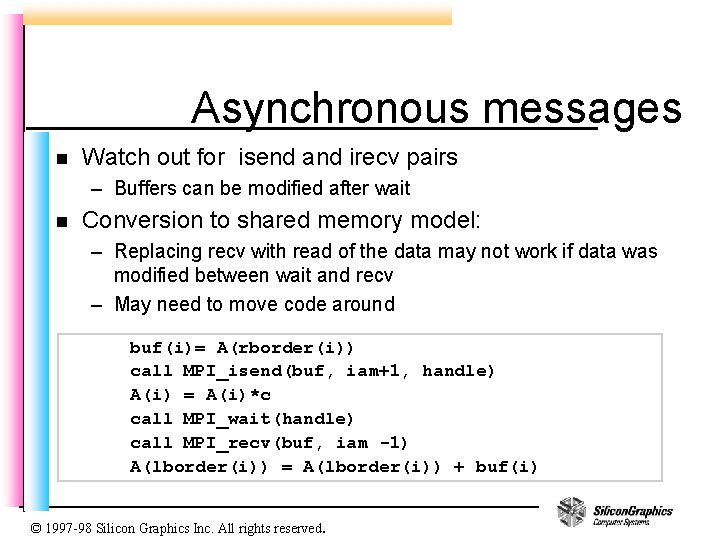

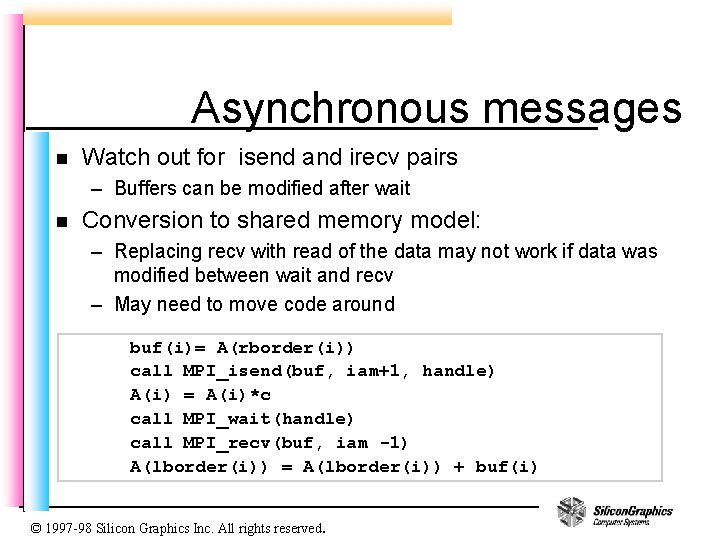

Asynchronous messages

- Watch out for isend and irecv pairs
  - Buffers can be modified after the wait
- Conversion to the shared memory model:
  - Replacing recv with a read of the data may not work if the data was modified between wait and recv
  - May need to move code around

```fortran
C     schematic MPI calls: the full argument lists are omitted
      buf(i) = A(rborder(i))
      call MPI_isend(buf, iam+1, handle)
      A(i) = A(i)*c
      call MPI_wait(handle)
      call MPI_recv(buf, iam-1)
      A(lborder(i)) = A(lborder(i)) + buf(i)
```

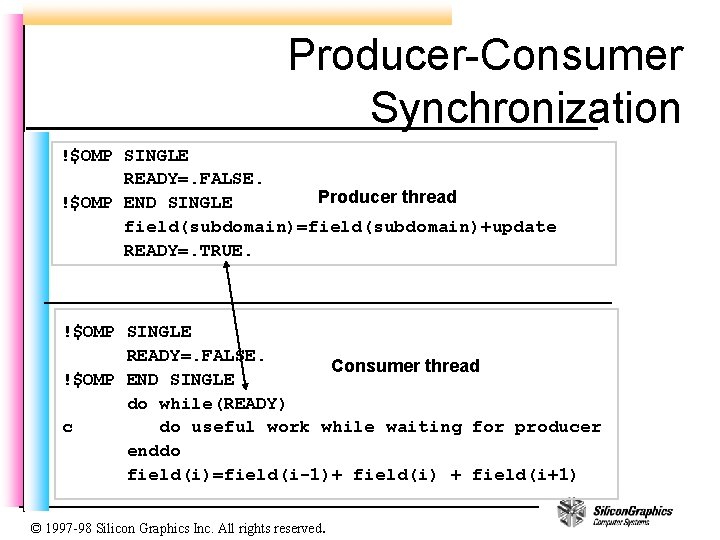

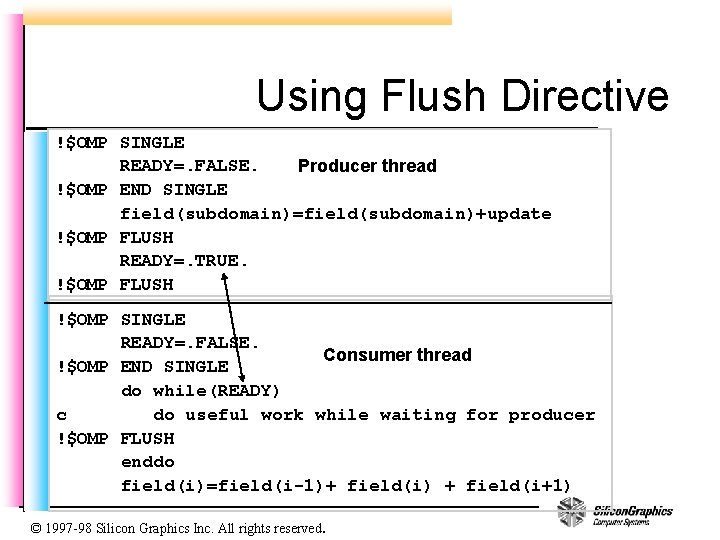

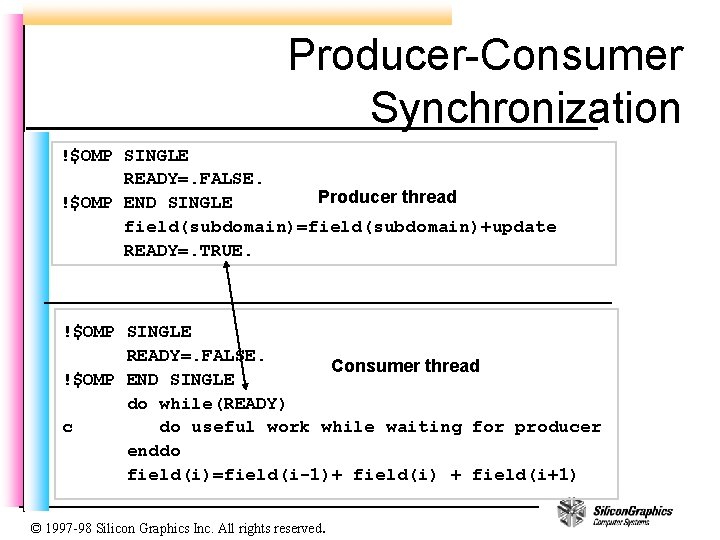

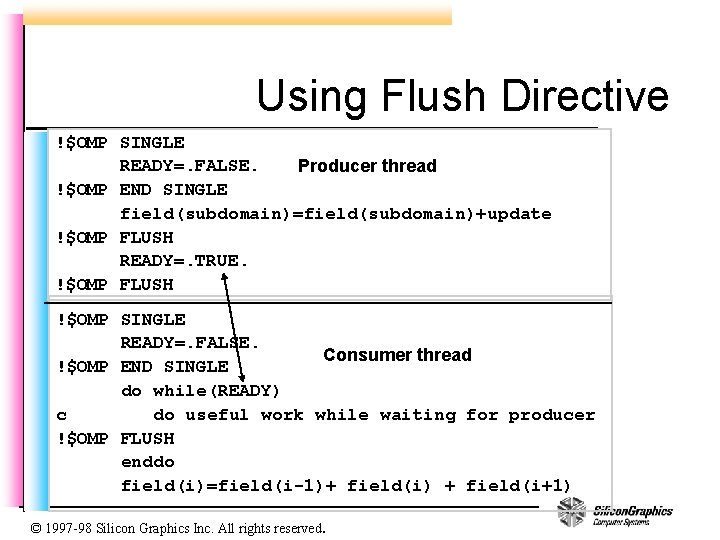

Producer-Consumer Synchronization

Producer thread:

```fortran
!$OMP SINGLE
      READY = .FALSE.
!$OMP END SINGLE
      field(subdomain) = field(subdomain) + update
      READY = .TRUE.
```

Consumer thread:

```fortran
!$OMP SINGLE
      READY = .FALSE.
!$OMP END SINGLE
      do while (.NOT. READY)
c        do useful work while waiting for producer
      enddo
      field(i) = field(i-1) + field(i) + field(i+1)
```

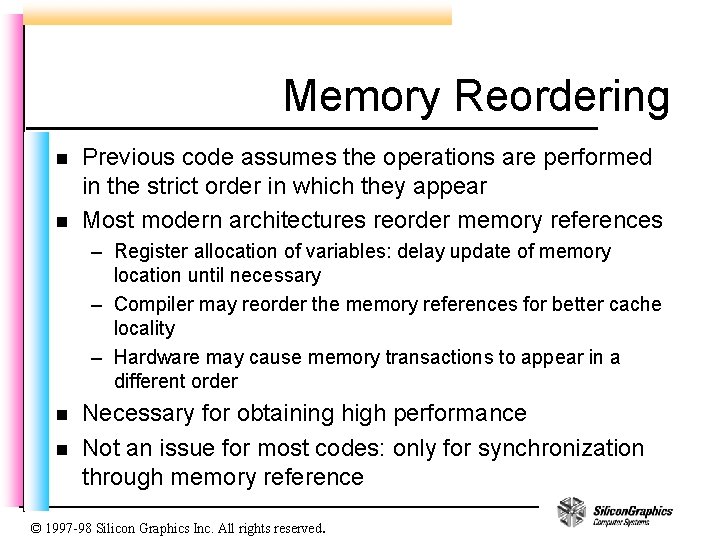

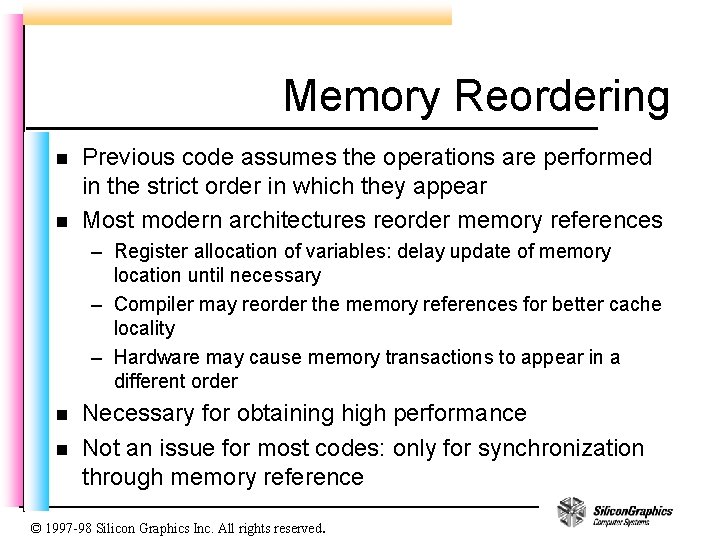

Memory Reordering

- The previous code assumes that the operations are performed in the strict order in which they appear
- Most modern architectures reorder memory references
  - Register allocation of variables: the update of a memory location is delayed until necessary
  - The compiler may reorder memory references for better cache locality
  - Hardware may cause memory transactions to appear in a different order
- Necessary for obtaining high performance
- Not an issue for most codes: only for synchronization through memory references

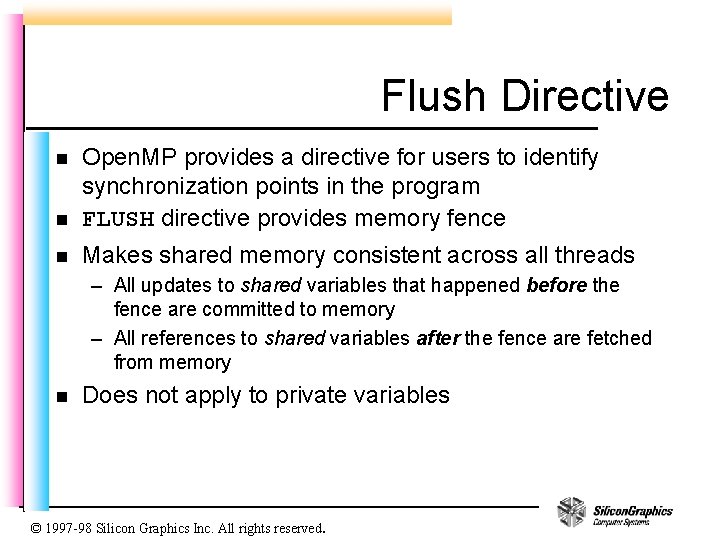

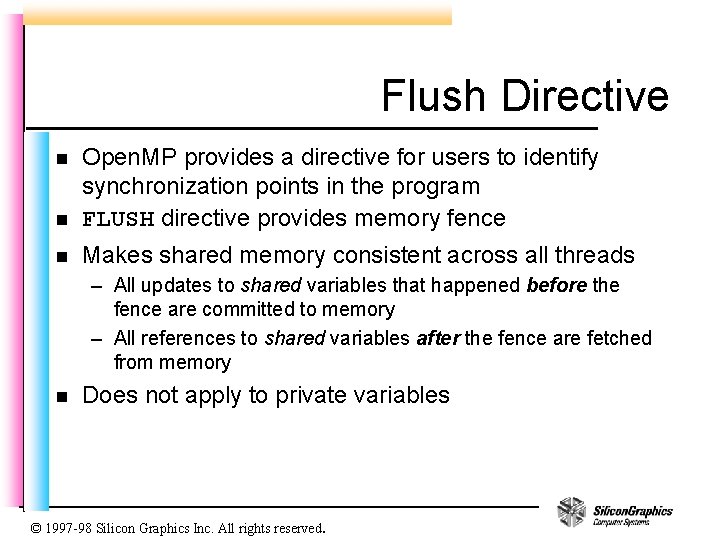

Flush Directive

- OpenMP provides a directive for users to identify synchronization points in the program
- The FLUSH directive provides a memory fence
- Makes shared memory consistent across all threads
  - All updates to shared variables that happened before the fence are committed to memory
  - All references to shared variables after the fence are fetched from memory
- Does not apply to private variables

Using Flush Directive

Producer thread:

```fortran
!$OMP SINGLE
      READY = .FALSE.
!$OMP END SINGLE
      field(subdomain) = field(subdomain) + update
!$OMP FLUSH
      READY = .TRUE.
!$OMP FLUSH
```

Consumer thread:

```fortran
!$OMP SINGLE
      READY = .FALSE.
!$OMP END SINGLE
      do while (.NOT. READY)
c        do useful work while waiting for producer
!$OMP FLUSH
      enddo
!$OMP FLUSH
      field(i) = field(i-1) + field(i) + field(i+1)
```

Point to Point Synchronization

```fortran
!$OMP PARALLEL DEFAULT(PRIVATE) SHARED(done)
      done(iam) = .FALSE.
!$OMP BARRIER
      call do_my_subdomain_work()
      done(iam) = .TRUE.
!$OMP FLUSH(done)
      do while (.NOT. done(neighbor))
         call do_some_more_useful_work_if_you_can
!$OMP FLUSH(done)
      enddo
```
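Below is a runnable free-form Fortran sketch of this pattern, in which each thread waits only for its right-hand neighbor instead of for the whole team. It assumes OpenMP 3.1 or later for ATOMIC READ and ATOMIC WRITE, so that the flag itself is read and written atomically. The flags are integers rather than LOGICALs, and all names are hypothetical.

```fortran
module p2p_demo
  implicit none
contains
  ! Producer: each thread fills its own block of field, flushes, and sets
  ! done(iam). Consumer: it spins only on its neighbor's flag, then reads
  ! the first value of the neighbor's block. No BARRIER between the phases.
  subroutine neighbor_edges(nloc, maxt, nused, edge)
    !$ use omp_lib
    integer, intent(in) :: nloc, maxt        ! points per thread, max threads
    integer, intent(out) :: nused            ! team size actually used
    double precision, intent(out) :: edge(0:maxt-1)
    double precision, allocatable :: field(:)
    integer, allocatable :: done(:)
    integer :: nthreads, iam, neighbor, i, flag

    allocate(field(0:maxt*nloc-1), done(0:maxt-1))
    done = 0
    edge = -1d0
    nused = 1
    !$omp parallel num_threads(maxt) default(none) &
    !$omp shared(nloc, field, done, edge, nused) &
    !$omp private(nthreads, iam, neighbor, i, flag)
    nthreads = 1
    iam = 0
    !$ nthreads = omp_get_num_threads()
    !$ iam = omp_get_thread_num()
    if (iam == 0) nused = nthreads
    neighbor = mod(iam + 1, nthreads)

    ! Producer: compute my block, then publish it by raising my flag.
    do i = iam*nloc, (iam + 1)*nloc - 1
      field(i) = dble(i)
    end do
    !$omp flush
    !$omp atomic write
    done(iam) = 1
    !$omp flush

    ! Consumer: wait for the neighbor's flag only.
    do
      !$omp atomic read
      flag = done(neighbor)
      if (flag == 1) exit
      ! work that does not need the neighbor's data could go here
      !$omp flush
    end do
    !$omp flush
    edge(iam) = field(neighbor*nloc)
    !$omp end parallel
  end subroutine neighbor_edges
end module p2p_demo
```

Each thread raises its own flag before it starts waiting, so the ring of waits cannot deadlock. On one thread the neighbor is the thread itself and the loop exits immediately.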

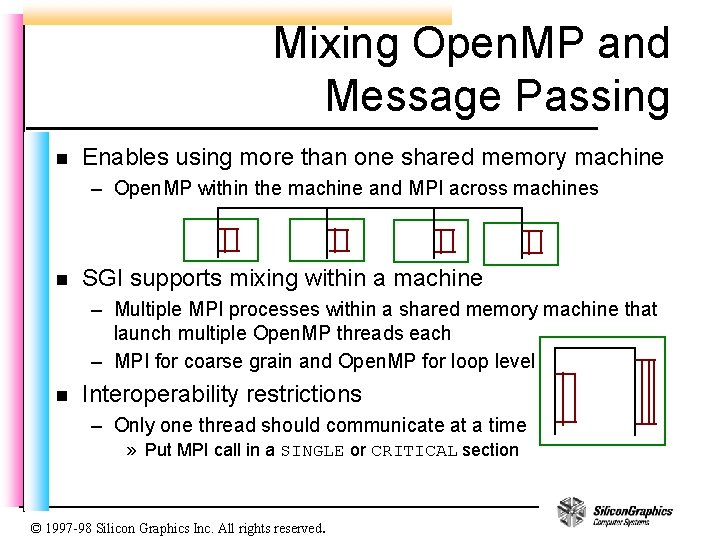

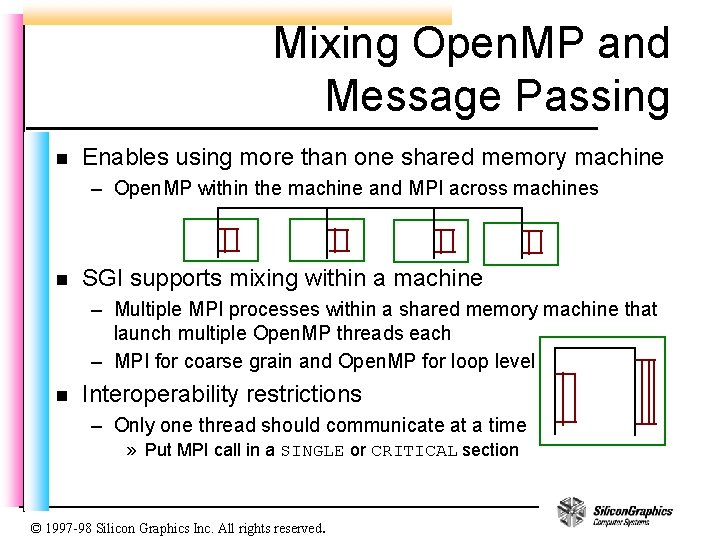

Mixing OpenMP and Message Passing

- Enables using more than one shared memory machine
  - OpenMP within the machine and MPI across machines
- SGI supports mixing within a machine
  - Multiple MPI processes within a shared memory machine, each launching multiple OpenMP threads
  - MPI for coarse grain and OpenMP for loop level
- Interoperability restrictions
  - Only one thread should communicate at a time
    - Put MPI calls in a SINGLE or CRITICAL section

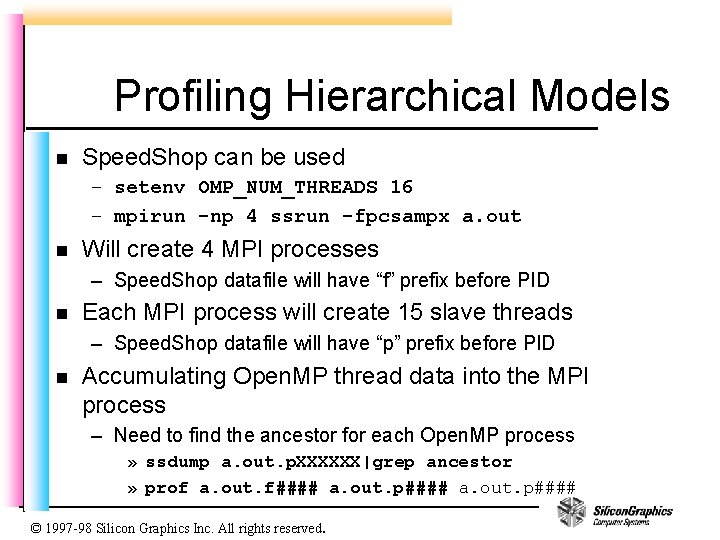

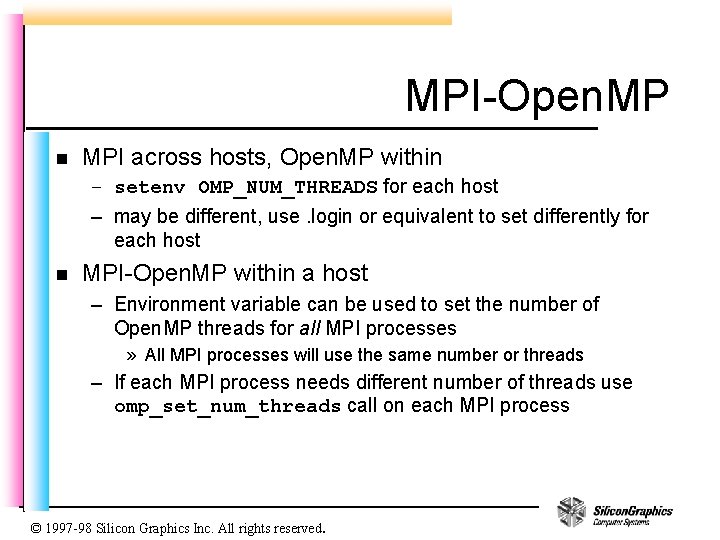

MPI-OpenMP

- MPI across hosts, OpenMP within
  - setenv OMP_NUM_THREADS for each host
  - The value may differ between hosts: use .login or equivalent to set it differently for each host
- MPI-OpenMP within a host
  - An environment variable can be used to set the number of OpenMP threads for all MPI processes
    - All MPI processes will use the same number of threads
  - If each MPI process needs a different number of threads, use the omp_set_num_threads call in each MPI process

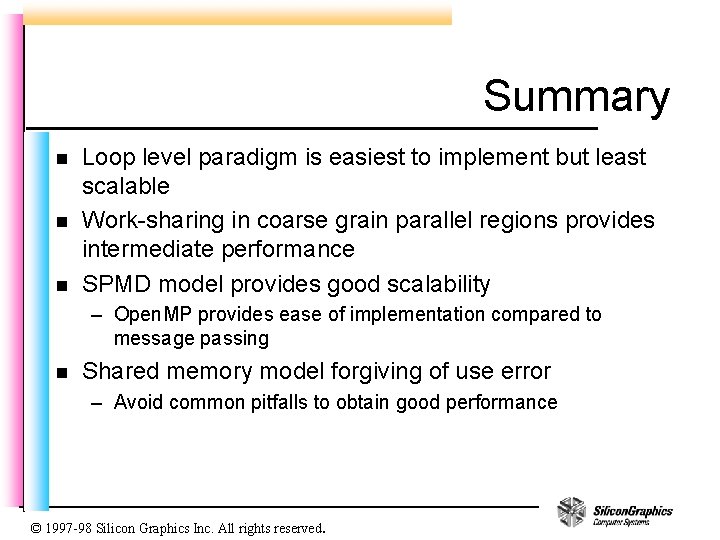

Profiling Hierarchical Models

- SpeedShop can be used

```
setenv OMP_NUM_THREADS 16
mpirun -np 4 ssrun -fpcsampx a.out
```

- Will create 4 MPI processes
  - The SpeedShop data file will have an "f" prefix before the PID
- Each MPI process will create 15 slave threads
  - The SpeedShop data file will have a "p" prefix before the PID
- Accumulating OpenMP thread data into the MPI process
  - Need to find the ancestor for each OpenMP process

```
ssdump a.out.pXXXXXX | grep ancestor
prof a.out.f#### a.out.p####
```

Summary

- The loop level paradigm is the easiest to implement but the least scalable
- Work-sharing in coarse grain parallel regions provides intermediate performance
- The SPMD model provides good scalability
  - OpenMP provides ease of implementation compared to message passing
- The shared memory model is forgiving of user error
  - Avoid common pitfalls to obtain good performance