A Reconfigurable Processor Architecture and Software Development Environment

A Reconfigurable Processor Architecture and Software Development Environment for Embedded Systems

Andrea Cappelli, F. Campi, R. Guerrieri, A. Lodi, M. Toma, A. La Rosa, L. Lavagno, C. Passerone, R. Canegallo

Nice, France, April 22, 2003

23 October 2002: Development of a design methodology for an on-chip system based on a reconfigurable architecture

Outline

- Motivations
- XiRisc: a VLIW Processor
- PiCoGA: A Pipelined Configurable Gate Array
- Software Development Environment
- Results & Measurements
- Conclusions

Motivations

- Increased on-chip transistor density
- Increased integration costs

[Figure: Moore's law, millions of transistors per chip and technology (nm), 1997 to 2009]

Quest for performance and flexibility

- Increased algorithmic complexity
- Strong limitations in power supply

[Figure: algorithm complexity and battery capacity, 1997 to 2009]

Severe power consumption constraints

Embedded systems: algorithm analysis

- 90% of computational complexity is concentrated in small kernels covering small parts of the overall code
- Many algorithms show relevant instruction-level parallelism
  - Performance is improved by multiple parallel data paths
- Operand granularity is typically different from 32 bits
  - A traditional ALU is power-inefficient

Significant improvements can be obtained by extending embedded processors with application-specific function units.

Reconfigurable computing achieves maximum flexibility.

Existing Architectures

A standard processor is coupled with embedded programmable logic, where application-specific functions are dynamically remapped depending on the algorithm being performed.

1. Coprocessor model
2. Function unit model

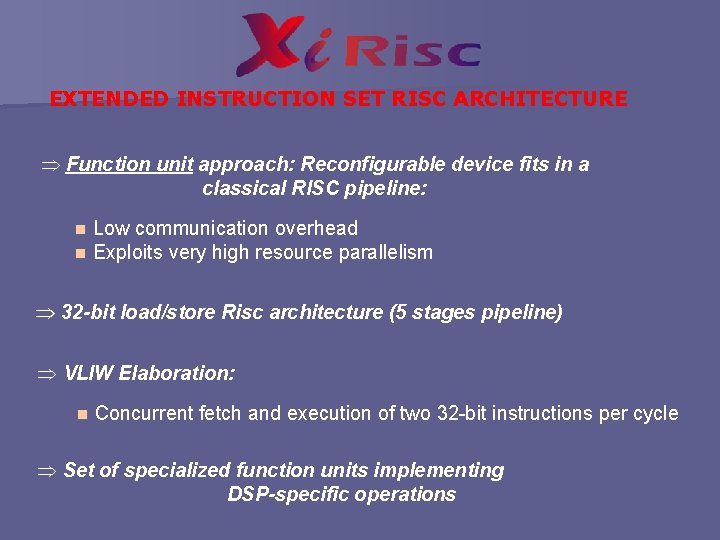

Extended Instruction Set RISC Architecture

- Function unit approach: the reconfigurable device fits in a classical RISC pipeline
  - Low communication overhead
  - Exploits very high resource parallelism
- 32-bit load/store RISC architecture (5-stage pipeline)
- VLIW execution
  - Concurrent fetch and execution of two 32-bit instructions per cycle
- Set of specialized function units implementing DSP-specific operations

Architecture

- Duplicated instruction decode logic (2 symmetrical data channels)
- Duplicated commonly used function units (ALU and shifter)
- All other function units are shared (DSP operations, memory handler)

A tightly coupled pipelined configurable gate array

Dynamic Instruction Set Extension

- A specific operation transfers data from a configuration cache to the PiCoGA: pGA-load (fields: region specification, configuration specification)
- 32-bit and 64-bit operations launch execution inside the PiCoGA (data exchange through the register file): 32-bit pGA-op and 64-bit pGA-op

[Figure: pGA-op instruction formats, with fields labelled Source 1 to Source 4, Dest 1, Dest 2 and operation specification]
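As a rough illustration of the "data exchange through register file" idea, the sketch below models a decoded pGA-op as a set of register indices plus an operation specification. It is only a sketch under stated assumptions: the slide gives no field widths or bit positions, so `pga_op_t`, `pga_fn`, `pga_execute`, `example_shift_add` and the 32-entry register file are all hypothetical names and sizes, not the XiRisc encoding.

```c
#include <assert.h>
#include <stdint.h>

#define NUM_REGS 32  /* assumed register-file size, not stated on the slide */

/* Hypothetical decoded pGA-op: register indices only, because the slide
 * does not give field widths or bit positions. */
typedef struct {
    uint8_t  src[4];   /* Source 1..4 (a shorter format would use fewer) */
    uint8_t  n_src;    /* number of source registers actually used */
    uint8_t  dst[2];   /* Dest 1..2 */
    uint8_t  n_dst;    /* number of destination registers actually used */
    uint16_t op_spec;  /* operation specification */
} pga_op_t;

/* An emulated array operation: up to 4 inputs, up to 2 outputs. */
typedef void (*pga_fn)(const uint32_t in[4], uint32_t out[2]);

/* Read the sources from the register file, run the operation,
 * and write the results back to the destination registers. */
void pga_execute(const pga_op_t *op, pga_fn fn, uint32_t regs[NUM_REGS])
{
    uint32_t in[4]  = {0, 0, 0, 0};
    uint32_t out[2] = {0, 0};

    assert(op->n_src <= 4 && op->n_dst <= 2);
    for (int i = 0; i < op->n_src; i++) {
        assert(op->src[i] < NUM_REGS);
        in[i] = regs[op->src[i]];
    }
    fn(in, out);
    for (int i = 0; i < op->n_dst; i++) {
        assert(op->dst[i] < NUM_REGS);
        regs[op->dst[i]] = out[i];
    }
}

/* Example operation used elsewhere in the slides: c = (a << 2) + b. */
void example_shift_add(const uint32_t in[4], uint32_t out[2])
{
    out[0] = (in[0] << 2) + in[1];
    out[1] = 0;
}
```

With registers 1 and 2 holding `a` and `b`, calling `pga_execute` with `example_shift_add` and destination register 3 leaves `(a << 2) + b` in register 3.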

PiCoGA: a Pipelined Configurable Gate Array

Embedded function unit for dynamic extension of the instruction set

- Two-dimensional array of LUT-based reconfigurable logic cells
- Each row implements a possible stage of a customized pipeline, independent and concurrent with the processor
- Up to 4 x 32-bit input data and up to 2 x 32-bit output data from/to the register file

DFG-based execution

- Row execution is activated by an embedded control unit
- An execution enable signal is provided for each pipeline stage
- PiCoGA operation latency depends on the operation performed
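One plausible way to see why latency depends on the operation is to place each data-flow graph (DFG) node in the earliest row that follows all of its predecessors, so that the number of rows used becomes the pipeline depth. The sketch below does this with a standard as-soon-as-possible levelization. It assumes one cycle per row and nodes listed in topological order; `dfg_node_t` and `dfg_assign_rows` are hypothetical names, and this is not the authors' mapping tool.

```c
#include <assert.h>

#define MAX_PREDS 4  /* assumed upper bound on predecessors per node */

/* One DFG node; preds[] holds indices of earlier nodes it depends on. */
typedef struct {
    int n_preds;
    int preds[MAX_PREDS];
} dfg_node_t;

/* Assign each node to the earliest row after all of its predecessors.
 * Nodes must be in topological order. Returns the number of rows used,
 * which equals the latency in cycles if each row takes one cycle. */
int dfg_assign_rows(const dfg_node_t *nodes, int n, int row_of[])
{
    int rows = 0;

    assert(n >= 0);
    for (int i = 0; i < n; i++) {
        int row = 0;
        assert(nodes[i].n_preds >= 0 && nodes[i].n_preds <= MAX_PREDS);
        for (int p = 0; p < nodes[i].n_preds; p++) {
            int pred = nodes[i].preds[p];
            assert(pred >= 0 && pred < i);  /* enforces topological order */
            if (row_of[pred] + 1 > row)
                row = row_of[pred] + 1;
        }
        row_of[i] = row;
        if (row + 1 > rows)
            rows = row + 1;
    }
    return rows;
}
```

For `c = (a << 2) + b`, the shift has no predecessor and lands in row 0, the add depends on the shift and lands in row 1, so this model reports a two-row pipeline.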

PiCoGA Configuration

[Figure: PiCoGA with configuration Layers 1 to 4, connected to the Configuration Cache]

Goal: to reduce cache misses due to PiCoGA configuration

- Multi-context programming (4 cache layers/planes inside the array)
- Dedicated configuration cache with a high-bandwidth bus to the PiCoGA (192 bits)
- Partial run-time reconfiguration (a region is configured while another one is active)
- Configuration is completely concurrent with processor execution
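The multi-context idea can be pictured with a small bookkeeping model: four layers each hold one configuration, a load may target any layer except the one currently executing, and activating a resident configuration is a hit. This is a deliberately simplified sketch. It works at whole-layer granularity rather than regions, and `pga_ctx_t`, `pga_init`, `pga_load` and `pga_activate` are hypothetical names rather than the real pGA-load semantics.

```c
#include <assert.h>

#define PGA_LAYERS 4     /* from the slide: 4 layers/planes inside the array */
#define PGA_NONE   (-1)  /* marks an empty layer or no active layer */

typedef struct {
    int config[PGA_LAYERS];  /* configuration id held by each layer */
    int active;              /* layer currently executing, or PGA_NONE */
} pga_ctx_t;

void pga_init(pga_ctx_t *c)
{
    for (int i = 0; i < PGA_LAYERS; i++)
        c->config[i] = PGA_NONE;
    c->active = PGA_NONE;
}

/* Model of a configuration load: write config_id into a layer.
 * Returns 0 on success, -1 if the target layer is the active one
 * (assumption: only inactive layers may be reconfigured). */
int pga_load(pga_ctx_t *c, int layer, int config_id)
{
    assert(layer >= 0 && layer < PGA_LAYERS);
    assert(config_id >= 0);
    if (layer == c->active)
        return -1;
    c->config[layer] = config_id;
    return 0;
}

/* Switch execution to a resident configuration.
 * Returns 1 on a hit, 0 on a miss (configuration not loaded). */
int pga_activate(pga_ctx_t *c, int config_id)
{
    assert(config_id >= 0);
    for (int i = 0; i < PGA_LAYERS; i++) {
        if (c->config[i] == config_id) {
            c->active = i;
            return 1;
        }
    }
    return 0;
}
```

In this model a second configuration can be loaded into layer 1 while layer 0 is active, which mirrors the slide's point that configuration proceeds concurrently with execution.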

The Software Development Environment

[Flow diagram stages: initial C code, profiling, computation kernel extraction, PiCoGA mapping, pGA-op latency information, assembler-level scheduler, executable code]

Software Simulation

Goals: check the correctness of the algorithm and evaluate performance.

In the source code, a pGA-op is described using a pragma directive:

    #pragma pGA shift_add 0x12 5 c a b
        c = (a << 2) + b
    #pragma end

    /*******************/
    /* Shift_add mapped on PiCoGA */
    /*******************/
    #if defined(PiCoGA)
    ...
    asm("pGA-op 0x12 ...")
    ...
    /*******************/
    /* Emulation function _shift_add */
    /*******************/
    #else
    void _shift_add() {
        ...
        c = (a << 2) + b
        ...
    }
    #endif
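The conditional-compilation pattern above can be tried on a host machine with a sketch like the following. Several things here are assumptions: the function takes its operands as parameters instead of the globals implied by the slide, it is named `shift_add_emul` because a leading underscore at file scope is reserved in standard C, and the hardware branch is left as an `#error` because the slide elides the operands of the `asm` statement.

```c
#include <assert.h>
#include <stdint.h>

#if defined(PiCoGA)
/* Hardware build: the slide elides the pGA-op operands, so the inline
 * assembly is intentionally not reproduced in this sketch. */
#error "pGA-op inline assembly is target specific and not shown here"
#else
/* Emulation build: plain C with the same result as the mapped
 * operation, c = (a << 2) + b, on 32-bit unsigned operands. */
uint32_t shift_add_emul(uint32_t a, uint32_t b)
{
    return (a << 2) + b;
}

/* Small self-check for the emulation function. */
void shift_add_selfcheck(void)
{
    assert(shift_add_emul(1, 0) == 4);
    assert(shift_add_emul(0, 7) == 7);
}
#endif
```

Compiling without `PiCoGA` defined selects the emulation branch, which is what a software-only simulation run would use.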

Software Simulation

Two special instructions are defined to support emulation:

    ...
    topga
    ...
    jal _shift_add
    fmpga
    ...

- topga saves the current state and passes arguments to the emulation function. The clock cycle count is halted while the function runs.
- fmpga copies the emulation function result(s) and restores registers; the cycle count is incremented by the latency value of the pGA-op.

Overall performance is evaluated by counting execution cycles.
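The cycle accounting described for topga and fmpga can be sketched as a counter that is frozen while the emulation function runs and then advanced by the pGA-op latency. The names `sim_t`, `sim_tick`, `sim_topga` and `sim_fmpga` are hypothetical, and saving state, passing arguments and restoring registers are not modeled; this shows only the counting rule.

```c
#include <assert.h>

/* Minimal model of the simulator's cycle counter. */
typedef struct {
    unsigned long cycles;  /* cycles charged to the program so far */
    int counting;          /* 0 while an emulation function is running */
} sim_t;

/* Charge n cycles of ordinary execution, unless counting is halted. */
void sim_tick(sim_t *s, unsigned long n)
{
    assert(s != 0);
    if (s->counting)
        s->cycles += n;
}

/* topga: enter emulation, so the cycle count is halted. */
void sim_topga(sim_t *s)
{
    assert(s != 0);
    s->counting = 0;
}

/* fmpga: leave emulation, resume counting, and charge the latency
 * that the pGA-op would have had on the array. */
void sim_fmpga(sim_t *s, unsigned long pga_latency)
{
    assert(s != 0);
    s->counting = 1;
    s->cycles += pga_latency;
}
```

With this rule, the host-side cost of the emulation function never appears in the total; only the pGA-op latency does, which is what lets the cycle count stand in for the performance of the reconfigurable hardware.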

Results and Measurements

Speed-ups for several signal processing cores:

| Core | Speed-up |
| --- | --- |
| DES | 13.5x |
| CRC | 4.3x |
| Median Filter | 7.7x |
| Motion Estimation | 12.4x |
| Motion Prediction | 4.5x |
| Turbo Codes | 12x |

Strong reduction of accesses to instruction memory

[Figure: normalized energy histogram]

75% of energy consumption for a VLIW architecture is due to accesses to instruction and data memory.

Conclusions

- XiRisc: VLIW RISC architecture enhanced by a run-time reconfigurable function unit
- PiCoGA: pipelined, run-time configurable, row-oriented array of LUT-based cells
- Specific software development toolchain
- Speed-ups range from 4.3x to 13.5x
- Up to 93% energy consumption reduction

- Slides: 16