A Programmable Memory Hierarchy for Prefetching Linked Data Structures

Chia-Lin Yang (Department of Computer Science and Information Engineering, National Taiwan University)
Alvin R. Lebeck (Department of Computer Science, Duke University)

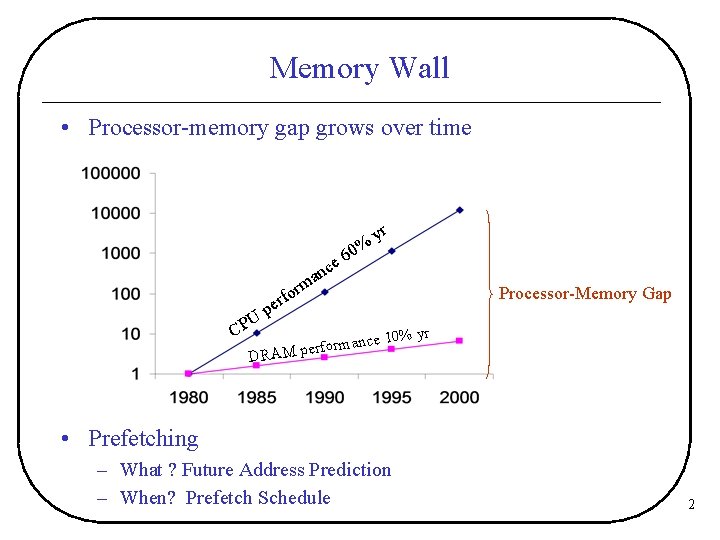

Memory Wall
• Processor-memory gap grows over time
[Figure: CPU performance improves about 60%/yr while DRAM performance improves about 10%/yr; the difference is the processor-memory gap]
• Prefetching
  – What? Future address prediction
  – When? Prefetch schedule

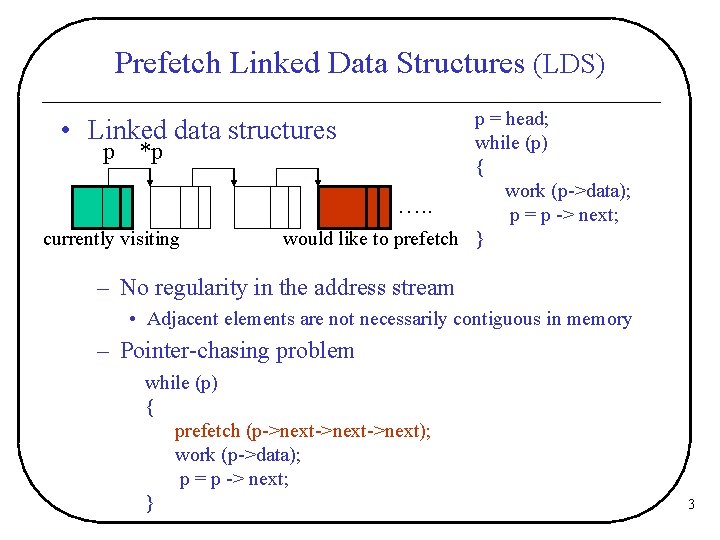

Prefetching Linked Data Structures (LDS)

    p = head;
    while (p) {
        work(p->data);
        ...
        p = p->next;
    }

[Figure: p points to the node currently being visited; the nodes after it are the ones we would like to prefetch]
• Linked data structures
  – No regularity in the address stream
    • Adjacent elements are not necessarily contiguous in memory
  – Pointer-chasing problem: reaching a node k hops ahead requires loading every node in between

    while (p) {
        if (p->next)
            prefetch(p->next->next);
        work(p->data);
        p = p->next;
    }
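A minimal runnable sketch of the software-prefetch loop, assuming GCC or Clang (for `__builtin_prefetch`) and a hypothetical `struct node` with `data` and `next` fields; `work` is a stand-in for the application's per-node computation. The prefetch address `p->next->next` can only be formed after `p->next` has been loaded, which is the pointer-chasing problem in miniature.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical list node, following the field names on the slide. */
struct node {
    int data;
    struct node *next;
};

/* Stand-in for the per-node work done by the application. */
static long work(int data) { return (long)data; }

/* Walk the list and prefetch the node two hops ahead. The address
 * p->next->next is known only after p->next has been loaded, so the
 * prefetch itself waits on a dependent load (pointer chasing). */
long traverse_with_prefetch(struct node *p)
{
    long sum = 0;
    while (p) {
        if (p->next)                            /* dereferencing NULL is undefined */
            __builtin_prefetch(p->next->next);  /* a NULL target does not fault */
        sum += work(p->data);
        p = p->next;
    }
    return sum;
}
```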

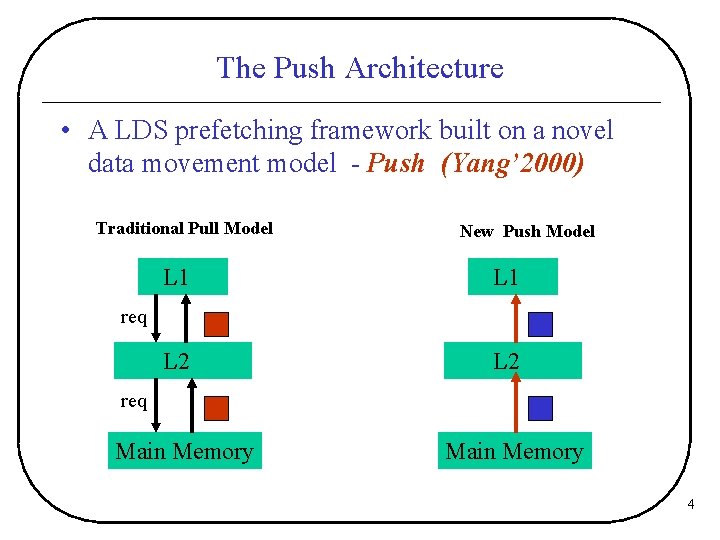

The Push Architecture
• An LDS prefetching framework built on a novel data movement model: Push (Yang 2000)
[Figure: traditional pull model vs. new push model, showing requests and data moving among L1, L2, and main memory]

Outline
• Background & Motivation
• What is the Push Architecture?
• Design of the Push Architecture
• Variations of the Push Architecture
• Experimental Results
• Related Research
• Conclusion

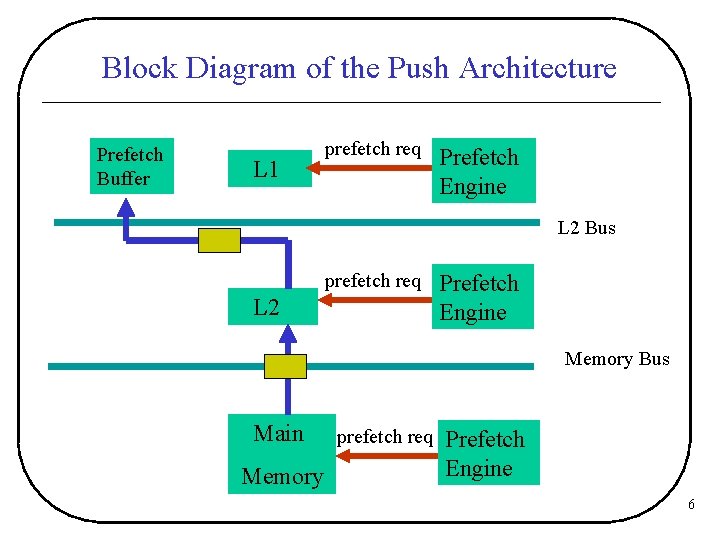

Block Diagram of the Push Architecture
[Figure: a prefetch engine (PFE) at each level of the hierarchy: next to L1 (with a prefetch buffer), next to L2 across the L2 bus, and next to main memory across the memory bus; each PFE issues prefetch requests at its level]

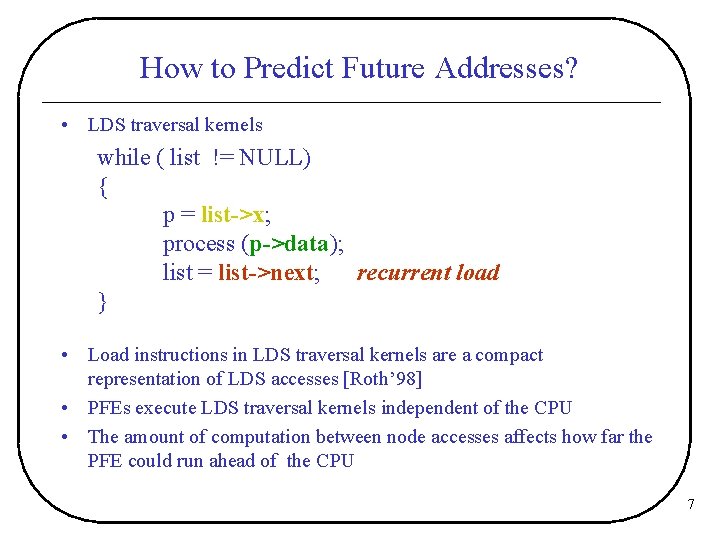

How to Predict Future Addresses?
• LDS traversal kernels

    while (list != NULL) {
        p = list->x;
        process(p->data);
        list = list->next;    /* recurrent load */
    }

• Load instructions in LDS traversal kernels are a compact representation of LDS accesses (Roth 1998)
• PFEs execute LDS traversal kernels independently of the CPU
• The amount of computation between node accesses affects how far the PFE can run ahead of the CPU
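To make the "compact representation" point concrete, here is a sketch of what remains of the kernel when a PFE executes it on its own: only the address-generating loads. The types `struct list` and `struct item` and the helper `pfe_run_kernel` are hypothetical. Because `process()` is dropped, each iteration costs only these loads, which is why heavy per-node computation on the CPU gives the PFE room to run ahead.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical types matching the kernel on the slide. */
struct item { int data; };
struct list { struct item *x; struct list *next; };

/* Sketch of a PFE running the traversal kernel: it keeps the loads
 * (list->x and the recurrent load list->next) but drops process(),
 * recording each address the CPU is about to touch. Returns the count. */
size_t pfe_run_kernel(struct list *list, const void **out, size_t max)
{
    size_t n = 0;
    while (list != NULL && n + 2 <= max) {
        out[n++] = list;        /* node holding x and next */
        out[n++] = list->x;     /* block that process(p->data) will read */
        list = list->next;      /* recurrent load: address of the next node */
    }
    return n;
}
```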

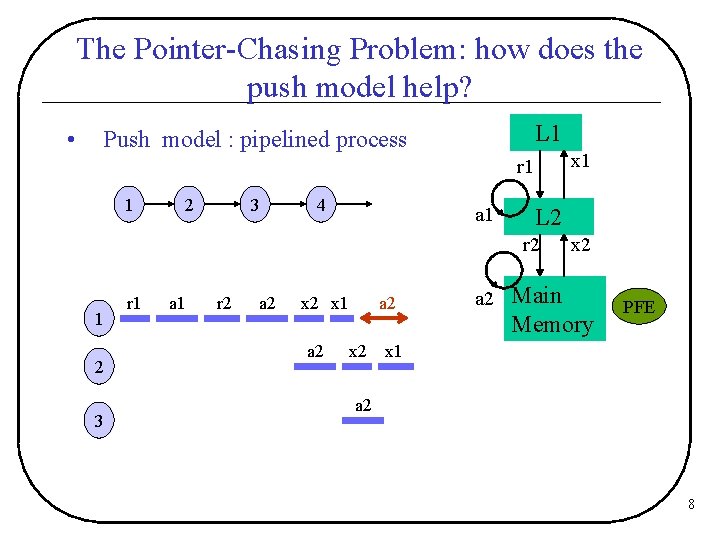

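The Pointer-Chasing Problem: How Does the Push Model Help?
• Push model: pipelined process
  – In the pull model, the next node's address is known only after the current node returns to the L1-level engine; in the push model, the PFE next to memory dereferences the next pointer as soon as the node is read, so accesses to successive nodes overlap
[Figure: timing diagram across L1, L2, main memory, and the memory-side PFE; labels r1, r2, a1, a2, x1, x2 mark the steps for successive nodes]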
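A back-of-the-envelope model of why pipelining helps, assuming the 84-cycle round-trip memory latency and 48-cycle DRAM access time from the experimental setup, zero PFE compute time, and no bus contention. `pull_cycles` and `push_cycles` are illustrative names, not the paper's methodology.

```c
#include <assert.h>

/* Latencies taken from the experimental-setup slide. */
enum { ROUND_TRIP = 84, DRAM_ACCESS = 48 };

/* Pull: each node's address is known only when the previous node has
 * made the full round trip, so n dependent misses serialize. */
long pull_cycles(long n)
{
    return n > 0 ? n * ROUND_TRIP : 0;
}

/* Push: the memory-side PFE issues the next access as soon as a node
 * leaves DRAM, so only the DRAM accesses serialize; the last node still
 * pays the rest of the trip (ROUND_TRIP - DRAM_ACCESS) to reach L1. */
long push_cycles(long n)
{
    return n > 0 ? n * DRAM_ACCESS + (ROUND_TRIP - DRAM_ACCESS) : 0;
}
```

For a four-node chain this gives 336 cycles under pull versus 228 under push; the gap grows with chain length because each extra node costs 84 cycles under pull but 48 under push.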

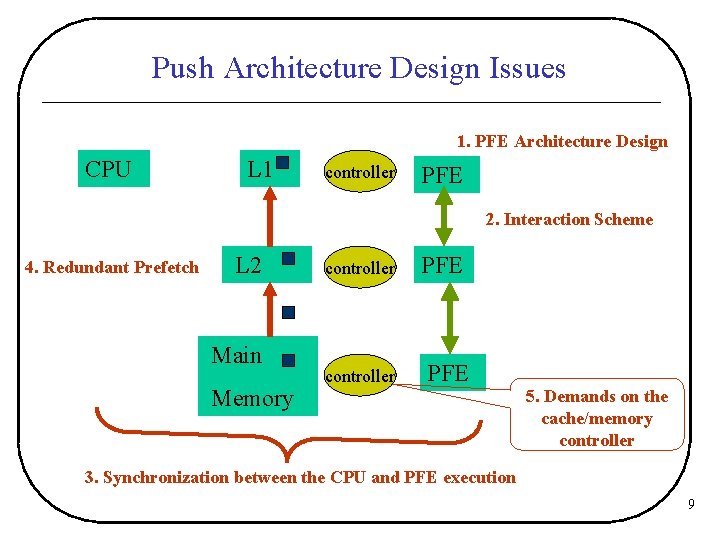

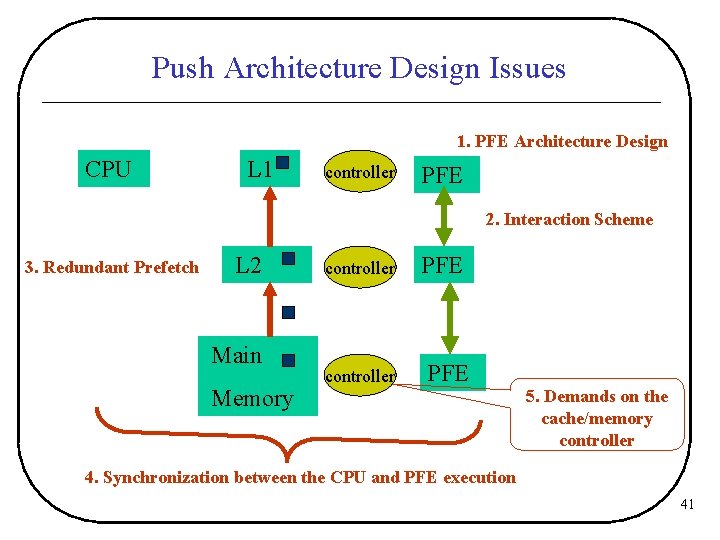

Push Architecture Design Issues
1. PFE architecture design
2. Interaction scheme
3. Synchronization between the CPU and PFE execution
4. Redundant prefetches
5. Demands on the cache/memory controller
[Figure: CPU, L1, L2, and main memory, with a PFE attached to each cache/memory controller]

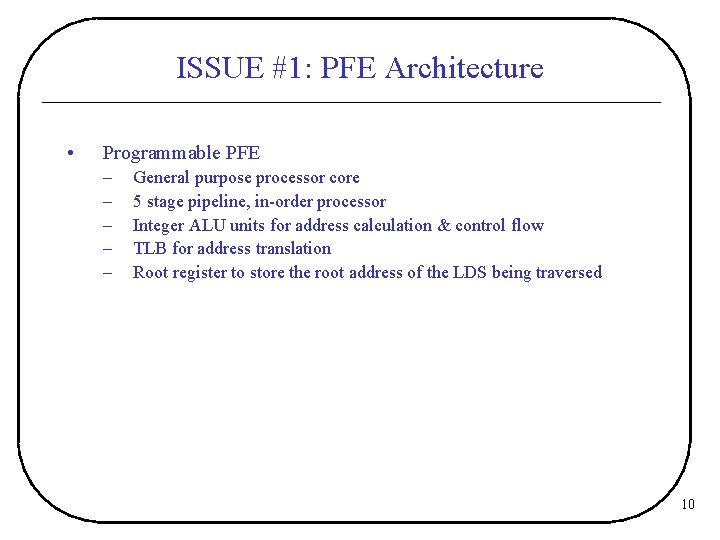

Issue #1: PFE Architecture
• Programmable PFE
  – General-purpose processor core
  – 5-stage pipeline, in-order processor
  – Integer ALU units for address calculation & control flow
  – TLB for address translation
  – Root register to store the root address of the LDS being traversed

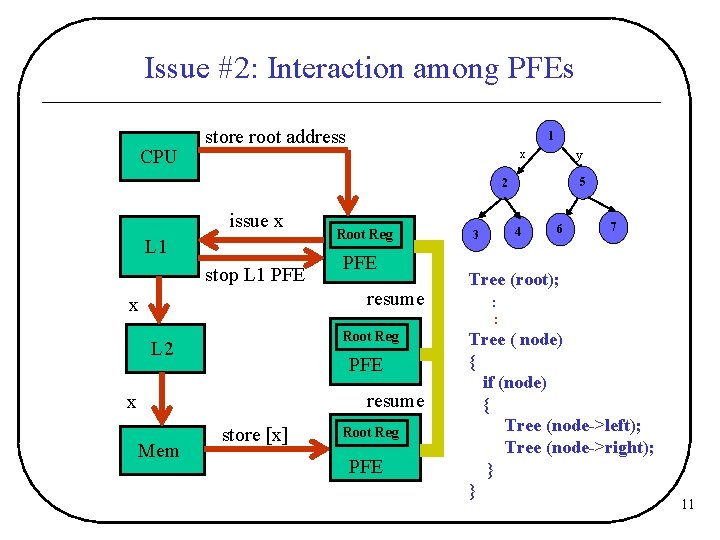

Issue #2: Interaction among PFEs
[Figure: the CPU stores the root address into the L1 PFE's root register; the L1 PFE issues x; when x misses, the L1 PFE stops and the L2 PFE or the memory PFE resumes the traversal from x using its own root register; x and y label pointers in an example tree with nodes 1 to 7]

    Tree(root);
    ...
    Tree(node) {
        if (node) {
            Tree(node->left);
            Tree(node->right);
        }
    }

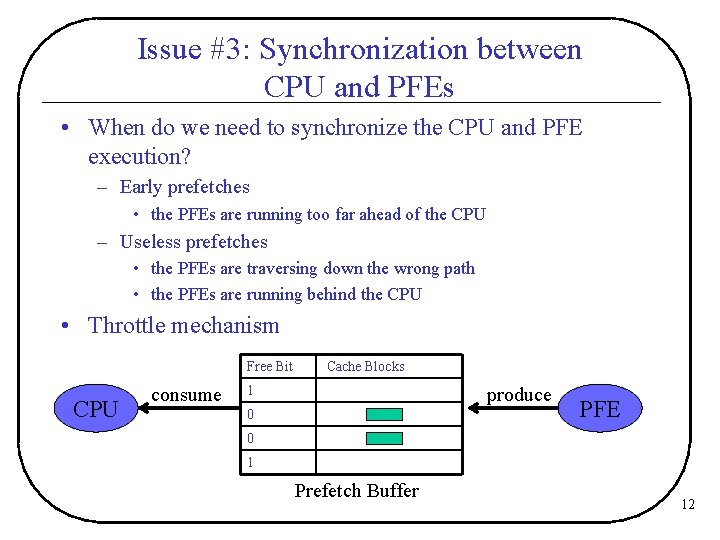

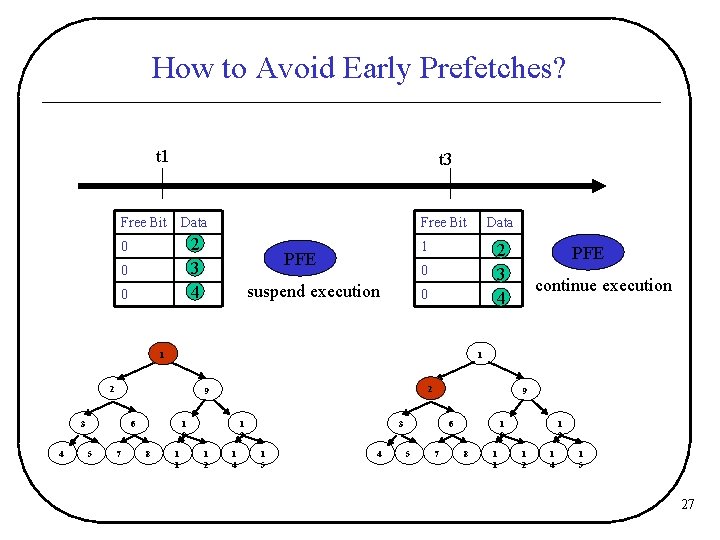

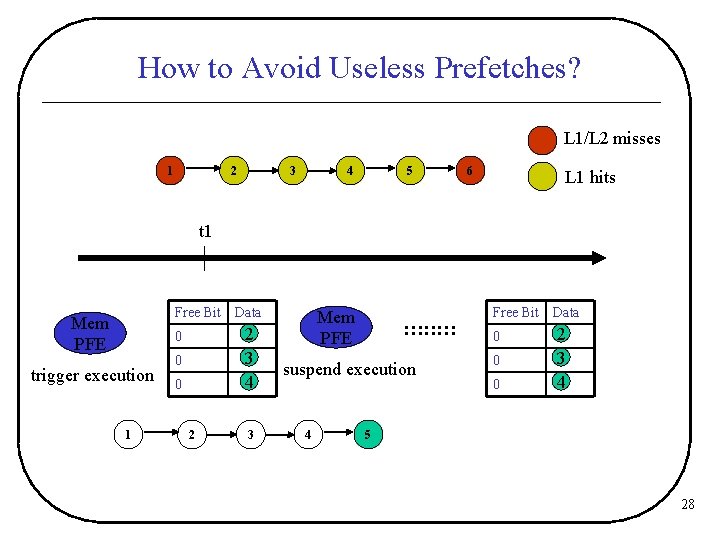

Issue #3: Synchronization between the CPU and PFEs
• When do we need to synchronize CPU and PFE execution?
  – Early prefetches
    • The PFEs are running too far ahead of the CPU
  – Useless prefetches
    • The PFEs are traversing down the wrong path
    • The PFEs are running behind the CPU
• Throttle mechanism
[Figure: each prefetch-buffer block has a free bit; the PFE produces a block (free bit 1 → 0) and the CPU consumes it (free bit 0 → 1)]
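The free-bit handshake behaves like a bounded producer/consumer buffer. A sketch, assuming a hypothetical 4-entry buffer (the evaluated design uses 32 entries) and illustrative function names: the PFE suspends when `pfe_produce` finds no free entry, and a CPU consume re-enables it.

```c
#include <assert.h>
#include <stdbool.h>

#define PB_ENTRIES 4   /* hypothetical; the evaluated buffer has 32 entries */

/* free_bit[i] == true: entry i may be filled by the PFE.
 * free_bit[i] == false: entry i holds a block the CPU has not consumed. */
struct prefetch_buffer {
    bool free_bit[PB_ENTRIES];
    unsigned long block[PB_ENTRIES];
};

void pb_init(struct prefetch_buffer *pb)
{
    for (int i = 0; i < PB_ENTRIES; i++)
        pb->free_bit[i] = true;
}

/* PFE side ("produce"): clears a free bit. Returning false means no
 * entry is free, so the PFE suspends rather than running further ahead. */
bool pfe_produce(struct prefetch_buffer *pb, unsigned long blk)
{
    for (int i = 0; i < PB_ENTRIES; i++) {
        if (pb->free_bit[i]) {
            pb->free_bit[i] = false;
            pb->block[i] = blk;
            return true;
        }
    }
    return false;
}

/* CPU side ("consume"): a hit sets the entry's free bit again, which
 * lets a suspended PFE continue. Returns false on a buffer miss. */
bool cpu_consume(struct prefetch_buffer *pb, unsigned long blk)
{
    for (int i = 0; i < PB_ENTRIES; i++) {
        if (!pb->free_bit[i] && pb->block[i] == blk) {
            pb->free_bit[i] = true;
            return true;
        }
    }
    return false;
}
```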

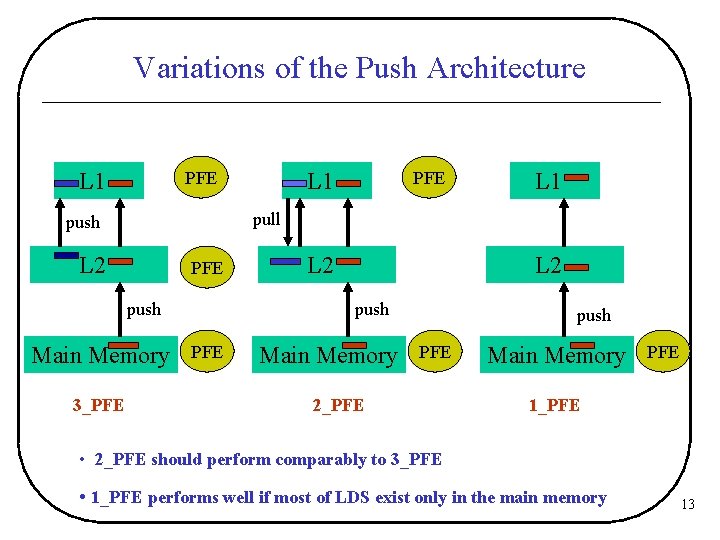

Variations of the Push Architecture
[Figure: three configurations: 3_PFE (PFEs at L1, L2, and main memory), 2_PFE (two PFEs), and 1_PFE (a single PFE at main memory)]
• 2_PFE should perform comparably to 3_PFE
• 1_PFE performs well if most of the LDS exists only in main memory


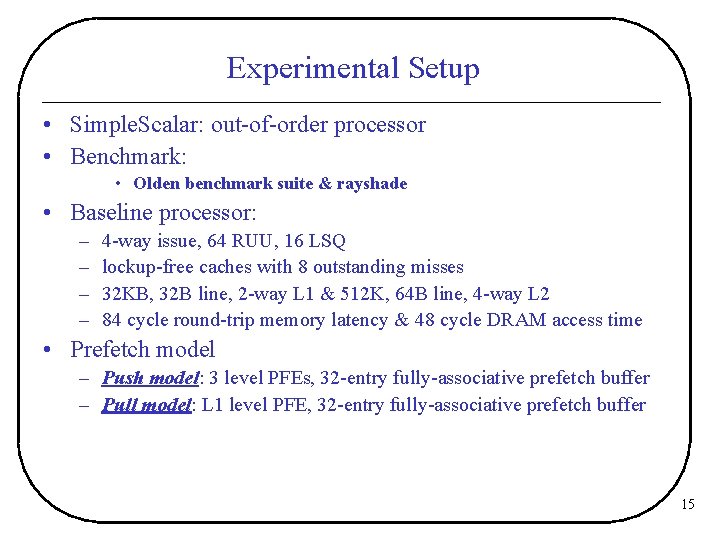

Experimental Setup
• SimpleScalar: out-of-order processor
• Benchmarks: Olden benchmark suite & rayshade
• Baseline processor:
  – 4-way issue, 64-entry RUU, 16-entry LSQ
  – Lockup-free caches with 8 outstanding misses
  – L1: 32 KB, 32 B line, 2-way; L2: 512 KB, 64 B line, 4-way
  – 84-cycle round-trip memory latency & 48-cycle DRAM access time
• Prefetch model
  – Push model: 3-level PFEs, 32-entry fully associative prefetch buffer
  – Pull model: L1-level PFE, 32-entry fully associative prefetch buffer
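The cache parameters imply a concrete geometry: sets = capacity / (line size times associativity). A small helper makes the arithmetic explicit; the function names are illustrative.

```c
#include <assert.h>

/* Number of sets = capacity / (line size * associativity). */
unsigned long cache_sets(unsigned long capacity, unsigned long line,
                         unsigned long ways)
{
    return capacity / (line * ways);
}

/* log2 of a power of two: the number of address bits that select
 * a byte within a line (offset) or a set (index). */
unsigned log2u(unsigned long n)
{
    unsigned bits = 0;
    while (n > 1) {
        n >>= 1;
        bits++;
    }
    return bits;
}
```

For the L1 this gives 512 sets (5 offset bits, 9 index bits); for the L2, 2048 sets (6 offset bits, 11 index bits).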

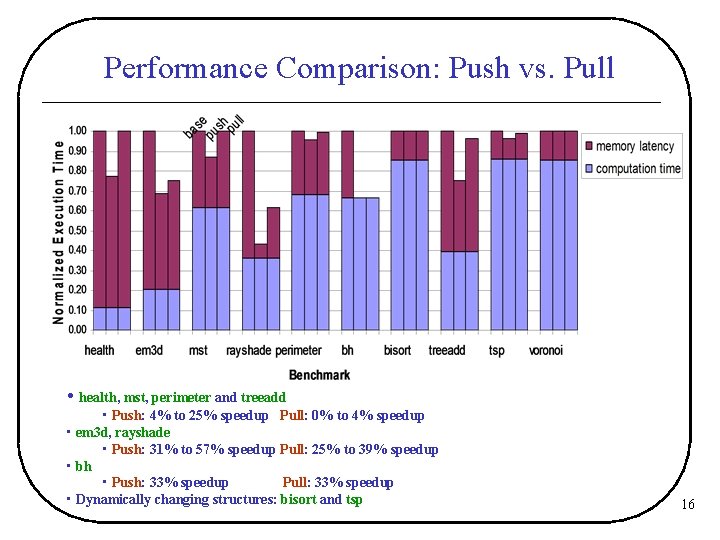

Performance Comparison: Push vs. Pull
• health, mst, perimeter, and treeadd
  – Push: 4% to 25% speedup; Pull: 0% to 4% speedup
• em3d, rayshade
  – Push: 31% to 57% speedup; Pull: 25% to 39% speedup
• bh
  – Push: 33% speedup; Pull: 33% speedup
• Dynamically changing structures: bisort and tsp

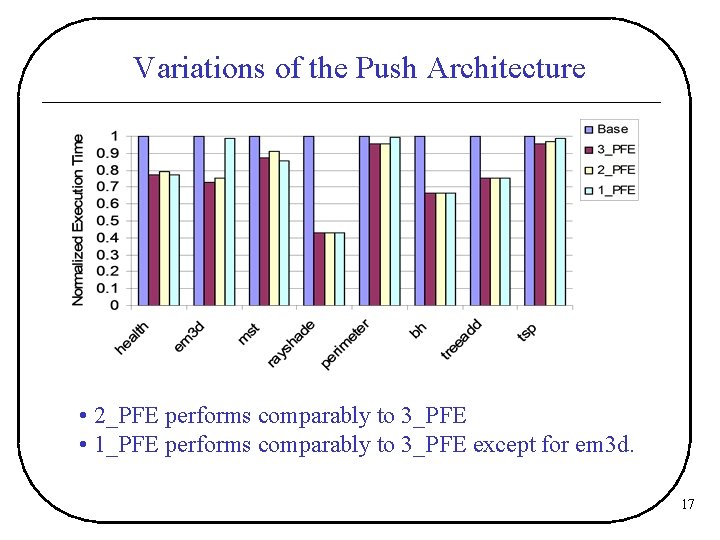

Variations of the Push Architecture
• 2_PFE performs comparably to 3_PFE
• 1_PFE performs comparably to 3_PFE except for em3d

Related Work
• Prefetching for irregular applications
  – Correlation-based prefetch (Joseph 1997; Alexander 1996)
  – Compiler-based prefetch (Luk 1996)
  – Dependence-based prefetch (Roth 1998)
  – Jump-pointer prefetch (Roth 1999)
• Decoupled architecture
  – Decoupled access/execute (Smith 1982)
  – Pre-execution (Annavaram 2001; Collins 2001; Roth 2001; Zilles 2001; Luk 2001)
• Processor-in-memory
  – Berkeley IRAM group (Patterson 1997)
  – Active Pages (Oskin 1998)
  – FlexRAM (Kang 1999)
  – Impulse (Carter 1999)
  – Memory-side prefetching (Hughes 2000)

Conclusion
• Built a general architectural solution for the push model
• The push model is effective in reducing the impact of the pointer-chasing problem on prefetching performance
  – Applications with tight traversal loops: Push 4% to 25% speedup; Pull 0% to 4%
  – Applications with longer computation between node accesses: Push 31% to 57% speedup; Pull 25% to 39%
• 2_PFE performs comparably to 3_PFE

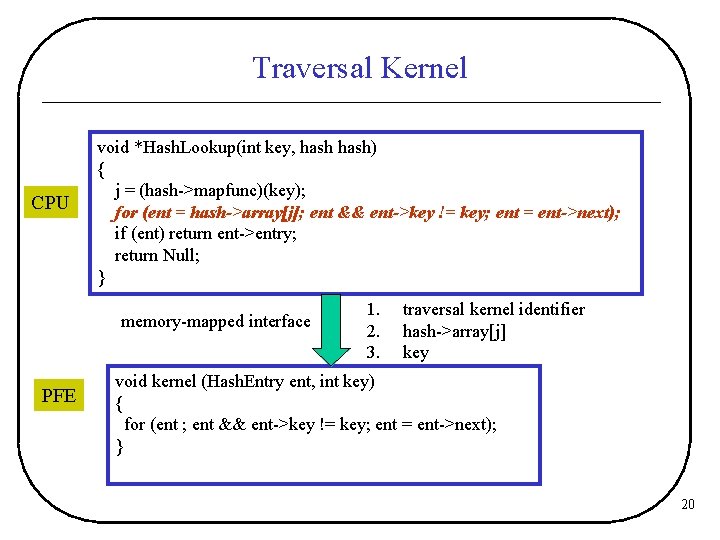

Traversal Kernel
• CPU code:

    void *HashLookup(int key, Hash hash)
    {
        int j = (hash->mapfunc)(key);
        HashEntry ent;
        for (ent = hash->array[j]; ent && ent->key != key; ent = ent->next)
            ;
        if (ent)
            return ent->entry;
        return NULL;
    }

• Through a memory-mapped interface, the CPU passes the PFE:
  1. the traversal kernel identifier
  2. hash->array[j]
  3. key
• PFE traversal kernel:

    void kernel(HashEntry ent, int key)
    {
        for (; ent && ent->key != key; ent = ent->next)
            ;
    }
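A software model of this hand-off, assuming (as in Olden's mst) that `HashEntry` is a pointer typedef. `struct pfe_regs` stands in for the memory-mapped registers, and kernel id 0 is a hypothetical assignment. The CPU writes the three values; the PFE dispatches on the kernel id and walks the chain.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed to mirror Olden mst: HashEntry is a pointer to a chain entry. */
typedef struct hash_entry {
    int key;
    void *entry;
    struct hash_entry *next;
} *HashEntry;

/* Hypothetical stand-in for the memory-mapped interface. */
struct pfe_regs {
    int kernel_id;     /* 1. traversal kernel identifier */
    HashEntry root;    /* 2. hash->array[j] */
    int key;           /* 3. key */
};

/* The chain-walking kernel; returns how many entries it touched,
 * i.e. how many blocks a PFE would fetch. */
static int kernel_hash_chain(HashEntry ent, int key)
{
    int touched = 0;
    for (; ent; ent = ent->next) {
        touched++;
        if (ent->key == key)
            break;
    }
    return touched;
}

/* Hypothetical PFE dispatch: select the kernel by id and run it. */
int pfe_start(const struct pfe_regs *regs)
{
    switch (regs->kernel_id) {
    case 0:
        return kernel_hash_chain(regs->root, regs->key);
    default:
        return -1;     /* unknown kernel id */
    }
}
```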

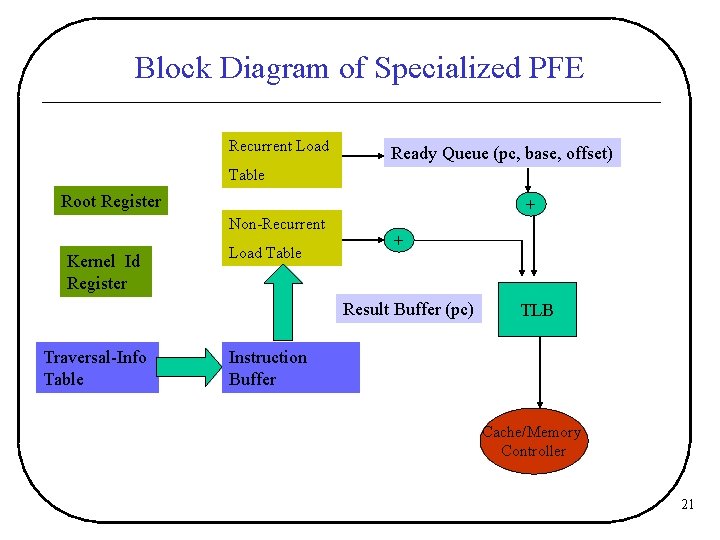

Block Diagram of Specialized PFE
[Figure: Recurrent Load Table (pc, base, offset), Non-Recurrent Load Table (pc), Traversal-Info Table, Ready Queue, Root Register, Kernel Id Register, adders, Result Buffer, TLB, and Instruction Buffer, connected to the cache/memory controller]

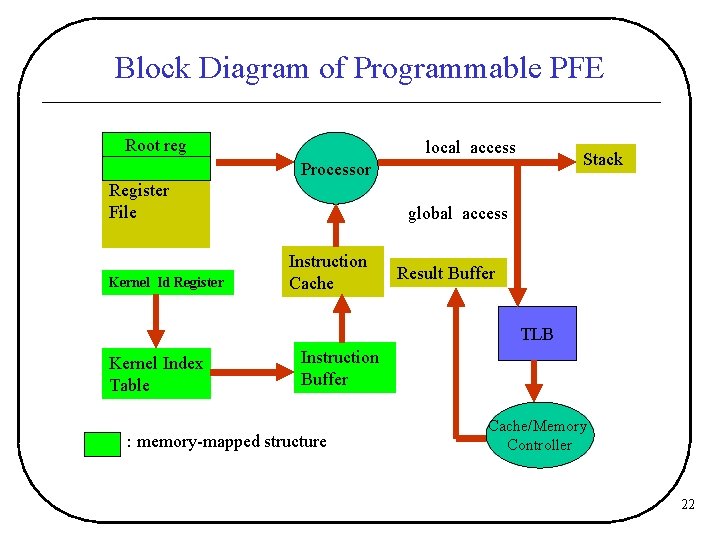

Block Diagram of Programmable PFE
[Figure: a processor with register file and stack (local access), Root Register, Kernel Id Register, Instruction Cache, Kernel Index Table, Result Buffer, TLB, and Instruction Buffer; memory-mapped structures are marked, and global accesses go through the cache/memory controller]

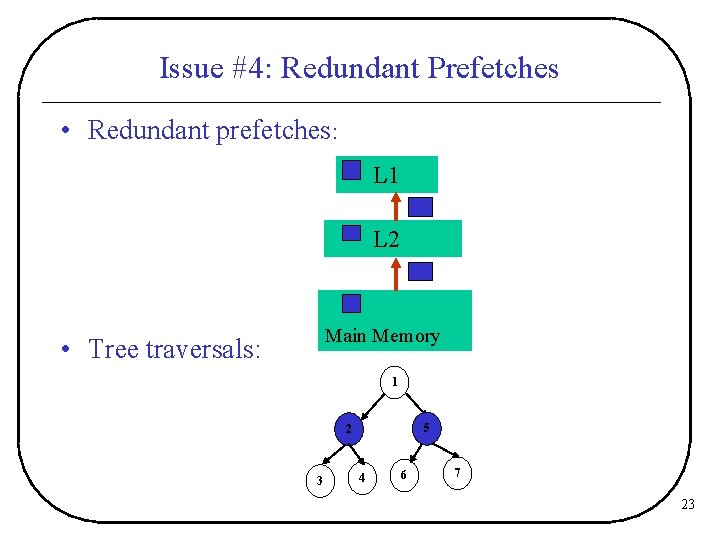

Issue #4: Redundant Prefetches
• Redundant prefetches [Figure: L1, L2, and main memory]
• Tree traversals [Figure: tree with nodes 1 to 7]

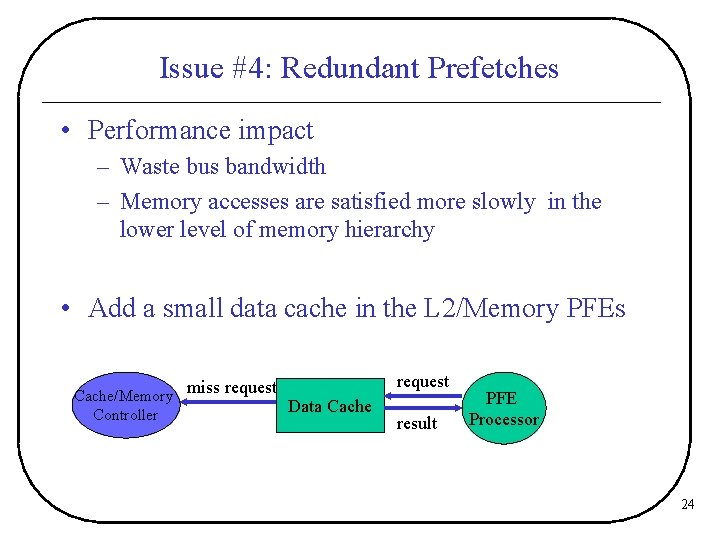

Issue #4: Redundant Prefetches
• Performance impact
  – Wastes bus bandwidth
  – Memory accesses are satisfied more slowly at the lower levels of the memory hierarchy
• Add a small data cache in the L2/memory PFEs
[Figure: the PFE processor sends requests to a small data cache, which returns results; misses go to the cache/memory controller]
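One way to see where redundant prefetches come from in a recursive tree traversal: the kernel reads each node once to follow `->left` and again, after the left subtree returns, to follow `->right`. The sketch below counts those second reads over a heap-numbered tree and how many a small LRU data cache absorbs. The tree layout, the LRU policy, and all names are illustrative assumptions, not the paper's simulator.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_CACHE 8
#define MAX_NODES 63

/* Hypothetical PFE data cache: fully associative LRU over node ids. */
struct pfe_cache { int ids[MAX_CACHE]; int n, cap; };

/* Returns true on a hit; on a miss, inserts id (evicting the LRU entry). */
static bool cache_access(struct pfe_cache *c, int id)
{
    for (int i = 0; i < c->n; i++) {
        if (c->ids[i] == id) {               /* hit: move to MRU end */
            for (int j = i; j + 1 < c->n; j++)
                c->ids[j] = c->ids[j + 1];
            c->ids[c->n - 1] = id;
            return true;
        }
    }
    if (c->cap == 0)
        return false;
    if (c->n == c->cap) {                    /* full: drop LRU at index 0 */
        for (int j = 0; j + 1 < c->n; j++)
            c->ids[j] = c->ids[j + 1];
        c->n--;
    }
    c->ids[c->n++] = id;
    return false;
}

struct stats { int fetches, redundant, captured; };

/* Node i has children 2i and 2i+1; ids run 1..nodes. Each node is read
 * twice: pass 0 follows ->left, pass 1 follows ->right. */
static void tree(int id, int nodes, bool *seen, struct pfe_cache *c,
                 struct stats *s)
{
    if (id > nodes)
        return;
    for (int pass = 0; pass < 2; pass++) {
        bool hit = cache_access(c, id);
        if (seen[id]) {                      /* node already fetched once */
            s->redundant++;
            if (hit)
                s->captured++;
        }
        seen[id] = true;
        if (!hit)
            s->fetches++;                    /* request goes to memory */
        tree(2 * id + pass, nodes, seen, c, s);
    }
}

struct stats simulate_tree(int nodes, int cache_cap)
{
    bool seen[MAX_NODES + 1] = {false};
    struct pfe_cache c = {.n = 0, .cap = cache_cap};
    struct stats s = {0, 0, 0};
    assert(nodes <= MAX_NODES && cache_cap >= 0 && cache_cap <= MAX_CACHE);
    tree(1, nodes, seen, &c, &s);
    return s;
}
```

In this toy 7-node model, 7 of the 14 node reads are redundant; a one-entry cache absorbs 4 of them and a four-entry cache absorbs all 7.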

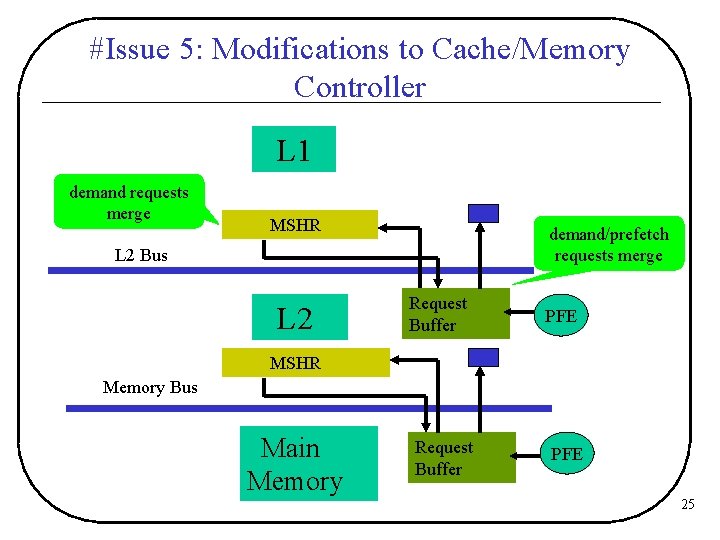

Issue #5: Modifications to the Cache/Memory Controller
[Figure: at L1, demand requests merge in the MSHR; at L2, demand and prefetch requests merge in the MSHR; request buffers at L2 and main memory feed the PFEs; the levels are connected by the L2 bus and the memory bus]
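A sketch of MSHR-level merging, assuming an 8-entry MSHR (matching the 8 outstanding misses of the baseline caches) and hypothetical names: a demand request to a block that already has an outstanding prefetch joins that entry instead of issuing a second memory request.

```c
#include <assert.h>
#include <stdbool.h>

#define MSHR_ENTRIES 8   /* baseline: 8 outstanding misses */

struct mshr {
    bool valid;
    unsigned long block;   /* block address = byte address / line size */
    bool prefetch_only;    /* no demand request waiting yet */
};

enum mshr_result { MSHR_MERGED, MSHR_ALLOCATED, MSHR_FULL };

enum mshr_result mshr_request(struct mshr *tab, unsigned long addr,
                              unsigned long line_bytes, bool is_prefetch)
{
    unsigned long block = addr / line_bytes;
    int free_slot = -1;

    for (int i = 0; i < MSHR_ENTRIES; i++) {
        if (tab[i].valid && tab[i].block == block) {
            /* Same block already in flight: wait on that fill. */
            if (!is_prefetch)
                tab[i].prefetch_only = false;
            return MSHR_MERGED;
        }
        if (!tab[i].valid && free_slot < 0)
            free_slot = i;
    }
    if (free_slot < 0)
        return MSHR_FULL;      /* caller must stall or drop the request */
    tab[free_slot].valid = true;
    tab[free_slot].block = block;
    tab[free_slot].prefetch_only = is_prefetch;
    return MSHR_ALLOCATED;
}
```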

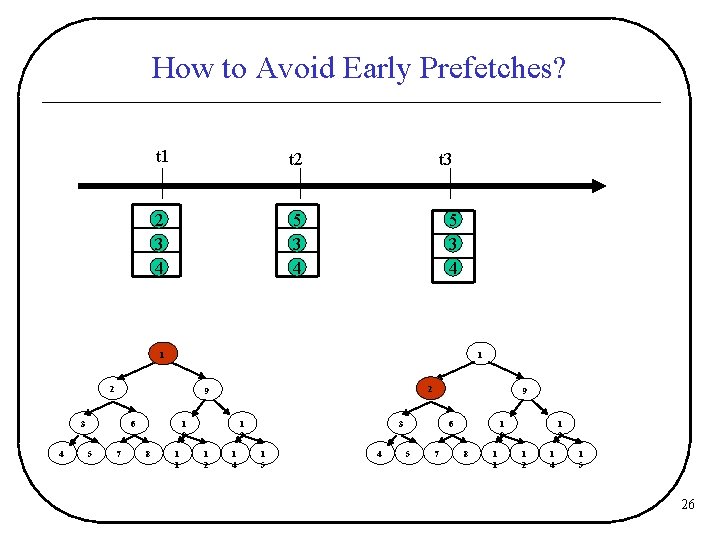

How to Avoid Early Prefetches?
[Figure: snapshots at times t1, t2, and t3 of a traversal over a 15-node tree]

How to Avoid Early Prefetches?
[Figure: at t1, the prefetch-buffer entries holding nodes 2, 3, and 4 all have free bit 0, so the PFE suspends execution; by t3 an entry's free bit is 1 and the PFE continues execution]

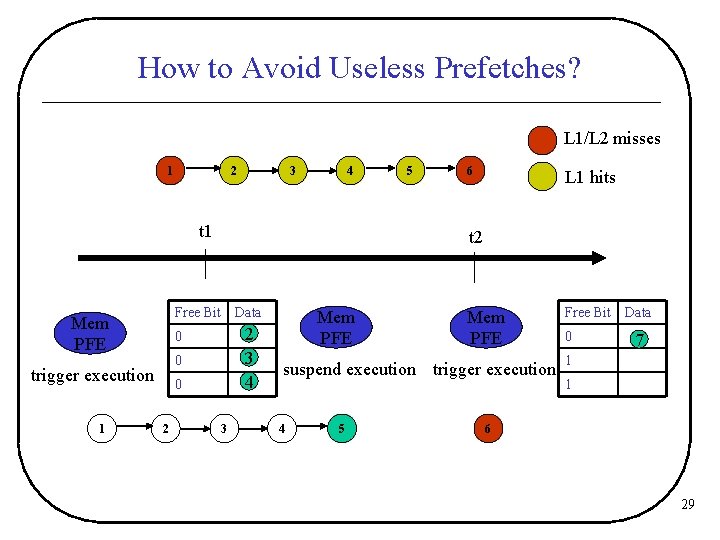

How to Avoid Useless Prefetches?
[Figure: tree nodes marked as L1/L2 misses or L1 hits; snapshots of the free bits and prefetch-buffer data show the memory PFE's execution being triggered and suspended]

How to Avoid Useless Prefetches?
[Figure: snapshots at t1 and t2 of the free bits and prefetch-buffer data, showing the memory PFE being triggered, suspended, and triggered again]

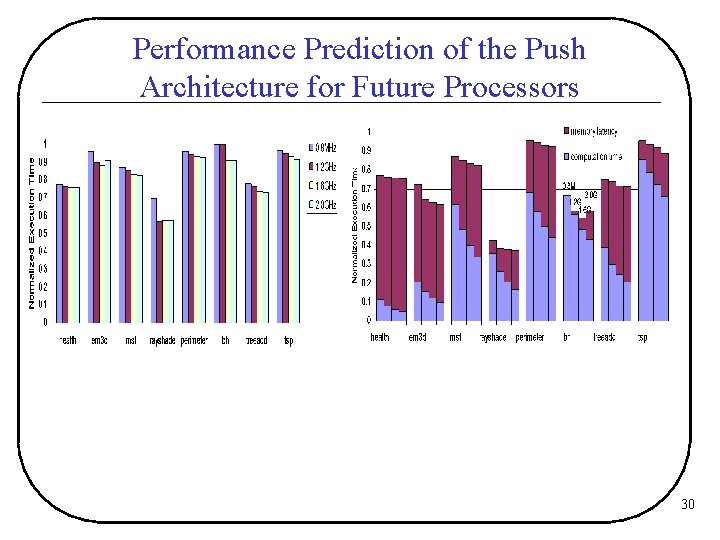

Performance Prediction of the Push Architecture for Future Processors

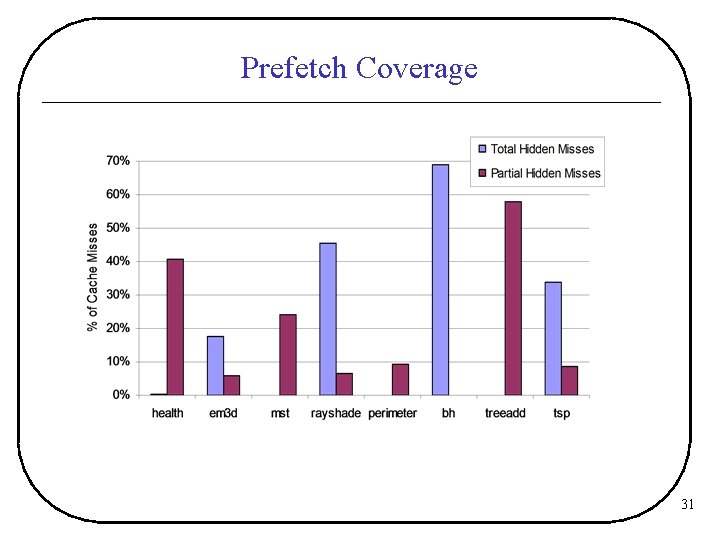

Prefetch Coverage

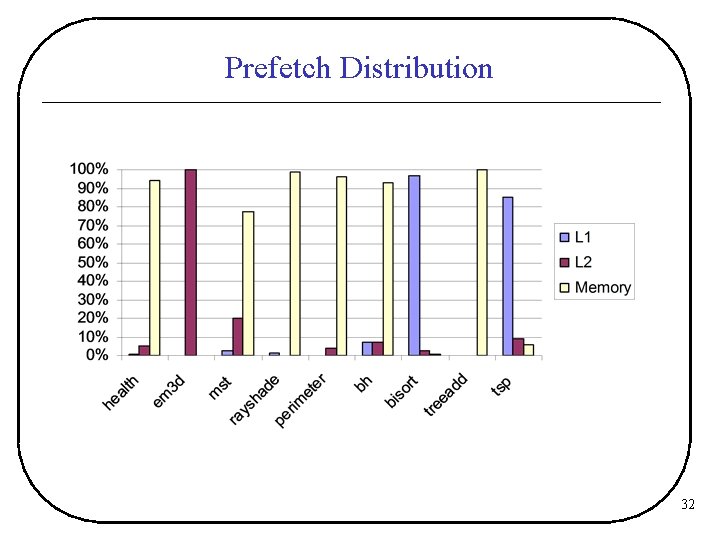

Prefetch Distribution

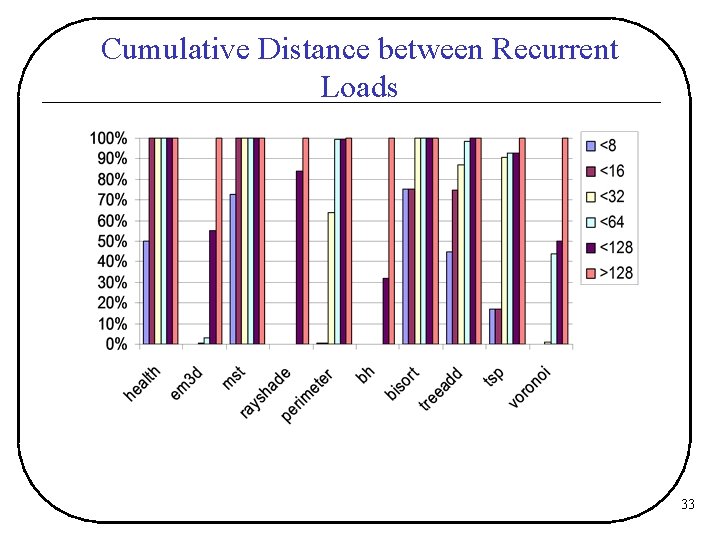

Cumulative Distance between Recurrent Loads

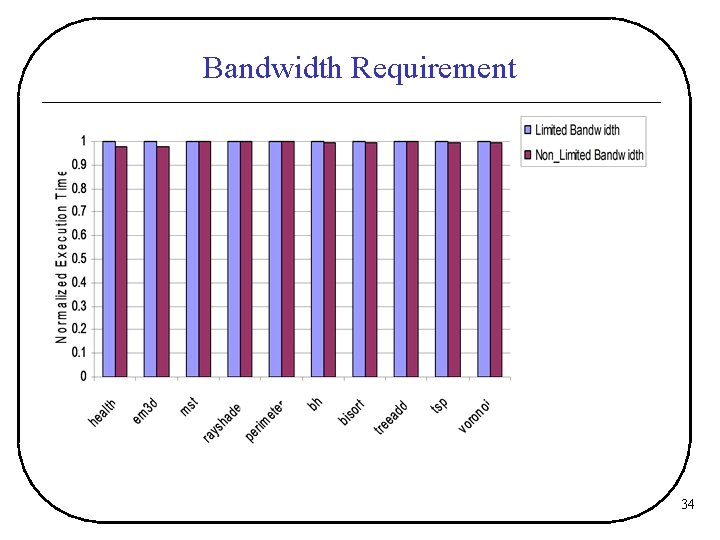

Bandwidth Requirement

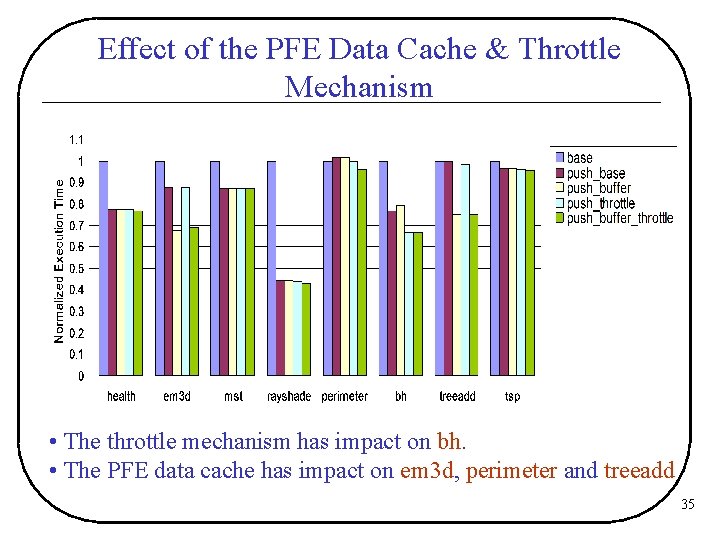

Effect of the PFE Data Cache & Throttle Mechanism
• The throttle mechanism has an impact on bh
• The PFE data cache has an impact on em3d, perimeter, and treeadd

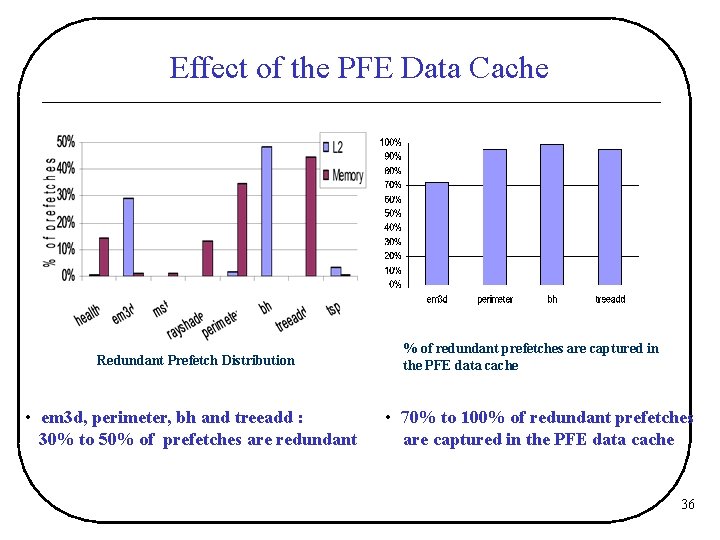

Effect of the PFE Data Cache
[Figure: redundant prefetch distribution; y-axis: % of redundant prefetches captured in the PFE data cache]
• em3d, perimeter, bh, and treeadd: 30% to 50% of prefetches are redundant
• 70% to 100% of redundant prefetches are captured in the PFE data cache

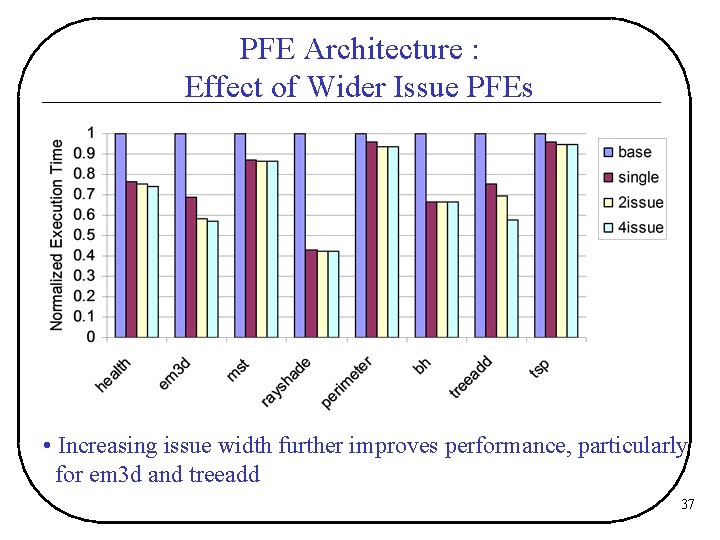

PFE Architecture: Effect of Wider-Issue PFEs
• Increasing issue width further improves performance, particularly for em3d and treeadd

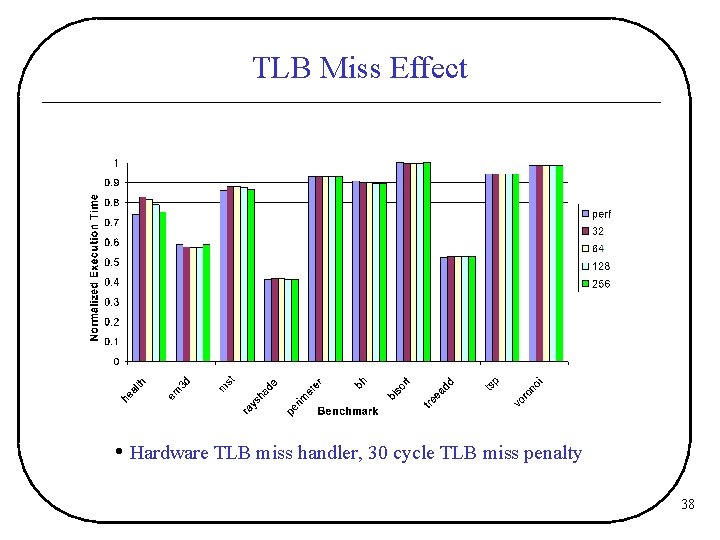

TLB Miss Effect
• Hardware TLB miss handler, 30-cycle TLB miss penalty

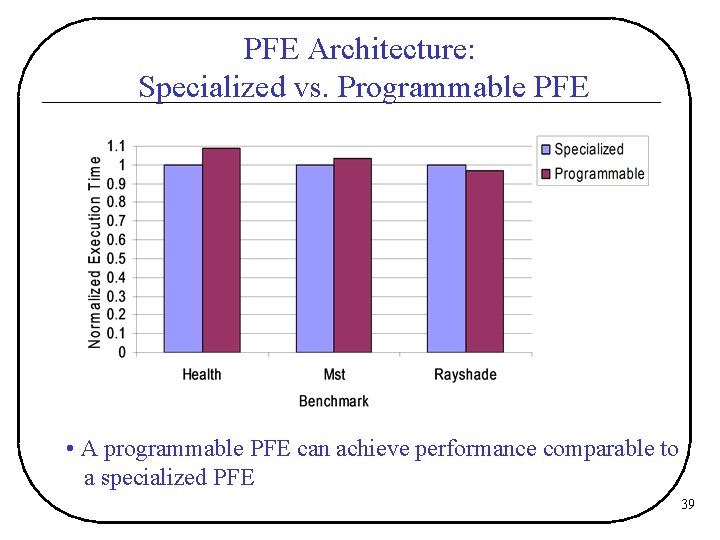

PFE Architecture: Specialized vs. Programmable PFE
• A programmable PFE can achieve performance comparable to a specialized PFE

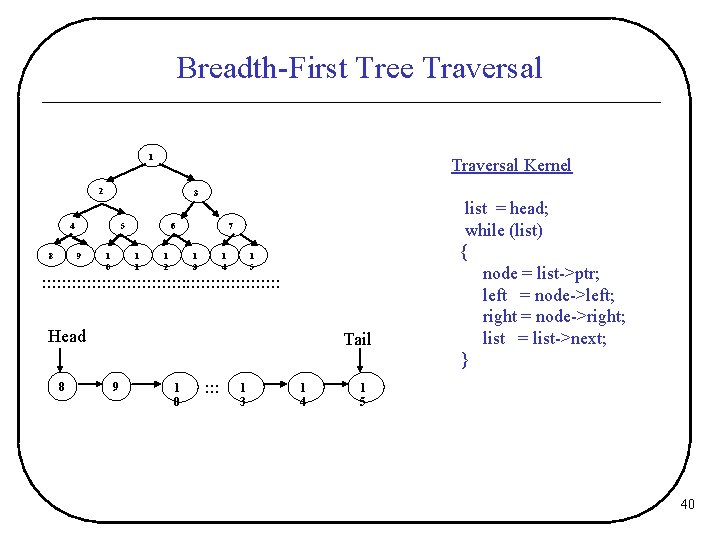

Breadth-First Tree Traversal
[Figure: 15-node tree numbered 1 to 15 in breadth-first order, with a queue from Head to Tail holding nodes 8 to 15]
• Traversal kernel:

    list = head;
    while (list) {
        node  = list->ptr;
        left  = node->left;
        right = node->right;
        list  = list->next;
    }
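The kernel walks a queue that already holds node pointers. A sketch of how such a queue can be built during a breadth-first traversal, assuming hypothetical `struct tnode` and `struct qcell` types whose `ptr` and `next` fields mirror the slide; on a tree numbered in breadth-first order, the visit order is 1, 2, 3, and so on.

```c
#include <assert.h>
#include <stddef.h>

struct tnode { int id; struct tnode *left, *right; };
/* Queue cell, mirroring the slide's list->ptr and list->next fields. */
struct qcell { struct tnode *ptr; struct qcell *next; };

/* Breadth-first traversal with a linked queue. The caller supplies
 * storage for queue cells (at least one per tree node). Writes node ids
 * in visit order to out and returns the number visited. */
size_t bfs(struct tnode *root, struct qcell *cells, int *out)
{
    size_t used = 0, n = 0;
    if (!root)
        return 0;
    struct qcell *head = &cells[used++], *tail = head;
    head->ptr = root;
    head->next = NULL;
    for (struct qcell *list = head; list; list = list->next) {
        struct tnode *node = list->ptr;          /* node = list->ptr */
        out[n++] = node->id;
        struct tnode *kids[2] = { node->left, node->right };
        for (int k = 0; k < 2; k++) {
            if (!kids[k])
                continue;
            struct qcell *c = &cells[used++];    /* enqueue child at tail */
            c->ptr = kids[k];
            c->next = NULL;
            tail->next = c;
            tail = c;
        }
    }
    return n;
}
```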


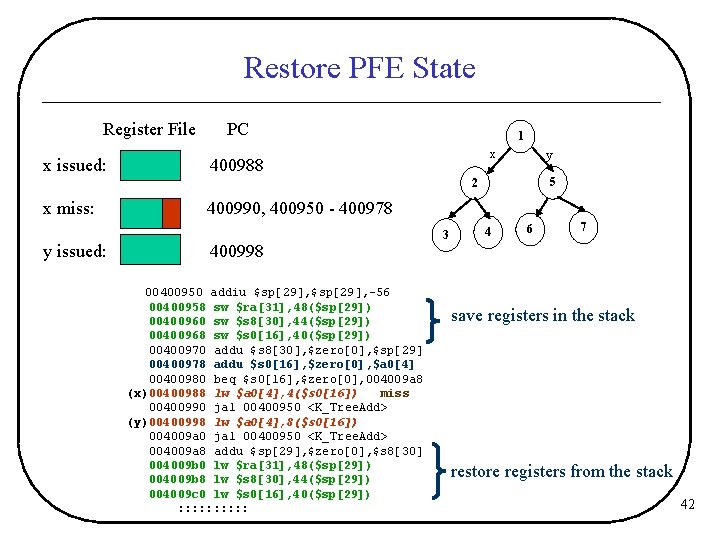

Restore PFE State
[Figure: register file; tree with nodes 1 to 7, with x and y labeling the pointers from node 1 to its children]
• x issued: PC 400988
• x miss: 400990, 400950 to 400978
• y issued: 400998

    00400950  addiu $sp[29], -56
    00400958  sw    $ra[31], 48($sp[29])       # save registers in the stack
    00400960  sw    $s8[30], 44($sp[29])
    00400968  sw    $s0[16], 40($sp[29])
    00400970  addu  $s8[30], $zero[0], $sp[29]
    00400978  addu  $s0[16], $zero[0], $a0[4]
    00400980  beq   $s0[16], $zero[0], 004009a8
    00400988  lw    $a0[4], 4($s0[16])         # (x) miss
    00400990  jal   00400950 <K_TreeAdd>
    00400998  lw    $a0[4], 8($s0[16])         # (y)
    004009a0  jal   00400950 <K_TreeAdd>
    004009a8  addu  $sp[29], $zero[0], $s8[30]
    004009b0  lw    $ra[31], 48($sp[29])       # restore registers from the stack
    004009b8  lw    $s8[30], 44($sp[29])
    004009c0  lw    $s0[16], 40($sp[29])
    ...

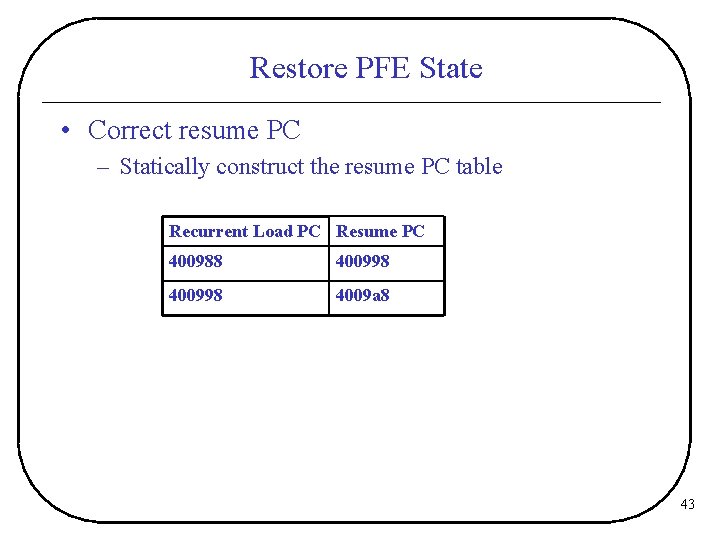

Restore PFE State
• Correct resume PC
  – Statically construct the resume PC table

| Recurrent load PC | Resume PC |
|---|---|
| 400988 | 400998 |
| 400998 | 4009a8 |
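The static table can be modeled as a lookup from recurrent-load PC to resume PC. In the listing, each resume PC is the instruction just after the `jal` that recurses on the loaded pointer, presumably so that a PFE which has handed that subtree off skips the call. The function name and the 0 "not found" value are assumptions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Static table from the slide: recurrent load PC -> resume PC. */
struct resume_entry { uint32_t load_pc, resume_pc; };

static const struct resume_entry resume_table[] = {
    { 0x400988, 0x400998 },   /* (x) lw 4($s0); resume after first jal  */
    { 0x400998, 0x4009a8 },   /* (y) lw 8($s0); resume after second jal */
};

/* Returns the resume PC for a recurrent load, or 0 if none is recorded. */
uint32_t resume_pc(uint32_t load_pc)
{
    size_t n = sizeof resume_table / sizeof resume_table[0];
    for (size_t i = 0; i < n; i++)
        if (resume_table[i].load_pc == load_pc)
            return resume_table[i].resume_pc;
    return 0;
}
```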
