A Perspective on the Future of Massively Parallel Computing

- Slides: 28

A Perspective on the Future of Massively Parallel Computing Presented by: Cerise Wuthrich June 23, 2005

A Perspective on the Future of Massively Parallel Computing: Fine-Grain vs. Coarse-Grain Parallel Models Predrag T. Tosic Proceedings of the 1st Conference on Computing Frontiers April 2004 p-tosic@cs.uiuc.edu

Outline ¨ Intro & Background of Current Models – Limits of Sequential Models – Tightly Coupled MP – Loosely Coupled DS ¨ Fine-Grain Parallel Models – ANN – Cellular Automata ¨ Fine-Grain vs Coarse-Grain – Architecture – Functions – Potential Advantages ¨ Summary and Conclusions

Introduction and Background of Current Models ¨ Hardware limitations ¨ There are physical limits to how fast we can compute – Limits to increasing the densities and decreasing the size of basic microcomponents – No signal can propagate faster than the speed of light
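
To put a number on the speed-of-light limit, the following sketch computes how far a signal could travel during one clock cycle. The clock rates are illustrative assumptions, not figures from the slides, and the function name `max_signal_distance_cm` is hypothetical.

```python
# Upper bound on the distance any signal can cover in one clock cycle.
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def max_signal_distance_cm(clock_hz: float) -> float:
    """Distance light covers in vacuum during one clock period, in centimetres."""
    return SPEED_OF_LIGHT_M_PER_S / clock_hz * 100


# Illustrative clock rates (assumed for this example only).
for ghz in (1, 3, 10):
    distance = max_signal_distance_cm(ghz * 1e9)
    print(f"{ghz:>2} GHz: at most {distance:.1f} cm per cycle")
```

At 3 GHz the bound is roughly 10 cm per cycle, and real signals in wires are slower than light in vacuum, so a faster clock forces components closer together.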

Introduction and Background of Current Models ¨ Limitations of the von Neumann Model – There is a clear distinction (physical and logical) between where data and programs are stored (memory) and where the computation is executed (processor) – Sequential

Parallel Processing ¨ Realization that parallel processing was a necessity ¨ Classical Models – Multiprocessing Supercomputers – Networked Distributed Systems – Both models are actually coarse-grain ¨ Proposal – “Truly fine-grain connectionist massively parallel model”

Characteristics of Multiprocessing Supercomputers ¨ Communication Medium – Shared, Distributed, Hybrid ¨ Nature of Memory Access – Uniform vs. NUMA ¨ Granularity ¨ Instruction Streams (single or multiple) ¨ Data Streams (single or multiple)

Characteristics of Distributed Systems ¨ Loosely Coupled ¨ Heterogeneous collection ¨ Networked by middleware ¨ Scalable ¨ Flexible ¨ Energy dissipation not an issue ¨ Harder to program, control, detect errors and failures

The model we should really consider ¨ Current supercomputers use thousands of processors ¨ Current DS (like WWW) can use hundreds of millions of computers ¨ We shouldn’t base parallel computing on CS or engineering principles ¨ Instead look at the most sophisticated information processing (IP) device engineered – the human brain

Human Brain ¨ Tens of billions (10^10) of processors (neurons) ¨ Highly interconnected with 10^15 interconnections ¨ Each neuron is a very simple basic information processing unit
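
A quick back-of-the-envelope check of what these magnitudes imply, reading the slide's figures as 10^10 neurons and 10^15 interconnections. The variable names are hypothetical.

```python
NEURONS = 10**10           # "tens of billions" on the slide, taken as 10^10
INTERCONNECTIONS = 10**15  # the slide's figure for total interconnections

# Average number of interconnections per neuron under these two figures.
avg_connections_per_neuron = INTERCONNECTIONS // NEURONS
print(avg_connections_per_neuron)
```

Under these assumptions each simple processing unit has on the order of 10^5 connections, which is what "highly interconnected" amounts to numerically.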

Artificial Neural Networks ¨ Best known and most studied class of connectionist models ¨ 1942 – Linear Perceptron ¨ Multi-Layer Perceptron ¨ Radial Basis Function NN ¨ Hopfield NN

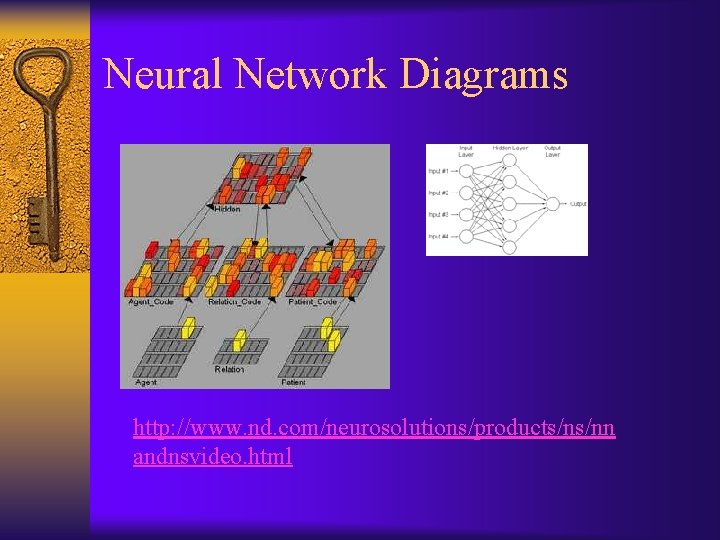

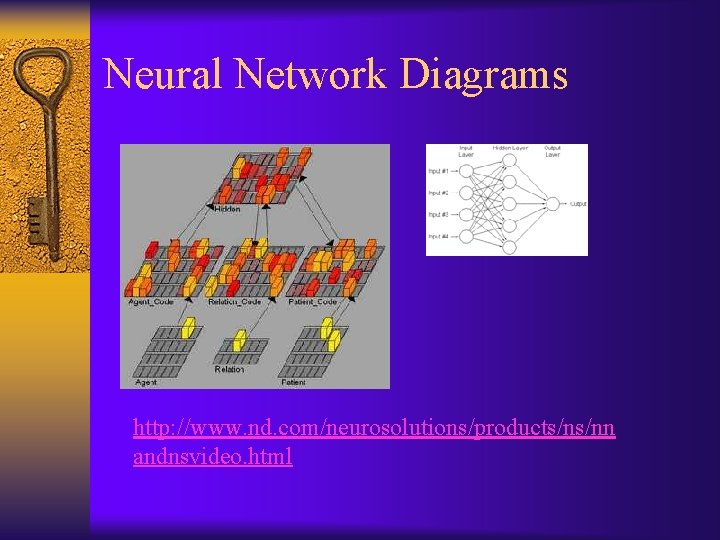

Neural Network Diagrams http://www.nd.com/neurosolutions/products/ns/nnandnsvideo.html

Artificial Neural Networks ¨ Each neuron (processor) computes a single pre-determined function of its inputs ¨ Neuron similar to a logical gate ¨ Neurons connected with synapses ¨ Each synapse stores about 10 bits of info ¨ Each synapse fires about 10 times/sec ¨ Receptors are input devices ¨ Effectors are output devices
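
The "neuron similar to a logical gate" point can be illustrated with a minimal sketch of a threshold unit. The weights and thresholds are hand-picked assumptions for this example, and the names `threshold_neuron`, `and_gate` and `or_gate` are hypothetical; this is one simple neuron model, not necessarily the one the paper has in mind.

```python
from typing import Sequence


def threshold_neuron(inputs: Sequence[int], weights: Sequence[float], threshold: float) -> int:
    """Fire (1) if the weighted sum of the inputs reaches the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


# Hand-picked weights and thresholds (assumptions) that make one neuron act as a gate.
def and_gate(a: int, b: int) -> int:
    return threshold_neuron((a, b), (1.0, 1.0), 2.0)


def or_gate(a: int, b: int) -> int:
    return threshold_neuron((a, b), (1.0, 1.0), 1.0)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
```

The same unit computes a different gate purely by changing its threshold, which is why a single pre-determined function per neuron is still expressive once many neurons are wired together.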

Artificial Neural Networks ¨ Just as the brain grows, changes, and adapts, ANNs allow for – creation of new synapses – Dynamic modification of already existing synapses ¨ ANNs – Memory – No separate place for memory – All info stored in nodes and edges – Dynamic changes in edge weights
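
As a sketch of "dynamic changes in edge weights", the example below uses the classic perceptron learning rule to adjust two weights and a bias until a single unit reproduces logical AND. The choice of this rule, the learning rate, the epoch count and the function names are assumptions for illustration; the slides do not prescribe a particular learning procedure.

```python
def predict(weights, bias, x1, x2):
    """Output 1 if the weighted input is positive, else 0."""
    return 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron rule: nudge each weight by lr * error * input after every sample."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            error = target - predict(weights, bias, x1, x2)
            weights[0] += lr * error * x1
            weights[1] += lr * error * x2
            bias += lr * error
    return weights, bias


# Truth table of logical AND as training data.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
for (x1, x2), target in AND_SAMPLES:
    print((x1, x2), "->", predict(weights, bias, x1, x2), "expected", target)
```

Nothing is written to a separate memory here: everything the unit has "learned" lives in the final values of `weights` and `bias`.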

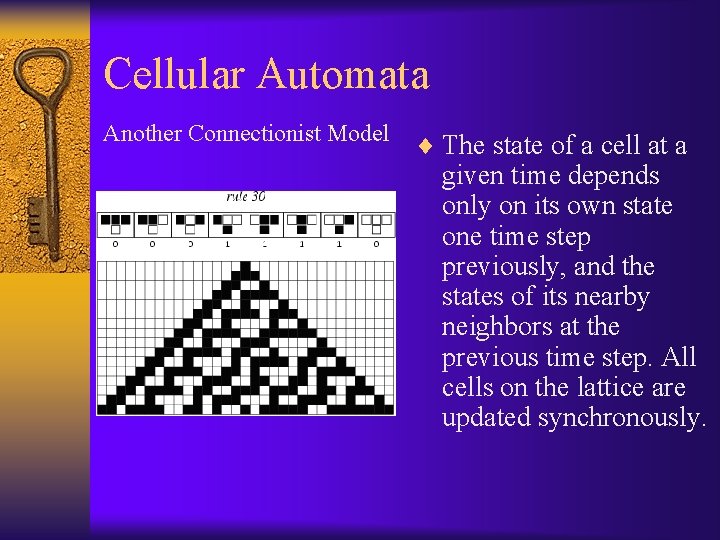

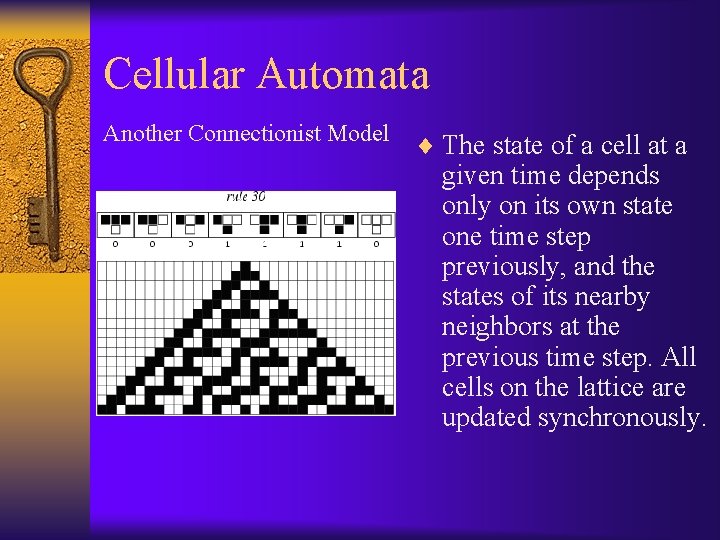

Cellular Automata: Another Connectionist Model ¨ The state of a cell at a given time depends only on its own state one time step previously, and the states of its nearby neighbors at the previous time step. All cells on the lattice are updated synchronously.

Cellular Automata ¨ Model inspired by physics ¨ Grid where each node is a Finite State Machine – Edge-labeled directed graphs – Each vertex represents one of n states – Each edge a transition from one state to the other on receipt of the alphabet symbol that labels the edge
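
The finite state machine described above (vertices as states, labeled edges as transitions) can be sketched as a lookup table. The two-state parity machine and the names `TRANSITIONS` and `run_fsm` are hypothetical examples, not taken from the paper.

```python
# Edge-labeled directed graph as a table: (current_state, input_symbol) -> next_state.
# Hypothetical machine that tracks whether it has seen an even or odd number of 1s.
TRANSITIONS = {
    ("even", "0"): "even",
    ("even", "1"): "odd",
    ("odd", "0"): "odd",
    ("odd", "1"): "even",
}


def run_fsm(symbols: str, start: str = "even") -> str:
    """Follow one labeled edge per input symbol and return the final state."""
    state = start
    for symbol in symbols:
        state = TRANSITIONS[(state, symbol)]
    return state


print(run_fsm("1101"))  # three 1s, so the machine ends in "odd"
```

In a cellular automaton every grid node is such a machine, and the input symbol it receives is determined by the states of its neighbors.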

Cellular Automata ¨ Only 2 possible states – 0 is quiescent – 1 is active ¨ Only input is current states of its neighbors ¨ All nodes execute in unison ¨ A one-dimensional infinite CA is a “countably infinite set of nodes capable of universal computation”
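
A minimal sketch of the synchronous update for a one-dimensional, two-state cellular automaton. Two simplifying assumptions: the infinite lattice is replaced by a finite ring with wrap-around edges, and the update rule is given as a standard 8-bit "elementary CA" rule number (Rule 110 is used because it is known to support universal computation). The function name `step` is hypothetical.

```python
from typing import List


def step(cells: List[int], rule: int) -> List[int]:
    """One synchronous update of a 1-D, two-state CA on a ring."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        left, centre, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        neighbourhood = (left << 2) | (centre << 1) | right  # a value from 0 to 7
        new_cells.append((rule >> neighbourhood) & 1)         # look up that bit of the rule
    return new_cells  # built from the old states only, so all cells update in unison


cells = [0, 0, 0, 1, 0]
for _ in range(4):
    print("".join(str(c) for c in cells))
    cells = step(cells, rule=110)
```

Because `new_cells` is computed entirely from the previous generation, no cell ever sees a neighbor's updated state within the same time step. An all-quiescent lattice stays quiescent under this rule.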

Connectionist Models ¨ Appear to be a legitimate model of a universal massively parallel computer ¨ ANNs are suitable for learning, but not Cellular Automata ¨ CA find most of their applications in studying paradigms of dynamics of complex systems

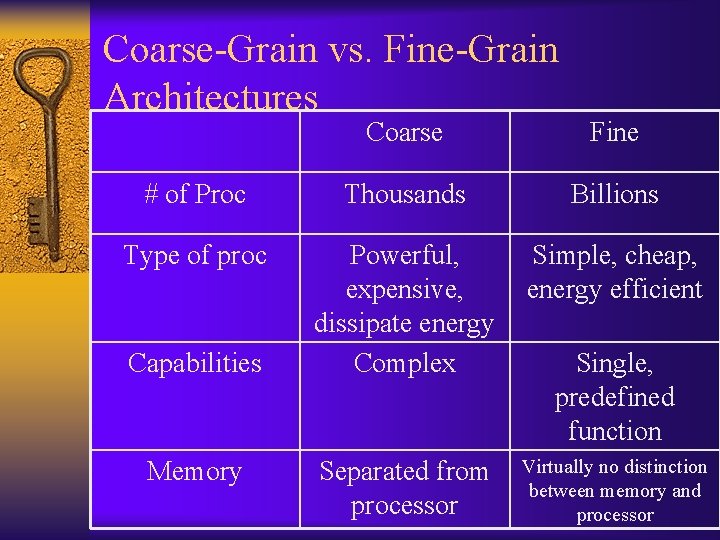

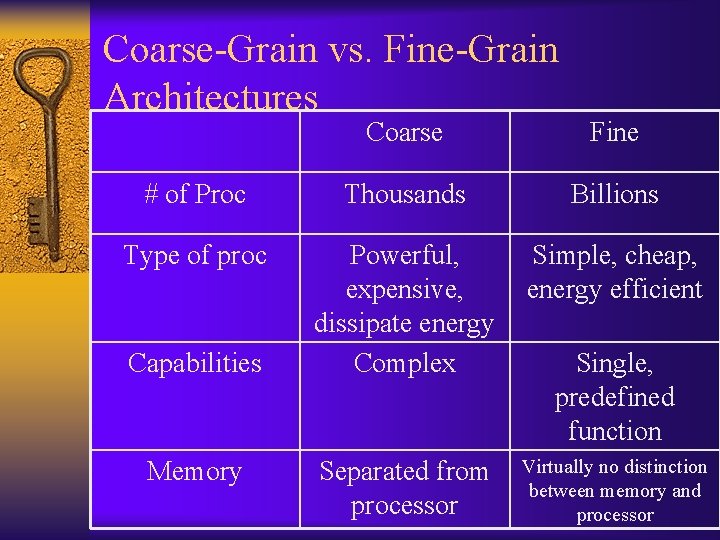

Coarse-Grain vs. Fine-Grain Architectures

| | Coarse | Fine |
| --- | --- | --- |
| # of Proc | Thousands | Billions |
| Type of proc | Powerful, expensive, dissipate energy | Simple, cheap, energy efficient |
| Capabilities | Complex | Single, predefined function |
| Memory | Separated from processor | Virtually no distinction between memory and processor |

Coarse-Grain vs. Fine-Grain Functions ¨ At the very core level, connectionist models are different in how they: – Receive information – Process information – Store information

Limitations of ANNs ¨ ANNs aren’t necessary in all domains – An ANN can’t compute more or faster than the human brain – The power of a human brain is an asymptotic upper bound on a connectionist ANN model – Not needed for: • Computation tasks • Searching large databases

Suitable domains for ANNs ¨ Pattern Recognition – “No computer can get anywhere close to the speed and accuracy with which humans recognize and distinguish between, for example, different human faces or other similar context-sensitive, highly structured visual images.” ¨ Problem domains where computing agent has ongoing, dynamic interaction with environment or where computations may have fuzzy components

Potential Advantages of Connectionist Fine-Grain Models ¨ Scalability ¨ Avoid slow storage bottleneck since there is no physically separated memory ¨ Flexibility (not necessary to re-wire or reprogram with additional components) ¨ Graceful Degradation – neurons keep dying in our brains and yet we continue to function reasonably well

Potential Advantages of Connectionist Fine-Grain Models ¨ Robustness – If one component of a tightly coupled supercomputer fails, the whole system can fall apart ¨ Energy consumption – dissipate much less heat
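
Graceful degradation can be illustrated with a small Hopfield-style network, one of the ANN types listed earlier. In this sketch a single pattern is stored in the edge weights, about a third of the edges are then cut, and the damaged network still recovers the pattern from a corrupted cue. The pattern, the damage scheme and all function names are assumptions chosen for a deterministic toy example, not results from the paper.

```python
from typing import Callable, List


def hebbian_weights(pattern: List[int]) -> List[List[int]]:
    """Store one +1/-1 pattern in the edge weights (Hebbian rule, no self-loops)."""
    n = len(pattern)
    return [[pattern[i] * pattern[j] if i != j else 0 for j in range(n)] for i in range(n)]


def damage(weights: List[List[int]], should_cut: Callable[[int, int], bool]) -> List[List[int]]:
    """Return a copy in which every edge (i, j) selected by should_cut is set to 0."""
    n = len(weights)
    return [[0 if should_cut(i, j) else weights[i][j] for j in range(n)] for i in range(n)]


def recall(weights: List[List[int]], cue: List[int]) -> List[int]:
    """One synchronous update: each node takes the sign of its weighted input."""
    return [1 if sum(w * s for w, s in zip(row, cue)) >= 0 else -1 for row in weights]


pattern = [1, -1, 1, 1, -1, -1, 1, -1, 1, 1, -1, 1]  # arbitrary 12-node pattern
cue = pattern.copy()
cue[0], cue[5] = -cue[0], -cue[5]                     # corrupt two entries of the cue

healthy = hebbian_weights(pattern)
injured = damage(healthy, lambda i, j: (i + j) % 3 == 0)  # cut one third of the edges

print("healthy network recalls pattern:", recall(healthy, cue) == pattern)
print("injured network recalls pattern:", recall(injured, cue) == pattern)
```

The stored information is spread across many redundant edges, so losing a fraction of them weakens each node's input without flipping its sign. A coarse-grain machine has no comparable redundancy at the level of its individual components.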

Summary and Conclusions ¨ Connectionist models such as ANNs or CA are capable of massively parallel information processing ¨ They are legitimate candidates for an alternative approach to the design of highly parallel computers of the future ¨ These models are conceptually, architecturally and functionally very different from traditional models

Summary and Conclusions ¨ Connectionist models are: – Very fine-grained – Basic operations are much simpler – Several orders of magnitude more processors – Memory concept is totally different

Summary and Conclusions ¨ Connectionist models are: – Yet to be built – Idea is in its infancy – Currently still too far-fetched an endeavor – Promising future as the underlying abstract model of the general-purpose massively parallel computers of tomorrow

Questions