A Methodology for Implementing Highly Concurrent Data Objects

A Methodology for Implementing Highly Concurrent Data Objects by Maurice Herlihy Slides by Vincent Rayappa

Introduction • Objective: Describe a methodology of transforming sequential data structures into concurrent ones. – Use LL/SC instructions to accomplish this. • Why LL/SC? – Universally applicable to transform data structures into concurrent ones – Easier than using CAS. • A lot of modern architectures support LL/SC: PPC, ARM, MIPS etc. – Not on x 86? 2

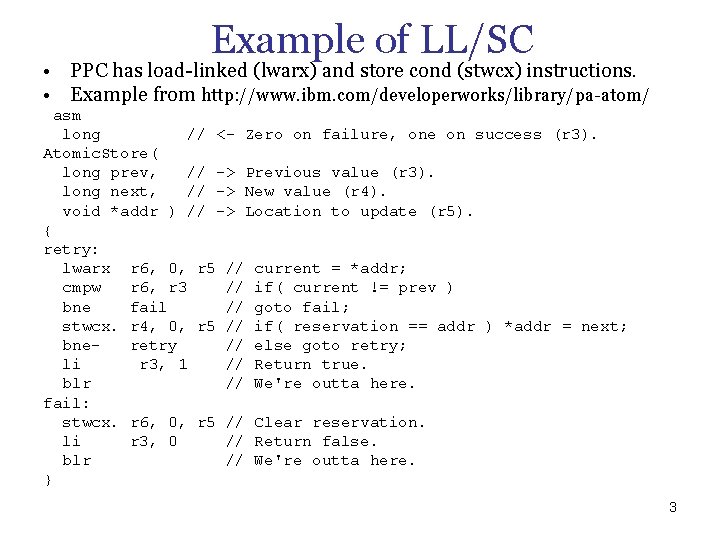

Example of LL/SC • PPC has load linked (lwarx) and store cond (stwcx) instructions. • Example from http: //www. ibm. com/developerworks/library/pa atom/ asm long // <- Zero on failure, one on success (r 3). Atomic. Store( long prev, // -> Previous value (r 3). long next, // -> New value (r 4). void *addr ) // -> Location to update (r 5). { retry: lwarx r 6, 0, r 5 // current = *addr; cmpw r 6, r 3 // if( current != prev ) bne fail // goto fail; stwcx. r 4, 0, r 5 // if( reservation == addr ) *addr = next; bneretry // else goto retry; li r 3, 1 // Return true. blr // We're outta here. fail: stwcx. r 6, 0, r 5 // Clear reservation. li r 3, 0 // Return false. blr // We're outta here. } 3

General Methodology • Programmer provides a non concurrent implementation of data structure (with restrictions) • By applying transformation techniques and memory management steps the data structure is made concurrent. • Small vs. big objects: – Small: efficient to whole data structure from one memory region to another – Big: inefficient to copy whole data structure. 4

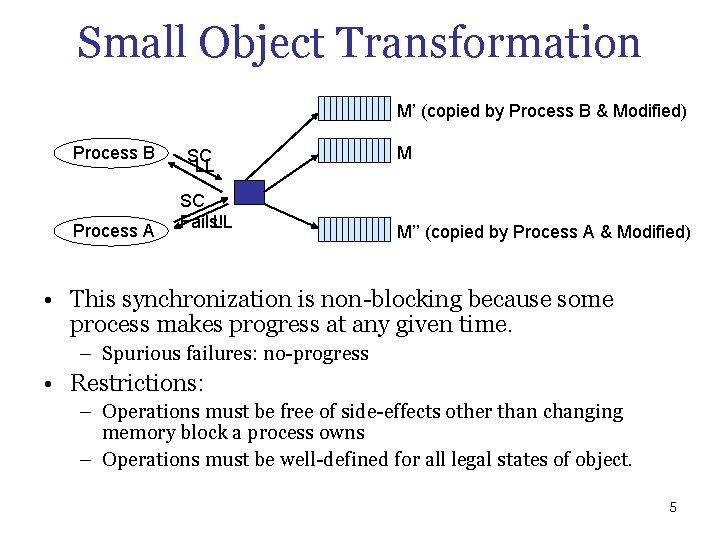

Small Object Transformation M’ (copied by Process B & Modified) Process B Process A SC LL Fails! M M’’ (copied by Process A & Modified) • This synchronization is non blocking because some process makes progress at any given time. – Spurious failures: no progress • Restrictions: – Operations must be free of side effects other than changing memory block a process owns – Operations must be well defined for all legal states of object. 5

Small Object Memory Management • Each process owns memory block big enough to copy data structure – When version pointer successfully updated, process releases ownership of new block and acquires ownership of old block. • Since only once process can swing the pointer each block has well defined owner. 6

Comparison to Type stable memory • TSM: value for tstable was left undefined. • Here we have clearer management (and recycling of memory). – Given up ownership of ‘new version’ when SC succeeds – Acquire ownership of ‘old version’ when SC succeeds. 7

Race condition • Stale read are still possible. In previous example: – Processes A and B read pointer to M – Process A updates versions from M to M’. – Now A owns M and as part of next operation can use it to copy/update contents from current version. – If Process B (because it is slow) is still reading M, it can see incomplete edits that A in now doing! • Operations on stale data can cause unpredictable behavior – Violates the ‘Operations must be well defined …’ restriction. • Prevent operations based on stale data by validating data before using it. 8

![Validating data • Use counters check[0] & check[1] – use 32 bit integers, making Validating data • Use counters check[0] & check[1] – use 32 bit integers, making](http://slidetodoc.com/presentation_image/aa5ff81393b67a664cc1b7164217718b/image-9.jpg)

Validating data • Use counters check[0] & check[1] – use 32 bit integers, making validation robust • Modify: check[0]++ > modify > check[1]++ • Copy: read check[1], copy, read check[0] == check[1] ? . – Yes: copy is consistent – No: we are reading edits in progress i. e. edits in progress • Can also be implemented in hardware – Not clear modern architectures support this. 9

Example: Priority Queue • A binary tree where a node have a value greater than both the subtrees (max. priority queue) • Operations supported – Peak at Max (or min) value – Extract max value – Insert new value • Priority queues implemented via heap data structure encoded as an array. 10

![typedef struct { pqueue_type version; unsigned check[2]; } Pqueue_type; static Pqueue_type *new_pqueue; red – typedef struct { pqueue_type version; unsigned check[2]; } Pqueue_type; static Pqueue_type *new_pqueue; red –](http://slidetodoc.com/presentation_image/aa5ff81393b67a664cc1b7164217718b/image-11.jpg)

typedef struct { pqueue_type version; unsigned check[2]; } Pqueue_type; static Pqueue_type *new_pqueue; red – sequential object blue – concurrent object Q- Shared global int Pqueue_deq(Pqueue_type **Q){ Pqueue_type *old_pqueue; /* concurrent object */ pqueue_type *old_version, *new_version; /* seq object */ int result; unsigned first, last; while (1) { Calls LL old_pqueue = load_linked(Q); Concurrent read of old_pqueue/old_version possible old_version = &old_pqueue >version; new_version = &new_pqueue >version; A process ‘owns’ new_version; others can still read new_version first = old_pqueue >check[1]; copy(old_version, new_version); Guard against concurrent read of new_version last = old_pqueue >check[0]; Consistency check if (first == last) { result = pqueue_deq(new_version); if (store_conditional(Q, new_version)) break; Calls SC } /* if */ } /* while */ new_pqueue = old_pqueue; return result; } /* Pqueue_deq */ 11

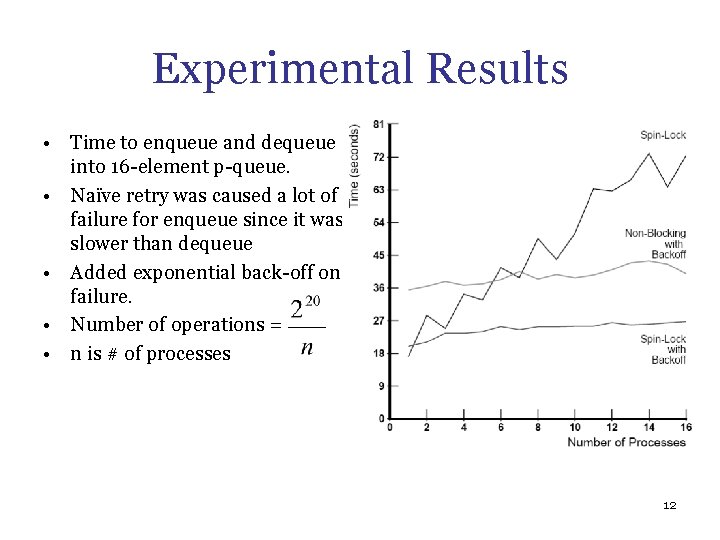

Experimental Results • Time to enqueue and dequeue into 16 element p queue. • Naïve retry was caused a lot of failure for enqueue since it was slower than dequeue • Added exponential back off on failure. • Number of operations = • n is # of processes 12

Large Objects • Large objects cannot be copied fully • Sequential operations create logically distinct version of objects – As opposed to changing them in place – Logically – not physically – distinct since programmer is free to share memory between old and new versions. • Concurrent operation: – Read pointer using LL – Use sequential operation to create new version – Swing pointer to new version using SC 13

Large Object Memory Management • Memory block required per sequential operation not fixed – Depends how much sharing happens between old and new versions • Processes own block of memory. – If they run out of block, they might have to borrow blocks from a common pool. • Memory managed via recoverable set data structure. 14

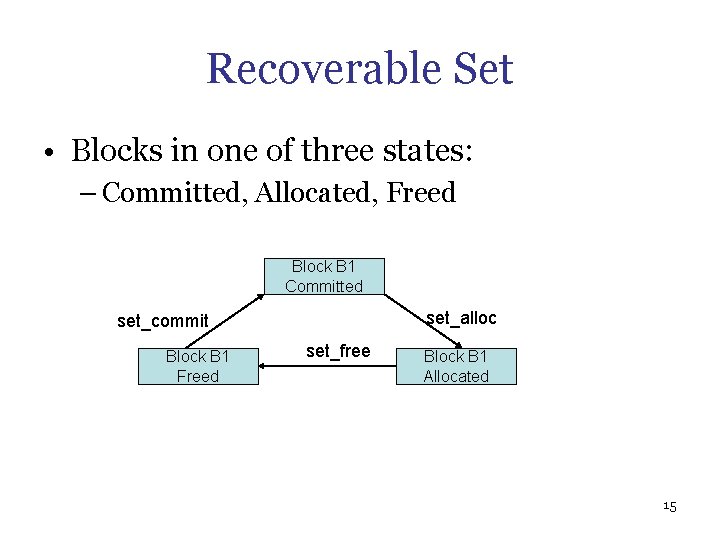

Recoverable Set • Blocks in one of three states: – Committed, Allocated, Freed Block B 1 Committed set_alloc set_commit Block B 1 Freed set_free Block B 1 Allocated 15

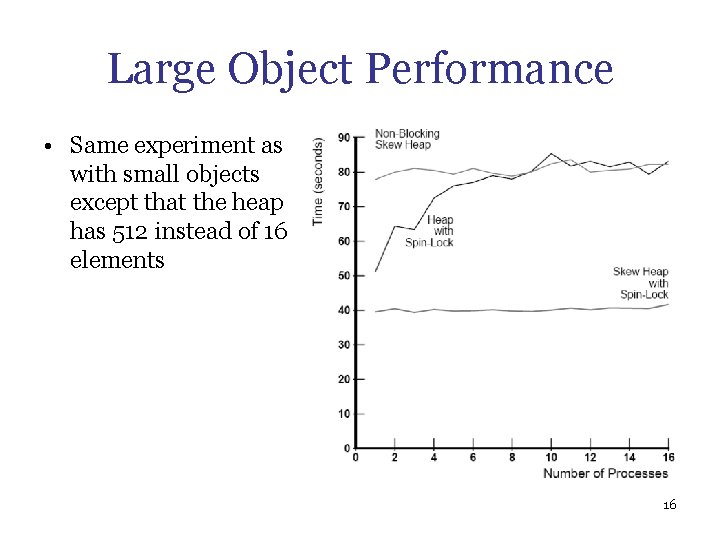

Large Object Performance • Same experiment as with small objects except that the heap has 512 instead of 16 elements 16

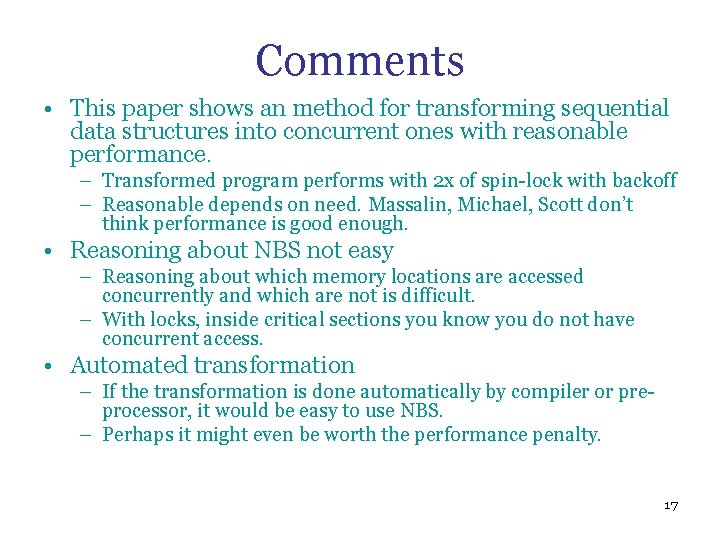

Comments • This paper shows an method for transforming sequential data structures into concurrent ones with reasonable performance. – Transformed program performs with 2 x of spin lock with backoff – Reasonable depends on need. Massalin, Michael, Scott don’t think performance is good enough. • Reasoning about NBS not easy – Reasoning about which memory locations are accessed concurrently and which are not is difficult. – With locks, inside critical sections you know you do not have concurrent access. • Automated transformation – If the transformation is done automatically by compiler or pre processor, it would be easy to use NBS. – Perhaps it might even be worth the performance penalty. 17

- Slides: 17