A Methodology for Creating Fast Wait-Free Data Structures


A Methodology for Creating Fast Wait-Free Data Structures. Alex Kogan and Erez Petrank, Computer Science, Technion, Israel

Concurrency & (non-blocking) synchronization
- Concurrent data structures require (fast and scalable) synchronization
- Non-blocking synchronization:
  - No thread is blocked waiting for another thread to complete
  - No locks / critical sections

Lock-free (LF) algorithms
- Among all threads trying to apply operations on the data structure, one will succeed
- Opportunistic approach:
  - read some part of the data structure
  - make an attempt to apply an operation
  - when the attempt fails, retry
- Many scalable and efficient algorithms
- ✓ Global progress
- All but one thread may starve
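
The opportunistic read-attempt-retry loop can be sketched in Java with a CAS on a shared counter. This is a minimal illustration of the lock-free pattern only, not one of the algorithms from the talk; the class name `LockFreeCounter` is hypothetical.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical example: a shared counter updated with the lock-free
// read / attempt / retry pattern.
class LockFreeCounter {
    private final AtomicLong value = new AtomicLong();

    // Adds delta and returns the new value. Among competing threads one CAS
    // always succeeds (global progress), but a given thread may keep losing.
    long add(long delta) {
        while (true) {
            long current = value.get();                // read the shared state
            long next = current + delta;
            if (value.compareAndSet(current, next)) {  // attempt the update
                return next;
            }
            // CAS failed: another thread changed the value first, so retry
        }
    }

    long get() {
        return value.get();
    }

    public static void main(String[] args) throws InterruptedException {
        LockFreeCounter counter = new LockFreeCounter();
        Thread[] threads = new Thread[4];
        for (int i = 0; i < threads.length; i++) {
            threads[i] = new Thread(() -> {
                for (int k = 0; k < 10_000; k++) counter.add(1);
            });
            threads[i].start();
        }
        for (Thread t : threads) t.join();
        System.out.println(counter.get()); // prints 40000
    }
}
```

Nothing bounds how many times one thread's `compareAndSet` can fail under contention, which is exactly the starvation risk noted above.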

Wait-free (WF) algorithms
- A thread completes its operation in a bounded number of steps, regardless of what other threads are doing
- Particularly important property in several domains, e.g., real-time systems and operating systems
- Commonly regarded as too inefficient and complicated to design
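
For contrast, here is a minimal wait-free sketch. It assumes, beyond what the slides say, that each thread has a fixed ID in `0..n-1`. Each thread increments only its own slot, so no operation ever retries. The class `WaitFreeCounter` is hypothetical and far simpler than a wait-free queue.

```java
import java.util.concurrent.atomic.AtomicLongArray;

// Hypothetical example: a wait-free counter. Assumes every thread has a
// fixed ID in 0..n-1 and only thread `tid` ever calls increment(tid).
class WaitFreeCounter {
    private final AtomicLongArray slots;

    WaitFreeCounter(int nThreads) {
        slots = new AtomicLongArray(nThreads);
    }

    // One read and one write of the caller's own slot: no retry loop, so it
    // finishes in a constant number of steps whatever other threads do.
    void increment(int tid) {
        slots.set(tid, slots.get(tid) + 1);
    }

    // Reads each of the n slots once: at most n steps, again independent of
    // other threads. Returns a value between the totals at the start and the
    // end of the call.
    long sum() {
        long total = 0;
        for (int i = 0; i < slots.length(); i++) total += slots.get(i);
        return total;
    }

    public static void main(String[] args) {
        WaitFreeCounter counter = new WaitFreeCounter(3);
        counter.increment(0);
        counter.increment(2);
        counter.increment(2);
        System.out.println(counter.sum()); // prints 3
    }
}
```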

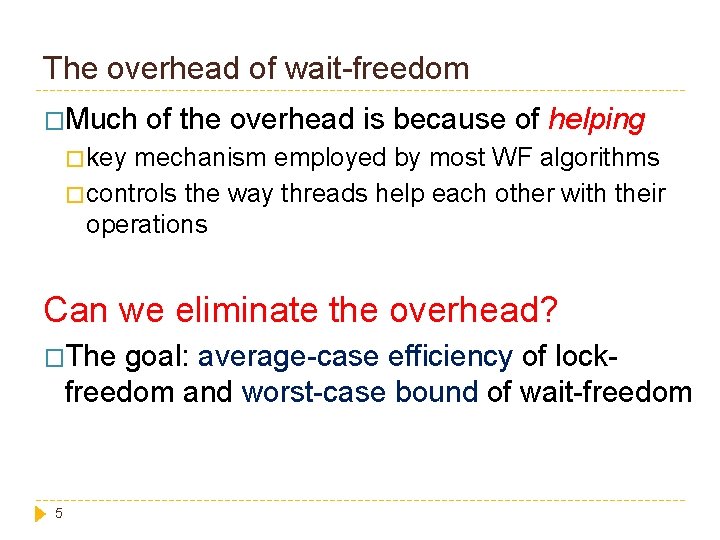

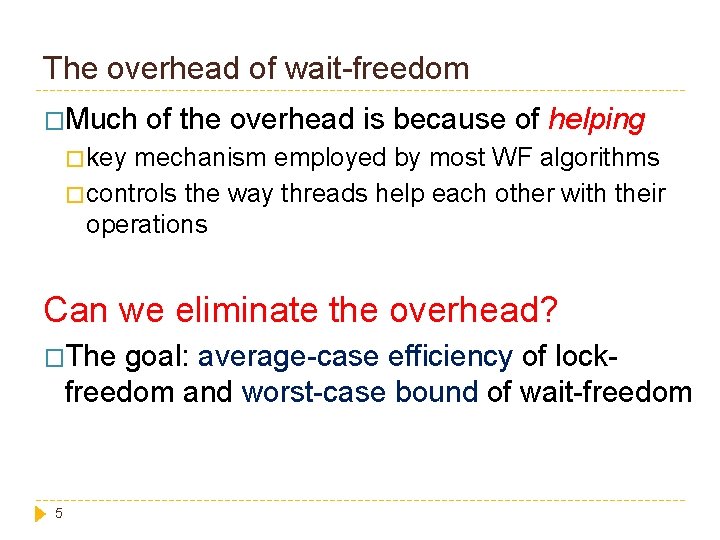

The overhead of wait-freedom
- Much of the overhead is because of helping:
  - the key mechanism employed by most WF algorithms
  - it controls the way threads help each other with their operations
- Can we eliminate the overhead?
- The goal: the average-case efficiency of lock-freedom and the worst-case bound of wait-freedom

Why is helping slow?
- A thread helps others immediately when it starts its operation
- All threads help others in exactly the same order, which causes contention and redundant work
- Each operation has to be applied exactly once, which usually results in a higher number of expensive atomic operations

| | Lock-free MS-queue (PODC 1996) | Wait-free KP-queue (PPoPP 2011) |
|---|---|---|
| # CASs in enqueue | 2 | 3 |
| # CASs in dequeue | 1 | 4 |

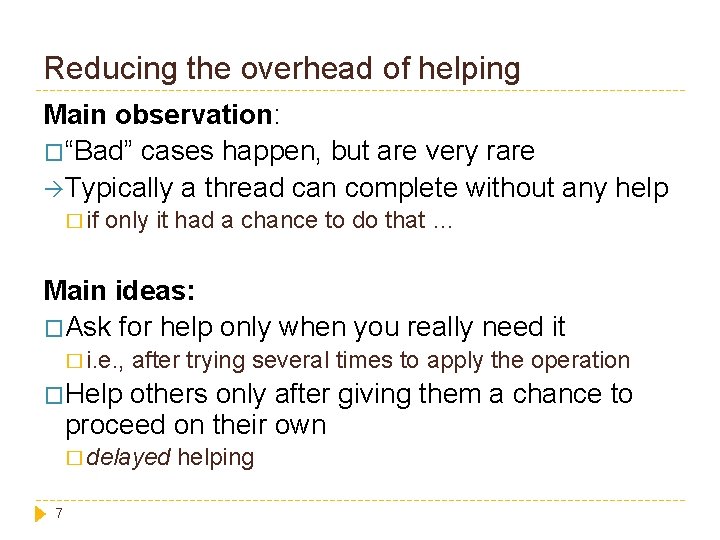

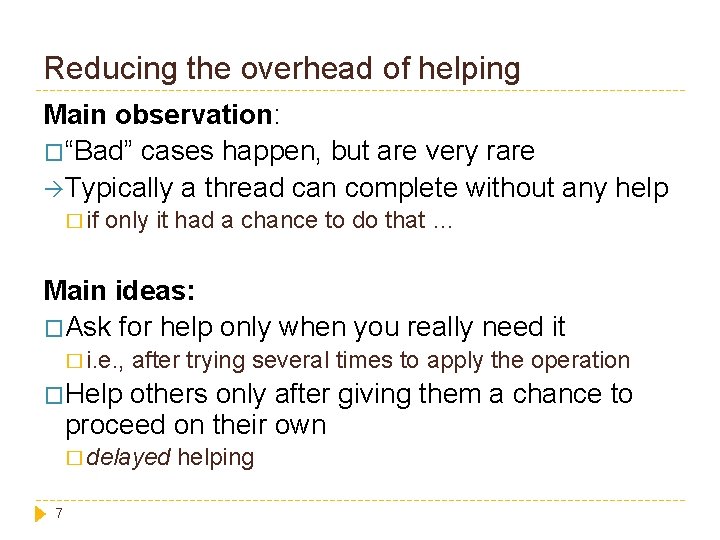

Reducing the overhead of helping
- Main observation:
  - "Bad" cases happen, but are very rare
  - Typically a thread can complete without any help, if only it had a chance to do so…
- Main ideas:
  - Ask for help only when you really need it, i.e., after trying several times to apply the operation
  - Help others only after giving them a chance to proceed on their own: delayed helping

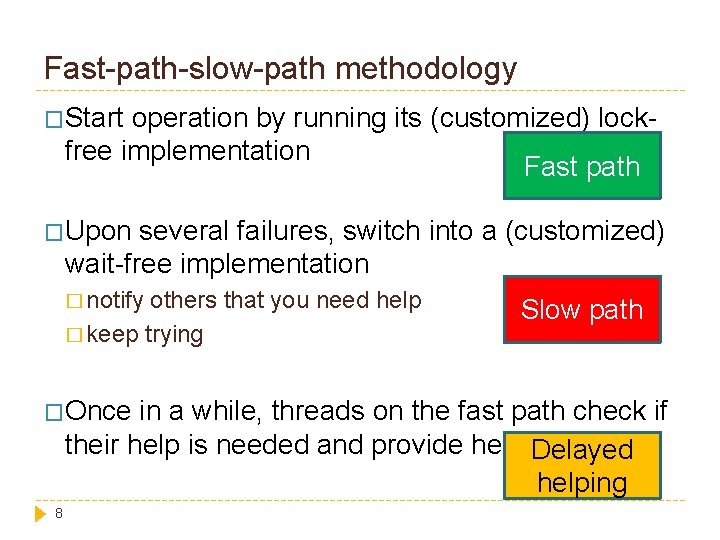

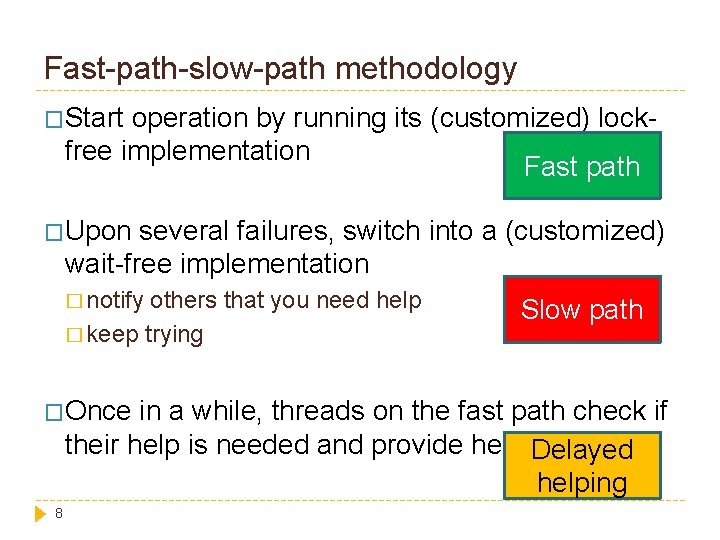

Fast-path-slow-path methodology
- Fast path: start the operation by running its (customized) lock-free implementation
- Slow path: upon several failures, switch to a (customized) wait-free implementation:
  - notify others that you need help
  - keep trying
- Delayed helping: once in a while, threads on the fast path check whether their help is needed and provide help

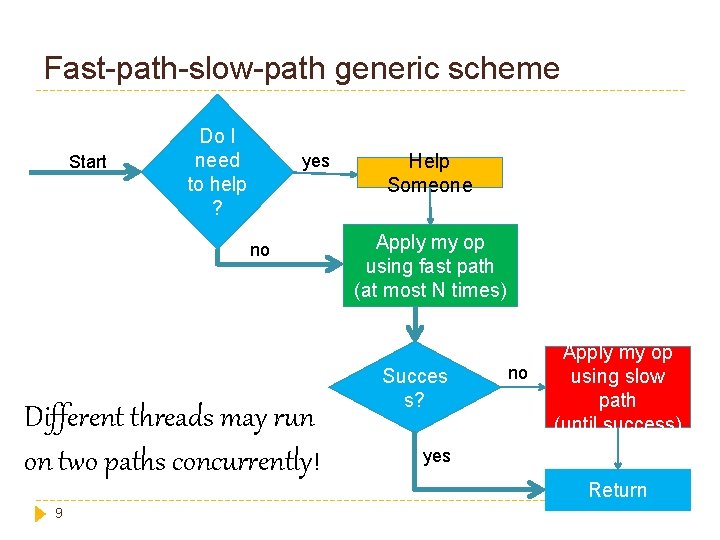

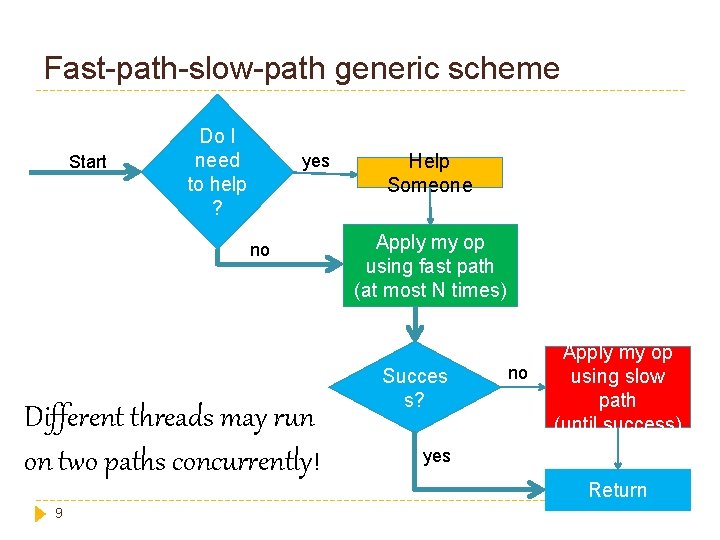

Fast-path-slow-path generic scheme
1. Start: do I need to help? If yes, help someone.
2. Apply my op using the fast path (at most N times).
3. Success? If yes, return.
4. If not, apply my op using the slow path (until success), then return.

Different threads may run on the two paths concurrently!
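
The flowchart can be sketched as a Java skeleton. The hook names (`helpNeeded`, `helpSomeone`, `tryFastPathOnce`, `runSlowPath`) and the class `FastPathSlowPath` are hypothetical placeholders. The customized lock-free and wait-free algorithms they would call are not shown, and `maxFailures` plays the role of N.

```java
// Hypothetical skeleton of the generic fast-path-slow-path scheme.
// Subclasses plug in the customized lock-free and wait-free algorithms.
abstract class FastPathSlowPath {
    private final int maxFailures; // N in the flowchart (MAX_FAILURES)

    FastPathSlowPath(int maxFailures) {
        this.maxFailures = maxFailures;
    }

    protected abstract boolean helpNeeded(int tid);      // "Do I need to help?"
    protected abstract void helpSomeone(int tid);        // complete another thread's slow-path op
    protected abstract boolean tryFastPathOnce(int tid); // one lock-free attempt; true on success
    protected abstract void runSlowPath(int tid);        // wait-free; returns only after success

    // Returns true if the operation completed on the fast path, false if it
    // needed the slow path.
    final boolean apply(int tid) {
        if (helpNeeded(tid)) {
            helpSomeone(tid);
        }
        for (int trial = 0; trial < maxFailures; trial++) {
            if (tryFastPathOnce(tid)) {
                return true;
            }
        }
        runSlowPath(tid); // announce the operation and keep trying, with help
        return false;
    }
}
```

Because `apply` is the only entry point, a thread that has fallen back to `runSlowPath` and a thread still looping on `tryFastPathOnce` can run at the same time. The customized algorithms must tolerate that.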

Fast-path-slow-path: queue example
- Fast path: MS-queue
- Slow path: KP-queue

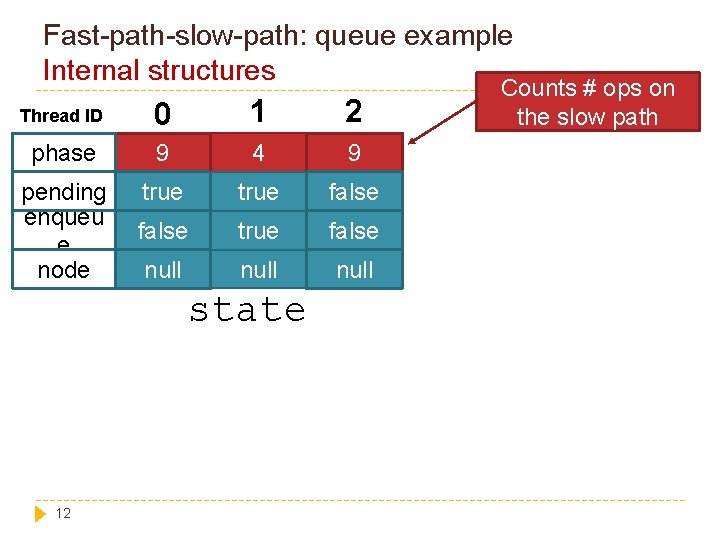

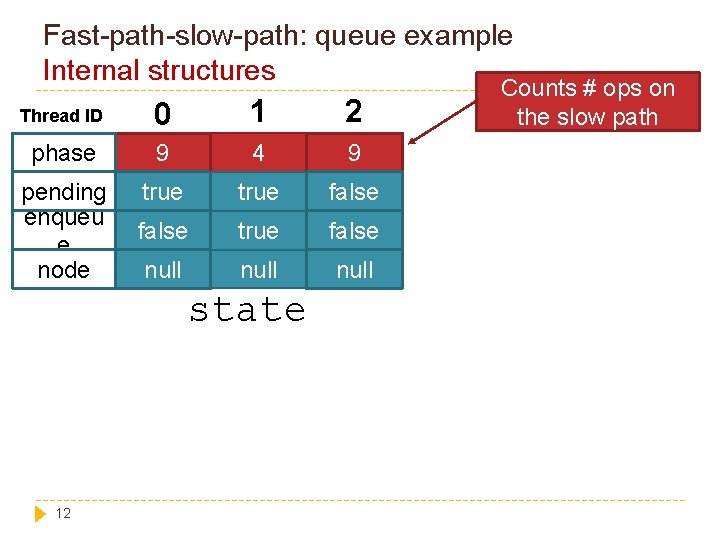

Fast-path-slow-path: queue example, internal structures: the per-thread `state` array

| Thread ID | 0 | 1 | 2 |
|---|---|---|---|
| phase | 9 | 4 | 9 |

- phase: counts the number of the thread's operations on the slow path
- pending: is there a pending operation on the slow path?
- enqueue, node: what is the pending operation?
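
A sketch of the `state` array follows. It assumes immutable descriptors that are replaced atomically. The field types are assumptions, `Object` stands in for the queue's node type, and `StateArray.needsHelp` is a hypothetical helper-side query.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;

// Hypothetical sketch of the per-thread `state` array of the queue example.
// Descriptors are immutable; a thread's entry is changed by installing a new one.
final class OpDesc {
    final long phase;       // number of this thread's operations on the slow path
    final boolean pending;  // is there a pending operation on the slow path?
    final boolean enqueue;  // which operation: enqueue (true) or dequeue (false)
    final Object node;      // node to enqueue; Object is a placeholder type

    OpDesc(long phase, boolean pending, boolean enqueue, Object node) {
        this.phase = phase;
        this.pending = pending;
        this.enqueue = enqueue;
        this.node = node;
    }
}

final class StateArray {
    final AtomicReferenceArray<OpDesc> state;

    StateArray(int nThreads) {
        state = new AtomicReferenceArray<>(nThreads);
        for (int i = 0; i < nThreads; i++) {
            state.set(i, new OpDesc(0, false, true, null)); // nothing pending yet
        }
    }

    // Does thread tid have a pending slow-path operation whose phase is at
    // most maxPhase?
    boolean needsHelp(int tid, long maxPhase) {
        OpDesc d = state.get(tid);
        return d.pending && d.phase <= maxPhase;
    }
}
```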

Fast-path-slow-path: queue example, internal structures: the per-thread `helpRecords`

| Thread ID | 0 | 1 | 2 |
|---|---|---|---|
| curTid | 1 | 0 | 0 |
| lastPhase | 4 | 5 | 9 |
| nextCheck | 3 | 8 | 0 |

- curTid: ID of the next thread that I will try to help
- lastPhase: phase number of that thread at the time the record was created
- nextCheck: decremented with every one of my operations; I check whether my help is needed when this counter reaches 0
- HELPING_DELAY controls the frequency of helping checks
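
A sketch of delayed helping with these records follows. The record fields mirror the slide, but the `reset` policy and the exact check are assumptions. The policy advances `curTid` round-robin, snapshots its phase and reloads `nextCheck` from HELPING_DELAY. The check helps only an operation already pending when the record was created. `DelayedHelper` and the `helpOp` callback are hypothetical, and the callback stands in for the slow-path helping code.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;
import java.util.function.IntConsumer;

// Hypothetical sketch of delayed helping driven by per-thread help records.
final class DelayedHelper {
    static final class Desc {              // minimal stand-in for a state entry
        final long phase;
        final boolean pending;
        Desc(long phase, boolean pending) { this.phase = phase; this.pending = pending; }
    }

    static final class HelpRecord {
        int curTid;      // ID of the next thread I will try to help
        long lastPhase;  // phase of curTid when this record was (re)created
        long nextCheck;  // decremented on each of my operations
    }

    final AtomicReferenceArray<Desc> state;
    private final HelpRecord[] records;
    private final int n;
    private final long helpingDelay;       // HELPING_DELAY
    private final IntConsumer helpOp;      // hypothetical: runs the slow-path algorithm for a thread

    DelayedHelper(int n, long helpingDelay, IntConsumer helpOp) {
        this.n = n;
        this.helpingDelay = helpingDelay;
        this.helpOp = helpOp;
        state = new AtomicReferenceArray<>(n);
        for (int i = 0; i < n; i++) state.set(i, new Desc(0, false));
        records = new HelpRecord[n];
        for (int i = 0; i < n; i++) {
            records[i] = new HelpRecord();
            records[i].curTid = i;
            reset(records[i]);             // first target: thread (i + 1) % n
        }
    }

    // Move on to the next thread and remember its current phase.
    private void reset(HelpRecord r) {
        r.curTid = (r.curTid + 1) % n;
        r.lastPhase = state.get(r.curTid).phase;
        r.nextCheck = helpingDelay;
    }

    // Called by thread tid at the start of each of its operations.
    void helpIfNeeded(int tid) {
        HelpRecord r = records[tid];
        if (--r.nextCheck > 0) return;     // not time to check yet
        Desc d = state.get(r.curTid);
        // Help only an operation that was already pending when the record was
        // created, i.e. one that had HELPING_DELAY of my operations to finish alone.
        if (d.pending && d.phase <= r.lastPhase) helpOp.accept(r.curTid);
        reset(r);
    }
}
```

With HELPING_DELAY = 0 the counter is already exhausted on every call, so a thread checks on each operation. Larger values trade slower help for fewer checks.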

Fast-path-slow-path: queue example, fast path
1. `help_if_needed()`
2. `int trials = 0; while (trials++ < MAX_FAILURES) { apply_op_with_customized_LF_alg (finish if succeeded) }`
3. switch to the slow path

- MAX_FAILURES controls the number of trials on the fast path
- LF algorithm customization is required to synchronize operations run on the two paths
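
The bounded trial loop of step 2 can be sketched in isolation. For brevity this swaps in a Treiber-stack push for the customized MS-queue operation. The sketch therefore omits the customization that synchronizes with the slow path, and `BoundedFastPathStack` and `tryPushFast` are hypothetical names.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical sketch: a fast path limited to MAX_FAILURES lock-free attempts.
// A Treiber-stack push stands in for the customized MS-queue operation.
final class BoundedFastPathStack<E> {
    private static final class Node<E> {
        final E item;
        final Node<E> next;
        Node(E item, Node<E> next) { this.item = item; this.next = next; }
    }

    private final AtomicReference<Node<E>> top = new AtomicReference<>();

    // Returns true if the push succeeded within maxFailures CAS attempts;
    // false means the caller should switch to the slow path.
    boolean tryPushFast(E item, int maxFailures) {
        for (int trials = 0; trials < maxFailures; trials++) {
            Node<E> oldTop = top.get();
            if (top.compareAndSet(oldTop, new Node<>(item, oldTop))) {
                return true;
            }
            // another thread changed `top` in the meantime; try again
        }
        return false;
    }

    E peek() {
        Node<E> t = top.get();
        return t == null ? null : t.item;
    }
}
```

As in the slide's loop, `maxFailures = 0` skips the fast path entirely, so every operation goes straight to the slow path.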

Fast-path-slow-path: queue example, slow path
1. my phase++
2. announce my operation (in `state`)
3. `apply_op_with_customized_WF_alg` (until finished)

- WF algorithm customization is required to synchronize operations run on the two paths
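
Steps 1 to 3 can be sketched as follows. The sketch assumes the owner increments its own phase, installs a pending descriptor, and then repeats a hypothetical `wfStep` until some thread clears `pending` with a CAS. The wait-free algorithm behind `wfStep` is not shown. `SlowPath` and `markDone` are hypothetical, and the loop is only as bounded as the real algorithm makes it.

```java
import java.util.concurrent.atomic.AtomicReferenceArray;
import java.util.function.IntConsumer;

// Hypothetical sketch of slow-path steps 1-3: bump my phase, announce the
// operation in `state`, then keep stepping until it is no longer pending.
final class SlowPath {
    static final class OpDesc {
        final long phase;
        final boolean pending;
        final boolean enqueue;
        final Object node;     // placeholder for the queue's node type
        OpDesc(long phase, boolean pending, boolean enqueue, Object node) {
            this.phase = phase; this.pending = pending; this.enqueue = enqueue; this.node = node;
        }
    }

    final AtomicReferenceArray<OpDesc> state;

    SlowPath(int nThreads) {
        state = new AtomicReferenceArray<>(nThreads);
        for (int i = 0; i < nThreads; i++) state.set(i, new OpDesc(0, false, true, null));
    }

    // Steps 1-2: only the owner installs a new pending descriptor for itself.
    OpDesc announce(int tid, boolean enqueue, Object node) {
        long myPhase = state.get(tid).phase + 1;   // my phase++
        OpDesc desc = new OpDesc(myPhase, true, enqueue, node);
        state.set(tid, desc);
        return desc;
    }

    // Step 3: wfStep is a hypothetical single step of the customized WF
    // algorithm (not shown); it runs until the operation is finished.
    void run(int tid, boolean enqueue, Object node, IntConsumer wfStep) {
        announce(tid, enqueue, node);
        while (state.get(tid).pending) {
            wfStep.accept(tid);
        }
    }

    // Whoever completes the operation (owner or helper) clears `pending` with
    // a CAS, so only one of them records the completion.
    boolean markDone(int tid, OpDesc expected) {
        OpDesc done = new OpDesc(expected.phase, false, expected.enqueue, expected.node);
        return state.compareAndSet(tid, expected, done);
    }
}
```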

Performance evaluation
- 32-core Ubuntu server with OpenJDK 1.6: 8 × 2.3 GHz quad-core AMD 8356 processors
- The queue is initially empty
- Each thread iteratively performs (100k times):
  - Enqueue-Dequeue benchmark: enqueue and then dequeue
- Measure the threads' completion time as a function of the number of threads
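
A sketch of the Enqueue-Dequeue benchmark loop in Java follows. It uses `java.util.concurrent.ConcurrentLinkedQueue` as the queue under test. That class's documentation says it is based on the Michael-Scott algorithm, but it is not the MS-queue code measured in the talk. `EnqDeqBenchmark` is a hypothetical name, and timing with `System.nanoTime` around thread start and join is a simplification.

```java
import java.util.concurrent.ConcurrentLinkedQueue;

// Hypothetical harness for the Enqueue-Dequeue benchmark.
final class EnqDeqBenchmark {
    // Runs `threads` threads, each doing `iterations` enqueue-then-dequeue
    // pairs on an initially empty queue; returns elapsed time in nanoseconds.
    static long run(int threads, int iterations) throws InterruptedException {
        ConcurrentLinkedQueue<Integer> queue = new ConcurrentLinkedQueue<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            final int id = t;
            workers[t] = new Thread(() -> {
                for (int i = 0; i < iterations; i++) {
                    queue.offer(id); // enqueue
                    queue.poll();    // then dequeue
                }
            });
        }
        long start = System.nanoTime();
        for (Thread w : workers) w.start();
        for (Thread w : workers) w.join();
        return System.nanoTime() - start;
    }

    public static void main(String[] args) throws InterruptedException {
        for (int n = 1; n <= 8; n *= 2) {
            System.out.printf("%d threads: %.3f s%n", n, run(n, 100_000) / 1e9);
        }
    }
}
```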

Performance evaluation [plot: completion time (sec) vs. number of threads; series: MS-queue, KP-queue]

Performance evaluation [plot: completion time (sec) vs. number of threads; series: MS-queue, KP-queue, fast WF (0, 0), fast WF (100, 100), where fast WF (x, y) denotes (MAX_FAILURES, HELPING_DELAY)]

Performance evaluation [plot: completion time (sec) vs. number of threads; series: MS-queue, KP-queue, fast WF (0, 0), fast WF (3, 3), fast WF (10, 10), fast WF (20, 20), fast WF (100, 100)]

The impact of configuration parameters [plot: completion time (sec) vs. number of threads for fast WF (MAX_FAILURES, HELPING_DELAY) = (0, 0) and (10, 10)]

The use of the slow path [plots: % of operations on the slow path vs. number of threads, one panel for enqueue and one for dequeue, for varying MAX_FAILURES and HELPING_DELAY]

Tuning performance parameters
- Why not just always use large values for both parameters (MAX_FAILURES, HELPING_DELAY)?
  - That would (almost) always eliminate the slow path
- Lemma: in the worst case, the number of steps required for a thread to complete an operation on the queue is $O(\mathit{MAX\_FAILURES} + \mathit{HELPING\_DELAY} \cdot n^2)$, where $n$ is the number of threads
- → Tradeoff between average-case performance and the worst-case completion-time bound

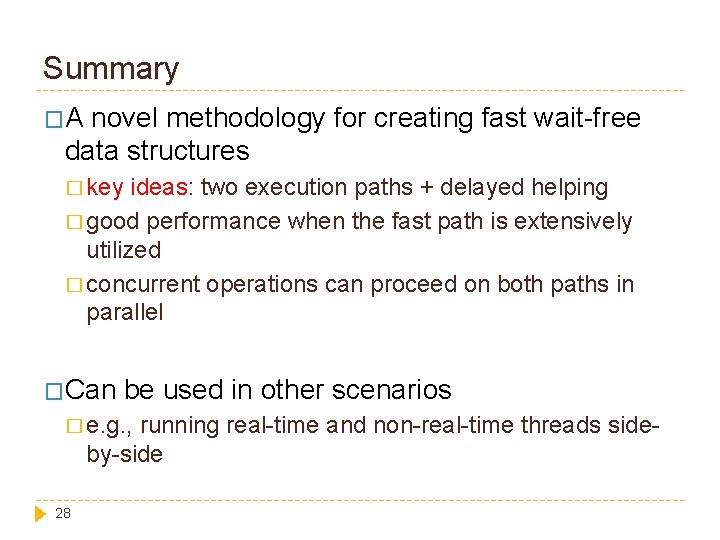

Summary
- A novel methodology for creating fast wait-free data structures
  - key ideas: two execution paths + delayed helping
  - good performance when the fast path is extensively utilized
  - concurrent operations can proceed on both paths in parallel
- Can be used in other scenarios
  - e.g., running real-time and non-real-time threads side by side

Thank you! Questions?