A MapReduce Framework on Heterogeneous Systems




Contents

- Architecture
- Two-level scheduling
- Multi-grain input tasks
- Task flow configuration
- Communication

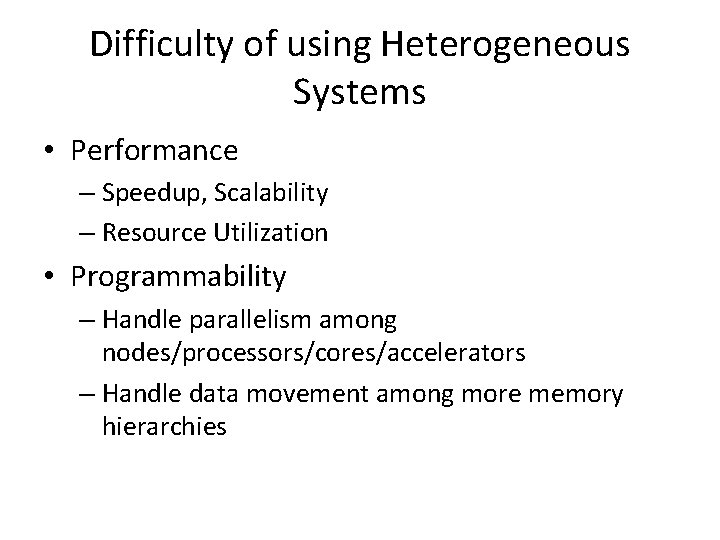

Difficulty of Using Heterogeneous Systems

- Performance
  - Speedup, scalability
  - Resource utilization
- Programmability
  - Handle parallelism among nodes, processors, cores, and accelerators
  - Handle data movement across additional levels of the memory hierarchy

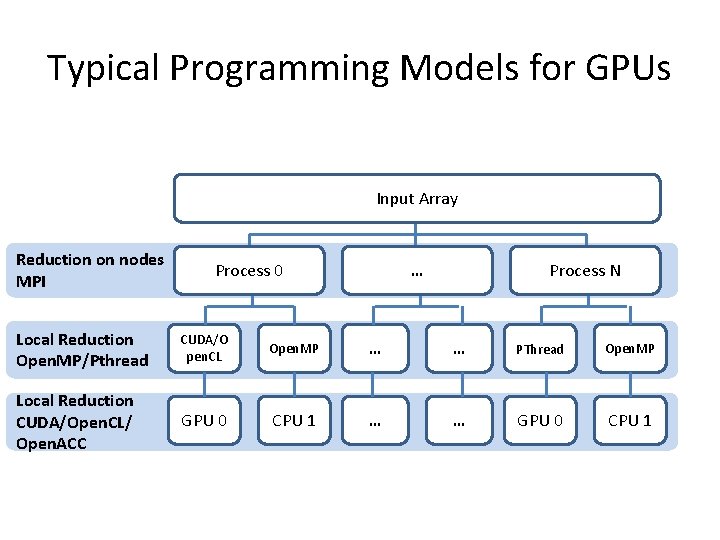

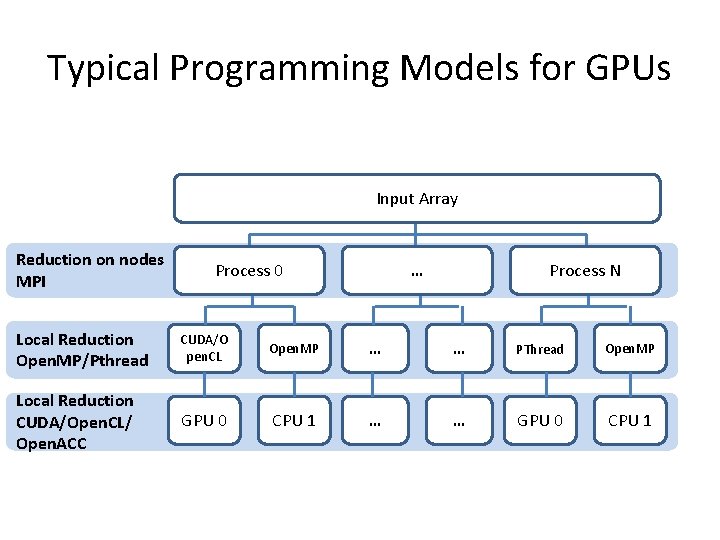

Typical Programming Models for GPUs (figure): the input array is reduced across nodes by MPI processes 0 … N; inside each process, local reduction runs with OpenMP/Pthreads on the CPUs and CUDA/OpenCL/OpenACC on the GPUs (GPU 0, CPU 1, …).

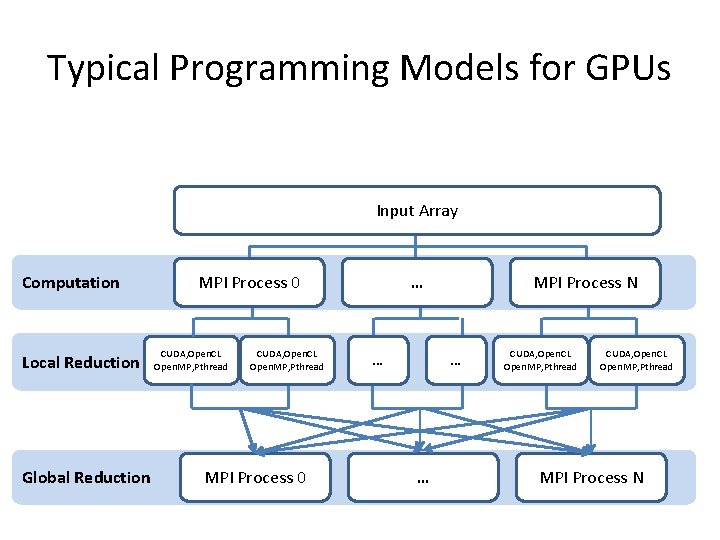

Typical Programming Models for GPUs (figure): layers are input array, global reduction, local reduction, and hardware. MPI processes 0 … N perform the global reduction; CUDA, OpenCL, OpenMP, and Pthreads perform the local reduction on each node's GPUs and CPUs.

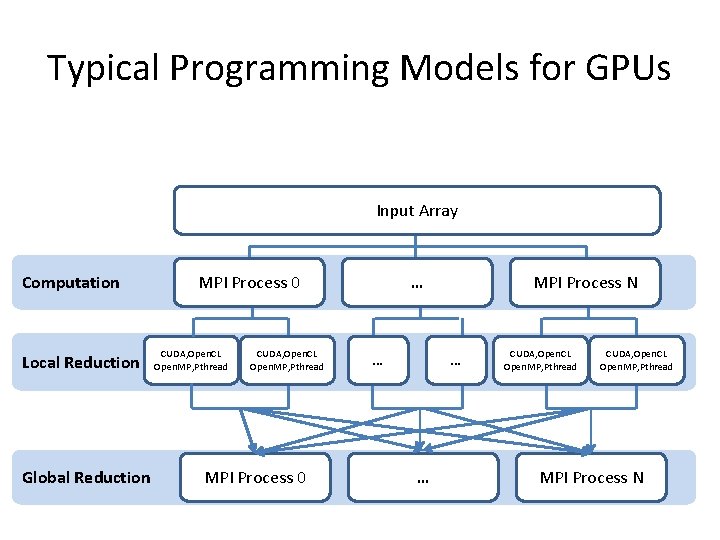

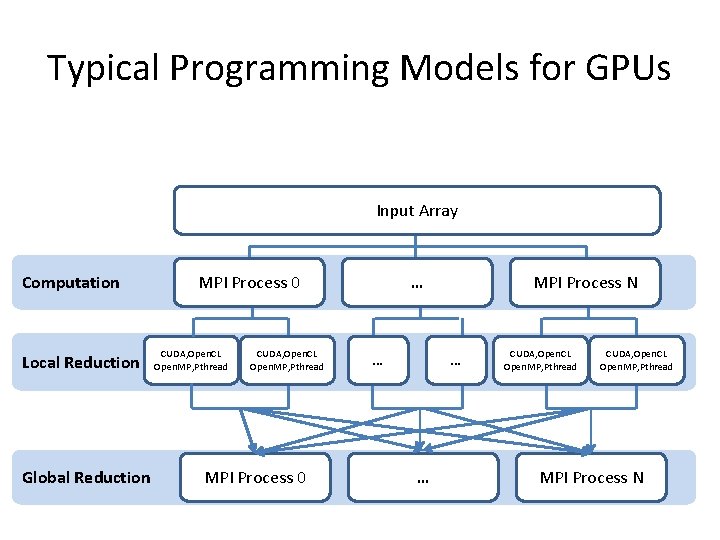

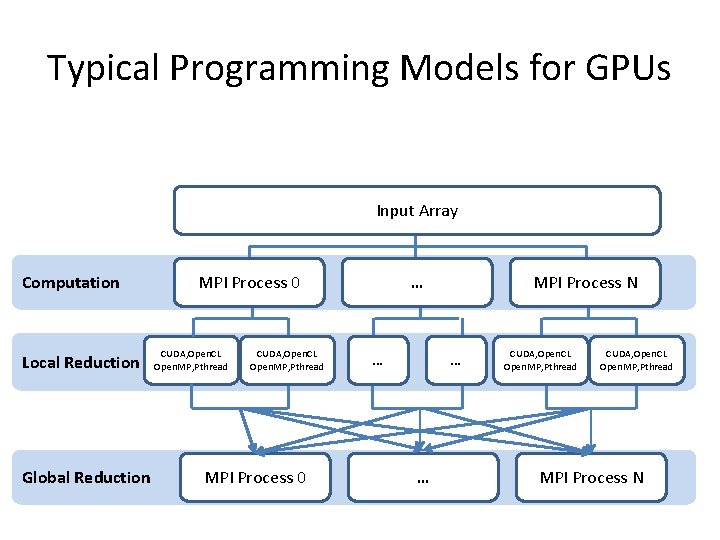

Typical Programming Models for GPUs (figure): input array → computation → local reduction → global reduction. Computation and local reduction use CUDA/OpenCL on GPUs and OpenMP/Pthreads on CPUs inside each of MPI processes 0 … N; the global reduction combines the results across MPI processes.
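The computation → local reduction → global reduction pattern can be sketched in a few lines. This is a minimal stand-in, not the MPI/CUDA code from the slides: each `std::thread` plays the role of one MPI process, the "computation" is squaring each element, and the final `std::accumulate` plays the role MPI_Reduce would play across nodes. The function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Hypothetical stand-in for the MPI + OpenMP/CUDA pattern: each std::thread
// acts as one "process" working on a contiguous slice of the input array.
double hierarchical_sum_of_squares(const std::vector<double>& input,
                                   std::size_t num_processes) {
    assert(num_processes > 0);
    std::vector<double> partial(num_processes, 0.0);
    std::vector<std::thread> procs;
    const std::size_t n = input.size();
    for (std::size_t p = 0; p < num_processes; ++p) {
        procs.emplace_back([&, p] {
            // Contiguous block decomposition of the input array.
            std::size_t first = n * p / num_processes;
            std::size_t last = n * (p + 1) / num_processes;
            double local = 0.0;
            for (std::size_t i = first; i < last; ++i) {
                local += input[i] * input[i];  // computation + local reduction
            }
            partial[p] = local;  // each process writes only its own slot
        });
    }
    for (auto& t : procs) t.join();
    // Global reduction across "processes" (MPI_Reduce in the real setting).
    return std::accumulate(partial.begin(), partial.end(), 0.0);
}
```

Calling `hierarchical_sum_of_squares(v, 3)` with `v = {1, 2, 3, 4}` returns 30 regardless of how many processes are used, which is the property a correct two-level reduction must preserve.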

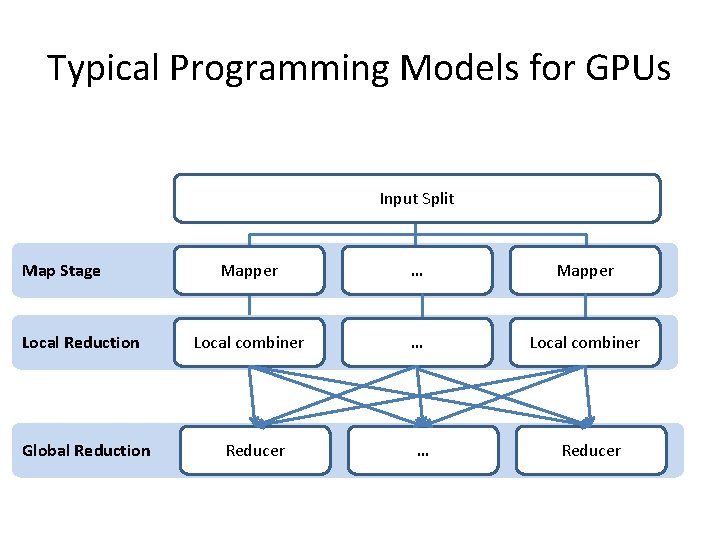

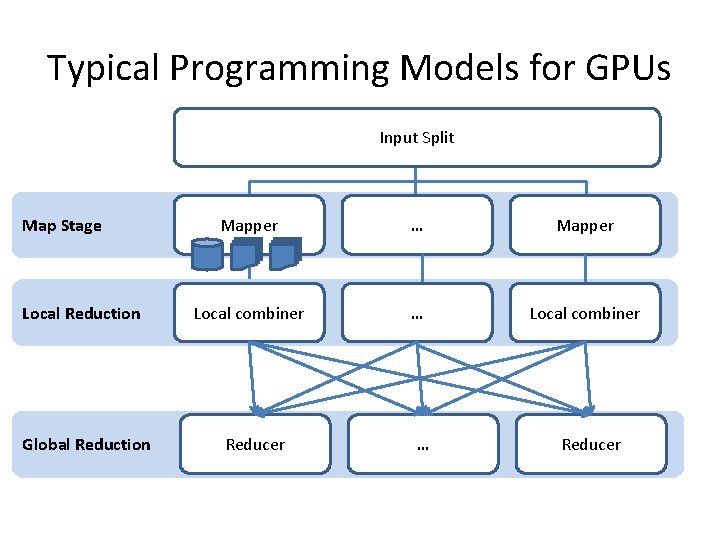

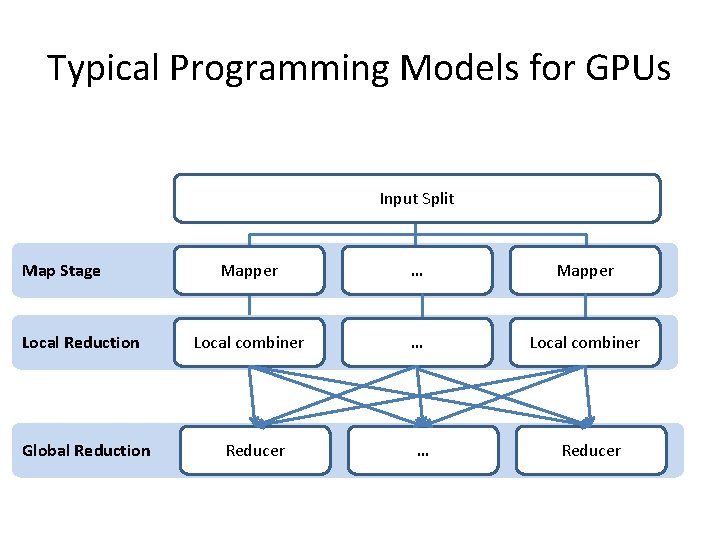

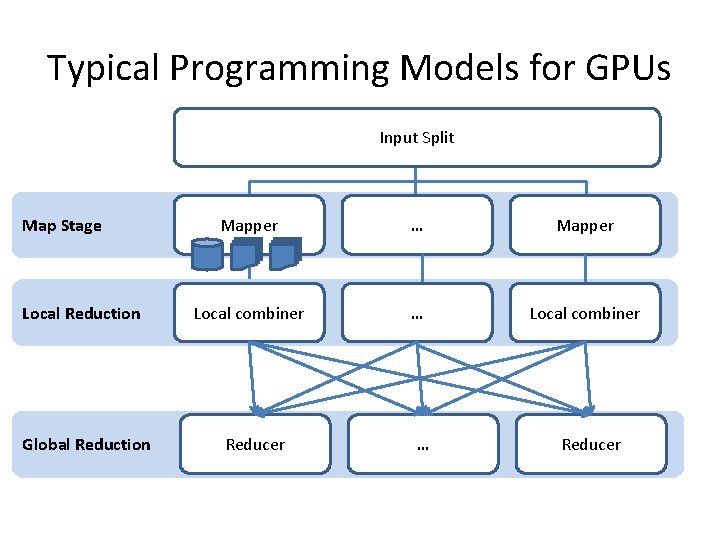

Typical Programming Models for GPUs, MapReduce view (figure): input split → map stage (mapper … mapper) → local reduction (local combiner … local combiner) → global reduction (reducer … reducer).
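The same three stages in MapReduce form can be illustrated with word count. This is a hypothetical sketch, not the framework's API: `map_and_combine` is one mapper whose output is pre-aggregated by a local combiner, and `shuffle_and_reduce` routes each key to reducer `hash(key) % num_reducers` (an assumed partitioning rule) where the global reduction sums the partial counts.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <map>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical word-count job: names are illustrative only.
using Counts = std::map<std::string, int>;

// Map stage + local combiner: emit (word, 1) and aggregate before shuffling.
Counts map_and_combine(const std::string& split) {
    Counts combined;
    std::istringstream in(split);
    std::string word;
    while (in >> word) combined[word] += 1;  // emit (word, 1), combined locally
    return combined;
}

// Shuffle + reduce: each key goes to exactly one reducer, which sums the
// partial counts produced by all mappers.
std::vector<Counts> shuffle_and_reduce(const std::vector<Counts>& mapper_outputs,
                                       std::size_t num_reducers) {
    assert(num_reducers > 0);
    std::vector<Counts> reducers(num_reducers);
    for (const Counts& out : mapper_outputs) {
        for (const auto& [key, value] : out) {
            std::size_t r = std::hash<std::string>{}(key) % num_reducers;
            reducers[r][key] += value;  // global reduction for this key
        }
    }
    return reducers;
}
```

The local combiner is what shrinks shuffle traffic: a mapper that sees "a" a thousand times sends one `(a, 1000)` pair instead of a thousand `(a, 1)` pairs.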

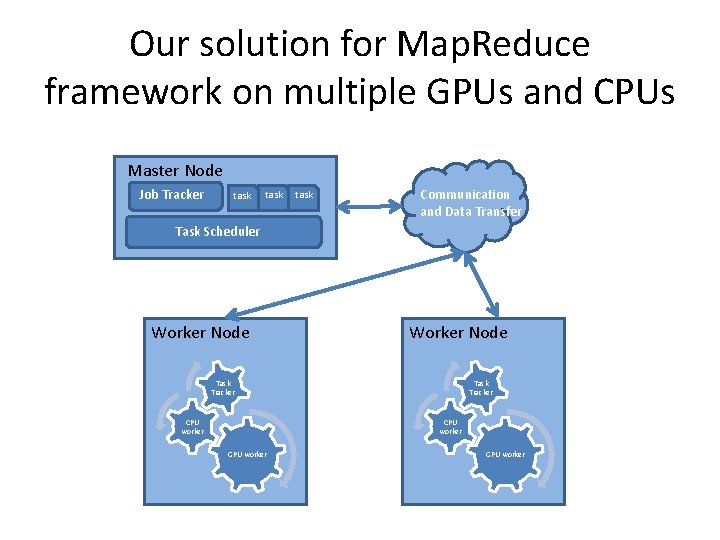

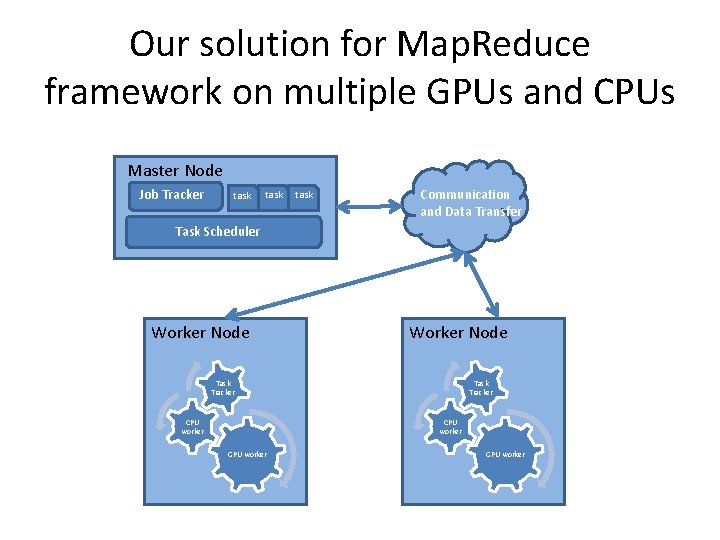

Our solution: a MapReduce framework on multiple GPUs and CPUs (figure)

- Master node: Job Tracker and Task Scheduler
- Communication and data transfer between the master and worker nodes
- Worker node: Task Tracker, CPU workers, and GPU workers

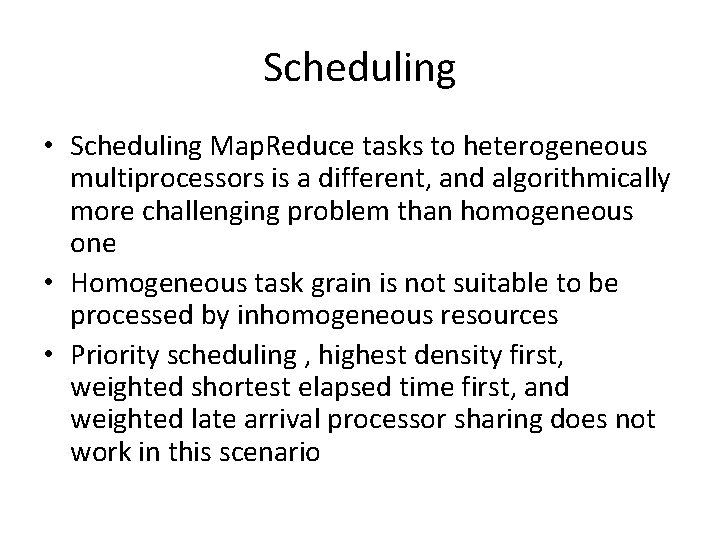

Scheduling

- Scheduling MapReduce tasks onto heterogeneous multiprocessors is a different, and algorithmically more challenging, problem than scheduling onto homogeneous ones.
- A single, homogeneous task grain is not suitable for processing by heterogeneous resources.
- Policies such as priority scheduling, highest density first, weighted shortest elapsed time first, and weighted late arrival processor sharing do not work in this scenario.

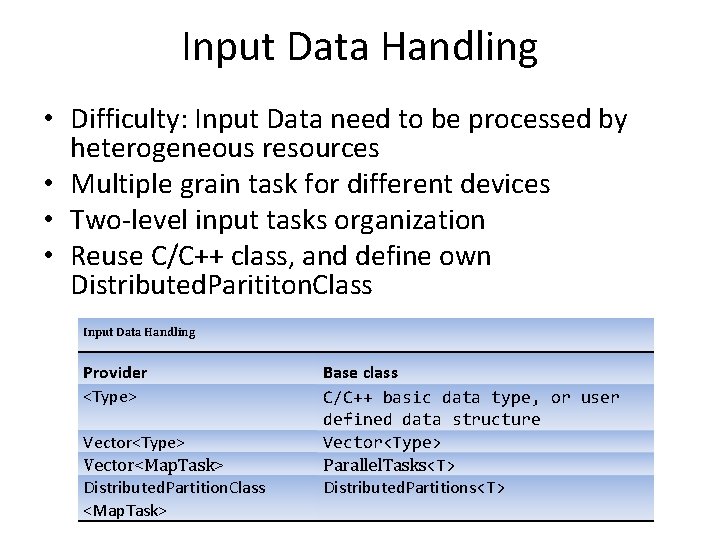

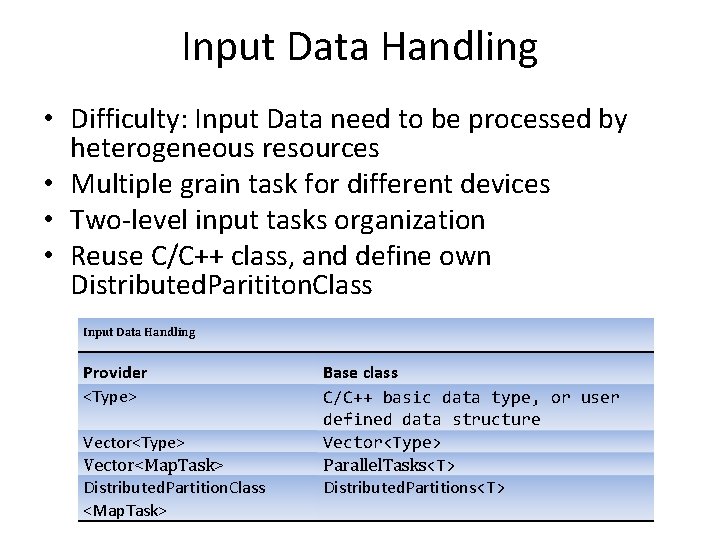

Input Data Handling

- Difficulty: input data needs to be processed by heterogeneous resources.
- Multiple task grains for different devices.
- Two-level input task organization.
- Reuse C/C++ classes, and define our own DistributedPartitionClass.

Figure: class diagram with Provider<Type>, Vector<MapTask>, and DistributedPartitionClass<MapTask>. The base element is a C/C++ basic data type or a user-defined data structure, wrapped as Vector<Type>, ParallelTasks<T>, and DistributedPartitions<T>.
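A two-level input organization might look like the sketch below. Only the names `ParallelTasks<T>` and `DistributedPartitions<T>` come from the slide; their members are assumptions. The outer level holds partitions (the coarse grain a GPU worker would take), and each partition holds individual tasks (the fine grain a CPU worker would take).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch; member functions are assumptions for illustration.
template <typename T>
struct ParallelTasks {            // one partition: a list of fine-grain tasks
    std::vector<T> tasks;
};

template <typename T>
class DistributedPartitions {     // the whole input, split into partitions
public:
    DistributedPartitions(const std::vector<T>& input, std::size_t num_partitions) {
        assert(num_partitions > 0);
        partitions_.resize(num_partitions);
        for (std::size_t i = 0; i < input.size(); ++i) {
            // Contiguous split: element i goes to partition i * k / n.
            std::size_t p = i * num_partitions / input.size();
            partitions_[p].tasks.push_back(input[i]);
        }
    }
    // Coarse grain: a GPU worker would take a whole partition.
    const ParallelTasks<T>& partition(std::size_t p) const { return partitions_.at(p); }
    // Fine grain: a CPU worker would take a single task.
    const T& task(std::size_t p, std::size_t t) const { return partitions_.at(p).tasks.at(t); }
    std::size_t size() const { return partitions_.size(); }

private:
    std::vector<ParallelTasks<T>> partitions_;
};
```

Because the element type is a template parameter, the same container works for C/C++ basic types and for user-defined structs, which matches the slide's goal of reusing existing C/C++ classes.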

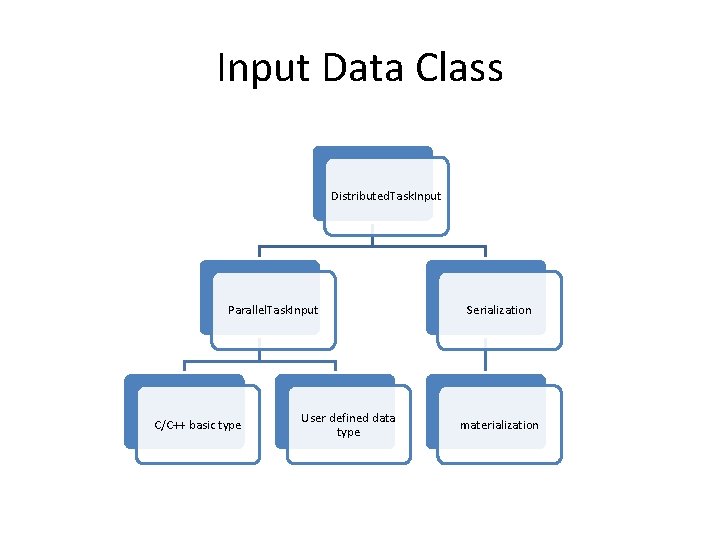

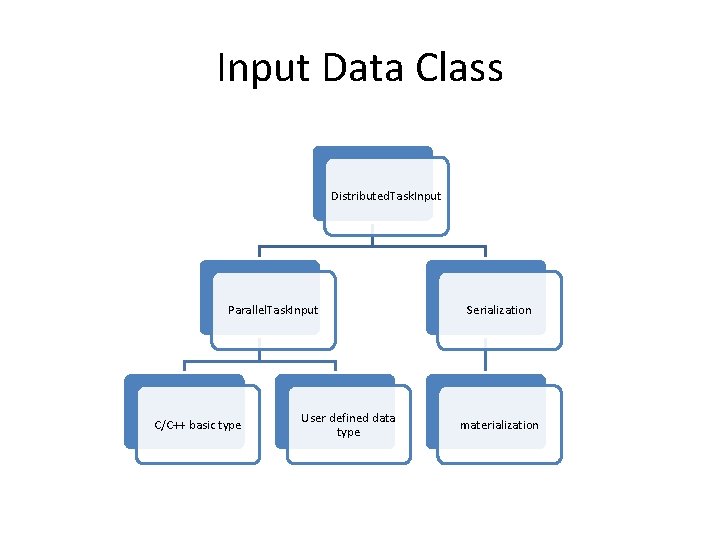

Input Data Class (figure): DistributedTaskInput and ParallelTaskInput, holding either C/C++ basic types or user-defined data types, with serialization and materialization.
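Serialization and materialization of task input can be sketched as a byte-level copy. This assumes trivially copyable types (basic types and plain structs) and that sender and receiver share the same byte order and struct layout; types that own pointers or strings would need a real encoding. `serialize`, `materialize`, and `Point` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <type_traits>
#include <vector>

// Hypothetical sketch: flatten a vector of trivially copyable items to bytes.
template <typename T>
std::vector<unsigned char> serialize(const std::vector<T>& items) {
    static_assert(std::is_trivially_copyable_v<T>, "T must be trivially copyable");
    std::vector<unsigned char> bytes(items.size() * sizeof(T));
    if (!bytes.empty()) std::memcpy(bytes.data(), items.data(), bytes.size());
    return bytes;
}

// Rebuild ("materialize") the items on the receiving side.
template <typename T>
std::vector<T> materialize(const std::vector<unsigned char>& bytes) {
    static_assert(std::is_trivially_copyable_v<T>, "T must be trivially copyable");
    assert(bytes.size() % sizeof(T) == 0);
    std::vector<T> items(bytes.size() / sizeof(T));
    if (!items.empty()) std::memcpy(items.data(), bytes.data(), bytes.size());
    return items;
}

// Example of a user-defined task input type.
struct Point { float x, y; };
```

A buffer like this is what would travel through a file, a shared-memory FIFO, or the network between nodes.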

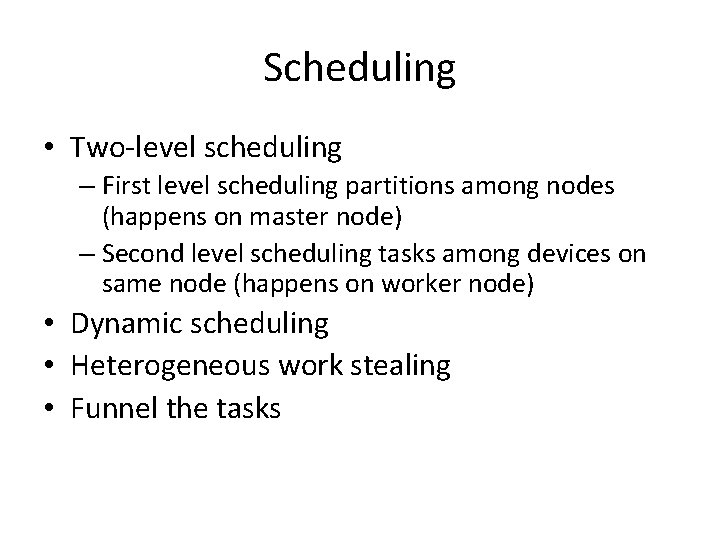

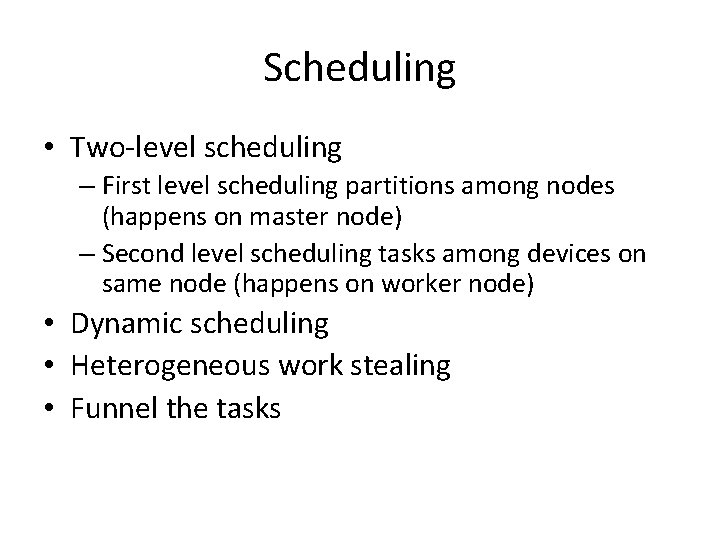

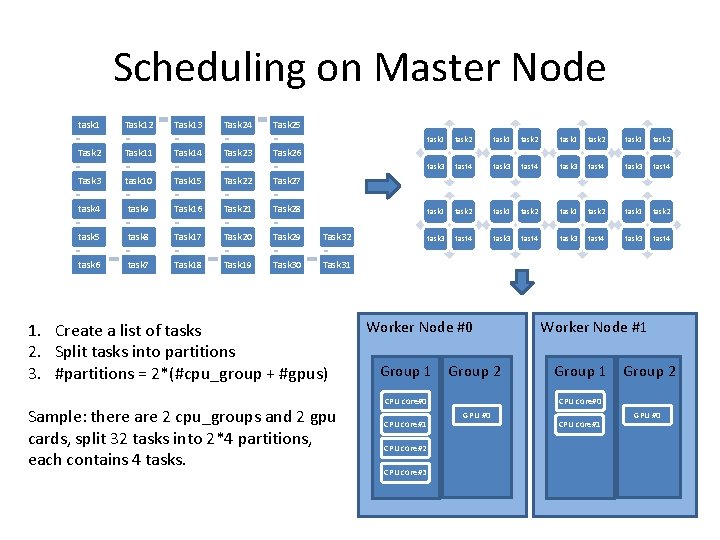

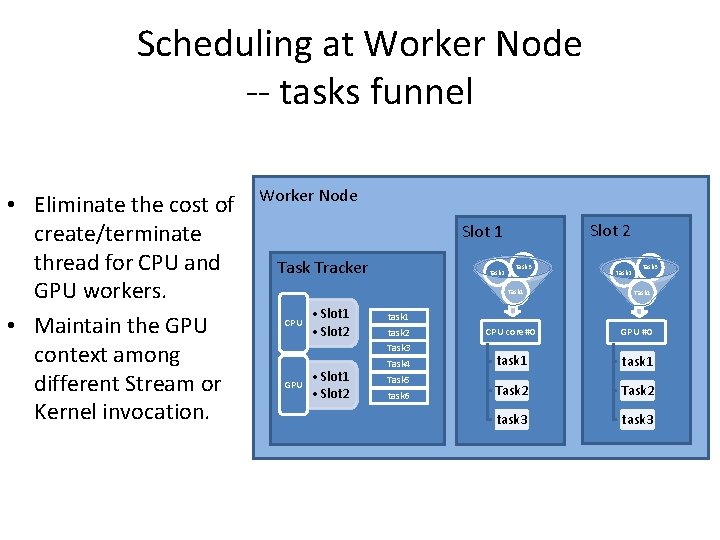

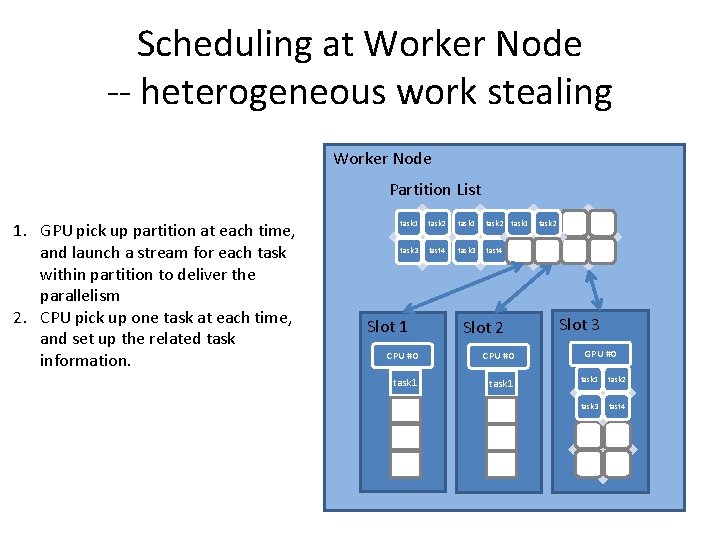

Scheduling

- Two-level scheduling
  - First level: schedule partitions among nodes (on the master node)
  - Second level: schedule tasks among devices on the same node (on the worker node)
- Dynamic scheduling
- Heterogeneous work stealing
- Funnel the tasks

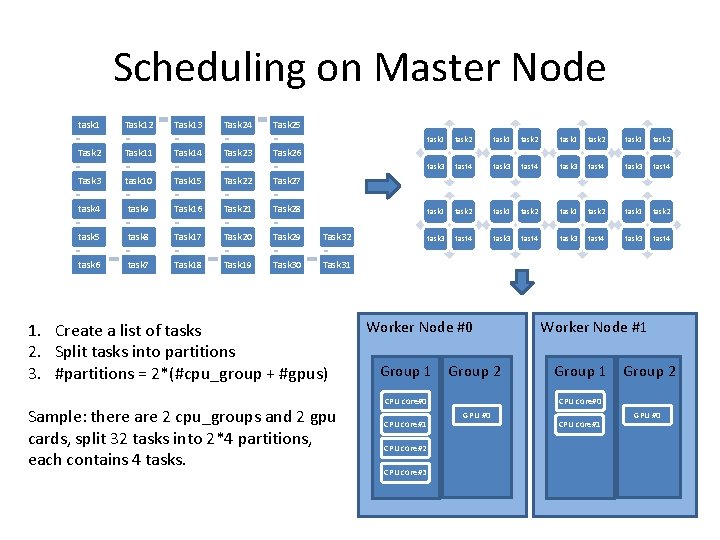

Scheduling on Master Node

1. Create a list of tasks.
2. Split the tasks into partitions.
3. Set #partitions = 2*(#cpu_groups + #gpus).

Example: with 2 CPU groups and 2 GPU cards, 32 tasks are split into 2*(2 + 2) = 8 partitions, each containing 4 tasks.

Figure: tasks 1 to 32 grouped into partitions and assigned to CPU groups (Group 1, Group 2 over CPU cores) and GPU #0 on Worker Node #0 and Worker Node #1.
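The partition-count rule can be checked with a small sketch. The rule `#partitions = 2*(#cpu_groups + #gpus)` is from the slide; splitting the task list into equal contiguous ranges is an assumption, though it reproduces the slide's example of 32 tasks in 8 partitions of 4. The function name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of first-level (master node) partitioning.
std::vector<std::vector<int>> split_into_partitions(const std::vector<int>& task_ids,
                                                    std::size_t cpu_groups,
                                                    std::size_t gpus) {
    // Rule from the slide: twice as many partitions as schedulable devices.
    const std::size_t num_partitions = 2 * (cpu_groups + gpus);
    assert(num_partitions > 0);
    std::vector<std::vector<int>> partitions(num_partitions);
    const std::size_t n = task_ids.size();
    for (std::size_t p = 0; p < num_partitions; ++p) {
        // Assumed: contiguous, near-equal ranges of the task list.
        std::size_t first = n * p / num_partitions;
        std::size_t last = n * (p + 1) / num_partitions;
        partitions[p].assign(task_ids.begin() + static_cast<std::ptrdiff_t>(first),
                             task_ids.begin() + static_cast<std::ptrdiff_t>(last));
    }
    return partitions;
}
```

Creating more partitions than devices leaves spare partitions in the list, which is what lets faster devices pick up extra work later instead of idling.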

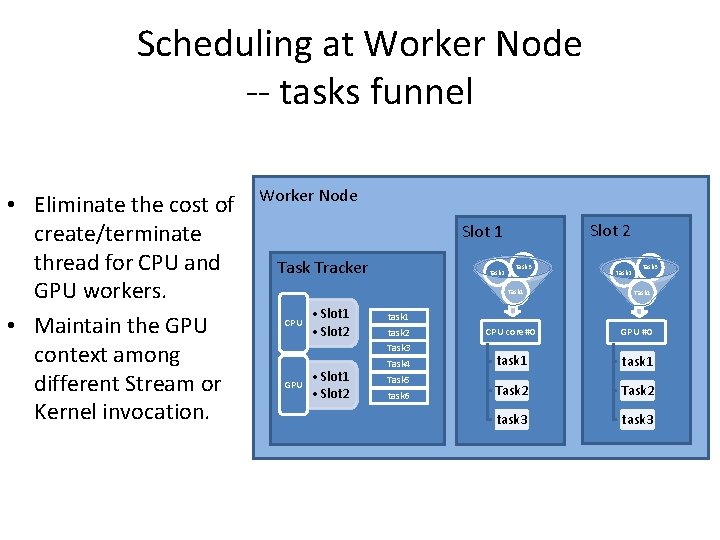

Scheduling at Worker Node: tasks funnel

- Eliminate the cost of creating and terminating threads for CPU and GPU workers.
- Maintain the GPU context across different stream or kernel invocations.

Figure: the Task Tracker on a worker node feeds tasks through slots (Slot 1, Slot 2) to CPU core #0 and GPU #0.
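A minimal sketch of the funnel idea: worker threads are created once and then repeatedly pull tasks from a shared queue, so no thread is created or destroyed per task. The `TaskFunnel` class is hypothetical, and it does not model the GPU side; in the framework, a long-lived GPU worker is also what keeps its device context alive across kernel launches.

```cpp
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical persistent-worker pool ("funnel") fed by a shared task queue.
class TaskFunnel {
public:
    explicit TaskFunnel(std::size_t num_workers) {
        for (std::size_t i = 0; i < num_workers; ++i)
            workers_.emplace_back([this] { run(); });
    }
    ~TaskFunnel() {
        {
            std::lock_guard<std::mutex> lock(mu_);
            closing_ = true;
        }
        cv_.notify_all();
        for (auto& w : workers_) w.join();  // workers drain the queue first
    }
    void submit(std::function<void()> task) {
        {
            std::lock_guard<std::mutex> lock(mu_);
            queue_.push(std::move(task));
        }
        cv_.notify_one();
    }

private:
    void run() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(mu_);
                cv_.wait(lock, [this] { return closing_ || !queue_.empty(); });
                if (queue_.empty()) return;  // closing and nothing left to do
                task = std::move(queue_.front());
                queue_.pop();
            }
            task();  // run outside the lock so workers proceed in parallel
        }
    }
    std::mutex mu_;
    std::condition_variable cv_;
    std::queue<std::function<void()>> queue_;
    bool closing_ = false;
    std::vector<std::thread> workers_;
};
```

Submitting 100 small tasks to a `TaskFunnel(4)` reuses the same four threads for all of them; destroying the funnel waits until every queued task has run.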

![Task Scheduling on Worker Node](https://slidetodoc.com/presentation_image_h2/b2be127221b42b85d1625f3e17e4bf24/image-15.jpg)

Task Scheduling on Worker Node

    while task_offset < total_tasks_size do
        foreach worker i do
            if worker_info[i].has_task = 0 and task_offset < total_tasks_size then
                /* assign a map task block to worker i; the last block may be shorter */
                worker_info[i].task_index <- task_offset;
                worker_info[i].task_size <- min(TASK_BLOCK_SIZE, total_tasks_size - task_offset);
                worker_info[i].has_task <- 1;
                task_offset += worker_info[i].task_size;
            end
        end
    end
    /* all map tasks are scheduled */
    send a message to all the workers on this node
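The block-assignment loop can be turned into runnable C++ under one simplifying assumption: worker execution is simulated, so every worker that received a block finishes it before the next scheduling pass. `WorkerInfo` mirrors the pseudocode's `worker_info` fields; `Assignment` and `schedule_map_tasks` are hypothetical, and the final block is clamped so it never runs past `total_tasks_size`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

struct WorkerInfo {
    bool has_task = false;
    std::size_t task_index = 0;
    std::size_t task_size = 0;
};

struct Assignment { std::size_t worker, index, size; };

// Returns the sequence of (worker, first task, block size) assignments.
std::vector<Assignment> schedule_map_tasks(std::size_t num_workers,
                                           std::size_t total_tasks_size,
                                           std::size_t task_block_size) {
    assert(num_workers > 0 && task_block_size > 0);
    std::vector<WorkerInfo> worker_info(num_workers);
    std::vector<Assignment> log;
    std::size_t task_offset = 0;
    while (task_offset < total_tasks_size) {
        for (std::size_t i = 0; i < num_workers && task_offset < total_tasks_size; ++i) {
            if (!worker_info[i].has_task) {
                // Assign a map task block; the last block may be shorter.
                worker_info[i].task_index = task_offset;
                worker_info[i].task_size =
                    std::min(task_block_size, total_tasks_size - task_offset);
                worker_info[i].has_task = true;
                task_offset += worker_info[i].task_size;
                log.push_back({i, worker_info[i].task_index, worker_info[i].task_size});
            }
        }
        // Simulated completion: every busy worker finishes its block.
        for (auto& w : worker_info) w.has_task = false;
    }
    return log;
}
```

With 3 workers, 10 tasks, and a block size of 4, the workers receive blocks starting at 0, 4, and 8, and the last block holds only 2 tasks.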

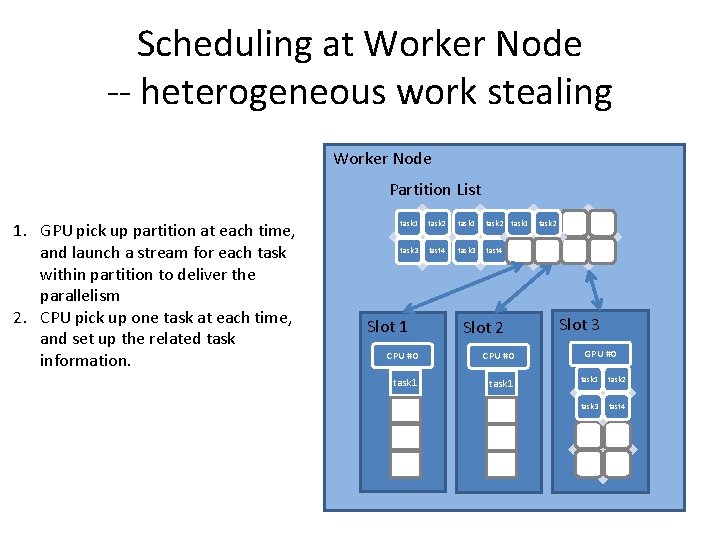

Scheduling at Worker Node: heterogeneous work stealing

1. A GPU worker picks up a whole partition at a time and launches one stream per task within the partition to exploit parallelism.
2. A CPU worker picks up one task at a time and sets up the related task information.

Figure: the partition list on a worker node (tasks 1 to 4 per partition) is consumed through slots 1 to 3 by GPU #0 and CPU #0.
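The two grains can be sketched with a mutex-protected partition list shared by one GPU thread and one CPU thread. Assumptions: the GPU worker takes whole partitions from the front, the CPU worker takes single tasks from the back, and "processing" only records which device ran each task. All names are hypothetical; the real framework would launch one CUDA stream per task where the comment indicates.

```cpp
#include <deque>
#include <mutex>
#include <thread>
#include <utility>
#include <vector>

using Partition = std::deque<int>;

struct RunRecord { int task; bool on_gpu; };

std::vector<RunRecord> run_work_stealing(std::deque<Partition> partitions) {
    std::mutex mu;
    std::vector<RunRecord> done;

    auto gpu_worker = [&] {
        for (;;) {
            Partition batch;
            {
                std::lock_guard<std::mutex> lock(mu);
                while (!partitions.empty() && partitions.front().empty())
                    partitions.pop_front();
                if (partitions.empty()) return;
                batch = std::move(partitions.front());  // coarse grain
                partitions.pop_front();
            }
            // One CUDA stream per task would be launched here.
            std::lock_guard<std::mutex> lock(mu);
            for (int t : batch) done.push_back({t, true});
        }
    };
    auto cpu_worker = [&] {
        for (;;) {
            int task;
            {
                std::lock_guard<std::mutex> lock(mu);
                while (!partitions.empty() && partitions.back().empty())
                    partitions.pop_back();
                if (partitions.empty()) return;
                task = partitions.back().back();  // fine grain: one task
                partitions.back().pop_back();
            }
            std::lock_guard<std::mutex> lock(mu);
            done.push_back({task, false});
        }
    };
    std::thread gpu(gpu_worker), cpu(cpu_worker);
    gpu.join();
    cpu.join();
    return done;
}
```

Taking from opposite ends keeps the two workers from contending for the same partition until the list is nearly empty; whichever device is faster simply ends up processing more of the list.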

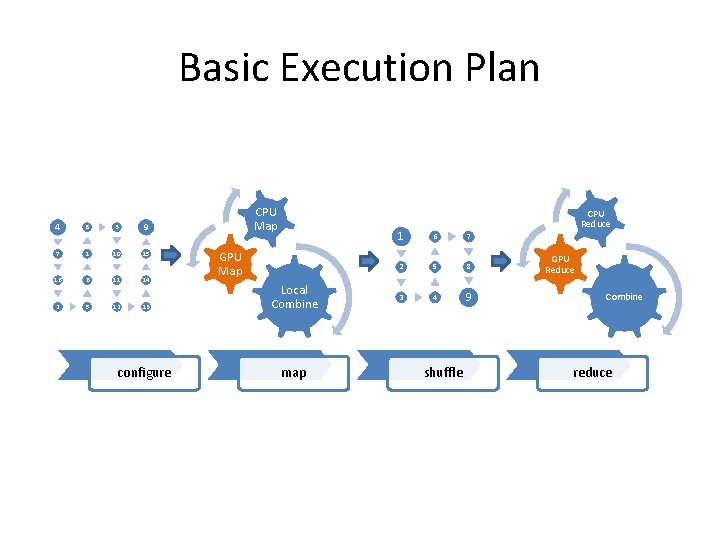

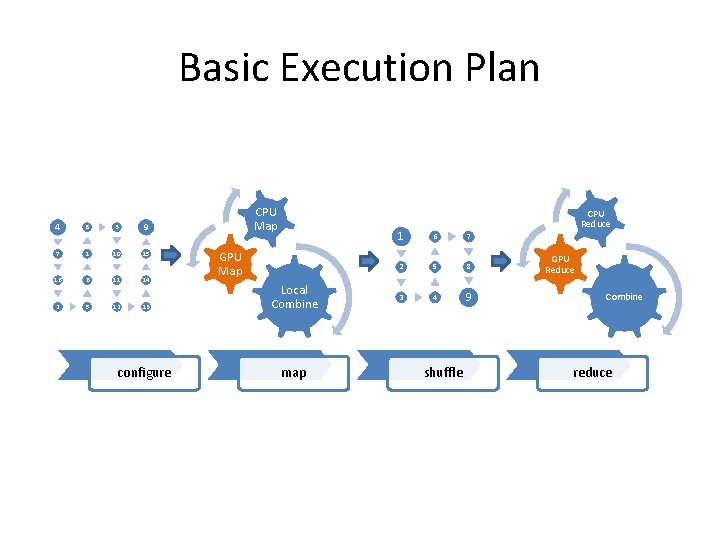

Basic Execution Plan (figure): configure → map (CPU map, GPU map) → local combine → shuffle → reduce (CPU reduce, GPU reduce) → combine.

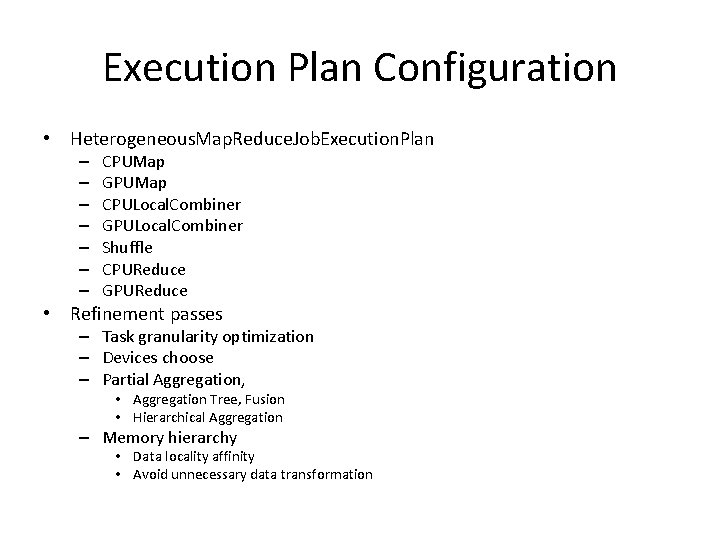

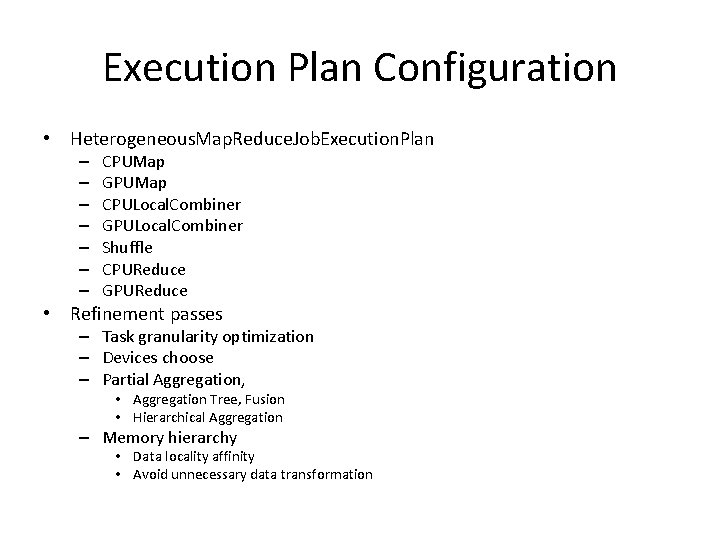

Execution Plan Configuration

- HeterogeneousMapReduceJobExecutionPlan
  - CPUMap, GPUMap
  - CPULocalCombiner, GPULocalCombiner
  - Shuffle
  - CPUReduce, GPUReduce
- Refinement passes
  - Task granularity optimization
  - Device choice
  - Partial aggregation
    - Aggregation tree, fusion
    - Hierarchical aggregation
  - Memory hierarchy
    - Data locality affinity
    - Avoid unnecessary data transformation

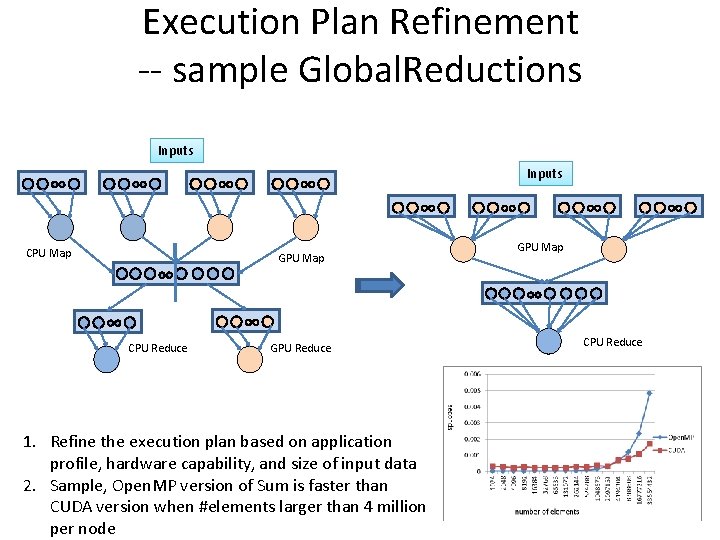

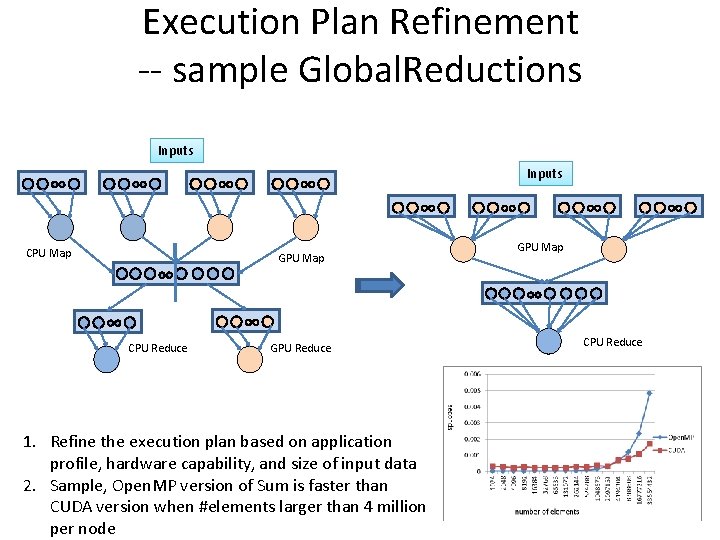

Execution Plan Refinement: sample

1. Refine the execution plan based on the application profile, hardware capability, and input data size.
2. Example: the OpenMP version of Sum is faster than the CUDA version when there are more than 4 million elements per node.

Figure: the plan Inputs → CPU Map / GPU Map → CPU Reduce / GPU Reduce → Global Reductions is refined to GPU Map → CPU Reduce.
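A device-choice refinement pass can be sketched as a rewrite over a list of plan stages. The stage representation and the function are hypothetical; the default crossover of 4 million elements per node only echoes the Sum sample above and would in practice come from profiling the application on the target hardware.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

enum class Device { CPU, GPU };

struct Stage {
    std::string name;
    Device device;
};

// Hypothetical refinement pass: pick the reduce device from a profiled
// crossover point (CPU/OpenMP wins above it, per the slide's Sum sample).
std::vector<Stage> refine_plan(std::vector<Stage> plan,
                               std::size_t elements_per_node,
                               std::size_t crossover = 4'000'000) {
    for (Stage& s : plan) {
        if (s.name == "Reduce")
            s.device = elements_per_node > crossover ? Device::CPU : Device::GPU;
    }
    return plan;
}
```

Keeping each refinement as a separate pass over the plan makes it easy to chain others from the list above, such as task granularity or aggregation-tree choices.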

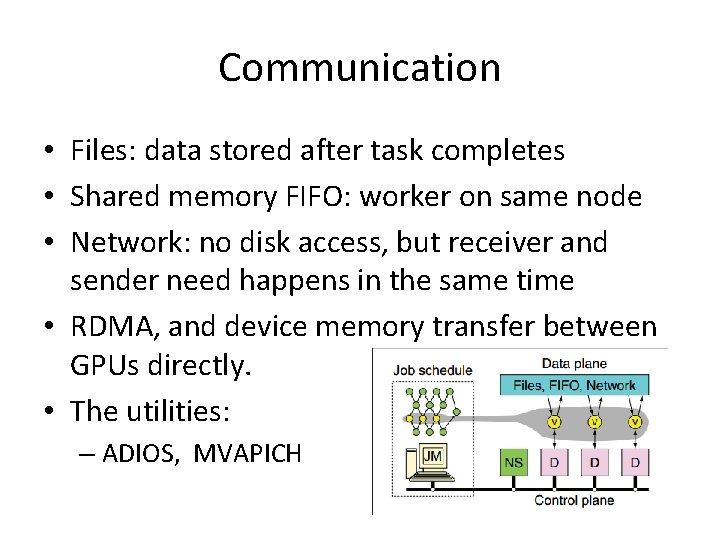

Communication

- Files: data is stored after a task completes.
- Shared-memory FIFO: between workers on the same node.
- Network: no disk access, but the sender and receiver must be active at the same time.
- RDMA, and direct device-memory transfers between GPUs.
- Utilities: ADIOS, MVAPICH.

Backups

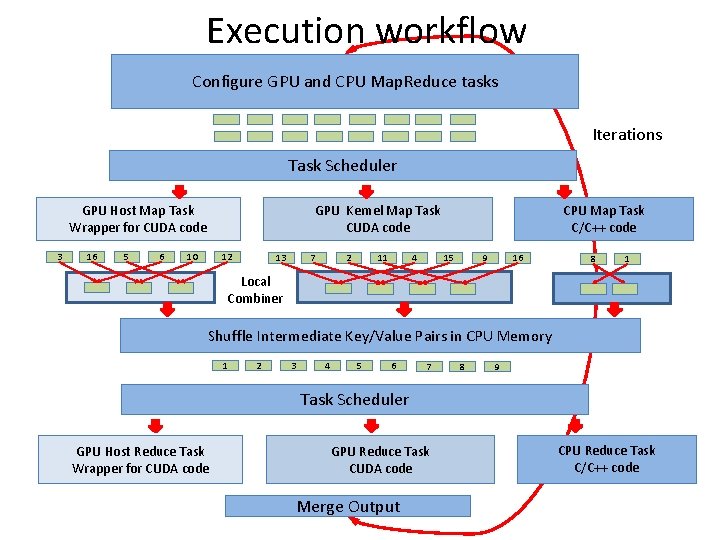

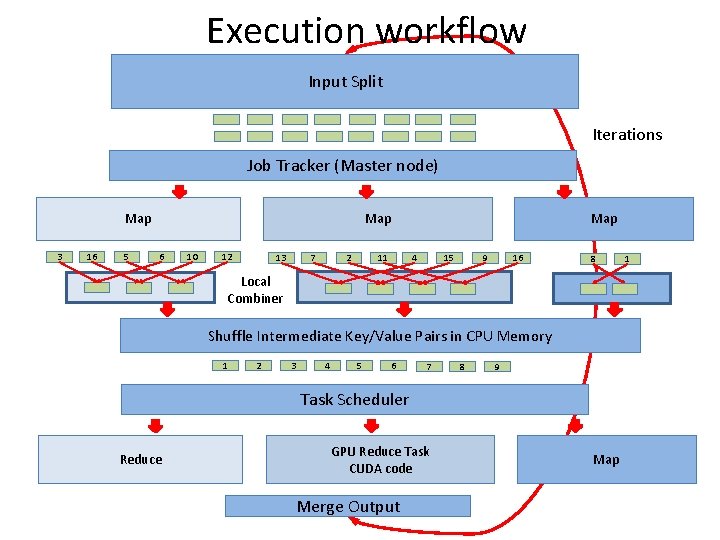

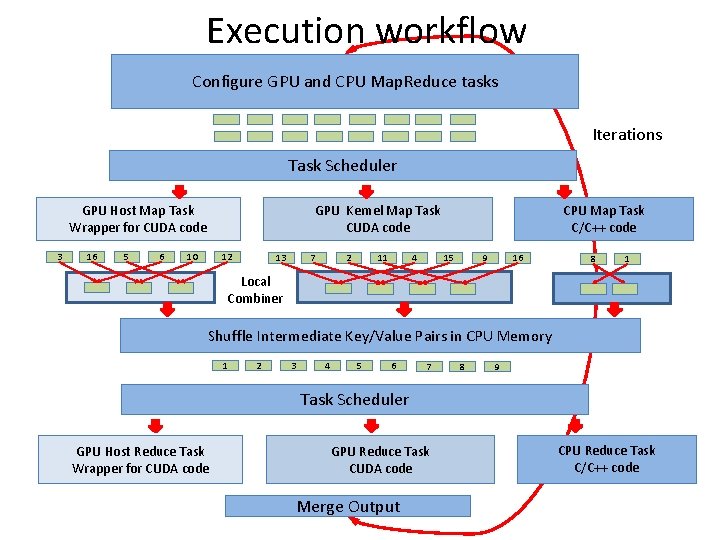

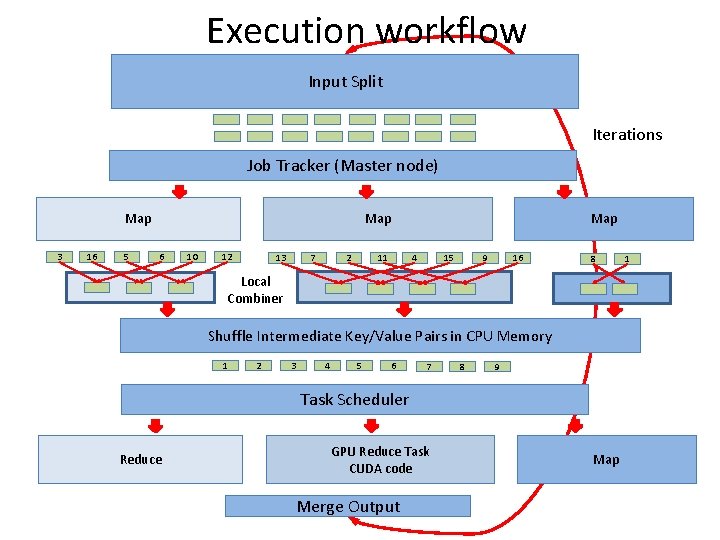

Execution workflow (figure): configure GPU and CPU MapReduce tasks. Over iterations, the task scheduler dispatches map tasks either to a GPU host map task wrapper that launches a GPU kernel map task (CUDA code) or to a CPU map task (C/C++ code). A local combiner and shuffle produce intermediate key/value pairs in CPU memory. A second task scheduler dispatches reduce tasks to a GPU host reduce task wrapper with a GPU reduce task (CUDA code) or to a CPU reduce task (C/C++ code), and the outputs are merged.

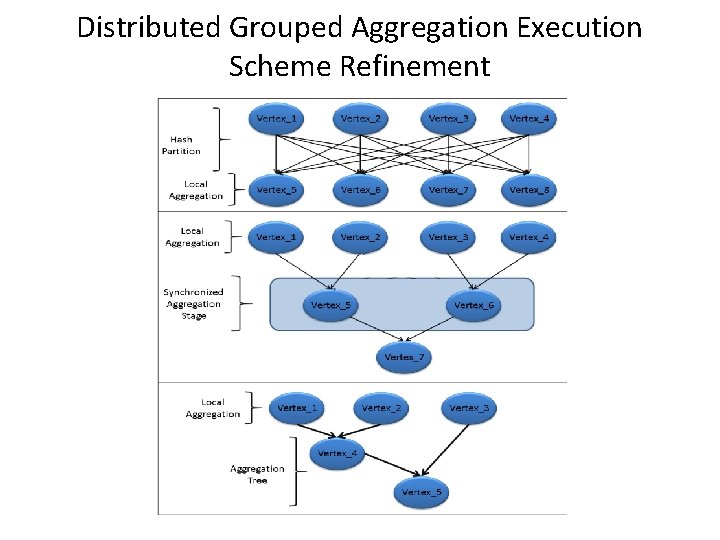

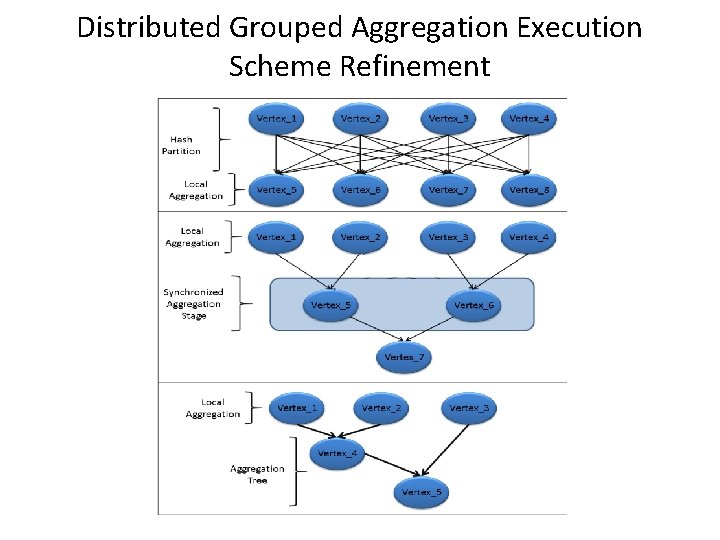

Distributed Grouped Aggregation Execution Scheme Refinement




Execution workflow (figure): the Job Tracker (master node) splits the input. Over iterations, map tasks run, a local combiner and shuffle produce intermediate key/value pairs in CPU memory, and a task scheduler dispatches reduce tasks (including GPU reduce tasks in CUDA code) before the output is merged.

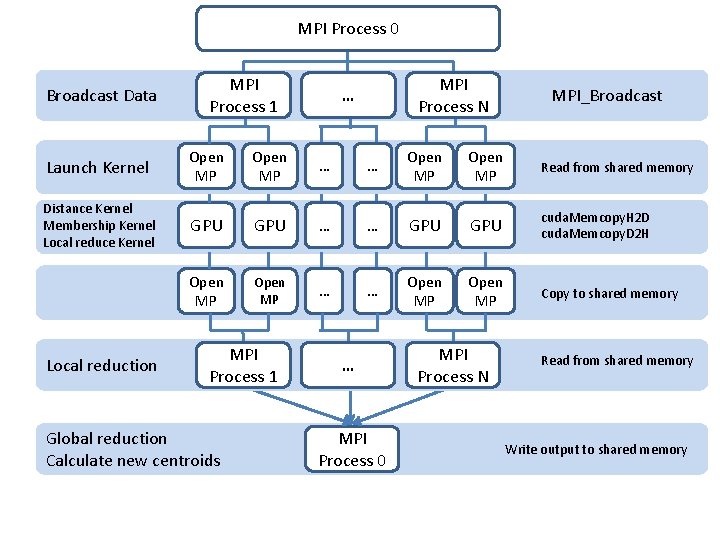

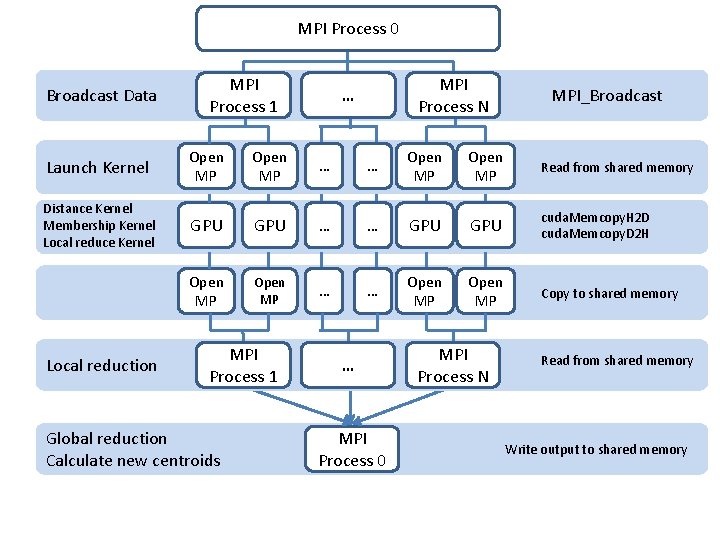

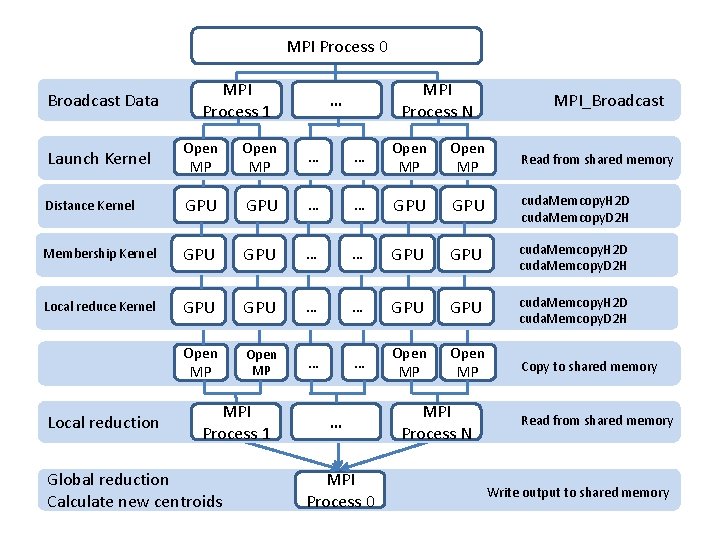

Figure: MPI + OpenMP + CUDA clustering workflow. MPI process 0 broadcasts the data to MPI processes 1 … N (MPI_Bcast). In each process, OpenMP threads read from shared memory and launch the GPU kernels (distance kernel, membership kernel, local reduce kernel), with cudaMemcpy host-to-device and device-to-host transfers around them. Results are copied to shared memory for the local reduction; a global reduction across MPI processes calculates the new centroids, and the output is written to shared memory.
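The stages in this figure match one iteration of a K-means-style clustering. The sketch below assumes 1-D points and replaces the CUDA kernels and MPI calls with plain loops: each inner vector of `splits` stands for the data held by one MPI process, `local_step` performs distance, membership, and local reduction, and `kmeans_iteration` performs the global reduction and computes the new centroids. All names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

struct Partial {                  // per-process local reduction result
    std::vector<double> sum;      // sum of points assigned to each centroid
    std::vector<std::size_t> count;
};

Partial local_step(const std::vector<double>& points,
                   const std::vector<double>& centroids) {
    const std::size_t k = centroids.size();
    Partial p{std::vector<double>(k, 0.0), std::vector<std::size_t>(k, 0)};
    for (double x : points) {
        // Distance + membership: pick the nearest centroid.
        std::size_t best = 0;
        double best_d = std::numeric_limits<double>::max();
        for (std::size_t c = 0; c < k; ++c) {
            double d = std::fabs(x - centroids[c]);
            if (d < best_d) { best_d = d; best = c; }
        }
        // Local reduce: accumulate into the chosen cluster.
        p.sum[best] += x;
        p.count[best] += 1;
    }
    return p;
}

std::vector<double> kmeans_iteration(const std::vector<std::vector<double>>& splits,
                                     const std::vector<double>& centroids) {
    assert(!centroids.empty());
    const std::size_t k = centroids.size();
    std::vector<double> sum(k, 0.0);
    std::vector<std::size_t> count(k, 0);
    for (const auto& s : splits) {  // global reduction across "processes"
        Partial p = local_step(s, centroids);
        for (std::size_t c = 0; c < k; ++c) {
            sum[c] += p.sum[c];
            count[c] += p.count[c];
        }
    }
    std::vector<double> next = centroids;  // empty clusters keep their centroid
    for (std::size_t c = 0; c < k; ++c)
        if (count[c] > 0) next[c] = sum[c] / static_cast<double>(count[c]);
    return next;
}
```

Only the per-cluster sums and counts cross process boundaries, which is why the local reduction step makes the global reduction cheap: its size depends on the number of clusters, not the number of points.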

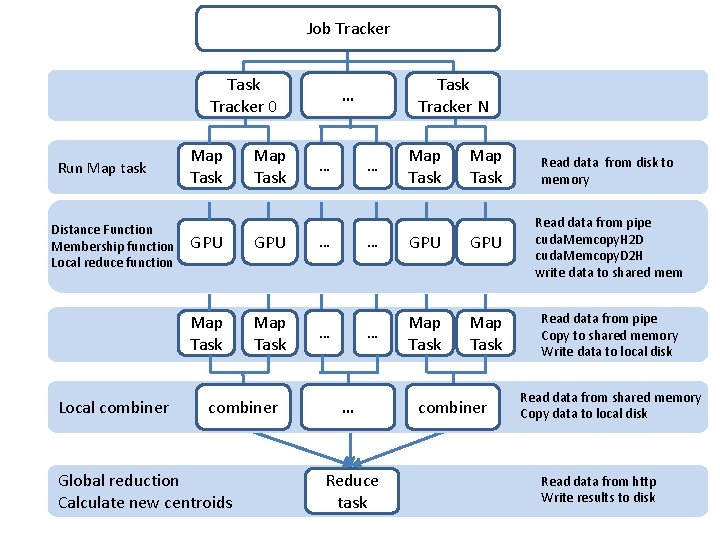

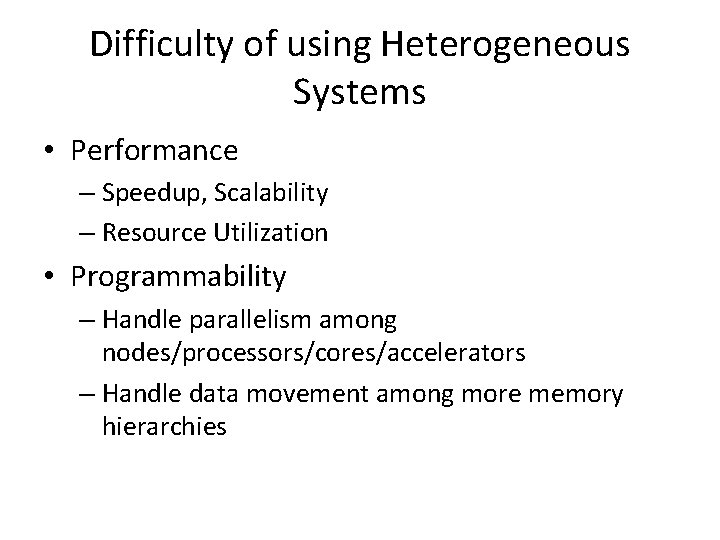

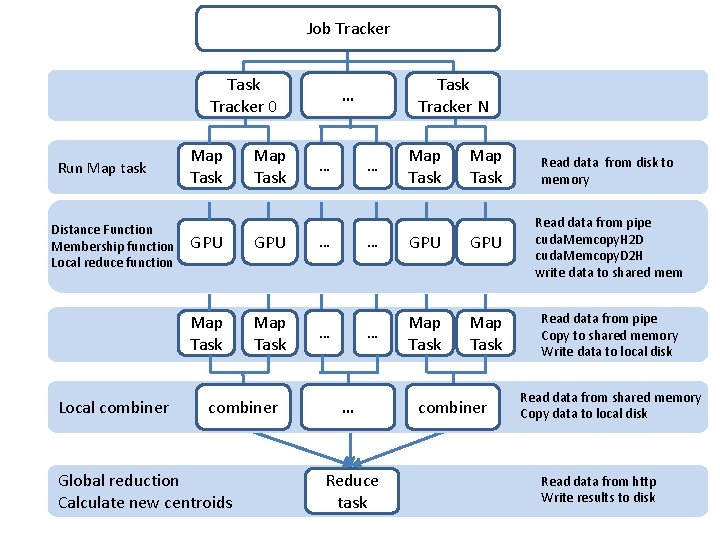

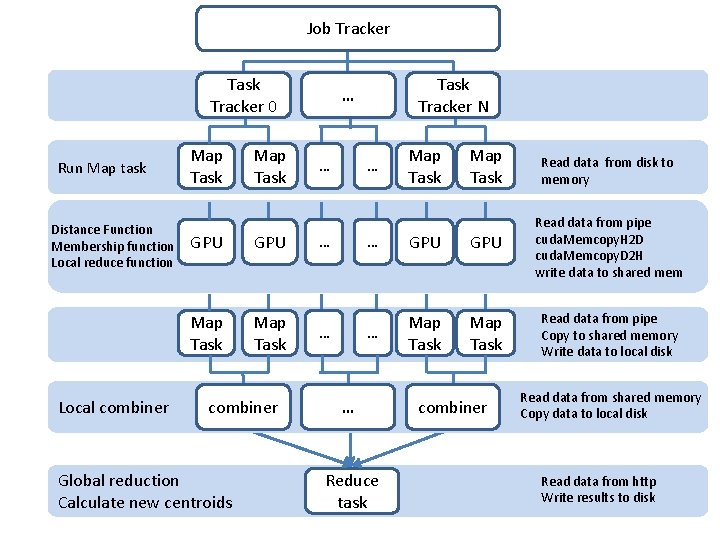

Figure: the same clustering job on the MapReduce framework. A Job Tracker coordinates Task Trackers 0 … N. Map tasks read data from disk into memory and pass it through a pipe to GPU map tasks (cudaMemcpy host-to-device; distance, membership, and local reduce functions; cudaMemcpy device-to-host), which write their results to shared memory. A local combiner reads from shared memory and writes to local disk. The reduce task reads intermediate data over HTTP, combines it, performs the global reduction to calculate the new centroids, and writes the results to disk.

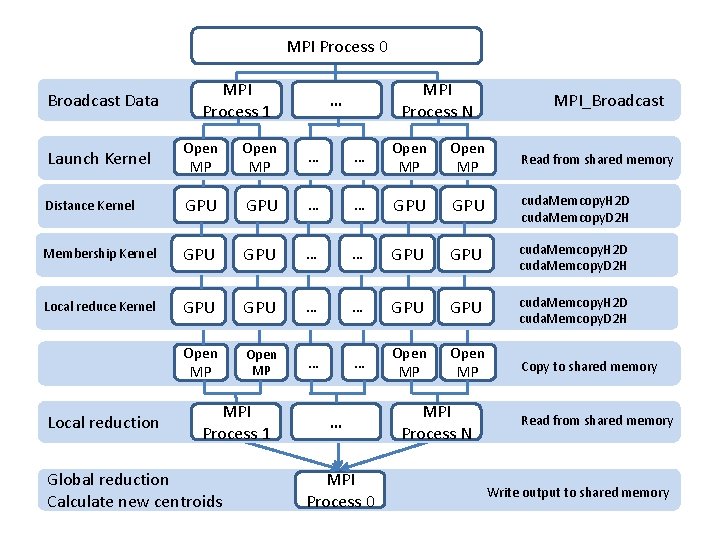

Figure: variant of the MPI + OpenMP + CUDA clustering workflow in which the distance kernel, membership kernel, and local reduce kernel each run as a separate GPU step with its own cudaMemcpy host-to-device and device-to-host transfers. As before, MPI process 0 broadcasts the data (MPI_Bcast), OpenMP threads read from and copy to shared memory, and a global reduction across MPI processes 0 … N calculates the new centroids before the output is written to shared memory.
