A Machine Learning Based Framework for Sub-Resolution Assist Feature Generation

Xiaoqing Xu 1, Tetsuaki Matsunawa 2, Shigeki Nojima 2, Chikaaki Kodama 2, Toshiya Kotani 2, David Z. Pan 1

1 University of Texas at Austin

2 Toshiba Corporation, Semiconductor & Storage Products Company, Yokohama, Japan

Outline
- Introduction to Sub-Resolution Assist Features
- Grid-based SRAF Generation
- A Machine Learning Based Approach
- Experimental Results and Discussions

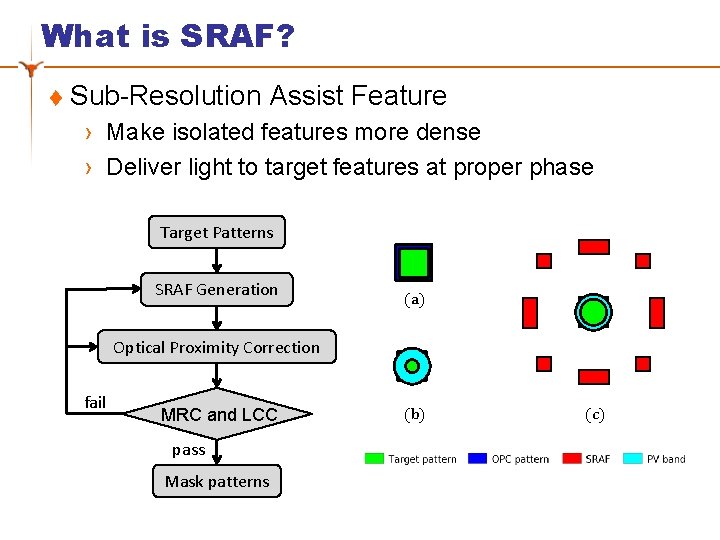

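What is SRAF?
- Sub-Resolution Assist Feature (SRAF)
  - Makes isolated features more dense
  - Delivers light to target features at the proper phase

Figure: mask optimization flow from target patterns through SRAF generation and optical proximity correction (OPC). The flow loops back when the mask rule check (MRC) and lithography compliance check (LCC) fail and outputs the mask patterns when they pass. Panels (a), (b), (c).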

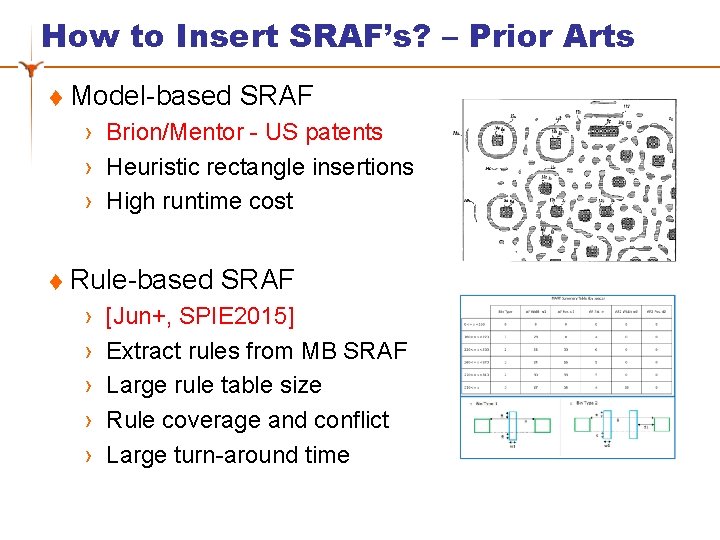

How to Insert SRAFs? Prior Art
- Model-based (MB) SRAF
  - Brion/Mentor US patents
  - Heuristic rectangle insertions
  - High runtime cost
- Rule-based SRAF
  - Extract rules from MB SRAF [Jun+, SPIE 2015]
  - Large rule table size
  - Rule coverage and conflict
  - Large turn-around time

Our Contributions
- Machine learning based techniques for SRAF generation are proposed for the first time
- Novel feature extraction and compaction for consistent SRAF generation
- Competitive lithographic performance with significant speed-up compared with the model-based approach within a complete mask optimization flow

Grid-based SRAF Insertion
- From 'Rule' to 'Function'/'Model'
  - $y = F(x)$, where $x$ is the grid position and $y$ is the SRAF label (0 or 1)
  - Grid positions: $X = \{x_k\}$
  - SRAF labels: $Y = \{y_k\}$
  - Grid-based insertion: $Y = F(X)$
  - Example: $(x_1, y_1 = 1)$, $(x_2, y_2 = 0)$, $(x_3, y_3 = 1)$, $(x_4, y_4 = 0)$, with placement accurate to within the grid error
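The grid-based formulation $Y = F(X)$ can be sketched as follows. This is a minimal illustration, assuming a rectangular window and a toy labelling function; `make_grid`, `insert_srafs`, and `toy_rule` are hypothetical names, and in the framework F would be the trained classification model rather than a hand-written rule.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float]


def make_grid(x_min: float, y_min: float, x_max: float, y_max: float,
              g: float) -> List[Point]:
    """Return the centres of the g-by-g grid cells covering the window."""
    nx = int((x_max - x_min) // g)
    ny = int((y_max - y_min) // g)
    return [(x_min + (i + 0.5) * g, y_min + (j + 0.5) * g)
            for j in range(ny) for i in range(nx)]


def insert_srafs(grid: List[Point], F: Callable[[Point], int]) -> List[int]:
    """Apply Y = F(X): one SRAF label (0 or 1) per grid position."""
    return [F(x) for x in grid]


def toy_rule(x: Point) -> int:
    """Placeholder for F (assumed): label 1 inside a ring around the origin."""
    return 1 if 100.0 <= math.hypot(x[0], x[1]) <= 150.0 else 0


# 400 nm x 400 nm window sampled on a 10 nm grid (values chosen for illustration).
grid = make_grid(-200.0, -200.0, 200.0, 200.0, g=10.0)
labels = insert_srafs(grid, toy_rule)
```

A finer grid reduces the grid error at the cost of more positions to classify.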

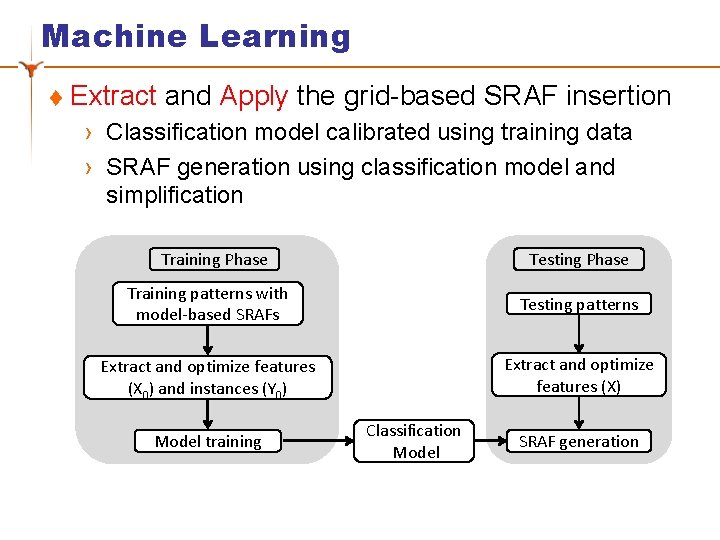

Machine Learning
- Extract and apply the grid-based SRAF insertion
  - Classification model calibrated using training data
  - SRAF generation using the classification model and simplification
- Training phase
  - Training patterns with model-based SRAFs
  - Extract and optimize features ($X_0$) and instances ($Y_0$)
  - Model training produces the classification model
- Testing phase
  - Testing patterns
  - Extract and optimize features ($X$)
  - Classification model drives SRAF generation

Feature Extraction
- Constrained Concentric Circle with Area Sampling (CCCAS)
  - Feature vector $x_0$ and SRAF label $y_0$ for each grid

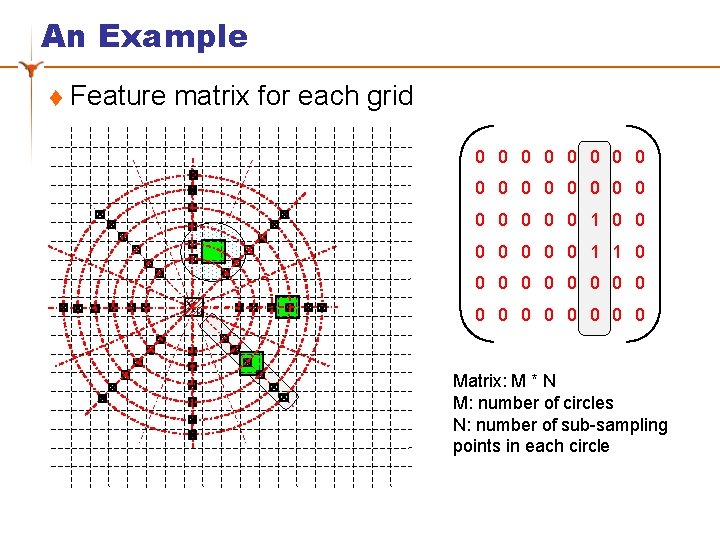

An Example
- Feature matrix for each grid $x_0$ (figure: example matrix with 0/1 entries)
  - Matrix size: $M \times N$
  - $M$: number of circles
  - $N$: number of sub-sampling points in each circle
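The $M \times N$ feature matrix can be sketched as below. This is a simplified illustration, not the CCCAS implementation: it assumes target patterns are axis-aligned rectangles and replaces area sampling with a point-in-rectangle test, and the "constrained" aspect of CCCAS is not modelled. `Rect`, `covered`, and `extract_features` are hypothetical names.

```python
import math
from typing import List, Sequence, Tuple

Rect = Tuple[float, float, float, float]  # (x_lo, y_lo, x_hi, y_hi) in nm


def covered(x: float, y: float, rects: Sequence[Rect]) -> bool:
    """True if the point (x, y) lies inside any target rectangle."""
    return any(x0 <= x <= x1 and y0 <= y <= y1 for x0, y0, x1, y1 in rects)


def extract_features(cx: float, cy: float, rects: Sequence[Rect],
                     radii: Sequence[float], n_points: int) -> List[List[int]]:
    """Build an M x N binary matrix for the grid centred at (cx, cy).

    Row m corresponds to the circle of radius radii[m]; column k to the
    k-th of n_points equally spaced sampling angles on that circle.
    """
    matrix = []
    for r in radii:
        row = []
        for k in range(n_points):
            angle = 2.0 * math.pi * k / n_points
            x = cx + r * math.cos(angle)
            y = cy + r * math.sin(angle)
            row.append(1 if covered(x, y, rects) else 0)
        matrix.append(row)
    return matrix


# One 70 nm contact hole centred 100 nm to the right of the grid (illustrative).
contact = [(65.0, -35.0, 135.0, 35.0)]
features = extract_features(0.0, 0.0, contact, radii=[50.0, 100.0, 150.0],
                            n_points=8)
```

Flattening the matrix row by row gives the feature vector for the grid.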

Feature Compaction
- Symmetric grids share the same feature
  - Rolling/Flipping (RF) matrix rows

An Example
- Symmetric optical conditions for $x_0$ and $x_1$
  - Quadrant analysis
  - Rolling/Flipping (RF) matrix rows: $RF(x_0) = RF(x_1)$
  - Symmetric grids share the same features
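One way to make symmetric grids share a feature is to map every matrix to a canonical representative under quarter-turn rolls and mirror flips of its rows. The sketch below takes that approach; it is an assumption for illustration and not necessarily the quadrant-analysis procedure used in the slides. `roll`, `flip`, and `canonical` are hypothetical helpers, and N is assumed to be a multiple of 4.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[int]]


def roll(row: Sequence[int], shift: int) -> List[int]:
    """Cyclically shift a row left by `shift` sampling positions."""
    shift %= len(row)
    return list(row[shift:]) + list(row[:shift])


def flip(row: Sequence[int]) -> List[int]:
    """Mirror a row about the angle-0 axis: position k maps to -k mod N."""
    n = len(row)
    return [row[(-k) % n] for k in range(n)]


def canonical(matrix: Matrix) -> Tuple[Tuple[int, ...], ...]:
    """Return the lexicographically smallest of the 8 roll/flip variants."""
    n = len(matrix[0])
    if n % 4 != 0:
        raise ValueError("number of sampling points must be a multiple of 4")
    variants = []
    for base in (matrix, [flip(r) for r in matrix]):
        for quarter in range(4):
            shift = quarter * n // 4  # 0, 90, 180, 270 degrees
            variants.append(tuple(tuple(roll(r, shift)) for r in base))
    return min(variants)


# x1 is x0 rotated by 90 degrees, so both should compact to the same feature.
x0 = [[1, 0, 0, 0, 0, 0, 0, 0], [0, 0, 0, 0, 0, 0, 0, 0]]
x1 = [[0, 0, 1, 0, 0, 0, 0, 0], [0, 0, 0, 0, 0, 0, 0, 0]]
same = canonical(x0) == canonical(x1)
```

Because every row is rolled by the same amount, the relative alignment between circles is preserved.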

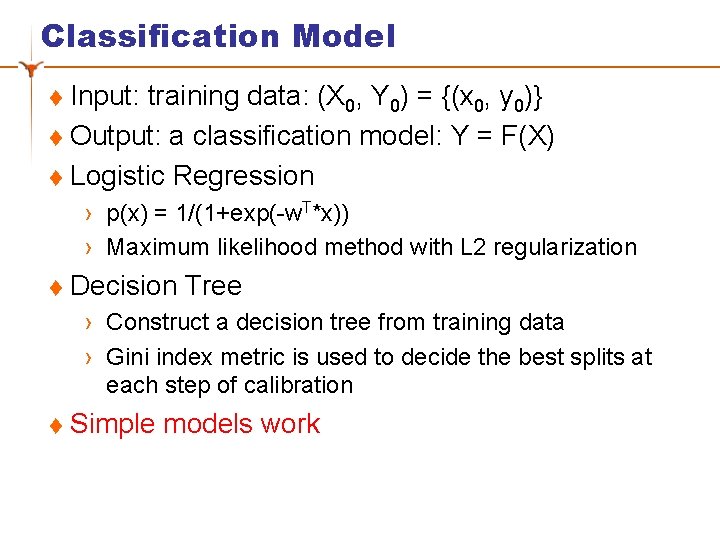

Classification Model
- Input: training data $(X_0, Y_0) = \{(x_0, y_0)\}$
- Output: a classification model $Y = F(X)$
- Logistic Regression
  - $p(x) = 1 / (1 + \exp(-w^T x))$
  - Maximum likelihood method with L2 regularization
- Decision Tree
  - Construct a decision tree from training data
  - The Gini index is used to decide the best split at each step of calibration
- Simple models work
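The logistic regression bullet can be illustrated with a small pure-Python trainer. This is a sketch under assumptions: plain batch gradient descent stands in for whatever solver was actually used, a bias term `b` is added to the slide's $w^T x$, and the regularization strength `lam`, learning rate, and epoch count are arbitrary illustrative values.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function 1 / (1 + exp(-z))."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """p(x): probability that the grid with features x has SRAF label 1."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def train_logreg(X: Sequence[Sequence[float]], y: Sequence[int],
                 lam: float = 0.01, lr: float = 0.5,
                 epochs: int = 500) -> Tuple[List[float], float]:
    """Minimise mean negative log-likelihood + (lam / 2) * ||w||^2."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the L2 penalty
        grad_b = 0.0
        for xi, yi in zip(X, y):
            err = predict_proba(w, b, xi) - yi
            for j in range(d):
                grad_w[j] += err * xi[j] / n
            grad_b += err / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Toy data: the label equals the first feature.
X0 = [[0, 0], [0, 1], [1, 0], [1, 1]]
Y0 = [0, 0, 1, 1]
w, b = train_logreg(X0, Y0)
p_on = predict_proba(w, b, [1, 0])
p_off = predict_proba(w, b, [0, 1])
```

The model outputs a probability rather than a hard label, which is what the probability-maximum step relies on.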

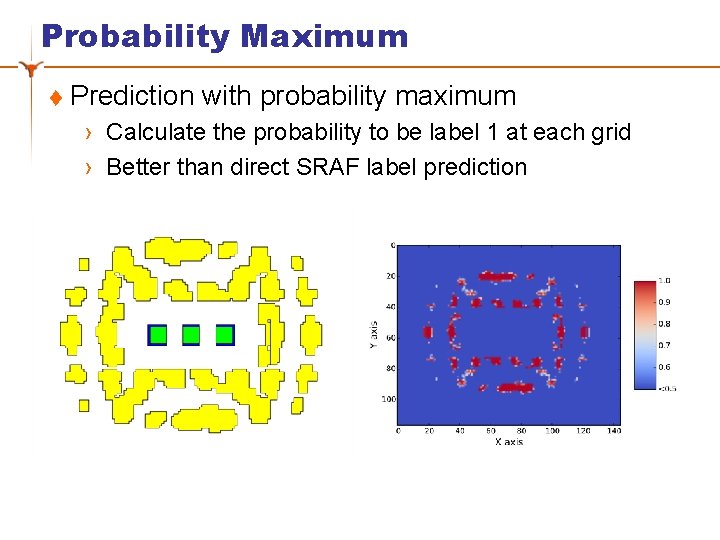

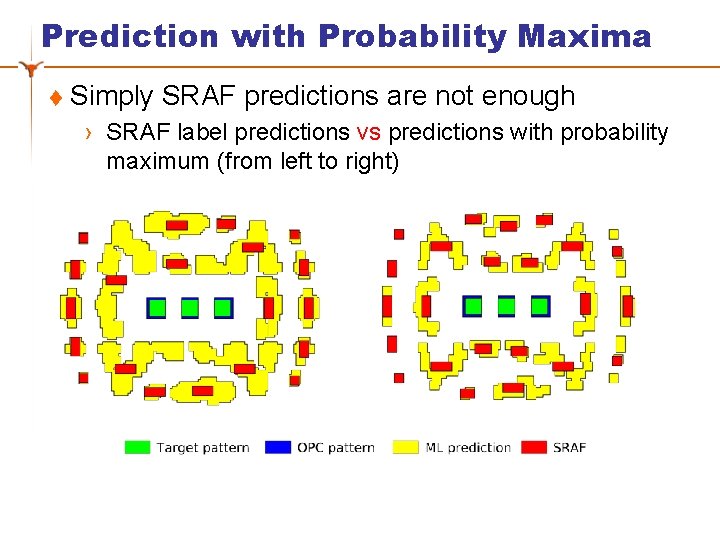

Probability Maximum
- Prediction with probability maximum
  - Calculate the probability of label 1 at each grid
  - Better than direct SRAF label prediction
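The difference between direct label prediction and prediction with probability maxima can be sketched on a small probability map. The 8-neighbour window and the 0.5 threshold are assumptions for illustration, and `direct_labels` and `local_maxima` are hypothetical names.

```python
from typing import List, Sequence, Tuple


def direct_labels(prob: Sequence[Sequence[float]],
                  threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Grid cells whose probability of label 1 reaches the threshold."""
    return [(i, j) for i, row in enumerate(prob)
            for j, p in enumerate(row) if p >= threshold]


def local_maxima(prob: Sequence[Sequence[float]],
                 threshold: float = 0.5) -> List[Tuple[int, int]]:
    """Cells above threshold that are not exceeded by any 8-neighbour."""
    rows, cols = len(prob), len(prob[0])
    picks = []
    for i in range(rows):
        for j in range(cols):
            p = prob[i][j]
            if p < threshold:
                continue
            neighbours = [prob[a][b]
                          for a in range(max(0, i - 1), min(rows, i + 2))
                          for b in range(max(0, j - 1), min(cols, j + 2))
                          if (a, b) != (i, j)]
            if all(p >= q for q in neighbours):
                picks.append((i, j))
    return picks


prob_map = [
    [0.1, 0.2, 0.1],
    [0.6, 0.9, 0.7],
    [0.1, 0.3, 0.1],
]
blob = direct_labels(prob_map)   # three adjacent cells pass the threshold
peaks = local_maxima(prob_map)   # only the peak cell is kept
```

Thresholding alone yields a blob of adjacent cells, while the maximum picks a single well-defined SRAF location.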

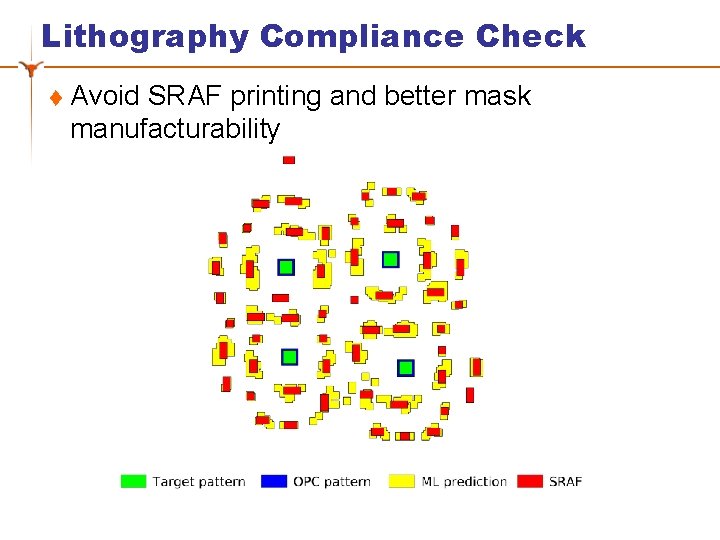

Lithography Compliance Check
- Avoids SRAF printing and improves mask manufacturability

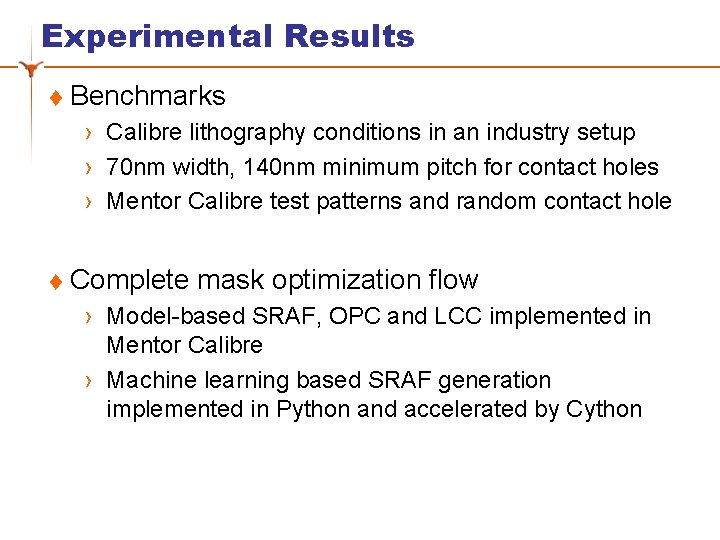

Experimental Results
- Benchmarks
  - Calibre lithography conditions in an industry setup
  - 70 nm width, 140 nm minimum pitch for contact holes
  - Mentor Calibre test patterns and random contact holes
- Complete mask optimization flow
  - Model-based SRAF, OPC and LCC implemented in Mentor Calibre
  - Machine learning based SRAF generation implemented in Python and accelerated by Cython

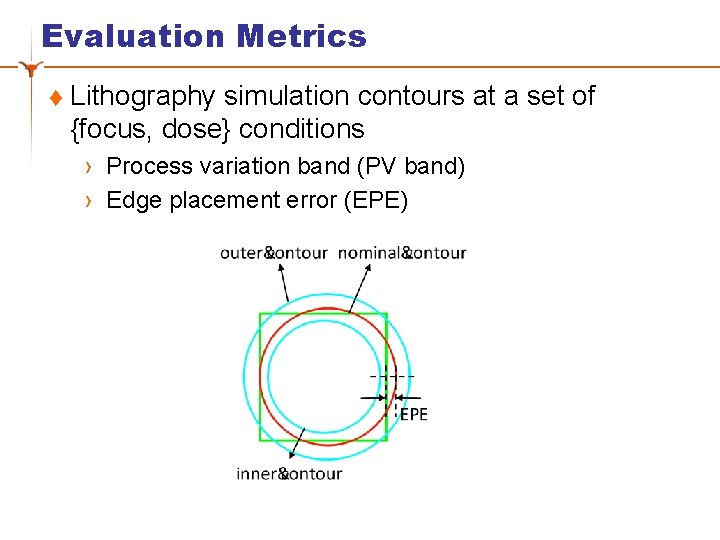

Evaluation Metrics
- Lithography simulation contours at a set of {focus, dose} conditions
  - Process variation band (PV band)
  - Edge placement error (EPE)
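Both metrics can be sketched on rasterized contours. The code assumes each simulated contour is given as a set of printed pixels, takes the PV band as the area printed under some but not all {focus, dose} conditions, and measures EPE as a signed one-dimensional offset; these representations and the function names are assumptions for illustration, not the Calibre definitions.

```python
from typing import Iterable, Set, Tuple

Pixel = Tuple[int, int]


def pv_band_area(contours: Iterable[Set[Pixel]],
                 pixel_area: float = 1.0) -> float:
    """Area between the outermost and innermost printed regions."""
    regions = [set(c) for c in contours]
    if not regions:
        return 0.0
    outer = set().union(*regions)       # printed under at least one condition
    inner = set.intersection(*regions)  # printed under every condition
    return len(outer - inner) * pixel_area


def edge_placement_error(target_edge: float, contour_edge: float) -> float:
    """Signed offset of the printed edge from the target edge."""
    return contour_edge - target_edge


nominal = {(0, 0), (0, 1), (1, 0), (1, 1)}
under = {(0, 0), (0, 1)}
over = nominal | {(2, 0), (2, 1)}
band = pv_band_area([nominal, under, over], pixel_area=25.0)
epe = edge_placement_error(target_edge=35.0, contour_edge=37.5)
```

A smaller PV band indicates more robustness to process variation, and an EPE closer to zero indicates better pattern fidelity.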

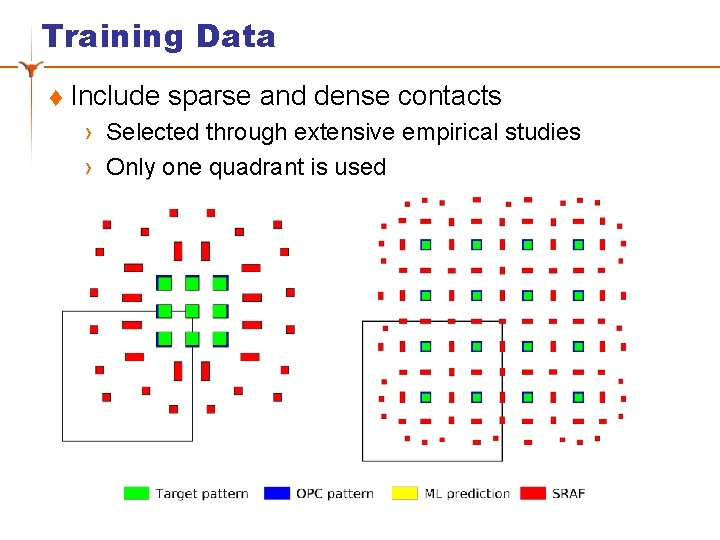

Training Data
- Includes sparse and dense contacts
  - Selected through extensive empirical studies
  - Only one quadrant is used

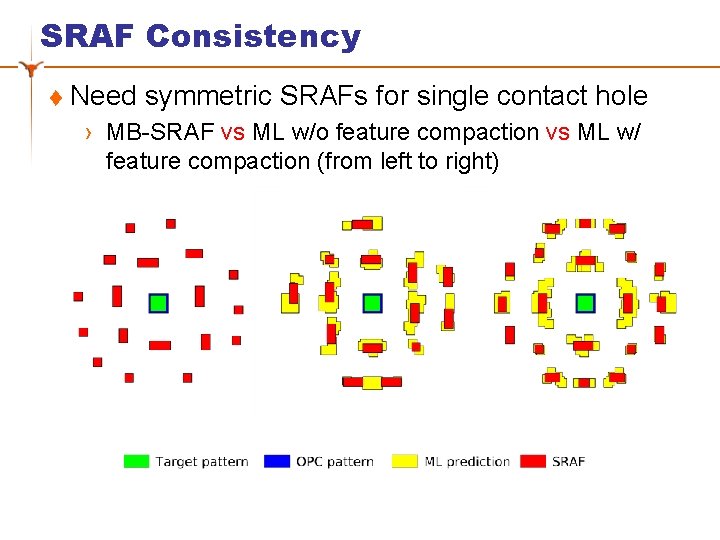

SRAF Consistency
- Symmetric SRAFs are needed for a single contact hole
  - From left to right: MB-SRAF, ML without feature compaction, ML with feature compaction

Prediction with Probability Maxima
- Simple SRAF label predictions are not enough
  - From left to right: SRAF label predictions, predictions with probability maximum

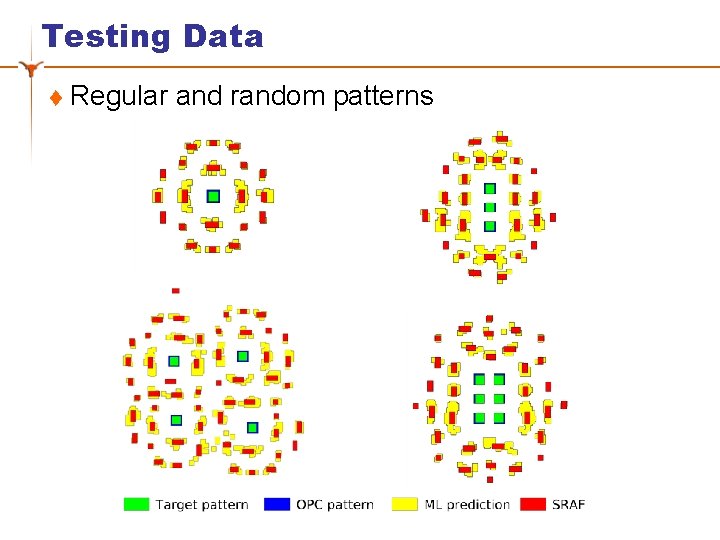

Testing Data
- Regular and random patterns

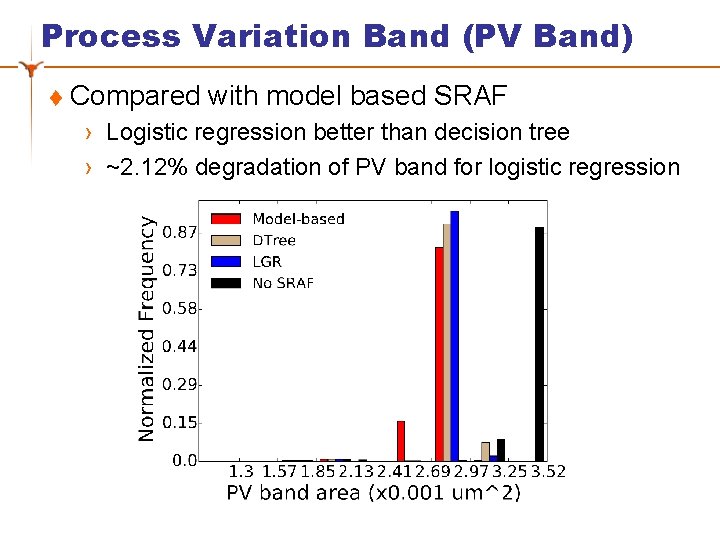

Process Variation Band (PV Band)
- Compared with model-based SRAF
  - Logistic regression is better than decision tree
  - ~2.12% degradation of PV band for logistic regression

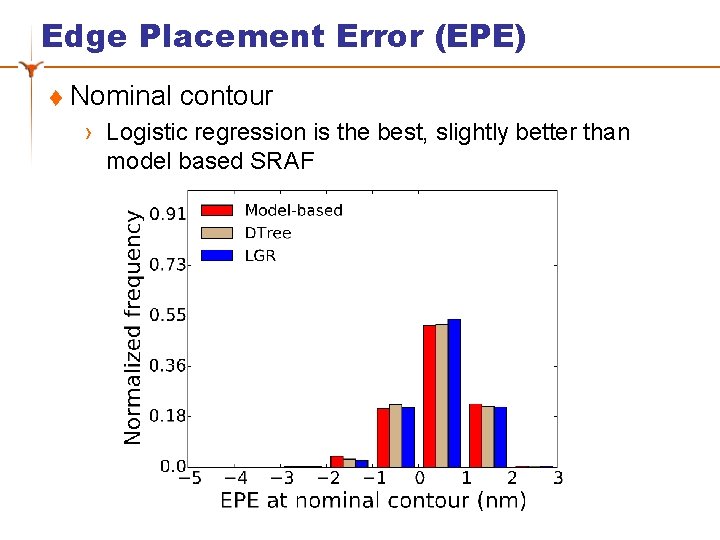

Edge Placement Error (EPE)
- Nominal contour
  - Logistic regression is the best, slightly better than model-based SRAF

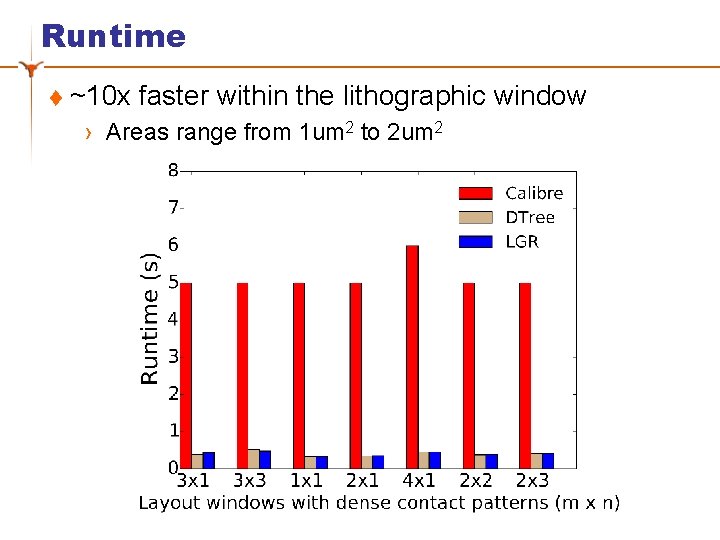

Runtime
- ~10x faster within the lithographic window
  - Areas range from 1 µm² to 2 µm²

Summary
- A machine learning based framework is proposed for SRAF generation
- A robust feature extraction scheme and a novel feature compaction technique improve SRAF consistency
- 10x speed-up in layout windows with performance comparable to an industry-strength model-based approach

Thanks Q&A

Back up

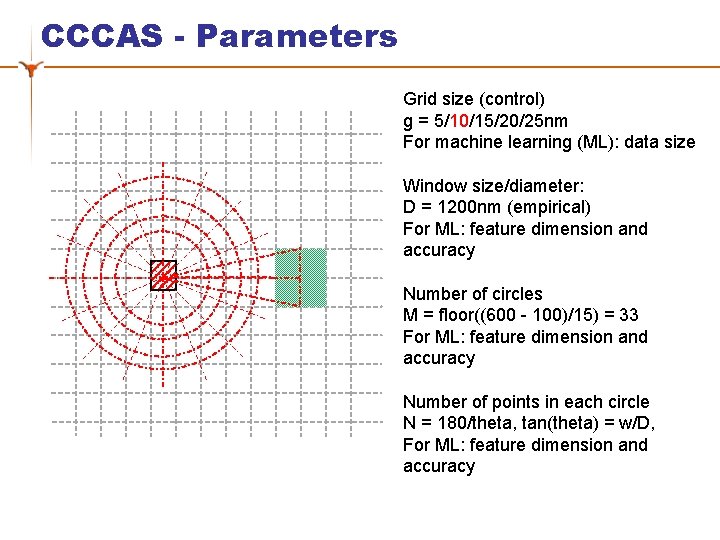

CCCAS Parameters
- Grid size (control): $g = 5/10/15/20/25$ nm
  - For machine learning (ML): data size
- Window size/diameter: $D = 1200$ nm (empirical)
  - For ML: feature dimension and accuracy
- Number of circles: $M = \lfloor (600 - 100)/15 \rfloor = 33$
  - For ML: feature dimension and accuracy
- Number of points in each circle: $N = 180/\theta$, with $\tan\theta = w/D$
  - For ML: feature dimension and accuracy
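The parameter arithmetic can be checked with a few lines of Python. The interpretation is an assumption: 600 nm is read as the window radius $D/2$, 100 nm as the innermost circle radius, 15 nm as the radial spacing, and $\theta$ as an angle in degrees. The slide does not define $w$, so no value of $N$ is computed for it; `num_circles` and `points_per_circle` are hypothetical names.

```python
import math


def num_circles(radius_max: float, radius_min: float,
                radial_step: float) -> int:
    """M = floor((radius_max - radius_min) / radial_step)."""
    return math.floor((radius_max - radius_min) / radial_step)


def points_per_circle(w: float, D: float) -> float:
    """N = 180 / theta with tan(theta) = w / D, theta taken in degrees."""
    theta_deg = math.degrees(math.atan(w / D))
    return 180.0 / theta_deg


D = 1200.0                                           # window diameter in nm
M = num_circles(D / 2.0, 100.0, 15.0)                # (600 - 100) / 15 -> 33
```

A smaller `w` relative to `D` gives a smaller angle and therefore more sampling points per circle, which raises the feature dimension.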

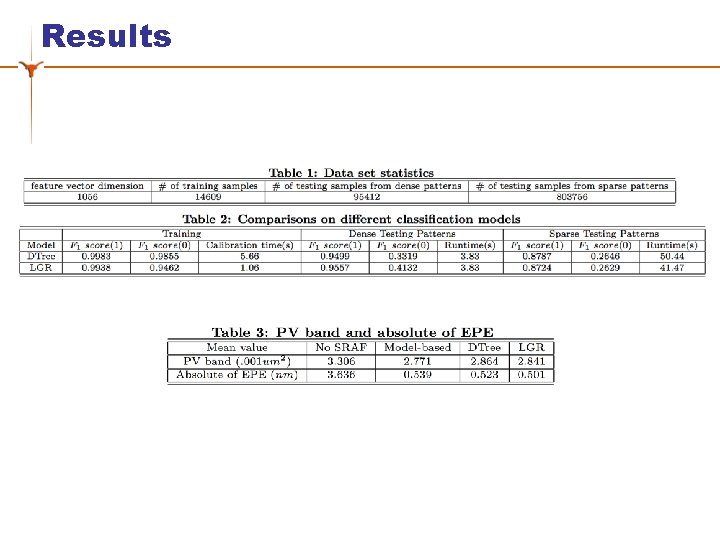

Results
