A Look Inside Intel®: The Core™ (Nehalem) Microarchitecture


- Slides: 123

A Look Inside Intel®: The Core™ (Nehalem) Microarchitecture

Beeman Strong, Intel® Core™ microarchitecture (Nehalem) Architect, Intel Corporation

Agenda

- Intel® Core™ Microarchitecture (Nehalem) Design Overview
- Enhanced Processor Core
- Performance Features
- Intel® Hyper-Threading Technology
- New Platform
- New Cache Hierarchy
- New Platform Architecture
- Performance Acceleration
- Virtualization
- New Instructions
- Power Management Overview
- Minimizing Idle Power Consumption
- Performance when it counts

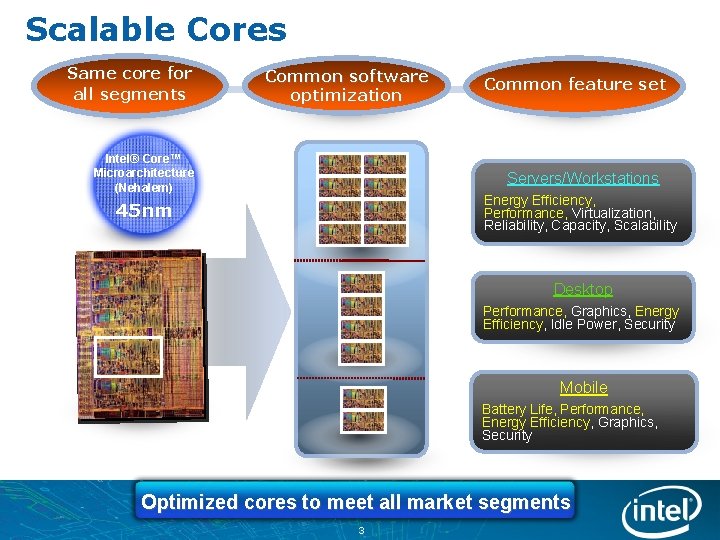

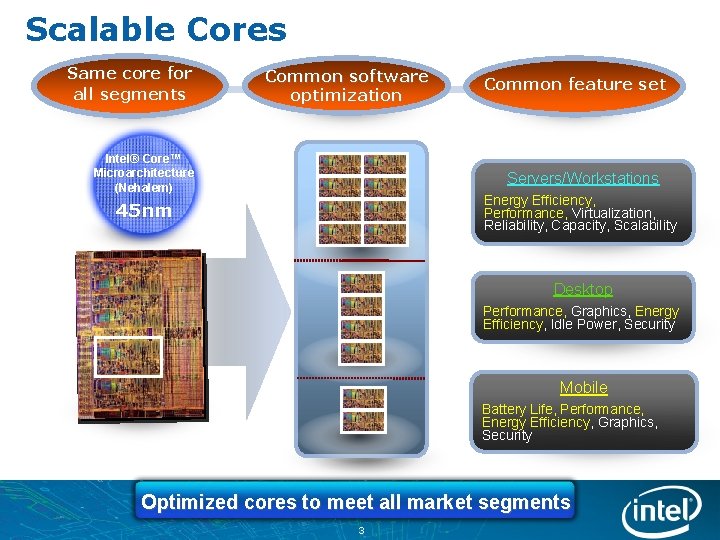

Scalable Cores

Same core for all segments, common software optimization, common feature set: the 45 nm Intel® Core™ Microarchitecture (Nehalem).

- Servers/Workstations: Energy Efficiency, Performance, Virtualization, Reliability, Capacity, Scalability
- Desktop: Performance, Graphics, Energy Efficiency, Idle Power, Security
- Mobile: Battery Life, Performance, Energy Efficiency, Graphics, Security

Optimized cores to meet all market segments

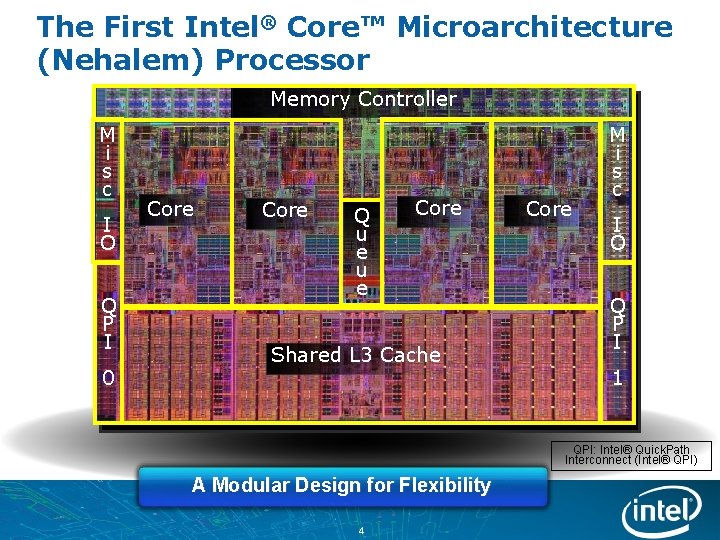

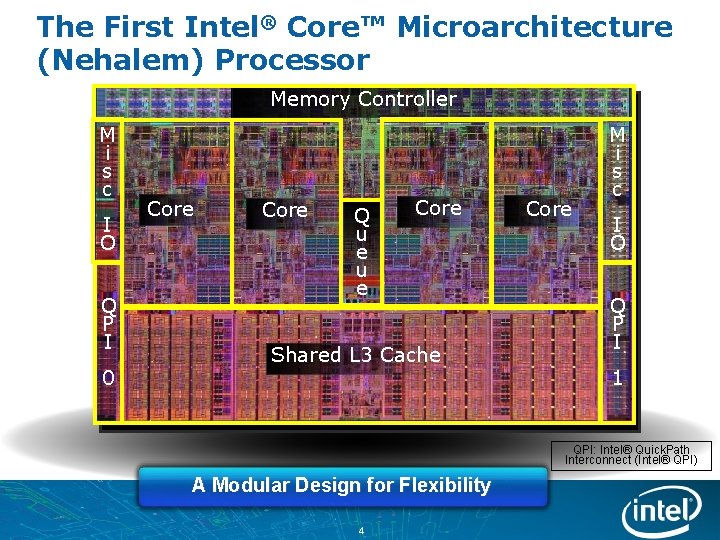

The First Intel® Core™ Microarchitecture (Nehalem) Processor

Figure: processor die with the cores, the queue, a shared L3 cache, the memory controller, miscellaneous I/O, and two Intel® QuickPath Interconnect (Intel® QPI) links (QPI 0 and QPI 1).

A Modular Design for Flexibility


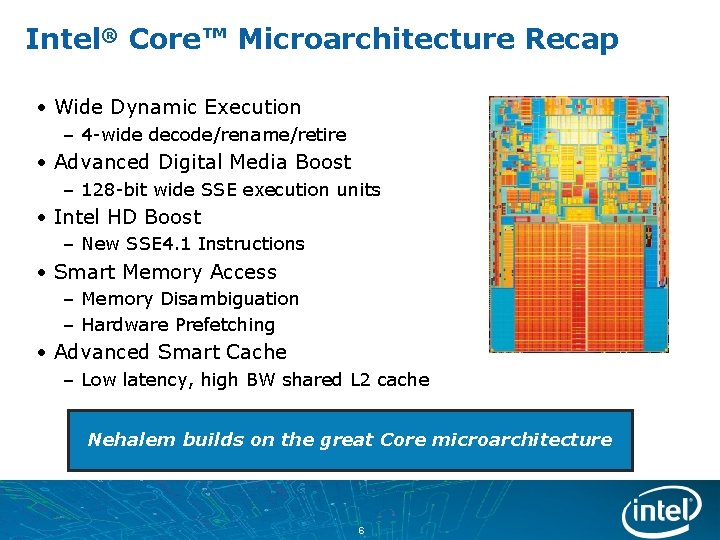

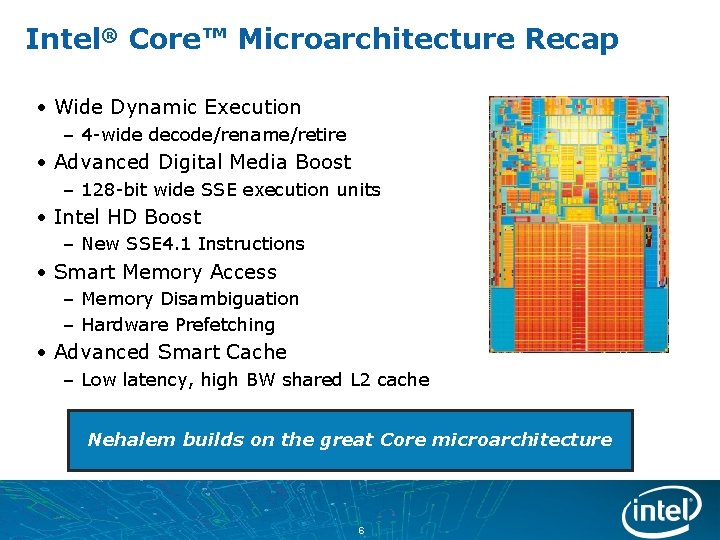

Intel® Core™ Microarchitecture Recap

- Wide Dynamic Execution
  - 4-wide decode/rename/retire
- Advanced Digital Media Boost
  - 128-bit wide SSE execution units
- Intel HD Boost
  - New SSE4.1 instructions
- Smart Memory Access
  - Memory Disambiguation
  - Hardware Prefetching
- Advanced Smart Cache
  - Low-latency, high-bandwidth shared L2 cache

Nehalem builds on the great Core microarchitecture

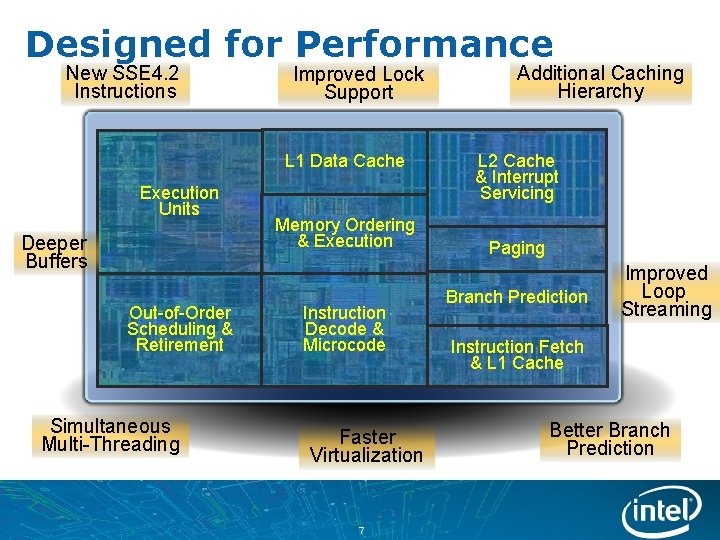

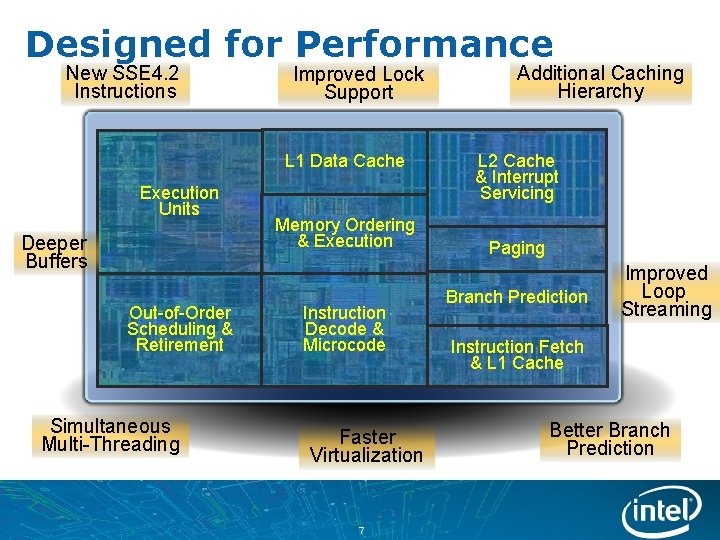

Designed for Performance

Figure: core diagram annotated with the areas improved in Nehalem: New SSE4.2 Instructions; Improved Lock Support; L1 Data Cache; Execution Units; Deeper Buffers; Out-of-Order Scheduling & Retirement; Simultaneous Multi-Threading; Memory Ordering & Execution; Instruction Decode & Microcode; Faster Virtualization; Additional Caching Hierarchy; L2 Cache & Interrupt Servicing; Paging; Branch Prediction; Improved Loop Streaming; Instruction Fetch & L1 Cache; Better Branch Prediction.

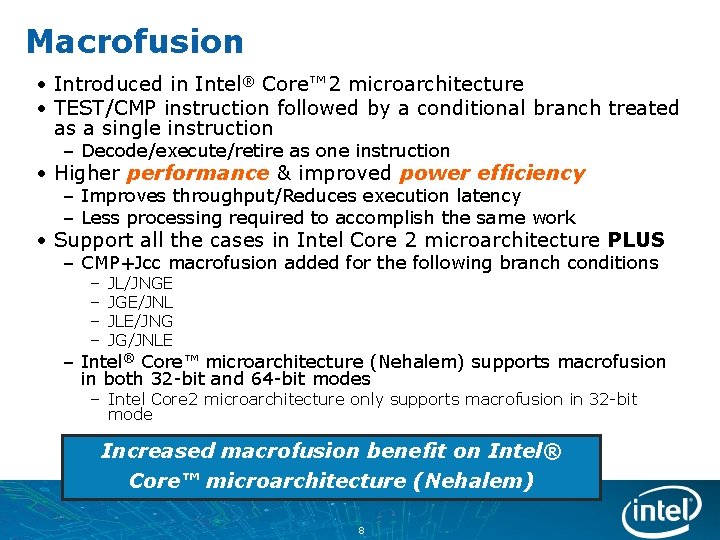

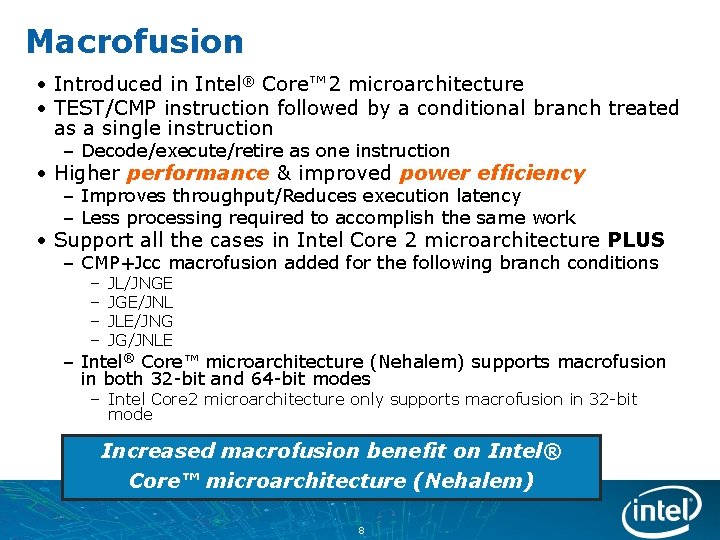

Macrofusion

- Introduced in Intel® Core™ 2 microarchitecture
- A TEST/CMP instruction followed by a conditional branch is treated as a single instruction
  - Decoded, executed, and retired as one instruction
- Higher performance & improved power efficiency
  - Improves throughput/reduces execution latency
  - Less processing required to accomplish the same work
- Supports all the cases in Intel Core 2 microarchitecture PLUS
  - CMP+Jcc macrofusion added for the following branch conditions: JL/JNGE, JGE/JNL, JLE/JNG, JG/JNLE
  - Intel® Core™ microarchitecture (Nehalem) supports macrofusion in both 32-bit and 64-bit modes
  - Intel Core 2 microarchitecture only supports macrofusion in 32-bit mode

Increased macrofusion benefit on Intel® Core™ microarchitecture (Nehalem)
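The fusion rules can be summarized as a small predicate. This is a simplified sketch, not Intel's decoder logic: `can_macrofuse` and the set names are hypothetical, the Core 2 list of CMP-fusible branches is taken from Intel's optimization manual rather than from this slide, and operand-form restrictions (for example memory-plus-immediate forms) are ignored.

```python
# Simplified model of CMP/TEST + Jcc macrofusion on Core 2 vs. Nehalem.
# Assumption: the Core 2 CMP list comes from Intel's optimization manual, not
# from the slide; operand-form restrictions are ignored.

# Jcc forms CMP could already fuse with on Core 2 (unsigned and equality tests).
CORE2_CMP_JCC = {"JA", "JNBE", "JAE", "JNB", "JNC", "JE", "JZ",
                 "JNA", "JBE", "JNAE", "JC", "JB", "JNE", "JNZ"}
# Signed-compare branches added by Nehalem (the list on the slide).
NEHALEM_EXTRA_CMP_JCC = {"JL", "JNGE", "JGE", "JNL", "JLE", "JNG", "JG", "JNLE"}
# Remaining conditional jumps (overflow, sign, parity) fuse only after TEST.
OTHER_JCC = {"JO", "JNO", "JS", "JNS", "JP", "JPE", "JNP", "JPO"}
ALL_JCC = CORE2_CMP_JCC | NEHALEM_EXTRA_CMP_JCC | OTHER_JCC


def can_macrofuse(first, jcc, uarch, mode_64bit):
    """Return True if `first` followed by `jcc` would decode as one fused uop."""
    first, jcc = first.upper(), jcc.upper()
    if jcc not in ALL_JCC:
        return False
    if uarch == "core2":
        if mode_64bit:            # Core 2 fuses only in 32-bit mode
            return False
        cmp_ok = CORE2_CMP_JCC
    elif uarch == "nehalem":      # both 32-bit and 64-bit modes
        cmp_ok = CORE2_CMP_JCC | NEHALEM_EXTRA_CMP_JCC
    else:
        raise ValueError(f"unknown microarchitecture: {uarch}")
    if first == "TEST":
        return True               # TEST fuses with every conditional jump
    if first == "CMP":
        return jcc in cmp_ok
    return False                  # no other first instruction fuses in these generations
```

For example, `can_macrofuse("CMP", "JL", "nehalem", True)` is true, while the same pair on Core 2 is rejected twice over: JL is a signed condition and the code runs in 64-bit mode.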

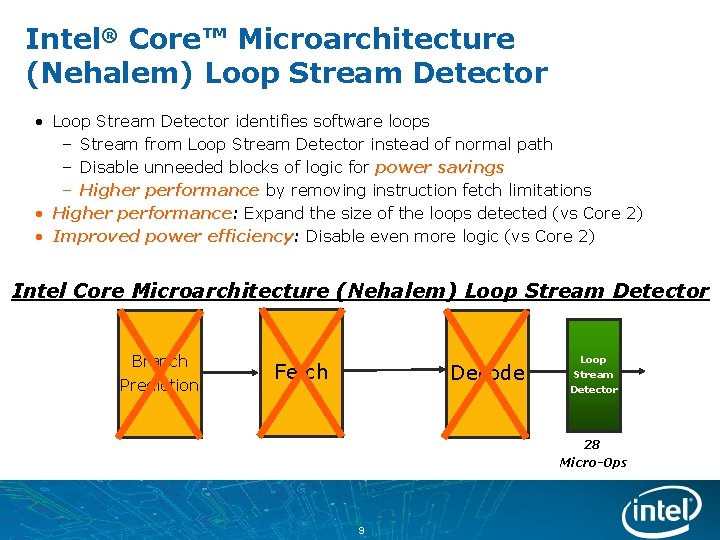

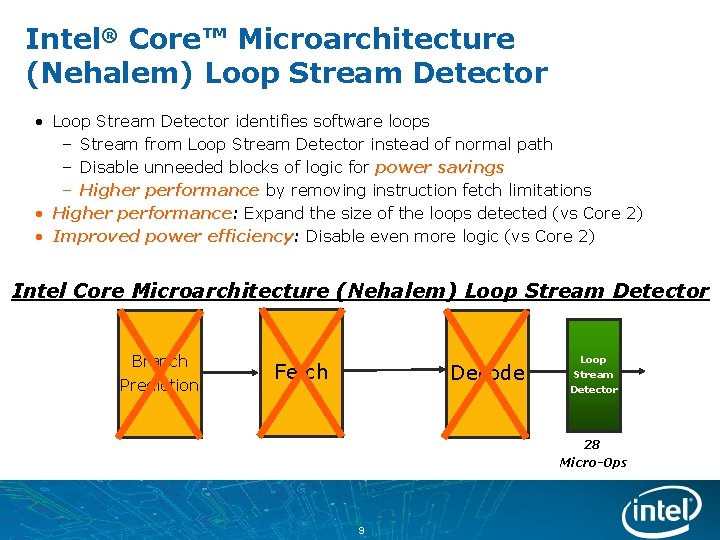

Intel® Core™ Microarchitecture (Nehalem) Loop Stream Detector

- The Loop Stream Detector identifies software loops
  - Stream from Loop Stream Detector instead of normal path
  - Disable unneeded blocks of logic for power savings
  - Higher performance by removing instruction fetch limitations
- Higher performance: expand the size of the loops detected (vs. Core 2)
- Improved power efficiency: disable even more logic (vs. Core 2)

Figure: the Nehalem Loop Stream Detector sits after decode (Branch Prediction → Fetch → Decode → Loop Stream Detector, holding 28 micro-ops).
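A rough way to see what the Loop Stream Detector buys is to count where each uop of a loop comes from. This is a toy model under stated assumptions: the helper name is hypothetical, the loop is assumed to be recognized after one iteration, one instruction is counted as one uop, and the real detection conditions are ignored. On Nehalem the streamed uops are already decoded, which is why fetch and decode logic can be idled; on Core 2 the buffered instructions still pass through decode.

```python
# Capacities quoted on the slides: Core 2 buffers up to 18 instructions ahead
# of decode; Nehalem buffers up to 28 already-decoded micro-ops.
LSD_CAPACITY = {"core2": 18, "nehalem": 28}


def uop_sources(body_uops, iterations, uarch):
    """Split a loop's delivered uops between the normal path and the LSD.

    Toy assumptions: one instruction == one uop, the loop is recognized after
    its first iteration, and it is streamed only if the whole body fits.
    """
    total = body_uops * iterations
    if iterations == 0 or body_uops > LSD_CAPACITY[uarch]:
        # Body too large (or no iterations): everything uses fetch/decode.
        return {"normal_path": total, "loop_stream_detector": 0}
    # First pass goes through the normal path; later passes stream from the LSD.
    return {"normal_path": body_uops, "loop_stream_detector": total - body_uops}
```

A 24-uop loop body illustrates the larger Nehalem buffer: it fits in 28 micro-ops but not in Core 2's 18 instructions, so `uop_sources(24, 100, "nehalem")` streams 2376 of 2400 uops while the Core 2 case streams none.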

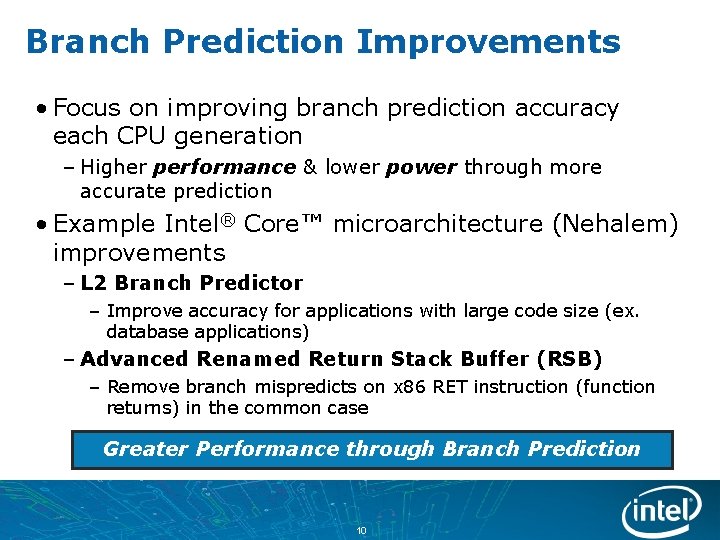

Branch Prediction Improvements

- Focus on improving branch prediction accuracy each CPU generation
  - Higher performance & lower power through more accurate prediction
- Example Intel® Core™ microarchitecture (Nehalem) improvements
  - L2 Branch Predictor
    - Improve accuracy for applications with large code size (e.g. database applications)
  - Advanced Renamed Return Stack Buffer (RSB)
    - Remove branch mispredicts on the x86 RET instruction (function returns) in the common case

Greater Performance through Branch Prediction

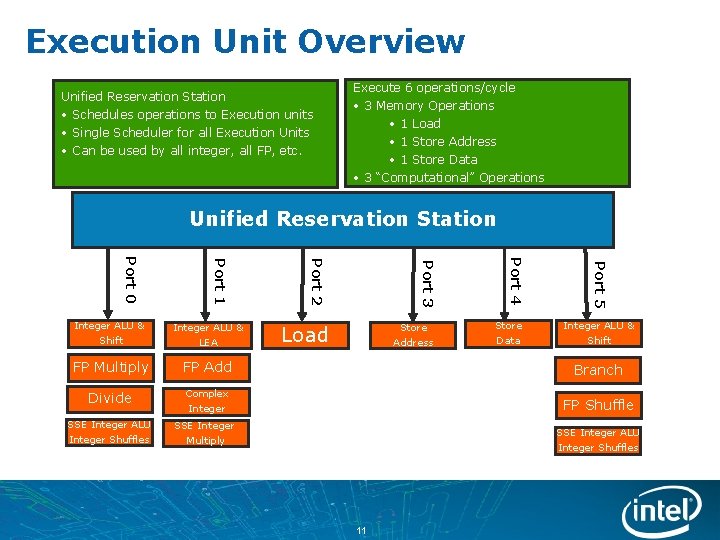

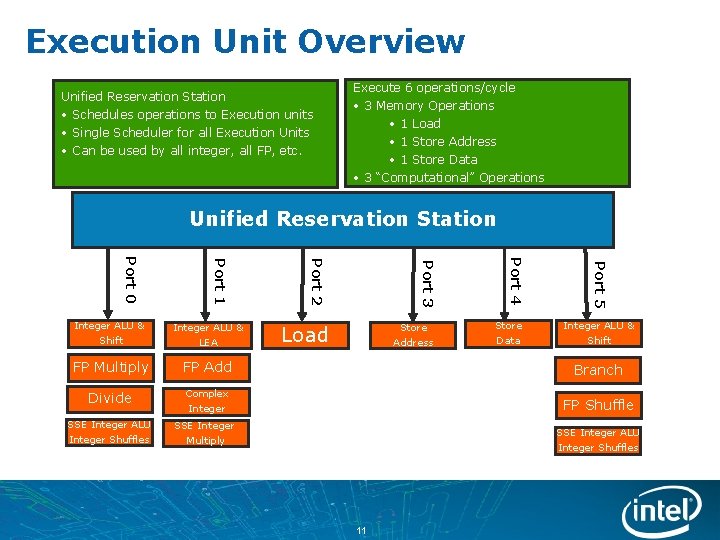

Execution Unit Overview

Execute 6 operations/cycle:
- 3 memory operations
  - 1 load
  - 1 store address
  - 1 store data
- 3 "computational" operations

Unified Reservation Station:
- Schedules operations to execution units
- Single scheduler for all execution units
- Can be used by all integer, all FP, etc.

| Port | Execution units |
|---|---|
| Port 0 | Integer ALU & Shift; FP Multiply; Divide; SSE Integer ALU; Integer Shuffles |
| Port 1 | Integer ALU & LEA; FP Add; Complex Integer; SSE Integer Multiply |
| Port 2 | Load |
| Port 3 | Store Address |
| Port 4 | Store Data |
| Port 5 | Integer ALU & Shift; Branch; FP Shuffle; SSE Integer ALU; Integer Shuffles |
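Because several unit classes appear on more than one port, the port table gives a simple throughput bound for a loop: for any set of ports, the uops that can run only on those ports need at least that count divided by the number of ports, in cycles. The sketch below computes that bound. The op-class names are hypothetical simplifications of the table. The sketch also ignores dependencies and latency, and it does not model the 4-wide rename/retire limit, which caps sustained throughput below the 6-per-cycle dispatch peak.

```python
from fractions import Fraction
from itertools import combinations

# Port binding per unit class, following the port table above (simplified).
PORTS = {
    "int_alu": {0, 1, 5},
    "shift": {0, 5},
    "lea": {1},
    "complex_int": {1},
    "branch": {5},
    "fp_mul": {0},
    "fp_add": {1},
    "fp_shuffle": {5},
    "divide": {0},
    "sse_int_alu": {0, 5},
    "sse_int_mul": {1},
    "int_shuffle": {0, 5},
    "load": {2},
    "store_addr": {3},
    "store_data": {4},
}


def min_cycles_per_iteration(mix):
    """Lower bound on cycles per loop iteration from port pressure alone.

    `mix` maps op class -> uops per iteration. For every subset S of ports,
    uops that can only execute on ports in S need >= count / |S| cycles;
    the largest such ratio is the bound.
    """
    all_ports = sorted(set().union(*PORTS.values()))
    bound = Fraction(0)
    for r in range(1, len(all_ports) + 1):
        for subset in combinations(all_ports, r):
            s = set(subset)
            confined = sum(n for op, n in mix.items() if PORTS[op] <= s)
            bound = max(bound, Fraction(confined, r))
    return bound
```

For instance, two loads per iteration are bound to port 2 alone, so `min_cycles_per_iteration({"load": 2, "int_alu": 3})` is 2 cycles even though the three ALU uops could spread over ports 0, 1, and 5.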

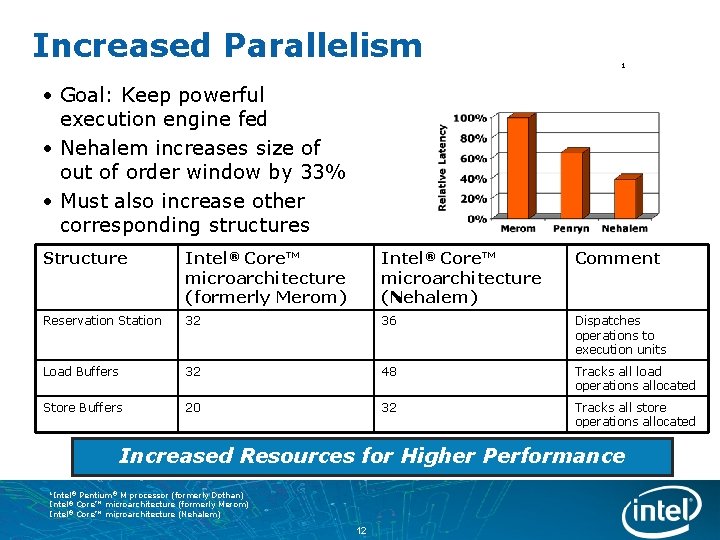

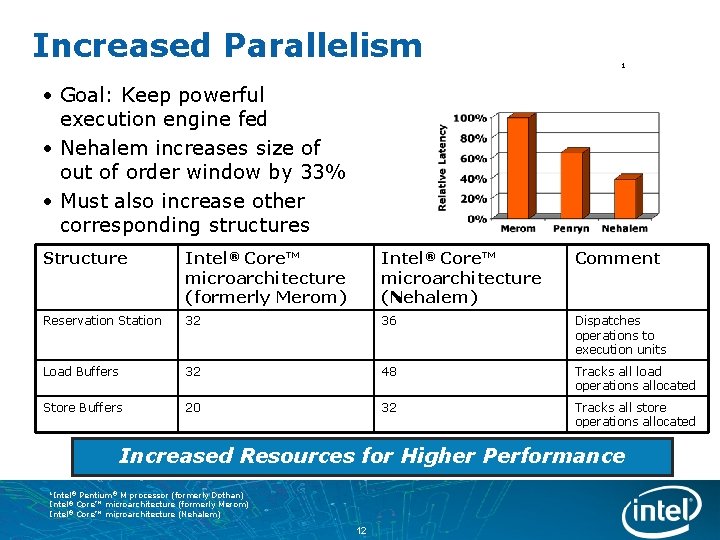

Increased Parallelism

- Goal: keep the powerful execution engine fed
- Nehalem increases the size of the out-of-order window by 33%
- Must also increase other corresponding structures

| Structure | Intel® Core™ microarchitecture (formerly Merom) | Intel® Core™ microarchitecture (Nehalem) | Comment |
|---|---|---|---|
| Reservation Station | 32 | 36 | Dispatches operations to execution units |
| Load Buffers | 32 | 48 | Tracks all load operations allocated |
| Store Buffers | 20 | 32 | Tracks all store operations allocated |

Increased Resources for Higher Performance

Figure: chart comparing Intel® Pentium® M processor (formerly Dothan), Intel® Core™ microarchitecture (formerly Merom), and Intel® Core™ microarchitecture (Nehalem).

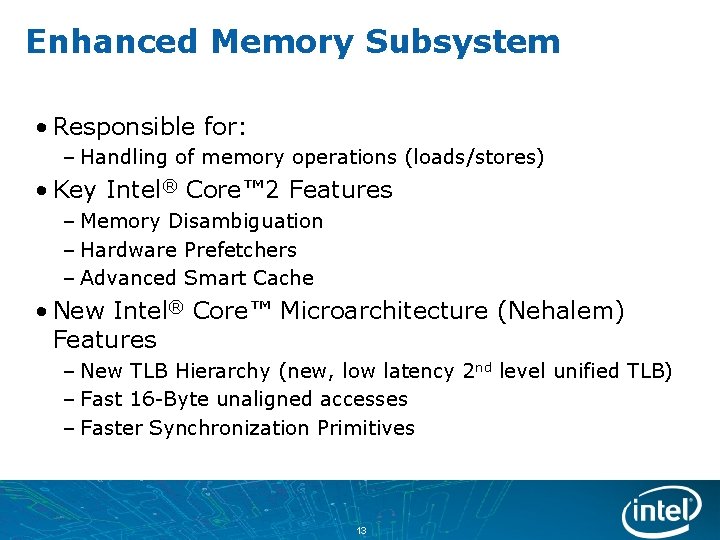

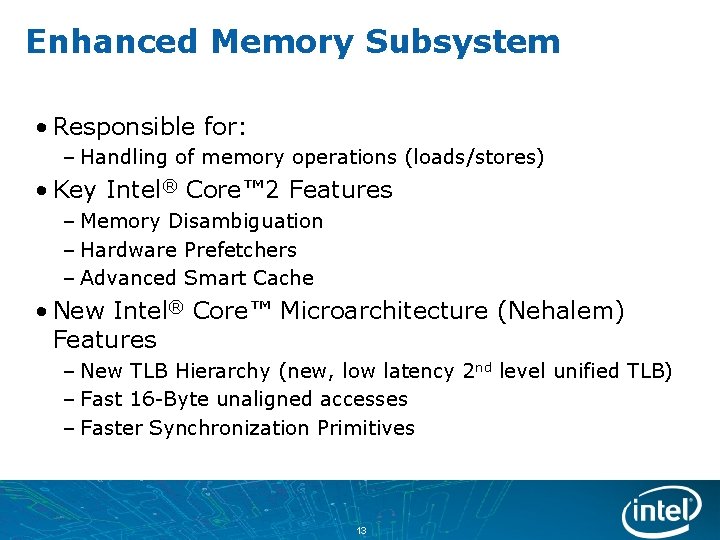

Enhanced Memory Subsystem

- Responsible for:
  - Handling of memory operations (loads/stores)
- Key Intel® Core™ 2 features
  - Memory Disambiguation
  - Hardware Prefetchers
  - Advanced Smart Cache
- New Intel® Core™ Microarchitecture (Nehalem) features
  - New TLB hierarchy (new, low-latency 2nd level unified TLB)
  - Fast 16-byte unaligned accesses
  - Faster synchronization primitives

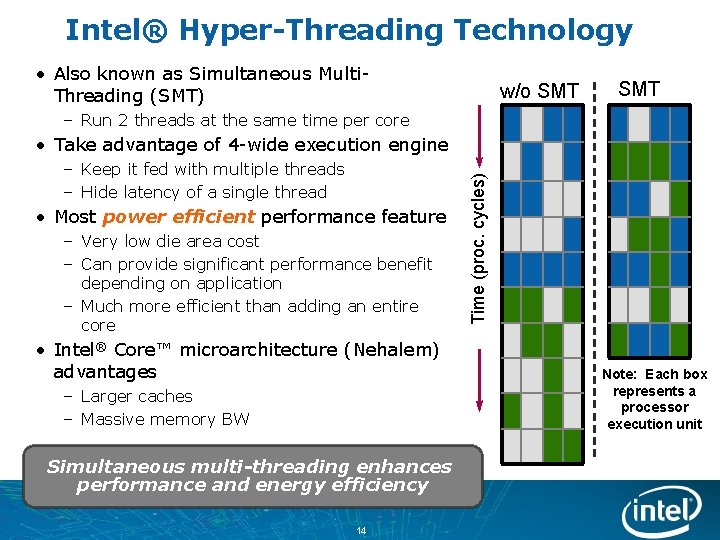

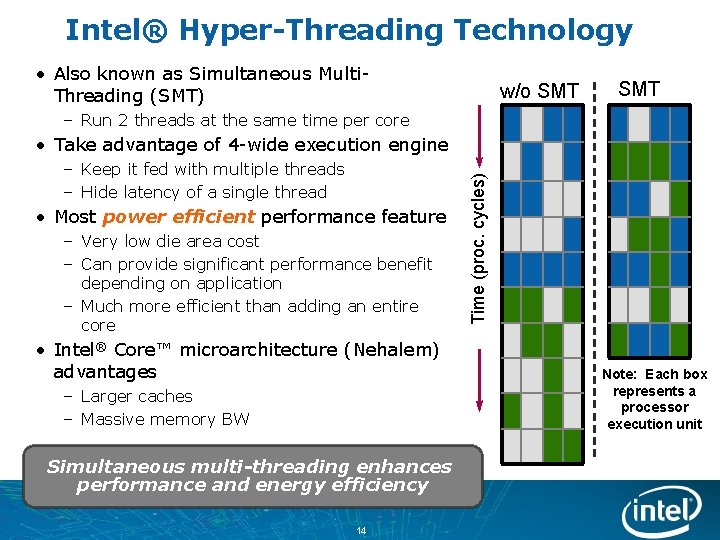

Intel® Hyper-Threading Technology

- Also known as Simultaneous Multi-Threading (SMT)
  - Run 2 threads at the same time per core
- Take advantage of 4-wide execution engine
  - Keep it fed with multiple threads
  - Hide latency of a single thread
- Most power-efficient performance feature
  - Very low die area cost
  - Can provide significant performance benefit depending on application
  - Much more efficient than adding an entire core
- Intel® Core™ microarchitecture (Nehalem) advantages
  - Larger caches
  - Massive memory BW

Figure: execution-unit usage over time (processor cycles) with and without SMT; each box represents a processor execution unit.

Simultaneous multi-threading enhances performance and energy efficiency
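A toy issue-slot model shows why a second thread helps keep a 4-wide engine busy. It is a crude sketch under a hypothetical assumption: each list gives how many independent uops a thread has ready in each cycle, and uops a thread cannot issue that cycle are dropped from the model instead of being queued. Real SMT also shares or partitions buffers, which is not modeled. The function names and the sample counts are hypothetical.

```python
def slots_used(ready_per_thread, width=4):
    """Issue slots filled when the listed threads share one `width`-wide core."""
    cycles = max(len(t) for t in ready_per_thread)
    used = 0
    for c in range(cycles):
        # Every thread offers its ready uops; the core issues at most `width`.
        offered = sum(t[c] for t in ready_per_thread if c < len(t))
        used += min(width, offered)
    return used


def utilization(ready_per_thread, width=4):
    """Fraction of all issue slots that were filled."""
    cycles = max(len(t) for t in ready_per_thread)
    return slots_used(ready_per_thread, width) / (width * cycles)


# Hypothetical per-cycle ready-uop counts: each thread stalls in different cycles.
thread_a = [4, 1, 0, 2]
thread_b = [0, 3, 4, 1]
```

Alone, `thread_a` fills 7 of 16 slots (0.4375); sharing the core with `thread_b` fills 15 of 16 (0.9375), because one thread's stall cycles are the other's busy cycles.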

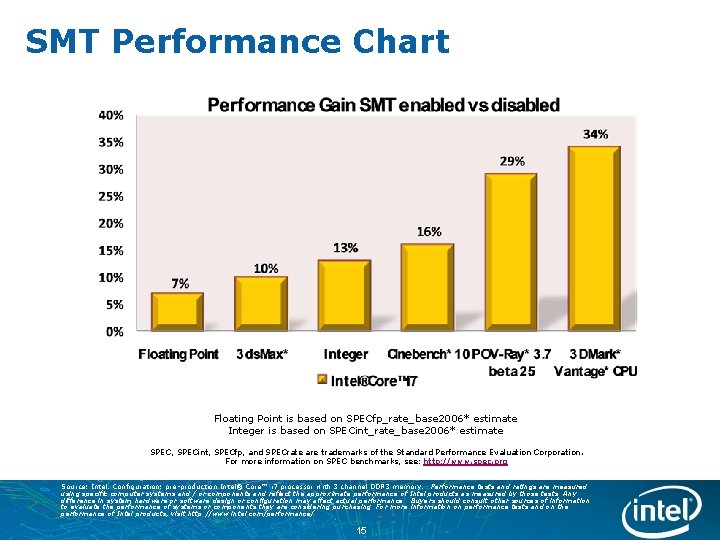

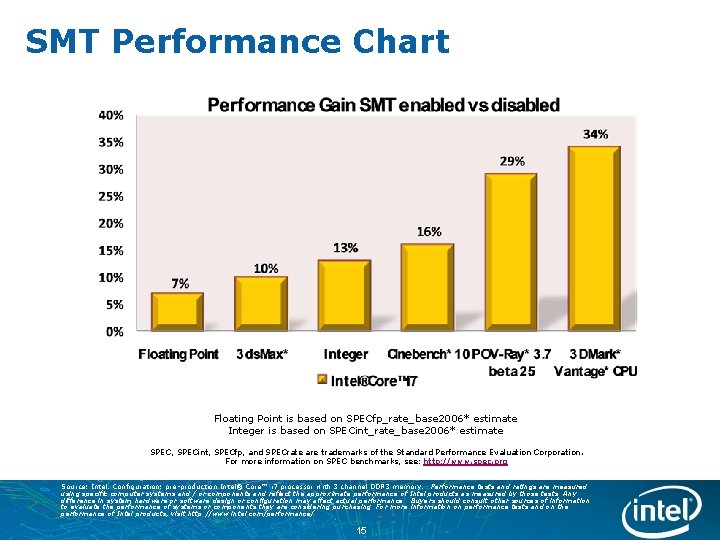

SMT Performance Chart

Figure: SMT performance gain chart. Floating point is based on a SPECfp_rate_base2006* estimate; integer is based on a SPECint_rate_base2006* estimate.

SPEC, SPECint, SPECfp, and SPECrate are trademarks of the Standard Performance Evaluation Corporation. For more information on SPEC benchmarks, see: http://www.spec.org

Source: Intel. Configuration: pre-production Intel® Core™ i7 processor with 3-channel DDR3 memory. Performance tests and ratings are measured using specific computer systems and/or components and reflect the approximate performance of Intel products as measured by those tests. Any difference in system hardware or software design or configuration may affect actual performance. Buyers should consult other sources of information to evaluate the performance of systems or components they are considering purchasing. For more information on performance tests and on the performance of Intel products, visit http://www.intel.com/performance/


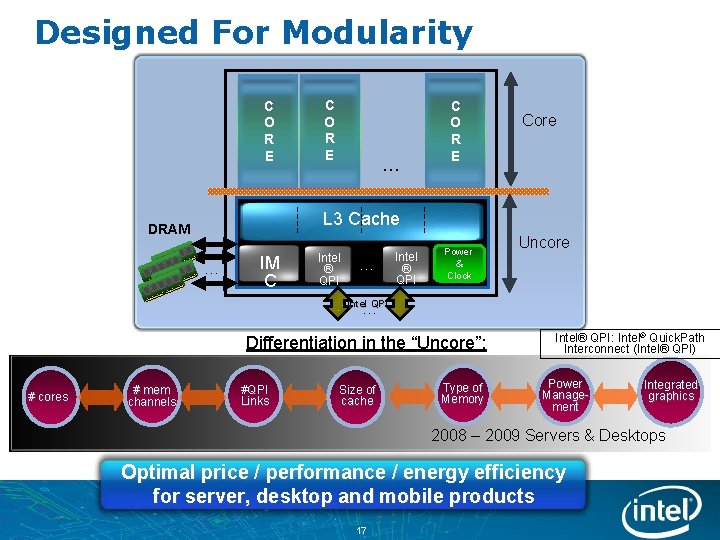

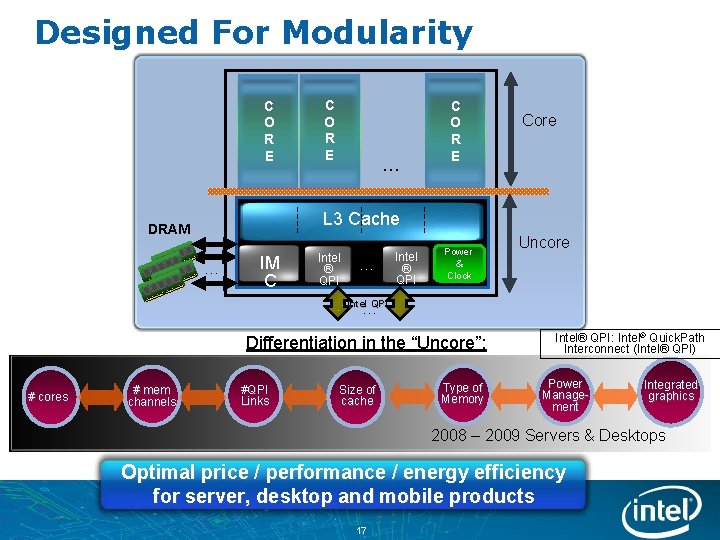

Designed For Modularity

Figure: the cores connect to an “uncore” containing the shared L3 cache, the integrated memory controller (IMC) to DRAM, Intel® QPI links, and power & clock logic.

Differentiation in the “Uncore”:
- # cores
- # memory channels
- # Intel® QPI links
- Size of cache
- Type of memory
- Power management
- Integrated graphics

Intel® QPI: Intel® QuickPath Interconnect (Intel® QPI)

2008–2009 Servers & Desktops

Optimal price / performance / energy efficiency for server, desktop and mobile products

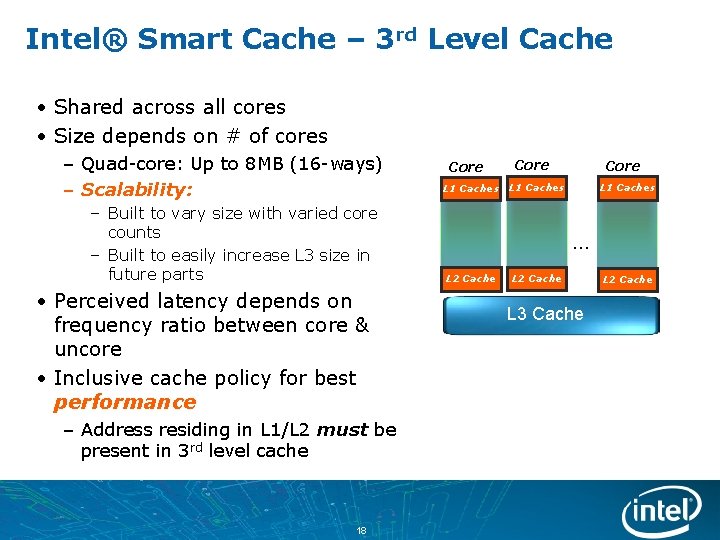

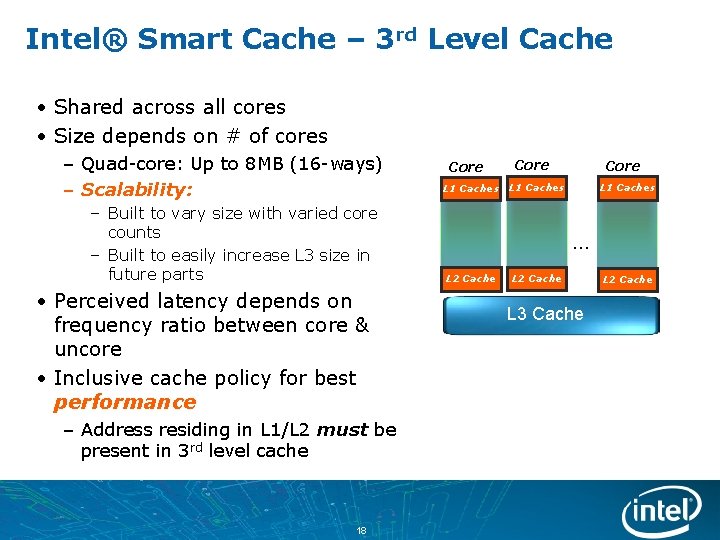

Intel® Smart Cache – 3rd Level Cache

- Shared across all cores
- Size depends on # of cores
  - Quad-core: up to 8 MB (16-way)
  - Scalability:
    - Built to vary size with varied core counts
    - Built to easily increase L3 size in future parts
- Perceived latency depends on frequency ratio between core & uncore
- Inclusive cache policy for best performance
  - Address residing in L1/L2 must be present in 3rd level cache

Figure: each core has its own L1 caches and L2 cache; all cores share the L3 cache.

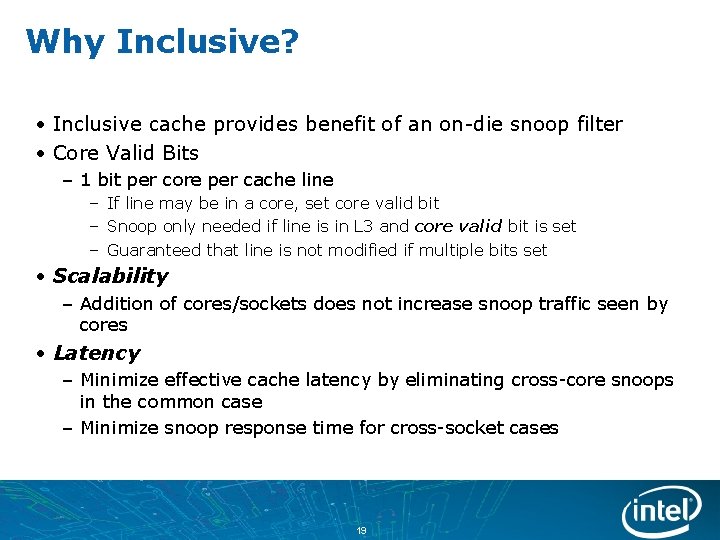

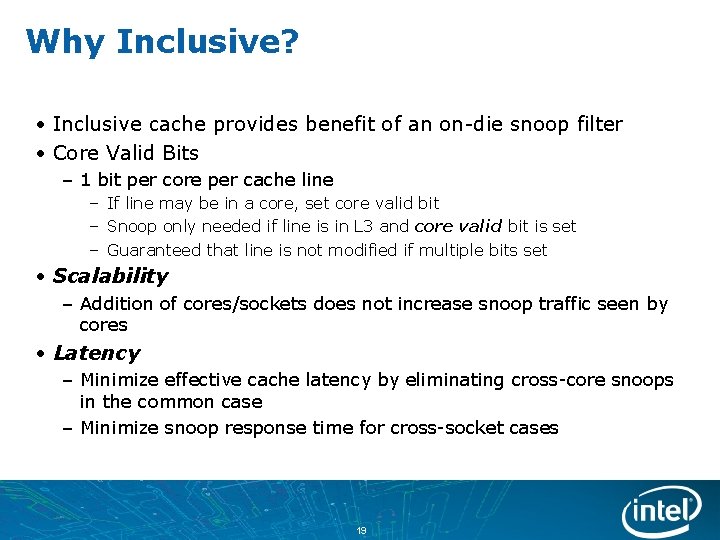

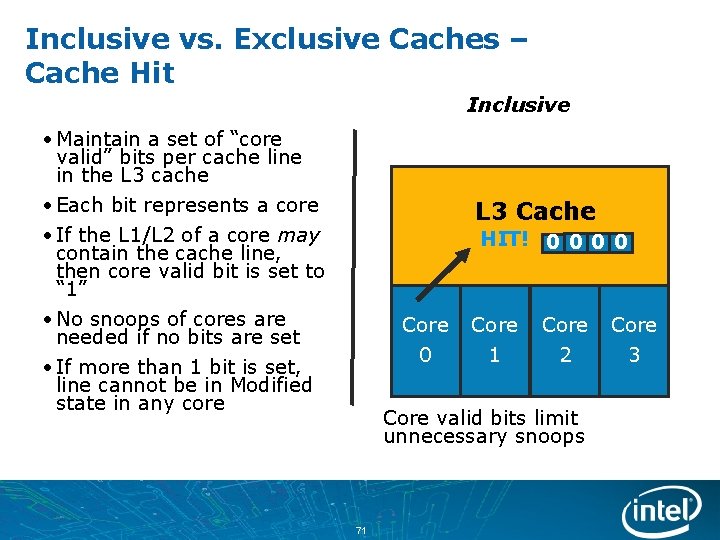

Why Inclusive?

- Inclusive cache provides the benefit of an on-die snoop filter
- Core Valid Bits
  - 1 bit per core per cache line
  - If the line may be in a core, set that core's valid bit
  - Snoop only needed if the line is in L3 and the core valid bit is set
  - Guaranteed that the line is not modified if multiple bits are set
- Scalability
  - Addition of cores/sockets does not increase snoop traffic seen by cores
- Latency
  - Minimize effective cache latency by eliminating cross-core snoops in the common case
  - Minimize snoop response time for cross-socket cases
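The core-valid-bit filtering can be modeled with one bitmask per L3 line. This is an illustrative sketch with hypothetical class and method names. It never clears bits, matching the "may be in a core" semantics, and it does not model evictions, coherence states, or cross-socket snoops.

```python
class InclusiveL3:
    """Toy inclusive L3 with one core-valid bit per core per line (a snoop filter)."""

    def __init__(self, num_cores):
        self.num_cores = num_cores
        self.core_valid = {}   # line address -> bitmask of cores that may hold it

    def fill(self, core, line):
        """A core brings `line` into its L1/L2; the inclusive L3 sets its bit."""
        self.core_valid[line] = self.core_valid.get(line, 0) | (1 << core)

    def _others(self, requester, mask):
        return [c for c in range(self.num_cores)
                if c != requester and (mask >> c) & 1]

    def read_snoops(self, requester, line):
        """Cores that must be snooped before the L3 can answer a read."""
        mask = self.core_valid.get(line)
        if mask is None:
            return []   # inclusion: not in L3 means in no core cache
        if bin(mask).count("1") > 1:
            return []   # several holders: line cannot be modified, L3 data is valid
        return self._others(requester, mask)   # a single holder may have modified it

    def rfo_snoops(self, requester, line):
        """Cores whose copies must be invalidated before `requester` writes."""
        return self._others(requester, self.core_valid.get(line, 0))
```

A read miss in every private cache therefore snoops at most one core, and none at all when the L3 misses or the line is known to be shared.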

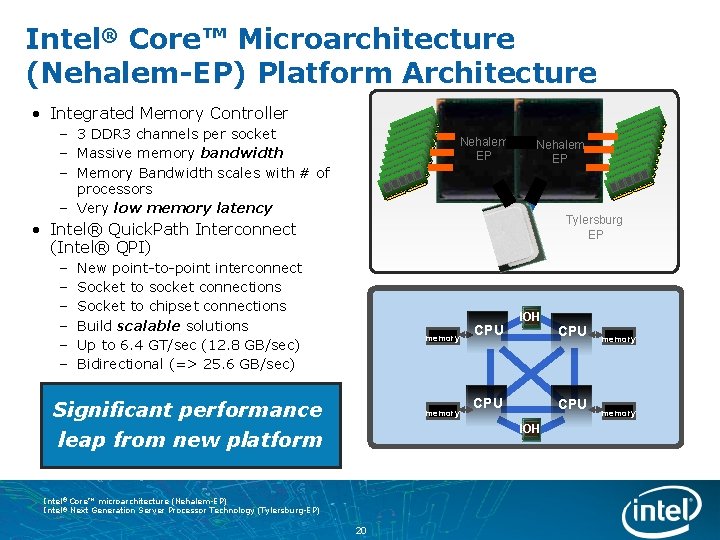

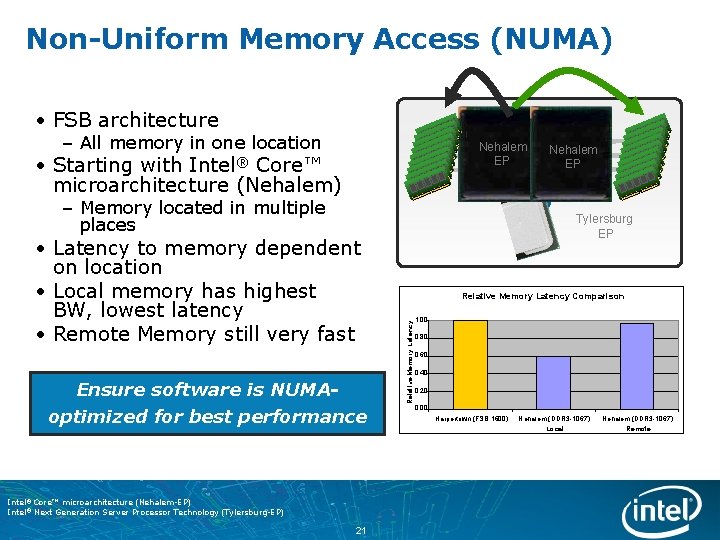

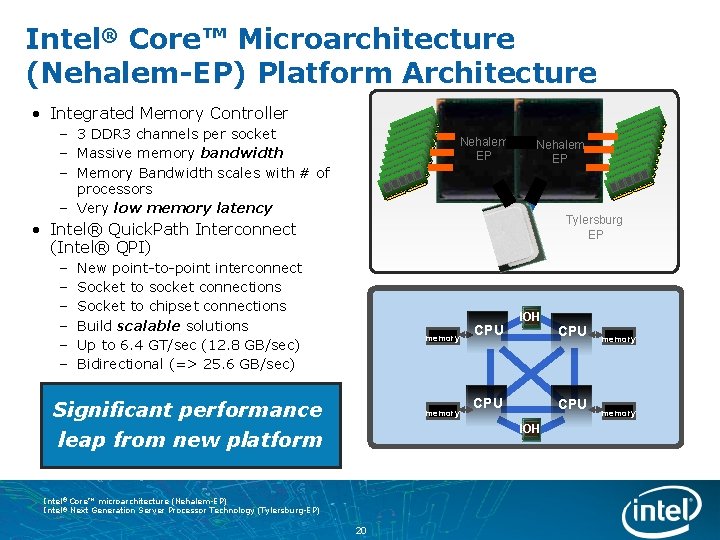

Intel® Core™ Microarchitecture (Nehalem-EP) Platform Architecture

- Integrated Memory Controller
  - 3 DDR3 channels per socket
  - Massive memory bandwidth
  - Memory bandwidth scales with # of processors
  - Very low memory latency
- Intel® QuickPath Interconnect (Intel® QPI)
  - New point-to-point interconnect
  - Socket to socket connections
  - Socket to chipset connections
  - Build scalable solutions
  - Up to 6.4 GT/sec (12.8 GB/sec per direction)
  - Bidirectional (=> 25.6 GB/sec)

Figure: two Nehalem-EP CPUs, each with its own memory, connected to each other and to the Tylersburg-EP IOH. Nehalem-EP: Intel® Core™ microarchitecture (Nehalem-EP); Tylersburg-EP: Intel® Next Generation Server Processor Technology (Tylersburg-EP).

Significant performance leap from new platform
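The QPI figures follow from the transfer rate once the payload width is known. The sketch assumes, beyond what the slide states, that a full-width link carries 2 bytes of data per transfer in each direction; the function name is hypothetical.

```python
def qpi_bandwidth_gb_s(gt_per_s, bytes_per_transfer=2):
    """Peak QPI data bandwidth as (one direction, both directions) in GB/s.

    Assumption: 16 data bits (2 bytes) per transfer per direction.
    """
    per_direction = gt_per_s * bytes_per_transfer
    return per_direction, 2 * per_direction
```

At 6.4 GT/sec this gives 12.8 GB/sec per direction and 25.6 GB/sec for both directions combined, matching the slide.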

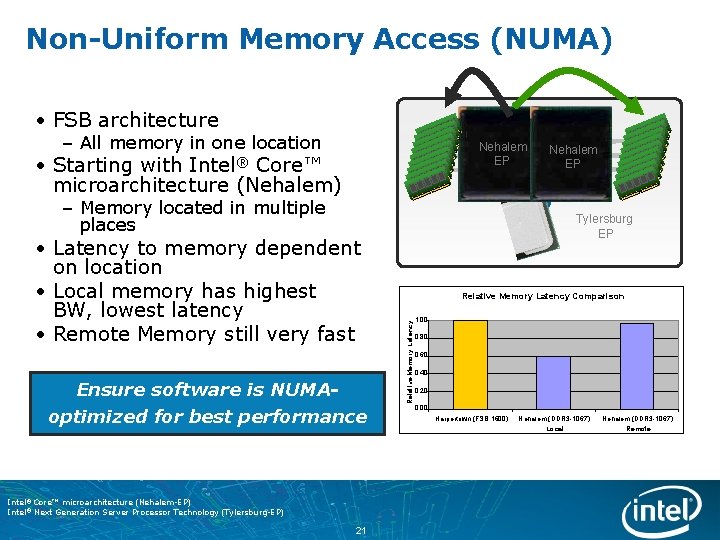

Non-Uniform Memory Access (NUMA)

- FSB architecture
  - All memory in one location
- Starting with Intel® Core™ microarchitecture (Nehalem)
  - Memory located in multiple places
- Latency to memory dependent on location
- Local memory has highest BW, lowest latency
- Remote memory still very fast

Ensure software is NUMA-optimized for best performance.

Figure: Relative Memory Latency Comparison. Relative memory latency (0.00 to 1.00) for Harpertown (FSB 1600) and for Nehalem (DDR3-1067) local and remote accesses, shown alongside a Nehalem-EP / Tylersburg-EP platform diagram (Intel® Core™ microarchitecture (Nehalem-EP); Intel® Next Generation Server Processor Technology (Tylersburg-EP)).
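Under Linux, one common way to keep a thread's data local is to pin the thread to one node's CPUs before it first touches its memory, since the default policy places a page on the node of the CPU that first touches it. The sketch below assumes Linux: it reads the sysfs `cpulist` file and calls `os.sched_setaffinity`, which is not available on every platform. `pin_to_node` and `parse_cpulist` are hypothetical helpers. Tools such as `numactl` or libnuma are the usual production route.

```python
import os


def parse_cpulist(text):
    """Parse a Linux cpulist string such as "0-3,8-11" into a set of CPU ids."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            lo, hi = part.split("-")
            cpus.update(range(int(lo), int(hi) + 1))
        else:
            cpus.add(int(part))
    return cpus


def pin_to_node(node):
    """Restrict this process to one NUMA node's CPUs (Linux only).

    Call before allocating and initializing data so that first-touch
    placement tends to put those pages in the node's local memory.
    """
    with open(f"/sys/devices/system/node/node{node}/cpulist") as f:
        cpus = parse_cpulist(f.read())
    os.sched_setaffinity(0, cpus)   # 0 means the calling process
    return cpus
```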

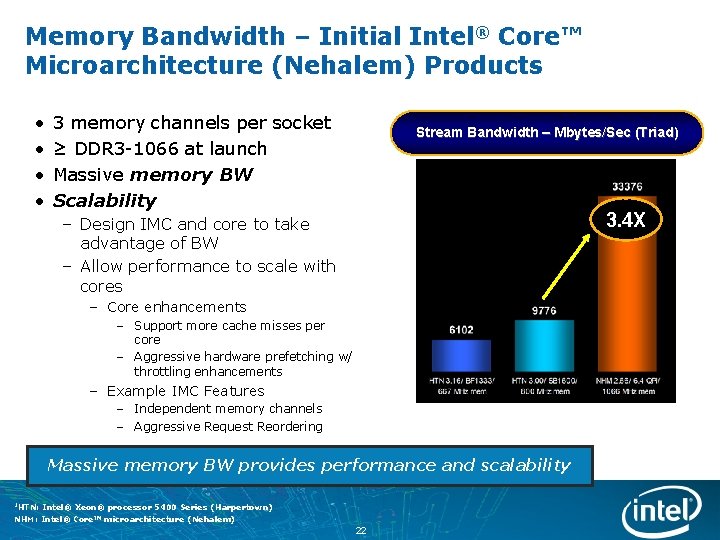

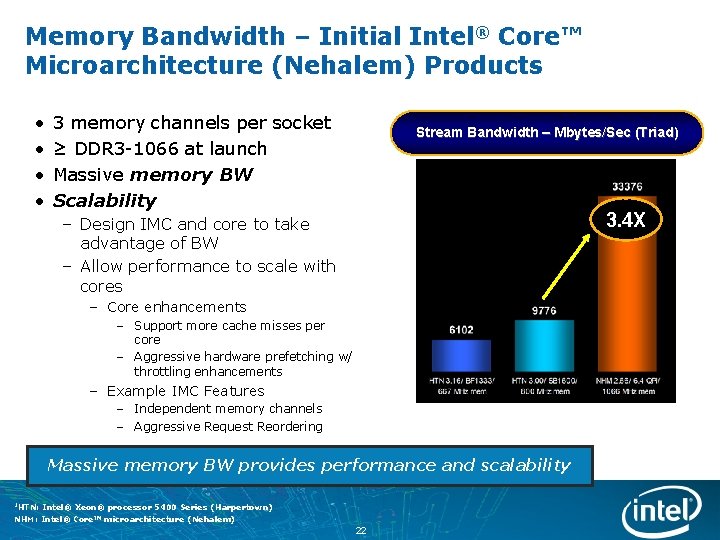

Memory Bandwidth – Initial Intel® Core™ Microarchitecture (Nehalem) Products

- 3 memory channels per socket
- ≥ DDR3-1066 at launch
- Massive memory BW
- Scalability
  - Design IMC and core to take advantage of BW
  - Allow performance to scale with cores
- Core enhancements
  - Support more cache misses per core
  - Aggressive hardware prefetching with throttling enhancements
- Example IMC features
  - Independent memory channels
  - Aggressive request reordering

Figure: Stream Bandwidth, MBytes/sec (Triad): NHM delivers 3.4X the bandwidth of HTN¹.

Massive memory BW provides performance and scalability

¹ HTN: Intel® Xeon® processor 5400 Series (Harpertown); NHM: Intel® Core™ microarchitecture (Nehalem)
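The peak and measured bandwidth numbers come from simple byte counting. This sketch assumes a 64-bit (8-byte) DDR3 channel and DDR3-1066's actual rate of about 1066.67 MT/s. It uses STREAM's convention of crediting Triad with 24 bytes per element (reads of `b` and `c`, a write of `a`). Function names are hypothetical, and the sketch does not reproduce the measured 3.4X result.

```python
def ddr3_peak_gb_s(channels, mega_transfers_per_s):
    """Theoretical peak: channels x 8 bytes per transfer x transfer rate."""
    return channels * 8 * mega_transfers_per_s / 1000.0


def triad_bytes(n_elements):
    """Bytes STREAM credits to one pass of a[i] = b[i] + q * c[i] on doubles."""
    return 3 * 8 * n_elements


def triad_gb_s(n_elements, seconds):
    """Reported Triad bandwidth for one timed pass."""
    return triad_bytes(n_elements) / seconds / 1e9
```

Three DDR3-1066 channels give a theoretical peak of about 25.6 GB/s per socket; a Triad pass over 10 million elements that takes 24 ms would be reported as 10 GB/s.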


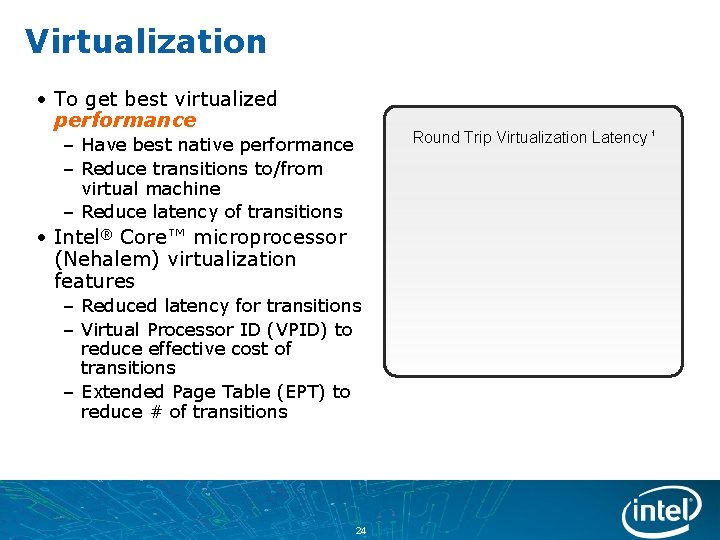

Virtualization

- To get the best virtualized performance
  - Have best native performance
  - Reduce transitions to/from virtual machine
  - Reduce latency of transitions
- Intel® Core™ microarchitecture (Nehalem) virtualization features
  - Reduced latency for transitions
  - Virtual Processor ID (VPID) to reduce effective cost of transitions
  - Extended Page Table (EPT) to reduce # of transitions

Figure: Round Trip Virtualization Latency chart.

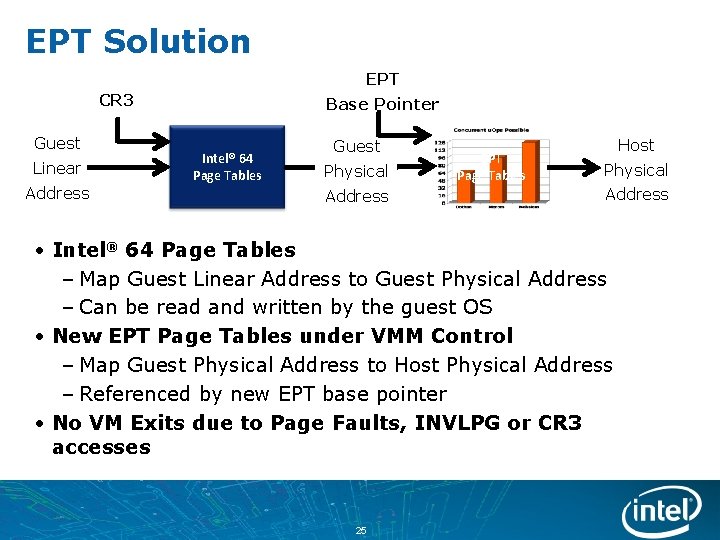

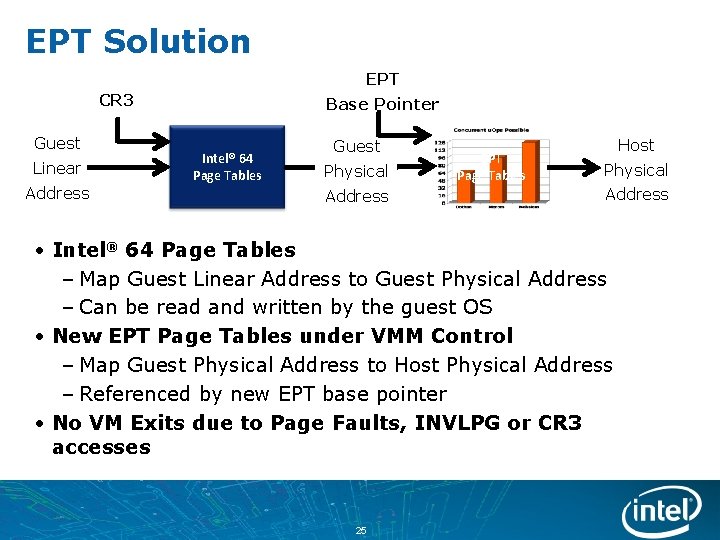

EPT Solution

Figure: a guest linear address is translated by the Intel® 64 page tables (rooted at CR3) into a guest physical address, which the EPT page tables (rooted at the EPT base pointer) translate into a host physical address.

- Intel® 64 page tables
  - Map guest linear address to guest physical address
  - Can be read and written by the guest OS
- New EPT page tables under VMM control
  - Map guest physical address to host physical address
  - Referenced by new EPT base pointer
- No VM exits due to page faults, INVLPG or CR3 accesses
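The two-stage translation, and why a TLB miss becomes more expensive under nested paging, can be written out directly. In this sketch both table hierarchies are flattened to one-level dictionaries with hypothetical names and 4 KB pages. The reference-count formula assumes 4-level guest and 4-level EPT walks and no paging-structure caches.

```python
PAGE_SHIFT = 12                      # 4 KB pages
PAGE_MASK = (1 << PAGE_SHIFT) - 1


def translate(gla, guest_pt, ept):
    """Guest linear -> guest physical (guest tables) -> host physical (EPT).

    Both tables are flattened to {page number: frame number}; a missing entry
    raises KeyError, standing in for a guest page fault or an EPT violation.
    """
    gpa = (guest_pt[gla >> PAGE_SHIFT] << PAGE_SHIFT) | (gla & PAGE_MASK)
    hpa = (ept[gpa >> PAGE_SHIFT] << PAGE_SHIFT) | (gpa & PAGE_MASK)
    return hpa


def nested_walk_refs(guest_levels=4, ept_levels=4):
    """Worst-case memory references for one TLB miss under nested paging.

    Each guest page-table entry sits at a guest-physical address, so reading
    it costs an EPT walk plus the read itself; the final guest-physical
    address needs one more EPT walk: g * (e + 1) + e.
    """
    g, e = guest_levels, ept_levels
    return g * (e + 1) + e
```

With both walks at 4 levels, a miss can cost 24 memory references instead of 4, one reason VPID-tagged TLBs that survive VM transitions matter alongside EPT.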

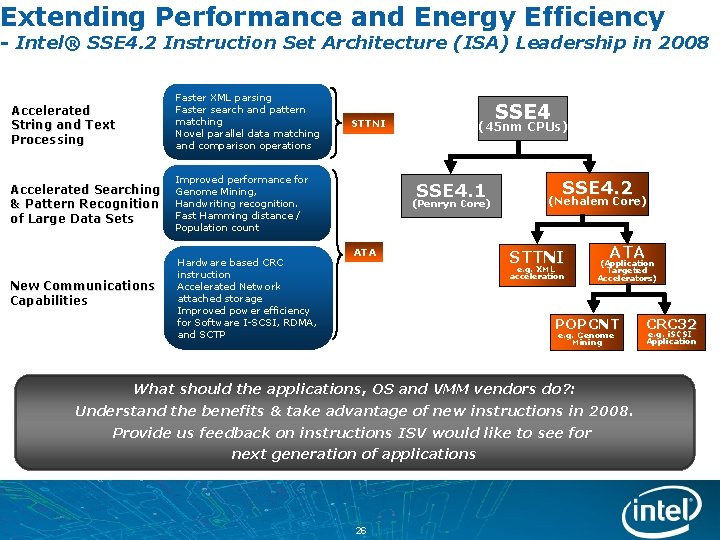

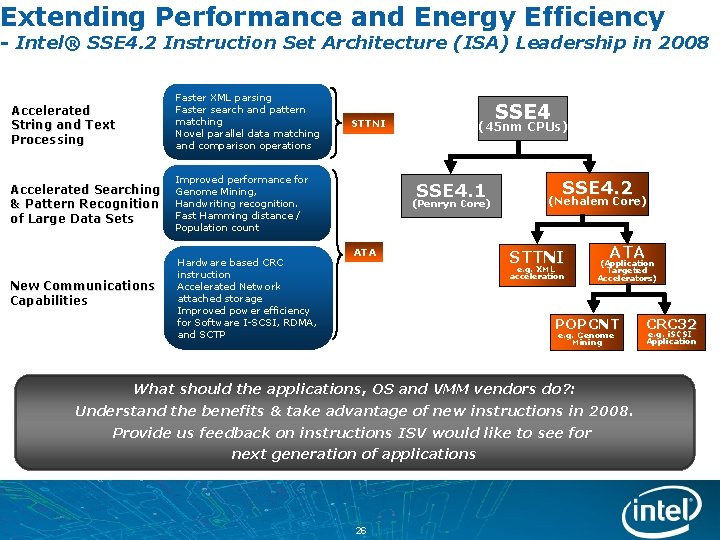

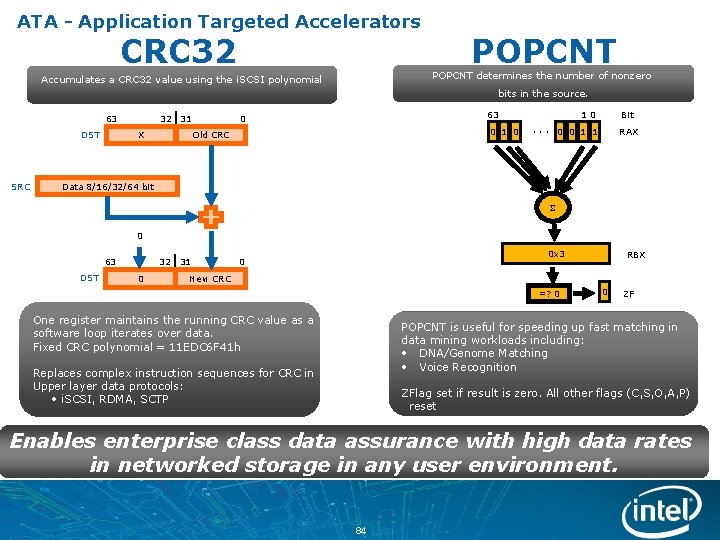

Extending Performance and Energy Efficiency – Intel® SSE4.2 Instruction Set Architecture (ISA) Leadership in 2008

- Accelerated String and Text Processing (STTNI, e.g. XML acceleration)
  - Faster XML parsing
  - Faster search and pattern matching
  - Novel parallel data matching and comparison operations
- Accelerated Searching & Pattern Recognition of Large Data Sets (POPCNT, e.g. genome mining)
  - Improved performance for genome mining, handwriting recognition
  - Fast Hamming distance / population count
- New Communications Capabilities (CRC32, e.g. iSCSI application)
  - Hardware-based CRC instruction
  - Accelerated network attached storage
  - Improved power efficiency for software iSCSI, RDMA, and SCTP

Figure: SSE4 (45 nm CPUs) comprises SSE4.1 (Penryn core) and SSE4.2 (Nehalem core); SSE4.2 adds STTNI and ATA (Application Targeted Accelerators: POPCNT and CRC32).

What should the applications, OS and VMM vendors do? Understand the benefits and take advantage of the new instructions in 2008. Provide us feedback on instructions ISVs would like to see for the next generation of applications.
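Two of the application targeted accelerators have simple software equivalents, which helps when checking results. The sketch below is a bitwise (slow) reference, not the instruction itself: SSE4.2's CRC32 implements the CRC-32C (Castagnoli) polynomial used by iSCSI, and a population count of an XOR gives the Hamming distance. Function names are hypothetical.

```python
def crc32c(data, crc=0):
    """Bitwise CRC-32C (Castagnoli), the polynomial behind SSE4.2's CRC32.

    The instruction only updates a running CRC; the initial and final
    inversion used by iSCSI is done in software, as here. Pass a previous
    result as `crc` to continue over more data.
    """
    crc ^= 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            # 0x82F63B78 is 0x1EDC6F41 bit-reversed (reflected CRC form).
            crc = (crc >> 1) ^ (0x82F63B78 if crc & 1 else 0)
    return crc ^ 0xFFFFFFFF


def hamming_distance(a, b):
    """Number of differing bits between two non-negative ints: popcount(a ^ b)."""
    return bin(a ^ b).count("1")
```

The standard CRC-32C check value applies: `crc32c(b"123456789")` is `0xE3069283`, and feeding the same bytes in two pieces gives the same result.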


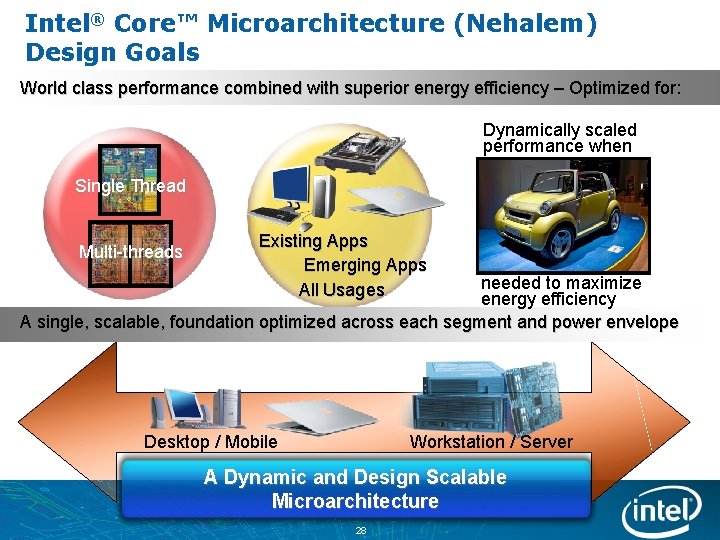

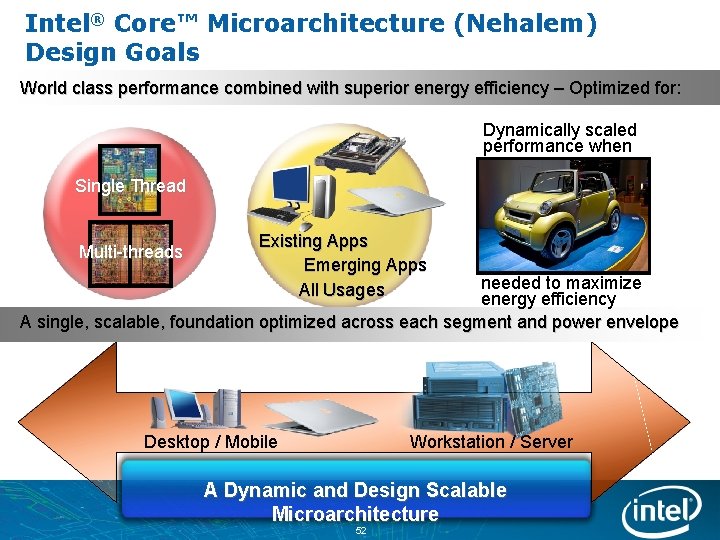

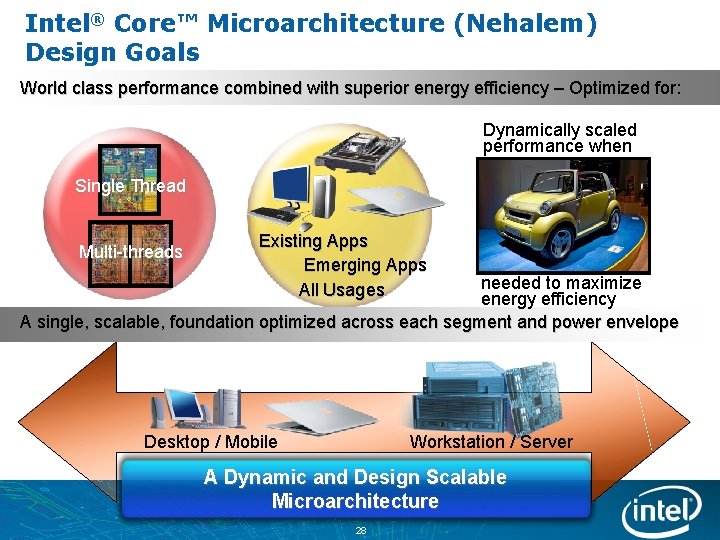

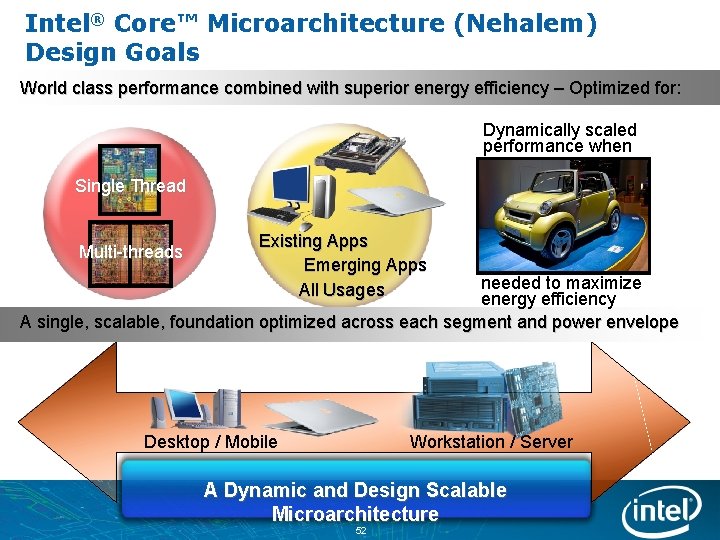

Intel® Core™ Microarchitecture (Nehalem) Design Goals

World class performance combined with superior energy efficiency, optimized for:
- Single thread and multi-threads
- Existing apps and emerging apps
- All usages

Dynamically scaled performance when needed to maximize energy efficiency.

A single, scalable foundation optimized across each segment and power envelope: Workstation / Server, Desktop / Mobile.

A Dynamic and Scalable Microarchitecture Design

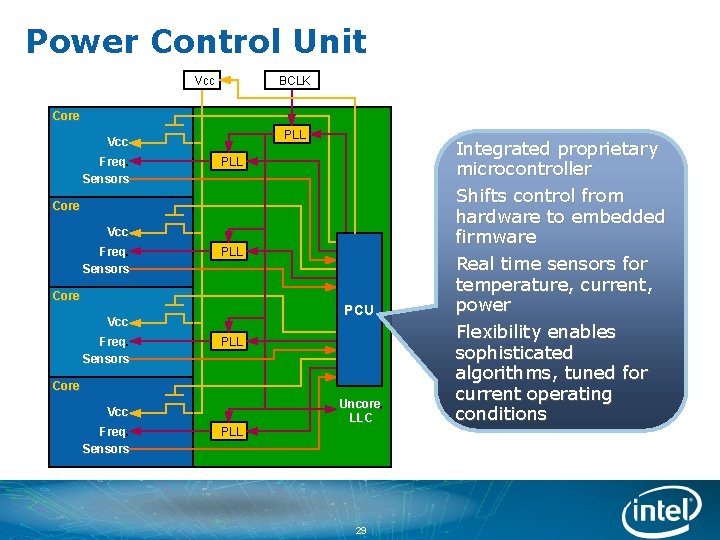

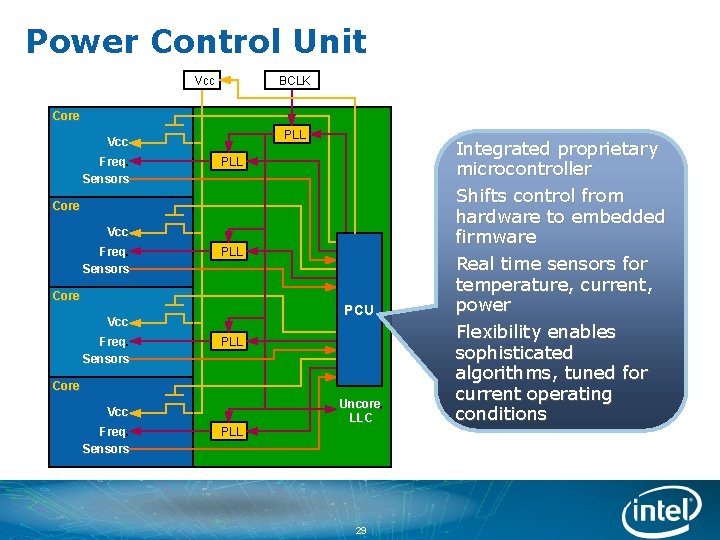

Power Control Unit

Figure: the PCU takes BCLK and connects to each core and to the uncore/LLC through per-domain Vcc, frequency (PLL), and sensor connections.

- Integrated proprietary microcontroller
- Shifts control from hardware to embedded firmware
- Real-time sensors for temperature, current, power
- Flexibility enables sophisticated algorithms, tuned for current operating conditions

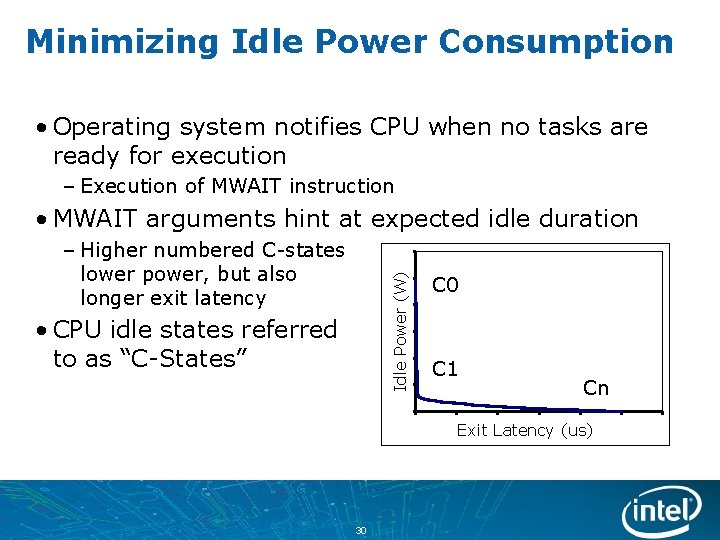

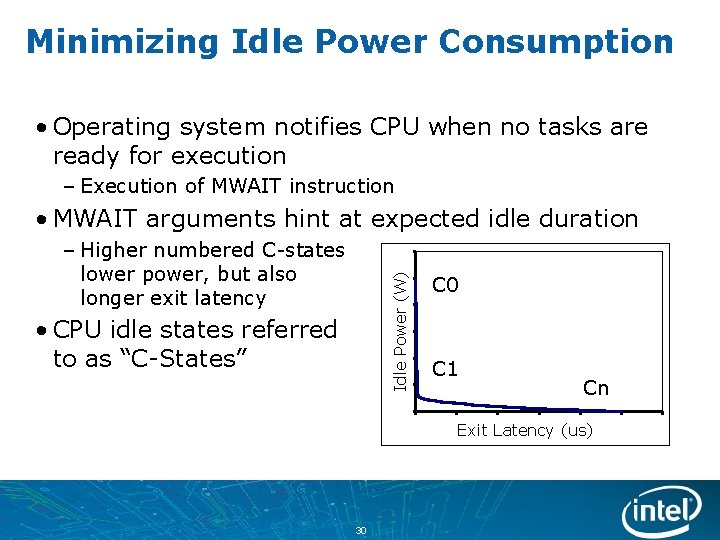

Minimizing Idle Power Consumption

- CPU idle states are referred to as “C-states”
- Operating system notifies CPU when no tasks are ready for execution
  - Execution of MWAIT instruction
- MWAIT arguments hint at expected idle duration
  - Higher-numbered C-states have lower power, but also longer exit latency

Figure: idle power (W) versus exit latency (µs) for C0, C1, …, Cn.
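An OS chooses which C-state to request by weighing each state's exit latency against how long it expects to stay idle. The sketch below shows that selection logic in the spirit of an idle governor. The state table values are hypothetical, not Nehalem specifications, and translating the chosen state into MWAIT hint bits is left out.

```python
# (name, exit latency in us, target residency in us): hypothetical values,
# ordered from shallowest to deepest.
C_STATES = [
    ("C1", 3, 6),
    ("C3", 20, 80),
    ("C6", 200, 800),
]


def choose_c_state(predicted_idle_us, latency_limit_us):
    """Pick the deepest state worth entering.

    A state qualifies if the predicted idle period is long enough to amortize
    its entry/exit cost (target residency) and its exit latency fits the
    latency constraint. Falls back to the shallowest state.
    """
    chosen = C_STATES[0][0]
    for name, exit_latency, residency in C_STATES:
        if exit_latency <= latency_limit_us and residency <= predicted_idle_us:
            chosen = name
    return chosen
```

A long predicted idle period selects C6 only if the workload can tolerate its exit latency; with a 50 µs limit the same idle period falls back to C3.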

C6 on Intel® Core™ Microarchitecture (Nehalem)

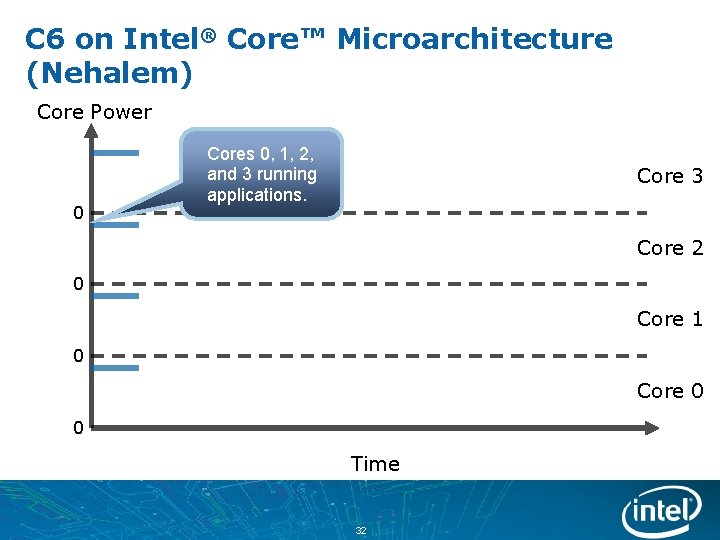

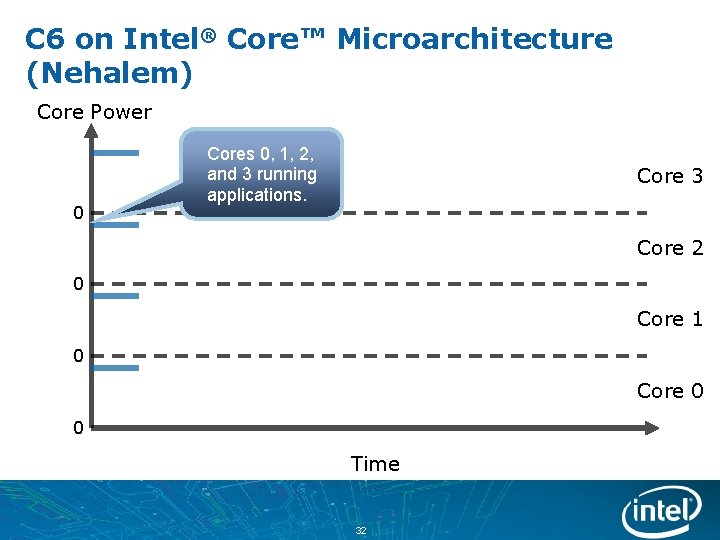

Cores 0, 1, 2, and 3 are running applications.

Figure: core power over time for Cores 0 to 3.

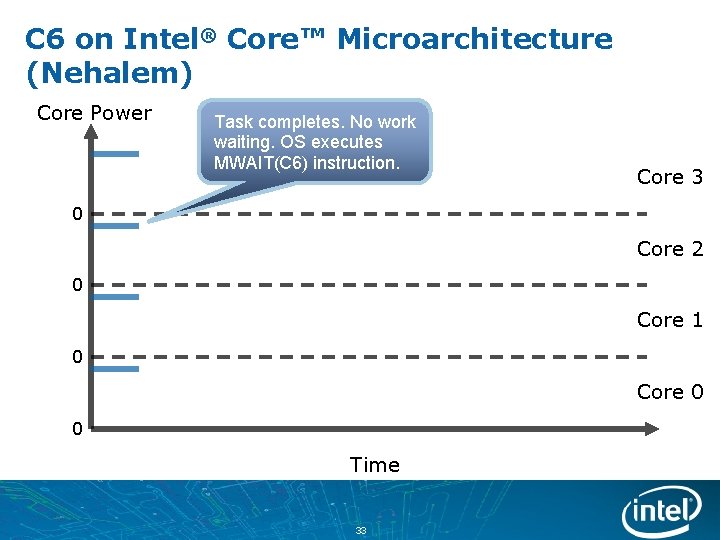

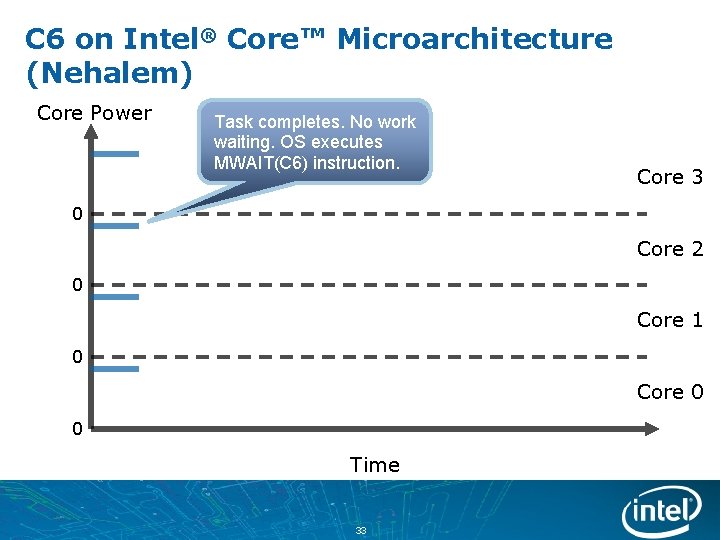

On Core 2, the task completes and no work is waiting, so the OS executes the MWAIT(C6) instruction.

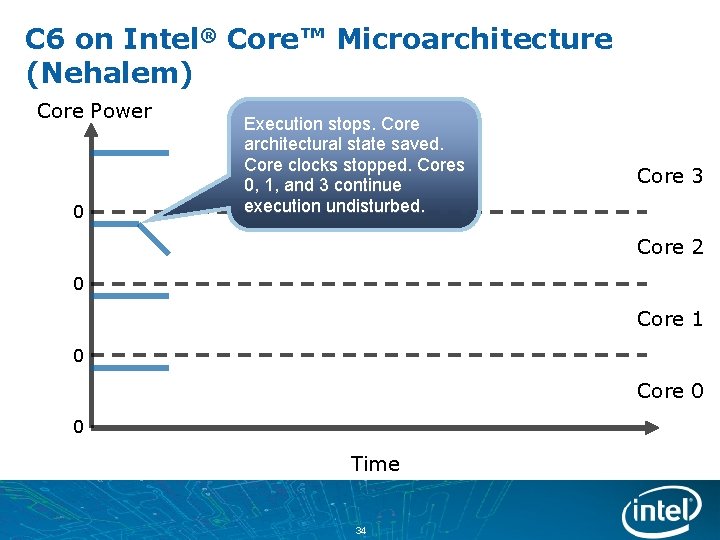

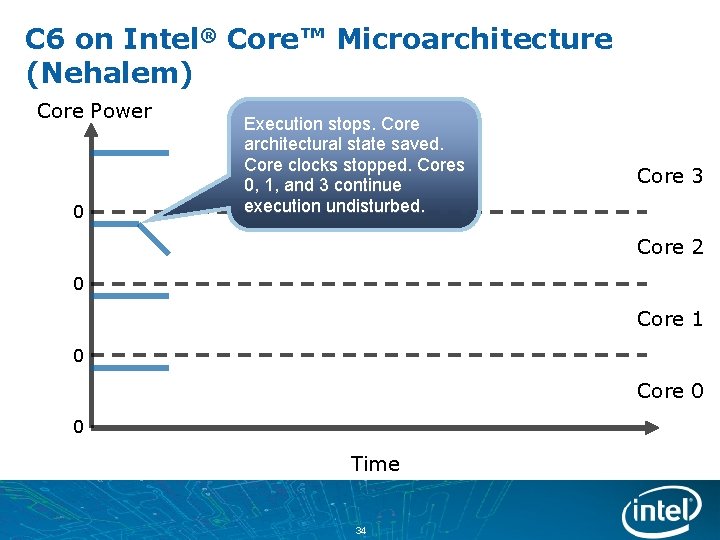

Core 2 execution stops, its architectural state is saved, and its clocks are stopped. Cores 0, 1, and 3 continue execution undisturbed.

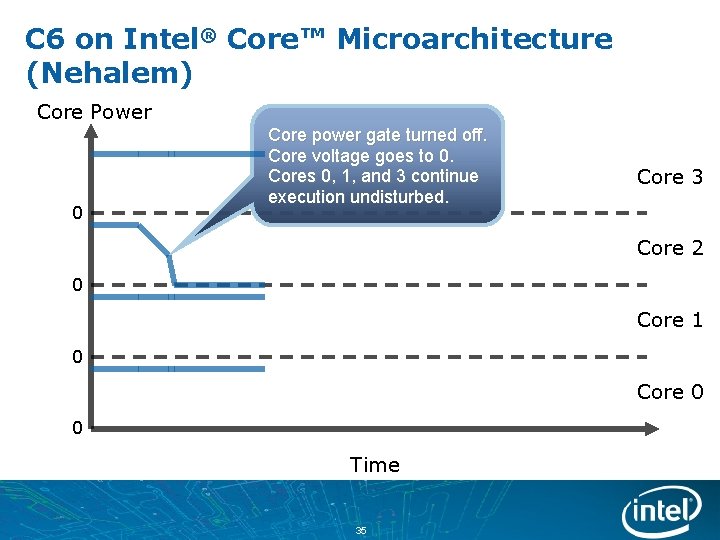

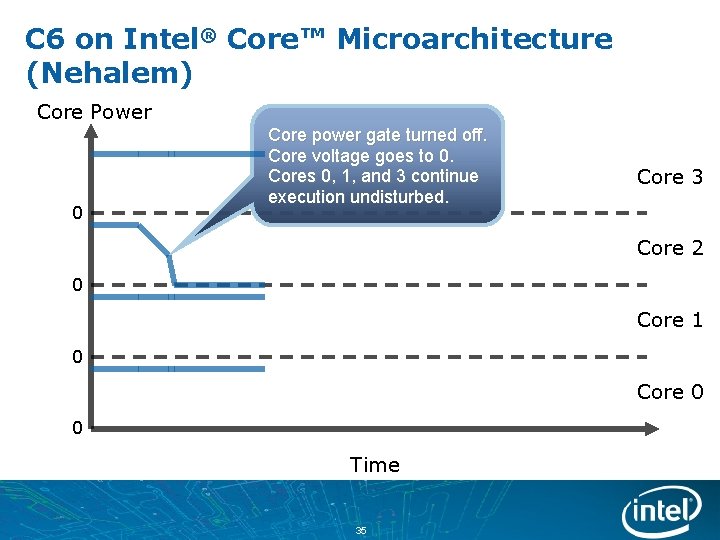

Core 2's power gate is turned off and its voltage goes to 0. Cores 0, 1, and 3 continue execution undisturbed.

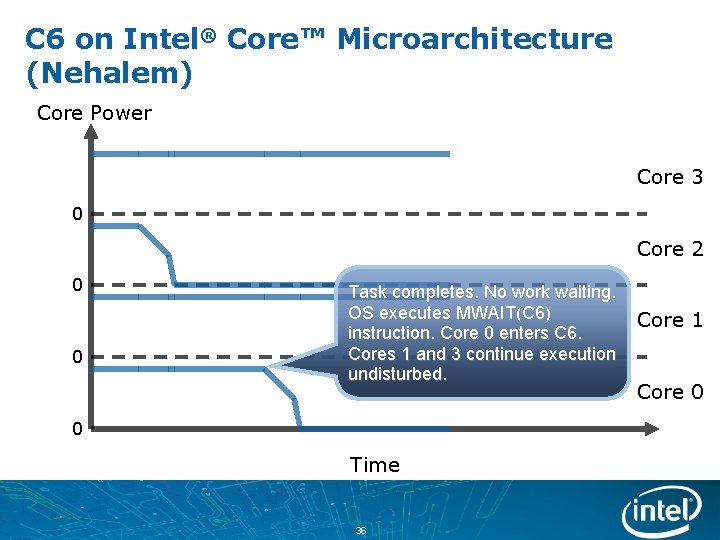

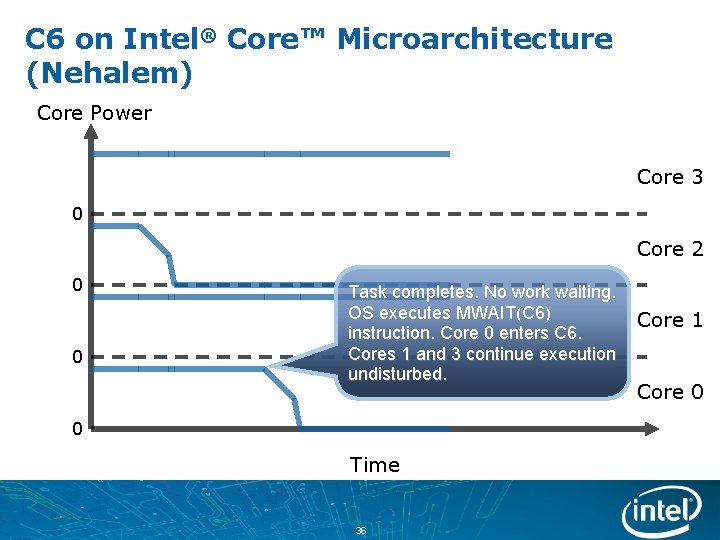

On Core 0, the task completes and no work is waiting; the OS executes MWAIT(C6) and Core 0 enters C6. Cores 1 and 3 continue execution undisturbed.

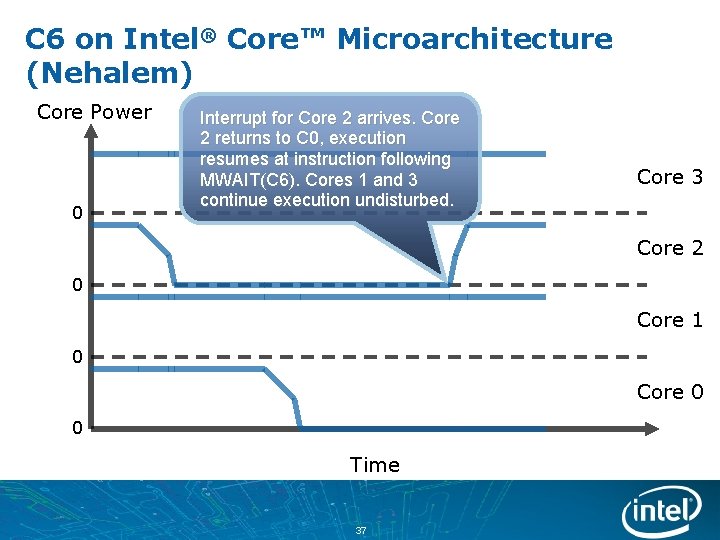

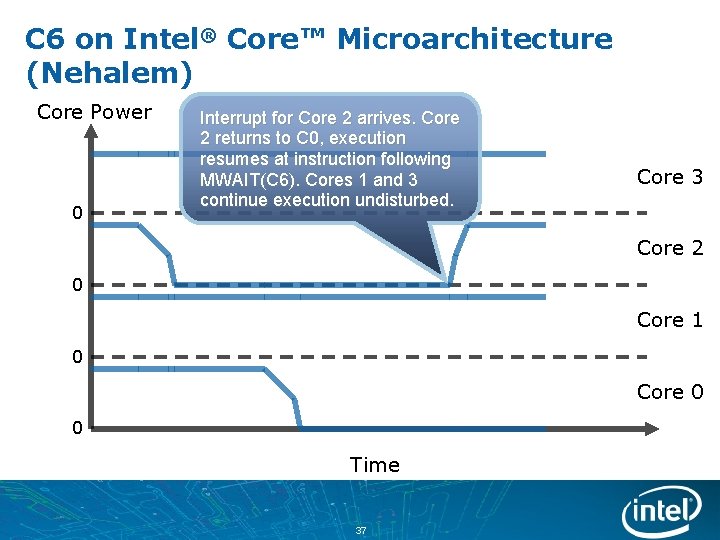

An interrupt for Core 2 arrives. Core 2 returns to C0, and execution resumes at the instruction following MWAIT(C6). Cores 1 and 3 continue execution undisturbed.

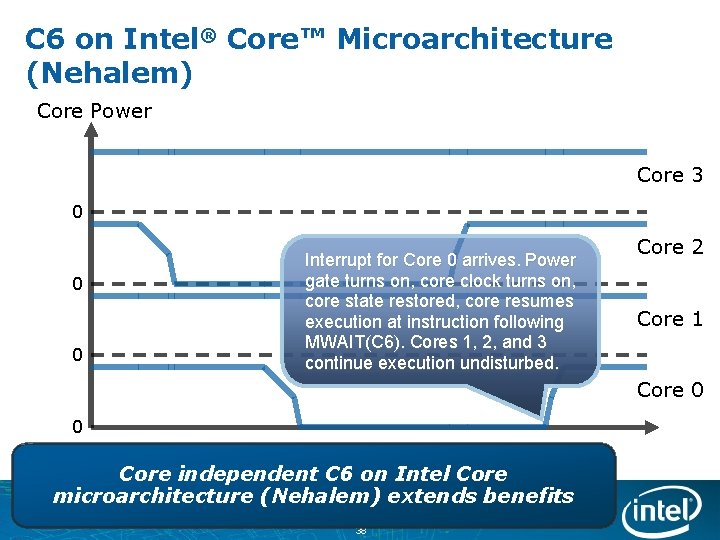

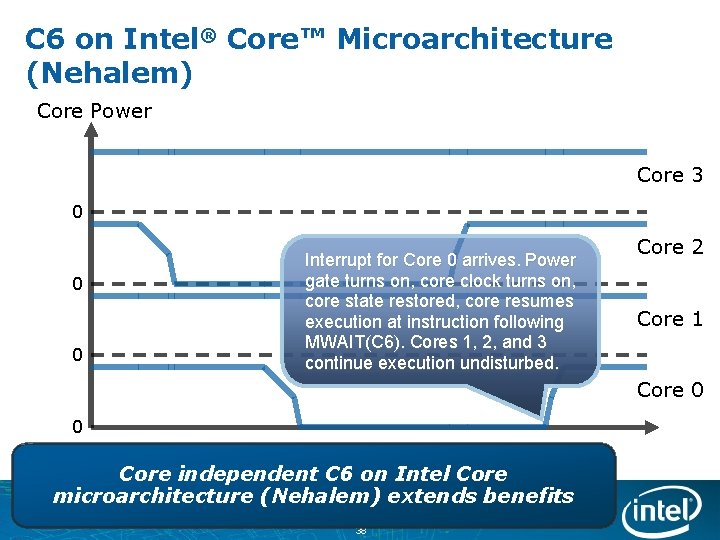

An interrupt for Core 0 arrives. The power gate turns on, the core clock turns on, the core state is restored, and the core resumes execution at the instruction following MWAIT(C6). Cores 1, 2, and 3 continue execution undisturbed.

Core-independent C6 on Intel Core microarchitecture (Nehalem) extends the benefits.
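The per-core C6 sequence above is essentially a small state machine. The sketch uses hypothetical names and a single instruction-pointer field as a stand-in for architectural state; it only tracks the order of the steps and the fact that other cores are unaffected.

```python
class Core:
    """Per-core C6 entry/exit sequence as a tiny state machine."""

    def __init__(self, core_id):
        self.core_id = core_id
        self.c_state = "C0"
        self.clock_on = True
        self.power_gated = False
        self.saved_state = None
        self.arch_state = {"rip": 0}   # hypothetical stand-in for architectural state

    def mwait_c6(self, next_rip):
        # Execution stops and architectural state is saved (resume point is
        # the instruction after MWAIT), clocks stop, then the power gate turns
        # off and core voltage goes to ~0, losing the live state.
        self.arch_state["rip"] = next_rip
        self.saved_state = dict(self.arch_state)
        self.clock_on = False
        self.power_gated = True
        self.arch_state = None
        self.c_state = "C6"

    def interrupt(self):
        # Power gate on, clock on, state restored, execution resumes.
        if self.c_state == "C6":
            self.power_gated = False
            self.clock_on = True
            self.arch_state = dict(self.saved_state)
            self.c_state = "C0"
        return self.arch_state["rip"]
```

Replaying the slides: Core 2 and then Core 0 enter C6 while the others keep running, and each wakes independently at its own saved resume point.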

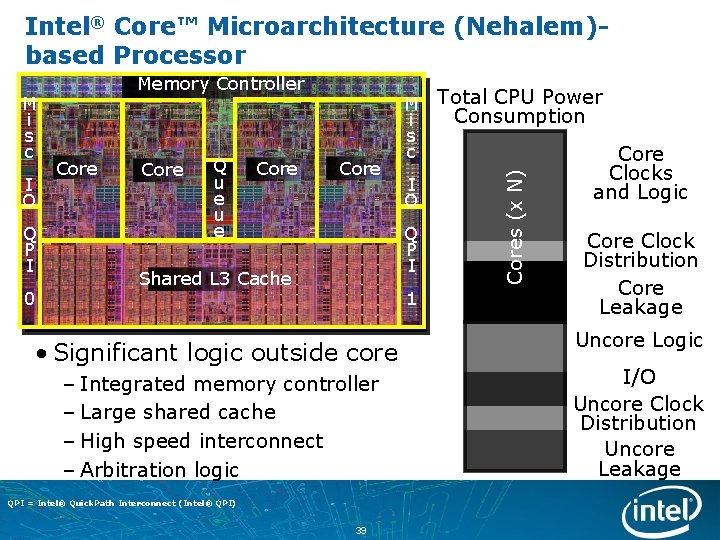

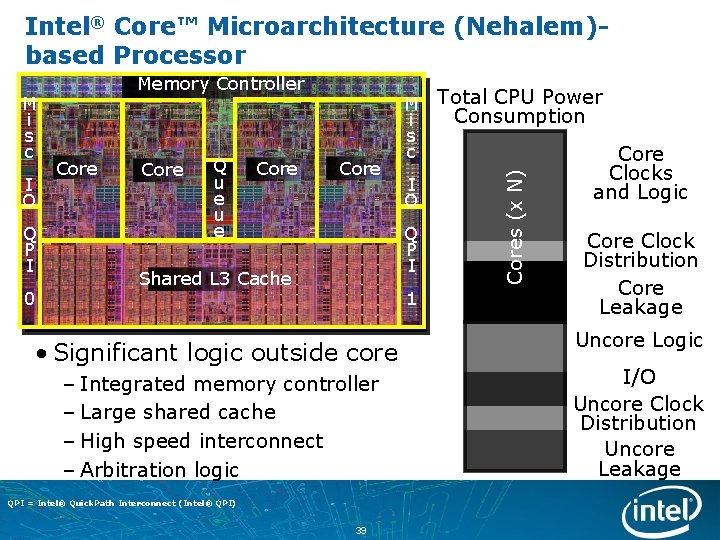

Intel® Core™ Microarchitecture (Nehalem)-based Processor

Figure: processor die with the cores, the queue, the shared L3 cache, the memory controller, miscellaneous I/O, and Intel® QPI links 0 and 1.

- Significant logic outside core
  - Integrated memory controller
  - Large shared cache
  - High speed interconnect
  - Arbitration logic

QPI = Intel® QuickPath Interconnect (Intel® QPI)

Figure: total CPU power consumption, split into cores (×N: core clocks and logic, core clock distribution, core leakage) and uncore (uncore logic, I/O, uncore clock distribution, uncore leakage).

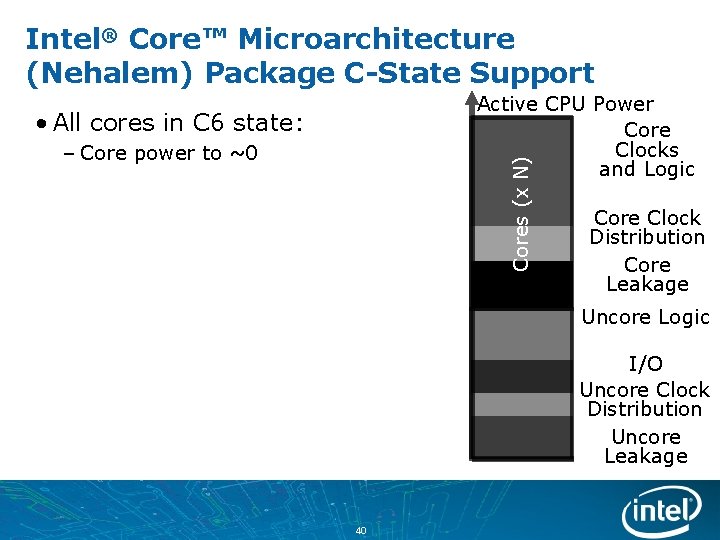

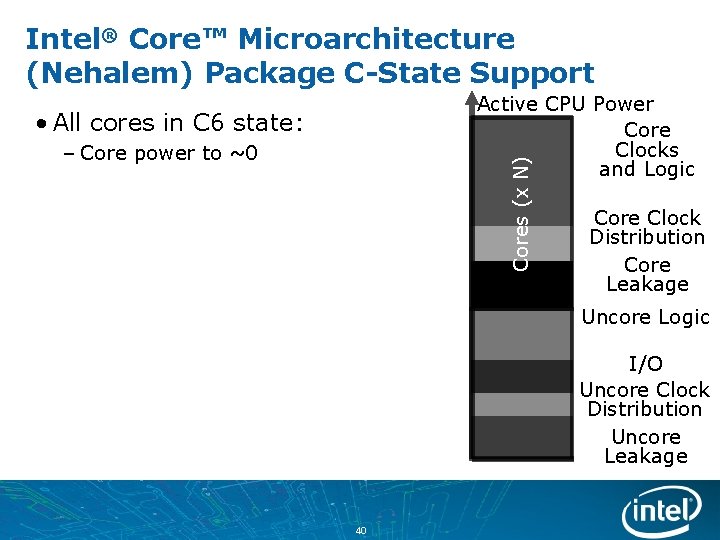

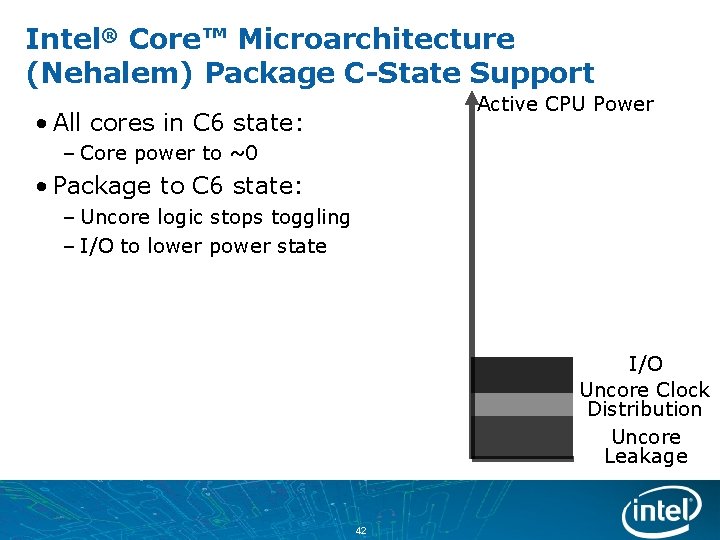

Intel® Core™ Microarchitecture (Nehalem) Package C-State Support

Figure: active CPU power, broken into core clocks and logic, core clock distribution, and core leakage (cores ×N), plus uncore logic, I/O, uncore clock distribution, and uncore leakage.

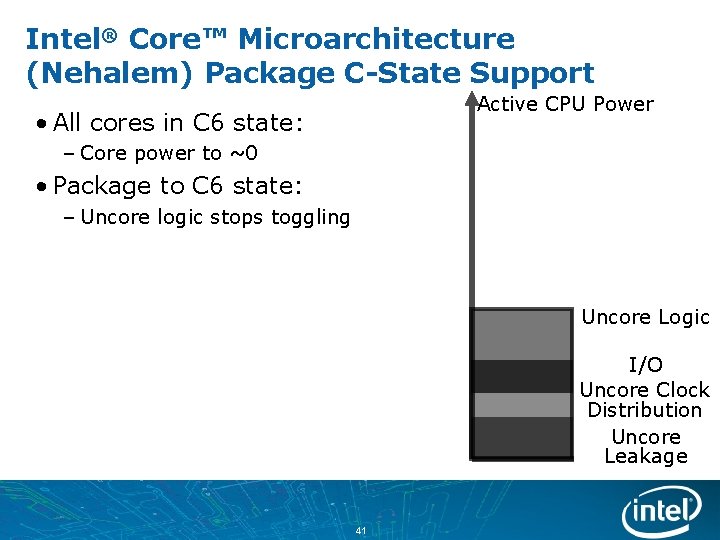

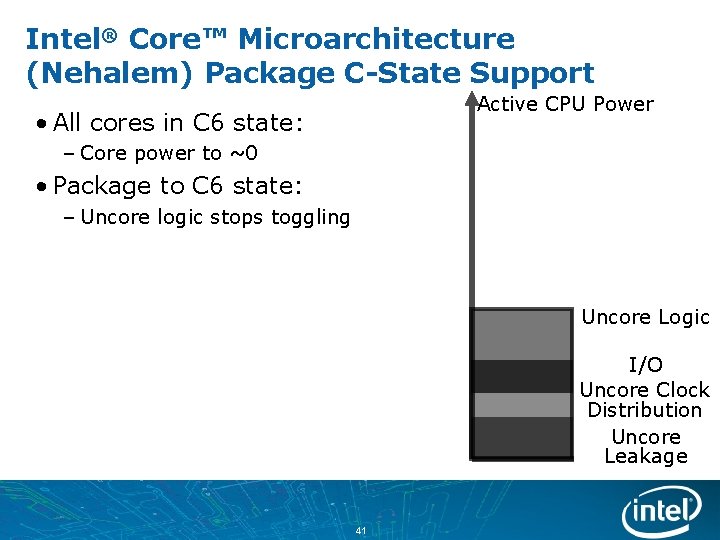


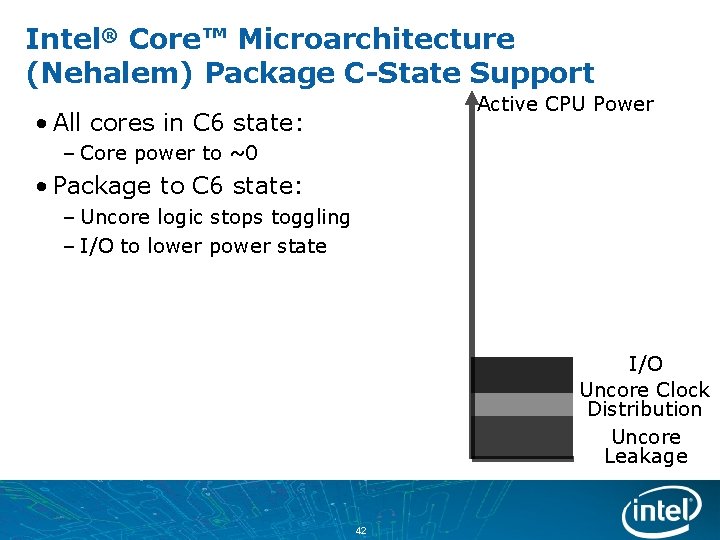


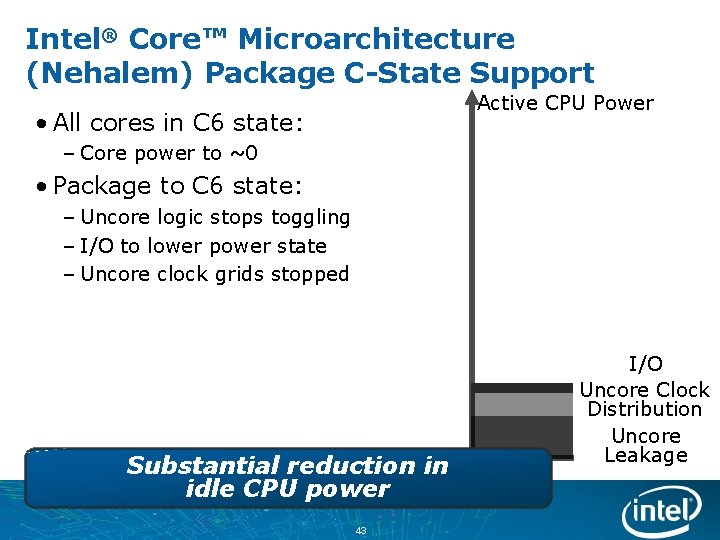

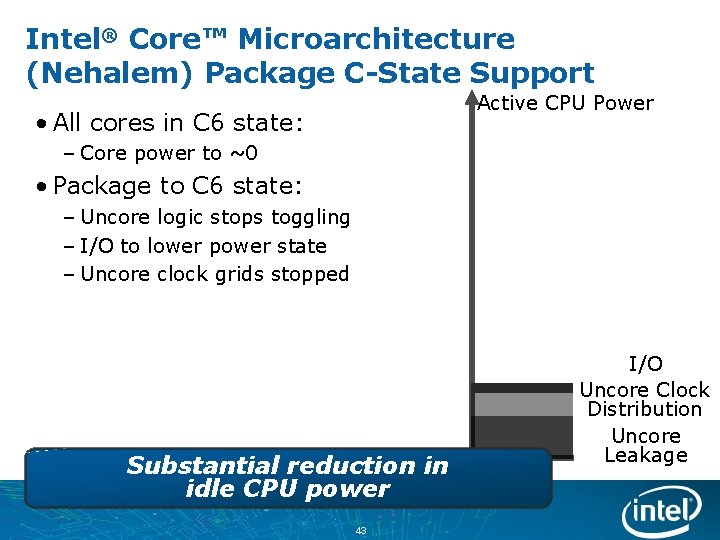

- All cores in C6 state:
  - Core power to ~0
- Package to C6 state:
  - Uncore logic stops toggling
  - I/O to lower power state
  - Uncore clock grids stopped

Substantial reduction in idle CPU power

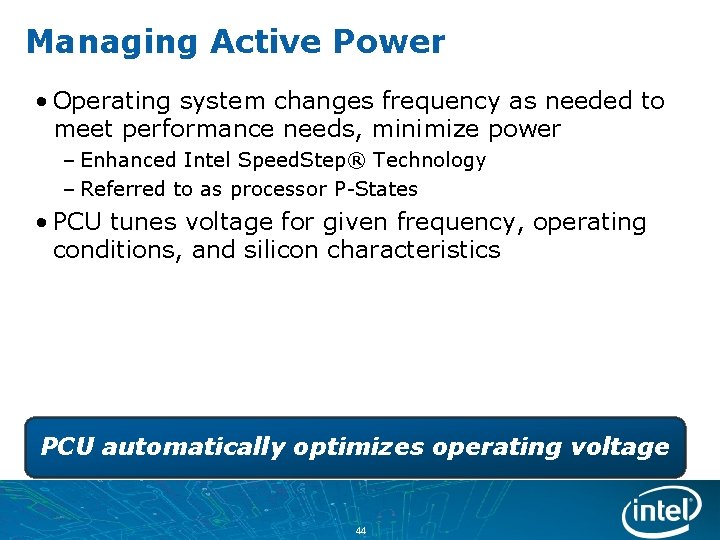

Managing Active Power

- Operating system changes frequency as needed to meet performance needs and minimize power
  - Enhanced Intel SpeedStep® Technology
  - Referred to as processor P-states
- PCU tunes voltage for given frequency, operating conditions, and silicon characteristics

PCU automatically optimizes operating voltage
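Voltage tuning matters because, in the usual CMOS approximation, dynamic power scales as activity × capacitance × V² × f. The helper below (hypothetical name) applies that relation to voltage and frequency ratios. It ignores leakage, and it is a textbook model rather than anything the PCU is documented to compute.

```python
def relative_dynamic_power(v_scale, f_scale=1.0):
    """Dynamic power relative to a baseline, from P = a * C * V^2 * f.

    Activity factor a and switched capacitance C are assumed unchanged,
    so only the voltage and frequency ratios matter.
    """
    return v_scale ** 2 * f_scale
```

Shaving 10% off the voltage at the same frequency cuts dynamic power to about 81% of baseline, which is why the PCU trims voltage to each part's silicon characteristics instead of using a worst-case value.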

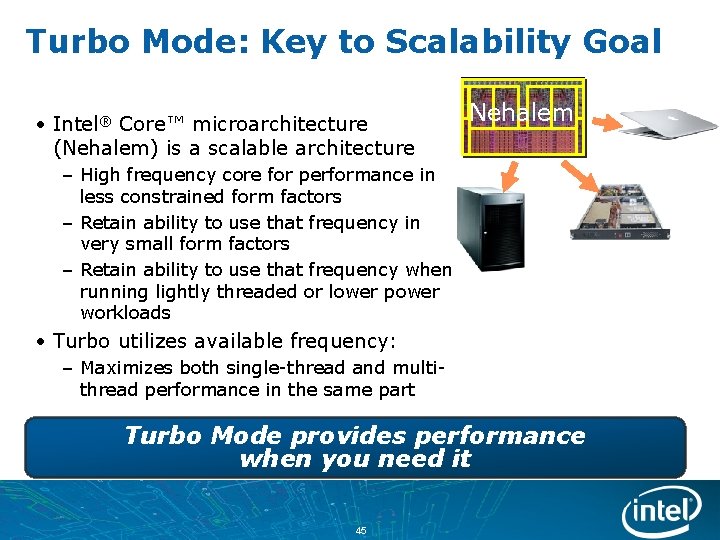

Turbo Mode: Key to Scalability

Goal:
- Intel® Core™ microarchitecture (Nehalem) is a scalable architecture
  - High frequency core for performance in less constrained form factors
  - Retain ability to use that frequency in very small form factors
  - Retain ability to use that frequency when running lightly threaded or lower power workloads
- Turbo utilizes available frequency:
  - Maximizes both single-thread and multithread performance in the same part

Turbo Mode provides performance when you need it

Turbo Mode Enabling

- Turbo Mode exposed as an additional Enhanced Intel SpeedStep® Technology operating point
  - Operating system treats it as any other P-state, requesting Turbo Mode when it needs more performance
  - Performance benefit comes from higher operating frequency; no need to enable or tune software
- Turbo Mode is transparent to the system
  - Frequency transitions handled completely in hardware
  - PCU keeps silicon within existing operating limits
  - Systems designed to same specs, with or without Turbo Mode

Performance benefits with existing applications and operating systems
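From the OS side, Turbo Mode is just the top P-state; the hardware picks the actual frequency. The sketch below models that split. The 133.33 MHz multiplier step matches Nehalem's base clock (BCLK), but the base ratio and the turbo-bin table are hypothetical, and the power/current/thermal check is reduced to a single boolean.

```python
BCLK_MHZ = 133.33   # Nehalem base clock; core frequency = ratio x BCLK

# Hypothetical SKU: extra ratio bins granted by number of active cores.
TURBO_BINS = {1: 2, 2: 1, 3: 1, 4: 1}


def core_frequency_mhz(turbo_requested, base_ratio, active_cores,
                       turbo_bins=TURBO_BINS, within_limits=True):
    """Frequency the hardware would pick for the OS's P-state request.

    The OS only asks for the turbo P-state; hardware decides how many bins
    to add from the active-core count, and only while the part is within
    its operating limits.
    """
    if not turbo_requested or not within_limits:
        return base_ratio * BCLK_MHZ
    return (base_ratio + turbo_bins.get(active_cores, 0)) * BCLK_MHZ
```

With a base ratio of 20 (about 2.67 GHz), a single active core would run at ratio 22 and four active cores at ratio 21, with no change to the OS or application.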

Summary

- Intel® Core™ microarchitecture (Nehalem): the 45 nm Tock
- Designed for
  - Power efficiency
  - Scalability
  - Performance
- Key innovations:
  - Enhanced processor core
  - Brand new platform architecture
  - Sophisticated power management

High Performance When You Need It, Lower Power When You Don’t

Q&A

Legal Disclaimer

- INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL’S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL® PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. INTEL PRODUCTS ARE NOT INTENDED FOR USE IN MEDICAL, LIFE SAVING, OR LIFE SUSTAINING APPLICATIONS.
- Intel may make changes to specifications and product descriptions at any time, without notice.
- All products, dates, and figures specified are preliminary based on current expectations, and are subject to change without notice.
- Intel processors, chipsets, and desktop boards may contain design defects or errors known as errata, which may cause the product to deviate from published specifications. Current characterized errata are available on request.
- Merom, Penryn, Harpertown, Nehalem, Dothan, Westmere, Sandy Bridge, and other code names featured are used internally within Intel to identify products that are in development and not yet publicly announced for release. Customers, licensees and other third parties are not authorized by Intel to use code names in advertising, promotion or marketing of any product or services and any such use of Intel's internal code names is at the sole risk of the user.
- Performance tests and ratings are measured using specific computer systems and/or components and reflect the approximate performance of Intel products as measured by those tests. Any difference in system hardware or software design or configuration may affect actual performance.
- Intel, Intel Inside, Intel Core, Pentium, Intel SpeedStep Technology, and the Intel logo are trademarks of Intel Corporation in the United States and other countries.
- *Other names and brands may be claimed as the property of others.
- Copyright © 2008 Intel Corporation.

Risk Factors

This presentation contains forward-looking statements that involve a number of risks and uncertainties. These statements do not reflect the potential impact of any mergers, acquisitions, divestitures, investments or other similar transactions that may be completed in the future. The information presented is accurate only as of today’s date and will not be updated. In addition to any factors discussed in the presentation, the important factors that could cause actual results to differ materially include the following: Demand could be different from Intel's expectations due to factors including changes in business and economic conditions, including conditions in the credit market that could affect consumer confidence; customer acceptance of Intel’s and competitors’ products; changes in customer order patterns, including order cancellations; and changes in the level of inventory at customers. Intel’s results could be affected by the timing of closing of acquisitions and divestitures. Intel operates in intensely competitive industries that are characterized by a high percentage of costs that are fixed or difficult to reduce in the short term and product demand that is highly variable and difficult to forecast. Revenue and the gross margin percentage are affected by the timing of new Intel product introductions and the demand for and market acceptance of Intel's products; actions taken by Intel's competitors, including product offerings and introductions, marketing programs and pricing pressures and Intel’s response to such actions; Intel’s ability to respond quickly to technological developments and to incorporate new features into its products; and the availability of sufficient supply of components from suppliers to meet demand.
The gross margin percentage could vary significantly from expectations based on changes in revenue levels; product mix and pricing; capacity utilization; variations in inventory valuation, including variations related to the timing of qualifying products for sale; excess or obsolete inventory; manufacturing yields; changes in unit costs; impairments of long-lived assets, including manufacturing, assembly/test and intangible assets; and the timing and execution of the manufacturing ramp and associated costs, including start-up costs. Expenses, particularly certain marketing and compensation expenses, vary depending on the level of demand for Intel's products, the level of revenue and profits, and impairments of long-lived assets. Intel is in the midst of a structure and efficiency program that is resulting in several actions that could have an impact on expected expense levels and gross margin. Intel's results could be impacted by adverse economic, social, political and physical/infrastructure conditions in the countries in which Intel, its customers or its suppliers operate, including military conflict and other security risks, natural disasters, infrastructure disruptions, health concerns and fluctuations in currency exchange rates. Intel's results could be affected by adverse effects associated with product defects and errata (deviations from published specifications), and by litigation or regulatory matters involving intellectual property, stockholder, consumer, antitrust and other issues, such as the litigation and regulatory matters described in Intel's SEC reports. A detailed discussion of these and other factors that could affect Intel’s results is included in Intel’s SEC filings, including the report on Form 10-Q for the quarter ended June 28, 2008.

Backup Slides


Tick-Tock Development Model

| Merom¹ | Penryn | Nehalem | Westmere | Sandy Bridge |
|---|---|---|---|---|
| NEW Microarchitecture | NEW Process | NEW Microarchitecture | NEW Process | NEW Microarchitecture |
| 65 nm | 45 nm | 45 nm | 32 nm | 32 nm |
| TOCK | TICK | TOCK | TICK | TOCK |

Westmere and Sandy Bridge are shown as forecast.

¹ Intel® Core™ microarchitecture (formerly Merom); 45 nm next generation Intel® Core™ microarchitecture (Penryn); Intel® Core™ Microarchitecture (Nehalem); Intel® Microarchitecture (Westmere); Intel® Microarchitecture (Sandy Bridge).

All dates, product descriptions, availability and plans are forecasts and subject to change without notice.

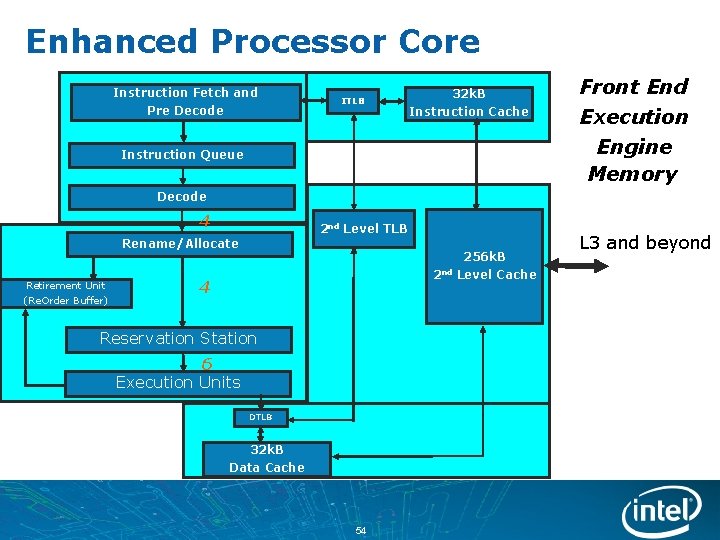

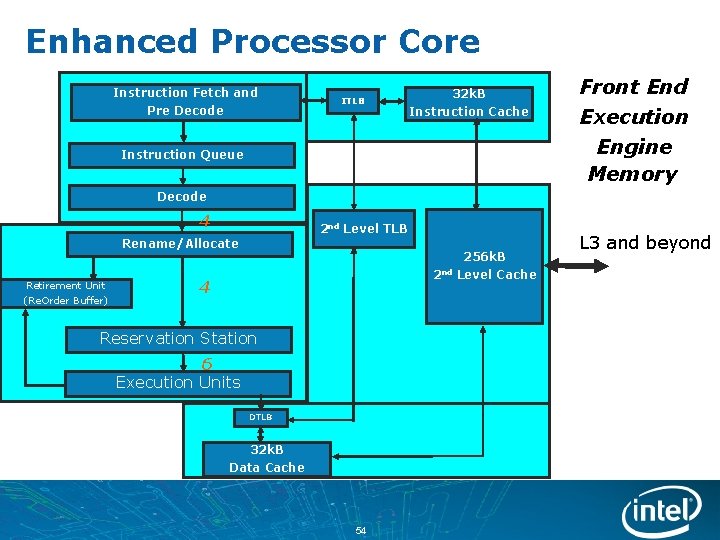

Enhanced Processor Core

Figure: core block diagram.
- Front end: ITLB, 32 kB instruction cache, instruction fetch and pre-decode, instruction queue, 4-wide decode
- Execution engine: 4-wide rename/allocate, reservation station, 6 execution units, retirement unit (reorder buffer)
- Memory: DTLB, 32 kB data cache, 2nd level TLB, 256 kB 2nd level cache, L3 and beyond

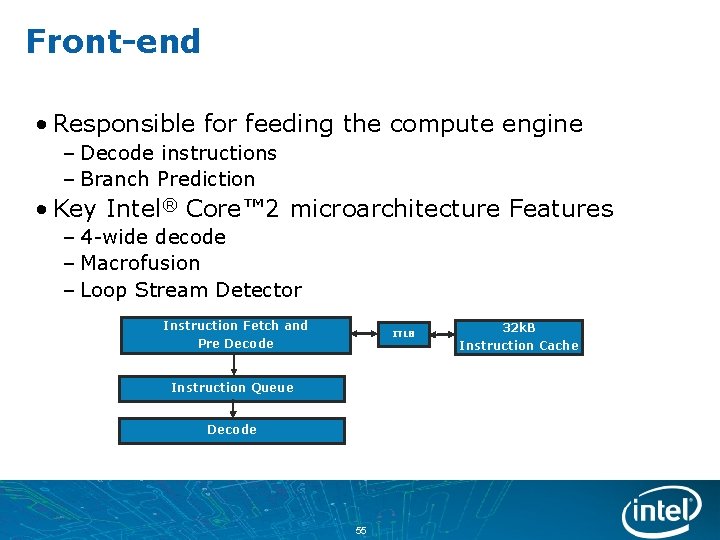

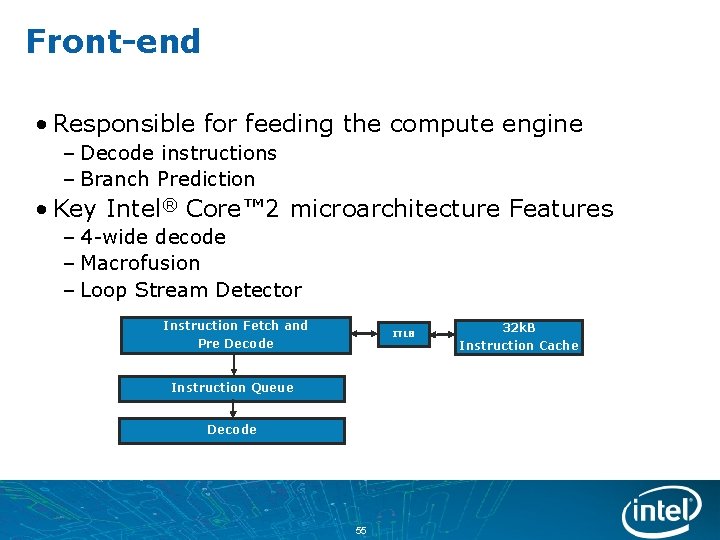

Front-end

- Responsible for feeding the compute engine
  - Decode instructions
  - Branch prediction
- Key Intel® Core™ 2 microarchitecture features
  - 4-wide decode
  - Macrofusion
  - Loop Stream Detector

Figure: front-end blocks: instruction fetch and pre-decode, ITLB, 32 kB instruction cache, instruction queue, decode.

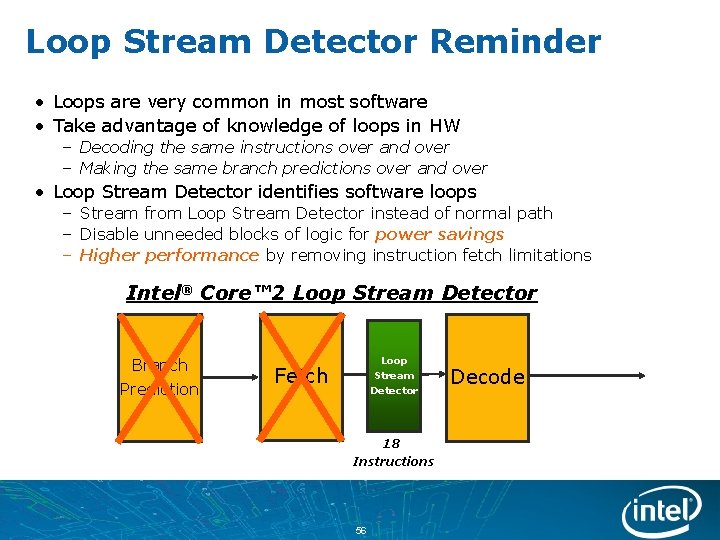

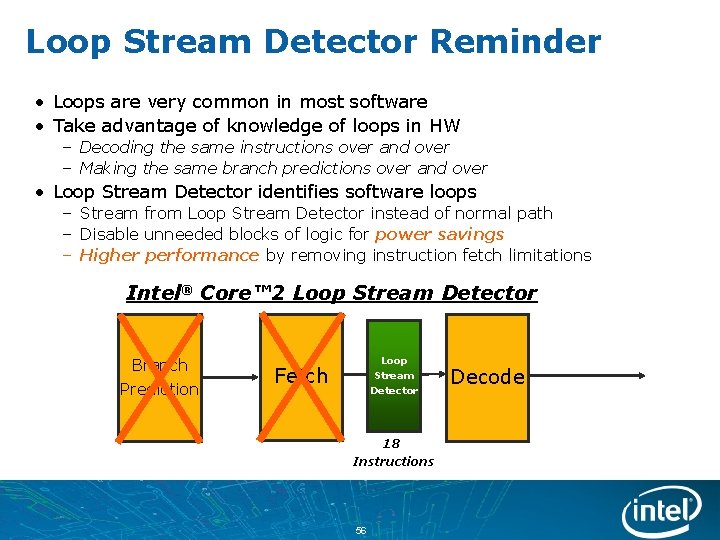

Loop Stream Detector Reminder

- Loops are very common in most software
- Take advantage of knowledge of loops in HW
  - Decoding the same instructions over and over
  - Making the same branch predictions over and over
- Loop Stream Detector identifies software loops
  - Stream from Loop Stream Detector instead of normal path
  - Disable unneeded blocks of logic for power savings
  - Higher performance by removing instruction fetch limitations

Figure: the Intel® Core™ 2 Loop Stream Detector sits between fetch and decode (Branch Prediction → Fetch → Loop Stream Detector, holding 18 instructions → Decode).

Branch Prediction Reminder

- Goal: keep powerful compute engine fed
- Options:
  - Stall pipeline while determining branch direction/target
  - Predict branch direction/target and correct if wrong
- Minimize amount of time wasted correcting from incorrect branch predictions
  - Performance:
    - Through higher branch prediction accuracy
    - Through faster correction when prediction is wrong
  - Power efficiency: minimize number of speculative/incorrect micro-ops that are executed

Continued focus on branch prediction improvements
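The predict-then-correct approach is easiest to see with a classic bimodal predictor made of 2-bit saturating counters. Intel does not disclose Nehalem's predictor design, so this is a textbook sketch with hypothetical names, not the hardware's algorithm.

```python
class BimodalPredictor:
    """Table of 2-bit saturating counters indexed by branch address."""

    def __init__(self, entries=4096):
        self.entries = entries
        self.counters = [2] * entries   # 0-1 predict not taken, 2-3 predict taken

    def predict(self, pc):
        return self.counters[pc % self.entries] >= 2

    def update(self, pc, taken):
        # Move one step toward the actual outcome, saturating at 0 and 3, so
        # a single odd outcome does not flip a strongly biased branch.
        i = pc % self.entries
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def count_mispredicts(outcomes, pc=0x400000, predictor=None):
    """Predict each outcome of one branch, then train on the real result."""
    if predictor is None:
        predictor = BimodalPredictor()
    misses = 0
    for taken in outcomes:
        if predictor.predict(pc) != taken:
            misses += 1   # in hardware: flush the wrong path and redirect fetch
        predictor.update(pc, taken)
    return misses
```

A loop branch taken nine times and then falling through mispredicts once per loop exit, so ten runs of such a loop cost ten mispredicts, while a strictly alternating branch defeats this predictor half the time.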

L2 Branch Predictor

- Problem: software with a large code footprint does not fit well in existing branch predictors
  - Example: database applications
- Solution: use a multi-level branch prediction scheme
- Benefits:
  - Higher performance through improved branch prediction accuracy
  - Greater power efficiency through less mis-speculation
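A multi-level scheme helps large code footprints because branches evicted from a small, fast table can still be found in a larger one. The sketch below is hypothetical in every detail (LRU tables, table sizes, a static not-taken guess for unknown branches); it only demonstrates the capacity effect described above.

```python
from collections import OrderedDict


class LRUTable:
    """Fixed-capacity table that evicts the least recently used entry."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.data = OrderedDict()

    def get(self, key):
        if key not in self.data:
            return None
        self.data.move_to_end(key)
        return self.data[key]

    def put(self, key, value):
        self.data[key] = value
        self.data.move_to_end(key)
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)


class TwoLevelPredictor:
    """Small first-level table of 2-bit counters backed by an optional larger one."""

    def __init__(self, l1_entries=16, l2_entries=4096):
        self.l1 = LRUTable(l1_entries)
        self.l2 = LRUTable(l2_entries) if l2_entries else None

    def _lookup(self, pc):
        counter = self.l1.get(pc)
        if counter is None and self.l2 is not None:
            counter = self.l2.get(pc)
        return counter

    def predict(self, pc):
        counter = self._lookup(pc)
        return counter is not None and counter >= 2   # unknown branch: guess not taken

    def update(self, pc, taken):
        counter = self._lookup(pc)
        if counter is None:
            counter = 2 if taken else 1
        else:
            counter = min(3, counter + 1) if taken else max(0, counter - 1)
        self.l1.put(pc, counter)
        if self.l2 is not None:
            self.l2.put(pc, counter)


def mispredicts_per_pass(predictor, branch_pcs, passes):
    """Run always-taken branches at `branch_pcs` repeatedly; count misses per pass."""
    result = []
    for _ in range(passes):
        misses = 0
        for pc in branch_pcs:
            if not predictor.predict(pc):
                misses += 1
            predictor.update(pc, True)
        result.append(misses)
    return result
```

With 100 distinct branches cycling through a 16-entry first level, the first-level table alone keeps forgetting every branch, whereas the backing table makes the second pass mispredict-free.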

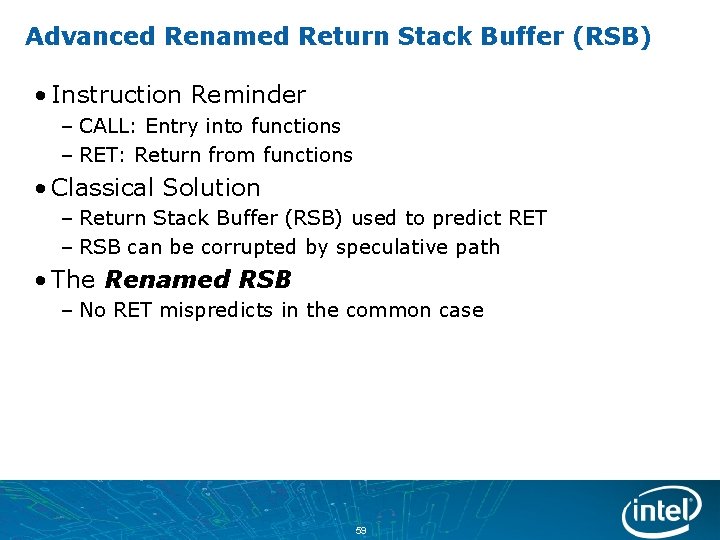

Advanced Renamed Return Stack Buffer (RSB)

- Instruction reminder
  - CALL: entry into functions
  - RET: return from functions
- Classical solution
  - Return Stack Buffer (RSB) used to predict RET
  - RSB can be corrupted by speculative path
- The Renamed RSB
  - No RET mispredicts in the common case
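One textbook way to keep wrong-path CALLs and RETs from corrupting the stack is to checkpoint the RSB at each predicted branch and restore it on a mispredict. Intel does not say how the renamed RSB is built; the checkpointing below, which copies the whole stack, is a deliberately naive stand-in, and the class and function names are hypothetical.

```python
class ReturnStackBuffer:
    """Circular return stack buffer with optional checkpoint repair."""

    def __init__(self, depth=16, repair=True):
        self.entries = [None] * depth
        self.top = 0              # pushes minus pops; wraps via modulo
        self.repair = repair

    def call(self, return_addr):
        self.entries[self.top % len(self.entries)] = return_addr
        self.top += 1

    def predict_ret(self):
        self.top -= 1
        return self.entries[self.top % len(self.entries)]

    def checkpoint(self):
        # Taken when a branch is predicted, so its wrong path can be undone.
        return self.top, list(self.entries)

    def recover(self, snapshot):
        # Called when that branch turns out to be mispredicted.
        if self.repair:
            self.top, saved = snapshot
            self.entries = list(saved)


def predict_real_return(repair):
    rsb = ReturnStackBuffer(repair=repair)
    rsb.call(0x100)              # real CALL: its RET should go to 0x100
    snap = rsb.checkpoint()      # a conditional branch is predicted here...
    rsb.predict_ret()            # ...and the wrong path executes a RET
    rsb.call(0x999)              # and a CALL that overwrites the 0x100 entry
    rsb.recover(snap)            # misprediction detected, wrong path squashed
    return rsb.predict_ret()     # prediction for the real RET
```

Without repair the real RET is predicted to go to the wrong-path address `0x999`; with the checkpoint restored it correctly predicts `0x100`.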

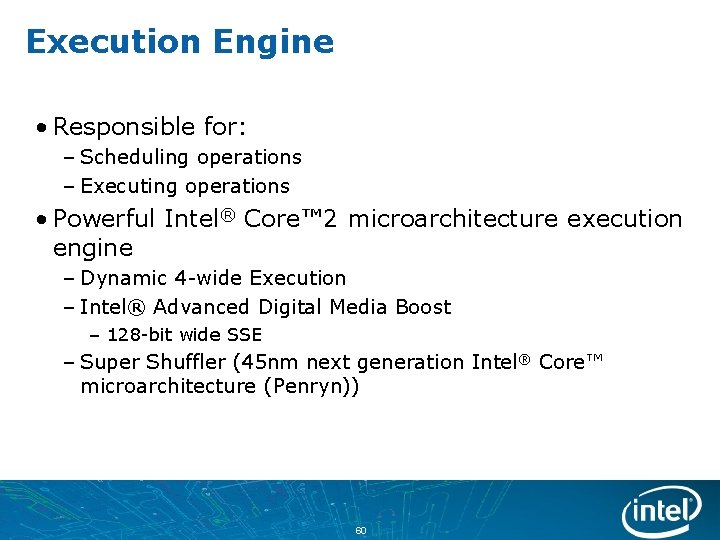

Execution Engine

- Responsible for:
  - Scheduling operations
  - Executing operations
- Powerful Intel® Core™ 2 microarchitecture execution engine
  - Dynamic 4-wide execution
  - Intel® Advanced Digital Media Boost
    - 128-bit wide SSE
  - Super Shuffler (45 nm next generation Intel® Core™ microarchitecture (Penryn))

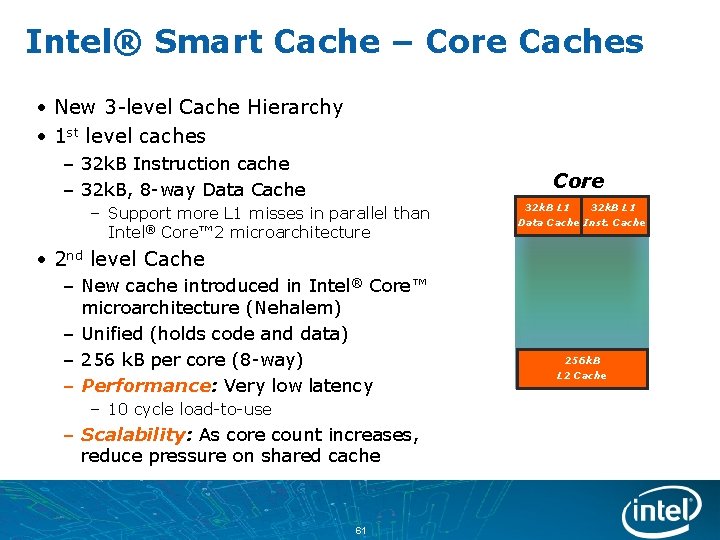

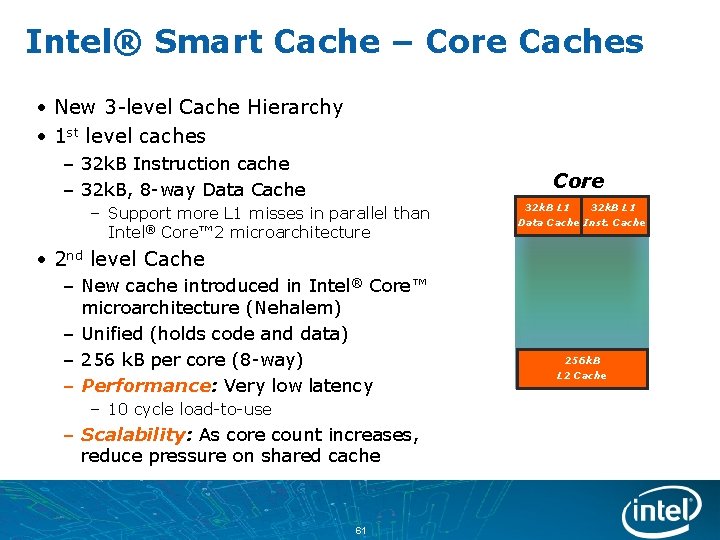

Intel® Smart Cache – Core Caches
• New 3-level Cache Hierarchy
• 1st level caches
  – 32 kB Instruction cache
  – 32 kB, 8-way Data Cache
    – Support more L1 misses in parallel than Intel® Core™ 2 microarchitecture
• 2nd level Cache
  – New cache introduced in Intel® Core™ microarchitecture (Nehalem)
  – Unified (holds code and data)
  – 256 kB per core (8-way)
  – Performance: Very low latency
    – 10 cycle load-to-use
  – Scalability: As core count increases, reduce pressure on shared cache
(Figure: one core with its 32 kB L1 Instruction Cache, 32 kB L1 Data Cache, and 256 kB L2 Cache)
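The capacities and associativities above determine how many sets each cache has and which address bits select a set. A small sketch, assuming 64-byte cache lines (the line size Nehalem uses, consistent with the 64-byte boundaries mentioned on the unaligned-access slide) and conventional modulo set indexing; the helper names are illustrative.

```c
#include <assert.h>
#include <stdint.h>

/* Number of sets = capacity / (ways * line size). */
static unsigned num_sets(unsigned capacity, unsigned ways, unsigned line) {
    assert(ways > 0 && line > 0 && capacity % (ways * line) == 0);
    return capacity / (ways * line);
}

/* Drop the line-offset bits, then keep enough bits to pick a set. */
static unsigned set_index(uint64_t addr, unsigned capacity, unsigned ways, unsigned line) {
    return (unsigned)((addr / line) % num_sets(capacity, ways, line));
}
```

With these assumptions the 32 kB, 8-way L1 data cache has 64 sets (address bits 6 to 11 choose the set) and the 256 kB, 8-way L2 has 512 sets (bits 6 to 14).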

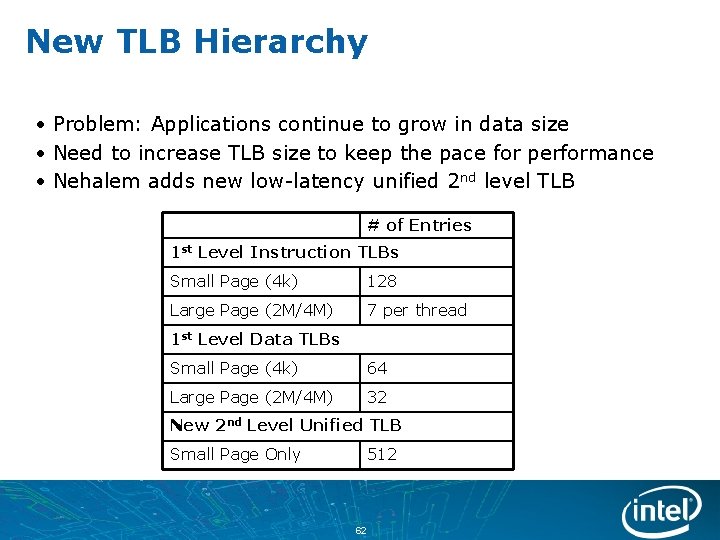

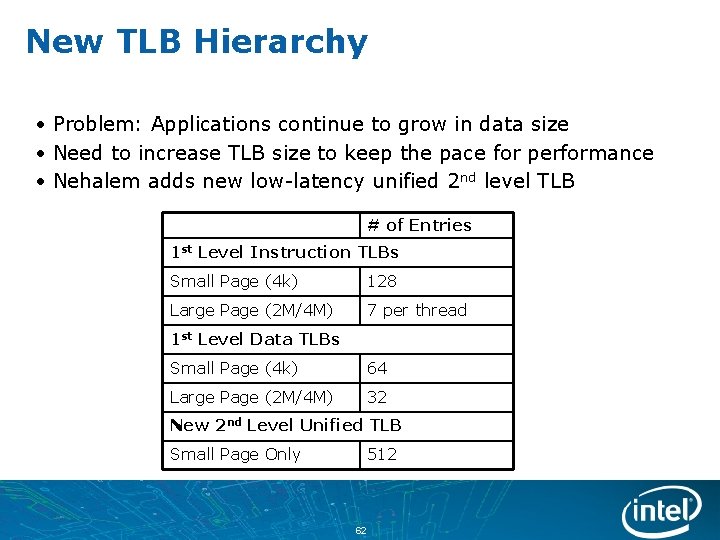

New TLB Hierarchy
• Problem: Applications continue to grow in data size
• Need to increase TLB size to keep pace for performance
• Nehalem adds a new low-latency unified 2nd level TLB

| TLB | Page size | # of Entries |
|---|---|---|
| 1st Level Instruction TLB | Small Page (4k) | 128 |
| 1st Level Instruction TLB | Large Page (2M/4M) | 7 per thread |
| 1st Level Data TLB | Small Page (4k) | 64 |
| 1st Level Data TLB | Large Page (2M/4M) | 32 |
| New 2nd Level Unified TLB | Small Page Only | 512 |
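A useful way to read the table is TLB reach: the amount of memory a TLB can map at once, which is entries × page size. A trivial sketch using the slide's entry counts (the function name is illustrative):

```c
#include <assert.h>
#include <stdint.h>

/* TLB reach: bytes of address space covered without a TLB miss. */
static uint64_t tlb_reach(uint64_t entries, uint64_t page_bytes) {
    assert(entries > 0 && page_bytes > 0);
    return entries * page_bytes;
}
```

The 64-entry small-page DTLB covers 256 KB, the new 512-entry second-level TLB raises small-page reach to 2 MB, and the 32 large-page DTLB entries (at 2 MB pages) cover 64 MB.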

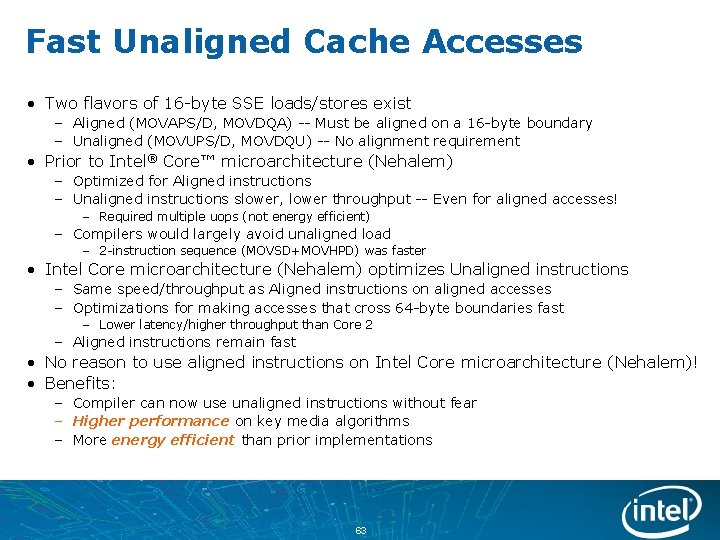

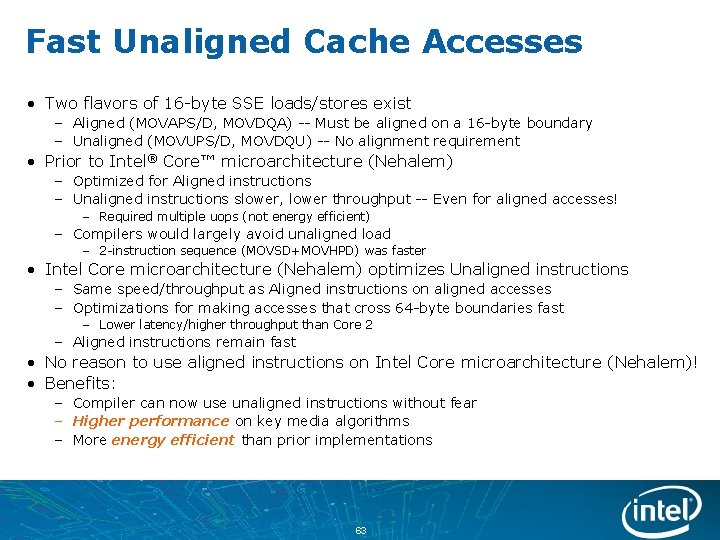

Fast Unaligned Cache Accesses
• Two flavors of 16-byte SSE loads/stores exist
  – Aligned (MOVAPS/D, MOVDQA): must be aligned on a 16-byte boundary
  – Unaligned (MOVUPS/D, MOVDQU): no alignment requirement
• Prior to Intel® Core™ microarchitecture (Nehalem)
  – Optimized for Aligned instructions
  – Unaligned instructions slower, lower throughput, even for aligned accesses!
    – Required multiple uops (not energy efficient)
  – Compilers would largely avoid unaligned loads
    – 2-instruction sequence (MOVSD+MOVHPD) was faster
• Intel Core microarchitecture (Nehalem) optimizes Unaligned instructions
  – Same speed/throughput as Aligned instructions on aligned accesses
  – Optimizations for making accesses that cross 64-byte boundaries fast
    – Lower latency/higher throughput than Core 2
  – Aligned instructions remain fast
• No reason to use aligned instructions on Intel Core microarchitecture (Nehalem)!
• Benefits:
  – Compiler can now use unaligned instructions without fear
  – Higher performance on key media algorithms
  – More energy efficient than prior implementations
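Since the unaligned form no longer costs anything on aligned data, code can simply use it everywhere. A small sketch using the SSE2 intrinsic `_mm_loadu_si128`, which compilers typically emit as MOVDQU; the byte-summing task and the function name are illustrative, and a scalar fallback keeps the example buildable on non-SSE2 targets.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#if defined(__SSE2__)
#include <emmintrin.h>
#endif

/* Sums 16 bytes starting at an arbitrary, possibly unaligned, address. */
static unsigned sum16(const uint8_t *p) {
    assert(p != NULL);
#if defined(__SSE2__)
    __m128i v = _mm_loadu_si128((const __m128i *)p);   /* no alignment needed */
    __m128i s = _mm_sad_epu8(v, _mm_setzero_si128());  /* two sums of 8 bytes each */
    return (unsigned)_mm_cvtsi128_si32(s) + (unsigned)_mm_extract_epi16(s, 4);
#else
    unsigned total = 0;                                 /* portable fallback */
    for (int i = 0; i < 16; i++) total += p[i];
    return total;
#endif
}
```

Calling it on `buf + 1` is legal here; the same code with `_mm_load_si128` (MOVDQA) would fault on that misaligned address.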

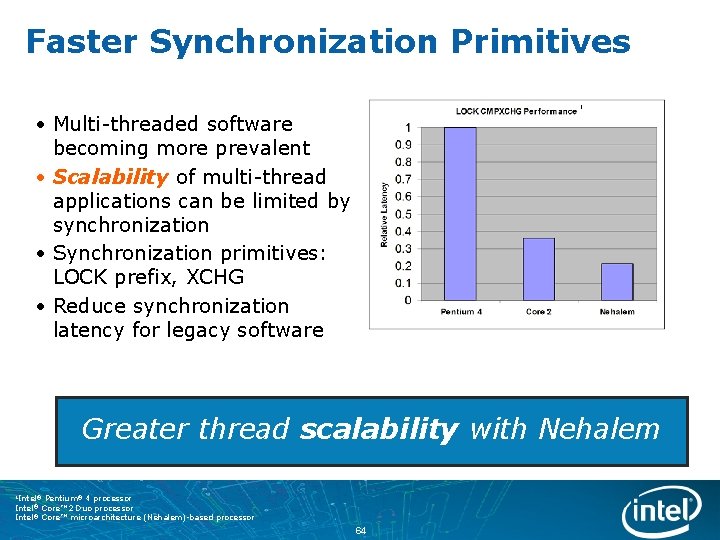

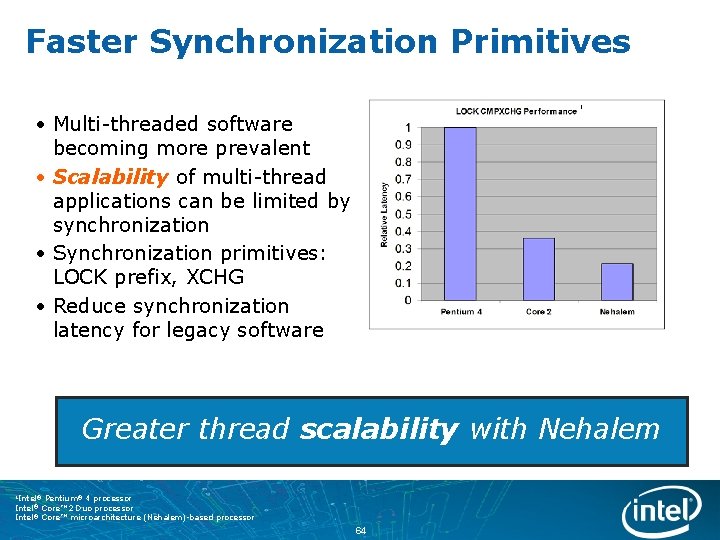

Faster Synchronization Primitives
• Multi-threaded software becoming more prevalent
• Scalability of multi-thread applications can be limited by synchronization
• Synchronization primitives: LOCK prefix, XCHG
• Reduce synchronization latency for legacy software

Greater thread scalability with Nehalem
(Chart comparing Intel® Pentium® 4 processor, Intel® Core™ 2 Duo processor, and Intel® Core™ microarchitecture (Nehalem)-based processor)
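"Legacy software" here means ordinary locking code built on these instructions, which gets faster without being rewritten. A minimal C11 sketch of such code follows. The note that `atomic_exchange` typically becomes XCHG (implicitly locked) and `atomic_fetch_add` typically becomes LOCK XADD reflects usual GCC/Clang output on x86, not a guarantee. The type and function names are hypothetical.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>

typedef struct { atomic_int held; } simple_spinlock;

/* Test-and-test-and-set lock: the exchange is the locked operation. */
static void spin_lock(simple_spinlock *l) {
    assert(l != NULL);
    while (atomic_exchange_explicit(&l->held, 1, memory_order_acquire)) {
        /* Wait with plain loads so waiters do not keep issuing locked writes. */
        while (atomic_load_explicit(&l->held, memory_order_relaxed)) { }
    }
}

static void spin_unlock(simple_spinlock *l) {
    atomic_store_explicit(&l->held, 0, memory_order_release);
}

/* Shared counter increment, typically a single LOCK XADD on x86. */
static int ticket_next(atomic_int *counter) {
    return atomic_fetch_add_explicit(counter, 1, memory_order_relaxed);
}
```

Every contended acquire and every counter bump pays the latency of one locked instruction, so lowering that latency improves scalability directly.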

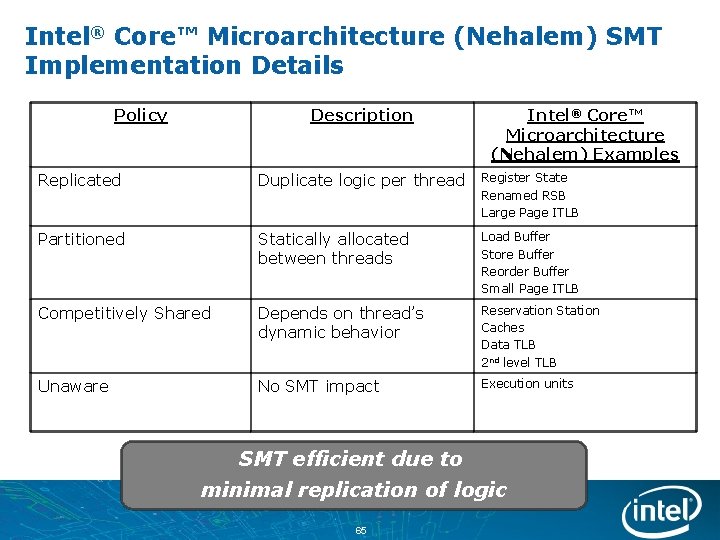

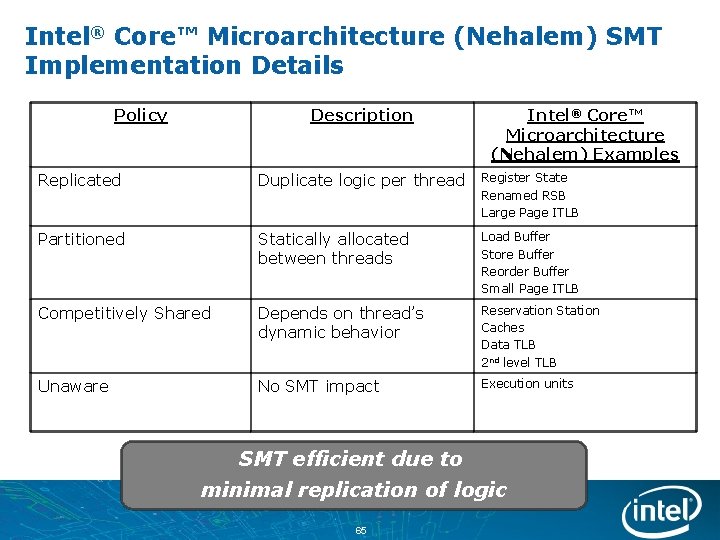

Intel® Core™ Microarchitecture (Nehalem) SMT Implementation Details

| Policy | Description | Intel® Core™ Microarchitecture (Nehalem) Examples |
|---|---|---|
| Replicated | Duplicate logic per thread | Register State, Renamed RSB, Large Page ITLB |
| Partitioned | Statically allocated between threads | Load Buffer, Store Buffer, Reorder Buffer, Small Page ITLB |
| Competitively Shared | Depends on thread's dynamic behavior | Reservation Station, Caches, Data TLB, 2nd level TLB |
| Unaware | No SMT impact | Execution units |

SMT efficient due to minimal replication of logic

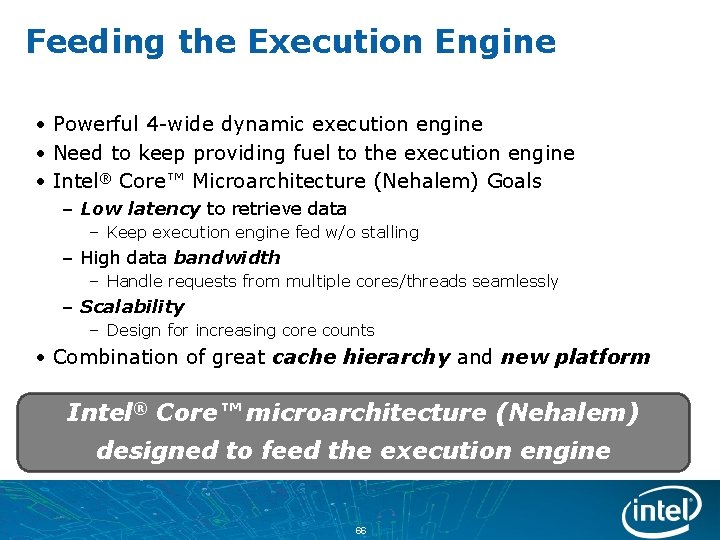

Feeding the Execution Engine
• Powerful 4-wide dynamic execution engine
• Need to keep providing fuel to the execution engine
• Intel® Core™ Microarchitecture (Nehalem) Goals
  – Low latency to retrieve data
    – Keep execution engine fed w/o stalling
  – High data bandwidth
    – Handle requests from multiple cores/threads seamlessly
  – Scalability
    – Design for increasing core counts
• Combination of great cache hierarchy and new platform

Intel® Core™ microarchitecture (Nehalem) designed to feed the execution engine

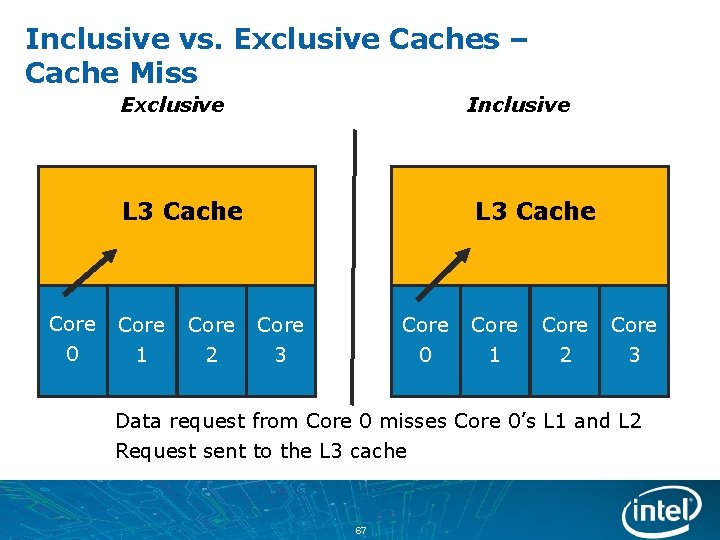

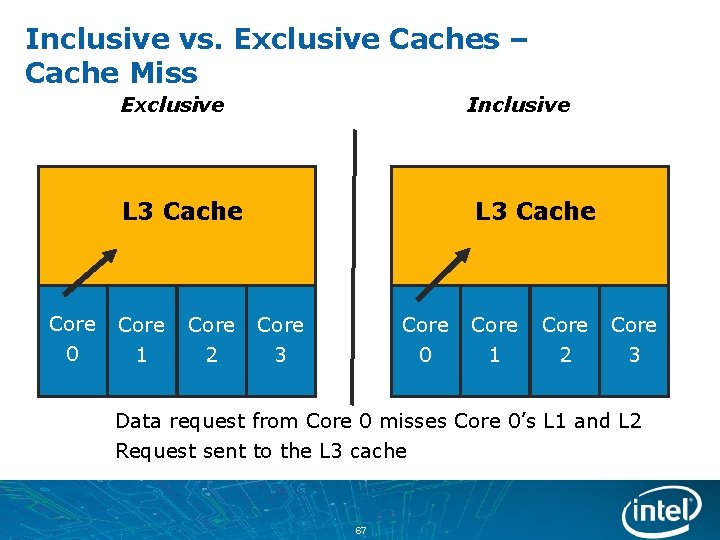

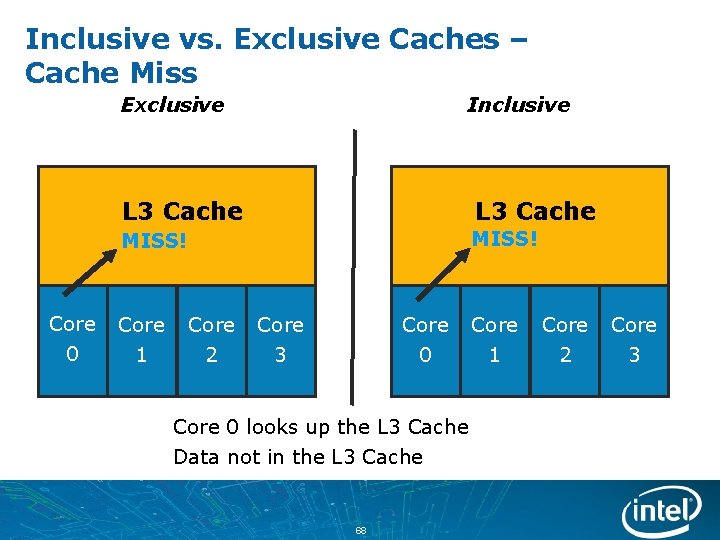

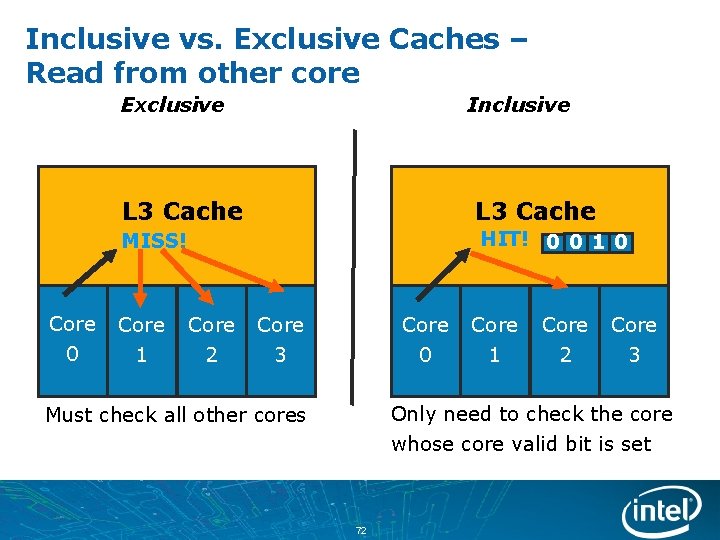

Inclusive vs. Exclusive Caches – Cache Miss
(Figure: an exclusive and an inclusive L3 cache, each shared by Core 0 to Core 3)
• Data request from Core 0 misses Core 0's L1 and L2
• Request sent to the L3 cache

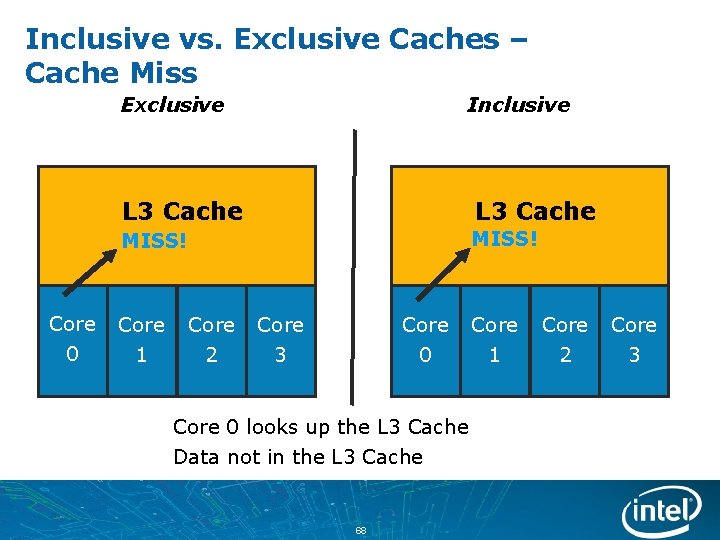

Inclusive vs. Exclusive Caches – Cache Miss
(Figure: exclusive and inclusive L3 caches shared by Core 0 to Core 3; both lookups MISS!)
• Core 0 looks up the L3 Cache
• Data not in the L3 Cache

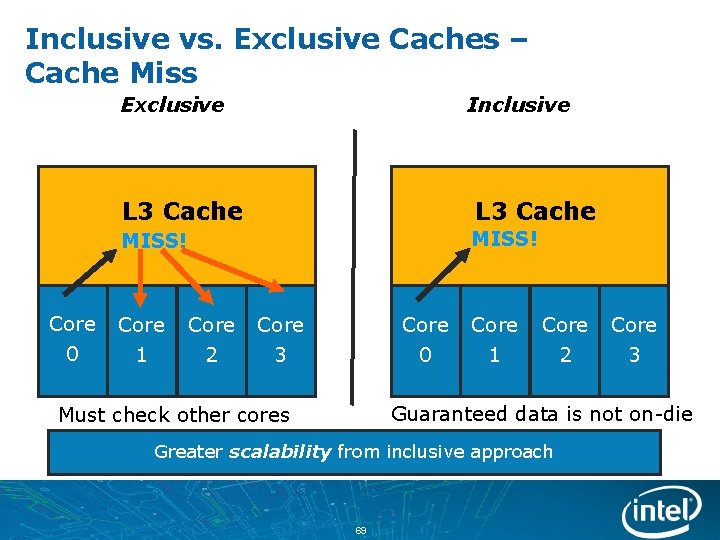

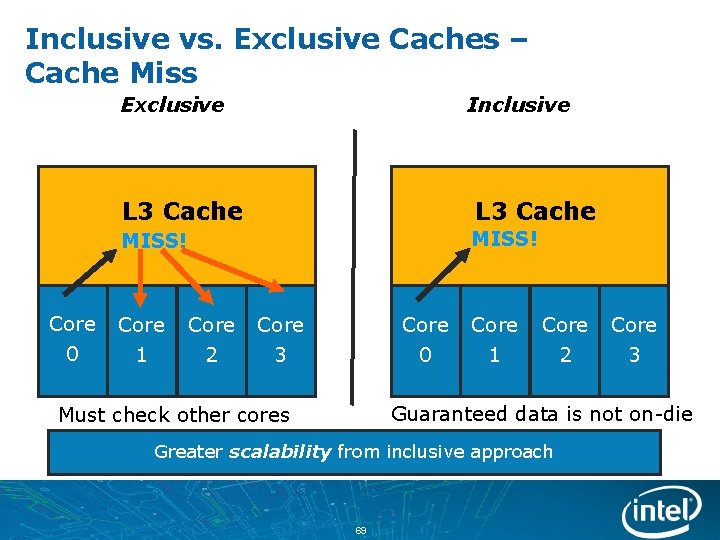

Inclusive vs. Exclusive Caches – Cache Miss
(Figure: exclusive and inclusive L3 caches shared by Core 0 to Core 3; both lookups MISS!)
• Exclusive: Must check other cores
• Inclusive: Guaranteed data is not on-die

Greater scalability from inclusive approach

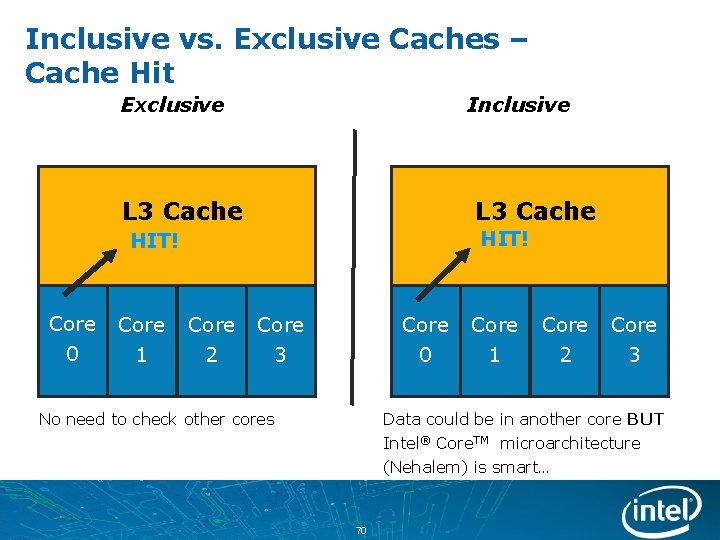

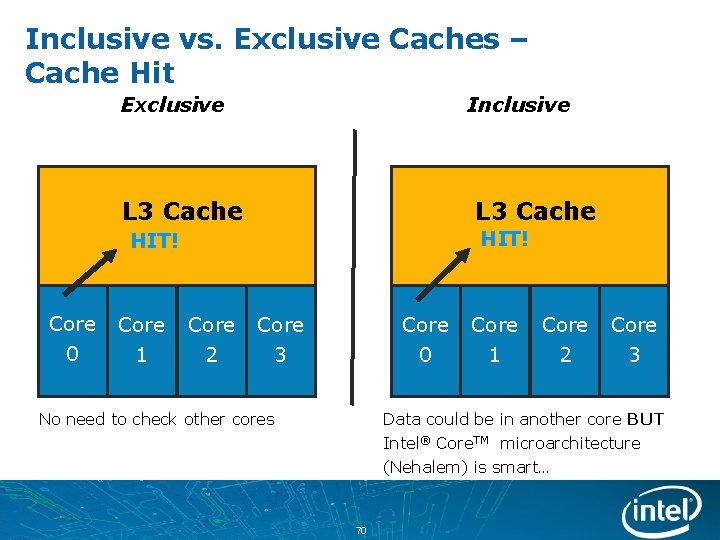

Inclusive vs. Exclusive Caches – Cache Hit
(Figure: exclusive and inclusive L3 caches shared by Core 0 to Core 3; both lookups HIT!)
• Exclusive: No need to check other cores
• Inclusive: Data could be in another core, BUT Intel® Core™ microarchitecture (Nehalem) is smart…

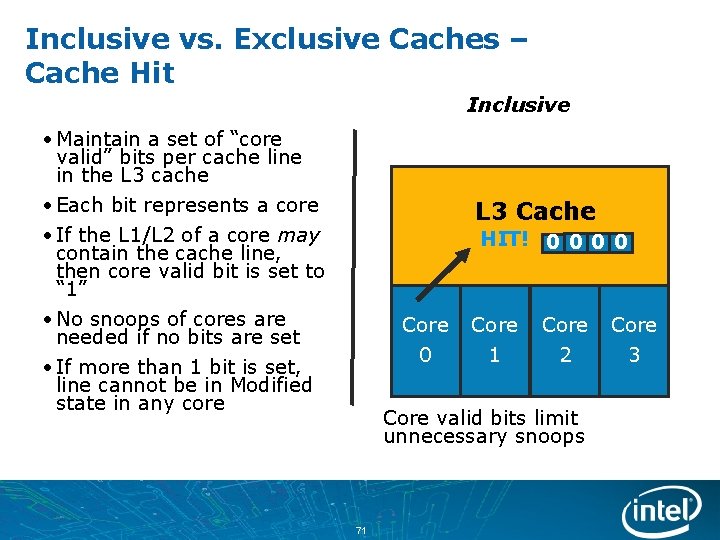

Inclusive vs. Exclusive Caches – Cache Hit
• Inclusive
  – Maintain a set of "core valid" bits per cache line in the L3 cache
  – Each bit represents a core
  – If the L1/L2 of a core may contain the cache line, then its core valid bit is set to "1"
  – No snoops of cores are needed if no bits are set
  – If more than 1 bit is set, the line cannot be in Modified state in any core
(Figure: inclusive L3 cache HIT!, with one core valid bit per core)

Core valid bits limit unnecessary snoops

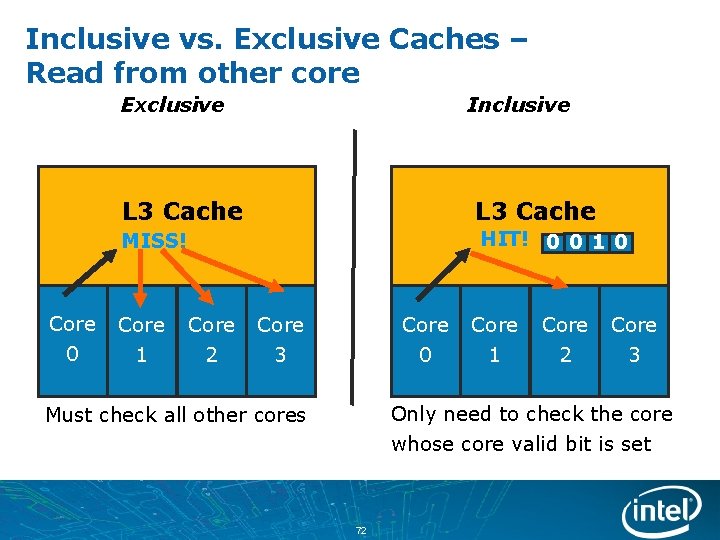

Inclusive vs. Exclusive Caches – Read from other core
(Figure: exclusive L3 cache MISS!; inclusive L3 cache HIT! with core valid bits 0 0 1 0)
• Exclusive: Must check all other cores
• Inclusive: Only need to check the core whose core valid bit is set
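The snoop decision walked through on the last few slides fits in a few lines. This sketch covers only the "which cores must be snooped" question for a read. It ignores coherence states (such as the Modified-state rule above) and any other detail of Nehalem's real protocol, and the function name and parameters are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Returns a bitmask of the cores that must be snooped for a read by
   `requester` on a chip with `num_cores` cores. `core_valid` holds the
   L3 line's core valid bits (meaningful only for an inclusive L3 hit). */
static uint32_t cores_to_snoop(bool inclusive, bool l3_hit, uint32_t core_valid,
                               unsigned requester, unsigned num_cores) {
    assert(num_cores > 0 && num_cores <= 32 && requester < num_cores);
    uint32_t all = (num_cores == 32) ? 0xFFFFFFFFu : ((1u << num_cores) - 1u);
    uint32_t others = all & ~(1u << requester);
    if (!inclusive)
        /* Exclusive L3: a hit means no core holds the line; a miss proves nothing. */
        return l3_hit ? 0u : others;
    /* Inclusive L3: a miss means no core holds the line; a hit snoops only
       the cores whose valid bit is set. */
    return l3_hit ? (core_valid & others) : 0u;
}
```

On a four-core part, an exclusive-cache miss from Core 0 snoops three cores (mask `0xE`). An inclusive hit whose only valid bit is Core 2's snoops just that core (mask `0x4`).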

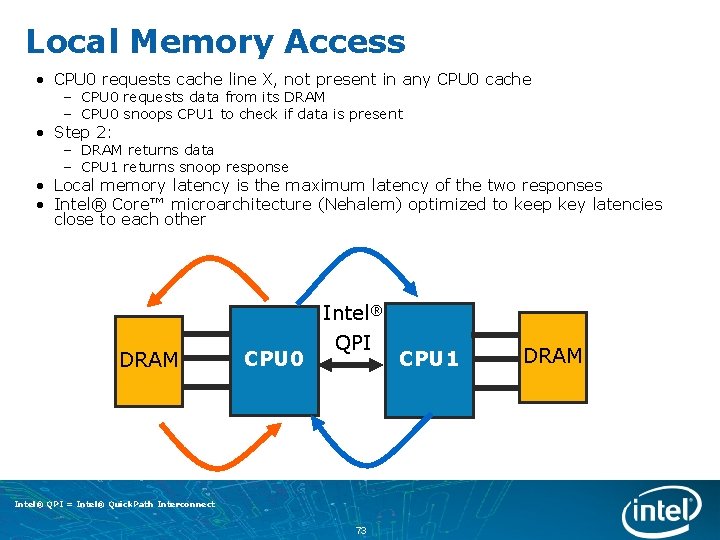

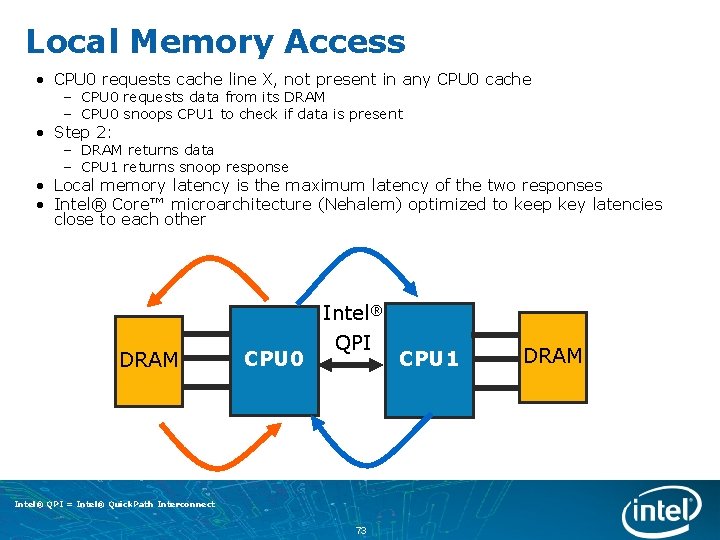

Local Memory Access
• Step 1: CPU0 requests cache line X, not present in any CPU0 cache
  – CPU0 requests data from its DRAM
  – CPU0 snoops CPU1 to check if data is present
• Step 2:
  – DRAM returns data
  – CPU1 returns snoop response
• Local memory latency is the maximum latency of the two responses
• Intel® Core™ microarchitecture (Nehalem) optimized to keep key latencies close to each other
(Figure: two-socket system; CPU0 and CPU1 each with local DRAM, connected by Intel® QPI)
Intel® QPI = Intel® QuickPath Interconnect

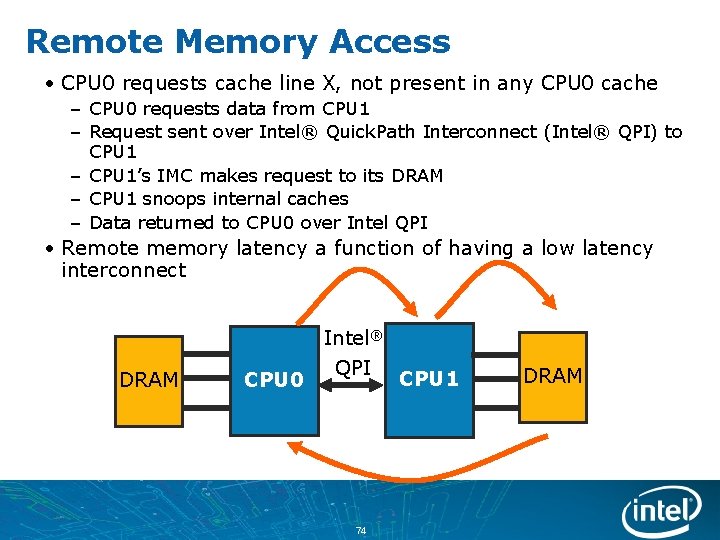

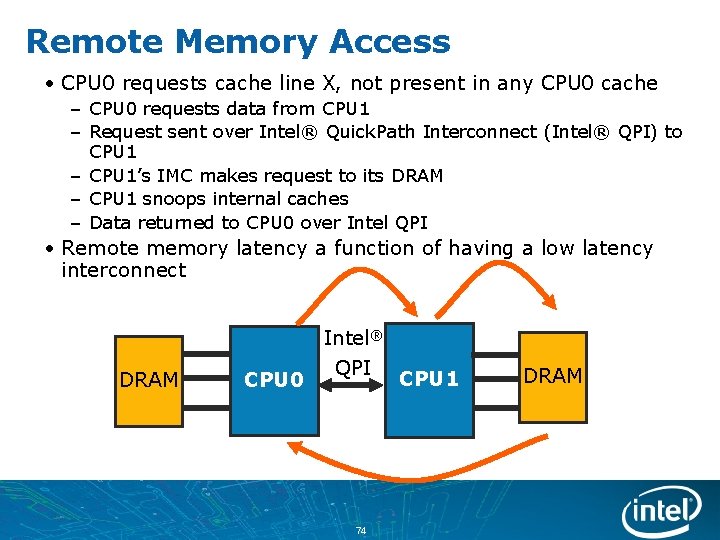

Remote Memory Access
• CPU0 requests cache line X, not present in any CPU0 cache
  – CPU0 requests data from CPU1
  – Request sent over Intel® QuickPath Interconnect (Intel® QPI) to CPU1
  – CPU1's IMC makes request to its DRAM
  – CPU1 snoops internal caches
  – Data returned to CPU0 over Intel QPI
• Remote memory latency is a function of having a low-latency interconnect
(Figure: two-socket system; CPU0 and CPU1 each with local DRAM, connected by Intel® QPI)
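The two access flows reduce to a simple latency model. This is a simplification of the slides: the local case takes the slower of the DRAM read and the remote snoop, and the remote case adds a QPI crossing in each direction around CPU1's parallel DRAM read and cache snoop. All inputs are hypothetical latencies, not measured Nehalem values, and the function names are illustrative.

```c
#include <assert.h>

/* Local access: DRAM read and snoop of the other socket run in parallel,
   so the slower response sets the latency. */
static double local_latency(double dram, double remote_snoop) {
    assert(dram >= 0 && remote_snoop >= 0);
    return dram > remote_snoop ? dram : remote_snoop;
}

/* Remote access: request crosses QPI, CPU1 reads its DRAM while snooping
   its own caches, then the data crosses QPI back. */
static double remote_latency(double qpi_hop, double remote_dram, double remote_cache_snoop) {
    assert(qpi_hop >= 0 && remote_dram >= 0 && remote_cache_snoop >= 0);
    double at_cpu1 = remote_dram > remote_cache_snoop ? remote_dram : remote_cache_snoop;
    return qpi_hop + at_cpu1 + qpi_hop;
}
```

The `max` in `local_latency` shows why the design keeps the DRAM and snoop latencies close: shortening only one of them does not help. The two `qpi_hop` terms show why remote latency depends on a low-latency interconnect.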

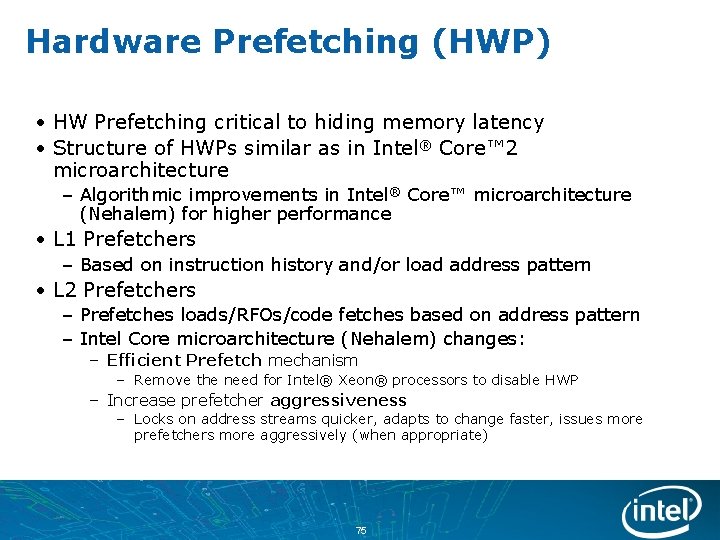

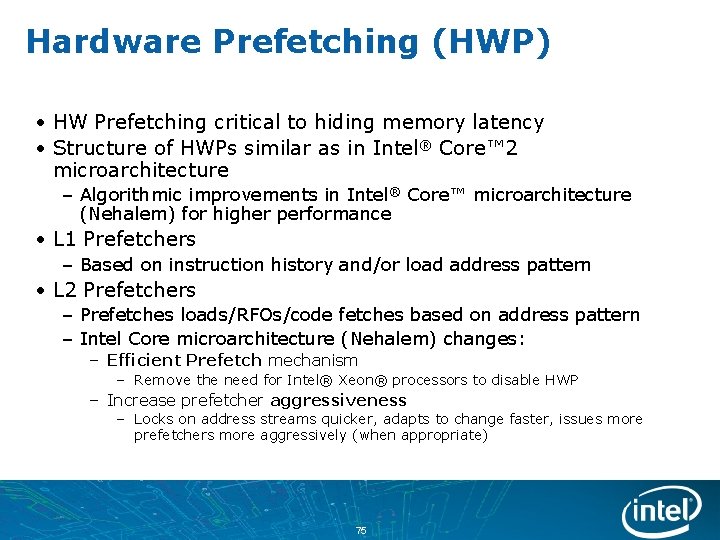

Hardware Prefetching (HWP)
• HW prefetching is critical to hiding memory latency
• Structure of HWPs similar to Intel® Core™ 2 microarchitecture
  – Algorithmic improvements in Intel® Core™ microarchitecture (Nehalem) for higher performance
• L1 Prefetchers
  – Based on instruction history and/or load address pattern
• L2 Prefetchers
  – Prefetch loads/RFOs/code fetches based on address pattern
  – Intel Core microarchitecture (Nehalem) changes:
    – Efficient prefetch mechanism
      – Removes the need for Intel® Xeon® processors to disable HWP
    – Increased prefetcher aggressiveness
      – Locks on to address streams quicker, adapts to change faster, issues more prefetches more aggressively (when appropriate)
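"Locks on to address streams" and "adapts to change" describe the general shape of a stride prefetcher. Below is a hypothetical single-stream sketch: it confirms a stride a few times before issuing prefetches and resets when the pattern changes. The thresholds `CONFIRM` and `DISTANCE` are invented knobs that stand in for "aggressiveness". This is not Nehalem's algorithm.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Illustrative tuning knobs: how many repeats before locking on, and how
   many strides ahead to prefetch. */
#define CONFIRM 2
#define DISTANCE 4

typedef struct {
    uint64_t last_addr;
    int64_t stride;
    int confidence;
    bool has_last;
} stride_pf;

/* Observes one load address; returns true and sets *prefetch_addr when a
   prefetch should be issued. */
static bool pf_observe(stride_pf *pf, uint64_t addr, uint64_t *prefetch_addr) {
    assert(pf != NULL && prefetch_addr != NULL);
    if (pf->has_last) {
        int64_t s = (int64_t)(addr - pf->last_addr);
        if (s != 0 && s == pf->stride) {
            if (pf->confidence < CONFIRM) pf->confidence++;
        } else {
            pf->stride = s;        /* adapt: start tracking the new stride */
            pf->confidence = 0;
        }
    }
    pf->last_addr = addr;
    pf->has_last = true;
    if (pf->confidence >= CONFIRM) {
        *prefetch_addr = addr + (uint64_t)(pf->stride * DISTANCE);
        return true;
    }
    return false;
}
```

Lowering `CONFIRM` locks on sooner and raising `DISTANCE` runs further ahead. Both make the prefetcher more aggressive, at the cost of more useless prefetches when the guess is wrong.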

Today's Platform Architecture

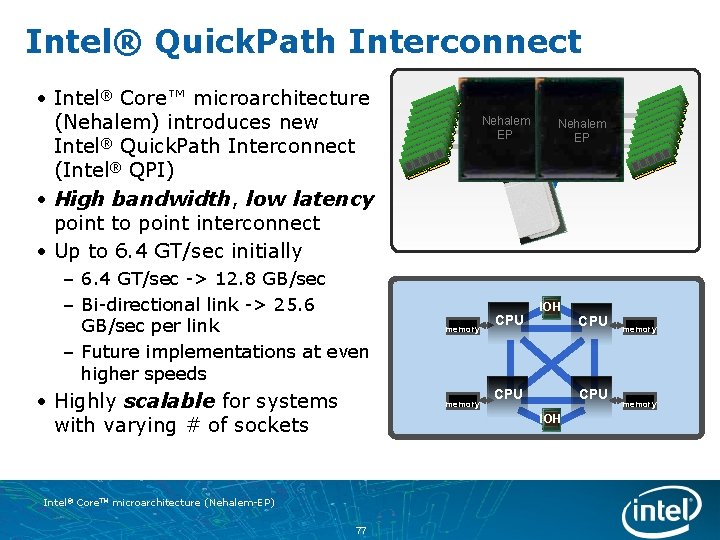

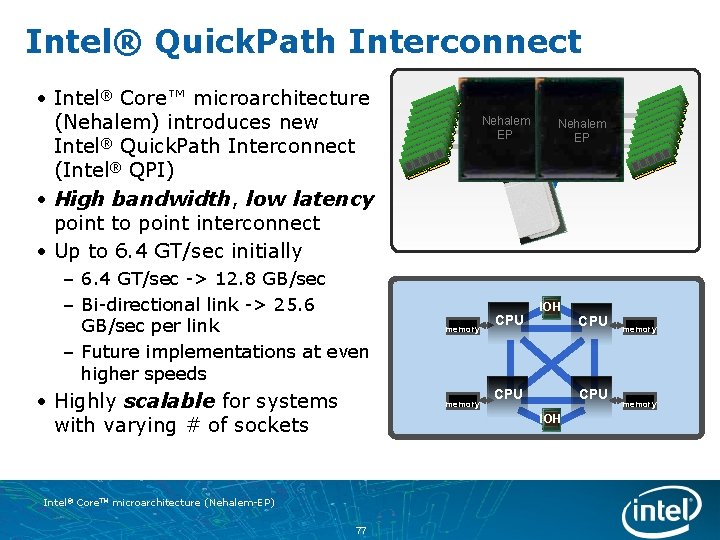

Intel® QuickPath Interconnect
• Intel® Core™ microarchitecture (Nehalem) introduces new Intel® QuickPath Interconnect (Intel® QPI)
• High bandwidth, low latency point-to-point interconnect
• Up to 6.4 GT/sec initially
  – 6.4 GT/sec -> 12.8 GB/sec
  – Bi-directional link -> 25.6 GB/sec per link
  – Future implementations at even higher speeds
• Highly scalable for systems with varying # of sockets
(Figure: Intel® Core™ microarchitecture (Nehalem-EP) platforms in which Nehalem EP CPUs, each with its own memory, connect to each other and to the IOH over Intel® QPI)
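The bandwidth bullets follow from each transfer carrying 2 bytes of data in each direction of a full-width link. That is the factor implied by the slide's 6.4 GT/sec -> 12.8 GB/sec step:

$$6.4\ \tfrac{\text{GT}}{\text{s}} \times 2\ \tfrac{\text{bytes}}{\text{transfer}} = 12.8\ \tfrac{\text{GB}}{\text{s}}\ \text{per direction}, \qquad 2 \times 12.8\ \tfrac{\text{GB}}{\text{s}} = 25.6\ \tfrac{\text{GB}}{\text{s}}\ \text{per link}$$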

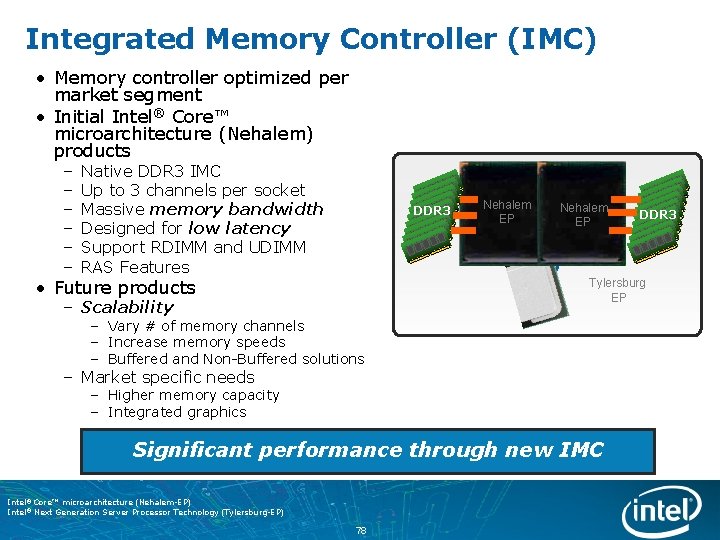

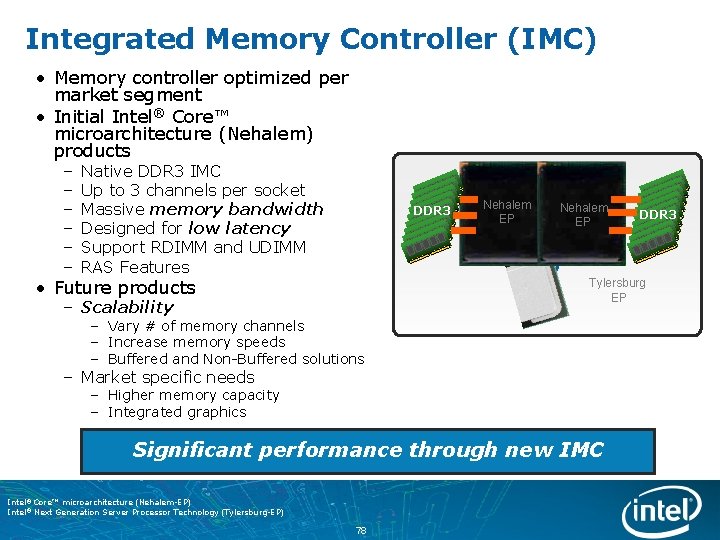

Integrated Memory Controller (IMC)
• Memory controller optimized per market segment
• Initial Intel® Core™ microarchitecture (Nehalem) products
  – Native DDR3 IMC
  – Up to 3 channels per socket
  – Massive memory bandwidth
  – Designed for low latency
  – Support RDIMM and UDIMM
  – RAS Features
• Future products
  – Scalability
    – Vary # of memory channels
    – Increase memory speeds
    – Buffered and Non-Buffered solutions
  – Market specific needs
    – Higher memory capacity
    – Integrated graphics

Significant performance through new IMC
(Figure: Nehalem EP sockets, each with directly attached DDR3 memory, connected to a Tylersburg EP I/O hub)
Intel® Core™ microarchitecture (Nehalem-EP); Intel® Next Generation Server Processor Technology (Tylersburg-EP)
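To put a number on "massive memory bandwidth": peak DRAM bandwidth is channels × transfer rate × 8 bytes, because each DDR3 channel is 64 bits wide. The DDR3-1066 speed grade below is an assumption chosen only for illustration. The slide does not state a memory speed.

$$3\ \text{channels} \times 1066.\overline{6}\ \tfrac{\text{MT}}{\text{s}} \times 8\ \tfrac{\text{bytes}}{\text{transfer}} \approx 25.6\ \tfrac{\text{GB}}{\text{s}}\ \text{peak per socket}$$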

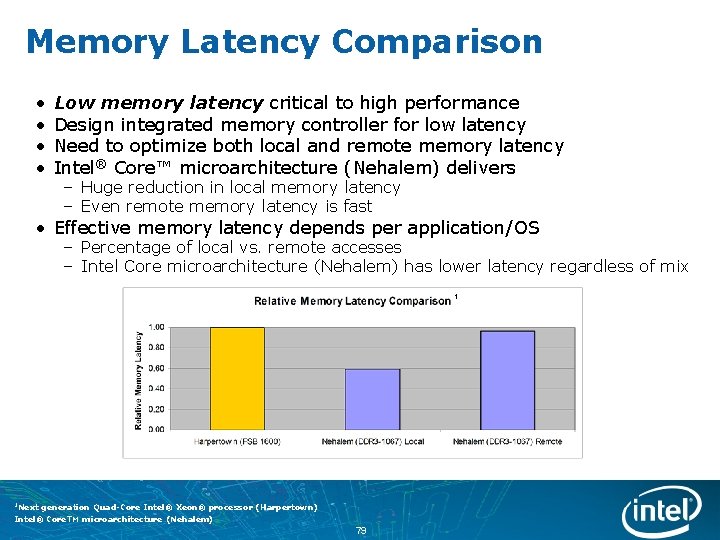

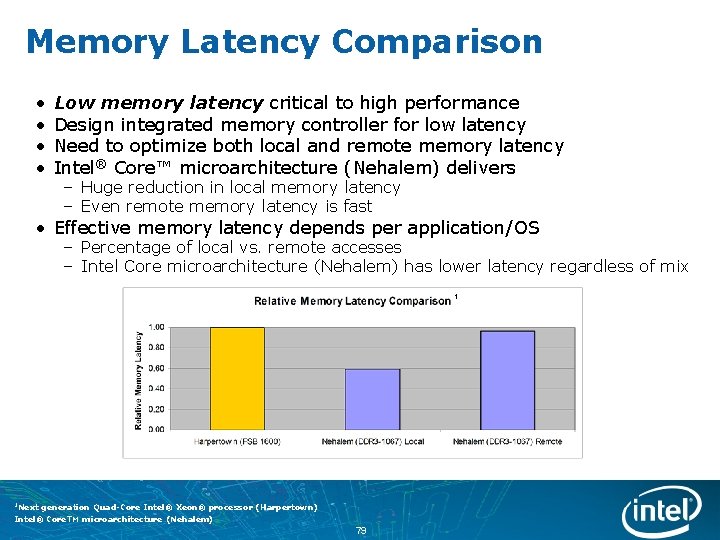

Memory Latency Comparison
• Low memory latency critical to high performance
• Design integrated memory controller for low latency
• Need to optimize both local and remote memory latency
• Intel® Core™ microarchitecture (Nehalem) delivers
  – Huge reduction in local memory latency
  – Even remote memory latency is fast
• Effective memory latency depends on the application/OS
  – Percentage of local vs. remote accesses
  – Intel Core microarchitecture (Nehalem) has lower latency regardless of mix
(Chart: memory latency of Next generation Quad-Core Intel® Xeon® processor (Harpertown) vs. Intel® Core™ microarchitecture (Nehalem))
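The dependence on the access mix can be written as a weighted average. Here $f_{\text{local}}$, a symbol introduced for illustration, is the fraction of accesses served by the requesting socket's own DRAM:

$$L_{\text{eff}} = f_{\text{local}}\,L_{\text{local}} + (1 - f_{\text{local}})\,L_{\text{remote}}, \qquad 0 \le f_{\text{local}} \le 1$$

Because $L_{\text{eff}}$ always lies between $L_{\text{local}}$ and $L_{\text{remote}}$, a platform whose local and remote latencies are both below the comparison platform's memory latency is faster for every mix. That is the sense of the last bullet.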

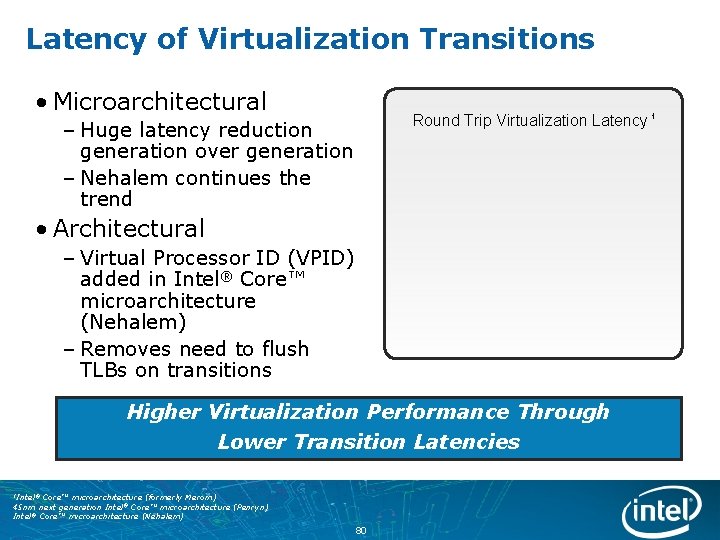

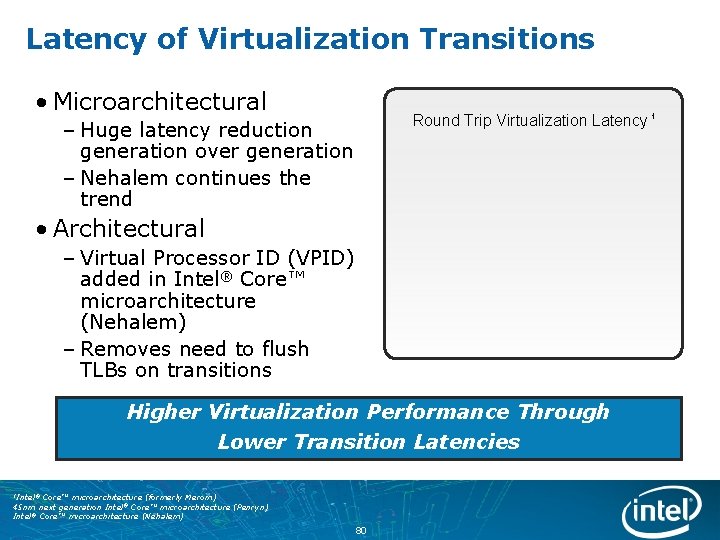

Latency of Virtualization Transitions
• Microarchitectural
  – Huge latency reduction generation over generation
  – Nehalem continues the trend
• Architectural
  – Virtual Processor ID (VPID) added in Intel® Core™ microarchitecture (Nehalem)
  – Removes need to flush TLBs on transitions

Higher Virtualization Performance Through Lower Transition Latencies
(Chart: Round Trip Virtualization Latency for Intel® Core™ microarchitecture (formerly Merom), 45 nm next generation Intel® Core™ microarchitecture (Penryn), and Intel® Core™ microarchitecture (Nehalem))
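Why a VPID removes the need to flush: if every TLB entry is tagged with the ID of the virtual processor that created it, entries from different VMs (and from the VMM, which uses VPID 0) can sit side by side. A lookup simply ignores entries with the wrong tag. This is a hypothetical, fully associative toy TLB for illustration; its size and replacement policy are invented.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define TLB_ENTRIES 8   /* illustrative size */

typedef struct {
    bool valid;
    uint16_t vpid;      /* 0 = VMM (host), nonzero = a guest's virtual CPU */
    uint64_t vpn;       /* virtual page number */
    uint64_t pfn;       /* physical frame number */
} tlb_entry;

typedef struct {
    tlb_entry e[TLB_ENTRIES];
    unsigned next;      /* round-robin replacement */
} tlb;

static void tlb_fill(tlb *t, uint16_t vpid, uint64_t vpn, uint64_t pfn) {
    assert(t != NULL);
    t->e[t->next] = (tlb_entry){ true, vpid, vpn, pfn };
    t->next = (t->next + 1) % TLB_ENTRIES;
}

/* A hit needs both the page and the current VPID to match, so a VM
   entry or exit only changes the current VPID instead of flushing. */
static bool tlb_lookup(const tlb *t, uint16_t vpid, uint64_t vpn, uint64_t *pfn) {
    assert(t != NULL && pfn != NULL);
    for (unsigned i = 0; i < TLB_ENTRIES; i++) {
        const tlb_entry *x = &t->e[i];
        if (x->valid && x->vpid == vpid && x->vpn == vpn) {
            *pfn = x->pfn;
            return true;
        }
    }
    return false;
}
```

Without the tag, the guest's and the VMM's translations for the same virtual page would collide, and the TLB would have to be flushed on every transition.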

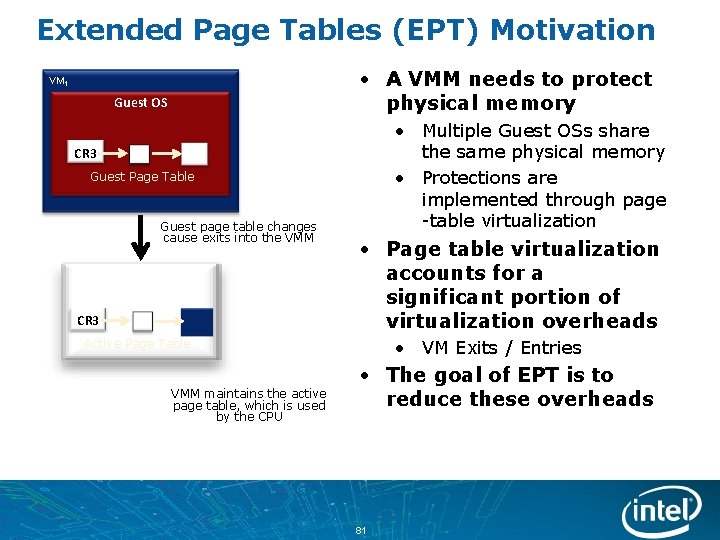

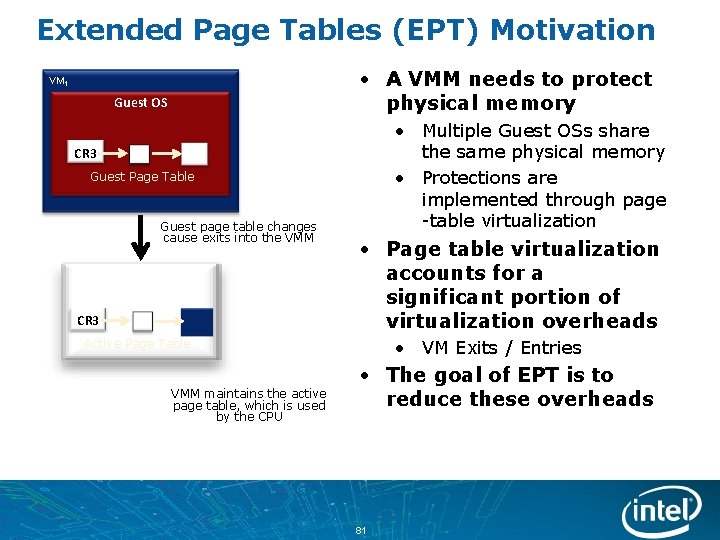

Extended Page Tables (EPT) Motivation
• A VMM needs to protect physical memory
  – Multiple Guest OSs share the same physical memory
  – Protections are implemented through page-table virtualization
• Page table virtualization accounts for a significant portion of virtualization overheads
  – VM Exits / Entries
• The goal of EPT is to reduce these overheads
(Figure: VM1's Guest OS uses its own CR3 and Guest Page Table; guest page table changes cause exits into the VMM; the VMM maintains the active page table, pointed to by the real CR3, which is used by the CPU)
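EPT removes the shadowing by adding a second translation stage in hardware. The guest page table maps guest virtual to guest physical addresses and can be edited by the guest without a VM exit. The VMM-owned EPT then maps guest physical to host physical addresses. The toy below uses single-level, 16-entry tables purely to show the order of the two lookups. Real guest tables and EPT are multi-level, and the pointers inside a guest page walk are themselves translated through EPT. All names are illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT 12            /* 4 KB pages */
#define PAGES 16                 /* toy address space of 16 pages */
#define NOT_MAPPED UINT64_MAX

typedef struct { uint64_t frame[PAGES]; } page_table;  /* index: page number */

static uint64_t walk(const page_table *pt, uint64_t addr) {
    uint64_t page = addr >> PAGE_SHIFT;
    if (page >= PAGES || pt->frame[page] == NOT_MAPPED) return NOT_MAPPED;
    return (pt->frame[page] << PAGE_SHIFT) | (addr & ((1u << PAGE_SHIFT) - 1u));
}

/* Stage 1: guest virtual -> guest physical (guest-owned table).
   Stage 2: guest physical -> host physical (VMM-owned EPT). */
static uint64_t translate(const page_table *guest, const page_table *ept, uint64_t gva) {
    assert(guest != NULL && ept != NULL);
    uint64_t gpa = walk(guest, gva);
    return gpa == NOT_MAPPED ? NOT_MAPPED : walk(ept, gpa);
}
```

With guest page 1 mapped to guest-physical page 3 and EPT mapping page 3 to host page 7, guest address `0x1234` resolves to host address `0x7234`.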

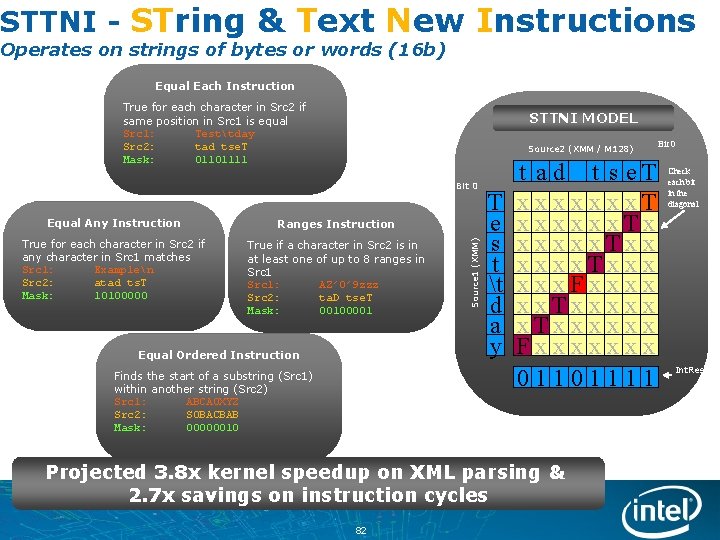

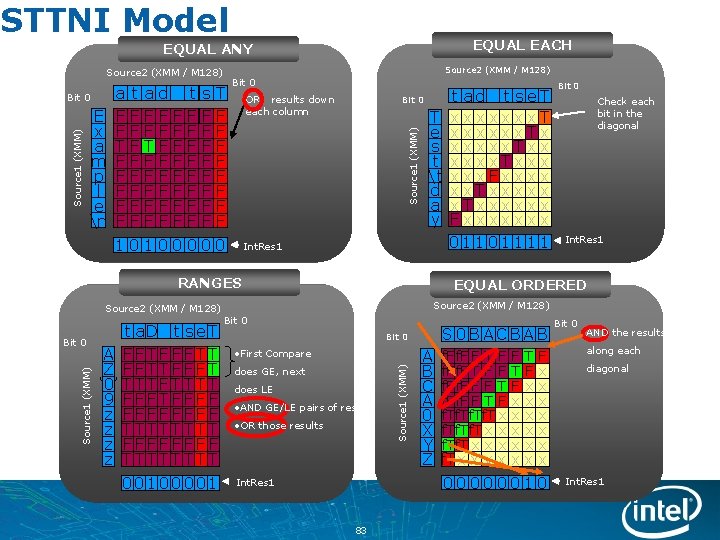

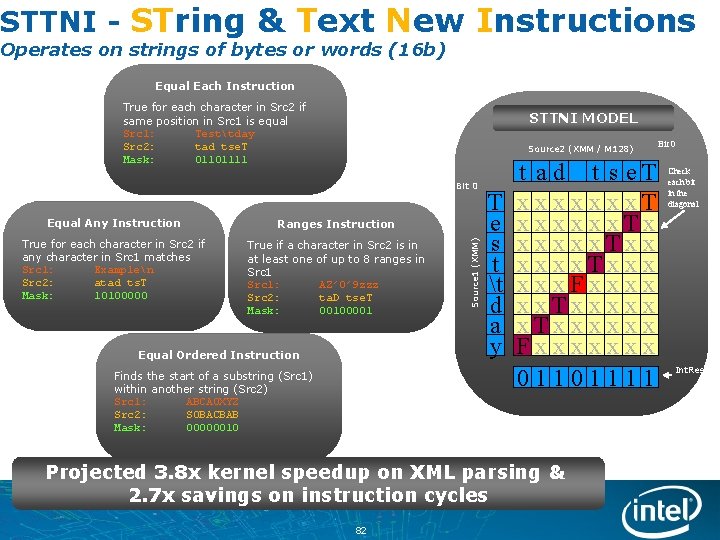

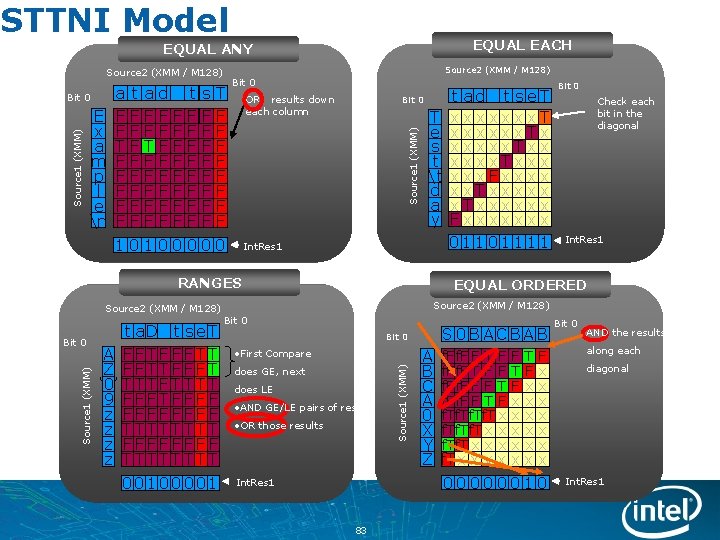

STTNI – STring & Text New Instructions
Operates on strings of bytes or words (16b)
• Equal Each Instruction
  – True for each character in Src2 if same position in Src1 is equal
  – Src1: Testtday  Src2: tad tseT  Mask: 01101111
• Equal Any Instruction
  – True for each character in Src2 if any character in Src1 matches
  – Src1: Examplen  Src2: atad tsT  Mask: 10100000
• Ranges Instruction
  – True if a character in Src2 is in at least one of up to 8 ranges in Src1
  – Src1: AZ'0'9zzz  Src2: taD tseT  Mask: 00100001
• Equal Ordered Instruction
  – Finds the start of a substring (Src1) within another string (Src2)
  – Src1: ABCA0XYZ  Src2: S0BACBAB  Mask: 00000010
(Figure: STTNI model; Source 1 (XMM) compared against Source 2 (XMM / M128); for Equal Each, check each bit in the diagonal to form IntRes1)

Projected 3.8x kernel speedup on XML parsing & 2.7x savings on instruction cycles

STTNI Model
(Figure: each mode drawn as a matrix comparing every character of Source 1 (XMM) with every character of Source 2 (XMM / M128), reduced to the result IntRes1)
• EQUAL EACH: Check each bit in the diagonal (IntRes1 = 01101111)
• EQUAL ANY: OR results down each column (IntRes1 = 10100000)
• RANGES: First compare does GE, next does LE; AND GE/LE pairs of results; OR those results (IntRes1 = 00100001)
• EQUAL ORDERED: AND the results along each diagonal (IntRes1 = 00000010)
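The four aggregation rules above can be written as a portable reference model. This sketch works on byte strings of up to 16 characters with explicit lengths, and bit i of each result corresponds to character i of the second string. It deliberately simplifies: PCMPxSTRx's special handling of matches that run past the end of the 16-byte block, its polarity options, and word-sized elements are not modeled. Read with bit 0 as the leftmost mask digit, the slide's Equal Any and Ranges examples give 10100000 and 00100001, as shown. Function names are illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

static uint16_t equal_each(const char *s1, const char *s2, size_t n) {
    assert(n <= 16);
    uint16_t mask = 0;
    for (size_t i = 0; i < n; i++)          /* the diagonal: same position only */
        if (s1[i] == s2[i]) mask |= (uint16_t)(1u << i);
    return mask;
}

static uint16_t equal_any(const char *set, size_t set_len, const char *s2, size_t n) {
    assert(n <= 16 && set_len <= 16);
    uint16_t mask = 0;
    for (size_t i = 0; i < n; i++)          /* OR down each column */
        for (size_t j = 0; j < set_len; j++)
            if (s2[i] == set[j]) { mask |= (uint16_t)(1u << i); break; }
    return mask;
}

/* `ranges` holds lo/hi pairs, e.g. "AZ09zz" means A-Z, 0-9, z-z. */
static uint16_t in_ranges(const char *ranges, size_t ranges_len, const char *s2, size_t n) {
    assert(n <= 16 && ranges_len <= 16 && ranges_len % 2 == 0);
    uint16_t mask = 0;
    for (size_t i = 0; i < n; i++)
        for (size_t j = 0; j < ranges_len; j += 2) {
            unsigned char c = (unsigned char)s2[i];
            if (c >= (unsigned char)ranges[j] && c <= (unsigned char)ranges[j + 1]) {
                mask |= (uint16_t)(1u << i);  /* GE and LE both true for some pair */
                break;
            }
        }
    return mask;
}

/* Bit i set if `needle` (length m) occurs in s2 starting at position i. */
static uint16_t equal_ordered(const char *needle, size_t m, const char *s2, size_t n) {
    assert(n <= 16 && m <= 16);
    uint16_t mask = 0;
    for (size_t i = 0; i + m <= n; i++) {   /* AND along each diagonal */
        size_t k = 0;
        while (k < m && s2[i + k] == needle[k]) k++;
        if (k == m) mask |= (uint16_t)(1u << i);
    }
    return mask;
}
```

For example, `equal_any("Examplen", 8, "atad tsT", 8)` sets bits 0 and 2 (value `0x5`), and `in_ranges("AZ09zz", 6, "taD tseT", 8)` sets bits 2 and 7 (value `0x84`).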

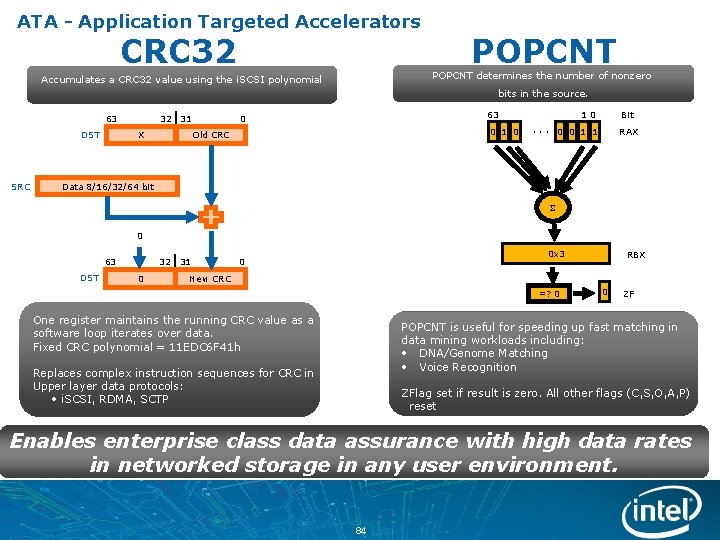

ATA – Application Targeted Accelerators
• CRC32
  – Accumulates a CRC32 value using the iSCSI polynomial
  – One register maintains the running CRC value as a software loop iterates over data
  – Fixed CRC polynomial = 11EDC6F41h
  – Replaces complex instruction sequences for CRC in upper layer data protocols: iSCSI, RDMA, SCTP
• POPCNT
  – Determines the number of nonzero bits in the source
  – ZF set if result is zero; all other flags (C, S, O, A, P) reset
  – Useful for speeding up fast matching in data mining workloads, including:
    – DNA/Genome Matching
    – Voice Recognition

Enables enterprise class data assurance with high data rates in networked storage in any user environment.
(Figure: CRC32 combines the old CRC in DST with 8/16/32/64-bit data from SRC to form the new CRC; POPCNT writes the count of set bits in SRC to DST and sets ZF if it is zero)
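As an illustration of the matching use case, comparing two bit vectors often comes down to a Hamming distance. XOR marks the differing bits and a population count tallies them. Choosing Hamming distance as the metric is an assumption made for illustration. GCC and Clang typically turn `__builtin_popcountll` into a single POPCNT when compiled with `-mpopcnt` or `-msse4.2`, and the loop fallback covers other compilers. The ZF behavior is not visible from C.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

static unsigned popcount64(uint64_t x) {
#if defined(__GNUC__) || defined(__clang__)
    return (unsigned)__builtin_popcountll(x);
#else
    unsigned n = 0;
    while (x) { x &= x - 1; n++; }   /* clear the lowest set bit each pass */
    return n;
#endif
}

/* Number of bit positions where two vectors of `words` 64-bit words differ. */
static unsigned long hamming(const uint64_t *a, const uint64_t *b, size_t words) {
    assert(a != NULL && b != NULL);
    unsigned long d = 0;
    for (size_t i = 0; i < words; i++) d += popcount64(a[i] ^ b[i]);
    return d;
}
```

With POPCNT available, the inner loop costs one XOR, one POPCNT, and one add per 64 bits compared.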

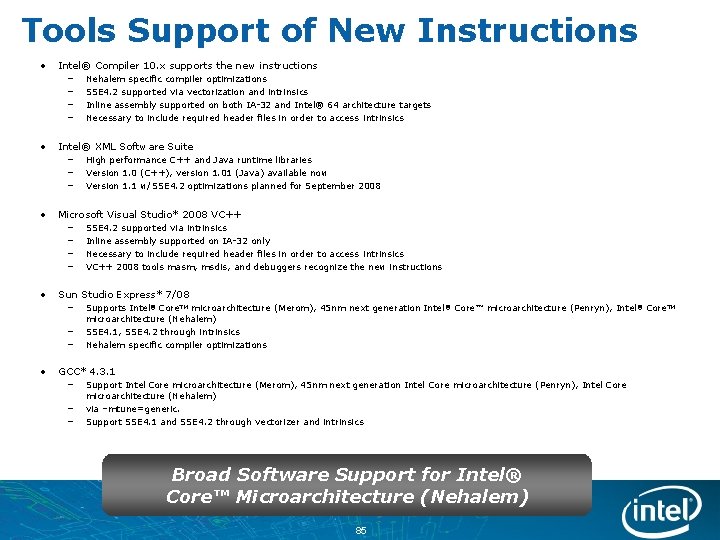

Tools Support of New Instructions
• Intel® Compiler 10.x supports the new instructions
  – Nehalem specific compiler optimizations
  – SSE4.2 supported via vectorization and intrinsics
  – Inline assembly supported on both IA-32 and Intel® 64 architecture targets
  – Necessary to include required header files in order to access intrinsics
• Intel® XML Software Suite
  – High performance C++ and Java runtime libraries
  – Version 1.0 (C++), version 1.01 (Java) available now
  – Version 1.1 w/SSE4.2 optimizations planned for September 2008
• Microsoft Visual Studio* 2008 VC++
  – SSE4.2 supported via intrinsics
  – Inline assembly supported on IA-32 only
  – Necessary to include required header files in order to access intrinsics
  – VC++ 2008 tools masm, msdis, and debuggers recognize the new instructions
• Sun Studio Express* 7/08
  – Supports Intel® Core™ microarchitecture (Merom), 45 nm next generation Intel® Core™ microarchitecture (Penryn), Intel® Core™ microarchitecture (Nehalem)
  – SSE4.1, SSE4.2 through intrinsics
  – Nehalem specific compiler optimizations
• GCC* 4.3.1
  – Support Intel Core microarchitecture (Merom), 45 nm next generation Intel Core microarchitecture (Penryn), Intel Core microarchitecture (Nehalem) via -mtune=generic
  – Support SSE4.1 and SSE4.2 through vectorizer and intrinsics

Broad Software Support for Intel® Core™ Microarchitecture (Nehalem)
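Earlier processors lack SSE4.2 and POPCNT, so code that uses them through these tools usually checks CPUID at run time before taking the fast path. This is a sketch for GCC/Clang using `<cpuid.h>` and its `__get_cpuid` helper. The bit positions (CPUID leaf 1, ECX bit 20 for SSE4.2 and bit 23 for POPCNT) follow Intel's CPUID documentation. The struct and function names are illustrative.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#if defined(__x86_64__) || defined(__i386__)
#include <cpuid.h>
#endif

typedef struct {
    bool sse42;    /* CPUID.01H:ECX bit 20: SSE4.2 (STTNI, CRC32) */
    bool popcnt;   /* CPUID.01H:ECX bit 23: POPCNT */
} cpu_features;

/* Fills *out from CPUID leaf 1; reports no features on non-x86 targets. */
static void detect_features(cpu_features *out) {
    assert(out != NULL);
    out->sse42 = out->popcnt = false;
#if defined(__x86_64__) || defined(__i386__)
    unsigned eax, ebx, ecx, edx;
    if (__get_cpuid(1, &eax, &ebx, &ecx, &edx)) {
        out->sse42 = (ecx & (1u << 20)) != 0;
        out->popcnt = (ecx & (1u << 23)) != 0;
    }
#endif
}
```

GCC and Clang also offer `__builtin_cpu_supports("sse4.2")`, which performs the same check internally.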

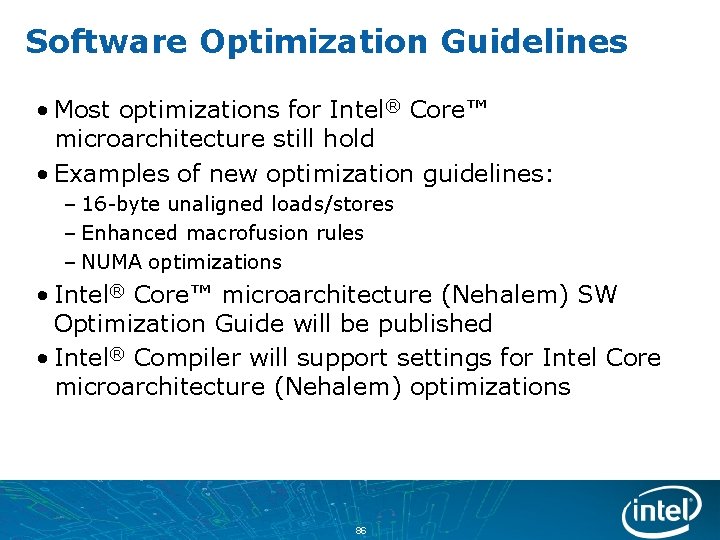

Software Optimization Guidelines
• Most optimizations for Intel® Core™ microarchitecture still hold
• Examples of new optimization guidelines:
  – 16-byte unaligned loads/stores
  – Enhanced macrofusion rules
  – NUMA optimizations
• Intel® Core™ microarchitecture (Nehalem) SW Optimization Guide will be published
• Intel® Compiler will support settings for Intel Core microarchitecture (Nehalem) optimizations


Example Code For strlen()

Current Code:

```asm
strlen  proc
        string  equ     [esp + 4]

        mov     ecx, string             ; ecx -> string
        test    ecx, 3                  ; test if string is aligned on 32 bits
        je      short main_loop

str_misaligned:
        ; simple byte loop until string is aligned
        mov     al, byte ptr [ecx]
        add     ecx, 1
        test    al, al
        je      short byte_3
        test    ecx, 3
        jne     short str_misaligned

        add     eax, dword ptr 0        ; 5 byte nop to align label below

        align   16                      ; should be redundant

main_loop:
        mov     eax, dword ptr [ecx]    ; read 4 bytes
        mov     edx, 7efefeffh
        add     edx, eax
        xor     eax, -1
        xor     eax, edx
        add     ecx, 4
        test    eax, 81010100h
        je      short main_loop
        ; found zero byte in the loop
        mov     eax, [ecx - 4]
        test    al, al                  ; is it byte 0
        je      short byte_0
        test    ah, ah                  ; is it byte 1
        je      short byte_1
        test    eax, 00ff0000h          ; is it byte 2
        je      short byte_2
        test    eax, 0ff000000h         ; is it byte 3
        je      short byte_3
        jmp     short main_loop         ; taken if bits 24-30 are clear and bit
                                        ; 31 is set

byte_3:
        lea     eax, [ecx - 1]
        mov     ecx, string
        sub     eax, ecx
        ret
byte_2:
        lea     eax, [ecx - 2]
        mov     ecx, string
        sub     eax, ecx
        ret
byte_1:
        lea     eax, [ecx - 3]
        mov     ecx, string
        sub     eax, ecx
        ret
byte_0:
        lea     eax, [ecx - 4]
        mov     ecx, string
        sub     eax, ecx
        ret

strlen  endp
        end
```

Minimum of 11 instructions; inner loop processes 4 bytes with 8 instructions

STTNI Version:

```c
/* MSVC, 32-bit x86 inline assembly. */
int sttni_strlen(const char * src)
{
    unsigned char eom_vals[32] = {1, 255, 0};   /* range [1, 255]: any non-NUL byte */
    __asm {
        mov       eax, src
        movdqu    xmm2, eom_vals
        xor       ecx, ecx
    topofloop:
        add       eax, ecx
        movdqu    xmm1, OWORD PTR [eax]
        pcmpistri xmm2, xmm1, imm8    ; imm8: mode byte, left unspecified on the slide
        jnz       topofloop           ; ZF clear: no NUL in these 16 bytes
    endofstring:
        add       eax, ecx
        sub       eax, src
        ; no RET here: returning from inside __asm would skip the compiler's
        ; epilogue; the length is left in EAX, which MSVC uses as the return value
    }
}
```

STTNI Code: Minimum of 10 instructions; a single inner loop processes 16 bytes with only 4 instructions
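The same idea can be expressed with compiler intrinsics rather than MSVC inline assembly. This sketch reuses the slide's `{1, 255}` range with negative polarity, so `_mm_cmpistri` returns the index of the first byte outside [1, 255], which is the terminating NUL (or 16 if the block has none). It is an illustration for GCC/Clang on x86, not Intel's published code. Unlike the slide's unaligned loop, it first steps byte by byte to a 16-byte boundary, so no aligned load can cross into an unmapped page. The aligned loads may still read a few bytes past the terminator, as optimized libc versions do, which tools such as AddressSanitizer can flag. The function names are illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>
#if (defined(__x86_64__) || defined(__i386__)) && (defined(__GNUC__) || defined(__clang__))
#include <nmmintrin.h>
#define HAVE_STTNI_SKETCH 1
#endif

#ifdef HAVE_STTNI_SKETCH
__attribute__((target("sse4.2")))
static size_t strlen_sse42(const char *s) {
    const char *p = s;
    while (((uintptr_t)p & 15u) != 0) {        /* byte loop until 16-byte aligned */
        if (*p == '\0') return (size_t)(p - s);
        p++;
    }
    /* Range pair {1, 0xFF}; the trailing zeros end the implicit-length operand. */
    const __m128i range = _mm_setr_epi8(1, -1, 0, 0, 0, 0, 0, 0,
                                        0, 0, 0, 0, 0, 0, 0, 0);
    for (;;) {
        __m128i chunk = _mm_load_si128((const __m128i *)p);
        int idx = _mm_cmpistri(range, chunk,
                               _SIDD_UBYTE_OPS | _SIDD_CMP_RANGES | _SIDD_NEGATIVE_POLARITY);
        if (idx < 16) return (size_t)(p - s) + (size_t)idx;
        p += 16;
    }
}
#endif

/* Takes the SSE4.2 path only when the running CPU supports it. */
static size_t sttni_strlen_portable(const char *s) {
    assert(s != NULL);
#ifdef HAVE_STTNI_SKETCH
    if (__builtin_cpu_supports("sse4.2")) return strlen_sse42(s);
#endif
    return strlen(s);
}
```

The inner loop is one load, one PCMPISTRI, and a compare-and-branch per 16 bytes, mirroring the 4-instruction loop on the slide.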

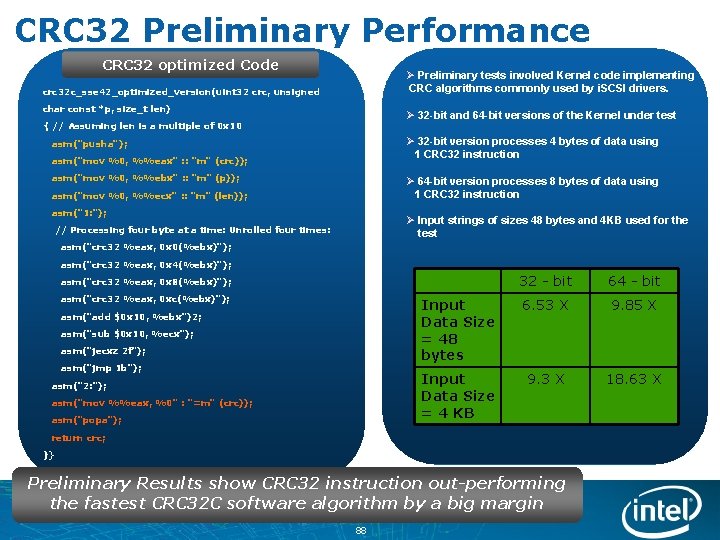

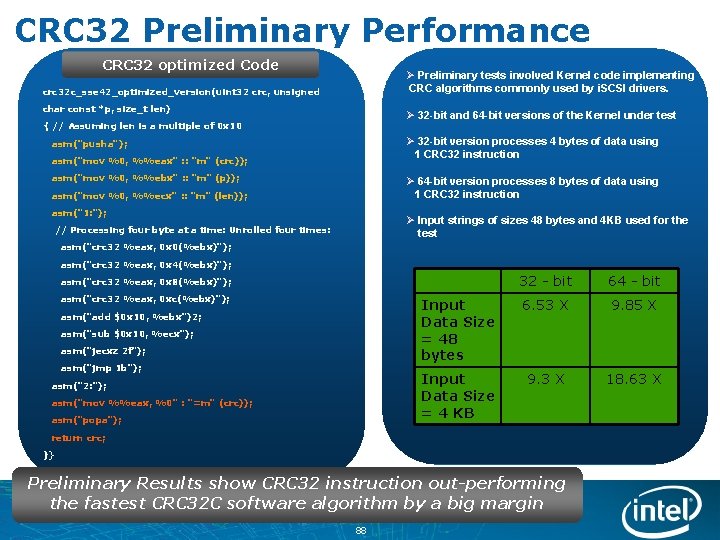

CRC32 Preliminary Performance
• Preliminary tests involved kernel code implementing CRC algorithms commonly used by iSCSI drivers
• 32-bit and 64-bit versions of the kernel under test
  – 32-bit version processes 4 bytes of data using 1 CRC32 instruction
  – 64-bit version processes 8 bytes of data using 1 CRC32 instruction
• Input strings of sizes 48 bytes and 4 KB used for the test

| | 32-bit | 64-bit |
|---|---|---|
| Input Data Size = 48 bytes | 6.53X | 9.85X |
| Input Data Size = 4 KB | 9.3X | 18.63X |

CRC32 optimized code:

```c
#include <stddef.h>
#include <stdint.h>

/* Reworked from the slide: a single asm statement with explicit operands
   (separate asm() calls with no clobbers can be broken by the compiler),
   and AT&T operand order for crc32 (source first, destination second). */
uint32_t crc32c_sse42_optimized_version(uint32_t crc, unsigned char const *p, size_t len)
{
    /* Assuming len is a non-zero multiple of 0x10 */
    __asm__ volatile(
        "1:\n\t"
        /* Processing four bytes at a time, unrolled four times */
        "crc32l 0x0(%1), %0\n\t"
        "crc32l 0x4(%1), %0\n\t"
        "crc32l 0x8(%1), %0\n\t"
        "crc32l 0xc(%1), %0\n\t"
        "add $0x10, %1\n\t"
        "sub $0x10, %2\n\t"
        "jnz 1b\n\t"
        : "+r"(crc), "+r"(p), "+r"(len)
        :
        : "cc", "memory");
    return crc;
}
```

Preliminary results show the CRC32 instruction outperforming the fastest CRC32C software algorithm by a big margin
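To check an implementation like the one above, it helps to have a bit-at-a-time software CRC32C alongside a hardware path. The sketch below uses 0x82F63B78, the bit-reflected form of the slide's polynomial 11EDC6F41h. It applies the usual CRC32C convention of starting from all ones and inverting the result, which the CRC32 instruction itself leaves to the caller. The hardware path uses the SSE4.2 intrinsic `_mm_crc32_u8` one byte at a time for clarity, not speed. Names are illustrative.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#if (defined(__x86_64__) || defined(__i386__)) && (defined(__GNUC__) || defined(__clang__))
#include <nmmintrin.h>
#define HAVE_CRC32_INSN 1
#endif

/* Bit-at-a-time CRC32C (Castagnoli), reflected polynomial 0x82F63B78. */
static uint32_t crc32c_sw(uint32_t crc, const unsigned char *p, size_t len) {
    assert(p != NULL || len == 0);
    for (size_t i = 0; i < len; i++) {
        crc ^= p[i];
        for (int b = 0; b < 8; b++)
            crc = (crc >> 1) ^ (0x82F63B78u & (0u - (crc & 1u)));
    }
    return crc;
}

#ifdef HAVE_CRC32_INSN
/* One CRC32 instruction per byte; a tuned version would feed whole
   words via _mm_crc32_u32 or _mm_crc32_u64. */
__attribute__((target("sse4.2")))
static uint32_t crc32c_hw(uint32_t crc, const unsigned char *p, size_t len) {
    for (size_t i = 0; i < len; i++) crc = _mm_crc32_u8(crc, p[i]);
    return crc;
}
#endif

/* Standard CRC32C: start from ~0, invert the final value. */
static uint32_t crc32c(const unsigned char *p, size_t len) {
    uint32_t crc = 0xFFFFFFFFu;
#ifdef HAVE_CRC32_INSN
    if (__builtin_cpu_supports("sse4.2")) return ~crc32c_hw(crc, p, len);
#endif
    return ~crc32c_sw(crc, p, len);
}
```

Both paths give the standard CRC32C check value `0xE3069283` for the ASCII string "123456789". The software loop's inner eight iterations per byte are the kind of work the single CRC32 instruction replaces.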

Idle Power Matters
• Data center operating costs¹
  – 41M physical servers by 2010, average utilization < 10%
  – $0.50 spent on power and cooling for every $1 spent on server hardware
• Regulatory requirements affect all segments
  – ENERGY STAR* and related requirements
• Environmental responsibility

Idle power consumption not just a mobile concern

1. IDC's Datacenter Trends Survey, January 2007

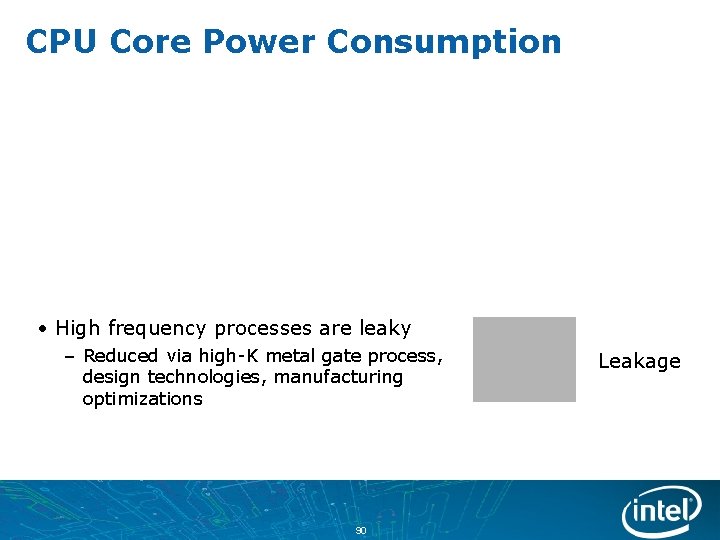

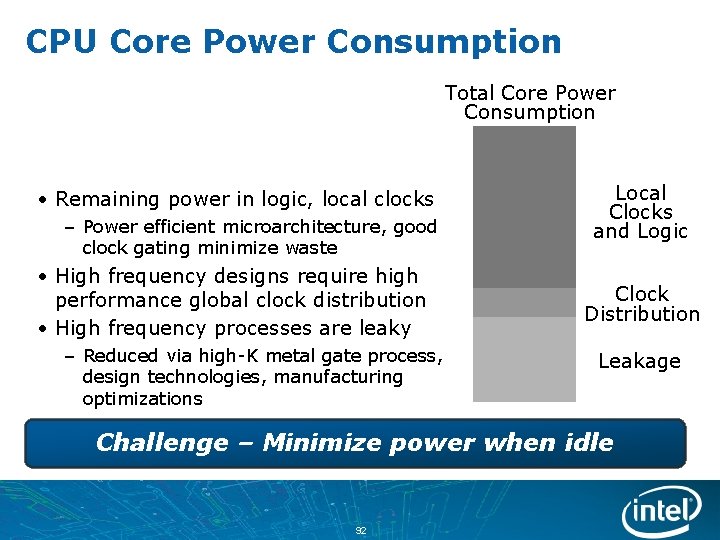

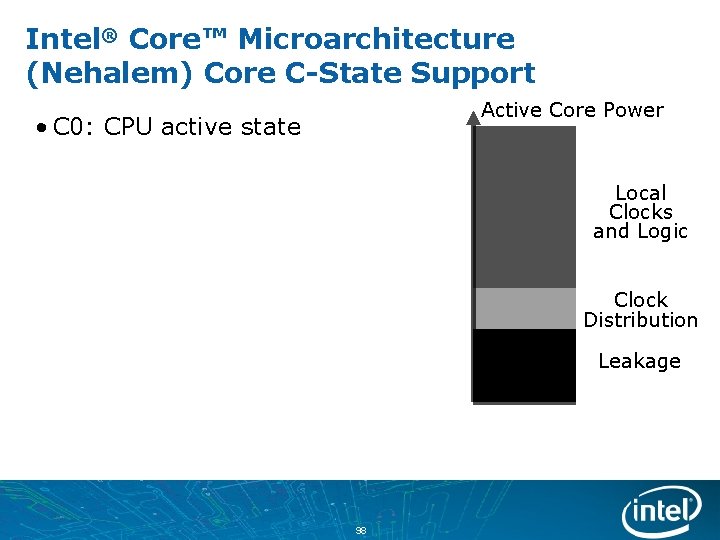

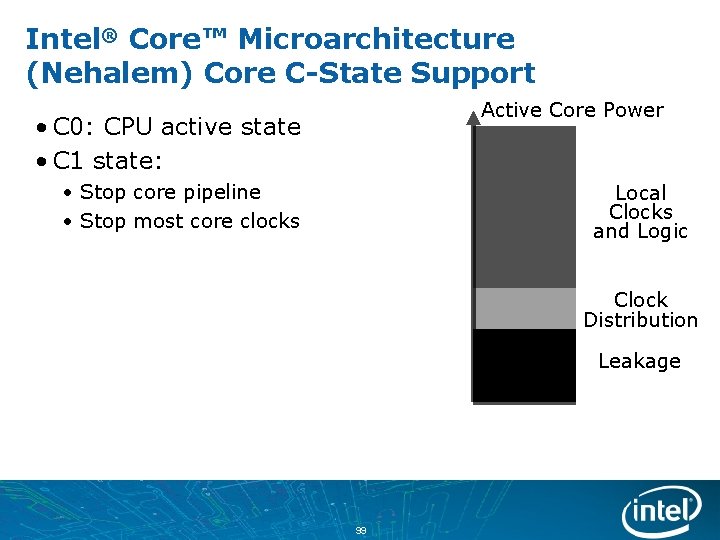

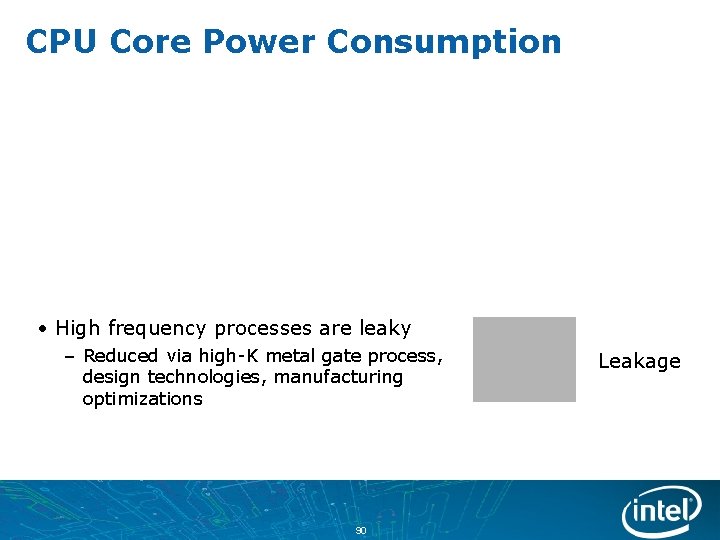

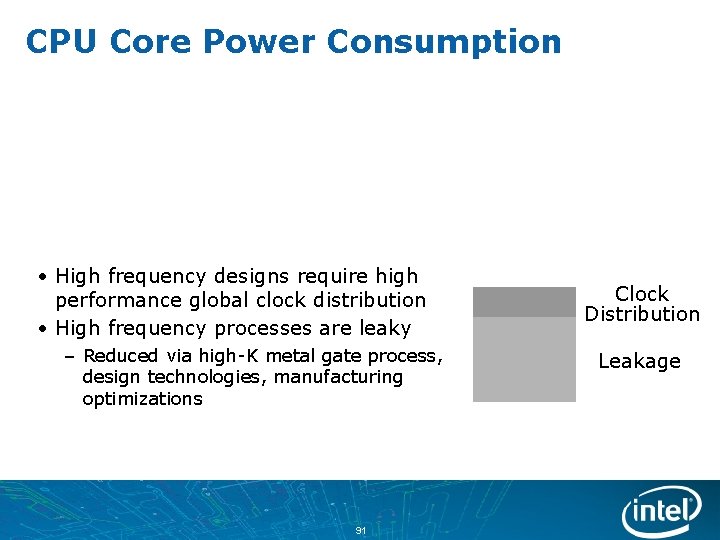

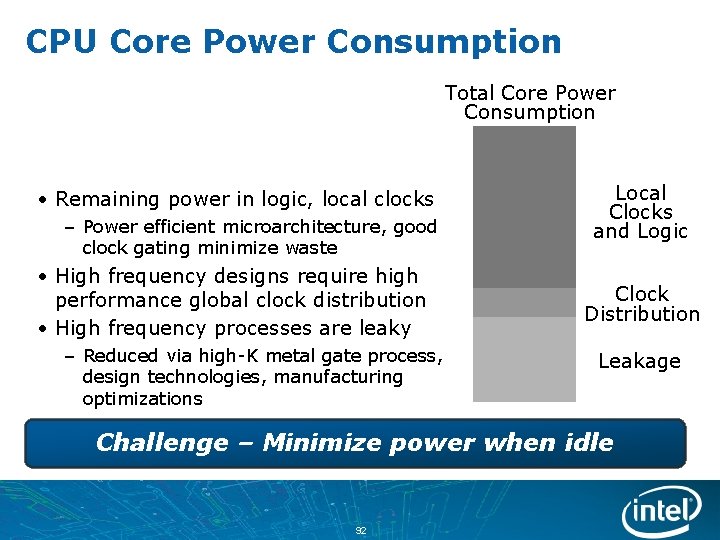

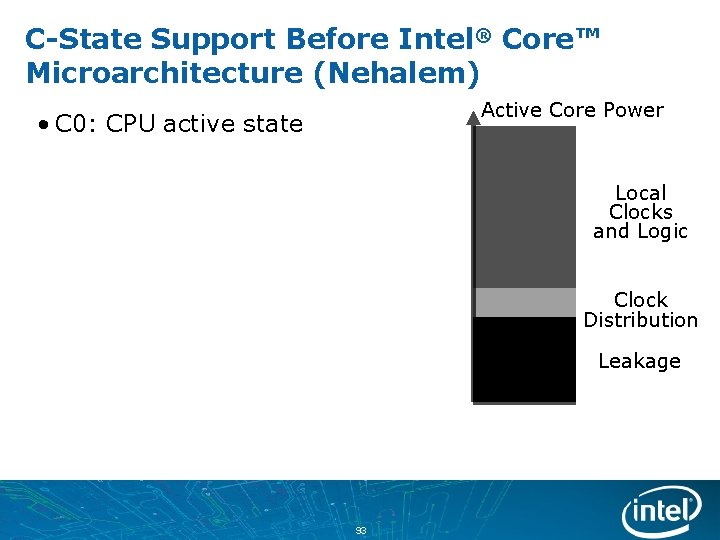

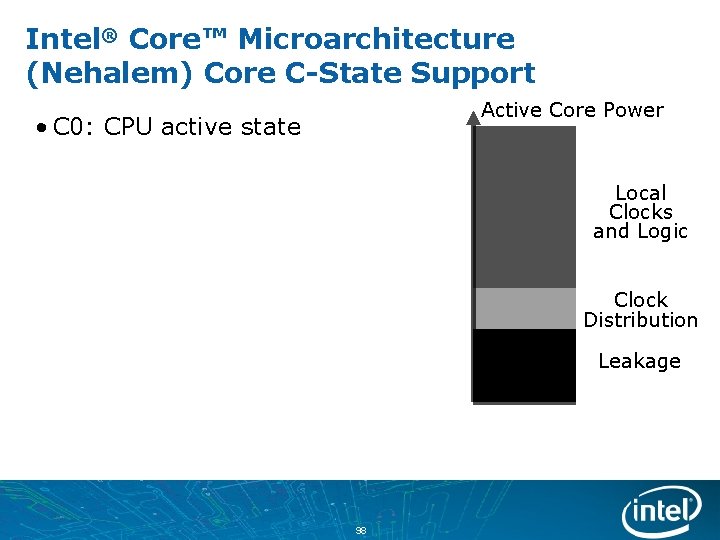

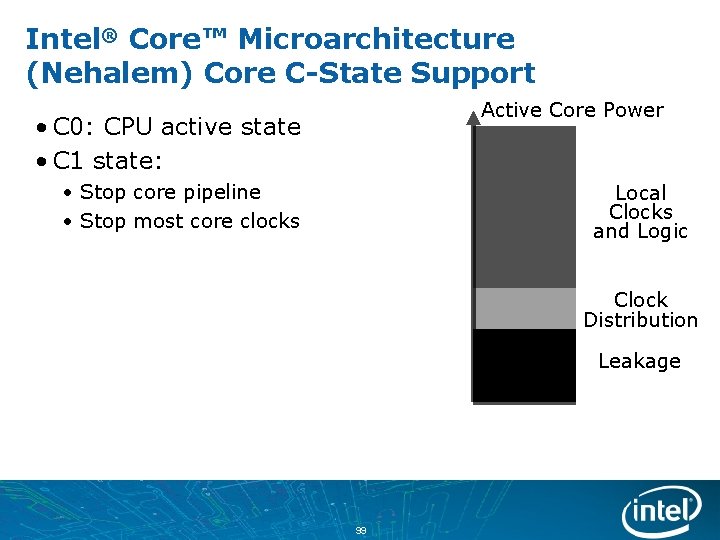

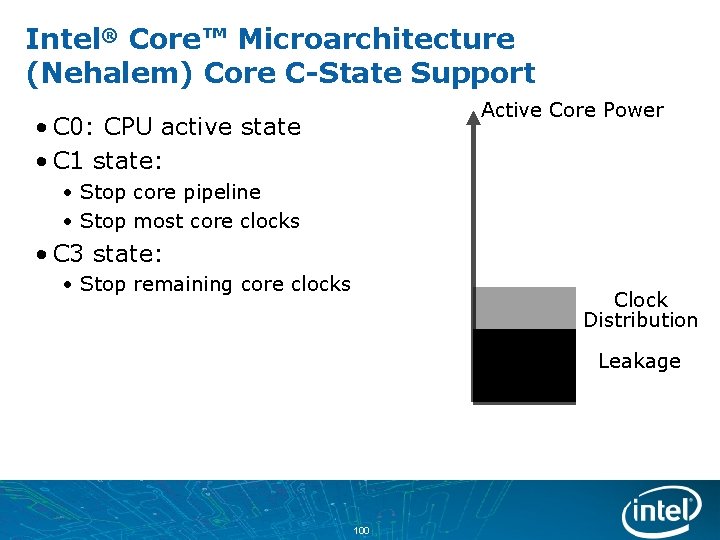

CPU Core Power Consumption
• High frequency processes are leaky
  – Reduced via high-K metal gate process, design technologies, manufacturing optimizations
(Figure: core power bar showing Leakage)

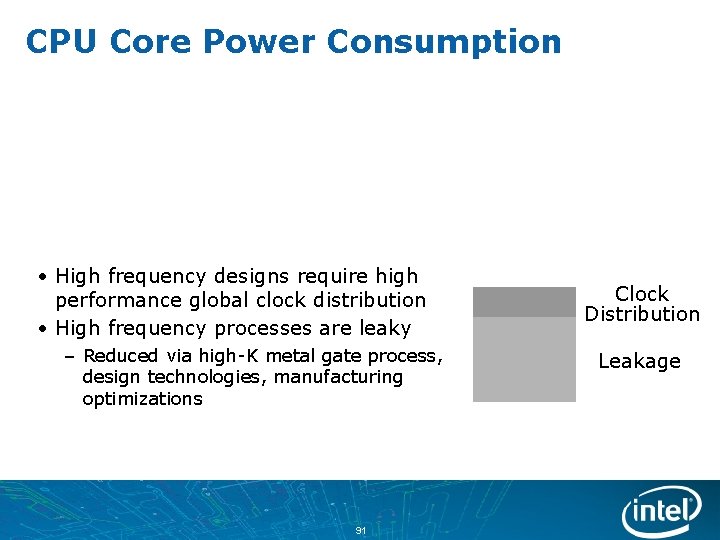

CPU Core Power Consumption
• High frequency designs require high performance global clock distribution
• High frequency processes are leaky
  – Reduced via high-K metal gate process, design technologies, manufacturing optimizations
(Figure: core power bar showing Clock Distribution and Leakage)

CPU Core Power Consumption
• Remaining power in logic, local clocks
  – Power efficient microarchitecture, good clock gating minimize waste
• High frequency designs require high performance global clock distribution
• High frequency processes are leaky
  – Reduced via high-K metal gate process, design technologies, manufacturing optimizations
(Figure: Total Core Power Consumption made up of Local Clocks and Logic, Clock Distribution, and Leakage)

Challenge – Minimize power when idle

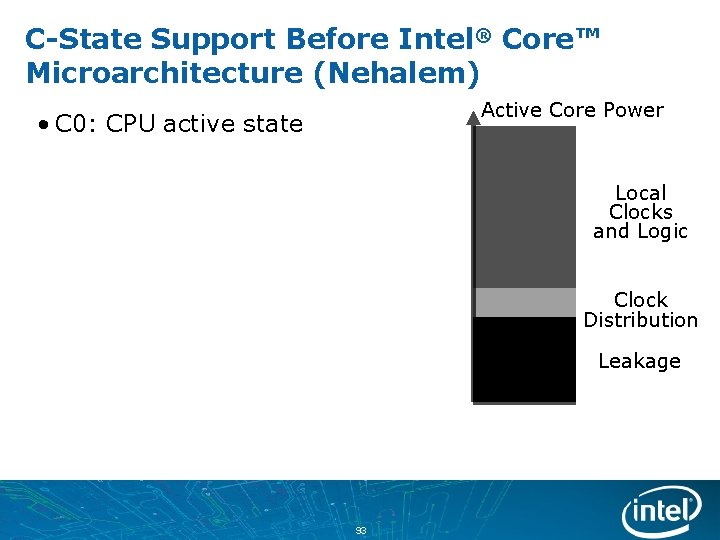

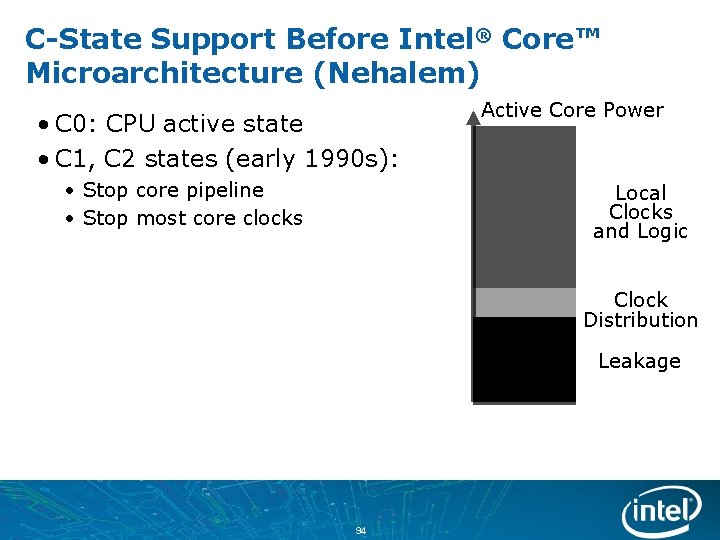

C-State Support Before Intel® Core™ Microarchitecture (Nehalem)
• C0: CPU active state
(Figure: Active Core Power made up of Local Clocks and Logic, Clock Distribution, and Leakage)

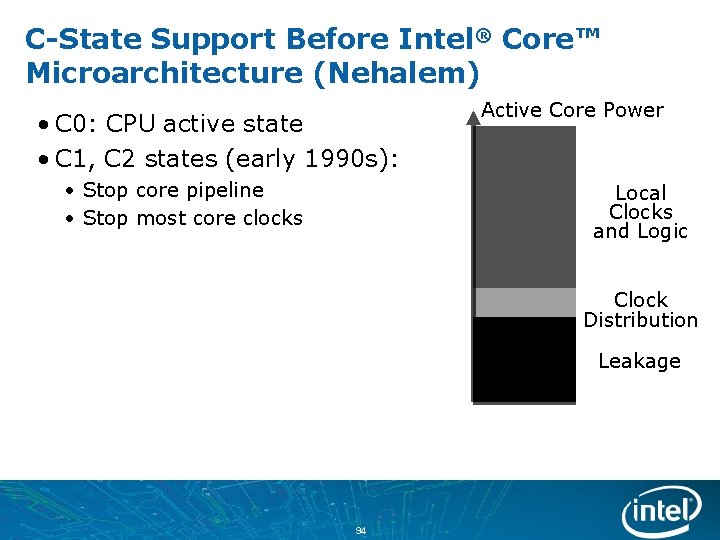

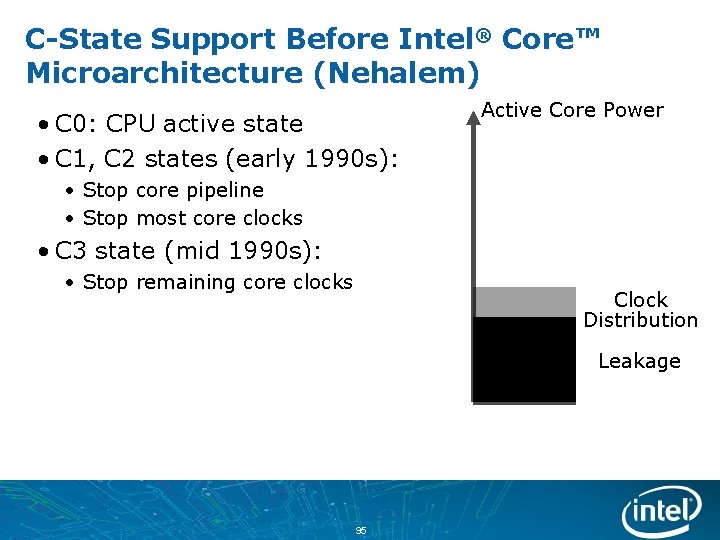

C-State Support Before Intel® Core™ Microarchitecture (Nehalem)
• C0: CPU active state
• C1, C2 states (early 1990s):
  – Stop core pipeline
  – Stop most core clocks
(Figure: Active Core Power made up of Local Clocks and Logic, Clock Distribution, and Leakage)

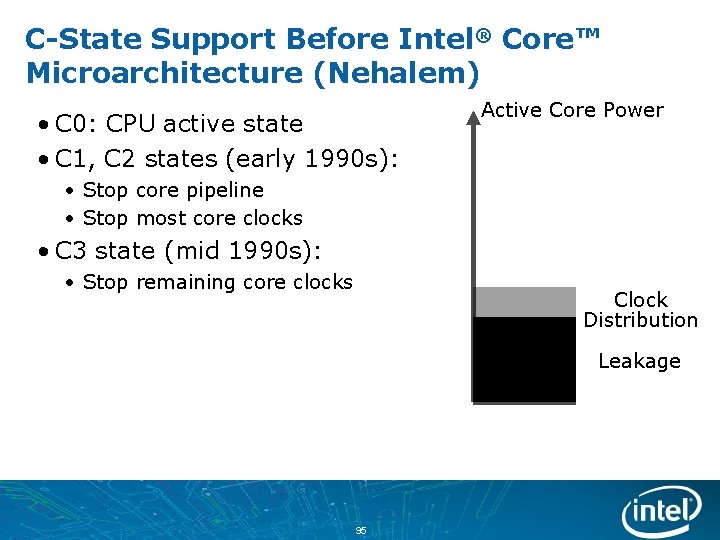

C-State Support Before Intel® Core™ Microarchitecture (Nehalem)
• C0: CPU active state
• C1, C2 states (early 1990s):
  – Stop core pipeline
  – Stop most core clocks
• C3 state (mid 1990s):
  – Stop remaining core clocks
(Figure: remaining core power: Clock Distribution and Leakage)

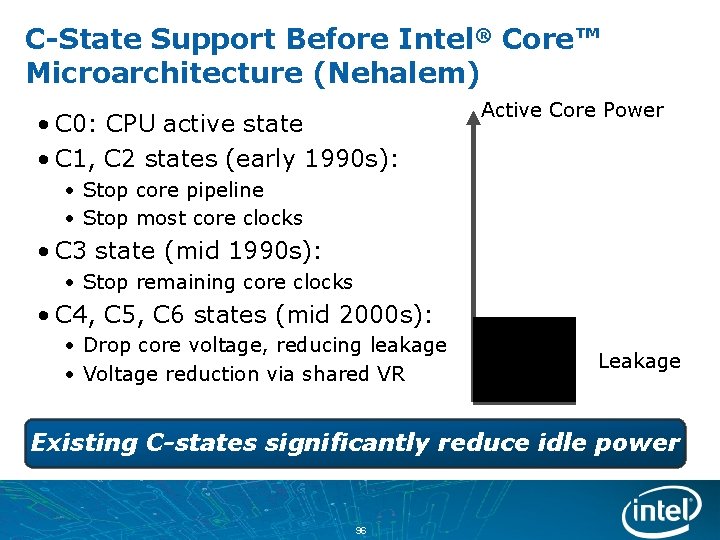

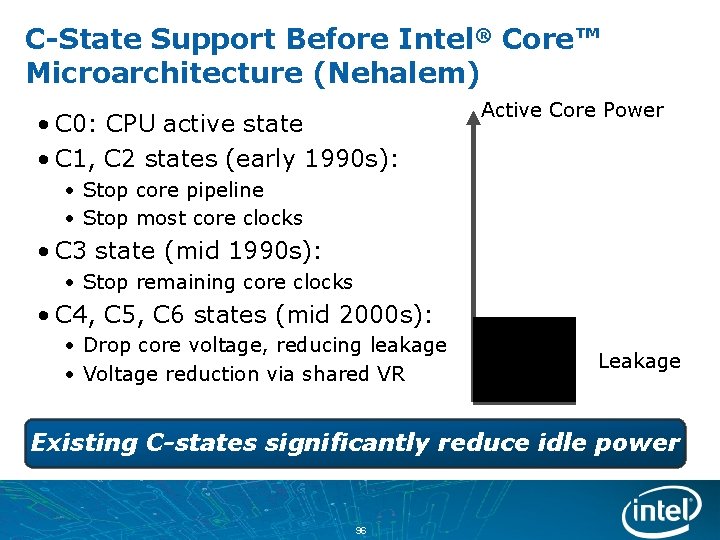

C-State Support Before Intel® Core™ Microarchitecture (Nehalem)
• C0: CPU active state
• C1, C2 states (early 1990s):
  – Stop core pipeline
  – Stop most core clocks
• C3 state (mid 1990s):
  – Stop remaining core clocks
• C4, C5, C6 states (mid 2000s):
  – Drop core voltage, reducing leakage
  – Voltage reduction via shared VR
(Figure: remaining core power: Leakage)

Existing C-states significantly reduce idle power

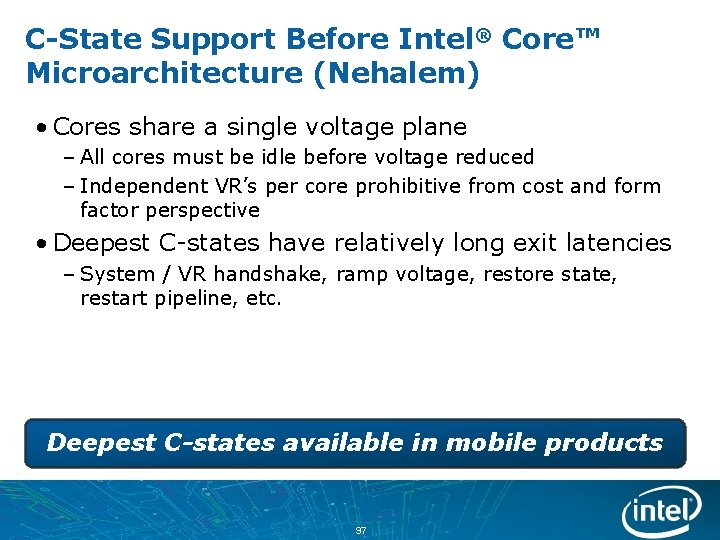

C-State Support Before Intel® Core™ Microarchitecture (Nehalem)
• Cores share a single voltage plane
  – All cores must be idle before voltage is reduced
  – Independent VRs per core prohibitive from cost and form factor perspective
• Deepest C-states have relatively long exit latencies
  – System / VR handshake, ramp voltage, restore state, restart pipeline, etc.

Deepest C-states available in mobile products

Intel® Core™ Microarchitecture (Nehalem) Core C-State Support
• C0: CPU active state
(Figure: Active Core Power made up of Local Clocks and Logic, Clock Distribution, and Leakage)

Intel® Core™ Microarchitecture (Nehalem) Core C-State Support
• C0: CPU active state
• C1 state:
  – Stop core pipeline
  – Stop most core clocks
(Figure: Active Core Power made up of Local Clocks and Logic, Clock Distribution, and Leakage)

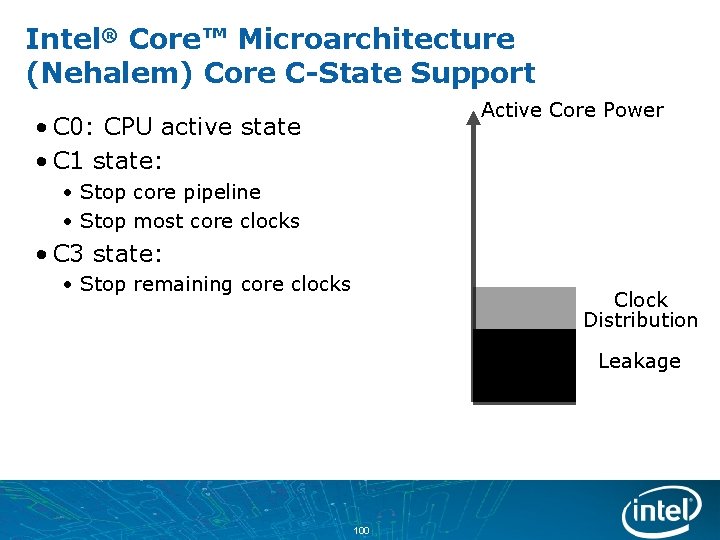

Intel® Core™ Microarchitecture (Nehalem) Core C-State Support
• C0: CPU active state
• C1 state:
  – Stop core pipeline
  – Stop most core clocks
• C3 state:
  – Stop remaining core clocks
(Figure: remaining core power: Clock Distribution and Leakage)

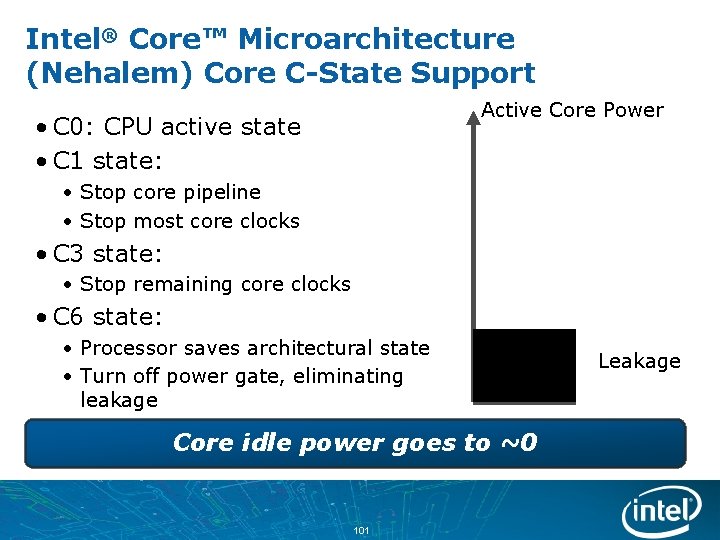

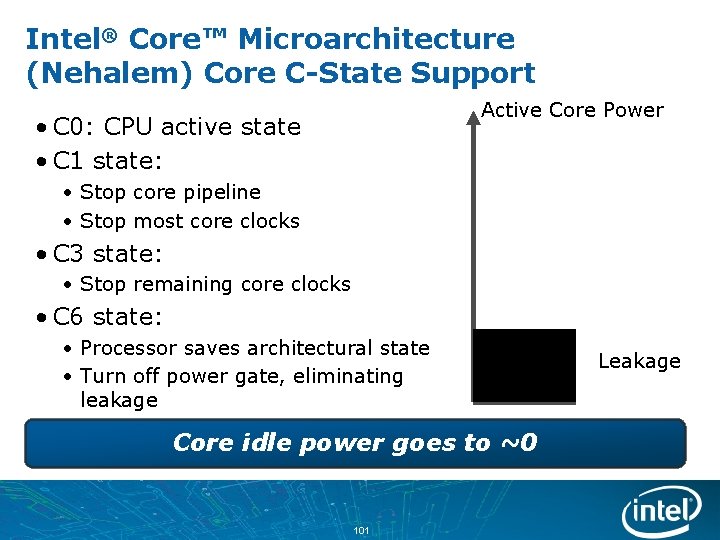

Intel® Core™ Microarchitecture (Nehalem) Core C-State Support
• C0: CPU active state
• C1 state:
  – Stop core pipeline
  – Stop most core clocks
• C3 state:
  – Stop remaining core clocks
• C6 state:
  – Processor saves architectural state
  – Turn off power gate, eliminating leakage
(Figure: Active Core Power bar showing Leakage)

Core idle power goes to ~0

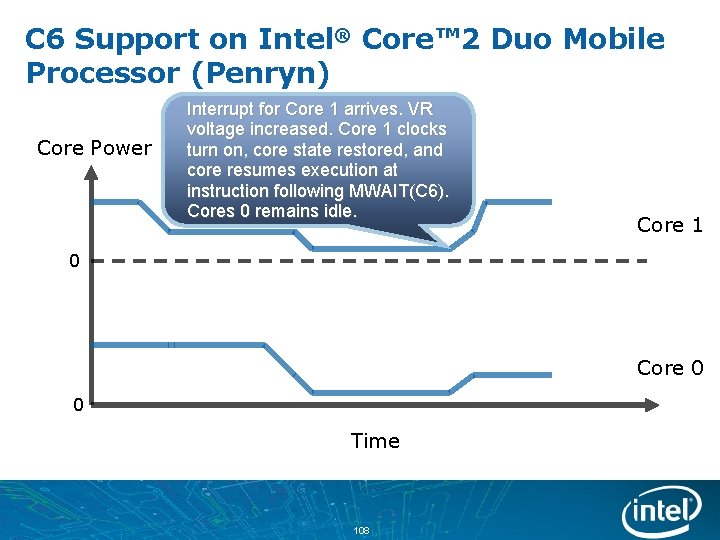

C6 Support on Intel® Core™ 2 Duo Mobile Processor (Penryn)

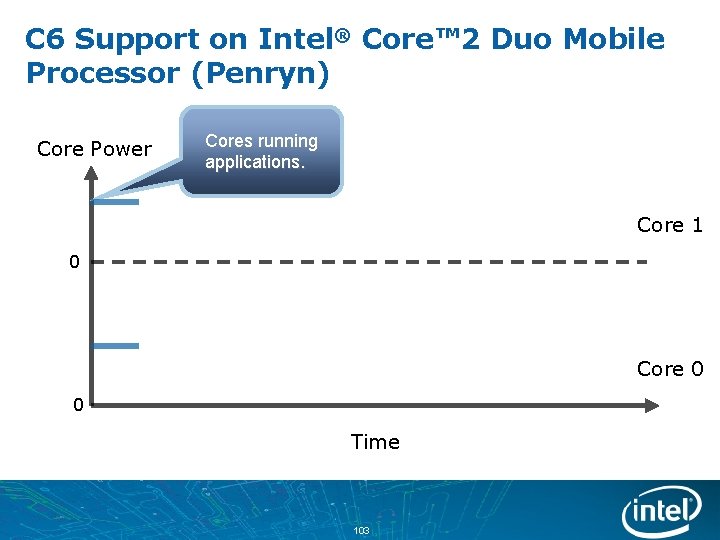

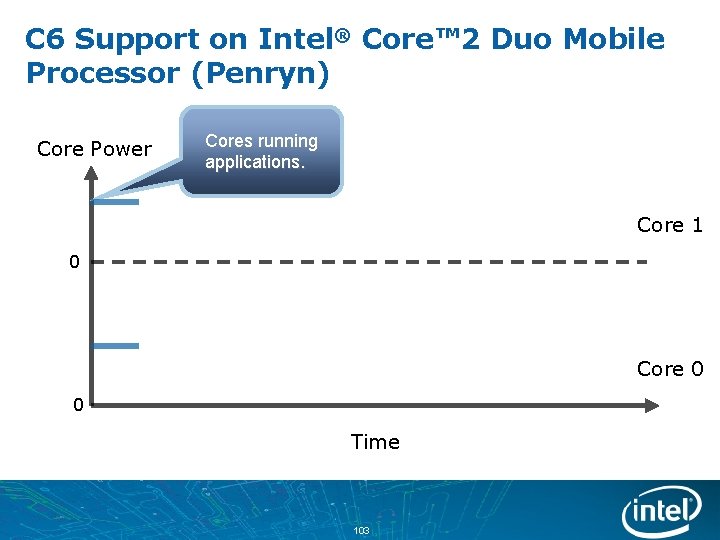

C6 Support on Intel® Core™ 2 Duo Mobile Processor (Penryn)
Cores running applications.
(Chart: core power of Core 0 and Core 1 over time)

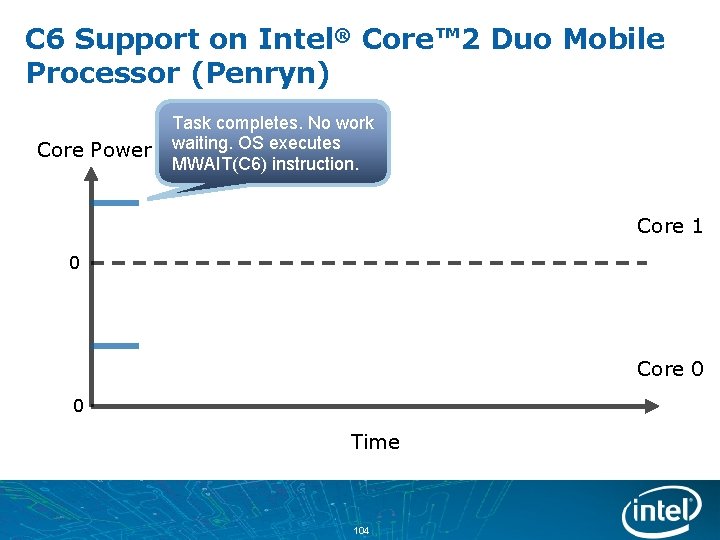

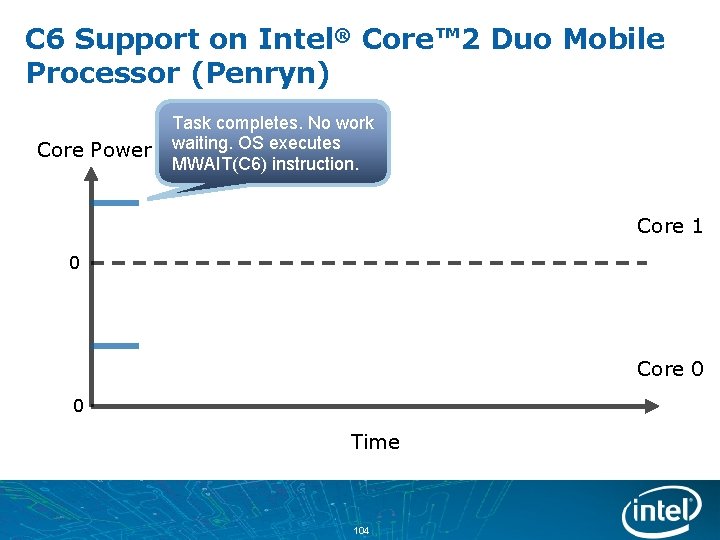

C6 Support on Intel® Core™ 2 Duo Mobile Processor (Penryn)
Task completes. No work waiting. OS executes MWAIT(C6) instruction.

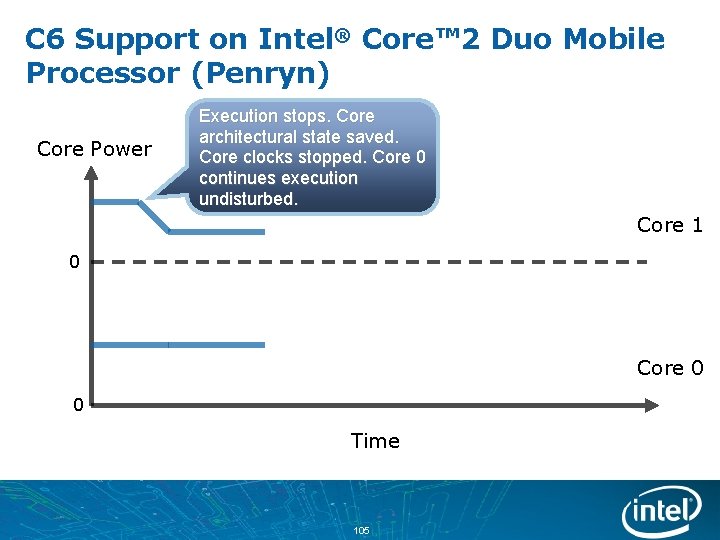

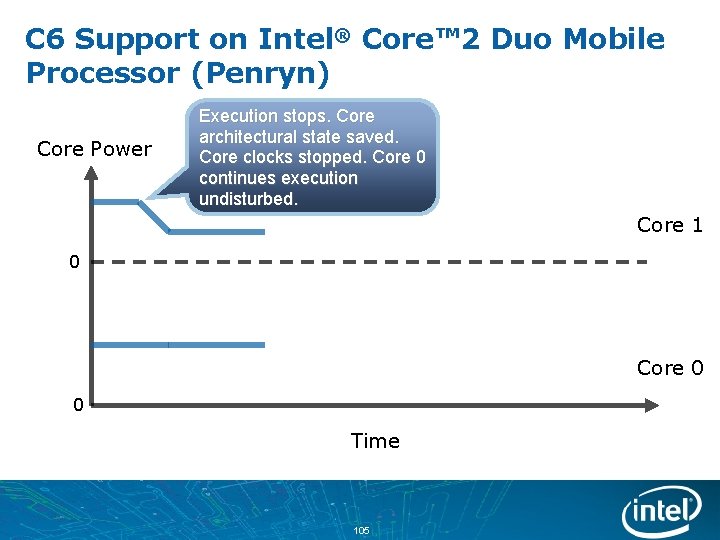

C6 Support on Intel® Core™ 2 Duo Mobile Processor (Penryn)
Execution stops. Core architectural state saved. Core clocks stopped. Core 0 continues execution undisturbed.

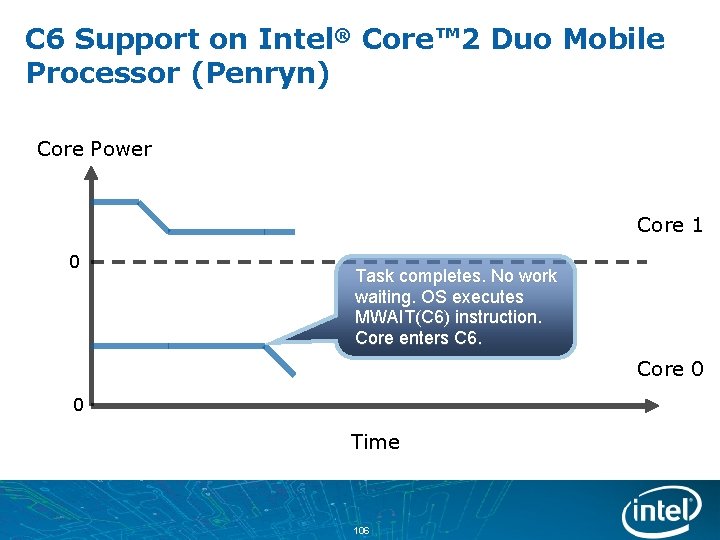

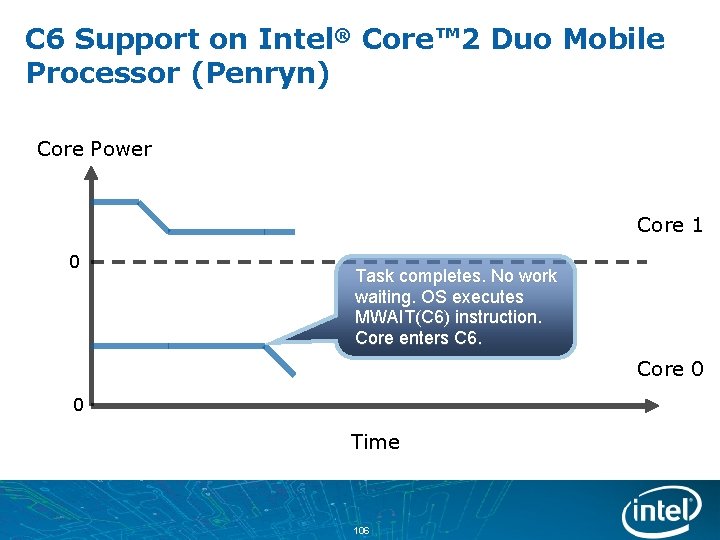

C6 Support on Intel® Core™ 2 Duo Mobile Processor (Penryn)
Task completes. No work waiting. OS executes MWAIT(C6) instruction. Core enters C6.

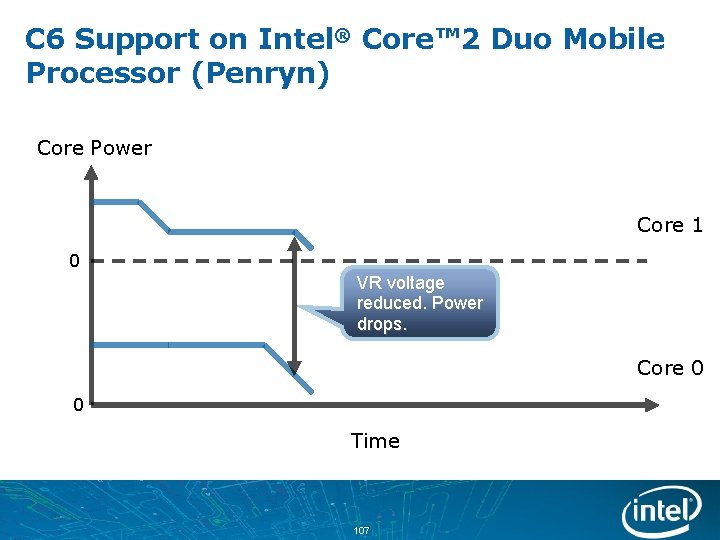

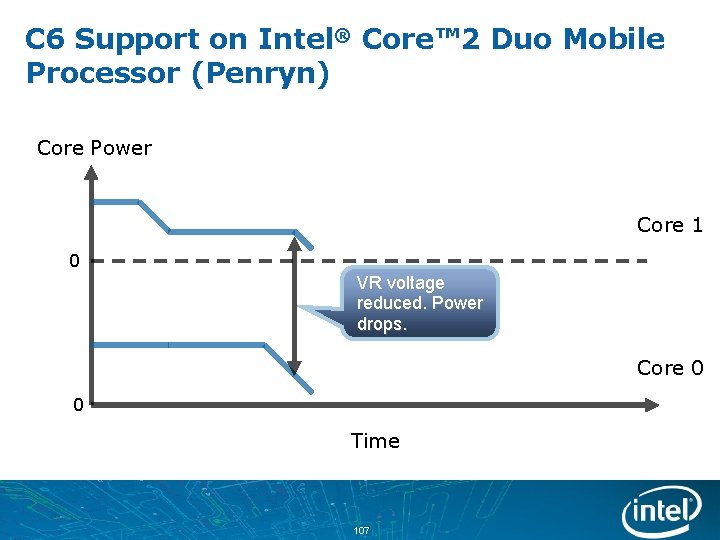

C6 Support on Intel® Core™ 2 Duo Mobile Processor (Penryn)
VR voltage reduced. Power drops.

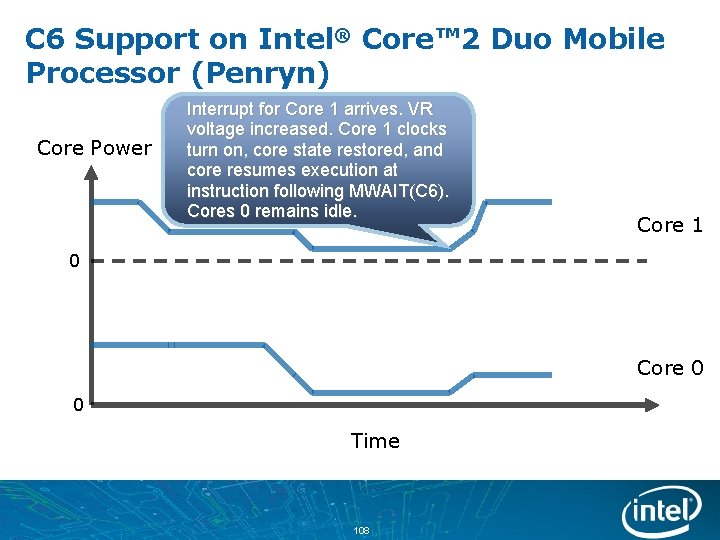

C6 Support on Intel® Core™ 2 Duo Mobile Processor (Penryn)
Interrupt for Core 1 arrives. VR voltage increased. Core 1 clocks turn on, core state restored, and core resumes execution at instruction following MWAIT(C6). Core 0 remains idle.

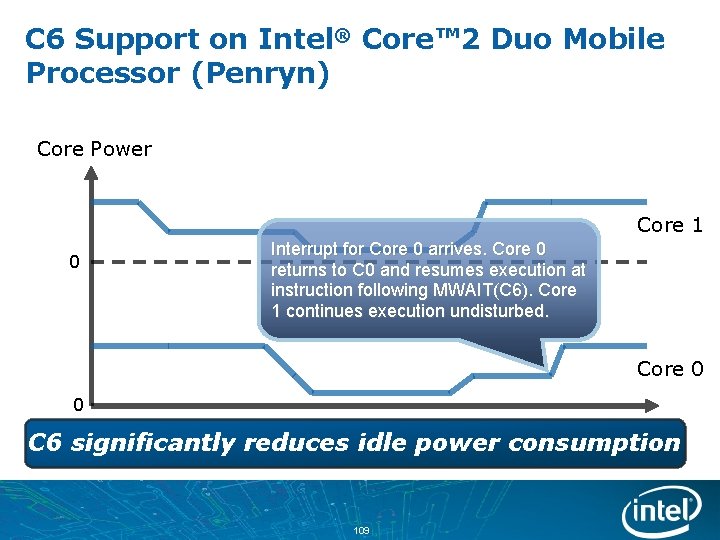

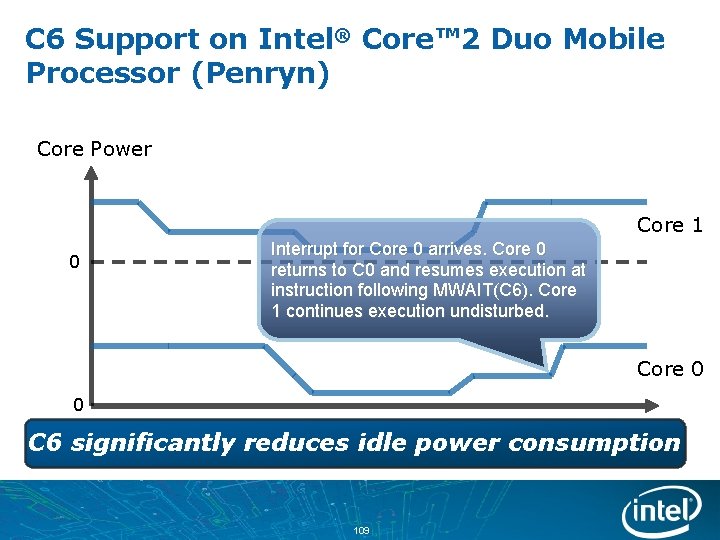

C6 Support on Intel® Core™ 2 Duo Mobile Processor (Penryn)
Interrupt for Core 0 arrives. Core 0 returns to C0 and resumes execution at instruction following MWAIT(C6). Core 1 continues execution undisturbed.

C6 significantly reduces idle power consumption

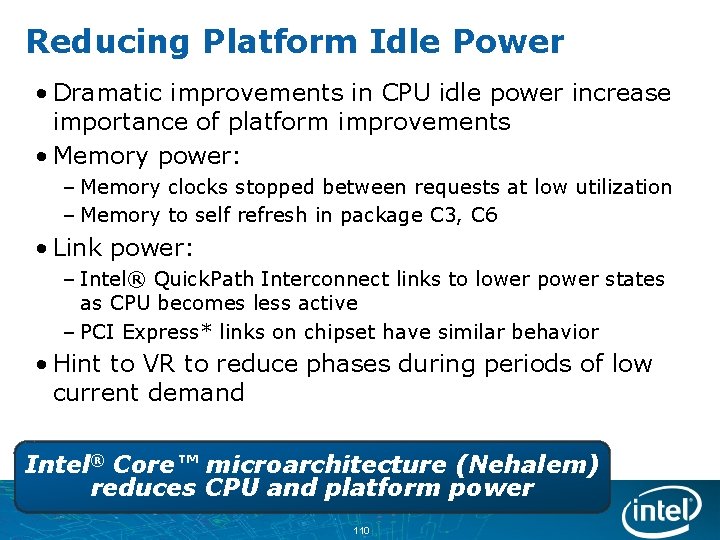

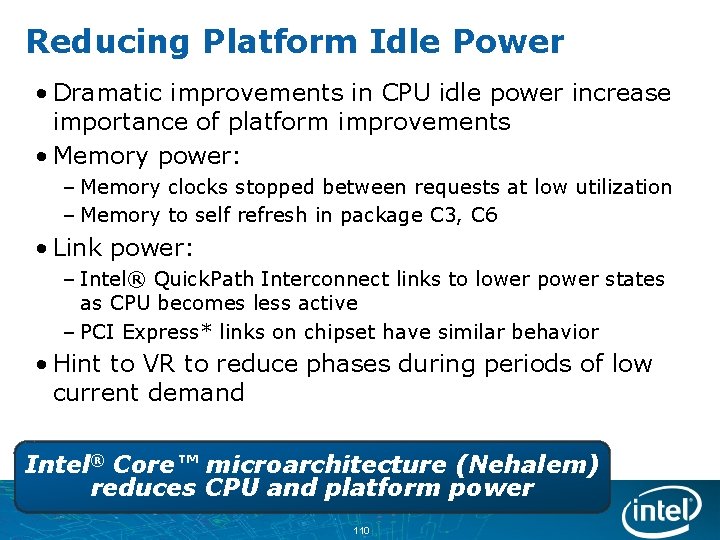

Reducing Platform Idle Power
• Dramatic improvements in CPU idle power increase the importance of platform improvements
• Memory power:
  – Memory clocks stopped between requests at low utilization
  – Memory to self refresh in package C3, C6
• Link power:
  – Intel® QuickPath Interconnect links to lower power states as CPU becomes less active
  – PCI Express* links on chipset have similar behavior
• Hint to VR to reduce phases during periods of low current demand

Intel® Core™ microarchitecture (Nehalem) reduces CPU and platform power

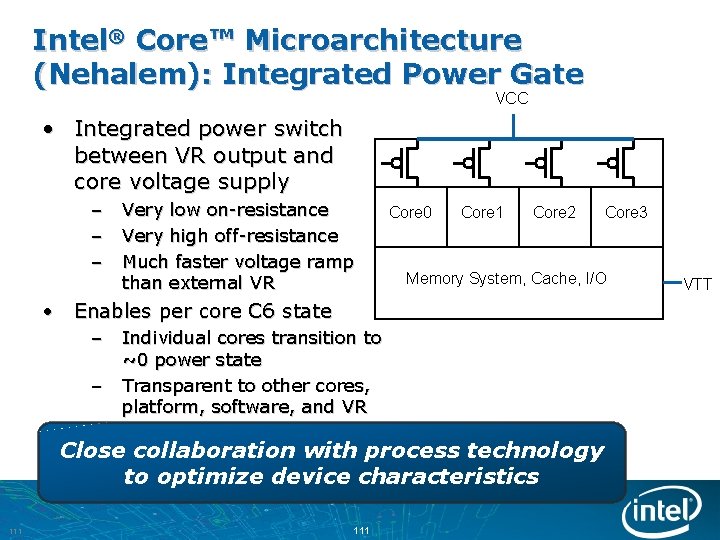

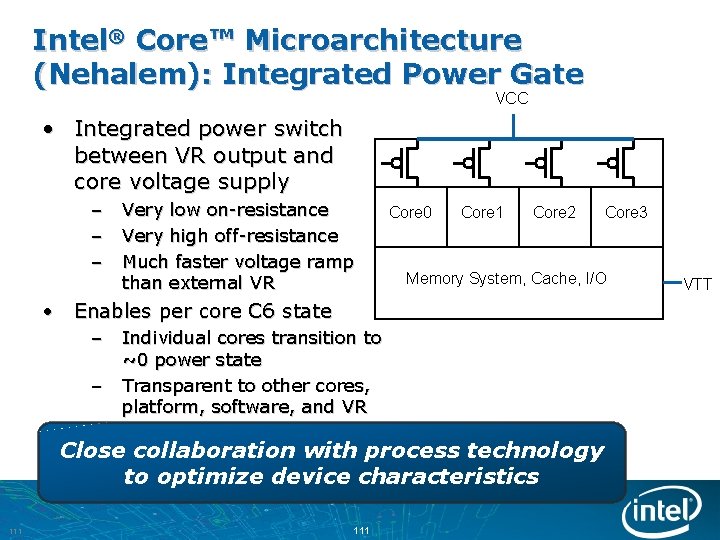

Intel® Core™ Microarchitecture (Nehalem): Integrated Power Gate
• Integrated power switch between VR output and core voltage supply
  – Very low on-resistance
  – Very high off-resistance
  – Much faster voltage ramp than external VR
• Enables per core C6 state
  – Individual cores transition to ~0 power state
  – Transparent to other cores, platform, software, and VR

Close collaboration with process technology to optimize device characteristics
(Figure: VCC supplies Core 0 to Core 3 through integrated power gates; Memory System, Cache, and I/O on VTT)

Agenda
• Intel® Core™ microarchitecture (Nehalem) power management overview
• Minimizing idle power consumption
• Performance when you need it

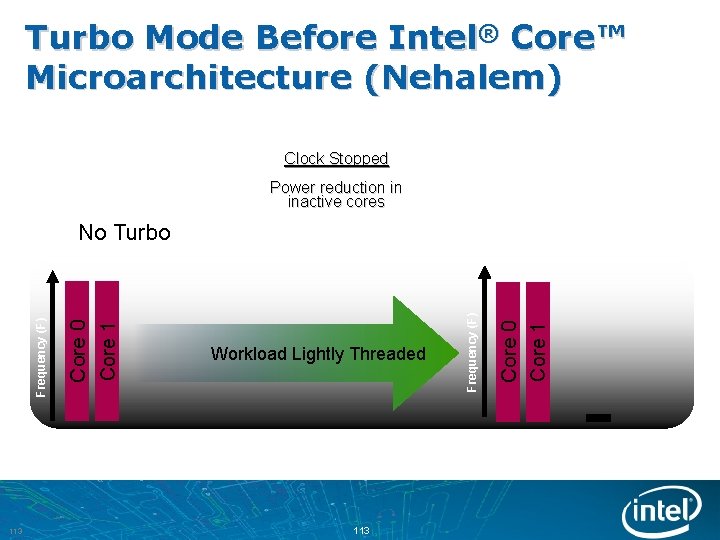

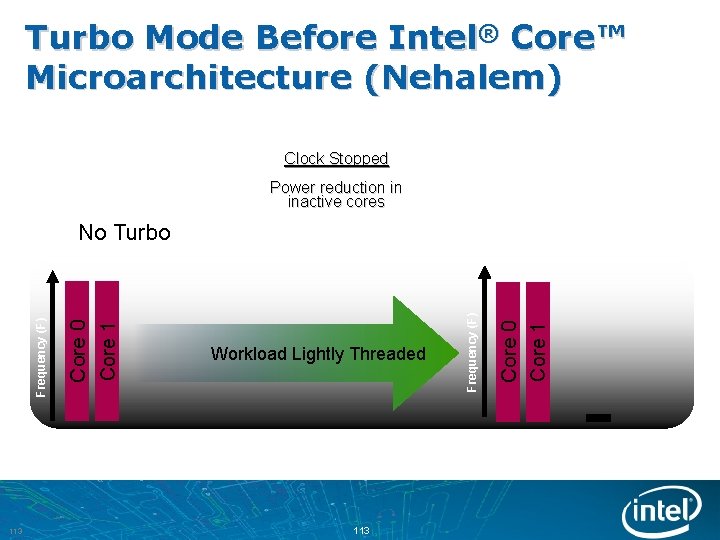

Turbo Mode Before Intel® Core™ Microarchitecture (Nehalem)
• Clock Stopped: power reduction in inactive cores
(Figure: frequency (F) of Core 0 and Core 1 for a lightly threaded workload, No Turbo)

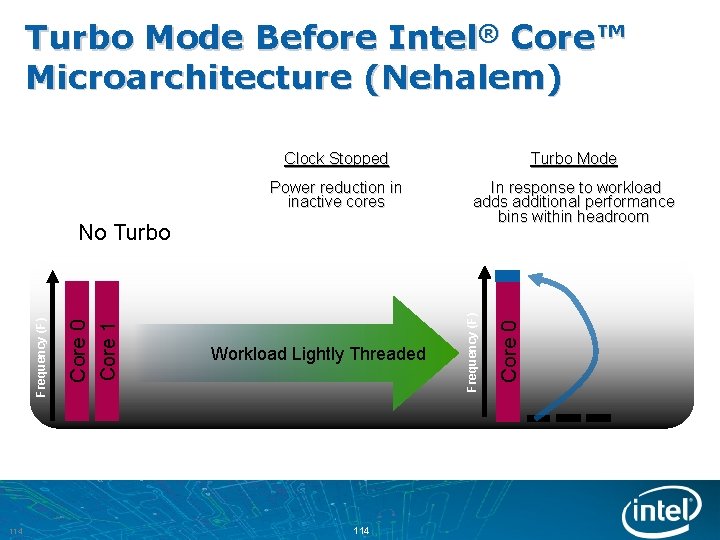

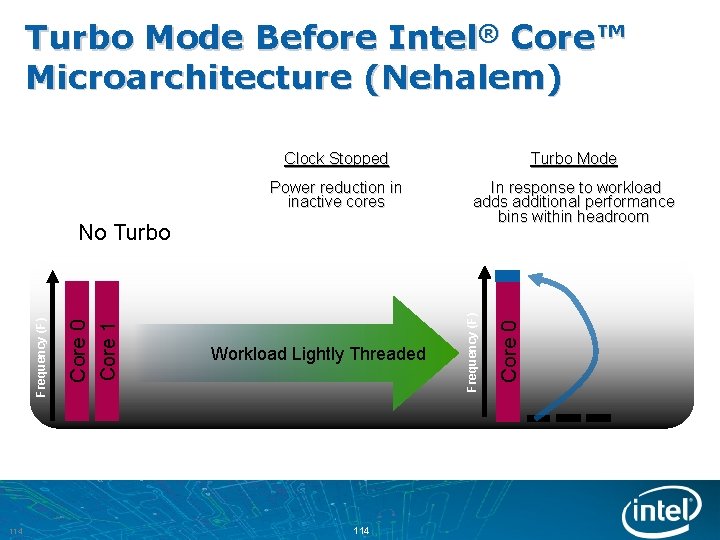

Turbo Mode Before Intel® Core™ Microarchitecture (Nehalem)
• Clock Stopped: power reduction in inactive cores
• Turbo Mode: in response to workload, adds performance bins within headroom
(Figure: lightly threaded workload; Core 0 and Core 1 frequency (F) with No Turbo, and Core 0 at a higher frequency in Turbo Mode)

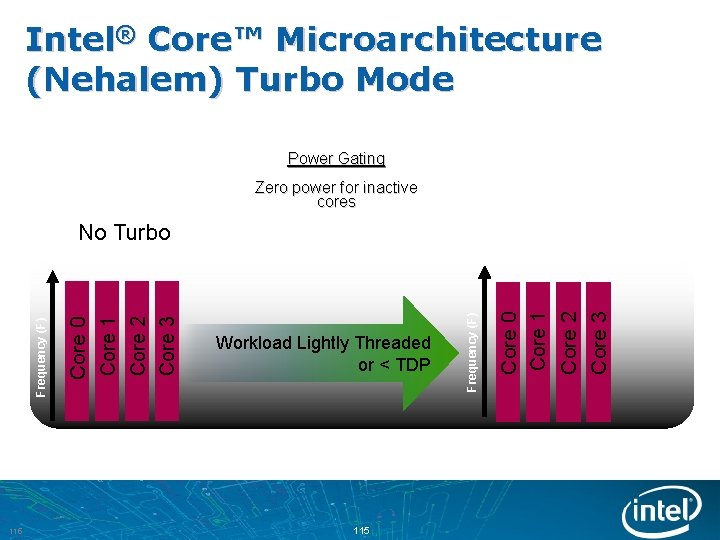

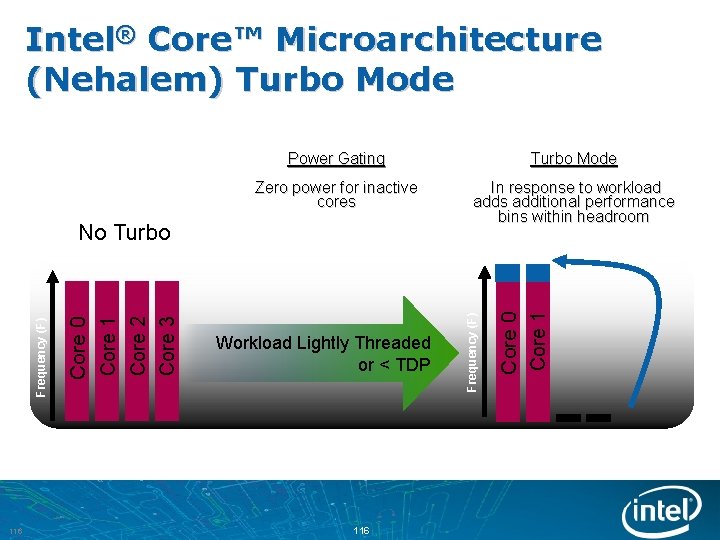

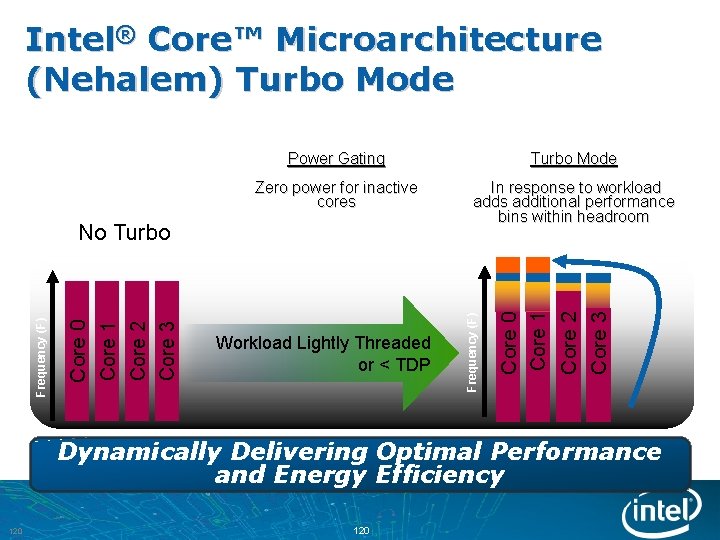

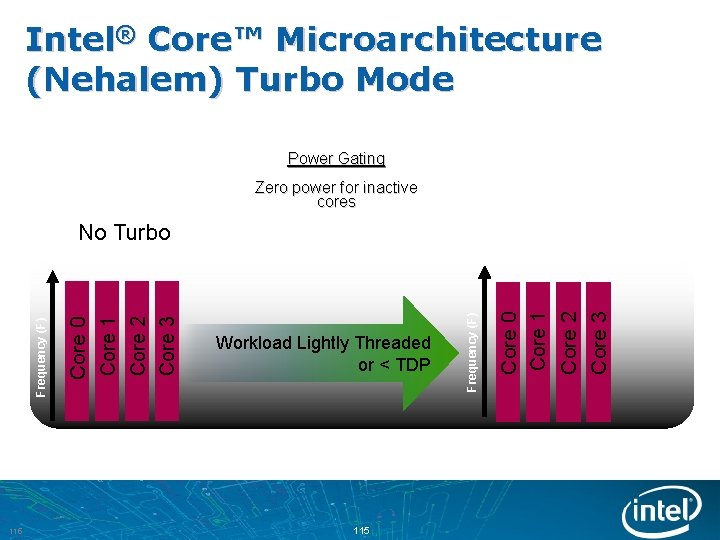

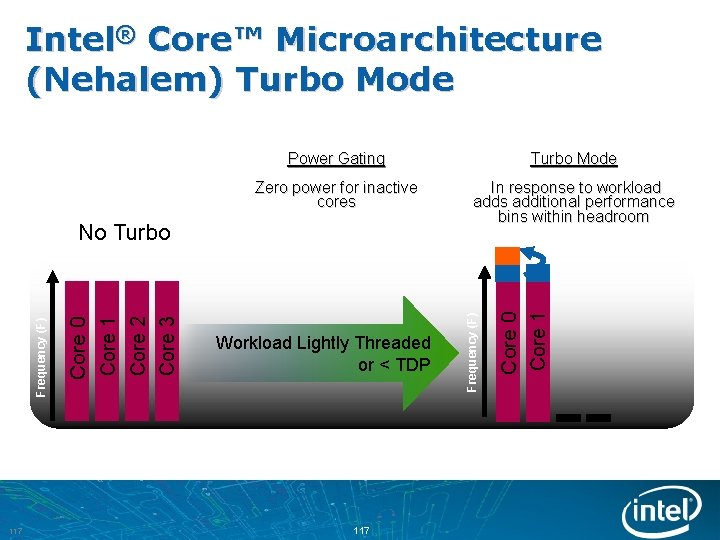

Intel® Core™ Microarchitecture (Nehalem) Turbo Mode
• Power Gating: zero power for inactive cores
(Figure: frequency (F) of Core 0 to Core 3 for a lightly threaded or < TDP workload, No Turbo)

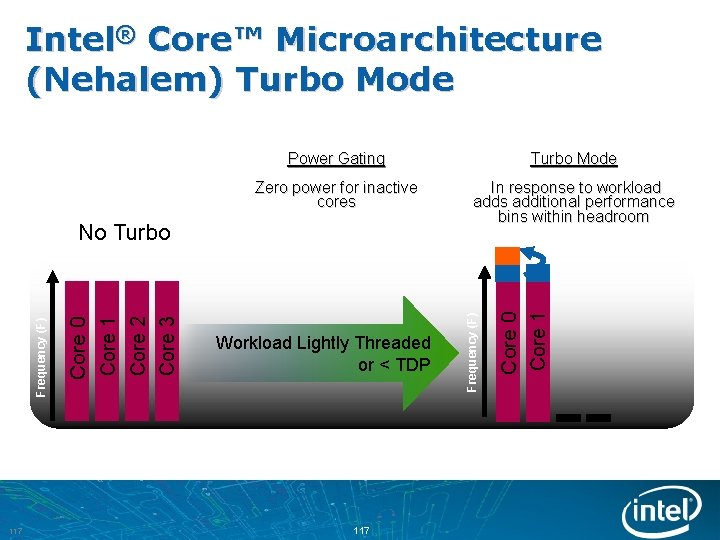

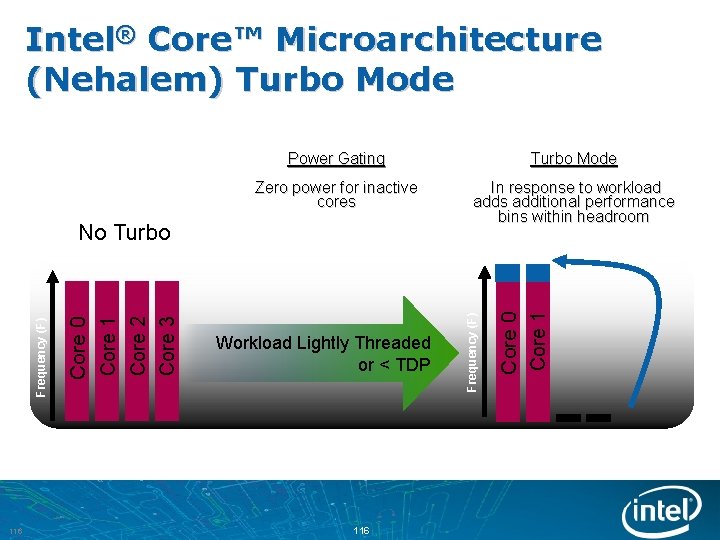

Intel® Core™ Microarchitecture (Nehalem) Turbo Mode
• Power Gating: zero power for inactive cores
• Turbo Mode: in response to workload, adds performance bins within headroom
(Figure: lightly threaded or < TDP workload; Core 0 to Core 3 with No Turbo, and Core 0 and Core 1 at a higher frequency (F) in Turbo Mode)


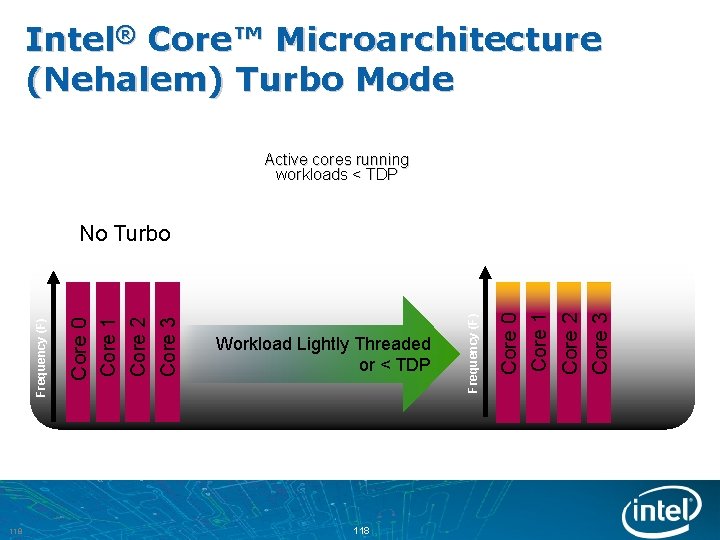

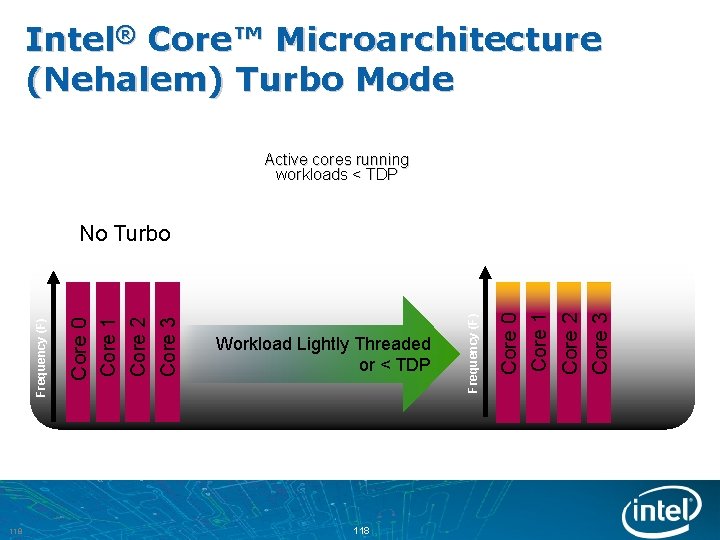

Intel® Core™ Microarchitecture (Nehalem) Turbo Mode
• Active cores running workloads < TDP
(Figure: frequency (F) of Core 0 to Core 3 for a lightly threaded or < TDP workload, No Turbo)

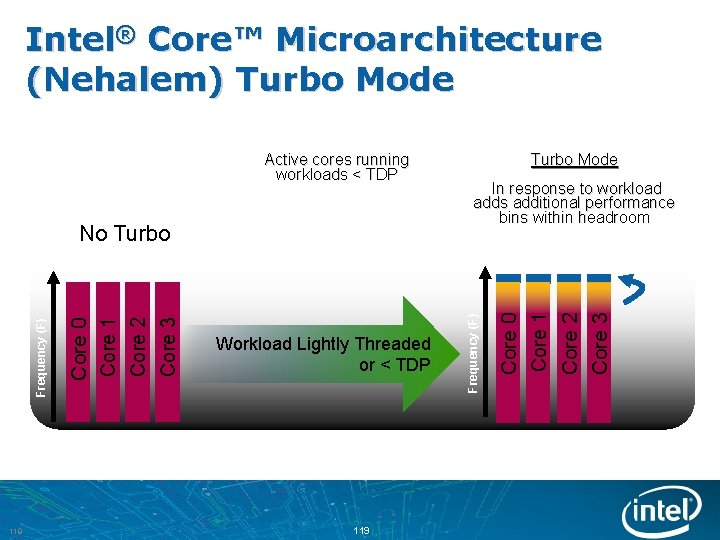

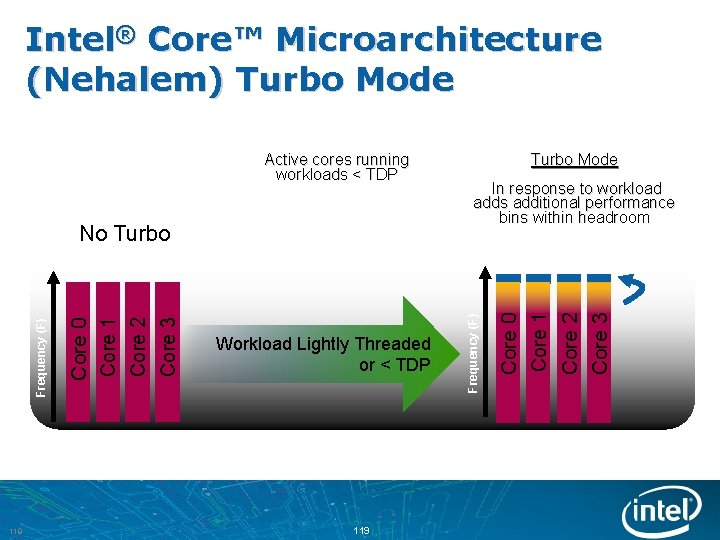

Intel® Core™ Microarchitecture (Nehalem) Turbo Mode
• Active cores running workloads < TDP
• Turbo Mode: in response to workload, adds performance bins within headroom
(Figure: frequency (F) of Core 0 to Core 3 for a lightly threaded or < TDP workload, No Turbo vs. Turbo Mode)

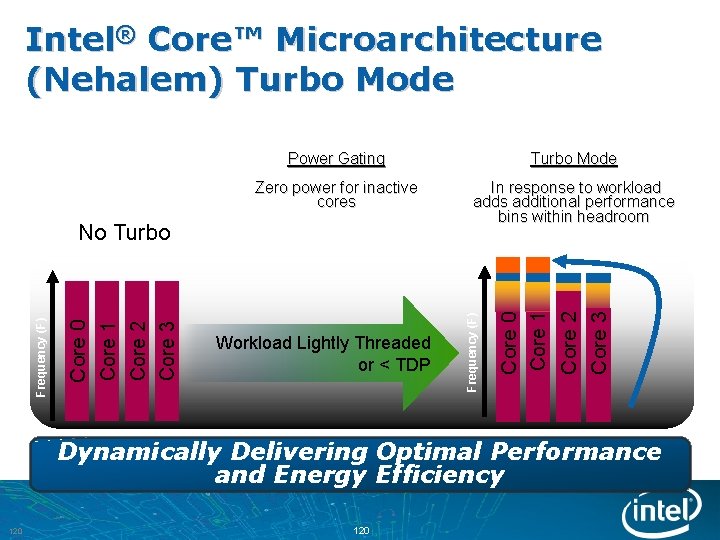

Intel® Core™ Microarchitecture (Nehalem) Turbo Mode
• Power Gating: zero power for inactive cores
• Turbo Mode: in response to workload, adds performance bins within headroom
(Figure: lightly threaded or < TDP workload; frequency (F) of Core 0 to Core 3 with No Turbo vs. Turbo Mode)

Dynamically Delivering Optimal Performance and Energy Efficiency
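"Adds performance bins within headroom" can be pictured as a budget calculation. Power-gated cores free up power. Frequency bins are then added to the active cores while the total stays under TDP, up to some maximum. The power model below (a fixed cost per active core plus a fixed cost per bin per active core) and every parameter are hypothetical, chosen only to illustrate why fewer active cores leave room for more bins. Nehalem's real control algorithm is not described on the slides.

```c
#include <assert.h>

/* Hypothetical model: returns how many turbo bins fit under `tdp`. */
static int turbo_bins(double tdp, int active_cores, double watts_per_core,
                      double watts_per_bin, int max_bins) {
    assert(active_cores > 0 && watts_per_bin > 0 && max_bins >= 0);
    /* Power-gated cores draw ~0, so only active cores consume budget. */
    double headroom = tdp - active_cores * watts_per_core;
    int bins = 0;
    while (bins < max_bins && (bins + 1) * watts_per_bin * active_cores <= headroom)
        bins++;
    return bins;
}
```

With these made-up numbers (130 W budget, 30 W per core, 2.5 W per bin per core, at most 2 bins), one active core gets both bins, four active cores get one, and a workload that already uses the whole budget gets none.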

Additional Sources of Information on This Topic:
• Other Sessions / Chalk Talks / Labs:
  – TCHS001: Next Generation Intel® Core™ Microarchitecture (Nehalem) Family of Processors: Screaming Performance, Efficient Power (8/19, 3:00 – 3:50)
  – DPTS001: High End Desktop Platform Design Overview for the Next Generation Intel® Microarchitecture (Nehalem) Processor (8/20, 2:40 – 3:30)
  – NGMS001: Next Generation Intel® Microarchitecture (Nehalem) Family: Architectural Insights and Power Management (8/19, 4:00 – 5:50)
  – NGMC001: Chalk Talk: Next Generation Intel® Microarchitecture (Nehalem) Family (8/19, 5:50 – 6:30)
  – NGMS002: Tuning Your Software for the Next Generation Intel® Microarchitecture (Nehalem) Family (8/20, 11:10 – 12:00)
  – PWRS003: Power Managing the Virtual Data Center with Windows Server* 2008 / Hyper-V and Next Generation Processor-based Intel® Servers Featuring Intel® Dynamic Power Technology (8/19, 3:00 – 3:50)
  – PWRS005: Platform Power Management Options for Intel® Next Generation Server Processor Technology (Tylersburg-EP) (8/21, 1:40 – 2:30)
  – SVRS002: Overview of the Intel® QuickPath Interconnect (8/21, 11:10 – 12:00)

Session Presentations – PDFs
The PDF for this Session presentation is available from our IDF Content Catalog at the end of the day at: www.intel.com/idf or https://intel.wingateweb.com/US08/scheduler/public.jsp

Please Fill out the Session Evaluation Form
Place form in evaluation box at the back of session room
Thank you for your input, we use it to improve future Intel Developer Forum events