A Large Scale Study of Data Center Network Reliability

- Slides: 54

A Large Scale Study of Data Center Network Reliability

- Justin Meza, Carnegie Mellon University and Facebook, Inc., jjm@fb.com
- Tianyin Xu, University of Illinois Urbana-Champaign and Facebook, Inc., tyxu@illinois.edu
- Kaushik Veeraraghavan, Facebook, Inc., kaushikv@fb.com
- Onur Mutlu, ETH Zürich and Carnegie Mellon University, onur.mutlu@inf.ethz.ch

Safari Group @ETHZ | David Graf | 11/1/2020


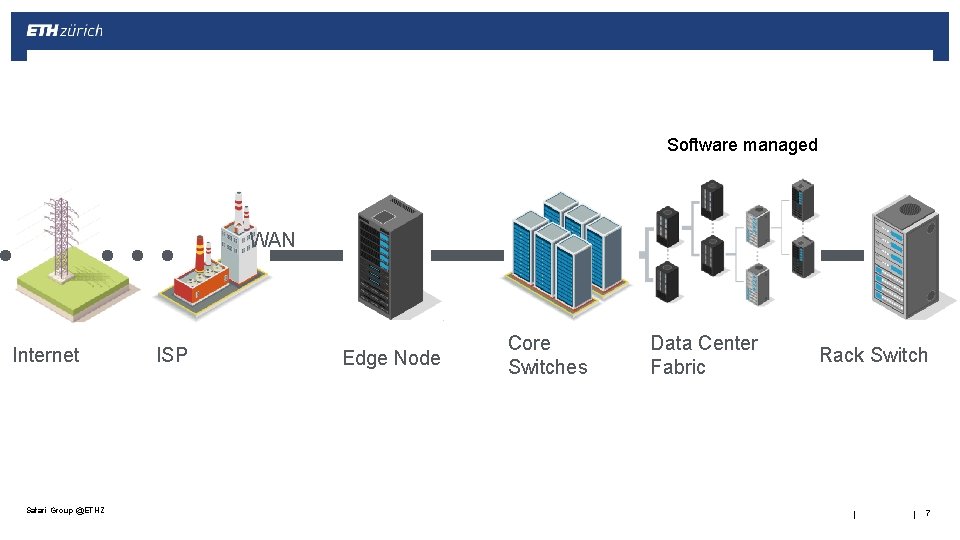

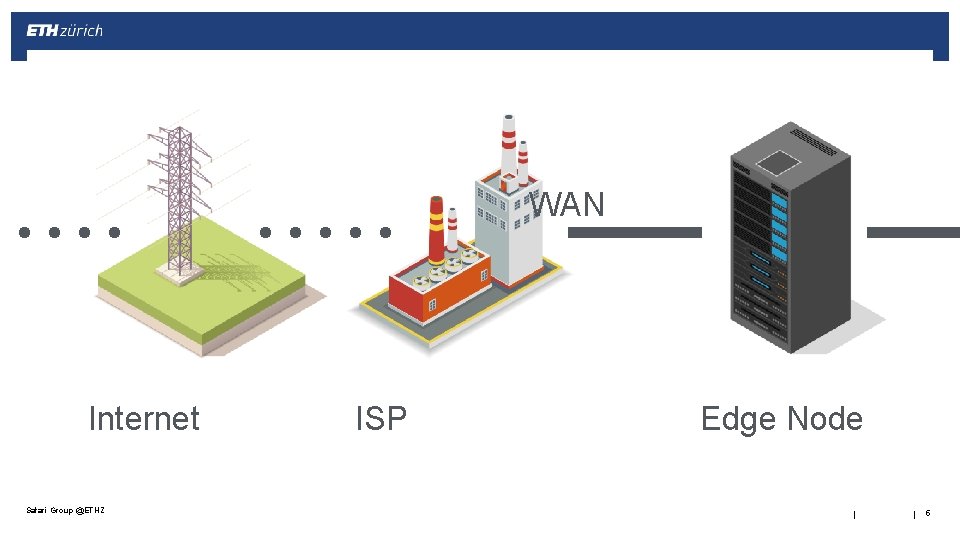

Diagram labels: WAN, Internet, ISP, Edge Node

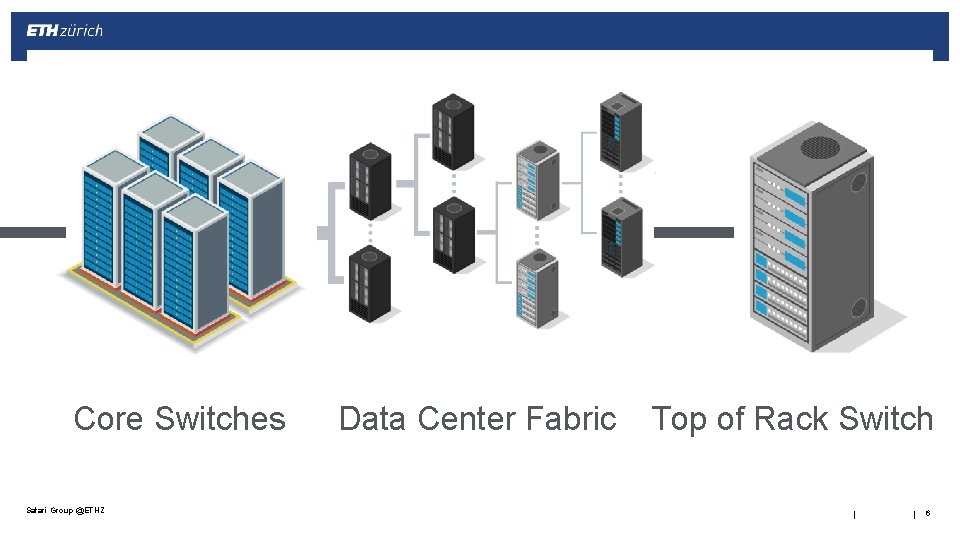

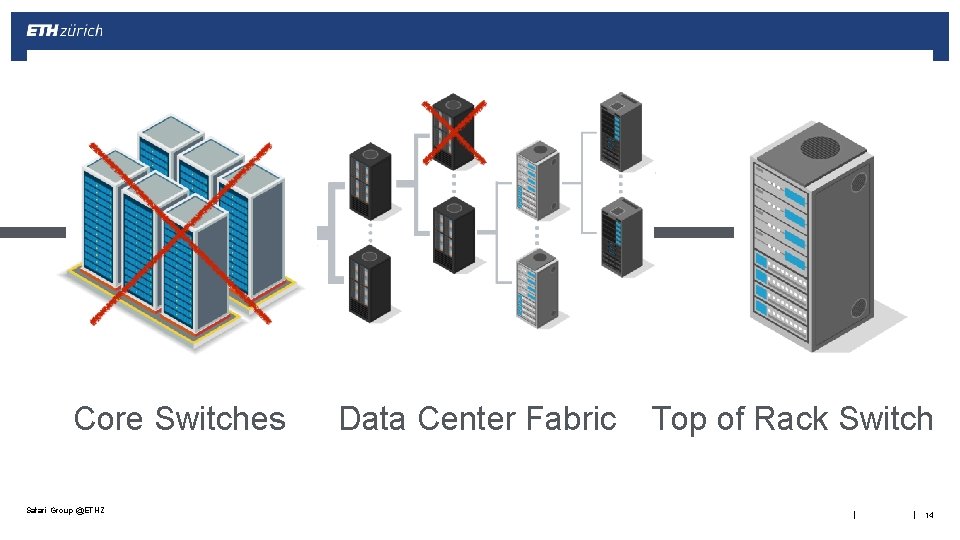

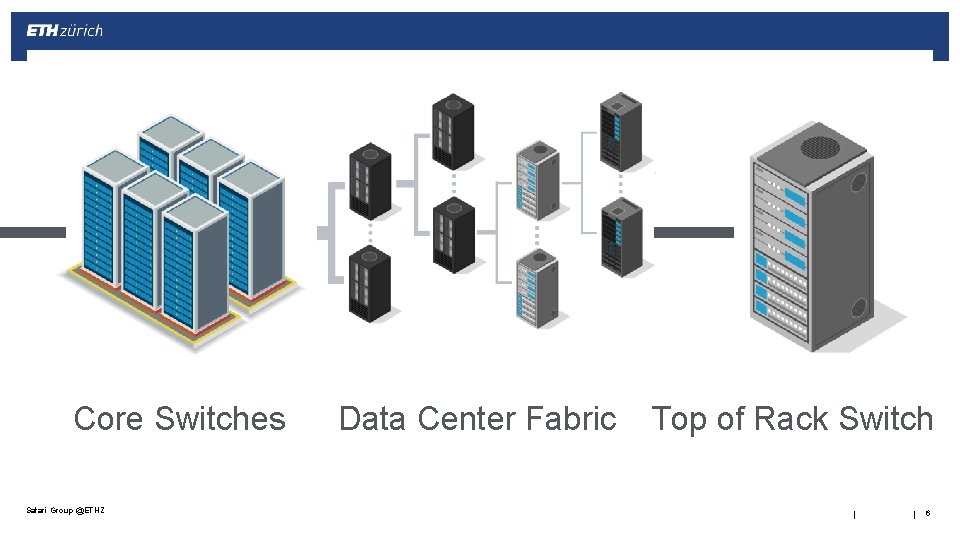

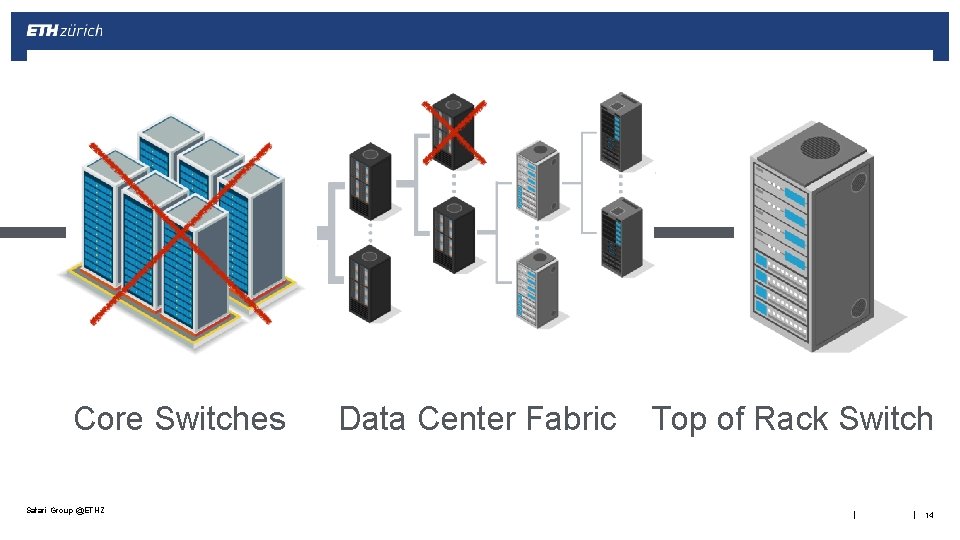

Diagram labels: Core Switches, Data Center Fabric, Top of Rack Switch

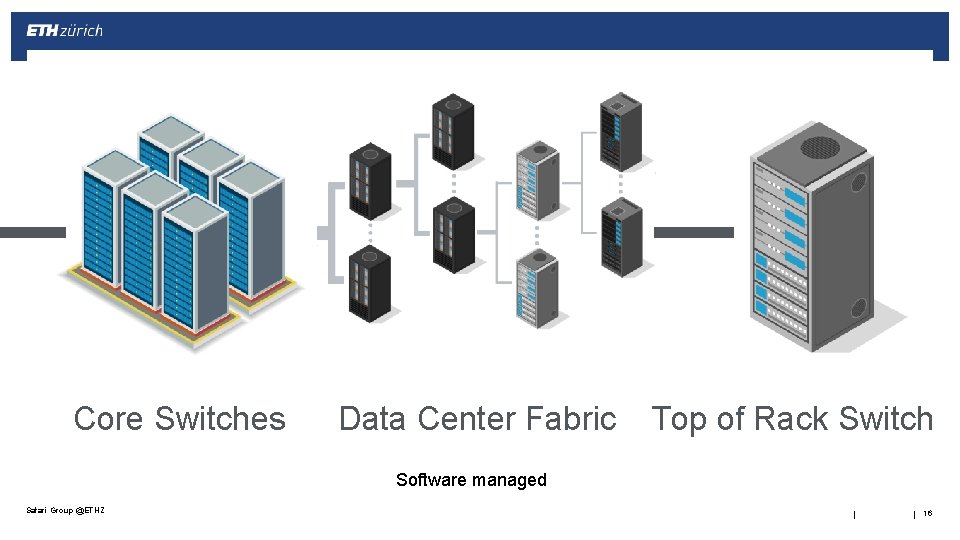

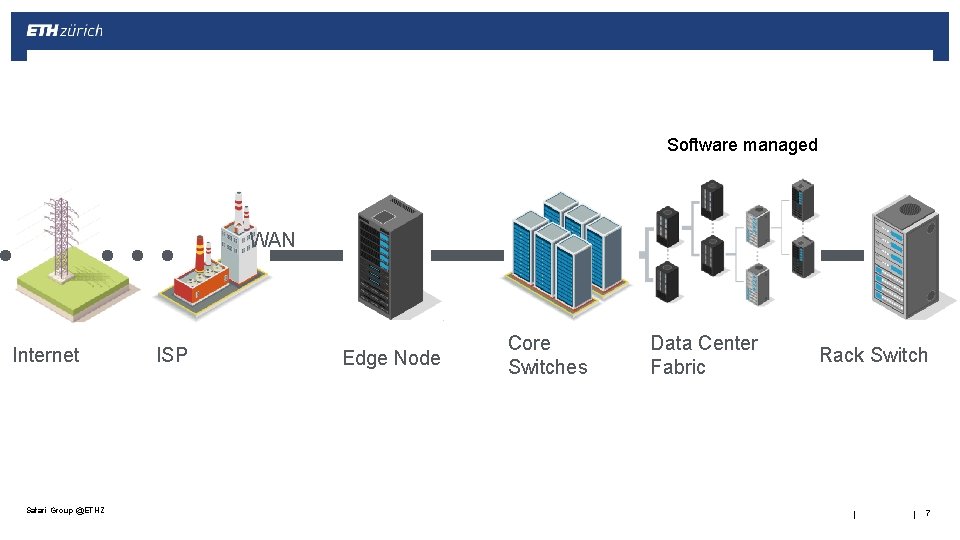

Diagram labels: Software managed, WAN, Internet, ISP, Edge Node, Core Switches, Data Center Fabric, Rack Switch

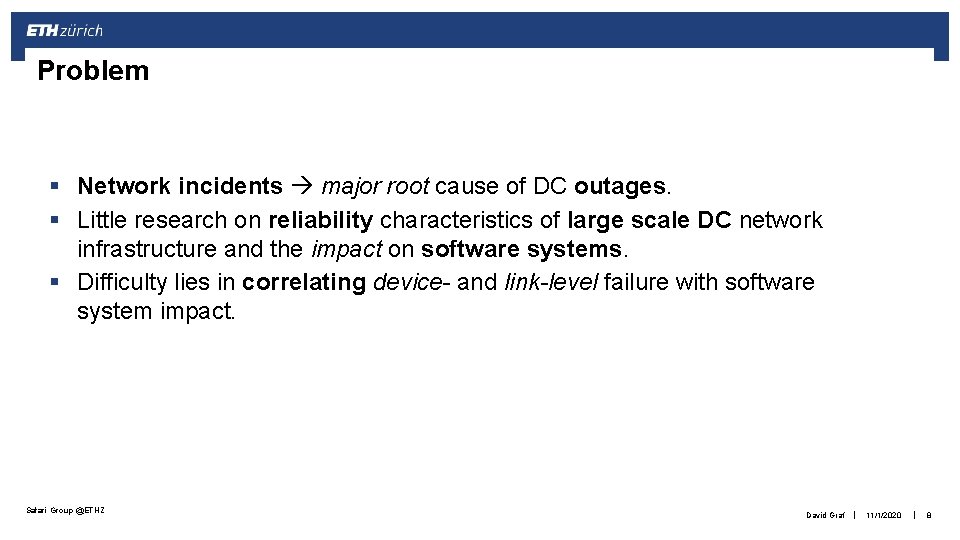

Problem

- Network incidents are a major root cause of data center (DC) outages.
- There is little research on the reliability characteristics of large-scale DC network infrastructure and their impact on software systems.
- The difficulty lies in correlating device- and link-level failures with their impact on software systems.

Goal

- Cover the reliability characteristics of both intra and inter data center networks.

Key takeaways

- Data centers are becoming more software managed.
  - Next challenge: make the first and last hop more reliable.
- Backbone network reliability planning is more important than ever for ensuring good overall site reliability.

Outline

- Introduction to data center networks
- Intra data center networks
- Inter data center networks
- Concluding thoughts

Terminology

Network incidents cause software failures that result in site events (SEVs).

- SEVs are classified into 3 severity categories.
- Engineers write the SEV reports.
- Each report contains:
  - The incident's root cause
  - The root cause's effect on software systems
  - Steps to prevent the incident from happening again
- A network SEV report contains details about:
  - The network device implicated in the incident
  - The duration of the incident
  - The incident's effect on software systems

Methodology: Data

Intra data center reliability: 7 years of service-level event data collected from the SEV database.

- Root cause
  - May be undetermined
- Device type
  - Used to classify a network incident by the implicated device's type
- Network design
  - Used to classify a network incident based on the network architecture
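The three classification axes above (root cause, device type, network design) can be illustrated with a small sketch. Everything here is hypothetical: the `NetworkSev` record, the device-type names, the `DESIGN_BY_DEVICE` mapping, and the sample incidents are made up for illustration and are not taken from the SEV database or the slides.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping from device type to network design.
# The names are placeholders, not the study's actual device types.
DESIGN_BY_DEVICE = {
    "cluster_switch": "cluster",
    "fabric_switch": "fabric",
    "core_switch": "shared",
    "rack_switch": "shared",
}

@dataclass
class NetworkSev:
    severity: int                      # assumed encoding of the 3 severity categories
    device_type: str                   # type of the implicated network device
    duration_hours: float              # duration of the incident
    root_cause: Optional[str] = None   # None when the root cause is undetermined

def count_by(sevs, key):
    """Count incidents grouped by the value that key(sev) returns."""
    return Counter(key(s) for s in sevs)

def design_of(sev):
    """Look up the network design for the implicated device type."""
    return DESIGN_BY_DEVICE.get(sev.device_type, "unknown")

# Made-up sample incidents, for illustration only.
sevs = [
    NetworkSev(3, "rack_switch", 1.5, "hardware"),
    NetworkSev(2, "cluster_switch", 4.0, "maintenance"),
    NetworkSev(3, "fabric_switch", 0.5),
    NetworkSev(1, "core_switch", 2.0, "configuration"),
    NetworkSev(3, "rack_switch", 0.2),
]

by_device = count_by(sevs, lambda s: s.device_type)
by_design = count_by(sevs, design_of)
by_cause = count_by(sevs, lambda s: s.root_cause or "undetermined")
```

Keeping the root cause optional mirrors the note that it may be undetermined; such incidents still count toward the device-type and network-design totals.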

Diagram labels: Core Switches, Data Center Fabric, Top of Rack Switch

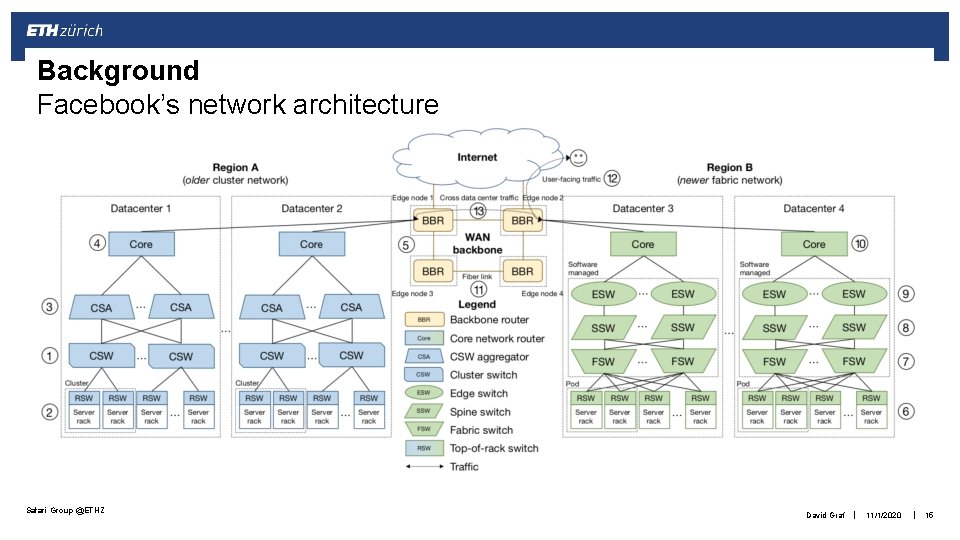

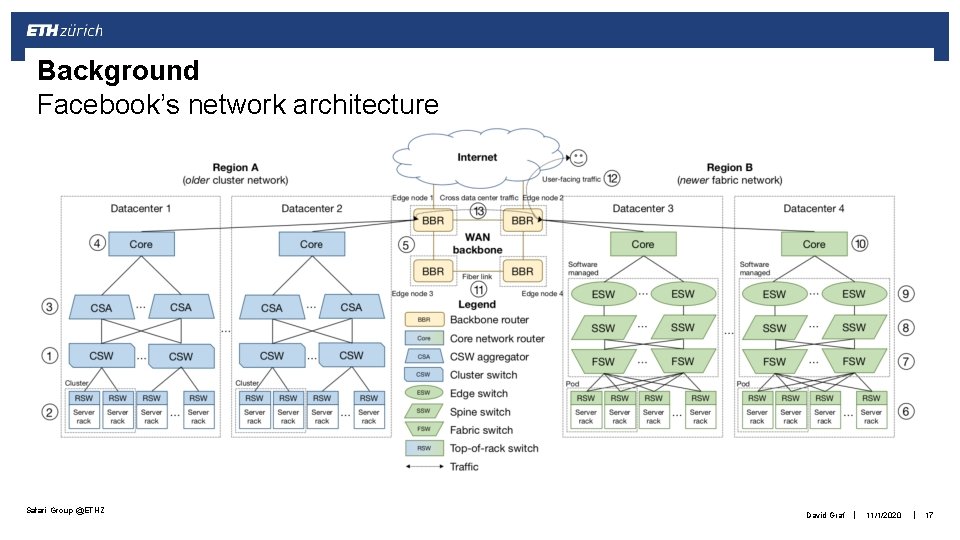

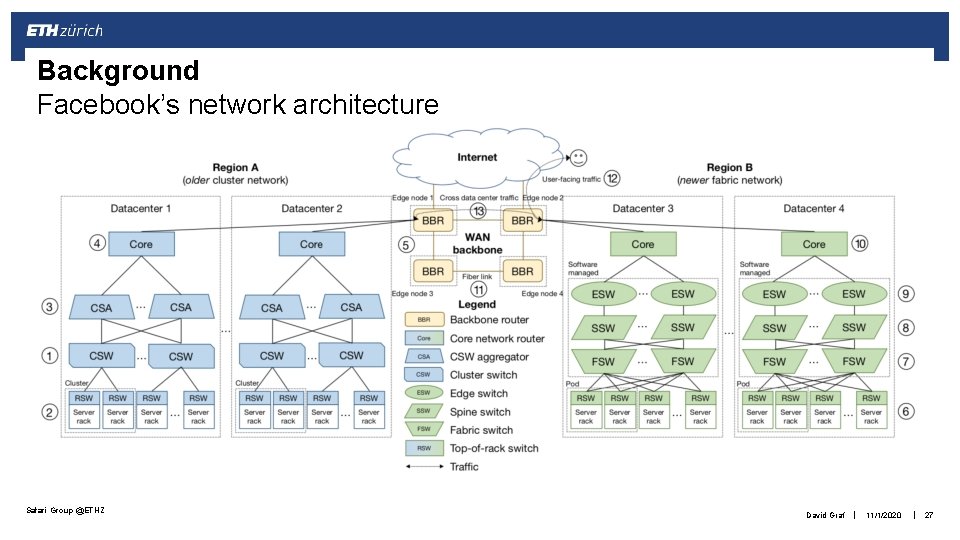

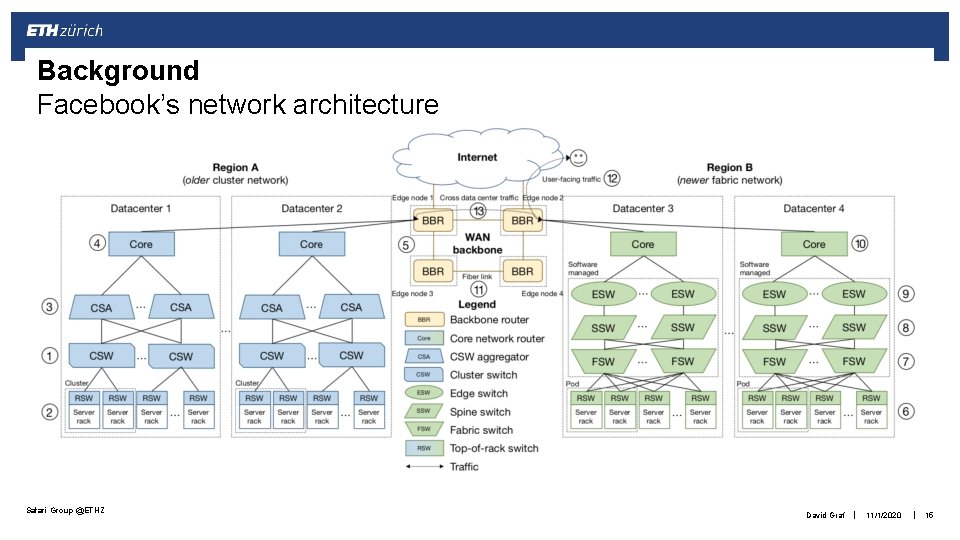

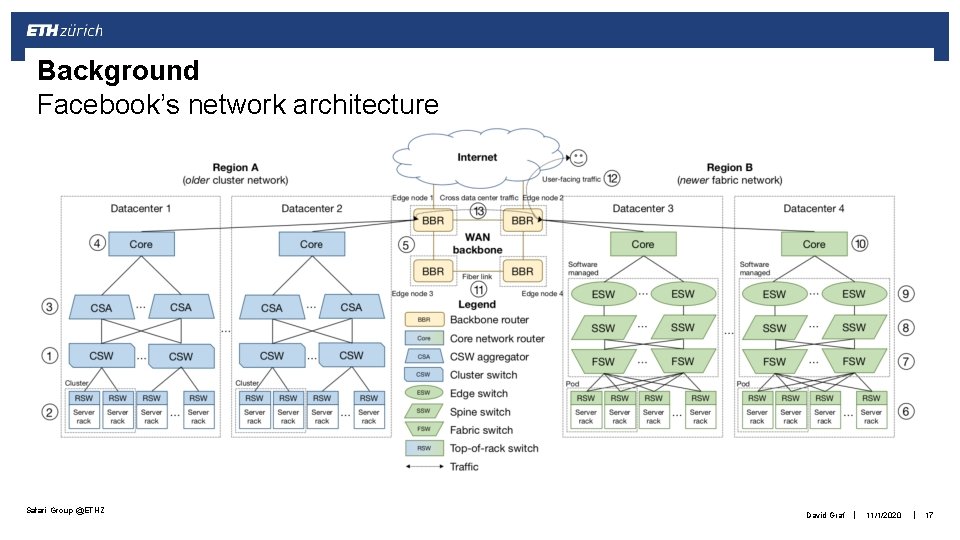

Background: Facebook's network architecture

Diagram labels: Core Switches, Data Center Fabric, Top of Rack Switch, Software managed



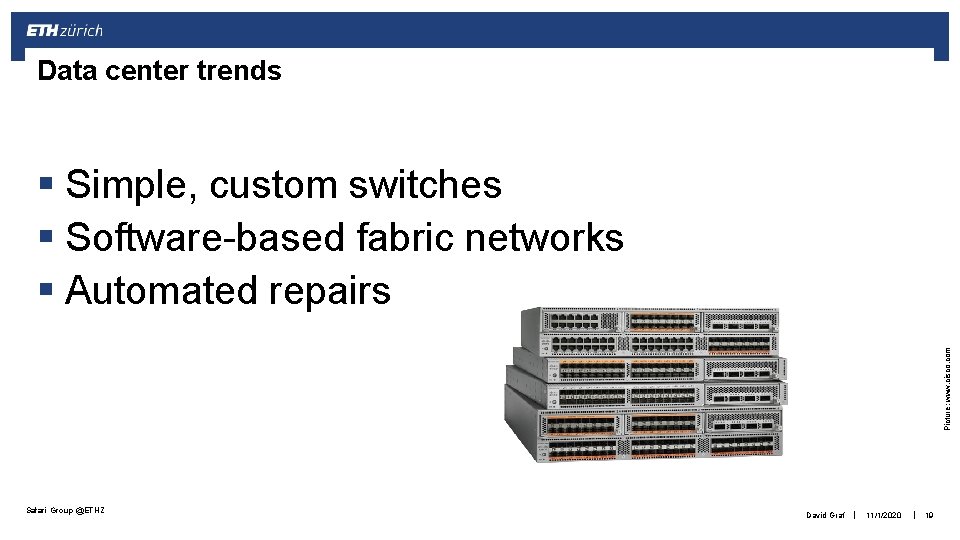

Data center trends

- Simple, custom switches
- Software-based fabric networks
- Automated repairs

Picture: www.cisco.com


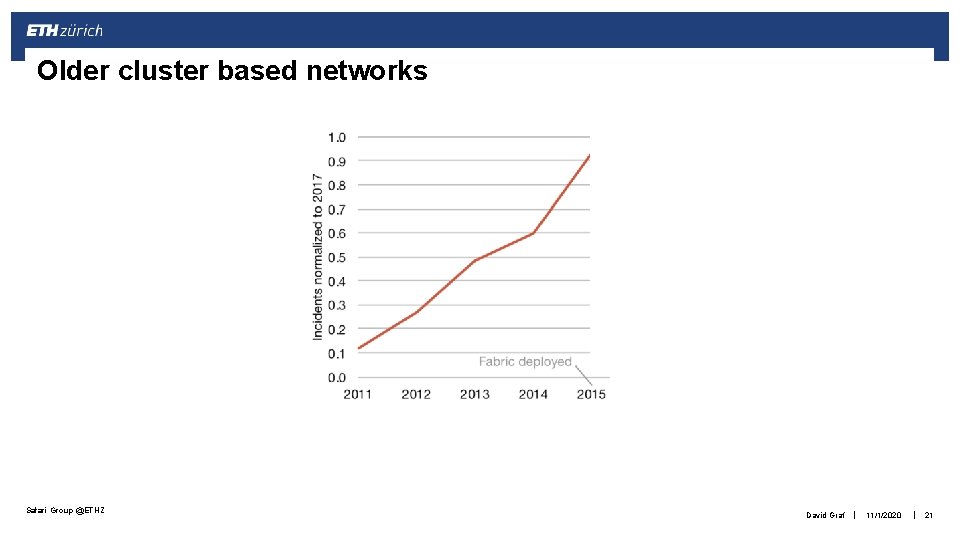

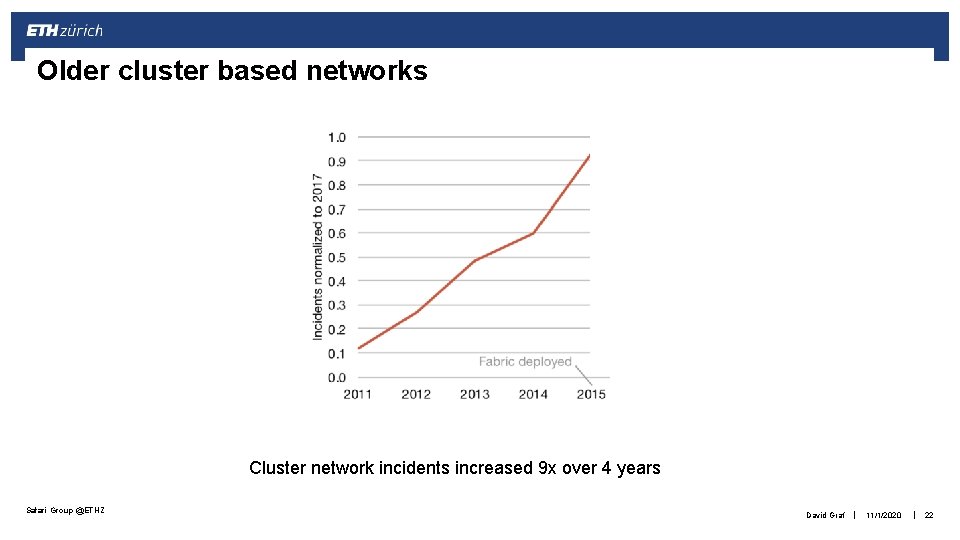

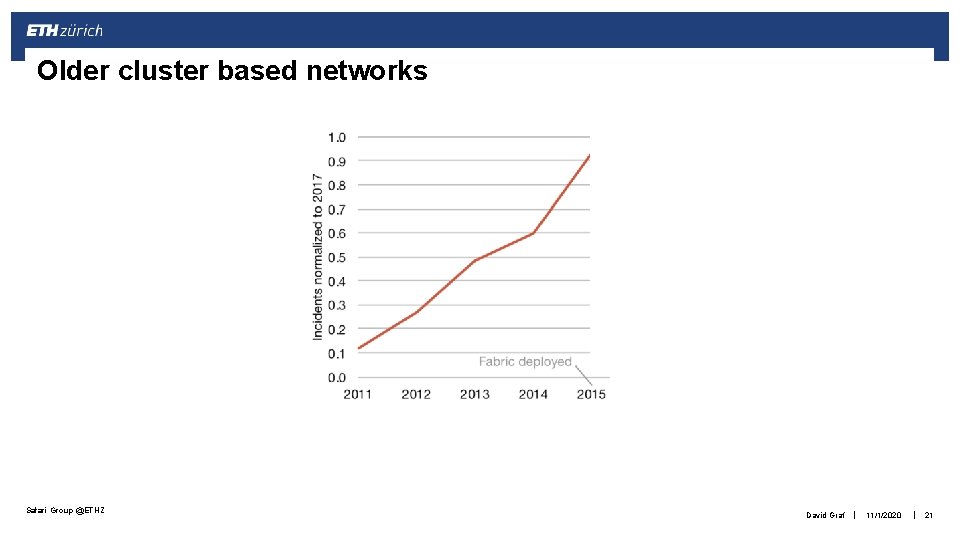

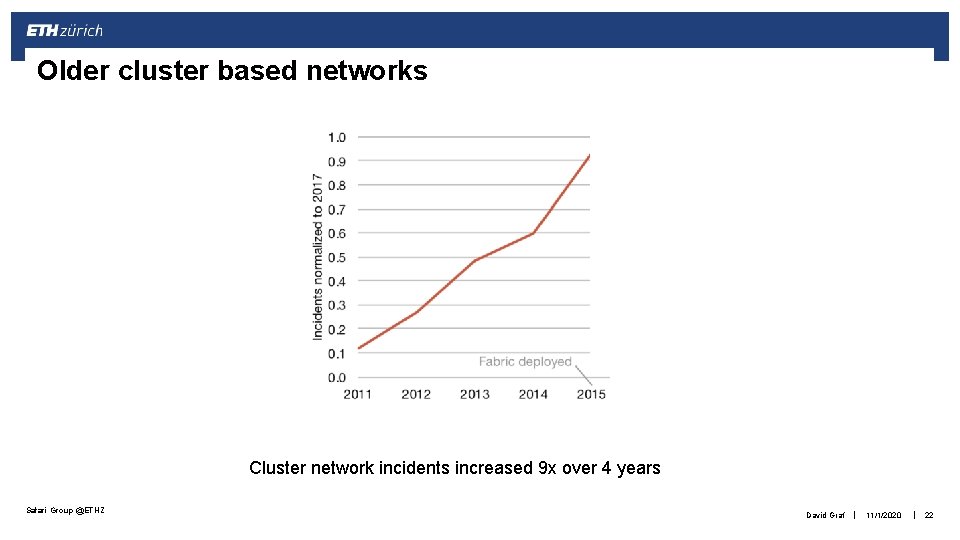


Older cluster-based networks

Cluster network incidents increased 9x over 4 years.

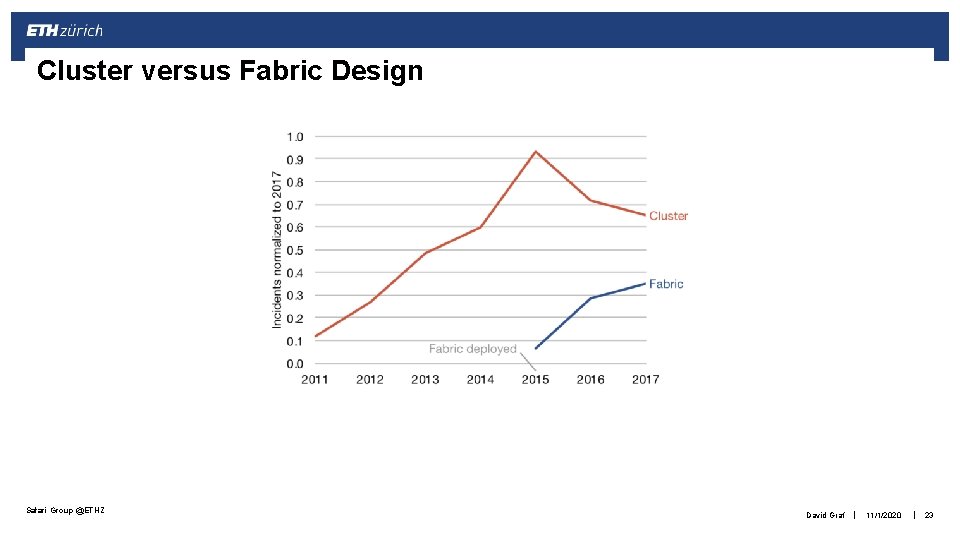

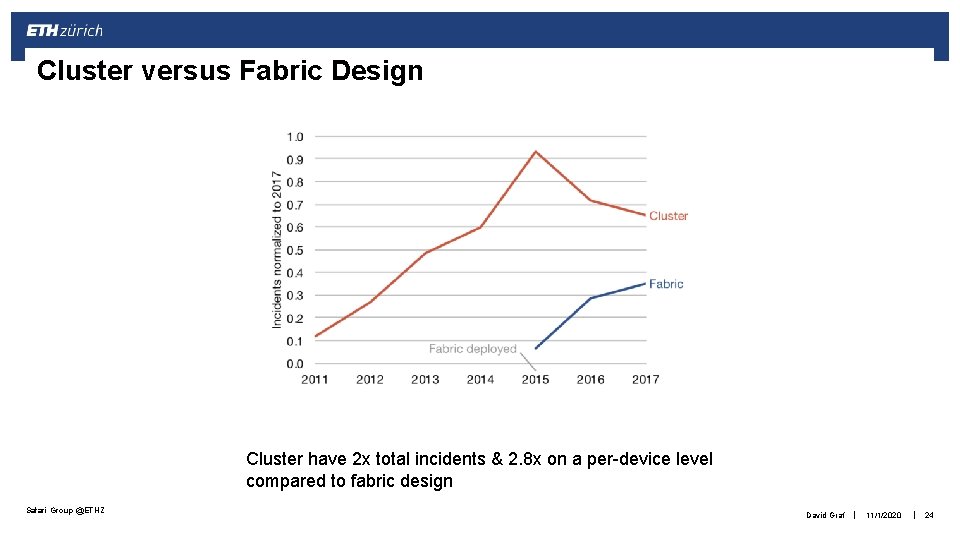

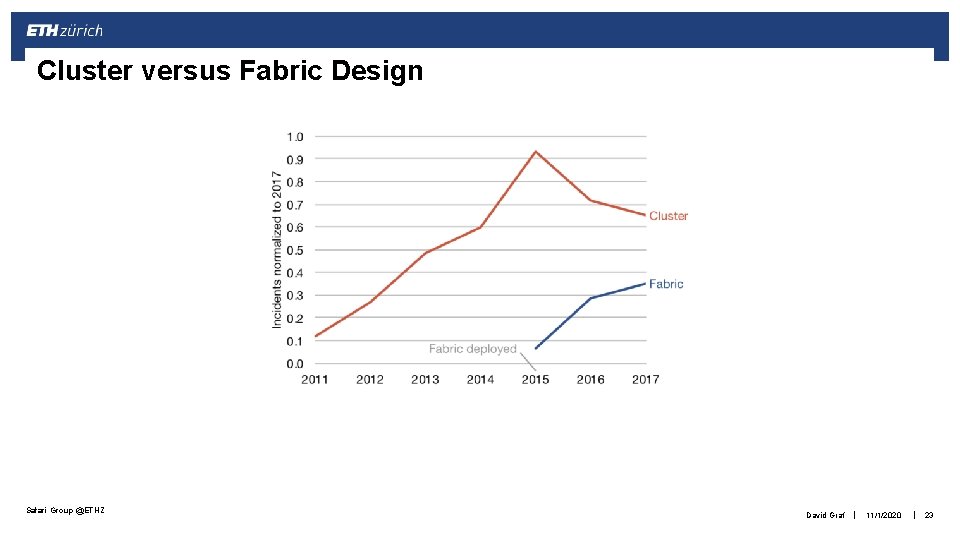

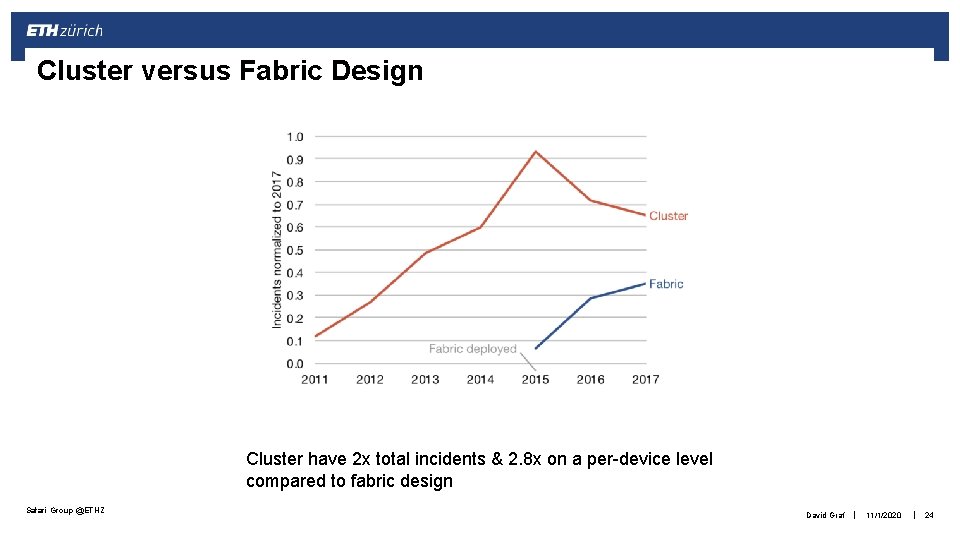


Cluster versus fabric design

Cluster networks have 2x the total incidents of the fabric design, and 2.8x the incidents on a per-device level.
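The two ratios on this slide can be related with a short calculation. This is a sketch under one assumption that the slide does not state: that the per-device figure is the incident count divided by the number of devices of that design. Under that assumption, the ratios imply the relative size of the two device populations.

```python
# Ratios reported on the slide (cluster relative to fabric).
total_incident_ratio = 2.0
per_device_incident_ratio = 2.8

# Assumption: per-device rate = incidents / number of devices, so
#   per_device_ratio = total_ratio / device_count_ratio
# and therefore
#   device_count_ratio = total_ratio / per_device_ratio.
device_count_ratio = total_incident_ratio / per_device_incident_ratio

print(f"implied cluster/fabric device count ratio: {device_count_ratio:.2f}")
# prints "implied cluster/fabric device count ratio: 0.71"
```

Read this way, the per-device gap is larger than the total gap because the cluster design would account for fewer devices than the fabric design.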

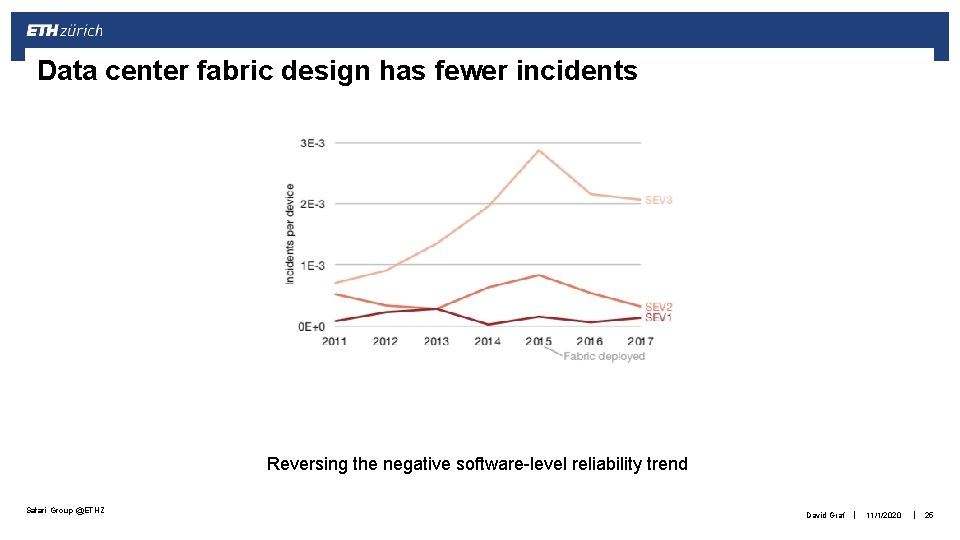

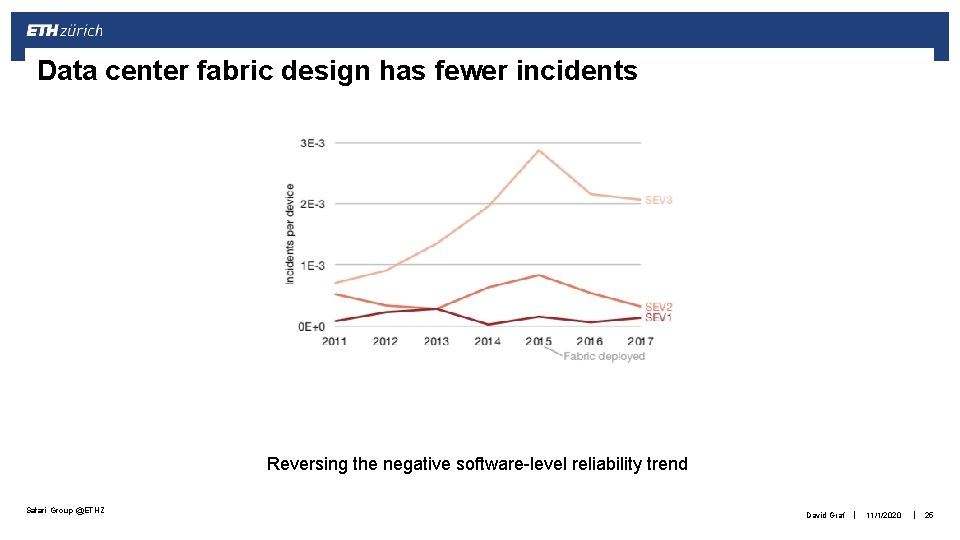

Data center fabric design has fewer incidents

Reversing the negative software-level reliability trend.

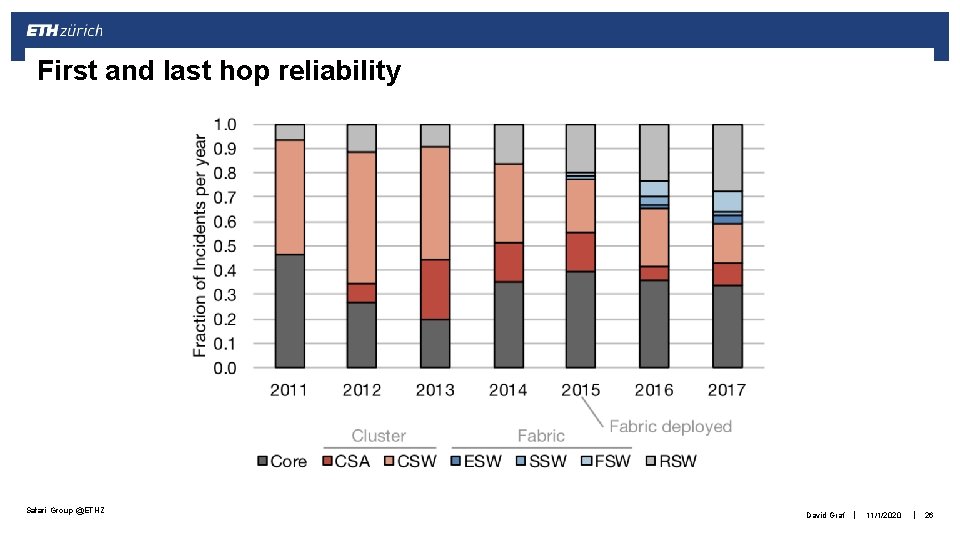

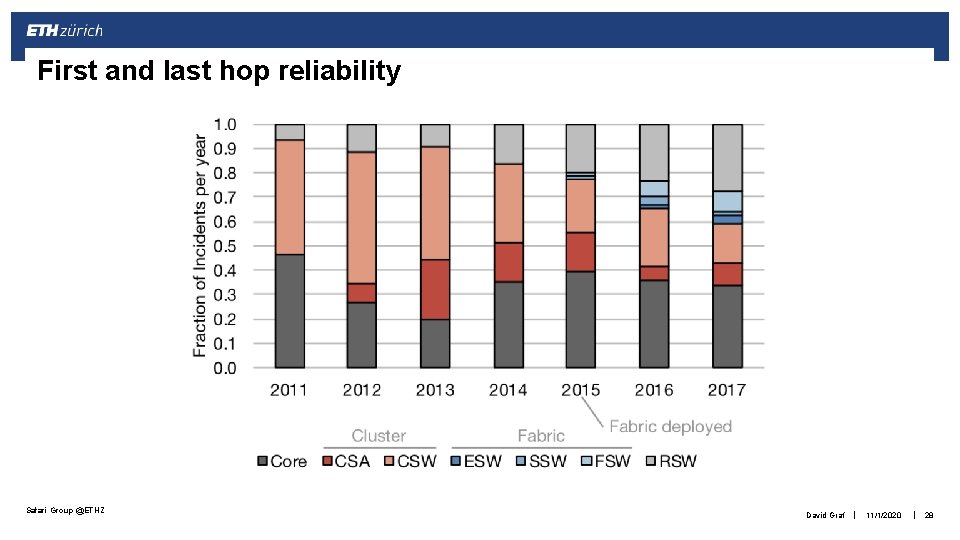

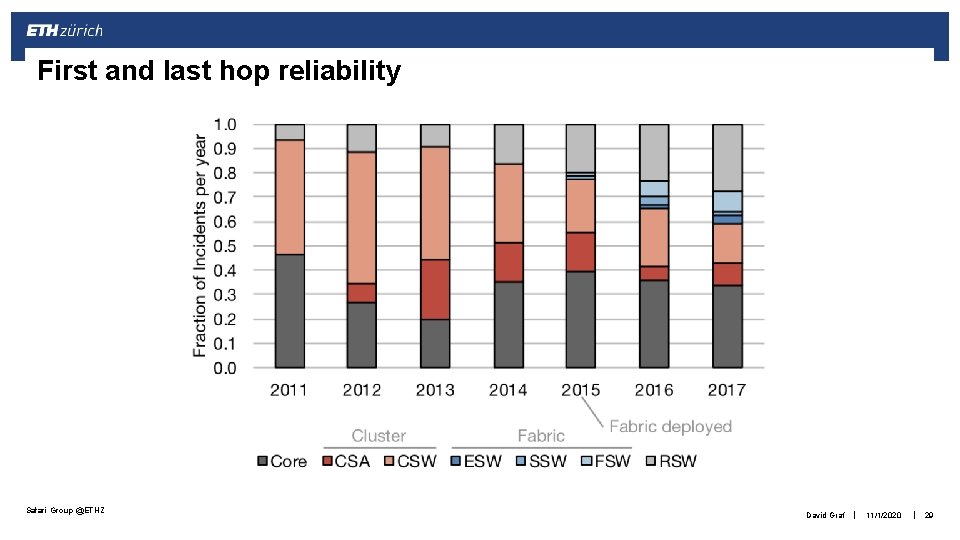

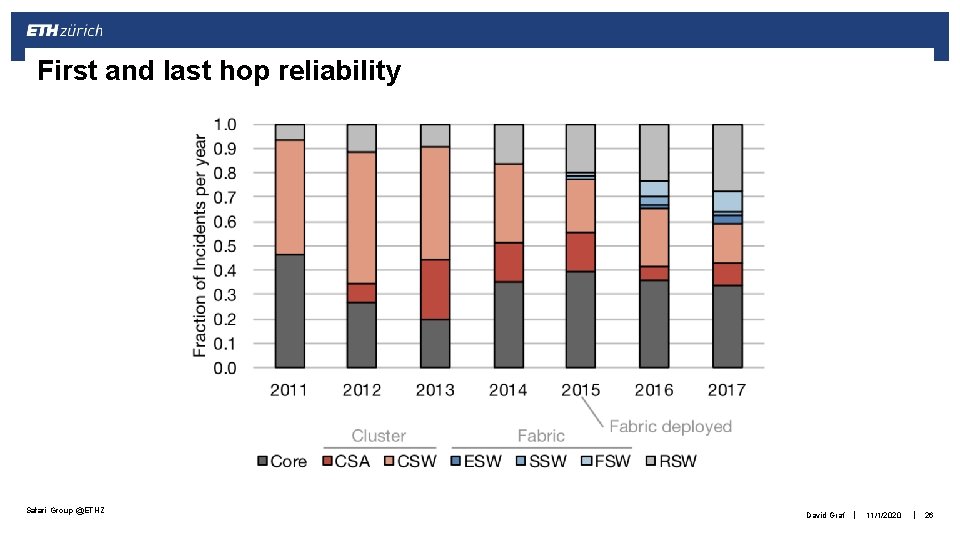

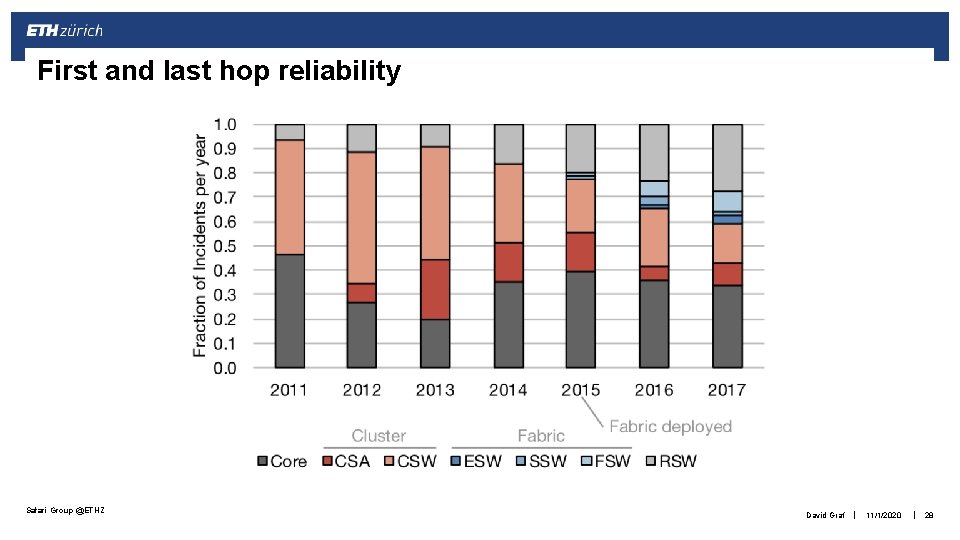

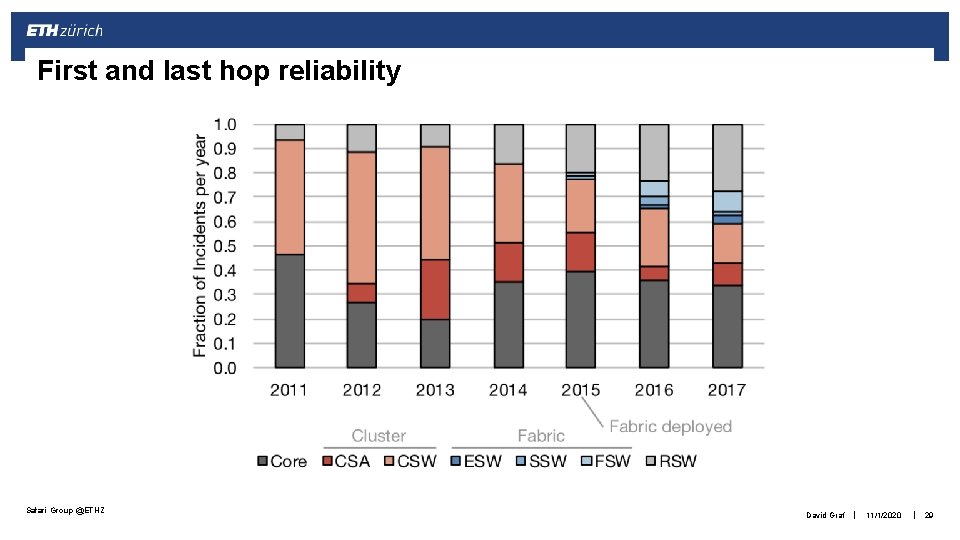

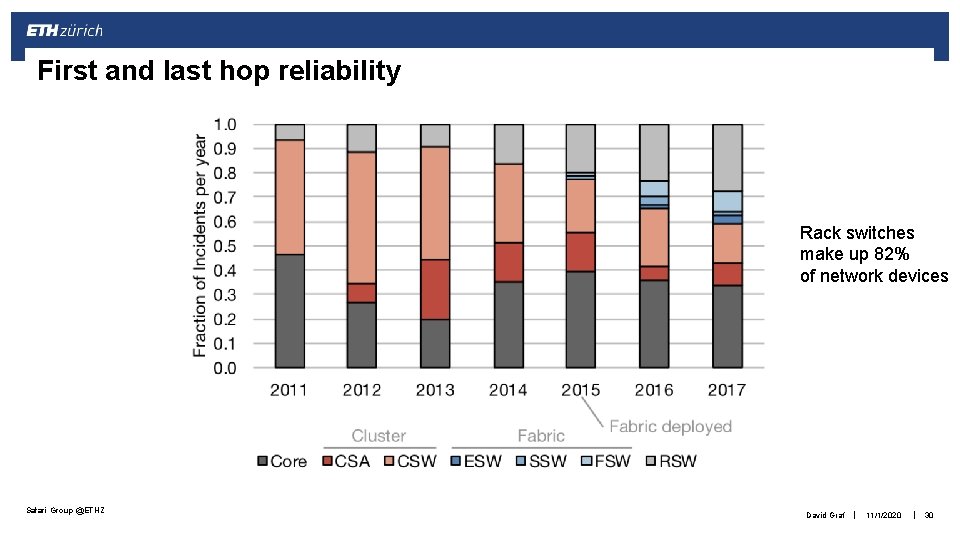

First and last hop reliability

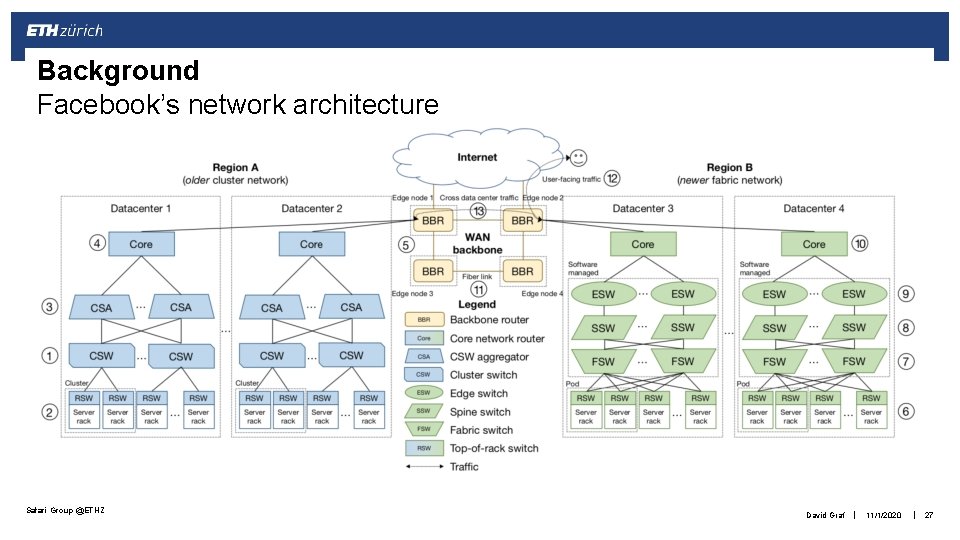

Background: Facebook's network architecture


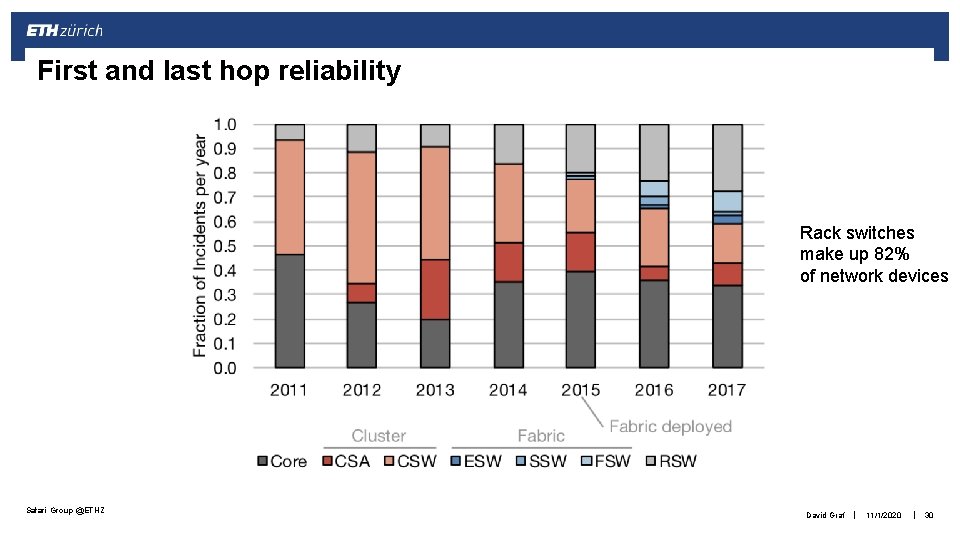

First and last hop reliability

Rack switches make up 82% of network devices.

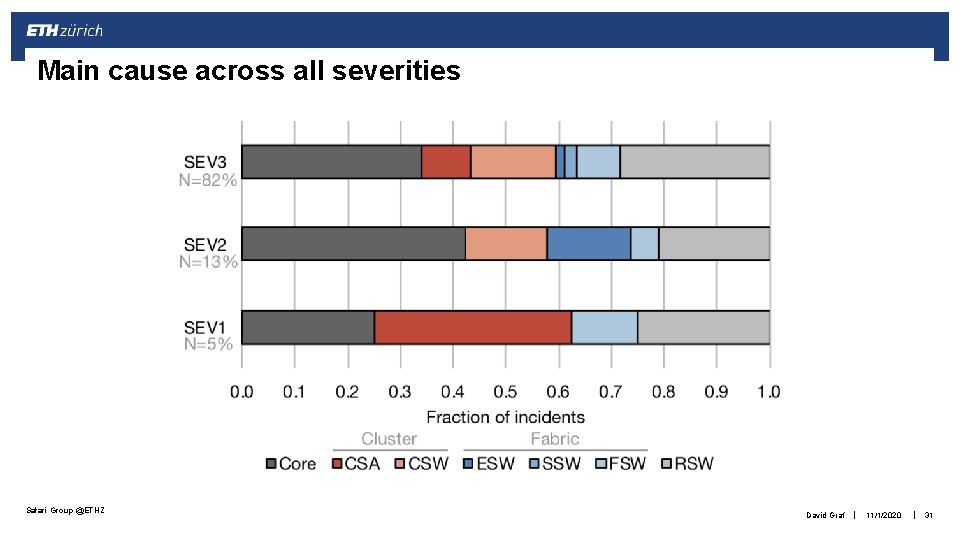

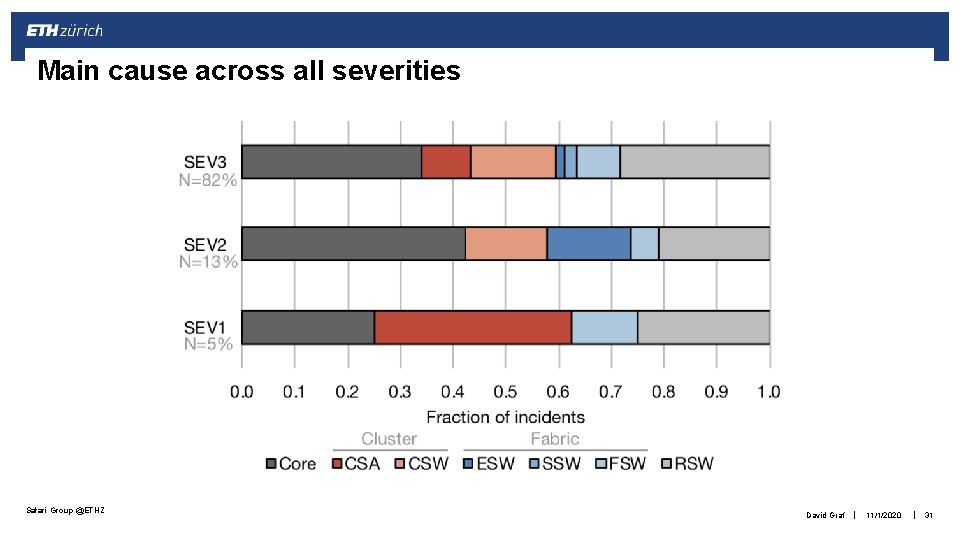

Main cause across all severities


Implications for data center networks

- Adding more redundant switches is one approach.
- Make software more resilient.
- Use more aggressive automated repairs.



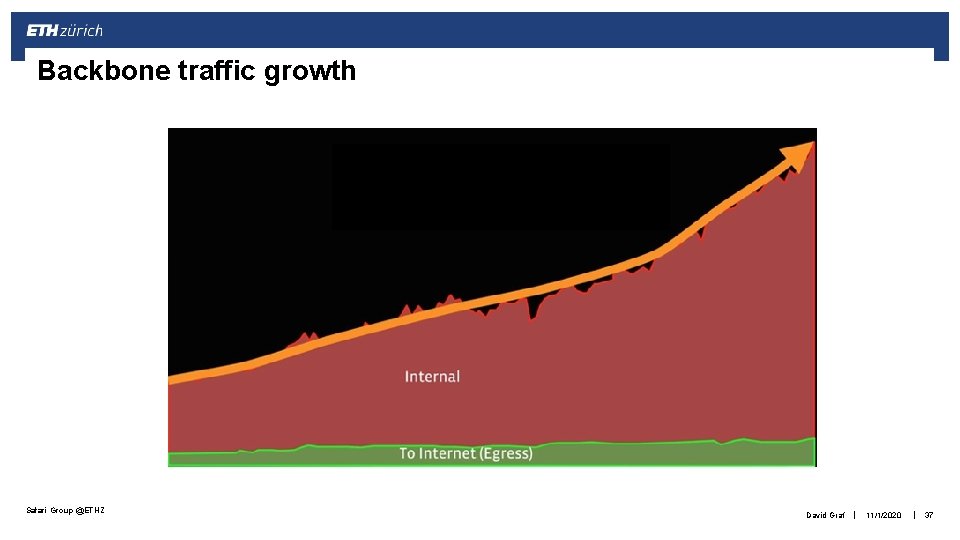

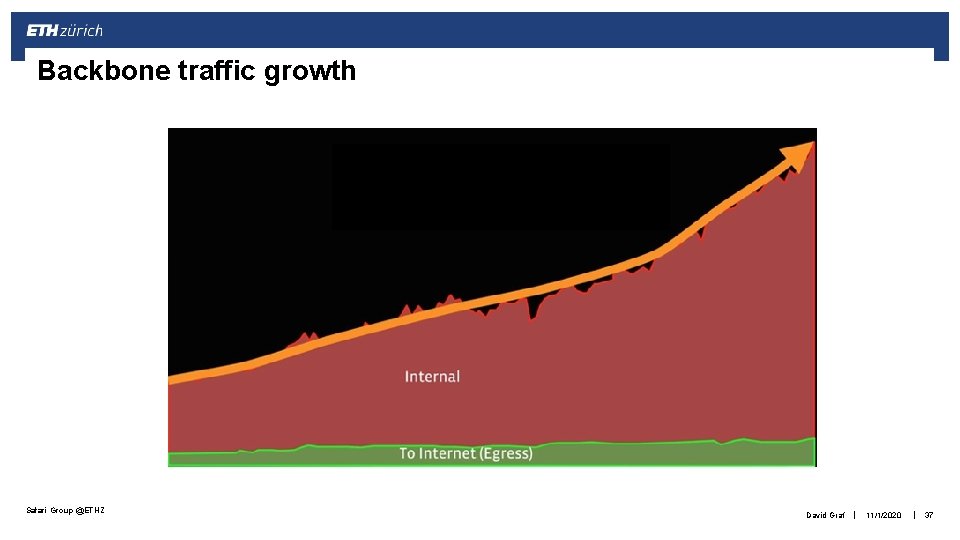

Backbone traffic growth


Data center backbones

- Shared resource
- Frequent link failures
- Capacity planning dictates reliability

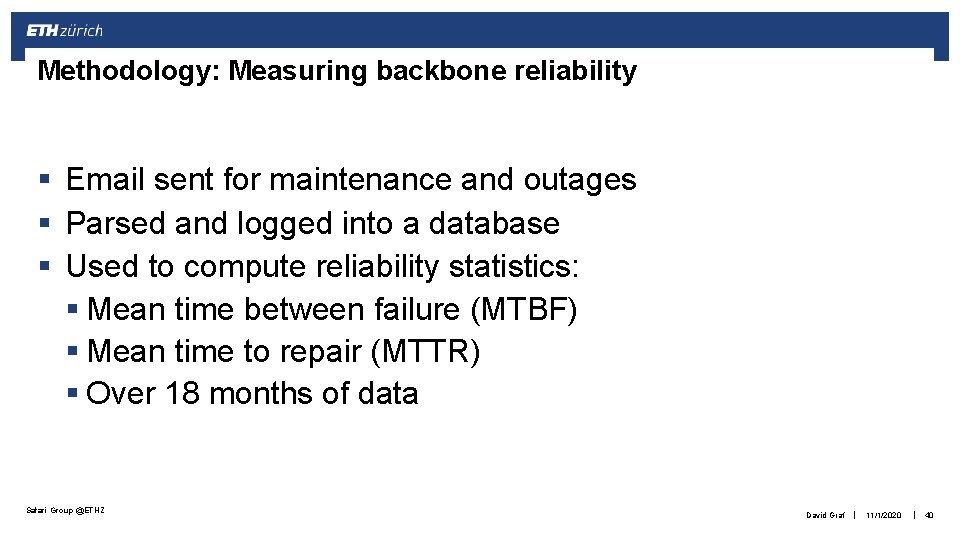

Methodology: Measuring backbone reliability

- Emails are sent for maintenance and outages.
- The emails are parsed and logged into a database.
- The logged data is used to compute reliability statistics:
  - Mean time between failures (MTBF)
  - Mean time to repair (MTTR)
- Over 18 months of data
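The reliability statistics above can be sketched from logged outage intervals. This is a minimal illustration, not the study's actual pipeline: the function name `mtbf_mttr`, the interval format, and the sample outages are hypothetical, and it assumes one common convention, where MTBF is uptime divided by the number of failures and MTTR is downtime divided by the number of failures.

```python
from datetime import datetime, timedelta

def mtbf_mttr(outages, window_start, window_end):
    """Return (MTBF, MTTR) as timedeltas for one edge node or link.

    outages: non-overlapping (start, end) datetime pairs that fall
    inside the observation window. Returns (None, None) when no
    failure was logged, since neither statistic is defined then.
    """
    if not outages:
        return None, None
    downtime = sum((end - start for start, end in outages), timedelta())
    uptime = (window_end - window_start) - downtime
    failures = len(outages)
    return uptime / failures, downtime / failures

# Made-up outage log for a single hypothetical link over a 30-day window.
window_start = datetime(2020, 1, 1)
window_end = datetime(2020, 1, 31)
outages = [
    (datetime(2020, 1, 5, 0, 0), datetime(2020, 1, 5, 6, 0)),     # 6 h outage
    (datetime(2020, 1, 20, 12, 0), datetime(2020, 1, 20, 14, 0)), # 2 h outage
]

mtbf, mttr = mtbf_mttr(outages, window_start, window_end)
print(mtbf, mttr)  # 356 h between failures, 4 h to repair
```

Computing the pair per edge node or per fiber vendor, then looking at how the values are distributed, gives the kind of MTBF and MTTR distributions shown on the following slides.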

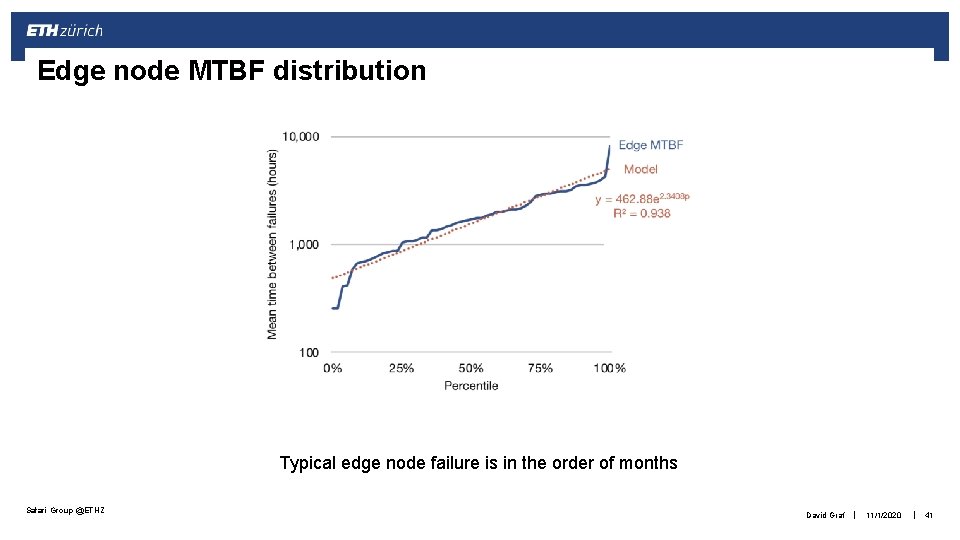

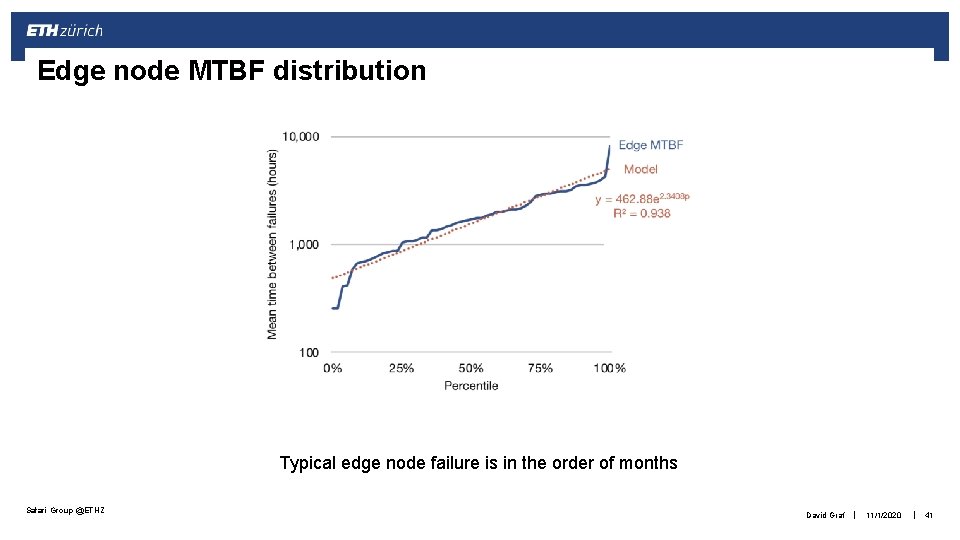

Edge node MTBF distribution

Typical edge node MTBF is on the order of months.

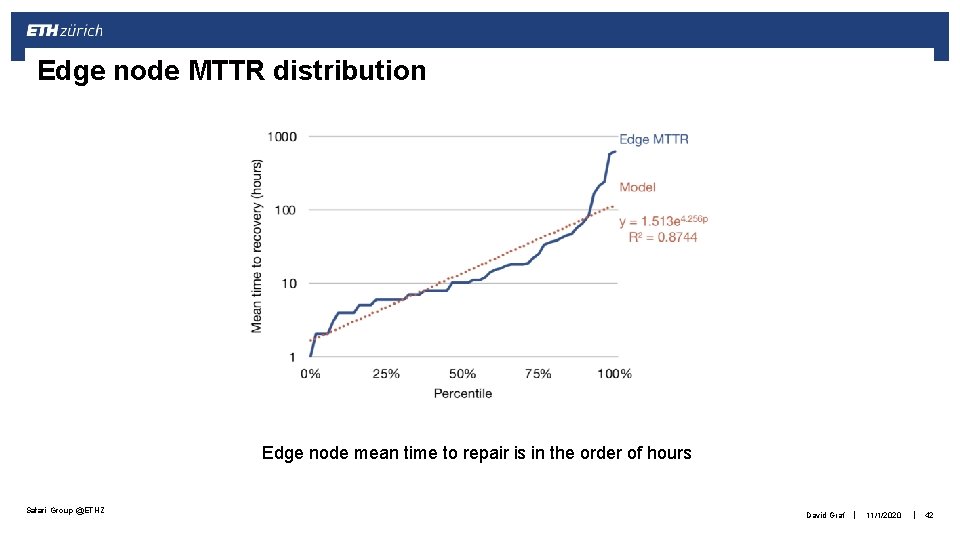

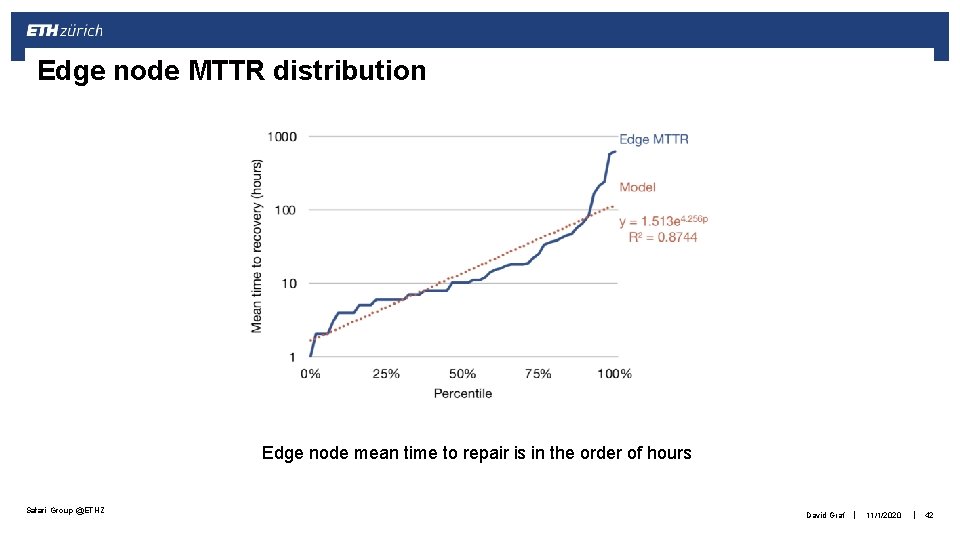

Edge node MTTR distribution

Edge node mean time to repair is on the order of hours.

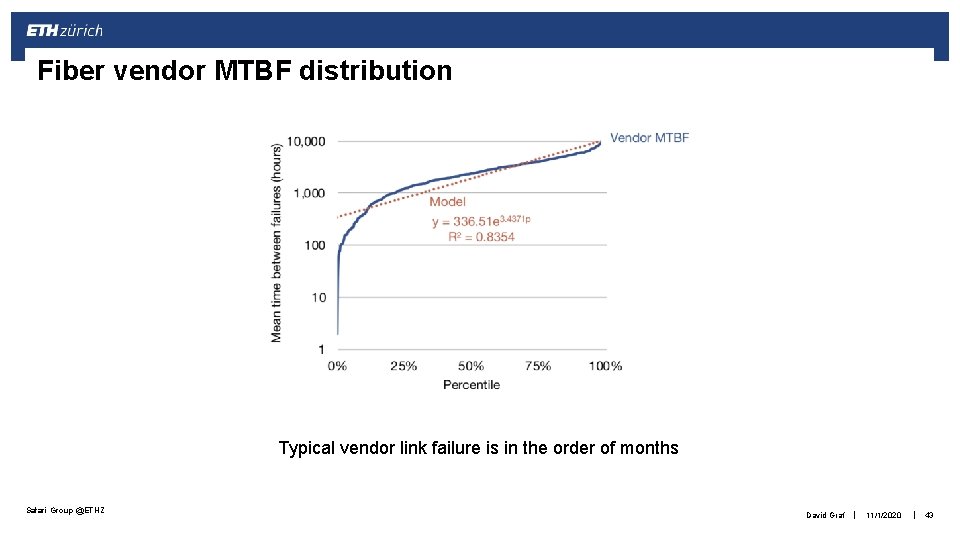

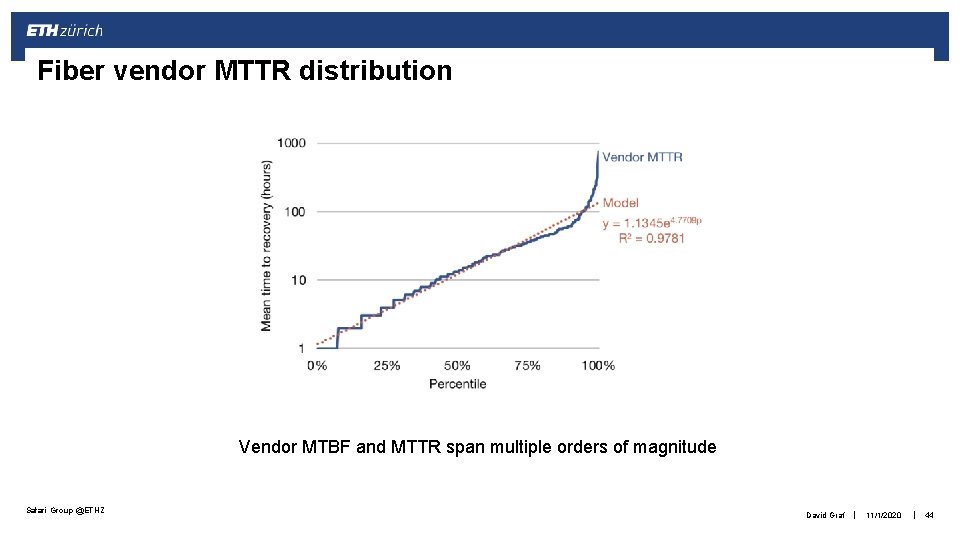

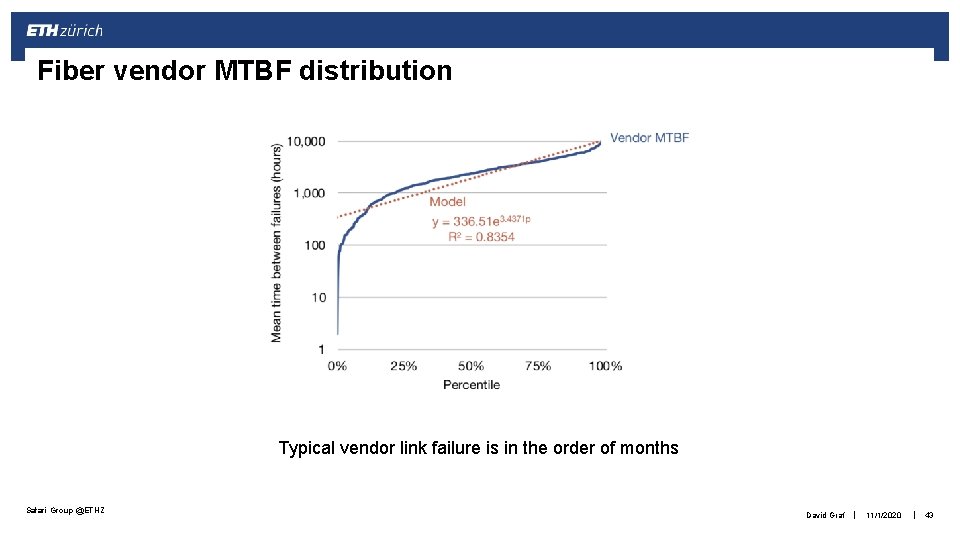

Fiber vendor MTBF distribution

Typical vendor link MTBF is on the order of months.

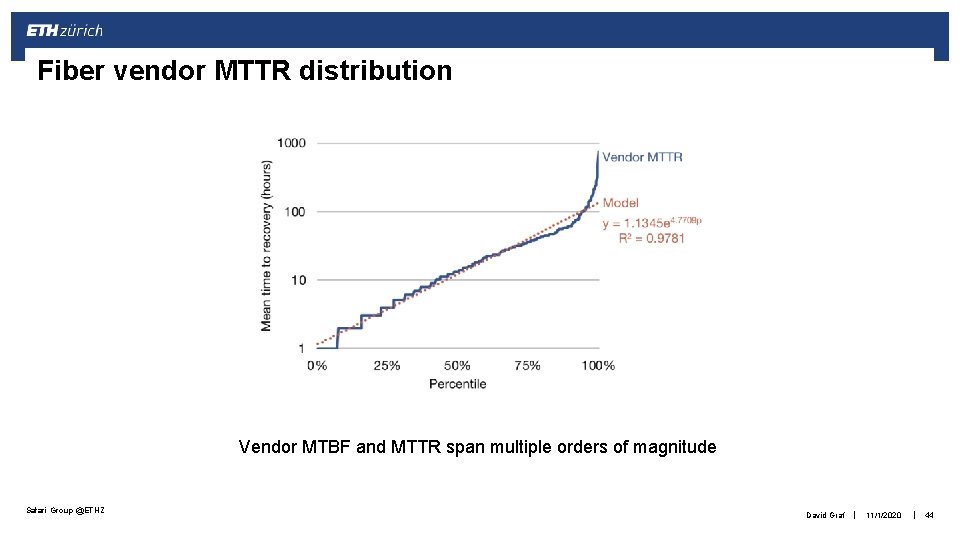

Fiber vendor MTTR distribution

Vendor MTBF and MTTR span multiple orders of magnitude.



Conclusion

- First and last hop reliability forces us to rethink how network and software share the task of reliability.
- Reliable backbone planning is a key enabler for geo-replication and software management flexibility.

Strengths

- Based on Facebook's data
  - 7 years of intra data center data
  - 18 months of inter data center data
- Large-scale data center reliability
  - Common challenge across the industry

Weaknesses

- Why do rack switch incidents increase?
- Logged versus unlogged failures
- Technology changes over time
- More engineers making changes
- Switch maturity


Brainstorming and Discussion

- What will happen to the backbone networks and core switches?
- Will they see a similar shift from proprietary to more customizable software?

Brainstorming and Discussion

- How can the reports be made better?
- Can we automate how they are written?

Brainstorming and Discussion

- Why are the rack switch incidents increasing over time?

The End. Thank you.