
A High Performance FPGA-based Accelerator for Large-Scale Convolutional Neural Networks

Huimin Li, Xitian Fan, Li Jiao, Wei Cao*, Xuegong Zhou, Lingli Wang

State Key Laboratory of ASIC and System, Fudan University, Shanghai, China

Aug 30, 2016

Outline

- Introduction
  - Convolutional neural networks
  - AlexNet model
- Motivation
  - Challenges
  - Previous work
  - Our contribution
- Our work
  - System architecture
  - Parallelism strategies for computing
  - Data access optimization
  - Case study: AlexNet
- Experimental results

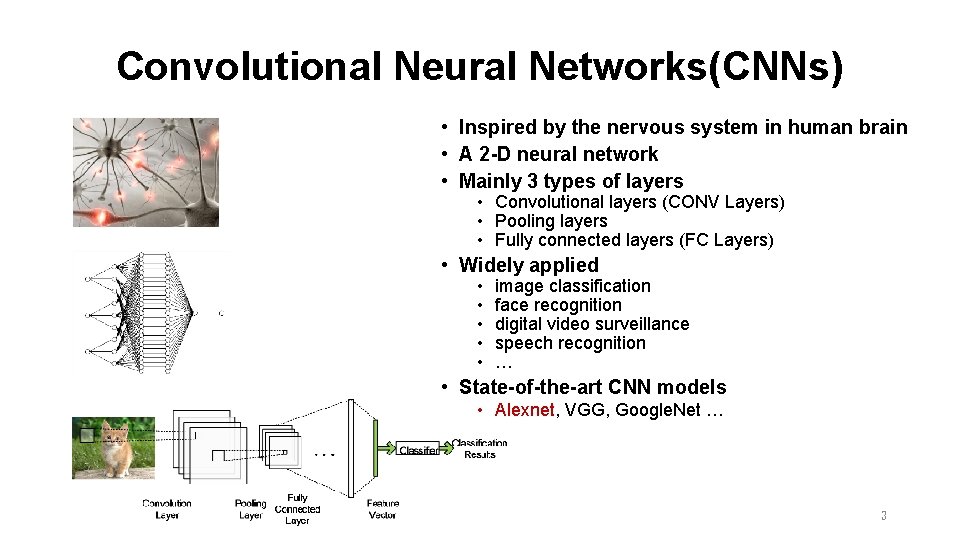

Convolutional Neural Networks (CNNs)

- Inspired by the nervous system of the human brain
- A 2-D neural network
- Three main types of layers:
  - Convolutional layers (CONV layers)
  - Pooling layers
  - Fully connected layers (FC layers)
- Widely applied:
  - image classification
  - face recognition
  - digital video surveillance
  - speech recognition
  - …
- State-of-the-art CNN models: AlexNet, VGG, GoogLeNet, …
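To make the three layer types concrete, here is a minimal pure-Python sketch of a convolution, a max-pooling window, a fully connected layer, and the ReLU activation. It assumes a single channel, square kernels, no padding, and list-of-lists feature maps; the function names are hypothetical and are not part of the accelerator.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(fmap: Matrix, kernel: Matrix, stride: int = 1) -> Matrix:
    """Valid (unpadded) 2-D convolution of one feature map with one kernel."""
    k = len(kernel)
    out_rows = (len(fmap) - k) // stride + 1
    out_cols = (len(fmap[0]) - k) // stride + 1
    out = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            # Multiply-accumulate over the k x k window
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    acc += fmap[r * stride + i][c * stride + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def max_pool(fmap: Matrix, size: int = 2, stride: int = 2) -> Matrix:
    """Keep the maximum of each size x size window."""
    out_rows = (len(fmap) - size) // stride + 1
    out_cols = (len(fmap[0]) - size) // stride + 1
    return [
        [
            max(fmap[r * stride + i][c * stride + j]
                for i in range(size) for j in range(size))
            for c in range(out_cols)
        ]
        for r in range(out_rows)
    ]


def fully_connected(x: List[float], weights: Matrix, bias: List[float]) -> List[float]:
    """Every output neuron is a weighted sum of all inputs plus a bias."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(values: List[float]) -> List[float]:
    """Rectified Linear Unit: y = max(x, 0)."""
    return [max(v, 0.0) for v in values]


if __name__ == "__main__":
    image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
    feature = conv2d(image, [[1, 0], [0, 1]])   # 3 x 3 output
    pooled = max_pool(feature)                   # 1 x 1 output
    print(feature, pooled)
    print(relu(fully_connected([1.0, -2.0], [[1.0, 1.0], [0.5, -1.0]], [0.0, 1.0])))
```

In a CNN these stages are chained: CONV and pooling layers extract feature maps, and the FC layers turn the flattened maps into class scores.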

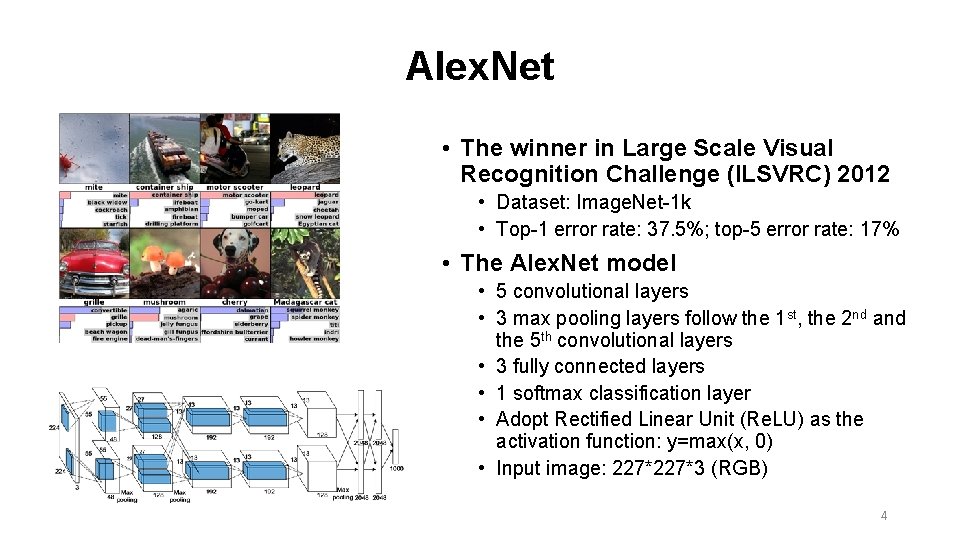

AlexNet

- The winner of the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012
  - Dataset: ImageNet-1k
  - Top-1 error rate: 37.5%; top-5 error rate: 17%
- The AlexNet model:
  - 5 convolutional layers
  - 3 max pooling layers, following the 1st, 2nd, and 5th convolutional layers
  - 3 fully connected layers
  - 1 softmax classification layer
  - Rectified Linear Unit (ReLU) activation: y = max(x, 0)
- Input image: 227×227×3 (RGB)
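The feature-map sizes implied by the 227×227 input can be traced with the usual output-size formula, out = (in + 2·pad - kernel) / stride + 1 (integer division). The kernel sizes, strides, and paddings below are those of the standard Caffe AlexNet model; they are not listed on this slide, so treat them as an assumption about the exact variant used.

```python
from typing import Dict, List, Tuple

# (name, kernel size, stride, padding) of the spatial layers of the 227x227
# AlexNet, taken from the standard Caffe model (an assumption, not the slides).
ALEXNET_SPATIAL_LAYERS: List[Tuple[str, int, int, int]] = [
    ("CONV1", 11, 4, 0),
    ("POOL1", 3, 2, 0),
    ("CONV2", 5, 1, 2),
    ("POOL2", 3, 2, 0),
    ("CONV3", 3, 1, 1),
    ("CONV4", 3, 1, 1),
    ("CONV5", 3, 1, 1),
    ("POOL3", 3, 2, 0),
]


def out_size(in_size: int, kernel: int, stride: int, pad: int) -> int:
    """Output width/height of a convolution or pooling window."""
    return (in_size + 2 * pad - kernel) // stride + 1


def trace_sizes(input_size: int = 227) -> Dict[str, int]:
    """Propagate the feature-map width through every spatial layer."""
    sizes = {}
    size = input_size
    for name, kernel, stride, pad in ALEXNET_SPATIAL_LAYERS:
        size = out_size(size, kernel, stride, pad)
        sizes[name] = size
    return sizes


if __name__ == "__main__":
    sizes = trace_sizes()
    print(sizes)
    # The last pooling layer emits 256 maps of 6 x 6, which feed the first FC layer
    print("FC6 inputs:", sizes["POOL3"] ** 2 * 256)
```

With these settings CONV1 produces 55×55 maps, the three pooling layers shrink them to 27, 13, and 6, and the first FC layer receives 9216 inputs.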

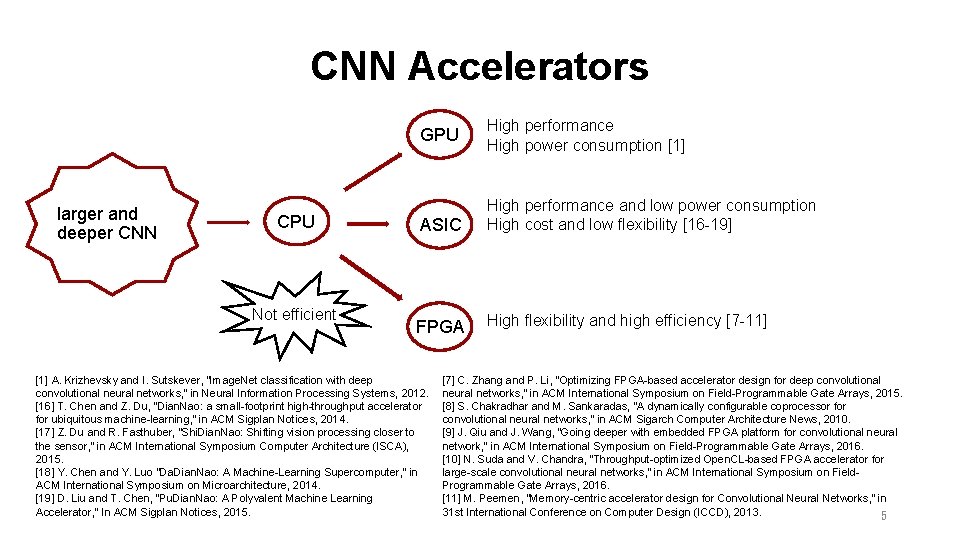

CNN Accelerators

- Trend: larger and deeper CNNs
- CPU: not efficient
- GPU: high performance, but high power consumption [1]
- ASIC: high performance and low power consumption, but high cost and low flexibility [16-19]
- FPGA: high flexibility and high efficiency [7-11]

References

- [1] A. Krizhevsky and I. Sutskever, “ImageNet classification with deep convolutional neural networks,” in Neural Information Processing Systems, 2012.
- [7] C. Zhang and P. Li, “Optimizing FPGA-based accelerator design for deep convolutional neural networks,” in ACM International Symposium on Field-Programmable Gate Arrays, 2015.
- [8] S. Chakradhar and M. Sankaradas, “A dynamically configurable coprocessor for convolutional neural networks,” in ACM SIGARCH Computer Architecture News, 2010.
- [9] J. Qiu and J. Wang, “Going deeper with embedded FPGA platform for convolutional neural network,” in ACM International Symposium on Field-Programmable Gate Arrays, 2016.
- [10] N. Suda and V. Chandra, “Throughput-optimized OpenCL-based FPGA accelerator for large-scale convolutional neural networks,” in ACM International Symposium on Field-Programmable Gate Arrays, 2016.
- [11] M. Peemen, “Memory-centric accelerator design for Convolutional Neural Networks,” in 31st International Conference on Computer Design (ICCD), 2013.
- [16] T. Chen and Z. Du, “DianNao: a small-footprint high-throughput accelerator for ubiquitous machine-learning,” in ACM SIGPLAN Notices, 2014.
- [17] Z. Du and R. Fasthuber, “ShiDianNao: Shifting vision processing closer to the sensor,” in ACM International Symposium on Computer Architecture (ISCA), 2015.
- [18] Y. Chen and Y. Luo, “DaDianNao: A Machine-Learning Supercomputer,” in ACM International Symposium on Microarchitecture, 2014.
- [19] D. Liu and T. Chen, “PuDianNao: A Polyvalent Machine Learning Accelerator,” in ACM SIGPLAN Notices, 2015.

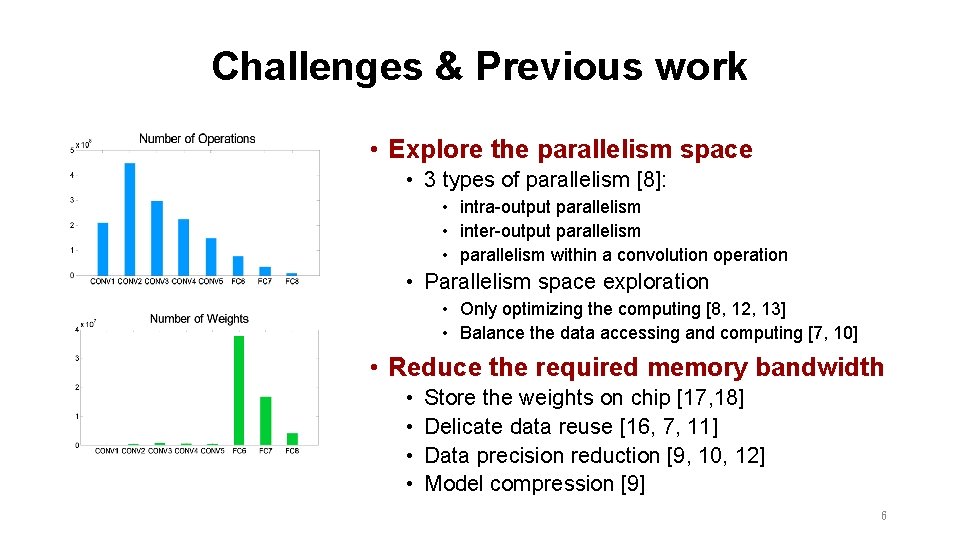

Challenges & Previous Work

- Exploring the parallelism space
  - Three types of parallelism [8]:
    - intra-output parallelism
    - inter-output parallelism
    - parallelism within a convolution operation
  - Parallelism space exploration:
    - optimizing only the computation [8, 12, 13]
    - balancing data access and computation [7, 10]
- Reducing the required memory bandwidth
  - Storing the weights on chip [17, 18]
  - Careful data reuse [16, 7, 11]
  - Data precision reduction [9, 10, 12]
  - Model compression [9]
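Data precision reduction usually means replacing 32-bit floating point with narrow fixed point; this work uses 16-bit fixed point (see the comparison table in the experimental results). The sketch below shows signed 16-bit quantization with saturation. The split into 8 integer and 8 fractional bits (`FRAC_BITS = 8`) is an assumption for illustration only, since the slides do not give the format.

```python
FRAC_BITS = 8      # assumed Q8.8 split; the slides only state "16-bit fixed"
TOTAL_BITS = 16
Q_MIN = -(1 << (TOTAL_BITS - 1))
Q_MAX = (1 << (TOTAL_BITS - 1)) - 1


def saturate(q: int) -> int:
    """Clamp to the representable 16-bit signed range."""
    return max(Q_MIN, min(Q_MAX, q))


def to_fixed(x: float) -> int:
    """Quantize a real value to a 16-bit fixed-point integer (round to nearest)."""
    return saturate(round(x * (1 << FRAC_BITS)))


def to_float(q: int) -> float:
    """Convert a fixed-point integer back to a real value."""
    return q / (1 << FRAC_BITS)


def fixed_mul(a: int, b: int) -> int:
    """Multiply two fixed-point numbers. The raw product has 2*FRAC_BITS
    fractional bits, so shift right to return to FRAC_BITS (floors toward
    -inf), then saturate to 16 bits."""
    return saturate((a * b) >> FRAC_BITS)


if __name__ == "__main__":
    w, x = to_fixed(0.75), to_fixed(-2.5)
    print(w, x, to_float(fixed_mul(w, x)))   # 192 -640 -1.875
```

Halving the word size from 32 to 16 bits halves the bytes moved per weight, which is why precision reduction also lowers the required memory bandwidth.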

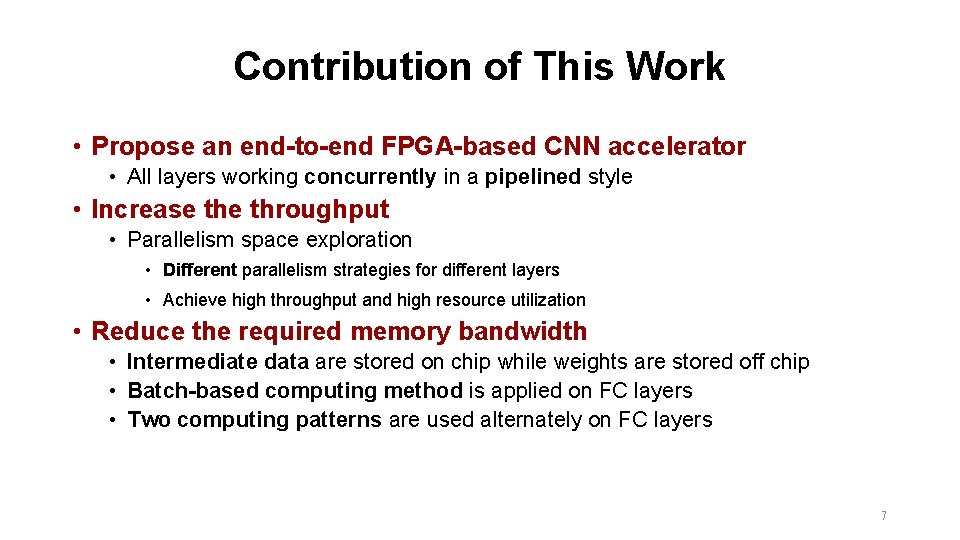

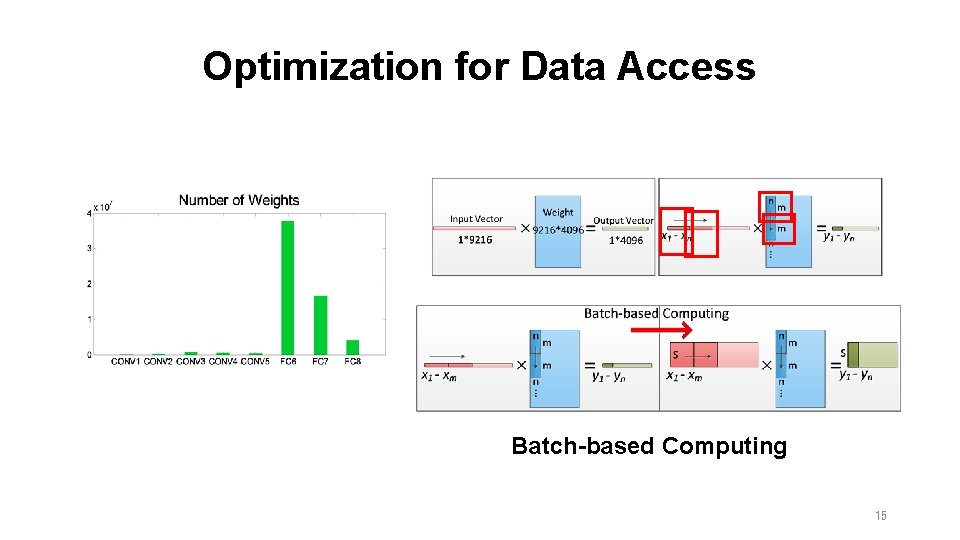

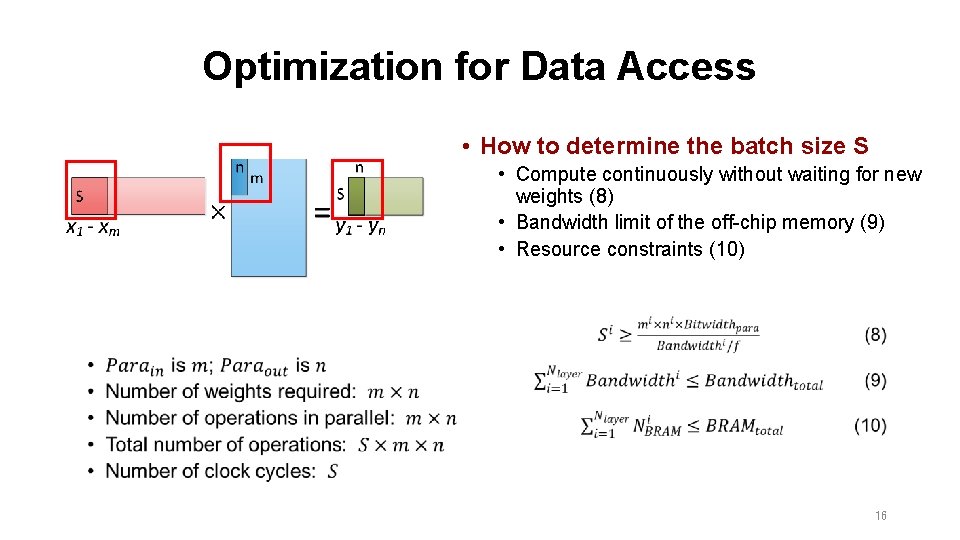

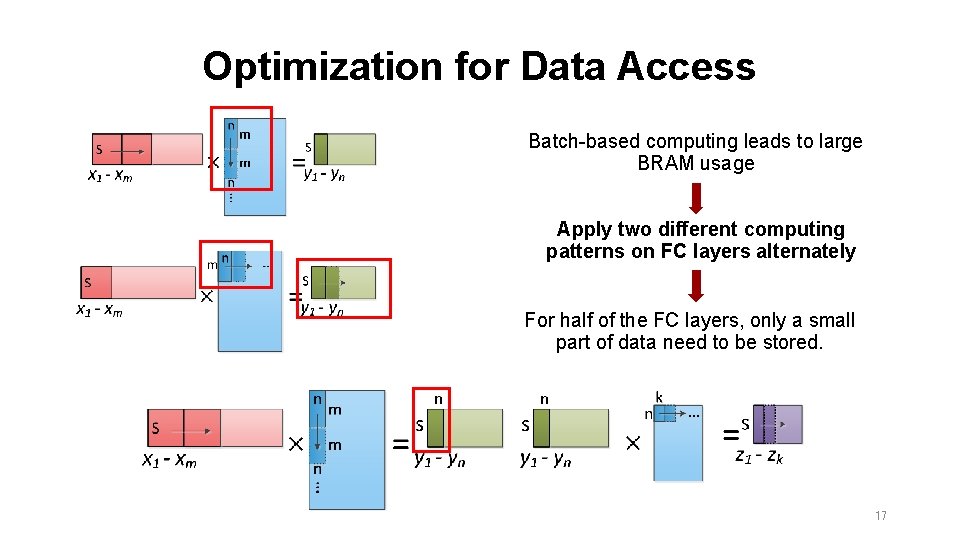

Contribution of This Work

- An end-to-end FPGA-based CNN accelerator
  - All layers work concurrently in a pipelined style, which increases throughput
- Parallelism space exploration
  - Different parallelism strategies for different layers
  - Achieves high throughput and high resource utilization
- Reduced memory bandwidth requirement
  - Intermediate data are stored on chip, while weights are stored off chip
  - Batch-based computing is applied to the FC layers
  - Two computing patterns are used alternately on the FC layers
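Why running all layers concurrently raises throughput can be seen from a simple timing model. The sketch assumes that each layer (pipeline stage) hands a whole image to the next stage through an on-chip buffer; the stage times are hypothetical.

```python
from typing import Sequence


def sequential_time(stage_times: Sequence[float], n_images: int) -> float:
    """Layers run one after another: each image pays for every layer."""
    return n_images * sum(stage_times)


def pipelined_time(stage_times: Sequence[float], n_images: int) -> float:
    """All layers run concurrently on different images. The first image
    traverses every stage; each later image finishes one bottleneck
    interval (the slowest stage) after the previous one."""
    if n_images == 0:
        return 0.0
    return sum(stage_times) + (n_images - 1) * max(stage_times)


if __name__ == "__main__":
    stages = [2.0, 3.0, 5.0]            # hypothetical per-layer times (ms)
    for n in (1, 100):
        print(n, sequential_time(stages, n), pipelined_time(stages, n))
```

Once the pipeline is full, one image completes every max(stage time) rather than every sum(stage time), so the slowest layer alone sets the throughput. This is why the stage times should be balanced.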

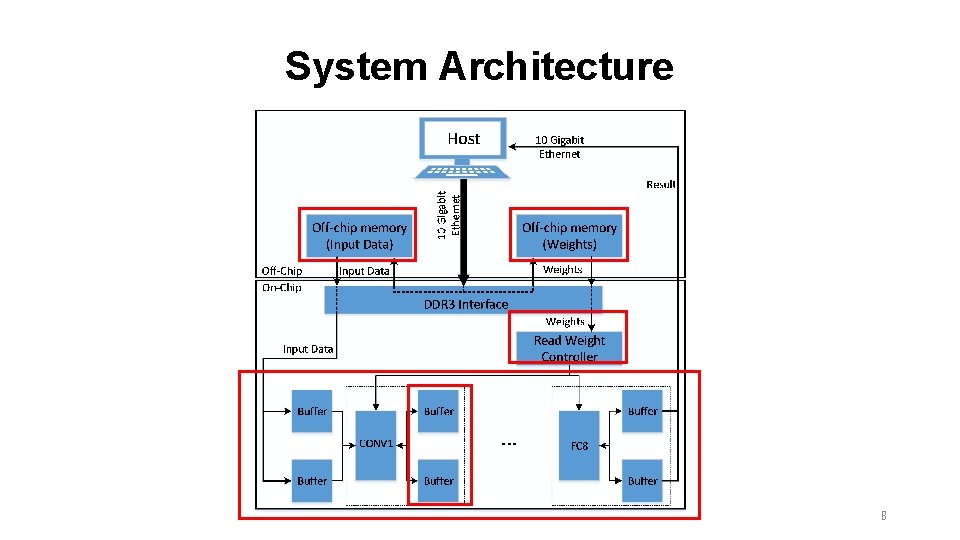

System Architecture

![Parallelism strategies](http://slidetodoc.com/presentation_image_h2/c66cf2dc907d7bfffbc0102d52e3a7cb/image-9.jpg)

Parallelism Strategies

- Three types of parallelism, as in [8]:
  - intra-output parallelism
  - inter-output parallelism
  - parallelism within a convolution operation
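One way to see how the three types of parallelism trade multipliers for cycles is a loop-unrolling cycle model for a single CONV layer. Our reading of [8] is that intra-output parallelism combines several input feature maps into one output in parallel, inter-output parallelism computes several output feature maps at once, and the third type multiplies several kernel taps at once. The model and the parameter names (`p_inter`, `p_intra`, `p_conv`) are our assumptions, not the paper's notation, and memory stalls are ignored.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b for positive integers."""
    return -(-a // b)


def conv_layer_cycles(M: int, N: int, R: int, C: int, K: int,
                      p_inter: int, p_intra: int, p_conv: int) -> int:
    """Cycle estimate for one CONV layer (hypothetical model).

    M, N    : output / input feature maps
    R, C    : output feature-map rows / columns
    K       : kernel width (K x K kernel)
    p_inter : output maps computed in parallel   (inter-output parallelism)
    p_intra : input maps combined in parallel    (intra-output parallelism)
    p_conv  : kernel taps multiplied in parallel (within a convolution)
    """
    # Each unrolled loop shrinks its trip count by its parallelism factor;
    # the remaining iterations execute one per cycle.
    return (ceil_div(M, p_inter) * ceil_div(N, p_intra)
            * R * C * ceil_div(K * K, p_conv))


def multipliers(p_inter: int, p_intra: int, p_conv: int) -> int:
    """Multipliers (e.g. DSP slices) needed to sustain that parallelism."""
    return p_inter * p_intra * p_conv


if __name__ == "__main__":
    # Tiny hypothetical layer: 4 output maps, 2 input maps, 2 x 2 outputs, 3 x 3 kernel
    layer = dict(M=4, N=2, R=2, C=2, K=3)
    print(conv_layer_cycles(**layer, p_inter=1, p_intra=1, p_conv=1))  # fully sequential
    print(conv_layer_cycles(**layer, p_inter=2, p_intra=2, p_conv=3), multipliers(2, 2, 3))
```

The sequential case takes one cycle per multiply-accumulate (288 here); with 12 multipliers the layer needs 24 cycles, a 12× speedup. Factors that do not divide the loop bounds (for example `p_inter=3` with four output maps) leave multipliers idle, which is one reason the best strategy differs from layer to layer.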

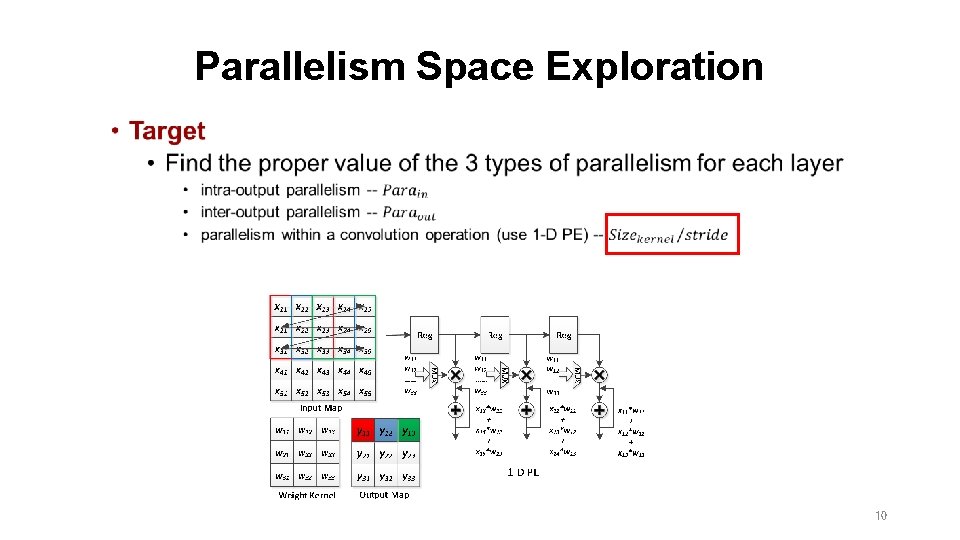

Parallelism Space Exploration

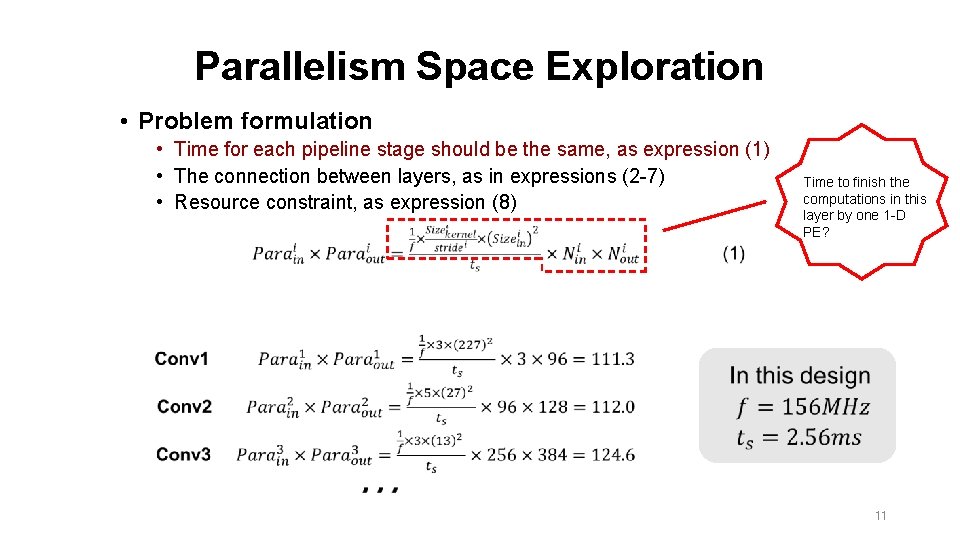

Parallelism Space Exploration

- Problem formulation:
  - Every pipeline stage should take the same time (expression (1))
  - The connections between layers (expressions (2)-(7))
  - The resource constraint (expression (8))

Time for a single 1-D PE to finish the computations of this layer?
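The balance condition of expression (1) can be illustrated by allocating a multiplier budget to the layers in proportion to their work. This is a simplified stand-in for the paper's formulation: it ignores the inter-layer constraints (2)-(7), treats the resource constraint (8) as a single multiplier budget, and does not restrict the factors to values that divide the layer dimensions. The workloads are hypothetical.

```python
from typing import List


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling of a / b for positive integers."""
    return -(-a // b)


def allocate_parallelism(workloads: List[int], budget: int) -> List[int]:
    """Give each layer a share of the multiplier budget proportional to its
    work (MACs per image), so every pipeline stage takes about the same time.
    Floor division keeps the total within budget; each layer gets at least
    one multiplier, which can exceed a very small budget."""
    total = sum(workloads)
    return [max(1, budget * w // total) for w in workloads]


def stage_cycles(workloads: List[int], alloc: List[int]) -> List[int]:
    """Cycles per image for each stage: work divided by its parallelism."""
    return [ceil_div(w, p) for w, p in zip(workloads, alloc)]


if __name__ == "__main__":
    macs = [100, 200, 300]          # hypothetical MACs per image per layer
    alloc = allocate_parallelism(macs, budget=60)
    print(alloc, stage_cycles(macs, alloc))   # [10, 20, 30] [10, 10, 10]
```

When the stage times come out equal, no multiplier sits idle waiting for a slower neighbor; any imbalance directly lowers the pipeline's throughput.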

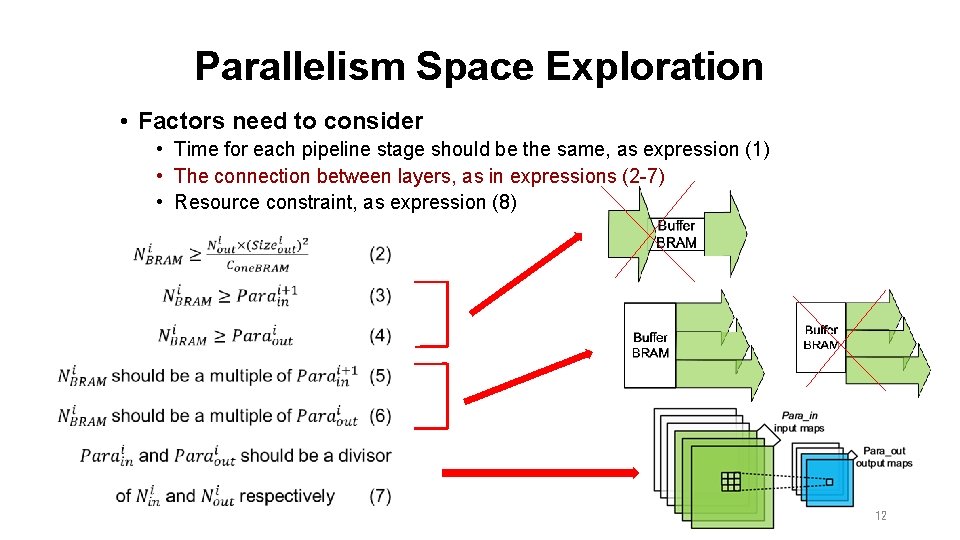


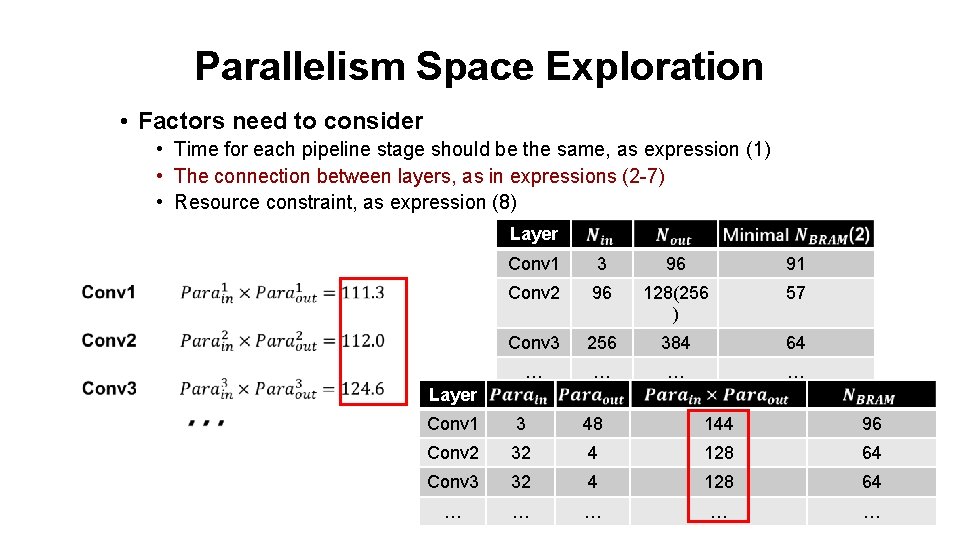

Parallelism Space Exploration

| Layer | Input feature maps | Output feature maps | … |
|---|---|---|---|
| Conv1 | 3 | 96 | 91 |
| Conv2 | 96 | 128 (256) | 57 |
| Conv3 | 256 | 384 | 64 |
| … | … | … | … |

| Layer | Parallelism factors | … | … |
|---|---|---|---|
| Conv1 | 3, 48 | 144 | 96 |
| Conv2 | 32, 4 | 128 | 64 |
| Conv3 | 32, 4 | 128 | 64 |
| … | … | … | … |

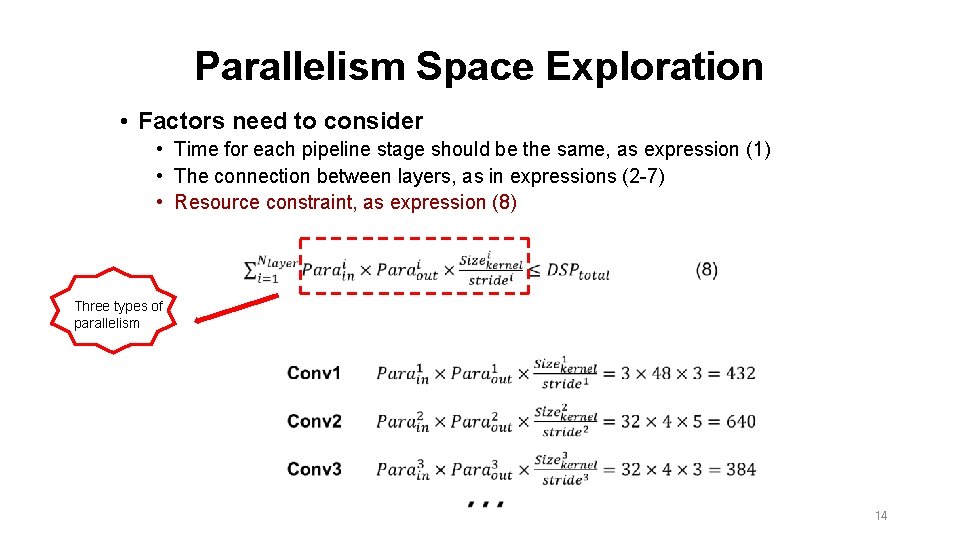

Parallelism Space Exploration

(Figure: three types of parallelism)

Optimization for Data Access: Batch-based Computing
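In an FC layer every weight is used only once per image, so weight traffic dominates. Batch-based computing amortizes each weight fetch over S images. The sketch below assumes the weights are streamed from off-chip memory while the S input vectors are held on chip; it counts weight reads to show the S-fold reduction. The function name is hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def fc_batch(batch: Matrix, weights: Matrix) -> Tuple[Matrix, int]:
    """Fully connected layer over a batch of S input vectors.

    batch   : S x N inputs (one row per image)
    weights : M x N weight matrix
    Returns the S x M outputs and the number of weight reads.
    Each weight is read once and reused for all S images.
    """
    S, M = len(batch), len(weights)
    out = [[0.0] * M for _ in range(S)]
    reads = 0
    for m, row in enumerate(weights):
        for n, w in enumerate(row):
            reads += 1                  # one off-chip fetch of this weight
            for s in range(S):          # reuse it across the whole batch
                out[s][m] += w * batch[s][n]
    return out, reads


if __name__ == "__main__":
    batch = [[1, 2], [3, 4], [5, 6]]     # S = 3 images, N = 2 inputs each
    weights = [[1, 0], [0, 1], [1, 1]]   # M = 3 outputs
    out, reads = fc_batch(batch, weights)
    # Image-by-image processing would read M * N * S = 18 weights instead of 6
    print(out, reads)
```

The price is on-chip storage: all S input vectors and S output vectors must be buffered while the weights stream past.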

Optimization for Data Access

- How to determine the batch size S:
  - The PEs compute continuously without waiting for new weights (expression (8))
  - The bandwidth limit of the off-chip memory (expression (9))
  - Resource constraints (expression (10))
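A rough way to bound S from these criteria: keeping the PEs busy within the available off-chip bandwidth gives a lower bound, and the on-chip buffer gives an upper bound. The cost model below (one fresh weight per multiplier per cycle when S = 1, and a fixed number of buffered bits per image) is an assumption, not the paper's expressions (8)-(10), and all numbers are hypothetical.

```python
from math import ceil
from typing import Optional


def min_batch_for_bandwidth(macs_per_cycle: int, weight_bits: int,
                            clock_hz: int, offchip_bw_bits: int) -> int:
    """Smallest S for which the weight stream fits the off-chip bandwidth.

    With S = 1 every multiplier needs a new weight each cycle; with a batch
    of S images each weight stays in use for S cycles, dividing the demand by S.
    """
    demand = macs_per_cycle * weight_bits * clock_hz   # bit/s at S = 1
    return max(1, ceil(demand / offchip_bw_bits))


def max_batch_for_buffer(buffer_bits: int, bits_per_image: int) -> int:
    """Largest S whose per-image FC data still fit in the on-chip buffer."""
    return buffer_bits // bits_per_image


def choose_batch(macs_per_cycle: int, weight_bits: int, clock_hz: int,
                 offchip_bw_bits: int, buffer_bits: int,
                 bits_per_image: int) -> Optional[int]:
    """Smallest feasible batch size, or None if the bounds conflict."""
    lo = min_batch_for_bandwidth(macs_per_cycle, weight_bits, clock_hz, offchip_bw_bits)
    hi = max_batch_for_buffer(buffer_bits, bits_per_image)
    return lo if lo <= hi else None


if __name__ == "__main__":
    # Hypothetical numbers: 128 multipliers, 16-bit weights, 100 MHz,
    # 51.2 Gbit/s of memory bandwidth, 1 Mbit of buffer, 200 kbit per image.
    print(choose_batch(128, 16, 100_000_000, 51_200_000_000, 1_000_000, 200_000))
```

Picking the smallest feasible S keeps buffer usage, and the latency of collecting a batch, as low as the bandwidth allows.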

Optimization for Data Access

- Batch-based computing leads to large BRAM usage.
- Two different computing patterns are therefore applied alternately on the FC layers.
- For half of the FC layers, only a small part of the data needs to be stored.
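The slide does not spell out the two computing patterns. One plausible pair, assumed here for illustration only, is the two loop orders of a matrix-vector product: an output-major order that finishes one output neuron at a time, and an input-major order that consumes one input at a time while updating all partial sums. Chaining them lets one FC layer stream its outputs directly into the next, so the intermediate vector is never buffered as a whole. The function names are hypothetical.

```python
from typing import Iterable, Iterator, List

Matrix = List[List[float]]


def fc_output_major(x: List[float], weights: Matrix) -> Iterator[float]:
    """Pattern A (assumed): finish one output neuron at a time.
    Needs the whole input vector on chip, but emits each output as soon
    as it is complete."""
    for row in weights:
        yield sum(w * v for w, v in zip(row, x))


def fc_input_major(x_stream: Iterable[float], weights: Matrix) -> List[float]:
    """Pattern B (assumed): consume one input at a time and update every
    partial sum. Keeps only the M partial sums; inputs can arrive as a stream."""
    acc = [0.0] * len(weights)
    for n, v in enumerate(x_stream):
        for m, row in enumerate(weights):
            acc[m] += row[n] * v
    return acc


if __name__ == "__main__":
    x = [1.0, 2.0]
    w1 = [[1.0, 1.0], [1.0, -1.0], [2.0, 0.0]]   # layer i: 2 -> 3
    w2 = [[1.0, 1.0, 1.0], [0.0, 1.0, 0.0]]     # layer i+1: 3 -> 2
    # Layer i streams its outputs straight into layer i+1, so the
    # 3-element intermediate vector is never stored as a whole.
    print(fc_input_major(fc_output_major(x, w1), w2))
```

Both loop orders compute the same result; they differ only in which data must stay resident on chip.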

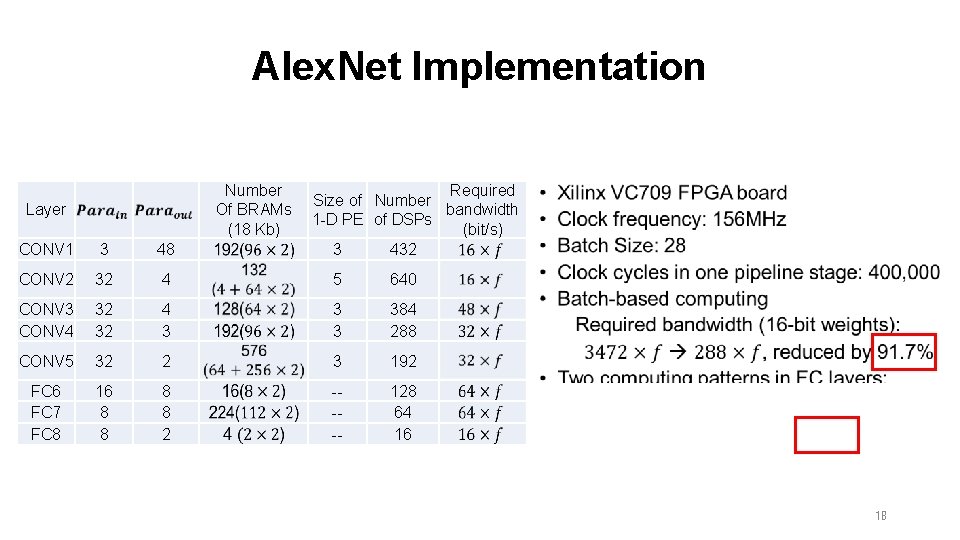

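AlexNet Implementation

| Layer | Parallelism factors | Size of 1-D PE | Number of DSPs | Number of BRAMs (18 Kb) | Required bandwidth (bit/s) |
|---|---|---|---|---|---|
| CONV1 | 3, 48 | 3 | 432 | | |
| CONV2 | 32, 4 | 5 | 640 | | |
| CONV3 | 32, 4 | 3 | 384 | | |
| CONV4 | 32, 3 | 3 | 288 | | |
| CONV5 | 32, 2 | 3 | 192 | | |
| FC6 | 16, … | ---- | 128 | | |
| FC7 | 8, … | ---- | 64 | | |
| FC8 | 8, 2 | ---- | 16 | | |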
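The DSP column can be cross-checked against the resource utilization reported in the experimental results. Assuming one DSP per multiplier, each CONV row's DSP count equals the product of its two parallelism factors and the 1-D PE size, and the per-layer counts sum to the 2144 DSPs used on the VC709. The sketch includes only the CONV rows whose three values are unambiguous in the flattened table.

```python
# Per-layer DSP counts from the AlexNet implementation table
DSPS = {"CONV1": 432, "CONV2": 640, "CONV3": 384, "CONV4": 288,
        "CONV5": 192, "FC6": 128, "FC7": 64, "FC8": 16}

# (first factor, second factor, size of 1-D PE) for CONV rows
CONV_FACTORS = {"CONV1": (3, 48, 3), "CONV2": (32, 4, 5),
                "CONV3": (32, 4, 3), "CONV5": (32, 2, 3)}

AVAILABLE_DSPS = 3600   # DSP48 slices on the VC709, from the utilization table


def dsp_matches_factors() -> bool:
    """Assumption: one DSP per multiplier, so the factors multiply to the DSP count."""
    return all(a * b * pe == DSPS[name] for name, (a, b, pe) in CONV_FACTORS.items())


def total_dsps() -> int:
    """Sum of the per-layer DSP counts."""
    return sum(DSPS.values())


if __name__ == "__main__":
    used = total_dsps()
    print(dsp_matches_factors(), used, round(100 * used / AVAILABLE_DSPS, 2))
    # True 2144 59.56
```

The 59.56% figure matches the DSP48 utilization reported for the VC709.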

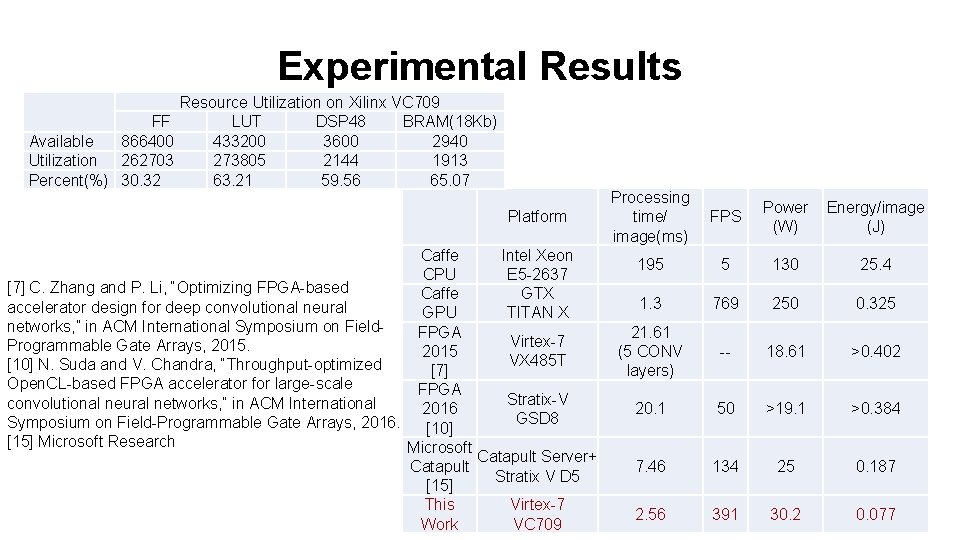

Experimental Results

Resource utilization on Xilinx VC709:

| | FF | LUT | DSP48 | BRAM (18 Kb) |
|---|---|---|---|---|
| Available | 866400 | 433200 | 3600 | 2940 |
| Utilization | 262703 | 273805 | 2144 | 1913 |
| Percent (%) | 30.32 | 63.21 | 59.56 | 65.07 |

Comparison with other platforms:

| Platform | Device | Processing time/image (ms) | FPS | Power (W) | Energy/image (J) |
|---|---|---|---|---|---|
| CPU (Caffe) | Intel Xeon CPU E5-2637 | 195 | 5 | 130 | 25.4 |
| GPU (Caffe) | GTX TITAN X | 1.3 | 769 | 250 | 0.325 |
| FPGA 2015 [7] | Virtex-7 VX485T | 21.61 (5 CONV layers) | -- | 18.61 | >0.402 |
| FPGA 2016 [10] | Stratix-V GSD8 | 20.1 | 50 | >19.1 | >0.384 |
| Microsoft Research Catapult [15] | Catapult Server + Stratix V D5 | 7.46 | 134 | 25 | 0.187 |
| This work | Virtex-7 VC709 | 2.56 | 391 | 30.2 | 0.077 |
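The FPS and energy columns follow from the processing time and power: FPS = 1000 / (ms per image) and energy per image = power × time. A short check over the rows with complete data; the dictionary keys are just labels chosen for the sketch.

```python
from typing import Dict, Tuple

# (processing time per image in ms, power in W) from the comparison table
PLATFORMS: Dict[str, Tuple[float, float]] = {
    "CPU (Caffe, Xeon E5-2637)": (195.0, 130.0),
    "GPU (Caffe, GTX TITAN X)": (1.3, 250.0),
    "Microsoft Catapult [15]": (7.46, 25.0),
    "This work (VC709)": (2.56, 30.2),
}


def fps(ms_per_image: float) -> float:
    """Images per second when one image finishes every ms_per_image."""
    return 1000.0 / ms_per_image


def energy_per_image(ms_per_image: float, power_w: float) -> float:
    """Joules per image = power (W) x time (s)."""
    return power_w * ms_per_image / 1000.0


if __name__ == "__main__":
    for name, (ms, watts) in PLATFORMS.items():
        print(f"{name}: {fps(ms):.0f} FPS, {energy_per_image(ms, watts):.3f} J/image")
```

The computed values match the table after rounding. The rows for [7] and [10] are left out because their entries are lower bounds or cover only the CONV layers.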

![Experimental results](http://slidetodoc.com/presentation_image_h2/c66cf2dc907d7bfffbc0102d52e3a7cb/image-20.jpg)

Experimental Results

| | ACM 2010 [8] | FPGA 2015 [7] | FPGA 2016 [10] | FPGA 2016 [9] | This Work |
|---|---|---|---|---|---|
| Platform | Virtex-5 SX240T | Virtex-7 VX485T | Stratix-V GSD8 | Zynq XC7Z045 | Virtex-7 VC709 |
| Clock (MHz) | 120 | 100 | 120 | 150 | 156 |
| Precision | 48-bit fixed | 32-bit float | (8-16)-bit fixed | 16-bit fixed | 16-bit fixed |
| CNN size (GOP) | 0.52 | 1.33 | 30.9 | 30.76 | 1.45 |
| Performance (GOP/s) | 16 | 61.62 | 117.8 | 136.97 | 565.94 |
| Power (W) | 14 | 18.61 | 25.8 | 9.63 | 30.2 |
| Power efficiency (GOP/s/W) | 1.14 | 3.31 | 4.57 | 14.22 | 22.15 |
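Throughput in GOP/s is the workload per image divided by the processing time per image. Using the CNN sizes here and the per-image processing times from the platform comparison, a short sketch:

```python
def throughput_gops(gop_per_image: float, ms_per_image: float) -> float:
    """Sustained GOP/s = operations per image / seconds per image."""
    return gop_per_image / (ms_per_image / 1000.0)


if __name__ == "__main__":
    # This work: 1.45 GOP per AlexNet image, 2.56 ms per image (table: 565.94 GOP/s)
    print(round(throughput_gops(1.45, 2.56), 1))
    # [7]: 1.33 GOP (CONV layers only), 21.61 ms per image (table: 61.62 GOP/s)
    print(round(throughput_gops(1.33, 21.61), 1))
```

Both results agree with the reported performance to within about 0.2%.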

Demo

Thank you! Q&A
