A Hadoop Overview

Outline

- Progress Report
- MapReduce Programming
- Hadoop Cluster Overview
- HBase Overview
- Q & A

Progress

- Hadoop setup has been completed: version 0.19.0, running in standalone mode.
- HBase setup has been completed: version 0.19.3, running without HDFS.
- A simple MapReduce demonstration: a simple word count program.

Testing Platform

- Fedora 10
- JDK 1.6.0_18
- Hadoop-0.19.0
- HBase-0.19.3
- One can connect to the machine using PieTTY or PuTTY.
  - Host: 140.112.90.180
  - Account: labuser
  - Password: robot3233
  - Port: 3385 (using an SSH connection)

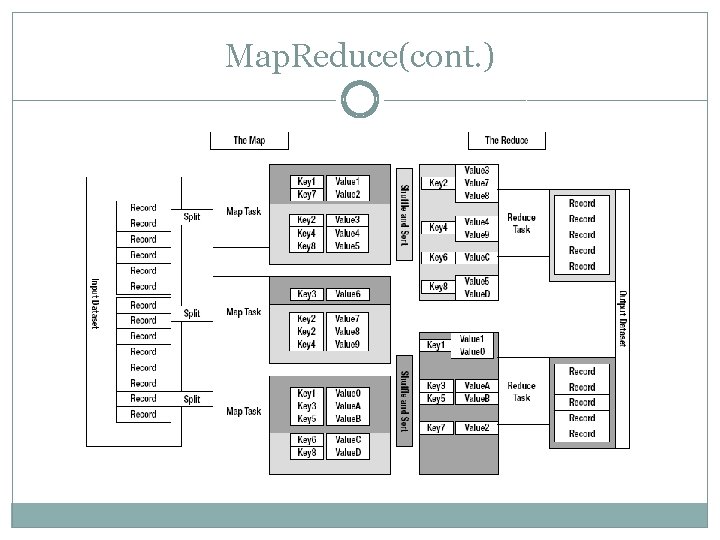

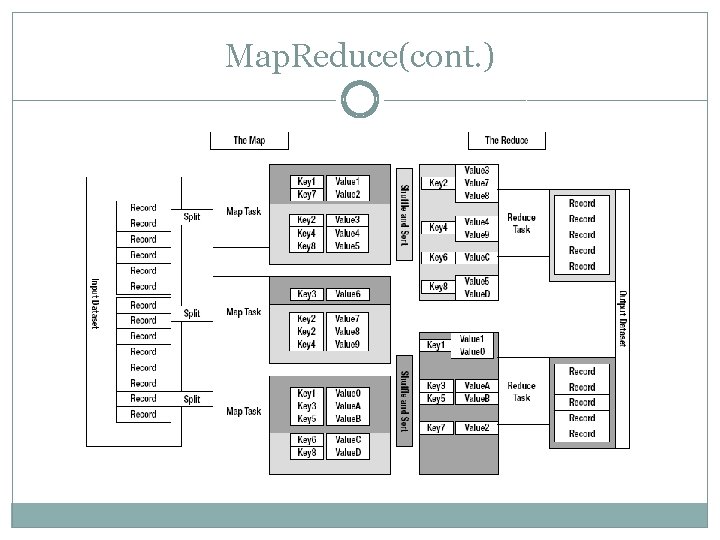

MapReduce

- A computing framework consisting of a map phase, a shuffle phase, and a reduce phase.
- The map function and the reduce function are provided by the user.
- Key-value pair (KVP)
  - map is invoked once for each input KVP and may output any number of KVPs.
  - reduce is invoked once for each key together with its corresponding values, and may output any number of KVPs.
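The three phases can be illustrated without a cluster. The following is a minimal in-memory sketch in plain Java, not Hadoop code: the class name `MapReduceSketch` and its methods are hypothetical, and the shuffle is simulated with a sorted map. It only shows how KVPs flow from map, through grouping by key, into reduce for a word count.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-alone illustration; none of these names come from Hadoop.
public class MapReduceSketch {
    /** Map: one input line in, any number of (word, 1) pairs out. */
    public static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                out.add(new SimpleEntry<>(word, 1));
            }
        }
        return out;
    }

    /** Shuffle: group all values by key; the TreeMap keeps keys sorted. */
    public static TreeMap<String, List<Integer>> shuffle(
            List<Map.Entry<String, Integer>> pairs) {
        TreeMap<String, List<Integer>> grouped = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>())
                   .add(pair.getValue());
        }
        return grouped;
    }

    /** Reduce: one key and all of its values in, one count out. */
    public static int reduce(String key, List<Integer> values) {
        int sum = 0;
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    /** Runs the three phases in sequence over the given input lines. */
    public static TreeMap<String, Integer> run(List<String> lines) {
        List<Map.Entry<String, Integer>> mapped = new ArrayList<>();
        for (String line : lines) {
            mapped.addAll(map(line));
        }
        TreeMap<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : shuffle(mapped).entrySet()) {
            result.put(e.getKey(), reduce(e.getKey(), e.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        // prints {be=2, not=1, or=1, to=2}
        System.out.println(run(Arrays.asList("to be or not", "to be")));
    }
}
```

In a real job the framework performs the shuffle and distributes the map and reduce calls across nodes; the user supplies only the two functions.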

MapReduce (cont.)

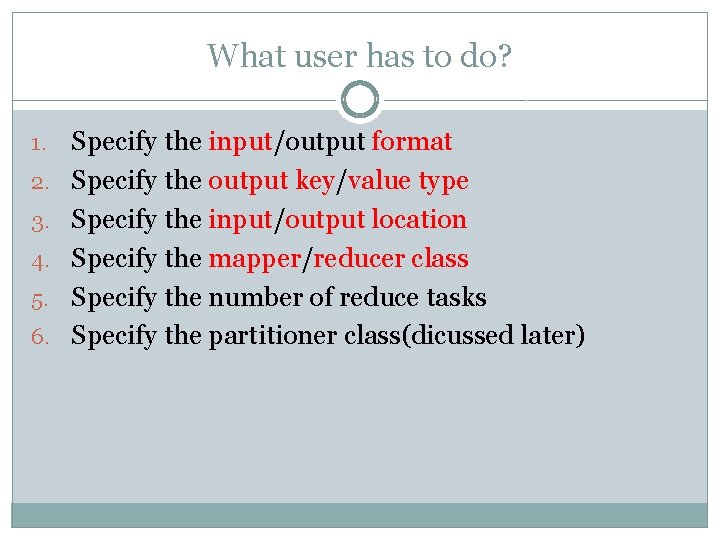

What does the user have to do?

1. Specify the input/output format
2. Specify the output key/value type
3. Specify the input/output location
4. Specify the mapper/reducer class
5. Specify the number of reduce tasks
6. Specify the partitioner class (discussed later)

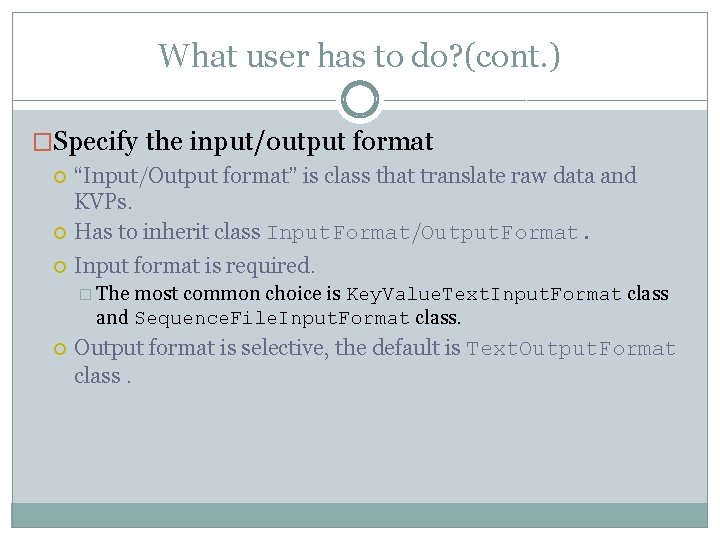

What does the user have to do? (cont.)

- Specify the input/output format
  - An "input/output format" is a class that translates between raw data and KVPs.
  - It has to implement the InputFormat/OutputFormat interface.
  - The input format is required.
    - The most common choices are the KeyValueTextInputFormat class and the SequenceFileInputFormat class.
  - The output format is optional; the default is the TextOutputFormat class.

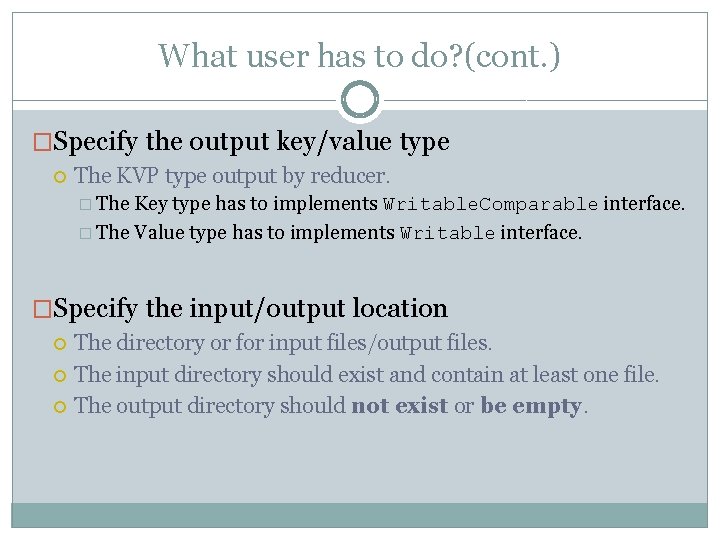

What does the user have to do? (cont.)

- Specify the output key/value type
  - This is the KVP type output by the reducer.
    - The key type has to implement the WritableComparable interface.
    - The value type has to implement the Writable interface.
- Specify the input/output location
  - The directory for the input files/output files.
  - The input directory should exist and contain at least one file.
  - The output directory should not exist or be empty.
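To show what the key/value type requirement amounts to, here is a small sketch in plain Java. Hadoop's Writable interface declares `write(DataOutput)` and `readFields(DataInput)`; the `SimpleWritable` interface below is a local stand-in with the same two methods so that the example compiles without Hadoop on the classpath, and `YearKey` is a hypothetical key type.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

public class WritableSketch {
    /** Local stand-in for Hadoop's Writable (assumption: same two methods). */
    public interface SimpleWritable {
        void write(DataOutput out) throws IOException;
        void readFields(DataInput in) throws IOException;
    }

    /** A hypothetical key type: serializable and comparable, so it can be sorted. */
    public static class YearKey implements SimpleWritable, Comparable<YearKey> {
        public int year;

        public YearKey() { }                       // needed before readFields
        public YearKey(int year) { this.year = year; }

        public void write(DataOutput out) throws IOException {
            out.writeInt(year);
        }

        public void readFields(DataInput in) throws IOException {
            year = in.readInt();
        }

        public int compareTo(YearKey other) {
            return Integer.compare(year, other.year);
        }
    }

    /** Serializes a key to bytes and reads it back into a fresh instance. */
    public static YearKey roundTrip(YearKey key) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            key.write(new DataOutputStream(bytes));
            YearKey copy = new YearKey();
            copy.readFields(new DataInputStream(
                    new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(roundTrip(new YearKey(2009)).year); // prints 2009
    }
}
```

Keys need the comparison method because the framework sorts them between the map and reduce phases; values are only serialized.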

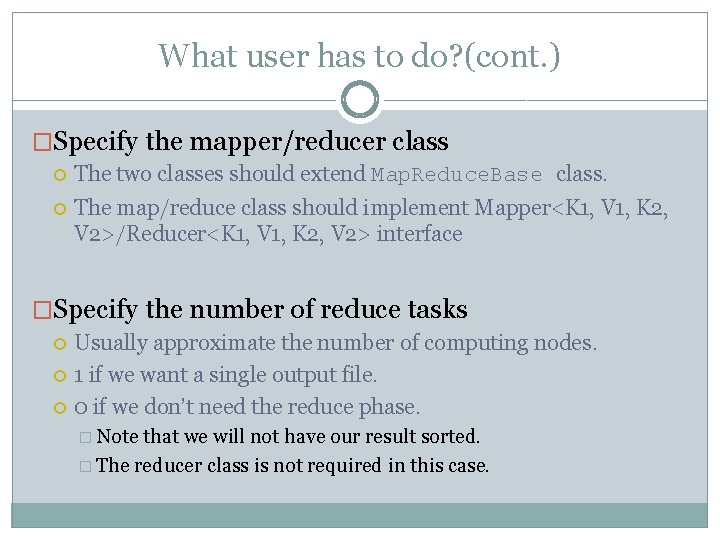

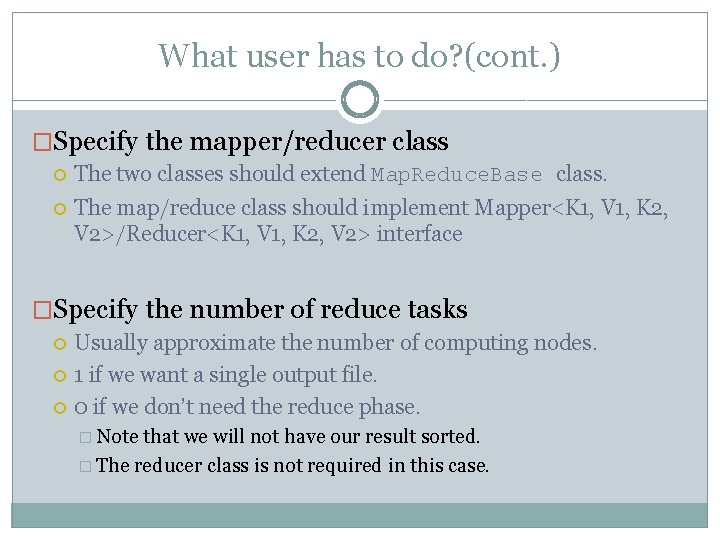

What does the user have to do? (cont.)

- Specify the mapper/reducer class
  - The two classes should extend the MapReduceBase class.
  - The mapper/reducer class should implement the Mapper<K1, V1, K2, V2>/Reducer<K1, V1, K2, V2> interface.
- Specify the number of reduce tasks
  - Usually approximately the number of computing nodes.
  - 1 if we want a single output file.
  - 0 if we don't need the reduce phase.
    - Note that the result will not be sorted in this case.
    - The reducer class is not required in this case.
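When there is more than one reduce task, each map output key has to be routed to exactly one of them; this is the job of the partitioner class mentioned earlier. The sketch below is plain Java with a hypothetical class name. The formula is the hash-modulo rule that, to my knowledge, Hadoop's default HashPartitioner uses; treat that correspondence as an assumption rather than a quotation of the library.

```java
// Hypothetical illustration of hash partitioning; not a Hadoop class.
public class PartitionSketch {
    /**
     * Returns the index of the reduce task that receives the given key.
     * Masking with Integer.MAX_VALUE clears the sign bit so the result
     * is never negative, even for keys with a negative hash code.
     */
    public static int partitionFor(Object key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        int numReduceTasks = 4;
        for (String key : new String[] {"apple", "banana", "cherry"}) {
            System.out.println(key + " -> reduce task "
                    + partitionFor(key, numReduceTasks));
        }
    }
}
```

Equal keys always land on the same reduce task, which is what lets a single reduce call see every value for its key. With one reduce task, every key maps to partition 0, hence the single output file.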

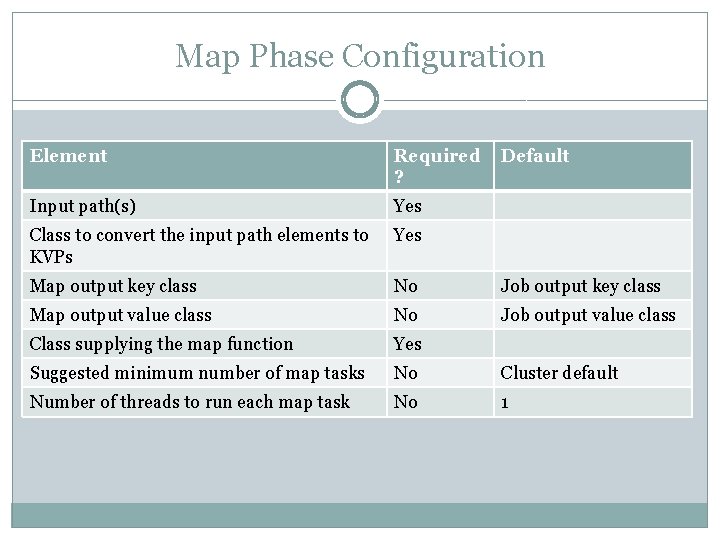

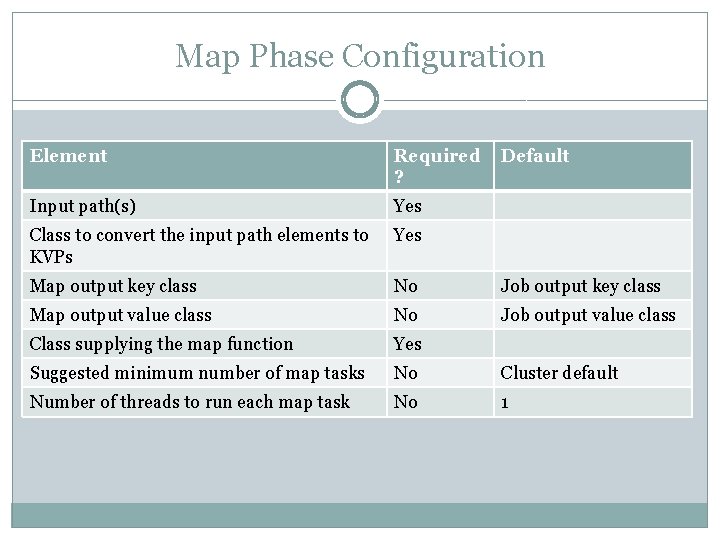

Map Phase Configuration

| Element | Required? | Default |
| --- | --- | --- |
| Input path(s) | Yes | |
| Class to convert the input path elements to KVPs | Yes | |
| Map output key class | No | Job output key class |
| Map output value class | No | Job output value class |
| Class supplying the map function | Yes | |
| Suggested minimum number of map tasks | No | Cluster default |
| Number of threads to run each map task | No | 1 |

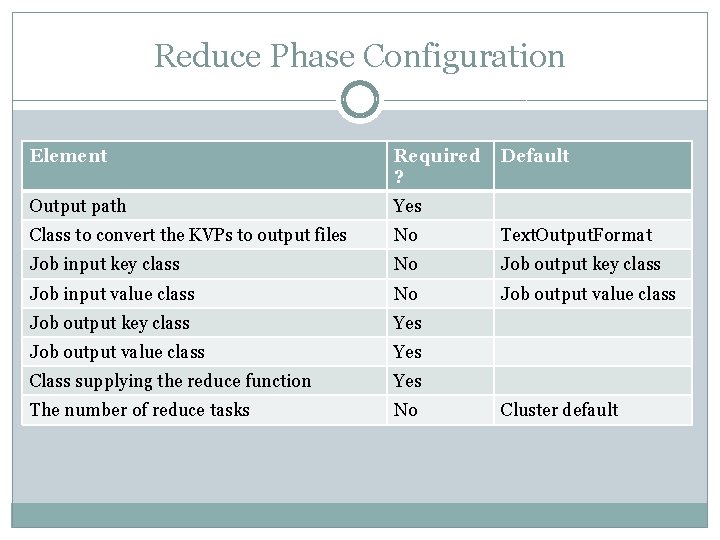

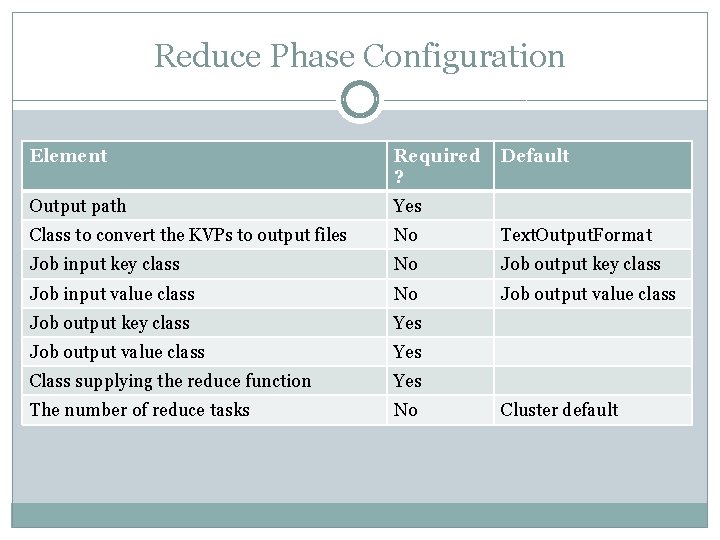

Reduce Phase Configuration

| Element | Required? | Default |
| --- | --- | --- |
| Output path | Yes | |
| Class to convert the KVPs to output files | No | TextOutputFormat |
| Job input key class | No | Job output key class |
| Job input value class | No | Job output value class |
| Job output key class | Yes | |
| Job output value class | Yes | |
| Class supplying the reduce function | Yes | |
| The number of reduce tasks | No | Cluster default |

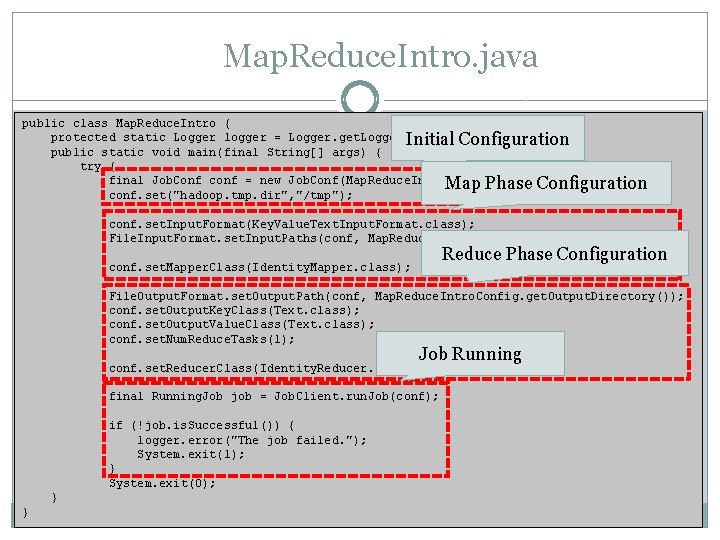

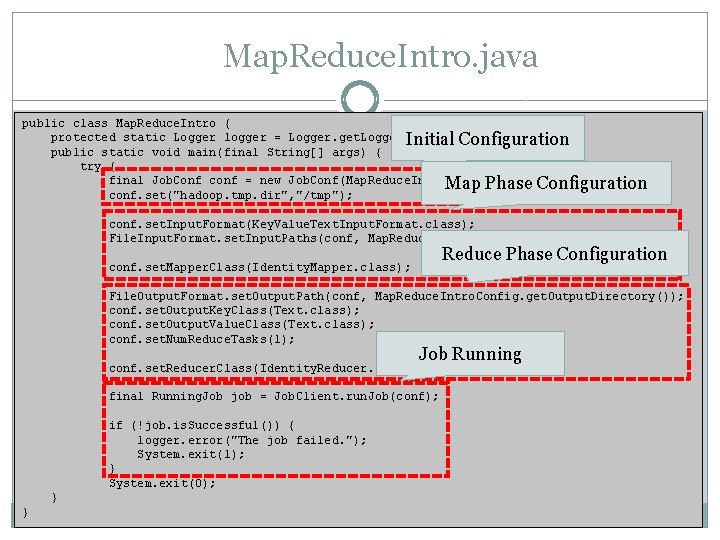

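MapReduceIntro.java

    import java.io.IOException;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapred.FileInputFormat;
    import org.apache.hadoop.mapred.FileOutputFormat;
    import org.apache.hadoop.mapred.JobClient;
    import org.apache.hadoop.mapred.JobConf;
    import org.apache.hadoop.mapred.KeyValueTextInputFormat;
    import org.apache.hadoop.mapred.RunningJob;
    import org.apache.hadoop.mapred.lib.IdentityMapper;
    import org.apache.hadoop.mapred.lib.IdentityReducer;
    import org.apache.log4j.Logger;

    // MapReduceIntroConfig is a helper class assumed to be on the classpath.
    public class MapReduceIntro {
        protected static Logger logger = Logger.getLogger(MapReduceIntro.class);

        public static void main(final String[] args) {
            try {
                // Initial configuration
                final JobConf conf = new JobConf(MapReduceIntro.class);
                conf.set("hadoop.tmp.dir", "/tmp");

                // Map phase configuration
                conf.setInputFormat(KeyValueTextInputFormat.class);
                FileInputFormat.setInputPaths(conf, MapReduceIntroConfig.getInputDirectory());
                conf.setMapperClass(IdentityMapper.class);

                // Reduce phase configuration
                FileOutputFormat.setOutputPath(conf, MapReduceIntroConfig.getOutputDirectory());
                conf.setOutputKeyClass(Text.class);
                conf.setOutputValueClass(Text.class);
                conf.setNumReduceTasks(1);
                conf.setReducerClass(IdentityReducer.class);

                // Job running
                final RunningJob job = JobClient.runJob(conf);
                if (!job.isSuccessful()) {
                    logger.error("The job failed.");
                    System.exit(1);
                }
                System.exit(0);
            } catch (final IOException e) {
                // Submitting the job and querying its status can throw IOException.
                logger.error("The job failed with an I/O exception.", e);
                System.exit(1);
            }
        }
    }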

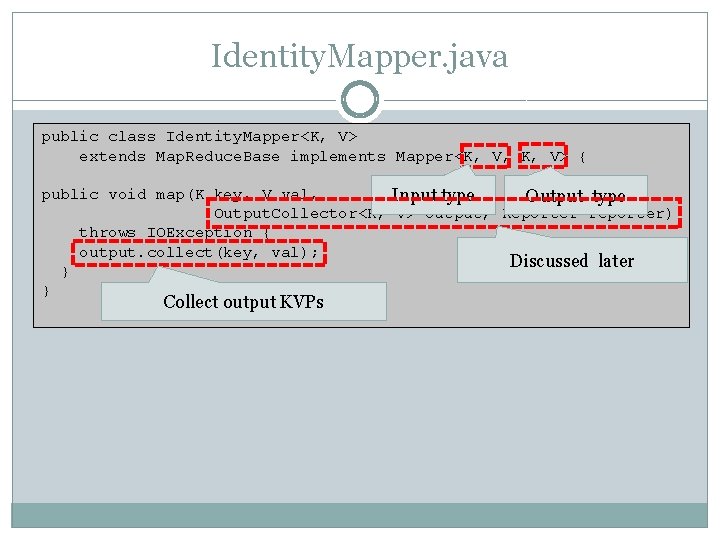

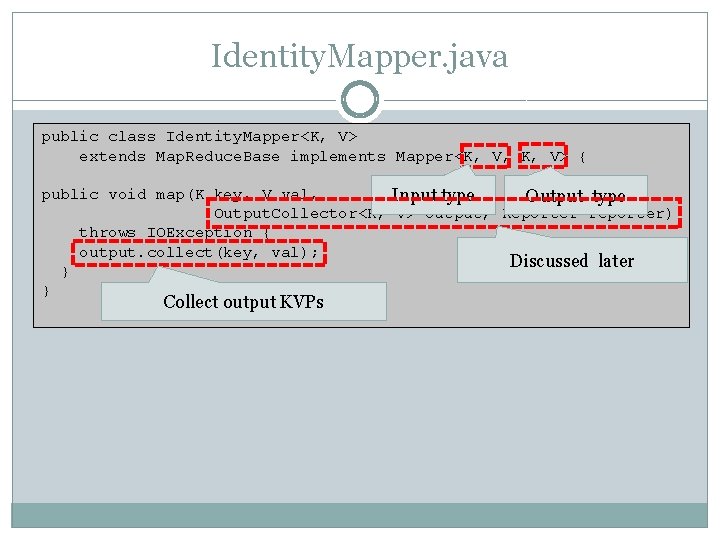

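IdentityMapper.java

    import java.io.IOException;
    import org.apache.hadoop.mapred.MapReduceBase;
    import org.apache.hadoop.mapred.Mapper;
    import org.apache.hadoop.mapred.OutputCollector;
    import org.apache.hadoop.mapred.Reporter;

    public class IdentityMapper<K, V> extends MapReduceBase
            implements Mapper<K, V, K, V> {
        public void map(K key, V val,                  // input type
                        OutputCollector<K, V> output,  // collects the output KVPs
                        Reporter reporter)             // discussed later
                throws IOException {
            output.collect(key, val);
        }
    }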

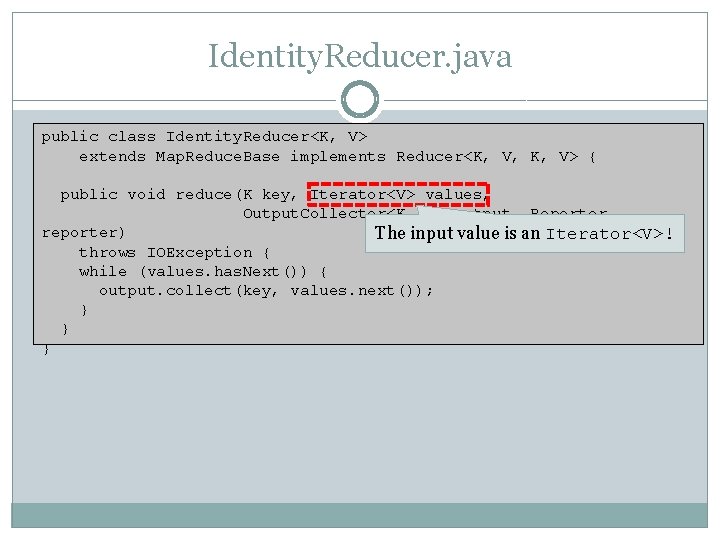

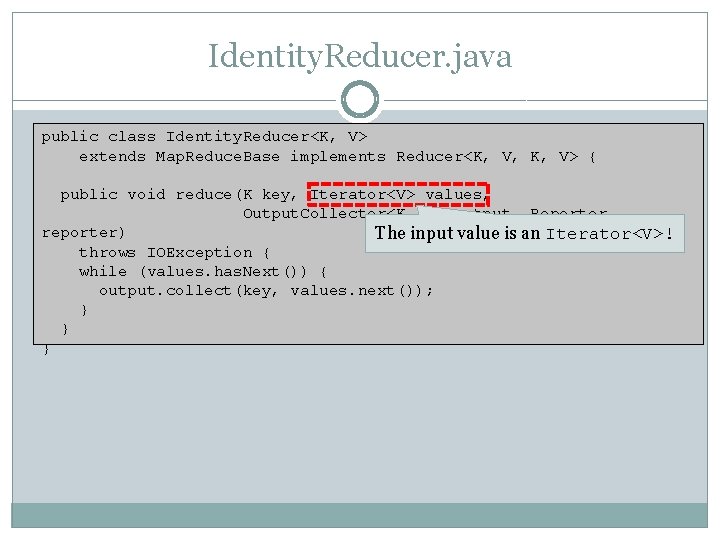

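IdentityReducer.java

    import java.io.IOException;
    import java.util.Iterator;
    import org.apache.hadoop.mapred.MapReduceBase;
    import org.apache.hadoop.mapred.OutputCollector;
    import org.apache.hadoop.mapred.Reducer;
    import org.apache.hadoop.mapred.Reporter;

    public class IdentityReducer<K, V> extends MapReduceBase
            implements Reducer<K, V, K, V> {
        // Note: the input value is an Iterator<V>, not a single value.
        public void reduce(K key, Iterator<V> values,
                           OutputCollector<K, V> output, Reporter reporter)
                throws IOException {
            while (values.hasNext()) {
                output.collect(key, values.next());
            }
        }
    }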

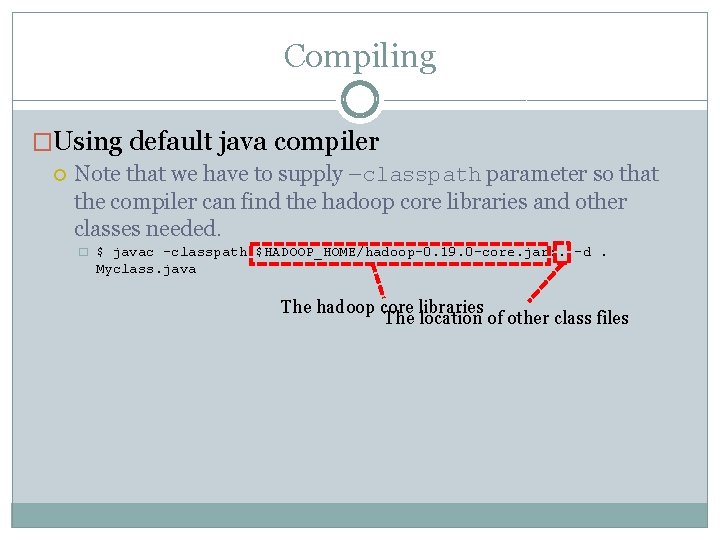

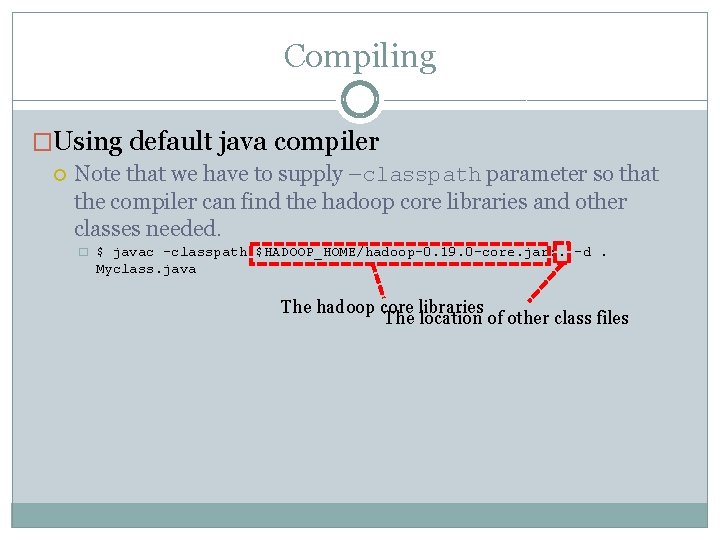

Compiling

- Use the default Java compiler.
  - Note that we have to supply the -classpath parameter so that the compiler can find the Hadoop core libraries and any other classes needed.

        $ javac -classpath $HADOOP_HOME/hadoop-0.19.0-core.jar:. -d . Myclass.java

  - $HADOOP_HOME/hadoop-0.19.0-core.jar is the Hadoop core library.
  - The . after the colon is the location of the other class files.

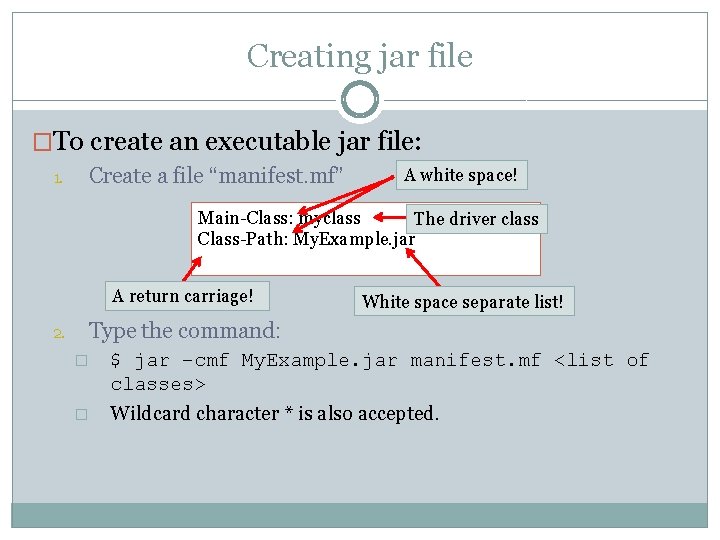

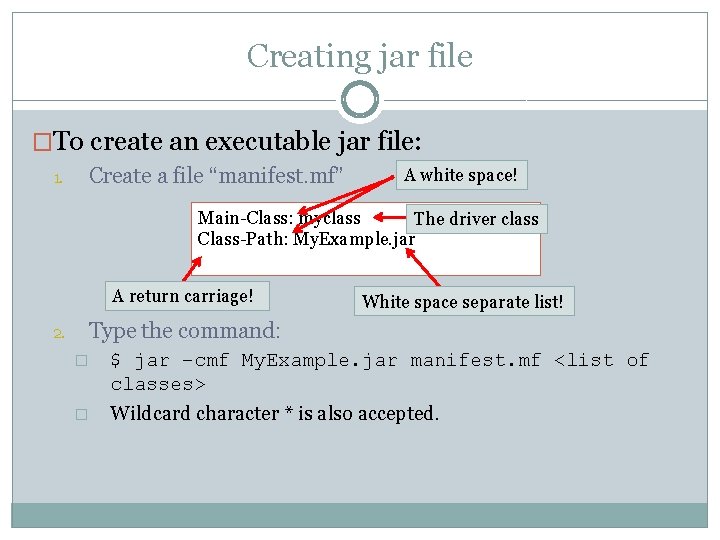

Creating jar file

- To create an executable jar file:
  1. Create a file "manifest.mf":

         Main-Class: myclass
         Class-Path: MyExample.jar

     - Main-Class names the driver class.
     - A white space must follow each colon.
     - Class-Path is a white-space-separated list.
     - The file must end with a carriage return.
  2. Type the command:

         $ jar -cfm MyExample.jar manifest.mf <list of classes>

     - The wildcard character * is also accepted.

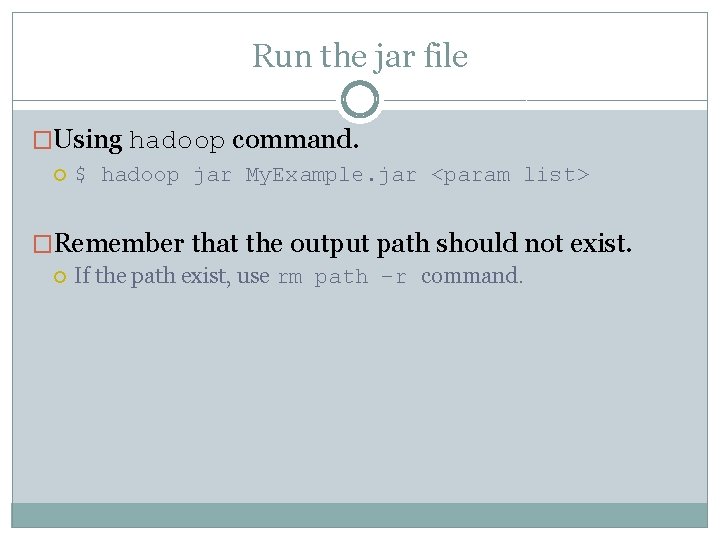

Run the jar file

- Use the hadoop command:

      $ hadoop jar MyExample.jar <param list>

- Remember that the output path should not exist. If the path exists, remove it with the command rm -r path.

A simple demonstration

- A simple word count program.

Reporter

Hadoop

- Full name: Apache Hadoop project.
  - An open-source implementation for reliable, scalable distributed computing.
  - An aggregation of the following projects (and its core):
    - Avro
    - Chukwa
    - HBase
    - HDFS
    - Hive
    - MapReduce
    - Pig
    - ZooKeeper

Virtual Machine (VM)

- Virtualization
  - All services are delivered through VMs.
  - Allows for dynamic configuration and management.
  - Multiple VMs can run on a single commodity machine.
    - VMware

HDFS (Hadoop Distributed File System)

- The highly scalable distributed file system of Hadoop.
  - Resembles the Google File System (GFS).
  - Provides reliability by replication.
- NameNode & DataNode
  - NameNode
    - Maintains file system metadata and namespace.
    - Provides management and control services.
    - Usually one instance.
  - DataNode
    - Provides data storage and retrieval services.
    - Usually several instances.

MapReduce

- The sophisticated distributed computing service of Hadoop.
  - A computation framework.
  - Usually runs on top of HDFS.
- JobTracker & TaskTracker
  - JobTracker
    - Manages the distribution of tasks to the TaskTrackers.
    - Provides job submission, monitoring, and control.
  - TaskTracker
    - Manages single map or reduce tasks on a compute node.

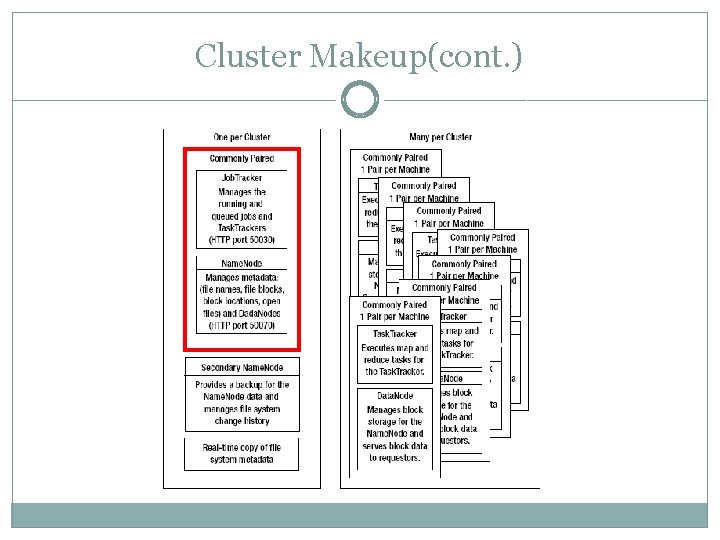

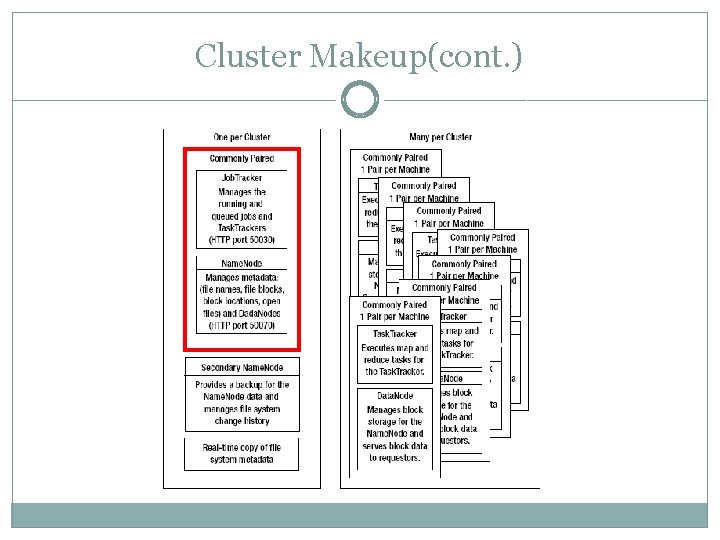

Cluster Makeup

- A Hadoop cluster is usually made up of:
  - Real machines.
    - Not required to be homogeneous.
    - Homogeneity helps maintainability.
  - Server processes.
    - Multiple processes can run on a single VM.
- Master & Slave
  - The node/machine running the JobTracker or NameNode is the master node.
  - The ones running the TaskTracker or DataNode are slave nodes.

Cluster Makeup (cont.)

Administrator Scripts

- The administrator can use the following scripts to start or stop server processes.
  - They are located in $HADOOP_HOME/bin
    - start-all.sh / stop-all.sh
    - start-mapred.sh / stop-mapred.sh
    - start-dfs.sh / stop-dfs.sh
    - slaves.sh
    - hadoop

Configuration

- By default, each Hadoop Core server loads its configuration from several files.
  - These files are located in $HADOOP_HOME/conf
  - Usually, identical copies of those files are maintained on every machine in the cluster.

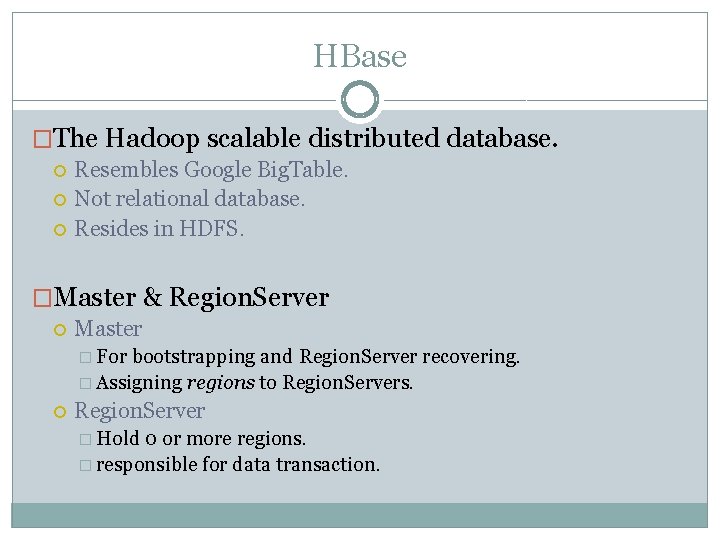

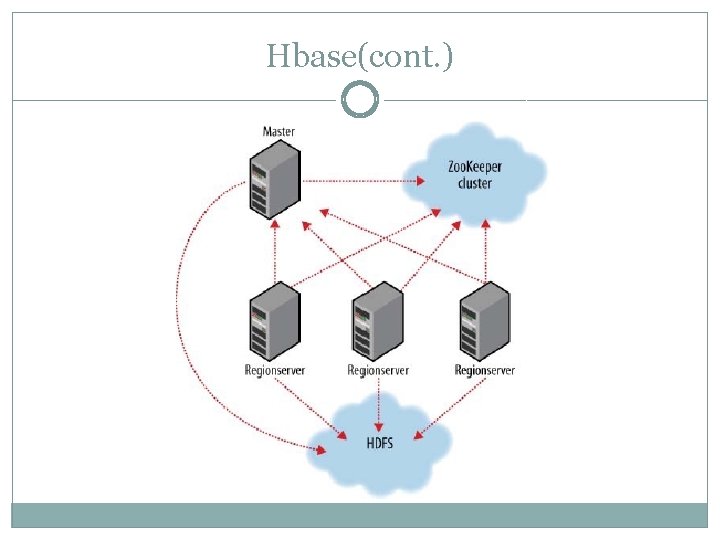

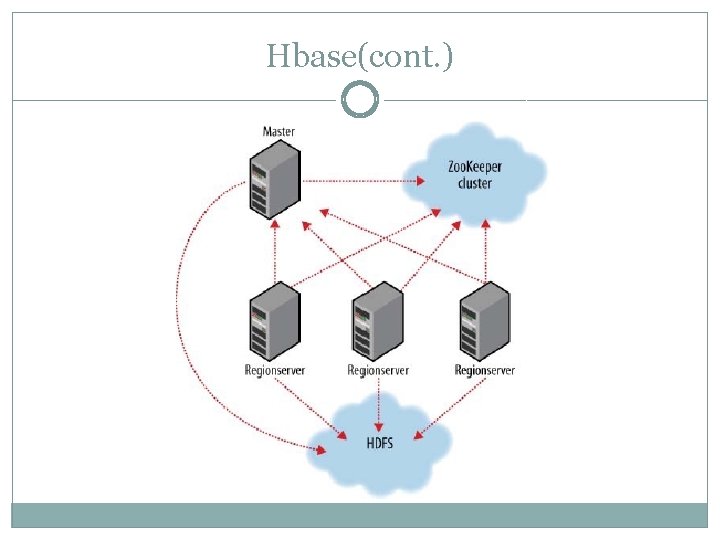

HBase

- The Hadoop scalable distributed database.
  - Resembles Google BigTable.
  - Not a relational database.
  - Resides in HDFS.
- Master & RegionServer
  - Master
    - Handles bootstrapping and RegionServer recovery.
    - Assigns regions to RegionServers.
  - RegionServer
    - Holds zero or more regions.
    - Responsible for data transactions.

HBase (cont.)

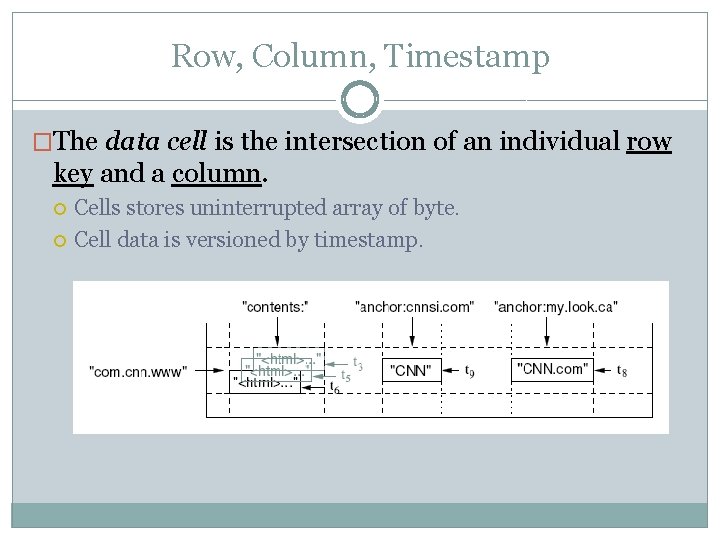

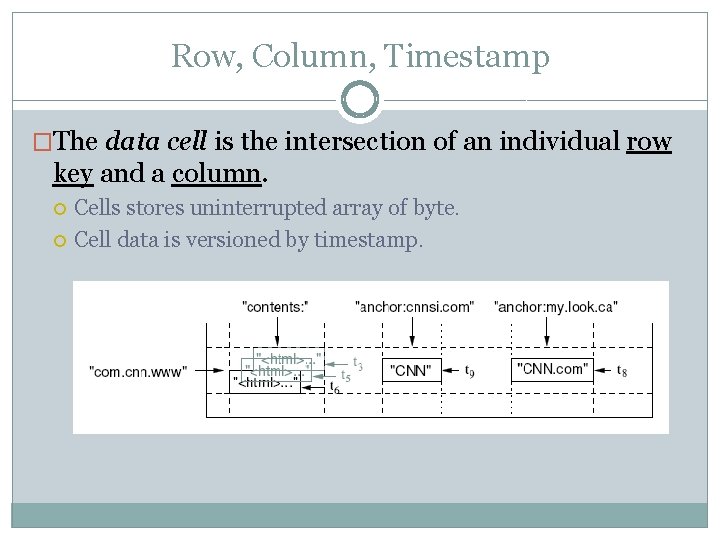

Row, Column, Timestamp

- A data cell is the intersection of an individual row key and a column.
  - A cell stores an uninterpreted array of bytes.
  - Cell data is versioned by timestamp.

Row

- The row (key) is the primary key of the database.
  - It can be an arbitrary byte array.
    - Strings, binary data.
  - Each row key has to be unique.
  - The table is sorted by row key.
  - Any mutation of a single row is atomic.

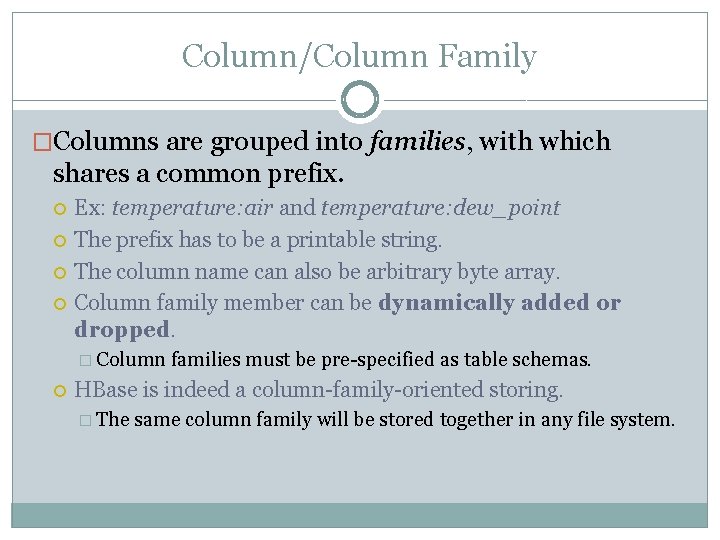

Column/Column Family

- Columns are grouped into families, whose members share a common prefix.
  - Ex: temperature:air and temperature:dew_point
  - The prefix has to be a printable string.
  - The column name can also be an arbitrary byte array.
  - Column family members can be dynamically added or dropped.
    - Column families must be pre-specified as part of the table schema.
  - HBase is indeed a column-family-oriented store.
    - The members of a column family are stored together on the file system.
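The row, column, and timestamp model can be pictured as nested sorted maps. The sketch below is plain Java, not the HBase API: the class `CellModelSketch` is hypothetical, and row keys and column names are simplified to strings even though HBase allows arbitrary byte arrays. It shows that a cell is addressed by row key plus column, that each cell keeps several timestamped versions, and that a read returns the newest one.

```java
import java.nio.charset.StandardCharsets;
import java.util.Comparator;
import java.util.TreeMap;

// Hypothetical in-memory model; not an HBase class.
public class CellModelSketch {
    // row key -> column name ("family:qualifier") -> timestamp -> cell value.
    // The outer TreeMap keeps the rows sorted by row key.
    private final TreeMap<String, TreeMap<String, TreeMap<Long, byte[]>>> rows =
            new TreeMap<>();

    /** Stores one version of a cell under the given timestamp. */
    public void put(String row, String column, long timestamp, byte[] value) {
        rows.computeIfAbsent(row, r -> new TreeMap<String, TreeMap<Long, byte[]>>())
            .computeIfAbsent(column,
                c -> new TreeMap<Long, byte[]>(Comparator.<Long>reverseOrder()))
            .put(timestamp, value);
    }

    /** Returns the newest version of the cell, or null if it does not exist. */
    public byte[] get(String row, String column) {
        TreeMap<String, TreeMap<Long, byte[]>> columns = rows.get(row);
        if (columns == null) {
            return null;
        }
        TreeMap<Long, byte[]> versions = columns.get(column);
        if (versions == null || versions.isEmpty()) {
            return null;
        }
        // Versions are sorted newest first, so the first entry is the latest.
        return versions.firstEntry().getValue();
    }

    public static void main(String[] args) {
        CellModelSketch table = new CellModelSketch();
        table.put("station1", "temperature:air", 1L,
                "10".getBytes(StandardCharsets.UTF_8));
        table.put("station1", "temperature:air", 2L,
                "12".getBytes(StandardCharsets.UTF_8));
        byte[] latest = table.get("station1", "temperature:air");
        System.out.println(new String(latest, StandardCharsets.UTF_8)); // prints 12
    }
}
```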

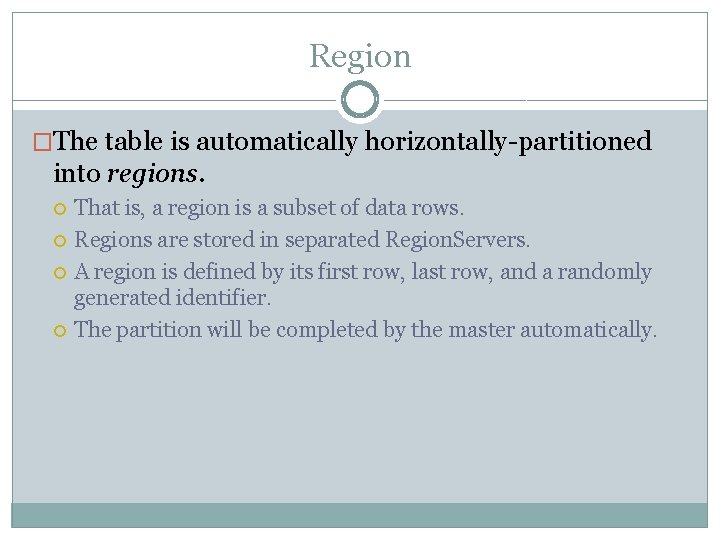

Region

- The table is automatically partitioned horizontally into regions.
  - That is, a region is a subset of the table's rows.
  - Regions are stored on separate RegionServers.
  - A region is defined by its first row, last row, and a randomly generated identifier.
  - The partitioning is carried out automatically by the master.
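Because the table is sorted by row key and each region covers a contiguous range of rows, finding the region for a row is a range lookup. The following plain-Java sketch is an illustration under assumptions, not HBase code: the class `RegionLookupSketch`, the string row keys, and the server names rs1 to rs3 are all hypothetical. Each region is indexed by its first row, and the lookup picks the region with the greatest first row that is not larger than the requested key.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration of range-based region lookup; not an HBase class.
public class RegionLookupSketch {
    // first row of the region (inclusive) -> server holding that region
    private final TreeMap<String, String> regionsByFirstRow = new TreeMap<>();

    public void addRegion(String firstRow, String server) {
        regionsByFirstRow.put(firstRow, server);
    }

    /** Returns the server whose region contains rowKey, or null if none does. */
    public String serverFor(String rowKey) {
        Map.Entry<String, String> region = regionsByFirstRow.floorEntry(rowKey);
        return region == null ? null : region.getValue();
    }

    public static void main(String[] args) {
        RegionLookupSketch regions = new RegionLookupSketch();
        regions.addRegion("", "rs1");   // rows before "m"
        regions.addRegion("m", "rs2");  // rows from "m" up to "t"
        regions.addRegion("t", "rs3");  // rows from "t" onward

        System.out.println(regions.serverFor("apple"));  // prints rs1
        System.out.println(regions.serverFor("monkey")); // prints rs2
        System.out.println(regions.serverFor("zebra"));  // prints rs3
    }
}
```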

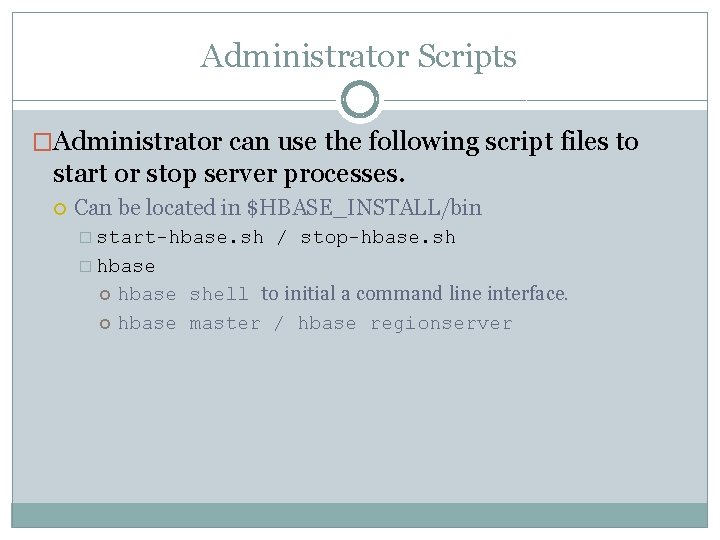

Administrator Scripts

- The administrator can use the following scripts to start or stop server processes.
  - They are located in $HBASE_INSTALL/bin
    - start-hbase.sh / stop-hbase.sh
    - hbase shell, to start a command-line interface
    - hbase master / hbase regionserver

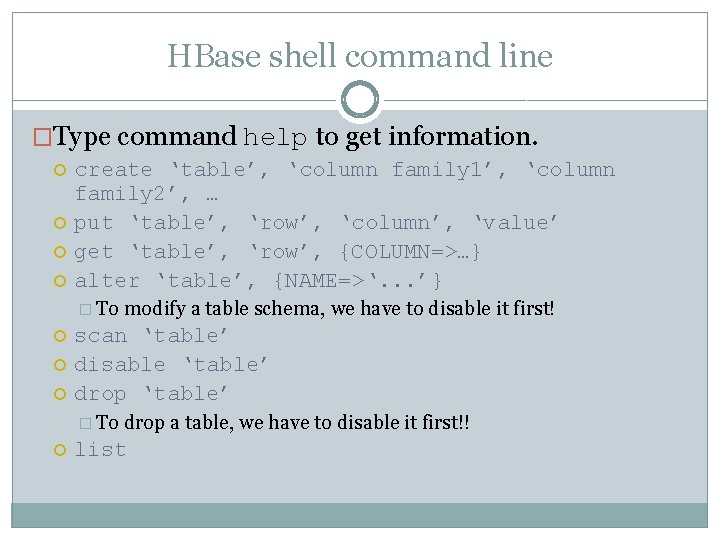

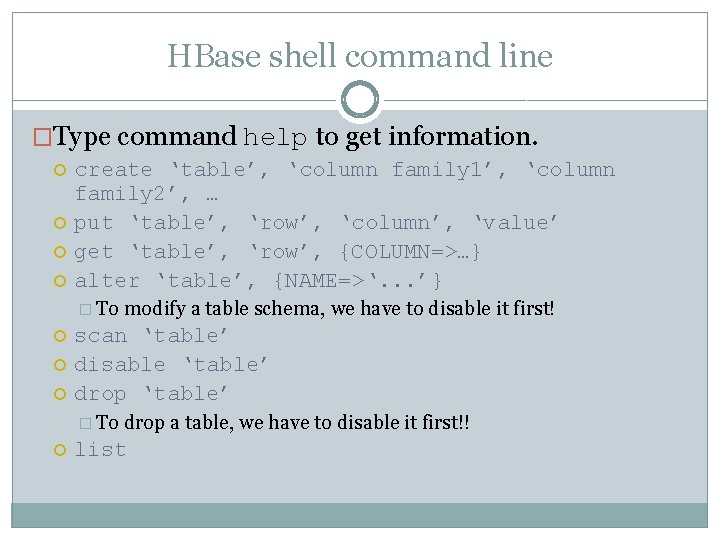

HBase shell command line

- Type the command help to get information.
  - create 'table', 'column family 1', 'column family 2', …
  - put 'table', 'row', 'column', 'value'
  - get 'table', 'row', {COLUMN=>…}
  - alter 'table', {NAME=>'...'}
    - To modify a table schema, we have to disable the table first!
  - scan 'table'
  - disable 'table'
  - drop 'table'
    - To drop a table, we have to disable it first!
  - list

A Simple Demonstration

- Command line operation

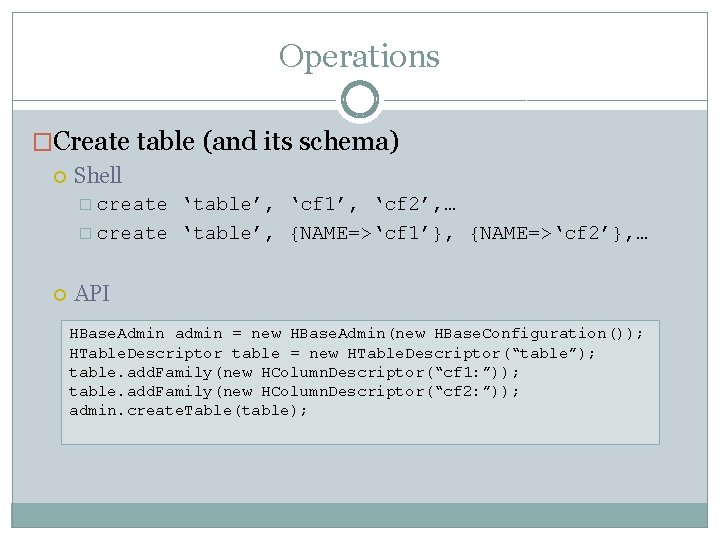

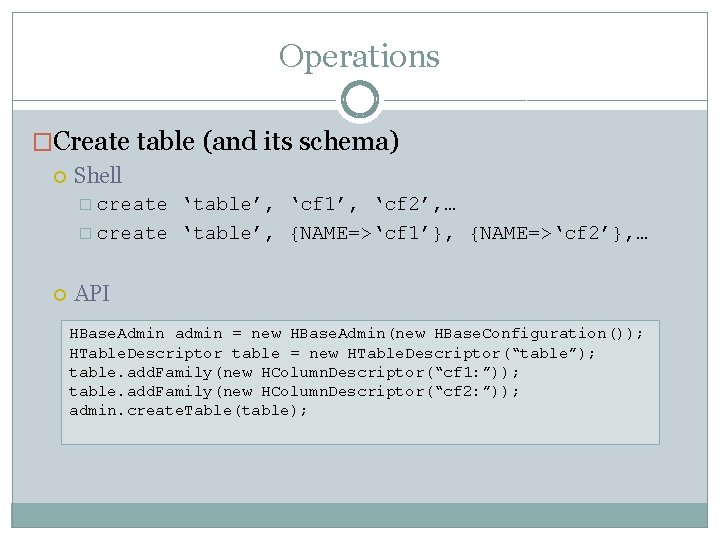

Operations

- Create a table (and its schema)
  - Shell
    - create 'table', 'cf1', 'cf2', …
    - create 'table', {NAME=>'cf1'}, {NAME=>'cf2'}, …
  - API

        HBaseAdmin admin = new HBaseAdmin(new HBaseConfiguration());
        HTableDescriptor table = new HTableDescriptor("table");
        table.addFamily(new HColumnDescriptor("cf1:"));
        table.addFamily(new HColumnDescriptor("cf2:"));
        admin.createTable(table);

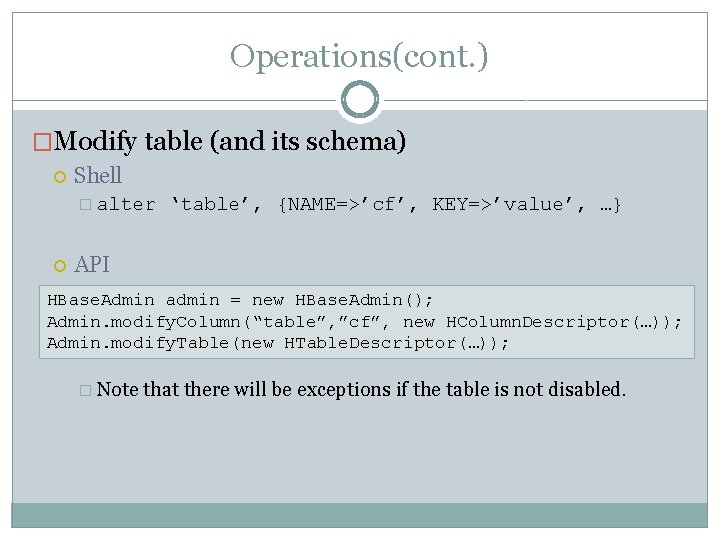

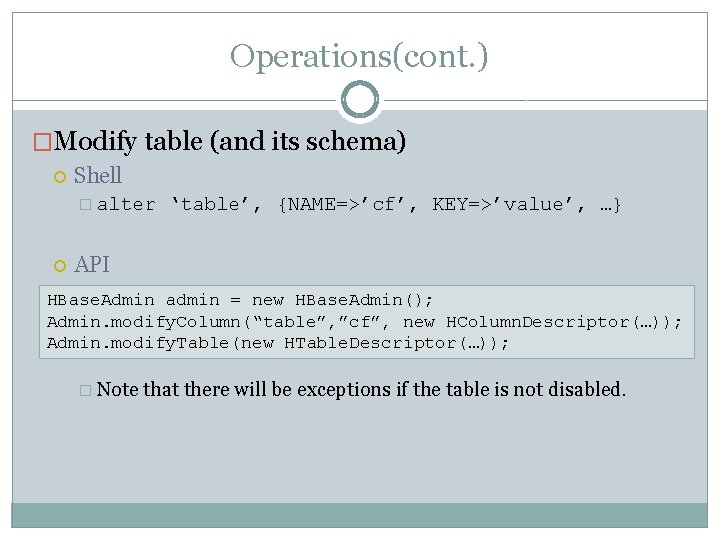

Operations (cont.)

- Modify a table (and its schema)
  - Shell
    - alter 'table', {NAME=>'cf', KEY=>'value', …}
  - API

        HBaseAdmin admin = new HBaseAdmin(new HBaseConfiguration());
        admin.modifyColumn("table", "cf", new HColumnDescriptor(…));
        admin.modifyTable(new HTableDescriptor(…));

    - Note that exceptions will be thrown if the table is not disabled.

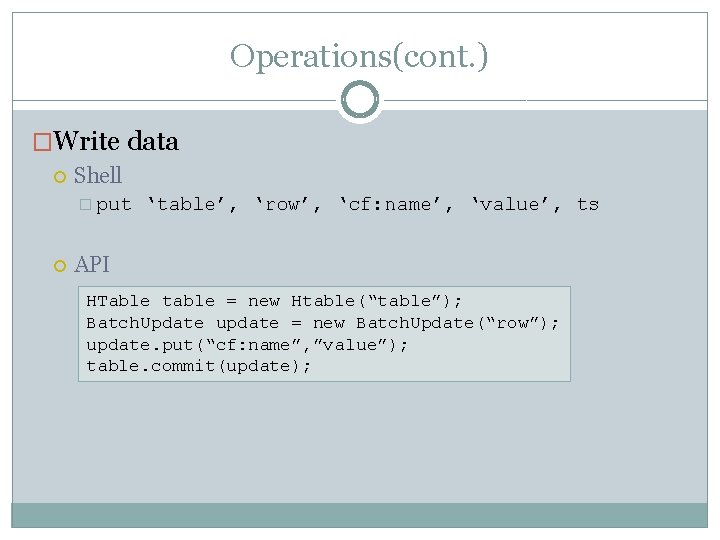

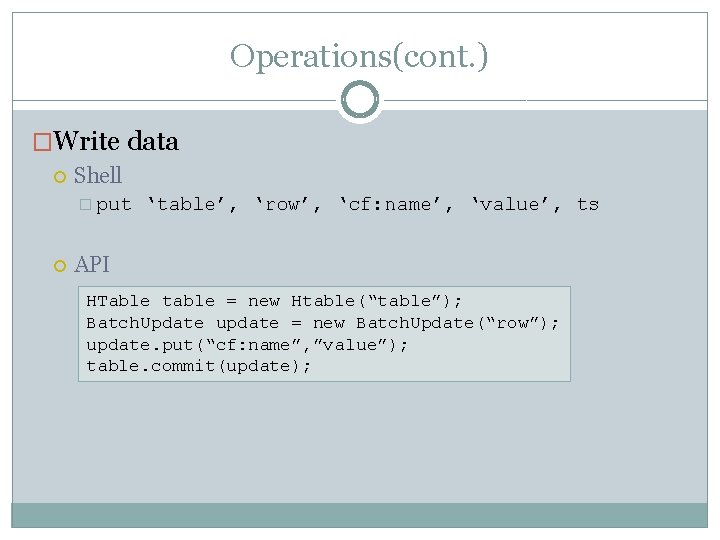

Operations (cont.)

- Write data
  - Shell
    - put 'table', 'row', 'cf:name', 'value', ts
  - API

        HTable table = new HTable("table");
        BatchUpdate update = new BatchUpdate("row");
        update.put("cf:name", "value");
        table.commit(update);

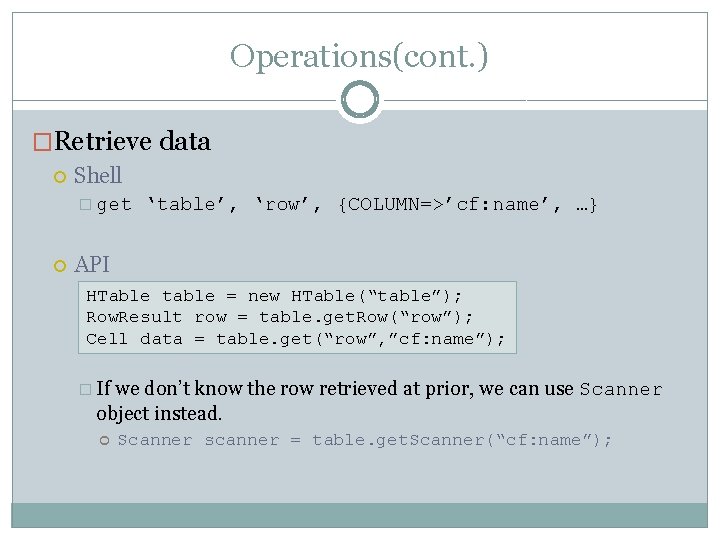

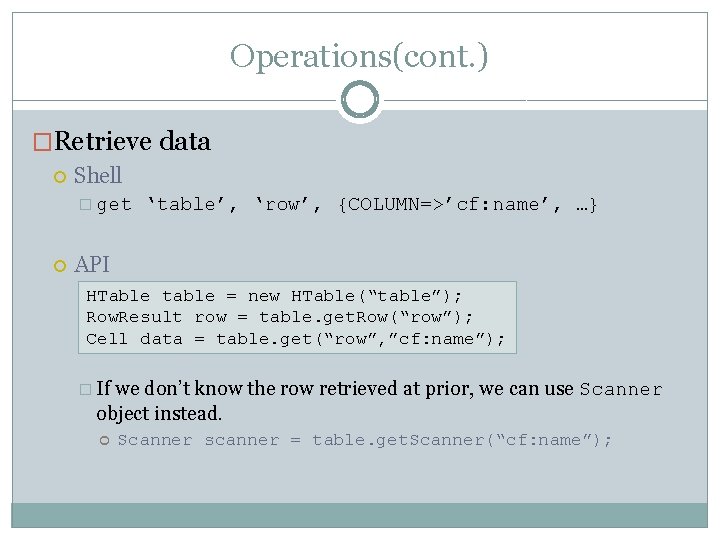

Operations (cont.)

- Retrieve data
  - Shell
    - get 'table', 'row', {COLUMN=>'cf:name', …}
  - API

        HTable table = new HTable("table");
        RowResult row = table.getRow("row");
        Cell data = table.get("row", "cf:name");

    - If we don't know the row key in advance, we can use a Scanner object instead.

          Scanner scanner = table.getScanner("cf:name");

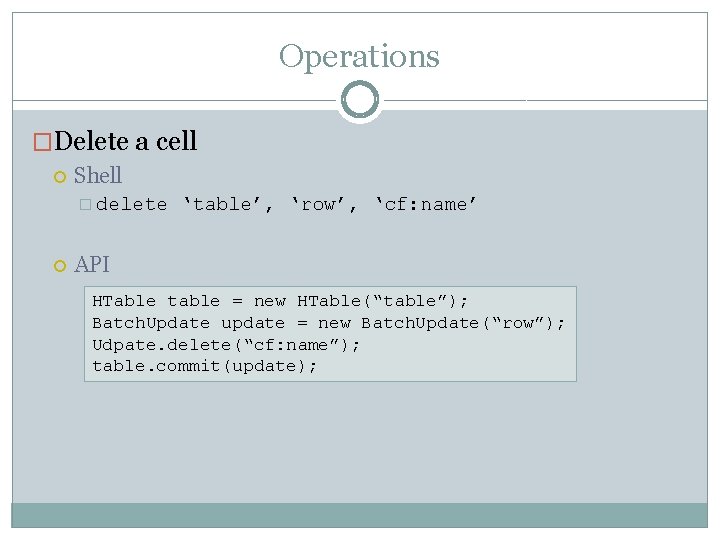

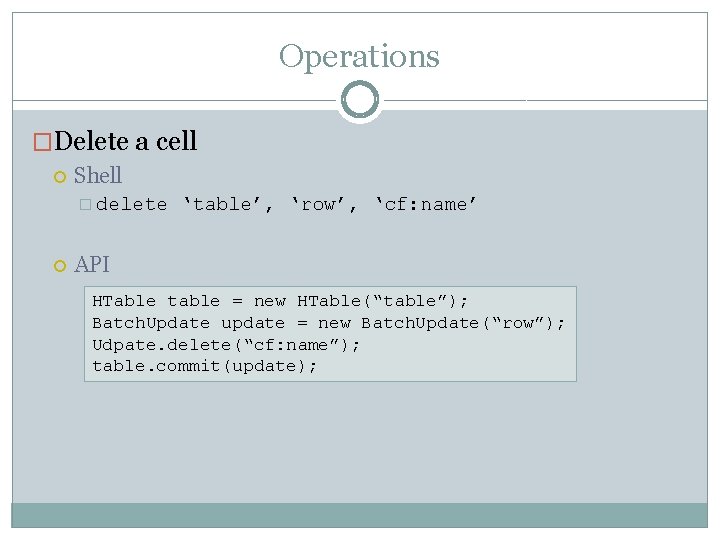

Operations (cont.)

- Delete a cell
  - Shell
    - delete 'table', 'row', 'cf:name'
  - API

        HTable table = new HTable("table");
        BatchUpdate update = new BatchUpdate("row");
        update.delete("cf:name");
        table.commit(update);

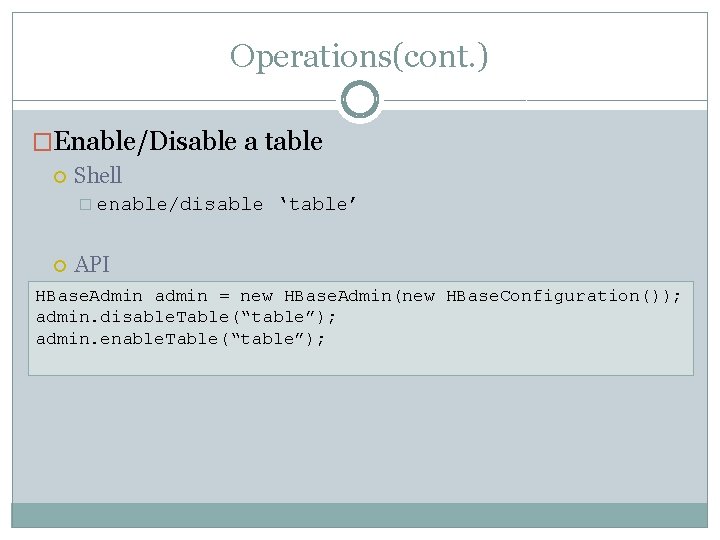

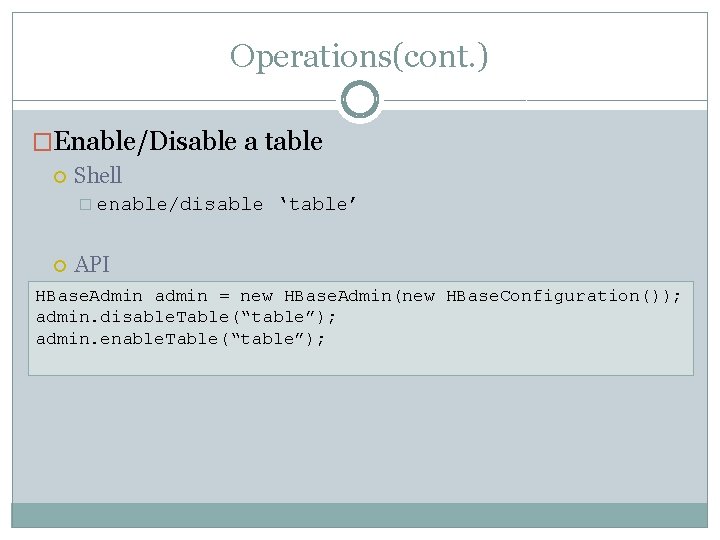

Operations (cont.)

- Enable/disable a table
  - Shell
    - enable 'table'
    - disable 'table'
  - API

        HBaseAdmin admin = new HBaseAdmin(new HBaseConfiguration());
        admin.disableTable("table");
        admin.enableTable("table");

Q&A

- Hadoop 0.19.0 API: http://hadoop.apache.org/common/docs/r0.19.0/api/index.html
- HBase 0.19.3 API: http://hadoop.apache.org/hbase/docs/r0.19.3/api/index.html
- Any questions?