A Hadoop MapReduce Performance Prediction Method


Ge Song\*+, Zide Meng\*, Fabrice Huet\*, Frederic Magoules+, Lei Yu# and Xuelian Lin#

Affiliations: (\*) University of Nice Sophia Antipolis, CNRS, I3S, UMR 7271, France; (+) Ecole Centrale de Paris, France; (#) Beihang University, Beijing, China

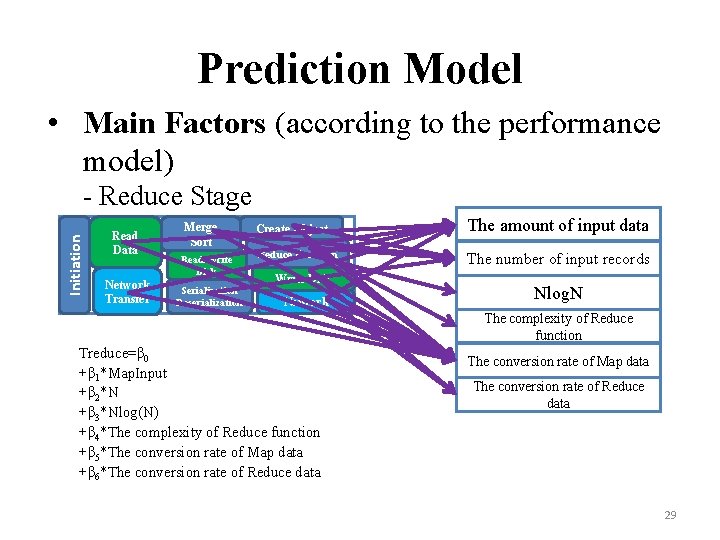

Background
- Hadoop MapReduce job
- [Figure: MapReduce data flow. Labels: input data, HDFS, Split, Map, (Key, Value), Partition 1, Partition 2, Reduce]

Background
- Hadoop
  - The Map stage and the Reduce stage each consist of many steps.
  - Different steps may consume different types of resources.
- [Figure: steps of a Map task: READ, MAP, SORT, MERGE, OUTPUT]

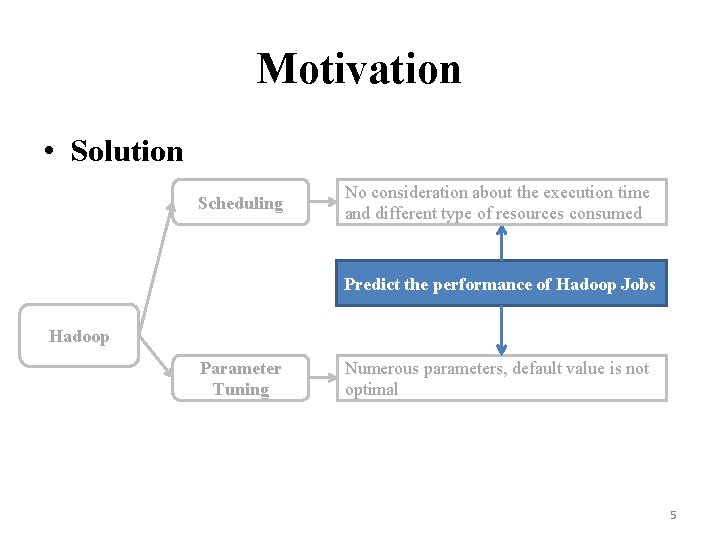

Motivation
- Problems
  - Scheduling: no consideration of the execution time or of the different types of resources consumed.
  - Hadoop parameter tuning: there are numerous parameters, and the default values are not optimal.
- [Figure labels: CPU Intensive, Job, Hadoop, Default Conf]

Motivation
- Solution: predict the performance of Hadoop jobs
  - Scheduling: no consideration of the execution time or of the different types of resources consumed.
  - Hadoop parameter tuning: there are numerous parameters, and the default values are not optimal.

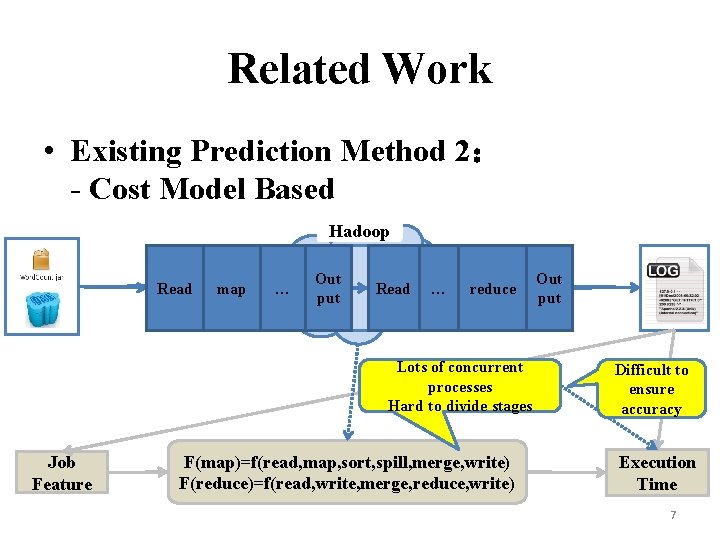

Related Work
- Existing prediction method 1: black-box based
  - Job features are hard to choose.
  - Lacks analysis of Hadoop itself.
- [Figure labels: Job Features, Hadoop, Statistic/Learning Models, Execution Time]

Related Work
- Existing prediction method 2: cost-model based
  - Cost functions: F(map) = f(read, map, sort, spill, merge, write); F(reduce) = f(read, write, merge, reduce, write)
  - Lots of concurrent processes
  - Stages are hard to divide
  - Accuracy is difficult to ensure
- [Figure labels: Job Feature, Hadoop (read, map, ..., output; read, ..., reduce, output), Execution Time]

Related Work
- A brief summary of the existing prediction methods
  - Black box
    - Advantages: simple and effective; high accuracy; high isomorphism
    - Shortcomings: lack of job feature extraction; lack of analysis; hard to divide each step and resource
  - Cost model
    - Advantages: detailed analysis of Hadoop processing; flexible division (by stage, by resource); multiple prediction
    - Shortcomings: lack of job feature extraction; a lot of concurrency, which is hard to model; better for theoretical analysis, not suitable for prediction
  - Overall: simple prediction; lack of analysis of the job itself (jar package + data)

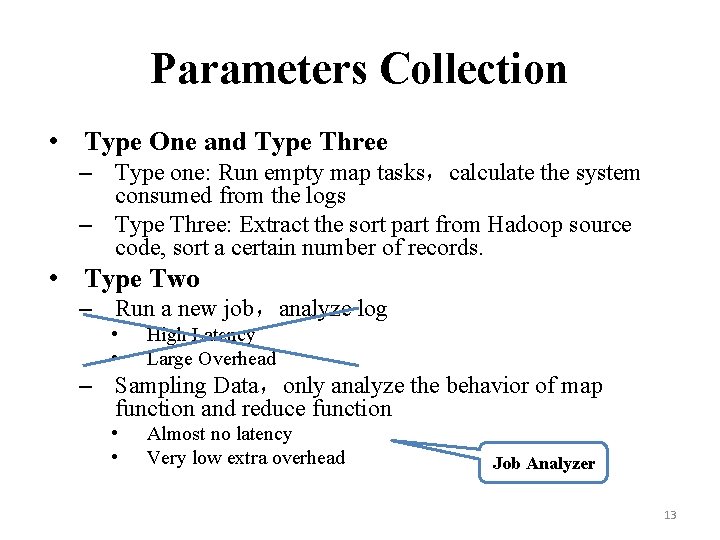

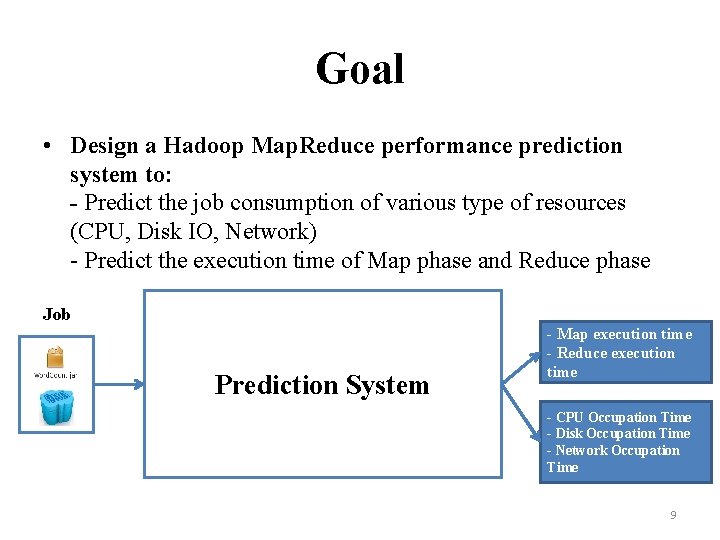

Goal
- Design a Hadoop MapReduce performance prediction system to:
  - predict a job's consumption of various types of resources (CPU, disk I/O, network);
  - predict the execution time of the Map phase and the Reduce phase.
- [Figure: a Job enters the Prediction System, which outputs Map execution time, Reduce execution time, CPU occupation time, disk occupation time and network occupation time]

Design - 1
- Cost Model
- [Figure: a Job enters the Cost Model, which outputs Map execution time, Reduce execution time, CPU occupation time, disk occupation time and network occupation time]

![Cost Model [1]: analysis of the Map stage, modeling the resources (CPU, disk, network)](https://slidetodoc.com/presentation_image/e5c333db6e4723fca8c20fbb0aa9ce92/image-11.jpg)

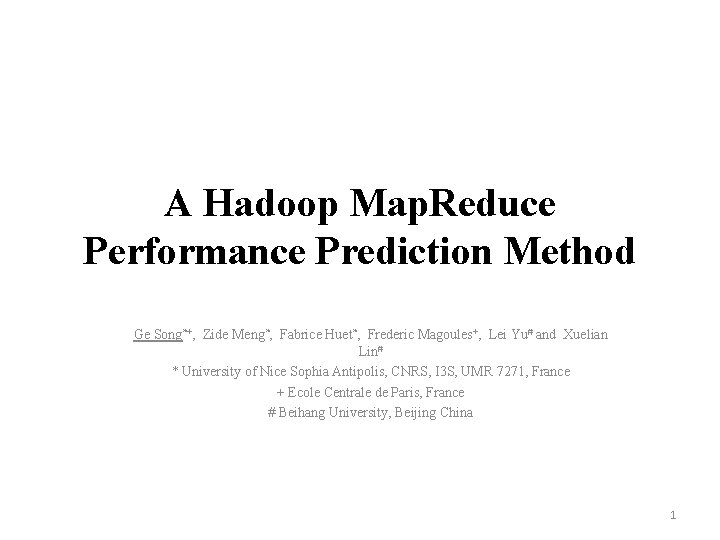

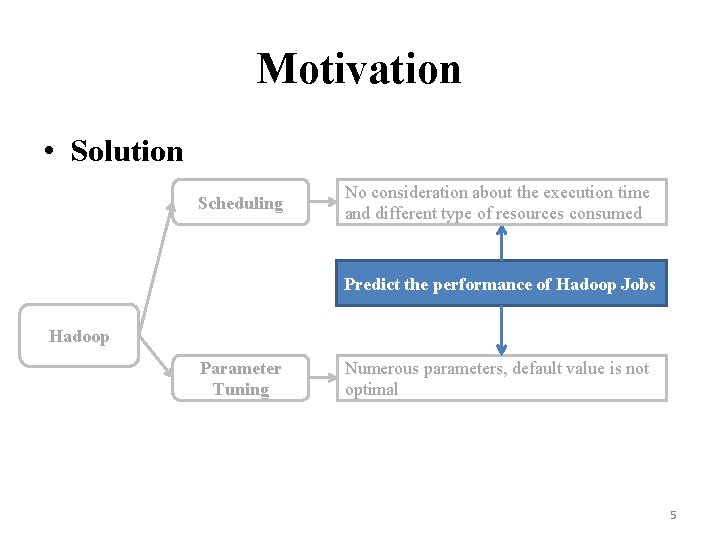

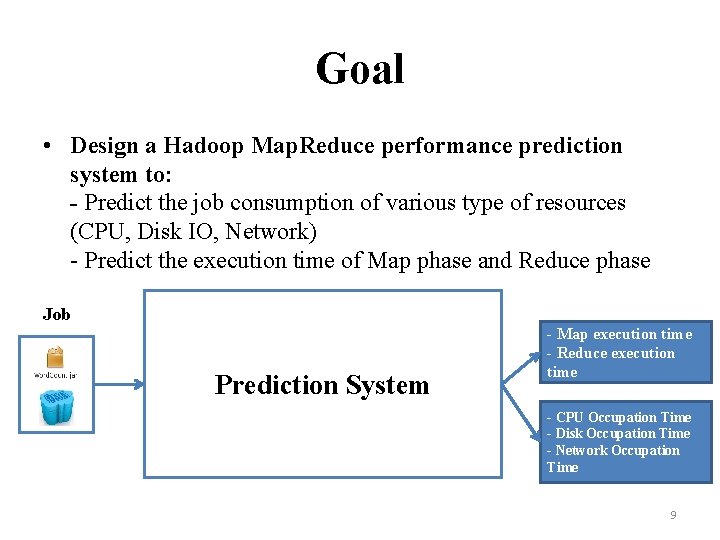

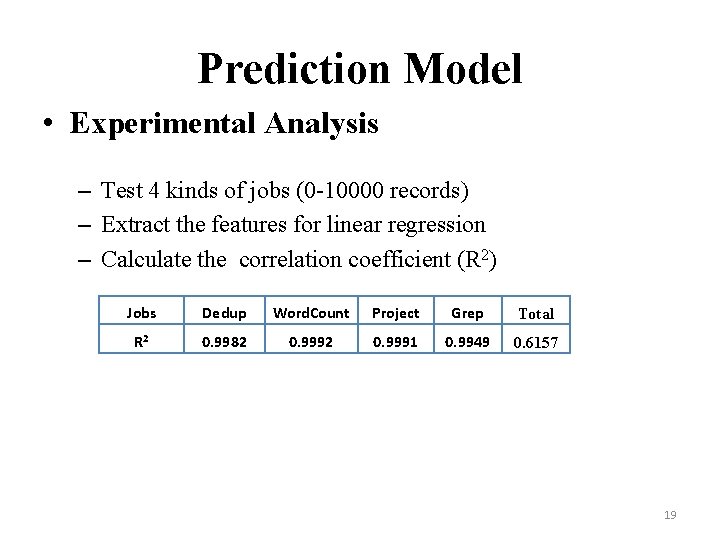

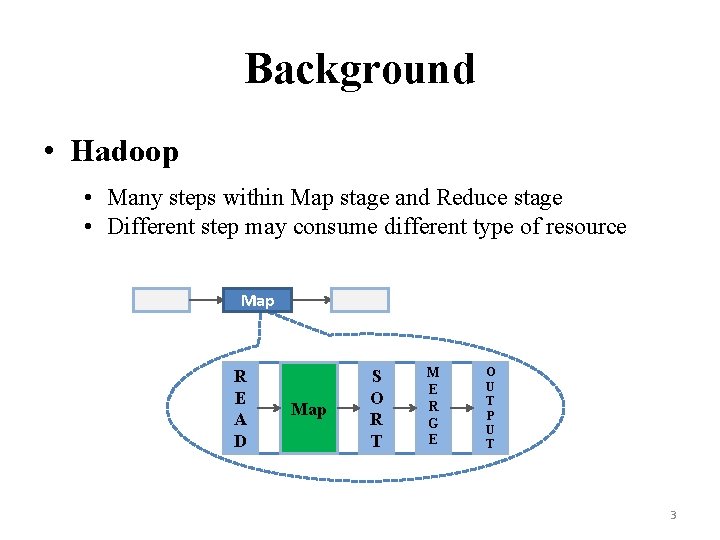

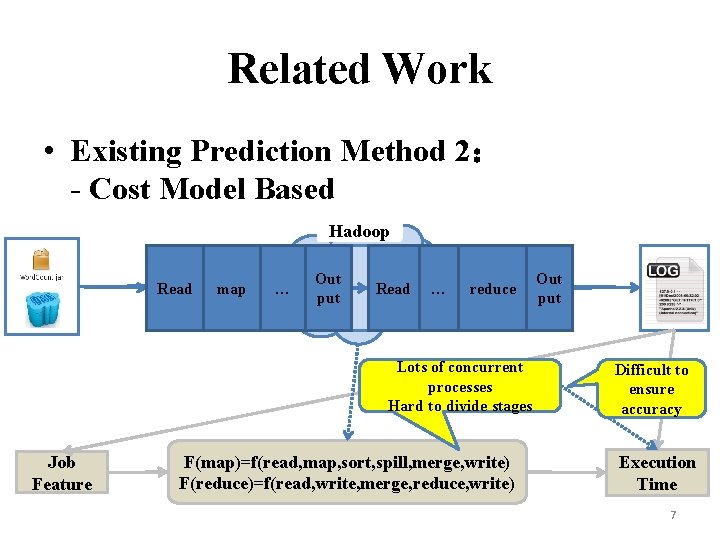

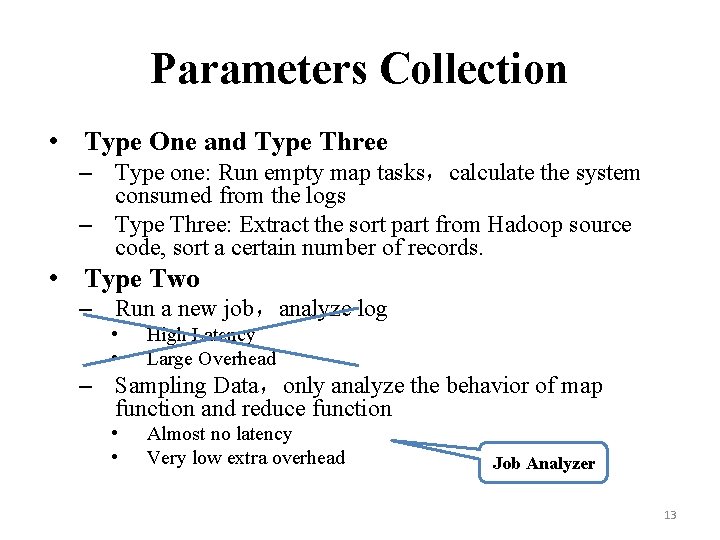

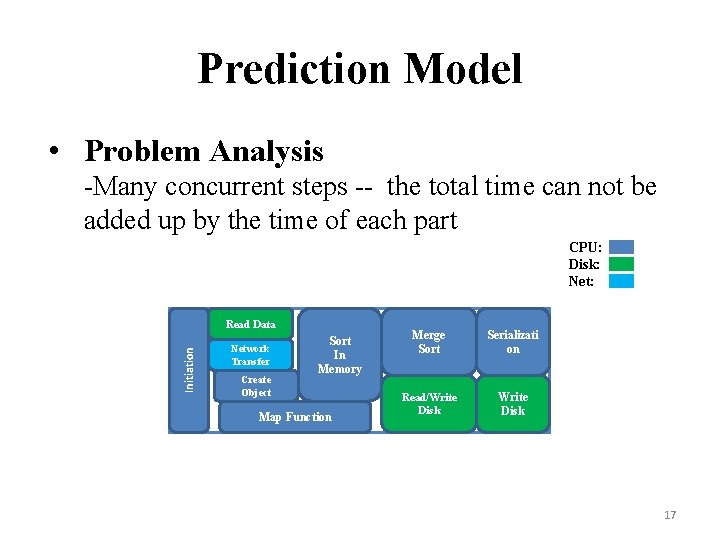

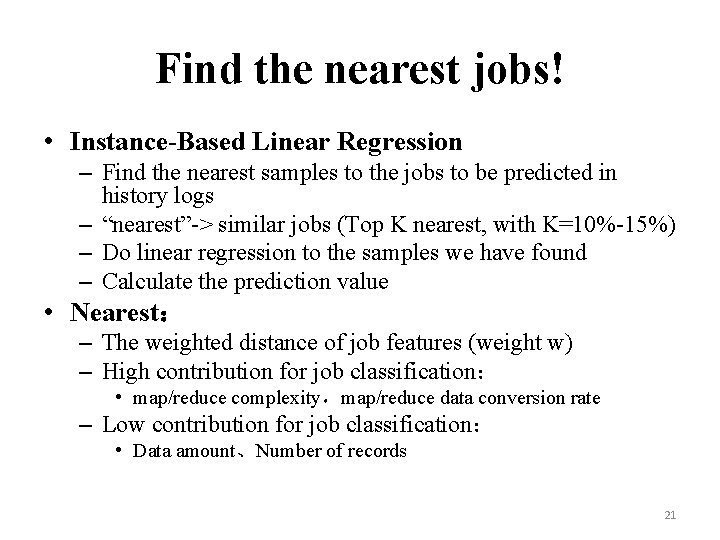

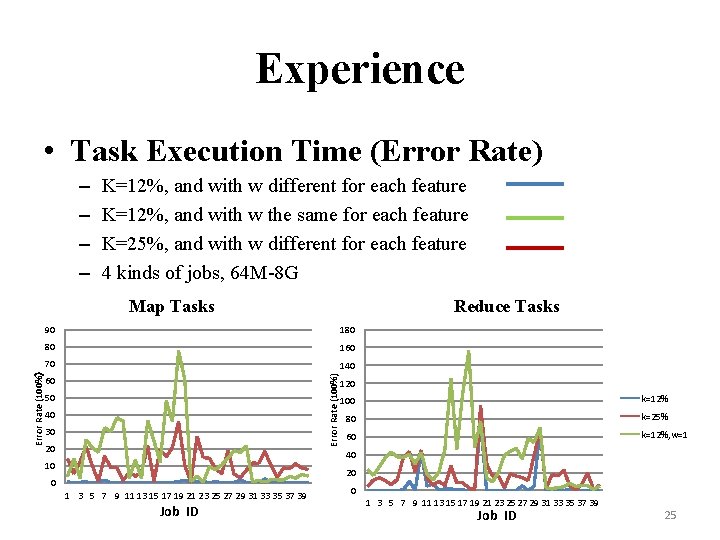

Cost Model [1]
- Analysis of the Map stage
  - Model the consumption of each resource (CPU, disk, network).
  - Each stage involves only one type of resource.
- [Figure: Map-stage steps grouped by resource (CPU, Disk, Net). Steps: initiation, read data, network transfer, create object, map function, sort in memory, merge sort, serialization, read/write disk]

[1] X. Lin, Z. Meng, C. Xu, and M. Wang, "A practical performance model for Hadoop MapReduce," in CLUSTER Workshops, 2012, pp. 231-239.

![Cost Model [1]: cost function parameters analysis](https://slidetodoc.com/presentation_image/e5c333db6e4723fca8c20fbb0aa9ce92/image-12.jpg)

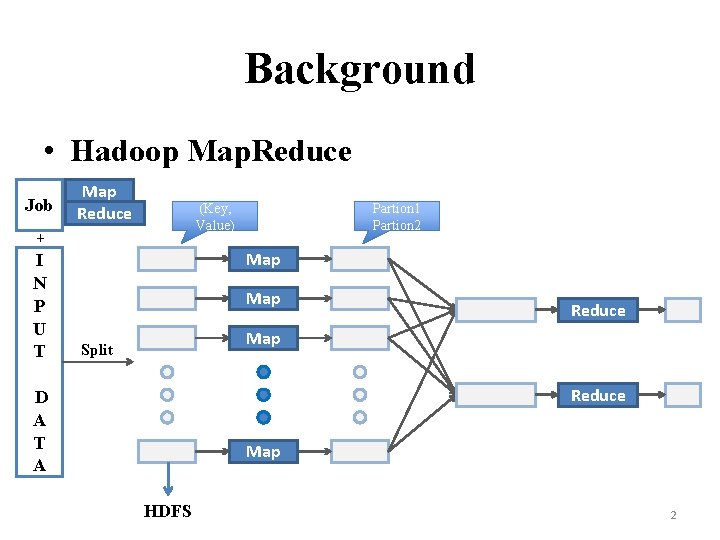

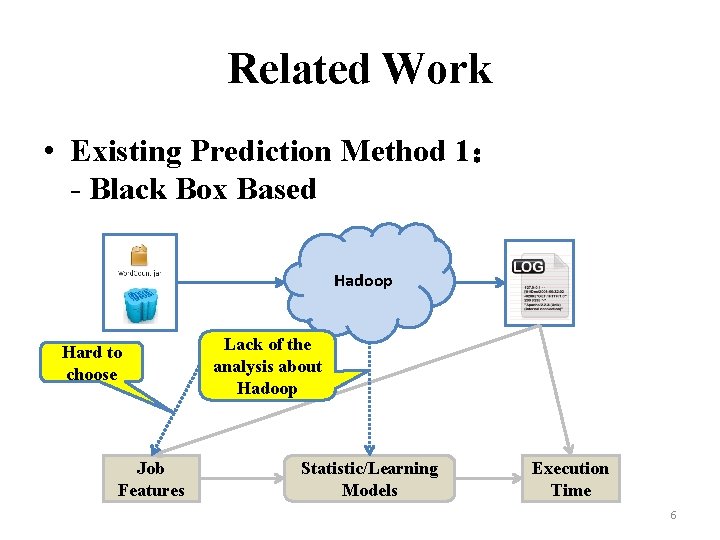

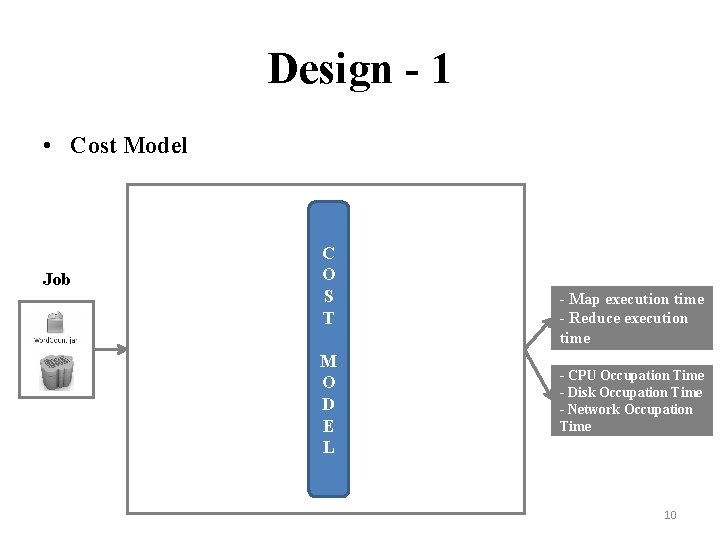

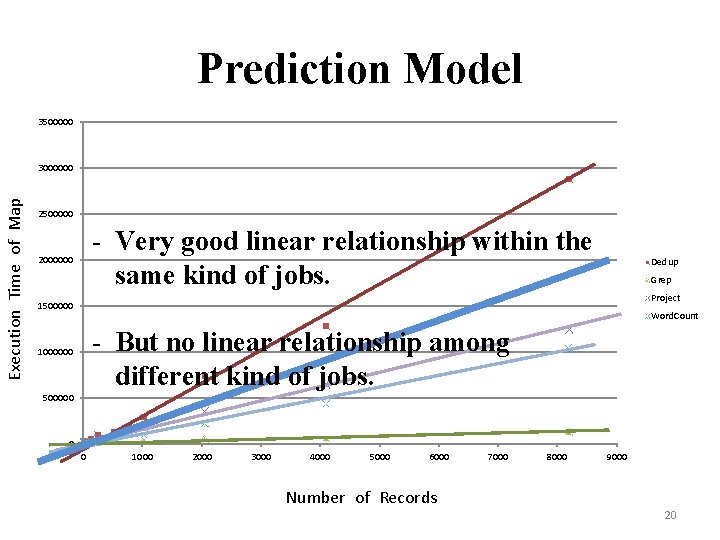

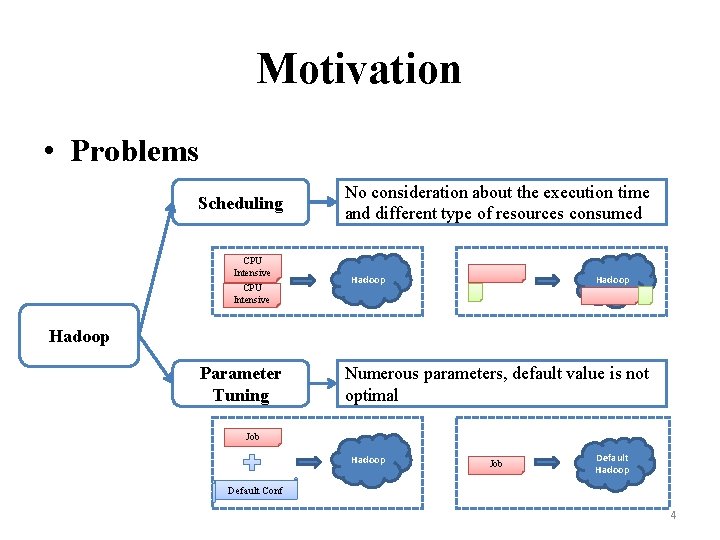

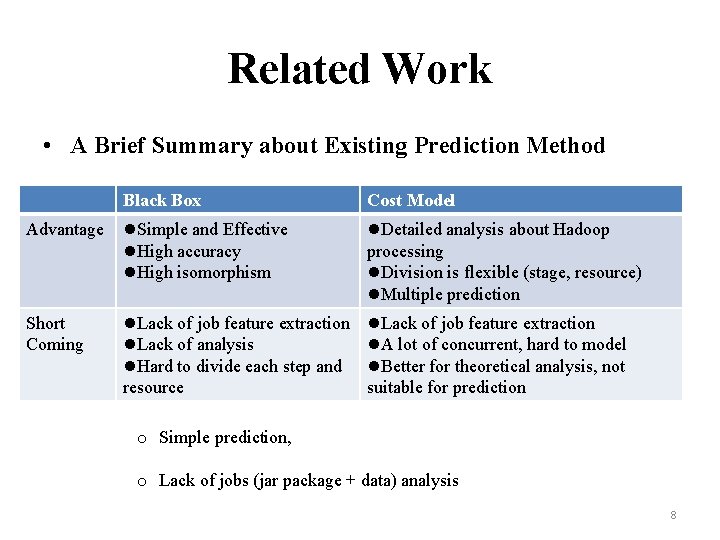

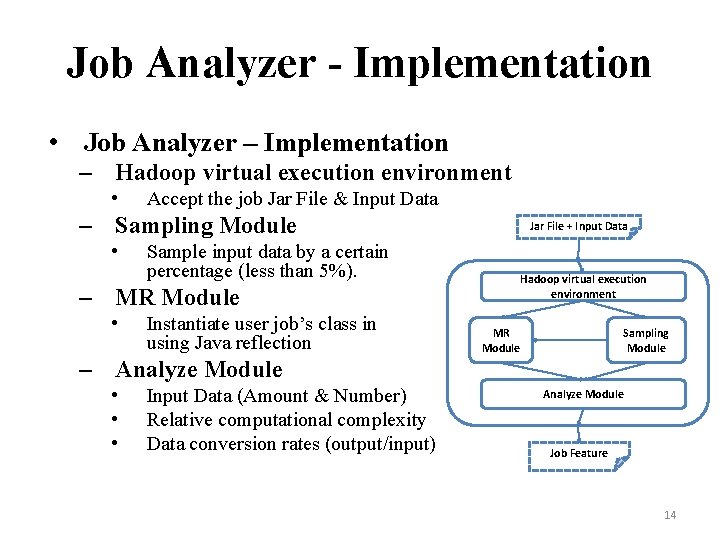

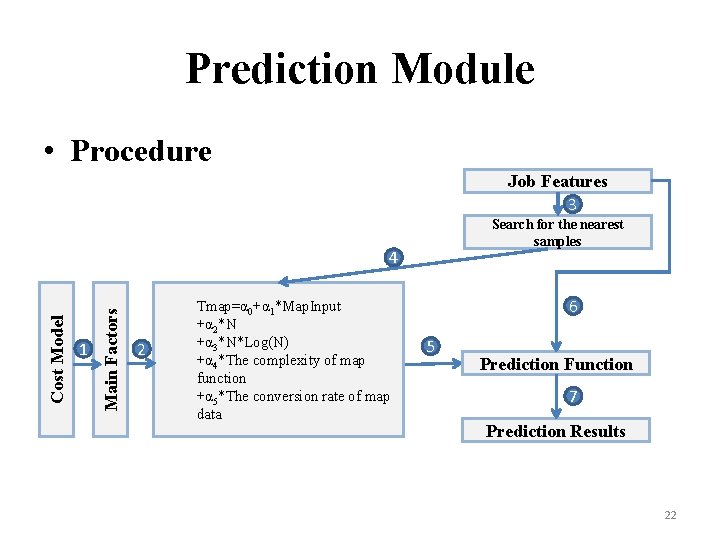

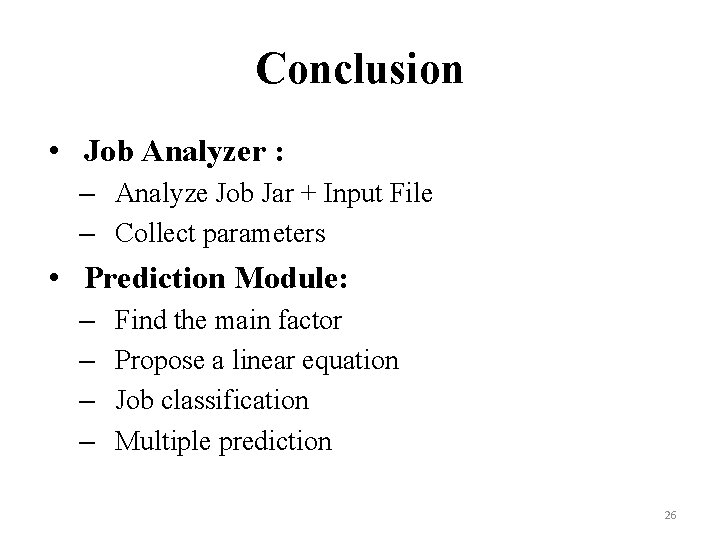

Cost Model [1]
- Cost function parameters analysis
  - Type One: constants
    - Hadoop system consumption, initialization consumption
  - Type Two: job-related parameters
    - Computational complexity of the map function, number of map input records
  - Type Three: parameters defined by the cost model
    - Sorting coefficient, complexity factor

Parameters Collection
- Type One and Type Three
  - Type One: run empty map tasks and calculate the system consumption from the logs.
  - Type Three: extract the sort code from the Hadoop source and sort a certain number of records.
- Type Two
  - Option 1: run a new job and analyze its log.
    - High latency
    - Large overhead
  - Option 2: sample the data and analyze only the behavior of the map function and the reduce function (the Job Analyzer).
    - Almost no latency
    - Very low extra overhead
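The Type Three measurement (sorting a fixed number of records and deriving a coefficient) can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: Python's built-in sort stands in for the sort code extracted from Hadoop, the function name `estimate_sort_coefficient` is hypothetical, and the coefficient is taken to be the measured time divided by N log2(N), which the slides do not spell out.

```python
import math
import random
import time


def estimate_sort_coefficient(num_records, repeats=3, seed=0):
    """Time a sort of num_records random keys and return seconds per N*log2(N).

    Assumption: the built-in sort is only a stand-in for Hadoop's sort code.
    """
    rng = random.Random(seed)
    records = [rng.random() for _ in range(num_records)]
    best = float("inf")
    for _ in range(repeats):
        data = list(records)  # fresh unsorted copy for every run
        start = time.perf_counter()
        data.sort()
        best = min(best, time.perf_counter() - start)
    # Normalize by N*log2(N) so the value can be reused for other record counts.
    return best / (num_records * math.log2(num_records))


coefficient = estimate_sort_coefficient(20000)
print(f"estimated sorting coefficient: {coefficient:.3e} s per N*log2(N)")
```

Taking the best of several runs is one simple way to reduce noise from other processes; a real measurement would run on the cluster nodes themselves.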

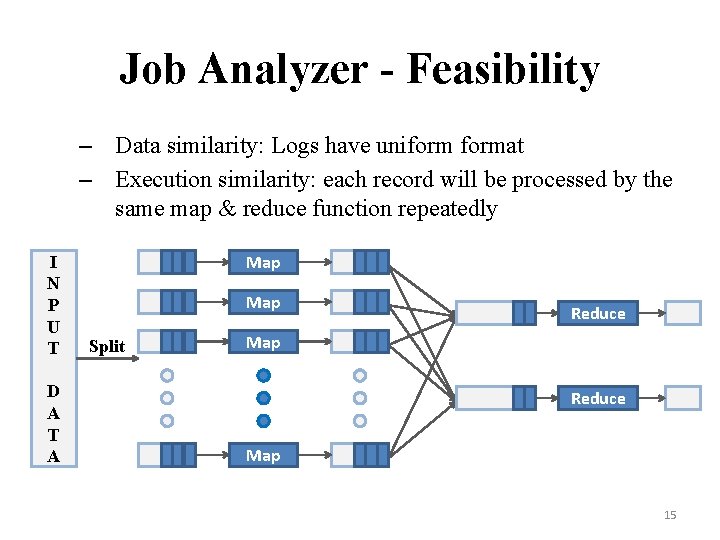

Job Analyzer - Implementation
- Hadoop virtual execution environment
  - Accepts the job jar file and the input data
- Sampling Module
  - Samples the input data by a certain percentage (less than 5%)
- MR Module
  - Instantiates the user job's classes using Java reflection
- Analyze Module
  - Input data (amount and number of records)
  - Relative computational complexity
  - Data conversion rates (output/input)
- [Figure: Jar File + Input Data enter the Hadoop virtual execution environment (Sampling Module, MR Module, Analyze Module), which outputs the Job Feature]
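The sampling and analysis steps can be sketched in a few lines of Python. This is only an illustration under stated assumptions: a plain Python generator (`word_count_map`, hypothetical) stands in for the user's map class that the real system loads through Java reflection, the function name `analyze_map_function` and the returned keys are invented for this sketch, and "relative computational complexity" is approximated by seconds per sampled record.

```python
import random
import time


def analyze_map_function(records, map_fn, sample_rate=0.04, seed=0):
    """Run map_fn on a small random sample and collect simple job features."""
    rng = random.Random(seed)
    # Keep each record with probability sample_rate (below 5%, as on the slide).
    sample = [r for r in records if rng.random() < sample_rate]
    in_bytes = 0
    out_bytes = 0
    elapsed = 0.0
    for record in sample:
        start = time.perf_counter()
        outputs = list(map_fn(record))  # time only the user function
        elapsed += time.perf_counter() - start
        in_bytes += len(record.encode("utf-8"))
        out_bytes += sum(len(f"{k}\t{v}".encode("utf-8")) for k, v in outputs)
    n = len(sample)
    return {
        "sampled_records": n,
        "sampled_bytes": in_bytes,
        # Data conversion rate = output size / input size.
        "conversion_rate": out_bytes / in_bytes if in_bytes else 0.0,
        # Stand-in for the relative computational complexity.
        "seconds_per_record": elapsed / n if n else 0.0,
    }


def word_count_map(line):
    """Hypothetical user map function: emit (word, 1) for every word."""
    for word in line.split():
        yield word, 1


features = analyze_map_function(["a b c"] * 1000, word_count_map)
print(features)
```

Because only a few percent of the records are processed, and only by the user functions, the cost of this step stays small compared with running the whole job.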

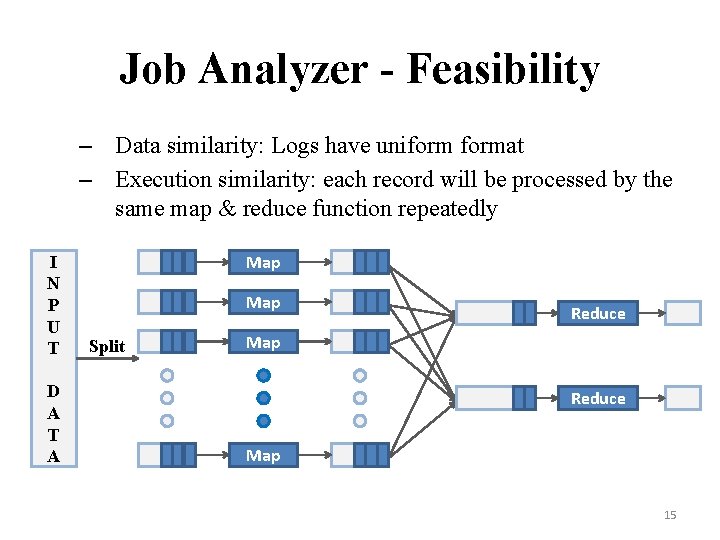

Job Analyzer - Feasibility
- Data similarity: logs have a uniform format.
- Execution similarity: every record is processed by the same map and reduce functions, repeatedly.
- [Figure labels: input data, Split, Map, Reduce]

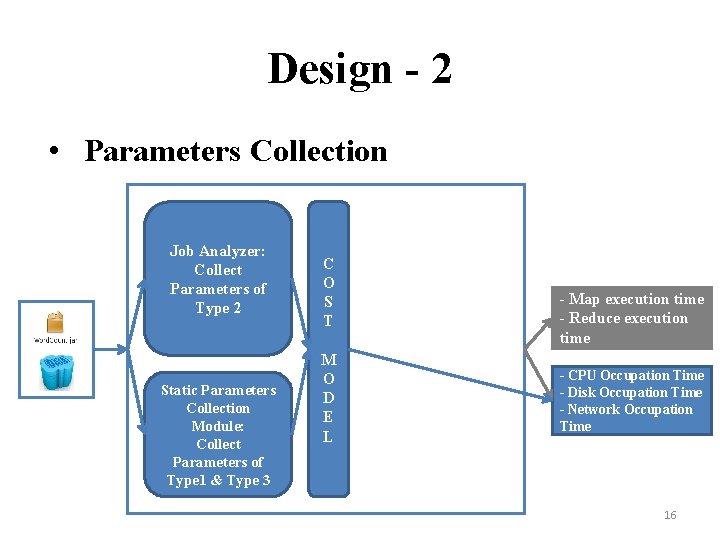

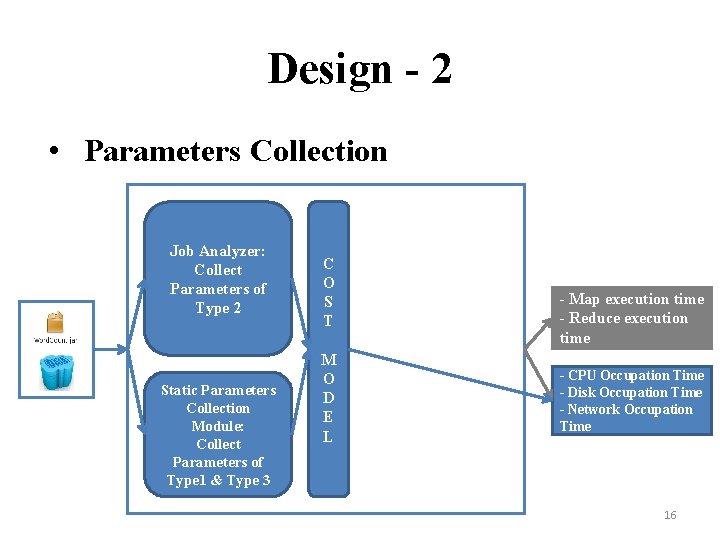

Design - 2
- Parameters collection
  - Job Analyzer: collects the Type Two parameters
  - Static Parameters Collection Module: collects the Type One and Type Three parameters
- [Figure: the collected parameters feed the Cost Model, which outputs Map execution time, Reduce execution time, CPU occupation time, disk occupation time and network occupation time]

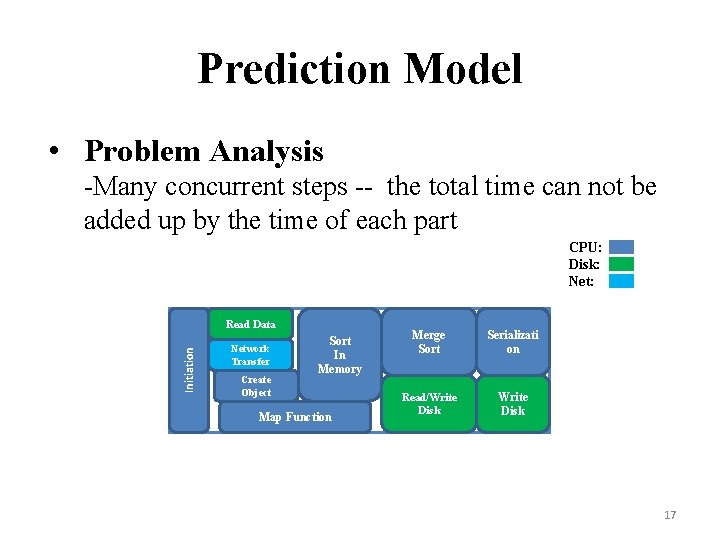

Prediction Model
- Problem analysis
  - Many steps run concurrently, so the total time cannot be obtained by adding up the time of each part.
- [Figure: Map-stage steps grouped by resource (CPU, Disk, Net). Steps: initiation, read data, network transfer, create object, map function, sort in memory, merge sort, serialization, read/write disk]

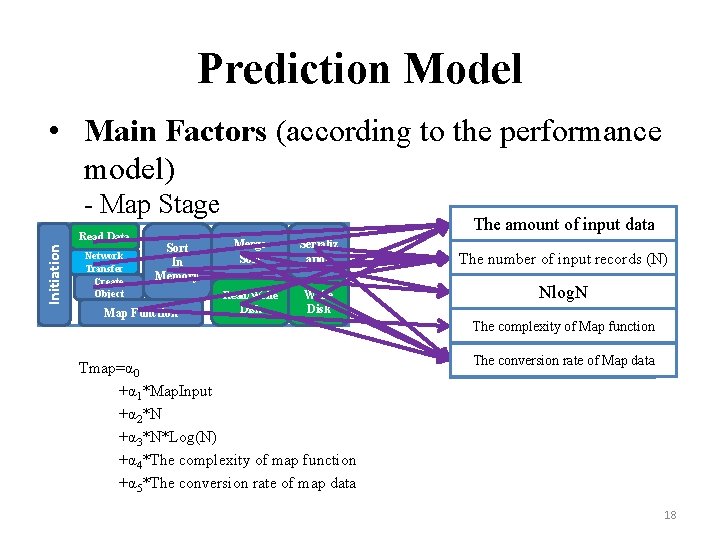

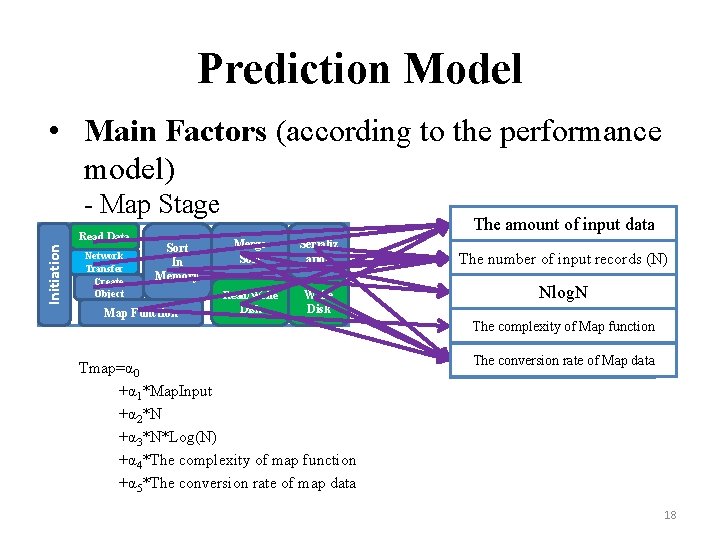

Prediction Model
- Main factors (according to the performance model), Map stage
  - The amount of input data
  - The number of input records (N)
  - N log N
  - The complexity of the map function
  - The conversion rate of map data
- [Figure: Map-stage steps: initiation, read data, network transfer, create object, map function, sort in memory, merge sort, serialization, read/write disk]

$$T_{\mathrm{map}} = \alpha_0 + \alpha_1 \cdot \mathrm{MapInput} + \alpha_2 \cdot N + \alpha_3 \cdot N \log(N) + \alpha_4 \cdot (\text{complexity of the map function}) + \alpha_5 \cdot (\text{conversion rate of map data})$$
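As a rough illustration of how the linear form above is evaluated, the following Python sketch builds one feature row per job and takes its dot product with the coefficients. The function names and the example coefficient values are made up for this sketch; the natural logarithm is an assumption, since the slide does not state the base (a different base only rescales the N log N coefficient).

```python
import math


def map_feature_row(input_amount, num_records, complexity, conversion_rate):
    """Return [1, MapInput, N, N*log(N), complexity, conversion rate]."""
    n = num_records
    n_log_n = n * math.log(n) if n > 0 else 0.0  # natural log is an assumption
    return [1.0, input_amount, n, n_log_n, complexity, conversion_rate]


def predict_map_time(alphas, row):
    """Evaluate T_map = alpha_0 + alpha_1*x_1 + ... + alpha_5*x_5."""
    return sum(a * x for a, x in zip(alphas, row))


# Made-up coefficients, only to show the call; real values come from regression.
alphas = [1.0, 0.1, 2.0, 5.0, 3.0, 4.0]
row = map_feature_row(input_amount=100.0, num_records=1,
                      complexity=2.0, conversion_rate=0.5)
print(predict_map_time(alphas, row))
```

Keeping the constant 1 as the first entry of the row lets the intercept be handled like any other coefficient when the row is later passed to a regression routine.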

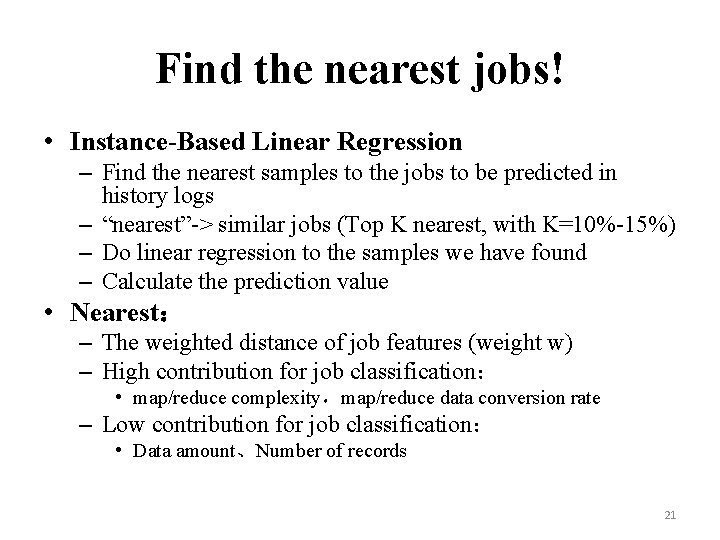

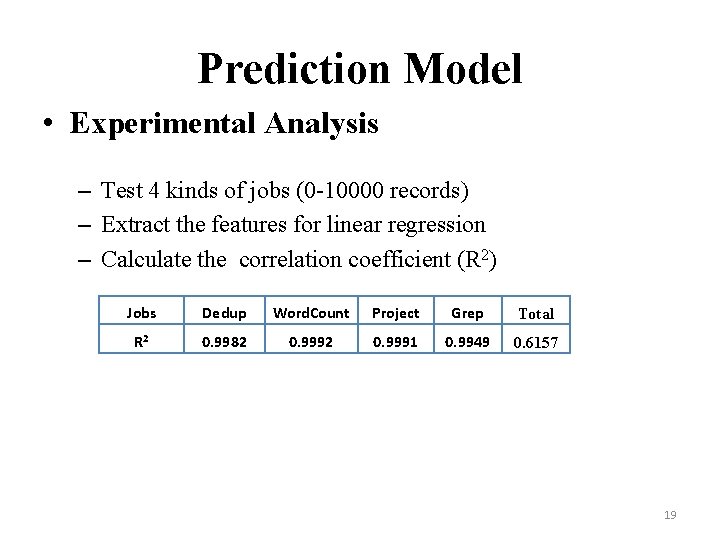

Prediction Model
- Experimental analysis
  - Test 4 kinds of jobs (0-10000 records).
  - Extract the features for linear regression.
  - Calculate the coefficient of determination (R²).
- Jobs: Dedup, WordCount, Project, Grep, Total
- R²: 0.9982, 0.9991, 0.9949, 0.6157
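A small Python sketch can show why a fit pooled across job kinds may score far lower than per-job fits. The data below is synthetic and invented for illustration only (two hypothetical job kinds whose times grow linearly with different slopes), and for brevity the regression uses a single feature, the number of records, rather than the full feature set.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def r_squared(xs, ys, intercept, slope):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# Synthetic data: two job kinds, each perfectly linear in the record count.
records = [1000, 2000, 3000, 4000, 5000]
time_a = [0.1 * n for n in records]  # hypothetical "light" job
time_b = [0.3 * n for n in records]  # hypothetical "heavy" job

r2_a = r_squared(records, time_a, *fit_line(records, time_a))
pooled_x = records + records
pooled_y = time_a + time_b
r2_pooled = r_squared(pooled_x, pooled_y, *fit_line(pooled_x, pooled_y))
print(r2_a, r2_pooled)
```

Each job kind alone lies on a line, but a single line cannot follow both slopes at once, so the pooled residuals grow and R² drops.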

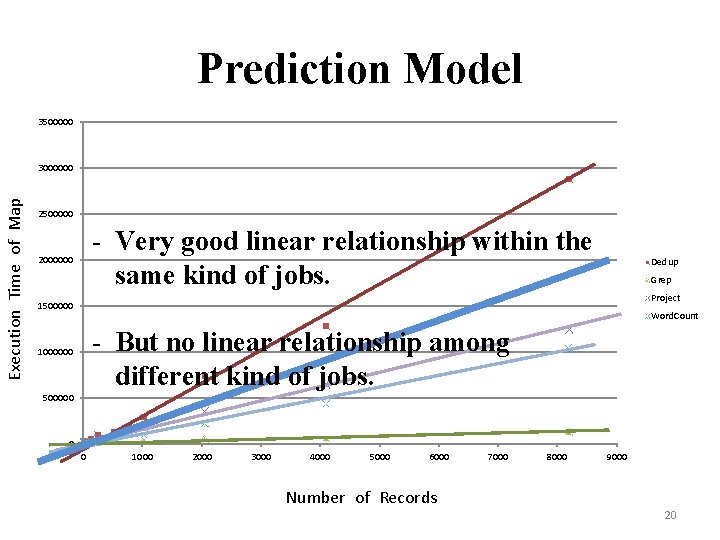

Prediction Model
- Very good linear relationship within the same kind of job.
- But no linear relationship among different kinds of jobs.
- [Figure: execution time of Map versus number of records for Dedup, Grep, Project and WordCount]

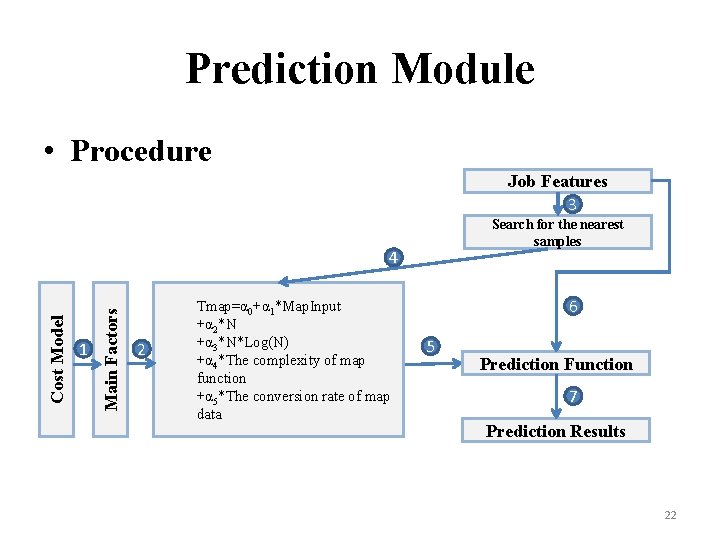

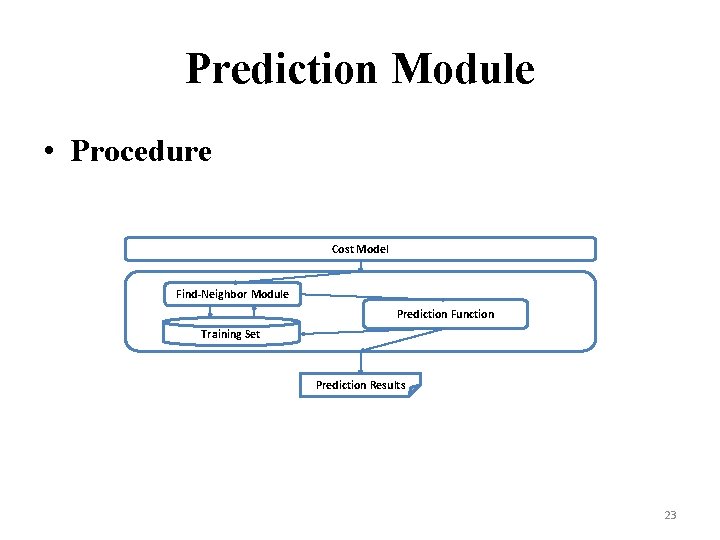

Find the nearest jobs!
- Instance-based linear regression
  - Find the samples in the history logs that are nearest to the job to be predicted.
  - "Nearest" means similar jobs (top K nearest, with K = 10%-15%).
  - Run linear regression on the samples found.
  - Calculate the predicted value.
- Nearest:
  - Measured by the weighted distance between job features (weight w).
  - High contribution to job classification: map/reduce complexity, map/reduce data conversion rate.
  - Low contribution to job classification: data amount, number of records.
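The procedure above can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the weighted Euclidean distance, the concrete weights, the synthetic history, and all names (`predict_time`, `design_row`, and so on) are assumptions, and the regression uses only an intercept and the record count to keep the example short.

```python
import math


def solve_least_squares(rows, targets):
    """Solve the normal equations (X^T X) a = X^T y by Gauss-Jordan elimination."""
    p = len(rows[0])
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(p)]
           + [sum(r[i] * y for r, y in zip(rows, targets))]
           for i in range(p)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: too few or collinear samples")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(p):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][p] / aug[i][i] for i in range(p)]


def weighted_distance(a, b, weights):
    """Weighted Euclidean distance between two feature tuples (an assumption)."""
    return math.sqrt(sum(w * (x - y) ** 2 for w, x, y in zip(weights, a, b)))


def design_row(features):
    """Regression inputs: intercept and record count (kept small on purpose)."""
    return [1.0, features[2]]


def predict_time(history, query, weights, k_fraction=0.12):
    """Fit a regression on the top-K nearest history samples, then predict."""
    k = max(len(design_row(query)), math.ceil(k_fraction * len(history)))
    nearest = sorted(
        history,
        key=lambda s: weighted_distance(s["features"], query, weights))[:k]
    coeffs = solve_least_squares([design_row(s["features"]) for s in nearest],
                                 [s["time"] for s in nearest])
    return sum(c * x for c, x in zip(coeffs, design_row(query)))


# Synthetic history: features are (complexity, conversion rate, records in thousands).
history = []
for n in range(1, 21):
    history.append({"features": (1.0, 0.5, float(n)), "time": 10.0 + 2.0 * n})
    history.append({"features": (5.0, 1.0, float(n)), "time": 50.0 + 7.0 * n})

# Large weights on complexity and conversion rate, small weight on record count.
weights = (10.0, 10.0, 0.1)
prediction = predict_time(history, (1.0, 0.5, 10.5), weights)
print(prediction)
```

With these weights the neighbours of the query all come from the job kind that shares its complexity and conversion rate, so the local regression follows that kind's own line instead of a compromise across kinds.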

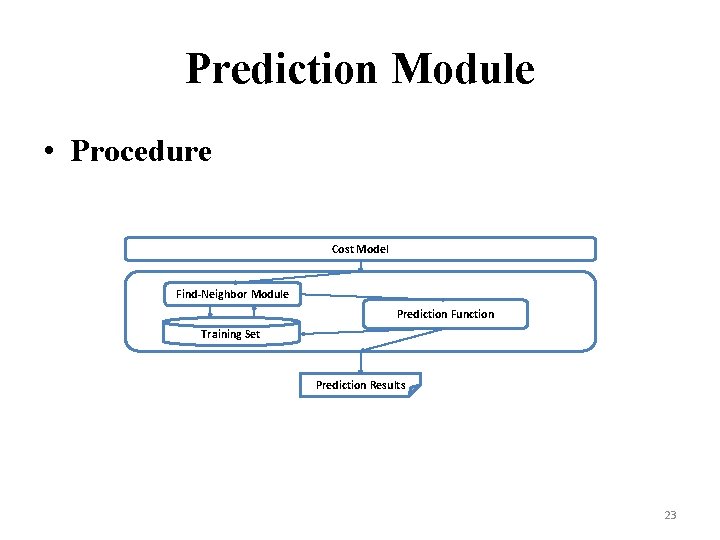

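Prediction Module
- Procedure
- [Figure: steps numbered 1 to 7 connecting Job Features, Cost Model, Main Factors, Search for the nearest samples, Prediction Function and Prediction Results]

$$T_{\mathrm{map}} = \alpha_0 + \alpha_1 \cdot \mathrm{MapInput} + \alpha_2 \cdot N + \alpha_3 \cdot N \log(N) + \alpha_4 \cdot (\text{complexity of the map function}) + \alpha_5 \cdot (\text{conversion rate of map data})$$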

Prediction Module
- Procedure
- [Figure labels: Cost Model, Find-Neighbor Module, Training Set, Prediction Function, Prediction Results]

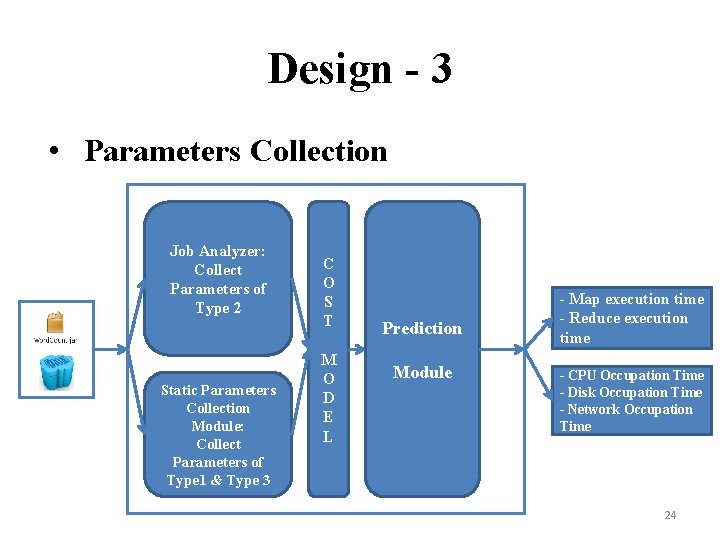

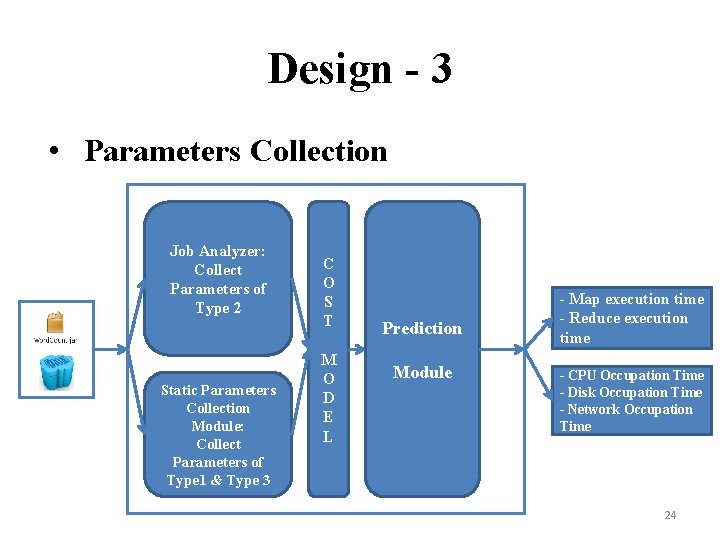

Design - 3
- Parameters collection
  - Job Analyzer: collects the Type Two parameters
  - Static Parameters Collection Module: collects the Type One and Type Three parameters
- Cost Model
- Prediction Module
- [Figure: the complete system outputs Map execution time, Reduce execution time, CPU occupation time, disk occupation time and network occupation time]

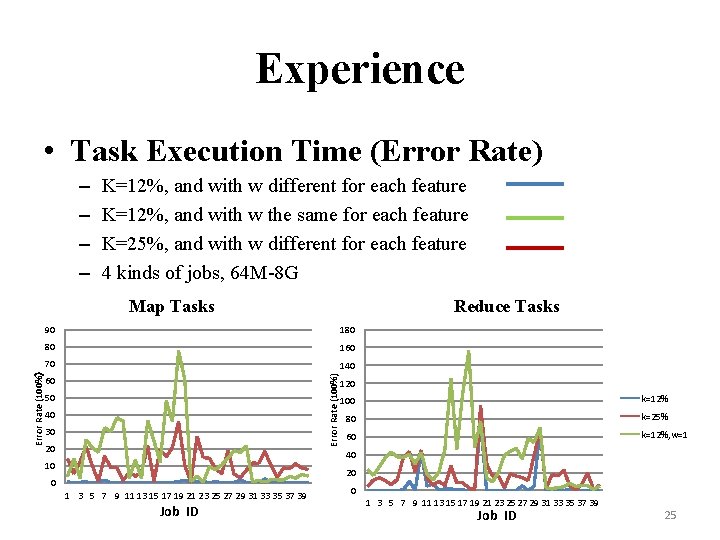

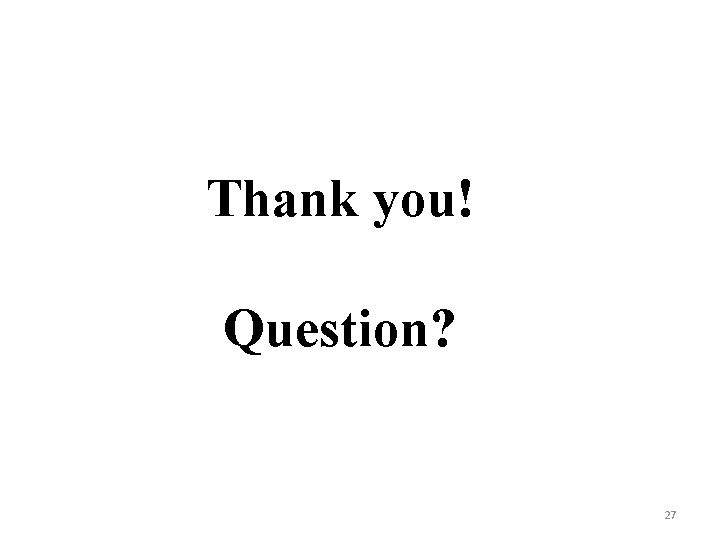

Experiments
- Task execution time (error rate)
  - 4 kinds of jobs, 64 MB to 8 GB
  - K = 12%, with a different weight w for each feature
  - K = 12%, with the same weight w for each feature (w = 1)
  - K = 25%, with a different weight w for each feature
- [Figure: error rate (%) per job ID (1 to 39), one panel for Map tasks and one for Reduce tasks, with series k=12%, k=25% and k=12%, w=1]

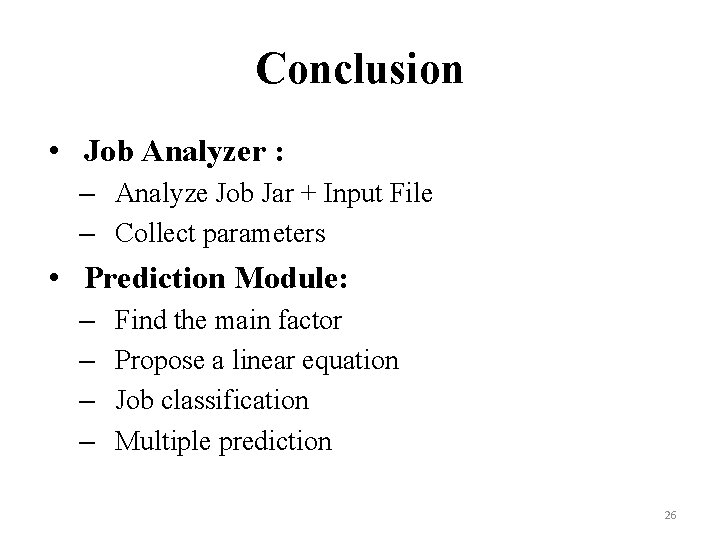

Conclusion
- Job Analyzer:
  - Analyzes the job jar and the input file
  - Collects parameters
- Prediction Module:
  - Finds the main factors
  - Proposes a linear equation
  - Job classification
  - Multiple prediction

Thank you! Questions?

![Cost Model [1]: analysis of the Reduce stage, modeling the resources (CPU, disk, network)](https://slidetodoc.com/presentation_image/e5c333db6e4723fca8c20fbb0aa9ce92/image-28.jpg)

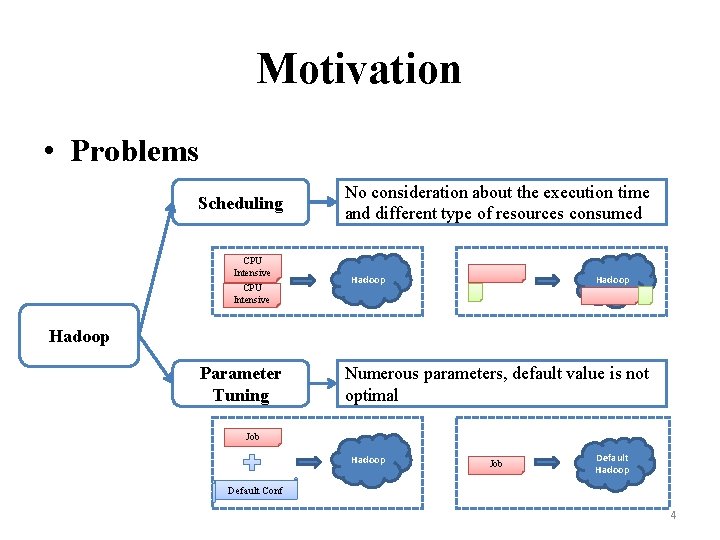

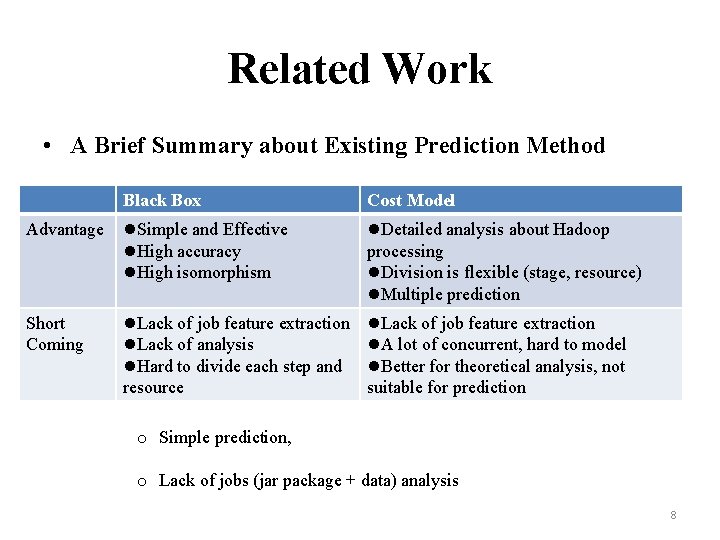

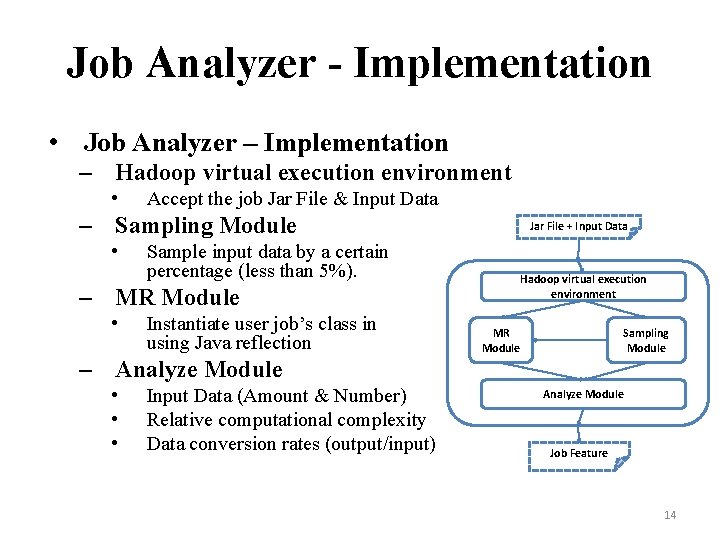

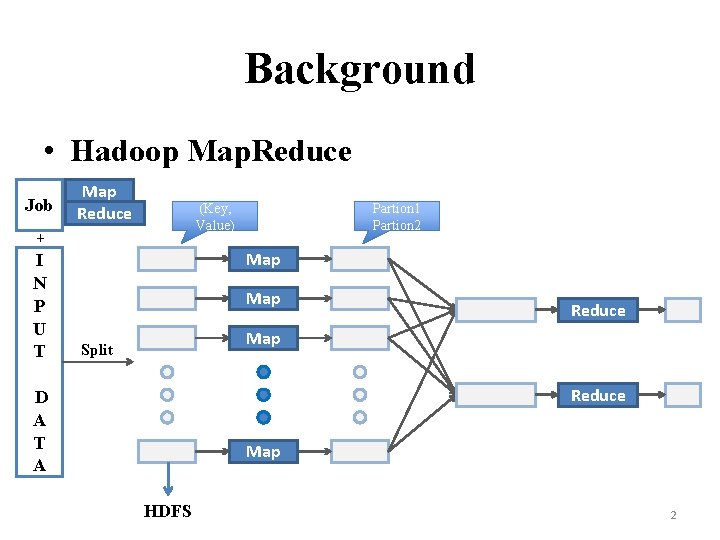

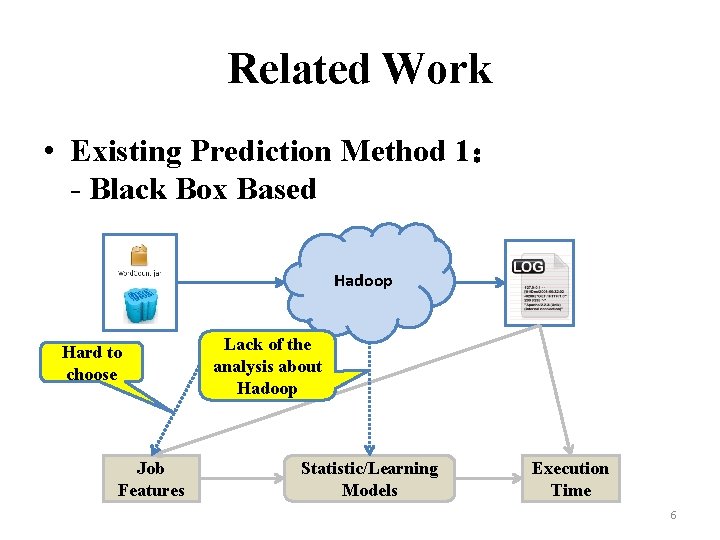

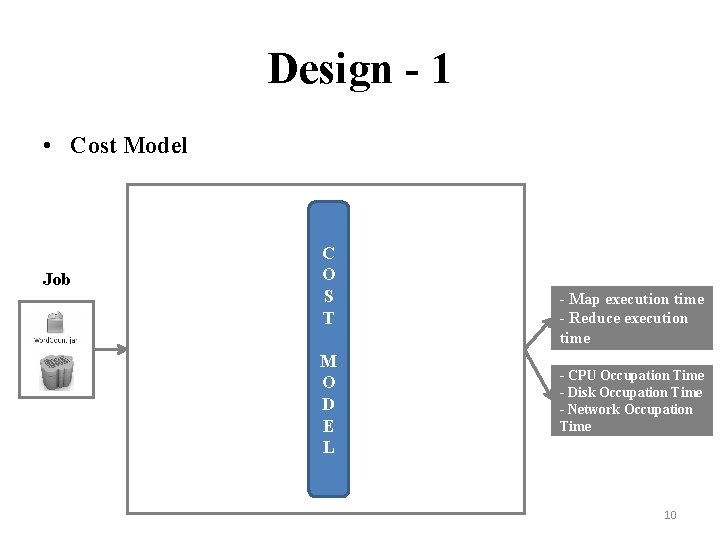

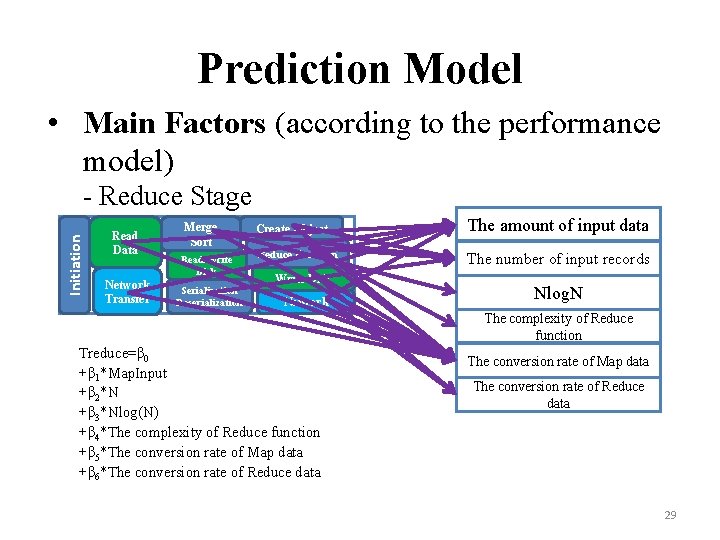

Cost Model [1]
- Analysis of the Reduce stage
  - Model the consumption of each resource (CPU, disk, network).
  - Each stage involves only one type of resource.
- [Figure: Reduce-stage steps grouped by resource (CPU, Disk, Net). Steps: initiation, read data, network transfer, merge sort, read/write disk, serialization, deserialization, create object, reduce function, write disk, network]

Prediction Model
- Main factors (according to the performance model), Reduce stage
  - The amount of input data
  - The number of input records (N)
  - N log N
  - The complexity of the reduce function
  - The conversion rate of map data
  - The conversion rate of reduce data
- [Figure: Reduce-stage steps: initiation, read data, network transfer, merge sort, read/write disk, serialization, deserialization, create object, reduce function, write disk, network]

$$T_{\mathrm{reduce}} = \beta_0 + \beta_1 \cdot \mathrm{MapInput} + \beta_2 \cdot N + \beta_3 \cdot N \log(N) + \beta_4 \cdot (\text{complexity of the reduce function}) + \beta_5 \cdot (\text{conversion rate of map data}) + \beta_6 \cdot (\text{conversion rate of reduce data})$$