A Graph Based Algorithm for Data Path Optimization

A Graph Based Algorithm for Data Path Optimization in Custom Processors

J. Trajkovic, M. Reshadi, B. Gorjiara, D. Gajski

Center for Embedded Computer Systems, University of California, Irvine

Outline
• Introduction
• Design Methodology
• Initial Allocation
• Architecture Wizard
• Critical Path Extraction
• Spill Algorithm
• Results
• Conclusion

Copyright © 2006, CECS

Introduction (1 of 2)
• Complexity of SoC rising
• Short time to market
• Need for processors specialized for different application domains
  • General purpose processors
    – Often slow and power hungry
  • Full HW design
    – Expensive and rigid for debugging and feature extension
  • Custom processor
    – Adapt the data path to a given application
⇒ Need for automatic generation of application specific architectures

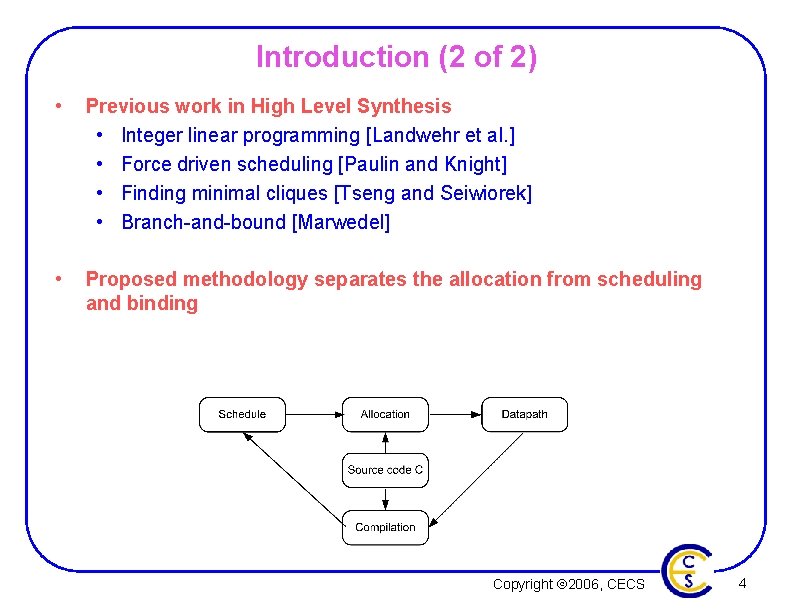

Introduction (2 of 2)
• Previous work in High Level Synthesis
  • Integer linear programming [Landwehr et al.]
  • Force driven scheduling [Paulin and Knight]
  • Finding minimal cliques [Tseng and Siewiorek]
  • Branch-and-bound [Marwedel]
• Proposed methodology separates the allocation from scheduling and binding

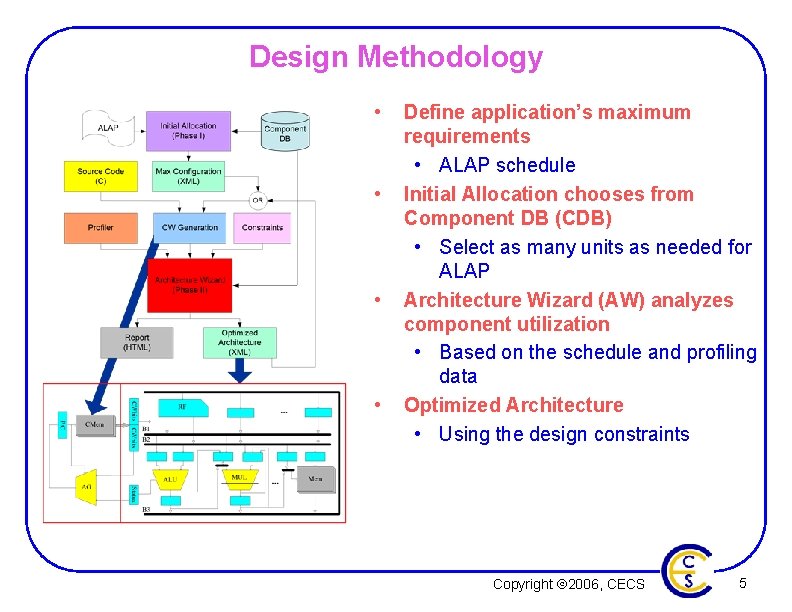

Design Methodology
• Define application’s maximum requirements
  • ALAP (as late as possible) schedule
• Initial Allocation chooses from Component DB (CDB)
  • Select as many units as needed for ALAP
• Architecture Wizard (AW) analyzes component utilization
  • Based on the schedule and profiling data
• Optimized Architecture
  • Using the design constraints

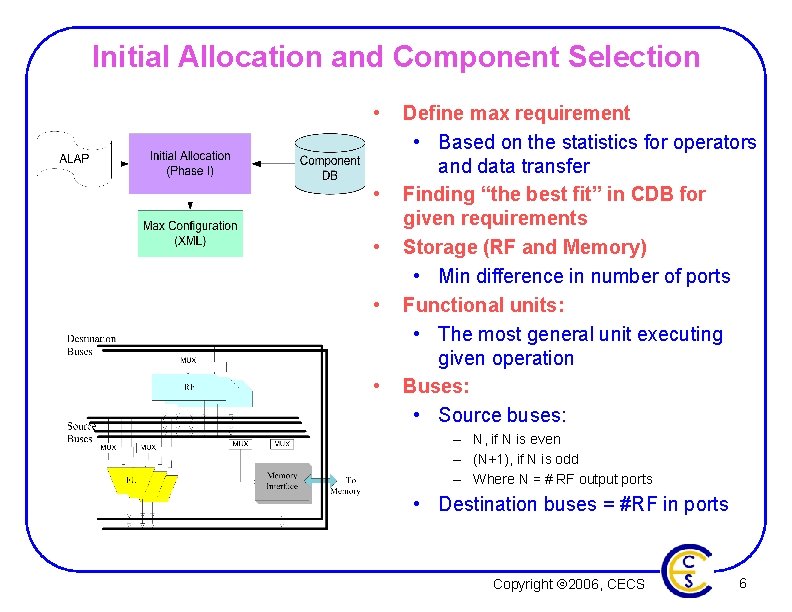
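
The first two steps above can be illustrated with a minimal sketch. It assumes unit-latency operations and a dependence graph given as a successor map; the names `alap_schedule`, `max_units`, and `unit_type_of` are hypothetical and do not come from the slides. Each operation is placed as late as its longest chain of successors allows, and the maximum requirement is then the largest number of operations of one unit type that share a step.

```python
from collections import Counter
from functools import lru_cache


def alap_schedule(succs):
    """Return {op: step} (0-based) with every op placed as late as possible.

    succs maps each operation to the list of operations that depend on it.
    Assumption: every operation takes exactly one step.
    """
    @lru_cache(maxsize=None)
    def tail(op):
        # Length of the longest dependence chain that starts at op.
        return 1 + max((tail(s) for s in succs[op]), default=0)

    length = max(tail(op) for op in succs)
    return {op: length - tail(op) for op in succs}


def max_units(schedule, unit_type_of):
    """Largest number of ops of each unit type scheduled in the same step."""
    per_step = Counter((step, unit_type_of[op]) for op, step in schedule.items())
    need = Counter()
    for (_, unit), n in per_step.items():
        need[unit] = max(need[unit], n)
    return dict(need)


# Hypothetical five-operation example.
succs = {"a": ["c"], "b": ["c"], "c": ["d"], "e": ["d"], "d": []}
unit_type_of = {"a": "alu", "b": "alu", "c": "mul", "e": "alu", "d": "alu"}

schedule = alap_schedule(succs)
requirements = max_units(schedule, unit_type_of)
```

In this example operation `e` has slack, so ALAP moves it out of the first step and only two ALUs are needed at once.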

Initial Allocation and Component Selection
• Define max requirement
  • Based on the statistics for operators and data transfer
• Finding “the best fit” in CDB for given requirements
• Storage (register file (RF) and memory)
  • Min difference in number of ports
• Functional units:
  • The most general unit executing given operation
• Buses:
  • Source buses:
    – N, if N is even
    – (N+1), if N is odd
    – Where N = # RF output ports
  • Destination buses = # RF input ports

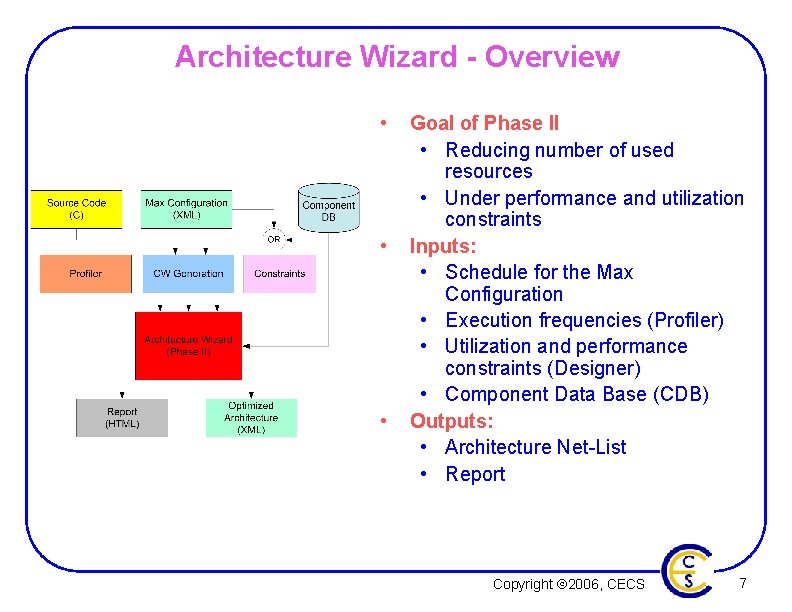
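
The bus-count rule on this slide amounts to rounding the number of RF output ports up to the next even number. A small sketch, with hypothetical function names:

```python
def source_bus_count(rf_output_ports: int) -> int:
    """N source buses if N is even, N + 1 if N is odd (N = RF output ports)."""
    if rf_output_ports < 0:
        raise ValueError("port count must be non-negative")
    return rf_output_ports + (rf_output_ports % 2)


def destination_bus_count(rf_input_ports: int) -> int:
    """One destination bus per RF input port."""
    if rf_input_ports < 0:
        raise ValueError("port count must be non-negative")
    return rf_input_ports
```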

Architecture Wizard - Overview
• Goal of Phase II
  • Reducing number of used resources
  • Under performance and utilization constraints
• Inputs:
  • Schedule for the Max Configuration
  • Execution frequencies (Profiler)
  • Utilization and performance constraints (Designer)
  • Component Data Base (CDB)
• Outputs:
  • Architecture Net-List
  • Report

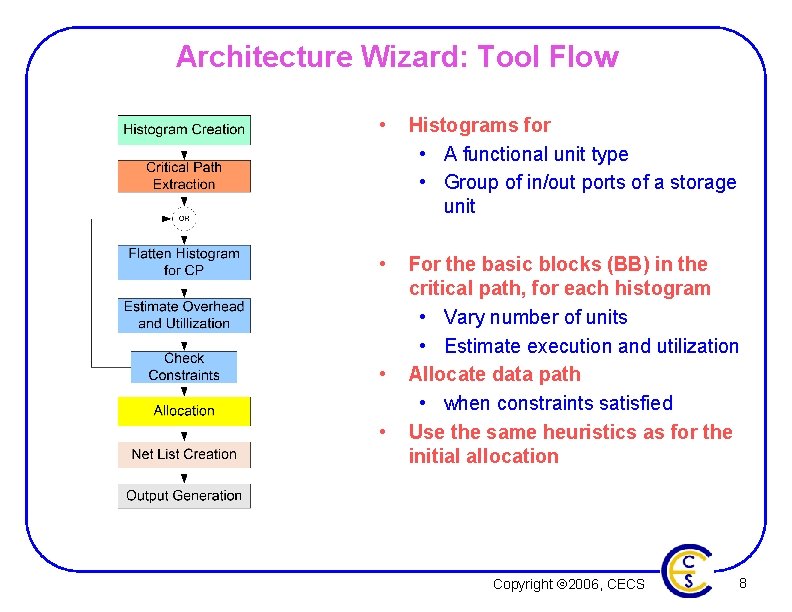

Architecture Wizard: Tool Flow
• Histograms for
  • A functional unit type
  • Group of in/out ports of a storage unit
• For the basic blocks (BB) in the critical path, for each histogram
  • Vary number of units
  • Estimate execution and utilization
• Allocate data path
  • When constraints are satisfied
• Use the same heuristics as for the initial allocation

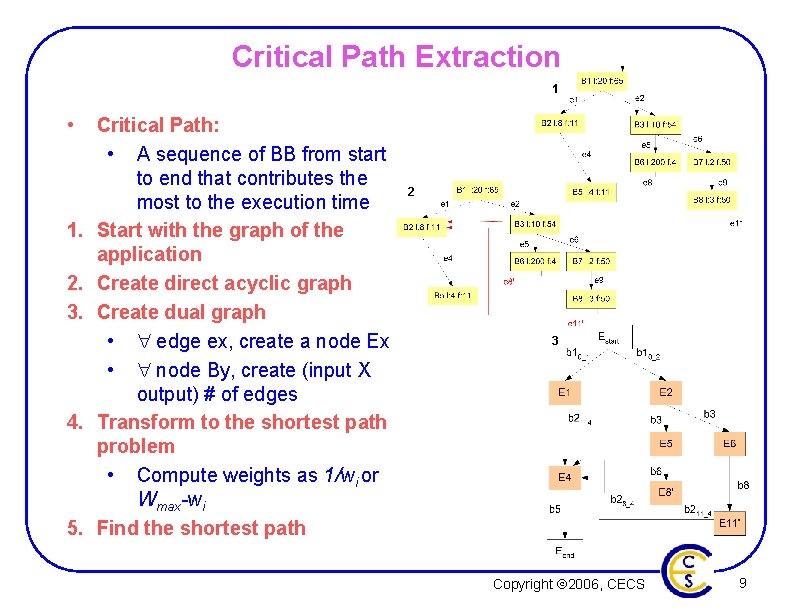
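
One way to picture the histogram for a functional unit type is as a per-cycle count of how many units of that type the schedule uses. The sketch below assumes the schedule of a basic block is a map from operation to 0-based cycle; `usage_profile` and `unit_type_of` are hypothetical names, not the tool's interface.

```python
def usage_profile(schedule, unit_type_of, unit_type):
    """Per-cycle count of units of one type used by a scheduled basic block.

    schedule maps op -> cycle (0-based); unit_type_of maps op -> unit type.
    """
    n_cycles = max(schedule.values()) + 1 if schedule else 0
    profile = [0] * n_cycles
    for op, cycle in schedule.items():
        if unit_type_of[op] == unit_type:
            profile[cycle] += 1
    return profile


# Hypothetical schedule of one basic block.
schedule = {"a": 0, "b": 0, "c": 1, "e": 1, "d": 2}
unit_type_of = {"a": "alu", "b": "alu", "c": "mul", "e": "alu", "d": "alu"}

alu_profile = usage_profile(schedule, unit_type_of, "alu")
mul_profile = usage_profile(schedule, unit_type_of, "mul")
```

The peak of each profile is the unit count of the Max Configuration; smaller counts are the candidates to evaluate.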

Critical Path Extraction
• Critical Path:
  • A sequence of basic blocks (BB) from start to end that contributes the most to the execution time
1. Start with the graph of the application
2. Create directed acyclic graph
3. Create dual graph
  • For each edge e_x, create a node E_x
  • For each node B_y, create (inputs × outputs) edges
4. Transform to the shortest path problem
  • Compute weights as 1/w_i or W_max − w_i
5. Find the shortest path

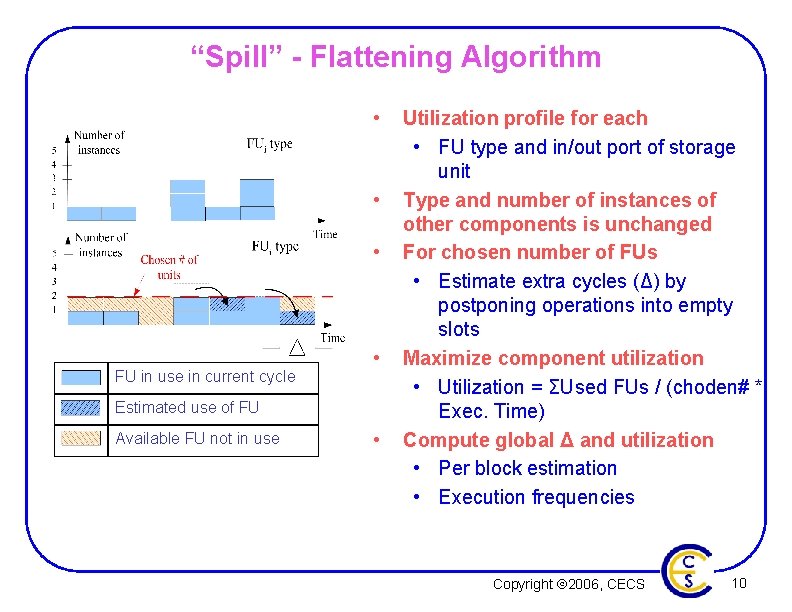
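
Steps 3 to 5 can be sketched as follows, assuming the graph is already acyclic and each basic block carries a single weight w (for example its share of execution time). The function name `critical_path` and the virtual `<entry>`/`<exit>` edges are assumptions made for illustration. Every edge of the original graph becomes a dual node, every block connects each of its incoming edges to each of its outgoing edges, and each dual edge costs W_max − w. Note that with this transform a path with fewer blocks can score better than a heavier path with more blocks, so the result is exact only when the competing paths have the same number of blocks.

```python
import heapq
from itertools import count


def critical_path(succs, weight, start, end):
    """Return the block sequence found by shortest path on the dual graph."""
    ENTRY, EXIT = "<entry>", "<exit>"  # virtual endpoints (assumption)

    # Dual nodes are the edges of the original graph plus entry/exit edges.
    edges = [(ENTRY, start)]
    edges += [(u, v) for u in succs for v in succs[u]]
    edges.append((end, EXIT))
    out_edges = {}
    for u, v in edges:
        out_edges.setdefault(u, []).append((u, v))

    w_max = max(weight.values())
    source, target = (ENTRY, start), (end, EXIT)
    dist, prev = {source: 0}, {}
    tie = count(1)
    heap = [(0, 0, source)]

    # Dijkstra over dual nodes; costs are non-negative by construction.
    while heap:
        d, _, edge = heapq.heappop(heap)
        if d > dist.get(edge, float("inf")):
            continue
        if edge == target:
            break
        block = edge[1]  # block this edge enters
        for nxt in out_edges.get(block, []):
            nd = d + (w_max - weight[block])
            if nd < dist.get(nxt, float("inf")):
                dist[nxt], prev[nxt] = nd, edge
                heapq.heappush(heap, (nd, next(tie), nxt))

    if target not in dist:
        raise ValueError("end is not reachable from start")

    # Walk back through the dual nodes and collect the blocks they leave.
    path, edge = [], target
    while edge != source:
        path.append(edge[0])
        edge = prev[edge]
    return path[::-1]


# Hypothetical four-block diamond.
succs = {"B0": ["B1", "B2"], "B1": ["B3"], "B2": ["B3"], "B3": []}
weight = {"B0": 10, "B1": 50, "B2": 5, "B3": 20}
path = critical_path(succs, weight, "B0", "B3")
```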

“Spill” - Flattening Algorithm
• Utilization profile for each
  • FU type and in/out port of storage unit
• Type and number of instances of other components are unchanged
• For chosen number of FUs
  • Estimate extra cycles (Δ) by postponing operations into empty slots
• Maximize component utilization
  • Utilization = Σ Used FUs / (chosen # of FUs × Exec. Time)
• Compute global Δ and utilization
  • Per block estimation
  • Execution frequencies

Figure legend: FU in use in current cycle; Estimated use of FU; Available FU not in use

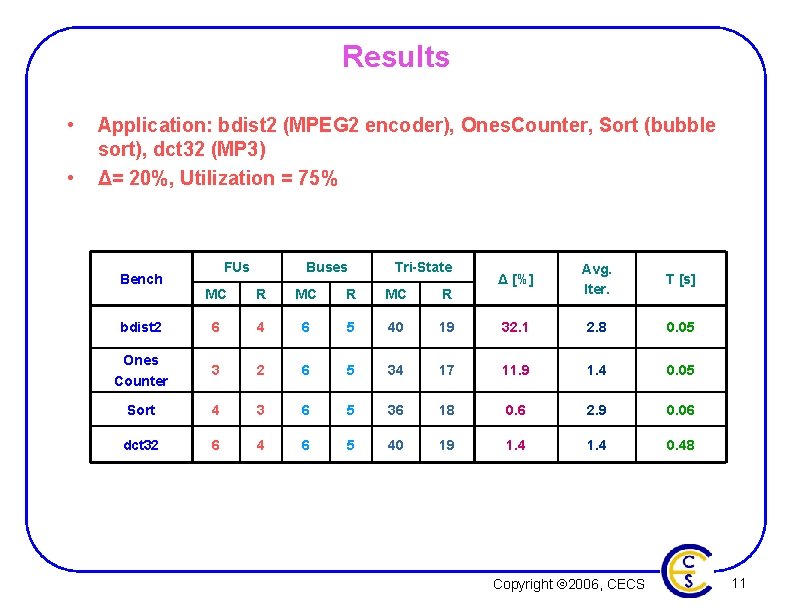
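
A minimal sketch of the flattening estimate for one FU type, assuming the input is a per-cycle usage profile of a basic block and that data dependences are ignored (it is an estimate, not a reschedule). The names `spill` and `global_estimate` are hypothetical. Operations that exceed the chosen number of units are postponed into later free slots, and anything still pending after the last cycle adds extra cycles.

```python
def spill(profile, n_units):
    """Flatten a per-cycle usage profile onto n_units units.

    Returns (extra_cycles, utilization, flattened_profile).
    """
    if n_units < 1:
        raise ValueError("need at least one unit")
    flattened, carry = [], 0
    for demand in profile:
        total = demand + carry          # this cycle's ops plus postponed ones
        used = min(total, n_units)
        flattened.append(used)
        carry = total - used            # ops spilled into later cycles
    while carry > 0:                    # leftover ops extend the block
        used = min(carry, n_units)
        flattened.append(used)
        carry -= used
    extra = len(flattened) - len(profile)
    cycles = len(flattened)
    utilization = sum(flattened) / (n_units * cycles) if cycles else 0.0
    return extra, utilization, flattened


def global_estimate(blocks, n_units):
    """Combine per-block estimates, weighted by execution frequency.

    blocks is a list of (profile, frequency) pairs (assumed input shape).
    Returns (delta, utilization), with delta as a fraction of the base cycles.
    """
    base = extra = used = 0
    for profile, freq in blocks:
        d, _, flat = spill(profile, n_units)
        base += freq * len(profile)
        extra += freq * d
        used += freq * sum(flat)
    if base == 0:
        return 0.0, 0.0
    return extra / base, used / (n_units * (base + extra))


extra, utilization, flattened = spill([3, 1, 0, 2, 3], 2)
```

For the profile `[3, 1, 0, 2, 3]` reduced from three units to two, the block grows by one cycle (Δ = 1/5 = 20%) and utilization rises from 9/15 = 60% to 9/12 = 75%.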

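Results
• Applications: bdist2 (MPEG-2 encoder), OnesCounter, Sort (bubble sort), dct32 (MP3)
• Δ = 20%, Utilization = 75%

| Bench | FUs (MC) | FUs (R) | Buses (MC) | Buses (R) | Tri-State (MC) | Tri-State (R) | Δ [%] | Avg. Iter. | T [s] |
|---|---|---|---|---|---|---|---|---|---|
| bdist2 | 6 | 4 | 6 | 5 | 40 | 19 | 32.1 | 2.8 | 0.05 |
| OnesCounter | 3 | 2 | 6 | 5 | 34 | 17 | 11.9 | 1.4 | 0.05 |
| Sort | 4 | 3 | 6 | 5 | 36 | 18 | 0.6 | 2.9 | 0.06 |
| dct32 | 6 | 4 | 6 | 5 | 40 | 19 | | | |

For dct32, only two of the last three values are given (1.4 and 0.48); which columns they belong to is not recoverable.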

Conclusion
• Automatic generation of data path
  • Separate allocation from scheduling and binding
  • Initial Allocation – creates dense architecture
  • Architecture Wizard – refines architecture for given constraints
• Future work and issues
  • Reduce area
    – Reduce complexity of FU
    – Further reduce interconnect
  • Features
    – Pipelining, chaining, forwarding, special function units

Thank You!
