A Fully Pipelined and Dynamically Composable Architecture of CGRA

Faculty: Jason Cong
Project members: Hui Huang, Chiyuan Ma, Bingjun Xiao, Peipei Zhou*
VAST Lab, Computer Science Department, University of California, Los Angeles

Computing Beyond Processors
- CPU
  - General-purpose computing solution
  - Sharing hardware among all instructions
  - Low energy efficiency
- ASIC
  - Customized for dedicated applications
  - High efficiency but no flexibility
- FPGA
  - Configurable at bit level to keep both efficiency and flexibility
- CGRA
  - Programming granularity raised to word level, since most applications use full precision

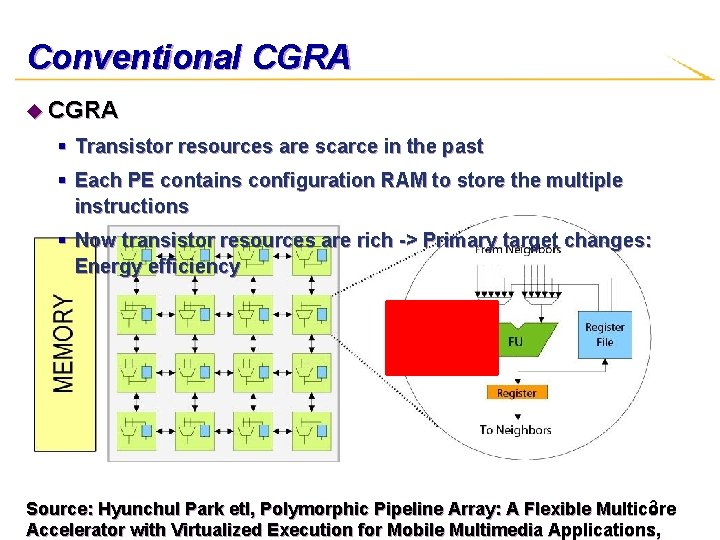

Conventional CGRA
- CGRA
  - Transistor resources were scarce in the past
  - Each PE contains configuration RAM to store multiple instructions
  - Now transistor resources are rich -> primary target changes: energy efficiency

Source: Hyunchul Park et al., Polymorphic Pipeline Array: A Flexible Multicore Accelerator with Virtualized Execution for Mobile Multimedia Applications

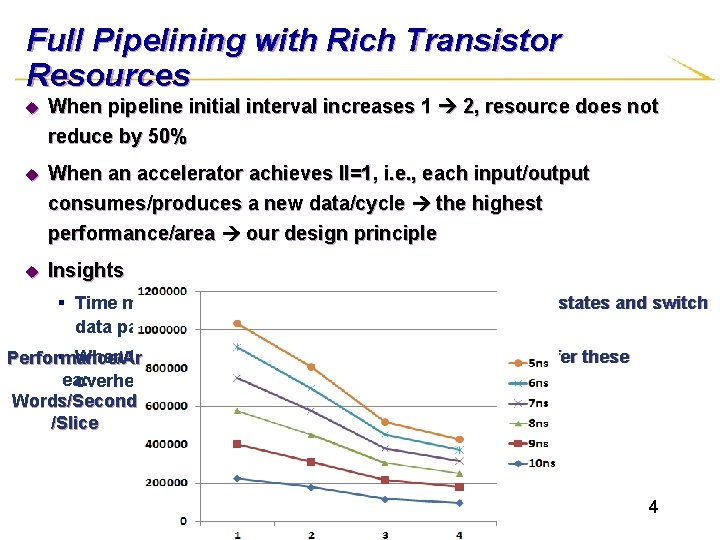

Full Pipelining with Rich Transistor Resources
- When the pipeline initiation interval (II) increases from 1 to 2, resource usage does not drop by 50%
- An accelerator that achieves II=1, i.e., each input/output consumes/produces a new data item every cycle, has the highest performance/area; this is our design principle
- Insights
  - Time multiplexing costs resources to store more pipeline states and to switch data paths
  - When transistor resources are rich, there is no need to suffer these overheads

Figure: resource (normalized) and performance/area (words/second/slice) versus pipeline initiation interval (II).

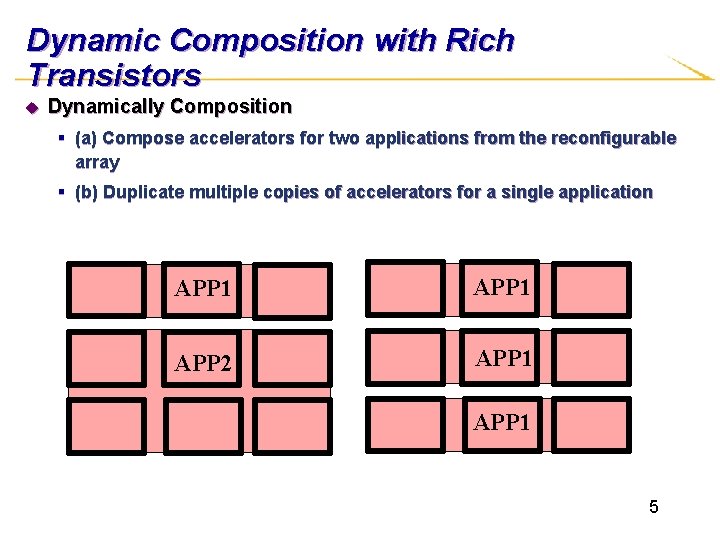

Dynamic Composition with Rich Transistors
- Dynamic composition
  - (a) Compose accelerators for two applications from the reconfigurable array
  - (b) Duplicate multiple copies of accelerators for a single application

Figure: (a) the array shared by APP 1 and APP 2; (b) the array filled with copies of APP 1.

Outline
- Programming Model
- Architecture Overview
- Computation Complex
- Case Study
- Experiment Result
- Conclusion and Future Work

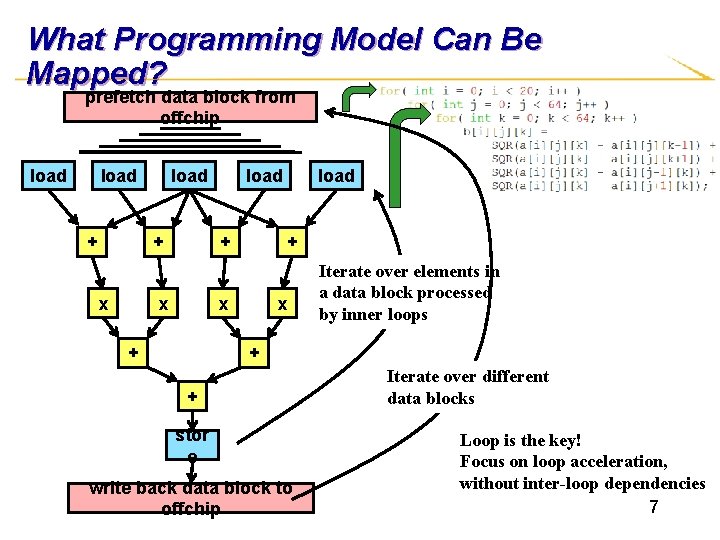

What Programming Model Can Be Mapped?
- Outer loop: iterate over different data blocks
  - prefetch data block from off-chip
  - inner loops: iterate over elements in a data block (load, +, x, store)
  - write back data block to off-chip
- Loop is the key! Focus on loop acceleration, without inter-loop dependencies
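The loop structure on this slide can be sketched in software as follows. This is only an illustrative sketch: the function name `run_blocked`, the flat list standing in for off-chip memory, and the element-wise `kernel` are assumptions made for the example, not part of the FPCA design.

```python
def run_blocked(offchip_in, block_size, kernel):
    """Apply `kernel` to every element, one data block at a time."""
    offchip_out = [None] * len(offchip_in)
    # Outer loop: iterate over different data blocks.
    for start in range(0, len(offchip_in), block_size):
        # Prefetch one data block from (simulated) off-chip memory.
        block = offchip_in[start:start + block_size]
        # Inner loop: iterate over the elements of the block (load, compute, store).
        result = [kernel(x) for x in block]
        # Write the processed block back to (simulated) off-chip memory.
        offchip_out[start:start + len(result)] = result
    return offchip_out


# Hypothetical kernel: one multiply followed by one add per element.
scaled = run_blocked([1, 2, 3, 4, 5], block_size=2, kernel=lambda x: 2 * x + 1)
print(scaled)
```

Because no iteration of the inner loop depends on another, a new element can enter the pipeline every cycle.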


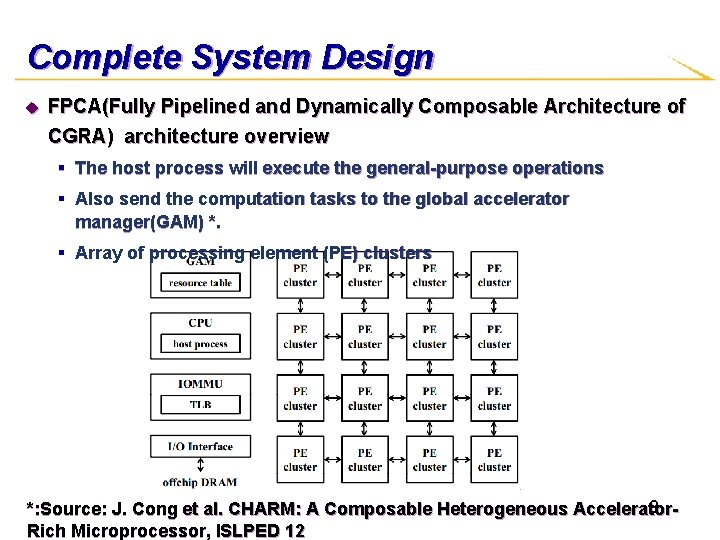

Complete System Design
- FPCA (Fully Pipelined and Dynamically Composable Architecture of CGRA) architecture overview
  - The host process executes the general-purpose operations
  - It also sends the computation tasks to the global accelerator manager (GAM)*
  - Array of processing element (PE) clusters

*: Source: J. Cong et al., CHARM: A Composable Heterogeneous Accelerator-Rich Microprocessor, ISLPED '12

![Overview of Our Composable Accelerator](http://slidetodoc.com/presentation_image/22c2edce6a3175c9c5d2d97cdc9ba5dc/image-10.jpg)

Overview of Our Composable Accelerator

Figure: block diagram of the accelerator.
- Computation complex: an array of CEs
- Pipelined data network, 32 bits wide ([31:0]); data flows through at II=1
- Memory complex: LMUs and register chains
- Configuration unit and controller
- Global data transfer unit and synchronization unit, with connections to the GAM, the IOMMU and the AXI data bus


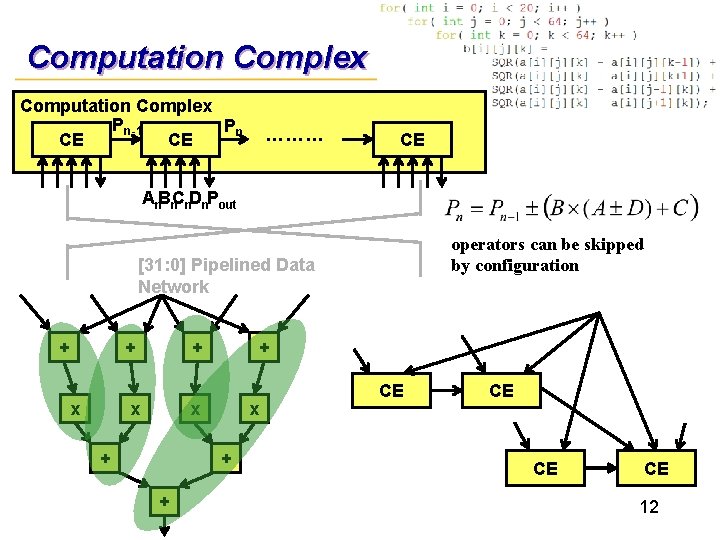

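Computation Complex

Figure: a chain of CEs attached to the [31:0] pipelined data network. Each CE takes inputs An, Bn, Cn, Dn plus the partial result Pn-1 of the previous CE and produces Pn; the last CE drives Pout. Each CE contains +, x, + operators, and operators can be skipped by configuration.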


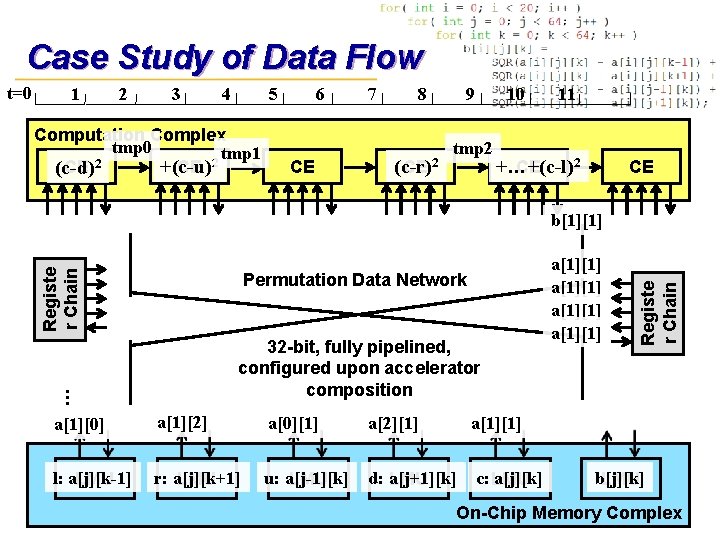

Case Study of Data Flow
- Computation complex: chained CEs accumulate the partial sums tmp0, tmp1, tmp2 of (c-d)^2 + (c-r)^2 + (c-u)^2 + (c-l)^2
- Permutation data network: 32-bit, fully pipelined, configured upon accelerator composition
- On-chip memory complex: one LMU per stream, plus register chains
  - l: a[j][k-1]
  - r: a[j][k+1]
  - u: a[j-1][k]
  - d: a[j+1][k]
  - c: a[j][k]
  - output: b[j][k]

Figure: snapshot at t=0. The LMUs issue a[1][0], a[1][2], a[0][1], a[2][1] and a[1][1]; the first result is b[1][1].
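For reference, the kernel in this case study can be written as a plain software loop. The sketch below assumes the kernel is the sum of the four squared differences between the center element and its left, right, up and down neighbors, as the slide labels suggest; the function name `gradient` and the zero-filled border are choices made for the example.

```python
def gradient(a):
    """Software reference for the stencil shown in the data-flow figure."""
    rows, cols = len(a), len(a[0])
    b = [[0] * cols for _ in range(rows)]  # border elements are left at 0
    for j in range(1, rows - 1):
        for k in range(1, cols - 1):
            c = a[j][k]                      # center stream
            l, r = a[j][k - 1], a[j][k + 1]  # left and right streams
            u, d = a[j - 1][k], a[j + 1][k]  # up and down streams
            b[j][k] = (c - u) ** 2 + (c - d) ** 2 + (c - r) ** 2 + (c - l) ** 2
    return b


a = [[0, 1, 0],
     [2, 5, 3],
     [0, 4, 0]]
b = gradient(a)
print(b[1][1])
```

Each of the five reads in the loop body corresponds to one LMU stream, which is why the operands can all arrive in the same cycle without memory contention.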

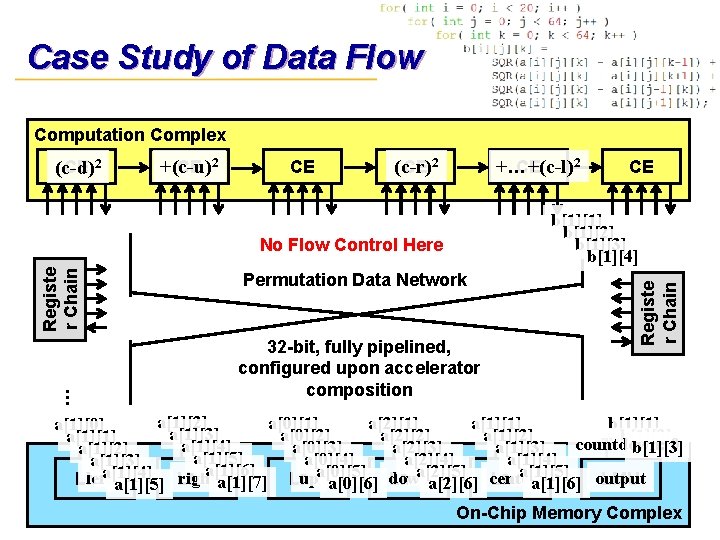

Case Study of Data Flow
- Computation complex: the same CE chain computing (c-d)^2 + (c-r)^2 + (c-u)^2 + (c-l)^2
- Permutation data network: 32-bit, fully pipelined, configured upon accelerator composition
- No flow control here

Figure: the data flow over successive cycles (countdown: 0, 1, 2, 3). The left, right, up, down and center LMUs each issue one element of a per cycle, for example a[1][0], a[1][2], a[0][1], a[2][1], a[1][1] for the first result, and the output LMU receives b[1][1], b[1][2], b[1][3], b[1][4] in turn from the on-chip memory complex.

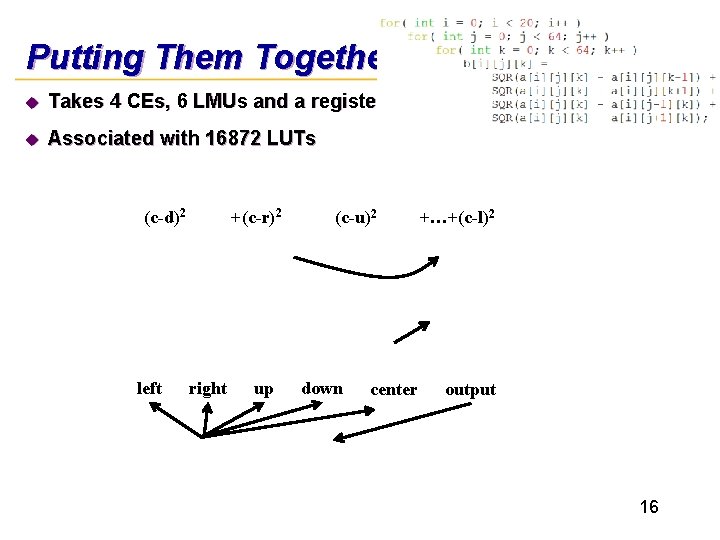

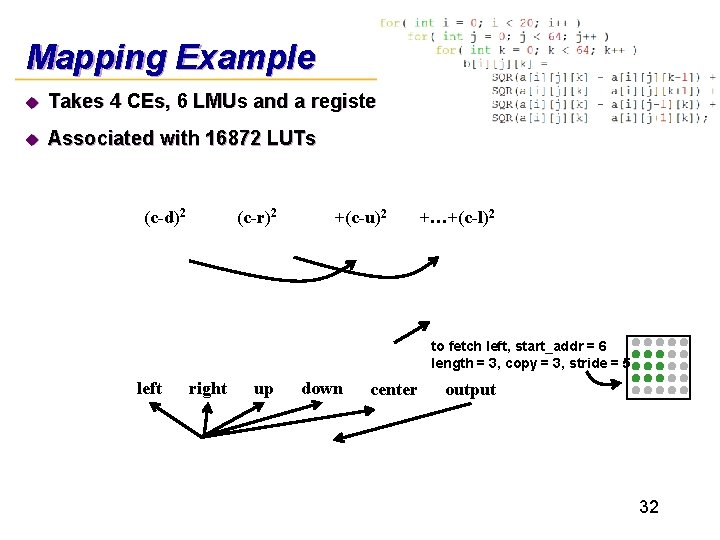

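Putting Them Together
- Takes 4 CEs, 6 LMUs and a register chain
- Associated with 16872 LUTs

Figure: the mapped accelerator. The CE chain computes (c-d)^2, then adds (c-r)^2, (c-u)^2 and (c-l)^2, reading from the left, right, up, down and center LMUs and writing to the output LMU.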


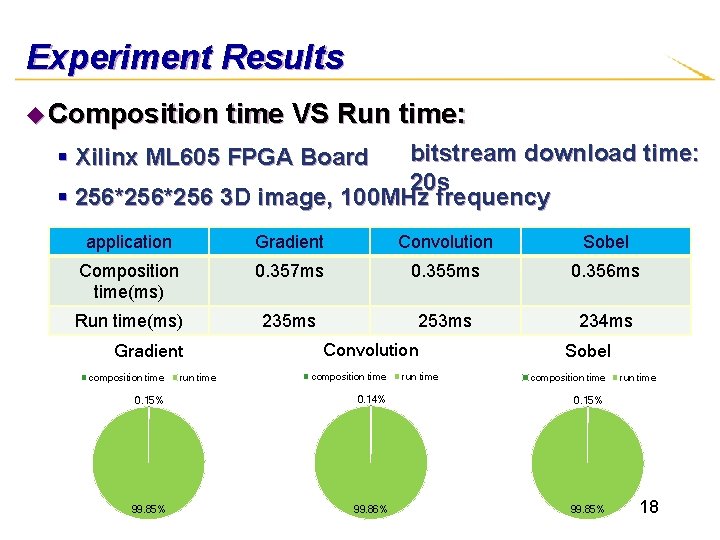

Experiment Results
- Composition time vs. run time
  - Bitstream download time: 20 s
  - 256*256 3D image, 100 MHz frequency
  - Xilinx ML605 FPGA board

| Application | Composition time (ms) | Run time (ms) | Composition share |
| --- | --- | --- | --- |
| Gradient | 0.357 | 235 | 0.15% |
| Convolution | 0.355 | 253 | 0.14% |
| Sobel | 0.356 | 234 | 0.15% |

Figure: pie charts of composition time versus run time for each application; run time accounts for the remaining 99.85% to 99.86%.

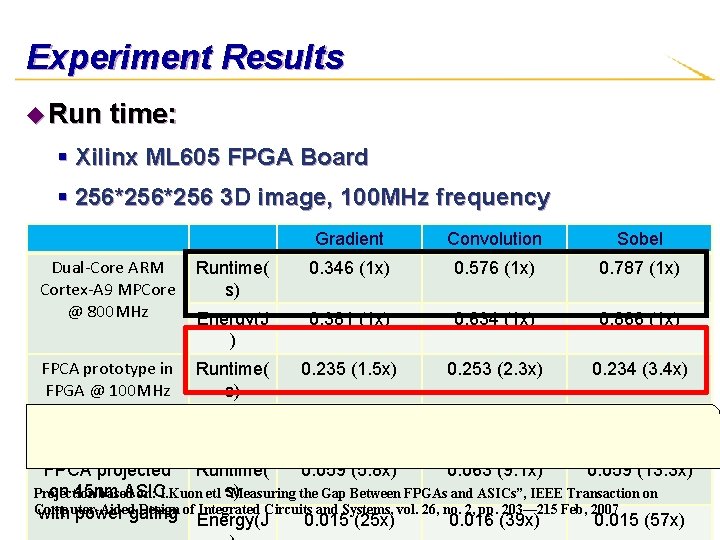

Experiment Results
- Run time
  - Xilinx ML605 FPGA board
  - 256*256 3D image, 100 MHz frequency

| Platform | Metric | Gradient | Convolution | Sobel |
| --- | --- | --- | --- | --- |
| Dual-Core ARM Cortex-A9 MPCore @ 800 MHz | Runtime (s) | 0.346 (1x) | 0.576 (1x) | 0.787 (1x) |
| | Energy (J) | 0.381 (1x) | 0.634 (1x) | 0.866 (1x) |
| FPCA prototype in FPGA @ 100 MHz | Runtime (s) | 0.235 (1.5x) | 0.253 (2.3x) | 0.234 (3.4x) |
| | Energy (J) | 0.729 (0.52x) | 0.784 (0.81x) | 0.725 (1.19x) |
| FPCA projected on 45 nm ASIC with power gating | Runtime (s) | 0.059 (5.8x) | 0.063 (9.1x) | 0.059 (13.3x) |
| | Energy (J) | 0.015 (25x) | 0.016 (39x) | 0.015 (57x) |

Projection based on: I. Kuon et al., "Measuring the Gap Between FPGAs and ASICs", IEEE Transactions on Computer-Aided Design of Integrated Circuits and Systems, vol. 26, no. 2, pp. 203-215, Feb. 2007
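The improvement factors in parentheses are the ARM baseline divided by the FPCA value. The short sketch below recomputes them for the FPGA prototype from the numbers on this slide; the dictionary layout and the helper name `improvement` are assumptions made for the example.

```python
# (runtime in seconds, energy in joules), copied from the slide.
arm = {"Gradient": (0.346, 0.381), "Convolution": (0.576, 0.634), "Sobel": (0.787, 0.866)}
fpga = {"Gradient": (0.235, 0.729), "Convolution": (0.253, 0.784), "Sobel": (0.234, 0.725)}


def improvement(baseline, other):
    """Return {app: (speedup, energy improvement)} of `other` over `baseline`."""
    return {
        app: (baseline[app][0] / other[app][0], baseline[app][1] / other[app][1])
        for app in baseline
    }


factors = improvement(arm, fpga)
for app, (speedup, energy_gain) in factors.items():
    print(f"{app}: {speedup:.1f}x runtime, {energy_gain:.2f}x energy")
```

An energy factor below 1 means the FPGA prototype uses more energy than the ARM baseline for that kernel, which is the case for Gradient and Convolution.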

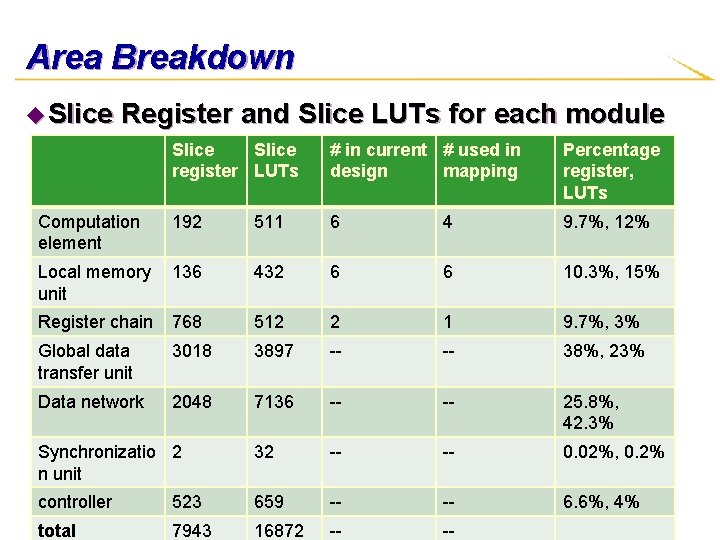

Area Breakdown
- Slice registers and slice LUTs for each module

| Module | Slice registers | Slice LUTs | # in current design | # used in mapping | Percentage (registers, LUTs) |
| --- | --- | --- | --- | --- | --- |
| Computation element | 192 | 511 | 6 | 4 | 9.7%, 12% |
| Local memory unit | 136 | 432 | 6 | 6 | 10.3%, 15% |
| Register chain | 768 | 512 | 2 | 1 | 9.7%, 3% |
| Global data transfer unit | 3018 | 3897 | -- | -- | 38%, 23% |
| Data network | 2048 | 7136 | -- | -- | 25.8%, 42.3% |
| Synchronization unit | 2 | 32 | -- | -- | 0.02%, 0.2% |
| Controller | 523 | 659 | -- | -- | 6.6%, 4% |
| Total | 7943 | 16872 | -- | -- | |
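The totals in the table are consistent with multiplying each per-instance figure by the number of instances used in the mapping. The check below assumes that modules listed with "--" are single instances; that reading is an assumption, not something the slide states.

```python
# (module, slice registers per instance, slice LUTs per instance,
#  instances used in the mapping; 1 is assumed where the slide shows "--")
modules = [
    ("Computation element", 192, 511, 4),
    ("Local memory unit", 136, 432, 6),
    ("Register chain", 768, 512, 1),
    ("Global data transfer unit", 3018, 3897, 1),
    ("Data network", 2048, 7136, 1),
    ("Synchronization unit", 2, 32, 1),
    ("Controller", 523, 659, 1),
]

total_regs = sum(regs * n for _, regs, _, n in modules)
total_luts = sum(luts * n for _, _, luts, n in modules)

for name, regs, luts, n in modules:
    reg_share = 100 * regs * n / total_regs
    lut_share = 100 * luts * n / total_luts
    print(f"{name}: {reg_share:.1f}% registers, {lut_share:.1f}% LUTs")
print(total_regs, total_luts)
```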


Conclusion & Future Work
- A novel CGRA architecture enables
  - Full pipelining
  - Dynamic composition
- Future work
  - CE: the selection of computation patterns for different domains
  - Heterogeneous or homogeneous CE design
  - Reduce overhead of composition

Thank you
- Q&A?

Back Up Slides
- Compared to VLIW
- Difference
  - There are no routing problems for VLIW, since all FUs can read from the register file

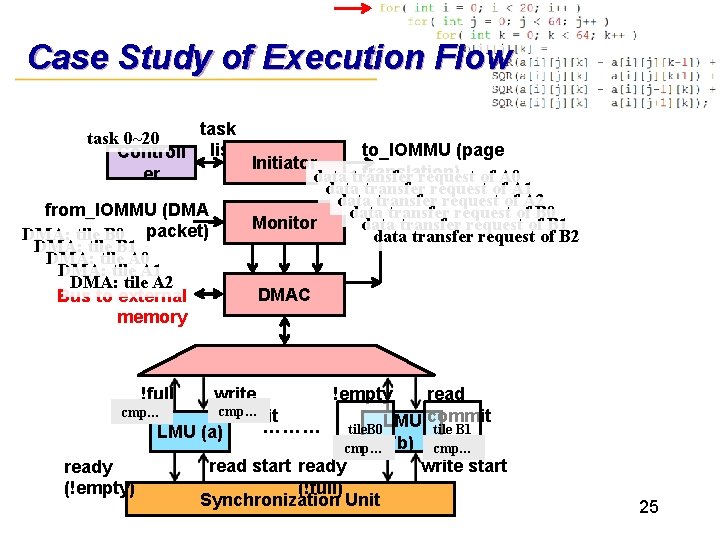

Case Study of Execution Flow

Figure: execution flow of the global data transfer.
- Controller: the initiator walks the task list (task 0~20) and sends requests to_IOMMU (page translation); the monitor receives replies from_IOMMU (DMA packet)
- Requests: data transfer requests of A0, A1, A2 and of B0, B1, B2
- DMAC: transfers tiles A0, A1, A2, B0, B1 over the bus to external memory
- LMUs: written when !full, read when !empty; tiles (tile B0, tile B1) are committed as computation (cmp) proceeds
- Synchronization unit: read start/ready (!empty), write start/ready (!full)

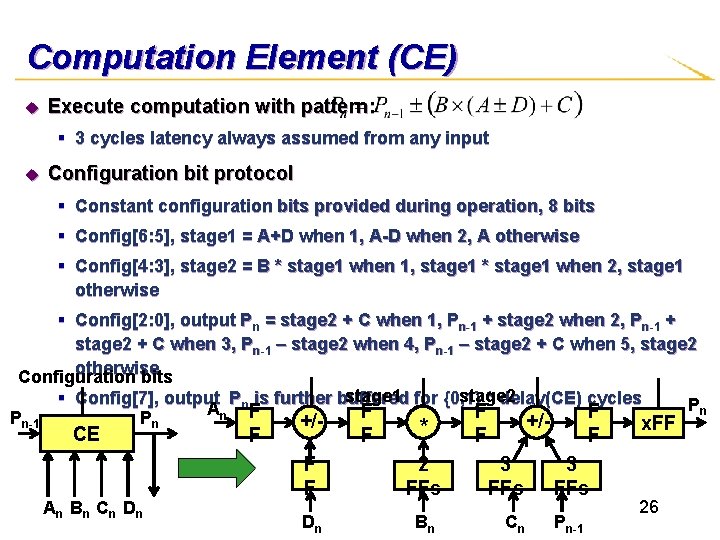

Computation Element (CE)
- Executes computation with the pattern shown in the figure
  - 3 cycles latency always assumed from any input
- Configuration bit protocol
  - Constant configuration bits provided during operation, 8 bits
  - Config[6:5]: stage 1 = A+D when 1, A-D when 2, A otherwise
  - Config[4:3]: stage 2 = B * stage 1 when 1, stage 1 * stage 1 when 2, stage 1 otherwise
  - Config[2:0]: output Pn = stage 2 + C when 1, Pn-1 + stage 2 when 2, Pn-1 + stage 2 + C when 3, Pn-1 - stage 2 when 4, Pn-1 - stage 2 + C when 5, stage 2 otherwise
  - Config[7]: output Pn is further buffered for {0, 1} * delay(CE) cycles

Figure: CE data path with inputs An, Bn, Cn, Dn and Pn-1, stage 1 (+/-), stage 2 (*), a final +/- stage producing Pn, and flip-flop (FF) delays on the inputs (2 FFs on Bn, 3 FFs on Cn, 3 FFs on Pn-1).
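The configuration bit protocol can be illustrated with a small functional model. This is a sketch only: it decodes Config[6:0] as listed on the slide, ignores Config[7] and all pipeline timing, and uses unbounded Python integers rather than 32-bit words. The function name `ce` and the constant names are made up for the example.

```python
def ce(config, a, b, c, d, p_prev):
    """Functional (not cycle-accurate) model of one computation element."""
    s1_sel = (config >> 5) & 0b11   # Config[6:5]
    s2_sel = (config >> 3) & 0b11   # Config[4:3]
    out_sel = config & 0b111        # Config[2:0]

    if s1_sel == 1:
        stage1 = a + d
    elif s1_sel == 2:
        stage1 = a - d
    else:
        stage1 = a

    if s2_sel == 1:
        stage2 = b * stage1
    elif s2_sel == 2:
        stage2 = stage1 * stage1
    else:
        stage2 = stage1

    outputs = {
        1: stage2 + c,
        2: p_prev + stage2,
        3: p_prev + stage2 + c,
        4: p_prev - stage2,
        5: p_prev - stage2 + c,
    }
    return outputs.get(out_sel, stage2)  # any other code passes stage 2 through


# Hypothetical configurations for a squared-difference chain.
SQUARE_DIFF = (2 << 5) | (2 << 3)       # stage 1 = A-D, stage 2 = square, Pn = stage 2
SQUARE_DIFF_ACC = SQUARE_DIFF | 2       # same, but Pn = Pn-1 + stage 2

first = ce(SQUARE_DIFF, a=5, b=0, c=0, d=3, p_prev=0)
second = ce(SQUARE_DIFF_ACC, a=5, b=0, c=0, d=2, p_prev=first)
print(first, second)
```

Chaining the second call on the result of the first mirrors how adjacent CEs pass Pn-1 to Pn to accumulate a sum of squared differences.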

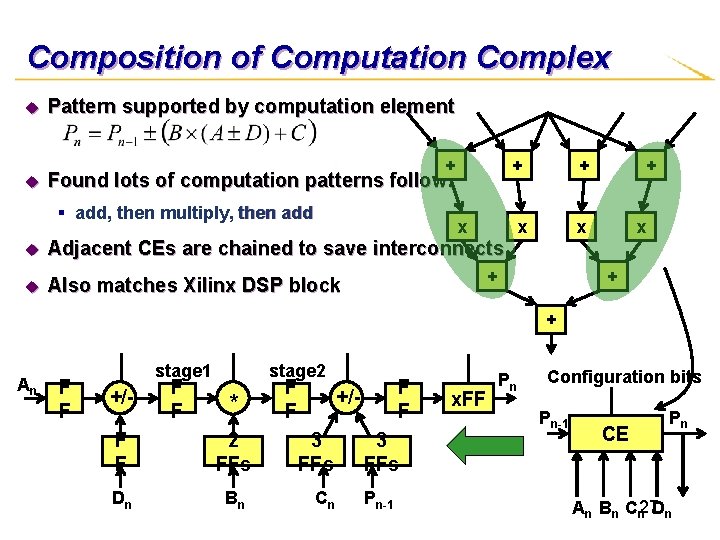

Composition of Computation Complex
- Pattern supported by the computation element
  - We found that many computation patterns follow: add, then multiply, then add
- Adjacent CEs are chained to save interconnects
- Also matches the Xilinx DSP block

Figure: the +, x, + pattern next to the CE data path (inputs An, Bn, Cn, Dn and Pn-1; stage 1 +/-, stage 2 *, final +/- producing Pn; FF delays on the inputs; configuration bits).

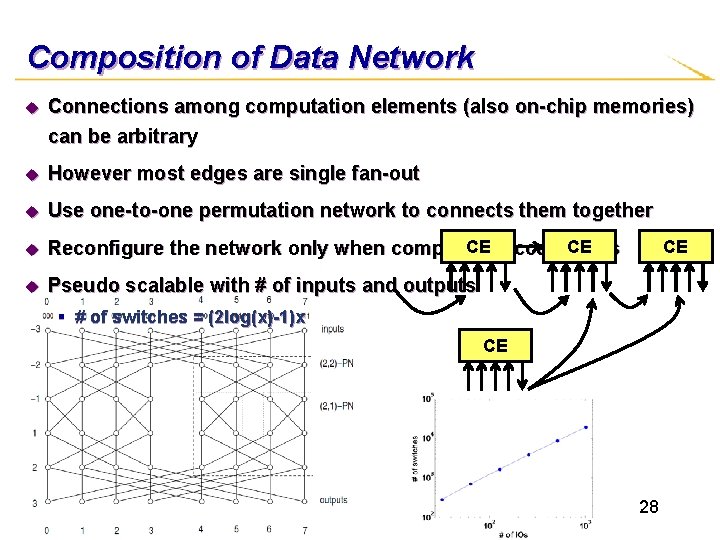

Composition of Data Network
- Connections among computation elements (and on-chip memories) can be arbitrary
- However, most edges are single fan-out
- Use a one-to-one permutation network to connect them together
- Reconfigure the network only when composing accelerators
- Pseudo-scalable with # of inputs and outputs
  - # of switches = (2 log(x) - 1) x
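To get a feel for how the switch count grows, the formula on the slide can be evaluated directly. The sketch assumes that log is base 2 and that x, the number of network ports, is a power of two; neither is stated on the slide, and the function name `switch_count` is made up for the example.

```python
def switch_count(x):
    """Evaluate (2*log2(x) - 1) * x for a power-of-two port count x."""
    if x < 2 or x & (x - 1):
        raise ValueError("x is assumed to be a power of two, at least 2")
    log2_x = x.bit_length() - 1  # exact log2 for powers of two
    return (2 * log2_x - 1) * x


for ports in (8, 16, 32):
    print(ports, switch_count(ports))
```

Doubling the port count slightly more than doubles the switch count, which matches the "pseudo-scalable" description.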

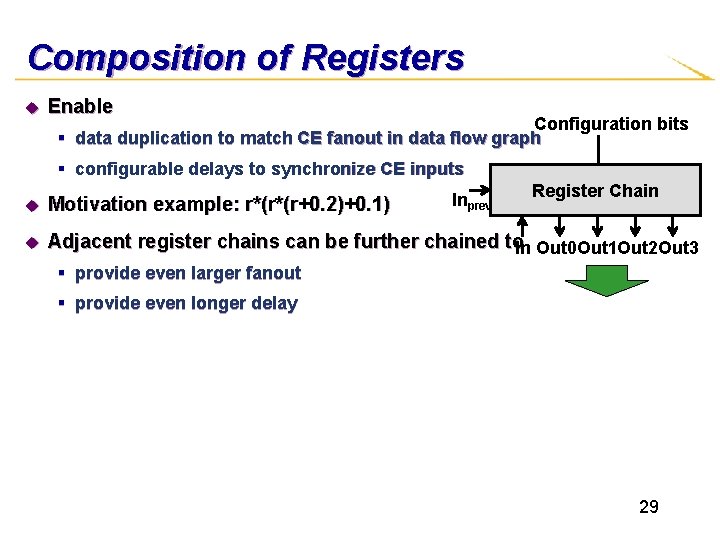

Composition of Registers
- Enable
  - data duplication to match CE fanout in the data flow graph
  - configurable delays to synchronize CE inputs
- Motivating example: r*(r*(r+0.2)+0.1)
- Adjacent register chains can be further chained to
  - provide even larger fanout
  - provide even longer delay

Figure: a register chain with inputs In and Inprev, configuration bits, and outputs Out0, Out1, Out2, Out3.
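A register chain can be thought of as a tapped shift register: one input stream is copied to several outputs, each with its own delay. The sketch below models that behavior on Python lists. The function name, the `fill` value for cycles before data arrives, and the particular tap delays chosen for the motivating example are all assumptions made for illustration.

```python
def register_chain(stream, tap_delays, fill=0):
    """Return one output stream per tap.

    Tap i carries the input stream delayed by tap_delays[i] cycles,
    padded at the front with `fill` and truncated to the input length.
    """
    n = len(stream)
    return [([fill] * delay + list(stream))[:n] for delay in tap_delays]


# Hypothetical use for r*(r*(r+0.2)+0.1): two undelayed copies of r feed a
# first CE, and a third copy is delayed by an assumed 3-cycle CE latency so
# that it lines up with the first CE's result at a second CE.
r_stream = [1.0, 2.0, 3.0, 4.0, 5.0]
out0, out1, out2 = register_chain(r_stream, tap_delays=[0, 0, 3])
print(out0, out1, out2)
```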

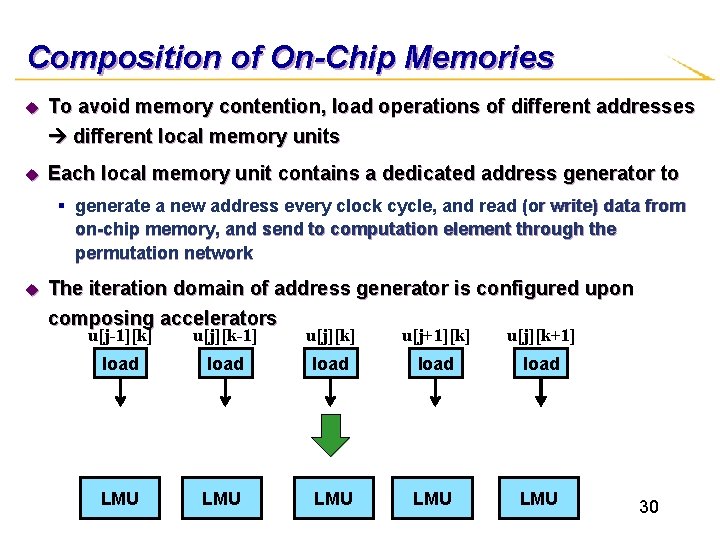

Composition of On-Chip Memories
- To avoid memory contention, load operations of different addresses are mapped to different local memory units
- Each local memory unit contains a dedicated address generator to
  - generate a new address every clock cycle, read (or write) data from on-chip memory, and send it to a computation element through the permutation network
- The iteration domain of the address generator is configured upon composing accelerators

Figure: the loads of u[j-1][k], u[j][k-1], u[j][k], u[j+1][k] and u[j][k+1] are each served by a separate LMU.

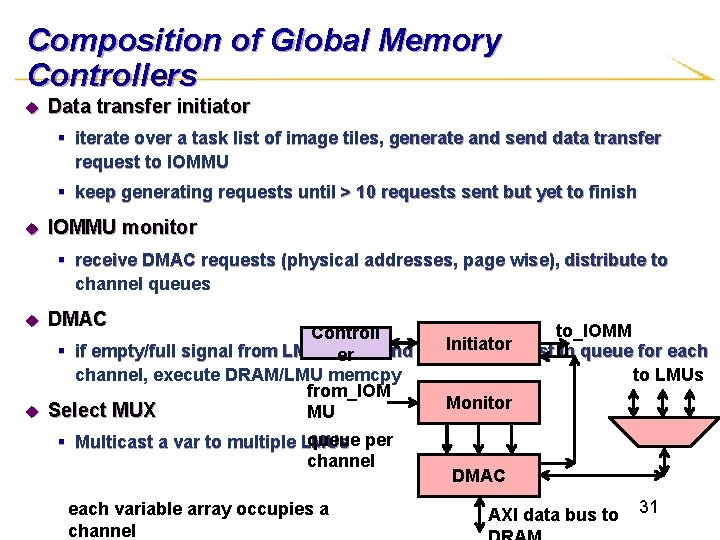

Composition of Global Memory Controllers
- Data transfer initiator
  - iterate over a task list of image tiles, generate and send data transfer requests to the IOMMU
  - keep generating requests until > 10 requests sent but yet to finish
- IOMMU monitor
  - receive DMAC requests (physical addresses, page-wise), distribute to channel queues
- DMAC
  - if the empty/full signal from the LMUs is '0' and a request exists in the queue for each channel, execute DRAM/LMU memcpy
- Select MUX
  - multicast a variable to multiple LMUs

Figure: the controller (initiator with to_IOMMU, monitor with from_IOMMU), one queue per channel (each variable array occupies a channel), the DMAC with its path to the LMUs, and the AXI data bus.
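The initiator's throttling rule can be sketched as a simple loop. The sketch takes "until > 10 requests sent but yet to finish" literally, so issuing stops once more than 10 requests are outstanding; the function name, the `limit` parameter and the use of plain integers as tasks are assumptions made for the example.

```python
def issue_until_throttled(task_list, outstanding, limit=10):
    """Issue requests from task_list until more than `limit` are outstanding.

    Returns the tasks issued in this round and the new outstanding count.
    """
    issued = []
    for task in task_list:
        if outstanding > limit:
            break  # too many requests in flight; wait for some to finish
        issued.append(task)
        outstanding += 1
    return issued, outstanding


# Hypothetical task list of 21 tiles with nothing in flight at the start.
issued, in_flight = issue_until_throttled(list(range(21)), outstanding=0)
print(len(issued), in_flight)
```

In a fuller model the outstanding count would drop as the DMAC completes transfers, letting the initiator resume with the remaining tasks.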

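Mapping Example
- Takes 4 CEs, 6 LMUs and a register chain
- Associated with 16872 LUTs
- Address generator configuration to fetch left: start_addr = 6, length = 3, copy = 3, stride = 5

Figure: the mapped accelerator. The CE chain computes (c-d)^2, then adds (c-r)^2, (c-u)^2 and (c-l)^2, reading from the left, right, up, down and center LMUs and writing to the output LMU.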
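The slide does not define the address generator fields, so the sketch below is one plausible reading rather than the actual hardware behavior: `length` consecutive addresses are produced starting at `start_addr`, and that run is repeated `copy` times with the start advanced by `stride` each time. The generator name `lmu_addresses` is made up for the example.

```python
def lmu_addresses(start_addr, length, copy, stride):
    """Yield one address per cycle under the assumed field meanings."""
    for row in range(copy):          # assumed: number of repeated runs
        base = start_addr + row * stride
        for offset in range(length):  # assumed: consecutive addresses per run
            yield base + offset


addresses = list(lmu_addresses(start_addr=6, length=3, copy=3, stride=5))
print(addresses)
```

Under this reading the configuration walks a 3 x 3 window inside a row-major array whose rows are 5 elements wide.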

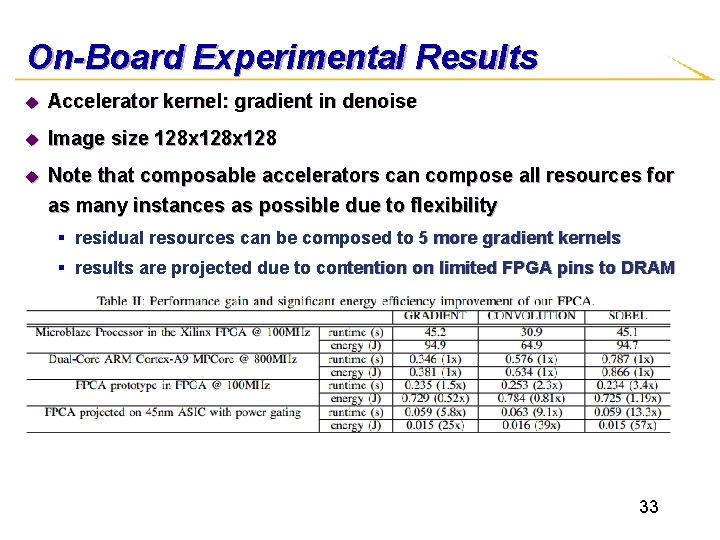

On-Board Experimental Results
- Accelerator kernel: gradient in denoise
- Image size: 128 x 128
- Note that composable accelerators can compose all resources into as many instances as possible, thanks to their flexibility
  - residual resources can be composed into 5 more gradient kernels
  - results are projected due to contention on the limited FPGA pins to DRAM
