A Framework for Machine Learning and Data Mining

- Slides: 65

A Framework for Machine Learning and Data Mining in the Cloud. Yucheng Low, Joseph Gonzalez, Aapo Kyrola, Danny Bickson, Carlos Guestrin, Joe Hellerstein. Carnegie Mellon

Big Data is Everywhere: 6 billion Flickr photos; 28 million Wikipedia pages; 900 million Facebook users; 72 hours a minute on YouTube. “…growing at 50 percent a year…” “…data a new class of economic asset, like currency or gold.”

Big Learning: How will we design and implement Big Learning systems?

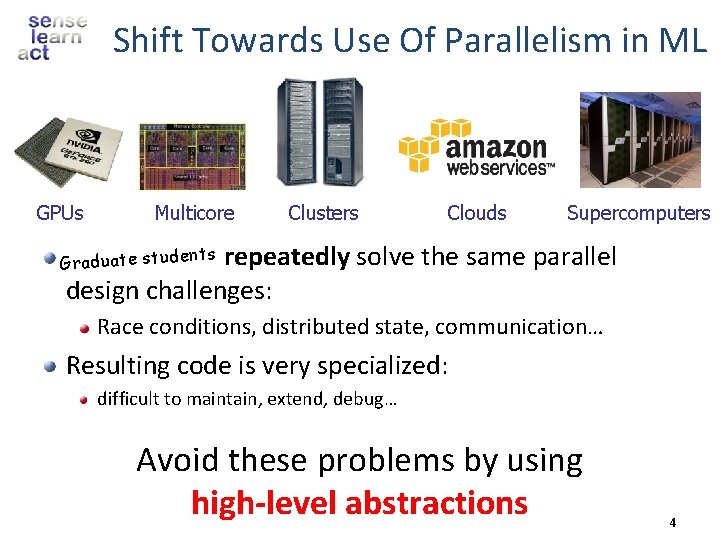

Shift Towards Use of Parallelism in ML: GPUs, multicore, clusters, clouds, supercomputers. ML experts (graduate students) repeatedly solve the same parallel design challenges: race conditions, distributed state, communication… The resulting code is very specialized: difficult to maintain, extend, debug… Avoid these problems by using high-level abstractions.

MapReduce – Map Phase: CPU 1, CPU 2, CPU 3, CPU 4

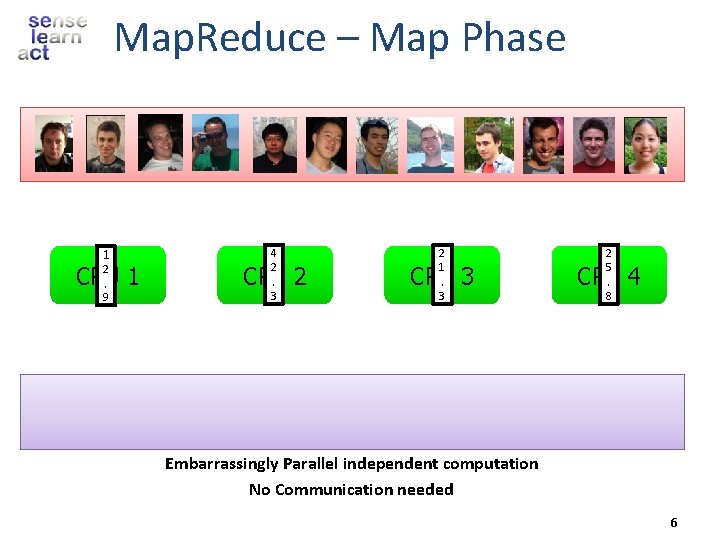

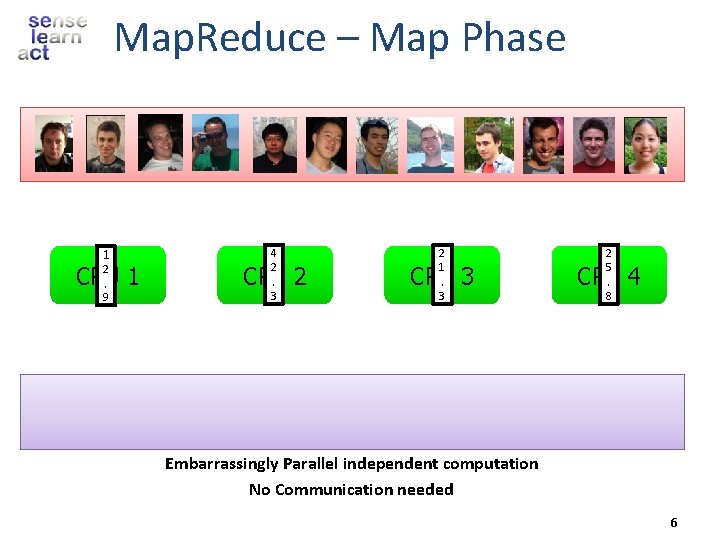

MapReduce – Map Phase (CPU 1 to CPU 4). Embarrassingly parallel: independent computation, no communication needed.


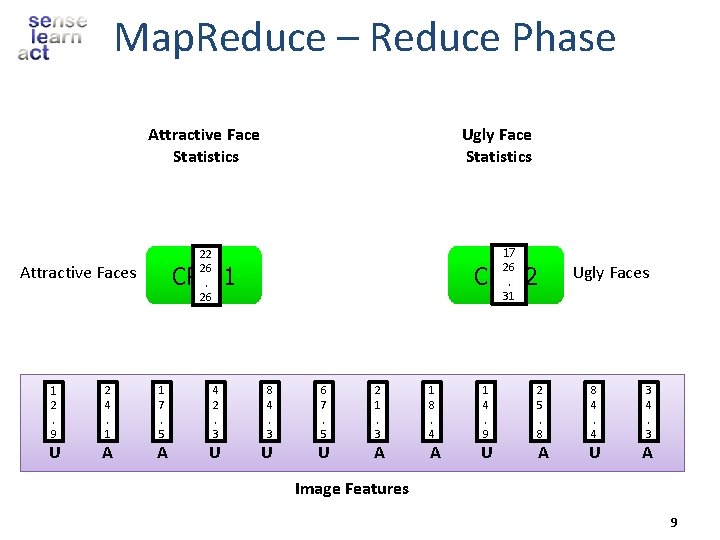

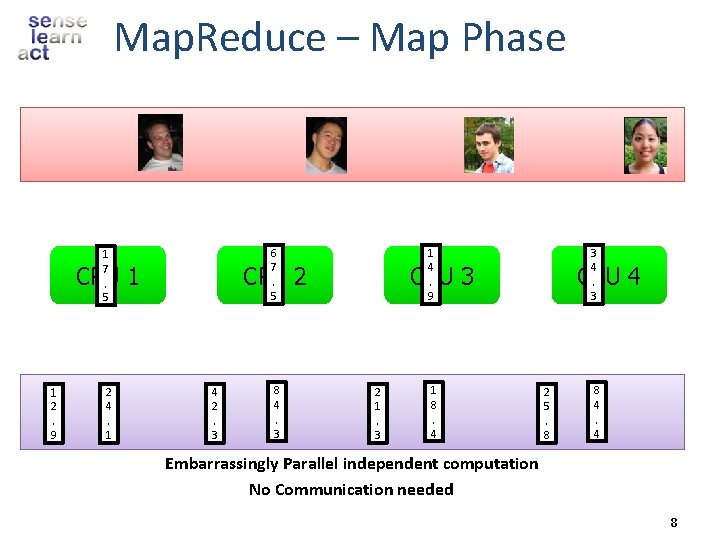


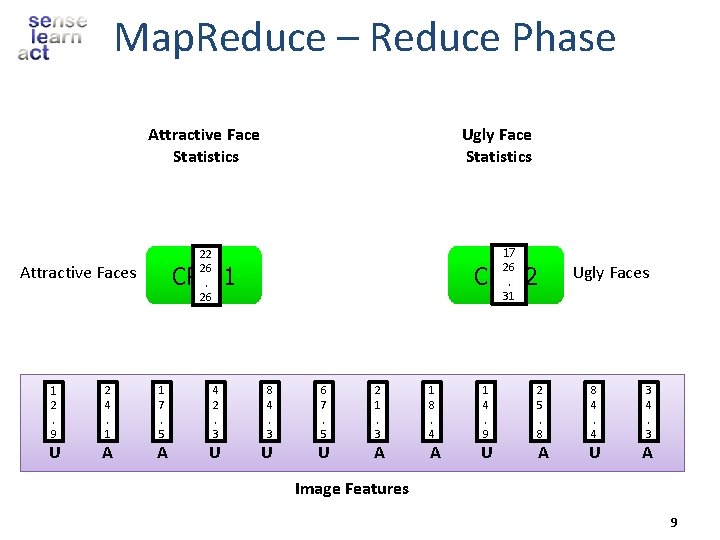

MapReduce – Reduce Phase: The image features are labeled attractive (A) or ugly (U). CPU 1 aggregates the attractive faces into attractive face statistics; CPU 2 aggregates the ugly faces into ugly face statistics.
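The map and reduce phases above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `extract_feature`, `reduce_stats`, and `map_reduce` are hypothetical names, the "feature" is simply the mean pixel value, and a thread pool stands in for the four CPUs on the slides.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def extract_feature(image):
    """Map step (hypothetical): compute one feature per image, independently."""
    label, pixels = image
    return label, sum(pixels) / len(pixels)


def reduce_stats(mapped):
    """Reduce step: group features by label and compute per-label statistics."""
    groups = defaultdict(list)
    for label, feature in mapped:
        groups[label].append(feature)
    return {
        label: {"count": len(feats), "mean": sum(feats) / len(feats)}
        for label, feats in groups.items()
    }


def map_reduce(images, workers=4):
    # The map phase needs no communication between workers.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(extract_feature, images))
    return reduce_stats(mapped)
```

For example, `map_reduce([("A", [1, 3]), ("U", [2, 2]), ("A", [4, 6])])` groups two "A" images and one "U" image and reports a count and mean feature for each label.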

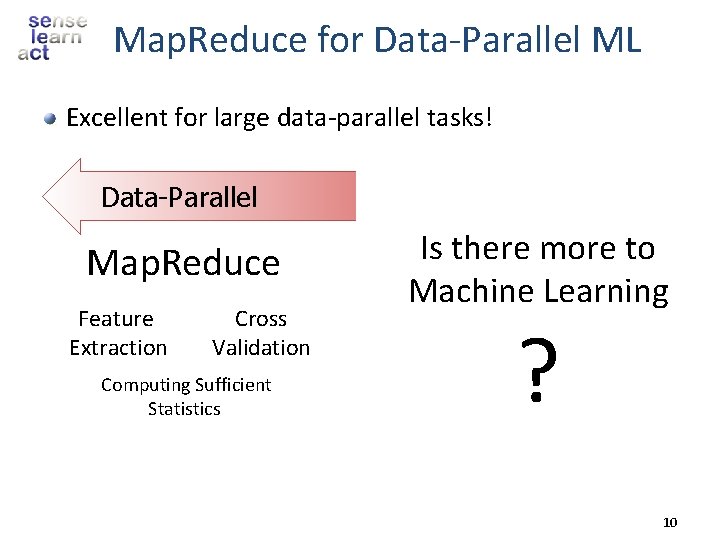

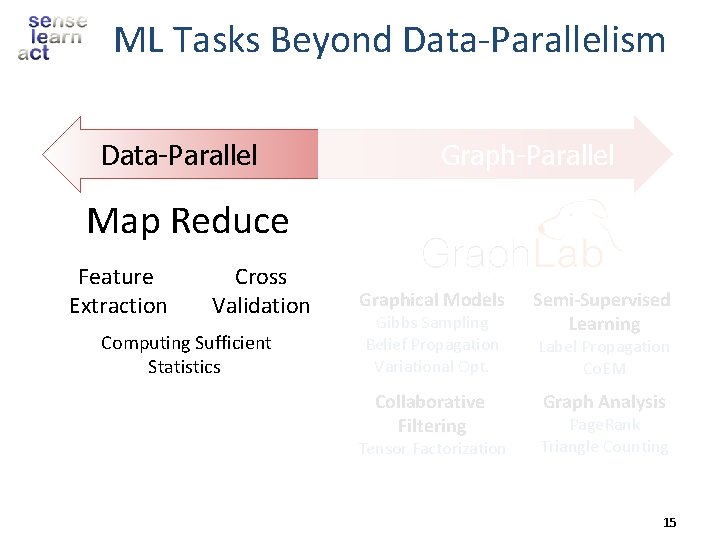

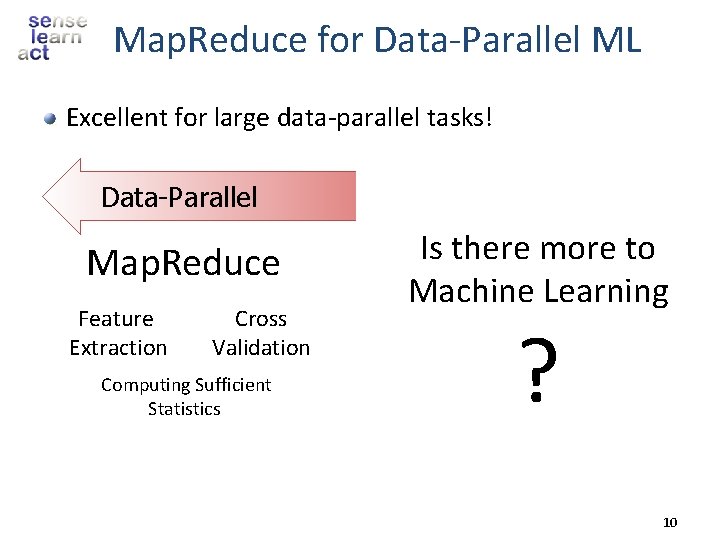

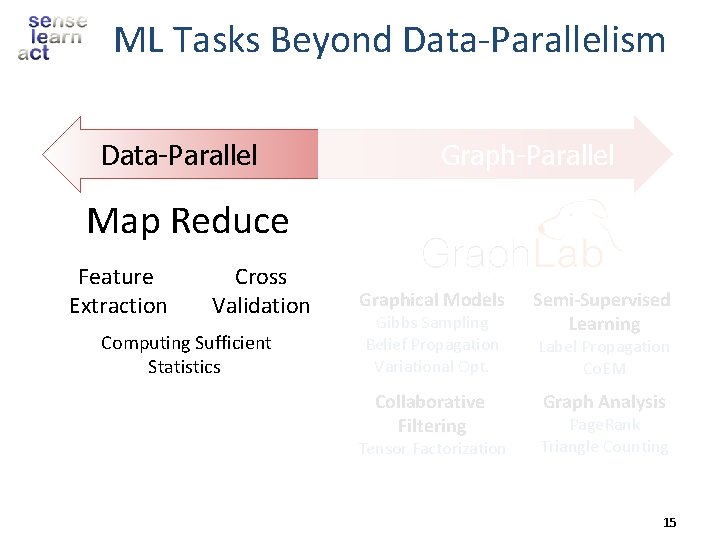

MapReduce for Data-Parallel ML: Excellent for large data-parallel tasks! Data-Parallel (MapReduce): feature extraction, cross validation, computing sufficient statistics. Is there more to machine learning? Graph-Parallel: graphical models (Gibbs sampling, belief propagation, variational opt.); collaborative filtering (tensor factorization); semi-supervised learning (label propagation, CoEM); graph analysis (PageRank, triangle counting).

Exploit Dependencies

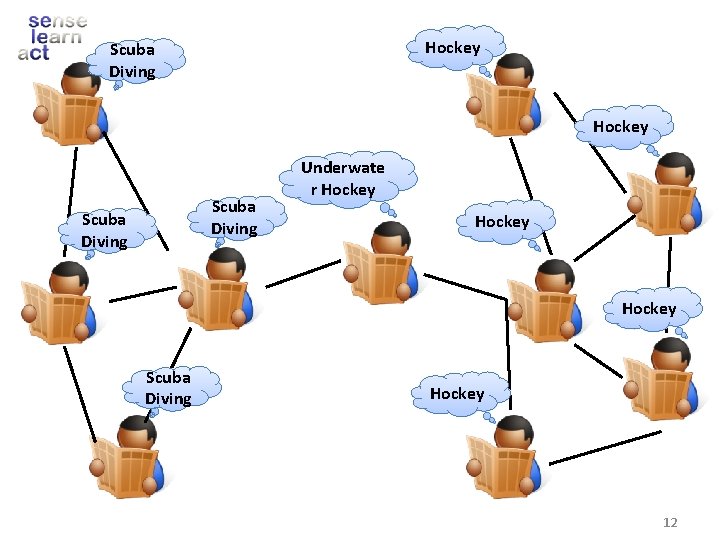

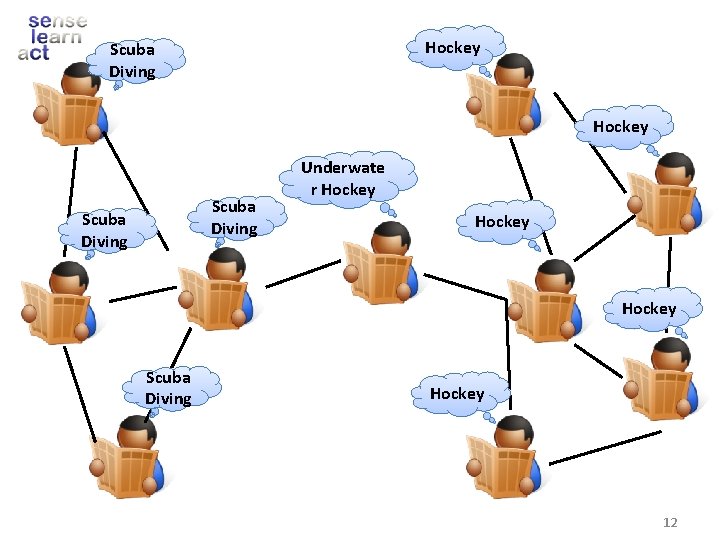

[Figure: people in a social network labeled with interests: Hockey, Scuba Diving, Underwater Hockey.]

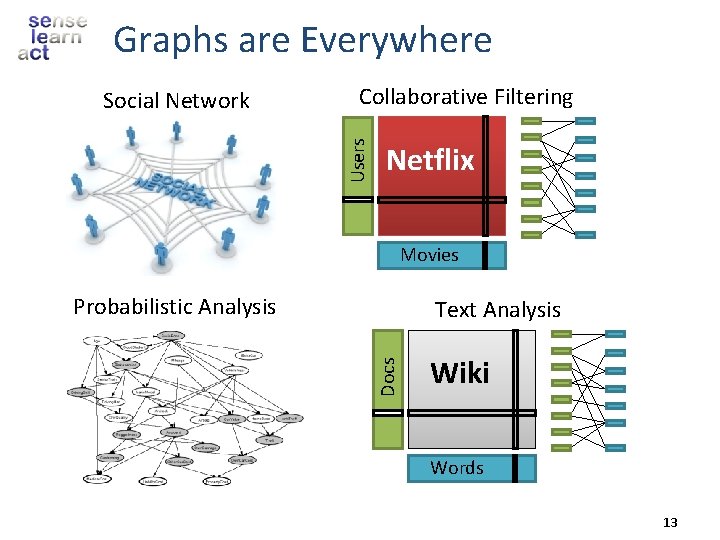

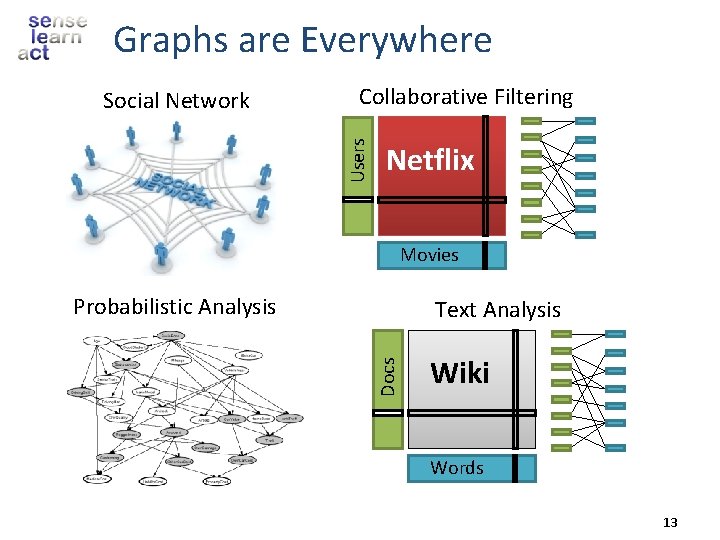

Graphs are Everywhere: social network; collaborative filtering (Netflix: users and movies); text analysis (Wiki: docs and words); probabilistic analysis.

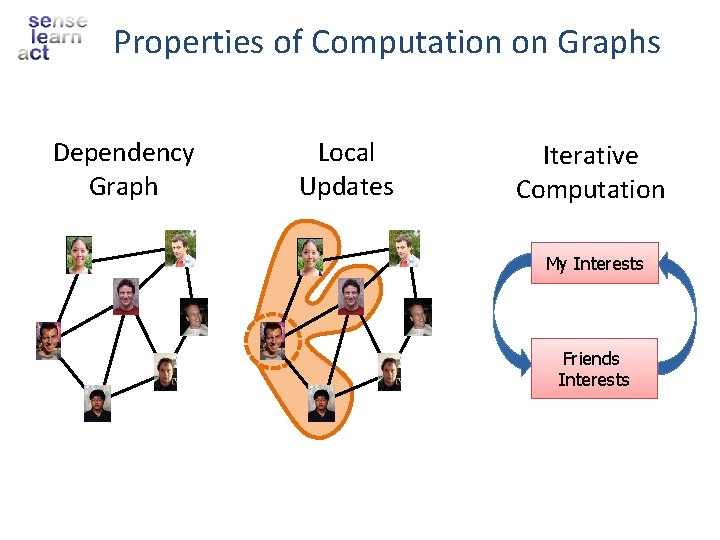

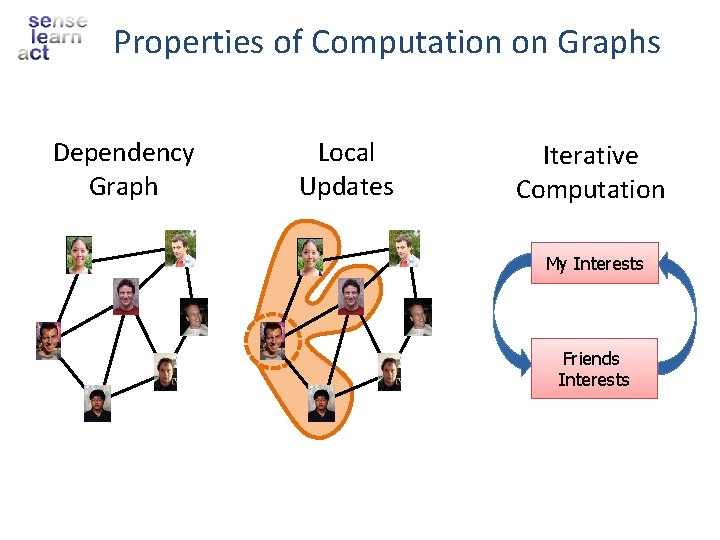

Properties of Computation on Graphs: dependency graph; local updates (my interests, friends' interests); iterative computation.

ML Tasks Beyond Data-Parallelism. Data-Parallel (MapReduce): feature extraction, cross validation, computing sufficient statistics. Graph-Parallel: graphical models (Gibbs sampling, belief propagation, variational opt.); collaborative filtering (tensor factorization); semi-supervised learning (label propagation, CoEM); graph analysis (PageRank, triangle counting).

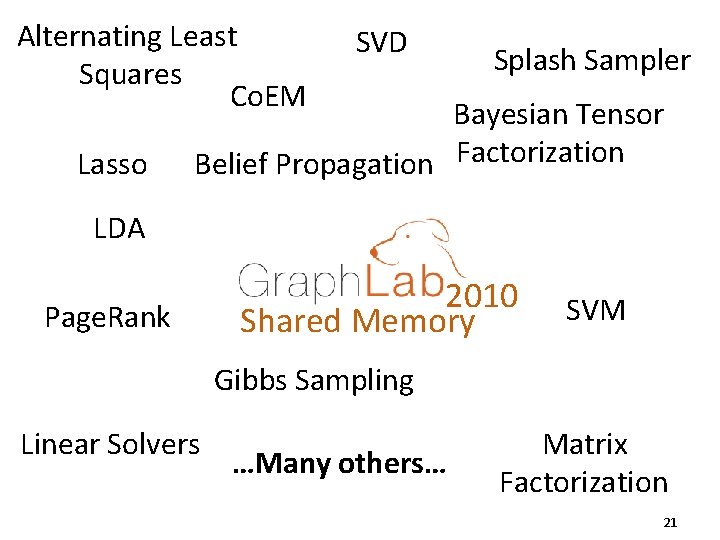

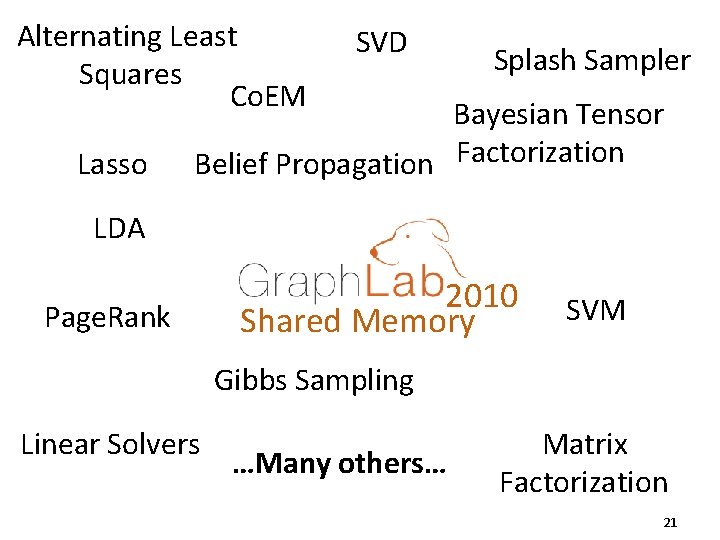

2010 Shared Memory: Alternating Least Squares, CoEM, Lasso, SVD, Splash Sampler, Bayesian Tensor Factorization, Belief Propagation, LDA, PageRank, SVM, Gibbs Sampling, Linear Solvers, Matrix Factorization, …many others…

Limited CPU power. Limited memory. Limited scalability.

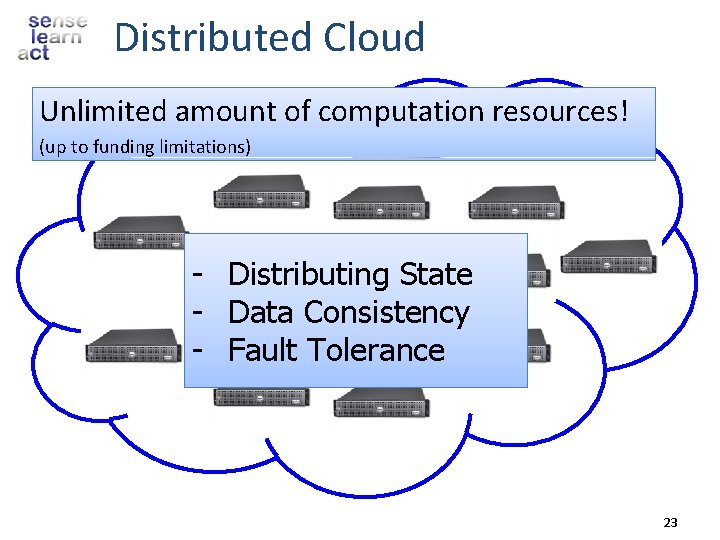

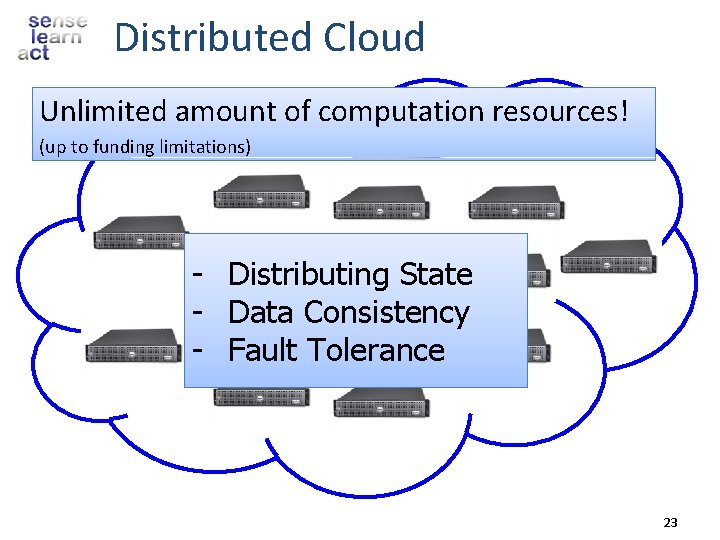

Distributed Cloud: Unlimited amount of computation resources! (up to funding limitations)
- Distributing State
- Data Consistency
- Fault Tolerance

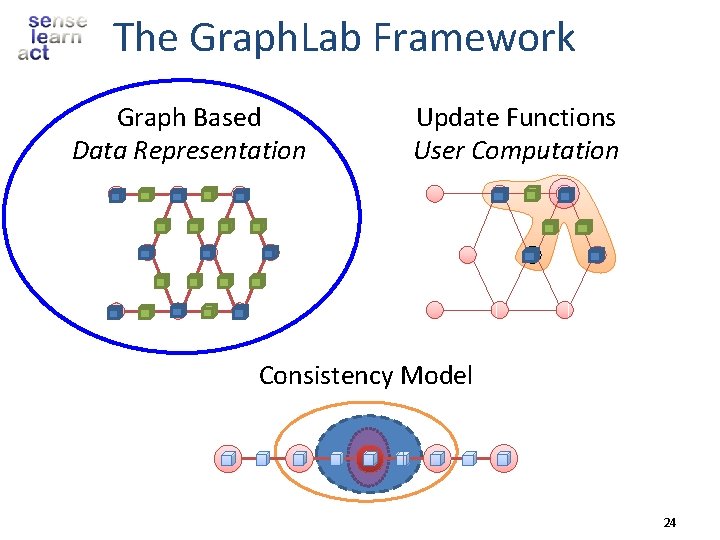

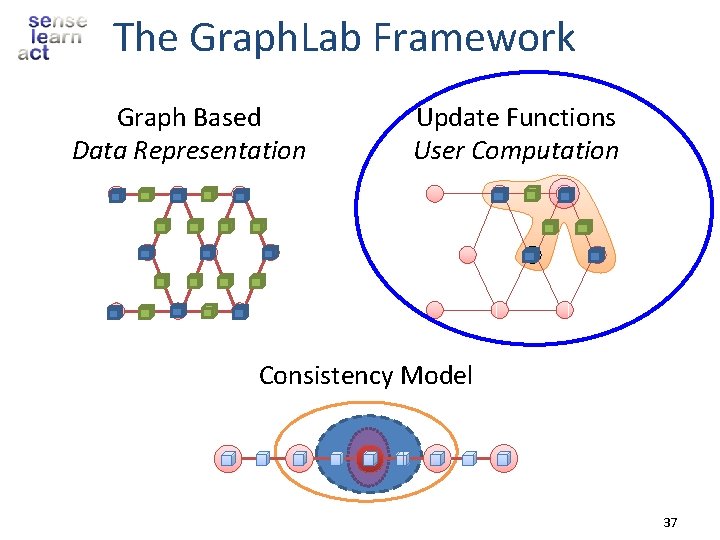

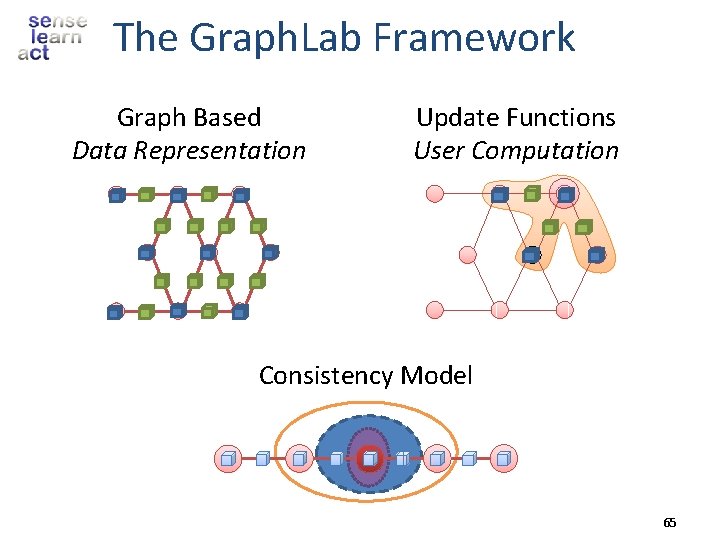

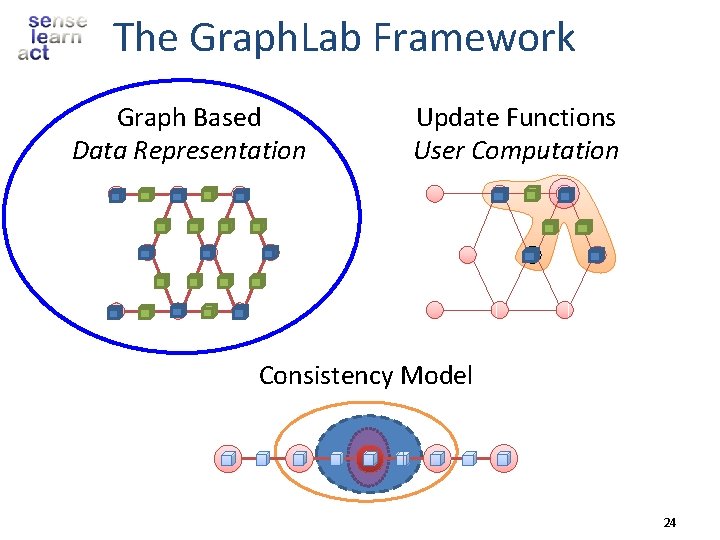

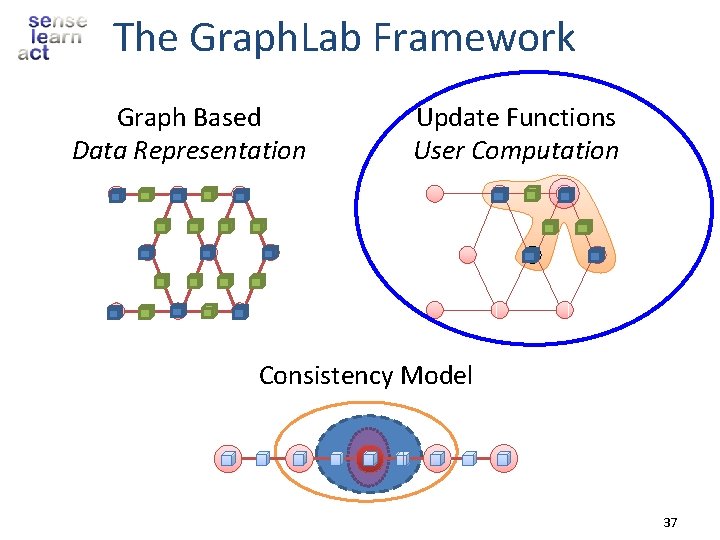

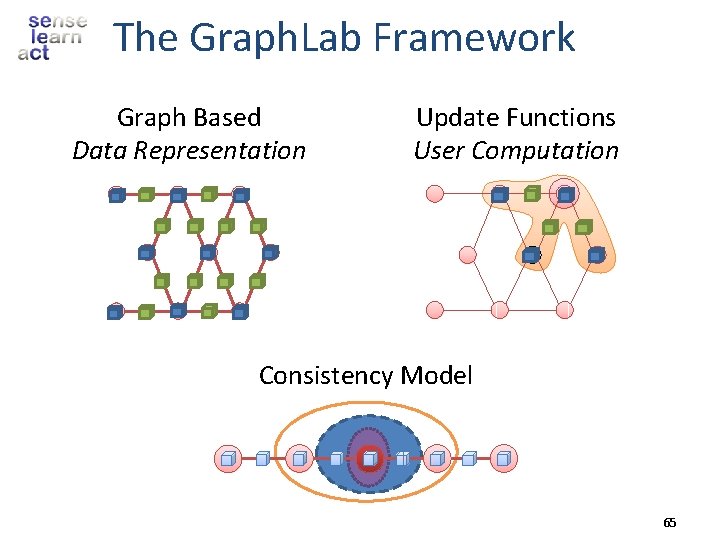

The GraphLab Framework: Graph-Based Data Representation; Update Functions (User Computation); Consistency Model.

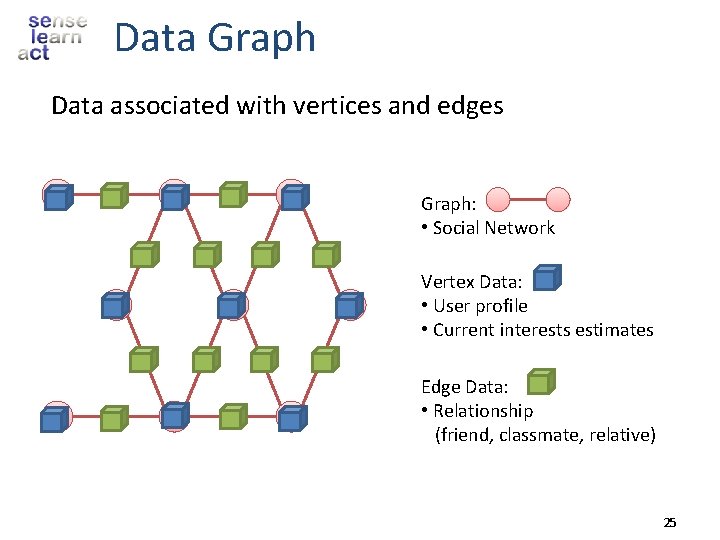

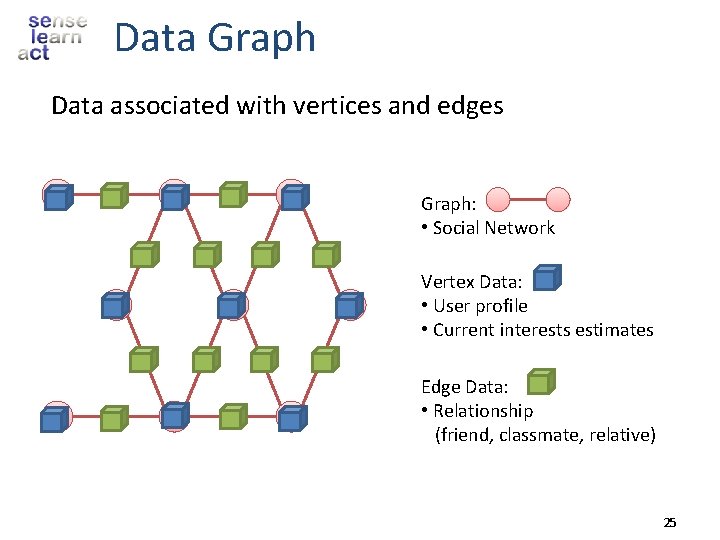

Data Graph: Data associated with vertices and edges. Graph: • Social network. Vertex Data: • User profile • Current interest estimates. Edge Data: • Relationship (friend, classmate, relative).

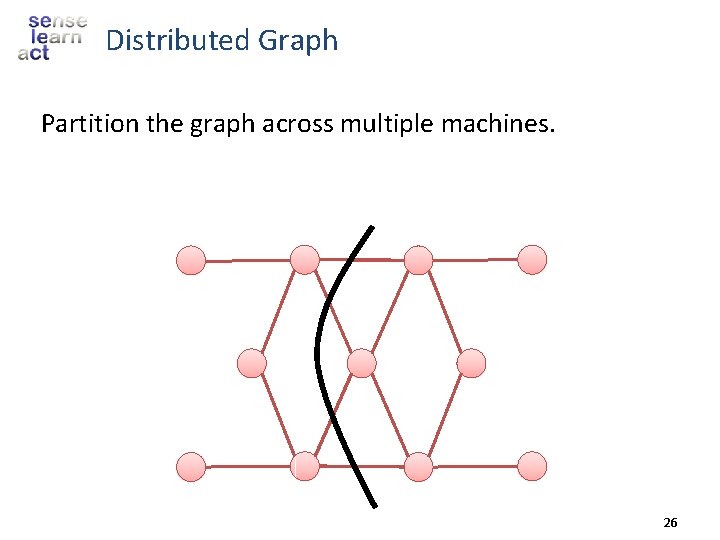

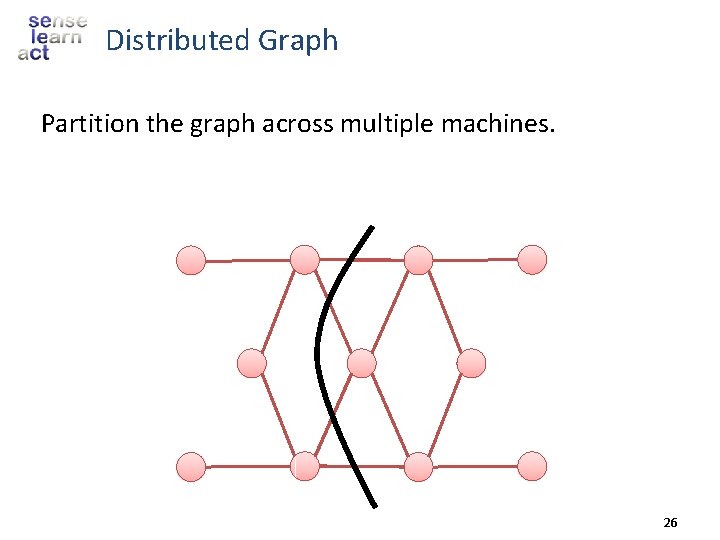

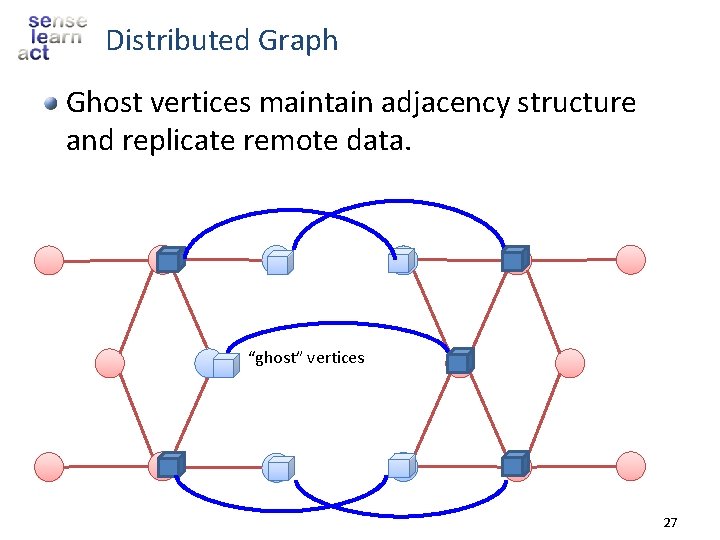

Distributed Graph: Partition the graph across multiple machines.

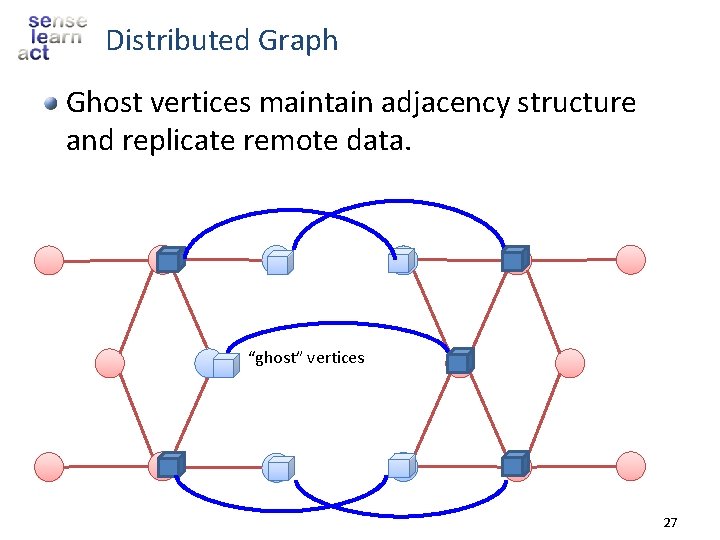

Distributed Graph: “Ghost” vertices maintain adjacency structure and replicate remote data.

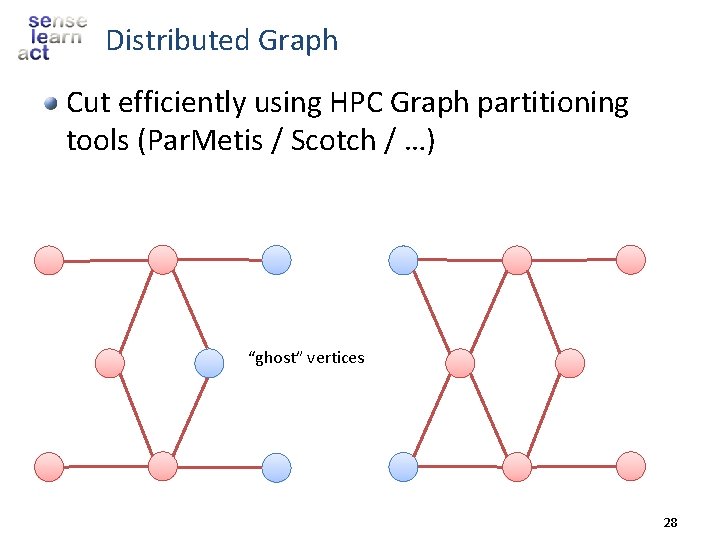

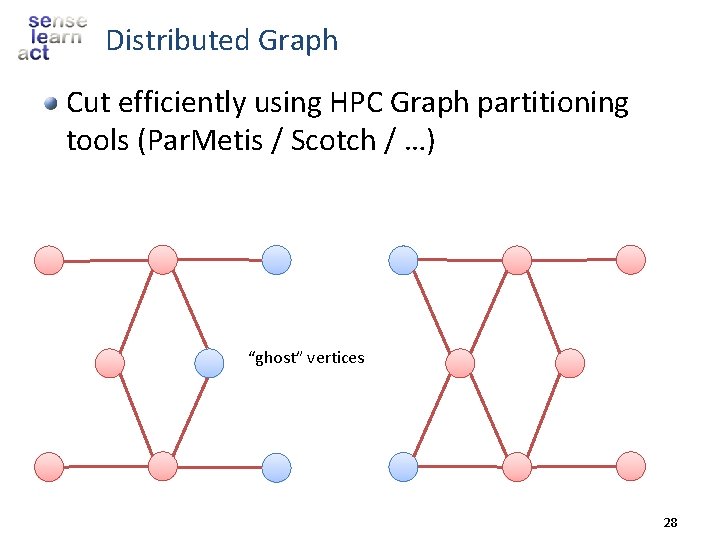

Distributed Graph: Cut efficiently using HPC graph partitioning tools (ParMetis / Scotch / …).

The GraphLab Framework: Graph-Based Data Representation; Update Functions (User Computation); Consistency Model.

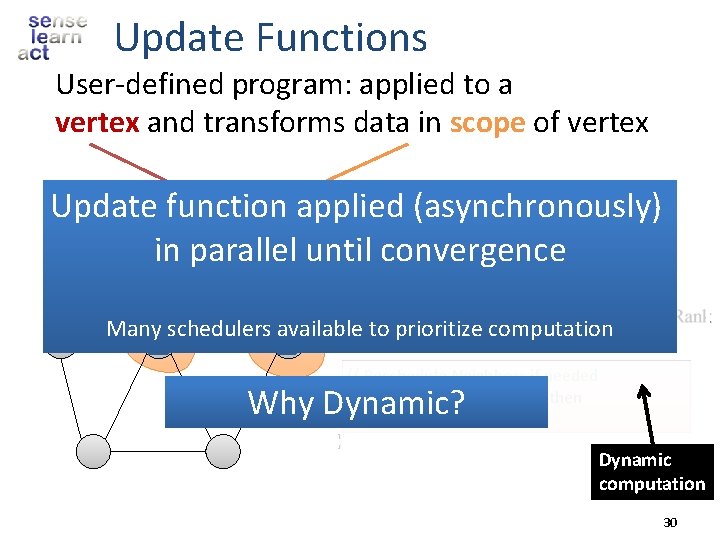

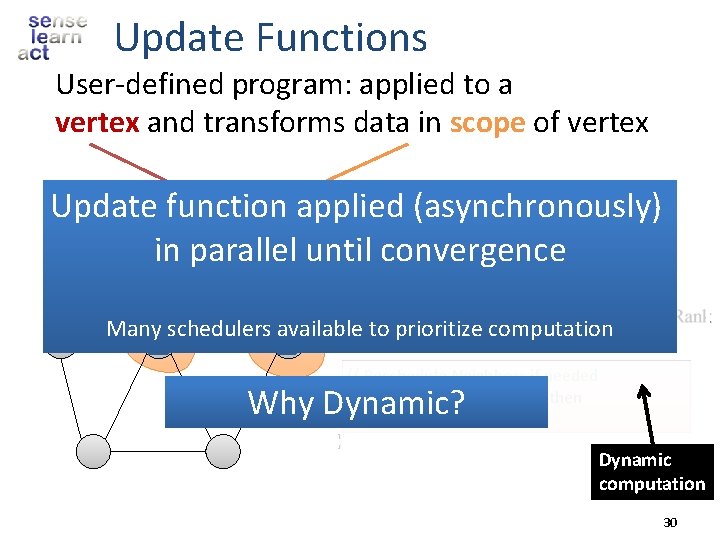

Update Functions: A user-defined program that is applied to a vertex and transforms the data in the scope of that vertex.

Pagerank(scope) {
  // Update the current vertex data
  // Reschedule neighbors if needed
  if vertex.PageRank changes then reschedule_all_neighbors;
}

The update function is applied (asynchronously) in parallel until convergence. Many schedulers are available to prioritize computation. Dynamic computation: why dynamic?
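To make the dynamic-scheduling idea concrete, here is a minimal single-threaded Python sketch. It is not the GraphLab API: `run_dynamic_pagerank`, the FIFO queue, the damping factor 0.85, and the unnormalized PageRank formula are all assumptions chosen for illustration. A vertex is rescheduled only when one of its in-neighbors changed by more than `tol`.

```python
from collections import deque


def run_dynamic_pagerank(out_edges, damping=0.85, tol=1e-6):
    """out_edges maps each vertex to the list of vertices it links to.

    Every link target must also appear as a key of out_edges.
    Returns (rank, number_of_updates_executed).
    """
    in_edges = {v: [] for v in out_edges}
    for u, targets in out_edges.items():
        for v in targets:
            in_edges[v].append(u)

    rank = {v: 1.0 for v in out_edges}
    queue = deque(out_edges)   # the scheduler: initially every vertex
    queued = set(out_edges)
    updates = 0

    while queue:               # repeat until the scheduler is empty
        v = queue.popleft()
        queued.discard(v)
        # Update function: recompute v from the data in its scope.
        new_rank = (1 - damping) + damping * sum(
            rank[u] / len(out_edges[u]) for u in in_edges[v]
        )
        changed = abs(new_rank - rank[v]) > tol
        rank[v] = new_rank
        updates += 1
        # Reschedule only the vertices whose input just changed.
        if changed:
            for w in out_edges[v]:
                if w not in queued:
                    queue.append(w)
                    queued.add(w)
    return rank, updates
```

On a graph that is already at its fixed point (for instance a three-vertex cycle with all ranks equal to 1.0), each vertex is updated once and nothing is rescheduled, which is the saving that dynamic computation is after.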

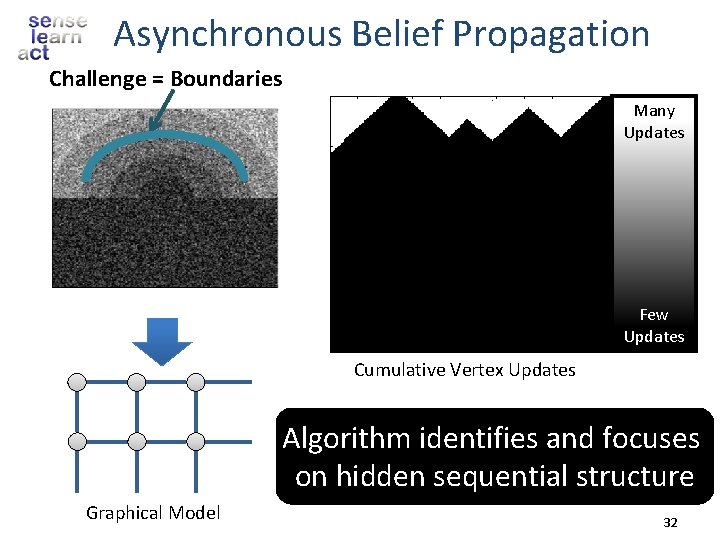

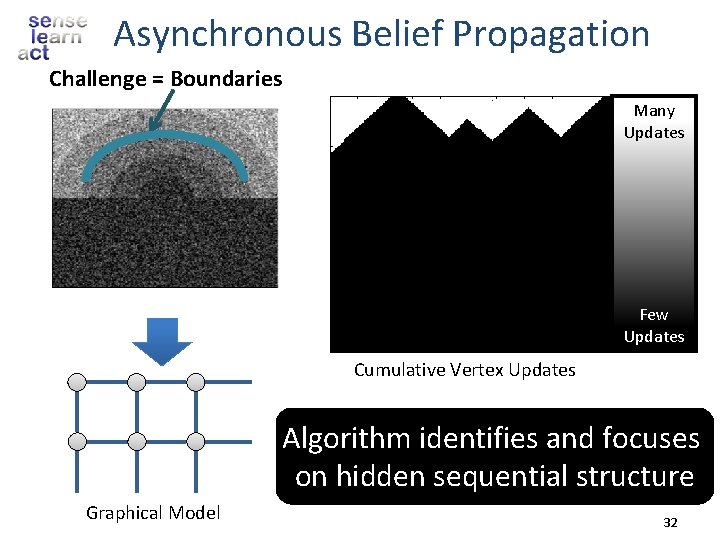

Asynchronous Belief Propagation: Challenge = boundaries. The algorithm identifies and focuses on hidden sequential structure. [Figure: graphical model and its cumulative vertex updates, ranging from few updates to many updates.]

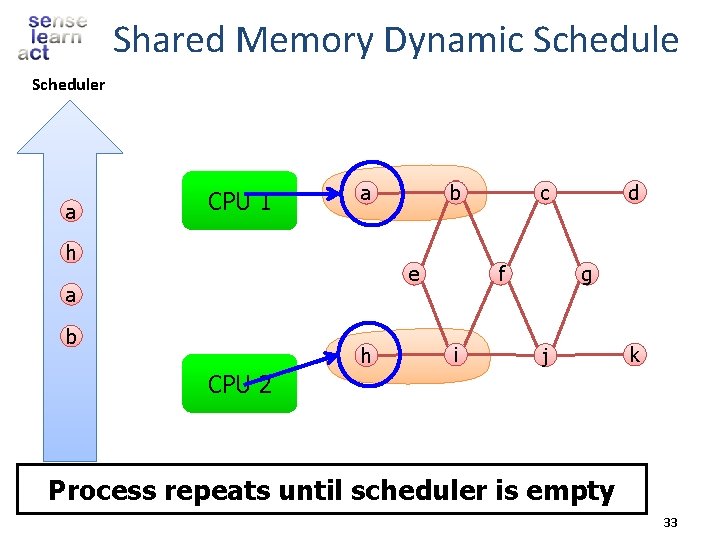

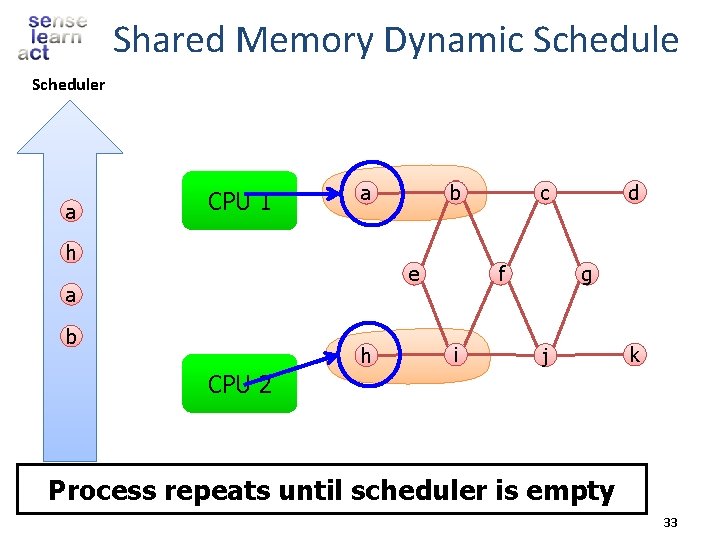

Shared Memory Dynamic Scheduler: CPU 1 and CPU 2 take scheduled vertices (a through k in the figure) from a shared scheduler. The process repeats until the scheduler is empty.

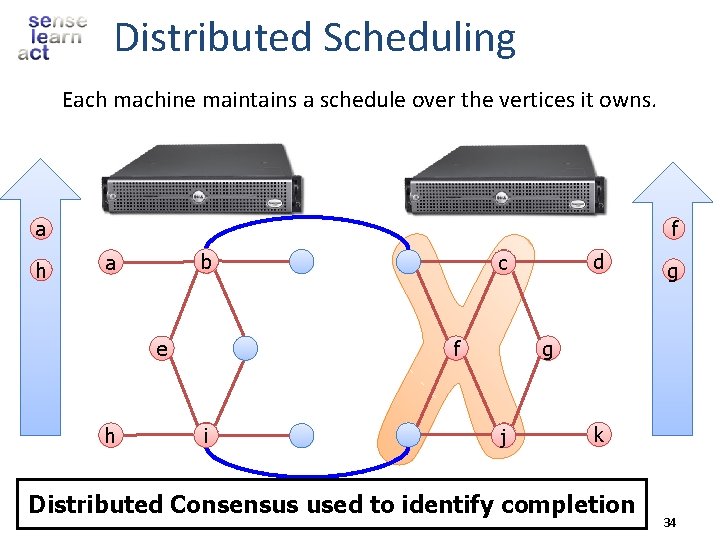

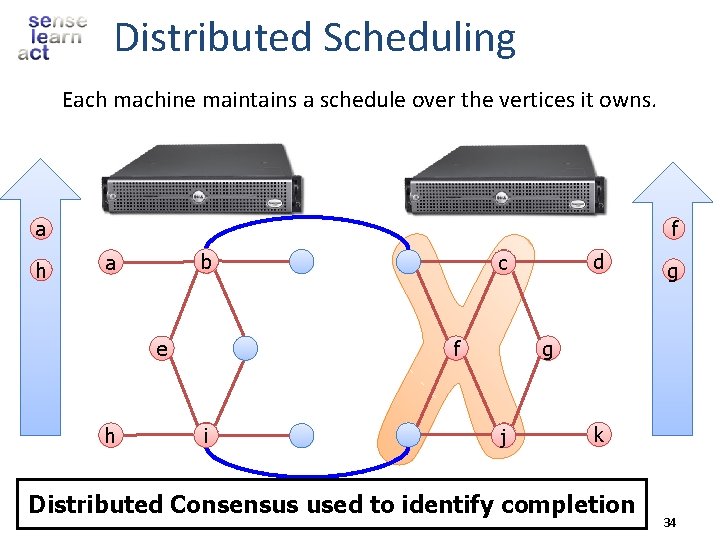

Distributed Scheduling: Each machine maintains a schedule over the vertices it owns. Distributed consensus is used to identify completion.

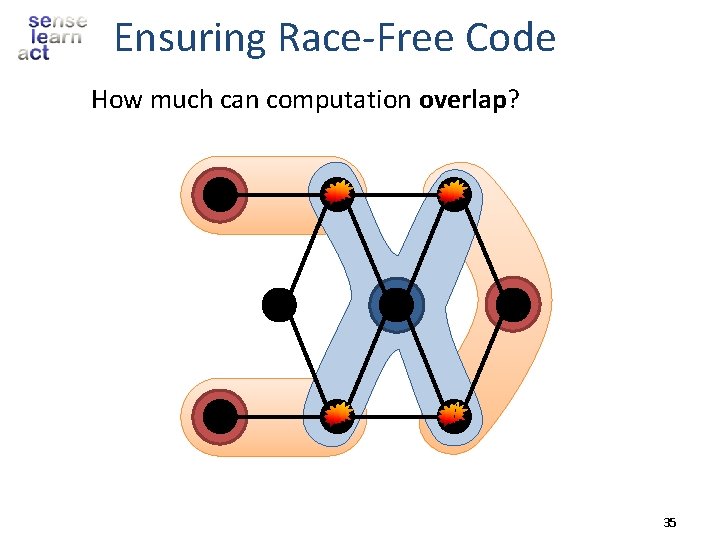

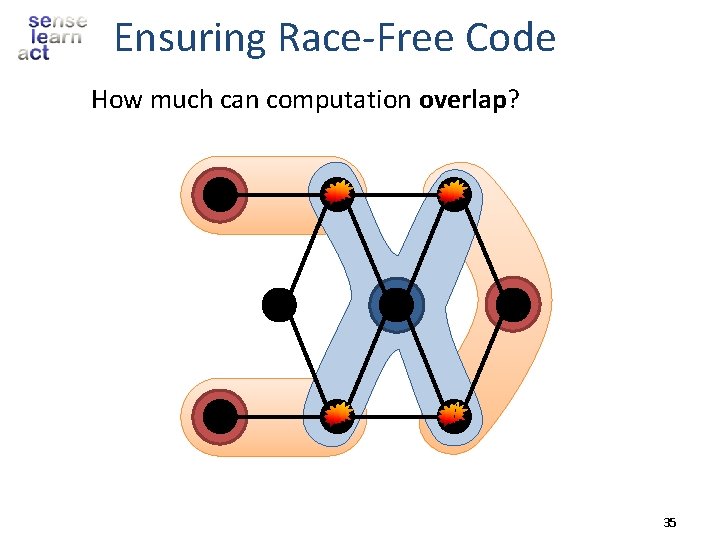

Ensuring Race-Free Code: How much can computation overlap?

The GraphLab Framework: Graph-Based Data Representation; Update Functions (User Computation); Consistency Model.

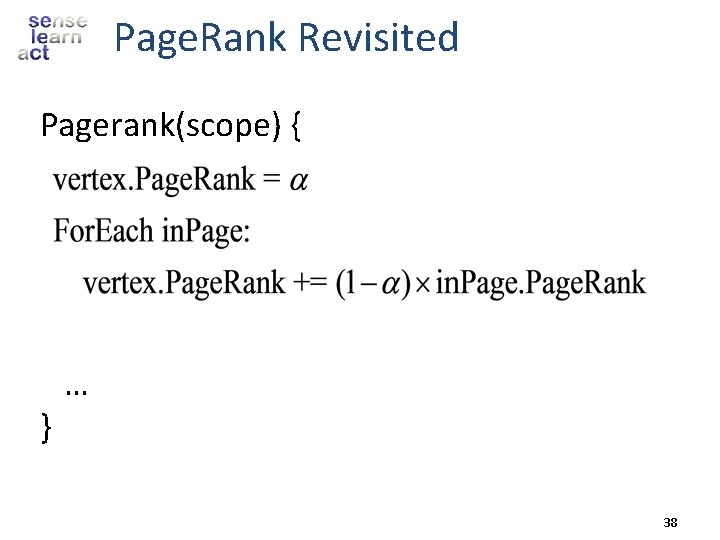

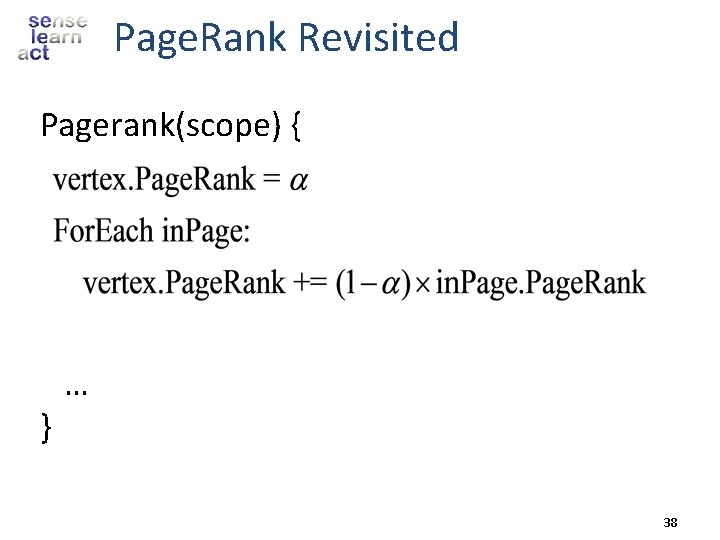

PageRank Revisited: Pagerank(scope) { … }

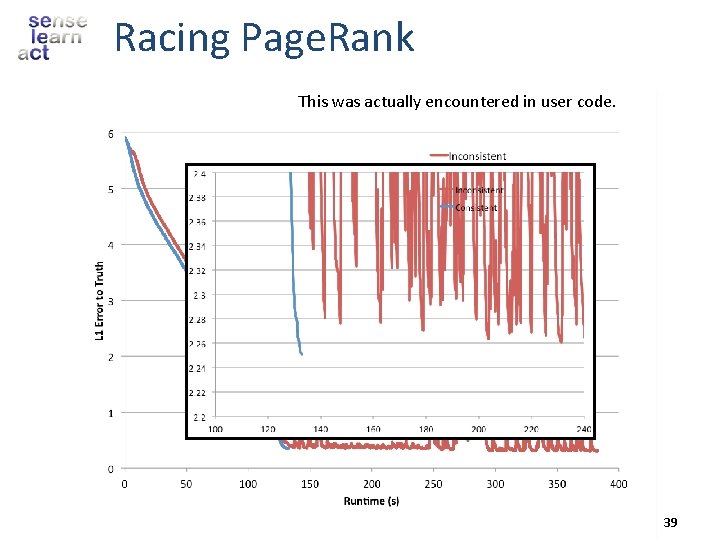

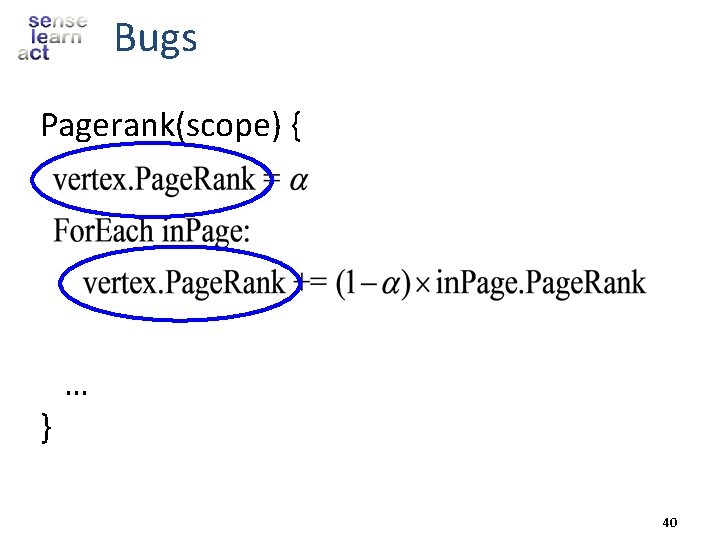

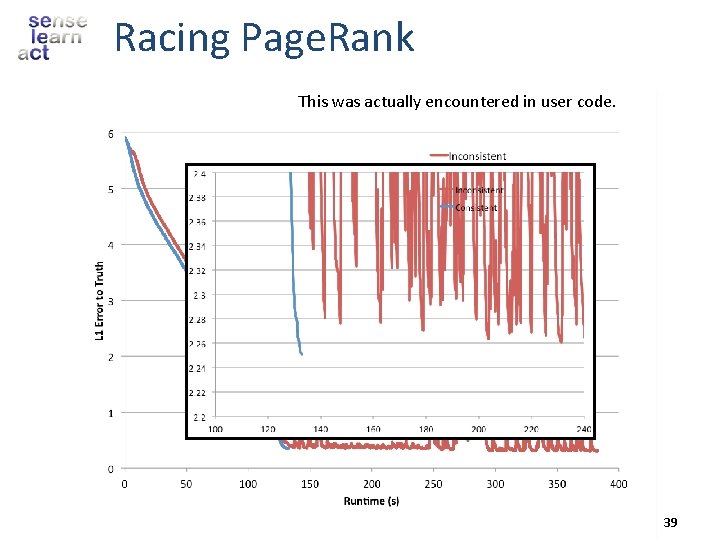

Racing PageRank: This was actually encountered in user code.

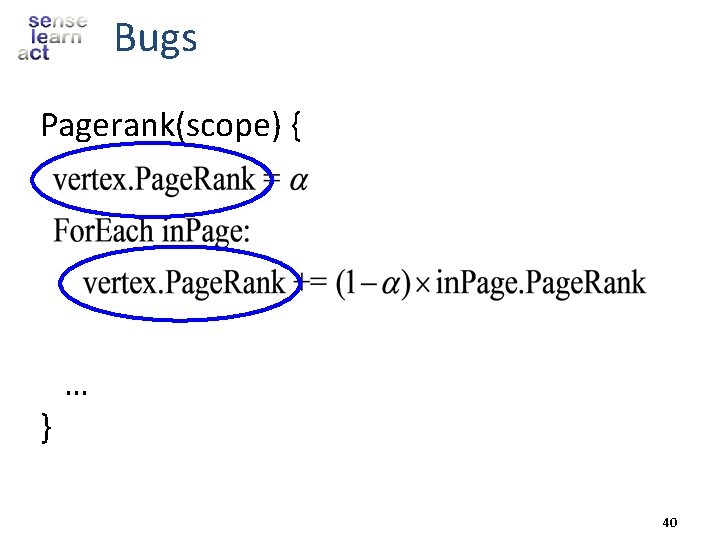

Bugs: Pagerank(scope) { … }

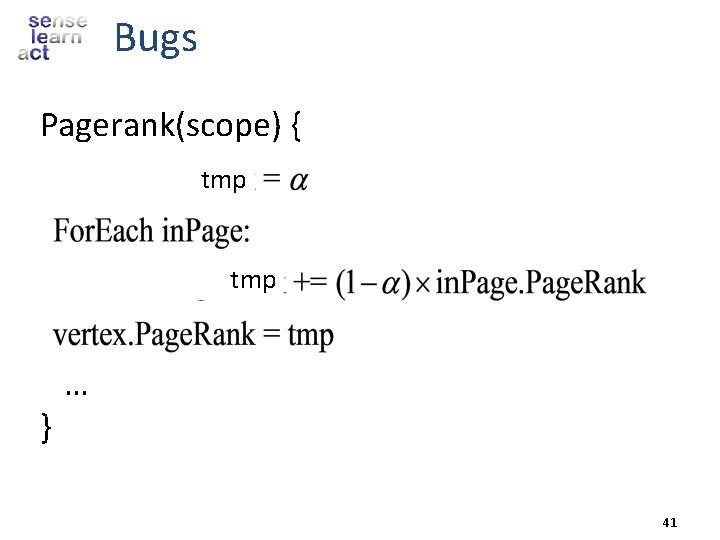

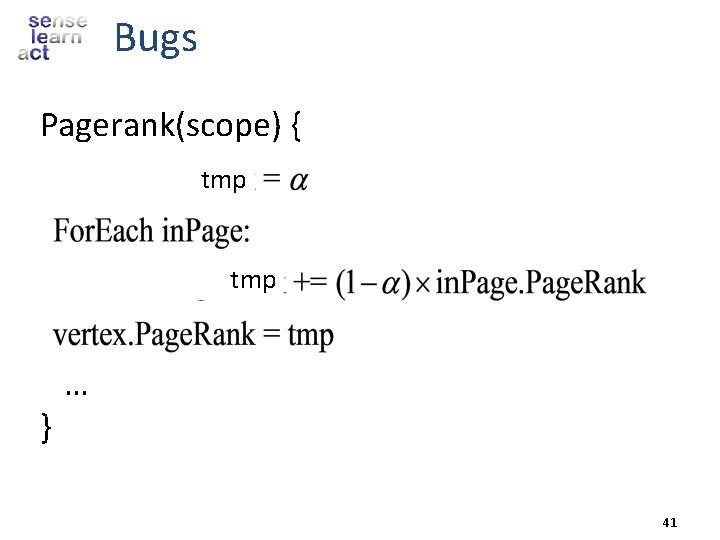

Bugs: Pagerank(scope) { tmp … }

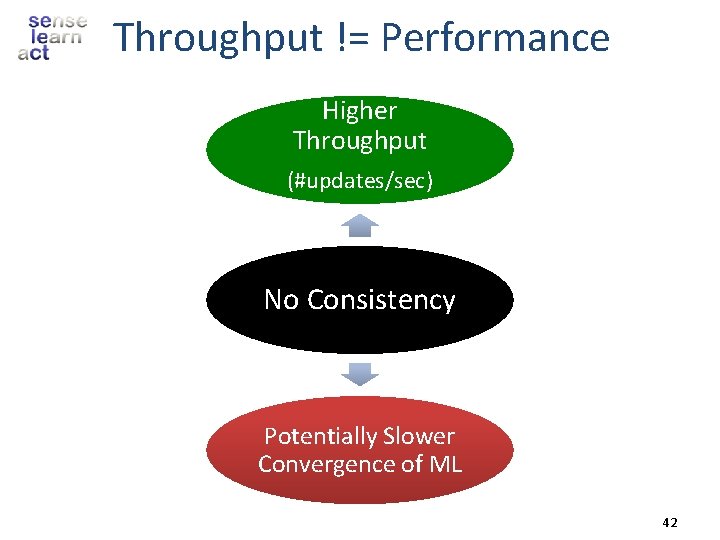

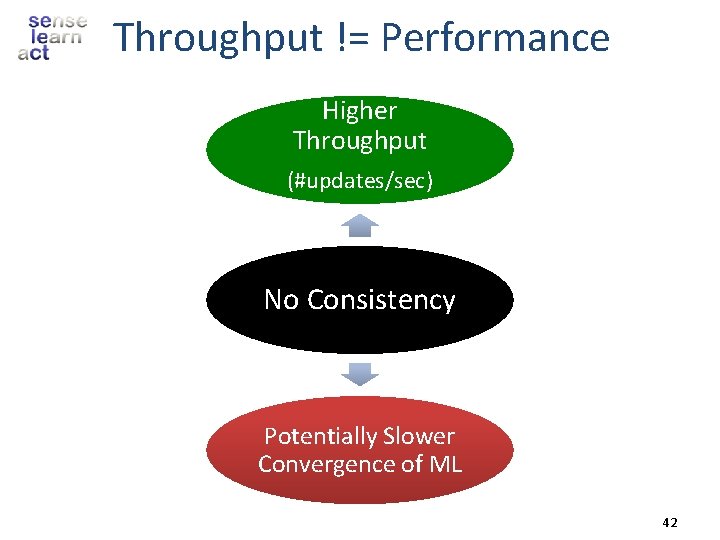

Throughput != Performance: No consistency gives higher throughput (#updates/sec) but potentially slower convergence of ML.

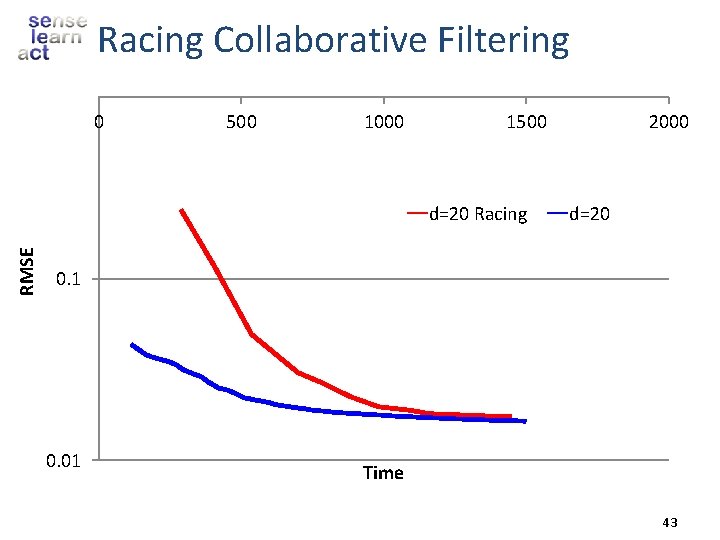

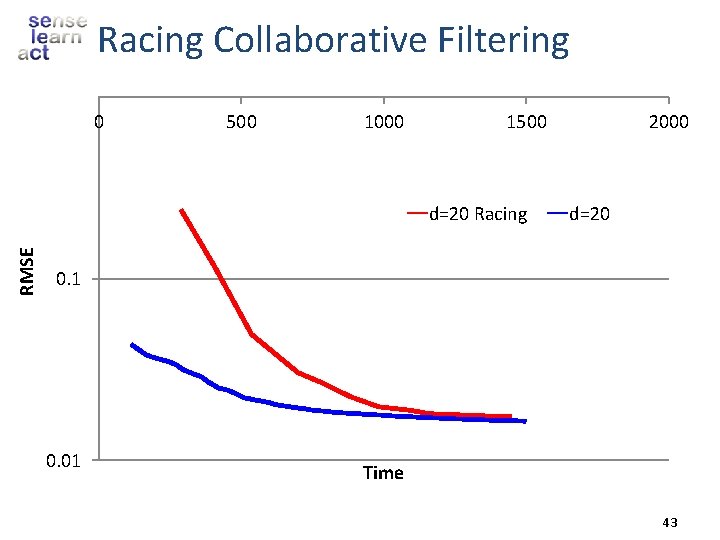

Racing Collaborative Filtering. [Figure: RMSE vs. time; curves: d=20 and d=20 Racing.]

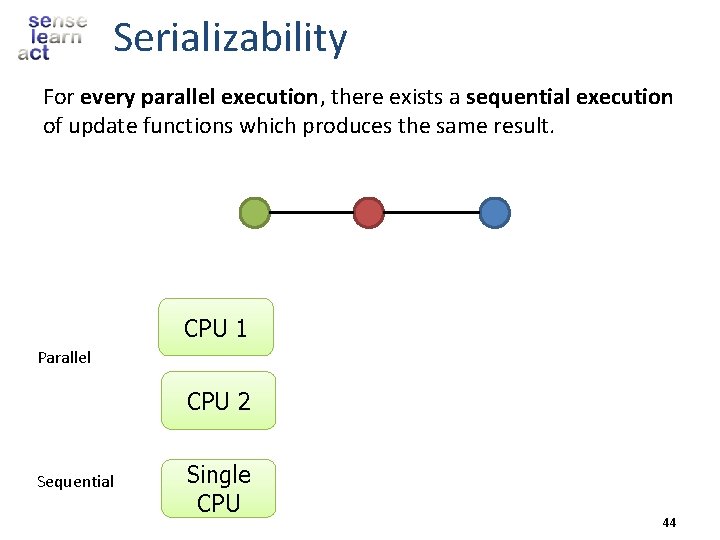

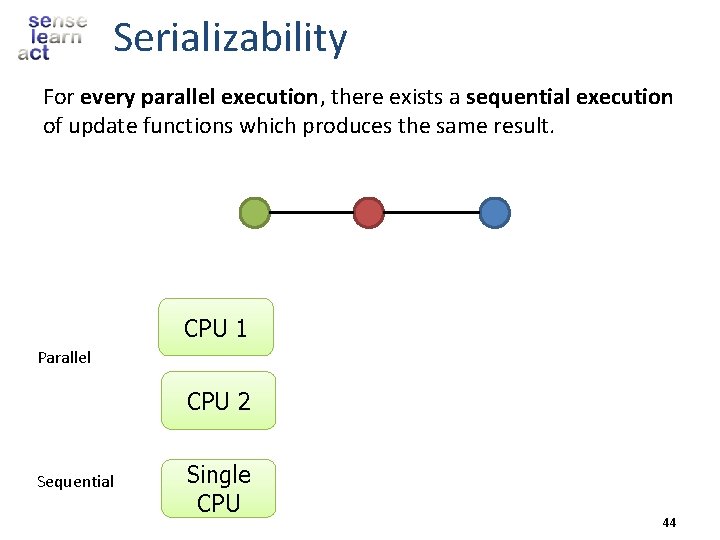

Serializability: For every parallel execution, there exists a sequential execution of update functions which produces the same result. [Figure: timeline of a parallel execution on CPU 1 and CPU 2 next to a sequential execution on a single CPU.]

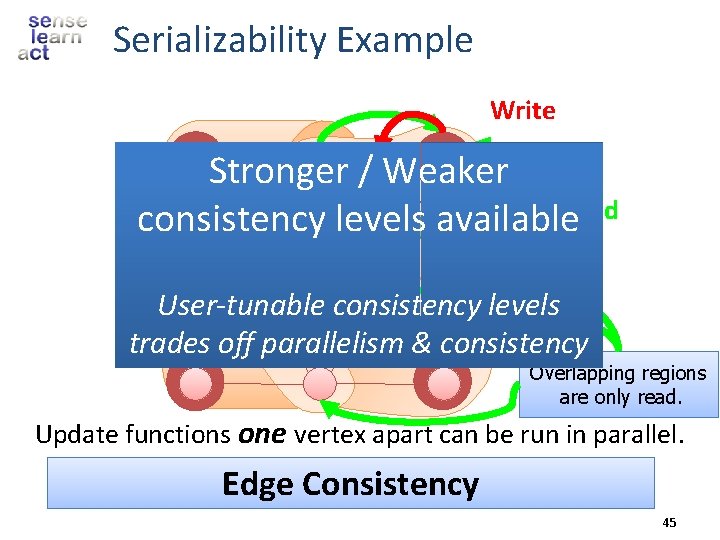

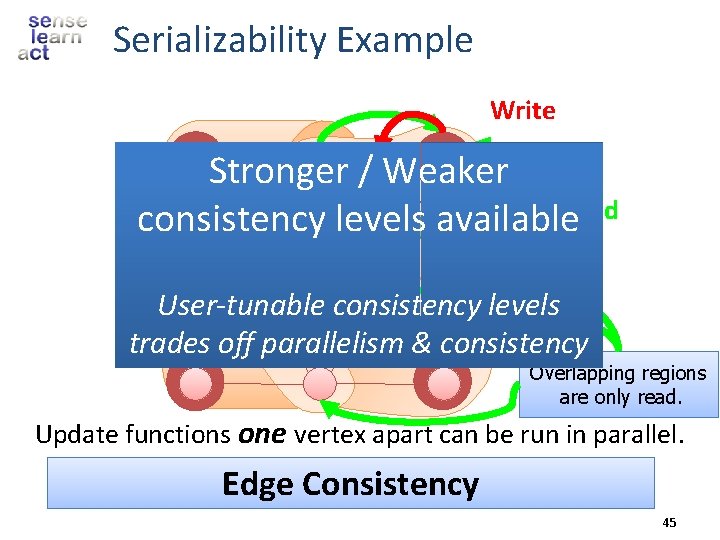

Serializability Example: Edge Consistency. Overlapping regions are only read, so update functions one vertex apart can be run in parallel. Stronger and weaker consistency levels are available: user-tunable consistency levels trade off parallelism and consistency. [Figure legend: Write, Read.]

Distributed Consistency: Solution 1: Graph Coloring. Solution 2: Distributed Locking.

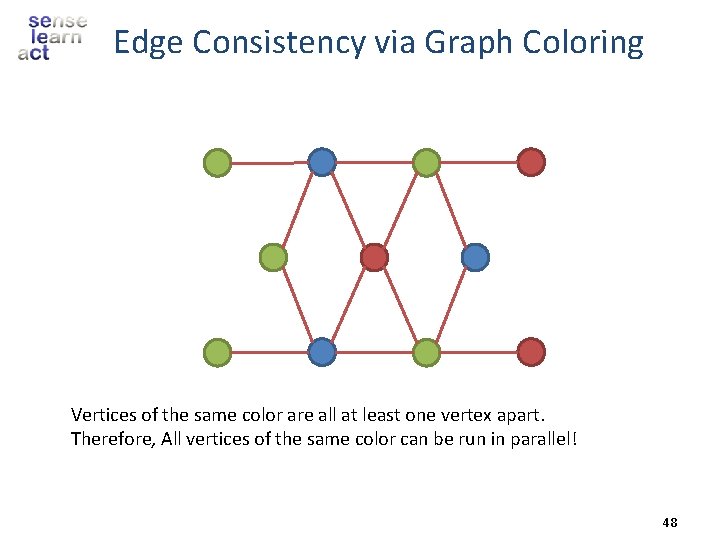

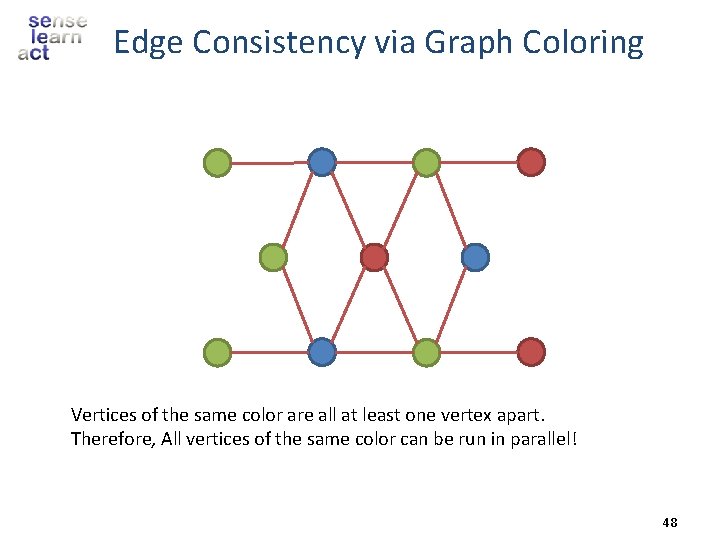

Edge Consistency via Graph Coloring: Vertices of the same color are all at least one vertex apart. Therefore, all vertices of the same color can be run in parallel!
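The coloring property can be illustrated with a simple greedy heuristic. The slides do not say how the coloring is obtained, so `greedy_coloring` below is an assumed stand-in rather than the method used by the authors; `is_valid_coloring` checks the property the slide relies on, namely that no two adjacent vertices share a color.

```python
def greedy_coloring(neighbors):
    """neighbors maps each vertex to its adjacent vertices (undirected graph).

    Assigns each vertex the smallest color not used by an already-colored
    neighbor. Vertices are visited in sorted order for determinism.
    """
    color = {}
    for v in sorted(neighbors):
        used = {color[u] for u in neighbors[v] if u in color}
        c = 0
        while c in used:
            c += 1
        color[v] = c
    return color


def is_valid_coloring(neighbors, color):
    """True if no edge connects two vertices of the same color."""
    return all(color[v] != color[u] for v in neighbors for u in neighbors[v])
```

On a four-vertex ring a, b, c, d this produces two colors, so a and c can be updated together, followed by b and d.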

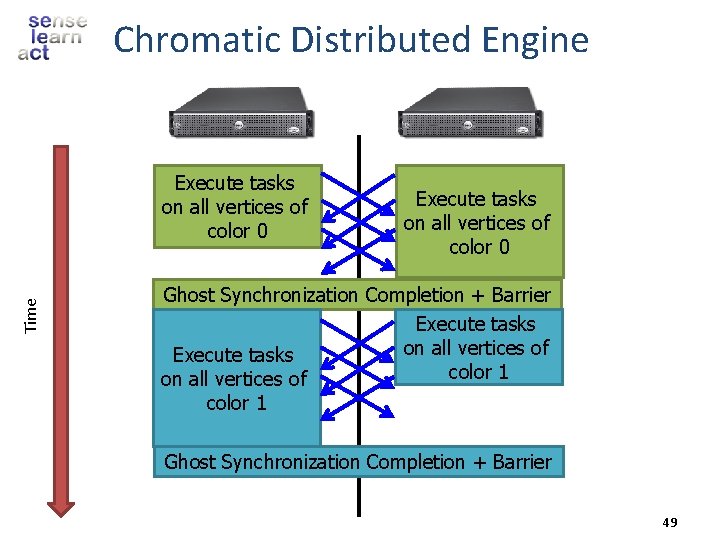

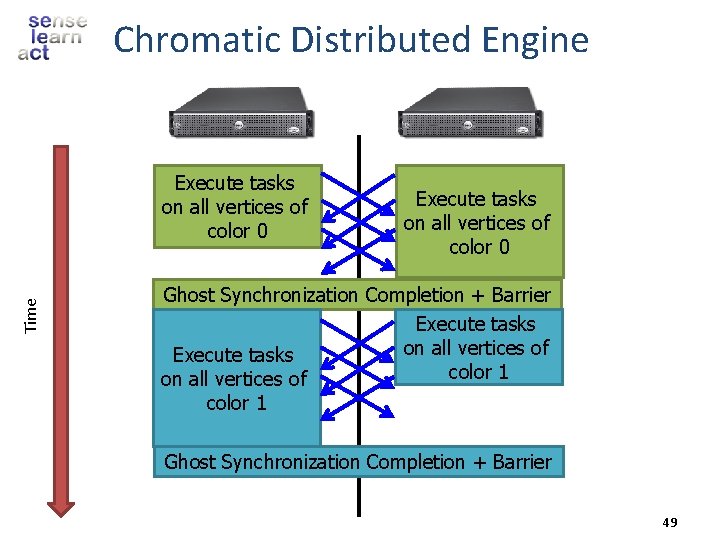

Chromatic Distributed Engine (over time, on every machine): execute tasks on all vertices of color 0; ghost synchronization, completion + barrier; execute tasks on all vertices of color 1; ghost synchronization, completion + barrier.
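A single-machine sketch of one color-by-color sweep is shown below. It assumes a valid coloring is supplied, uses a thread pool in place of multiple machines, and leaves out ghost synchronization entirely; `chromatic_sweep` and the example update function `smooth` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor


def chromatic_sweep(neighbors, color, values, update, workers=4):
    """Run `update` once on every vertex, one color class at a time."""
    by_color = {}
    for v, c in color.items():
        by_color.setdefault(c, []).append(v)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        for c in sorted(by_color):
            # Same-colored vertices are never adjacent, so each update
            # writes its own vertex and only reads other-colored ones.
            # Consuming the iterator waits for all of them: the barrier.
            list(pool.map(lambda v: update(v, neighbors, values), by_color[c]))
    return values


def smooth(v, neighbors, values):
    """Example update: average a vertex with its neighbors."""
    nbrs = neighbors[v]
    values[v] = (values[v] + sum(values[u] for u in nbrs)) / (1 + len(nbrs))
```

Because each color step finishes before the next begins, the result equals that of some sequential execution, which is the serializability guarantee the coloring is meant to provide.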

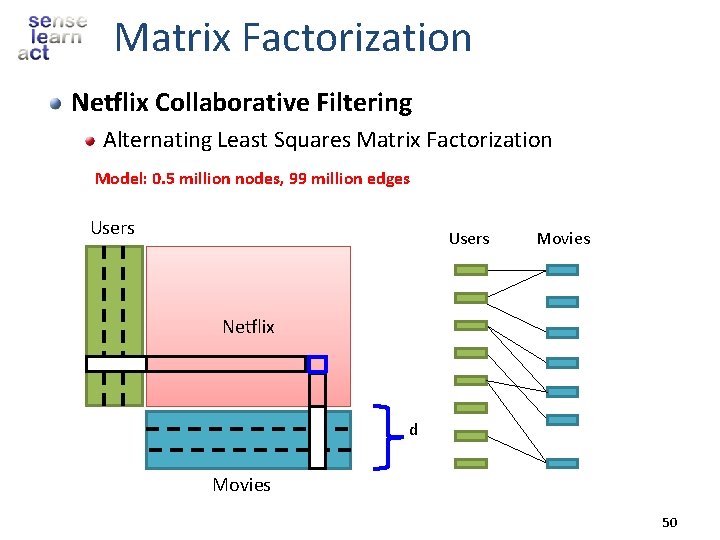

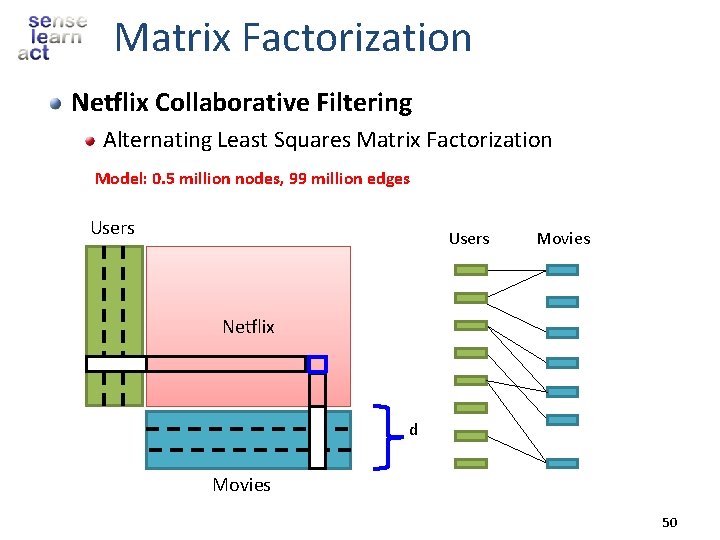

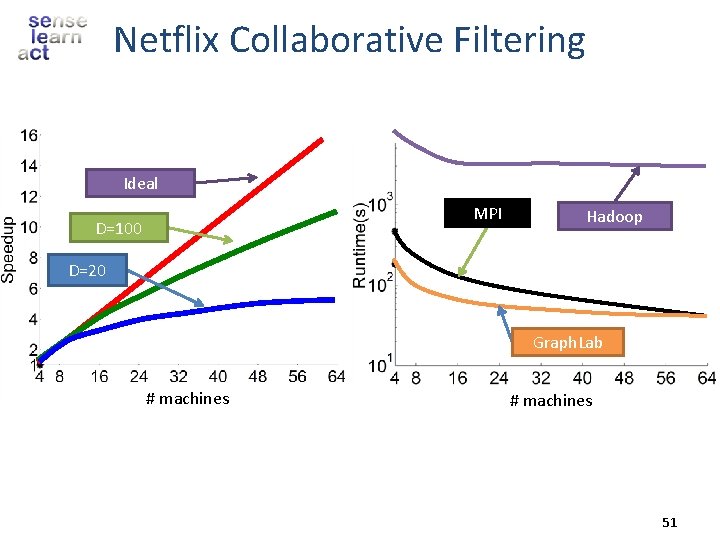

Netflix Collaborative Filtering: Alternating Least Squares matrix factorization. Model: 0.5 million nodes, 99 million edges. [Figure: the Netflix users × movies matrix factorized into user and movie factors of dimension d.]

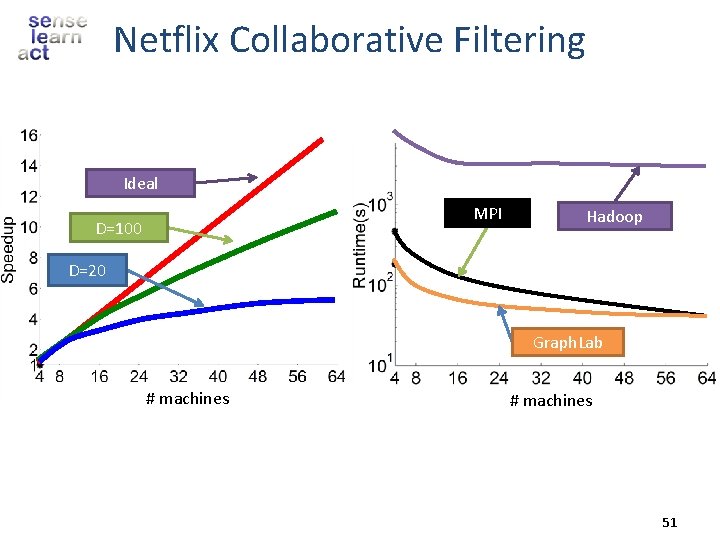

Netflix Collaborative Filtering. [Figure: results vs. # machines; labels: Ideal, D=100, D=20, MPI, Hadoop, GraphLab.]

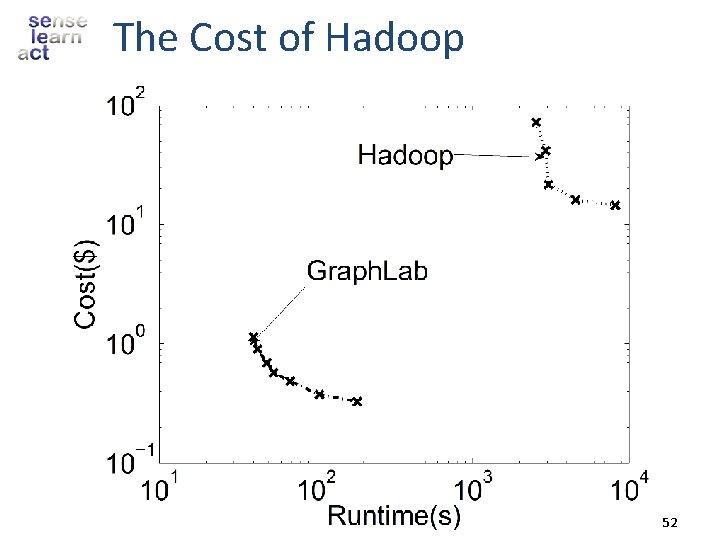

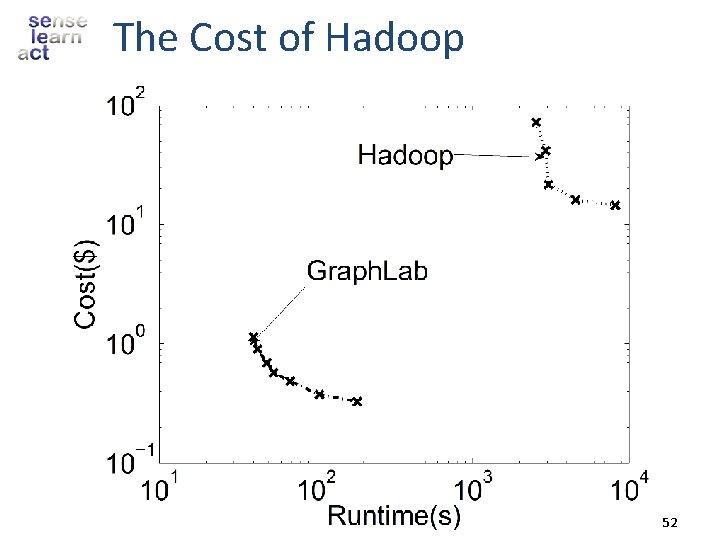

The Cost of Hadoop

CoEM (Rosie Jones, 2005): Named entity recognition task. Is “Cat” an animal? Is “Istanbul” a place? The graph links noun phrases (the cat, Australia, Istanbul) to contexts (<X> ran quickly, travelled to <X>, <X> is pleasant). Vertices: 2 million. Edges: 200 million. Hadoop: 95 cores, 7.5 hrs. GraphLab: 16 cores, 30 min. Distributed GL: 32 EC2 nodes, 80 secs (0.3% of Hadoop time).

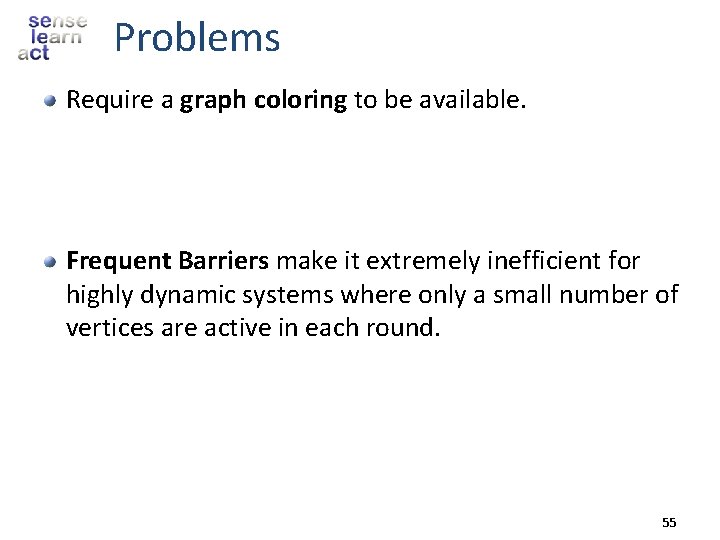

Problems: Requires a graph coloring to be available. Frequent barriers make it extremely inefficient for highly dynamic systems where only a small number of vertices are active in each round.

Distributed Consistency: Solution 1: Graph Coloring. Solution 2: Distributed Locking.

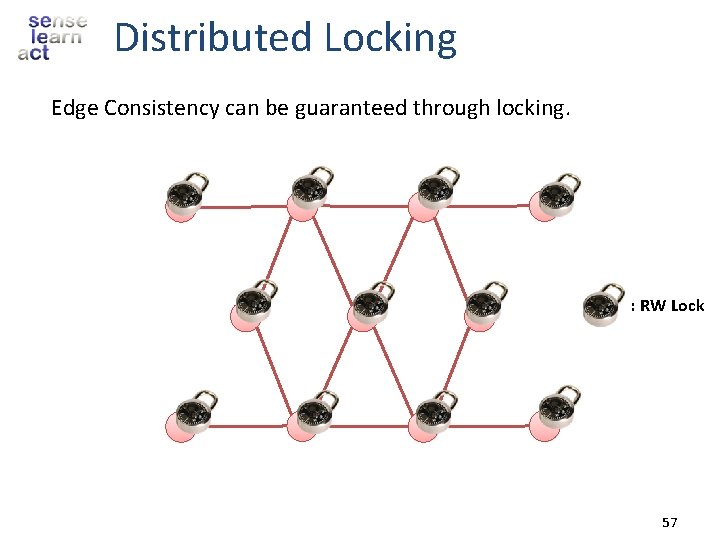

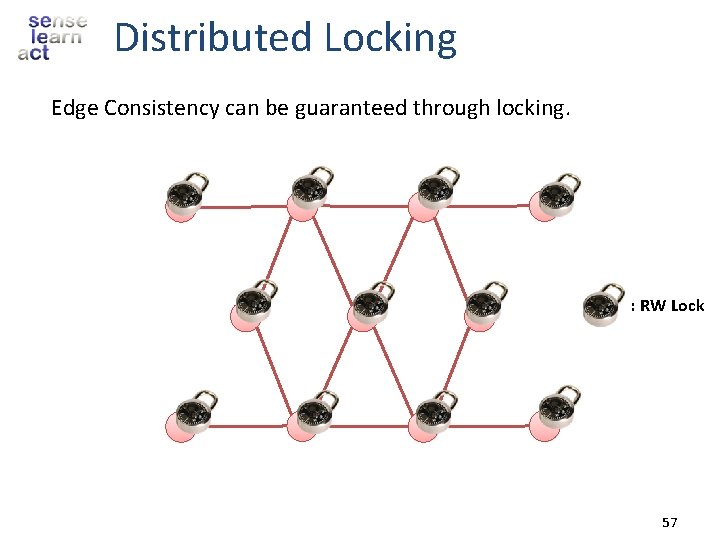

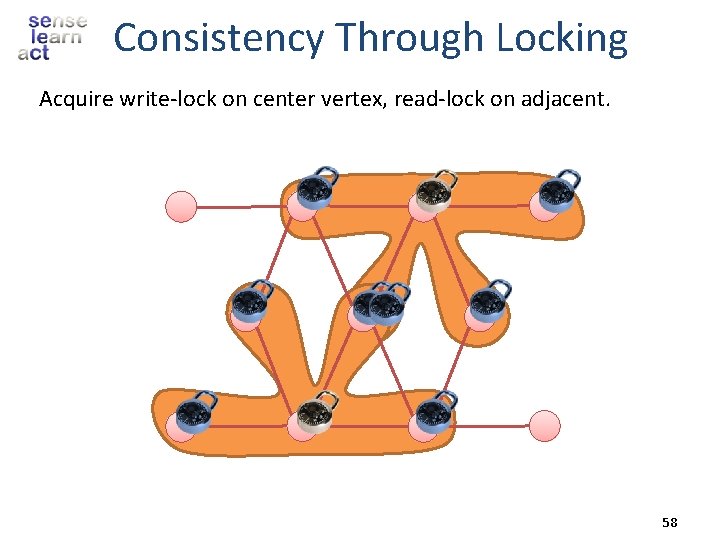

Distributed Locking: Edge consistency can be guaranteed through locking. [Figure legend: RW lock.]

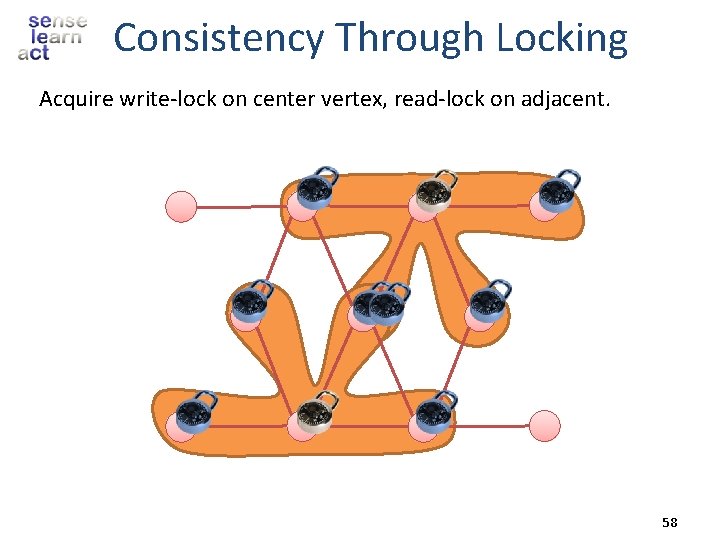

Consistency Through Locking: Acquire a write-lock on the center vertex and read-locks on the adjacent vertices.
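The locking rule can be written down as a small planning function. The sketch below only computes which locks a scope needs and whether two scopes conflict; it does not implement an actual reader-writer lock. Sorting the plan by vertex id is an assumption added here: acquiring locks in one global order is a common way to avoid deadlock, and the slides do not state which ordering is used. `lock_plan` and `can_run_in_parallel` are hypothetical names.

```python
def lock_plan(center, neighbors):
    """Locks needed for edge consistency around `center`.

    Returns a list of (vertex, mode) pairs sorted by vertex id, with a
    write lock on the center and read locks on its adjacent vertices.
    """
    plan = [(center, "write")]
    plan += [(u, "read") for u in neighbors[center] if u != center]
    return sorted(plan)


def can_run_in_parallel(plan_a, plan_b):
    """Two scopes conflict if they share a vertex that either one writes."""
    modes_b = dict(plan_b)
    for vertex, mode in plan_a:
        other = modes_b.get(vertex)
        if other is not None and "write" in (mode, other):
            return False
    return True
```

On the path a, b, c the scopes of a and c share only a read lock on b, so they may run together, while the scopes of a and b conflict because each writes a vertex the other reads.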

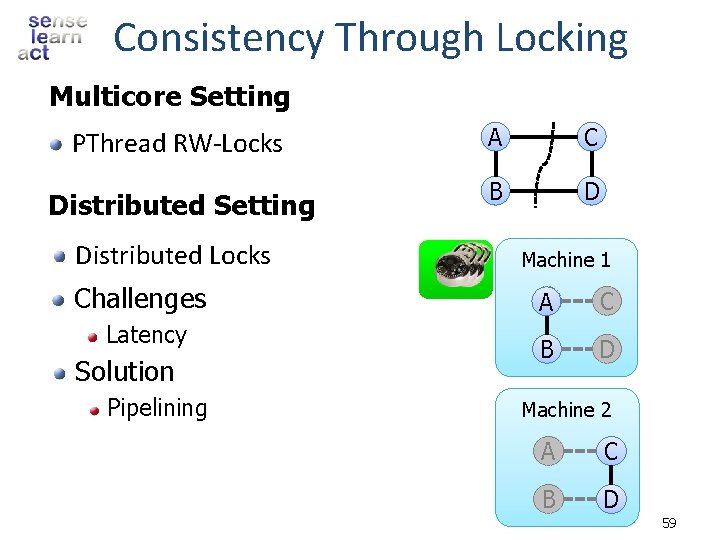

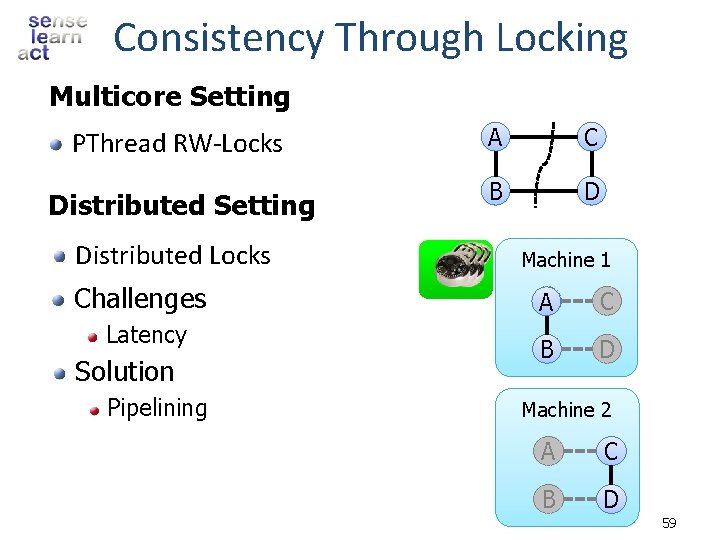

Consistency Through Locking. Multicore setting: PThread RW-locks. Distributed setting: distributed locks (vertices A, B, C, D spread across Machine 1 and Machine 2). Challenge: latency. Solution: pipelining.

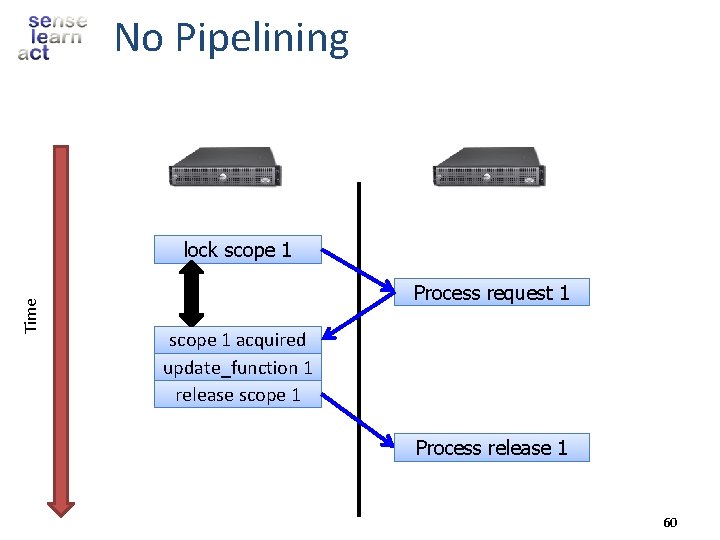

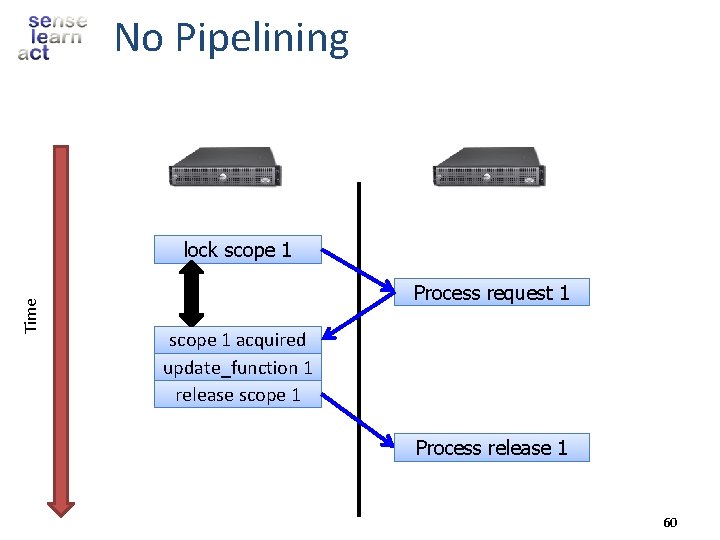

No Pipelining (timeline): lock scope 1; process request 1; scope 1 acquired; update_function 1; release scope 1; process release 1.

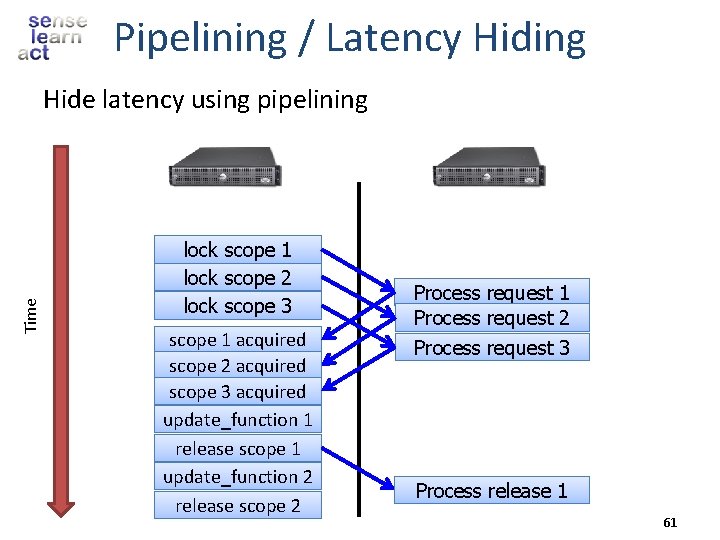

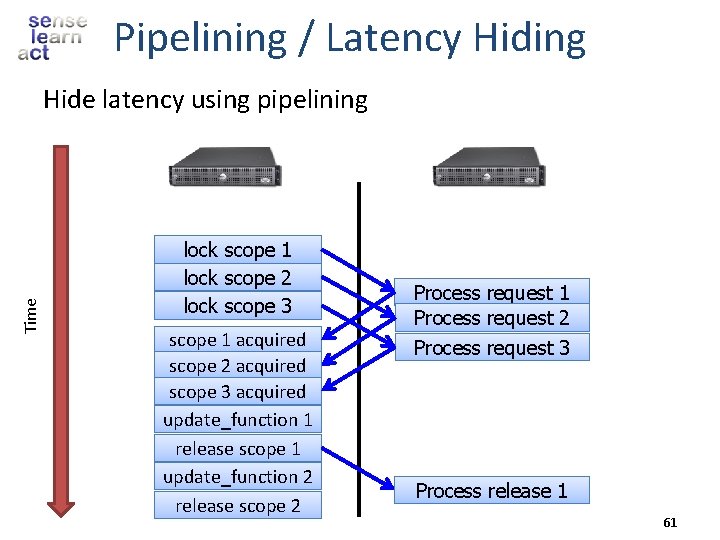

Pipelining / Latency Hiding: Hide latency using pipelining. Timeline: the requests lock scope 1, lock scope 2, and lock scope 3 are issued back to back; the remote side processes request 1, request 2, and request 3; as scope 1, scope 2, and scope 3 are acquired, the requester runs update_function 1, releases scope 1, runs update_function 2, releases scope 2; the remote side processes release 1.
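A toy timing model shows why keeping several lock requests in flight hides latency. Everything here is an assumption made for illustration: a single CPU runs the update functions one after another, every lock request takes a fixed `lock_latency`, release cost is ignored, and at most `depth` requests are outstanding at once. It is not a model of the measured system.

```python
def simulate(num_scopes, lock_latency, update_time, depth):
    """Return the total time to run `num_scopes` updates.

    depth=1 means no pipelining: the next lock request is only issued
    after the previous update function has finished.
    """
    finish = []      # finish time of each update function
    cpu_free = 0.0   # time at which the CPU can start the next update
    for i in range(num_scopes):
        # A request slot frees up when update i - depth has finished.
        issue = finish[i - depth] if i >= depth else 0.0
        acquired = issue + lock_latency
        start = max(acquired, cpu_free)
        cpu_free = start + update_time
        finish.append(cpu_free)
    return cpu_free
```

With 100 scopes, a latency of 10 time units, and updates of 1 time unit, the unpipelined run pays the latency 100 times, while a deep enough pipeline pays it roughly once.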

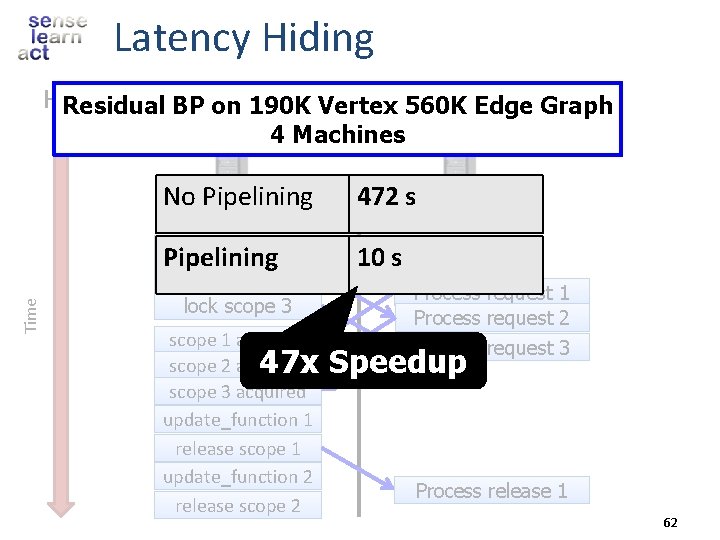

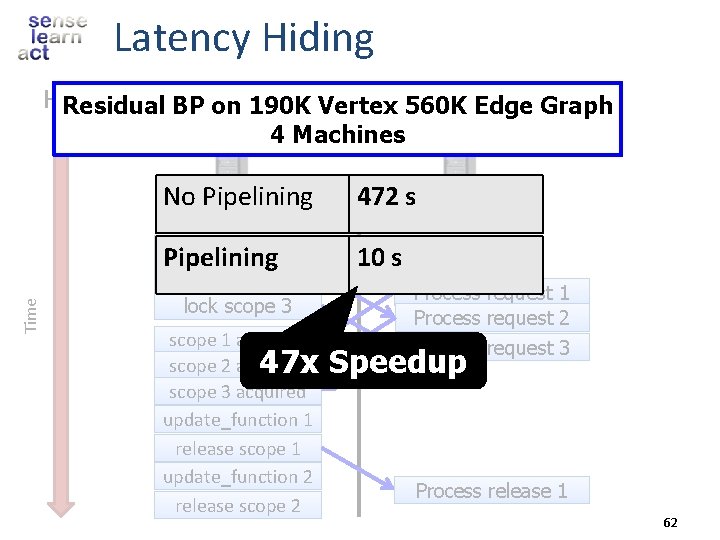

Latency Hiding: Hide latency using request buffering. Residual BP on a 190K-vertex, 560K-edge graph, 4 machines: no pipelining 472 s; pipelining 10 s; 47x speedup.

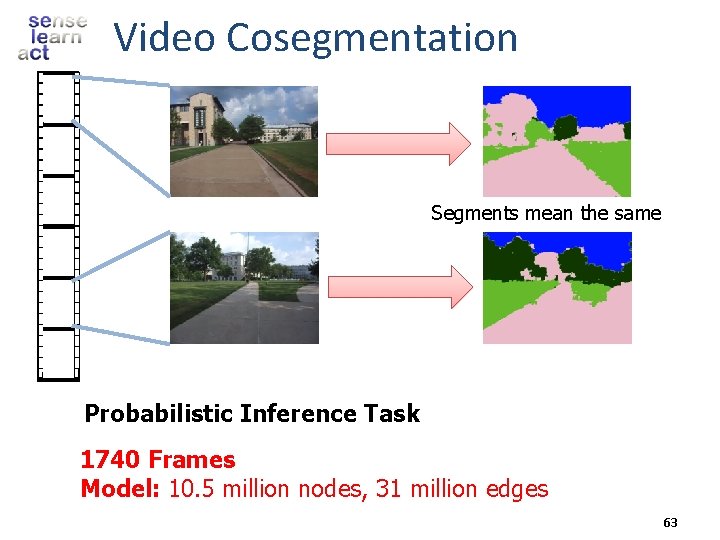

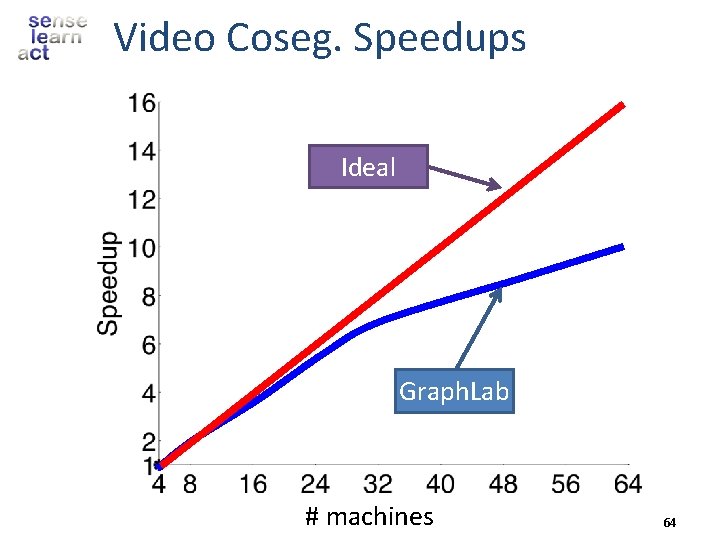

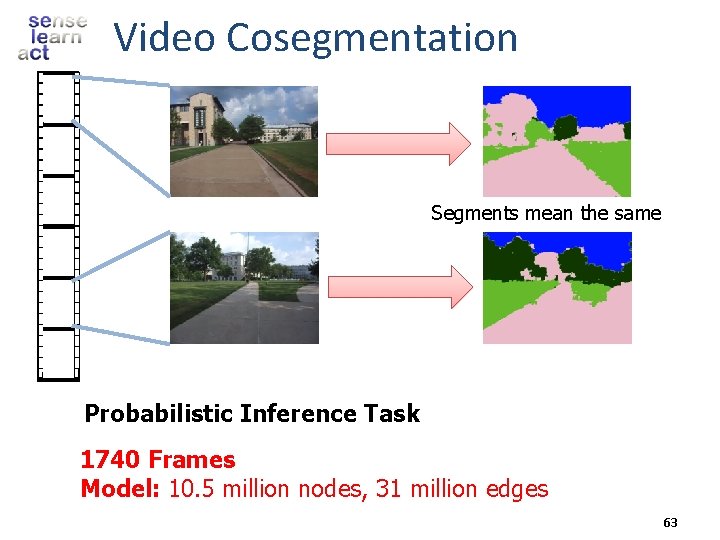

Video Cosegmentation: Segments mean the same. Probabilistic inference task over 1740 frames. Model: 10.5 million nodes, 31 million edges.

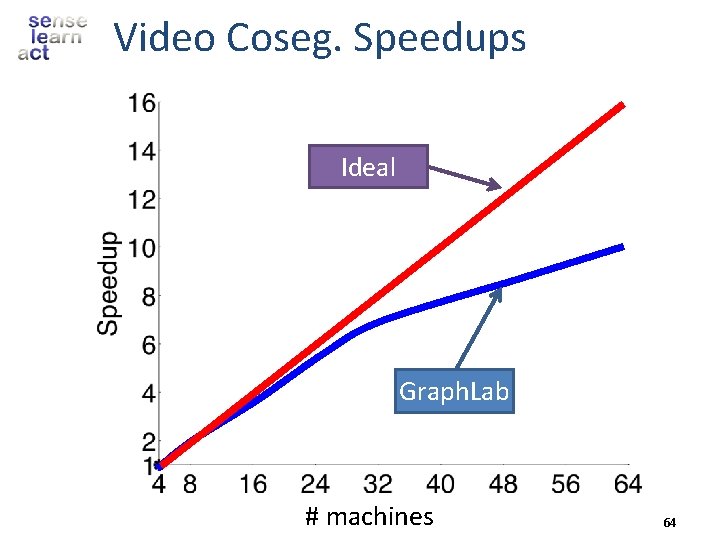

Video Coseg. Speedups. [Figure: speedup vs. # machines; curves: Ideal, GraphLab.]

The GraphLab Framework: Graph-Based Data Representation; Update Functions (User Computation); Consistency Model.

What if machines fail? How do we provide fault tolerance?

Checkpoint: 1: Stop the world. 2: Write state to disk.

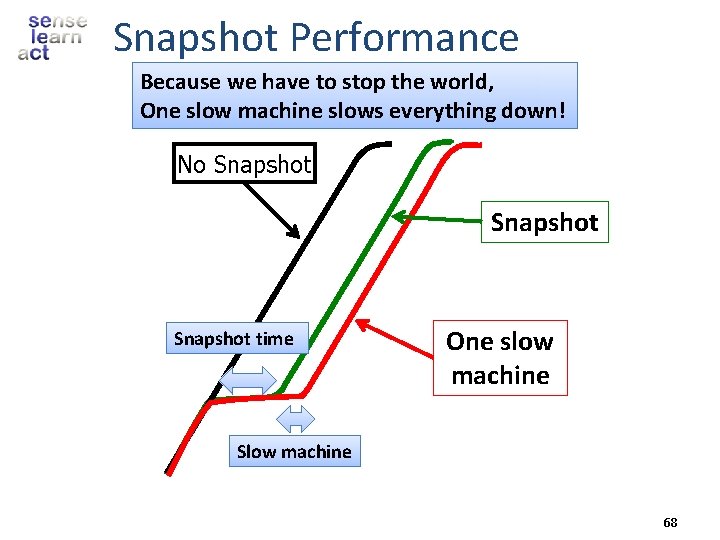

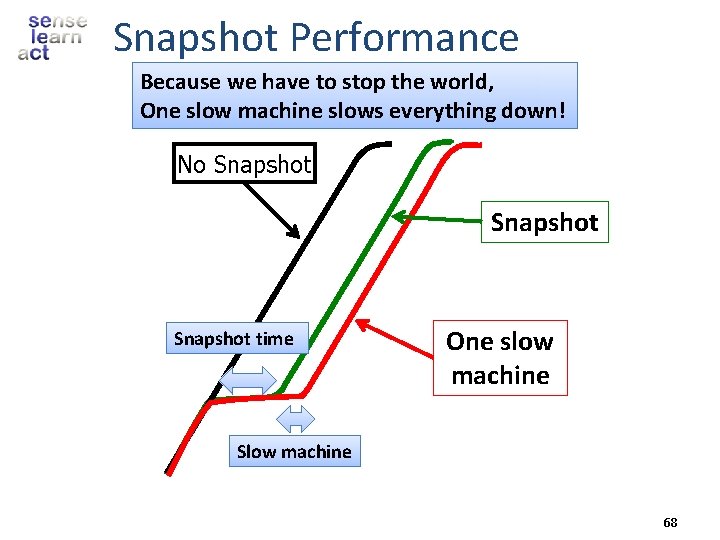

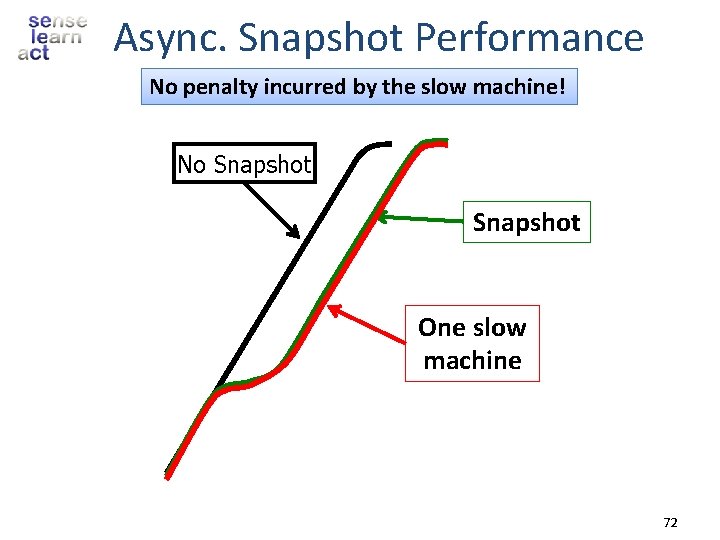

Snapshot Performance: Because we have to stop the world, one slow machine slows everything down! [Figure: progress over time; curves: No Snapshot, Snapshot, One slow machine.]

How can we do better? Take advantage of consistency

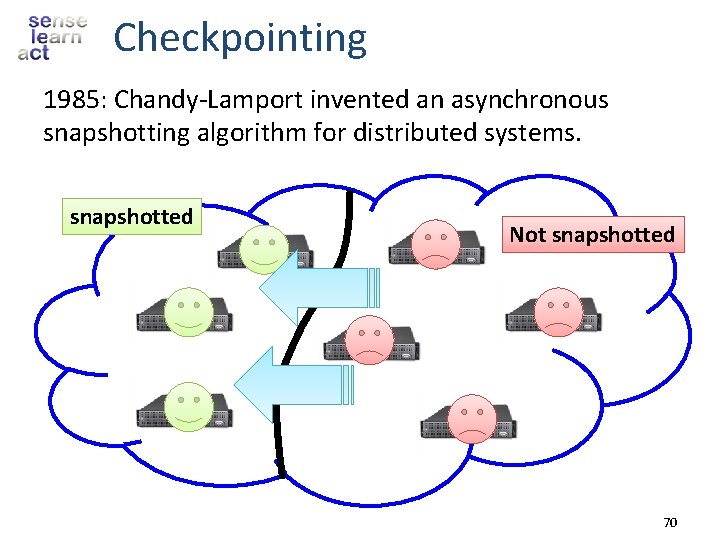

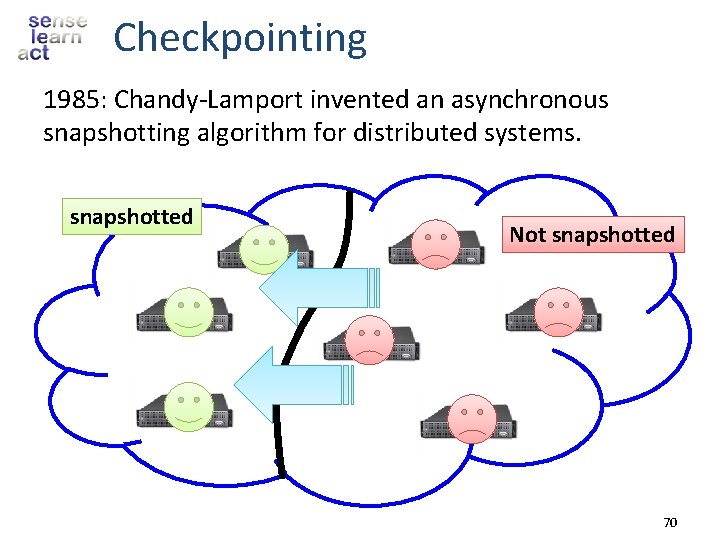

Checkpointing: In 1985, Chandy and Lamport invented an asynchronous snapshotting algorithm for distributed systems. [Figure legend: snapshotted, not snapshotted.]

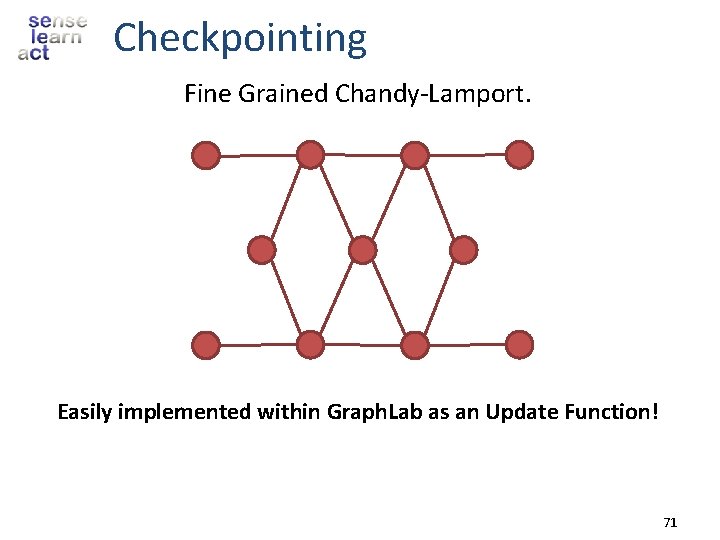

Checkpointing: Fine-grained Chandy-Lamport. Easily implemented within GraphLab as an update function!
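One way to picture a snapshot written as an update function is the sketch below. It is a reading of the idea on the slide, not the authors' code: a vertex saves its own data, saves the data on edges to neighbors that have not been snapshotted yet, and schedules those neighbors. The dictionaries standing in for graph storage and disk, and the names `snapshot_update` and `run_snapshot`, are assumptions.

```python
from collections import deque


def snapshot_update(v, neighbors, vertex_data, edge_data, snapshot, schedule):
    """Snapshot vertex v; called by the scheduler like any update function."""
    if v in snapshot["vertices"]:
        return                                   # already snapshotted
    snapshot["vertices"][v] = vertex_data[v]     # save and mark v
    for u in neighbors[v]:
        if u not in snapshot["vertices"]:
            key = frozenset((u, v))              # undirected edge id
            snapshot["edges"][key] = edge_data[key]
            schedule(u)                          # spread the snapshot


def run_snapshot(start, neighbors, vertex_data, edge_data):
    """Drive the update function from one start vertex until idle."""
    snapshot = {"vertices": {}, "edges": {}}
    queue = deque([start])
    while queue:
        v = queue.popleft()
        snapshot_update(v, neighbors, vertex_data, edge_data,
                        snapshot, queue.append)
    return snapshot
```

Each edge is saved exactly once, by whichever endpoint is snapshotted first, and on a connected graph the snapshot spreads from the start vertex to every vertex without stopping the world.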

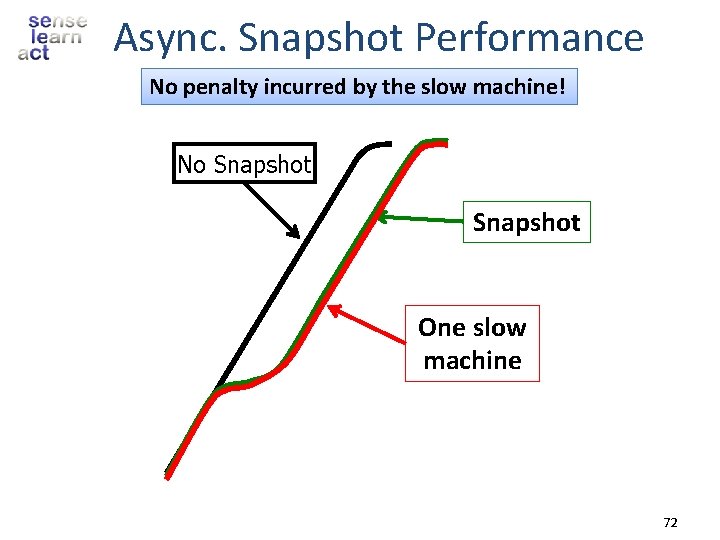

Async. Snapshot Performance: No penalty incurred by the slow machine! [Figure: progress over time; curves: No Snapshot, Snapshot, One slow machine.]

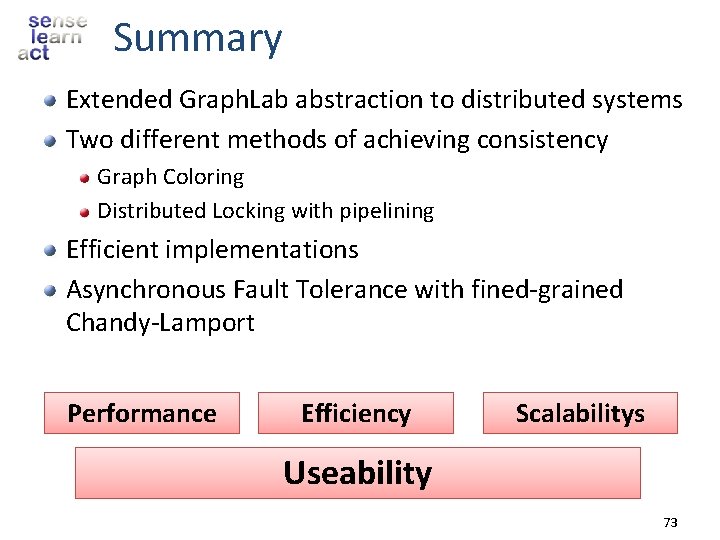

Summary
- Extended the GraphLab abstraction to distributed systems
- Two different methods of achieving consistency: graph coloring, and distributed locking with pipelining
- Efficient implementations
- Asynchronous fault tolerance with fine-grained Chandy-Lamport
Performance, Efficiency, Scalability, Usability

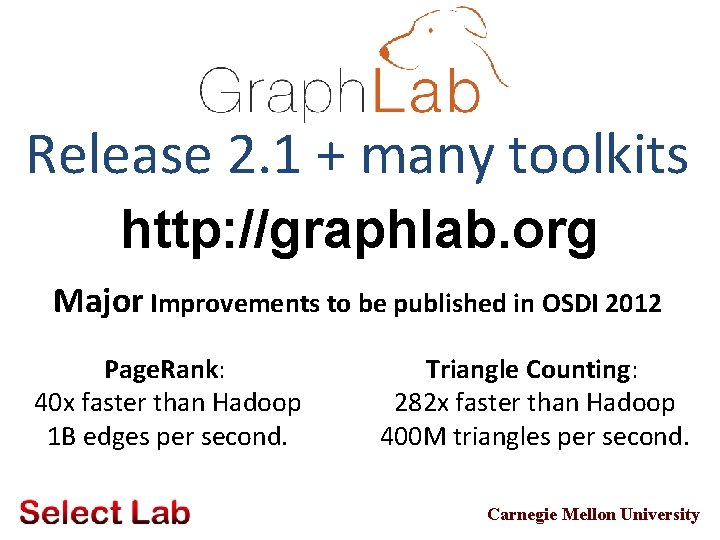

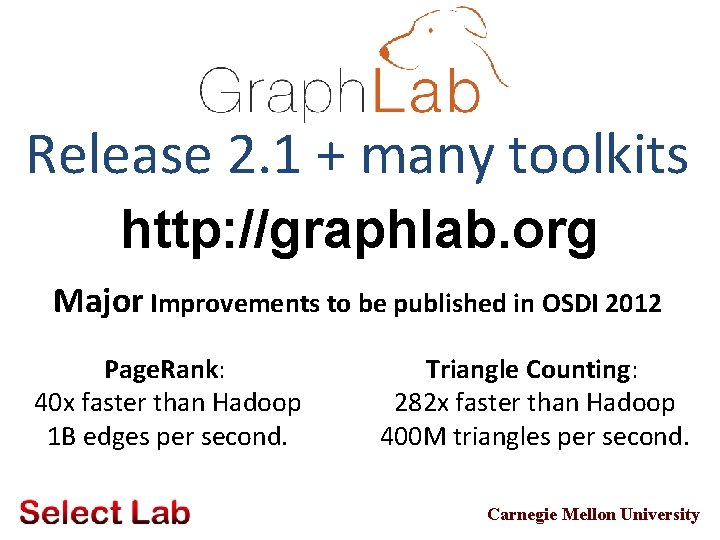

Release 2.1 + many toolkits: http://graphlab.org. Major improvements to be published in OSDI 2012. PageRank: 40x faster than Hadoop, 1B edges per second. Triangle Counting: 282x faster than Hadoop, 400M triangles per second. Carnegie Mellon University