A Fast On-Chip Profiler Memory
Roman Lysecky, Susan Cotterell, Frank Vahid*
Department of Computer Science and Engineering, University of California, Riverside
*Also with the Center for Embedded Computer Systems, UC Irvine
This work was supported in part by the National Science Foundation

Outline
• Introduction
• Problem Definition
• Profiling Techniques
• Pipelined Binary Search Tree
• ProMem
• Conclusions

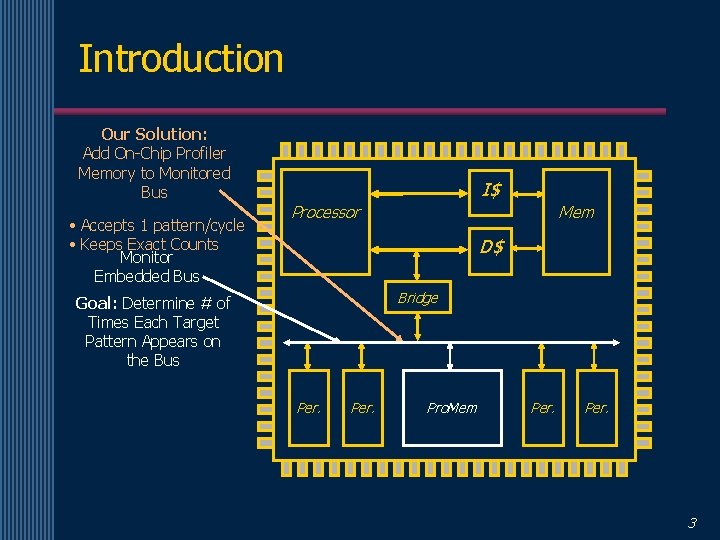

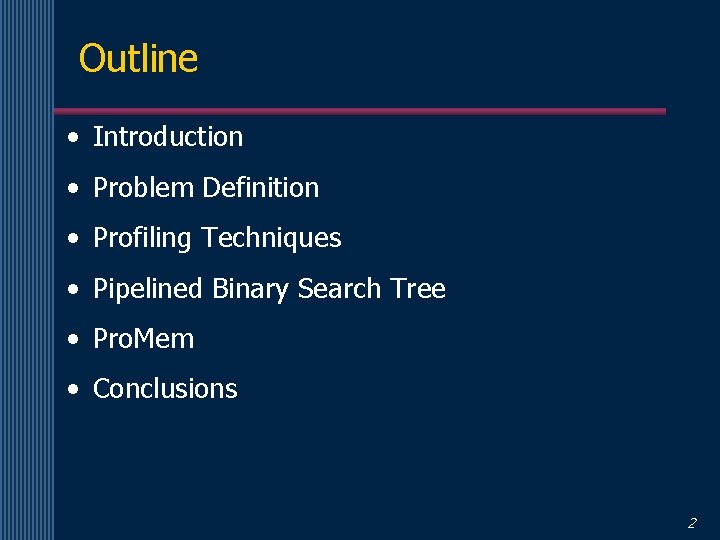

Introduction
Goal: Determine the number of times each target pattern appears on the bus
Our Solution: Add an on-chip profiler memory (ProMem) to the monitored bus
• Accepts 1 pattern/cycle
• Keeps exact counts
[Diagram: Processor with I$ and D$, Mem, Bridge, and Per. blocks on an embedded bus; ProMem monitors the embedded bus]

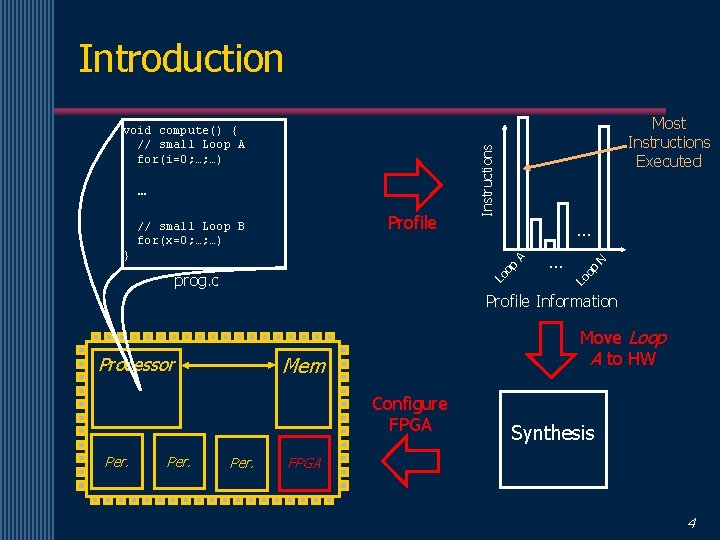

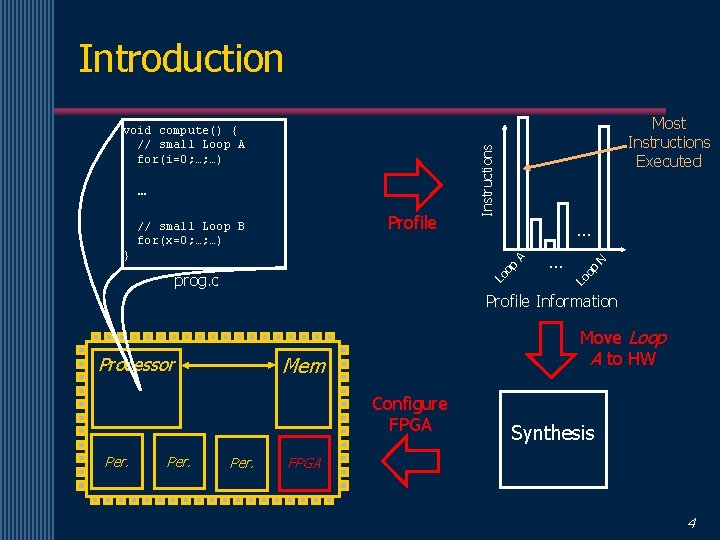

Introduction
prog.c:
void compute() {
// small Loop A
for(i=0; …; …) …
// small Loop B
for(x=0; …; …) …
}
• Profile prog.c to obtain profile information: the most executed instructions (Loop A, Loop B, …)
• Move Loop A to HW: synthesis, then configure the FPGA
[Diagram: Processor, Mem, Per., and FPGA on the bus]

Introduction
• Profiling Can Be Used to Solve Many Problems
– Optimization of frequently executed subroutines
– Mapping frequently executed code and data to non-interfering cache regions
– Synthesis of optimized hardware for common cases
– Identifying frequent loops to map to a small low-power loop cache
– Many others!

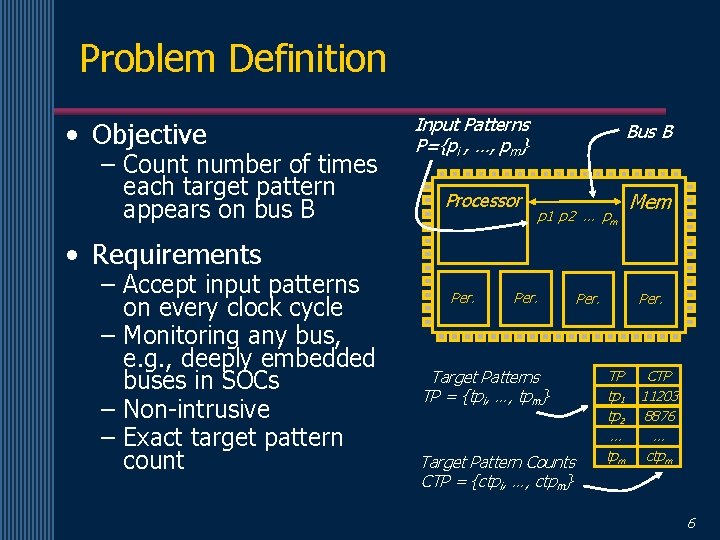

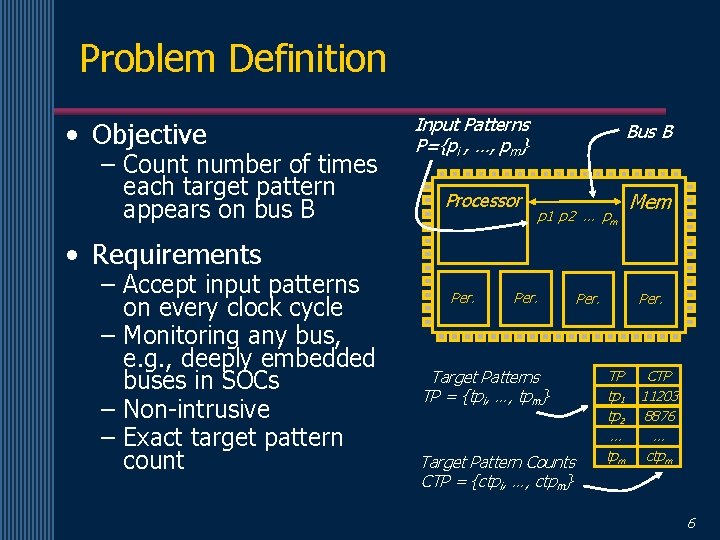

Problem Definition
• Objective
– Count number of times each target pattern appears on bus B
• Requirements
– Accept input patterns on every clock cycle
– Monitoring any bus, e.g., deeply embedded buses in SOCs
– Non-intrusive
– Exact target pattern count
Input Patterns P = {p1, …, pm}
Target Patterns TP = {tp1, …, tpm}
Target Pattern Counts CTP = {ctp1, …, ctpm}

| TP | CTP |
|---|---|
| tp1 | 11203 |
| tp2 | 8876 |
| … | … |
| tpm | ctpm |

[Diagram: Processor, Mem, and Per. blocks on bus B carrying p1, p2, …, pm]
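The objective can be stated as a small software reference model. This is only a sketch of what the profiler must compute, not how the hardware computes it; the function name and the sample bus trace are made up for illustration.

```python
def count_target_patterns(bus_patterns, target_patterns):
    """Return the exact count CTP for every target pattern in TP."""
    counts = {tp: 0 for tp in target_patterns}
    for p in bus_patterns:      # one input pattern per clock cycle
        if p in counts:         # patterns that are not targets are ignored
            counts[p] += 1
    return counts


# Hypothetical bus trace (e.g. instruction addresses) and target set.
trace = [0x100, 0x104, 0x100, 0x200, 0x104, 0x100]
ctp = count_target_patterns(trace, [0x100, 0x104, 0x300])
print(ctp)
```

A target that never appears on the bus keeps a count of zero, and non-target patterns such as 0x200 do not affect any count.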

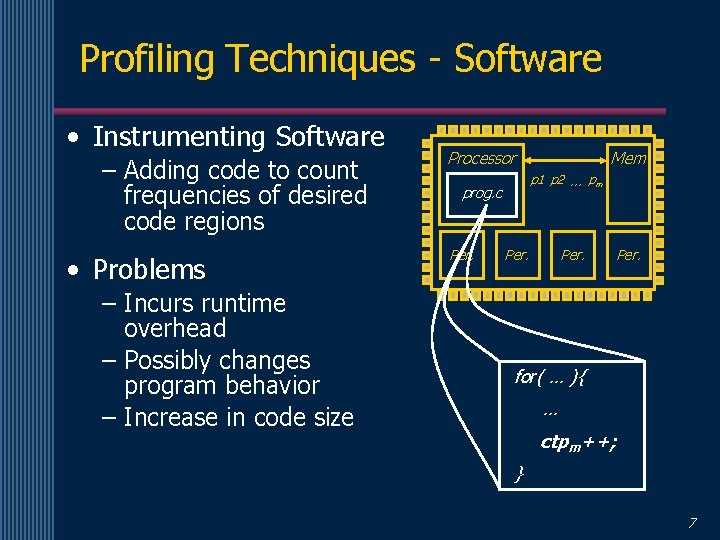

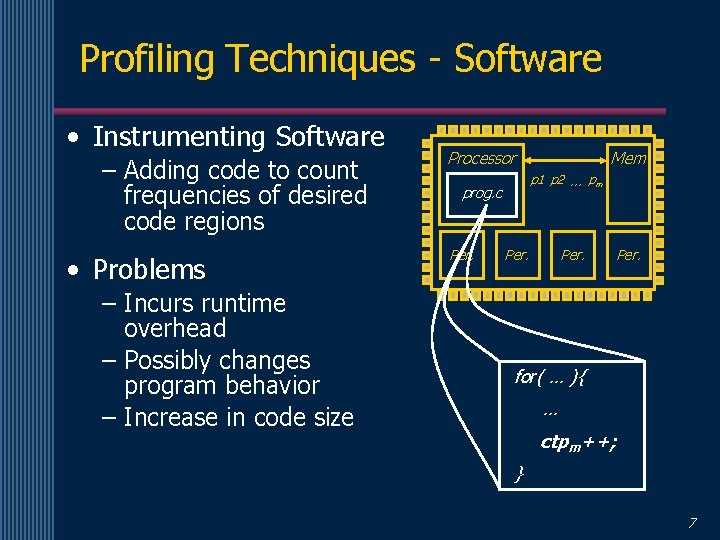

Profiling Techniques - Software
• Instrumenting Software
– Adding code to count frequencies of desired code regions
• Problems
– Incurs runtime overhead
– Possibly changes program behavior
– Increase in code size
prog.c: for( … ){ … ctpm++; }
[Diagram: Processor, Mem, and Per. blocks on a bus carrying p1, p2, …, pm]

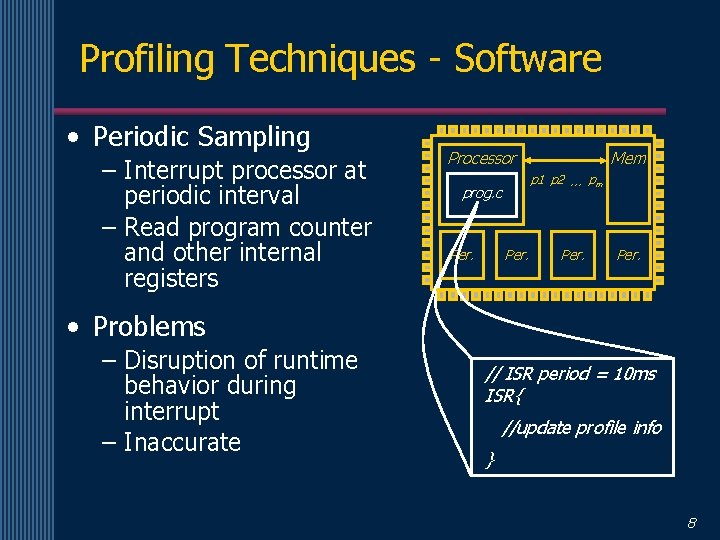

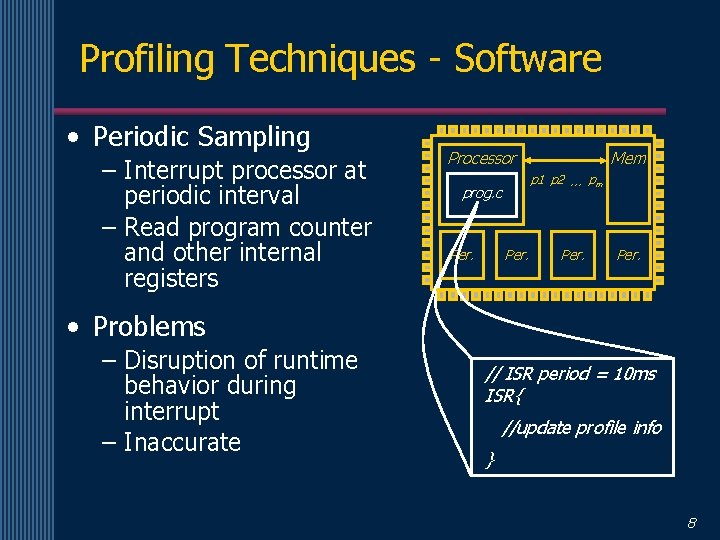

Profiling Techniques - Software
• Periodic Sampling
– Interrupt processor at periodic interval
– Read program counter and other internal registers
• Problems
– Disruption of runtime behavior during interrupt
– Inaccurate
prog.c: // ISR period = 10 ms
ISR{ //update profile info }
[Diagram: Processor, Mem, and Per. blocks on a bus carrying p1, p2, …, pm]
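The inaccuracy of periodic sampling can be illustrated with a toy trace. The trace, the sampling period, and both function names below are assumptions chosen for the example; real sampling happens in an interrupt service routine, not over a recorded list.

```python
def exact_counts(trace):
    """Count every entry of the trace (what an exact profiler reports)."""
    counts = {}
    for pc in trace:
        counts[pc] = counts.get(pc, 0) + 1
    return counts


def sampled_counts(trace, period):
    """Look only at every `period`-th entry and scale each sample up."""
    counts = {}
    for pc in trace[::period]:
        # Each sample is assumed to stand for `period` cycles.
        counts[pc] = counts.get(pc, 0) + period
    return counts


# Hypothetical run: region A for 91 cycles, a short region B for 5, then A again.
trace = ["A"] * 91 + ["B"] * 5 + ["A"] * 4
exact = exact_counts(trace)
estimate = sampled_counts(trace, period=10)
print(exact, estimate)
```

In this trace the short region B falls entirely between two samples, so the sampled profile never sees it and overestimates A.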

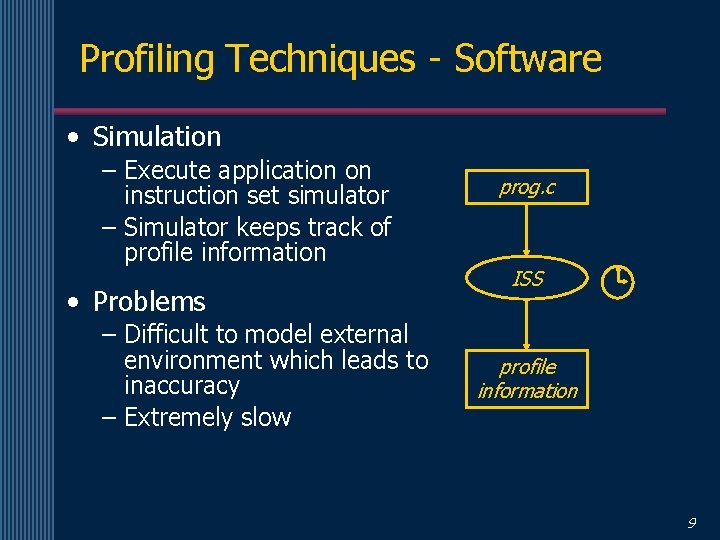

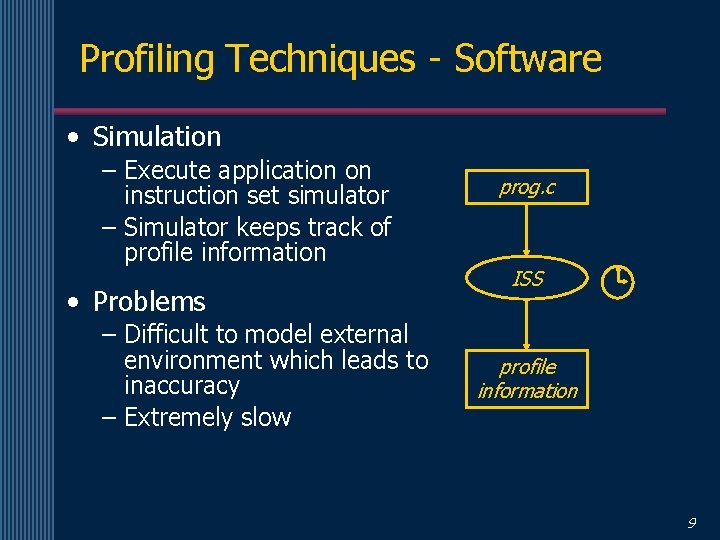

Profiling Techniques - Software
• Simulation
– Execute application on instruction set simulator
– Simulator keeps track of profile information
• Problems
– Difficult to model external environment, which leads to inaccuracy
– Extremely slow
[Diagram: prog.c runs on the ISS, which produces profile information]

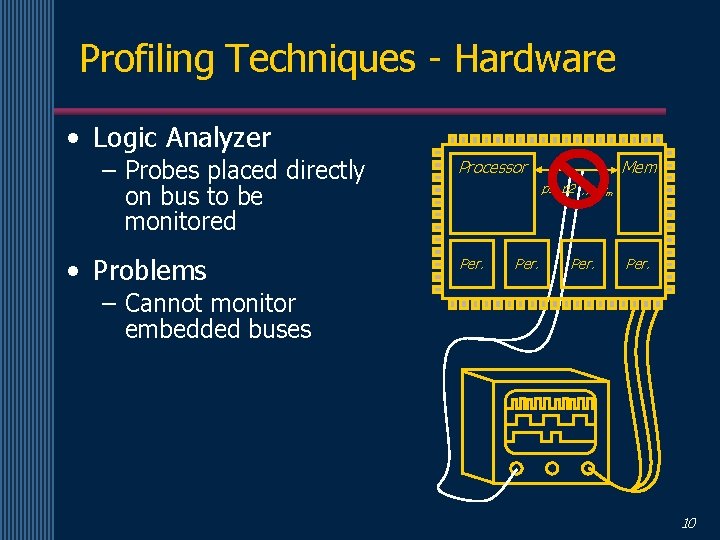

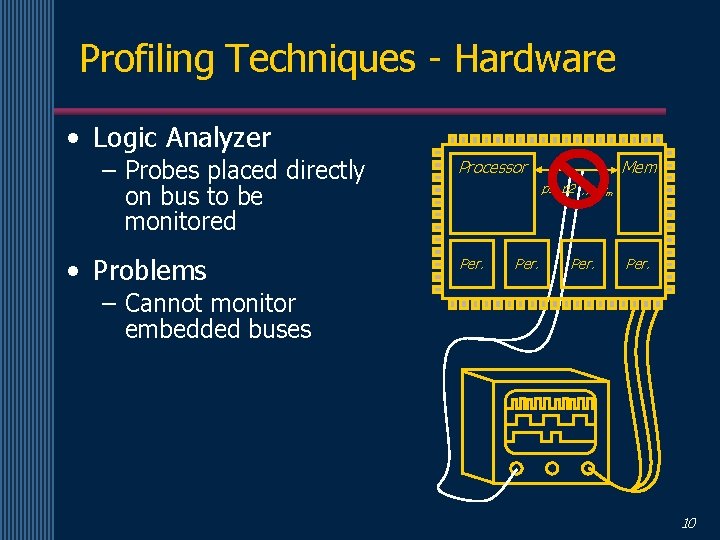

Profiling Techniques - Hardware
• Logic Analyzer
– Probes placed directly on bus to be monitored
• Problems
– Cannot monitor embedded buses
[Diagram: Processor, Mem, and Per. blocks on a bus carrying p1, p2, …, pm]

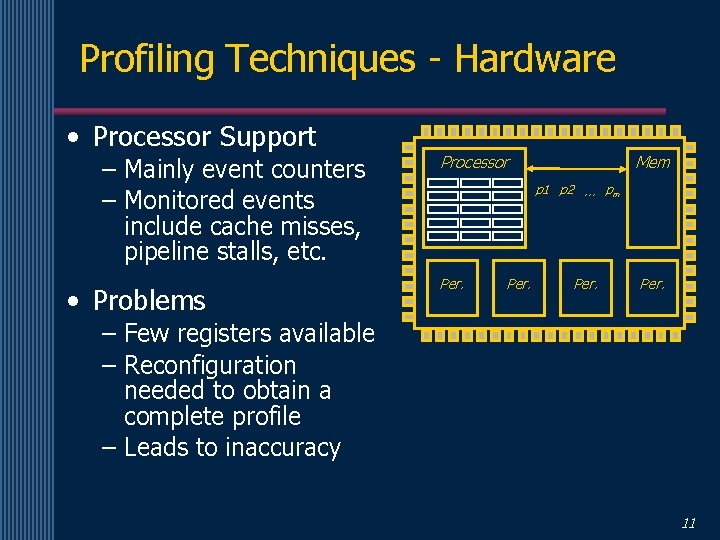

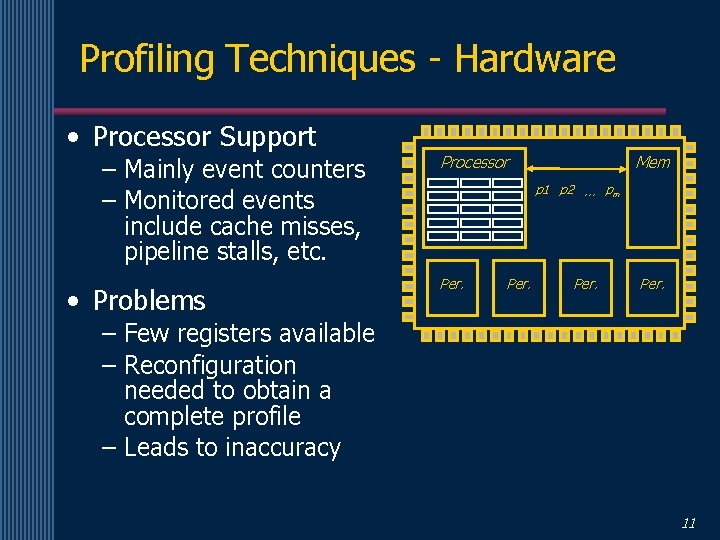

Profiling Techniques - Hardware
• Processor Support
– Mainly event counters
– Monitored events include cache misses, pipeline stalls, etc.
• Problems
– Few registers available
– Reconfiguration needed to obtain a complete profile
– Leads to inaccuracy
[Diagram: Processor, Mem, and Per. blocks on a bus carrying p1, p2, …, pm]

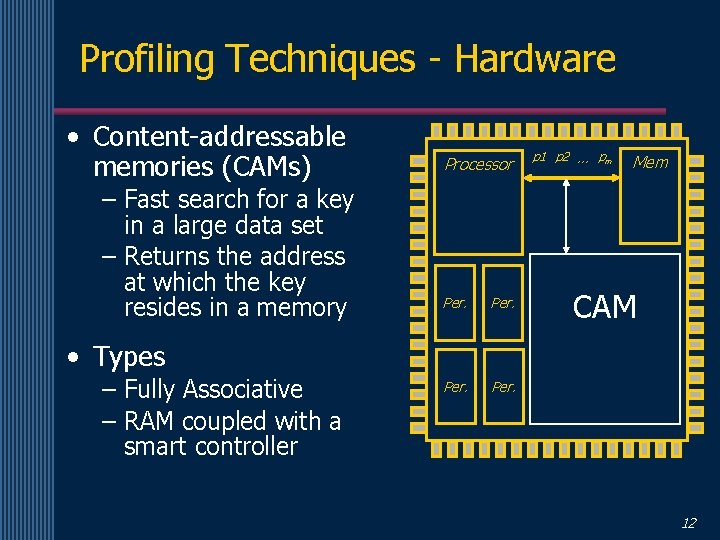

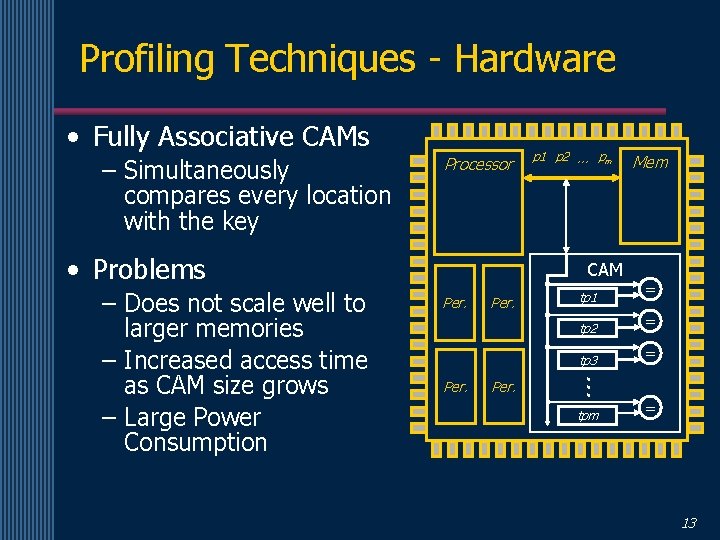

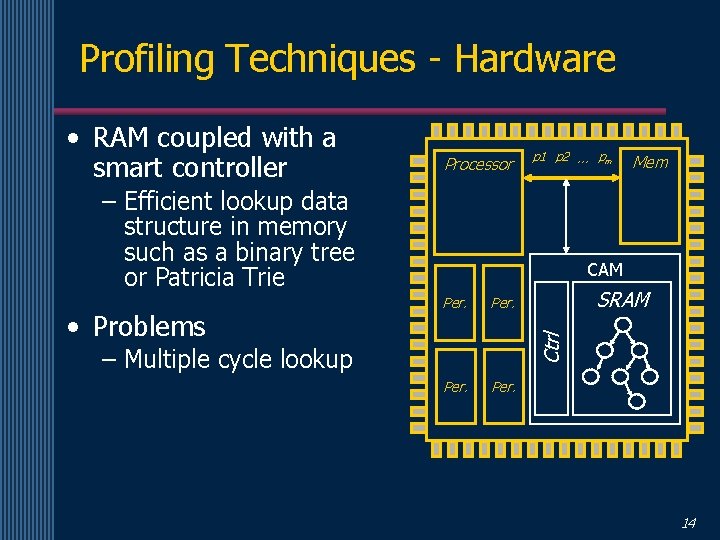

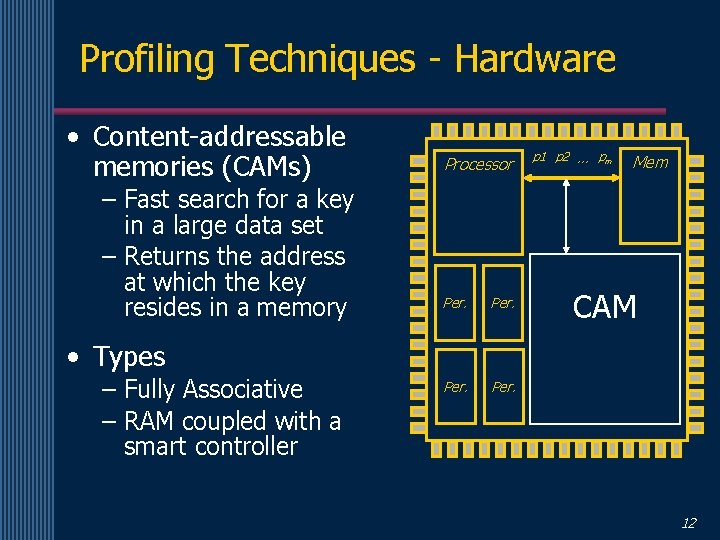

Profiling Techniques - Hardware
• Content-addressable memories (CAMs)
– Fast search for a key in a large data set
– Returns the address at which the key resides in a memory
• Types
– Fully Associative
– RAM coupled with a smart controller
[Diagram: CAM attached to the bus (p1, p2, …, pm) alongside Processor, Mem, and Per.]

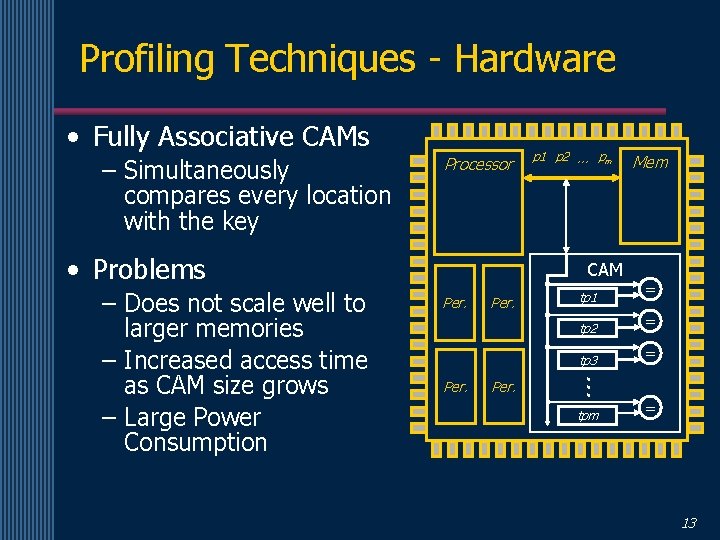

Profiling Techniques - Hardware
• Fully Associative CAMs
– Simultaneously compares every location with the key
• Problems
– Does not scale well to larger memories
– Increased access time as CAM size grows
– Large power consumption
[Diagram: CAM on the bus compares each input pattern p1, p2, …, pm against tp1, tp2, tp3, …, tpm in parallel, one "=" comparator per entry]

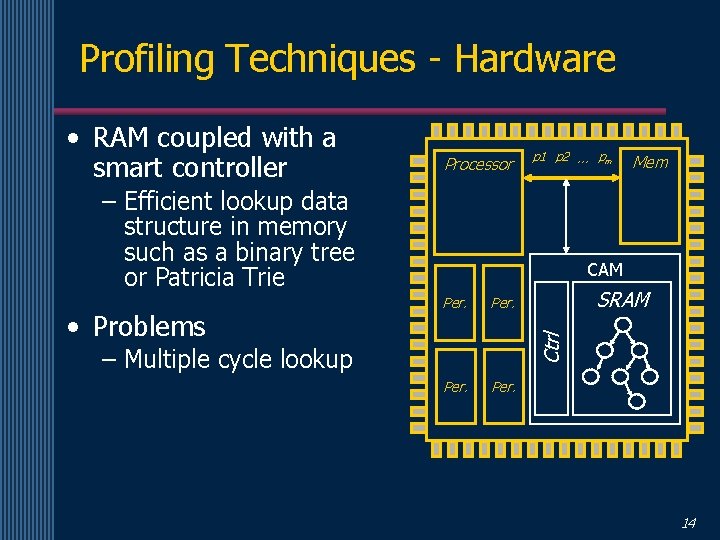

Profiling Techniques - Hardware
• RAM coupled with a smart controller
– Efficient lookup data structure in memory such as a binary tree or Patricia trie
• Problems
– Multiple cycle lookup
[Diagram: CAM built from a controller (Ctrl) and an SRAM, attached to the bus (p1, p2, …, pm) alongside Processor, Mem, and Per.]
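To make the multiple-cycle lookup concrete, the sketch below counts RAM reads for a binary search over a sorted memory. It assumes one RAM read per clock cycle and uses a plain sorted array in place of the binary tree or Patricia trie; the function name and the 15-entry memory are illustrative only.

```python
def lookup_reads(sorted_ram, key):
    """Binary search; returns (address or None, number of RAM reads)."""
    lo, hi = 0, len(sorted_ram) - 1
    reads = 0
    while lo <= hi:
        mid = (lo + hi) // 2
        reads += 1                      # assumed: one RAM read per clock cycle
        if sorted_ram[mid] == key:
            return mid, reads
        if sorted_ram[mid] < key:
            lo = mid + 1
        else:
            hi = mid - 1
    return None, reads


ram = list("abcdefghijklmno")           # 15 hypothetical target patterns, sorted
print(lookup_reads(ram, "h"))           # middle entry
print(lookup_reads(ram, "e"))           # entry at the deepest level
print(lookup_reads(ram, "z"))           # not a target pattern
```

With 15 entries a lookup needs up to 4 reads, so a controller that handles one lookup at a time cannot keep up with a new pattern every cycle.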

Observations
• Not necessary to have a 1-cycle lookup
• Only need to accept one input pattern every cycle

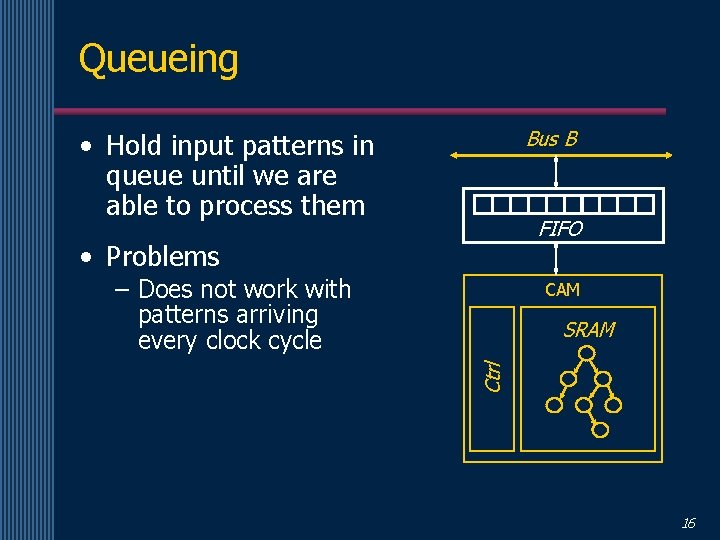

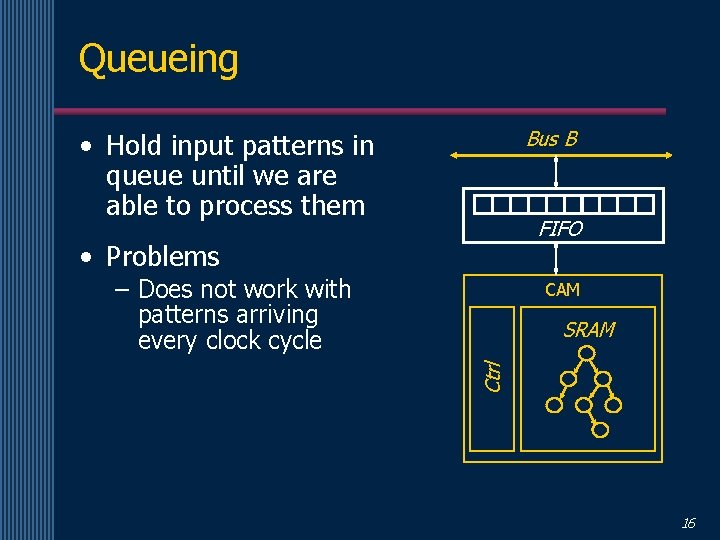

Queueing
• Hold input patterns in queue until we are able to process them
• Problems
– Does not work with patterns arriving every clock cycle
[Diagram: Bus B feeds a FIFO in front of the CAM (Ctrl and SRAM)]
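A short simulation shows why a queue alone does not help when patterns arrive every cycle. It is a sketch under simple assumptions: one new pattern per cycle, a single lookup unit, and a fixed lookup latency. The function and parameter names are hypothetical.

```python
from collections import deque


def max_queue_depth(num_cycles, lookup_cycles):
    """Largest number of waiting patterns seen over `num_cycles` cycles."""
    fifo = deque()
    busy = 0                    # cycles left on the lookup in progress
    max_depth = 0
    for cycle in range(num_cycles):
        fifo.append(cycle)      # a new pattern arrives on every cycle
        if busy == 0:
            fifo.popleft()      # the controller starts the next lookup
            busy = lookup_cycles
        busy -= 1
        max_depth = max(max_depth, len(fifo))
    return max_depth


print(max_queue_depth(100, 1))  # single-cycle lookup: the queue stays empty
print(max_queue_depth(100, 4))  # 4-cycle lookup: the backlog keeps growing
print(max_queue_depth(200, 4))
```

With a 4-cycle lookup the backlog grows by three patterns every four cycles, so any finite FIFO eventually overflows.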

Pipelining
• Implemented in processors such that instructions can be executed every cycle
• Can we use pipelining to solve our problem?

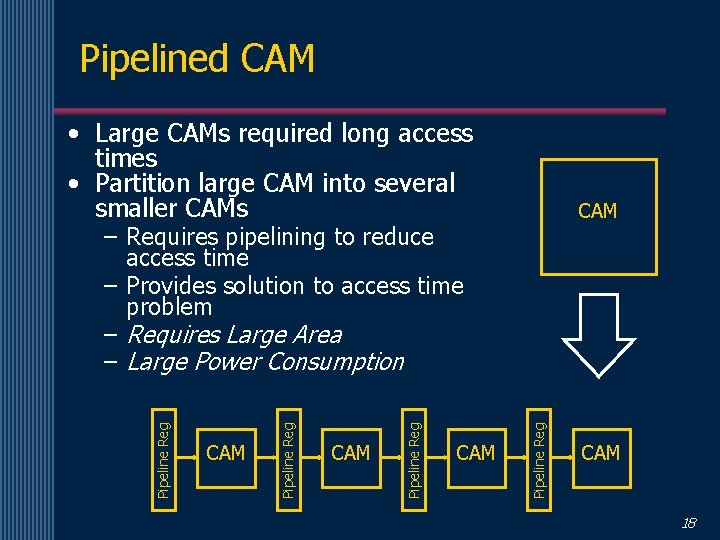

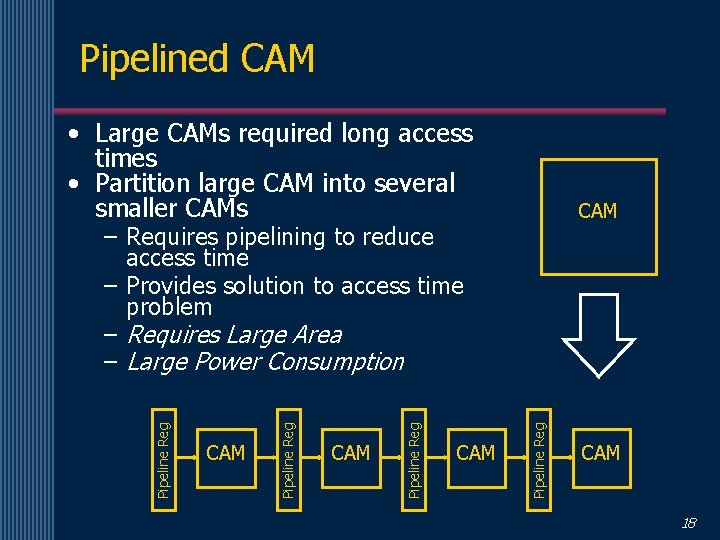

Pipelined CAM
• Large CAMs require long access times
• Partition large CAM into several smaller CAMs
– Requires pipelining to reduce access time
– Provides solution to access time problem
– Requires large area
– Large power consumption
[Diagram: smaller CAMs separated by pipeline registers]

Pipelined CAM
• Entries can be stored in a CAM in any order
– requires sequential lookup in pipelined CAM approach
• Is there a benefit to sorting the entries?
– not necessary to search all entries
– leads to faster lookup time
• Tree structure provides an inherently sorted structure
– Search time remains a problem
– Can we pipeline the structure?

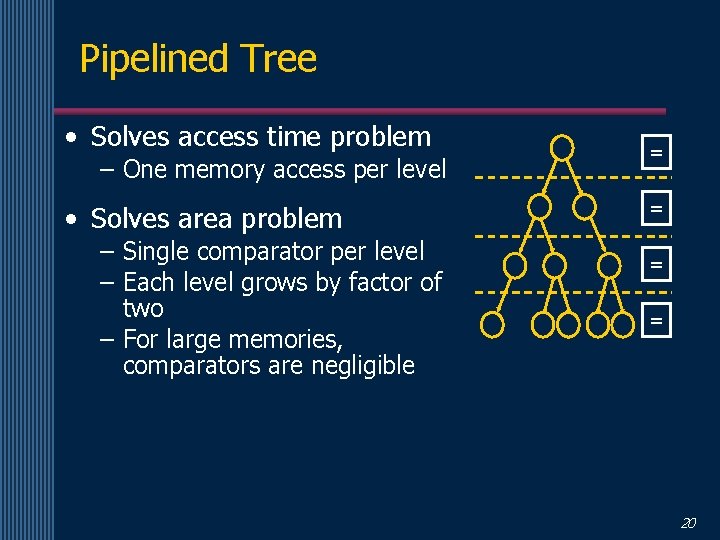

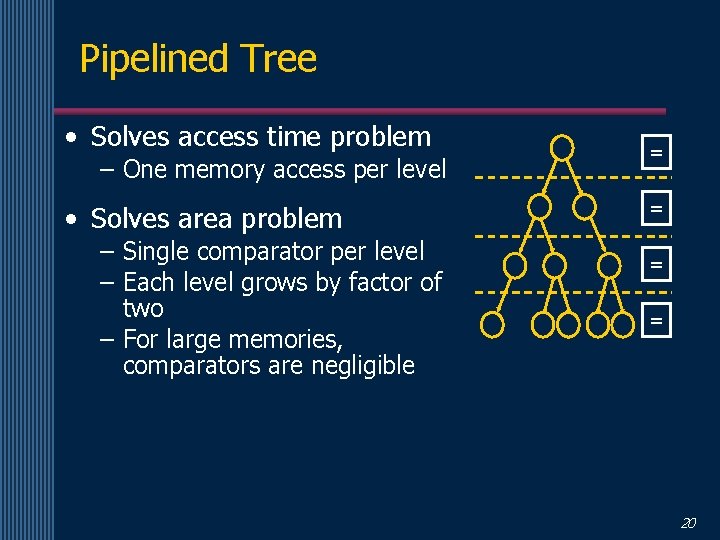

Pipelined Tree
• Solves access time problem
– One memory access per level
• Solves area problem
– Single comparator per level
– Each level grows by factor of two
– For large memories, comparators are negligible
[Diagram: tree levels, each with a single "=" comparator]
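The area argument can be checked with simple arithmetic: level s holds 2^s entries but needs only one comparator, whereas a fully associative CAM compares every entry at once. The helper names below are made up, and counting comparators is only a rough proxy for area.

```python
def pipelined_tree_resources(levels):
    """Entries and comparators for a tree with `levels` pipeline stages."""
    entries_per_level = [2 ** s for s in range(levels)]  # each level doubles
    return {
        "entries": sum(entries_per_level),
        "comparators": levels,                            # one per level
    }


def fully_associative_cam_resources(entries):
    """A fully associative CAM compares the key with every entry at once."""
    return {"entries": entries, "comparators": entries}


tree = pipelined_tree_resources(10)
cam = fully_associative_cam_resources(tree["entries"])
print(tree, cam)
```

For 10 levels the tree stores 1023 patterns with 10 comparators, while a CAM of the same capacity would need 1023.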

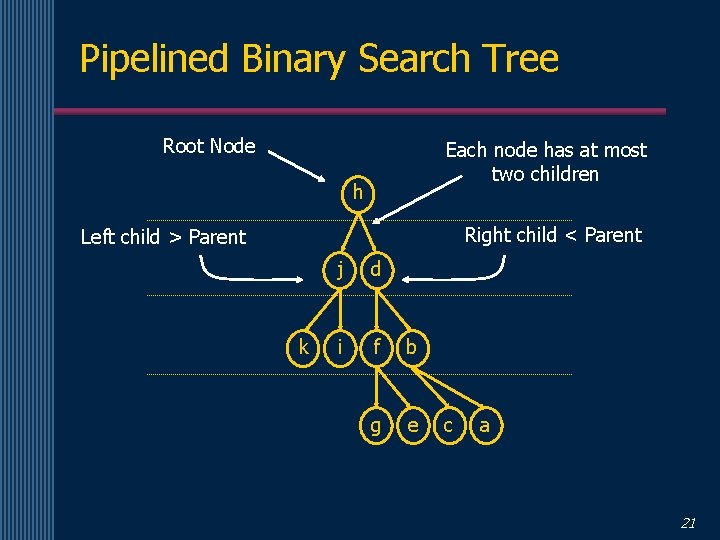

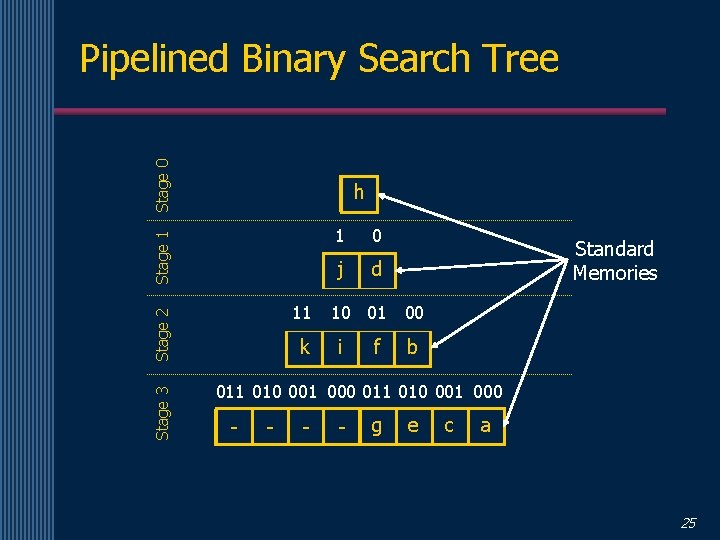

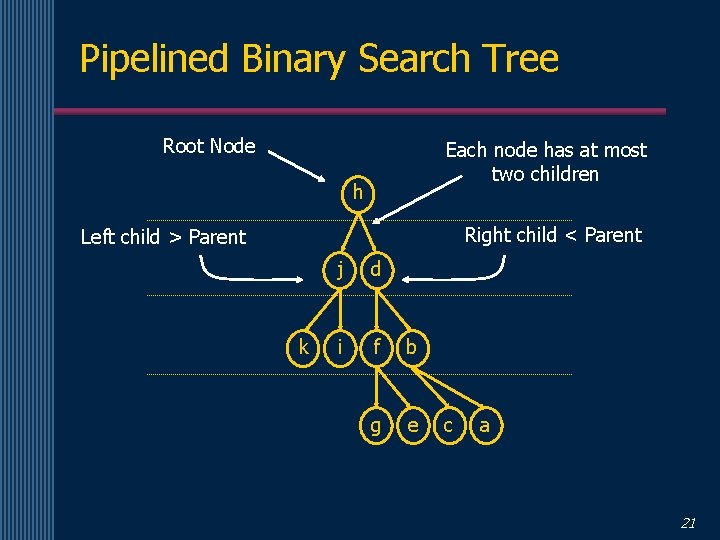

Pipelined Binary Search Tree
• Root node
• Each node has at most two children
• Right child < Parent
• Left child > Parent
[Tree, by level: h; k, d; j, i, f, b; g, e, c, a]

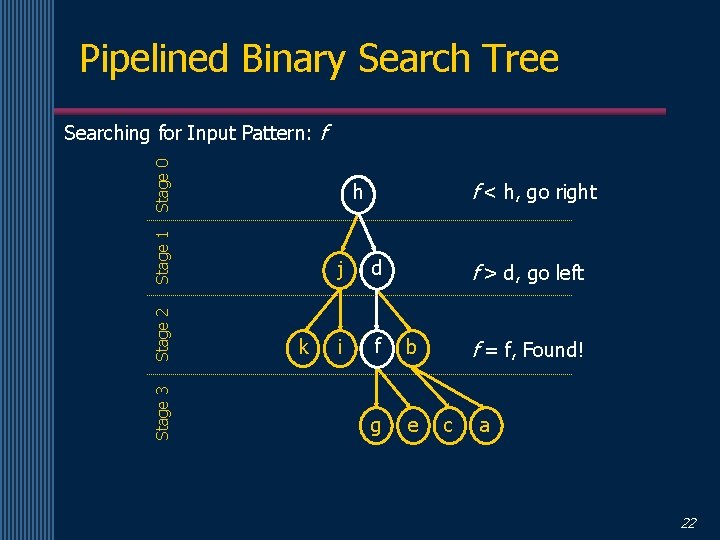

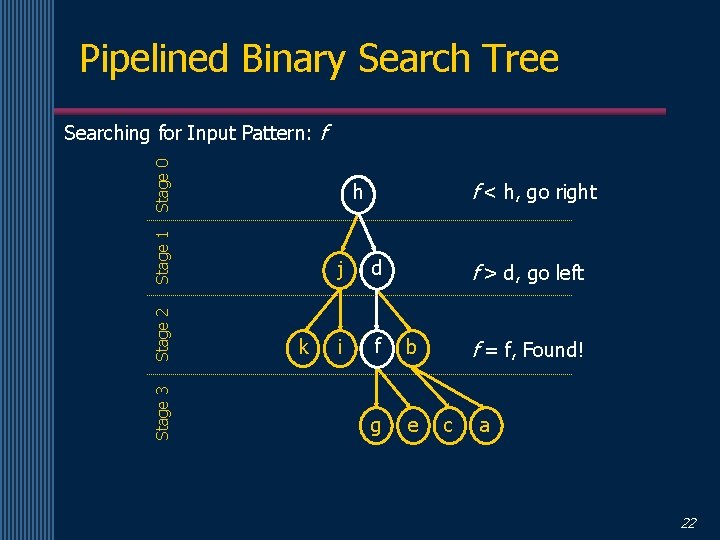

Pipelined Binary Search Tree
Searching for Input Pattern: f
• Stage 0: f < h, go right
• Stage 1: f > d, go left
• Stage 2: f = f, Found!
[Tree, by level: h; k, d; j, i, f, b; g, e, c, a]

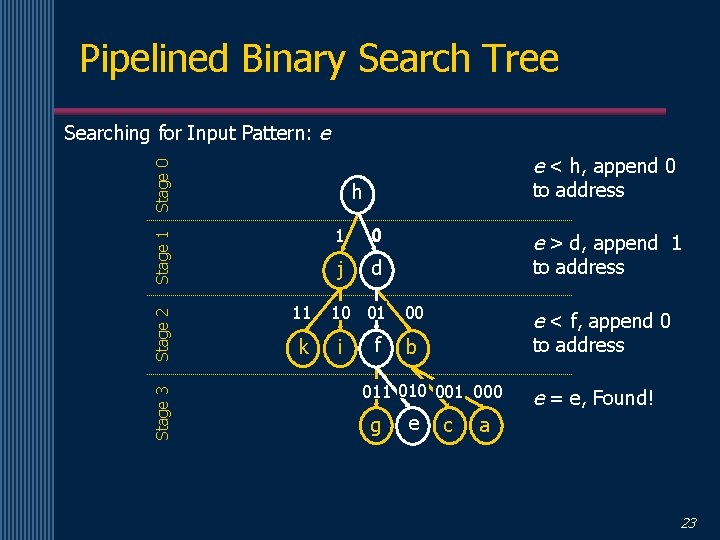

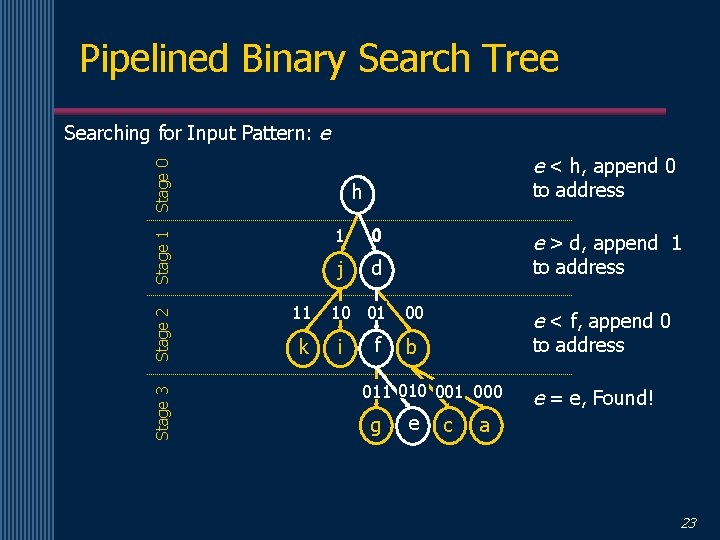

Pipelined Binary Search Tree
Searching for Input Pattern: e
• Stage 0: e < h, append 0 to address
• Stage 1: e > d, append 1 to address
• Stage 2: e < f, append 0 to address
• Stage 3: e = e, Found!
Stage contents (address: pattern)
• Stage 0: h
• Stage 1: 1: k, 0: d
• Stage 2: 11: j, 10: i, 01: f, 00: b
• Stage 3: 011: g, 010: e, 001: c, 000: a
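The address-appending search can be sketched in software as follows. This is an illustrative model, not the authors' implementation: it assumes a complete tree built from a sorted list of 2^k - 1 patterns, so the keys on the greater-than side of the root (i through o here) differ from the slide's drawing, while the path for e (h, d, f, e, ending at address 010) is the same. Both function names are hypothetical.

```python
def build_stage_memories(sorted_patterns):
    """Split a sorted list of 2**k - 1 patterns into one memory per tree level."""
    n = len(sorted_patterns)
    levels = (n + 1).bit_length() - 1
    assert 2 ** levels - 1 == n, "sketch assumes a complete tree"
    stages = []
    for s in range(levels):
        step = (n + 1) >> s         # spacing between neighbouring nodes on level s
        first = step // 2 - 1       # index of the smallest node on level s
        stages.append([sorted_patterns[first + a * step] for a in range(2 ** s)])
    return stages


def search(stages, pattern):
    """Return (stage, address) where `pattern` is stored, or None."""
    address = 0
    for s, memory in enumerate(stages):
        node = memory[address]      # one memory access per stage
        if pattern == node:
            return s, address
        bit = 1 if pattern > node else 0   # append 0 if smaller, 1 if larger
        address = (address << 1) | bit
    return None


stages = build_stage_memories(list("abcdefghijklmno"))
print(stages)
print(search(stages, "e"))          # found in stage 3
print(search(stages, "f"))          # found in stage 2
```

Because the address for stage s+1 is the stage-s address with one bit appended, each stage only needs its own small memory and never has to store child pointers.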

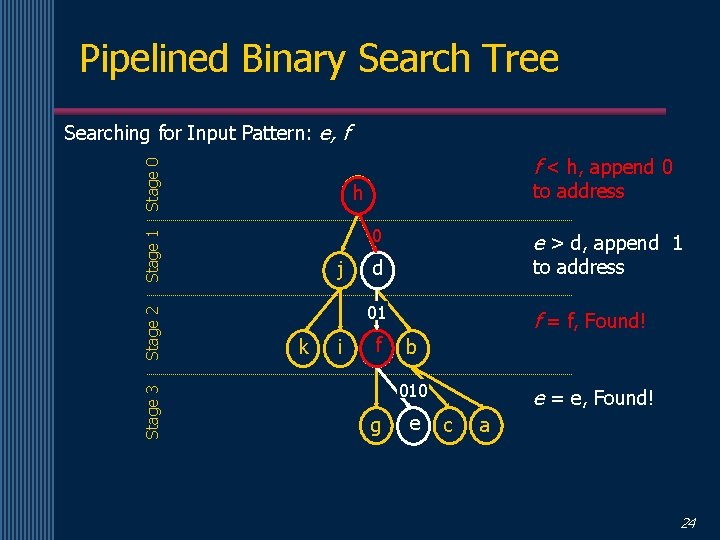

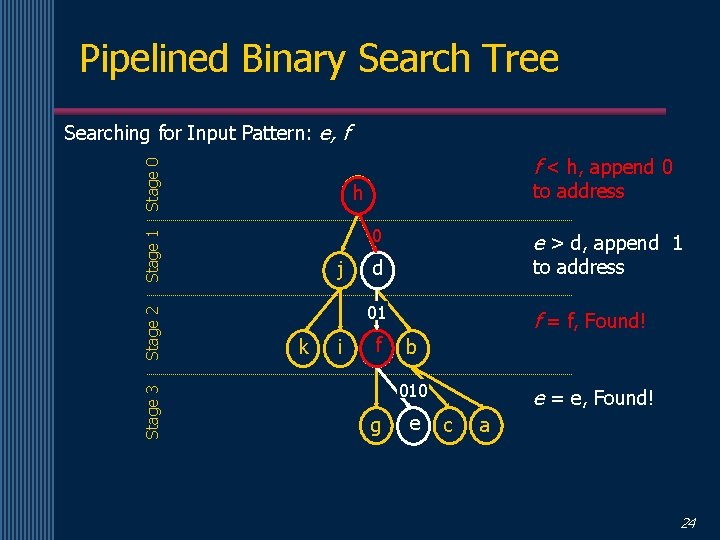

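Pipelined Binary Search Tree
Searching for Input Patterns: e, f (f enters the pipeline one cycle after e)
• Stage 0: e < h, append 0 to address; then f < h, append 0 to address
• Stage 1: e > d, append 1 to address; then f > d, append 1 to address
• Stage 2: e < f, append 0 to address; then f = f, Found!
• Stage 3: e = e, Found!
[Tree, by level: h; k, d; j, i, f, b; g, e, c, a]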

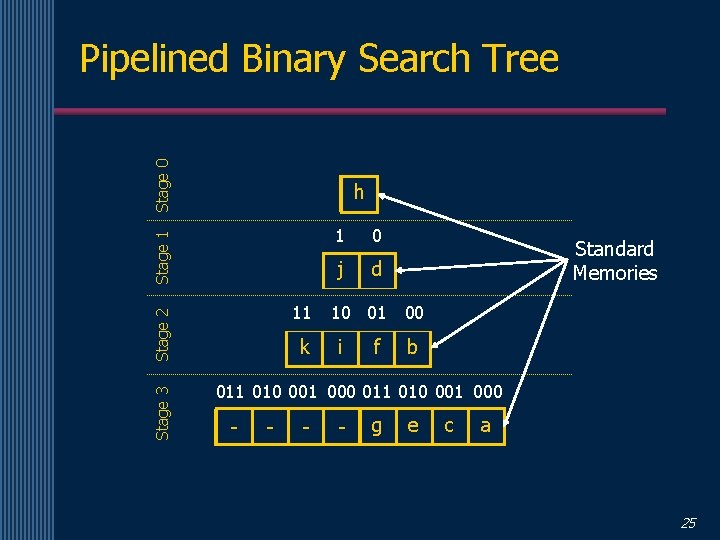

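Pipelined Binary Search Tree
Each stage is implemented with a standard memory (address: pattern)
• Stage 0: h
• Stage 1: 1: k, 0: d
• Stage 2: 11: j, 10: i, 01: f, 00: b
• Stage 3: 011: g, 010: e, 001: c, 000: a; remaining entries empty (-)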

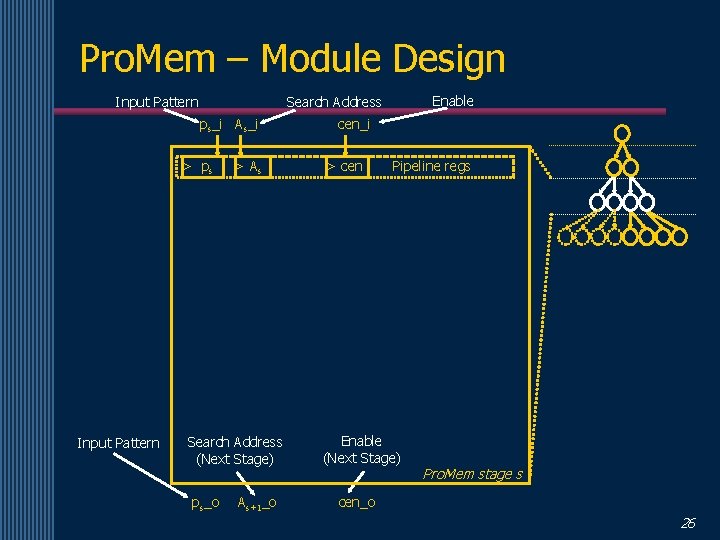

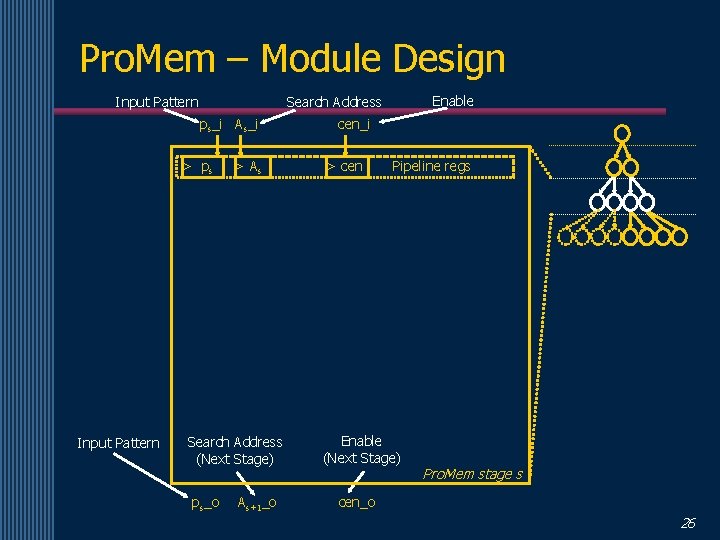

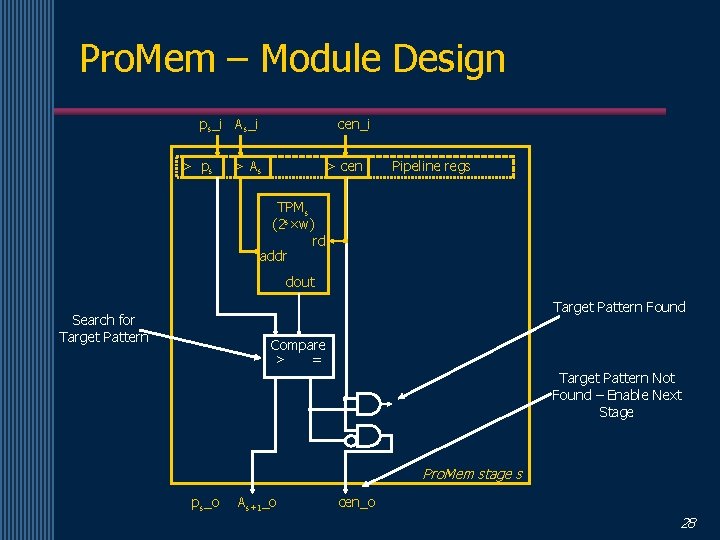

ProMem – Module Design
ProMem stage s
• Inputs: Input Pattern (ps_i), Search Address (As_i), Enable (cen_i)
• Pipeline regs: ps, As, cen
• Outputs to next stage: Input Pattern (ps_o), Search Address (As+1_o), Enable (cen_o)

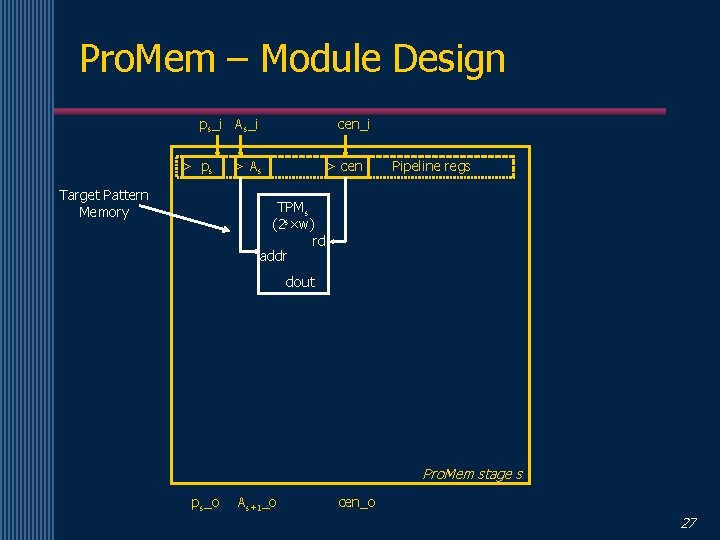

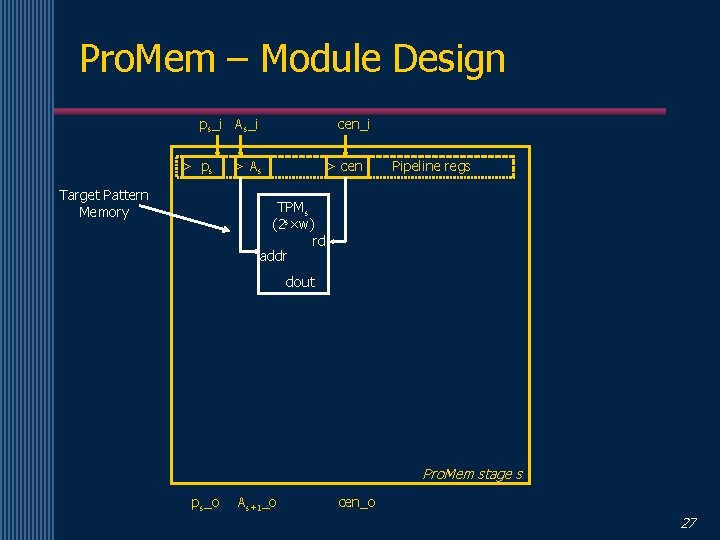

ProMem – Module Design
ProMem stage s
• Target Pattern Memory: TPMs (2^s × w), with rd, addr, and dout ports
[Diagram: inputs ps_i, As_i, cen_i; pipeline regs ps, As, cen; TPMs; outputs ps_o, As+1_o, cen_o]

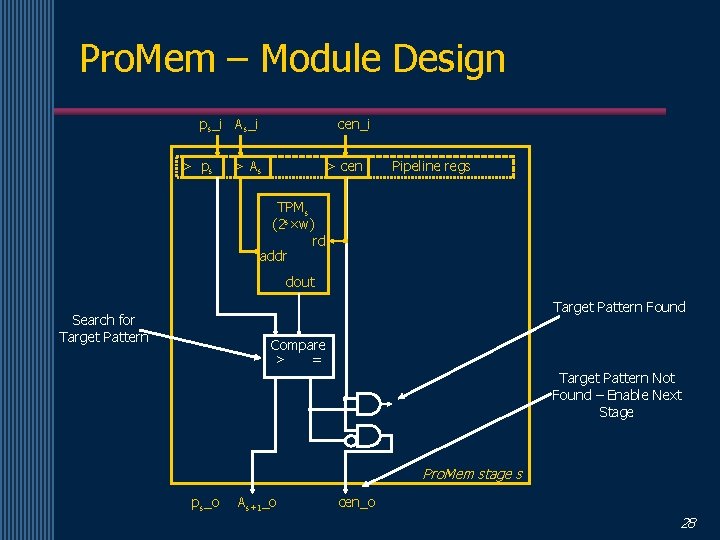

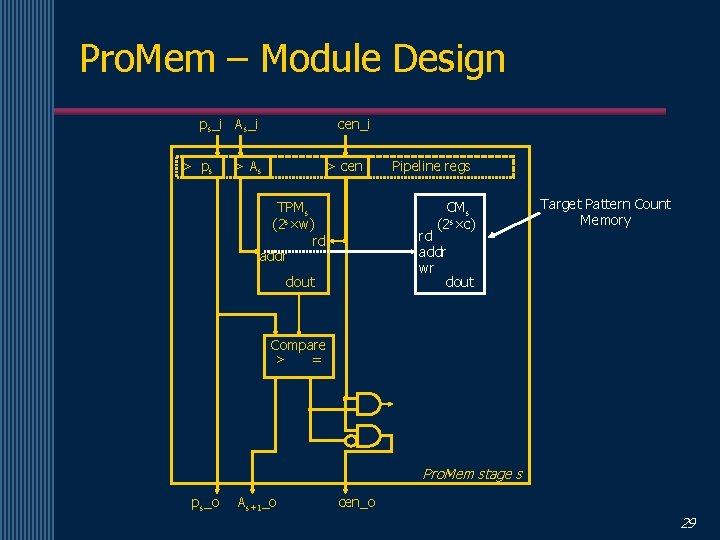

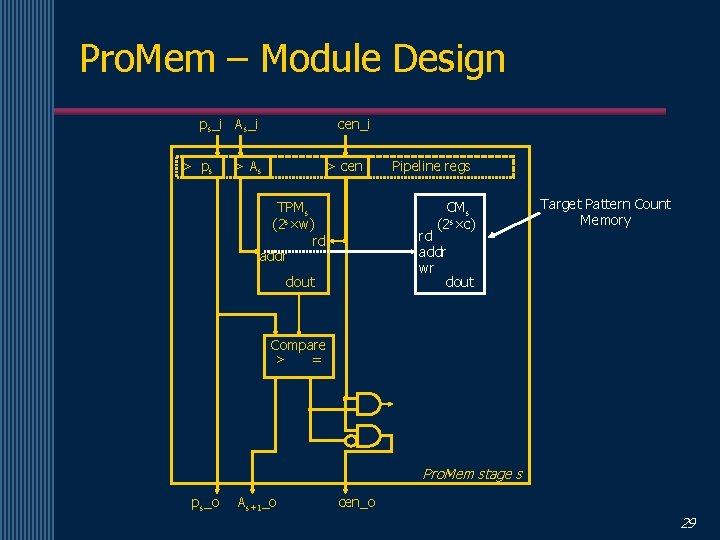

ProMem – Module Design
ProMem stage s
• Compare (>, =): search for target pattern
– Target pattern found
– Target pattern not found: enable next stage
[Diagram: inputs ps_i, As_i, cen_i; pipeline regs ps, As, cen; TPMs (2^s × w) with rd, addr, dout; Compare; outputs ps_o, As+1_o, cen_o]

ProMem – Module Design
ProMem stage s
• Target Pattern Count Memory: CMs (2^s × c), with rd, addr, wr, and dout ports
[Diagram: inputs ps_i, As_i, cen_i; pipeline regs ps, As, cen; TPMs (2^s × w) with rd, addr; Compare (>, =); CMs; outputs ps_o, As+1_o, cen_o]

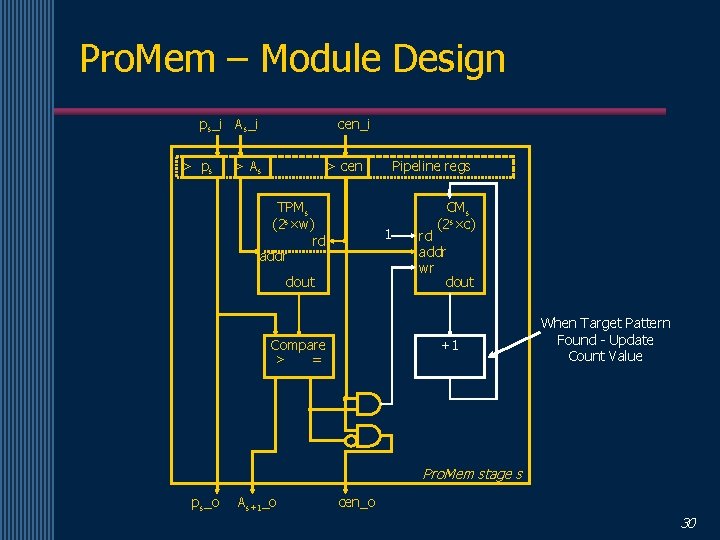

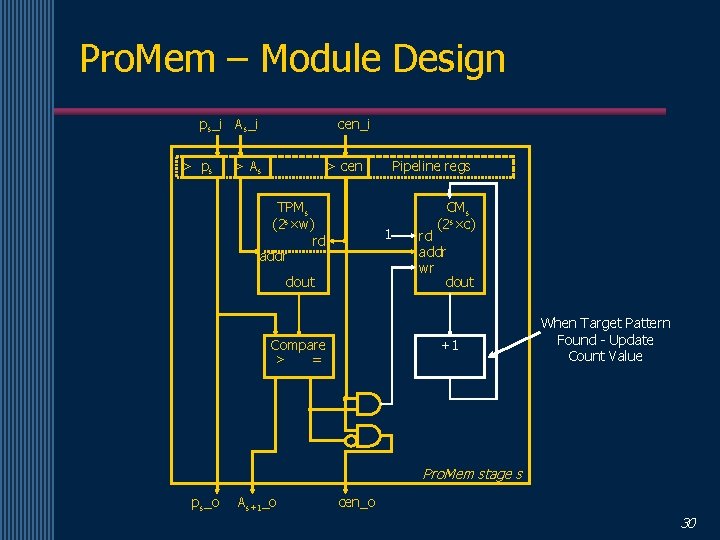

ProMem – Module Design
ProMem stage s
• When target pattern found: update count value (+1)
[Diagram: inputs ps_i, As_i, cen_i; pipeline regs ps, As, cen; TPMs (2^s × w) with rd, addr, dout; Compare (>, =); CMs (2^s × c) with rd, addr, wr, dout; +1 incrementer; outputs ps_o, As+1_o, cen_o]

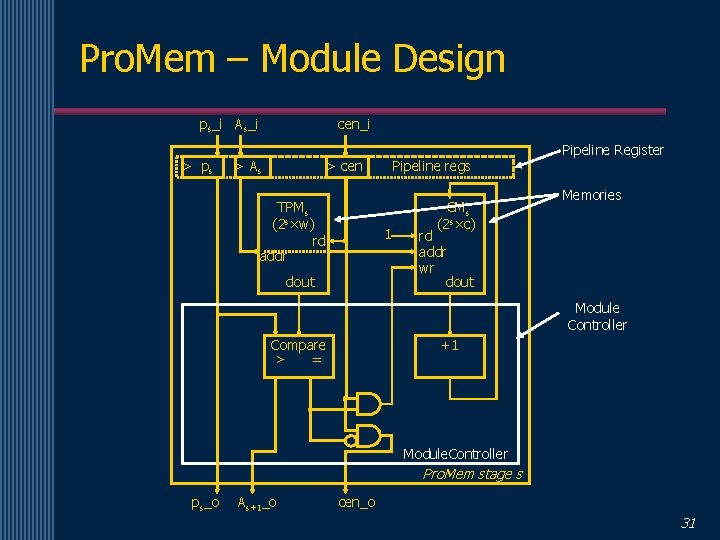

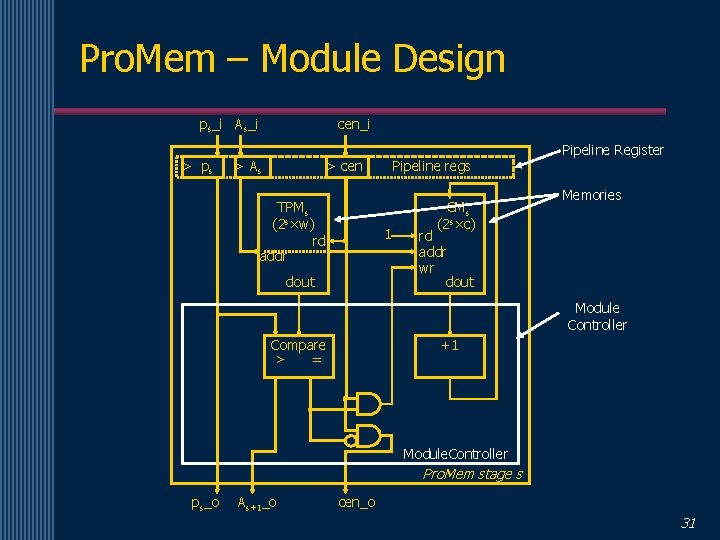

ProMem – Module Design
ProMem stage s consists of
• Pipeline registers: ps, As, cen
• Memories: TPMs (2^s × w) and CMs (2^s × c)
• Module controller: Compare (>, =), +1 incrementer, and ModuleController
[Diagram: inputs ps_i, As_i, cen_i; outputs ps_o, As+1_o, cen_o]
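The behaviour of a chain of such stages can be approximated with a cycle-level software model. This is a hedged sketch, not RTL and not the authors' design: each stage is reduced to a target pattern list (TPM) and a count list (CM), a register tuple stands in for ps, As, and cen, and the word widths w and c and the module controller's memory timing are not modelled. The function name and the example tree are assumptions.

```python
def run_promem(stages, bus_patterns):
    """Clock the pipeline; returns (count memories per stage, cycles simulated)."""
    depth = len(stages)
    counts = [[0] * len(tpm) for tpm in stages]   # CM_s: one counter per TPM_s entry
    regs = [None] * depth                          # regs[s] = (p, A, cen) held by stage s
    stream = list(bus_patterns) + [None] * (depth - 1)   # idle cycles drain the pipeline
    cycles = 0
    for incoming in stream:
        cycles += 1
        # Stage 0 latches the bus pattern with address 0 and enable set.
        regs[0] = None if incoming is None else (incoming, 0, True)
        next_regs = [None] * depth
        for s in range(depth):
            if regs[s] is None:
                continue
            p, addr, cen = regs[s]
            if cen:
                stored = stages[s][addr]
                if stored is None:                 # empty entry: pattern is not a target
                    cen = False
                elif stored == p:                  # found: increment count, disable later stages
                    counts[s][addr] += 1
                    cen = False
                else:                              # not found: append 0 (smaller) or 1 (larger)
                    addr = (addr << 1) | int(p > stored)
            if s + 1 < depth:
                next_regs[s + 1] = (p, addr, cen)
        regs = next_regs
    return counts, cycles


# Hypothetical 4-stage tree holding the patterns a..o.
tree = [["h"], ["d", "l"], ["b", "f", "j", "n"], list("acegikmo")]
counts, cycles = run_promem(tree, list("efezhe"))
print(counts, cycles)
```

Six patterns are accepted on six consecutive cycles and the last one leaves the four-stage pipeline three cycles later, so the model runs for 9 cycles in total.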

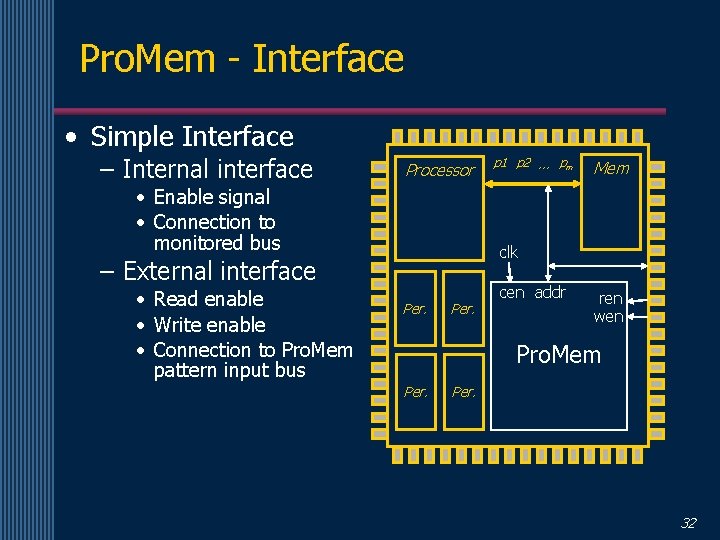

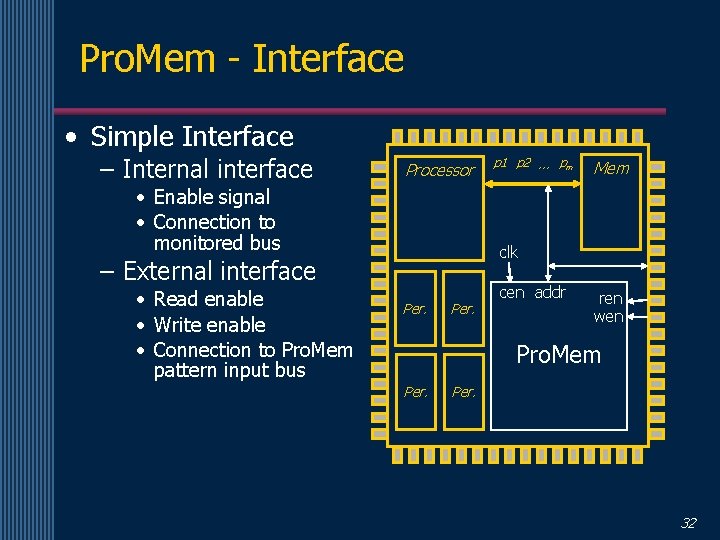

ProMem - Interface
• Simple Interface
– Internal interface
  • Enable signal
  • Connection to monitored bus
– External interface
  • Read enable
  • Write enable
  • Connection to ProMem pattern input bus
[Diagram: ProMem attached to the bus (p1, p2, …, pm) alongside Processor, Mem, and Per.; signals clk, cen, addr, ren, wen]

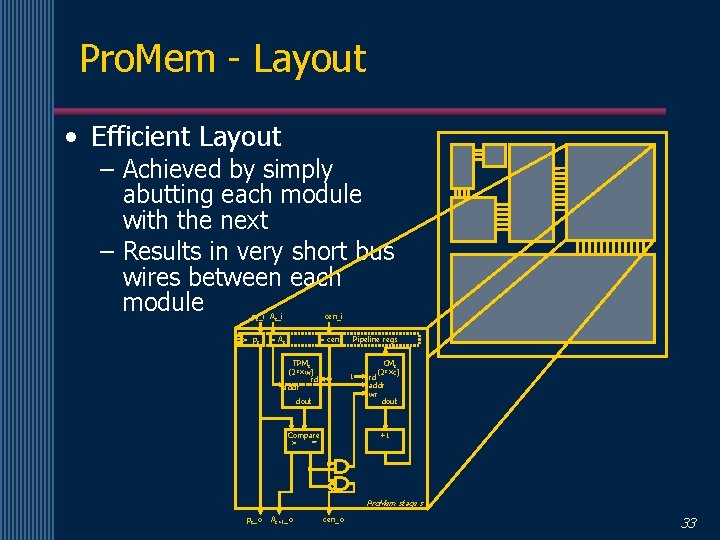

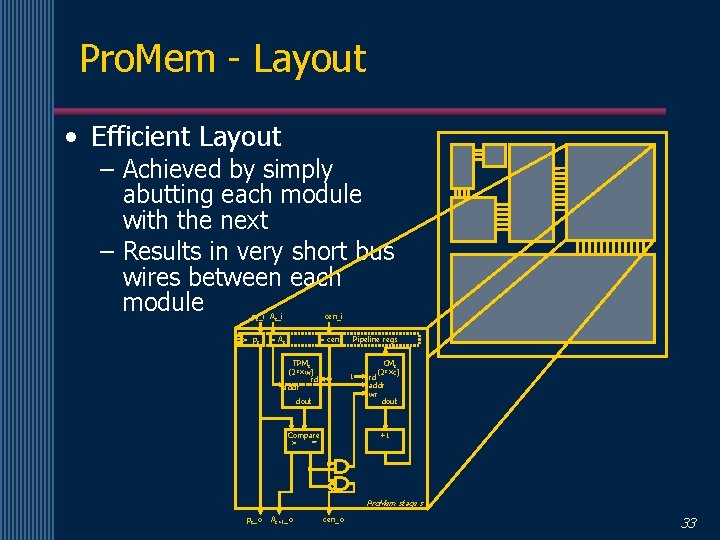

ProMem - Layout
• Efficient Layout
– Achieved by simply abutting each module with the next
– Results in very short bus wires between each module
[Diagram: ProMem stage s with inputs ps_i, As_i, cen_i; pipeline regs ps, As, cen; TPMs (2^s × w); Compare (>, =); CMs (2^s × c); +1 incrementer; outputs ps_o, As+1_o, cen_o]

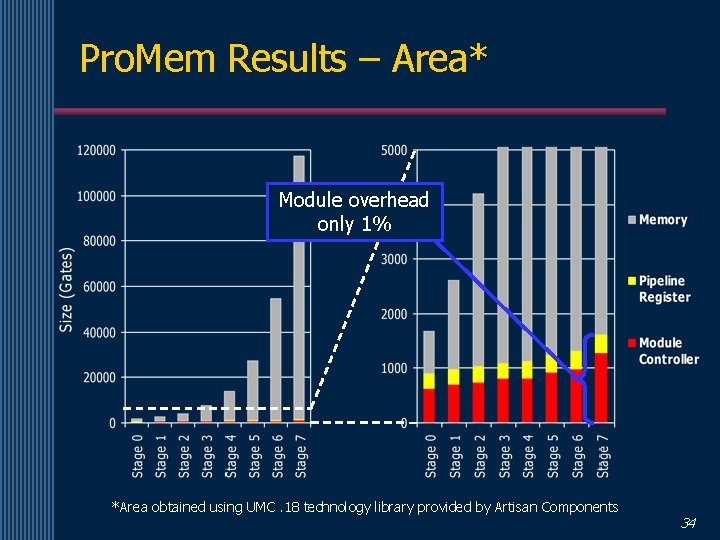

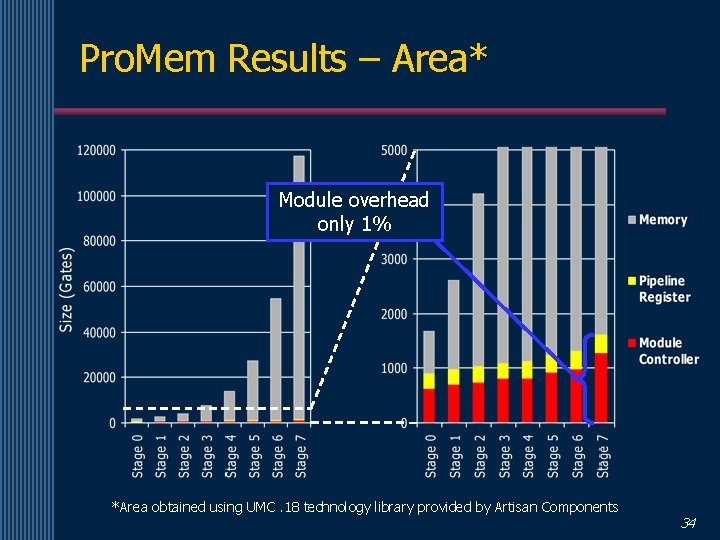

ProMem Results – Area*
• Module overhead only 1%
*Area obtained using UMC .18 technology library provided by Artisan Components

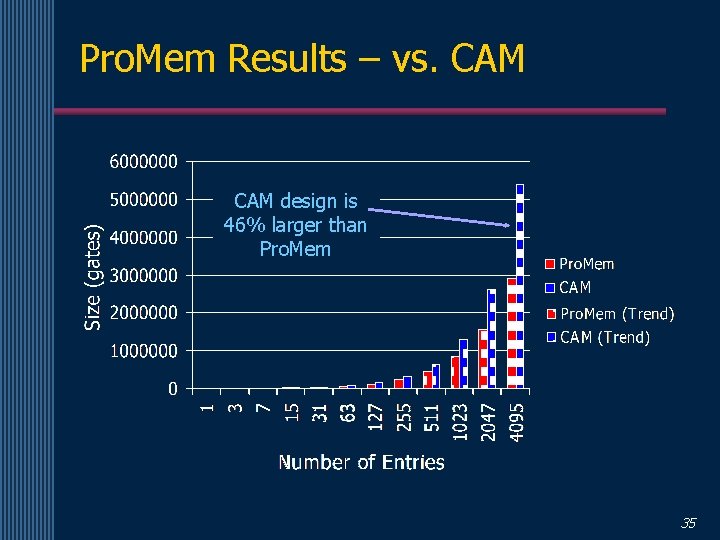

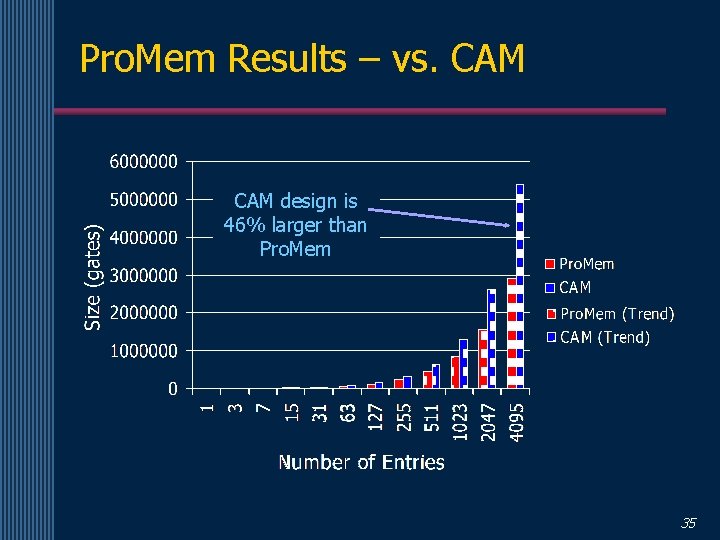

ProMem Results – vs. CAM
• CAM design is 46% larger than ProMem

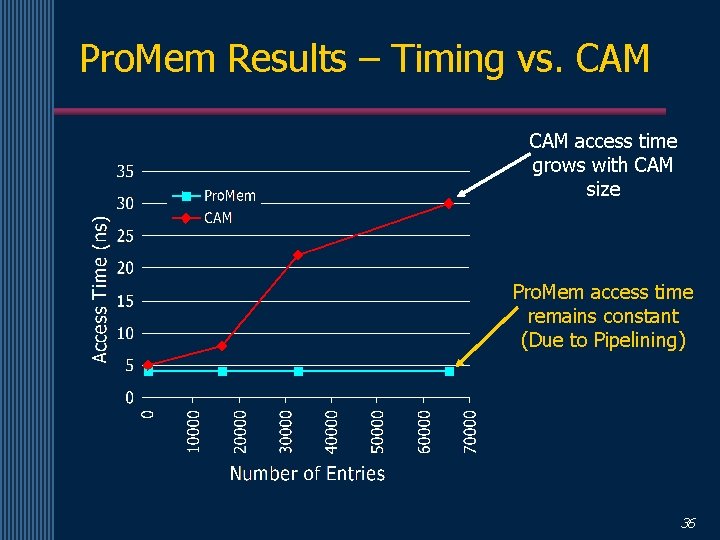

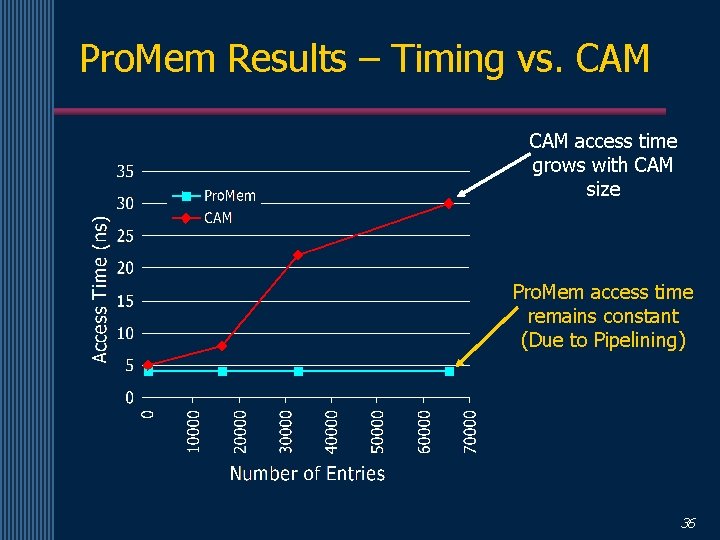

ProMem Results – Timing vs. CAM
• CAM access time grows with CAM size
• ProMem access time remains constant (due to pipelining)

Conclusions
• Introduced a new memory structure specifically for fast on-chip profiling
• One pattern per cycle throughput
• Simple interface to monitored bus
• Efficient design is very scalable