A Design Space Exploration of Grid Processor Architectures

- Slides: 17

A Design Space Exploration of Grid Processor Architectures
R. Nagarajan, K. Sankaralingam, D. Burger, and S. W. Keckler
The University of Texas at Austin
MICRO, 2001
Presented by Jie Xiao, April 2, 2008

Outline
- Motivation
- Block-Atomic Execution Model
- GPA Implementation
- Evaluation
- Conclusion

Motivation
- Performance improvement has come from:
  - Higher clock rates
  - ILP, where the improvement has been small
- Existing problems:
  - Pipeline depth limits further clock-rate increases
  - Wire delay reduces IPC
- Goal:
  - Clock rate ↑
  - ILP ↑
  - Wire delay ↓

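Block-Atomic Execution Model
- Unit of work: an atomic unit, also called a group or hyperblock
  - A block of instructions is the atomic unit of fetch, scheduling, execution, and commit
  - Groupings are formed by the compiler
- Three types of data in each group:
  - Group inputs: read from the register file and sent to the ALUs
  - Group temporaries: delivered point-to-point from producer to consumer, which reduces register-file bandwidth; execution is data-driven, as in a dataflow machine
  - Group outputs: values still live after the block, written back to the register file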
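To make the three kinds of group data concrete, here is a toy Python model of one block executing under these rules. It is an illustrative sketch, not the GPA instruction set or hardware: the names `Instr`, `run_block`, `targets`, and `out_reg` are hypothetical. Group inputs are the only register reads, temporaries travel directly to a named consumer operand slot, an instruction fires once all of its operands have arrived, and group outputs are buffered until the whole block commits.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Instr:
    """One instruction in a block (hypothetical toy encoding)."""
    name: str
    op: Callable[..., int]
    arity: int
    # Point-to-point targets: (consumer instruction name, operand slot).
    targets: List[Tuple[str, int]] = field(default_factory=list)
    # If set, the result is a group output written to this register at commit.
    out_reg: Optional[str] = None


def run_block(instrs: List[Instr],
              inputs: Dict[Tuple[str, int], str],
              regs: Dict[str, int]) -> Dict[str, int]:
    """Execute one block data-driven and return the register file after commit."""
    by_name = {i.name: i for i in instrs}
    operands: Dict[str, Dict[int, int]] = {i.name: {} for i in instrs}
    ready: List[str] = []

    def deliver(consumer: str, slot: int, value: int) -> None:
        operands[consumer][slot] = value
        if len(operands[consumer]) == by_name[consumer].arity:
            ready.append(consumer)  # all operands present: the instruction may fire

    # Group inputs: the only register-file reads in the block.
    for (consumer, slot), reg in inputs.items():
        deliver(consumer, slot, regs[reg])

    pending: Dict[str, int] = {}
    while ready:
        inst = by_name[ready.pop()]
        result = inst.op(*(operands[inst.name][k] for k in range(inst.arity)))
        # Group temporaries go straight to their consumers, not to the register file.
        for consumer, slot in inst.targets:
            deliver(consumer, slot, result)
        if inst.out_reg is not None:
            pending[inst.out_reg] = result

    # Commit: group outputs become visible together, only at the end of the block.
    committed = dict(regs)
    committed.update(pending)
    return committed


# r3 = (r1 + r2) * (r1 - r2), as a three-instruction block.
block = [
    Instr("add", lambda a, b: a + b, 2, targets=[("mul", 0)]),
    Instr("sub", lambda a, b: a - b, 2, targets=[("mul", 1)]),
    Instr("mul", lambda a, b: a * b, 2, out_reg="r3"),
]
inputs = {("add", 0): "r1", ("add", 1): "r2", ("sub", 0): "r1", ("sub", 1): "r2"}
final = run_block(block, inputs, {"r1": 7, "r2": 3})
print(final)  # {'r1': 7, 'r2': 3, 'r3': 40}
```

Only `r3` is written back; the intermediate sum and difference never touch the register file, which is where the bandwidth saving comes from.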

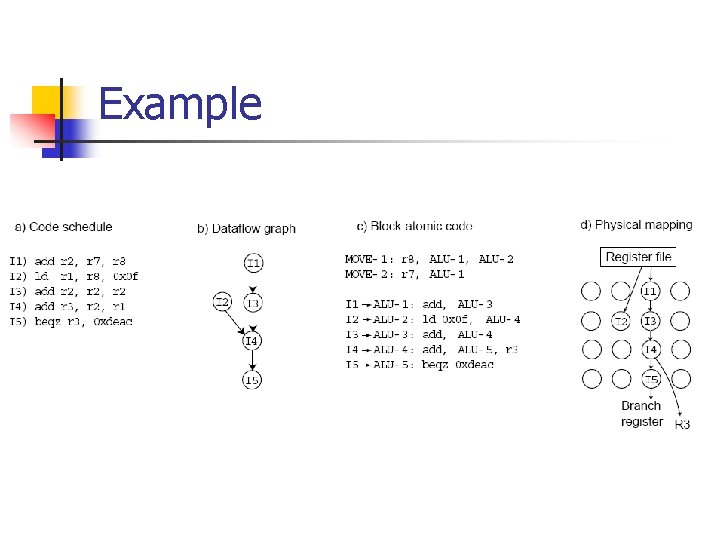

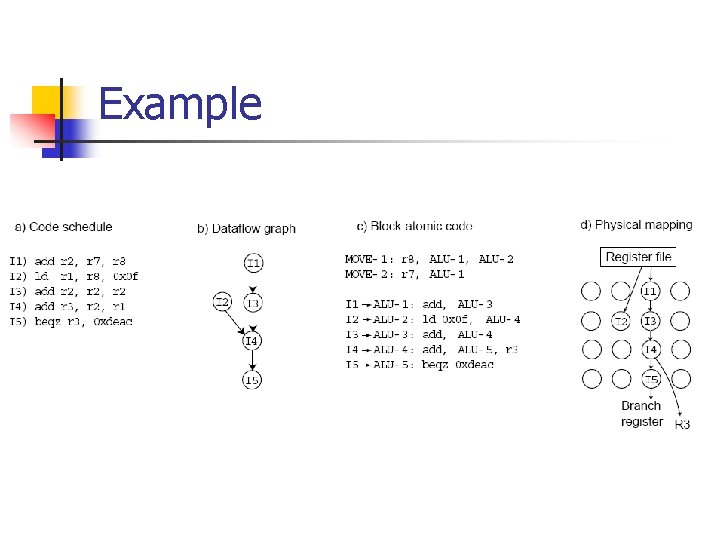

Example

Block-Atomic Execution Model
- Advantages:
  - No centralized, associative issue window
  - No register renaming table
  - Fewer register file reads and writes
  - No result broadcasting
  - Less wire and communication delay than a conventional design
  - Compiler-controlled physical layout lets the critical path be scheduled along the shortest physical path
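The last advantage depends on the compiler placing dependent instructions near each other. A minimal sketch of one such heuristic, assuming one instruction per node and hop count measured as Manhattan distance on the mesh: each instruction on a dependence chain goes to the free node nearest its producer. `place_chain` and `chain_hops` are hypothetical names, not the paper's scheduler.

```python
from typing import Dict, List, Tuple

Node = Tuple[int, int]  # (row, column) on the grid


def manhattan(a: Node, b: Node) -> int:
    """Hops between two nodes on a 2-D mesh."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def place_chain(chain: List[str], start: Node, rows: int, cols: int) -> Dict[str, Node]:
    """Greedily place each instruction on the free node nearest its producer."""
    free = {(r, c) for r in range(rows) for c in range(cols)}
    placement: Dict[str, Node] = {}
    prev = start
    for inst in chain:
        if placement:
            # Nearest free node to the producer; ties broken by coordinates.
            node = min(free, key=lambda n: (manhattan(n, prev), n))
        else:
            node = start
        free.remove(node)
        placement[inst] = node
        prev = node
    return placement


def chain_hops(chain: List[str], placement: Dict[str, Node]) -> int:
    """Total producer-to-consumer hops along the chain."""
    return sum(manhattan(placement[a], placement[b]) for a, b in zip(chain, chain[1:]))


chain = ["i0", "i1", "i2", "i3"]
greedy = place_chain(chain, start=(0, 0), rows=4, cols=4)
scattered = {"i0": (0, 0), "i1": (3, 3), "i2": (0, 3), "i3": (3, 0)}
print(chain_hops(chain, greedy), chain_hops(chain, scattered))  # 3 15
```

The greedy layout keeps every dependence one hop long, while the scattered layout pays fifteen hops for the same chain.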

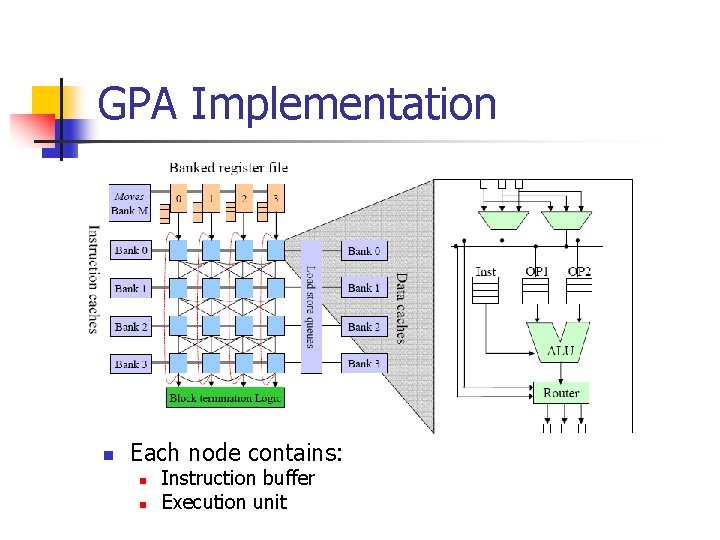

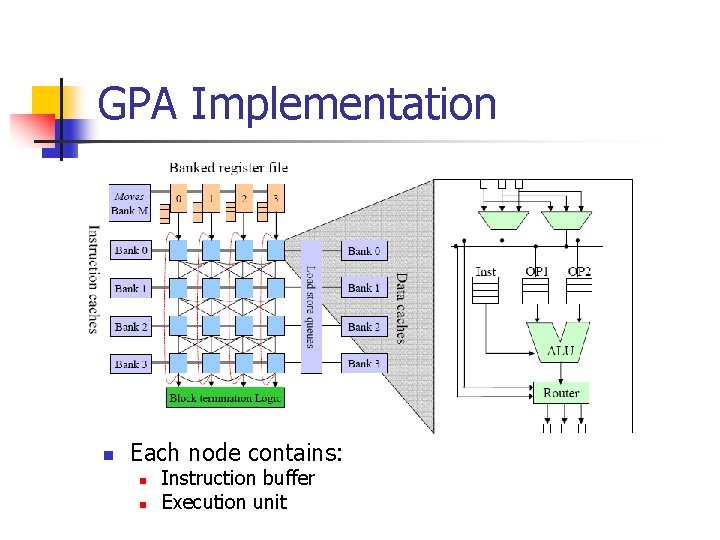

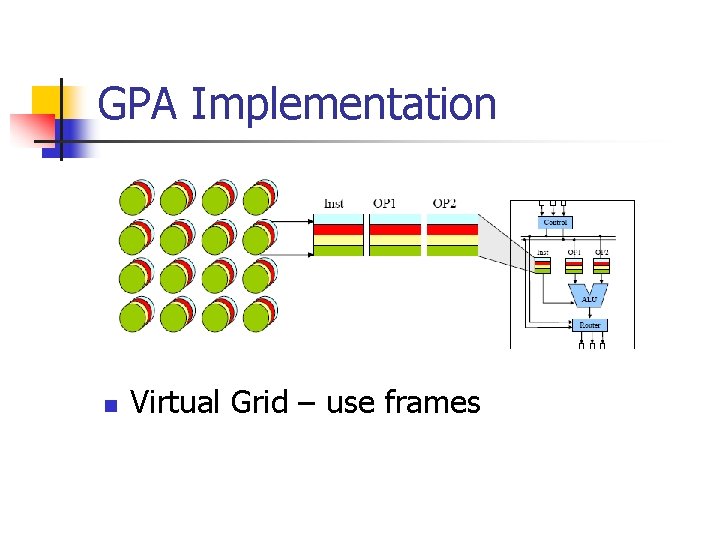

GPA Implementation
- Each node contains:
  - An instruction buffer
  - An execution unit

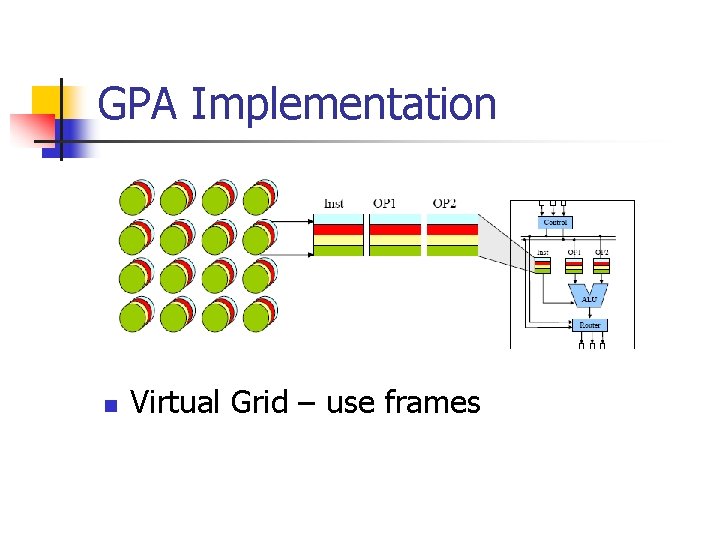

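GPA Implementation
- Virtual grid: the instruction buffers at the nodes are divided into frames, so the grid can hold more instructions than it has nodes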
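One way to picture the virtual grid, assuming for illustration that frame k consists of instruction slot k at every node: with the evaluated 8 x 8 grid and 32 slots per node, the machine holds 8 × 8 × 32 = 2048 instructions, and a block occupies as many consecutive frames as it needs. `frames_needed` and `virtual_position` are hypothetical helpers, and `index` stands for whatever virtual slot the compiler assigned to an instruction.

```python
from typing import Tuple

ROWS, COLS, SLOTS_PER_NODE = 8, 8, 32   # evaluated GPA configuration
SLOTS_PER_FRAME = ROWS * COLS           # assumption: one slot per node per frame
TOTAL_SLOTS = SLOTS_PER_FRAME * SLOTS_PER_NODE


def frames_needed(block_size: int) -> int:
    """Number of frames a block of `block_size` instructions occupies."""
    return -(-block_size // SLOTS_PER_FRAME)  # ceiling division


def virtual_position(index: int, base_frame: int) -> Tuple[int, int, int]:
    """Map a block-relative virtual slot to (frame, row, column)."""
    frame = base_frame + index // SLOTS_PER_FRAME
    if frame >= SLOTS_PER_NODE:
        raise ValueError("block does not fit in the remaining frames")
    offset = index % SLOTS_PER_FRAME
    return frame, offset // COLS, offset % COLS


print(TOTAL_SLOTS)               # 2048
print(frames_needed(100))        # 2
print(virtual_position(65, 4))   # (5, 0, 1)
```

Under this assumption the grid's 2048 instruction slots compare with the 512-entry window of the superscalar baseline.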

GPA Implementation
- Block stitching:
  - Overlap fetch, mapping, and execution of successive blocks
  - Done speculatively, using a block-level predictor
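Block stitching can be sketched as a queue of in-flight blocks that share the frames: while older blocks execute, the next-block predictor keeps mapping predicted successors into free frames, and when a block resolves to a different successor, every younger speculative block is squashed. This is a simplified model under stated assumptions (block sizes given in whole frames, each at least one; commit happens when a block resolves its successor; a squash-all-younger recovery policy). `Stitcher` and its methods are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Tuple


class Stitcher:
    """Toy model of speculative block stitching over a fixed pool of frames."""

    def __init__(self, total_frames: int, sizes: Dict[str, int],
                 predict: Callable[[str], str], first: str) -> None:
        self.total_frames = total_frames
        self.sizes = sizes        # frames each block needs (assumed >= 1)
        self.predict = predict    # block-level next-block predictor
        # In-flight blocks, oldest first: (block name, frames held).
        self.in_flight: Deque[Tuple[str, int]] = deque([(first, sizes[first])])

    def free_frames(self) -> int:
        return self.total_frames - sum(f for _, f in self.in_flight)

    def blocks(self) -> List[str]:
        return [b for b, _ in self.in_flight]

    def fetch_ahead(self) -> None:
        """Map predicted successors into free frames, overlapping with execution."""
        while self.in_flight:
            nxt = self.predict(self.in_flight[-1][0])
            if self.sizes[nxt] > self.free_frames():
                break
            self.in_flight.append((nxt, self.sizes[nxt]))

    def resolve(self, actual_next: str) -> List[str]:
        """Commit the oldest block; squash younger blocks if its successor was mispredicted."""
        self.in_flight.popleft()
        squashed: List[str] = []
        if self.in_flight and self.in_flight[0][0] != actual_next:
            squashed = self.blocks()
            self.in_flight.clear()
        if not self.in_flight:
            self.in_flight.append((actual_next, self.sizes[actual_next]))
        return squashed


s = Stitcher(total_frames=4, sizes={"A": 1, "B": 2, "C": 1},
             predict=lambda b: {"A": "B", "B": "A", "C": "A"}[b], first="A")
s.fetch_ahead()
mapped = s.blocks()
squashed = s.resolve("C")   # A's real successor was C, not the predicted B
s.fetch_ahead()
after = s.blocks()
print(mapped, squashed, after)  # ['A', 'B', 'A'] ['B', 'A'] ['C', 'A', 'B']
```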

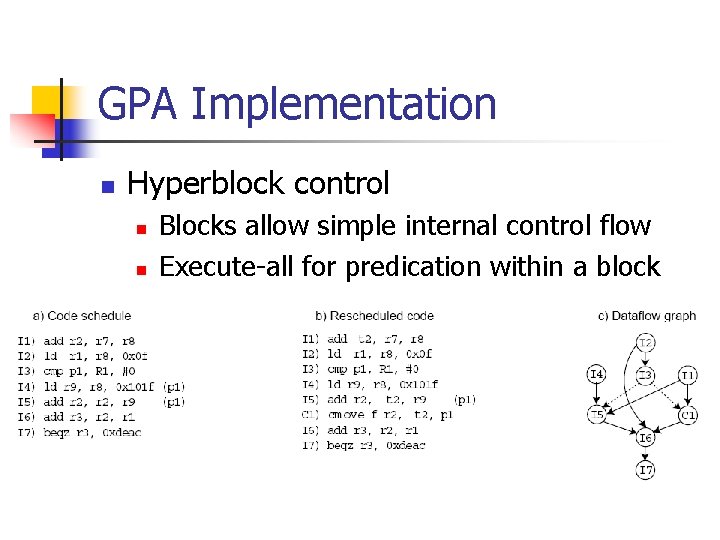

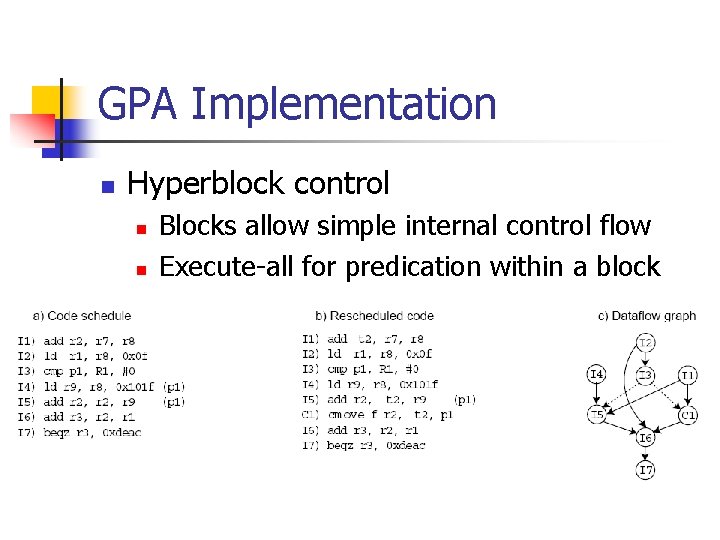

GPA Implementation
- Hyperblock control:
  - Blocks allow simple internal control flow
  - Execute-all is used for predication within a block
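A small timing model shows the trade-off behind execute-all. Assume a two-armed hyperblock whose operands arrive at cycle `t_operands` and whose predicate arrives at cycle `t_pred`, and model the merge point as a select that needs the predicate (an assumption for illustration, not necessarily how the GPA merges results). Under execute-all both arms run without waiting for the predicate; the alternative holds the arm until the predicate is known and runs only one side. Both function names are hypothetical.

```python
from typing import Tuple


def execute_all(t_operands: int, t_pred: int, op_lat: int,
                sel_lat: int = 1) -> Tuple[int, int]:
    """Both arms fire on operand arrival; only the select waits for the predicate.

    Returns (finish cycle, instructions executed).
    """
    arms_done = t_operands + op_lat
    finish = max(arms_done, t_pred) + sel_lat
    return finish, 3  # then-arm, else-arm, select


def wait_for_predicate(t_operands: int, t_pred: int, op_lat: int) -> Tuple[int, int]:
    """Only the taken arm runs, and it cannot start before the predicate arrives."""
    finish = max(t_operands, t_pred) + op_lat
    return finish, 1


# Late predicate: execute-all hides the arm's latency behind the predicate.
print(execute_all(0, 5, op_lat=3), wait_for_predicate(0, 5, op_lat=3))  # (6, 3) (8, 1)
# Early predicate: execute-all only adds work and the select's latency.
print(execute_all(0, 0, op_lat=3), wait_for_predicate(0, 0, op_lat=3))  # (4, 3) (3, 1)
```

The instruction counts stand in for the power cost: execute-all spends extra work on the untaken arm in exchange for taking the predicate off the critical path.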

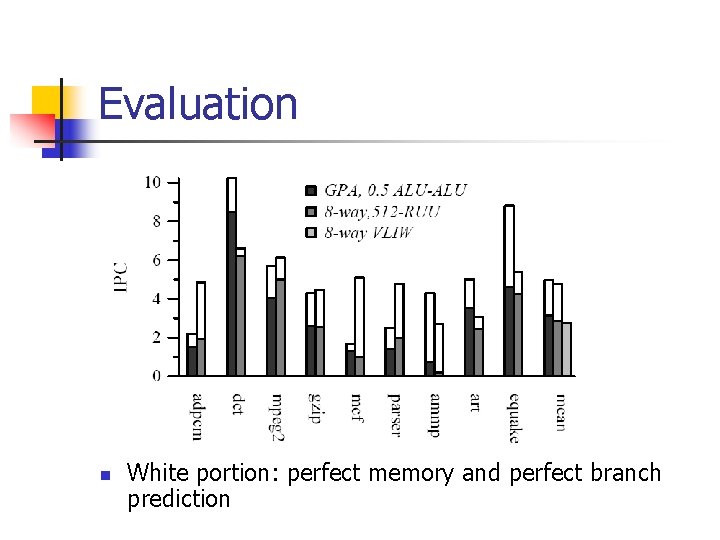

Evaluation
- Benchmarks: 3 SPECint 2000, 3 SPECfp 2000, and 3 Mediabench programs
- Compiled using the Trimaran toolset
- Simulated with the Trimaran simulator
- Load instructions are placed close to the D-caches

Evaluation
- Superscalar:
  - 5-stage pipeline, 8-wide
  - 0-cycle router and wire delay!
  - 512-entry instruction window
- GPA:
  - 8 x 8 grid
  - 0.25-cycle router delay + 0.25-cycle wire delay per hop
  - 32 instruction slots at every node
- Both:
  - Alpha 21264 functional unit latencies
  - L1: 3 cycles, L2: 13 cycles, main memory: 62 cycles

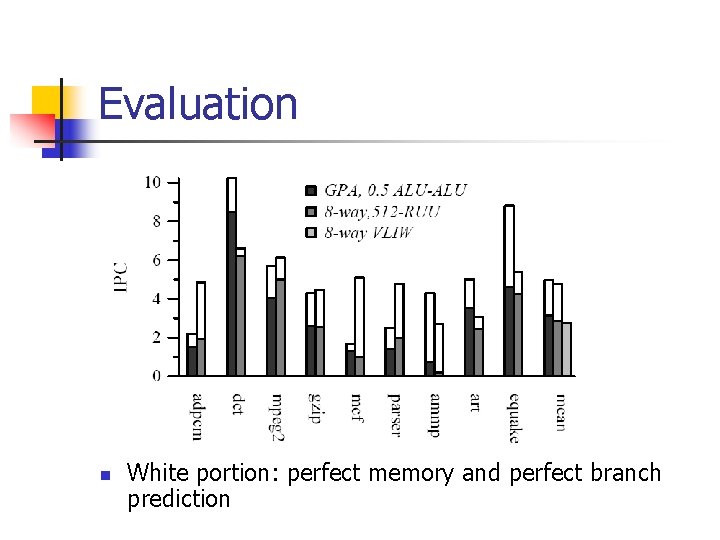

Evaluation
- White portion of the bars: perfect memory and perfect branch prediction

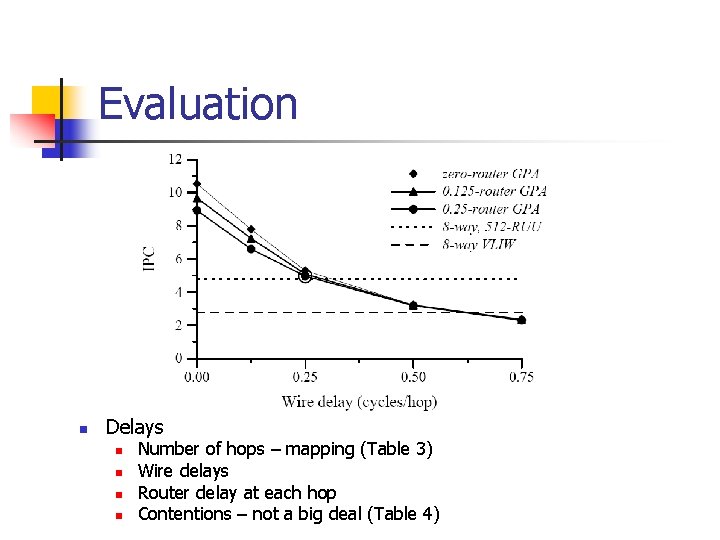

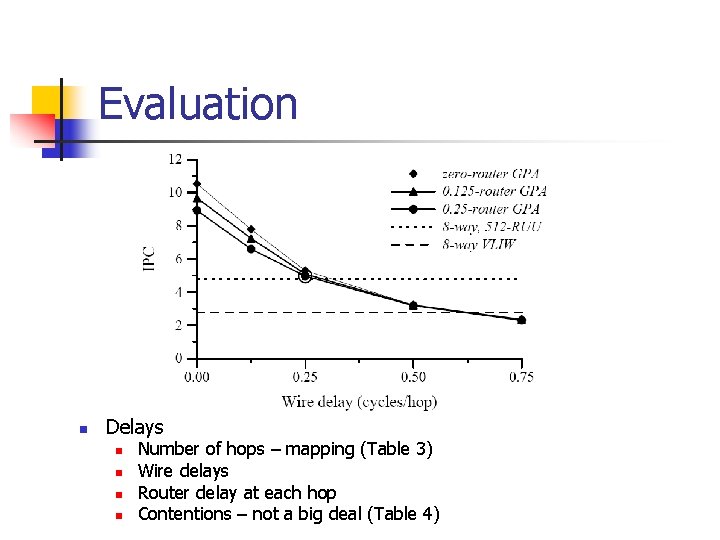

Evaluation
- Sources of delay:
  - Number of hops, which depends on the instruction mapping (Table 3)
  - Wire delay
  - Router delay at each hop
  - Contention, which has only a small effect (Table 4)
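The per-hop costs from the evaluation configuration combine into a simple operand-delivery estimate. This sketch assumes hops are counted as Manhattan distance on the mesh (as with dimension-order routing) and treats contention as an extra, separately supplied delay; `operand_latency` is a hypothetical helper.

```python
from typing import Tuple

ROUTER_DELAY = 0.25  # cycles per hop (evaluated GPA configuration)
WIRE_DELAY = 0.25    # cycles per hop (evaluated GPA configuration)


def operand_latency(src: Tuple[int, int], dst: Tuple[int, int],
                    contention: float = 0.0) -> float:
    """Cycles to deliver an operand from producer node `src` to consumer node `dst`."""
    hops = abs(src[0] - dst[0]) + abs(src[1] - dst[1])
    return hops * (ROUTER_DELAY + WIRE_DELAY) + contention


print(operand_latency((0, 0), (0, 1)))  # 0.5: adjacent nodes
print(operand_latency((0, 0), (7, 7)))  # 7.0: opposite corners of the 8 x 8 grid
```

This is why the mapping matters: a consumer next to its producer costs half a cycle per operand, while a corner-to-corner transfer costs seven.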

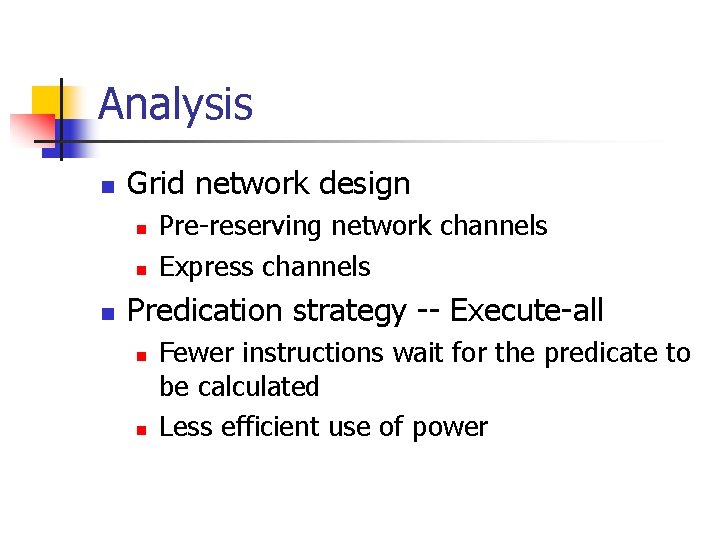

Analysis
- Grid network design:
  - Pre-reserving network channels
  - Express channels
- Predication strategy (execute-all):
  - Fewer instructions wait for the predicate to be computed
  - Less efficient use of power
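Express channels target the per-hop router delay: a long link that skips several nodes lets an operand cross the grid with fewer router traversals. A minimal sketch, assuming (hypothetically) that every node has an express link jumping `span` nodes along each dimension and that routes never overshoot the destination, so a route takes as many express hops as fit and finishes on local links. `hops_1d` and `mesh_hops` are hypothetical helpers.

```python
from typing import Optional, Tuple


def hops_1d(distance: int, span: Optional[int] = None) -> int:
    """Router traversals along one dimension, using express links of length `span`."""
    if span is None or span <= 1:
        return distance
    return distance // span + distance % span


def mesh_hops(src: Tuple[int, int], dst: Tuple[int, int],
              span: Optional[int] = None) -> int:
    """Hops for a dimension-order route on a 2-D mesh, with optional express links."""
    return (hops_1d(abs(src[0] - dst[0]), span)
            + hops_1d(abs(src[1] - dst[1]), span))


print(mesh_hops((0, 0), (7, 7)))          # 14 with local links only
print(mesh_hops((0, 0), (7, 7), span=4))  # 8 with express links spanning 4 nodes
```

Express links cut router traversals, but wire delay still grows with the physical distance an operand covers.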

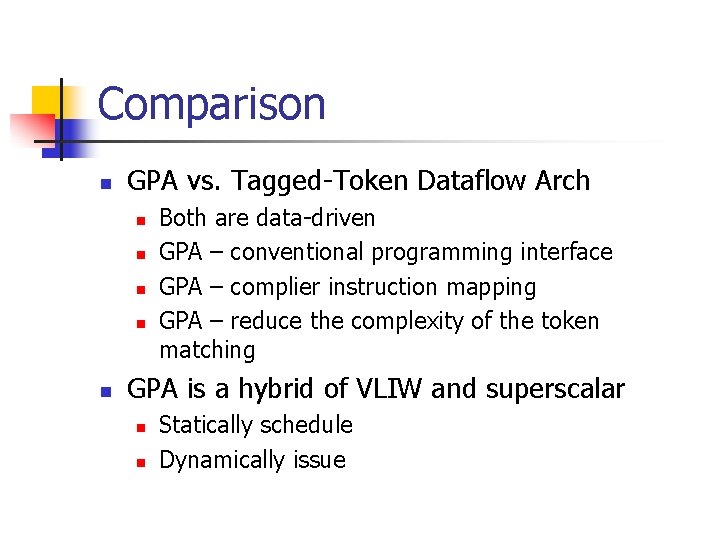

Comparison
- GPA vs. Tagged-Token Dataflow Architecture:
  - Both are data-driven
  - GPA keeps a conventional programming interface
  - GPA relies on compiler instruction mapping
  - GPA reduces the complexity of token matching
- GPA is a hybrid of VLIW and superscalar:
  - Statically scheduled, like VLIW
  - Dynamically issued, like superscalar

Conclusion
- Strengths:
  - No centralized, associative issue window
  - No register renaming table
  - Fewer register file reads and writes
  - No result broadcasting
  - Less wire and communication delay than a conventional design
  - Compiler-controlled physical layout lets the critical path be scheduled along the shortest physical path
- Challenges:
  - Wire delay and router delay
  - Complex frame management and block stitching