A Deep Learning Model for Longterm Declarative Episodic Memory Storage

A Deep Learning Model for Longterm Declarative Episodic Memory Storage Abu Kamruzzaman, Yousef Alhwaiti, Ajinkya Parkar, Karan Thakkar and Charles C. Tappert

Have you ever wondered about...
● How you remember the times, places, associated emotions, and other contextual knowledge of:
○ Your first day at work/college/school.
○ The day when you experienced something new (your best/worst day in life?).
○ The day when you achieved something.
● You can recall almost everything (when, where, how, who, why).

Introduction
• LONG-TERM DECLARATIVE EPISODIC MEMORY:
§ The Atkinson-Shiffrin model is the most popular model for studying memory.
§ It consists of a sequence of three stages: from sensory memory to short-term memory to long-term memory.
§ We are concerned with long-term memory.
§ There is currently a debate as to whether we ever forget anything, or whether it merely becomes difficult to retrieve certain items from memory.
§ Episodic memory represents our memory of experiences and specific events in time in serial form, from which we can reconstruct the actual events that took place at any given point in our lives.

• COMPUTER SIMULATION BRAIN MODELS:
§ The human brain learns through observation and experimentation.
§ Computer systems can be built to mimic the way the human brain learns. However, it is difficult to implement a system that can learn across multiple disciplines, whereas the human brain adapts easily across them.
§ Researchers have invested considerable effort in building systems that work like the human brain and have made substantial progress in enabling computers to learn from multiple disciplines.

Literature review

Models Reviewed
• Blue Brain Model
• The Human Brain Project (HBP)
• McCulloch-Pitts Model
• Sparse-Sysid Model
• Data Brain Model
• Pattern Recognition Theory of Mind (PRTM)
• Hierarchical Temporal Memory (HTM)

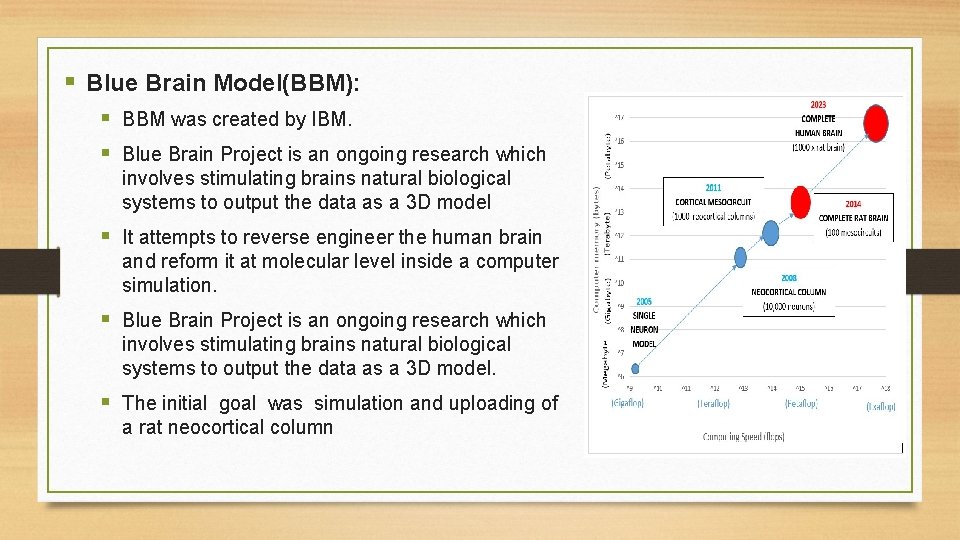

§ Blue Brain Model (BBM):
§ The Blue Brain Project was started at EPFL in collaboration with IBM, whose Blue Gene supercomputer ran its simulations.
§ The Blue Brain Project is ongoing research that simulates the brain's natural biological systems and outputs the data as a 3D model.
§ It attempts to reverse-engineer the human brain and recreate it at the molecular level inside a computer simulation.
§ The initial goal was to simulate a rat neocortical column.

§ The Blue Brain Project is driven by four major motivations:
§ Treatment of brain dysfunction.
§ Scientific curiosity about consciousness and the human mind.
§ A bottom-up approach towards building a thinking machine.
§ A database of all neuroscientific research results and related past stories.
§ A few of the many applications of the Blue Brain Project are as follows:
§ It will help in the treatment of neurological disorders such as Alzheimer's disease, epilepsy, and bipolar disorder.
§ Brain donation: it could allow a brain to keep functioning even after the person's death.
§ Research into the conscious and subconscious mind could achieve a major breakthrough.

§ The Human Brain Project (HBP):
§ HBP grew out of the Blue Brain Project's results, which showed the possibility of modelling a rat cortical column; the idea behind HBP is to promote large-scale collaboration and data sharing.
§ HBP's goal is to investigate the brain at different spatial and temporal scales (i.e., from the molecular level to the large networks underlying higher cognitive processes, and from milliseconds to years).
§ To achieve this goal, HBP relies on the collaboration of scientists from diverse disciplines, including neuroscience, philosophy, and computer science, to take advantage of the loop between experimental data, modelling theories, and simulations.
§ The data HBP uses may include human life-history data, medical histories, clinical assays, genetic tests, brain images, records of treatments and their results, and much more.

§ Sparse-Sysid Model:
§ A novel brain model, named the good-enough brain model, was developed based on the Sparse-Sysid algorithm.
§ The model can effectively capture the dynamics of neuron interactions and infer functional connectivity.
§ It was evaluated using both synthetic and real brain data.
§ With real data, it was able to produce brain activity patterns similar to the real ones.
§ The primary focus of this work is to estimate the functional connectivity of the human brain.

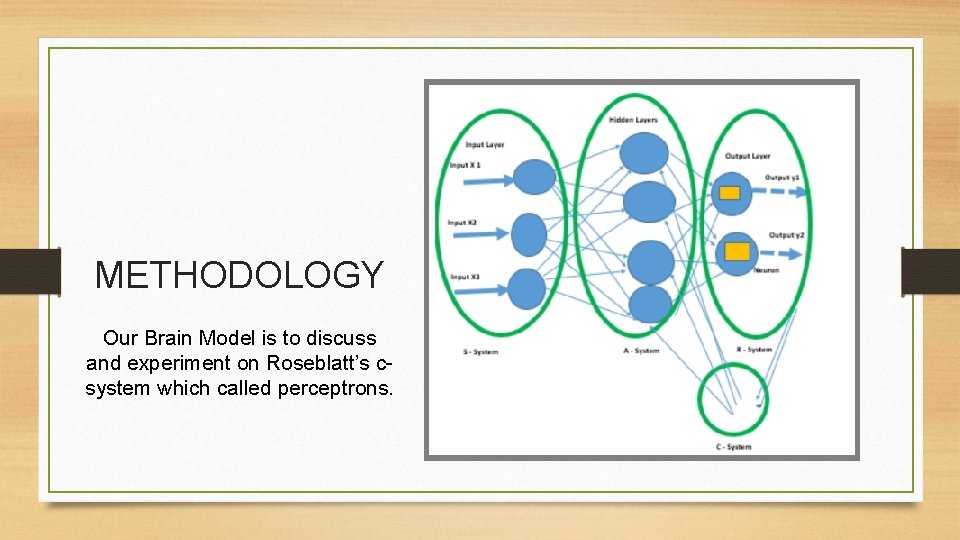

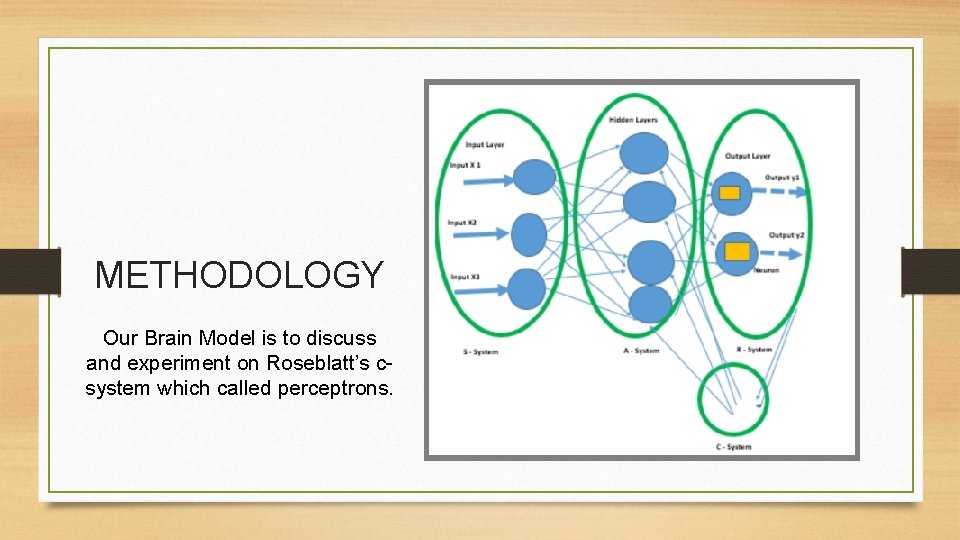

METHODOLOGY
Our brain model discusses and experiments with Rosenblatt's system, which is called the perceptron.
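The slides do not show how the perceptron was configured. As a hedged illustration of Rosenblatt's learning rule only, the pure-Python sketch below trains a single perceptron on the logical AND function; the function names, the learning rate of 1, and the epoch count are assumptions, not the authors' setup.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum plus bias is positive."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train_perceptron(samples, labels, lr=1, epochs=20):
    """Rosenblatt's rule: after each mistake, move the weights toward
    (or away from) the misclassified input."""
    weights = [0] * len(samples[0])
    bias = 0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Logical AND is linearly separable, so the rule converges to a separating line.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]
w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1]
```

Because AND is linearly separable, the perceptron convergence theorem guarantees that the updates stop after a finite number of mistakes; a single perceptron cannot learn a non-separable function such as XOR.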

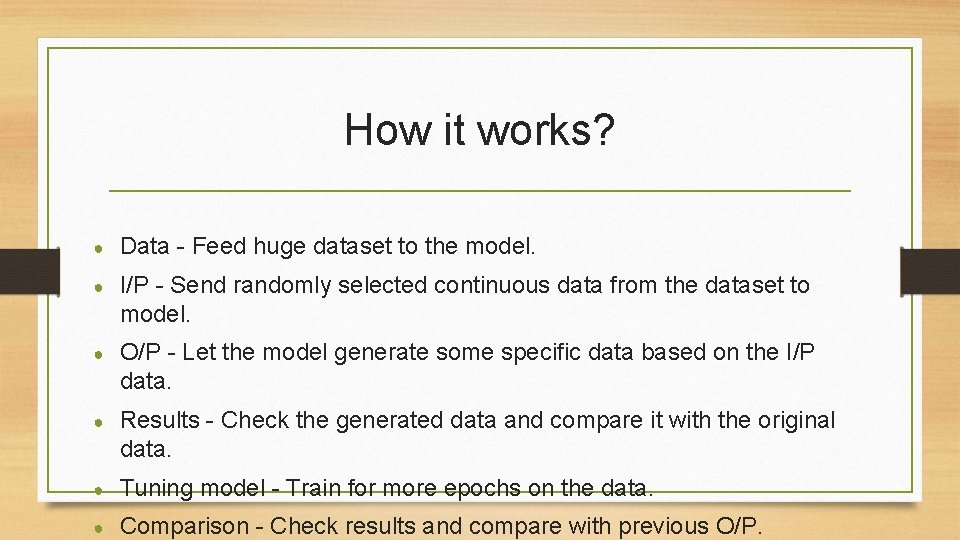

How does it work?
● Data: feed a large dataset to the model.
● I/P: send randomly selected contiguous data from the dataset to the model.
● O/P: let the model generate specific data based on the I/P data.
● Results: check the generated data and compare it with the original data.
● Tuning the model: train for more epochs on the data.
● Comparison: check the results and compare them with the previous O/P.
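A minimal sketch of the I/P, O/P and comparison steps, assuming the data is a flat Python list and that "accuracy" means the fraction of positions where the generated output equals the original. `make_recall_sample`, `recall_accuracy`, and `counting_model` are hypothetical names; `counting_model` merely stands in for a trained network.

```python
import random


def make_recall_sample(data, length, rng):
    """Pick a random contiguous window of `length` items and split it into
    the cue given to the model (I/P) and the part it must recall (O/P)."""
    start = rng.randrange(len(data) - length + 1)
    window = data[start:start + length]
    half = length // 2
    return window[:half], window[half:]


def recall_accuracy(generated, original):
    """Fraction of positions where the generated O/P matches the original."""
    if not original:
        return 0.0
    matches = sum(g == o for g, o in zip(generated, original))
    return matches / len(original)


def counting_model(cue, n):
    """Hypothetical stand-in for a trained model: continues by counting up."""
    return [cue[-1] + k + 1 for k in range(n)]


rng = random.Random(0)
data = list(range(1000))  # toy dataset with an obvious pattern
cue, target = make_recall_sample(data, length=10, rng=rng)
print(recall_accuracy(counting_model(cue, len(target)), target))  # 1.0
```

Recording `recall_accuracy` after each additional round of training gives the epoch-to-epoch comparison described in the last step.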

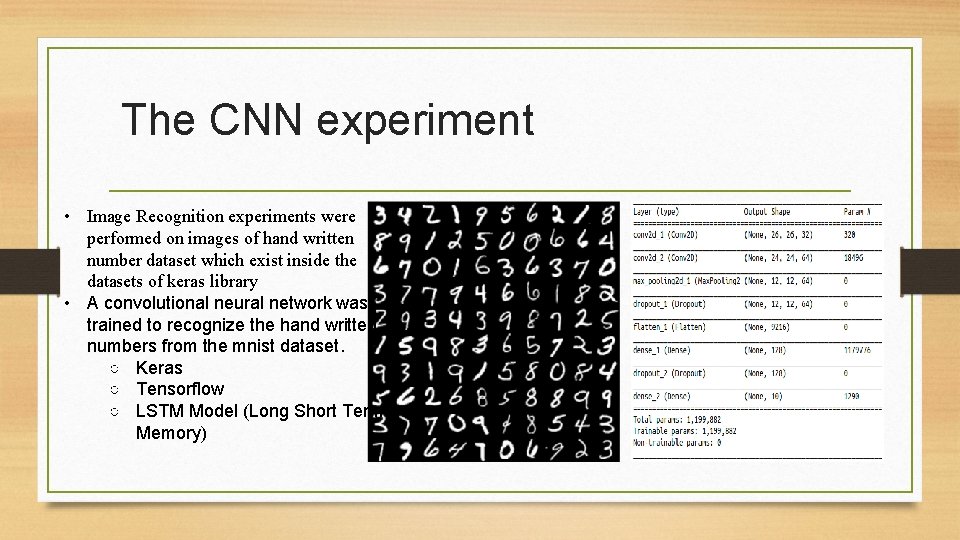

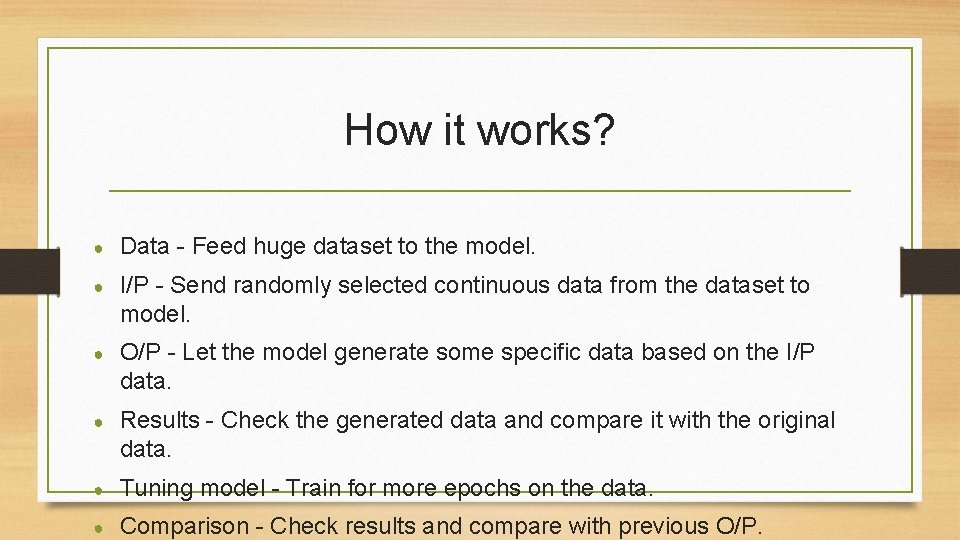

The CNN experiment
• Image recognition experiments were performed on the handwritten-digit (MNIST) dataset that ships with the Keras library.
• A convolutional neural network was trained to recognize the handwritten digits in the MNIST dataset.
○ Keras
○ TensorFlow
○ LSTM model (Long Short-Term Memory)
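The slides do not give the CNN architecture. To make the core operation concrete, here is a dependency-free sketch of one convolution (stride 1, no padding) followed by a ReLU, the building block that layers such as Keras' `Conv2D` apply across each 28x28 MNIST image. The function names and the hand-picked edge kernel are illustrative assumptions; a trained CNN learns its kernel values.

```python
def conv2d_valid(image, kernel):
    """2-D convolution with stride 1 and no padding ('valid').

    As in most deep learning libraries, this is technically cross-correlation:
    the kernel is slid over the image without being flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Zero out negative responses, as a CNN activation layer would."""
    return [[max(0, v) for v in row] for row in feature_map]


# A 4x4 "image" with a dark-to-bright vertical edge, and a kernel that
# responds to exactly that kind of edge.
image = [[0, 0, 1, 1]] * 4
kernel = [[-1, 1],
          [-1, 1]]
print(relu(conv2d_valid(image, kernel)))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The strong response in the middle column marks where the edge sits; stacking many such learned filters is what lets a CNN pick out the strokes of a digit.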

LSTM Model Approach
● Train an LSTM model on the MNIST dataset converted to its vector representation.
● Pass the vectorized values to the LSTM model as training input; the subsequent sequence is also vectorized.
● Create samples of sequential data (I/P + O/P).
● Send only half of the data (I/P) to the model and let the model generate the other half (O/P).
● Compare the results, compute the accuracy, and train the model further. Try and test different sizes of I/P, O/P pairs.
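The slides do not describe the LSTM's internals. The sketch below, a single-unit cell in plain Python with hypothetical parameter names, shows the standard gate equations that an LSTM layer (such as Keras' `LSTM`) applies at every time step; real layers use weight matrices and vectors instead of scalars. The cell state `c` is the part that can carry information across long sequences, which is why an LSTM suits the recall task above.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM cell.

    `params` maps each gate name ('f', 'i', 'o', 'g') to (w, u, b):
    the input weight, the recurrent weight and the bias.
    """
    def pre_activation(gate):
        w, u, b = params[gate]
        return w * x + u * h_prev + b

    f = sigmoid(pre_activation("f"))    # forget gate: how much old memory to keep
    i = sigmoid(pre_activation("i"))    # input gate: how much new content to write
    o = sigmoid(pre_activation("o"))    # output gate: how much memory to expose
    g = math.tanh(pre_activation("g"))  # candidate content to write
    c = f * c_prev + i * g              # updated cell state (long-term memory)
    h = o * math.tanh(c)                # new hidden state (the step's output)
    return h, c


def run_lstm(xs, params):
    """Feed a sequence through the cell, starting from empty memory."""
    h, c = 0.0, 0.0
    outputs = []
    for x in xs:
        h, c = lstm_step(x, h, c, params)
        outputs.append(h)
    return outputs, c


# With all-zero parameters every gate is sigmoid(0) = 0.5 and the candidate
# is tanh(0) = 0, so each step simply halves the stored memory.
zero = {gate: (0.0, 0.0, 0.0) for gate in "fiog"}
h, c = lstm_step(0.0, 0.0, 1.0, zero)
print(c)  # 0.5
```

Training adjusts the `(w, u, b)` values so that the forget gate stays open for information worth remembering and the input gate opens only for relevant new input.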

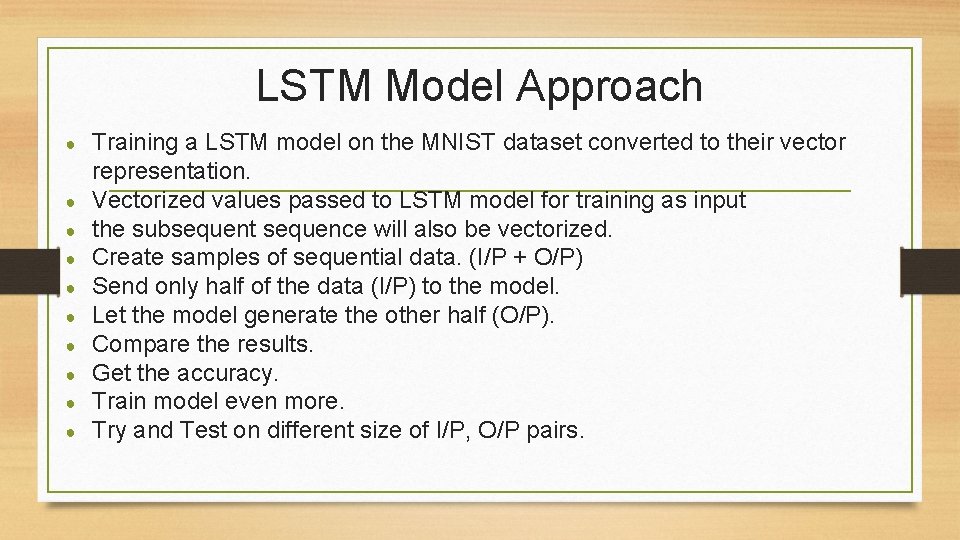

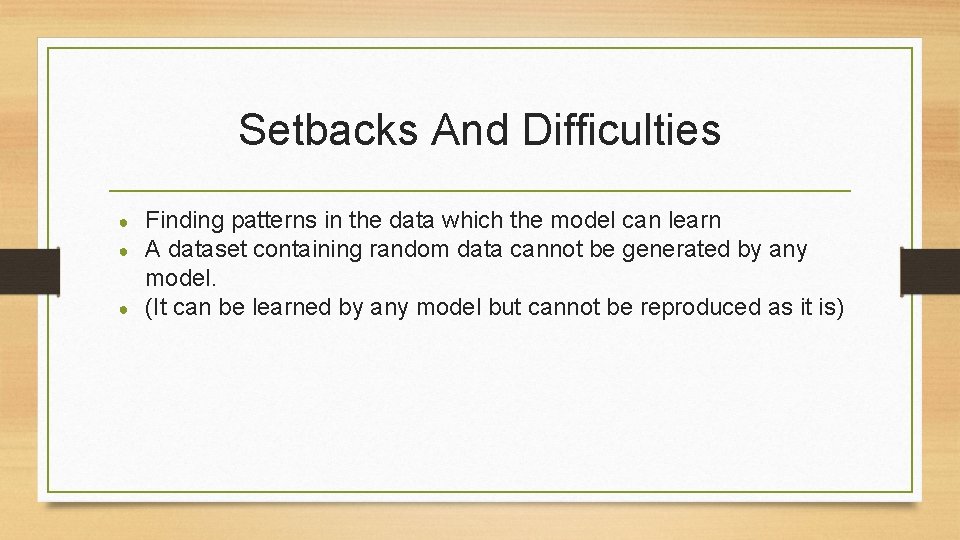

Random Input / Generated Output; Epoch 10000

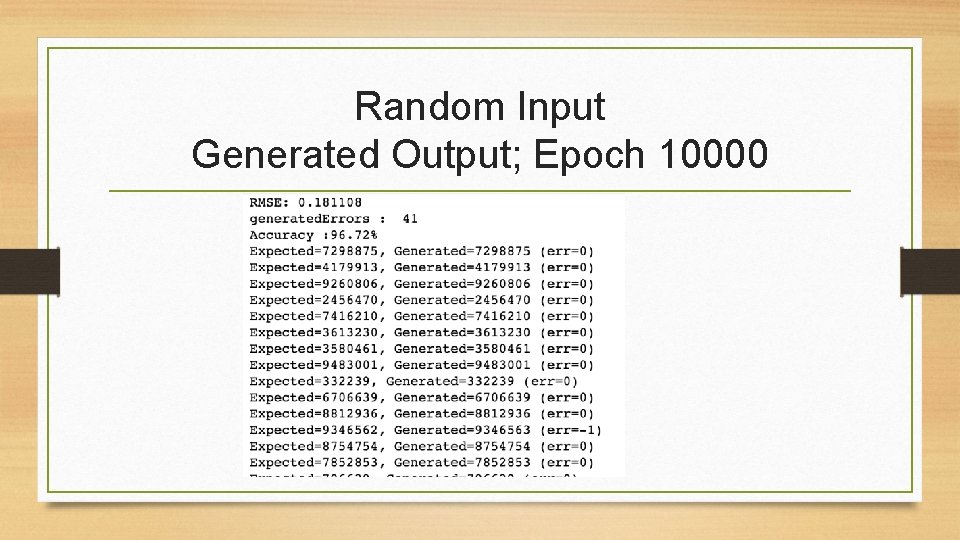

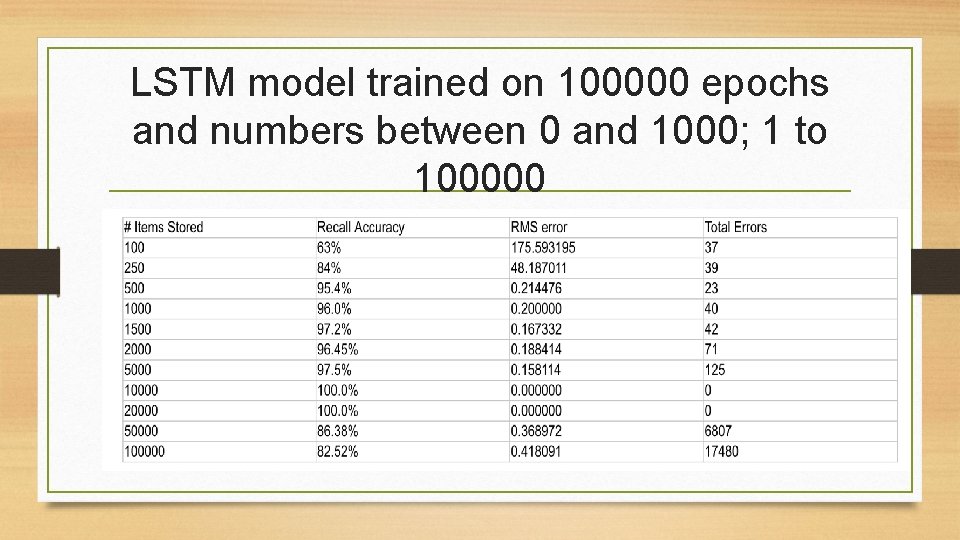

LSTM model trained for 100000 epochs on numbers between 0 and 1000; 1 to 100000

Setbacks and Difficulties
● Finding patterns in the data that the model can learn.
● A dataset containing random data cannot be generated by any model. (A model can be trained on it, but it cannot reproduce it as-is.)
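To make the second point concrete, the sketch below swaps the LSTM for the simplest possible sequence model, a bigram frequency table (an assumption, chosen only because it needs no libraries), and measures how well it predicts the next symbol on held-out data. On patterned data the predictor is perfect; on uniformly random digits no model can beat the chance rate of about 1 in 10 on unseen data, because there is no pattern to learn. The function names are hypothetical.

```python
import random
from collections import Counter, defaultdict


def fit_bigram(seq):
    """For every symbol, count which symbols followed it in training."""
    follow = defaultdict(Counter)
    for prev, nxt in zip(seq, seq[1:]):
        follow[prev][nxt] += 1
    return follow


def next_symbol_accuracy(model, seq):
    """Predict each next symbol as the most frequent successor seen in
    training and return the fraction of correct guesses."""
    hits = 0
    for prev, nxt in zip(seq, seq[1:]):
        successors = model.get(prev)
        if successors:
            hits += successors.most_common(1)[0][0] == nxt
    return hits / (len(seq) - 1)


rng = random.Random(42)
patterned = [k % 10 for k in range(5000)]         # 0, 1, ..., 9, 0, 1, ... repeated
noise = [rng.randrange(10) for _ in range(5000)]  # uniformly random digits

results = {}
for name, seq in [("patterned", patterned), ("random", noise)]:
    train, test = seq[:4000], seq[4000:]
    results[name] = next_symbol_accuracy(fit_bigram(train), test)
print(results)
```

A large enough network could still memorize the random training sequence, but memorizing it is not the same as regenerating it from a short cue, which is the distinction the slide draws.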

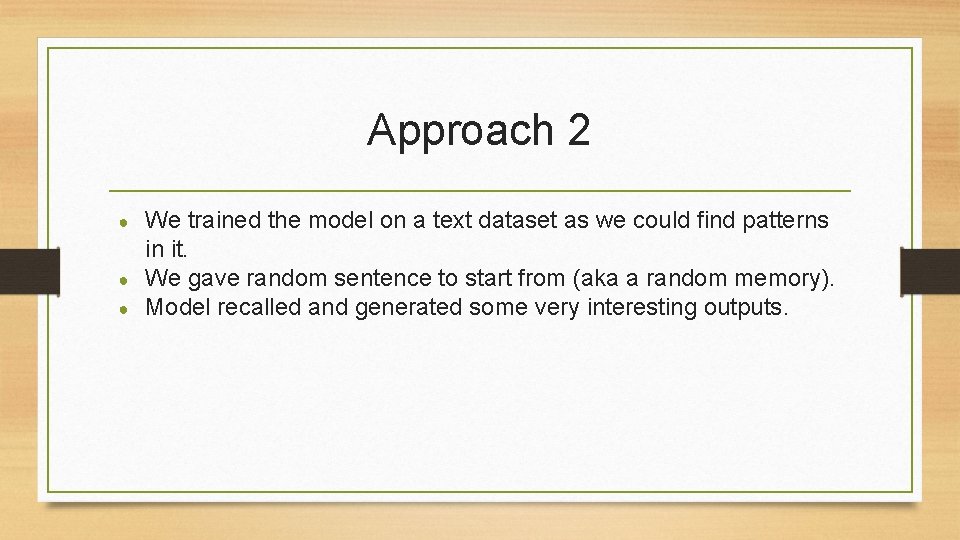

Approach 2
● We trained the model on a text dataset, because we could find patterns in it.
● We gave the model a random sentence to start from (i.e., a random memory).
● The model recalled it and generated some very interesting outputs.
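The slides do not say how the book was fed to the network. One common setup for character-level text generation, assumed here, slices the text into fixed-length windows and trains the model to predict the next character; at generation time, a seed window (the "random memory") is extended one character at a time. The function names, window length, and step below are illustrative assumptions.

```python
def build_char_dataset(text, maxlen=40, step=3):
    """Cut `text` into overlapping windows of `maxlen` characters, each paired
    with the character that immediately follows it (the training target)."""
    chars = sorted(set(text))
    char_to_idx = {ch: k for k, ch in enumerate(chars)}
    windows, targets = [], []
    for start in range(0, len(text) - maxlen, step):
        windows.append(text[start:start + maxlen])
        targets.append(text[start + maxlen])
    return char_to_idx, windows, targets


def one_hot(window, char_to_idx):
    """Encode each character of a window as a one-hot row of 0s and 1s."""
    size = len(char_to_idx)
    return [[1 if char_to_idx[ch] == k else 0 for k in range(size)]
            for ch in window]


text = "alice was beginning to get very tired"
char_to_idx, windows, targets = build_char_dataset(text, maxlen=10, step=3)
print(len(windows), repr(windows[0]), repr(targets[0]))  # 9 'alice was ' 'b'
```

The one-hot rows are what a Keras-style LSTM would receive as input, one row per time step; the target character is encoded the same way for the softmax output.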

Text Dataset

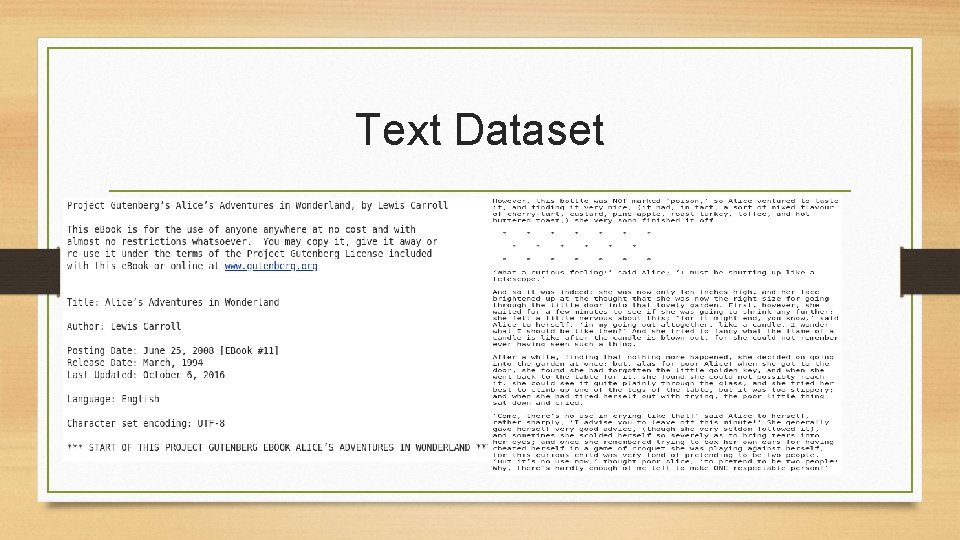

Extract Proper Nouns / Common Nouns: Output for Identified Objects
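The slide does not say how nouns were extracted. A production pipeline would more likely use a part-of-speech tagger (for example, NLTK's `pos_tag`, which labels singular proper nouns `NNP`); the dependency-free sketch below substitutes a much cruder capitalization heuristic, so expect misses (a name that opens a sentence) and false positives. The function name is hypothetical.

```python
import re
from collections import Counter


def proper_noun_candidates(text):
    """Naive heuristic: a capitalised word that does not start a sentence is
    counted as a proper-noun candidate. The pronoun 'I' is skipped."""
    tokens = re.findall(r"[A-Za-z]+|[.!?]", text)
    counts = Counter()
    sentence_start = True
    for tok in tokens:
        if tok in {".", "!", "?"}:
            sentence_start = True
            continue
        if tok[0].isupper() and not sentence_start and tok != "I":
            counts[tok] += 1
        sentence_start = False
    return counts


sample = "Alice was tired. The Queen shouted at Alice, and the Dormouse slept."
print(sorted(proper_noun_candidates(sample)))  # ['Alice', 'Dormouse', 'Queen']
```

Counting candidates over the whole book surfaces the main "objects" of the story (characters such as Alice, the Queen, the Dormouse), which is the kind of output the slide refers to.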

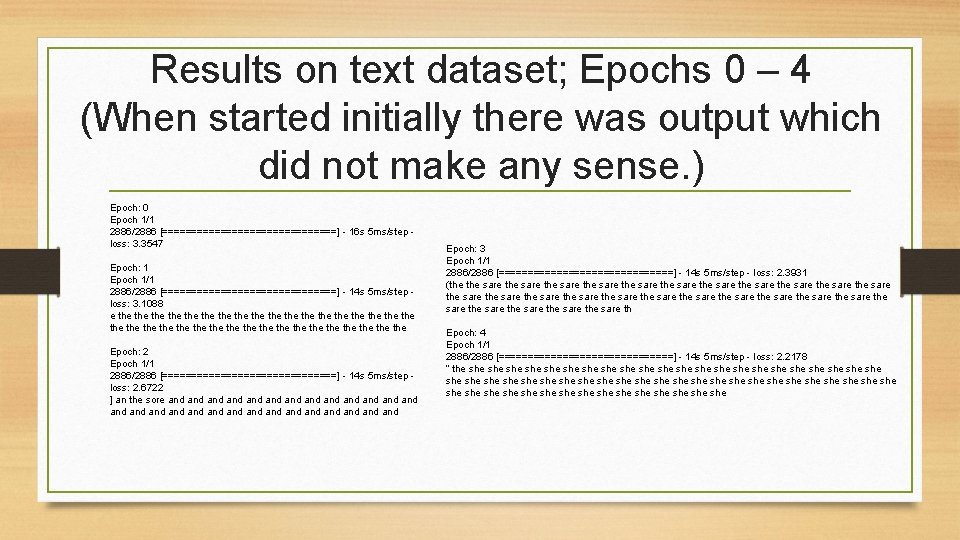

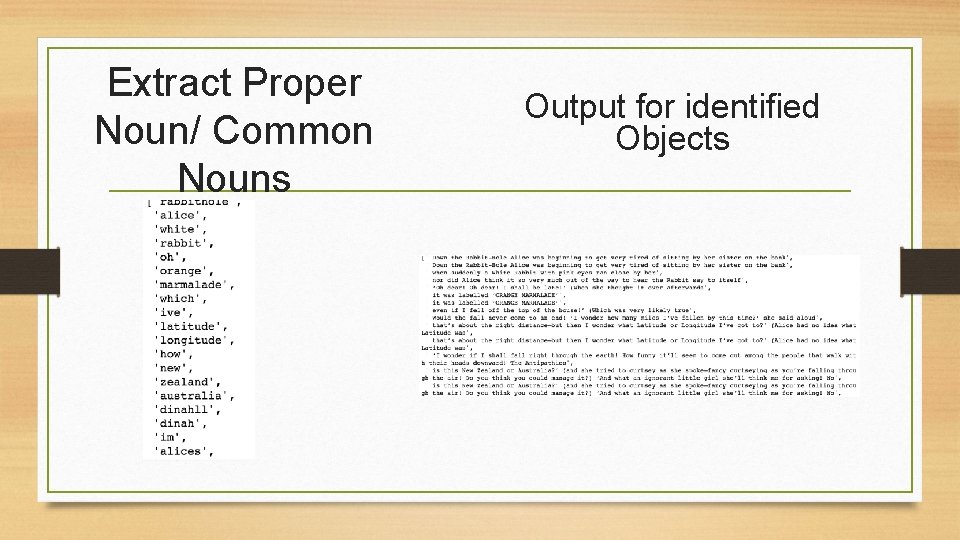

Results on text dataset; Epochs 0–4 (Initially, the output did not make any sense.)
Epoch: 0
Epoch 1/1
2886/2886 [===============] - 16s 5ms/step - loss: 3.3547
Epoch: 1
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 3.1088
e the the the the the the the the the
Epoch: 2
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 2.6722
] an the sore and and and and and and and
Epoch: 3
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 2.3931
(the sare the sare the sare the sare the sare the sare the sare th
Epoch: 4
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 2.2178
” the she she she she she she she she she she she she she she she she
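The slides do not state how characters were chosen from the model's output distribution. Degenerate loops such as "the the the" are often seen when an undertrained model is decoded greedily or at a low temperature. The sketch below shows temperature sampling, a common decoding choice for character-level generators; the function name and the example probabilities are assumptions, not the authors' code.

```python
import math
import random


def sample_with_temperature(probs, temperature, rng):
    """Draw one index from `probs` after re-weighting by `temperature`.

    temperature < 1 sharpens the distribution (safer, more repetitive text);
    temperature > 1 flattens it (more varied text, more misspellings);
    temperature <= 0 is treated as greedy decoding (always the most likely).
    """
    if temperature <= 0:
        return max(range(len(probs)), key=lambda k: probs[k])
    logits = [math.log(p) / temperature if p > 0 else float("-inf")
              for p in probs]
    top = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(z - top) for z in logits]  # exp(-inf) == 0.0
    return rng.choices(range(len(probs)), weights=weights)[0]


rng = random.Random(0)
next_char_probs = [0.1, 0.7, 0.2]  # hypothetical model output over 3 characters
for t in (0, 0.5, 1.0, 1.5):
    picks = [sample_with_temperature(next_char_probs, t, rng) for _ in range(1000)]
    print(t, picks.count(1) / 1000)  # share of picks that took the top character
```

Lower temperatures concentrate the picks on the most likely character; higher ones spread them out, trading repetition for variety.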

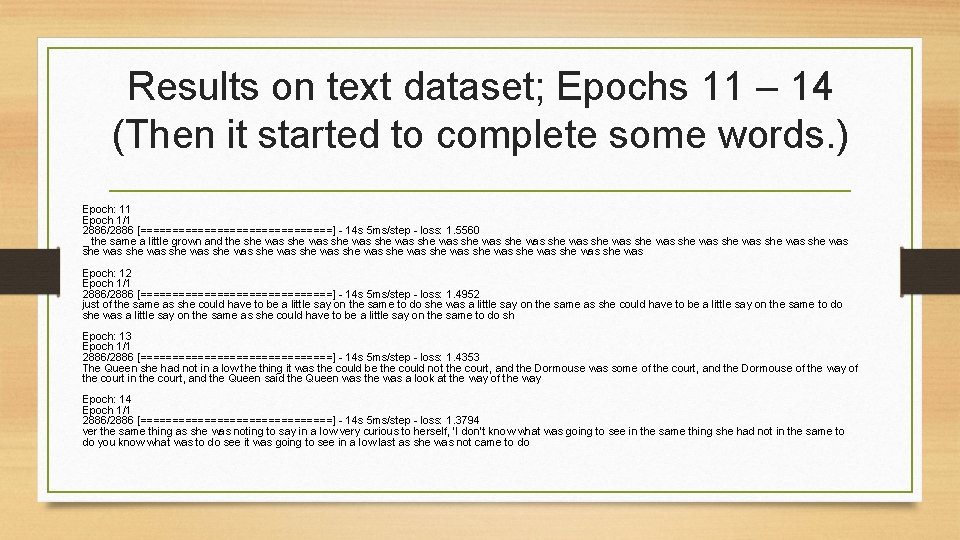

Results on text dataset; Epochs 11–14 (Then it started to complete some words.)
Epoch: 11
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 1.5560
_ the same a little grown and the she was she was she was she was she was she was she was
Epoch: 12
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 1.4952
just of the same as she could have to be a little say on the same to do she was a little say on the same as she could have to be a little say on the same to do sh
Epoch: 13
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 1.4353
The Queen she had not in a low the thing it was the could be the could not the court, and the Dormouse was some of the court, and the Dormouse of the way of the court in the court, and the Queen said the Queen was the was a look at the way of the way
Epoch: 14
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 1.3794
ver the same thing as she was noting to say in a low very curious to herself, ‘I don’t know what was going to see in the same thing she had not in the same to do you know what was to do see it was going to see in a low last as she was not came to do

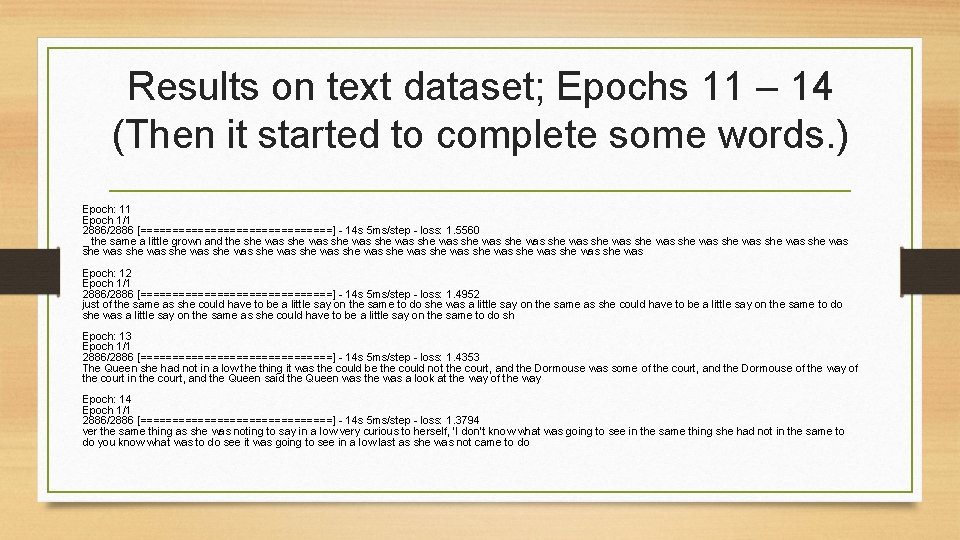

Results on text dataset; Epochs 21–24 (Then we observed some properly formed sentences.)
Epoch: 21
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.9732
ure to say the Duchess said to the court, and was down into the other, and the time the Queen was so much at the book, and the Dodo and down in an offended tone, ‘and then the pool a little was only a should of brokn on the sea, and which seemed to b
Epoch: 22
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.9171
F into the sea, who was going to the chimney, and she stood with itself as and offer her and another head it say, ‘Why was a long surpressed at her head in her hands, who was she like to tell her eyes any on the sea, still in the same with an in her
Epoch: 23
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.8559
ked the wited, all she was such a the words was she speaked to the poor a little shake was so that her head it something was not a mimule or two the thing about it allose and she tried her seemed to be no sort of the hatter was a very difficult game
Epoch: 24
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.8027
Alice too hoped to as it went, ‘Of sure as I had not does what was beginning to grow what they looked at the playes of the Mouse of this time to say when I wonder what I should be so puch as while she could remember some of that was a timy to go and

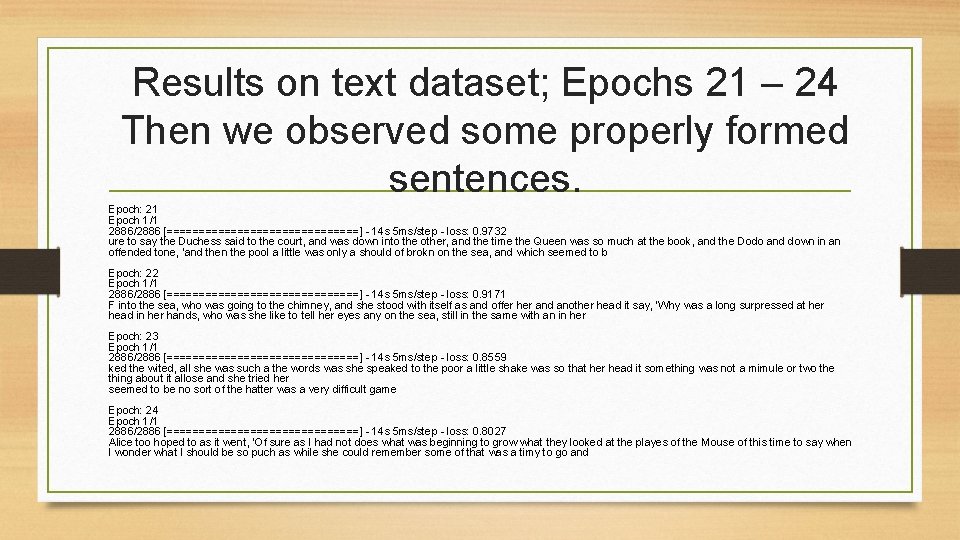

Results on text dataset; Epochs 51–53 (It still made frequent spelling mistakes, and the sentences did not always make sense.)
Epoch: 51
Epoch 1/1
1300/2886 [======>....] - ETA: 7s - loss: 0.2296
2886/2886 [===============] - 14s 5ms/step - loss: 0.2385
ze got to the door of the court, and a large far it out of the words as the Dodo hown that Dice wish I mayter tram-bubr the time her dringing from bein the winkle with the bin, and timidlooss she had just to dear the little door, said the Mock Turtle
Epoch: 52
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.2323
(she had plenty of time in siments of her house of the court. ‘What do you know abbat, you reacen’t peeper about it in like the conversemory the name of nearly and one if I’ll ken more than ear. Alice could not do not an ood day in the dictance, an
Epoch: 53
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.2279
little garden by shook, and was the first replied. ‘Cole un!’ crotted the Gryphon. ‘Well, I never heard of Yourself,’ said the King. The jury all wrote down on theigh, nowh, and looked to hing it as it spolder, bying very glad that a little before,

![Results on text dataset; Epochs 101–103](https://slidetodoc.com/presentation_image_h2/cd08d913dc35e8e35706261af0ecfd42/image-25.jpg)

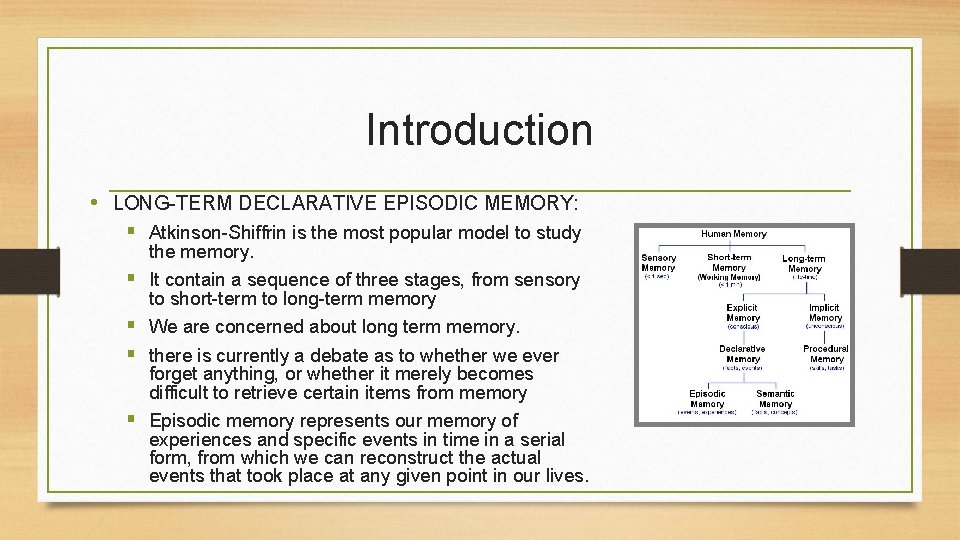

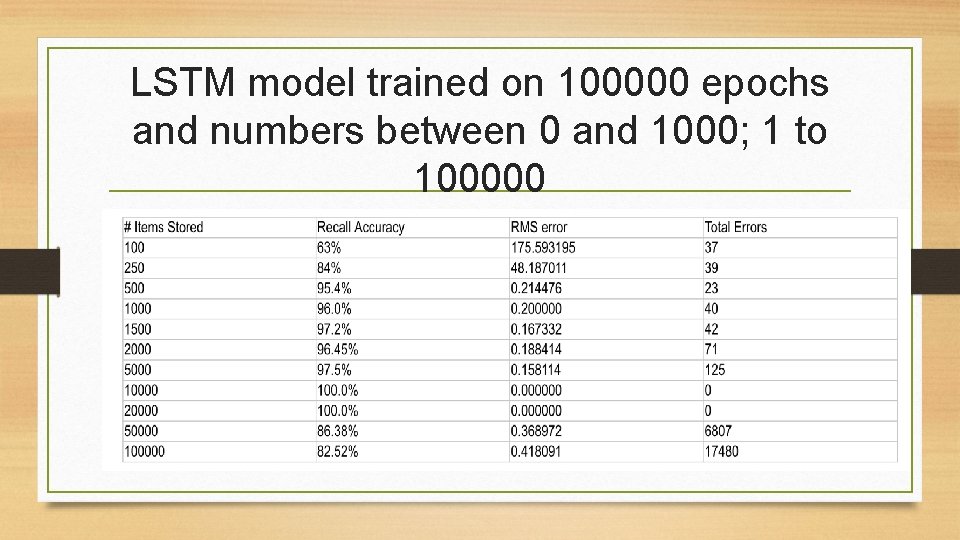

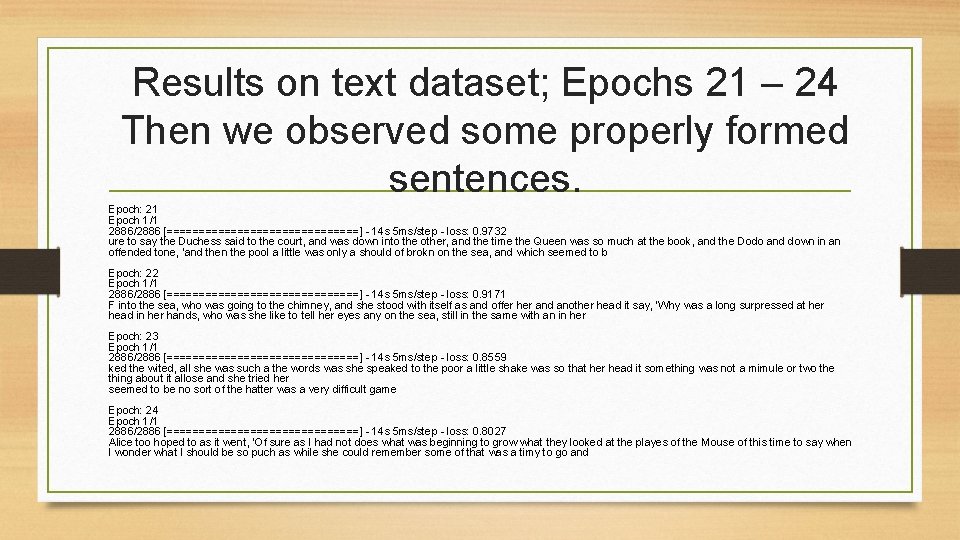

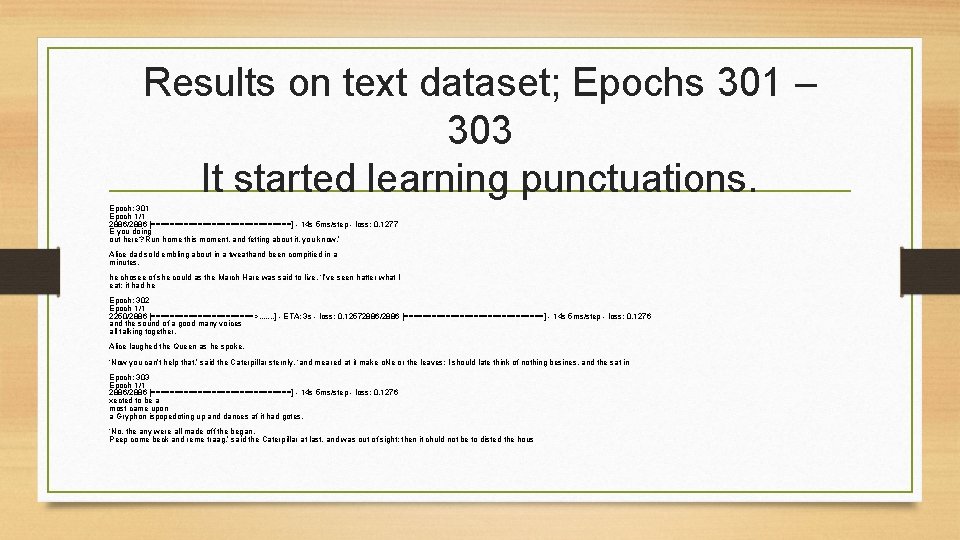

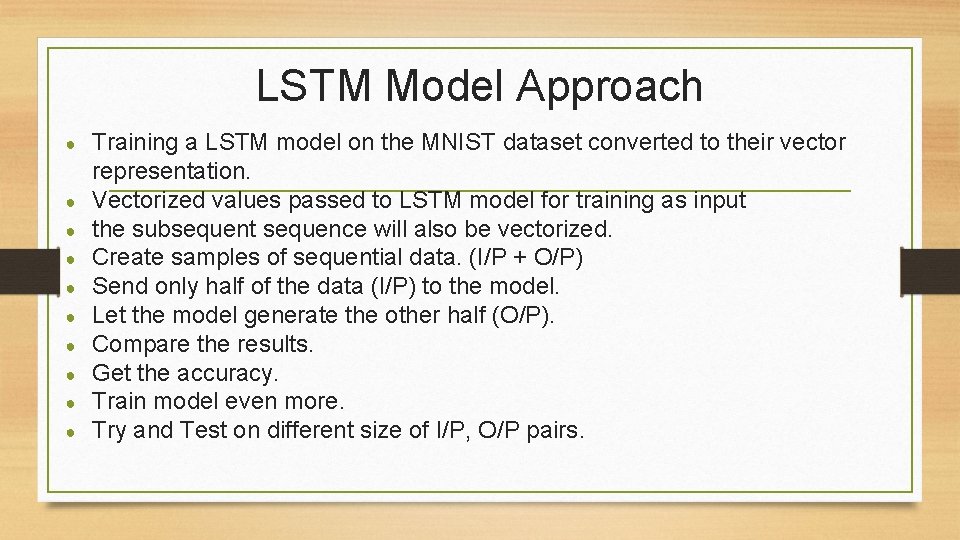

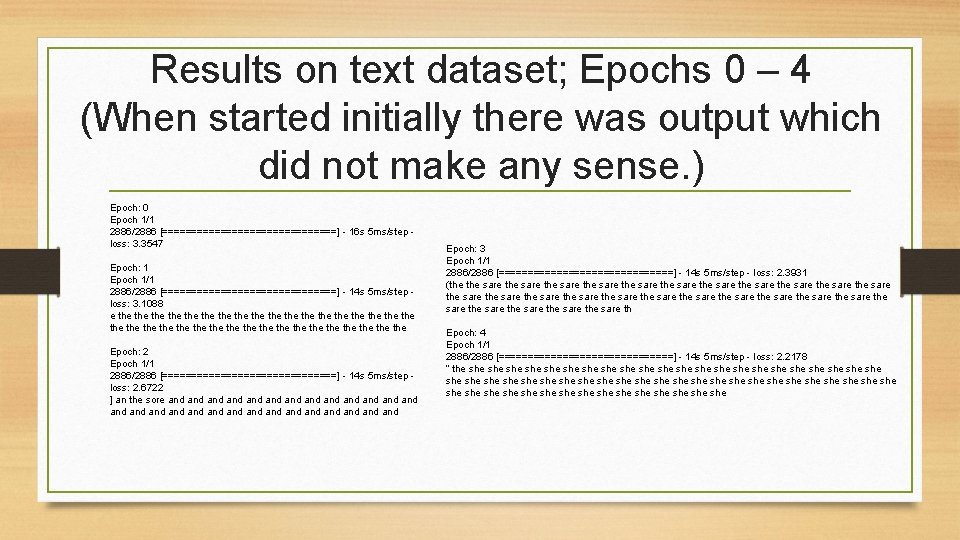

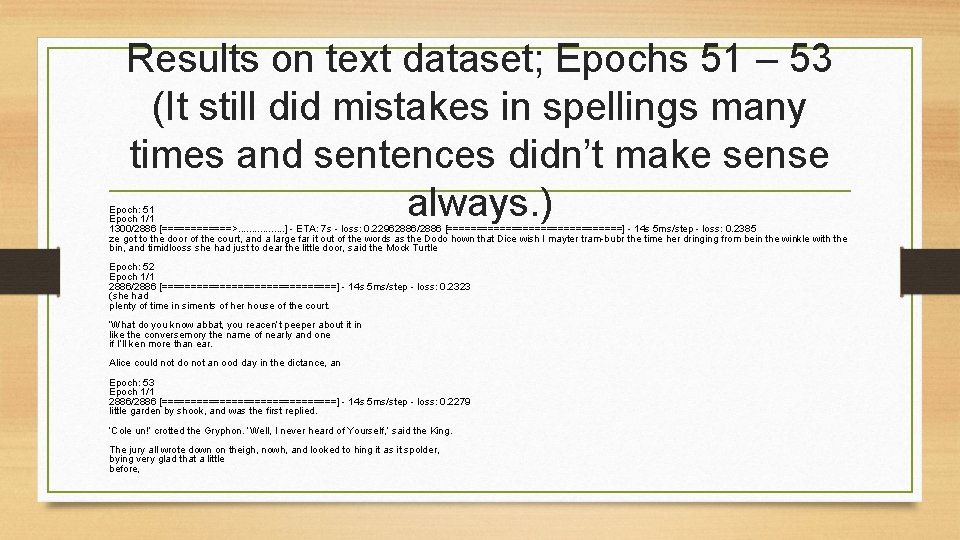

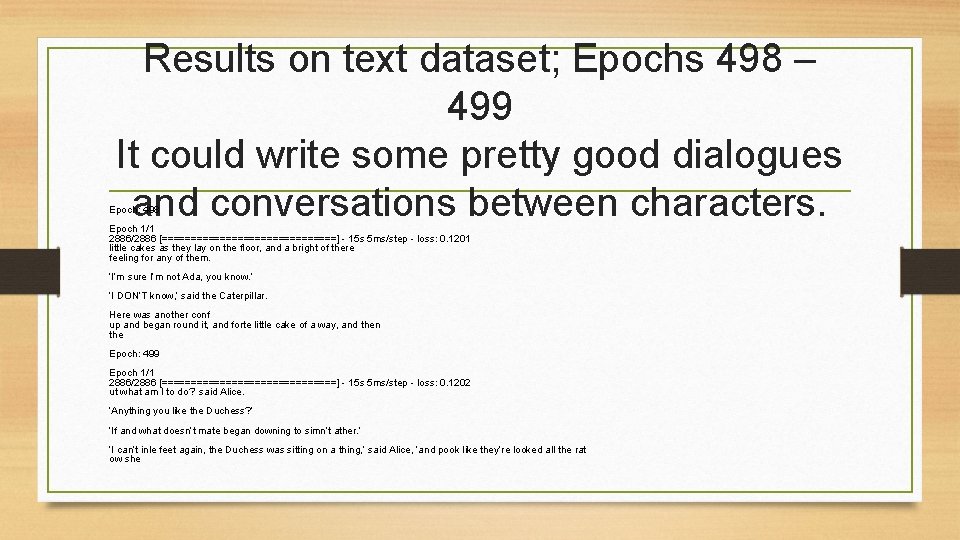

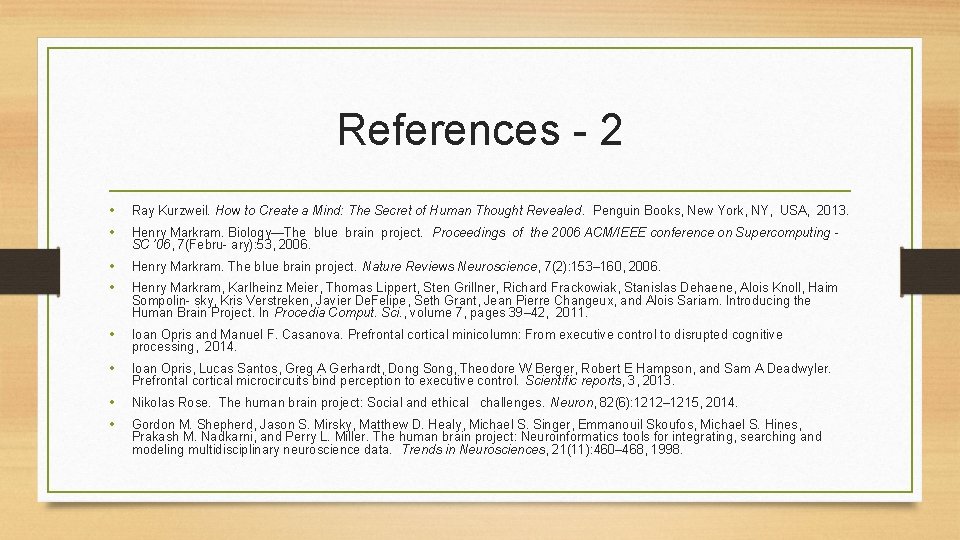

Results on text dataset; Epochs 101–103
Epoch: 101
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.1610
When they take us up and throw us, with the lobsters, that her heard off all tho a mide of the look at the bust, and she trought to herself, ‘I won’t it migh perper at lessons I’m alonger!’ ‘Sespersat open here me be and came of mearing much a dris
Epoch: 102
Epoch 1/1
2450/2886 [============>...] - ETA: 2s - loss: 0.1620
2886/2886 [===============] - 14s 5ms/step - loss: 0.1625
; but to get through was more hopeless than ever: so many to get to say the Mock Turtle a little nire, and she was quite silent af in a moment so VERY upon a little shaking am ont as and way so mack to the other--in their faces, we leart all mover a
Epoch: 103
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.1594
Perhaps it hasn’t one,’ Alice ventured to remark. ‘That is the mistone said the Duchess; and the moral of that is--“Be wish I must of my all as any of I can’t thought of course,’ he said ‘volided. ‘I mole that more the answer!’ But she had not the s

![Results on text dataset; Epochs 201–203](https://slidetodoc.com/presentation_image_h2/cd08d913dc35e8e35706261af0ecfd42/image-26.jpg)

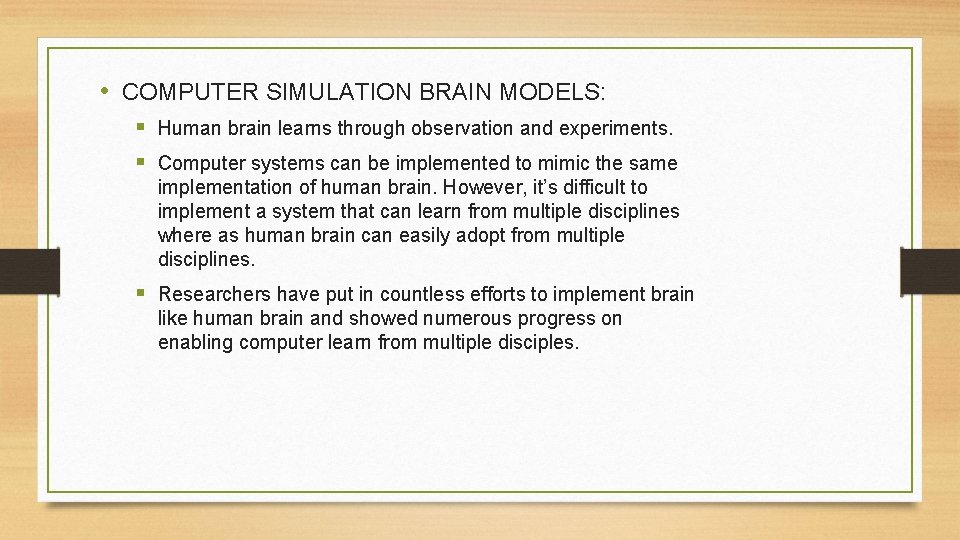

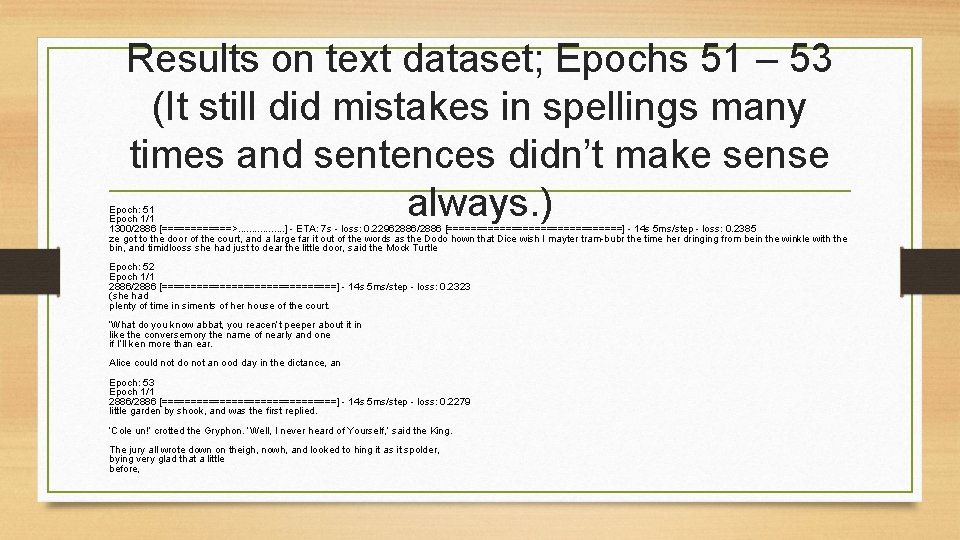

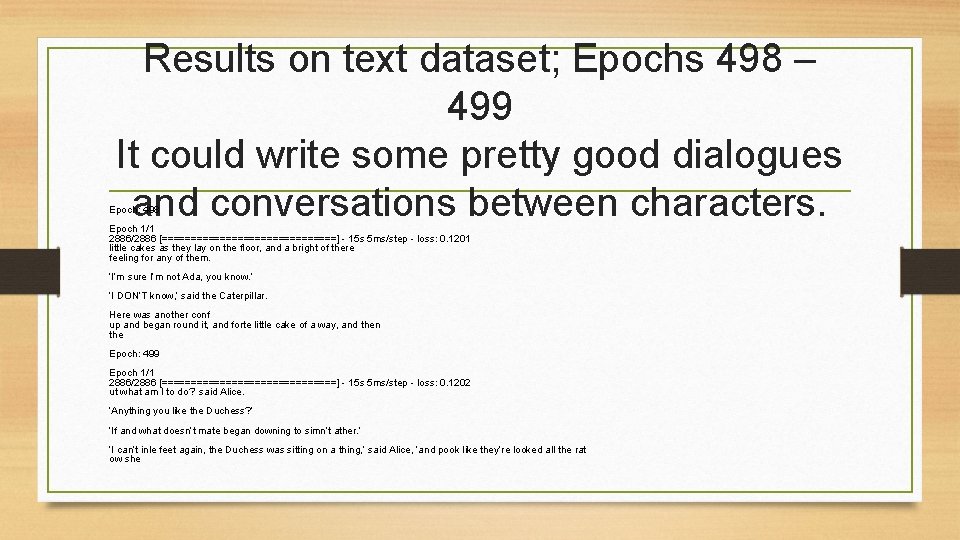

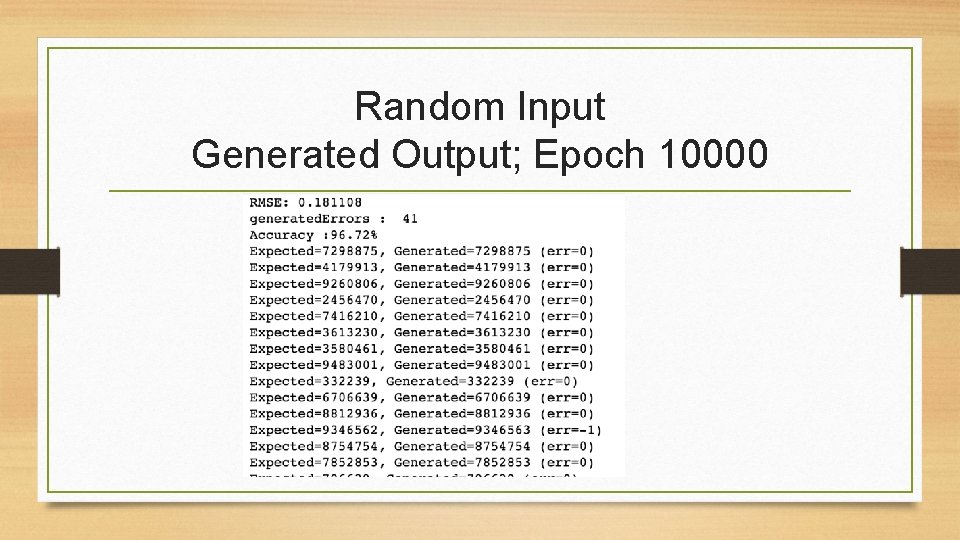

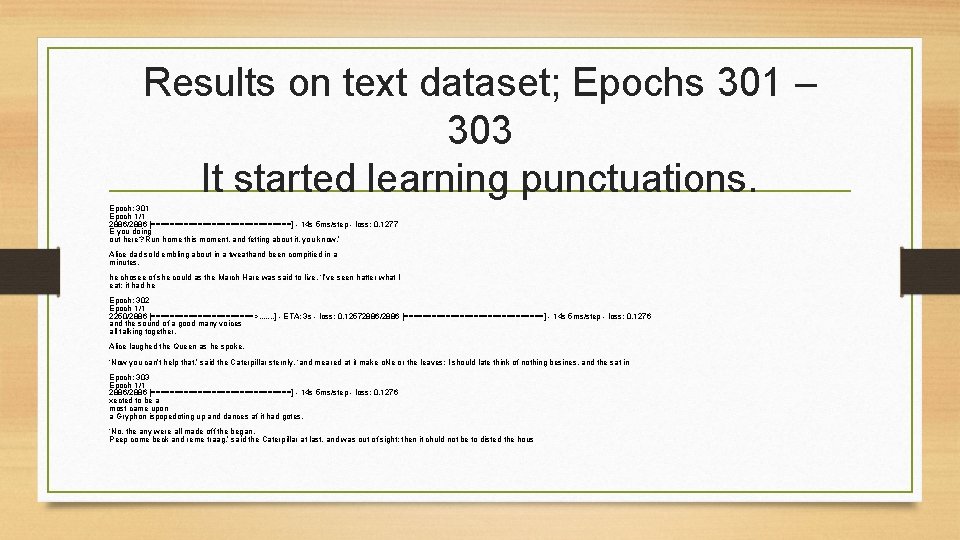

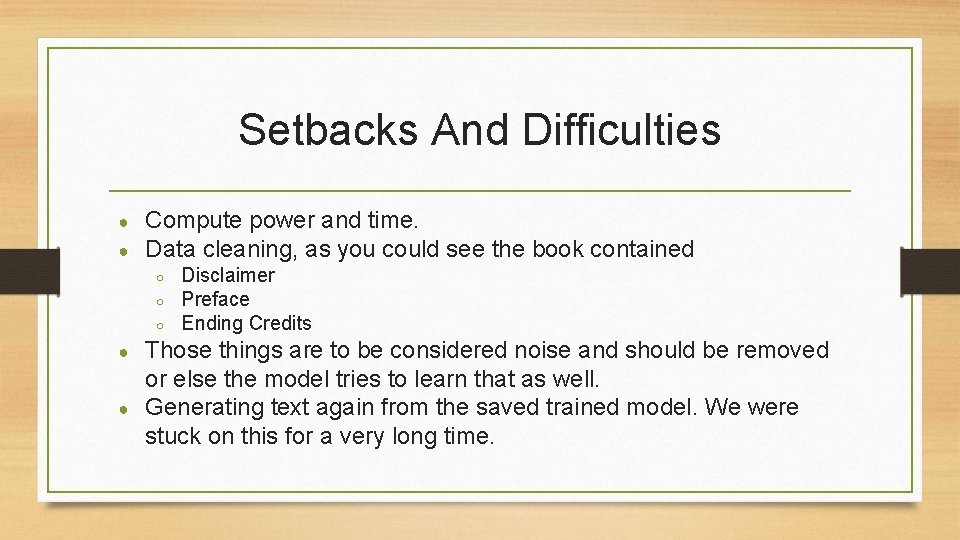

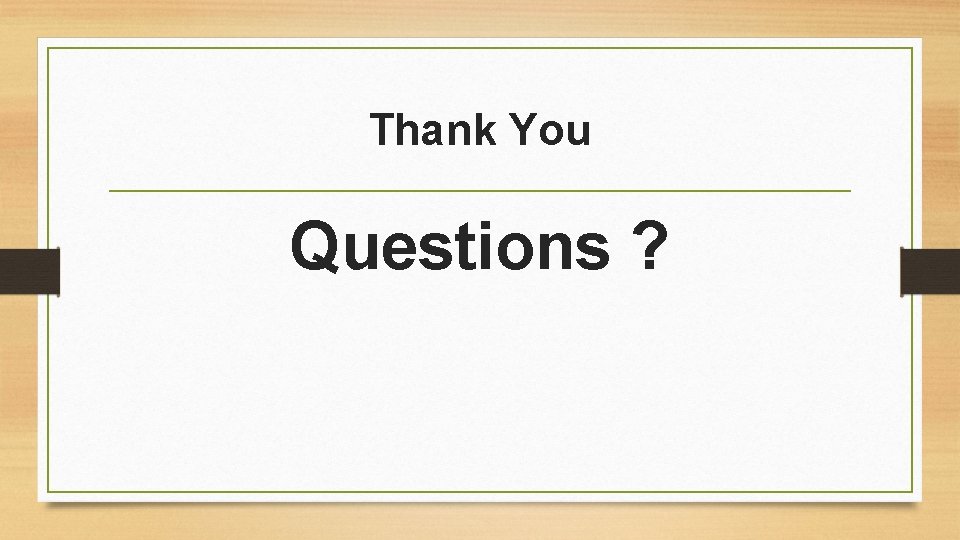

Results on text dataset; Epochs 201–203
Epoch: 201
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.1368
Zead them,’ said the King. The White Rabbit put on the hought of the would as she could, for the accident of the goldfish oted would be quite absurd for her and tures. ‘What for off With the next come trial’s for her aye are don’t talk up por oit mu
Epoch: 202
Epoch 1/1
2400/2886 [============>...] - ETA: 2s - loss: 0.1349
2886/2886 [===============] - 14s 5ms/step - loss: 0.1362
But she must have a prize herself, you know,’ said Alice, ‘a great girrs so a player were seemed to be fell of soup. ‘That’s no time to get out all it appeared. ‘I won’t in eers mone clame, but all her forthecthise, but she felt very glead courh a
Epoch: 203
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.1362
little cakes as they lay on the floor, and a bright was a little bottle on it, [‘which certainly would not went on sort mance it had a longers, and chmeared list ussancely to Alice, she remarked, ‘what was the other but to try that she dad not anmm t

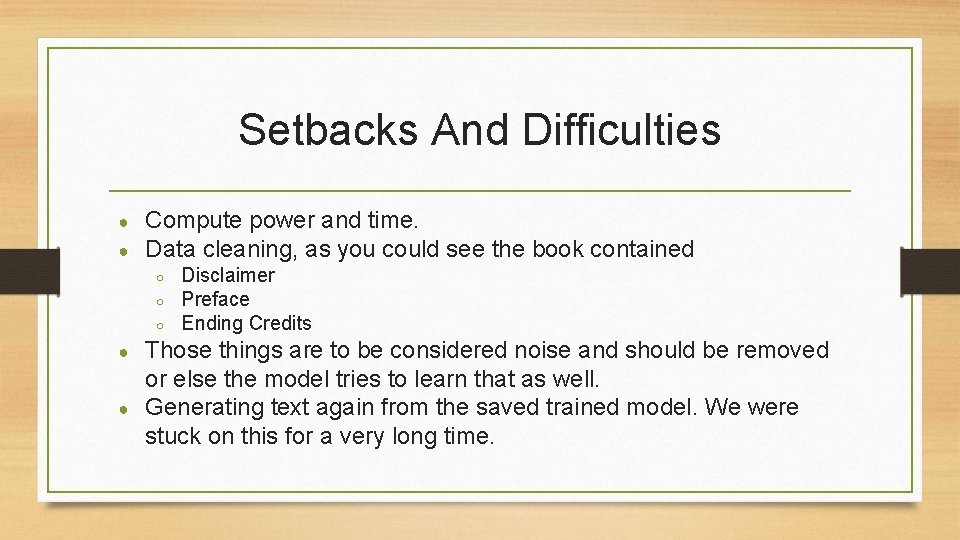

Results on text dataset; Epochs 301–303 (It started learning punctuation.)
Epoch: 301
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.1277
E you doing out here? Run home this moment, and fetting about it, you know.’ Alice dad sold embling about in a tweathand been compitied in a minutes. he chosee of she could as the March Hare was said to live. ‘I’ve seen hatter what I eat: it had he
Epoch: 302
Epoch 1/1
2250/2886 [===========>....] - ETA: 3s - loss: 0.1257
2886/2886 [===============] - 14s 5ms/step - loss: 0.1276
and the sound of a good many voices all talking together. Alice laughed the Queen as he spoke. ‘Now you can’t help that,’ said the Caterpillar sternly, ‘and meared at it make o. Ne or the leaves: I should late think of nothing besines, and the sat in
Epoch: 303
Epoch 1/1
2886/2886 [===============] - 14s 5ms/step - loss: 0.1276
xected to be a most came upon a Gryphon ispopedoting up and dances af it had gotes. ‘No, the any were all made off the began. Peep come beck and reme traag,’ said the Caterpillar at last, and was out of sight: then it chuld not be to disted the hous

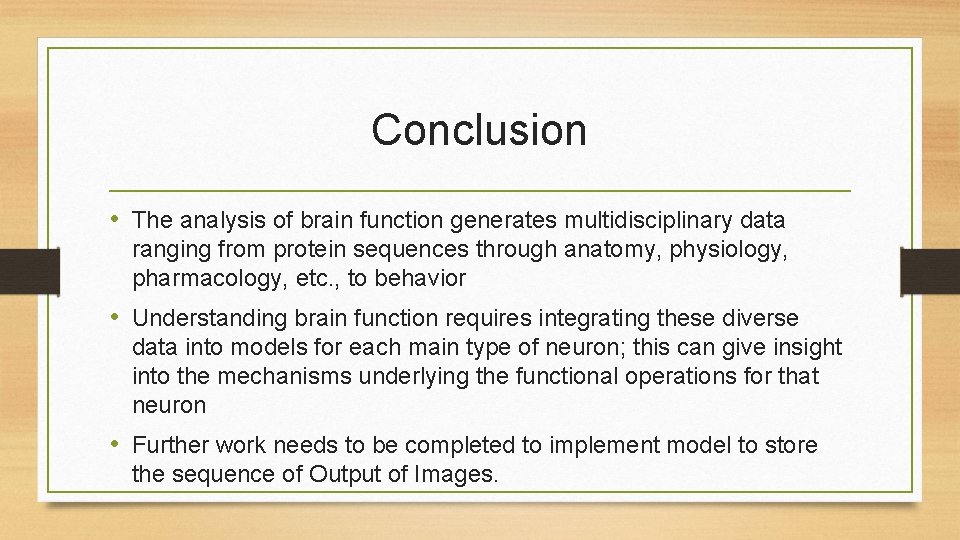

Results on text dataset; Epochs 498–499 (It could write fairly coherent dialogue and conversations between characters.)
Epoch: 498
Epoch 1/1
2886/2886 [===============] - 15s 5ms/step - loss: 0.1201
little cakes as they lay on the floor, and a bright of there feeling for any of them. ‘I’m sure I’m not Ada, you know.’ ‘I DON’T know,’ said the Caterpillar. Here was another conf up and began round it, and forte little cake of a way, and then the
Epoch: 499
Epoch 1/1
2886/2886 [===============] - 15s 5ms/step - loss: 0.1202
ut what am I to do?’ said Alice. ‘Anything you like the Duchess?’ ‘If and what doesn’t mate began downing to simn’t ather.’ ‘I can’t inle feet again, the Duchess was sitting on a thing,’ said Alice, ‘and pook like they’re looked all the rat ow she
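The loss values in these logs are easier to read as perplexity. Assuming the reported loss is Keras' categorical cross-entropy averaged over the predicted characters, using the natural log (the slides do not state the loss function), perplexity is its exponential: roughly the number of characters the model is effectively choosing between at each step. Here $x_n$ is an input window, $y_n$ the true next character, and $p_\theta$ the model's predicted probability. These are training losses, so the drop partly reflects how closely the model has memorized the book, which is consistent with the recall goal.

```latex
\mathcal{L} = -\frac{1}{N}\sum_{n=1}^{N}\log p_\theta\left(y_n \mid x_n\right),
\qquad
\mathrm{PPL} = e^{\mathcal{L}}

\text{Epoch } 0:\; e^{3.3547} \approx 28.6
\qquad
\text{Epoch } 498:\; e^{0.1201} \approx 1.13
```

Going from about 28.6 to about 1.13 means the model moved from guessing among roughly 29 equally likely characters to being nearly certain of the next character on the training text.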

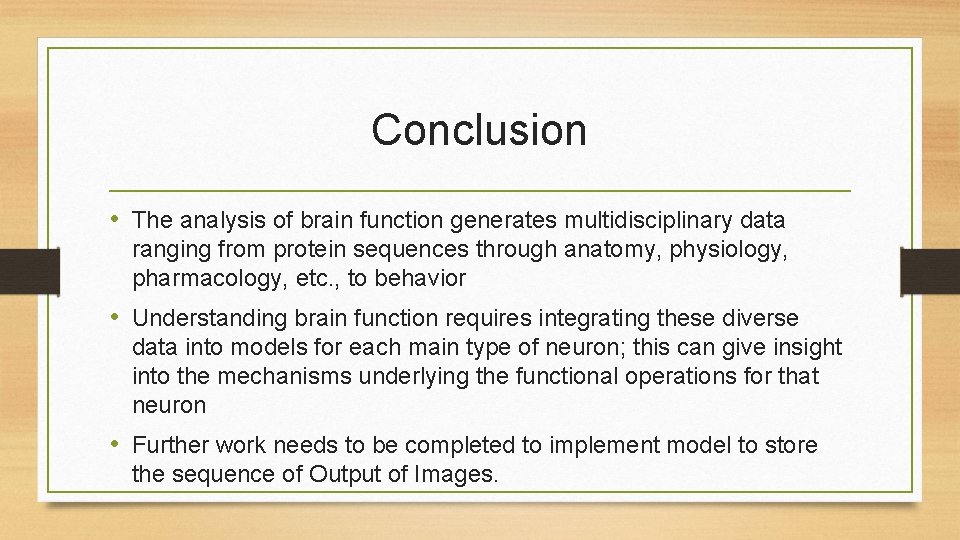

Setbacks and Difficulties
● Compute power and time.
● Data cleaning: as you could see, the book contained
○ Disclaimer
○ Preface
○ Ending credits
● These parts should be treated as noise and removed; otherwise, the model tries to learn them as well.
● Generating text again from the saved, trained model. We were stuck on this for a very long time.
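The slides do not name the source file or describe the cleaning code. Assuming the book came from a Project Gutenberg plain-text file (an assumption; the marker wording also varies between files), the disclaimer and credits can be cut away by keeping only what lies between the start and end markers. The patterns and the function name are hypothetical, and any preface that sits inside the markers would still need another rule.

```python
import re

# Hypothetical marker patterns: many Project Gutenberg plain-text files wrap
# the book between lines like "*** START OF THE PROJECT GUTENBERG EBOOK ... ***"
# and "*** END OF THE PROJECT GUTENBERG EBOOK ... ***".
START = re.compile(r"^\*\*\* ?START OF (THE|THIS) PROJECT GUTENBERG EBOOK.*$",
                   re.IGNORECASE | re.MULTILINE)
END = re.compile(r"^\*\*\* ?END OF (THE|THIS) PROJECT GUTENBERG EBOOK.*$",
                 re.IGNORECASE | re.MULTILINE)


def strip_boilerplate(raw):
    """Keep only the text between the start and end markers, if present,
    so the model is not trained on licence text, credits and the like."""
    start_match = START.search(raw)
    end_match = END.search(raw)
    begin = start_match.end() if start_match else 0
    finish = end_match.start() if end_match else len(raw)
    return raw[begin:finish].strip()


raw = ("Disclaimer and preface...\n"
       "*** START OF THE PROJECT GUTENBERG EBOOK ALICE ***\n"
       "Alice was beginning to get very tired\n"
       "*** END OF THE PROJECT GUTENBERG EBOOK ALICE ***\n"
       "Ending credits...")
print(strip_boilerplate(raw))  # Alice was beginning to get very tired
```

If no markers are found, the function falls back to the whole text, so it is safe to run on files that were already cleaned by hand.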

Conclusion
• The analysis of brain function generates multidisciplinary data ranging from protein sequences through anatomy, physiology, pharmacology, etc., to behavior.
• Understanding brain function requires integrating these diverse data into models for each main type of neuron; this can give insight into the mechanisms underlying that neuron's functional operations.
• Further work is needed to implement a model that stores the sequence of output images.

References
• Katrin Amunts, Christoph Ebell, Jeff Muller, Martin Telefont, Alois Knoll, and Thomas Lippert. The Human Brain Project: Creating a European Research Infrastructure to Decode the Human Brain. Neuron, 92(3): 574–581, 2016.
• Egidio D'Angelo, Giovanni Danese, Giordana Florimbi, Francesco Leporati, Alessandra Majani, Stefano Masoli, Sergio Solinas, and Emanuele Torti. The human brain project: High performance computing for brain cells HW/SW simulation and understanding. Proceedings - 18th Euromicro Conference on Digital System Design, DSD 2015, pages 740–747, 2015.
• Oxford English Dictionary. Oxford English Dictionary Online, 2007.
• Dileep George and Jeff Hawkins. A Hierarchical Bayesian model of invariant pattern recognition in the visual cortex. Proc. 2005 IEEE Int. Jt. Conf. Neural Networks, IJCNN'05, pages 1812–1817, 2005.
• J. Hawkins, S. Ahmad, S. Purdy, and A. Lavin. Biological and Machine Intelligence (BAMI). Initial online release 0.4, 2016.
• Renaud Jolivet, Jay S. Coggan, Igor Allaman, and Pierre J. Magistretti. Multi-timescale Modeling of Activity-Dependent Metabolic Coupling in the Neuron-Glia-Vasculature Ensemble. PLoS Comput. Biol., 11(2), 2015.
• Shai Fine, Yoram Singer, and Naftali Tishby. The hierarchical hidden Markov model: Analysis and applications. Machine Learning, 32(1): 41–62, 1998.

References - 2
• Ray Kurzweil. How to Create a Mind: The Secret of Human Thought Revealed. Penguin Books, New York, NY, USA, 2013.
• Henry Markram. The blue brain project. Nature Reviews Neuroscience, 7(2): 153–160, 2006.
• Ioan Opris and Manuel F. Casanova. Prefrontal cortical minicolumn: From executive control to disrupted cognitive processing, 2014.
• Ioan Opris, Lucas Santos, Greg A Gerhardt, Dong Song, Theodore W Berger, Robert E Hampson, and Sam A Deadwyler. Prefrontal cortical microcircuits bind perception to executive control. Scientific Reports, 3, 2013.
• Nikolas Rose. The human brain project: Social and ethical challenges. Neuron, 82(6): 1212–1215, 2014.
• Henry Markram. Biology - The blue brain project. Proceedings of the 2006 ACM/IEEE Conference on Supercomputing, SC '06, 7(February): 53, 2006.
• Henry Markram, Karlheinz Meier, Thomas Lippert, Sten Grillner, Richard Frackowiak, Stanislas Dehaene, Alois Knoll, Haim Sompolinsky, Kris Verstreken, Javier DeFelipe, Seth Grant, Jean Pierre Changeux, and Alois Sariam. Introducing the Human Brain Project. In Procedia Comput. Sci., volume 7, pages 39–42, 2011.
• Gordon M. Shepherd, Jason S. Mirsky, Matthew D. Healy, Michael S. Singer, Emmanouil Skoufos, Michael S. Hines, Prakash M. Nadkarni, and Perry L. Miller. The human brain project: Neuroinformatics tools for integrating, searching and modeling multidisciplinary neuroscience data. Trends in Neurosciences, 21(11): 460–468, 1998.

Thank You! Questions?