A Cool Frontier: Stress testing COOL via Frontier

- Slides: 39

A Cool Frontier: Stress testing COOL via Frontier, Squid and Oracle

David Front, Weizmann Institute, PSS CERN

LCG 3D workshop @ CERN, 13 September 2006

Agenda

- Goals
- Related Atlas testing
- Atlas requirements
- Connections architecture
- Workloads
- Reading use-cases
- Results
- Client robustness
- What next

Goals

- Frontier and squid are already being stress tested by others 'directly', driven by CORAL.
- This activity adds the COOL layer as a means to use Frontier and squid, in a slightly less direct way.
- It aims to simulate the Atlas requirements for COOL/Frontier, even though these are not yet clear.
- The goals are to:
  - learn whether Frontier and squid endure stress via COOL
  - measure the performance
  - look for performance bottlenecks (and see whether adding COOL carries a heavy penalty compared to direct usage via CORAL)

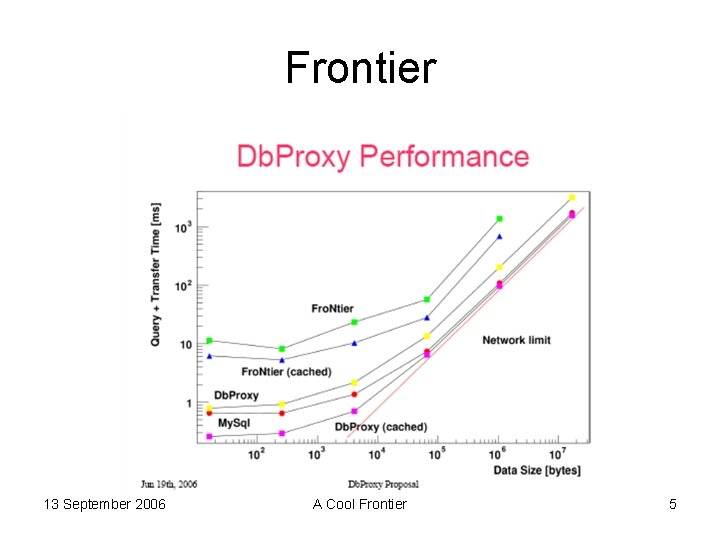

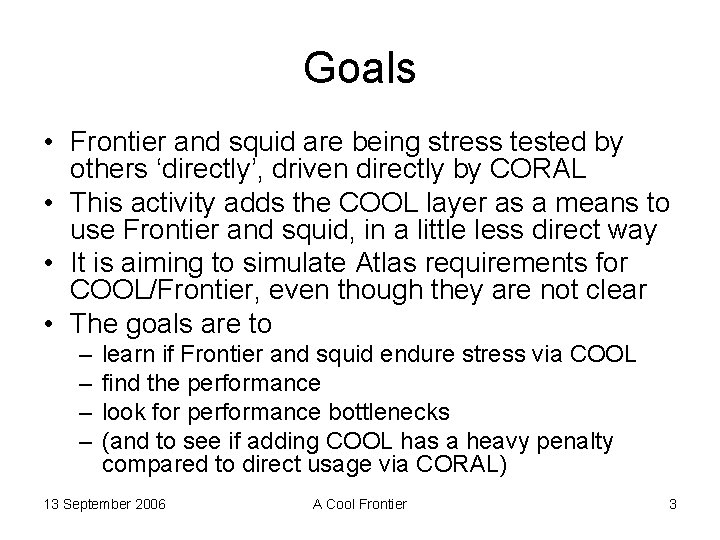

Related Atlas testing

- Some measurements of Frontier performance, which led the SLAC group to look at an alternative DBProxy idea, can be found in the talk by Amedeo Perazzo at the ATLAS online DB meeting of 19 June 2006: http://indico.cern.ch/conferenceDisplay.py?confId=a062174

Frontier

Atlas testing requirements

Requirements are not clear. Tier-2s will run different job types:

- Simulation: many 'repeated' queries requesting the same geometry and conditions data. May use static replication of SQLite files.
- Calibration production: might be similar to reconstruction production.
- Calibration analysis and user analysis: likely to be rather chaotic.
- Probably one of the biggest causes of repeat queries here will be users running similar jobs many times over the same conditions data.

Tested connections architecture (diagram labels)

- Client: client application, COOL, CORAL (connection manager), Frontier (implements connection manager plugins). The user manages her own CORAL (HW and user) sessions.
- squid: cache.
- Connection created per query.
- Server: Frontier server with a J2EE DB connection pool; connections allocated (once) by J2EE.
- Oracle server: connections allocated (once) by J2EE.

An observation

What should be faster?

1. Application queries Oracle directly
2. Application queries Oracle via Frontier

My answer: it depends (among other things) on the number of queries per connection:

1. If the application does many queries per CORAL connection, Oracle will be faster.
2. If the application does few queries per connection, Frontier may have an advantage: the penalty of creating DB connections is smaller because J2EE uses a connection pool.
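The trade-off above can be sketched as a simple linear cost model: one connection setup plus a per-query cost. All numbers below are hypothetical and chosen only to show the crossover; none were measured in these tests.

```python
def total_time(n_queries, connect_cost, query_cost):
    """Seconds for one session: one connection setup plus n queries."""
    return connect_cost + n_queries * query_cost

# Hypothetical costs in seconds (assumptions, not measurements).
ORACLE = {"connect_cost": 1.0, "query_cost": 0.05}    # costly connect, fast queries
FRONTIER = {"connect_cost": 0.1, "query_cost": 0.10}  # pooled connect, slower queries

def faster_backend(n_queries):
    """Name of the backend with the lower total session time."""
    oracle = total_time(n_queries, **ORACLE)
    frontier = total_time(n_queries, **FRONTIER)
    return "oracle" if oracle < frontier else "frontier"

def break_even_queries():
    """Number of queries per connection at which both backends cost the same."""
    connect_saving = ORACLE["connect_cost"] - FRONTIER["connect_cost"]
    query_penalty = FRONTIER["query_cost"] - ORACLE["query_cost"]
    return connect_saving / query_penalty

print(faster_backend(1), faster_backend(100), break_even_queries())
```

With these assumed costs the break-even point is about 18 queries per connection: below it the pooled connection wins, above it direct Oracle access wins.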

Tested environment

Similar to Luis's testing environment, but using different testing software, and with clients calling COOL rather than CORAL:

- Oracle server: cooldev, non-RAC
- SQUID and Frontier servers on frontier3d2
- Clients: 9 lxb machines
- SW: COOL_1_3_3, CORAL_1_5_3
- Frontier: Servlet 3.3, Client 2.4.5

Testing/monitoring tools

- VerificationClient: runs tests
  - Flexible, configurable testing parameters
  - Monitors client performance and can monitor Oracle session statistics metrics (not used here)
  - Creates multi-line graphs of results
- Oracle Enterprise Manager. In particular:
  - Performance graph
  - 'Top activity'
  - Detailed report (per hour)
  - Complaints: it is slow and asks to log in frequently
- Lemon, to monitor machine resources

Workloads

- likeLuis1: read one 1 MB table (à la Luis's testing)
- SmallQueries: small 'reconstruction like' workload

likeLuis1 workloads

Motivation: read one 1 MB table, to be 'Luis compatible'.

For both:

- 1 folder
- Record length:
  - string payload: 50 B
  - COOL overhead: ~53 B
  - total: ~103 B
- Size of IOV table: (~100 B * 10000) ~1 MB
- Payload accounts for about half of the total throughput

1. likeLuis1iov
   - "channelsPerFolder" : 10000
   - "numIOVs" : 1
2. likeLuis1channel
   - "channelsPerFolder" : 1
   - "numIOVs" : 10000
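The sizes quoted above follow from simple arithmetic, sketched here. The function name and the returned keys are illustrative only; the byte counts are the approximate values given in the slide.

```python
def workload_size(payload_bytes, overhead_bytes, channels, iovs):
    """Estimate record size, row count, table size and payload share."""
    record = payload_bytes + overhead_bytes
    rows = channels * iovs
    return {
        "record_bytes": record,
        "rows": rows,
        "table_bytes": record * rows,
        "payload_fraction": payload_bytes / record,
    }

# The two likeLuis1 variants: same amount of data, different layout.
iov_case = workload_size(50, 53, channels=10000, iovs=1)      # likeLuis1iov
channel_case = workload_size(50, 53, channels=1, iovs=10000)  # likeLuis1channel

print(iov_case)
```

Both variants give 10000 rows of ~103 B, that is ~1 MB, with the payload being just under half of each record.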

'SmallQueries' workload

A small 'reconstruction like' workload:

- 200 folders
- 500 channelids
- 50 IOVs
- Record length:
  - mixed payload, ~177 B long: 10 numbers, 1 float, one string of length 100
  - COOL overhead: ~53 B
  - total: ~227 B
- Size of IOV tables: ~5 MB
- Payload accounts for about 3/4 of the total throughput

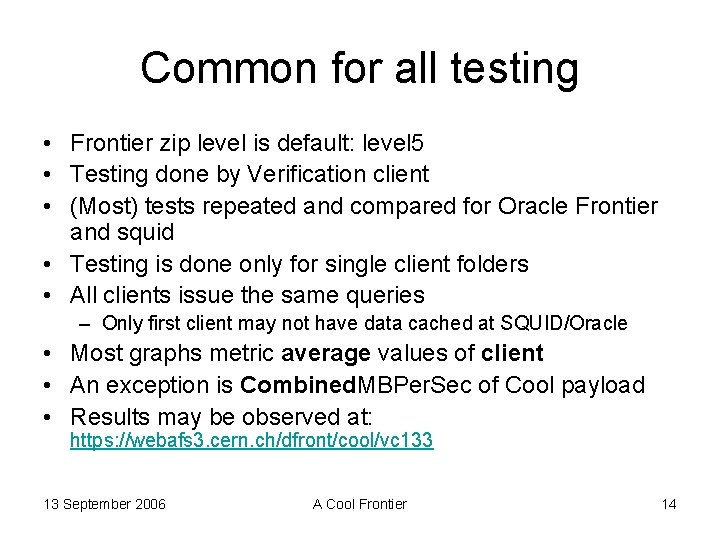

Common for all testing

- Frontier zip level is the default: level 5
- Testing is done by the Verification client
- (Most) tests are repeated and compared for Oracle, Frontier and squid
- Testing is done only for single client folders
- All clients issue the same queries
  - Only the first client may not have data cached at SQUID/Oracle
- Most graphs show metric values averaged over the clients
  - An exception is CombinedMBPerSec of COOL payload
- Results may be observed at: https://webafs3.cern.ch/dfront/cool/vc133

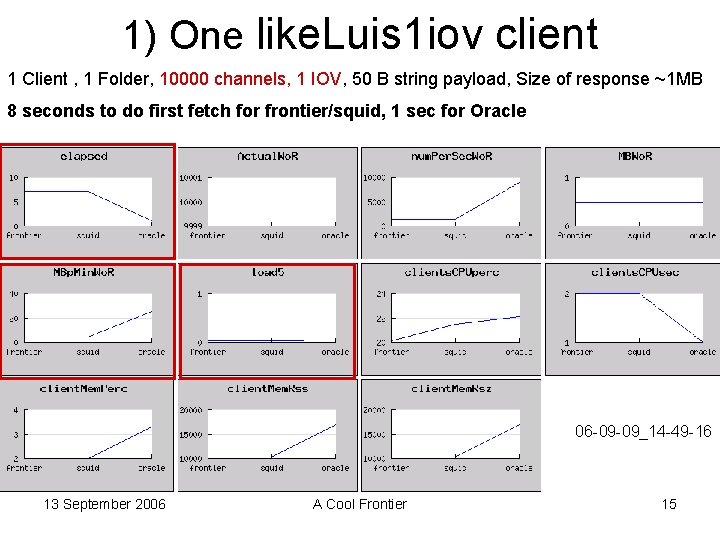

1) One likeLuis1iov client

1 client, 1 folder, 10000 channels, 1 IOV, 50 B string payload, size of response ~1 MB

8 seconds to do the first fetch for Frontier/squid, 1 second for Oracle

(06-09-09_14-49-16)

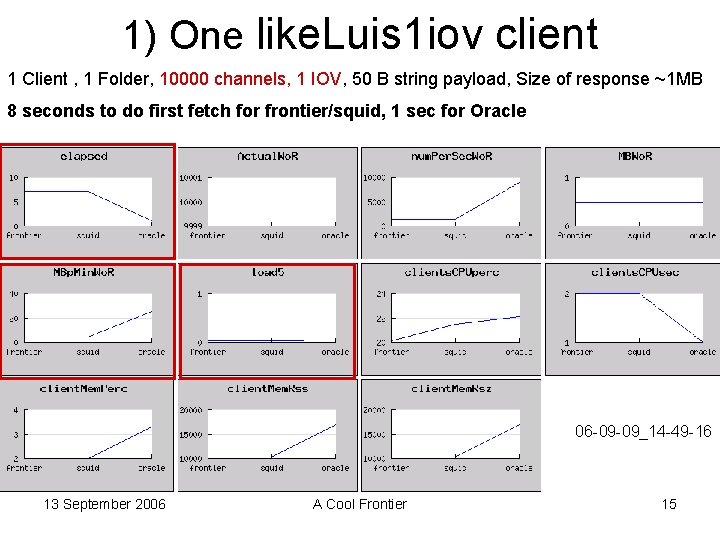

1) One likeLuis1iov client

1 client, 1 folder, 10000 channels, 1 IOV, 50 B string payload, size of response ~1 MB

7 seconds to do the first fetch for Frontier, 2 seconds for squid, 1 second for Oracle

(06-09-09_21-04-05)

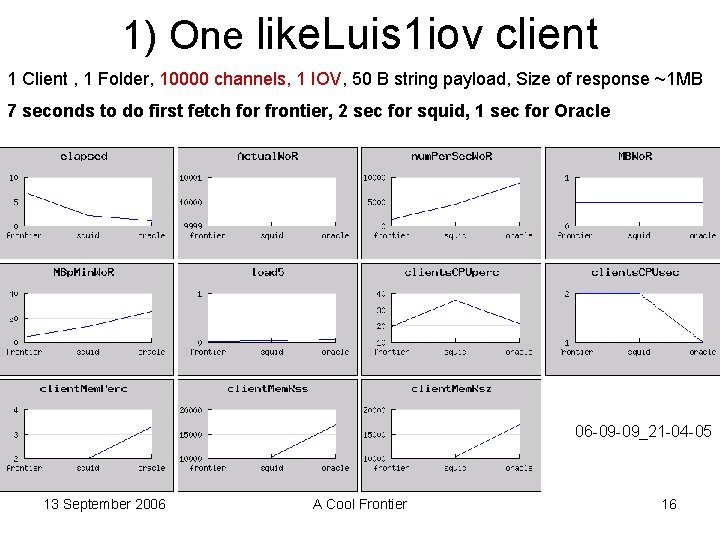

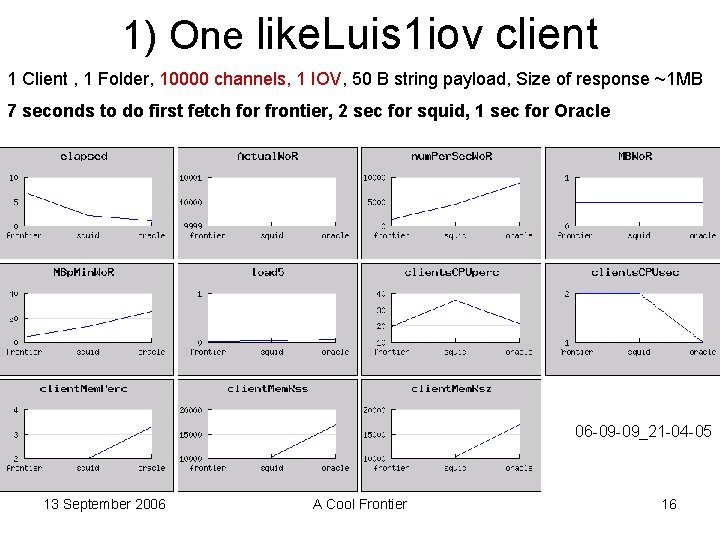

2) One likeLuis1channel client

1 client, 1 folder, 1 channel, 10000 IOVs, 50 B string payload, size of response ~1 MB

~1/2 an hour to do the first fetch for Frontier/squid (for Oracle the result was cached)

(06-09-09_19-07-17)

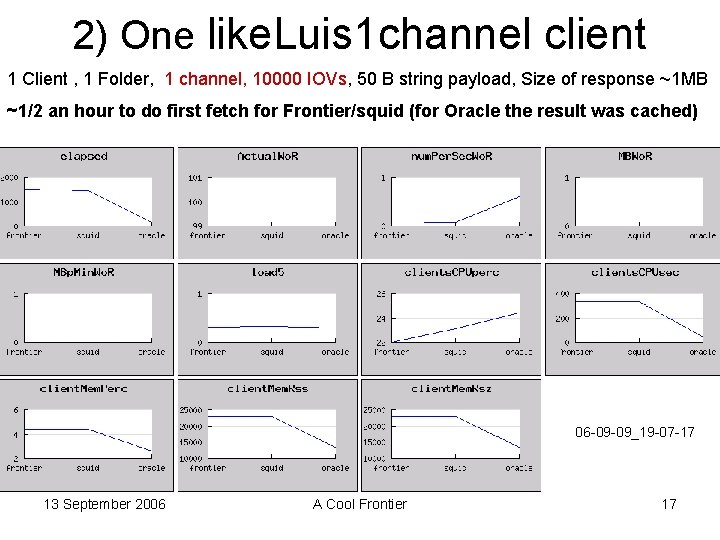

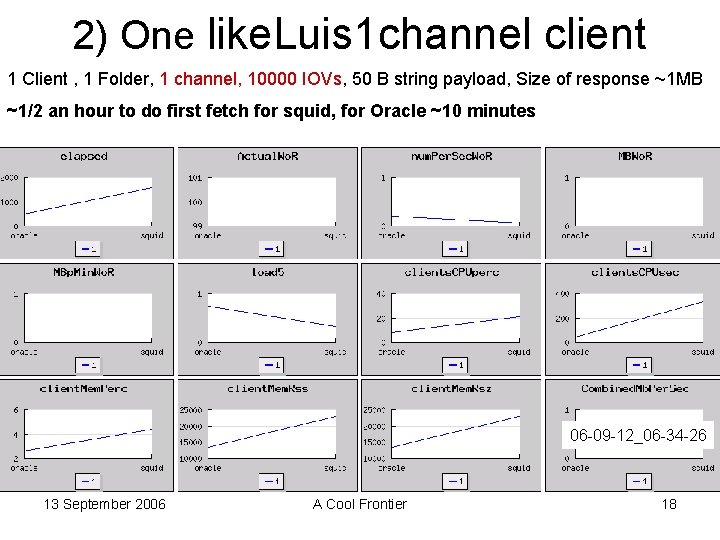

2) One likeLuis1channel client

1 client, 1 folder, 1 channel, 10000 IOVs, 50 B string payload, size of response ~1 MB

~1/2 an hour to do the first fetch for squid, ~10 minutes for Oracle

(06-09-12_06-34-26)

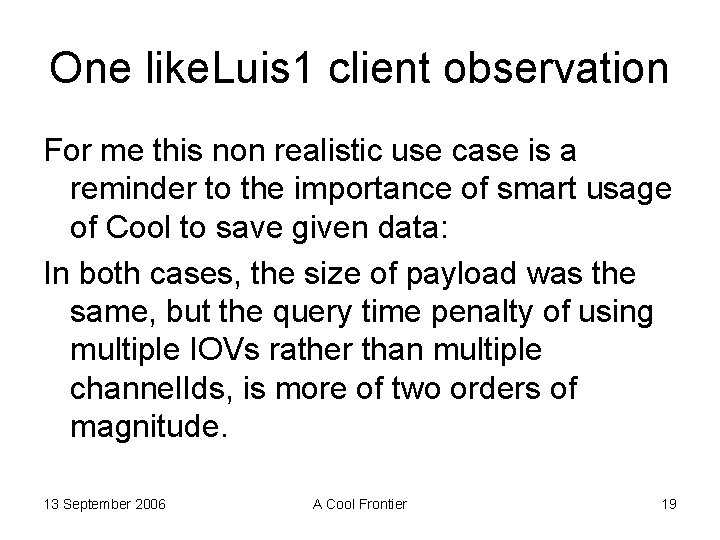

One likeLuis1 client observation

For me, this unrealistic use case is a reminder of the importance of using COOL smartly when storing given data: in both cases the size of the payload was the same, but the query-time penalty of using multiple IOVs rather than multiple channelIds is more than two orders of magnitude.
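As a rough check of the "two orders of magnitude" statement, the sketch below compares the first-fetch times quoted for Frontier/squid in the single-client runs (8 seconds for likeLuis1iov, about half an hour for likeLuis1channel). Treating "~1/2 an hour" as exactly 1800 seconds is an assumption.

```python
import math

# First-fetch times via Frontier/squid, in seconds, from the single-client runs.
FIRST_FETCH_SECONDS = {
    "likeLuis1iov": 8.0,            # 10000 channels, 1 IOV
    "likeLuis1channel": 30 * 60.0,  # 1 channel, 10000 IOVs (assumed exactly 30 min)
}

def slowdown(fast_seconds, slow_seconds):
    """How many times slower the slow case is than the fast case."""
    return slow_seconds / fast_seconds

ratio = slowdown(
    FIRST_FETCH_SECONDS["likeLuis1iov"],
    FIRST_FETCH_SECONDS["likeLuis1channel"],
)
orders_of_magnitude = math.log10(ratio)

print(f"{ratio:.0f}x slower, {orders_of_magnitude:.2f} orders of magnitude")
```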

![like Luis 1 iovsquid Scale Clients 1 20 40 60 80 Clients 1 like. Luis 1 iovsquid. Scale. Clients [1, 20, 40, 60, 80] Clients , 1](https://slidetodoc.com/presentation_image/29e9150d34763addc2ab1e79d4b61b5a/image-20.jpg)

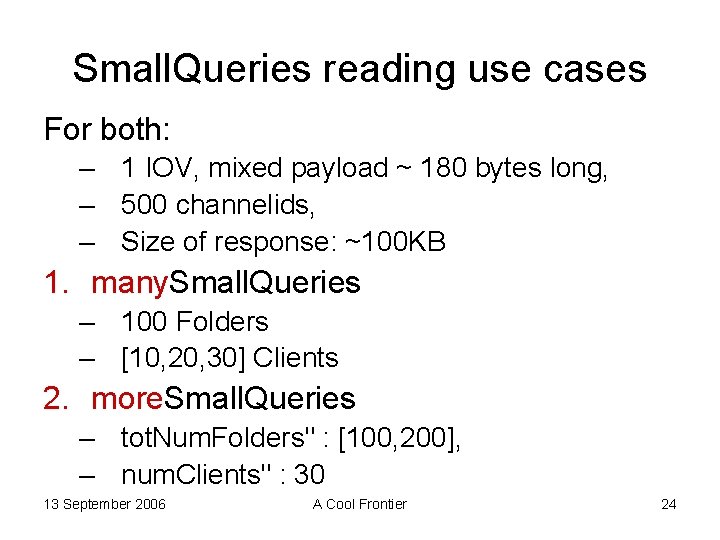

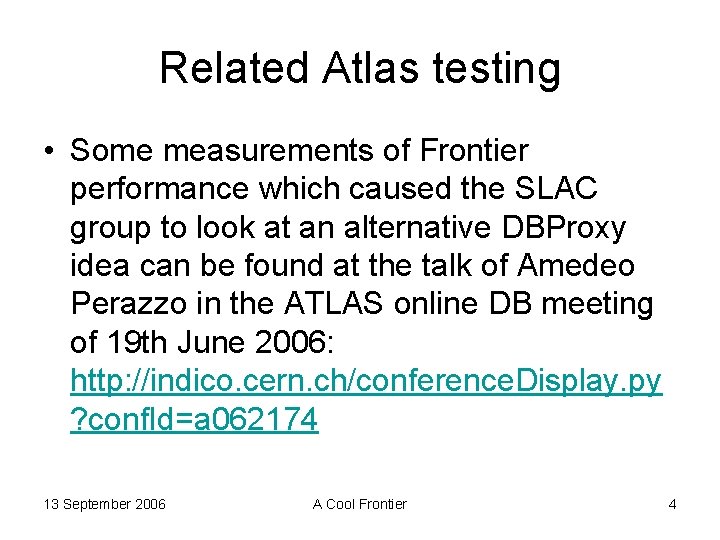

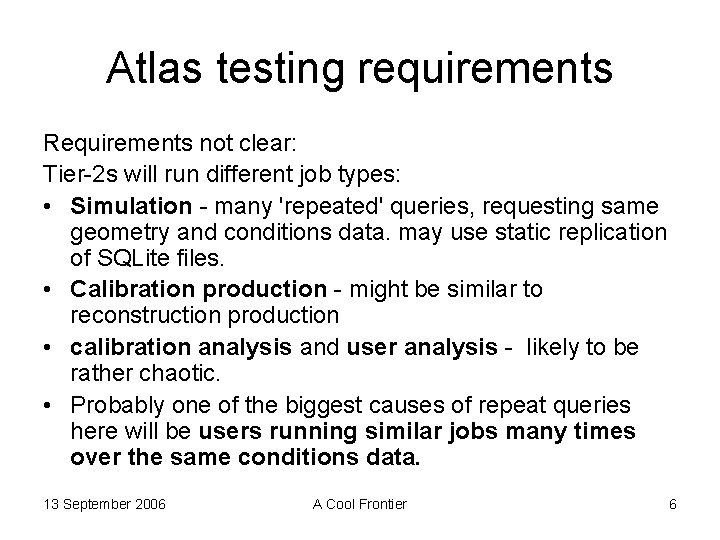

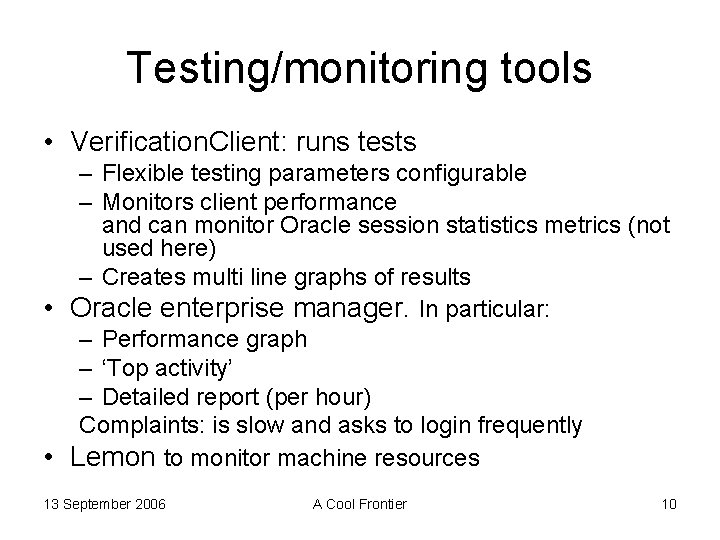

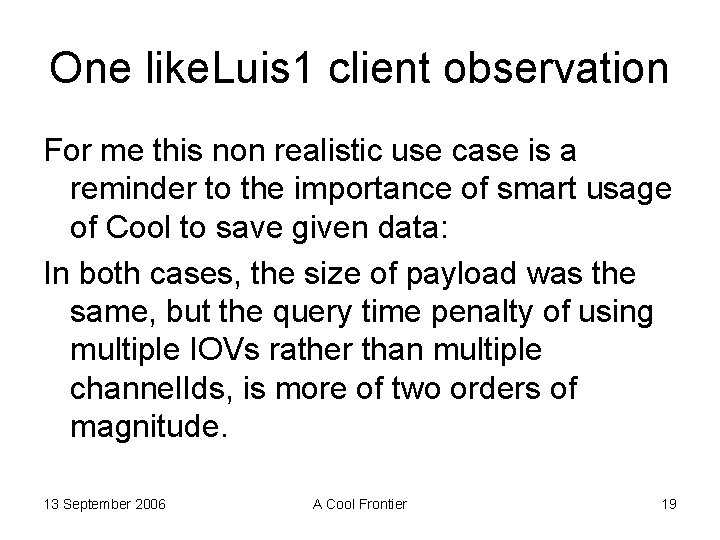

likeLuis1iovsquidScaleClients

[1, 20, 40, 60, 80] clients, 1 folder, 10000 channels, 1 IOV, 50 B payload, response ~1 MB

(num clients -> throughput [MB/sec]): (1 -> 0.2), (20 -> 3), (40 -> 4.6), (60 -> 5.3), (80 -> 5.6)

(06-09-10_02-21-42)
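One way to read the scaling numbers above is to look at the throughput per client and at the gain from each added client. This is only a sketch over the five quoted points; the helper names are illustrative.

```python
# Throughput points quoted above: (number of clients, MB/sec).
MEASURED = [(1, 0.2), (20, 3.0), (40, 4.6), (60, 5.3), (80, 5.6)]

def per_client(points):
    """Average MB/sec obtained by each client at every scale step."""
    return [(n, mb / n) for n, mb in points]

def marginal_gain(points):
    """Extra MB/sec per added client between consecutive scale steps."""
    return [
        (n1, (mb1 - mb0) / (n1 - n0))
        for (n0, mb0), (n1, mb1) in zip(points, points[1:])
    ]

for n, gain in marginal_gain(MEASURED):
    print(f"up to {n} clients: {gain:.3f} MB/sec per added client")
```

The gain per added client falls at every step, which suggests that squid throughput for this workload is flattening out somewhere near 6 MB/sec.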

likeLuis1iov, 30 clients

30 clients, 1 folder, 10000 channels, 1 IOV, 50 B payload, response ~1 MB

Throughput [MB/sec]: (Frontier -> 2), (squid -> 6.3), (Oracle -> 13)

(06-09-09_15-17-20)

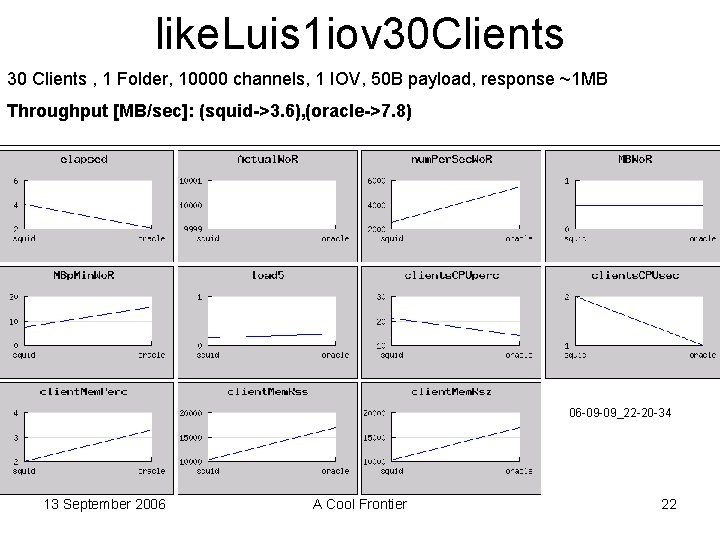

likeLuis1iov, 30 clients

30 clients, 1 folder, 10000 channels, 1 IOV, 50 B payload, response ~1 MB

Throughput [MB/sec]: (squid -> 3.6), (Oracle -> 7.8)

(06-09-09_22-20-34)

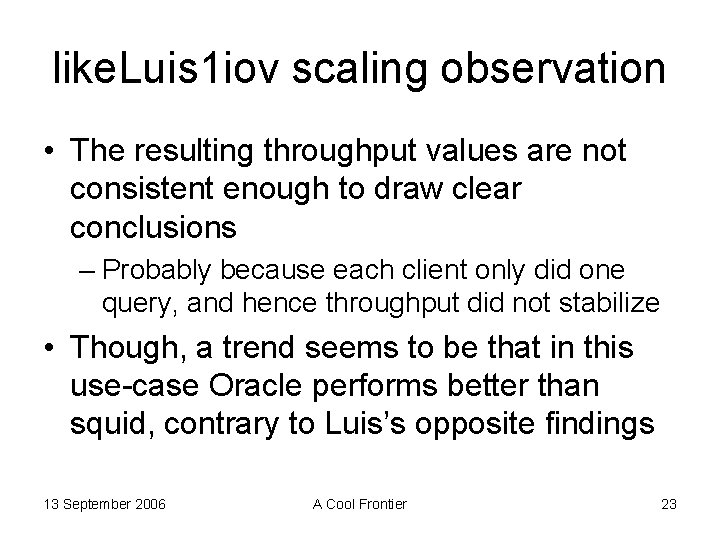

likeLuis1iov scaling observation

- The resulting throughput values are not consistent enough to draw clear conclusions
  - Probably because each client did only one query, so throughput did not stabilize
- Still, the trend seems to be that in this use case Oracle performs better than squid, contrary to Luis's findings

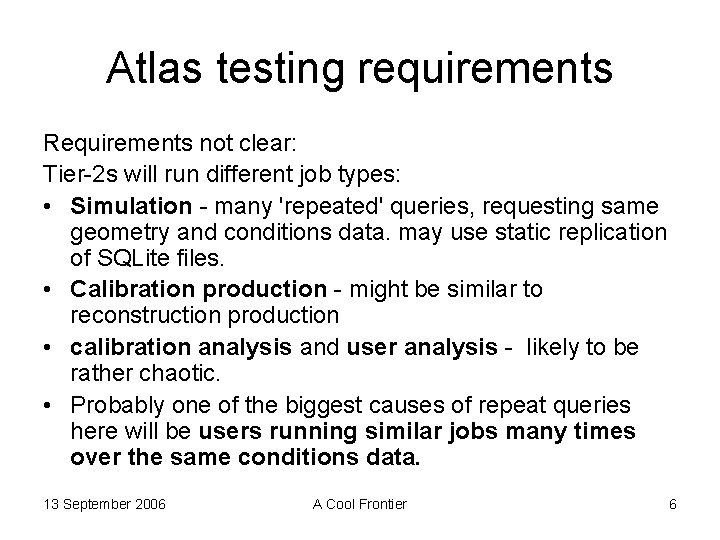

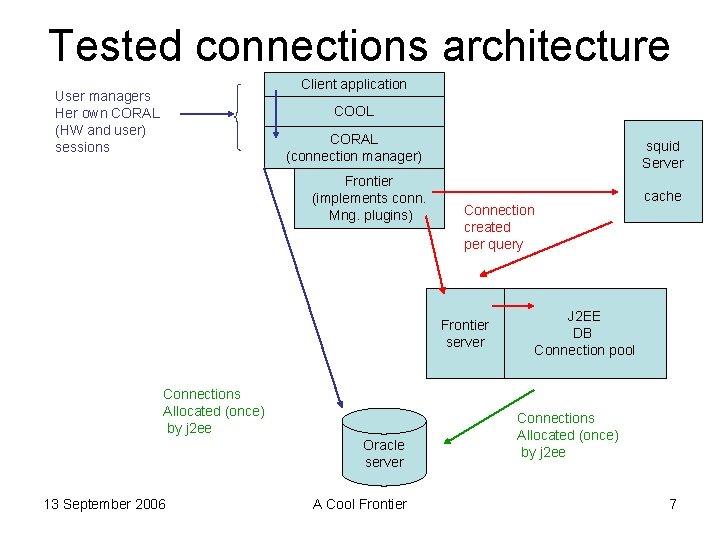

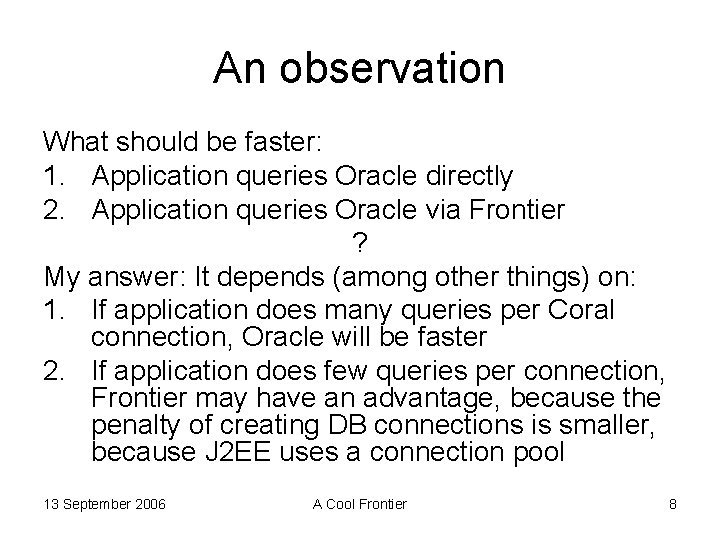

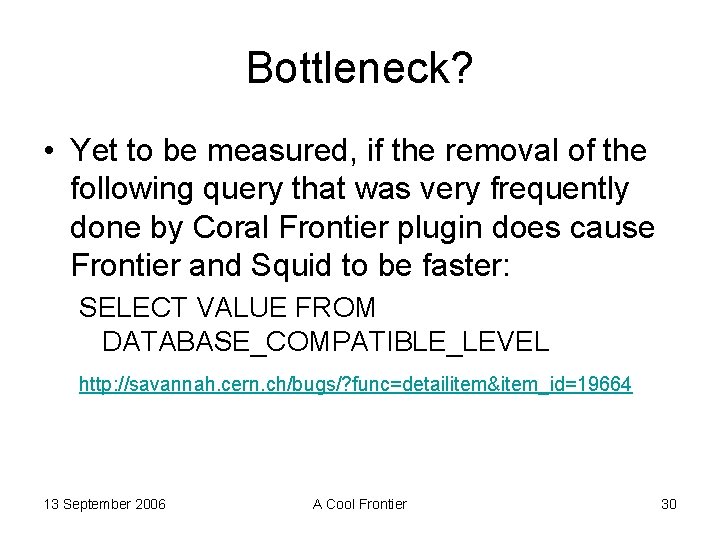

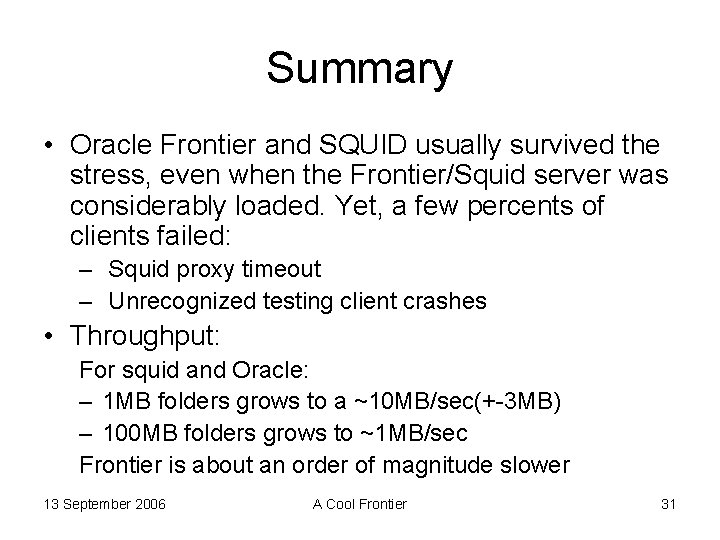

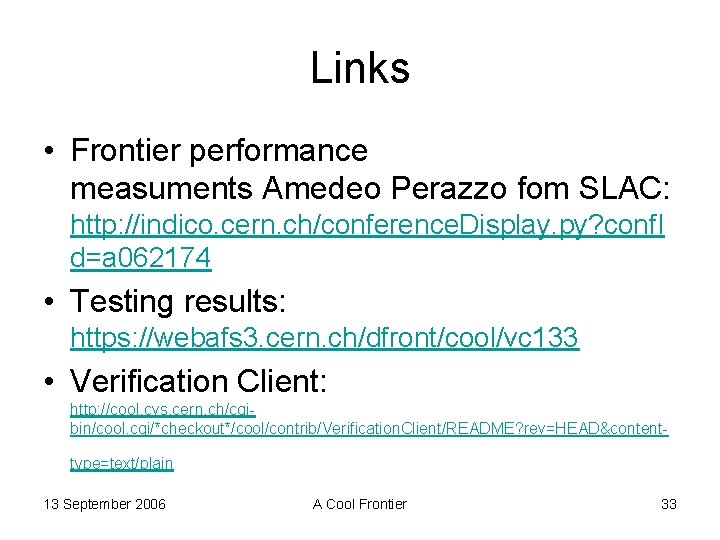

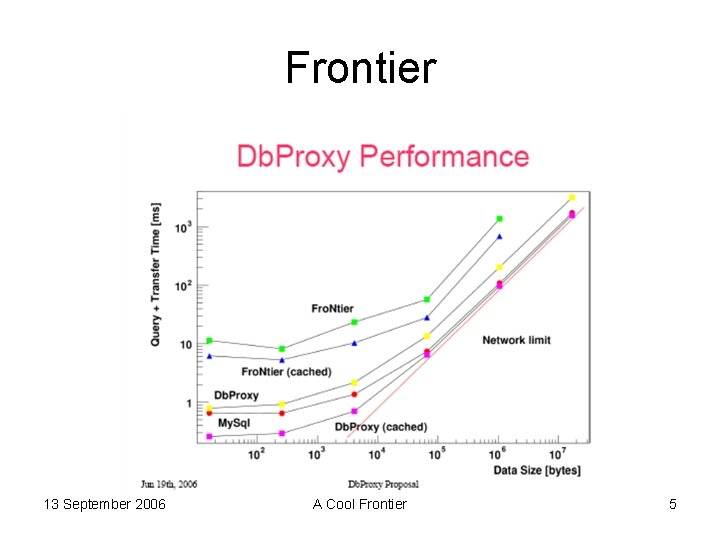

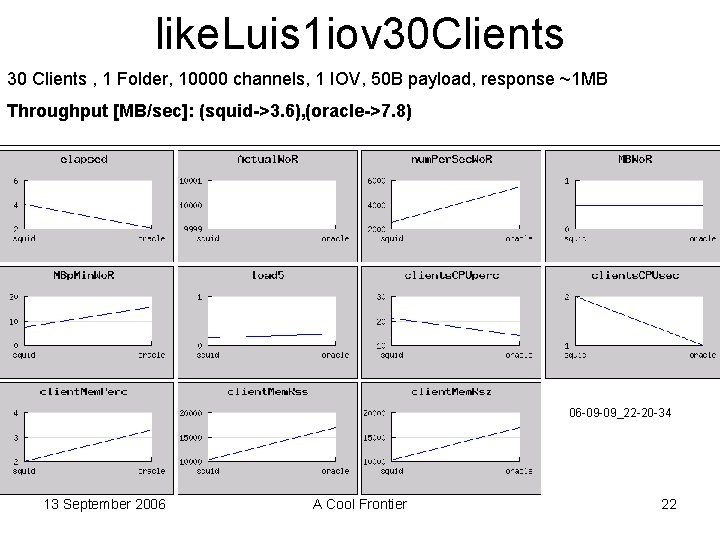

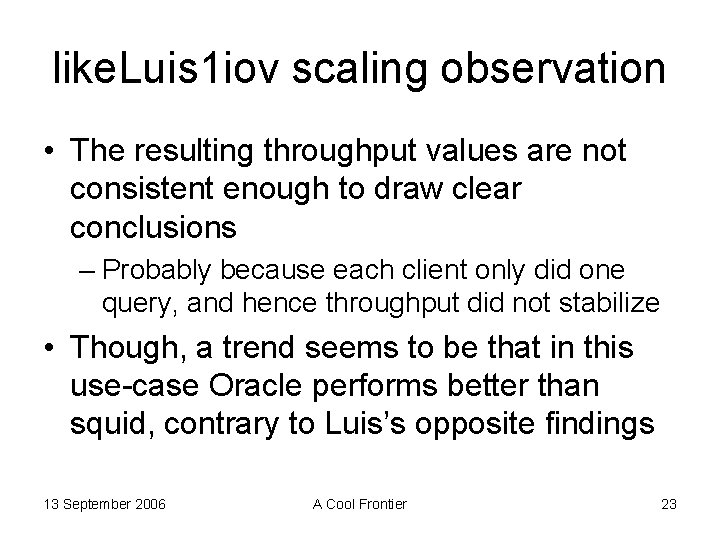

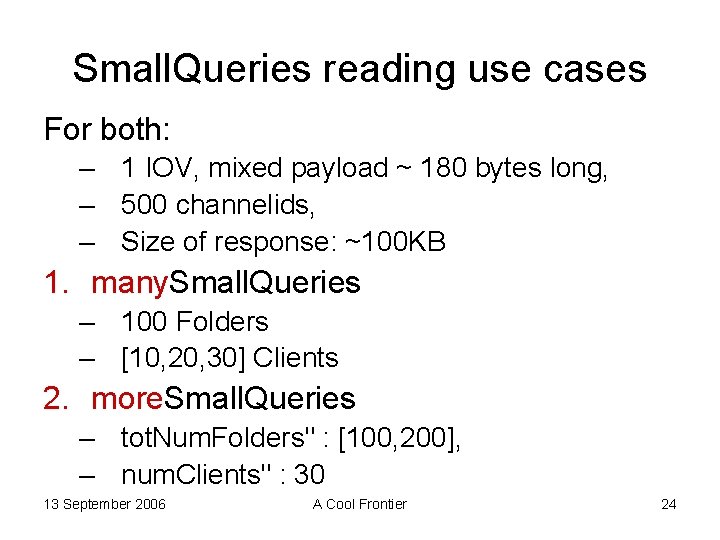

SmallQueries reading use cases

For both:

- 1 IOV, mixed payload ~180 bytes long
- 500 channelids
- Size of response: ~100 KB

1. manySmallQueries
   - 100 folders
   - [10, 20, 30] clients
2. moreSmallQueries
   - "totNumFolders" : [100, 200]
   - "numClients" : 30

![1 many Small Queries 10 20 30 Clients 100 Folders 1 IOV payload 1) many. Small. Queries [10, 20, 30] Clients , 100 Folders, 1 IOV, payload](https://slidetodoc.com/presentation_image/29e9150d34763addc2ab1e79d4b61b5a/image-25.jpg)

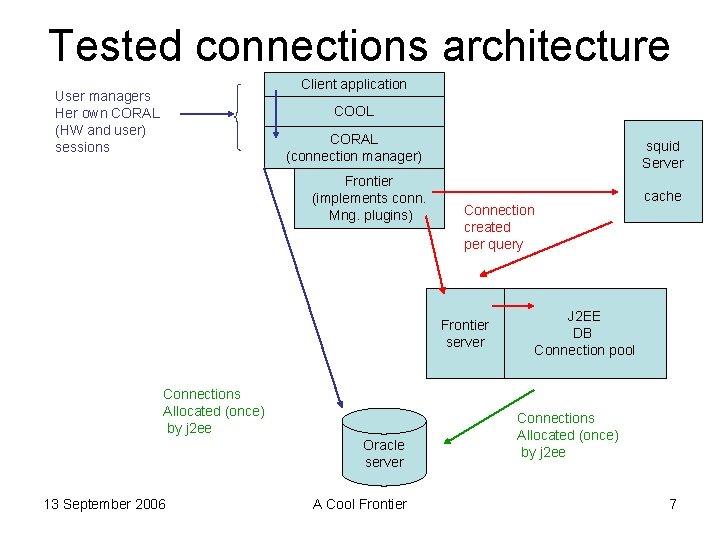

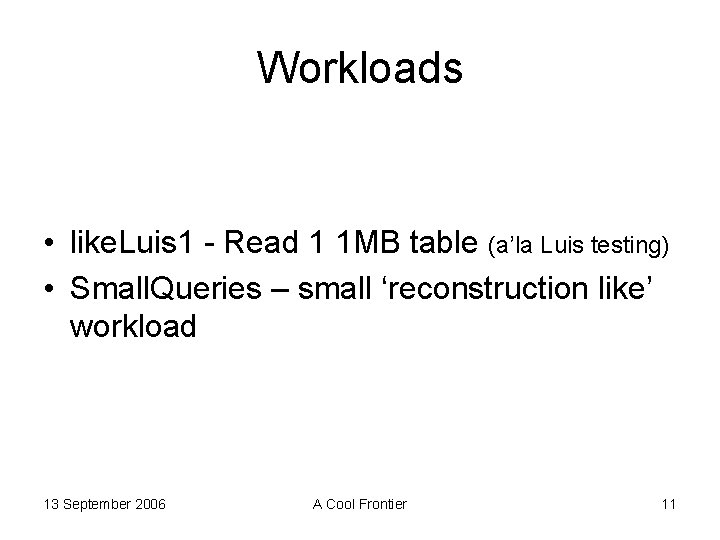

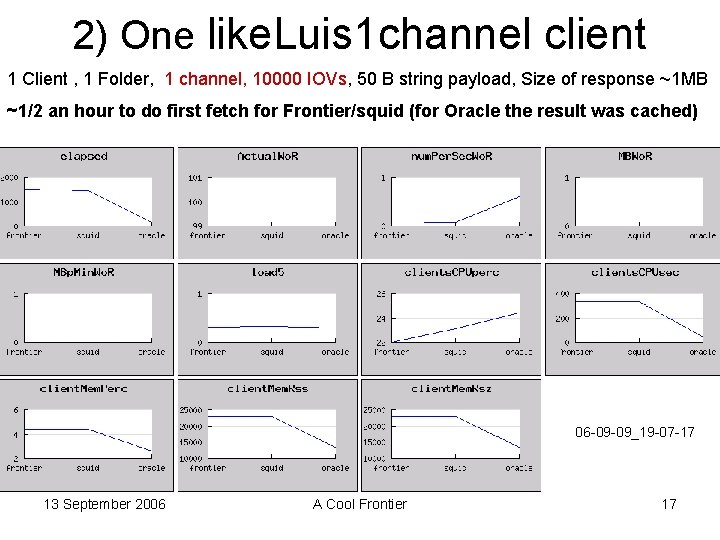

1) manySmallQueries

[10, 20, 30] clients, 100 folders, 1 IOV, payload ~180 B, 500 channels, response ~100 KB

Throughput for Oracle and squid grows to ~1 MB/sec, Frontier ~100 KB/sec

(06-09-11_09-59-43)

![1 many Small Queries 10 20 30 Clients 100 Folders 1 IOV payload 1) many. Small. Queries [10, 20, 30] Clients , 100 Folders, 1 IOV, payload](https://slidetodoc.com/presentation_image/29e9150d34763addc2ab1e79d4b61b5a/image-26.jpg)

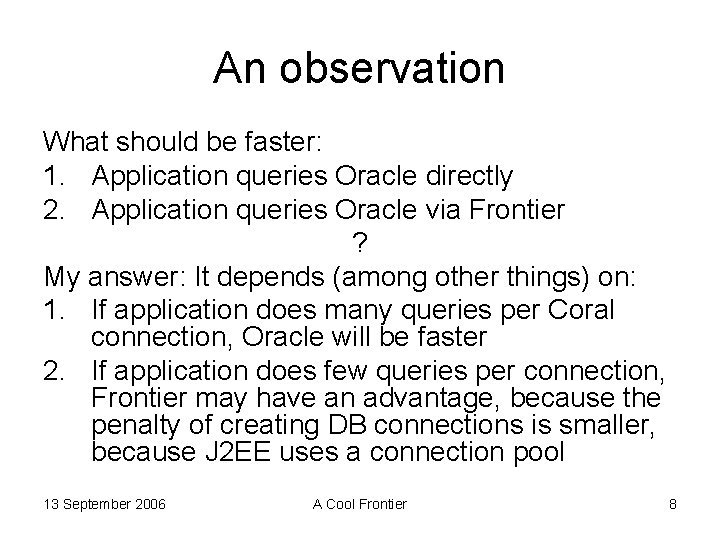

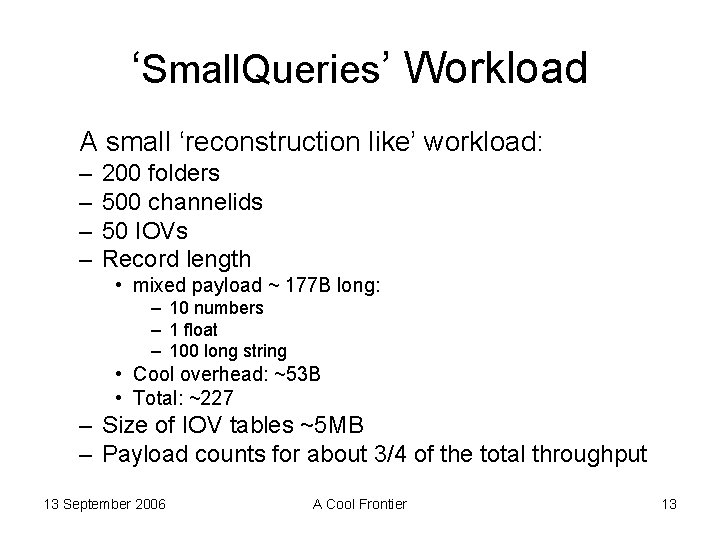

1) manySmallQueries

[10, 20, 30] clients, 100 folders, 1 IOV, payload ~180 B, 500 channels, response ~100 KB

Throughput for Oracle and squid grows to ~1 MB/sec, Frontier ~100 KB/sec

(06-09-11_18-01-20)

![2 more Small Queries 30 Clients 100 200 Folders 1 IOV mixed payload 2) more. Small. Queries 30 Clients , [100, 200] Folders, 1 IOV, mixed payload](https://slidetodoc.com/presentation_image/29e9150d34763addc2ab1e79d4b61b5a/image-27.jpg)

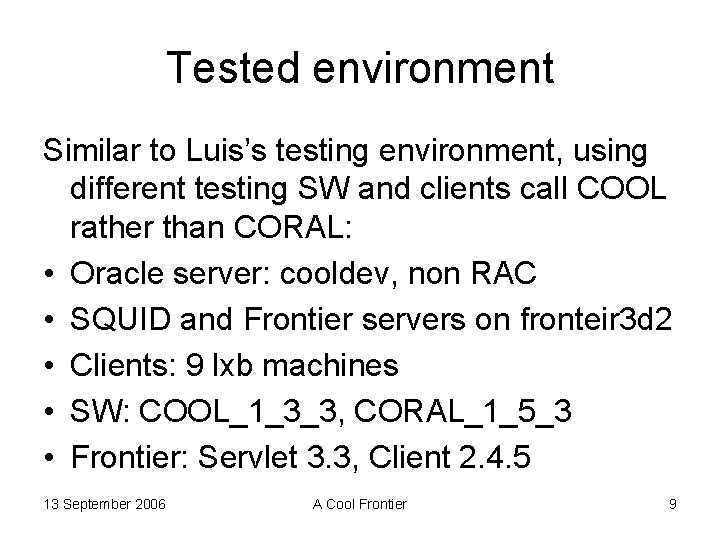

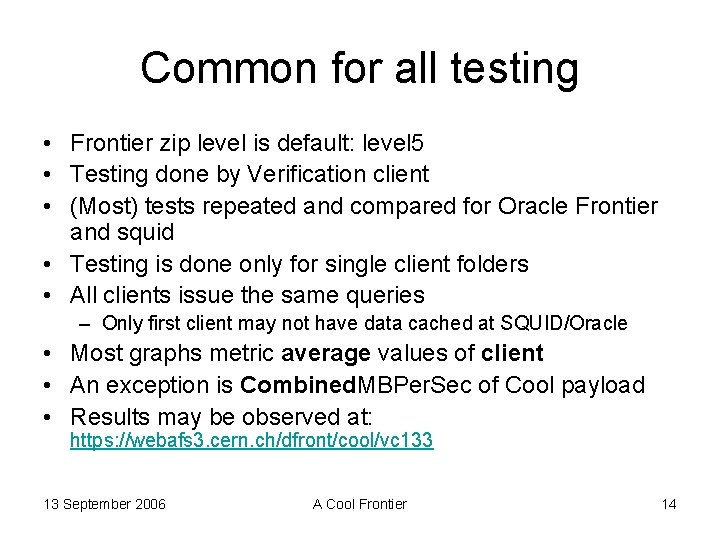

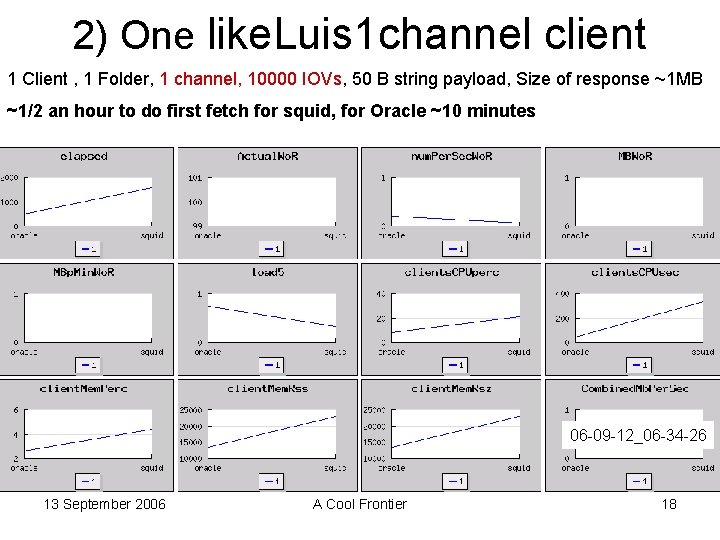

2) moreSmallQueries

30 clients, [100, 200] folders, 1 IOV, mixed payload ~180 B, 500 channels

Network OK. Oracle and squid throughput is about 1 MB/sec

(06-09-12_07-10-23)

![2 more Small Queries 30 Clients 100 200 Folders 1 IOV mixed payload 2) more. Small. Queries 30 Clients , [100, 200] Folders, 1 IOV, mixed payload](https://slidetodoc.com/presentation_image/29e9150d34763addc2ab1e79d4b61b5a/image-28.jpg)

2) moreSmallQueries

30 clients, [100, 200] folders, 1 IOV, mixed payload ~180 B, 500 channels

Network problems, mainly during the SQUID test: lower throughput for squid

(06-09-11_23-20-40)

SmallQueries observation

- Oracle and squid throughput grows to about 1 MB/sec for 30 clients, each reading 100-200 folders
- Yet to be learned which bottlenecks restrict throughput to about 1 MB/sec
- Frontier is one order of magnitude slower
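To put the ~1 MB/sec figure in terms of queries, the sketch below converts throughput into responses per second, using the ~100 KB response size of the SmallQueries use cases. It assumes 1 MB = 1000 KB and that the quoted throughput counts whole responses; if the metric counts COOL payload only, the real rates would be somewhat higher.

```python
def responses_per_second(throughput_kb_per_sec, response_kb=100.0):
    """Number of ~100 KB folder responses served per second at a given throughput."""
    return throughput_kb_per_sec / response_kb

NUM_CLIENTS = 30

oracle_squid_rate = responses_per_second(1000.0)  # ~1 MB/sec combined
frontier_rate = responses_per_second(100.0)       # ~100 KB/sec combined
per_client_rate = oracle_squid_rate / NUM_CLIENTS

print(oracle_squid_rate, frontier_rate, round(per_client_rate, 2))
```

Under these assumptions, Oracle and squid serve about 10 folder reads per second in total, or roughly one read every 3 seconds per client, and Frontier about one read per second in total.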

Bottleneck?

- Yet to be measured: whether removing the following query, which was issued very frequently by the CORAL Frontier plugin, makes Frontier and squid faster:

  SELECT VALUE FROM DATABASE_COMPATIBLE_LEVEL

  http://savannah.cern.ch/bugs/?func=detailitem&item_id=19664

Summary

- Oracle, Frontier and SQUID usually survived the stress, even when the Frontier/Squid server was considerably loaded. Yet a few percent of the clients failed:
  - Squid proxy timeout
  - Unrecognized testing client crashes
- Throughput, for squid and Oracle:
  - 1 MB folders: grows to ~10 MB/sec (+-3 MB)
  - 100 MB folders: grows to ~1 MB/sec
- Frontier is about an order of magnitude slower

What next

- Further tests to understand the bottlenecks that result in 1 MB/sec throughput in 'SmallQueries' testing
- Test squid where a given percentage of queries are not cached
- Test with multiple versioned folders
- Learn the performance advantage of remote squids
- Testing with TBD squid (Atlas?) consistency policies

Links

- Frontier performance measurements, Amedeo Perazzo from SLAC: http://indico.cern.ch/conferenceDisplay.py?confId=a062174
- Testing results: https://webafs3.cern.ch/dfront/cool/vc133
- Verification Client: http://cool.cvs.cern.ch/cgi-bin/cool.cgi/*checkout*/cool/contrib/VerificationClient/README?rev=HEAD&content-type=text/plain

Frontier bug fixes in COOL133 (Andrea)

- Frontier tests were failing before COOL133 due to many independent problems in CORAL, the Frontier client and the server; all fixed now
- Bug fixes in FrontierAccess in CORAL153 (thanks to Rado)
  - Bug #18275 in using column metadata: cause of COOL bug #18270 for MAX(IOV_SINCE) and bug #16995 for DISTINCT(CHANNEL_ID) queries
- Bug fixes in frontier_client 2.5.1 (thanks to Dave Dykstra and Luis)
  - Bug #18359 in data compression on AMD64
- Bug fixes and upgrades in frontier3d.cern.ch server (thanks to Luis)
  - Upgrade 3.0 server to 3.1 to enable compression (needed by 2.4.5 client)
  - Luis's fix over 3.2 server for bug #18191 in providing column metadata

Pending Frontier bugs in COOL (Andrea)

- New bugs have recently been identified in COOL133:
  - COOL (CORAL?) bug #19753 with table aliases (Richard)
    - High priority bug for Atlas (COOL multi-version data with user tags)
  - CORAL bug #19758: segfault in QueryDefinition::process
  - CORAL (SEAL?) bug #19762: abort in Session::isConnected
- Lower priority bugs pending from previous releases:
  - COOL (CORAL?) bug #18146 for unsigned char data types

More details

Squid proxy timeout

At my most stressed test (moreSmallQueries/06-09-12_07-10-23):

- 2 out of 60 squid clients crashed with a proxy timeout:

  std::exception caught: coral::FrontierAccess::Statement::execute ( CORAL : "Can not get data (Additional Information: [frontier.c:434]: No more servers/proxies, last error: Request failed: -6 [fn-socket.c:219]: read timed out)" from "CORAL/RelationalPlugins/frontier" )

- Yet to be learned (according to Luis) if and how this is connected with the new Frontier 'keep alive' feature, intended to avoid such failures.

Testing client crashes

2 Frontier and 2 squid clients of /manySmallQueries/06-09-11_18-01-20, about 2% of the clients. Probably an exception not caught by the client.

~/vc133/logs/manySmallQueries/06-09-11_18-01-20/m_c_numClients_10_frontier_6_read_2_err_2006-09-11_18-35-00_lxb0678_read_Run_0_Phase_1

stack trace:

- 0x007ed5e4 _ZN4seal9DebugAids10stacktraceEi + 0x60 [/afs/cern.ch/sw/lcg/app/releases/SEAL_1_8_1/slc3_ia32_gcc323/liblcg_SealBase.so]
- 0x0081812a _ZN4seal6Signal9fatalDumpEiP7siginfoPv + 0xde [/afs/cern.ch/sw/lcg/app/releases/SEAL_1_8_1/slc3_ia32_gcc323/liblcg_SealBase.so]
- 0x00817b55 _ZN4seal6Signal5fatalEiP7siginfoPv + 0xd5 [/afs/cern.ch/sw/lcg/app/releases/SEAL_1_8_1/slc3_ia32_gcc323/liblcg_SealBase.so]
- 0x00c98f80 ? + 0xc98f80 [/lib/tls/libpthread.so.0]
- 0x006ac705 abort + 0x1d5 [/lib/tls/libc.so.6]
- 0x009834f7 ? + 0x9834f7 [/usr/libstdc++.so.5]
- 0x00983544 ? + 0x983544 [/usr/libstdc++.so.5]
- 0x00983a47 ? + 0x983a47 [/usr/libstdc++.so.5]
- 0x004bceba _ZN4seal13MessageStream8doOutputEv + 0x226 [/afs/cern.ch/sw/lcg/app/releases/SEAL_1_8_1/slc3_ia32_gcc323/liblcg_SealKernel.so]
- 0x00bc3fec _ZN4seal5flushERNS_13MessageStreamE + 0x3c [/afs/cern.ch/user/d/dfront/myLCG/COOL_1_3_3/slc3_ia32_gcc323/liblcg_CoolApplication.so]
- 0x009f7bca _ZN4cool14RalDatabaseSvcD0Ev + 0xce [/afs/cern.ch/user/d/dfront/myLCG/COOL_1_3_3/slc3_ia32_gcc323/liblcg_RelationalCool.so]
- 0x004b7ea8 _ZN5boost13intrusive_ptrIN4seal9ComponentEED2Ev + 0x40 [/afs/cern.ch/sw/lcg/app/releases/SEAL_1_8_1/slc3_ia32_gcc323/liblcg_SealKernel.so]
- 0x004b93f1 _ZSt13__destroy_auxIN9__gnu_cxx17__normal_iteratorIPN4seal6HandleINS2_9ComponentEEESt6vectorIS5_SaIS5_EEEEEvT_SB_12__false_type + 0x2d [/afs/cern.ch/sw/lcg/app/releases/SEAL_1_8_1/slc3_ia32_gcc323/liblcg_SealKernel.so]
- 0x004b86da _ZN4seal7ContextD0Ev + 0x62 [/afs/cern.ch/sw/lcg/app/releases/SEAL_1_8_1/slc3_ia32_gcc323/liblcg_SealKernel.so]
- 0x004c047d _ZN4seal7ServiceD2Ev + 0x99 [/afs/cern.ch/sw/lcg/app/releases/SEAL_1_8_1/slc3_ia32_gcc323/liblcg_SealKernel.so]
- 0x00567786 _ZN4seal11ApplicationD0Ev + 0x82 [/afs/cern.ch/sw/lcg/app/releases/SEAL_1_8_1/slc3_ia32_gcc323/liblcg_SealServices.so]
- 0x00bc21cd _ZN4cool11ApplicationD1Ev + 0x101 [/afs/cern.ch/user/d/dfront/myLCG/COOL_1_3_3/slc3_ia32_gcc323/liblcg_CoolApplication.so]
- 0x00bc4837 ? + 0xbc4837 [/afs/cern.ch/user/d/dfront/myLCG/COOL_1_3_3/slc3_ia32_gcc323/liblcg_CoolApplication.so]
- 0x006ad8f3 exit + 0x63 [/lib/tls/libc.so.6]
- 0x006987fc ? + 0x6987fc [/lib/tls/libc.so.6]

Testing limitations

- Client crashes (a few percent) are not taken into account in the measurements.