
A Content-Based Approach to Collaborative Filtering Brandon Douthit-Wood CS 470 – Final Presentation

Collaborative Filtering • Method of automating word-of-mouth • Large groups of users collaborate by rating products, services, news articles, etc. • Analyze ratings data of the group to produce recommendations for individual users – Find users with similar tastes

Problems with Collaborative Filtering Methods • Performance – Prohibitively large dataset • Scalability – Will the solution scale to millions of users on the Internet? • Sparsity of data – User who has rated few items – Item with few ratings

Problems with Collaborative Filtering Methods • Cannot compare users that have no common ratings User 1 User 2 Billy Madison 4 Happy Gilmore 5 Mr. Deeds 4 50 First Dates 5 Big Daddy 4 (Ratings on a scale of 1-5)

A Content-Based Approach • Build a feature list for each user based on content of items rated • Compare users’ features to make recommendations • Now we can find similarity between users with no common ratings

Data Source • EachMovie Project – Compaq Systems Research Center – Over 18 months collected 2,811,983 ratings for 1,628 movies from 72,916 users – Ratings given on 1-5 scale – Dataset split into 75% training, 25% testing • Internet Movie Database (IMDb) – Huge database of movie information • Actors, director, genre, plot description, etc.

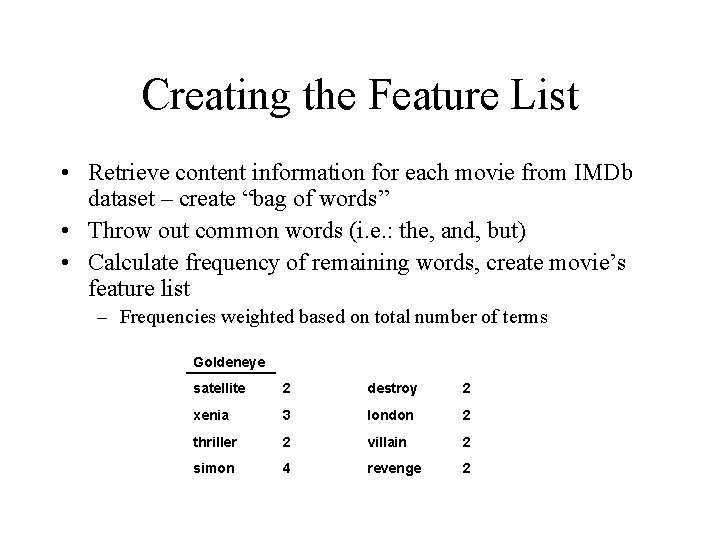

Creating the Feature List • Retrieve content information for each movie from IMDb dataset – create “bag of words” • Throw out common words (e.g., the, and, but) • Calculate frequency of remaining words, create movie’s feature list – Frequencies weighted based on total number of terms • Feature list for Goldeneye: satellite 2, destroy 2, xenia 3, london 2, thriller 2, villain 2, simon 4, revenge 2
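The feature-list steps on this slide could be sketched roughly as follows. This is a minimal illustration, not the presenter's code: the function name `build_feature_list`, the stop-word set, and the sample sentence are all hypothetical, and "weighted based on total number of terms" is assumed here to mean dividing each count by the number of terms left after stop-word removal.

```python
import re
from collections import Counter

# Assumed stop-word list; the slide only names "the", "and", "but".
STOP_WORDS = {"the", "and", "but", "a", "an", "of", "to", "in", "is"}

def build_feature_list(text, stop_words=STOP_WORDS):
    """Return {word: relative frequency} for one movie's content text."""
    # Lowercase the text and keep alphabetic tokens only (the "bag of words").
    words = [w for w in re.findall(r"[a-z]+", text.lower()) if w not in stop_words]
    counts = Counter(words)
    total = sum(counts.values())
    if total == 0:
        return {}
    # Assumption: weight each raw count by the total number of remaining terms.
    return {word: count / total for word, count in counts.items()}

# Toy content text, invented for illustration only.
features = build_feature_list(
    "The villain and the satellite: the villain plans to destroy London"
)
```

Normalizing by the term total keeps movies with long plot descriptions from dominating a user's combined feature list, which is one plausible reason for the weighting the slide mentions.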

Comparing Users • Each user has a positive and a negative feature list – Combine feature lists of movies they have rated • Compare users’ feature lists using the Pearson correlation coefficient • Users can be compared with no common ratings • Able to recommend items with few ratings • Users only need to rate a few items to receive recommendations
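As a rough sketch of how users might be compared, the code below builds positive and negative feature lists and correlates two feature lists over the union of their words. Several details are assumptions not stated on the slide: a rating of 4 or higher counts as positive, missing words are treated as weight 0, and the names `build_user_profiles` and `pearson` plus all the data are hypothetical.

```python
import math
from collections import Counter

def build_user_profiles(ratings, movie_features, positive_threshold=4):
    """Sum the feature lists of a user's rated movies into positive/negative lists.

    Assumption: ratings >= positive_threshold are "positive", the rest "negative".
    """
    positive, negative = Counter(), Counter()
    for movie, rating in ratings.items():
        target = positive if rating >= positive_threshold else negative
        target.update(movie_features[movie])  # adds the weights word by word
    return dict(positive), dict(negative)

def pearson(features_a, features_b):
    """Pearson correlation of two sparse feature lists (absent words count as 0)."""
    vocab = sorted(set(features_a) | set(features_b))
    if len(vocab) < 2:
        return 0.0
    xs = [features_a.get(w, 0.0) for w in vocab]
    ys = [features_b.get(w, 0.0) for w in vocab]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # correlation is undefined for a constant vector
    return cov / math.sqrt(var_x * var_y)

# Toy positive feature lists for three users who share no rated movies.
alice = {"comedy": 0.5, "golf": 0.3, "sandler": 0.2}
bob = {"comedy": 0.6, "sandler": 0.3, "hawaii": 0.1}
carol = {"thriller": 0.5, "satellite": 0.3, "villain": 0.2}

similar = pearson(alice, bob)       # overlapping words, positive correlation
dissimilar = pearson(alice, carol)  # disjoint words, negative correlation
```

Because the comparison runs over words rather than over co-rated movies, two users with no movie in common can still receive a meaningful similarity score.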

Methods • Three methods attempted to improve performance: – Clustering of users – Random groups of users – Compare users directly to items

User Clustering • Simple algorithm, starting with first user: – Compare to existing clusters first • If similarity is high, merge user into cluster – Compare to each remaining user – Stop if correlation is above threshold – Once a similar user is found, create a new cluster from the two users • Cluster has combined feature list of all its users • Not as efficient as possible - O(n²)
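One possible reading of the clustering pass is sketched below. The slide leaves several points open, so these are assumptions: the threshold value of 0.5 is hypothetical, a user joins the first cluster that clears the threshold, a user with no sufficiently similar partner becomes a singleton cluster, and feature lists are combined by summing weights. All function names and data are illustrative.

```python
import math

def pearson(a, b):
    """Pearson correlation of two sparse feature lists over the union of their words."""
    vocab = set(a) | set(b)
    n = len(vocab)
    if n < 2:
        return 0.0
    xs = [a.get(w, 0.0) for w in vocab]
    ys = [b.get(w, 0.0) for w in vocab]
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy) if vx > 0 and vy > 0 else 0.0

def merge(a, b):
    """Combine two feature lists by summing the weights of shared words."""
    merged = dict(a)
    for word, weight in b.items():
        merged[word] = merged.get(word, 0.0) + weight
    return merged

def cluster_users(user_features, threshold=0.5):
    """Greedy single pass over users; threshold=0.5 is a hypothetical value."""
    clusters = []      # each cluster: {"members": [...], "features": {...}}
    assigned = set()
    user_ids = list(user_features)
    for i, uid in enumerate(user_ids):
        if uid in assigned:
            continue
        feats = user_features[uid]
        # Step 1: compare to existing clusters first; merge on the first match.
        home = next(
            (c for c in clusters if pearson(feats, c["features"]) >= threshold), None
        )
        if home is not None:
            home["members"].append(uid)
            home["features"] = merge(home["features"], feats)
            assigned.add(uid)
            continue
        # Step 2: scan the remaining users, stopping at the first similar one.
        partner = next(
            (other for other in user_ids[i + 1:]
             if other not in assigned
             and pearson(feats, user_features[other]) >= threshold),
            None,
        )
        # Assumption: a user with no similar partner forms a singleton cluster.
        members = [uid] if partner is None else [uid, partner]
        features = feats if partner is None else merge(feats, user_features[partner])
        clusters.append({"members": members, "features": dict(features)})
        assigned.update(members)
    return clusters

# Toy feature lists, invented for illustration.
users = {
    "alice": {"comedy": 0.5, "golf": 0.3, "sandler": 0.2},
    "bob": {"comedy": 0.6, "sandler": 0.3, "hawaii": 0.1},
    "carol": {"thriller": 0.5, "satellite": 0.3, "villain": 0.2},
    "dave": {"comedy": 0.5, "sandler": 0.5},
}
clusters = cluster_users(users)
```

In the worst case every user is compared against every other user, which matches the quadratic cost noted on the slide.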

User Clustering • Once clusters are formed, we can predict ratings for each item – For each user, find their 10 nearest neighbors – Predicted rating is the average rating of item from these neighbors
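The prediction step could look something like the sketch below. It assumes similarity scores to candidate neighbors have already been computed, and that only candidates who actually rated the item are considered (otherwise there is nothing to average). The name `predict_rating` and the data layout are hypothetical.

```python
def predict_rating(similarities, ratings, item, k=10):
    """Average the item's rating over the k most similar users who rated it.

    similarities: {user_id: similarity to the target user}
    ratings:      {user_id: {item: rating}}
    Returns None when no candidate has rated the item.
    """
    rated = [u for u in similarities if item in ratings.get(u, {})]
    nearest = sorted(rated, key=lambda u: similarities[u], reverse=True)[:k]
    if not nearest:
        return None
    return sum(ratings[u][item] for u in nearest) / len(nearest)

# Toy data with k=2 to keep the example small; the slide uses 10 neighbors.
similarities = {"u1": 0.9, "u2": 0.8, "u3": 0.1, "u4": 0.95}
ratings = {"u1": {"m": 4}, "u2": {"m": 5}, "u3": {"m": 1}, "u4": {}}
prediction = predict_rating(similarities, ratings, "m", k=2)
```

Here `u4` is the most similar user but has not rated the item, so the two neighbors used are `u1` and `u2`.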

Selecting a Random Group • Randomly select 5000 users as a (hopefully) representative sample • As before, find a user’s 10 nearest neighbors from the random group – Predicted rating is the average rating of item from these neighbors • Much less work than clustering – How much accuracy (if any) will be lost?
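Drawing the random group is a one-step sampling operation; a minimal sketch using Python's standard library follows. The function name and the optional `seed` parameter (added so a run can be reproduced) are assumptions, not details from the slides.

```python
import random

def select_random_group(user_ids, group_size=5000, seed=None):
    """Sample a fixed-size group of distinct users without replacement."""
    rng = random.Random(seed)
    user_ids = list(user_ids)
    # Guard against datasets smaller than the requested group.
    return rng.sample(user_ids, min(group_size, len(user_ids)))

# Hypothetical integer user IDs standing in for the dataset's users.
group = select_random_group(range(72916), seed=0)
```

Each user's nearest neighbors are then searched for only within `group`, so the number of comparisons per user is bounded by the group size rather than by the full user count.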

Comparing Users to Items • No collaborative filtering involved • Compare the positive and negative feature lists of user to feature list of item – Make prediction based on which feature list has higher correlation with item • Pretty quick and easy to do – How accurate will this be?
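A sketch of the purely content-based prediction: correlate the item's feature list with the user's positive and negative lists, and predict "like" when the positive list correlates more strongly. The `pearson` helper, the tie-breaking rule (ties predict "dislike"), and the toy data are assumptions made for illustration.

```python
import math

def pearson(a, b):
    """Pearson correlation of two sparse feature lists (absent words count as 0)."""
    vocab = set(a) | set(b)
    n = len(vocab)
    if n < 2:
        return 0.0
    xs = [a.get(w, 0.0) for w in vocab]
    ys = [b.get(w, 0.0) for w in vocab]
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy) if vx > 0 and vy > 0 else 0.0

def predict_like(positive, negative, item_features):
    """True if the item looks more like what the user rated highly."""
    return pearson(positive, item_features) > pearson(negative, item_features)

# Toy user profile and items, invented for illustration.
positive = {"comedy": 0.6, "sandler": 0.4}
negative = {"thriller": 0.7, "villain": 0.3}
comedy_item = {"comedy": 0.5, "sandler": 0.3, "golf": 0.2}
thriller_item = {"thriller": 0.6, "villain": 0.3, "satellite": 0.1}

likes_comedy = predict_like(positive, negative, comedy_item)
likes_thriller = predict_like(positive, negative, thriller_item)
```

No other users are consulted, so each prediction costs only two correlations.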

Analyzing Predictions • Collected 3 metrics to evaluate predictions – Accuracy: all items predicted correctly – Precision: positive items predicted correctly – Recall: unseen positive items predicted correctly • Precision and recall have an inverse relationship
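For reference, the three metrics can be computed from binary like/dislike predictions as in the sketch below. It uses the standard information-retrieval definitions, which are assumed (not confirmed by the slides) to be what the presenter measured; the function name and sample data are hypothetical.

```python
def evaluate(predicted, actual):
    """Return (accuracy, precision, recall) for parallel lists of booleans."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)          # predicted positive, truly positive
    fp = sum(1 for p, a in pairs if p and not a)      # predicted positive, truly negative
    fn = sum(1 for p, a in pairs if not p and a)      # missed positives
    tn = sum(1 for p, a in pairs if not p and not a)  # correctly predicted negatives
    total = len(pairs)
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall

# Toy predictions against held-out test ratings.
predicted = [True, True, False, False, True]
actual = [True, False, False, True, True]
accuracy, precision, recall = evaluate(predicted, actual)
```

Predicting "positive" more liberally tends to raise recall while lowering precision, which is the inverse relationship the slide refers to.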

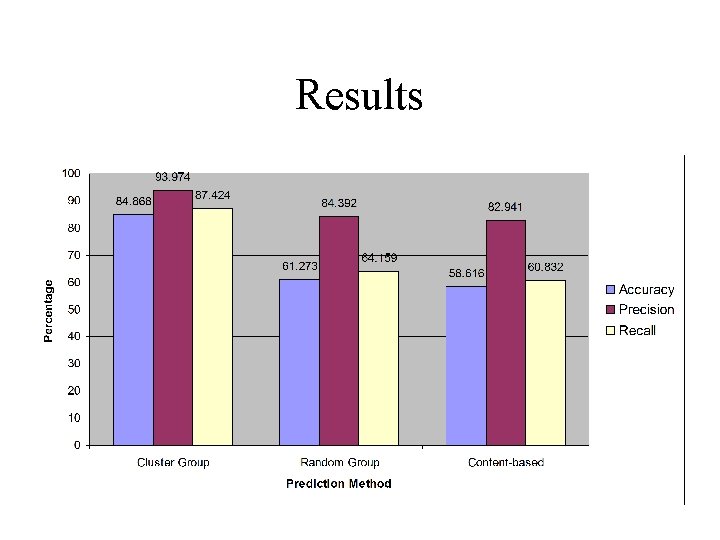

Results

Conclusions • Large gain from clustering users – Is the extra work worth it? – Depends on the application • Purely content-based predictions worked pretty well – Simple, fast solution • Random group prediction also performed reasonably well • Problems solved by content-based analysis: – Sparsity of data – Performance – Scalability
