A Comparison of String Matching Distance Metrics for Name-Matching Tasks
William Cohen, Pradeep Ravikumar, Stephen Fienberg

Motivating Example
• List of people and some attributes compiled by one source
• Updates by another source need to be merged
• Need to locate matching records
• Forcing an exact match is not sufficient
  – Typographical errors (letter “B” vs. letter “V”)
  – Scanning errors (letter “I” vs. numeral “1”)
  – Such errors exceed 20% in some cases
• Decide when two records match, i.e., decide when two strings (or words) are identical

History – String Matching
• Statistics
  – Treat as a classification problem [Fellegi & Sunter]
  – Use of other prior knowledge
    • String represented as a feature vector
• Databases
  – No prior knowledge
    • Use of distance functions: edit distance, Monge & Elkan, TFIDF
  – Knowledge-intensive approaches
    • User interaction [Hernandez & Stolfo]
• Artificial Intelligence
  – Learn the parameters of the edit distance functions
  – Combine the results of different distance functions
• This work: compare string matching distance functions for the task of name matching

Edit Distance
• Number of edit operations needed to go from string s to string t
• Operations: insert, delete, substitute
• Levenshtein: assigns unit cost to every operation
  – Distance(“smile”, “mile”) = 1
  – Distance(“meet”, “meat”) = 1
• Computed by dynamic programming
• Reordering of words can be misleading
  – “Cohen, William” vs. “William Cohen”
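The dynamic program behind the unit-cost distance can be sketched as follows. This is a minimal illustration, not the implementation used in the study; the function name `levenshtein` is chosen here for convenience.

```python
def levenshtein(s, t):
    """Unit-cost edit distance between s and t, computed row by row."""
    # prev[j] holds the distance between the first i-1 characters of s
    # and the first j characters of t.
    prev = list(range(len(t) + 1))
    for i, a in enumerate(s, start=1):
        curr = [i]  # turning s[:i] into the empty string takes i deletions
        for j, b in enumerate(t, start=1):
            curr.append(min(
                prev[j] + 1,             # delete a from s
                curr[j - 1] + 1,         # insert b into s
                prev[j - 1] + (a != b),  # substitute, free if characters agree
            ))
        prev = curr
    return prev[-1]


print(levenshtein("smile", "mile"))
print(levenshtein("meet", "meat"))
print(levenshtein("Cohen, William", "William Cohen"))
```

The first two calls reproduce the slide's examples (distance 1 each); the third shows how a simple reordering of words yields a large distance even though both strings name the same person.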

Edit Distance
• Monge-Elkan: assigns a relatively lower cost to a sequence of insertions or deletions
  – Cost A + B*(n - 1) for a run of n insertions or deletions (B < A)
• Other methods assign decreasing costs to subsequent insertions
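A small sketch of the gap cost formula above. The values A = 1.0 and B = 0.5 are illustrative assumptions only; the slides do not state the costs that were actually used.

```python
def affine_gap_cost(n, open_cost=1.0, extend_cost=0.5):
    """Cost of a run of n consecutive insertions or deletions: A + B*(n - 1).

    open_cost (A) and extend_cost (B) are hypothetical values with B < A.
    """
    if n <= 0:
        return 0.0
    return open_cost + extend_cost * (n - 1)


# A run of three insertions costs 2.0 here, versus 3 under unit-cost Levenshtein.
print(affine_gap_cost(3))
```

With B < A, one long gap is cheaper than several short ones of the same total length, which suits names with a dropped middle name or an abbreviated word.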

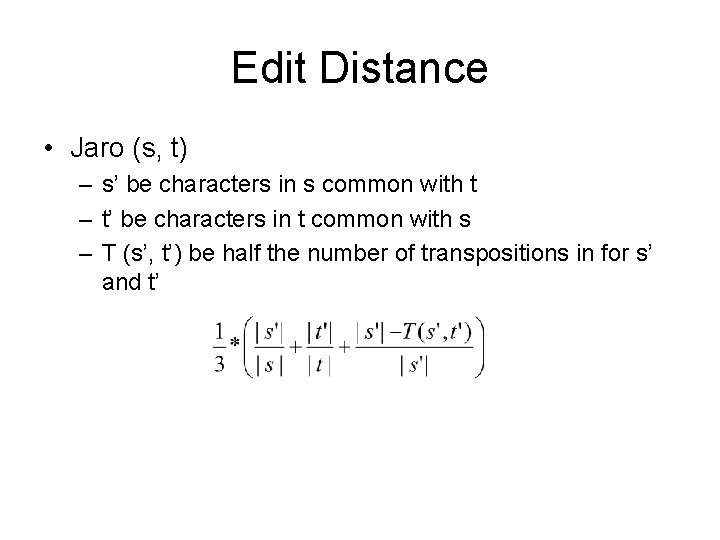

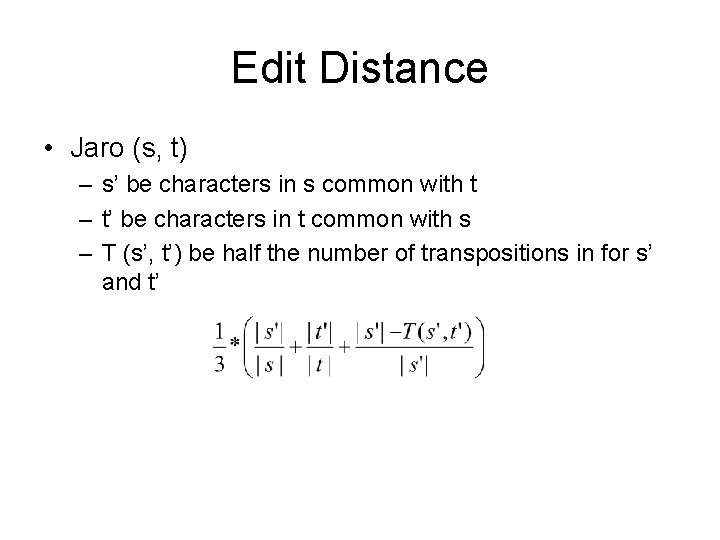

Edit Distance
• Jaro(s, t)
  – Let s’ be the characters in s that are common with t
  – Let t’ be the characters in t that are common with s
  – Let T(s’, t’) be half the number of transpositions for s’ and t’
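The definitions above feed the standard Jaro score, (|s’|/|s| + |t’|/|t| + (|s’| - T)/|s’|) / 3. The sketch below assumes the commonly used matching window of max(|s|, |t|)/2 - 1 positions for deciding which characters count as "common"; that window size is an assumption, since the slides do not specify it.

```python
def jaro(s, t):
    """Jaro similarity in [0, 1]; 1.0 means identical strings."""
    if not s or not t:
        return 0.0
    # Assumed window: characters match only if their positions are this close.
    window = max(max(len(s), len(t)) // 2 - 1, 0)
    s_hit = [False] * len(s)
    t_hit = [False] * len(t)
    for i, ch in enumerate(s):
        lo = max(0, i - window)
        hi = min(len(t), i + window + 1)
        for j in range(lo, hi):
            if not t_hit[j] and t[j] == ch:
                s_hit[i] = t_hit[j] = True
                break
    s_common = [c for c, hit in zip(s, s_hit) if hit]  # s' in the slide
    t_common = [c for c, hit in zip(t, t_hit) if hit]  # t' in the slide
    m = len(s_common)
    if m == 0:
        return 0.0
    # T(s', t'): half the number of positions where s' and t' disagree.
    half_transpositions = sum(a != b for a, b in zip(s_common, t_common)) / 2
    return (m / len(s) + m / len(t) + (m - half_transpositions) / m) / 3


print(round(jaro("MARTHA", "MARHTA"), 4))  # 0.9444
```

In the example, all six characters are common and the swapped "TH"/"HT" contributes one transposition, giving (1 + 1 + 5/6) / 3.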

Improvements to Jaro
• McLaughlin
  – Exact match: weight of 1.0
  – Similar characters: weight of 0.3
    • Scanning error (“I” vs. “1”)
    • Typographical error (“B” vs. “V”)
• Pollock and Zamora
  – Error rates increase as the position in the string moves to the right
  – Adjust the output of Jaro by a fixed amount depending on how many of the first 4 characters match
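One way to realize the prefix adjustment is the widely cited Jaro-Winkler form, which adds P * 0.1 * (1 - Jaro) where P is the length of the common prefix, capped at 4. Whether the slides intend exactly this form is an assumption; the function name and the 0.1 scale are not taken from the slides.

```python
def prefix_adjusted(base_score, s, t, max_prefix=4, scale=0.1):
    """Boost a Jaro-style score for strings that agree on their first characters.

    base_score is a similarity in [0, 1] computed elsewhere (e.g. by Jaro).
    scale=0.1 follows the common Jaro-Winkler convention (an assumption here).
    """
    prefix = 0
    for a, b in zip(s[:max_prefix], t[:max_prefix]):
        if a != b:
            break
        prefix += 1
    return base_score + prefix * scale * (1.0 - base_score)


# "MARTHA" and "MARHTA" share the prefix "MAR", so a Jaro score of 0.9444
# is raised by 0.3 * (1 - 0.9444).
print(round(prefix_adjusted(0.9444, "MARTHA", "MARHTA"), 4))  # 0.9611
```

Because the boost is scaled by (1 - score), the adjusted value never exceeds 1.0.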

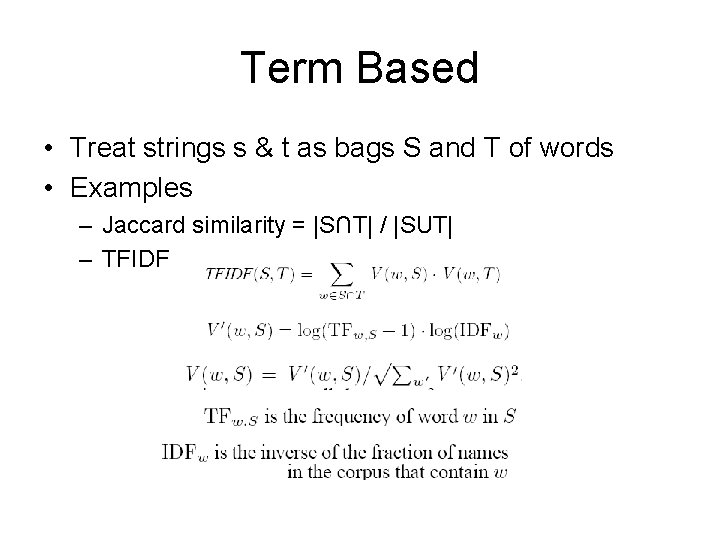

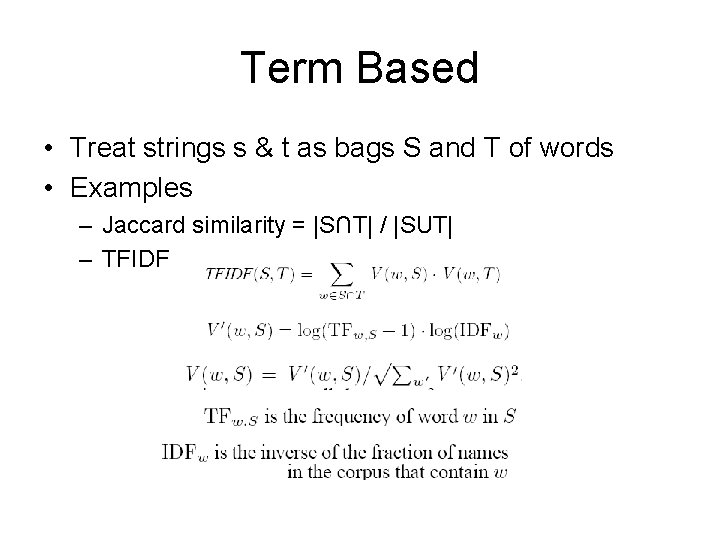

Term Based
• Treat strings s and t as bags S and T of words
• Examples
  – Jaccard similarity = |S ∩ T| / |S ∪ T|
  – TFIDF
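A minimal sketch of the Jaccard similarity above. It treats S and T as sets of lowercased words and splits on non-word characters; both the tokenizer and the use of sets rather than bags are assumptions made for illustration.

```python
import re


def jaccard(s, t):
    """|S ∩ T| / |S ∪ T| over the sets of words in s and t."""
    S = set(re.findall(r"\w+", s.lower()))
    T = set(re.findall(r"\w+", t.lower()))
    if not S and not T:
        return 0.0
    return len(S & T) / len(S | T)


print(jaccard("Cohen, William", "William Cohen"))    # 1.0
print(jaccard("William W. Cohen", "William Cohen"))  # 2 shared words out of 3
```

Word order has no effect, so the reordered name scores a perfect 1.0.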

Term Based
• Words may be weighted so that common words count less
• Advantages
  – Exploits frequency information
  – Ordering of words doesn’t matter (Cohen, William vs. William Cohen)
• Disadvantages
  – Sensitive to errors in spelling (Cohen vs. Cohon) and abbreviations (Univ. vs. University)
  – Ordering of words is ignored (City National Bank vs. National City Bank)
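The weighting idea can be sketched with a TFIDF cosine similarity. The weight log(TF + 1) * log(N / DF) is one common choice and is assumed here; the toy list of names is invented for the example.

```python
import math
import re
from collections import Counter


def tokens(s):
    return re.findall(r"\w+", s.lower())


def tfidf_vectors(names):
    """Unit-length TFIDF vectors, one dict per name (assumed weighting scheme)."""
    docs = [Counter(tokens(n)) for n in names]
    df = Counter(w for d in docs for w in d)  # number of names containing each word
    n = len(docs)
    vectors = []
    for d in docs:
        raw = {w: math.log(tf + 1) * math.log(n / df[w]) for w, tf in d.items()}
        norm = math.sqrt(sum(v * v for v in raw.values()))
        vectors.append({w: v / norm for w, v in raw.items()} if norm else {})
    return vectors


def cosine(u, v):
    """Dot product of two unit-length sparse vectors."""
    return sum(x * v.get(w, 0.0) for w, x in u.items())


# Hypothetical name list, for illustration only.
names = ["City National Bank", "National City Bank",
         "First Bank of Ohio", "Ohio State Bank"]
vecs = tfidf_vectors(names)
print(cosine(vecs[0], vecs[1]))  # word order is ignored
print(cosine(vecs[0], vecs[2]))  # the only shared word, "bank", carries no weight
```

Since "bank" occurs in every name, its weight is zero and it contributes nothing to any similarity. The first two names receive the maximum score despite their different word order, which illustrates both the advantage and the disadvantage listed above.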

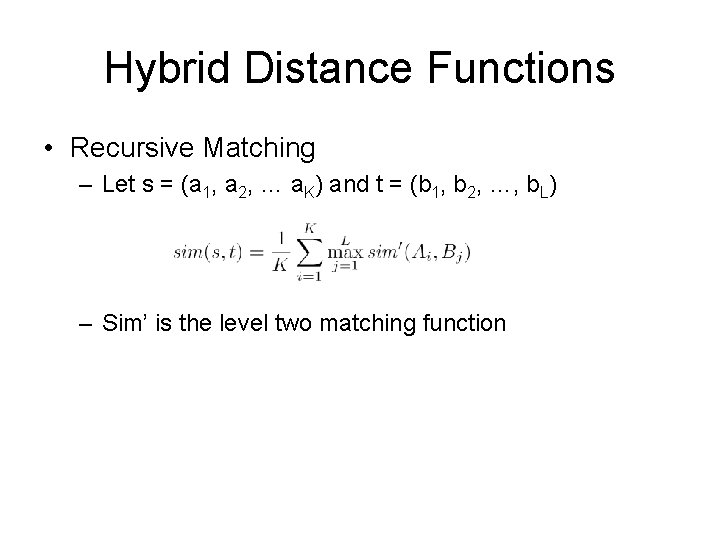

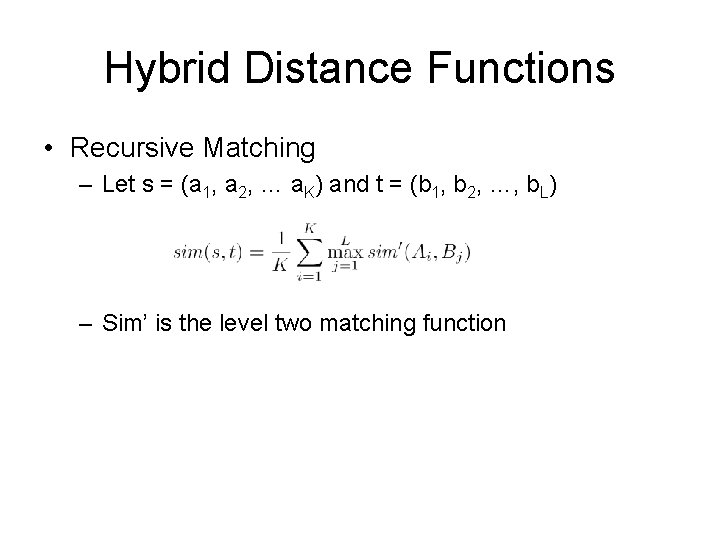

Hybrid Distance Functions
• Recursive Matching
  – Let s = (a_1, a_2, …, a_K) and t = (b_1, b_2, …, b_L)
  – Sim’ is the level-two matching function
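The usual recursive matching scheme scores each substring a_i by its best level-two match among the b_j and averages over i: sim(s, t) = (1/K) Σ_i max_j Sim’(a_i, b_j). The sketch below assumes whitespace tokenization and uses `difflib.SequenceMatcher` as a stand-in for Sim’; neither choice comes from the slides.

```python
from difflib import SequenceMatcher


def char_similarity(a, b):
    """Stand-in level-two function (an assumption, not the one in the study)."""
    return SequenceMatcher(None, a, b).ratio()


def recursive_match(s, t, level_two=char_similarity):
    """Average, over the tokens of s, of the best level-two score against t."""
    a_tokens = s.lower().split()
    b_tokens = t.lower().split()
    if not a_tokens or not b_tokens:
        return 0.0
    best = [max(level_two(a, b) for b in b_tokens) for a in a_tokens]
    return sum(best) / len(a_tokens)


print(recursive_match("William Cohen", "Cohen William"))  # 1.0
print(recursive_match("Wiliam Cohen", "Cohen William"))
```

Any character-level metric (Jaro, Monge-Elkan, and so on) can be passed as `level_two`. Note that the score is asymmetric, since it averages over the tokens of s only.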

Blocking / Pruning Methods
• Comparing all pairs is too expensive when lists are large
• A pair (s, t) is a candidate for a match if s and t share some substring v that appears in at most a fraction f of all names
• Using a v of length 4 and f = 1% finds, on average, 99% of the correct pairs
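The blocking rule can be sketched with an inverted index from character 4-grams to the names containing them. The sketch works on a single list of names and the function name `candidate_pairs` is hypothetical; the example raises f well above 1% only because the toy list is tiny.

```python
from collections import defaultdict
from itertools import combinations


def candidate_pairs(names, n=4, max_fraction=0.01):
    """Pairs of name indices sharing an n-gram that occurs in at most
    max_fraction of all names."""
    index = defaultdict(set)  # n-gram -> indices of names containing it
    for i, name in enumerate(names):
        text = name.lower()
        for k in range(len(text) - n + 1):
            index[text[k:k + n]].add(i)
    limit = max_fraction * len(names)
    pairs = set()
    for ids in index.values():
        # Skip n-grams that are unique (no pair) or too common (not selective).
        if 2 <= len(ids) <= limit:
            pairs.update(combinations(sorted(ids), 2))
    return pairs


names = ["William Cohen", "Wiliam Cohen", "Stephen Fienberg", "Pradeep Ravikumar"]
print(candidate_pairs(names, max_fraction=0.5))  # {(0, 1)}
```

Only the surviving pairs are then passed to the more expensive distance functions.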

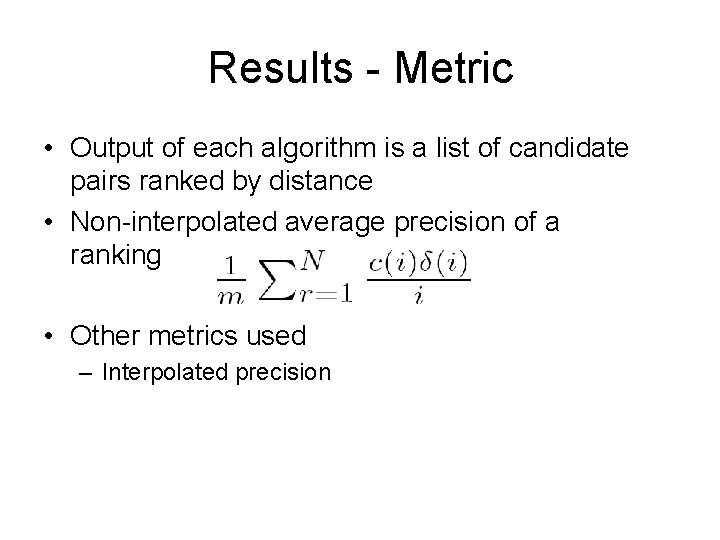

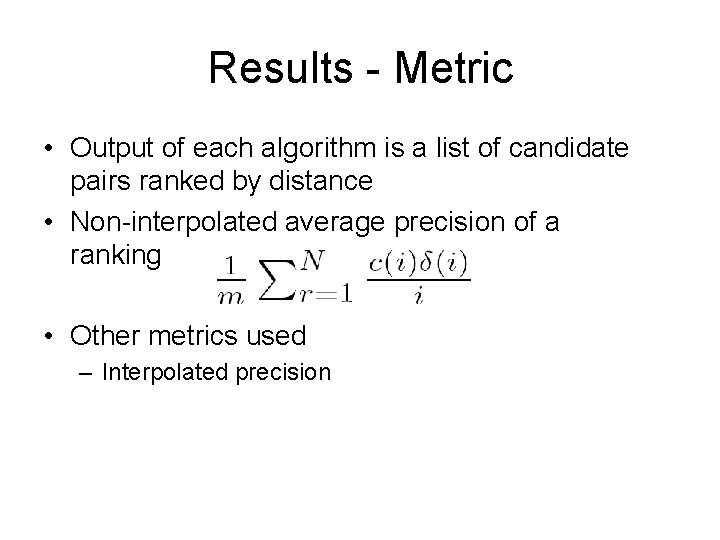

Results - Metric
• Output of each algorithm is a list of candidate pairs ranked by distance
• Evaluated by the non-interpolated average precision of the ranking
• Other metrics used
  – Interpolated precision
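Non-interpolated average precision averages the precision measured at each rank where a correct pair appears. The sketch below assumes every correct pair shows up somewhere in the ranking, so the number of correct pairs found is used as the normalizer; the input format (a list of booleans in rank order) is also an assumption.

```python
def average_precision(ranked_labels):
    """ranked_labels[i] is True if the pair at rank i + 1 is a correct match."""
    hits = 0
    total = 0.0
    for rank, correct in enumerate(ranked_labels, start=1):
        if correct:
            hits += 1
            total += hits / rank  # precision at this rank
    return total / hits if hits else 0.0


# Correct pairs at ranks 1 and 3: (1/1 + 2/3) / 2
print(round(average_precision([True, False, True]), 4))  # 0.8333
```

A ranking that places all correct pairs ahead of all incorrect ones scores 1.0.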

Results - Matching
• Term based: TFIDF is the most accurate
• Edit distance based: Monge-Elkan is the most accurate
• Jaro is as accurate as Monge-Elkan, but much faster
• Combine TFIDF and Jaro