A Comparison of Methods for Scoring Multidimensional Constructs

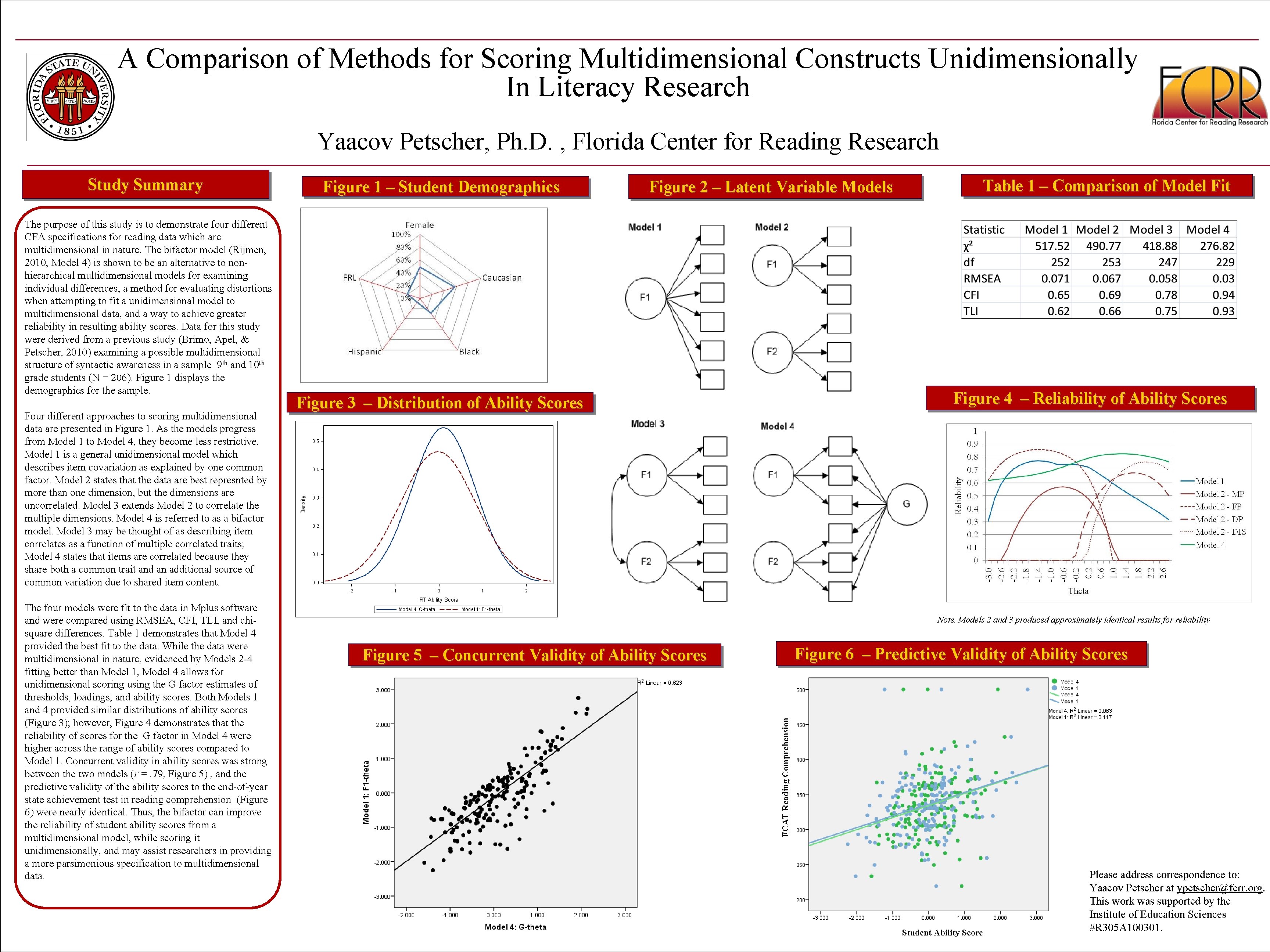

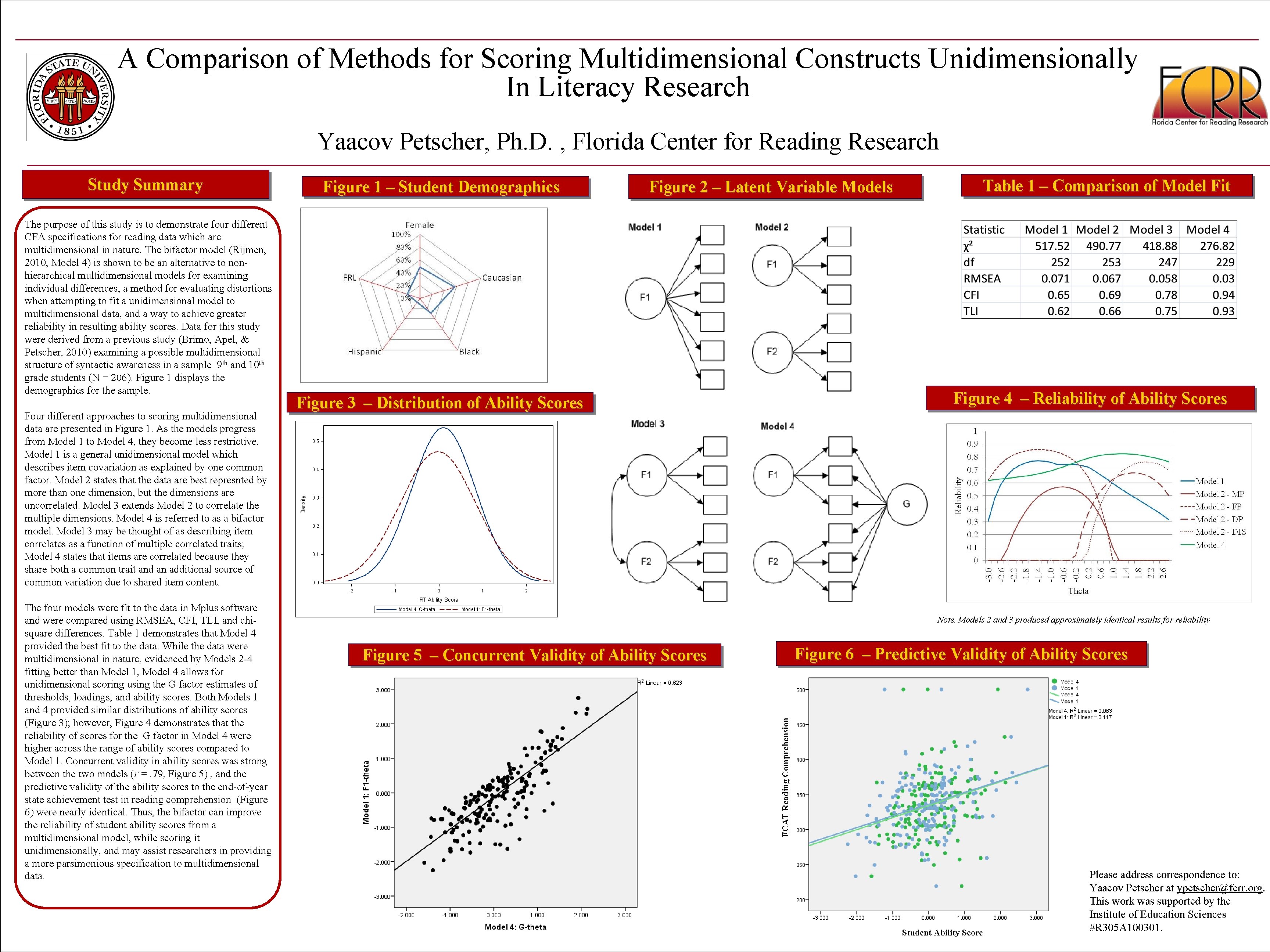

A Comparison of Methods for Scoring Multidimensional Constructs Unidimensionally in Literacy Research

Yaacov Petscher, Ph.D., Florida Center for Reading Research

The purpose of this study is to demonstrate four different CFA specifications for reading data that are multidimensional in nature. The bifactor model (Rijmen, 2010, Model 4) is shown to be an alternative to nonhierarchical multidimensional models for examining individual differences, a method for evaluating distortions when attempting to fit a unidimensional model to multidimensional data, and a way to achieve greater reliability in the resulting ability scores.

Data for this study were derived from a previous study (Brimo, Apel, & Petscher, 2010) examining a possible multidimensional structure of syntactic awareness in a sample of 9th and 10th grade students (N = 206). Figure 1 displays the demographics for the sample.

Four different approaches to scoring multidimensional data are presented in Figure 2. As the models progress from Model 1 to Model 4, they become less restrictive. Model 1 is a general unidimensional model, which describes item covariation as explained by one common factor. Model 2 states that the data are best represented by more than one dimension, but the dimensions are uncorrelated. Model 3 extends Model 2 by allowing the multiple dimensions to correlate. Model 4 is referred to as a bifactor model. Whereas Model 3 describes item correlations as a function of multiple correlated traits, Model 4 states that items are correlated because they share both a common trait and an additional source of common variation due to shared item content.

The four models were fit to the data in Mplus and were compared using RMSEA, CFI, TLI, and chi-square differences. Table 1 demonstrates that Model 4 provided the best fit to the data.
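The four specifications described above differ mainly in which loadings are estimated and whether the factors may correlate. The following is a minimal sketch of those loading patterns, not the study's Mplus input. The six-item, two-dimension layout and the names `ITEM_DIMENSION`, `N_DIMS`, and `loading_pattern` are hypothetical, chosen only for illustration, and the bifactor factors are assumed to be orthogonal, as is conventional for this model.

```python
# Hypothetical layout for illustration only: six items, two content
# dimensions. The study's actual item counts are not given in the text.
ITEM_DIMENSION = [0, 0, 0, 1, 1, 1]  # content dimension of each item
N_DIMS = 2


def loading_pattern(model):
    """Return (pattern, factors_correlated) for Models 1-4.

    pattern[i][k] == 1 means item i has a freely estimated loading on
    factor k; 0 means the loading is fixed to zero.
    """
    if model == 1:
        # One common factor explains all item covariation.
        return [[1] for _ in ITEM_DIMENSION], False
    if model in (2, 3):
        # Each item loads only on its own dimension; Model 3 additionally
        # lets the dimensions correlate.
        pattern = [[int(d == k) for k in range(N_DIMS)] for d in ITEM_DIMENSION]
        return pattern, model == 3
    if model == 4:
        # Bifactor: column 0 is the general factor, the remaining columns
        # are content-specific factors. Factors are assumed orthogonal.
        pattern = [[1] + [int(d == k) for k in range(N_DIMS)] for d in ITEM_DIMENSION]
        return pattern, False
    raise ValueError("model must be 1, 2, 3, or 4")


for m in (1, 2, 3, 4):
    pattern, correlated = loading_pattern(m)
    free = sum(map(sum, pattern))
    print(f"Model {m}: {free} free loadings, correlated factors: {correlated}")
```

In this sketch, Model 4 is the only specification in which every item loads on two factors at once. That general-factor column is what permits unidimensional scoring of multidimensional data.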
While the data were multidimensional in nature, as evidenced by Models 2-4 fitting better than Model 1, Model 4 allows for unidimensional scoring using the G factor estimates of thresholds, loadings, and ability scores. Models 1 and 4 provided similar distributions of ability scores (Figure 3); however, Figure 4 demonstrates that the reliability of scores for the G factor in Model 4 was higher across the range of ability scores than in Model 1. Concurrent validity of the ability scores was strong between the two models (r = .79, Figure 5), and the predictive validity of the ability scores for the end-of-year state achievement test in reading comprehension (Figure 6) was nearly identical.

Thus, the bifactor model can improve the reliability of student ability scores from a multidimensional model while still scoring it unidimensionally, and may assist researchers in providing a more parsimonious specification for multidimensional data.

- Figure 1 – Student Demographics
- Figure 2 – Latent Variable Models
- Table 1 – Comparison of Model Fit
- Figure 3 – Distribution of Ability Scores
- Figure 4 – Reliability of Ability Scores. Note. Models 2 and 3 produced approximately identical results for reliability.
- Figure 5 – Concurrent Validity of Ability Scores
- Figure 6 – Predictive Validity of Ability Scores (axis labels: Student Ability Score, FCAT Reading Comprehension)

Please address correspondence to Yaacov Petscher at ypetscher@fcrr.org. This work was supported by the Institute of Education Sciences (#R305A100301).