A Clustering-based QoS Prediction Approach for Web Service Recommendation
Jieming Zhu, Yu Kang, Zibin Zheng and Michael R. Lyu
Shenzhen, China, April 12, 2012
iVCE 2012

Outline
- Motivation
- Related Work
- WS Recommendation Framework
- QoS Prediction Algorithm
  - Landmark Clustering
  - QoS Value Prediction
- Experiments
- Conclusion & Future Work

Motivation
- Web services: computational components for building service-oriented distributed systems
  - Communication between applications
  - Reuse of existing services
  - Rapid development
- The rising popularity of Web services
  - E.g., Google Map Service, Yahoo! Weather Service
  - Web services take Web applications to the next level

Motivation
- Web service recommendation: improving the performance of service-oriented systems
- Quality of Service (QoS): non-functional performance
  - Response time, throughput, failure probability
  - Different users observe different performance
- Active QoS measurement is infeasible
  - The large number of Web service candidates
  - Measurement is time-consuming and resource-consuming
- QoS prediction is therefore an urgent task

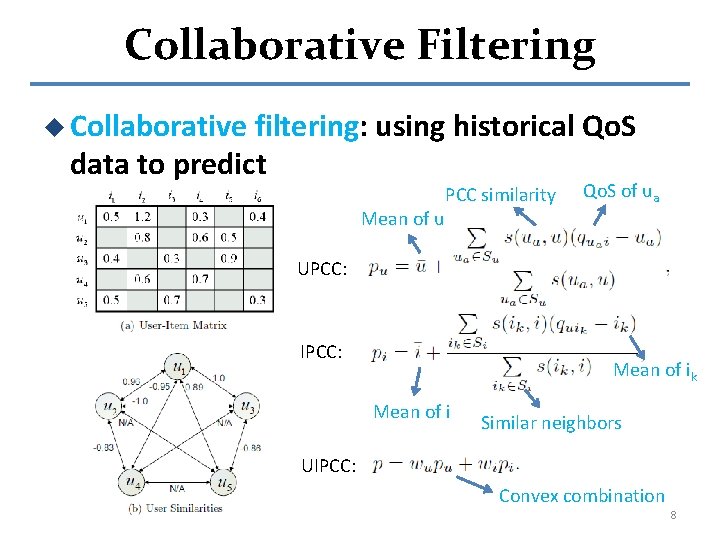

Related Work
- Collaborative filtering (CF) based approaches
  - UPCC (ICWS'07)
  - IPCC, UIPCC (ICWS'09, ICWS'10, ICWS'11)
  - Suffer from the sparsity of available historical QoS data
  - Break down especially for new users, who have no historical QoS data
- Our approach:
  - A landmark-based QoS prediction framework
  - A clustering-based prediction algorithm

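Collaborative Filtering
- Collaborative filtering uses historical QoS data to predict the QoS value $\hat{r}_{u,i}$ of user $u$ on Web service $i$.
- PCC similarity between users $u$ and $u_a$, over the set $I$ of services both have invoked:
$$\mathrm{Sim}(u,u_a)=\frac{\sum_{i\in I}(r_{u,i}-\bar{r}_u)(r_{u_a,i}-\bar{r}_{u_a})}{\sqrt{\sum_{i\in I}(r_{u,i}-\bar{r}_u)^2}\,\sqrt{\sum_{i\in I}(r_{u_a,i}-\bar{r}_{u_a})^2}}$$
- UPCC: the mean of $u$ plus the similarity-weighted deviations of the QoS of its similar neighbors $u_a \in S(u)$:
$$\hat{r}_{u,i}=\bar{r}_u+\frac{\sum_{u_a\in S(u)}\mathrm{Sim}(u,u_a)\,(r_{u_a,i}-\bar{r}_{u_a})}{\sum_{u_a\in S(u)}\mathrm{Sim}(u,u_a)}$$
- IPCC: the mean of $i$ plus the similarity-weighted deviations of its similar neighbors $i_k \in S(i)$ from their means:
$$\hat{r}_{u,i}=\bar{r}_i+\frac{\sum_{i_k\in S(i)}\mathrm{Sim}(i,i_k)\,(r_{u,i_k}-\bar{r}_{i_k})}{\sum_{i_k\in S(i)}\mathrm{Sim}(i,i_k)}$$
- UIPCC: a convex combination of the two predictions:
$$\hat{r}_{u,i}=\lambda\,\hat{r}^{\mathrm{UPCC}}_{u,i}+(1-\lambda)\,\hat{r}^{\mathrm{IPCC}}_{u,i},\qquad 0\le\lambda\le 1$$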
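The CF baselines on this slide can be sketched in plain Python. This is a minimal illustration, not the code of the cited baselines: missing QoS entries are represented as `None`, the PCC means are taken over the co-invoked services (one common variant), only positively correlated neighbors are kept, and the helper names, the `top_k` neighbor limit and the default `lam = 0.5` are assumptions.

```python
import math


def observed(vec):
    """Measured values in a row or column; None marks a missing entry."""
    return [v for v in vec if v is not None]


def avg(vec):
    """Mean of the measured values (assumes at least one is present)."""
    vals = observed(vec)
    return sum(vals) / len(vals)


def pcc(a, b):
    """Pearson correlation over the positions measured in both vectors.

    Means are taken over the co-measured positions (a common variant)."""
    pairs = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
    if len(pairs) < 2:
        return 0.0
    ma = sum(x for x, _ in pairs) / len(pairs)
    mb = sum(y for _, y in pairs) / len(pairs)
    cov = sum((x - ma) * (y - mb) for x, y in pairs)
    var = sum((x - ma) ** 2 for x, _ in pairs) * sum((y - mb) ** 2 for _, y in pairs)
    return cov / math.sqrt(var) if var > 0 else 0.0


def upcc(q, u, i, top_k=10):
    """UPCC: mean of u plus the similarity-weighted deviations of similar users.

    q[u][i] is the QoS of user u on service i (None if never invoked).
    Only positively correlated users who invoked i serve as neighbors."""
    neighbors = []
    for v, row in enumerate(q):
        if v != u and row[i] is not None:
            s = pcc(q[u], row)
            if s > 0:
                neighbors.append((s, v))
    neighbors = sorted(neighbors, reverse=True)[:top_k]
    if not neighbors:
        return avg(q[u])  # no similar user: fall back to u's own mean
    num = sum(s * (q[v][i] - avg(q[v])) for s, v in neighbors)
    den = sum(s for s, _ in neighbors)
    return avg(q[u]) + num / den


def ipcc(q, u, i, top_k=10):
    """IPCC: UPCC on the transposed matrix, so services play the users' role."""
    transposed = [list(col) for col in zip(*q)]
    return upcc(transposed, i, u, top_k)


def uipcc(q, u, i, lam=0.5, top_k=10):
    """UIPCC: convex combination of the UPCC and IPCC predictions."""
    return lam * upcc(q, u, i, top_k) + (1 - lam) * ipcc(q, u, i, top_k)


q = [
    [1.0, 2.0, 3.0, None],
    [2.0, 3.0, 4.0, 5.0],
    [3.0, 1.0, 2.0, 4.0],
]
print(upcc(q, 0, 3), ipcc(q, 0, 3), uipcc(q, 0, 3))  # prints: 3.5 4.5 4.0
```

Reusing the UPCC routine on the transposed matrix mirrors the symmetry between the UPCC and IPCC formulas. It also shows why sparsity hurts: a new user with an empty row has no mean and no PCC neighbors to draw on.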

WS Recommendation Framework
- Web service monitoring by landmarks
  a. The landmarks are deployed and monitor QoS information through periodic invocations of the Web services
  b. The landmarks are clustered using the obtained data

WS Recommendation Framework
- A service user requests a Web service invocation
  c. The user measures the latencies to the landmarks
  d. The user is assigned to a cluster
  e. QoS is predicted using information from the landmarks in the same cluster
  f. Web services are recommended to the user
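Steps c to f can be sketched end to end in Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the rule that a user joins the cluster of its nearest landmark, the plain average used for prediction, treating lower response time as better, and the function names (`assign_cluster`, `predict_qos`, `recommend`) are all hypothetical.

```python
def assign_cluster(user_latency, landmark_cluster):
    """Steps c-d (assumed rule): the user joins the cluster of its nearest landmark.

    user_latency maps landmark -> latency measured by the user;
    landmark_cluster maps landmark -> cluster id from the clustering step."""
    nearest = min(user_latency, key=user_latency.get)
    return landmark_cluster[nearest]


def predict_qos(cluster, landmark_cluster, landmark_qos, service):
    """Step e (simplified): average the service's QoS over the cluster's landmarks."""
    values = [landmark_qos[lm][service]
              for lm, c in landmark_cluster.items() if c == cluster]
    return sum(values) / len(values)


def recommend(user_latency, landmark_cluster, landmark_qos, services, top_n=1):
    """Step f: rank services by predicted response time (lower is better)."""
    cluster = assign_cluster(user_latency, landmark_cluster)
    predicted = {s: predict_qos(cluster, landmark_cluster, landmark_qos, s)
                 for s in services}
    return sorted(services, key=predicted.get)[:top_n]


landmark_cluster = {"L1": 0, "L2": 0, "L3": 1}
landmark_qos = {
    "L1": {"ws1": 0.2, "ws2": 0.8},
    "L2": {"ws1": 0.4, "ws2": 0.6},
    "L3": {"ws1": 2.0, "ws2": 0.1},
}
user_latency = {"L1": 30.0, "L2": 45.0, "L3": 120.0}
print(recommend(user_latency, landmark_cluster, landmark_qos, ["ws1", "ws2"]))  # ['ws1']
```

In this toy data `ws2` is fastest from landmark L3, but the user sits in L1's cluster, so `ws1` is recommended; the cluster assignment decides which landmarks' observations count.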

Prediction Algorithm
- Landmark Clustering
  - UBC: User-Based Clustering
    - Input: the network distances between each pair of landmarks, an $N_L \times N_L$ matrix, where $N_L$ is the number of landmarks
    - The clustering algorithm of landmarks
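The slide does not spell out the UBC clustering procedure. The sketch below assumes average-linkage agglomerative clustering on the $N_L \times N_L$ distance matrix (the deck names a hierarchical algorithm for WSBC), stopping at a hypothetical target cluster count `n_clusters`; the function name `agglomerative` is also hypothetical.

```python
def agglomerative(dist, n_clusters):
    """Average-linkage agglomerative clustering on a symmetric distance matrix.

    dist[a][b] is the distance between landmarks a and b. Starting from
    singletons, the two closest clusters are merged until n_clusters remain.
    Returns a list of clusters, each a sorted list of landmark indices."""
    clusters = [[k] for k in range(len(dist))]

    def linkage(c1, c2):
        # Average distance over all cross-cluster landmark pairs.
        return sum(dist[a][b] for a in c1 for b in c2) / (len(c1) * len(c2))

    while len(clusters) > n_clusters:
        best = None
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                d = linkage(clusters[x], clusters[y])
                if best is None or d < best[0]:
                    best = (d, x, y)
        _, x, y = best
        clusters[x] = sorted(clusters[x] + clusters[y])
        del clusters[y]  # y > x, so index x stays valid
    return clusters


dist = [
    [0, 1, 10, 11],
    [1, 0, 9, 10],
    [10, 9, 0, 2],
    [11, 10, 2, 0],
]
print(agglomerative(dist, 2))  # [[0, 1], [2, 3]]
```

This naive version recomputes every linkage on each merge, which is fine for the 100 landmarks used in the experiments but scales poorly beyond that.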

Prediction Algorithm
- Landmark Clustering
  - WSBC: Web Service-Based Clustering
    - Input: the QoS values between the $N_L$ landmarks and $W$ Web services, where $W$ is the number of Web services
    - Compute the similarity between landmarks
    - Call a hierarchical clustering algorithm to cluster the landmarks
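The similarity step of WSBC can be sketched as follows. The slide does not name the similarity measure; this sketch assumes PCC (the measure used by the CF baselines) over each landmark's QoS vector, assumes the landmarks' $N_L \times W$ QoS matrix is fully observed, and converts similarity to a distance $1 - \mathrm{PCC}$ so that a hierarchical clustering routine can consume it. The conversion and the function names are assumptions.

```python
import math


def pcc(a, b):
    """Pearson correlation between two landmarks' QoS vectors (same service order)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var = sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(var) if var > 0 else 0.0


def landmark_distance_matrix(qos):
    """qos is an N_L x W matrix; returns an N_L x N_L matrix of 1 - PCC.

    Values lie in [0, 2]: 0 for perfectly correlated landmarks,
    2 for perfectly anti-correlated ones."""
    n = len(qos)
    return [[0.0 if a == b else 1.0 - pcc(qos[a], qos[b]) for b in range(n)]
            for a in range(n)]


qos = [
    [1.0, 2.0, 3.0],  # landmark 0
    [2.0, 4.0, 6.0],  # landmark 1: same trend as landmark 0
    [3.0, 2.0, 1.0],  # landmark 2: opposite trend
]
print(landmark_distance_matrix(qos))
# [[0.0, 0.0, 2.0], [0.0, 0.0, 2.0], [2.0, 2.0, 0.0]]
```

Because PCC ignores scale, landmarks 0 and 1 end up at distance 0 even though landmark 1 sees twice the response times; WSBC-style similarity groups landmarks by how they rank services, not by absolute QoS.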

Prediction Algorithm
- QoS Prediction
  - Input: the network distances between $N_U$ service users and $N_L$ landmarks, where $N_U$ is the number of service users
  - Take the distances between user $u$ and the landmarks in the same cluster
  - Compute the similarity between $u$ and each such landmark $l$
  - Predict the QoS of $u$ using the information of the landmarks in the same cluster
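The prediction step can be sketched as a similarity-weighted average over the landmarks in the user's cluster. The slide does not give the distance-to-similarity mapping or the exact prediction formula; this sketch assumes the hypothetical similarity $1/(1+d)$ so that nearer landmarks get larger weights, and the function name `predict_for_user` is also hypothetical.

```python
def predict_for_user(user_dist, cluster_landmarks, landmark_qos, service):
    """Predict user u's QoS on `service` from the landmarks in u's cluster.

    user_dist[lm]: network distance (e.g. latency) from u to landmark lm.
    landmark_qos[lm][service]: QoS value that landmark lm measured.
    The similarity 1 / (1 + distance) is an assumed choice: nearer
    landmarks receive larger weights."""
    weights = {lm: 1.0 / (1.0 + user_dist[lm]) for lm in cluster_landmarks}
    total = sum(weights.values())
    return sum(w * landmark_qos[lm][service] for lm, w in weights.items()) / total


user_dist = {"A": 0.0, "B": 1.0}
landmark_qos = {"A": {"ws1": 1.0}, "B": {"ws1": 4.0}}
print(predict_for_user(user_dist, ["A", "B"], landmark_qos, "ws1"))  # 2.0
```

Landmark A is closer, so its weight (1.0) is twice B's (0.5), pulling the prediction toward A's observation. Unlike CF, this needs no QoS history from the user, only its latencies to the landmarks.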

Experiments
- Data Collection
  - The response times between 200 users (PlanetLab nodes) and 1,597 Web services
  - The latencies between the 200 distributed nodes

Experiments
- Evaluation Metrics
  - MAE (Mean Absolute Error): measures the average prediction accuracy
  - RMSE (Root Mean Square Error): reflects the deviation of the prediction errors
  - MRE (Median Relative Error): a key metric for identifying the effect of errors across prediction values of different magnitudes; 50% of the relative errors are below the MRE
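The three metrics can be computed as below. This is a sketch using the standard definitions; it assumes the relative error is $|r - \hat{r}| / r$ with positive true values such as response times, and the function names are illustrative.

```python
import math
import statistics


def mae(actual, predicted):
    """Mean Absolute Error: average |r - r_hat|."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root Mean Square Error: penalizes large errors more heavily than MAE."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mre(actual, predicted):
    """Median Relative Error: half of the |r - r_hat| / r values lie below it.

    Assumes strictly positive actual values (e.g. response times)."""
    return statistics.median([abs(a - p) / a for a, p in zip(actual, predicted)])


actual = [1.0, 2.0, 4.0, 8.0]
predicted = [1.5, 2.0, 3.0, 10.0]
print(mae(actual, predicted), mre(actual, predicted))  # 0.875 0.25
```

The largest absolute error (2.0, on the 8.0 value) dominates MAE and RMSE, while its relative error (0.25) equals that of the 4.0 value; this is why MRE helps compare errors across prediction values of different magnitudes.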

Experiments
- Performance Comparison
  - Parameter settings: 100 landmarks, 100 users, 1,597 Web services, $N_c = 50$, matrix density = 50%
  - WSBC and UBC are our approaches; UBC outperforms the other approaches
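A setting such as "matrix density = 50%" is commonly produced by randomly hiding part of the measured QoS matrix. The slide does not describe the split; this sketch assumes density means the fraction of measured entries kept for training, with the removed entries held out as test cases. The function name, the exact-count shuffle and the fixed seed are assumptions.

```python
import random


def split_by_density(matrix, density, seed=0):
    """Randomly keep a `density` fraction of the measured entries for training.

    Returns (train, test): train is a copy of the matrix with the held-out
    entries set to None; test lists the held-out (row, col, true_value)
    triples that predictions are scored against."""
    cells = [(r, c) for r, row in enumerate(matrix)
             for c, v in enumerate(row) if v is not None]
    rng = random.Random(seed)  # fixed seed for a reproducible split
    rng.shuffle(cells)
    n_keep = round(density * len(cells))
    train = [[None] * len(row) for row in matrix]
    for r, c in cells[:n_keep]:
        train[r][c] = matrix[r][c]
    test = [(r, c, matrix[r][c]) for r, c in cells[n_keep:]]
    return train, test


matrix = [[float(4 * r + c + 1) for c in range(4)] for r in range(4)]
train, test = split_by_density(matrix, 0.5)
print(len(test))  # 8
```

Shuffling and keeping an exact count (rather than flipping a coin per entry) makes the realized density match the requested one exactly, which keeps comparisons across approaches consistent.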

Experiments
- The Impact of Parameters
  - The impact of $N_c$: the performance is sensitive to $N_c$, so choosing an appropriate $N_c$ is important.
  - The impact of landmark selection: landmark deployment is important for improving prediction performance.

Conclusion & Future Work
- Propose a landmark-based QoS prediction framework
- Our clustering-based approaches outperform the other existing approaches
- Release a large-scale Web service QoS dataset that includes the latency information between landmarks
  - http://www.wsdream.net
- Future work:
  - Validate our approach by implementing the system
  - Apply other landmark-based approaches to QoS prediction

Thank you!
Q&A
