A Case for Flash Memory SSD in Enterprise

- Slides: 21

A Case for Flash Memory SSD in Enterprise Database Applications
- Authors: Sang-Won Lee, Bongki Moon, Chanik Park, Jae-Myung Kim, Sang-Woo Kim
- Published in SIGMOD 2008
- Presented by Jin Xiong, 11/4/2008

Outline
- Flash memory SSD
- DB storage and workload
- Experimental settings
- Transaction log
- MVCC rollback segment
- Temporary table spaces
- Conclusions

Flash memory SSD (1)
- Flash memory SSD
  - NAND-type flash memory
  - Samsung
  - Interface: IDE

Flash memory SSD (2)
- Characteristics
  - Uniform random access speed
    - Purely electronic device with no mechanically moving parts
    - Access latency is almost linearly proportional to the amount of data accessed, irrespective of its physical location in flash memory
    - One of the key characteristics we can take advantage of
  - Erase before overwriting
    - Data on an SSD cannot be updated in place
    - The erase unit is much larger than a sector: 128 KB vs. 1 KB
    - Erase is time-consuming, typically 1 to 2 ms
  - Asymmetric read and write speeds
    - Writes are much slower than reads on an SSD: 0.4 ms vs. 0.2 ms in this paper

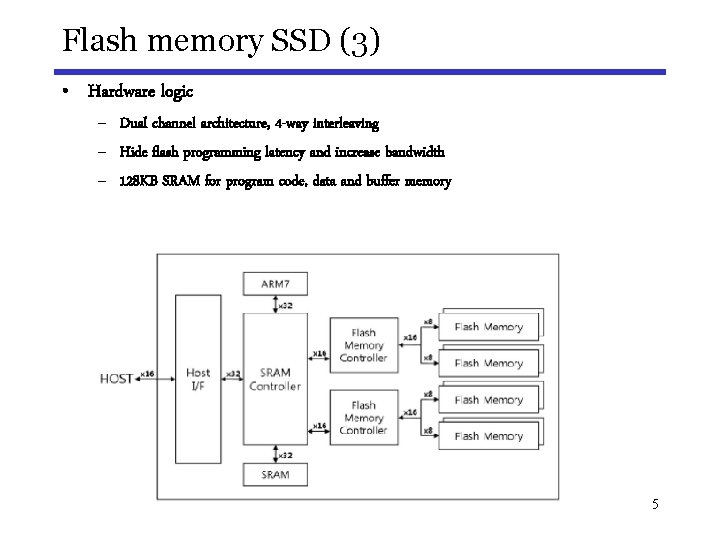

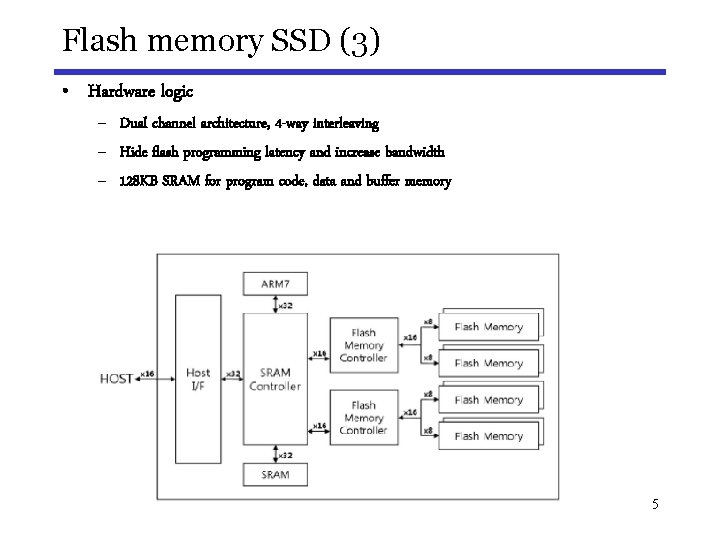
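The "erase before overwriting" constraint on the Flash memory SSD (2) slide can be illustrated with a toy model. This is a minimal sketch under loudly stated assumptions: `ToyFlash`, the 4-page erase unit, and the page-level mapping are hypothetical and are not the Samsung FTL (which maps 1 MB super-blocks). An update never overwrites the old physical page; it writes a clean page and marks the stale copy invalid, and space is only reclaimed by erasing a whole block.

```python
PAGES_PER_BLOCK = 4  # hypothetical; a real 128 KB erase unit holds many more pages


class ToyFlash:
    """Hypothetical page-mapped flash device illustrating out-of-place updates."""

    def __init__(self, num_blocks: int):
        self.state = ["clean"] * (num_blocks * PAGES_PER_BLOCK)  # clean / valid / invalid
        self.mapping: dict[int, int] = {}  # logical page -> physical page
        self.erase_count = 0

    def _find_clean_page(self) -> int:
        for page, state in enumerate(self.state):
            if state == "clean":
                return page
        raise RuntimeError("no clean page; a block must be reclaimed (erased) first")

    def write(self, logical_page: int) -> None:
        new = self._find_clean_page()
        old = self.mapping.get(logical_page)
        if old is not None:
            # The stale copy cannot be overwritten in place; it stays invalid
            # until its whole block is erased.
            self.state[old] = "invalid"
        self.state[new] = "valid"
        self.mapping[logical_page] = new

    def erase(self, block: int) -> None:
        pages = range(block * PAGES_PER_BLOCK, (block + 1) * PAGES_PER_BLOCK)
        if any(self.state[p] == "valid" for p in pages):
            raise ValueError("block still holds valid data; copy it out before erasing")
        for p in pages:
            self.state[p] = "clean"
        self.erase_count += 1


flash = ToyFlash(num_blocks=2)
for _ in range(5):
    flash.write(0)      # five updates of the same logical page
print(flash.mapping)    # {0: 4}: the live copy has moved to block 1
flash.erase(0)          # block 0 now holds only stale copies, so it can be erased
```

While clean pages remain, updates cost only a page write; the expensive erase is deferred and paid per block, which is why the later slides stress "if clean blocks are available".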

Flash memory SSD (3)
- Hardware logic
  - Dual-channel architecture with 4-way interleaving
  - Hides flash programming latency and increases bandwidth
  - 128 KB SRAM for program code, data, and buffer memory

Flash memory SSD (4)
- Firmware: flash translation layer (FTL)
  - Address mapping and wear leveling
    - Addresses the limited number of write cycles of each sector
  - Based on super-blocks: 1 MB each, made of 8 erase units, 2 on each flash chip
    - Limits the amount of information required for mapping
- Trends
  - Density doubles every year
  - Originally used in mobile computing devices
    - PDAs, MP3 players, mobile phones, digital cameras
  - Increasingly used in portable computers and the enterprise server market
  - Tremendous potential as a new storage medium that can replace magnetic disks and achieve much higher performance for enterprise database servers
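Why super-block mapping "limits the amount of information required for mapping" can be seen with simple arithmetic. The sketch below assumes binary units for the 32 GB drive from the experimental settings and counts one mapping entry per mapped unit; `translate` and its map are hypothetical illustrations, not the device's firmware interface.

```python
KB = 1024
MB = 1024 * KB
GB = 1024 * MB

CAPACITY = 32 * GB    # the 32 GB SSD from the experimental settings (binary units assumed)
SECTOR = 1 * KB       # sector size quoted on the Flash memory SSD (2) slide
SUPER_BLOCK = 1 * MB  # FTL mapping unit: 8 erase units of 128 KB


def mapping_entries(capacity_bytes: int, unit_bytes: int) -> int:
    """Entries needed if every unit_bytes-sized region gets its own mapping entry."""
    return capacity_bytes // unit_bytes


def translate(logical_offset: int, super_block_map: dict[int, int]) -> tuple[int, int]:
    """Hypothetical lookup: logical byte offset -> (physical super-block, offset inside it)."""
    logical_sb, offset = divmod(logical_offset, SUPER_BLOCK)
    return super_block_map[logical_sb], offset


sector_entries = mapping_entries(CAPACITY, SECTOR)
super_block_entries = mapping_entries(CAPACITY, SUPER_BLOCK)

print(f"{sector_entries:,}")                  # 33,554,432
print(f"{super_block_entries:,}")             # 32,768
print(sector_entries // super_block_entries)  # 1024
print(translate(3 * MB + 4096, {3: 17}))      # (17, 4096)
```

Mapping at 1 MB granularity needs 1024 times fewer entries than mapping every 1 KB sector, small enough to keep in the controller's limited SRAM.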

DB Storage
- Data structures in DB systems
  - Database tables and indexes
    - Not within the scope of this paper
  - Transaction log
    - Whenever a transaction updates a data object, a log record is created
    - Must be kept on stable storage for recoverability and durability
  - Temporary tables
    - Store temporary data required for operations such as sorts or joins
  - Rollback segments
    - Used in multiversion concurrency control (MVCC)

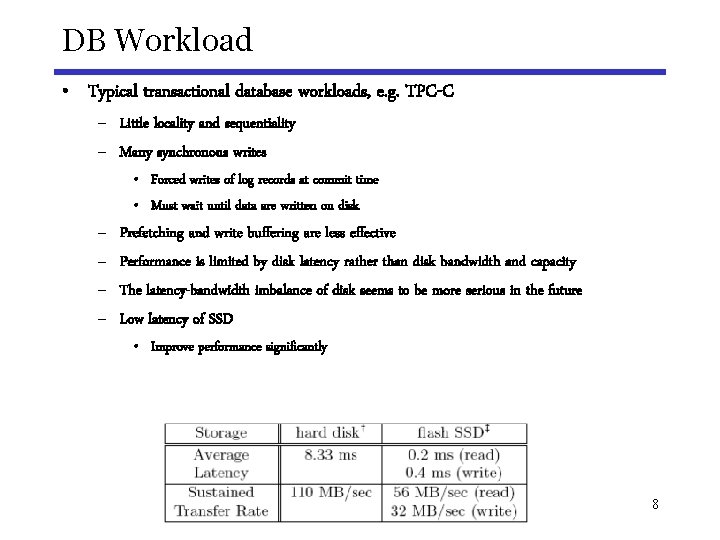

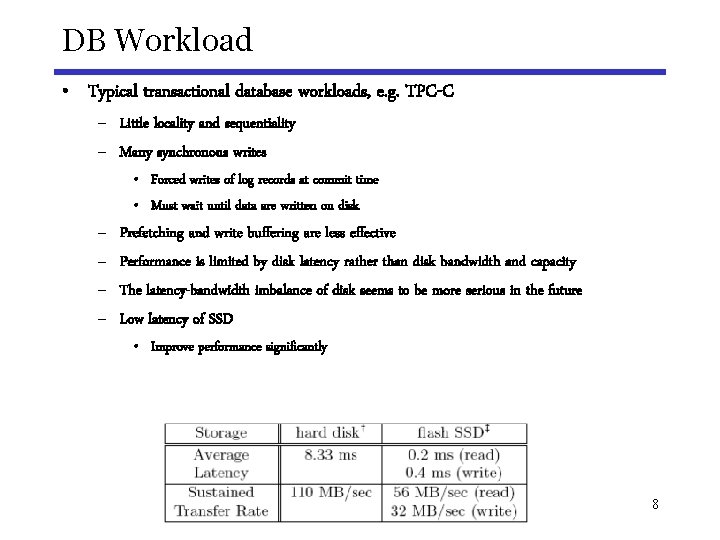

DB Workload
- Typical transactional database workloads, e.g. TPC-C
  - Little locality and sequentiality
  - Many synchronous writes
    - Log records are force-written at commit time
    - Transactions must wait until the data are written to disk
  - Prefetching and write buffering are less effective
- Performance is limited by disk latency rather than by disk bandwidth and capacity
  - The latency-bandwidth imbalance of disks is likely to become more serious in the future
- Low latency of SSD
  - Can improve performance significantly
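The claim that small synchronous writes are bound by latency rather than bandwidth can be checked with a two-term service-time model. A sketch under stated assumptions: the 4.17 ms figure is the HDD's average rotational latency from the transaction log slide (no seek for log appends), while the 4 KB write size and the 60 and 120 MB/s bandwidths are hypothetical.

```python
def service_time_ms(size_kb: float, latency_ms: float, bandwidth_mb_s: float) -> float:
    """Time for one synchronous write: fixed access latency plus transfer time."""
    transfer_ms = (size_kb / 1024) / bandwidth_mb_s * 1000
    return latency_ms + transfer_ms


HDD_LATENCY_MS = 4.17  # avg rotational latency at 7200 rpm
WRITE_KB = 4           # hypothetical size of one forced log write

for bw in (60, 120):   # hypothetical sequential bandwidths in MB/s
    t = service_time_ms(WRITE_KB, HDD_LATENCY_MS, bw)
    print(f"{bw} MB/s: {t:.3f} ms, latency share {HDD_LATENCY_MS / t:.1%}")
```

Doubling the assumed bandwidth shaves only about 0.03 ms off a write of roughly 4.2 ms; only lowering the latency term, as an SSD does, moves the total.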

Experimental Settings
- Two machines with identical hardware except for the disk
  - 1.86 GHz Intel Pentium dual-core processor
  - 2 GB RAM
- OS: Linux 2.6.22
- Disk
  - SSD: Samsung Standard Type, 32 GB, PATA (IDE), SLC NAND
  - HDD: Seagate Barracuda, 250 GB, 7200 rpm, SATA
- DB
  - A commercial database server
  - HDD/SSD used as a raw device (not through a file system)
  - Database tables were cached in memory

Transaction log
- Synchronous writes
  - When a transaction commits, it appends a commit log record to the log and force-writes the log tail to stable storage
- Response time
  - $T_{\text{response}} = T_{\text{cpu}} + T_{\text{read}} + T_{\text{write}} + T_{\text{commit}}$
  - $T_{\text{commit}}$ is a significant overhead: the transaction waits for disk I/O
  - Commit-time delay is a serious bottleneck
- Append-only sequential writes
  - HDD: no seek delay, but an average rotational latency of 4.17 ms (7200 rpm)
  - SSD: no expensive merge or erase operations as long as clean blocks are available

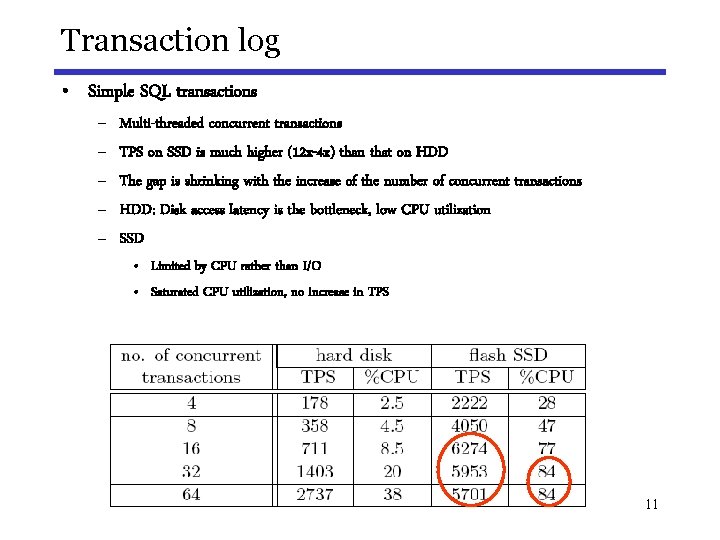

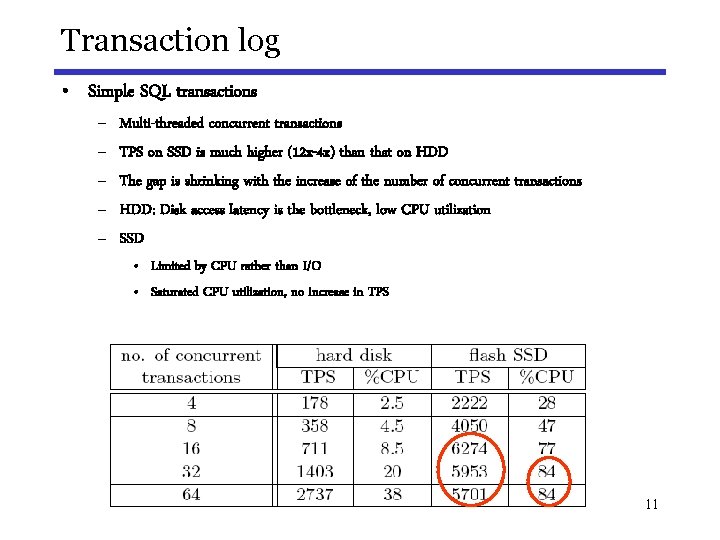
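The 4.17 ms figure is half a revolution at 7200 rpm, and it bounds how fast forced commits can go. This is a deliberately simplified sketch: it assumes each commit waits for its own log write and writes are never overlapped (real servers overlap writes from concurrent transactions), and it takes the 0.4 ms SSD write time from the Flash memory SSD (2) slide as the per-commit latency, which is an assumption.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """On average the platter turns half a revolution before the target sector arrives."""
    ms_per_revolution = 60_000 / rpm
    return ms_per_revolution / 2


def max_serial_commits_per_s(commit_latency_ms: float) -> float:
    """Upper bound when each commit waits for its own forced log write, one at a time."""
    return 1000 / commit_latency_ms


hdd_ms = avg_rotational_latency_ms(7200)
print(f"{hdd_ms:.2f} ms")                       # 4.17 ms
print(round(max_serial_commits_per_s(hdd_ms)))  # 240
print(round(max_serial_commits_per_s(0.4)))     # 2500 (assumed SSD write latency)
```

Under these assumptions $T_{\text{commit}}$ alone caps a single HDD log stream near 240 commits per second, an order of magnitude below the SSD bound.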

Transaction log
- Simple SQL transactions
  - Multi-threaded concurrent transactions
  - TPS on SSD is much higher than on HDD, from 12x down to 4x
  - The gap shrinks as the number of concurrent transactions increases
  - HDD: disk access latency is the bottleneck, and CPU utilization stays low
  - SSD
    - Limited by CPU rather than I/O
    - CPU utilization saturates, so TPS stops increasing

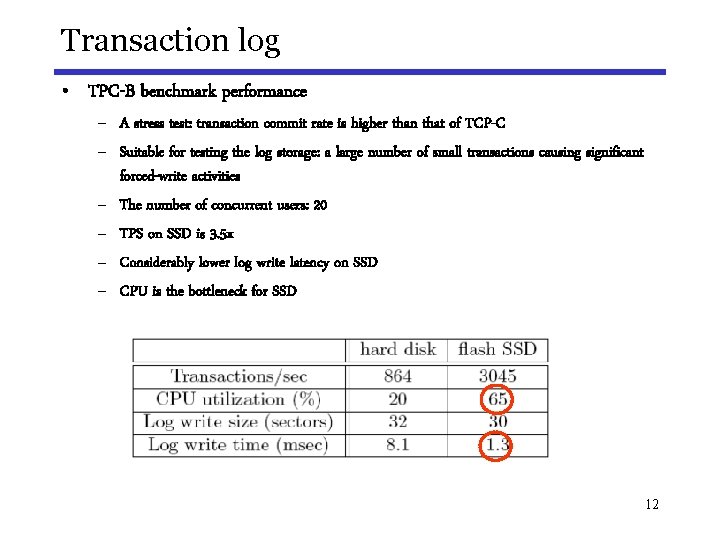

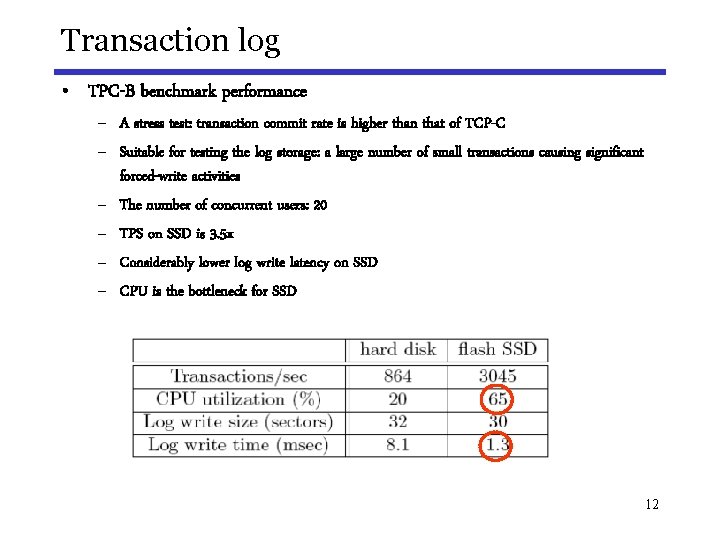

Transaction log
- TPC-B benchmark performance
  - A stress test: the transaction commit rate is higher than that of TPC-C
  - Suitable for testing log storage: a large number of small transactions causes significant forced-write activity
  - Number of concurrent users: 20
  - TPS on SSD is 3.5x that on HDD
  - Considerably lower log write latency on SSD
  - CPU is the bottleneck for SSD

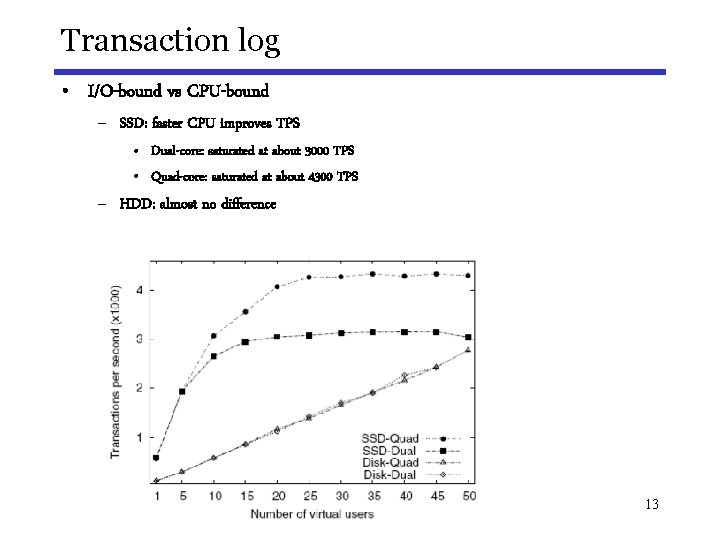

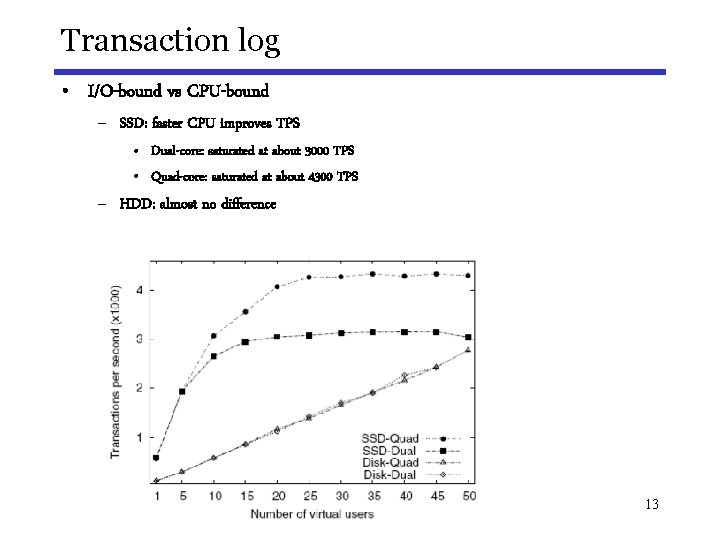

Transaction log
- I/O-bound vs. CPU-bound
  - SSD: a faster CPU improves TPS
    - Dual-core: saturates at about 3000 TPS
    - Quad-core: saturates at about 4300 TPS
  - HDD: almost no difference

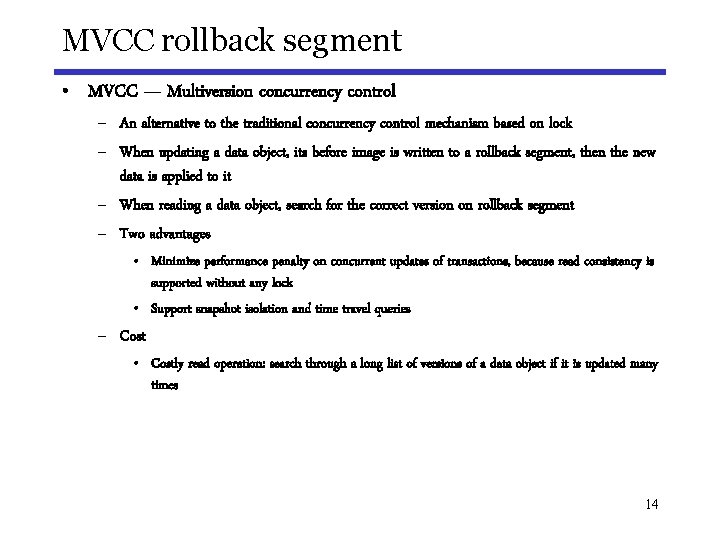
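The I/O-bound vs. CPU-bound contrast follows from a simple bottleneck model: sustained throughput is the minimum of what the CPU and the storage can each deliver. In this sketch the CPU limits are the SSD saturation points from the slide, treated as if they were device-independent (an assumption), and the I/O ceilings for both devices are hypothetical.

```python
def throughput(cpu_limit_tps: float, io_limit_tps: float) -> float:
    """Sustained TPS is capped by whichever resource saturates first."""
    return min(cpu_limit_tps, io_limit_tps)


CPU_LIMIT = {"dual-core": 3000, "quad-core": 4300}  # saturation points from the slide
IO_LIMIT = {"SSD": 10_000, "HDD": 850}              # hypothetical I/O-bound ceilings

for device, io_tps in IO_LIMIT.items():
    for cpu, cpu_tps in CPU_LIMIT.items():
        print(device, cpu, throughput(cpu_tps, io_tps))
```

With the SSD the CPU term is the minimum, so a faster CPU raises TPS; with the HDD the I/O term is the minimum, so the CPU upgrade changes nothing.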

MVCC rollback segment
- MVCC: multiversion concurrency control
  - An alternative to traditional lock-based concurrency control
  - When a data object is updated, its before image is first written to a rollback segment, and then the new data is applied to the object
  - When a data object is read, the correct version is searched for in the rollback segment
  - Two advantages
    - Minimizes the performance penalty of concurrent updates, because read consistency is supported without any locks
    - Supports snapshot isolation and time-travel queries
  - Cost
    - Reads can be costly: a long list of versions must be searched if a data object has been updated many times

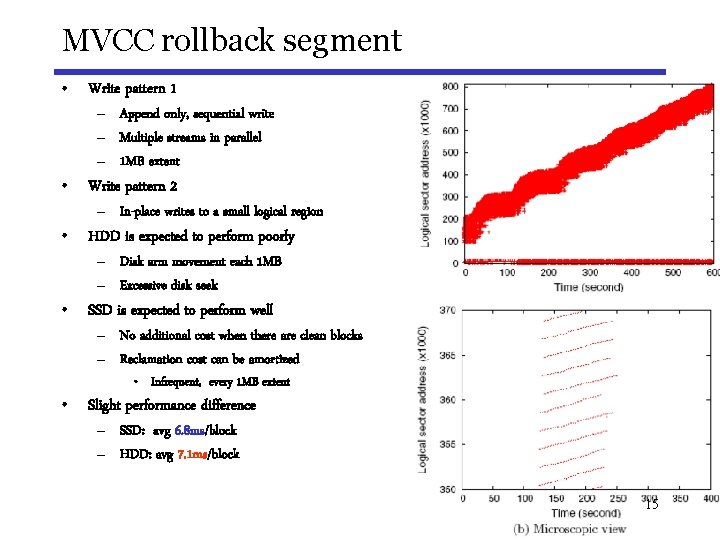

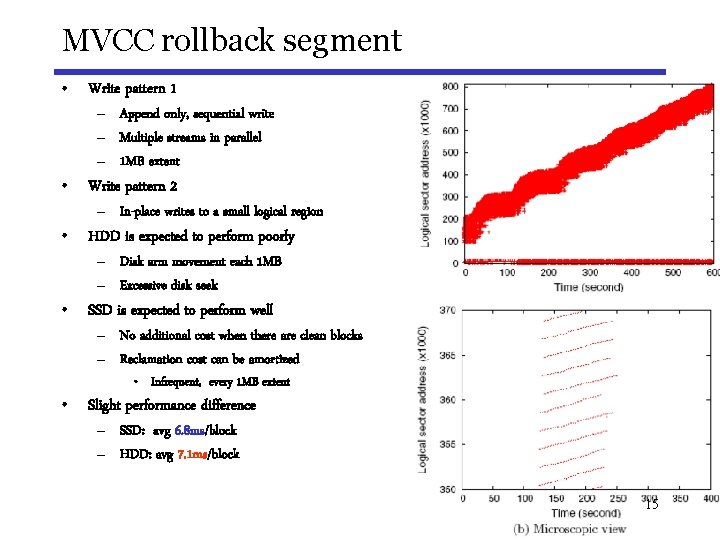
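The MVCC write and read paths described above can be sketched in a few lines. This is a hypothetical illustration, not the commercial server's implementation: `VersionedObject` keeps the current version in place and appends before images to a Python list standing in for the rollback segment, and a reader walks back from the newest before image until it finds one visible at its snapshot timestamp.

```python
from typing import Optional


class VersionedObject:
    """Hypothetical data object whose before images live in a rollback segment."""

    def __init__(self, value: str, ts: int):
        self.value, self.ts = value, ts            # current version, updated in place
        self.rollback: list[tuple[int, str]] = []  # before images, oldest first

    def update(self, new_value: str, ts: int) -> None:
        self.rollback.append((self.ts, self.value))  # 1. save the before image
        self.value, self.ts = new_value, ts          # 2. apply the new data

    def read(self, snapshot_ts: int) -> tuple[Optional[str], int]:
        """Return the version visible at snapshot_ts and how many before images were visited."""
        if self.ts <= snapshot_ts:
            return self.value, 0
        visited = 0
        for ts, value in reversed(self.rollback):  # newest before image first
            visited += 1
            if ts <= snapshot_ts:
                return value, visited
        return None, visited


obj = VersionedObject("v0", ts=0)
for i in range(1, 101):
    obj.update(f"v{i}", ts=10 * i)

print(obj.read(1000))  # ('v100', 0): current version, no rollback reads
print(obj.read(500))   # ('v50', 50)
print(obj.read(5))     # ('v0', 100): walks the whole chain
```

Each visited before image may be a separate read from the rollback segment, which is why the cost of old snapshots grows with the number of updates.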

MVCC rollback segment
- Write pattern 1
  - Append-only, sequential writes
  - Multiple streams in parallel
  - Space allocated in 1 MB extents
- Write pattern 2
  - In-place writes to a small logical region
- HDD is expected to perform poorly
  - The disk arm moves every 1 MB
  - Excessive disk seeks
- SSD is expected to perform well
  - No additional cost when clean blocks are available
  - Reclamation cost can be amortized
    - Infrequent: once every 1 MB extent
- Result: only a slight performance difference
  - SSD: avg 6.8 ms/block
  - HDD: avg 7.1 ms/block

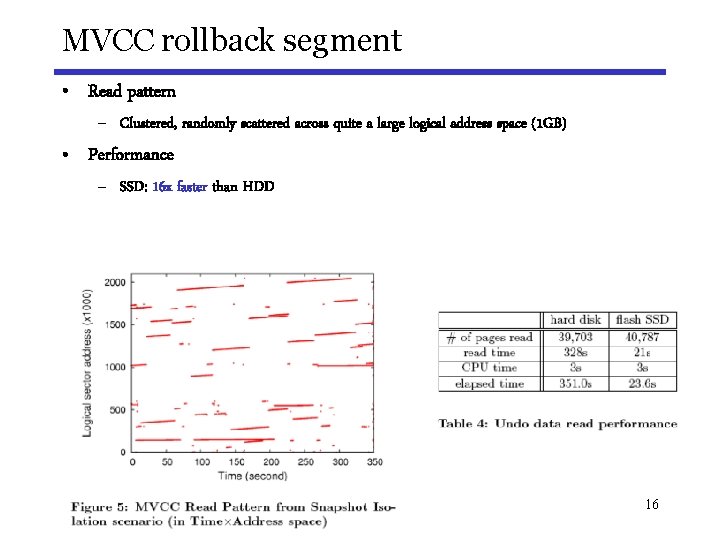

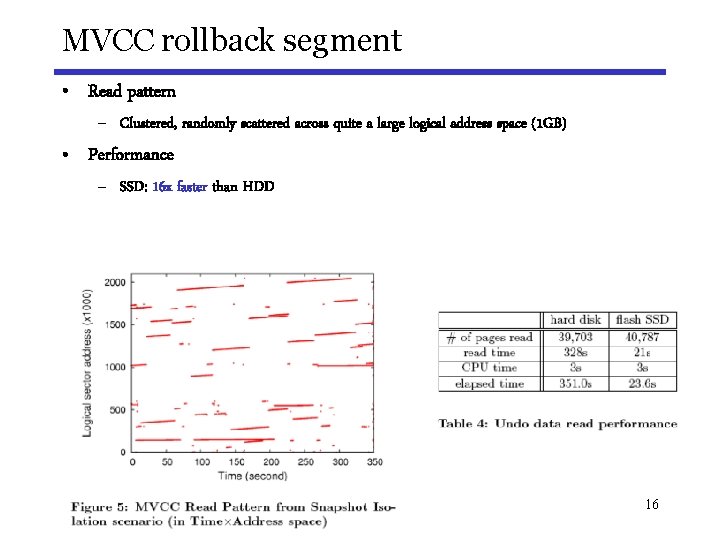
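Why the HDD was expected to suffer from write pattern 1 can be shown by simulating several append-only streams that each fill their own 1 MB extent. A sketch under stated assumptions: the 8 KB block size and round-robin interleaving of streams are hypothetical, an "extent switch" between consecutive writes stands in for an arm movement on the HDD, and each new extent stands in for a reclamation point on the SSD.

```python
EXTENT_BYTES = 1024 * 1024  # 1 MB extent, from the slide
BLOCK_BYTES = 8 * 1024      # hypothetical rollback block size
BLOCKS_PER_EXTENT = EXTENT_BYTES // BLOCK_BYTES  # 128


def simulate(num_streams: int, writes_per_stream: int) -> tuple[int, int]:
    """Round-robin appends from several streams; each stream fills its own extent."""
    next_extent = 0
    cursor: dict[int, tuple[int, int]] = {}  # stream -> (extent id, blocks used)
    allocations = 0      # new extents started (reclamation points on SSD)
    extent_switches = 0  # consecutive writes landing in different extents (arm moves on HDD)
    last_extent = None
    for _ in range(writes_per_stream):
        for s in range(num_streams):
            extent, used = cursor.get(s, (-1, BLOCKS_PER_EXTENT))
            if used == BLOCKS_PER_EXTENT:  # no extent yet, or the current one is full
                extent, used = next_extent, 0
                next_extent += 1
                allocations += 1
            cursor[s] = (extent, used + 1)
            if last_extent is not None and extent != last_extent:
                extent_switches += 1
            last_extent = extent
    return allocations, extent_switches


print(simulate(1, 1280))  # (10, 9)
print(simulate(4, 1280))  # (40, 5119)
```

A single stream moves to a new extent only every 128 blocks, but with parallel streams almost every write lands in a different extent. On the SSD those jumps cost nothing extra, and the per-extent reclamation is amortized; the measured gap nevertheless turned out to be small.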

MVCC rollback segment
- Read pattern
  - Clustered reads, randomly scattered across quite a large logical address space (1 GB)
- Performance
  - SSD: 16x faster than HDD

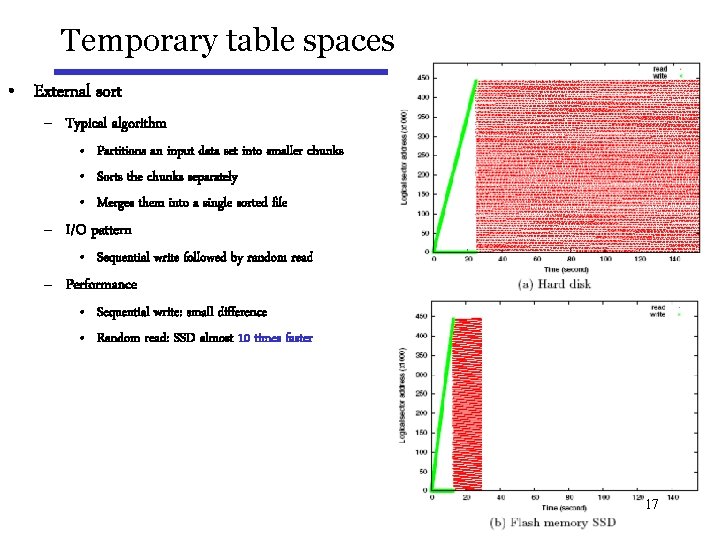

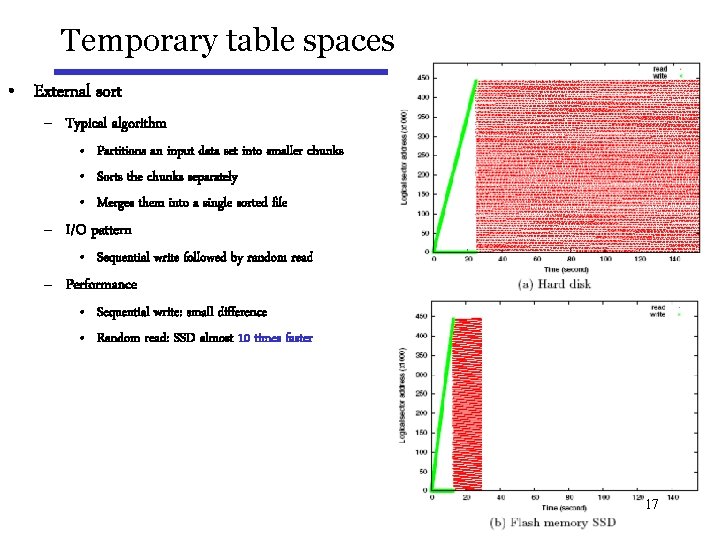

Temporary table spaces
- External sort
  - Typical algorithm
    - Partition the input data set into smaller chunks
    - Sort each chunk separately
    - Merge the sorted chunks into a single sorted file
  - I/O pattern
    - Sequential writes followed by random reads
  - Performance
    - Sequential writes: small difference
    - Random reads: SSD almost 10 times faster

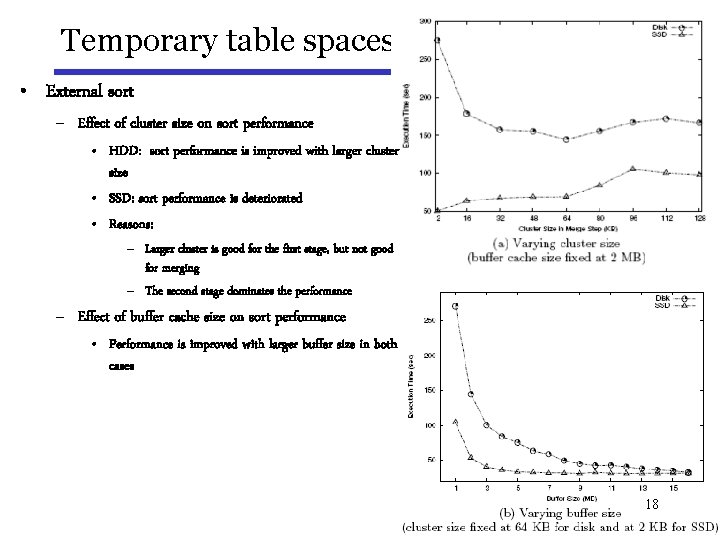

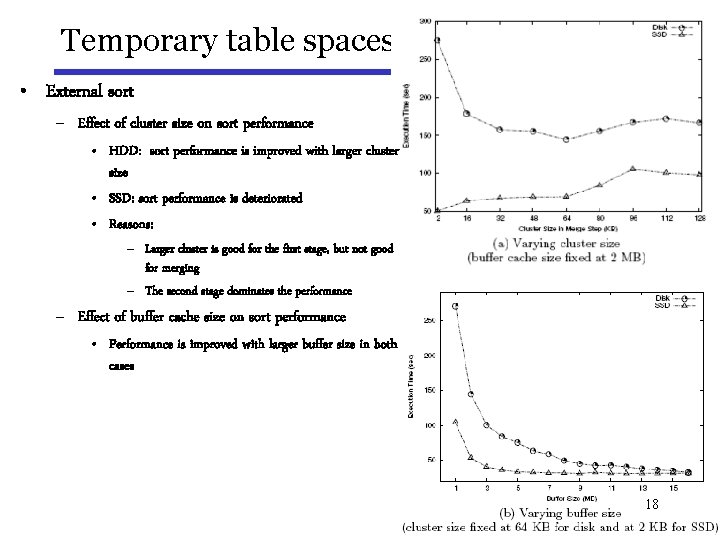
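The two phases of external sort, and the I/O pattern they produce, can be sketched with the standard library. This is an in-memory stand-in under stated assumptions: Python lists play the role of runs in a temporary table space, a single merge pass is assumed, and `read_trace` is a hypothetical record of which run each record is read from.

```python
import heapq
from typing import Iterator


def external_sort(data: list[int], chunk_size: int) -> tuple[list[int], list[int]]:
    """Two-phase sort; Python lists stand in for runs on a temporary table space."""
    # Phase 1 (run generation): sort each chunk in memory and write it out
    # as one run; on disk each run would be a sequential write.
    runs = [sorted(data[i:i + chunk_size]) for i in range(0, len(data), chunk_size)]

    # Phase 2 (merge): repeatedly take the smallest head among all runs.
    read_trace: list[int] = []

    def scan(run_id: int, run: list[int]) -> Iterator[int]:
        for x in run:
            read_trace.append(run_id)  # record which run this read touches
            yield x

    merged = list(heapq.merge(*(scan(i, r) for i, r in enumerate(runs))))
    return merged, read_trace


data = [5, 1, 9, 3, 7, 2, 8, 4, 6, 0]
merged, trace = external_sort(data, chunk_size=5)
print(merged)  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
print(trace)   # reads hop back and forth between run 0 and run 1
```

Phase 1 writes each run front to back, while phase 2 jumps between runs as their heads compete, which on a device becomes the random-read pattern where the SSD wins.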

Temporary table spaces
- External sort
  - Effect of cluster size on sort performance
    - HDD: sort performance improves with a larger cluster size
    - SSD: sort performance deteriorates with a larger cluster size
    - Reasons
      - A larger cluster is good for the first stage (run generation) but not for merging
      - The second stage (merging) dominates the performance
  - Effect of buffer cache size on sort performance
    - Performance improves with a larger buffer size for both HDD and SSD

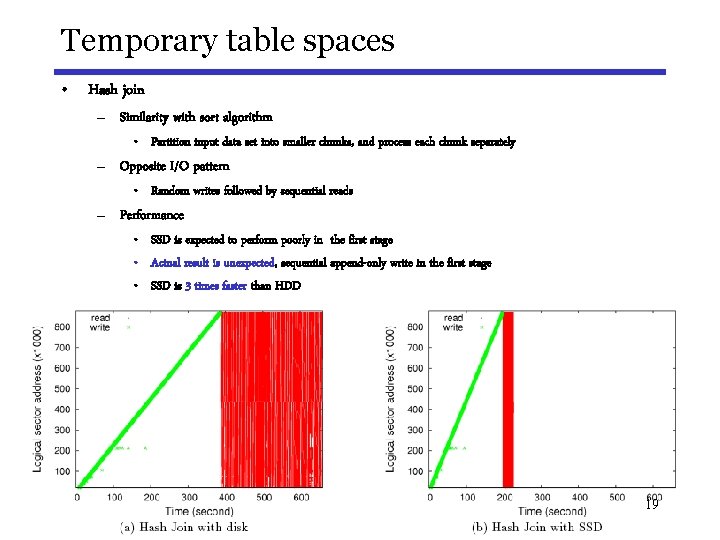

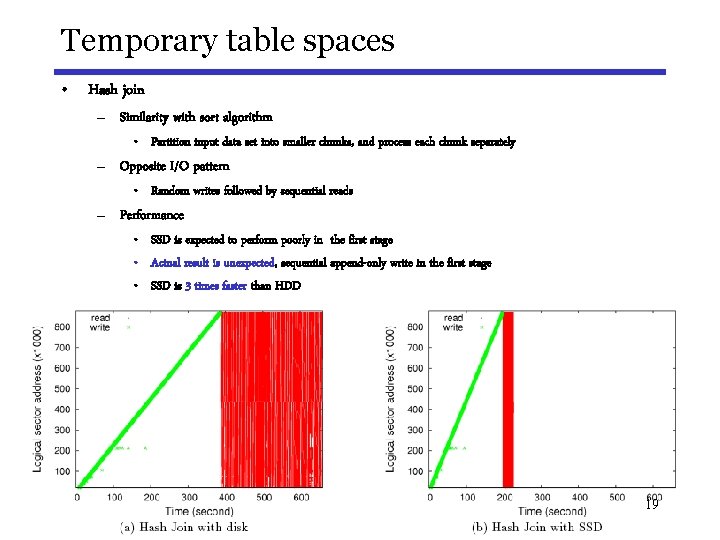
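One standard external-sort argument (our reading; the slide does not spell it out) for why larger clusters hurt merging: if each run being merged needs at least one cluster-sized input buffer, the merge fan-in is roughly buffer size divided by cluster size, so bigger clusters mean fewer runs merged at once and more merge passes. The 2 MB buffer and 64 runs below are hypothetical.

```python
def merge_fan_in(buffer_kb: int, cluster_kb: int) -> int:
    """Runs that can be merged at once if each needs one cluster-sized input buffer."""
    return buffer_kb // cluster_kb


def merge_passes(num_runs: int, fan_in: int) -> int:
    """Number of merge passes needed to reduce num_runs runs to one."""
    if fan_in < 2:
        raise ValueError("need room for at least two input clusters")
    passes = 0
    while num_runs > 1:
        num_runs = -(-num_runs // fan_in)  # ceiling division
        passes += 1
    return passes


BUFFER_KB = 2048  # hypothetical sort buffer (2 MB)
RUNS = 64         # hypothetical number of runs from phase 1

for cluster_kb in (32, 128, 512):
    k = merge_fan_in(BUFFER_KB, cluster_kb)
    print(cluster_kb, k, merge_passes(RUNS, k))
```

On an HDD a larger cluster amortizes each seek over more data, which can outweigh the extra passes; on an SSD random reads are already cheap, so the extra passes would dominate. The same model is consistent with the observation that a larger buffer helps on both devices, since it raises the fan-in.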

Temporary table spaces
- Hash join
  - Similarity with the sort algorithm
    - Partition the input data set into smaller chunks and process each chunk separately
  - Opposite I/O pattern
    - Random writes followed by sequential reads
  - Performance
    - SSD was expected to perform poorly in the first stage
    - The actual result was unexpected: the first stage issued sequential, append-only writes
    - SSD is 3 times faster than HDD

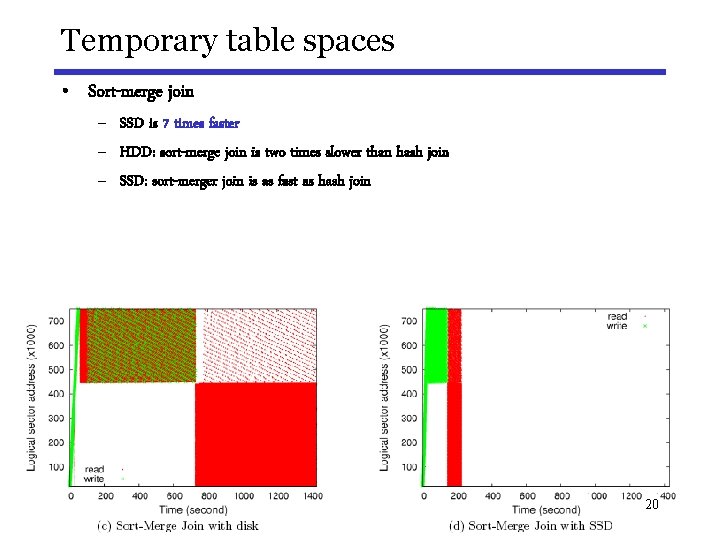

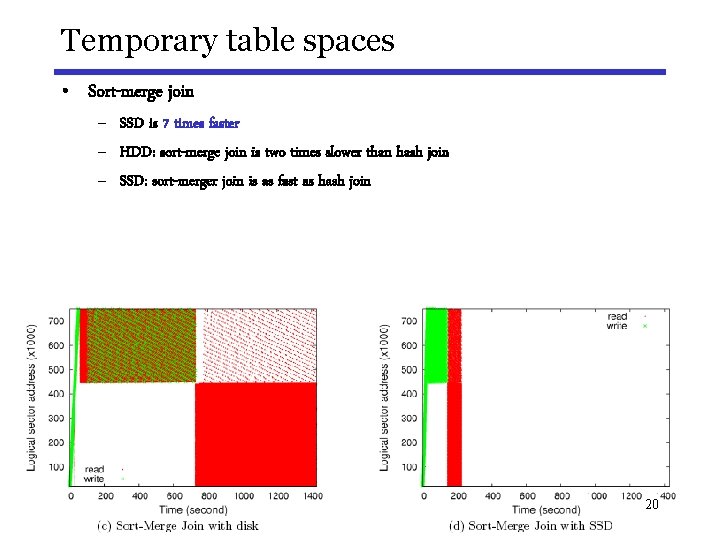
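The two stages of a partitioned hash join can be sketched as follows. We use a Grace-style hash join as a stand-in; the slide does not say which variant the commercial server implements, and `partitioned_hash_join` and its row layout are hypothetical. The comments point out why the first stage looks like random writes at the device level yet is append-only within each partition.

```python
from collections import defaultdict

Row = tuple[int, str]


def partitioned_hash_join(build: list[Row], probe: list[Row], num_partitions: int) -> list[tuple[int, str, str]]:
    # Stage 1: hash-partition both inputs. Consecutive tuples go to different
    # partitions (scattered writes at the device level), but each partition
    # only ever grows at its tail, i.e. it is append-only.
    build_parts: list[list[Row]] = [[] for _ in range(num_partitions)]
    probe_parts: list[list[Row]] = [[] for _ in range(num_partitions)]
    for key, val in build:
        build_parts[hash(key) % num_partitions].append((key, val))
    for key, val in probe:
        probe_parts[hash(key) % num_partitions].append((key, val))

    # Stage 2: read each partition pair back sequentially, build an in-memory
    # hash table on the build side, and probe it with the other side.
    result = []
    for b_part, p_part in zip(build_parts, probe_parts):
        table: dict[int, list[str]] = defaultdict(list)
        for key, val in b_part:
            table[key].append(val)
        for key, val in p_part:
            for b_val in table.get(key, []):
                result.append((key, b_val, val))
    return result


customers = [(1, "a"), (2, "b"), (3, "c")]
orders = [(2, "x"), (3, "y"), (3, "z"), (4, "w")]
print(sorted(partitioned_hash_join(customers, orders, num_partitions=4)))
```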

Temporary table spaces
- Sort-merge join
  - SSD is 7 times faster than HDD
  - HDD: sort-merge join is two times slower than hash join
  - SSD: sort-merge join is as fast as hash join
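For comparison with the hash join, a sort-merge join sorts both inputs on the join key and then scans them in step. In this sketch Python's in-memory `sorted` stands in for the external sort on temporary table spaces, and `sort_merge_join` is a hypothetical helper; groups of equal keys on both sides are joined pairwise.

```python
def sort_merge_join(left: list[tuple[int, str]], right: list[tuple[int, str]]) -> list[tuple[int, str, str]]:
    # Sort phase: stand-in for the external sort of each input.
    left = sorted(left, key=lambda t: t[0])
    right = sorted(right, key=lambda t: t[0])

    # Merge phase: advance whichever side has the smaller key.
    i = j = 0
    out = []
    while i < len(left) and j < len(right):
        lk, rk = left[i][0], right[j][0]
        if lk < rk:
            i += 1
        elif lk > rk:
            j += 1
        else:
            # Find the run of equal keys on each side and emit their cross product.
            i_end = i
            while i_end < len(left) and left[i_end][0] == lk:
                i_end += 1
            j_end = j
            while j_end < len(right) and right[j_end][0] == rk:
                j_end += 1
            for _, lv in left[i:i_end]:
                for _, rv in right[j:j_end]:
                    out.append((lk, lv, rv))
            i, j = i_end, j_end
    return out


print(sort_merge_join([(3, "c"), (1, "a"), (2, "b")], [(3, "z"), (2, "x"), (4, "w"), (3, "y")]))
```

Both inputs pass through the sort's write-then-random-read pattern before the merge, which fits the slide's result that sort-merge join lags hash join on HDD but keeps pace on SSD.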

Conclusions
- Demonstrated that processing I/O requests for the transaction log, rollback segments, and temporary data can become a serious bottleneck for transaction processing
- Showed that flash memory SSD can alleviate this bottleneck drastically
- Due attention should be paid to SSD in all aspects of DB system design to maximize the benefit of this new technology