
A Brief Introduction to Adaboost
Hongbo Deng
6 Feb, 2007
Some of the slides are borrowed from Derek Hoiem & Jan Šochman.

Outline
- Background
- Adaboost Algorithm
- Theory/Interpretations

What’s So Good About Adaboost
- Can be used with many different classifiers
- Improves classification accuracy
- Commonly used in many areas
- Simple to implement
- Not prone to overfitting

A Brief History
- Resampling for estimating a statistic
  - Bootstrapping
- Resampling for classifier design
  - Bagging
  - Boosting (Schapire 1989)
  - Adaboost (Freund & Schapire 1995)

Bootstrap Estimation
- Repeatedly draw n samples from D
- For each set of samples, estimate a statistic
- The bootstrap estimate is the mean of the individual estimates
- Used to estimate a statistic (parameter) and its variance

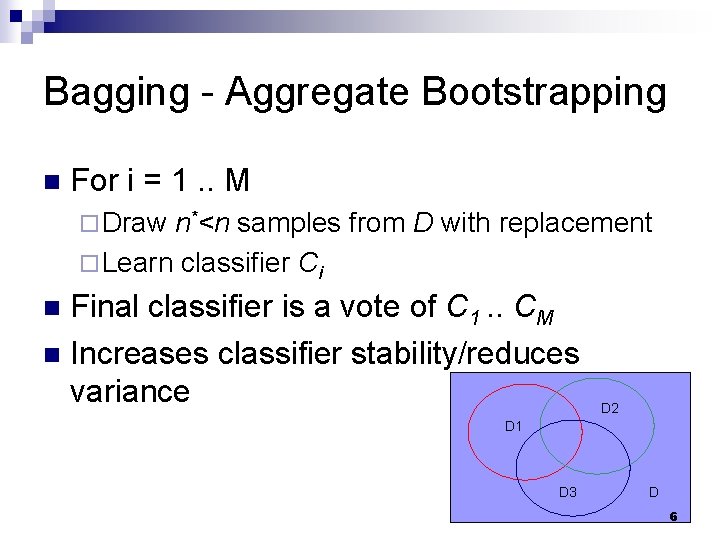
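The bootstrap procedure can be sketched in a few lines of Python. This is a minimal illustration rather than code from the slides: the function name `bootstrap_estimate`, the toy data set `D`, the number of rounds, and the choice of the median as the statistic are all assumptions, and the samples are drawn with replacement, as is usual for the bootstrap.

```python
import numpy as np

def bootstrap_estimate(data, statistic, n_rounds=1000, seed=0):
    """Return the bootstrap estimate of `statistic` and its variance."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data)
    n = len(data)
    estimates = np.empty(n_rounds)
    for b in range(n_rounds):
        # Draw n samples from D (with replacement) and estimate the statistic.
        sample = rng.choice(data, size=n, replace=True)
        estimates[b] = statistic(sample)
    # The bootstrap estimate is the mean of the individual estimates.
    return estimates.mean(), estimates.var(ddof=1)

# Hypothetical data set D, used only for illustration.
D = np.array([2.1, 3.4, 1.9, 5.0, 4.2, 3.3, 2.8, 4.9])
est, var = bootstrap_estimate(D, np.median)
print(f"bootstrap median: {est:.3f}, variance: {var:.3f}")
```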

Bagging - Aggregate Bootstrapping
- For i = 1..M
  - Draw n* < n samples from D with replacement
  - Learn classifier C_i
- Final classifier is a vote of C_1..C_M
- Increases classifier stability/reduces variance

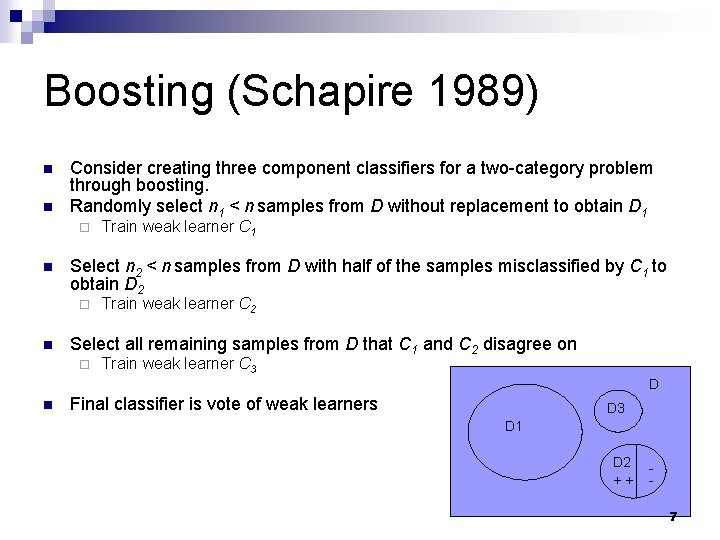
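A small sketch of the bagging loop, assuming decision stumps as the component classifiers and labels in {-1, +1}. Neither choice comes from the slides, and the helper names (`fit_stump`, `stump_predict`, `bagging_fit`, `bagging_predict`) and the toy data are hypothetical.

```python
import numpy as np

def fit_stump(X, y):
    """Pick the (feature, threshold, polarity) stump with the lowest error."""
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        for thr in np.unique(X[:, j]):
            for pol in (1, -1):
                pred = np.where(pol * (X[:, j] - thr) >= 0, 1, -1)
                err = np.mean(pred != y)
                if err < best[0]:
                    best = (err, j, thr, pol)
    return best[1:]

def stump_predict(stump, X):
    j, thr, pol = stump
    return np.where(pol * (X[:, j] - thr) >= 0, 1, -1)

def bagging_fit(X, y, M=25, frac=0.8, seed=0):
    """Train M stumps, each on n* = frac * n samples drawn with replacement."""
    rng = np.random.default_rng(seed)
    n = len(y)
    n_star = max(1, int(frac * n))
    models = []
    for _ in range(M):
        idx = rng.integers(0, n, size=n_star)  # indices drawn with replacement
        models.append(fit_stump(X[idx], y[idx]))
    return models

def bagging_predict(models, X):
    """Final classifier: unweighted majority vote of C_1..C_M."""
    votes = sum(stump_predict(m, X) for m in models)
    return np.where(votes >= 0, 1, -1)

# Toy two-class problem (assumed data): the label depends on the first feature.
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 1.0, size=(60, 2))
y = np.where(X[:, 0] > 0.5, 1, -1)
models = bagging_fit(X, y, M=25, frac=0.8)
acc = np.mean(bagging_predict(models, X) == y)
print(f"training accuracy of the vote: {acc:.3f}")
```

An odd M avoids tied votes in the two-class case.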

Boosting (Schapire 1989)
- Consider creating three component classifiers for a two-category problem through boosting.
- Randomly select n_1 < n samples from D without replacement to obtain D_1
  - Train weak learner C_1
- Select n_2 < n samples from D with half of the samples misclassified by C_1 to obtain D_2
  - Train weak learner C_2
- Select all remaining samples from D that C_1 and C_2 disagree on
  - Train weak learner C_3
- Final classifier is vote of weak learners

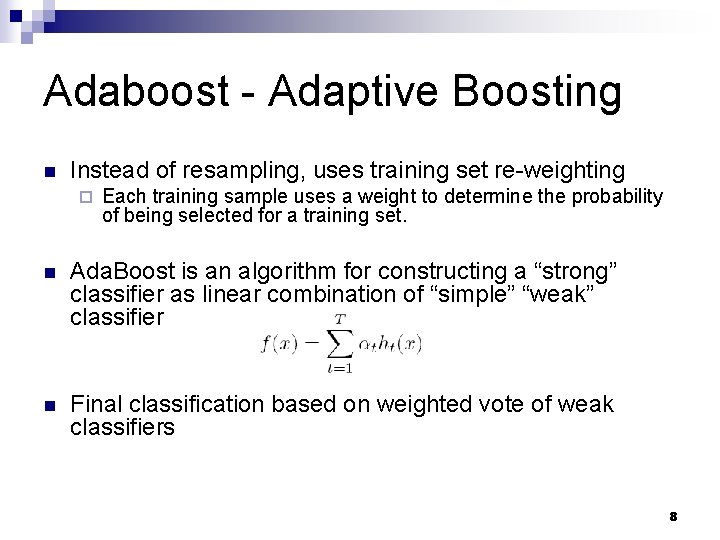

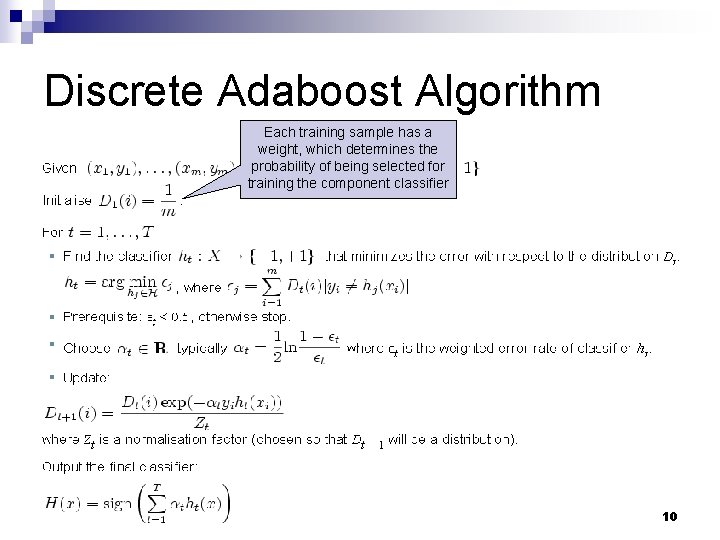

Adaboost - Adaptive Boosting
- Instead of resampling, uses training set re-weighting
  - Each training sample uses a weight to determine the probability of being selected for a training set.
- AdaBoost is an algorithm for constructing a “strong” classifier as a linear combination of “simple” “weak” classifiers
- Final classification is based on a weighted vote of the weak classifiers

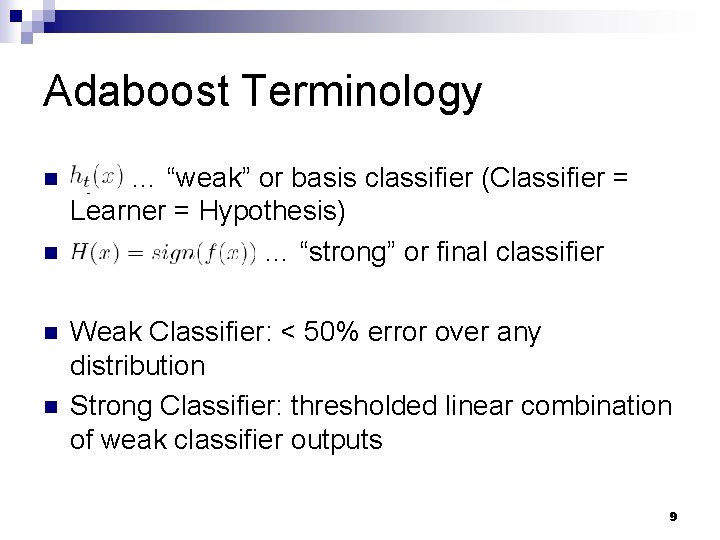

Adaboost Terminology
- h_t(x): “weak” or basis classifier (Classifier = Learner = Hypothesis)
- Weak classifier: < 50% error over any distribution
- Strong (final) classifier: thresholded linear combination of weak classifier outputs
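In the usual notation, the strong classifier can be written as follows. The symbols H, f, alpha_t and T are assumptions taken from the standard presentation of AdaBoost, since the slide's own symbols did not survive extraction; the weak classifiers are assumed to output -1 or +1.

```latex
f(x) = \sum_{t=1}^{T} \alpha_t \, h_t(x),
\qquad
H(x) = \operatorname{sign}\bigl(f(x)\bigr),
\qquad
h_t(x) \in \{-1, +1\}
```

Here alpha_t is the voting weight of the t-th weak classifier, and the threshold is applied at zero.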

Discrete Adaboost Algorithm
Each training sample has a weight, which determines the probability of being selected for training the component classifier.

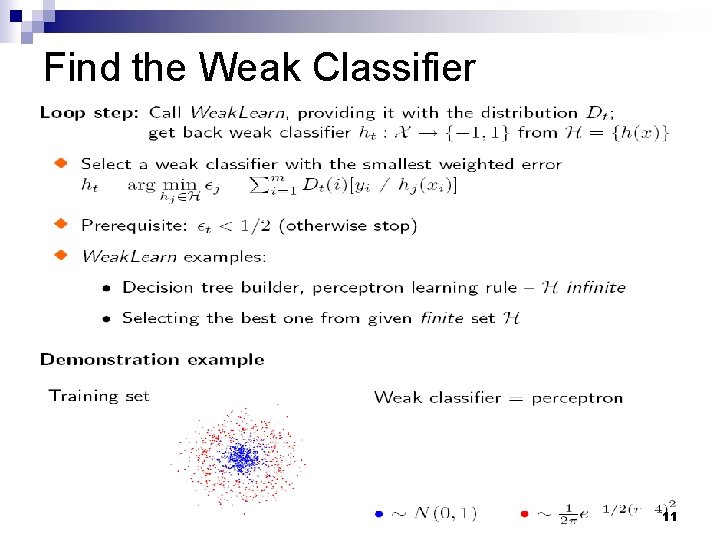
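The algorithm itself appears only in the figure, so the following is a sketch of the standard discrete AdaBoost loop (Freund and Schapire's formulation) under stated assumptions: labels in {-1, +1}, decision stumps as weak classifiers, and sample weights used directly in the weighted error rather than for drawing a training subset. All function names and the toy data are hypothetical.

```python
import numpy as np

def fit_weighted_stump(X, y, w):
    """Return (error, feature, threshold, polarity) with minimal weighted error."""
    best = (np.inf, 0, 0.0, 1)
    for j in range(X.shape[1]):
        # A threshold of -inf yields the two constant classifiers.
        thresholds = np.concatenate(([-np.inf], np.unique(X[:, j])))
        for thr in thresholds:
            for pol in (1, -1):
                pred = np.where(pol * (X[:, j] - thr) >= 0, 1, -1)
                err = np.sum(w[pred != y])
                if err < best[0]:
                    best = (err, j, thr, pol)
    return best

def stump_predict(j, thr, pol, X):
    return np.where(pol * (X[:, j] - thr) >= 0, 1, -1)

def adaboost_fit(X, y, T=20):
    n = len(y)
    w = np.full(n, 1.0 / n)               # start from uniform weights
    ensemble = []
    for _ in range(T):
        err, j, thr, pol = fit_weighted_stump(X, y, w)
        if err >= 0.5:                    # no better than chance: stop
            break
        err = max(err, 1e-12)             # guard against log(0)
        alpha = 0.5 * np.log((1.0 - err) / err)
        pred = stump_predict(j, thr, pol, X)
        w = w * np.exp(-alpha * y * pred)  # raise weights of mistakes
        w /= w.sum()                       # renormalise to a distribution
        ensemble.append((alpha, j, thr, pol))
        if err <= 1e-12:                  # a perfect weak classifier: done
            break
    return ensemble

def adaboost_predict(ensemble, X):
    """Weighted vote of the weak classifiers, thresholded at zero."""
    score = sum(a * stump_predict(j, thr, pol, X) for a, j, thr, pol in ensemble)
    return np.where(score >= 0, 1, -1)

# Toy 1-D problem (assumed data): positives lie in an interval,
# so no single stump can separate the classes.
x = (np.arange(40) + 0.5) / 40
X = x.reshape(-1, 1)
y = np.where((x > 0.3) & (x < 0.7), 1, -1)
ensemble = adaboost_fit(X, y, T=100)
train_acc = np.mean(adaboost_predict(ensemble, X) == y)
print(f"rounds used: {len(ensemble)}, training accuracy: {train_acc:.3f}")
```

The best single stump misclassifies 30% of this toy set, so any improvement comes from the weighted combination.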

Find the Weak Classifier

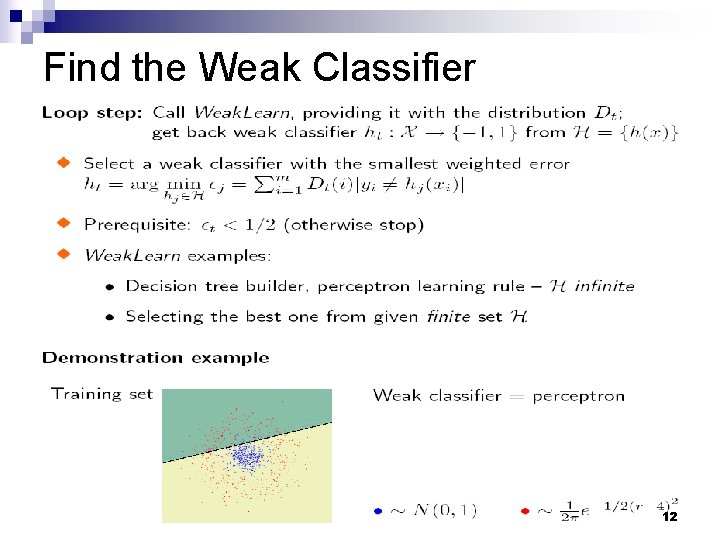


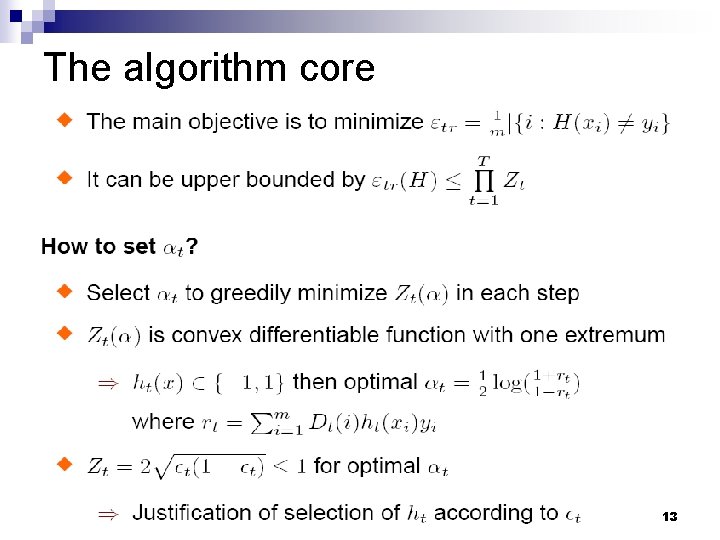
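In the standard formulation, which is assumed here because the figures are not reproduced in the text, the weak classifier chosen at round t is the candidate with the smallest weighted error under the current sample weights D_t(i). The symbols m (number of training samples), the candidate set H, and the bracket [·] (1 if the condition holds, 0 otherwise) are notation introduced for this sketch.

```latex
\epsilon_j = \sum_{i=1}^{m} D_t(i) \, [\, y_i \neq h_j(x_i) \,],
\qquad
h_t = \arg\min_{h_j \in \mathcal{H}} \epsilon_j,
\qquad
\text{with } \epsilon_t < \tfrac{1}{2} \text{ required to continue}
```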

The algorithm core

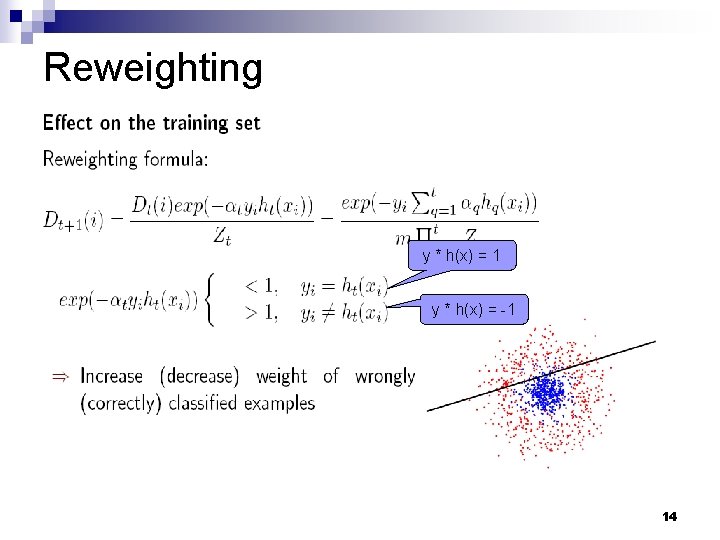

Reweighting
- y * h(x) = 1: the sample is classified correctly
- y * h(x) = -1: the sample is misclassified

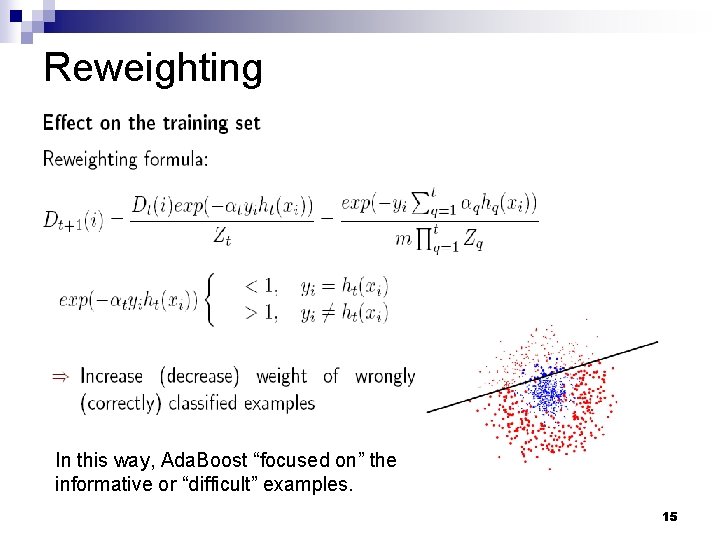
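A small worked example of one reweighting step, assuming the standard update w_i ← w_i · exp(-alpha · y_i · h(x_i)) followed by normalisation, with alpha = ½ ln((1 - eps) / eps). The five labels and predictions below are made up for illustration.

```python
import numpy as np

y = np.array([1,  1, -1, -1, 1])    # true labels
h = np.array([1, -1, -1, -1, 1])    # weak classifier output; sample 1 is wrong
w = np.full(5, 0.2)                 # uniform weights before the update

eps = w[h != y].sum()                     # weighted error = 0.2
alpha = 0.5 * np.log((1.0 - eps) / eps)   # 0.5 * ln(4) = ln(2)

# y * h = +1 shrinks a weight, y * h = -1 grows it.
w_new = w * np.exp(-alpha * y * h)
w_new /= w_new.sum()

print(w_new)                     # [0.125 0.5 0.125 0.125 0.125]
print(w_new[h != y].sum())       # 0.5
```

After the update the misclassified sample carries half of the total weight, so the classifier just chosen has a weighted error of exactly 1/2 on the new distribution and the next round has to find something different.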

Reweighting
In this way, AdaBoost “focuses on” the informative or “difficult” examples.

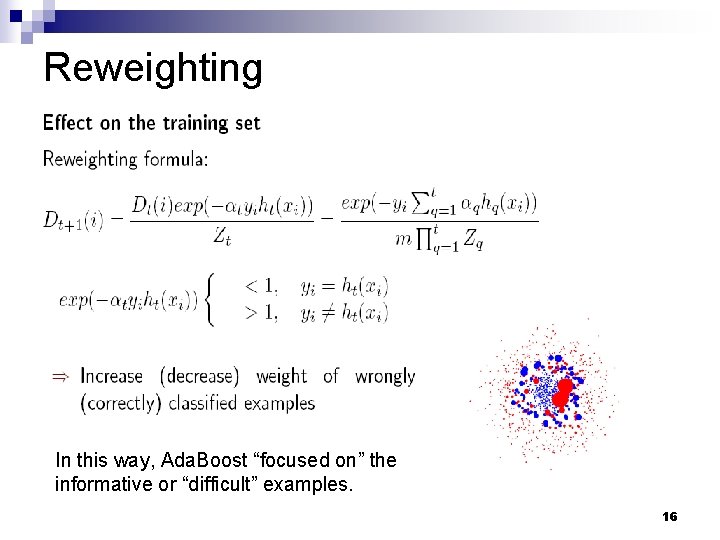


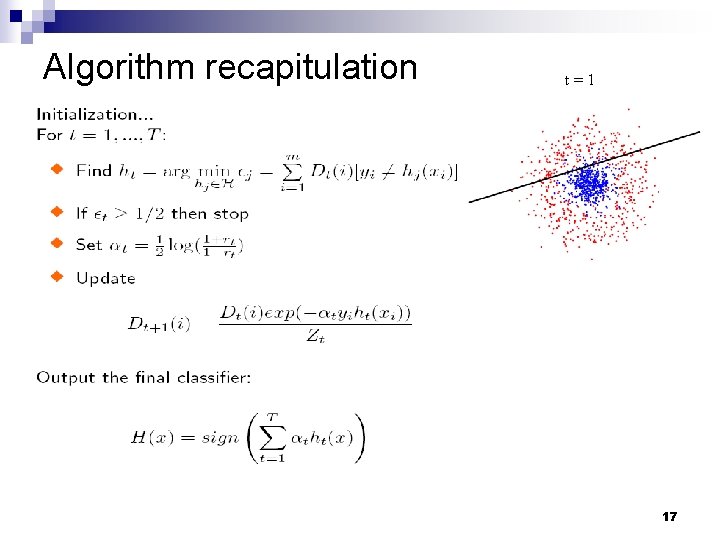

Algorithm recapitulation
t = 1

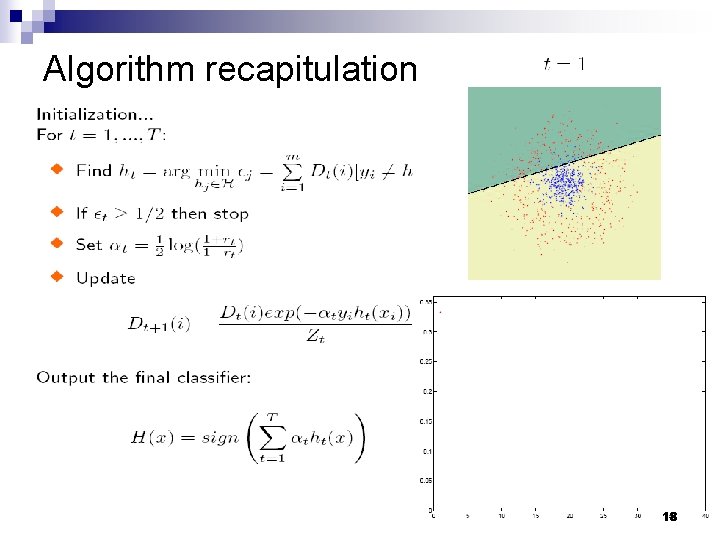


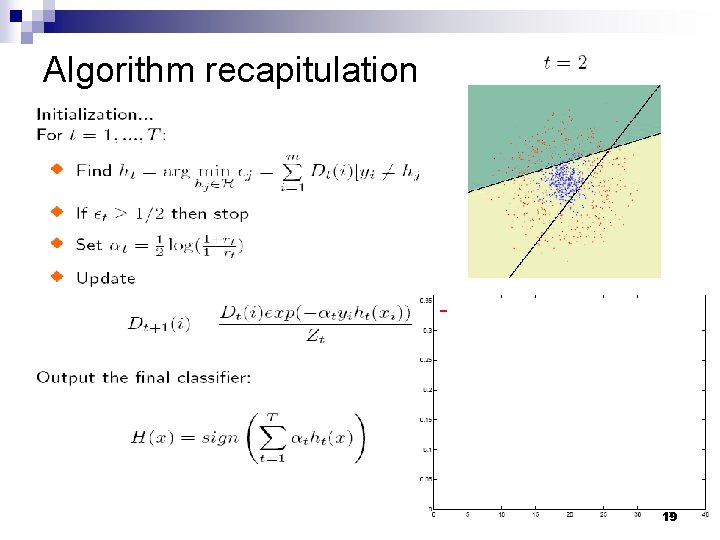


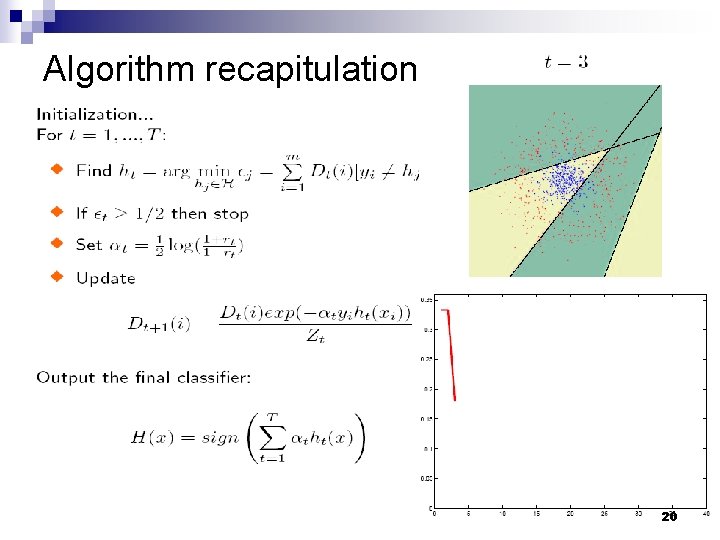


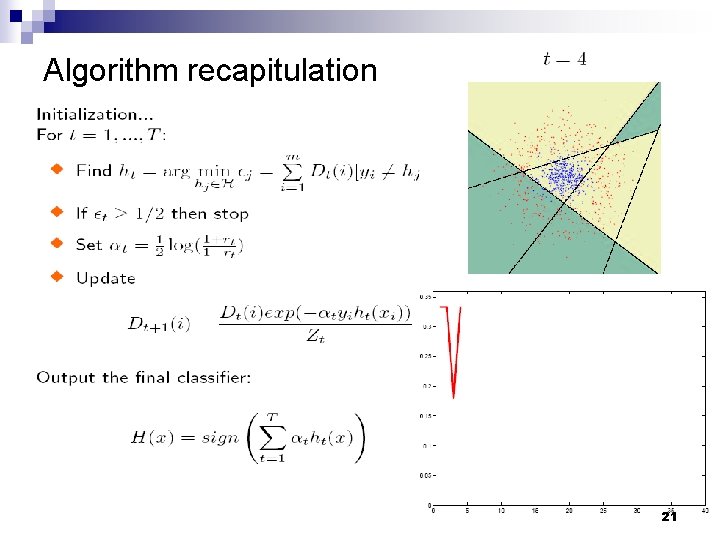


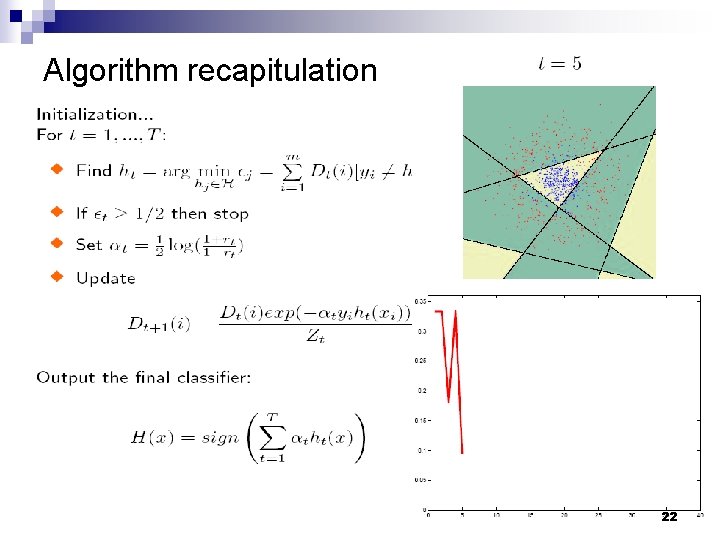


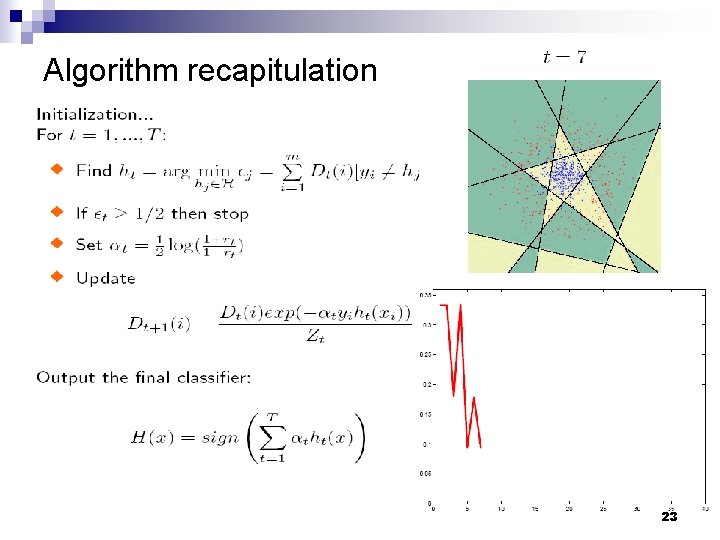


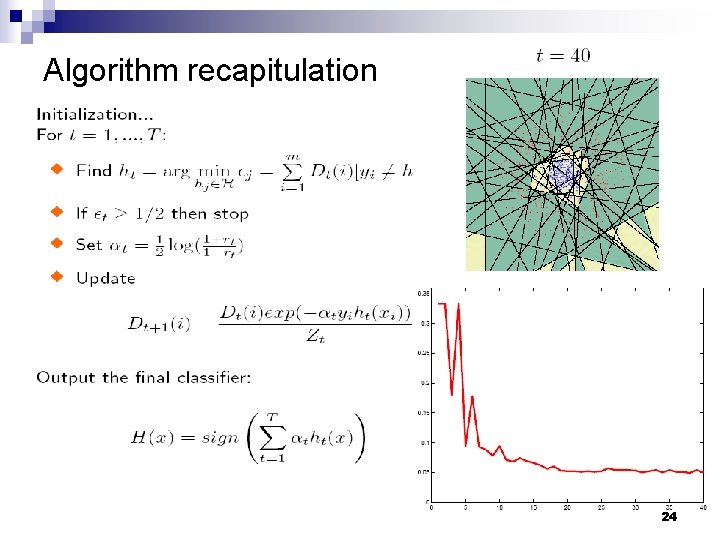


Pros and cons of AdaBoost
Advantages
- Very simple to implement
- Does feature selection, resulting in a relatively simple classifier
- Fairly good generalization
Disadvantages
- Suboptimal solution
- Sensitive to noisy data and outliers

References
- Duda, Hart, et al. – Pattern Classification
- Freund – “An adaptive version of the boost by majority algorithm”
- Freund – “Experiments with a new boosting algorithm”
- Freund, Schapire – “A decision-theoretic generalization of on-line learning and an application to boosting”
- Friedman, Hastie, et al. – “Additive Logistic Regression: A Statistical View of Boosting”
- Jin, Liu, et al. (CMU) – “A New Boosting Algorithm Using Input-Dependent Regularizer”
- Li, Zhang, et al. – “Floatboost Learning for Classification”
- Opitz, Maclin – “Popular Ensemble Methods: An Empirical Study”
- Ratsch, Warmuth – “Efficient Margin Maximization with Boosting”
- Schapire, Freund, et al. – “Boosting the Margin: A New Explanation for the Effectiveness of Voting Methods”
- Schapire, Singer – “Improved Boosting Algorithms Using Confidence-Weighted Predictions”
- Schapire – “The Boosting Approach to Machine Learning: An overview”
- Zhang, Li, et al. – “Multi-view Face Detection with Floatboost”

Appendix
- Bound on training error
- Adaboost Variants

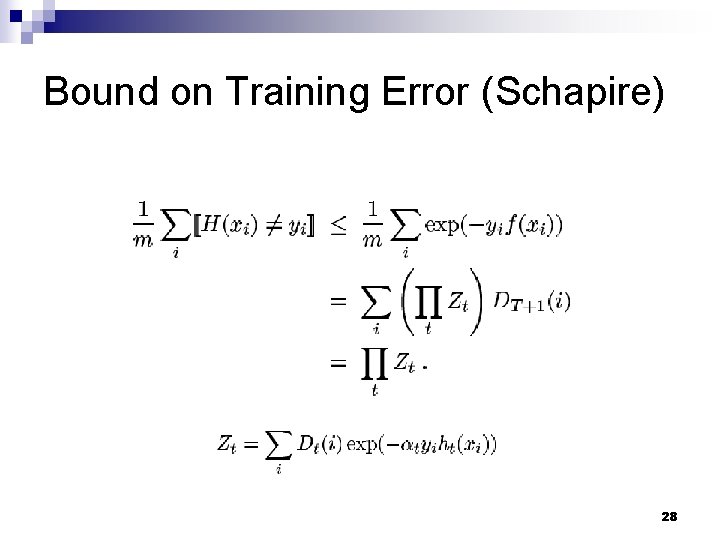

Bound on Training Error (Schapire)

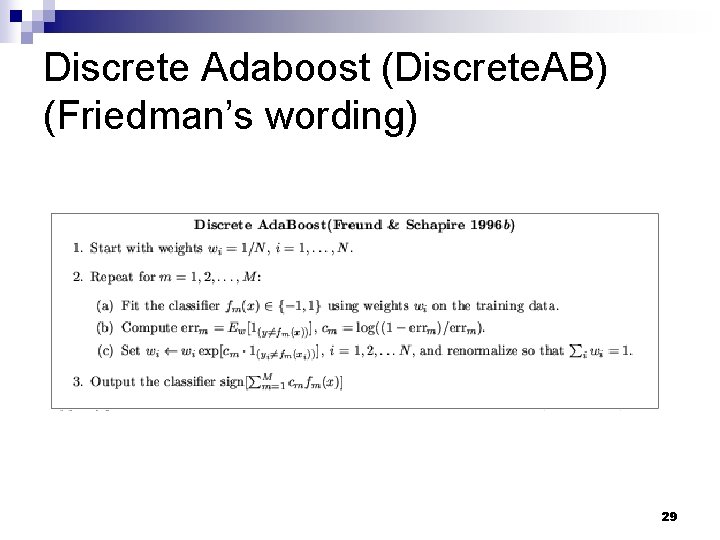
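For reference, the training error bound is usually stated as below. This is the standard result for discrete AdaBoost with alpha_t = ½ ln((1 - epsilon_t) / epsilon_t), written in assumed notation (m training samples, T rounds, normaliser Z_t, edge gamma_t); it may differ in form from the figure.

```latex
\frac{1}{m} \sum_{i=1}^{m} [\, H(x_i) \neq y_i \,]
\;\le\; \frac{1}{m} \sum_{i=1}^{m} \exp\bigl(-y_i f(x_i)\bigr)
\;=\; \prod_{t=1}^{T} Z_t ,
\qquad
Z_t = \sum_{i=1}^{m} D_t(i) \exp\bigl(-\alpha_t y_i h_t(x_i)\bigr)
    = 2\sqrt{\epsilon_t (1 - \epsilon_t)}
    = \sqrt{1 - 4\gamma_t^2}
    \;\le\; \exp\bigl(-2\gamma_t^2\bigr),
\qquad
\gamma_t = \tfrac{1}{2} - \epsilon_t
```

As long as every weak classifier is better than chance by some fixed margin gamma, the bound decays exponentially in the number of rounds T.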

Discrete Adaboost (DiscreteAB) (Friedman’s wording)

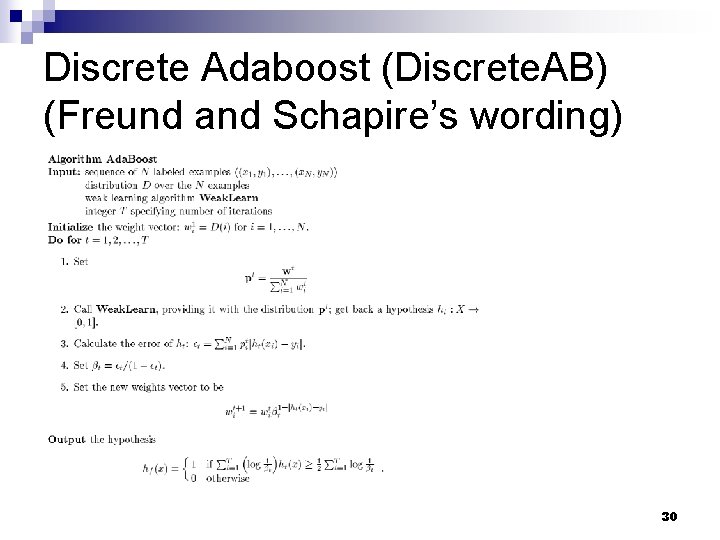

Discrete Adaboost (DiscreteAB) (Freund and Schapire’s wording)

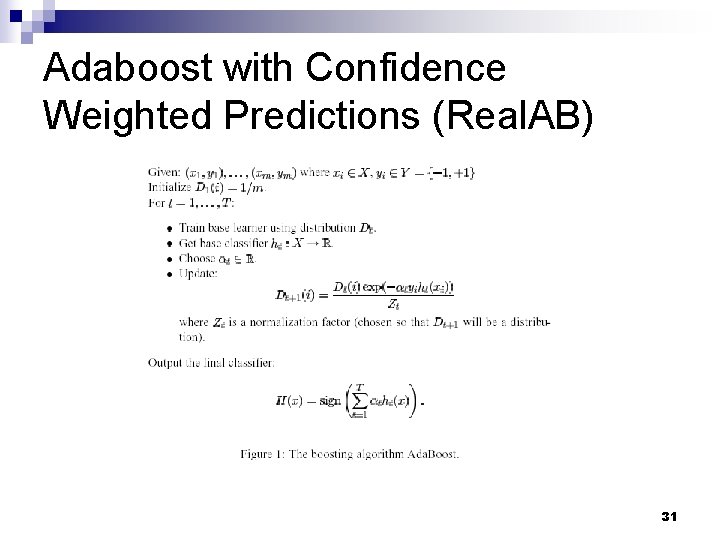

Adaboost with Confidence Weighted Predictions (RealAB)

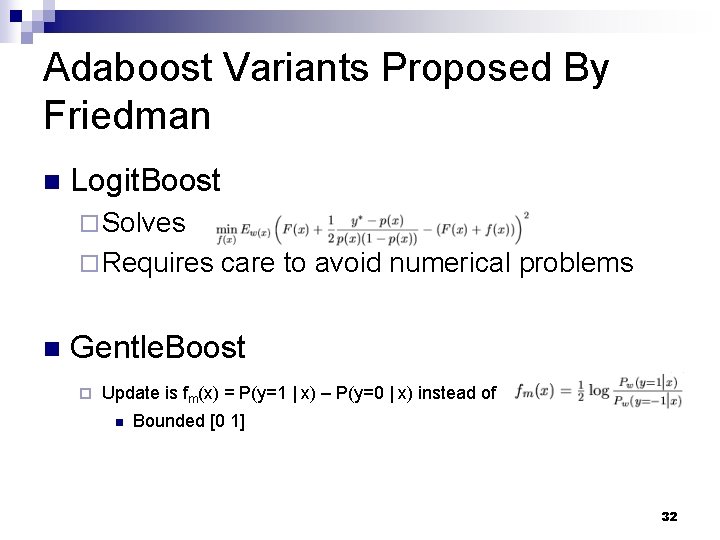

Adaboost Variants Proposed By Friedman
- LogitBoost
  - Solves …
  - Requires care to avoid numerical problems
- GentleBoost
  - Update is f_m(x) = P(y=1 | x) - P(y=0 | x) instead of …
  - Bounded [0 1]

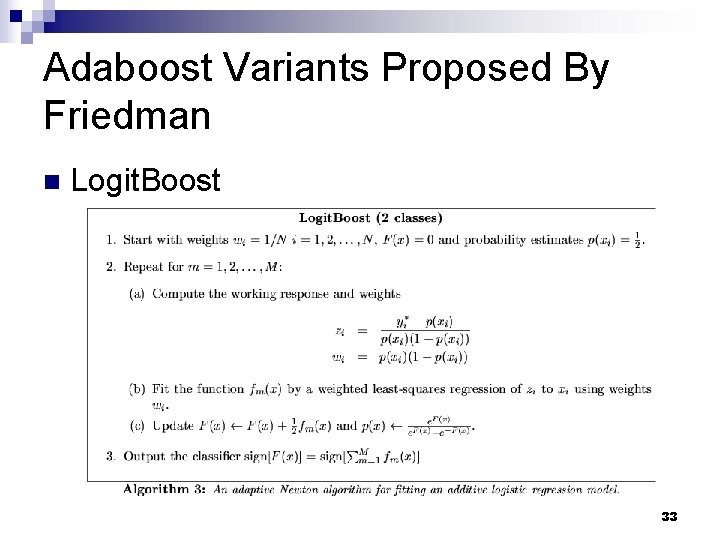

Adaboost Variants Proposed By Friedman
- LogitBoost

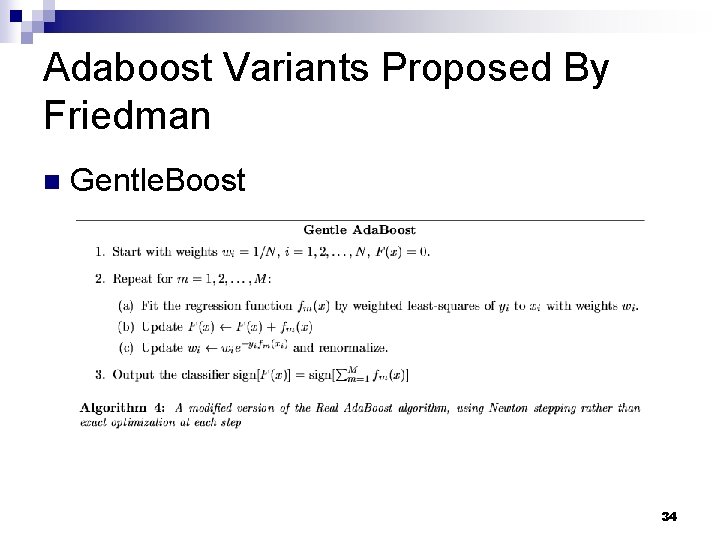

Adaboost Variants Proposed By Friedman
- GentleBoost

Thanks!!! Any comments or questions?
