8.3 Agent and Decision Making AI: Agent-driven AI

- Slides: 25

8.3 Agent and Decision Making AI: Agent-driven AI and associated decision making techniques

QUESTION CLINIC: FAQ. In-lecture exploration of answers to frequently asked student questions

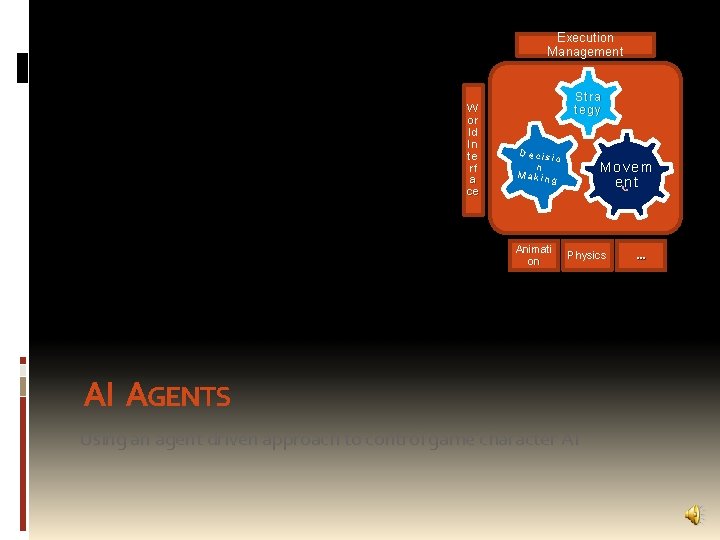

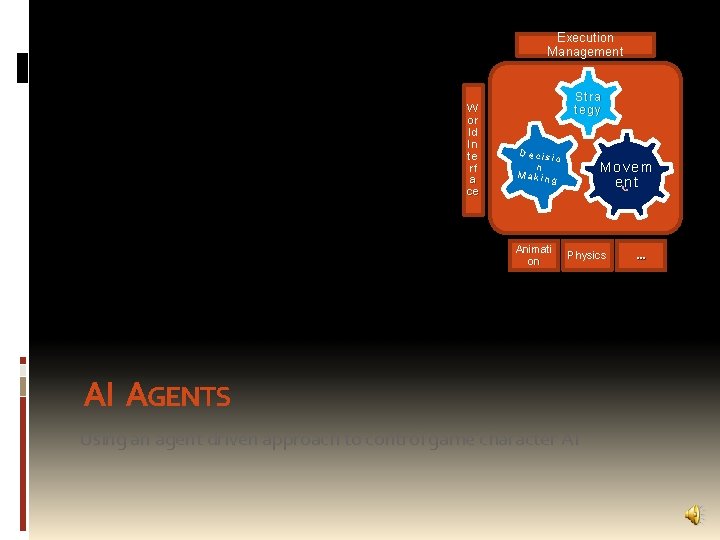

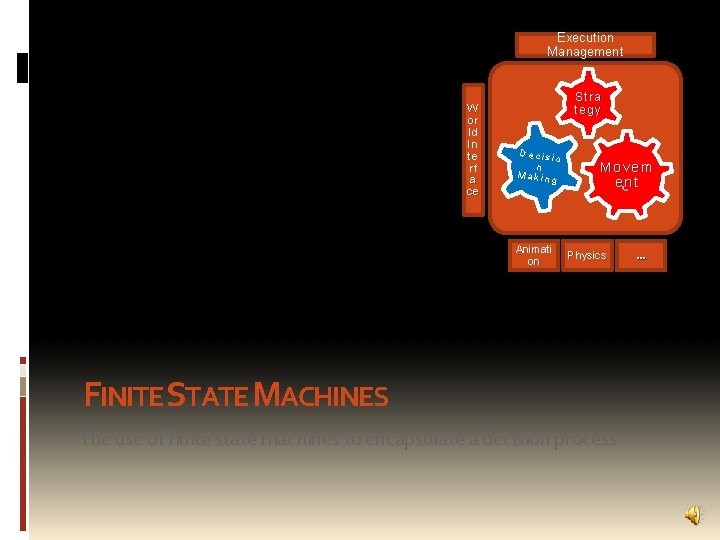

[Figure: game AI architecture showing Execution Management, World Interface, Strategy, Decision Making, Movement, Animation and Physics]

AI AGENTS: Using an agent-driven approach to control game character AI

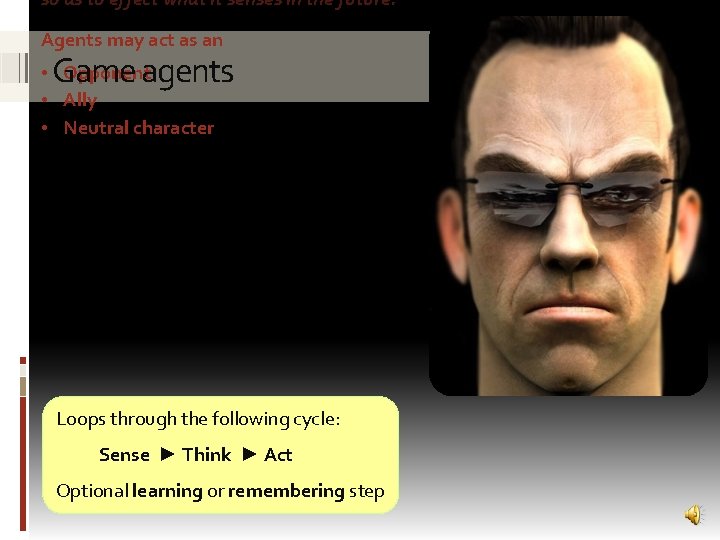

Game agents

"… so as to effect what it senses in the future."

Agents may act as:
• An opponent
• An ally
• A neutral character

An agent loops through the following cycle: Sense ► Think ► Act, with an optional learning or remembering step.
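The Sense ► Think ► Act cycle, with its optional remembering step, can be sketched as a per-frame update. This is a minimal Python illustration, not the lecture's own implementation: the `Agent` class, the world dictionary keys (`player_visible`, `player_pos`) and the three behaviours are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Minimal agent that runs Sense -> Think -> Act each update, plus a memory step."""
    name: str
    memory: dict = field(default_factory=dict)

    def sense(self, world: dict) -> dict:
        # Hypothetical percept: only what this agent is allowed to perceive.
        return {"player_visible": world.get("player_visible", False),
                "player_pos": world.get("player_pos")}

    def think(self, percept: dict) -> str:
        # Choose a behaviour from the current percept and what was remembered earlier.
        if percept["player_visible"]:
            return "attack"
        if "last_seen" in self.memory:
            return "search"
        return "patrol"

    def act(self, action: str) -> str:
        # A real game would move, animate or fire here; we just report the action.
        return f"{self.name}: {action}"

    def remember(self, percept: dict) -> None:
        # Optional learning/remembering step: store the player's last known position.
        if percept["player_visible"]:
            self.memory["last_seen"] = percept["player_pos"]

    def update(self, world: dict) -> str:
        percept = self.sense(world)
        action = self.think(percept)
        self.remember(percept)
        return self.act(action)
```

For example, a guard that sees the player attacks, and on the next update (player hidden) searches, because its memory still holds the last sighting.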

Game agents

… ‘cheating’ and ensure agents cannot ‘see’ through walls, know about unexplored areas, etc.

Example sensing model. For each game object:
• Is it within the viewing distance of the agent?
• Is it within the viewing angle of the agent?
• Is it unobscured by the environment?
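The three sensing questions above map onto three successive tests. A sketch, assuming 2D positions, a facing direction in degrees and a hypothetical `is_blocked` callback that stands in for the engine's line-of-sight raycast:

```python
import math


def can_see(agent_pos, facing_deg, target_pos, view_distance, view_angle_deg, is_blocked):
    """Return True if the target passes the distance, angle and occlusion tests."""
    dx = target_pos[0] - agent_pos[0]
    dy = target_pos[1] - agent_pos[1]

    # 1. Within the viewing distance of the agent?
    if math.hypot(dx, dy) > view_distance:
        return False

    # 2. Within the viewing angle? Signed difference between bearing and facing,
    #    wrapped into [-180, 180) so that e.g. 350 vs 10 degrees gives 20.
    bearing = math.degrees(math.atan2(dy, dx))
    diff = (bearing - facing_deg + 180.0) % 360.0 - 180.0
    if abs(diff) > view_angle_deg / 2.0:
        return False

    # 3. Unobscured by the environment? (hypothetical raycast callback)
    return not is_blocked(agent_pos, target_pos)
```

The cheap distance test runs first, so the typically expensive occlusion test is only performed for objects that pass the other two.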

Game agents

… determine how far sound can travel.
• Travel distance may also depend upon the type of incident surface, the movement speed of the player, etc.
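A sketch of a hearing check in which the audible radius of a footstep scales with surface type and movement speed. The `SURFACE_FACTOR` values and the linear speed scaling are assumptions chosen for illustration, not values from any particular game.

```python
import math

# Hypothetical tuning values: harder surfaces carry sound further.
SURFACE_FACTOR = {"carpet": 0.5, "wood": 1.0, "metal": 1.5}


def footstep_radius(base_radius, surface, speed, walk_speed):
    """Distance at which a footstep can be heard (assumed linear in speed)."""
    return base_radius * SURFACE_FACTOR[surface] * (speed / walk_speed)


def can_hear(listener_pos, source_pos, radius):
    """A listener hears the sound if it lies within the audible radius."""
    return math.dist(listener_pos, source_pos) <= radius
```

A sprinting player on a metal floor is then heard from much further away than one walking on carpet.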

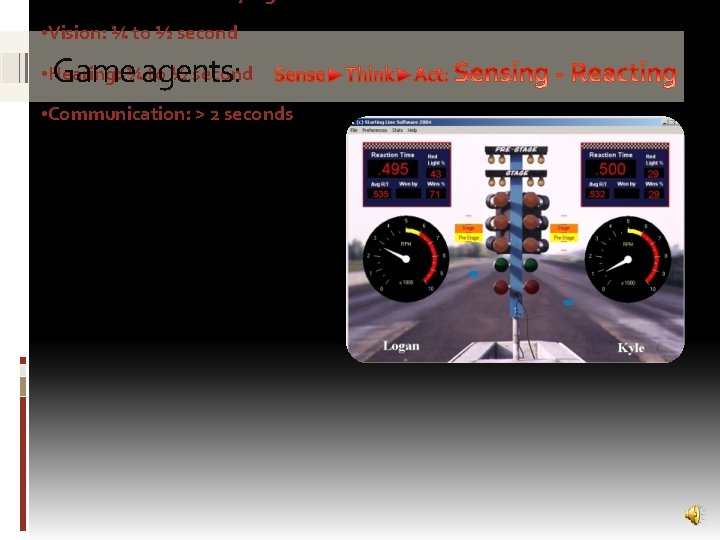

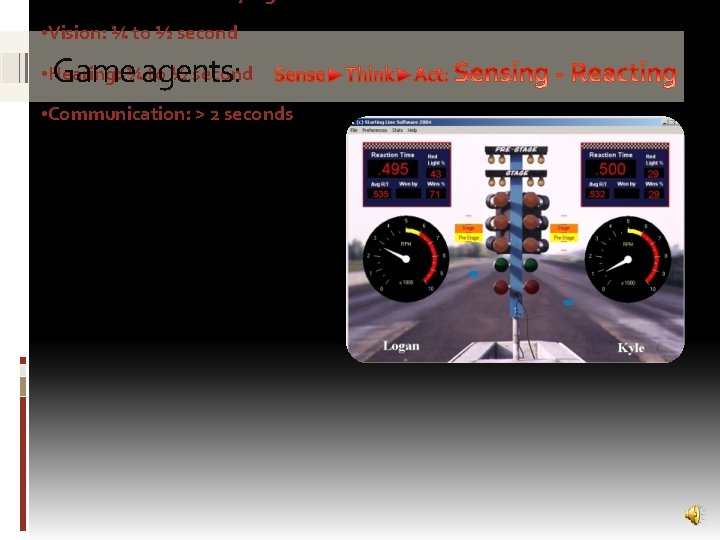

Game agents

• Vision: ¼ to ½ second
• Hearing: ¼ to ½ second
• Communication: > 2 seconds
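One way to use durations like these is to hold each sensed event back before the agent can react to it. The sketch below treats the listed times as perception delays (our interpretation) and uses the upper end of each range; `DelayedSenses` and the channel names are hypothetical.

```python
import heapq
import itertools

# Assumed delays in seconds, taken from the upper end of the ranges above.
SENSE_DELAY = {"vision": 0.5, "hearing": 0.5, "communication": 2.0}


class DelayedSenses:
    """Queues sensed events and releases them only after their channel's delay."""

    def __init__(self):
        self._queue = []               # heap of (deliver_at, seq, event)
        self._seq = itertools.count()  # tie-breaker so events are never compared

    def notice(self, now, channel, event):
        heapq.heappush(self._queue, (now + SENSE_DELAY[channel], next(self._seq), event))

    def ready(self, now):
        """Return every event whose delay has elapsed, oldest first."""
        out = []
        while self._queue and self._queue[0][0] <= now:
            out.append(heapq.heappop(self._queue)[2])
        return out
```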

Game agents

• Use pre-coded expert knowledge
• Algorithmically search for a solution

Many different techniques exist (we will explore some later).

Aside: Encoding expert knowledge is appealing as it is relatively easy to obtain and use, but it may not be scalable or adaptable. Algorithmic approaches are often scalable and adaptable, but they may not match a human expert in the quality of their decisions, or they may be computationally expensive.

Game agents

… down’ agents, for example:
• Make shooting less accurate
• Introduce longer reaction times
• Change location to make themselves more vulnerable, etc.

Letting agents cheat. This is sometimes necessary for:
• The highest difficulty levels
• CPU computation reasons
• Development time reasons
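Making shooting less accurate is often done by perturbing a perfect aim with a bounded random error, and reaction times can be read from the same per-difficulty table. A minimal sketch; the `DIFFICULTY` table and its numbers are hypothetical tuning values:

```python
import random

# Hypothetical per-difficulty settings: lower levels aim worse and react slower.
DIFFICULTY = {
    "easy":   {"aim_error_deg": 8.0, "reaction_s": 0.8},
    "normal": {"aim_error_deg": 4.0, "reaction_s": 0.5},
    "hard":   {"aim_error_deg": 1.0, "reaction_s": 0.25},
}


def aim_angle(true_angle_deg, level, rng=random):
    """Perturb the perfect firing angle by a uniform error bounded by the level."""
    err = DIFFICULTY[level]["aim_error_deg"]
    return true_angle_deg + rng.uniform(-err, err)
```

Passing a seeded `random.Random` as `rng` makes the behaviour reproducible for testing.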

Game agents

Examples of actions include:
• Move location
• Pick up object
• Play animation
• Play sound effect
• Fire weapon
• Communicate with other agents, e.g. to:
  • Alert other agents to some situation (e.g. agent hurt)
  • Share agent knowledge (e.g. player last seen at location x)
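Communication actions such as alerting allies or sharing the player's last known position can be routed through a simple message board. This is a hypothetical sketch (the `MessageBoard` and `Message` names are not from the lecture); a real game would usually hook this into its own event system.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Message:
    sender: str
    kind: str          # e.g. "agent_hurt" or "player_seen"
    data: object = None


class MessageBoard:
    """Agents subscribe to message kinds and receive broadcasts in an inbox."""

    def __init__(self):
        self._inboxes = defaultdict(list)
        self._subscribers = defaultdict(set)

    def subscribe(self, agent, kind):
        self._subscribers[kind].add(agent)

    def broadcast(self, msg):
        # Deliver to every subscriber of this kind except the sender itself.
        for agent in self._subscribers[msg.kind]:
            if agent != msg.sender:
                self._inboxes[agent].append(msg)

    def read(self, agent):
        """Return and clear the agent's pending messages."""
        msgs, self._inboxes[agent] = self._inboxes[agent], []
        return msgs
```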

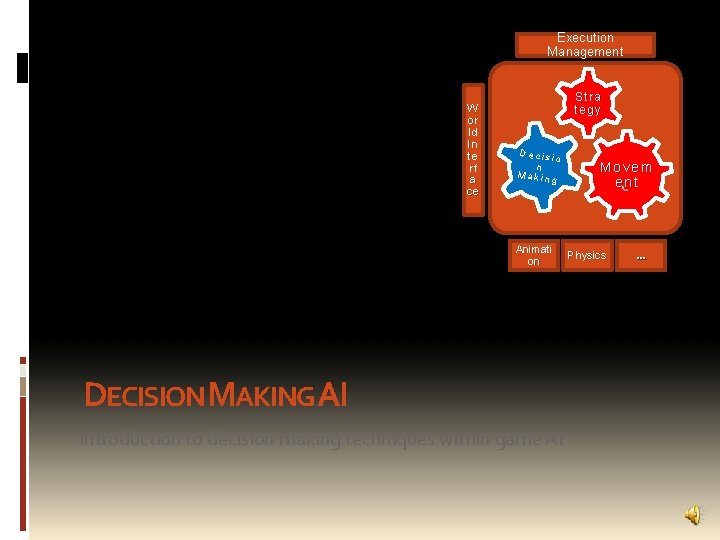

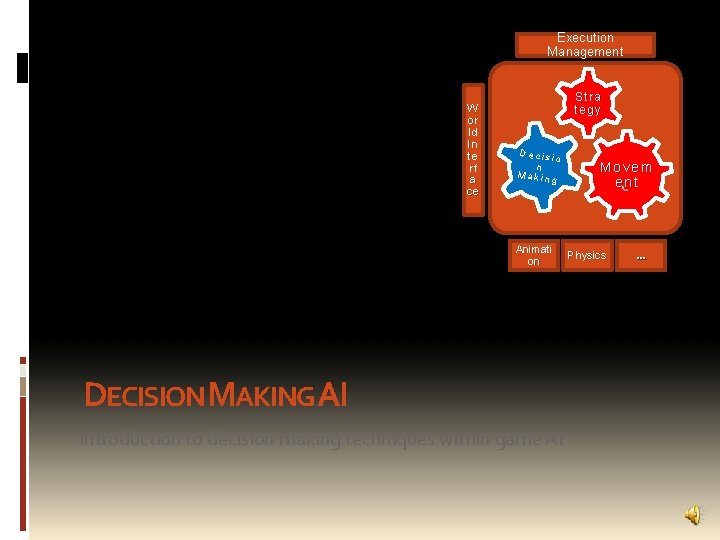

DECISION MAKING AI: Introduction to decision making techniques within game AI

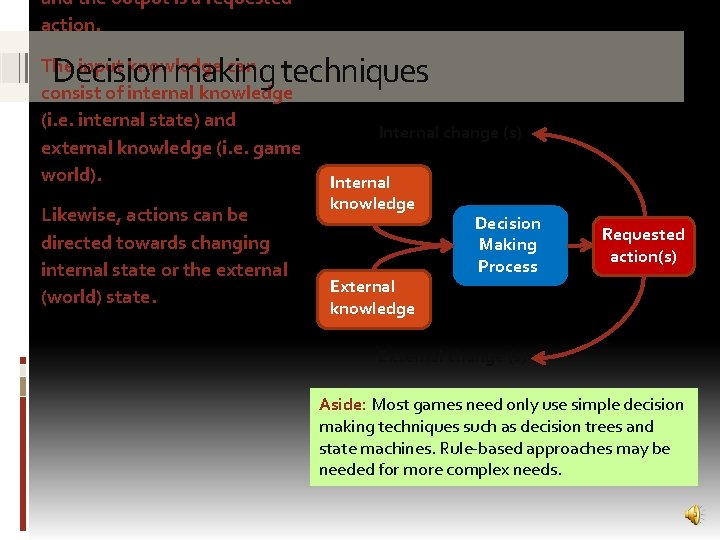

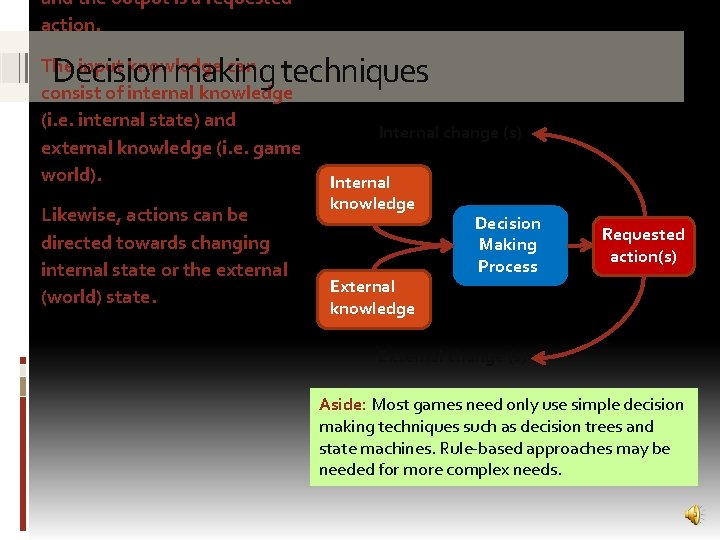

Decision making techniques

… and the output is a requested action. The input knowledge can consist of internal knowledge (i.e. internal state) and external knowledge (i.e. the game world). Likewise, actions can be directed towards changing the internal state or the external (world) state.

[Figure: internal and external knowledge feed the decision making process, which outputs requested action(s) that cause internal and/or external change(s)]

Aside: Most games need only simple decision making techniques such as decision trees and state machines. Rule-based approaches may be needed for more complex requirements.

DECISION TREES: Simple decision making using decision trees

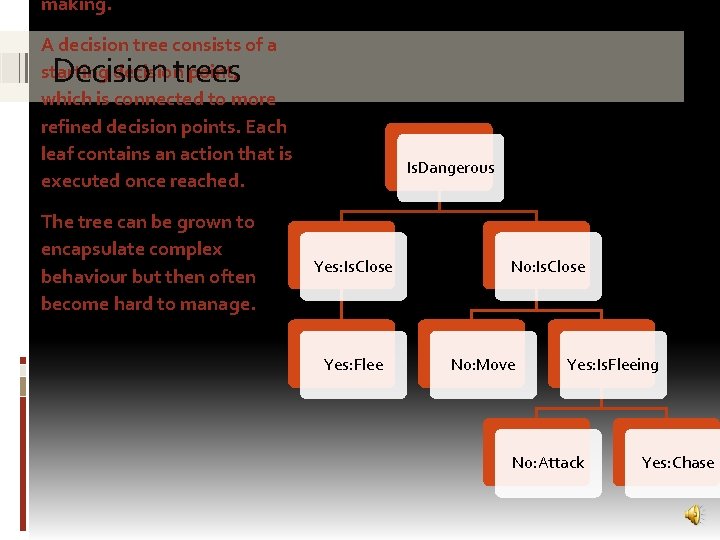

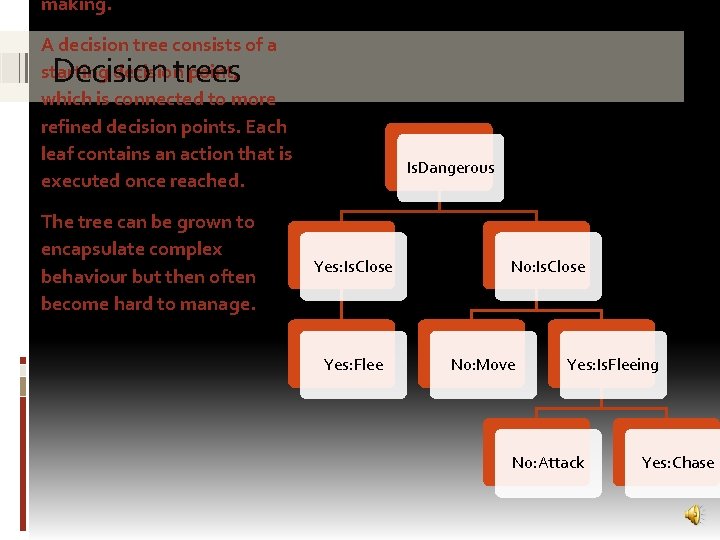

Decision trees

… making. A decision tree consists of a starting decision point, which is connected to more refined decision points. Each leaf contains an action that is executed once reached. The tree can be grown to encapsulate complex behaviour, but it then often becomes hard to manage.

[Figure: example decision tree with the yes/no decisions IsDangerous, IsClose and IsFleeing, and the actions Flee, Move, Attack and Chase]
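A decision tree can be represented as decision nodes holding a yes/no test and leaf nodes holding an action; evaluation walks from the root to a leaf. The node labels below come from the example figure, but how the branches connect is our assumption, and the `Decision`/`Action` classes are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Action:
    name: str


@dataclass
class Decision:
    test: Callable[[dict], bool]
    yes: "Node"
    no: "Node"


Node = Union[Action, Decision]


def decide(node: Node, state: dict) -> str:
    """Follow yes/no branches from the root until an action leaf is reached."""
    while isinstance(node, Decision):
        node = node.yes if node.test(state) else node.no
    return node.name


# One plausible arrangement of the example tree (assumed).
tree = Decision(lambda s: s["dangerous"],
                yes=Decision(lambda s: s["close"], yes=Action("Flee"), no=Action("Move")),
                no=Decision(lambda s: s["close"],
                            yes=Decision(lambda s: s["target_fleeing"],
                                         yes=Action("Chase"), no=Action("Attack")),
                            no=Action("Move")))
```

Each update only evaluates the tests along one root-to-leaf path, which is why decision trees are cheap to run.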

FINITE STATE MACHINES: The use of finite state machines to encapsulate a decision process

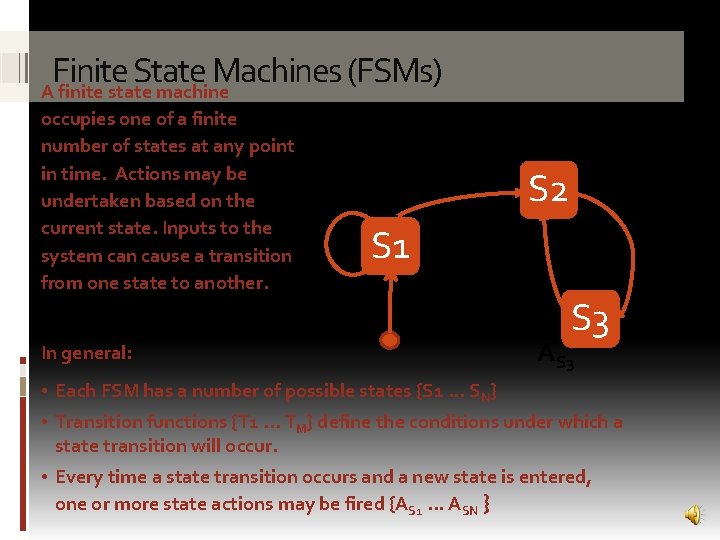

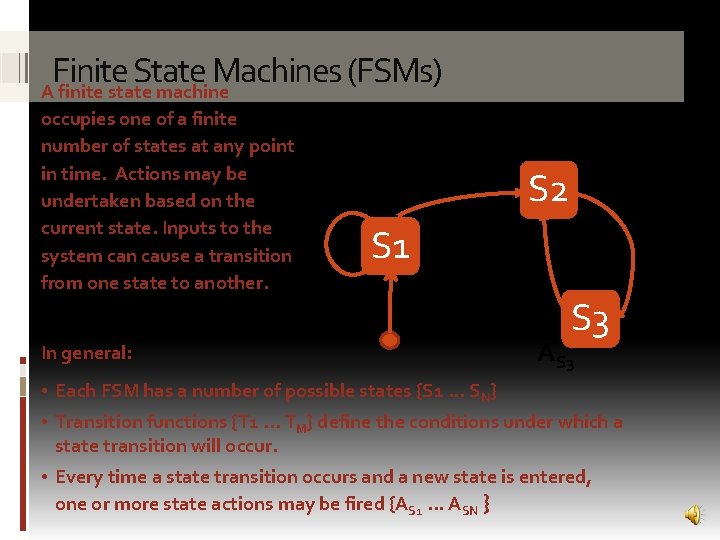

Finite State Machines (FSMs)

A finite state machine occupies one of a finite number of states at any point in time. Actions may be undertaken based on the current state. Inputs to the system can cause a transition from one state to another.

[Figure: example FSM with states S1 to S3, transitions T1 to T4 and state actions AS1 to AS3]

In general:
• Each FSM has a number of possible states $\{S_1, \ldots, S_N\}$.
• Transition functions $\{T_1, \ldots, T_M\}$ define the conditions under which a state transition will occur.
• Every time a state transition occurs and a new state is entered, one or more state actions $\{AS_1, \ldots, AS_N\}$ may be fired.
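The general definition above (states, transition conditions, entry actions) can be sketched as a small table-driven class. The `FSM` class and its input format are assumptions for illustration; in this version only the first matching transition out of the current state fires per update.

```python
from typing import Callable, Dict, List, Tuple

Transition = Tuple[Callable[[dict], bool], str]   # (condition T_i, target state)


class FSM:
    """States S1..SN, transitions T1..TM and entry actions AS1..ASN."""

    def __init__(self, initial: str,
                 transitions: Dict[str, List[Transition]],
                 on_enter: Dict[str, Callable[[], None]]):
        self.state = initial
        self.transitions = transitions
        self.on_enter = on_enter

    def update(self, inputs: dict) -> str:
        # Fire the first transition out of the current state whose condition holds.
        for condition, target in self.transitions.get(self.state, []):
            if condition(inputs):
                self.state = target
                action = self.on_enter.get(target)
                if action:
                    action()   # state action fired on entering the new state
                break
        return self.state
```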

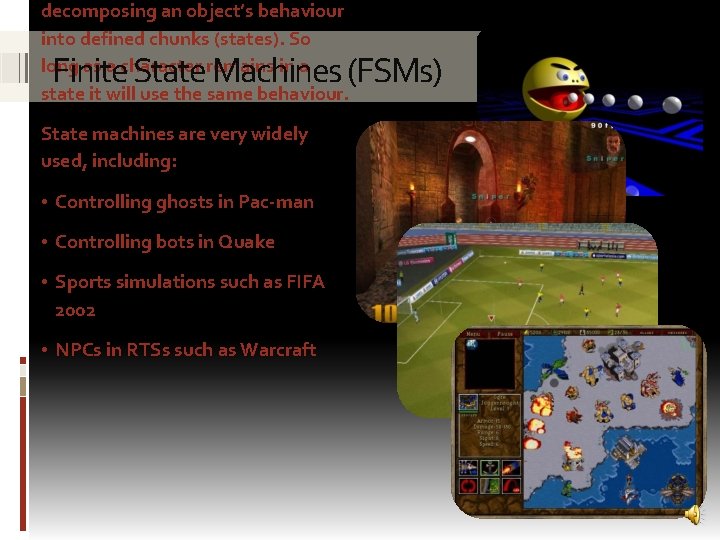

Finite State Machines (FSMs)

… decomposing an object’s behaviour into defined chunks (states). So long as a character remains in a state, it will use the same behaviour.

State machines are very widely used, including:
• Controlling ghosts in Pac-Man
• Controlling bots in Quake
• Sports simulations such as FIFA 2002
• NPCs in RTSs such as Warcraft

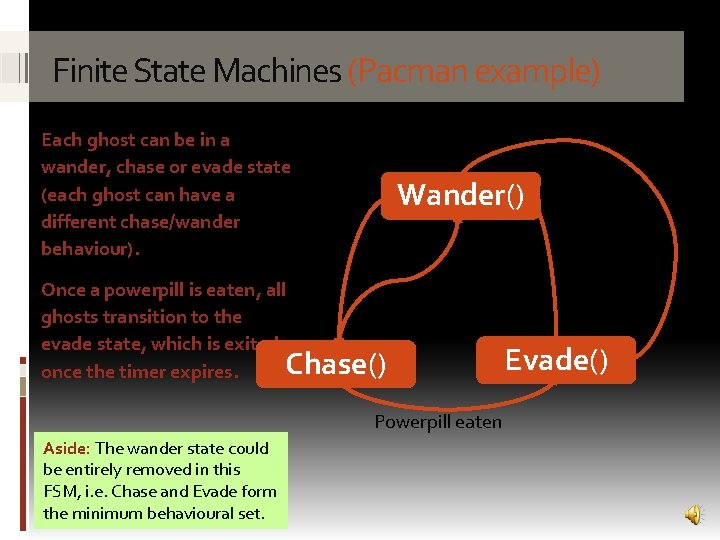

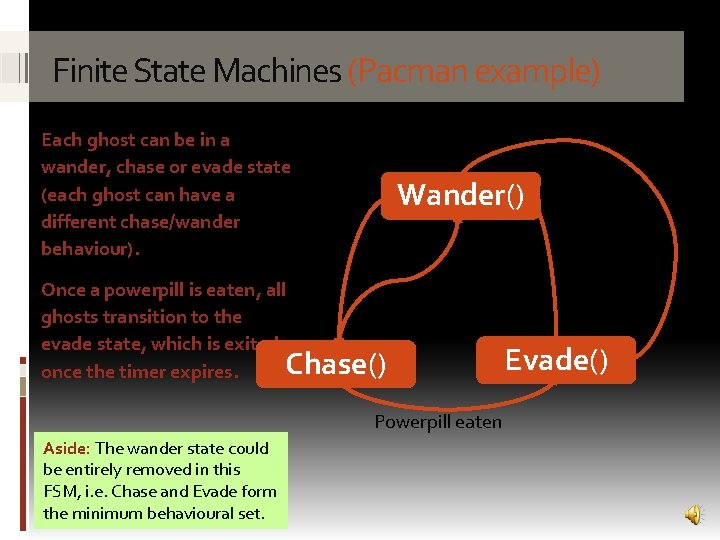

Finite State Machines (Pac-Man example)

Each ghost can be in a wander, chase or evade state (each ghost can have a different chase/wander behaviour). Once a power pill is eaten, all ghosts transition to the evade state, which is exited once the timer expires.

[Figure: ghost FSM with states Wander(), Chase() and Evade(), and transitions labelled "Pacman in range", "Pacman out of range", "Powerpill eaten" (from both Wander and Chase) and "Powerpill expired"]

Aside: The wander state could be removed entirely from this FSM, i.e. Chase and Evade form the minimum behavioural set.
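The ghost FSM can be sketched directly from this description. The evade duration, the `Ghost` interface and the choice of Wander as the state entered when the power pill expires are assumptions:

```python
class Ghost:
    """Wander/Chase/Evade state machine for a single ghost."""

    def __init__(self, evade_duration: float = 10.0):   # hypothetical duration (s)
        self.state = "Wander"
        self.evade_duration = evade_duration
        self.evade_timer = 0.0

    def update(self, dt: float, pacman_in_range: bool, powerpill_eaten: bool) -> str:
        if powerpill_eaten:
            # From any state, eating a power pill sends the ghost into Evade.
            self.state = "Evade"
            self.evade_timer = self.evade_duration
        elif self.state == "Evade":
            self.evade_timer -= dt
            if self.evade_timer <= 0.0:
                self.state = "Wander"   # assumed exit state when the pill expires
        elif self.state == "Wander" and pacman_in_range:
            self.state = "Chase"
        elif self.state == "Chase" and not pacman_in_range:
            self.state = "Wander"
        return self.state
```

Checking `powerpill_eaten` first gives the power pill priority over the range tests, so the ghost never chases while the pill is active.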

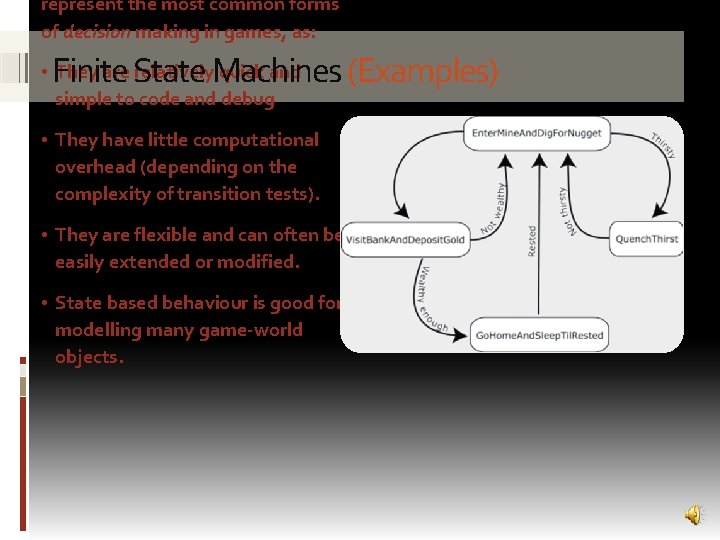

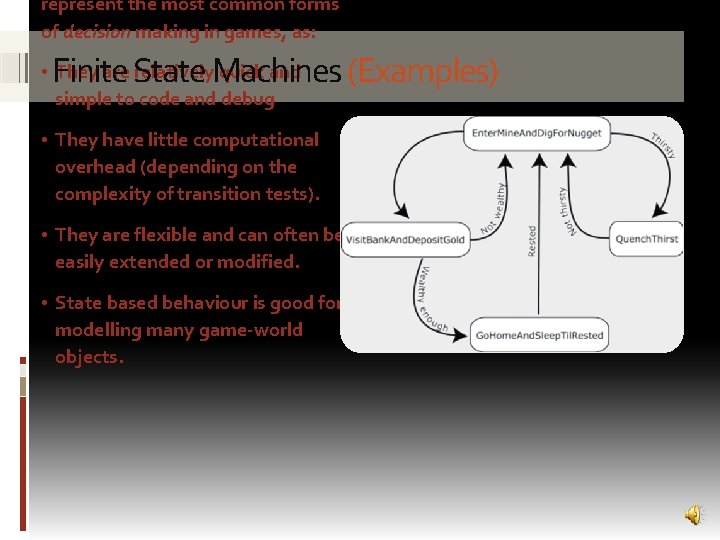

Finite State Machines (Examples)

… represent the most common forms of decision making in games, as:
• They are relatively quick and simple to code and debug.
• They have little computational overhead (depending on the complexity of the transition tests).
• They are flexible and can often be easily extended or modified.
• State-based behaviour is good for modelling many game-world objects.

Hierarchical FSMs

… all forms of behaviour. One example is ‘alarm behaviour’, an action that can be triggered from any state.

Consider a robot whose ‘alarm’ behaviour is to recharge when its power level becomes low. Using a hierarchical FSM, the top level transitions between ‘cleaning up’ and ‘getting power’. When in the ‘cleaning up’ state, a lower-level FSM controls behaviour.
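The robot example can be sketched as a top-level FSM that owns a lower-level cleaning FSM. The battery thresholds and the cleaning sub-states (`search_for_trash`, `pick_up_trash`, `take_to_bin`) are hypothetical:

```python
class CleaningFSM:
    """Lower-level FSM active while the robot is in the 'cleaning up' state."""

    def __init__(self):
        self.state = "search_for_trash"

    def update(self, sees_trash: bool, holding_trash: bool) -> str:
        if self.state == "search_for_trash" and sees_trash:
            self.state = "pick_up_trash"
        elif self.state == "pick_up_trash" and holding_trash:
            self.state = "take_to_bin"
        elif self.state == "take_to_bin" and not holding_trash:
            self.state = "search_for_trash"
        return self.state


class RobotHFSM:
    LOW, FULL = 0.2, 0.9   # hypothetical battery thresholds (fraction of charge)

    def __init__(self):
        self.state = "cleaning_up"
        self.cleaning = CleaningFSM()

    def update(self, battery, sees_trash=False, holding_trash=False) -> str:
        # Top level: the 'alarm' transition interrupts whichever cleaning sub-state is active.
        if self.state == "cleaning_up" and battery < self.LOW:
            self.state = "getting_power"
        elif self.state == "getting_power" and battery >= self.FULL:
            self.state = "cleaning_up"
        if self.state == "getting_power":
            return "getting_power"
        return "cleaning_up/" + self.cleaning.update(sees_trash, holding_trash)
```

Here the lower-level FSM keeps its sub-state while the robot recharges, so cleaning resumes where it left off; resetting it on re-entry would be an equally valid design choice.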

GOAL ORIENTED BEHAVIOUR: Using goals to drive behaviour

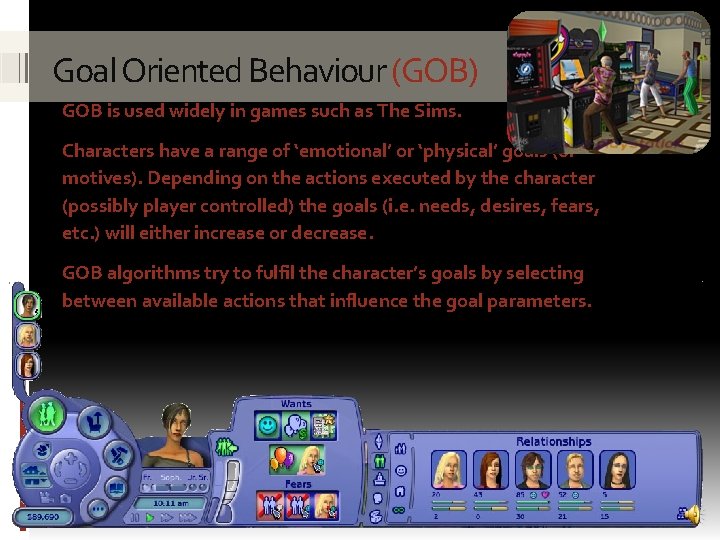

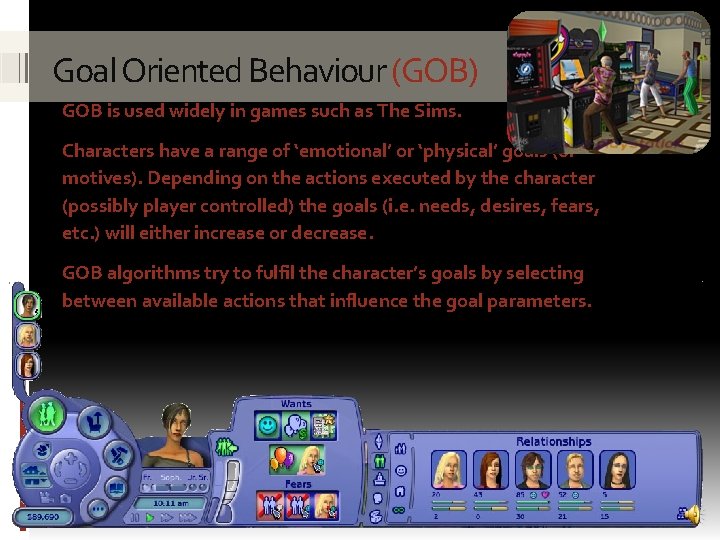

Goal Oriented Behaviour (GOB)

GOB is used widely in games such as The Sims. Characters have a range of ‘emotional’ or ‘physical’ goals (or motives). Depending on the actions executed by the character (possibly player controlled), the goals (e.g. needs, desires, fears) will either increase or decrease. GOB algorithms try to fulfil the character’s goals by selecting between available actions that influence the goal parameters.

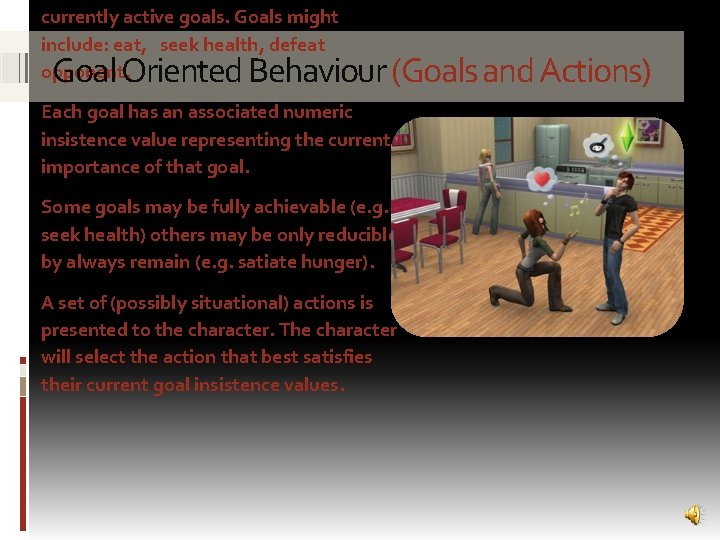

Goal Oriented Behaviour (Goals and Actions)

… currently active goals. Goals might include: eat, seek health, defeat opponent. Each goal has an associated numeric insistence value representing the current importance of that goal. Some goals may be fully achievable (e.g. seek health); others may only be reducible and always remain (e.g. satiate hunger).

A set of (possibly situational) actions is presented to the character. The character will select the action that best satisfies its current goal insistence values.

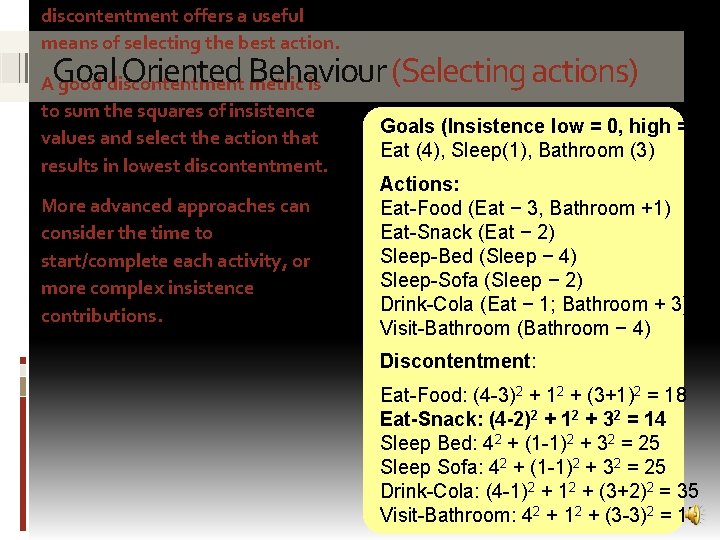

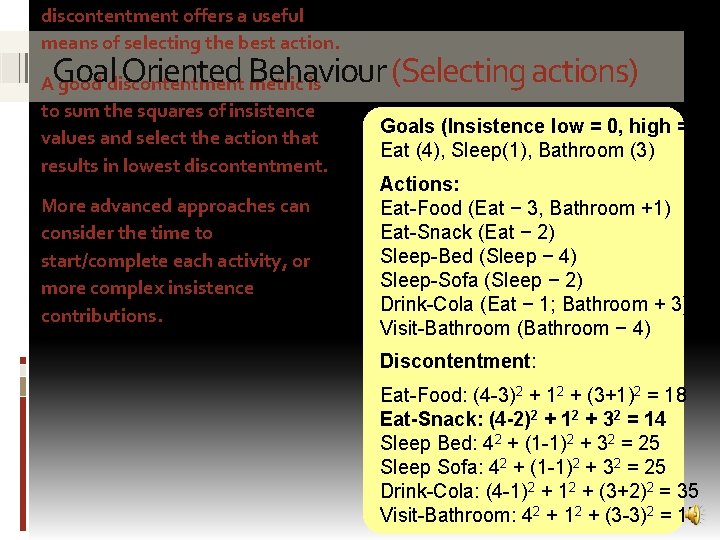

Goal Oriented Behaviour (Selecting actions)

… discontentment offers a useful means of selecting the best action. A good discontentment metric is to sum the squares of the insistence values, $D = \sum_i g_i^2$ where $g_i$ is the insistence of goal $i$, and select the action that results in the lowest discontentment. More advanced approaches can consider the time to start/complete each activity, or more complex insistence contributions.

Goals (insistence: low = 0, high = 5): Eat (4), Sleep (1), Bathroom (3)

Actions:
• Eat-Food (Eat −3, Bathroom +1)
• Eat-Snack (Eat −2)
• Sleep-Bed (Sleep −4)
• Sleep-Sofa (Sleep −2)
• Drink-Cola (Eat −1, Bathroom +3)
• Visit-Bathroom (Bathroom −4)

Discontentment after each action (insistence cannot fall below 0):
• Eat-Food: $(4-3)^2 + 1^2 + (3+1)^2 = 18$
• Eat-Snack: $(4-2)^2 + 1^2 + 3^2 = 14$
• Sleep-Bed: $4^2 + \max(0, 1-4)^2 + 3^2 = 25$
• Sleep-Sofa: $4^2 + \max(0, 1-2)^2 + 3^2 = 25$
• Drink-Cola: $(4-1)^2 + 1^2 + (3+3)^2 = 46$
• Visit-Bathroom: $4^2 + 1^2 + \max(0, 3-4)^2 = 17$

Eat-Snack gives the lowest discontentment (14), so it is selected.
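The worked example can be checked with a short sketch that applies each action's effects, clamps insistence at 0, and picks the action with the lowest sum of squared insistence values. The function names are hypothetical:

```python
def apply(goals, effects):
    """Insistence values after an action, clamped so they never fall below 0."""
    return {g: max(0, v + effects.get(g, 0)) for g, v in goals.items()}


def discontentment(goals):
    """Sum of squared insistence values."""
    return sum(v * v for v in goals.values())


def choose_action(goals, actions):
    """Select the action whose outcome has the lowest discontentment."""
    return min(actions, key=lambda name: discontentment(apply(goals, actions[name])))


goals = {"Eat": 4, "Sleep": 1, "Bathroom": 3}
actions = {
    "Eat-Food": {"Eat": -3, "Bathroom": 1},
    "Eat-Snack": {"Eat": -2},
    "Sleep-Bed": {"Sleep": -4},
    "Sleep-Sofa": {"Sleep": -2},
    "Drink-Cola": {"Eat": -1, "Bathroom": 3},
    "Visit-Bathroom": {"Bathroom": -4},
}
```

Squaring the insistence values means one very pressing goal dominates several mild ones, which is why the metric favours reducing high values first.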

Summary

Today we explored:
• The notion of a game AI agent
• Decision making processes, including finite state machines and goal driven behaviour

To do:
• If applicable to your game, explore finite state machines, agents, and goal driven behaviour.
• Work towards your alpha hand-in goals.