7 Speech Recognition Concepts Speech Recognition Approaches Recognition

- Slides: 34

7 - Speech Recognition Concepts, Speech Recognition Approaches, Recognition Theories, Bayes' Rule, Simple Language Model, P(A|W), Network Types

7 - Speech Recognition (Cont’d): HMM Calculating Approaches, Neural Components, Three Basic HMM Problems, Viterbi Algorithm, State Duration Modeling, Training in HMM

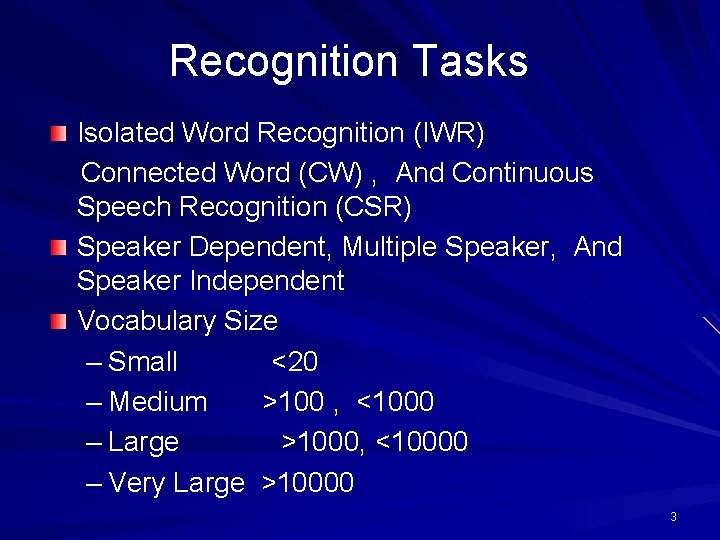

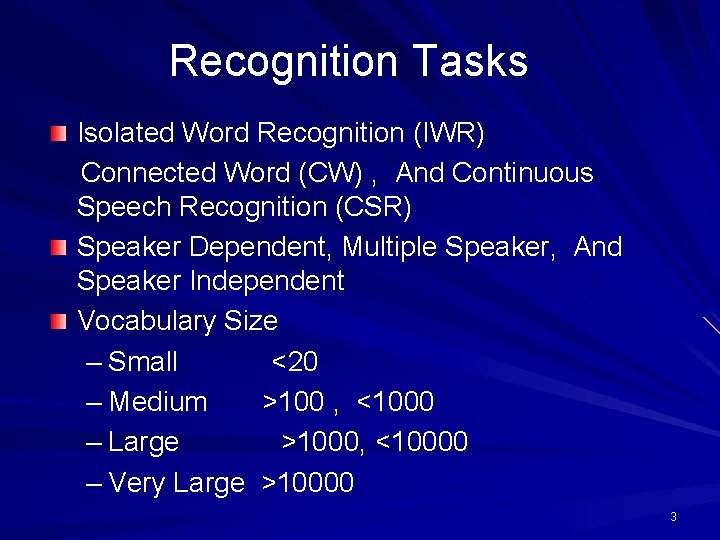

Recognition Tasks
- Isolated Word Recognition (IWR), Connected Word (CW), and Continuous Speech Recognition (CSR)
- Speaker Dependent, Multiple Speaker, and Speaker Independent
- Vocabulary Size:
  - Small: < 20 words
  - Medium: > 100, < 1000 words
  - Large: > 1000, < 10000 words
  - Very Large: > 10000 words

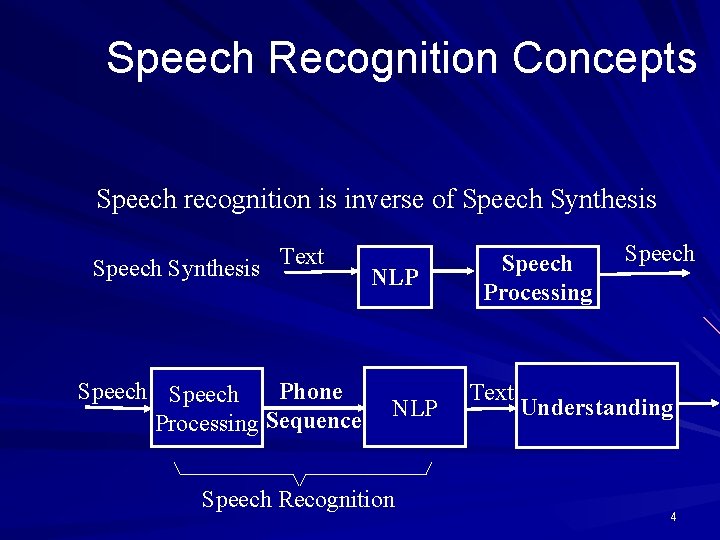

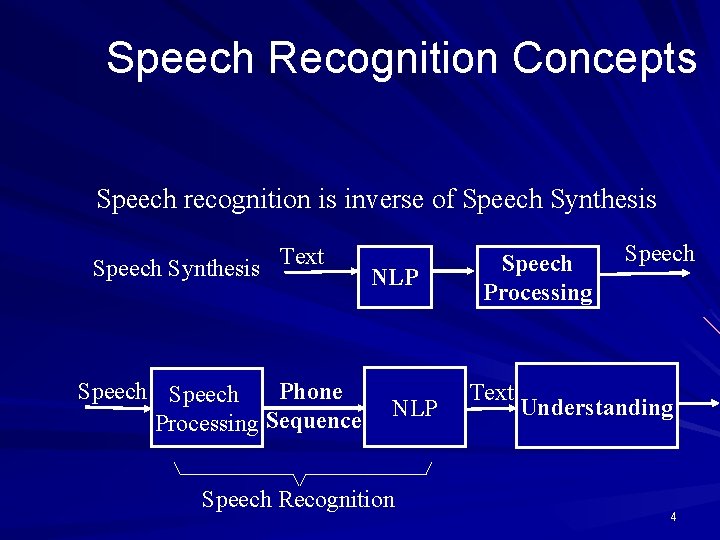

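Speech Recognition Concepts
Speech recognition is the inverse of speech synthesis: synthesis turns text into speech, while recognition turns speech back into text.
[Figure: Text, NLP, Phone Sequence, Speech Processing, and Speech, traversed in one direction by Speech Synthesis and in the other by Speech Recognition; Speech Understanding is also shown]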

Speech Recognition Approaches
- Bottom-Up Approach
- Top-Down Approach
- Blackboard Approach

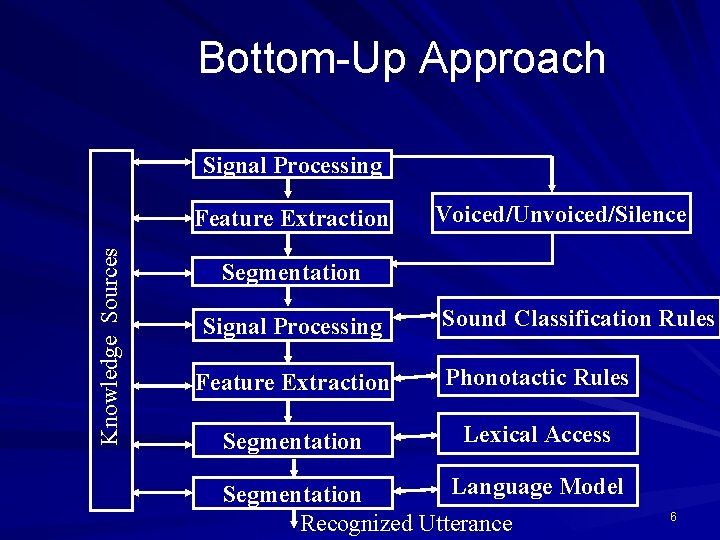

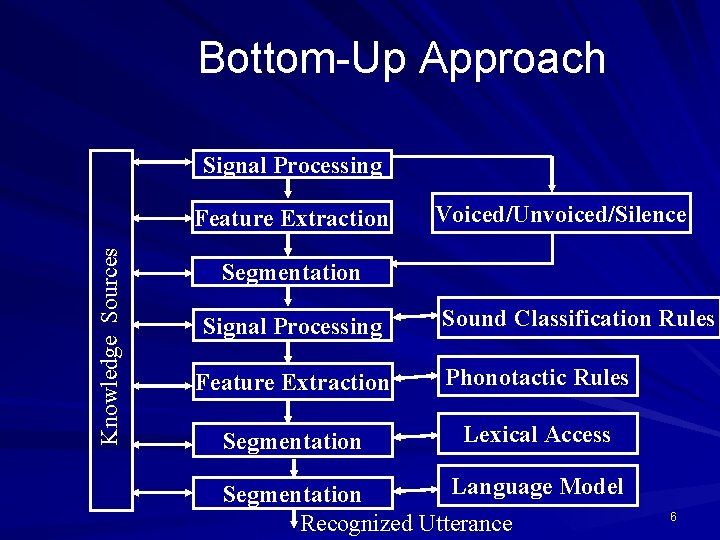

Bottom-Up Approach
[Figure: processing stages (Signal Processing, Feature Extraction, Segmentation, repeated across levels) ending in the Recognized Utterance, supported by knowledge sources: Voiced/Unvoiced/Silence, Sound Classification Rules, Phonotactic Rules, Lexical Access, Language Model]

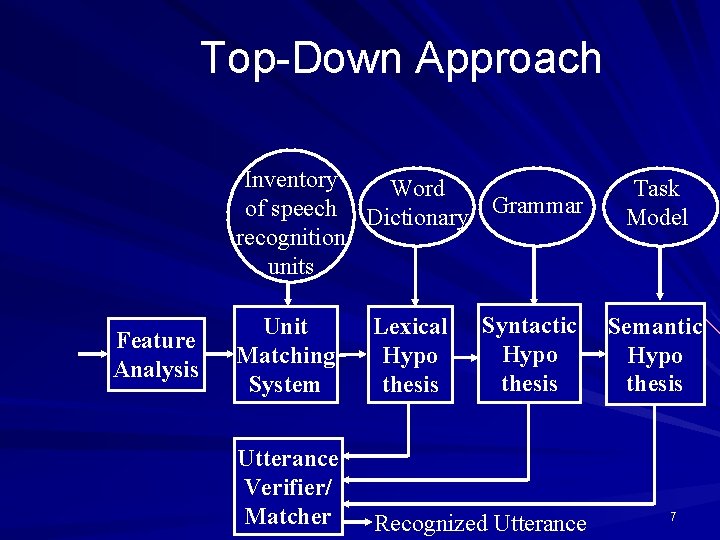

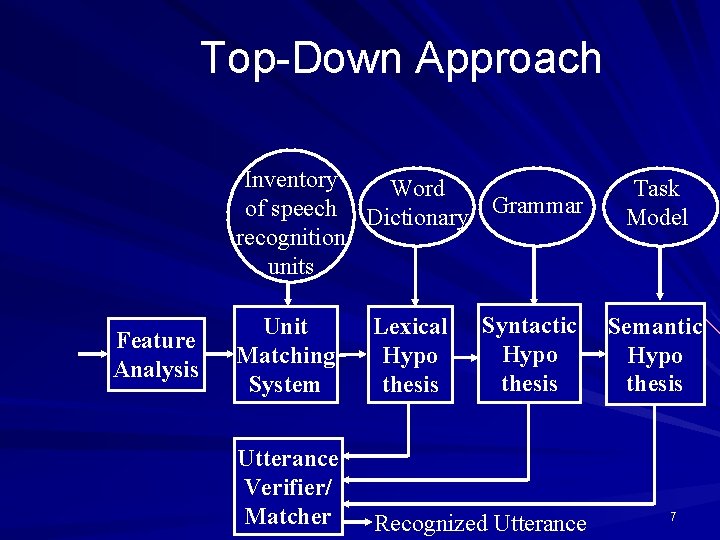

Top-Down Approach
[Figure: Feature Analysis, Unit Matching System, Lexical Hypothesis, Syntactic Hypothesis, Semantic Hypothesis, and Utterance Verifier/Matcher producing the Recognized Utterance, using the knowledge sources Inventory of Speech Recognition Units, Word Dictionary, Grammar, and Task Model]

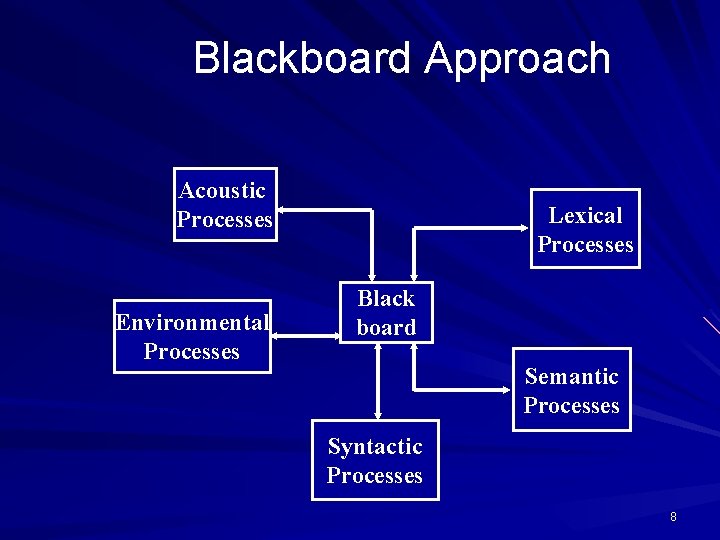

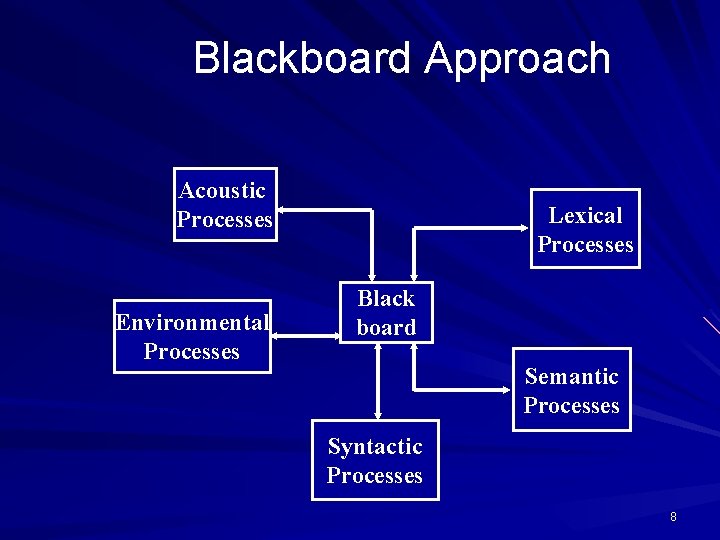

Blackboard Approach
[Figure: a central Blackboard shared by Environmental, Acoustic, Lexical, Syntactic, and Semantic Processes]

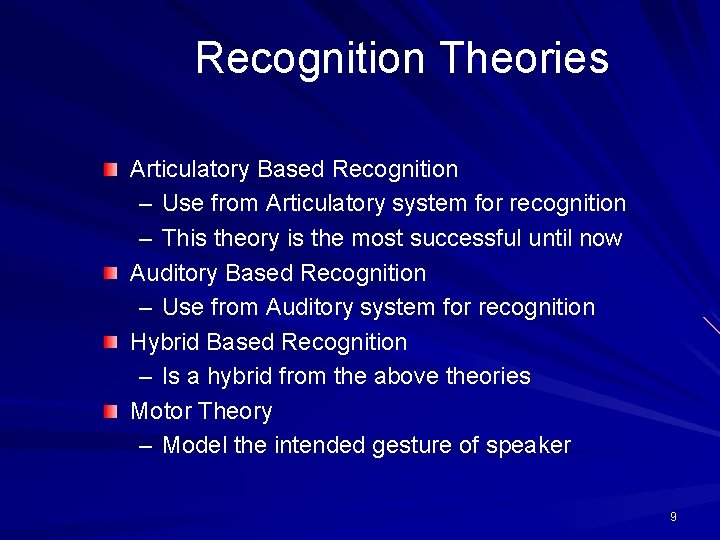

Recognition Theories
- Articulatory-Based Recognition: uses the articulatory system for recognition; this theory has been the most successful so far
- Auditory-Based Recognition: uses the auditory system for recognition
- Hybrid-Based Recognition: a hybrid of the above theories
- Motor Theory: models the gesture the speaker intended

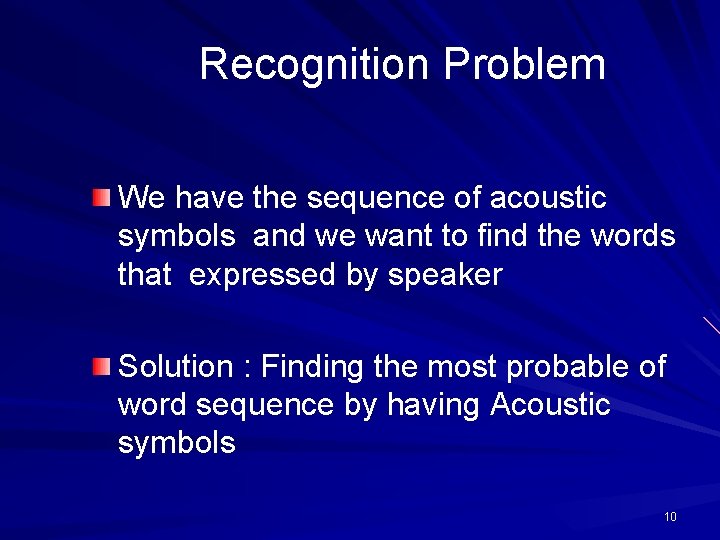

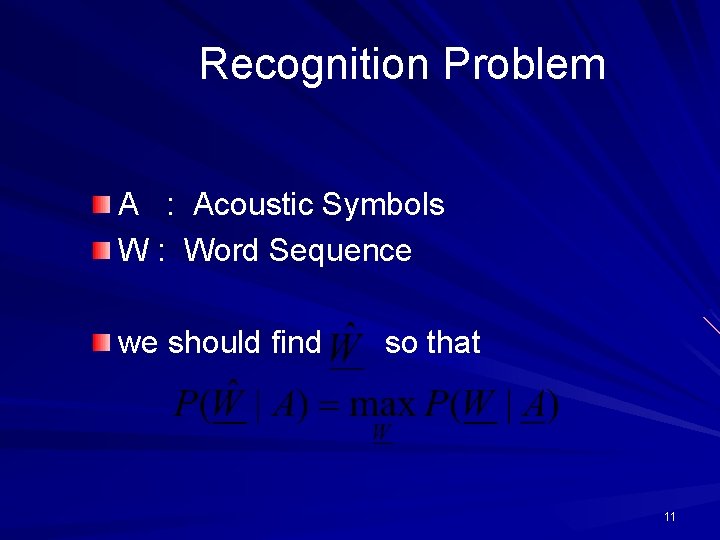

Recognition Problem
We have a sequence of acoustic symbols and want to find the words the speaker uttered.
Solution: find the most probable word sequence given the acoustic symbols.

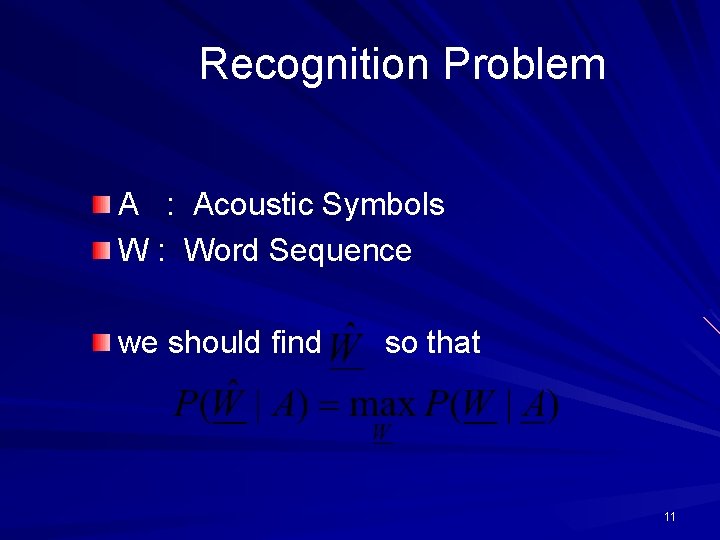

Recognition Problem
A: acoustic symbols
W: word sequence
We should find $\hat{W}$ such that $\hat{W} = \arg\max_W P(W \mid A)$.

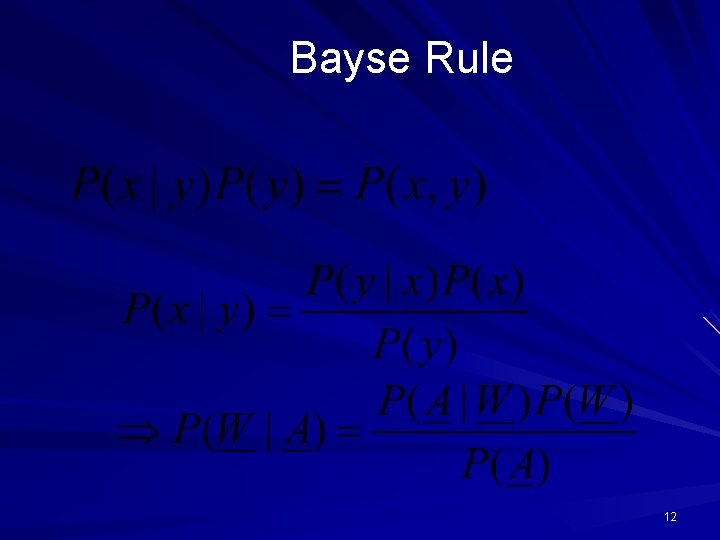

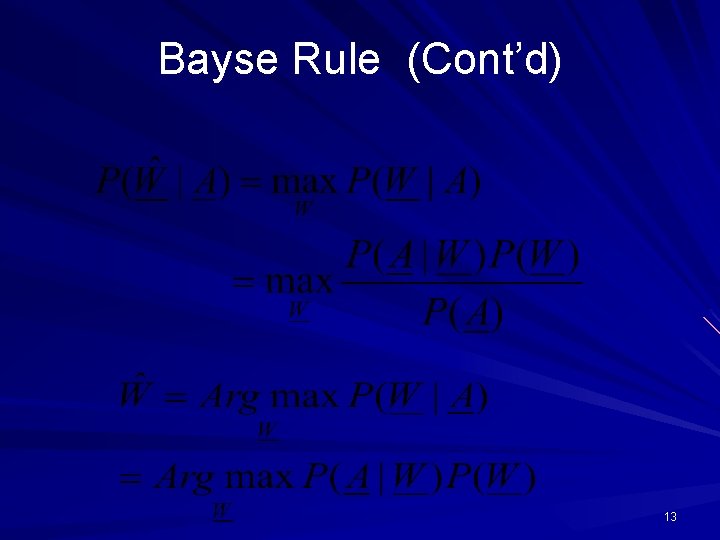

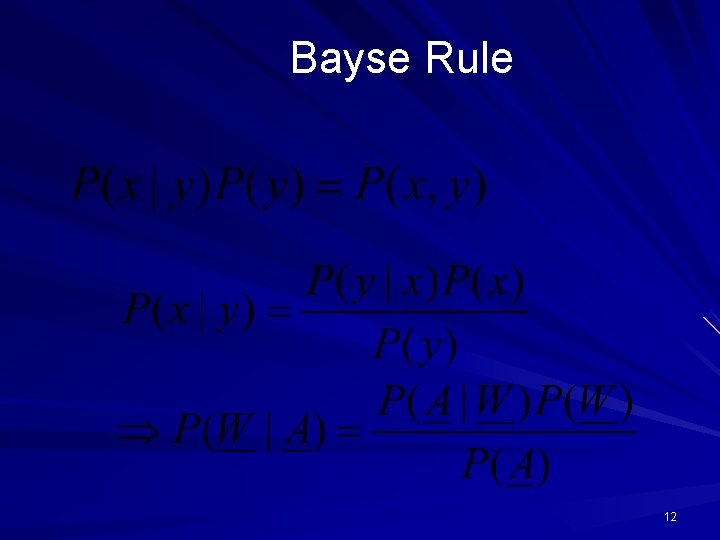

Bayes' Rule

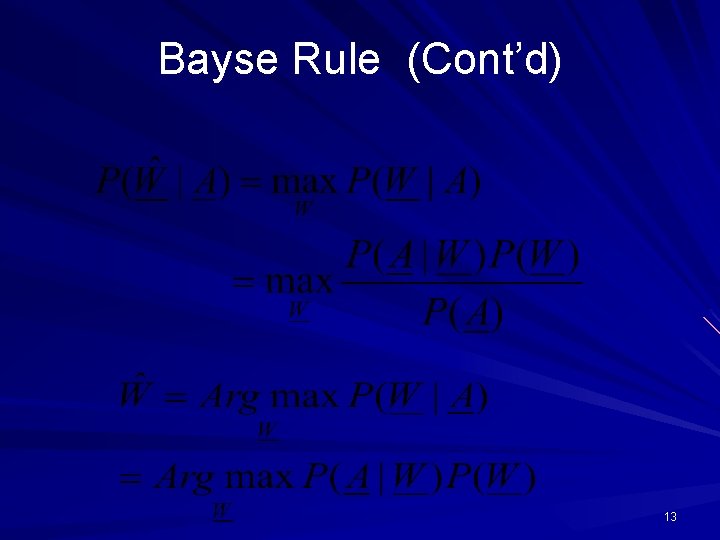

Bayes' Rule (Cont’d)
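The equations on the Bayes' rule slides survive only as figures. Assuming the notation A (acoustic symbols) and W (word sequence) from the recognition problem, the standard derivation runs as follows; P(A) is the same for every candidate W, so it drops out of the maximization:

```latex
\begin{aligned}
P(W \mid A) &= \frac{P(A \mid W)\,P(W)}{P(A)} \\
\hat{W} &= \arg\max_W P(W \mid A) = \arg\max_W \frac{P(A \mid W)\,P(W)}{P(A)} = \arg\max_W P(A \mid W)\,P(W)
\end{aligned}
```

Here P(A|W) is the acoustic term (the quantity the P(A|W) computing approaches estimate) and P(W) is the language-model term.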

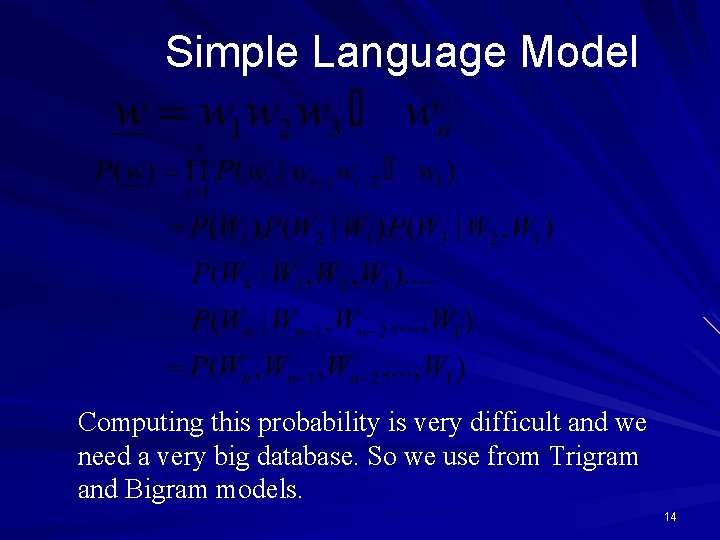

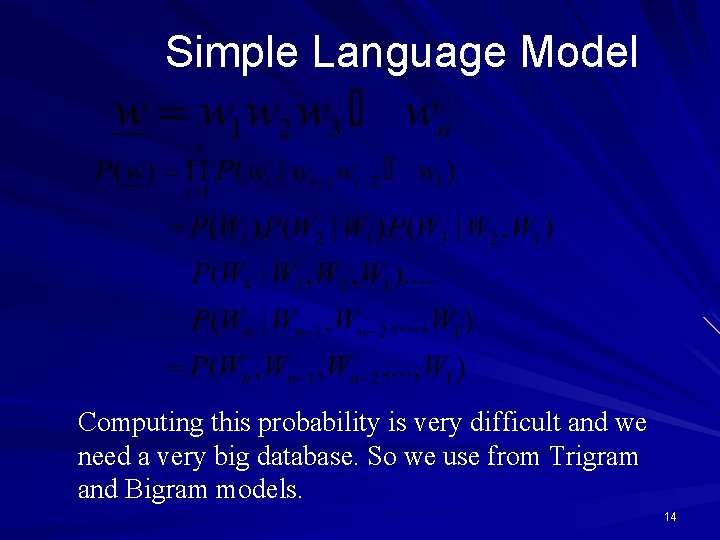

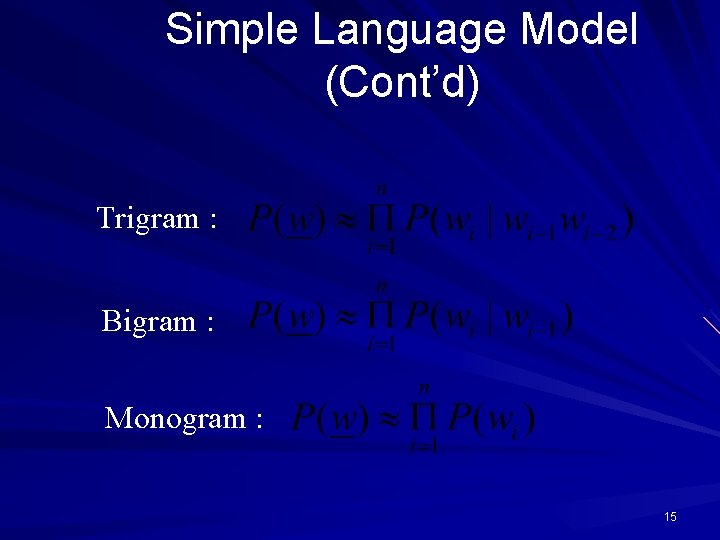

Simple Language Model
Computing this probability, P(W), directly is very difficult and would require a very large database, so we use trigram and bigram models instead.
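To see why P(W) is hard to compute directly, expand it with the chain rule of probability, assuming W = w_1 w_2 ... w_n. Every word is conditioned on its entire history, so the number of distinct histories to estimate grows with sentence length; an n-gram model truncates that history to the last one or two words.

```latex
P(W) = P(w_1, w_2, \ldots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \ldots, w_{i-1})
```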

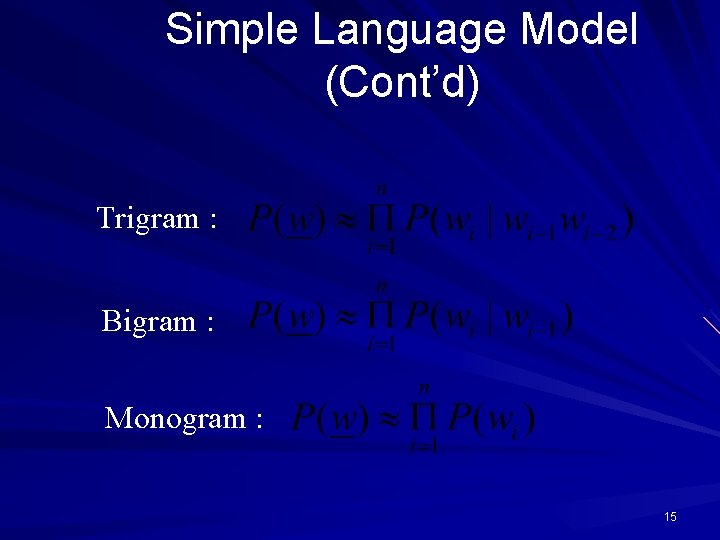

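Simple Language Model (Cont’d)
Trigram: $P(W) \approx \prod_{i} P(w_i \mid w_{i-2}, w_{i-1})$
Bigram: $P(W) \approx \prod_{i} P(w_i \mid w_{i-1})$
Monogram (unigram): $P(W) \approx \prod_{i} P(w_i)$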

Simple Language Model (Cont’d)
Computing method (relative frequency):
$P(w_3 \mid w_1, w_2) = \dfrac{\text{number of times } w_3 \text{ occurs after } w_1 w_2}{\text{total number of occurrences of } w_1 w_2}$
Ad Hoc Method:

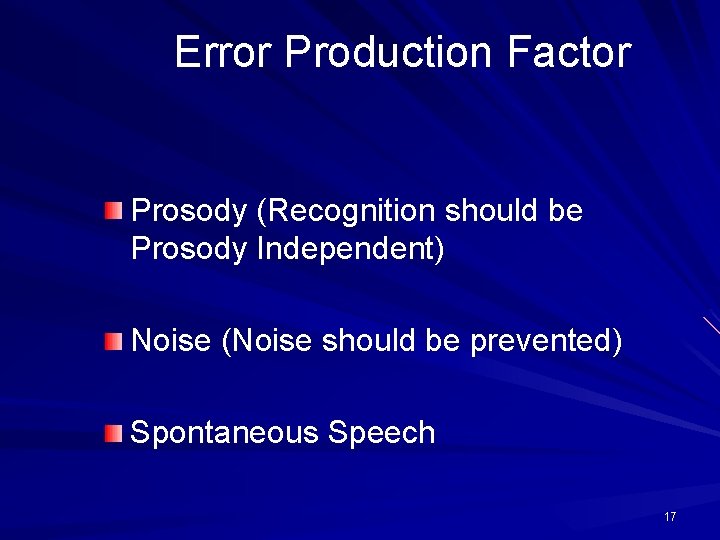
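The relative-frequency formula for P(w_3 | w_1 w_2) can be sketched in Python. This is a minimal sketch under stated assumptions, not the slides' exact procedure: the helper names `train_trigram`, `trigram_prob`, and `sentence_prob` are hypothetical, the `<s>`/`</s>` sentence-boundary padding is an assumption, and there is no smoothing, so any unseen trigram gets probability 0. Histories are counted only where a third word follows, so the estimates for a given history sum to 1.

```python
from collections import Counter

def train_trigram(sentences):
    """Count trigrams C(w1 w2 w3) and their histories C(w1 w2) in tokenized sentences."""
    histories = Counter()  # C(w1 w2), counted only where a third word follows
    trigrams = Counter()   # C(w1 w2 w3)
    for words in sentences:
        for w1, w2, w3 in zip(words, words[1:], words[2:]):
            histories[(w1, w2)] += 1
            trigrams[(w1, w2, w3)] += 1
    return histories, trigrams

def trigram_prob(w1, w2, w3, histories, trigrams):
    """Relative frequency P(w3 | w1 w2) = C(w1 w2 w3) / C(w1 w2); no smoothing."""
    h = histories[(w1, w2)]
    return trigrams[(w1, w2, w3)] / h if h else 0.0

def sentence_prob(words, histories, trigrams):
    """P(W) under the trigram approximation: product of P(w_i | w_{i-2} w_{i-1})."""
    p = 1.0
    for w1, w2, w3 in zip(words, words[1:], words[2:]):
        p *= trigram_prob(w1, w2, w3, histories, trigrams)
    return p

# Toy corpus (hypothetical); "<s>" and "</s>" are assumed sentence-boundary tokens.
corpus = [s.split() for s in [
    "<s> <s> call home now </s>",
    "<s> <s> call home later </s>",
    "<s> <s> call office now </s>",
]]
hist, tri = train_trigram(corpus)
print(trigram_prob("call", "home", "now", hist, tri))  # 0.5
```

"call home" occurs twice with a following word and is followed by "now" once, hence 0.5.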

Error-Producing Factors
- Prosody (recognition should be prosody-independent)
- Noise (noise should be prevented)
- Spontaneous speech

P(A|W) Computing Approaches
- Dynamic Time Warping (DTW)
- Hidden Markov Model (HMM)
- Artificial Neural Network (ANN)
- Hybrid Systems

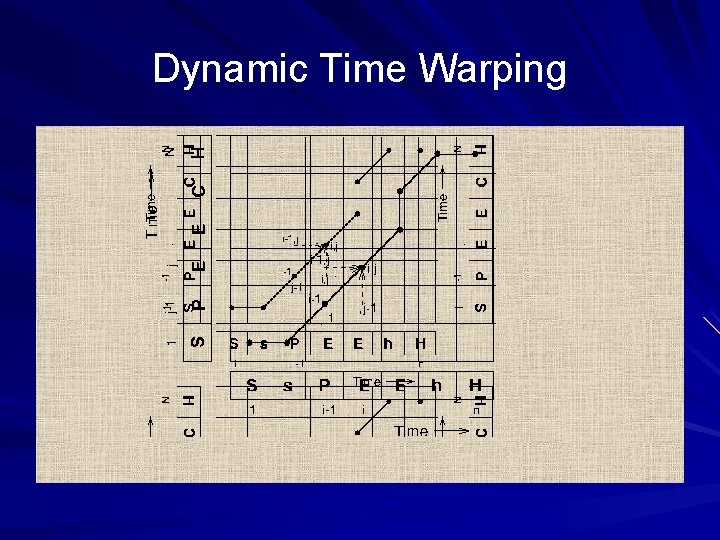

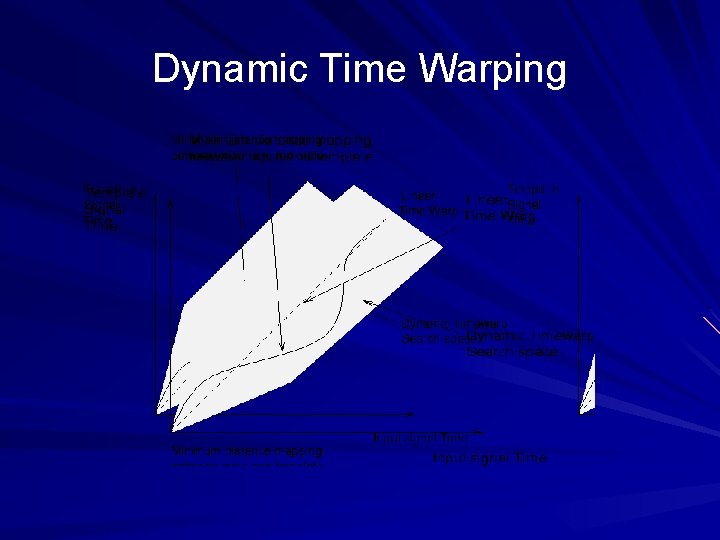

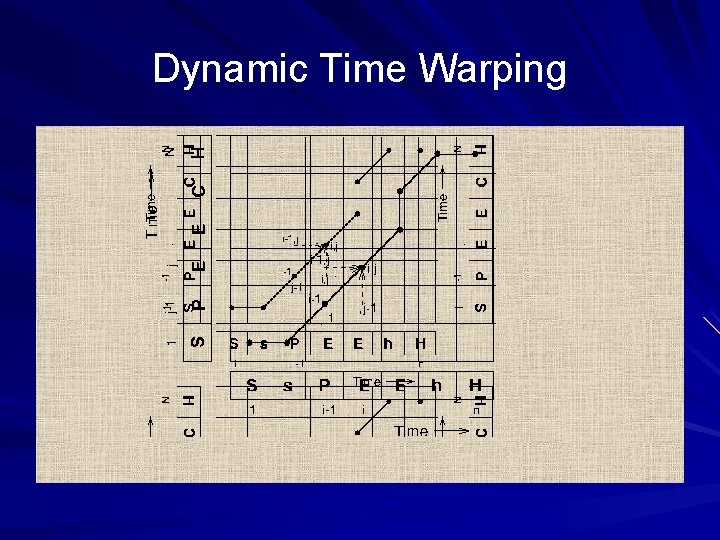

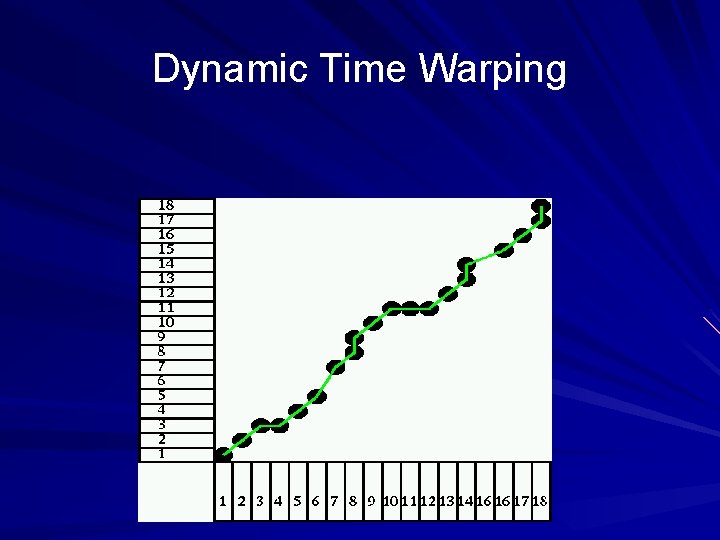

Dynamic Time Warping


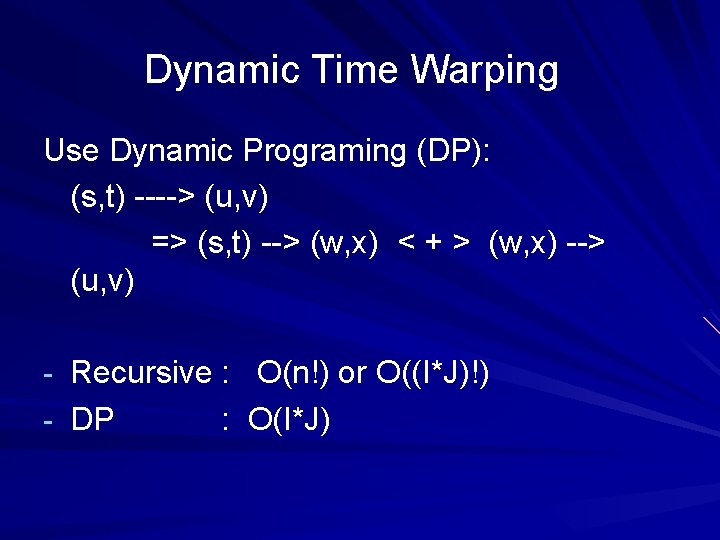

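Dynamic Time Warping
Use Dynamic Programming (DP): by the principle of optimality, if the best path from (s, t) to (u, v) passes through (w, x), it consists of the best path (s, t) → (w, x) followed by the best path (w, x) → (u, v).
- Recursive: O(n!) or O((I*J)!)
- DP: O(I*J)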
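The DP recurrence can be sketched as follows. Assumptions: each frame is a single number standing in for a feature vector, the local distance is the absolute difference, and the path may move horizontally, vertically, or diagonally with equal weight; the slides' own distance and step pattern may differ. The function name `dtw_distance` is hypothetical.

```python
import math

def dtw_distance(x, y, dist=lambda a, b: abs(a - b)):
    """Minimal accumulated cost of aligning sequence x (length I) with y (length J)."""
    I, J = len(x), len(y)
    # D[i][j]: best accumulated cost of aligning x[:i] with y[:j]; row/column 0 are boundary cells
    D = [[math.inf] * (J + 1) for _ in range(I + 1)]
    D[0][0] = 0.0
    for i in range(1, I + 1):
        for j in range(1, J + 1):
            cost = dist(x[i - 1], y[j - 1])
            # Principle of optimality: extend the best path to one of the three predecessor cells
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[I][J]

print(dtw_distance([1, 2, 3], [1, 2, 2, 3]))  # 0.0: the repeated 2 is absorbed by the warp
```

Each of the (I+1)×(J+1) cells is filled once, which is where the O(I*J) cost comes from, instead of enumerating every path recursively.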

Dynamic Time Warping
For I = J = 50, O(recursive) / O(DP) ≈ 1.2 × 10^61
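The ratio can be checked numerically, assuming the recursive cost is taken as n! with n = 50 and the DP cost as I*J = 2500 cells (this assumption reproduces the slide's figure):

```python
import math

n = 50
dp_cells = n * n                      # O(I*J) with I = J = n
recursive_paths = math.factorial(n)   # O(n!) for the exhaustive recursion
ratio = recursive_paths / dp_cells
print(f"{ratio:.2e}")  # 1.22e+61
```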

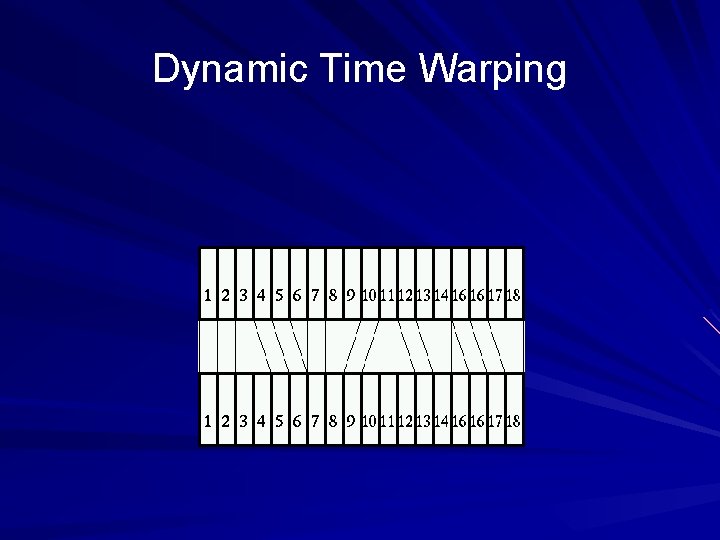

Dynamic Time Warping
Details of DTW:
- Distance
- Selecting Same Frames

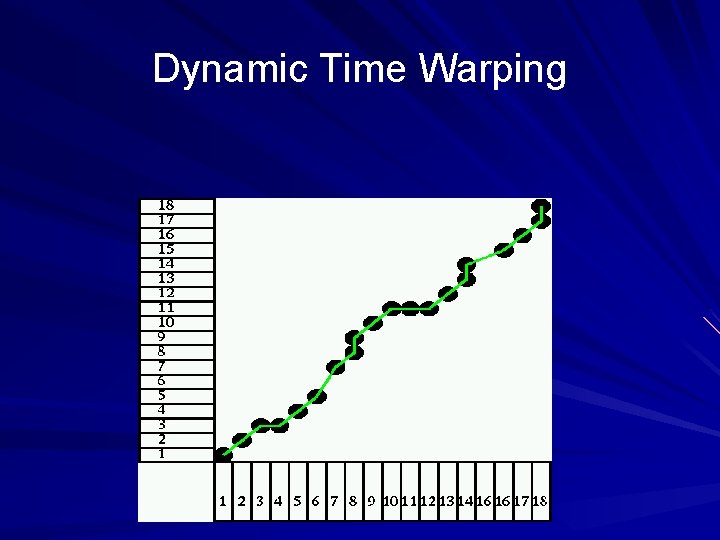

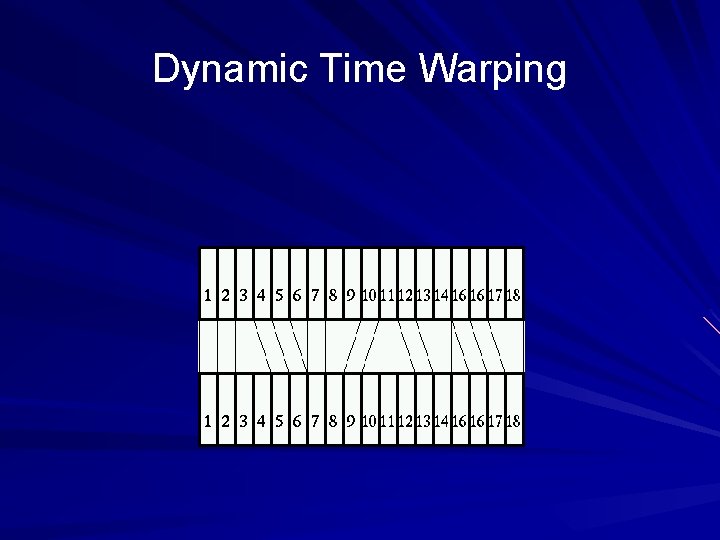

Dynamic Time Warping


Dynamic Time Warping
Search Limitations:
- First & End Interval
- Global Limitation
- Local Limitation

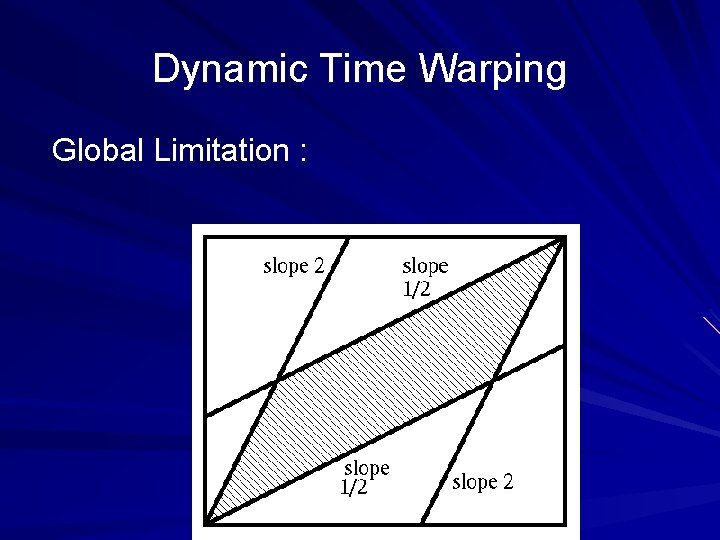

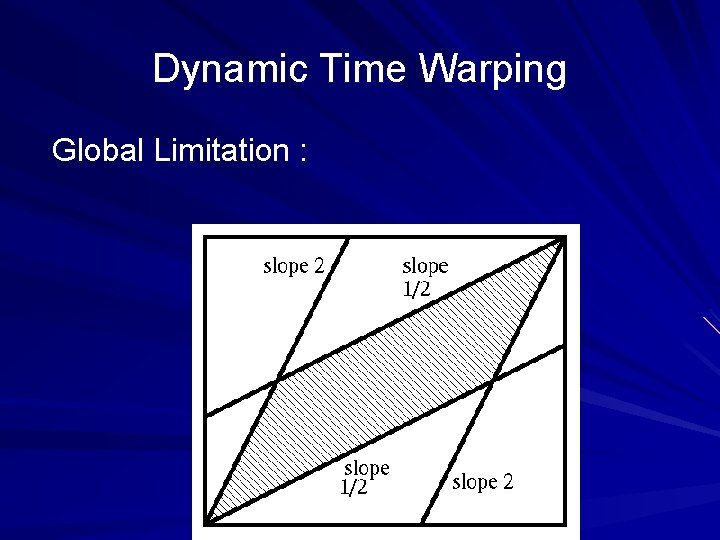

Dynamic Time Warping
Global Limitation:
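The slide's global limitation is shown only as a figure. One widely used global constraint is the Sakoe-Chiba band, which evaluates only cells with |i - j| <= r. The sketch below adds it to the standard DTW recurrence, assuming scalar frames, absolute-difference distance, and a hypothetical window parameter `r`; it illustrates the idea and is not necessarily the constraint drawn on the slide.

```python
import math

def dtw_band(x, y, r, dist=lambda a, b: abs(a - b)):
    """DTW cost restricted to a Sakoe-Chiba band of half-width r around the diagonal."""
    I, J = len(x), len(y)
    D = [[math.inf] * (J + 1) for _ in range(I + 1)]
    D[0][0] = 0.0
    for i in range(1, I + 1):
        # Only cells with |i - j| <= r are evaluated; everything else stays infinite
        for j in range(max(1, i - r), min(J, i + r) + 1):
            cost = dist(x[i - 1], y[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[I][J]

print(dtw_band([1, 2, 3], [1, 2, 2, 3], r=1))  # 0.0
```

If the two lengths differ by more than r, the end point lies outside the band and the result is infinite, so r must be at least |I - J|. The band also cuts the work from I*J cells to roughly I*(2r+1).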

Dynamic Time Warping
Local Limitation:

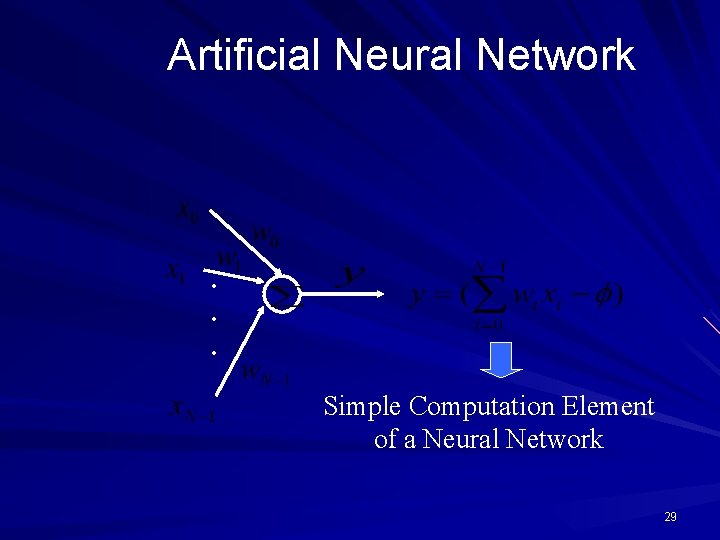

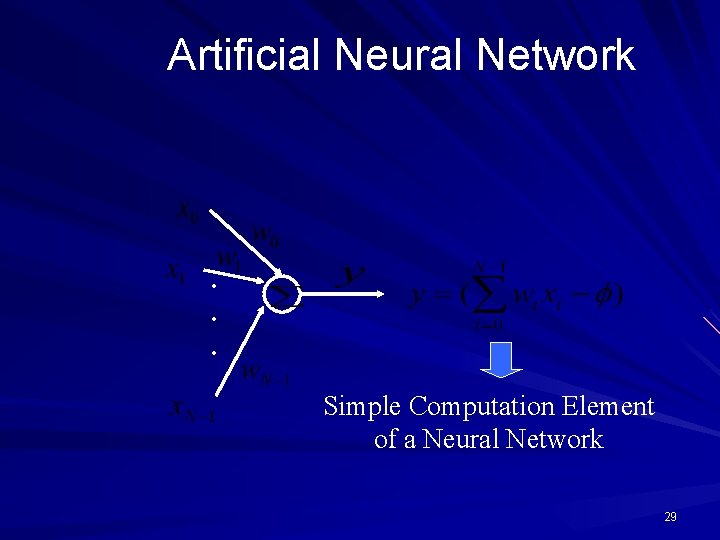
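Local limitations restrict which predecessor cells a path may come from. As a hypothetical example (the slide's exact pattern is only in the figure), the sketch below allows only the steps (1, 1), (1, 2), and (2, 1), which keeps the local slope between 1/2 and 2 and forbids purely horizontal or vertical moves. Scalar frames, absolute-difference distance, and the names `STEPS` and `dtw_local` are assumptions.

```python
import math

# Hypothetical local step set: every move advances both axes, slope limited to [1/2, 2]
STEPS = [(1, 1), (1, 2), (2, 1)]

def dtw_local(x, y, dist=lambda a, b: abs(a - b), steps=STEPS):
    """DTW cost where each cell may only be reached from the allowed predecessor steps."""
    I, J = len(x), len(y)
    D = [[math.inf] * J for _ in range(I)]
    D[0][0] = dist(x[0], y[0])  # the path must start at the first frame pair
    for i in range(I):
        for j in range(J):
            if i == 0 and j == 0:
                continue
            best = min((D[i - di][j - dj] for di, dj in steps
                        if i - di >= 0 and j - dj >= 0), default=math.inf)
            if best < math.inf:
                D[i][j] = best + dist(x[i], y[j])
    return D[I - 1][J - 1]  # the path must end at the last frame pair

print(dtw_local([1, 2, 3, 4], [1, 2, 3, 4]))  # 0
```

With this pattern a 2-frame sequence cannot be aligned with a 5-frame one at all (the result is infinite), which is the intended effect: the constraint rules out implausibly stretched alignments.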

Artificial Neural Network
[Figure: Simple Computation Element of a Neural Network]
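The simple computation element is commonly described as a weighted sum of its inputs minus a threshold, passed through a nonlinearity. A minimal sketch, assuming a sigmoid nonlinearity (a hard step is another common choice) and hypothetical function names `sigmoid` and `neuron`:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron(x, w, theta, f=sigmoid):
    """Output y = f(sum_i w_i * x_i - theta) of one computation element."""
    z = sum(wi * xi for wi, xi in zip(w, x)) - theta
    return f(z)

print(neuron([1.0, 0.0], [0.5, 0.5], 0.5))  # 0.5, since the weighted sum equals the threshold
```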

Artificial Neural Network (Cont’d)
Neural Network Types:
- Perceptron
- Time Delay Neural Network (TDNN) Computational Element
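A TDNN computational element computes an ordinary weighted sum, but over the current feature frame and a fixed number of delayed frames, each delay having its own weights; the same weights are reused at every time step. A minimal sketch, assuming a tanh nonlinearity, a hypothetical function name `tdnn_unit`, and a hypothetical `weights[d][k]` layout (the weight for feature k of the frame delayed by d steps):

```python
import math

def tdnn_unit(frames, weights, bias=0.0):
    """Outputs of one TDNN computational element over a sequence of feature frames.

    frames:  list of feature vectors, one per time step
    weights: weights[d][k] multiplies feature k of the frame delayed by d steps
    """
    delays = len(weights) - 1
    outputs = []
    for t in range(delays, len(frames)):  # start once all delayed frames exist
        z = bias
        for d in range(delays + 1):
            z += sum(w * v for w, v in zip(weights[d], frames[t - d]))
        outputs.append(math.tanh(z))  # the same weights are reused at every t
    return outputs

frames = [[1, 0], [0, 1], [1, 1]]
weights = [[1, 0], [0, 1]]  # one delay: the current frame and the frame before it
print(tdnn_unit(frames, weights))  # [0.0, 0.964...] (the second value is tanh(2))
```

Because the weights are shared across time, the unit responds to the same local pattern wherever it occurs in the utterance.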

Artificial Neural Network (Cont’d)
Single Layer Perceptron

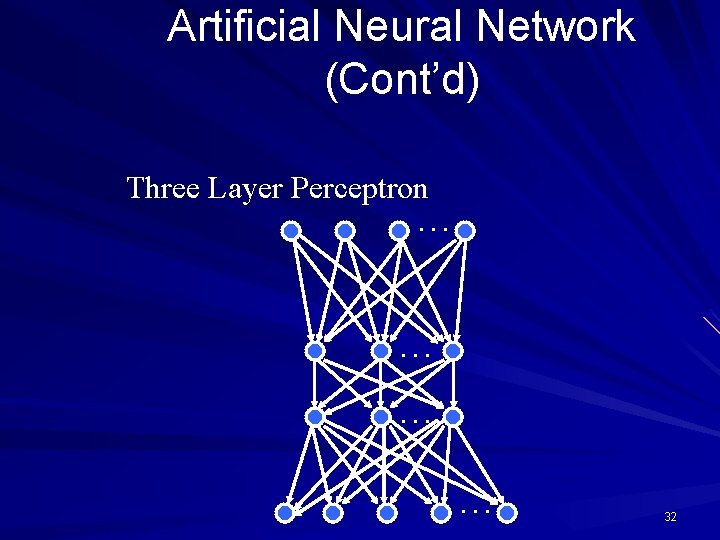

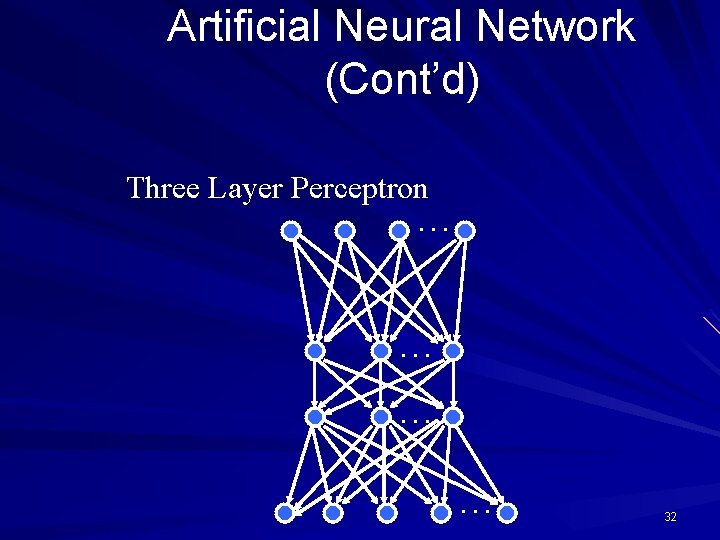
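A single-layer perceptron can be trained with the classic perceptron learning rule. The sketch below, with hypothetical names `train_perceptron` and `predict`, learns the logical AND function; the step activation, learning rate 1.0, and epoch count are assumptions chosen for illustration.

```python
def predict(w, b, x):
    """Step activation: 1 if the weighted sum plus bias is positive, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

def train_perceptron(samples, lr=1.0, epochs=20):
    """Perceptron learning rule: w <- w + lr * (target - y) * x, b <- b + lr * (target - y)."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, target in samples:
            err = target - predict(w, b, x)
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b

AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]
```

A single-layer perceptron can only learn linearly separable functions such as AND; it cannot learn XOR, which is what motivates networks with hidden layers.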

Artificial Neural Network (Cont’d)
Three Layer Perceptron

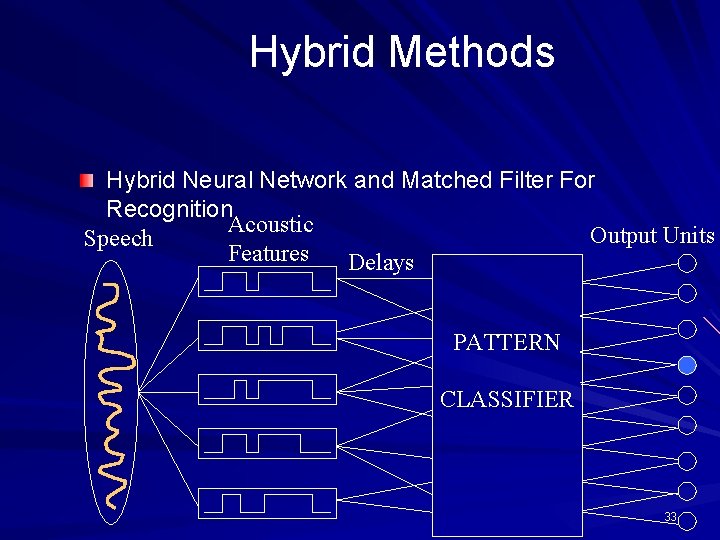

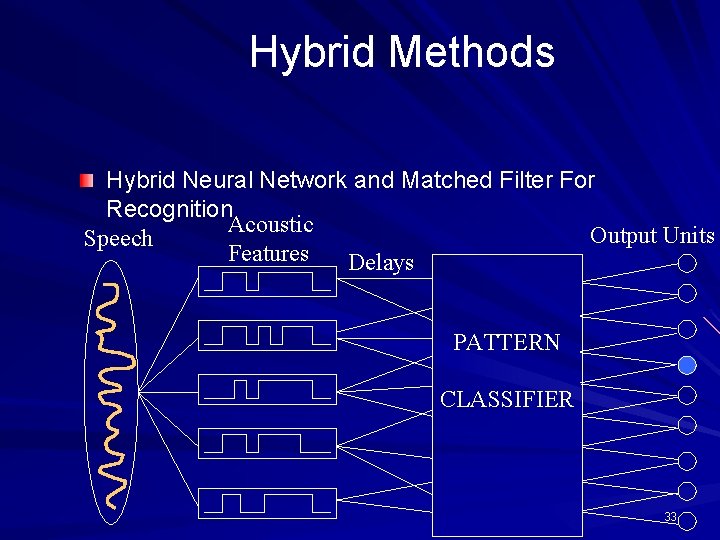
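With a hidden layer, a perceptron network can represent functions a single layer cannot. The sketch below is a forward pass through a three-layer perceptron (input, hidden, output) with step units and hand-picked weights that compute XOR: one hidden unit acts as OR, the other as AND, and the output fires for "OR and not AND". The weights and the names `layer`, `mlp_forward`, and `XOR_NET` are illustrative assumptions, not trained values from the slides; in practice the weights would be learned, for example with backpropagation.

```python
def step(z):
    return 1 if z > 0 else 0

def layer(x, W, theta):
    """Each row of W holds one unit's input weights; theta holds the unit thresholds."""
    return [step(sum(w * xi for w, xi in zip(row, x)) - t) for row, t in zip(W, theta)]

def mlp_forward(x, layers):
    for W, theta in layers:
        x = layer(x, W, theta)
    return x

XOR_NET = [
    ([[1, 1], [1, 1]], [0.5, 1.5]),  # hidden units: OR and AND
    ([[1, -1]], [0.5]),              # output unit: OR and not AND
]

print([mlp_forward(x, XOR_NET)[0] for x in ([0, 0], [0, 1], [1, 0], [1, 1])])  # [0, 1, 1, 0]
```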

Hybrid Methods
Hybrid Neural Network and Matched Filter for Recognition
[Figure: Speech Features, Delays, Acoustic Output Units, Pattern Classifier]

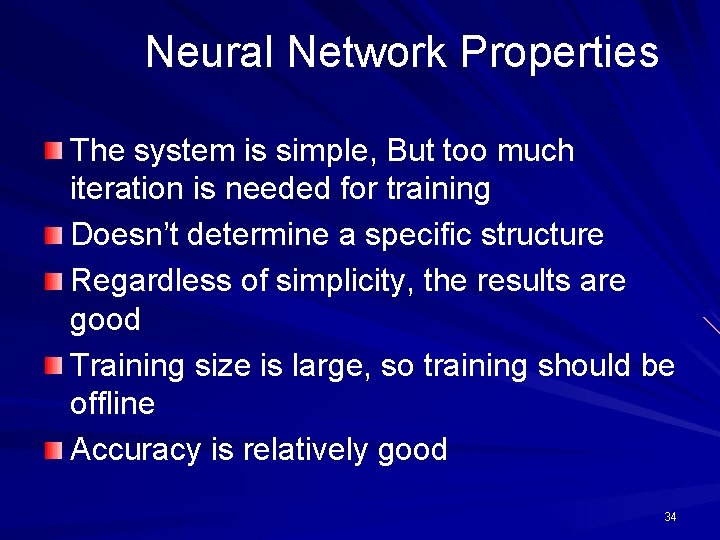

Neural Network Properties
- The system is simple, but training needs many iterations
- It does not determine a specific structure
- Despite its simplicity, the results are good
- The training set is large, so training should be done offline
- Accuracy is relatively good