7 Speech Recognition Concepts Speech Recognition Approaches Recognition

- Slides: 60

7 - Speech Recognition
- Speech Recognition Concepts
- Speech Recognition Approaches
- Recognition Theories
- Bayes Rule
- Simple Language Model
- P(A|W) Network Types

7 - Speech Recognition (Cont’d)
- Calculating Approaches (Neural, HMM)
- HMM Components
- Three Basic HMM Problems
- Viterbi Algorithm
- State Duration Modeling
- Training in HMM

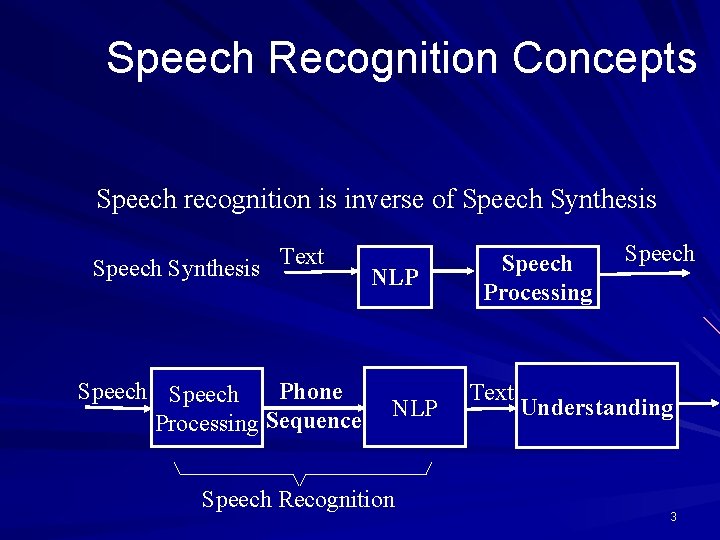

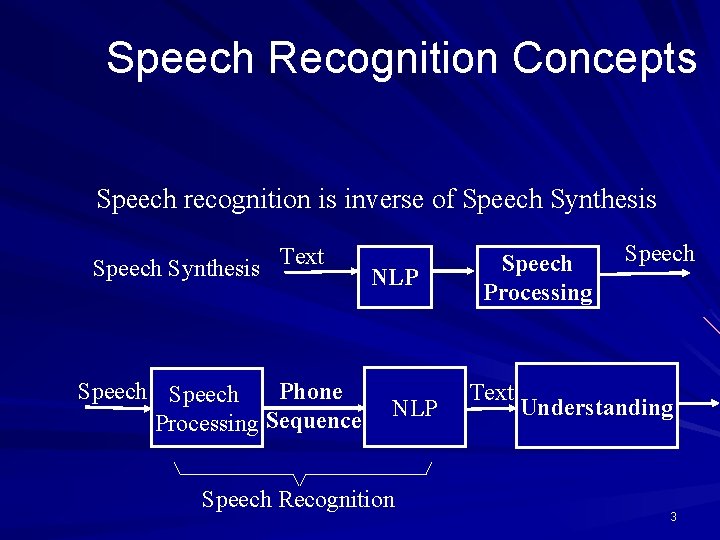

Speech Recognition Concepts: speech recognition is the inverse of speech synthesis. (Figure labels: Text, NLP, Phone Sequence, Speech Processing, Speech, Speech Recognition, Speech Understanding.)

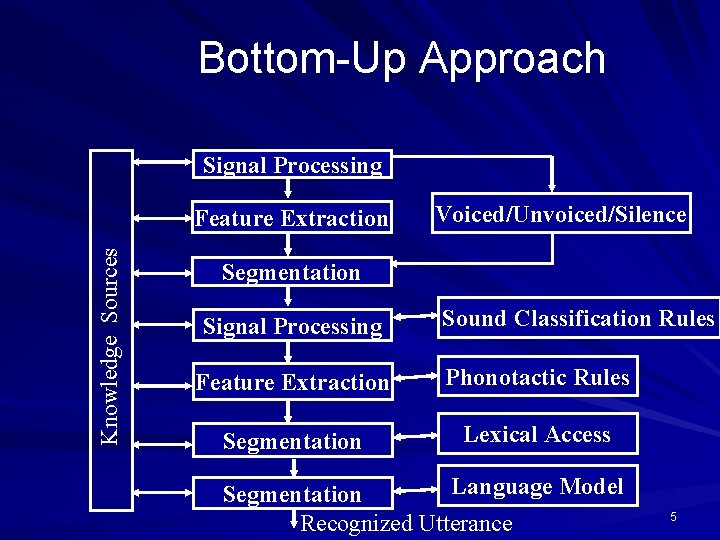

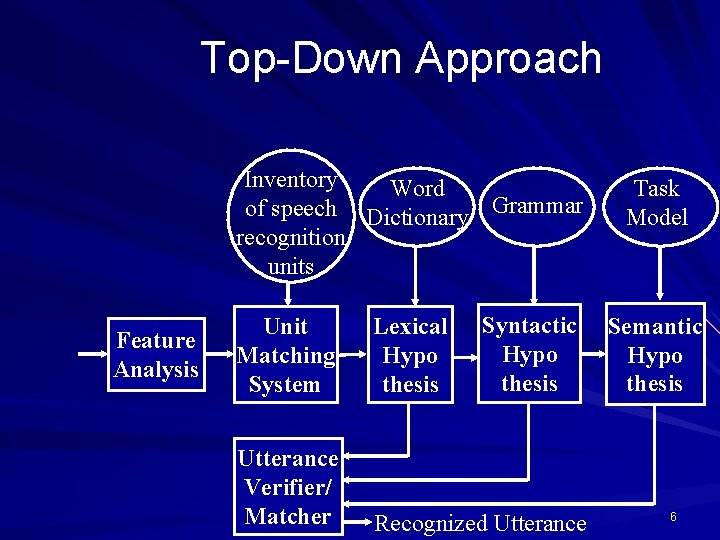

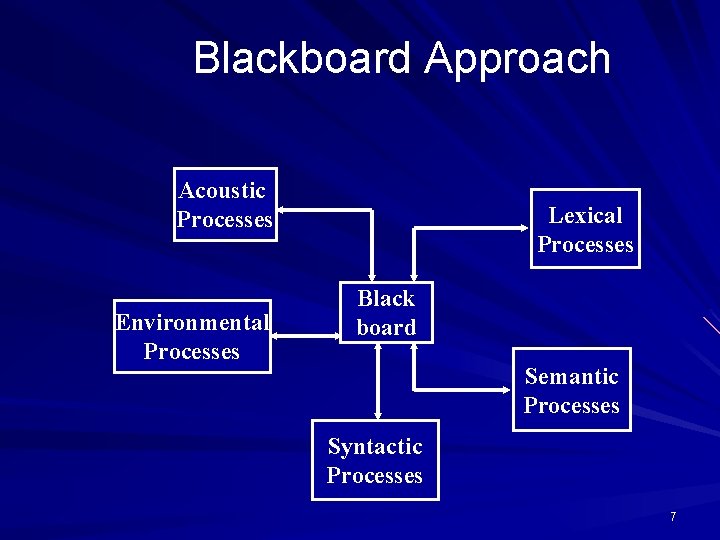

Speech Recognition Approaches:
- Bottom-Up Approach
- Top-Down Approach
- Blackboard Approach

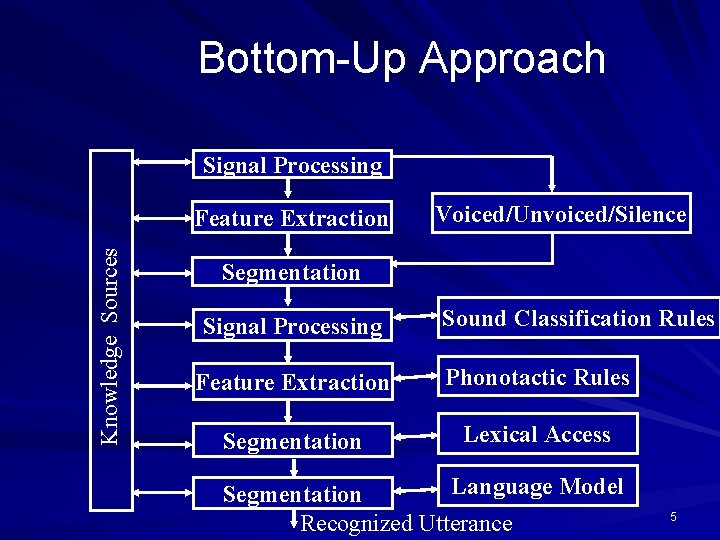

Bottom-Up Approach (figure): knowledge sources (signal processing, feature extraction, voiced/unvoiced/silence, sound classification rules, phonotactic rules, lexical access, language model) are applied in successive stages: signal processing, feature extraction, several segmentation stages, lexical access, and the language model, ending in the recognized utterance.

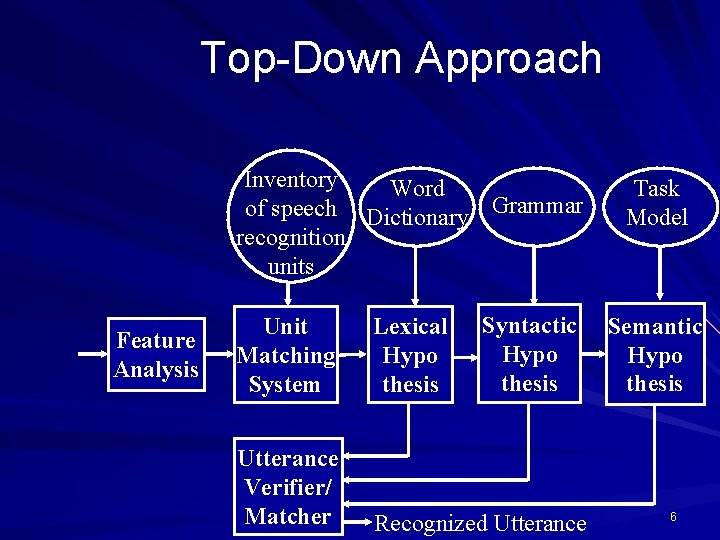

Top-Down Approach (figure): feature analysis feeds a unit matching system that uses an inventory of speech recognition units. Lexical, syntactic, and semantic hypotheses are formed using the word dictionary, the grammar, and the task model, and an utterance verifier/matcher outputs the recognized utterance.

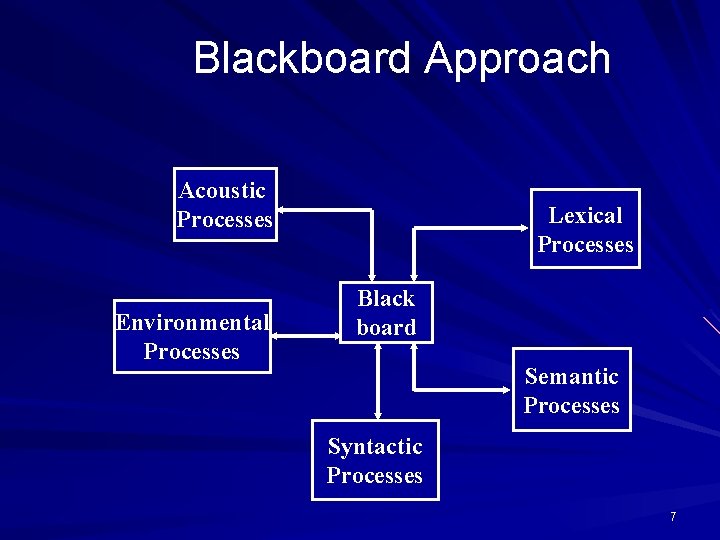

Blackboard Approach (figure): acoustic, environmental, lexical, syntactic, and semantic processes all communicate through a shared blackboard.

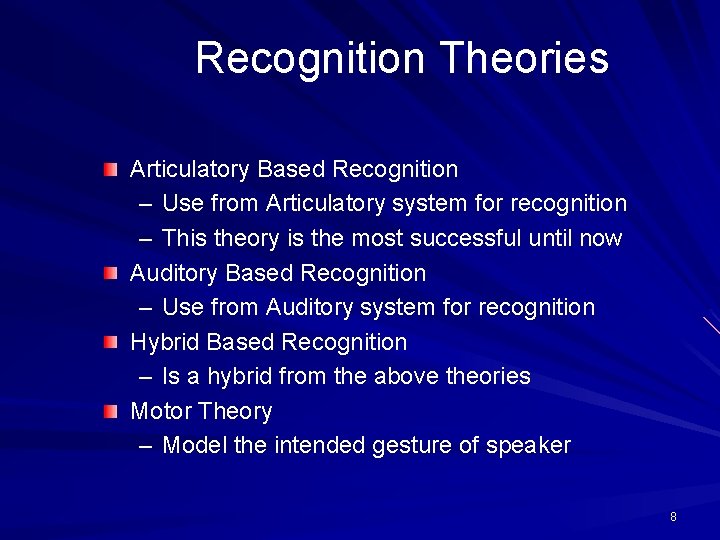

Recognition Theories:
- Articulatory-based recognition: uses the articulatory system for recognition. This theory is the most successful so far.
- Auditory-based recognition: uses the auditory system for recognition.
- Hybrid-based recognition: a hybrid of the above theories.
- Motor theory: models the intended gesture of the speaker.

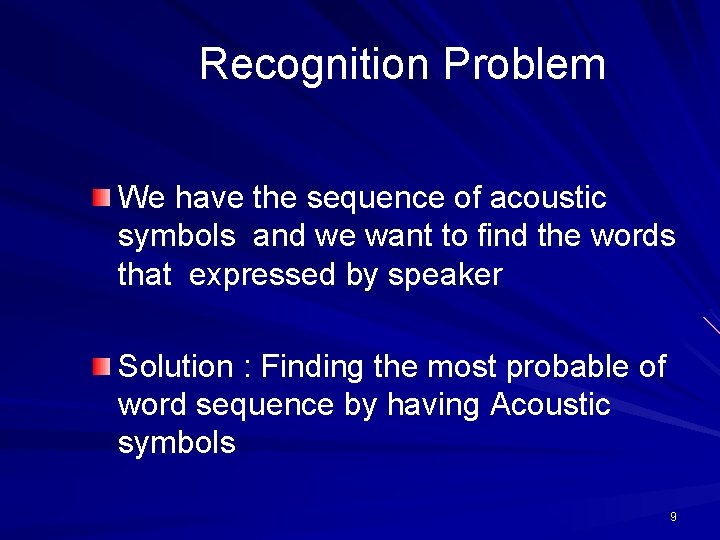

Recognition Problem: given a sequence of acoustic symbols, we want to find the words the speaker uttered. Solution: find the most probable word sequence given the acoustic symbols.

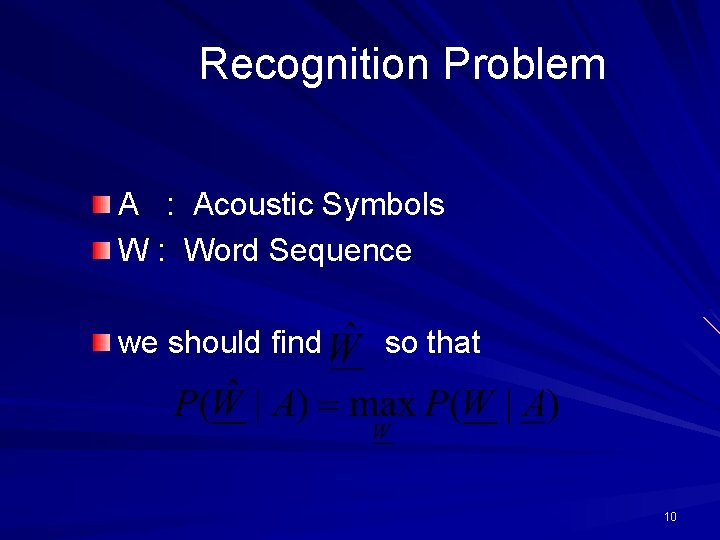

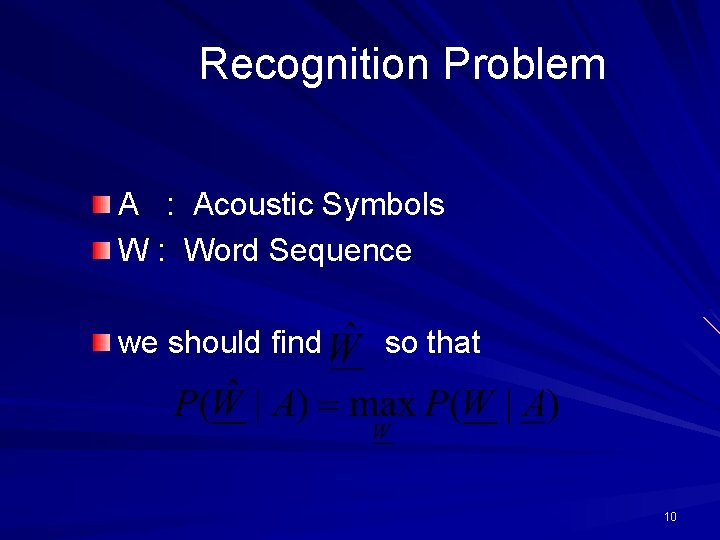

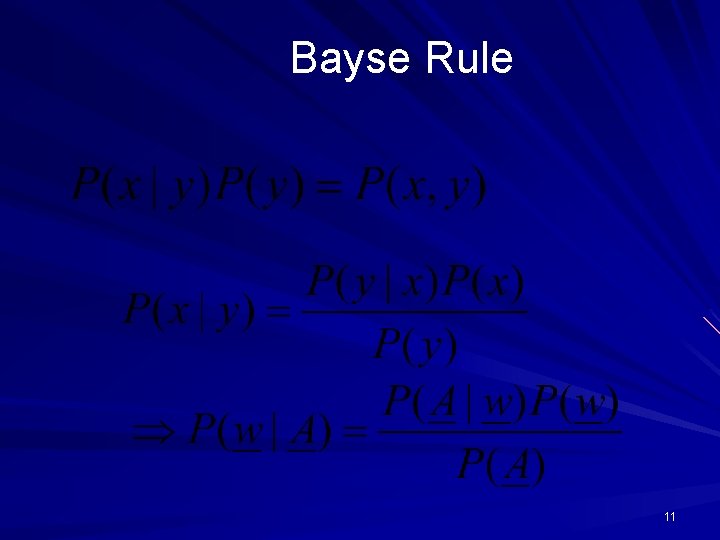

Recognition Problem: $A$ is the sequence of acoustic symbols and $W$ a word sequence. We should find $\hat{W}$ such that $P(\hat{W}\mid A)=\max_{W}P(W\mid A)$.

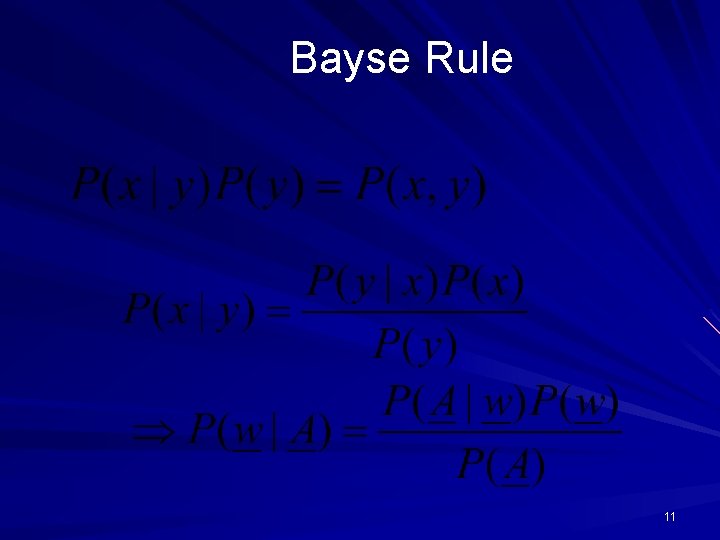

Bayes Rule: $P(W\mid A)=\dfrac{P(A\mid W)\,P(W)}{P(A)}$

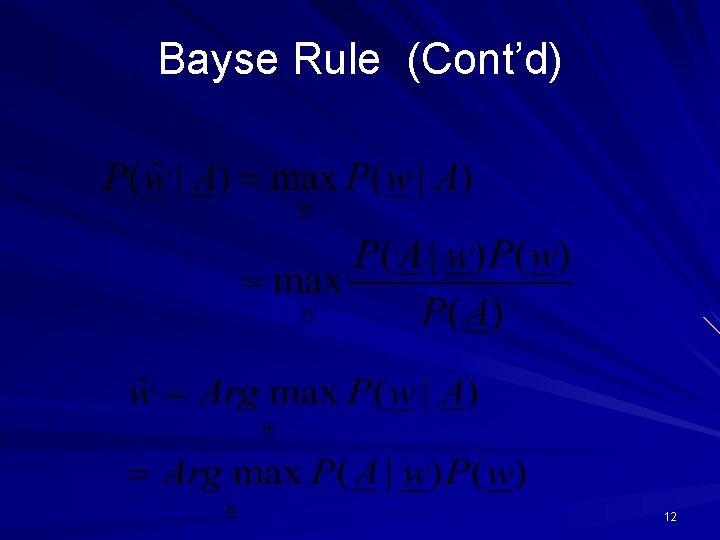

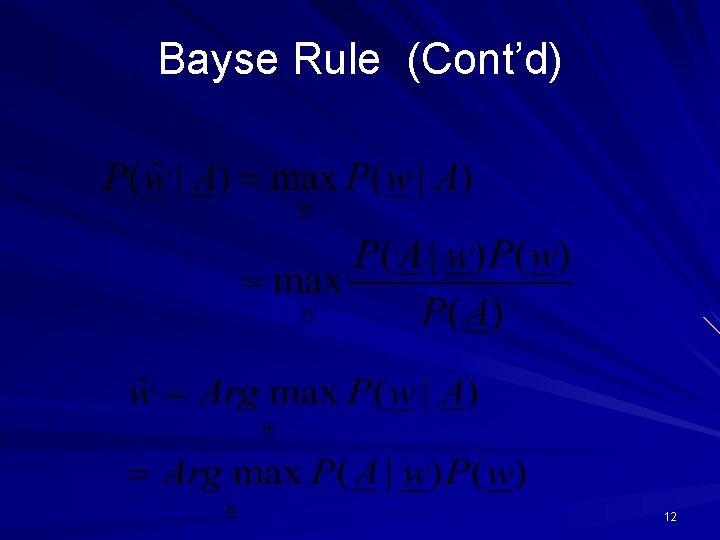

Bayes Rule (Cont’d)

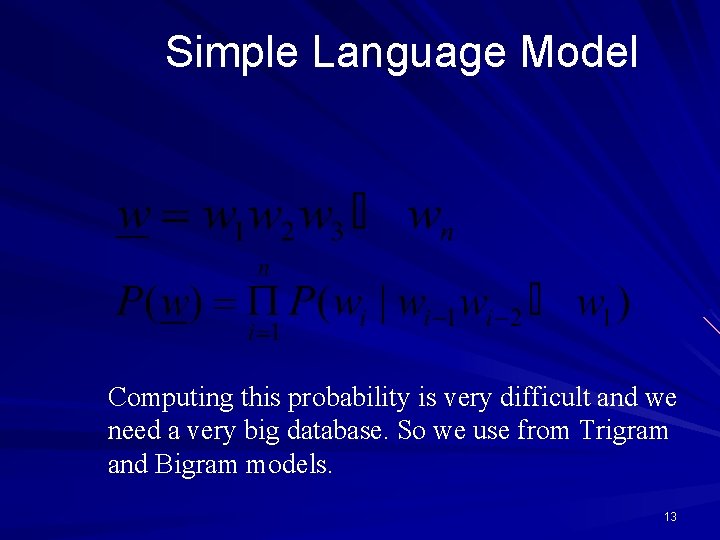

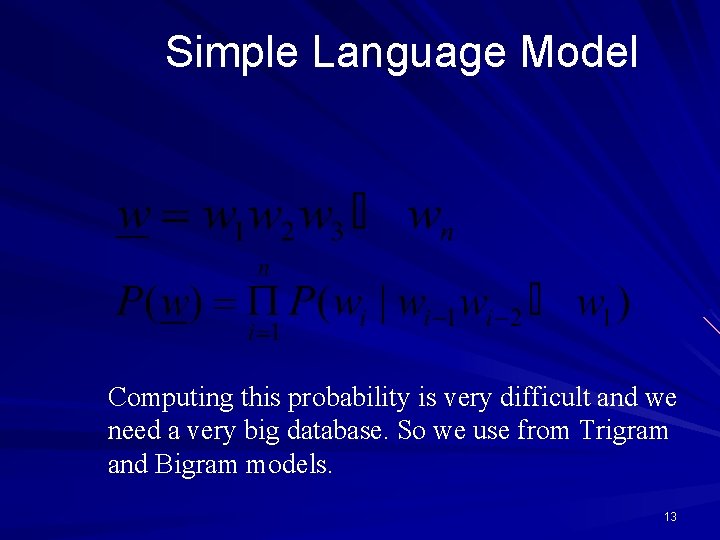
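Because $P(A)$ is the same for every candidate $W$, the Bayes-rule decision reduces to maximizing $P(A\mid W)\,P(W)$. The minimal Python sketch below works in the log domain. It assumes that the acoustic score $\log P(A\mid W)$ and the language-model score $\log P(W)$ for a small, hypothetical list of candidates have already been computed. The candidate phrases and numbers are made up for illustration.

```python
import math


def bayes_decode(candidates):
    """Return the hypothesis W that maximizes log P(A|W) + log P(W).

    `candidates` maps each word sequence W to a pair
    (log P(A|W), log P(W)). P(A) does not depend on W,
    so it is dropped from the argmax.
    """
    return max(candidates, key=lambda w: candidates[w][0] + candidates[w][1])


# Hypothetical scores: (acoustic log-likelihood, language-model log-prior).
scores = {
    "recognize speech": (math.log(0.020), math.log(0.010)),
    "wreck a nice beach": (math.log(0.030), math.log(0.001)),
}
best = bayes_decode(scores)  # the language model overrules the acoustic score
```

Scored on acoustics alone, the second hypothesis would win. Once the prior $P(W)$ is included, the first hypothesis has the higher product ($2\times10^{-4}$ against $3\times10^{-5}$).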

Simple Language Model: computing this probability directly is very difficult and requires a very large database, so trigram and bigram models are used instead.

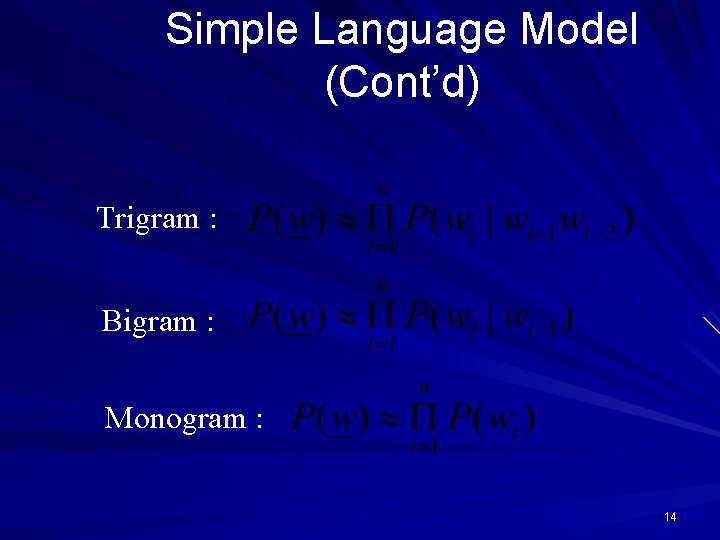

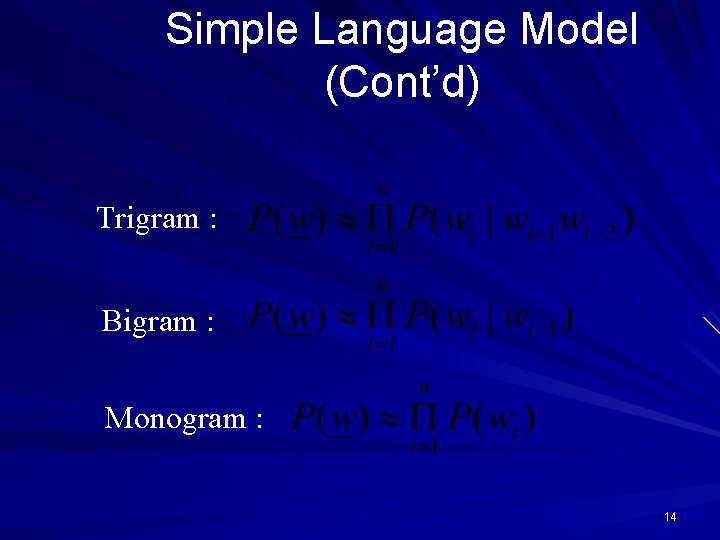

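Simple Language Model (Cont’d):
- Trigram: $P(W)\approx\prod_{i}P(w_i\mid w_{i-2},w_{i-1})$
- Bigram: $P(W)\approx\prod_{i}P(w_i\mid w_{i-1})$
- Monogram (unigram): $P(W)\approx\prod_{i}P(w_i)$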

Simple Language Model (Cont’d): Computing method: $P(w_3\mid w_1,w_2)=\dfrac{\text{number of occurrences of } w_3 \text{ after } w_1 w_2}{\text{total number of occurrences of } w_1 w_2}$. Ad hoc method:

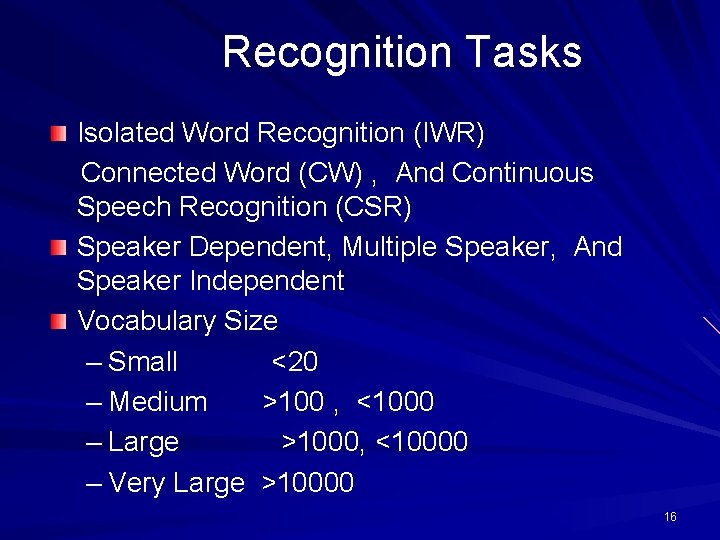

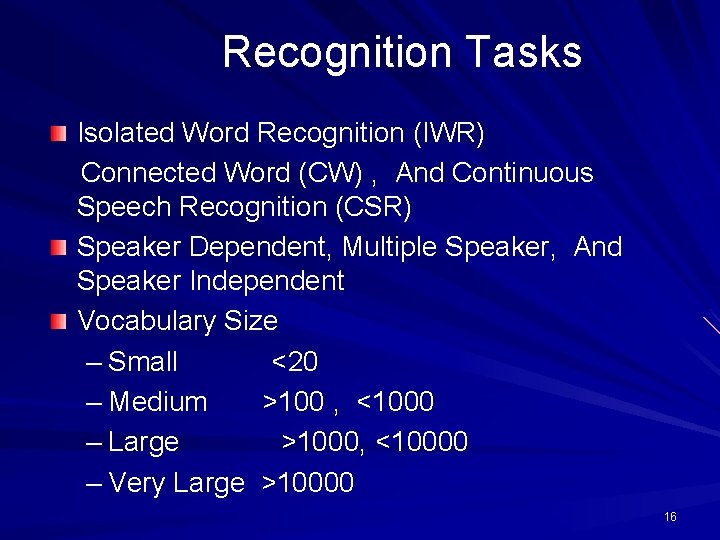
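The counting method for trigrams can be sketched in a few lines of Python. One assumption differs slightly from the slide: the denominator counts only the occurrences of $w_1 w_2$ that are followed by a third word. As a result, the estimates for each history sum to 1 even at the end of the corpus. The toy corpus is hypothetical.

```python
from collections import Counter


def trigram_probabilities(tokens):
    """Maximum-likelihood trigram estimates P(w3 | w1, w2).

    Numerator: how often w3 follows w1 w2.
    Denominator: how often w1 w2 occurs as a trigram history.
    """
    trigrams = Counter(zip(tokens, tokens[1:], tokens[2:]))
    histories = Counter()
    for (w1, w2, _), count in trigrams.items():
        histories[(w1, w2)] += count
    return {(w1, w2, w3): count / histories[(w1, w2)]
            for (w1, w2, w3), count in trigrams.items()}


tokens = "the cat sat on the cat sat down".split()
probs = trigram_probabilities(tokens)
# "cat sat" is followed once by "on" and once by "down", so each gets 0.5.
```

Trigrams that never occur in the corpus get no entry, which means probability zero. This is why the slides also mention an ad hoc method for estimating trigram probabilities.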

Recognition Tasks:
- Isolated word recognition (IWR), connected word (CW), and continuous speech recognition (CSR)
- Speaker dependent, multiple speaker, and speaker independent
- Vocabulary size:
  - Small: <20
  - Medium: >100, <1000
  - Large: >1000, <10000
  - Very large: >10000

Error-Producing Factors:
- Prosody (recognition should be prosody-independent)
- Noise (noise should be prevented)
- Spontaneous speech

P(A|W) Computing Approaches:
- Dynamic Time Warping (DTW)
- Hidden Markov Model (HMM)
- Artificial Neural Network (ANN)
- Hybrid systems

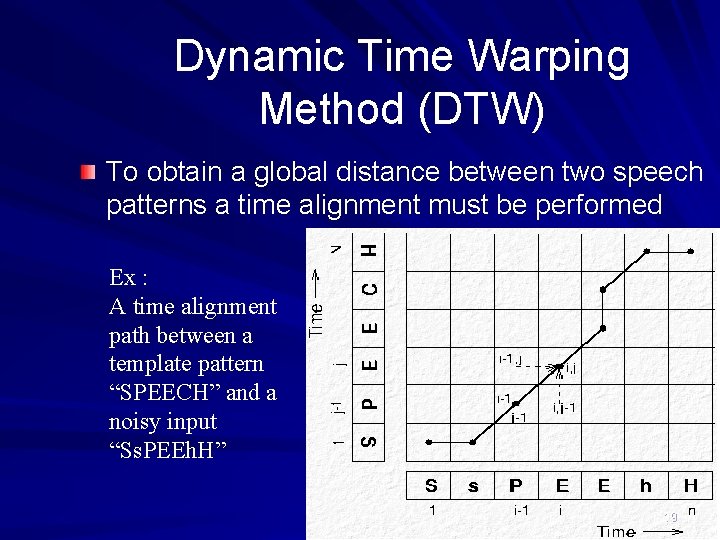

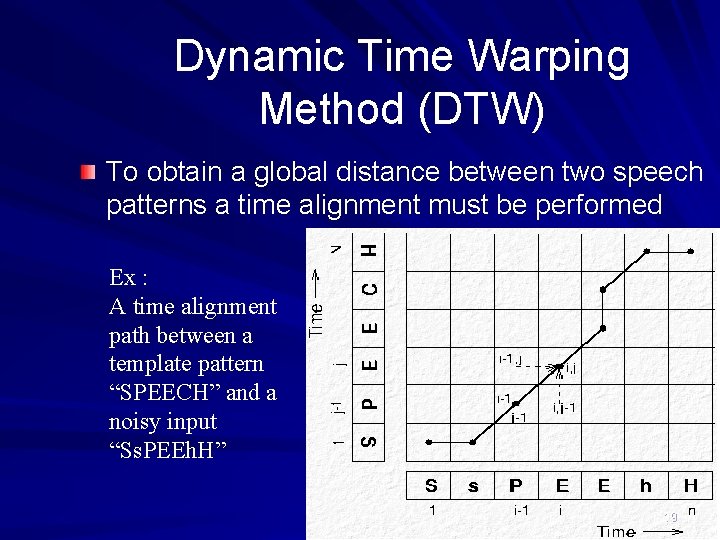

Dynamic Time Warping Method (DTW): to obtain a global distance between two speech patterns, a time alignment must be performed. Example: a time-alignment path between the template pattern “SPEECH” and the noisy input “SsPEEhH”.

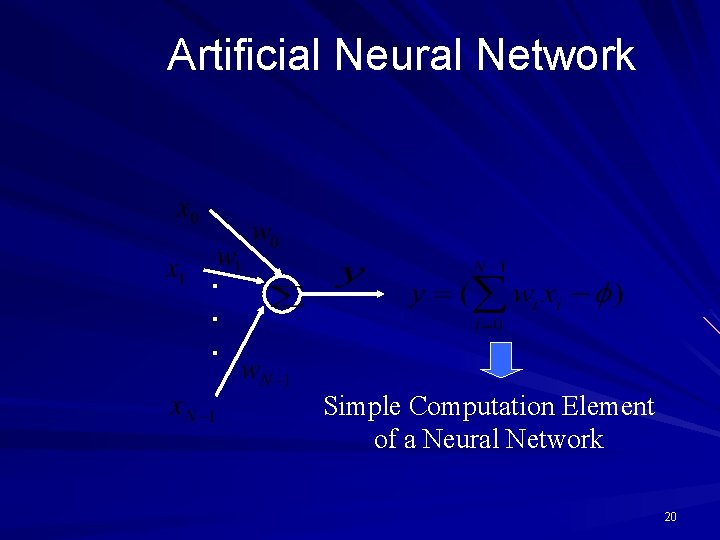

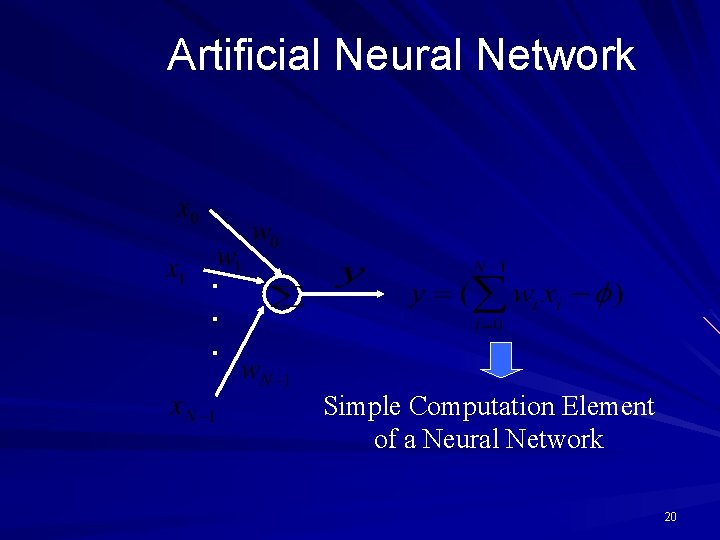
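The sketch below shows the dynamic-programming recursion behind DTW in Python. It treats letters as stand-ins for feature frames and uses a hypothetical 0/1 local distance that ignores case. The global distance is the cheapest sum of local distances over a monotonic alignment path, built from horizontal, vertical, and diagonal steps.

```python
import math


def dtw_distance(template, query, dist):
    """Global DTW distance between two sequences under a local distance `dist`.

    D[i][j] is the cheapest cost of aligning template[:i] with query[:j];
    each cell extends the best of its left, lower, and diagonal neighbours.
    """
    n, m = len(template), len(query)
    D = [[math.inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = dist(template[i - 1], query[j - 1])
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


def letter_distance(a, b):
    """Hypothetical local distance: 0 for the same letter (any case), else 1."""
    return 0.0 if a.lower() == b.lower() else 1.0


d = dtw_distance("SPEECH", "SsPEEhH", letter_distance)
```

Repeated input letters such as "Ss" and "hH" are absorbed by the warping path at no cost. Only the missing "C" in the noisy input adds to the distance, so `d` is 1.0.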

Artificial Neural Network: simple computation element of a neural network (figure).

Artificial Neural Network (Cont’d): neural network types:
- Perceptron
- Time Delay Neural Network (TDNN) computational element

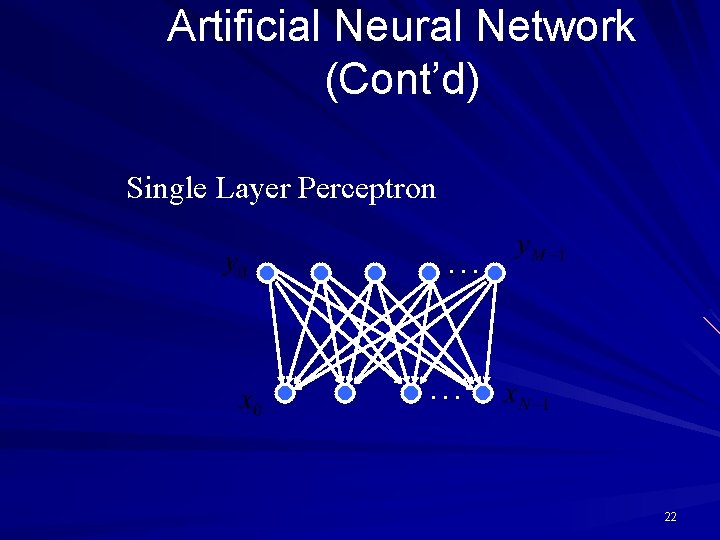

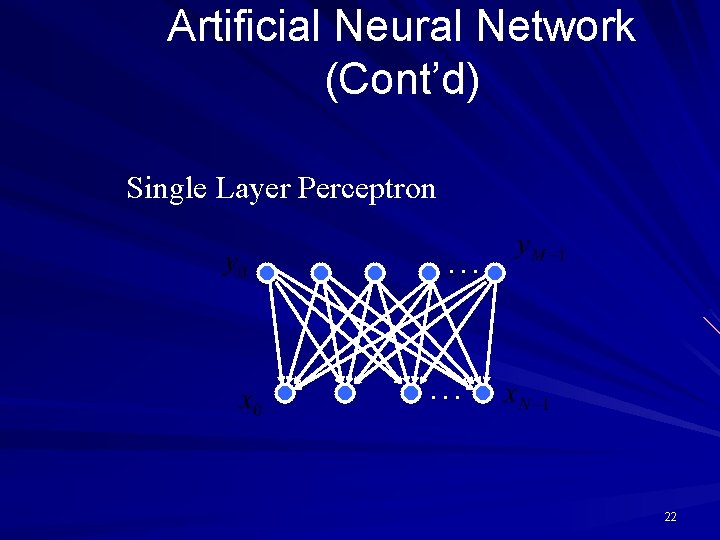
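The TDNN computational element can be sketched as a single unit that sees the current feature frame together with its $D$ predecessors. Each delayed copy has its own weights, and the weighted sum passes through a nonlinearity. In the Python sketch below, the sigmoid nonlinearity, the `weights[d][j]` layout, and the name `tdnn_unit` are all assumptions made for illustration.

```python
import math


def tdnn_unit(frames, weights, bias):
    """One TDNN-style unit (a sketch).

    frames:  list of feature vectors; frames[t] has J features.
    weights: weights[d][j] multiplies feature j delayed by d frames,
             for d = 0..D, where D = len(weights) - 1.
    Returns one sigmoid output per time t >= D.
    """
    D = len(weights) - 1
    outputs = []
    for t in range(D, len(frames)):
        s = bias
        for d, w_d in enumerate(weights):
            # Weighted contribution of the frame that is d steps in the past.
            s += sum(w * x for w, x in zip(w_d, frames[t - d]))
        outputs.append(1.0 / (1.0 + math.exp(-s)))
    return outputs


out = tdnn_unit([[1.0], [2.0]], weights=[[1.0], [-0.5]], bias=0.0)
```

The same weights are applied at every time step. This shift invariance is what lets the unit respond to an acoustic event regardless of where it occurs in the input.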

Artificial Neural Network (Cont’d): single-layer perceptron (figure).

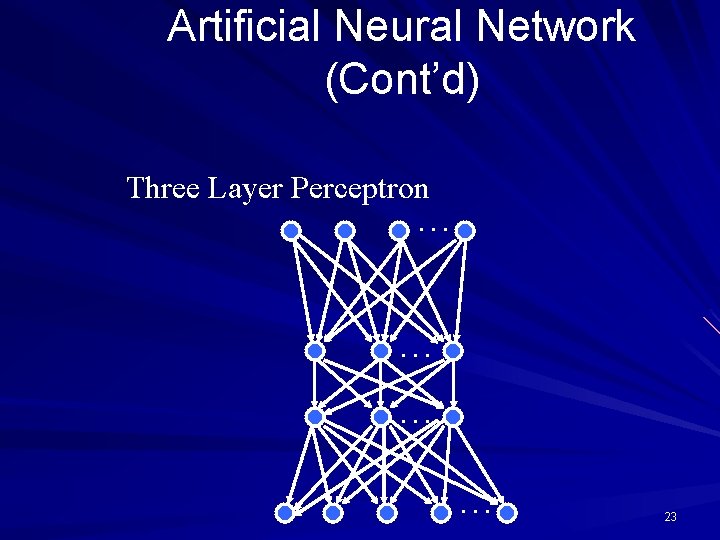

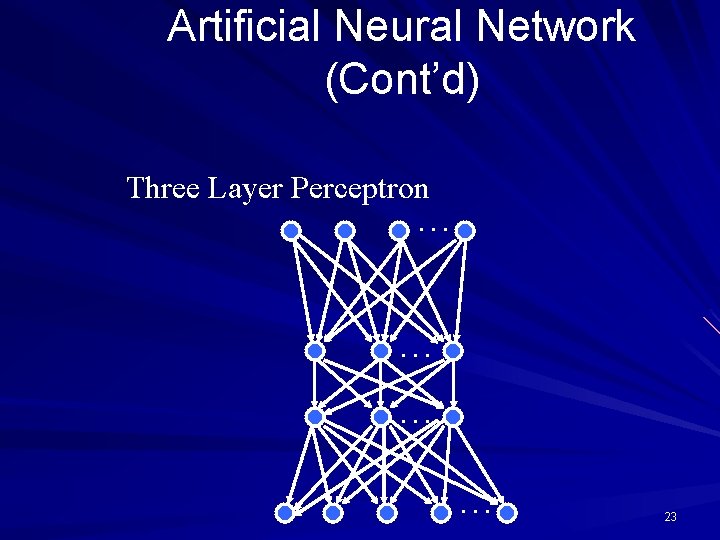
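A single-layer perceptron can be trained with the classical perceptron learning rule: after each sample, move the weights by `lr * (target - output) * input`. The Python sketch below assumes a hard step activation with threshold 0 and uses the logical OR function as a hypothetical, linearly separable training set.

```python
def step(s):
    """Hard-limiting activation: 1 if s >= 0, else 0."""
    return 1 if s >= 0 else 0


def predict(w, b, x):
    return step(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train_perceptron(samples, lr=1.0, epochs=20):
    """Perceptron learning rule on (input vector, 0/1 target) pairs."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, target in samples:
            err = target - predict(w, b, x)  # -1, 0, or +1
            w = [wi + lr * err * xi for wi, xi in zip(w, x)]
            b += lr * err
    return w, b


OR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train_perceptron(OR)
```

For linearly separable data like OR, the rule converges to a separating line. A single layer cannot represent XOR, which motivates multi-layer perceptrons.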

Artificial Neural Network (Cont’d): three-layer perceptron (figure).

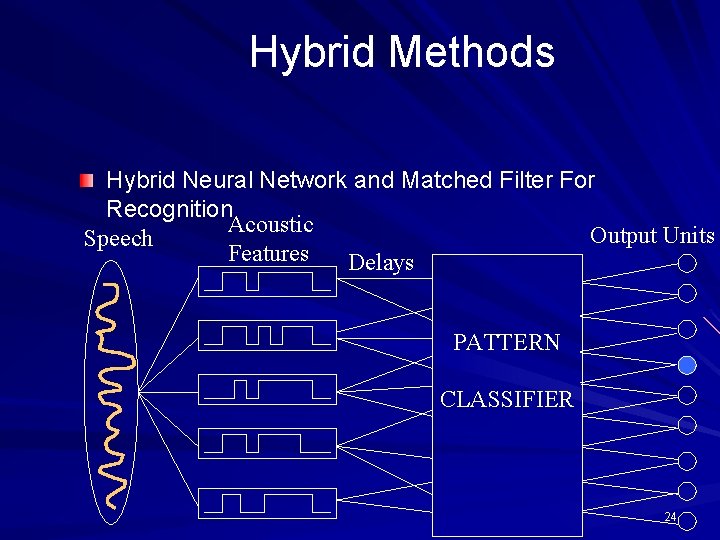

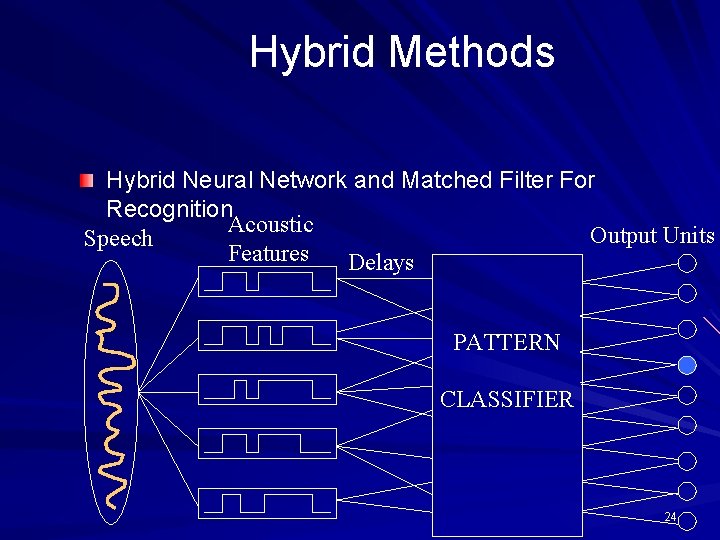
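A three-layer perceptron has an input layer, a hidden layer, and an output layer. The Python sketch below shows only the forward pass, with step activations and hand-chosen weights; no training is involved. The example network (hypothetical) computes XOR, which no single-layer perceptron can represent. Its hidden units act as OR and AND, and the output fires for "OR and not AND".

```python
def step(s):
    return 1 if s >= 0 else 0


def layer(x, W, b):
    """One fully connected layer: step(W x + b), row by row."""
    return [step(sum(w * xi for w, xi in zip(row, x)) + bj)
            for row, bj in zip(W, b)]


def mlp(x, layers):
    """Forward pass through a list of (weight matrix, bias vector) layers."""
    for W, b in layers:
        x = layer(x, W, b)
    return x


XOR_NET = [
    ([[1, 1], [1, 1]], [-0.5, -1.5]),  # hidden layer: OR unit, AND unit
    ([[1, -1]], [-0.5]),               # output layer: OR and not AND
]
outputs = {(a, b): mlp([a, b], XOR_NET)[0] for a in (0, 1) for b in (0, 1)}
```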

Hybrid Methods: hybrid neural network and matched filter for recognition (figure labels: speech features, delays, pattern classifier, acoustic output units).

Neural Network Properties:
- The system is simple, but training requires many iterations.
- It does not impose a specific structure.
- Despite its simplicity, the results are good.
- The training set is large, so training should be done offline.
- Accuracy is relatively good.

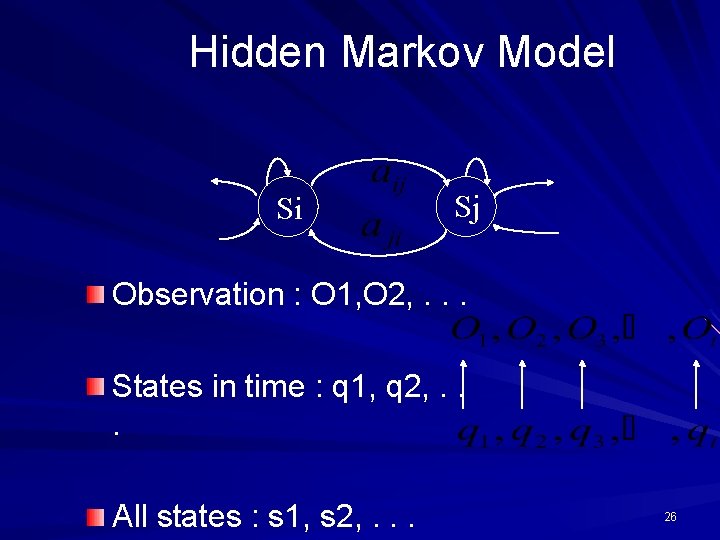

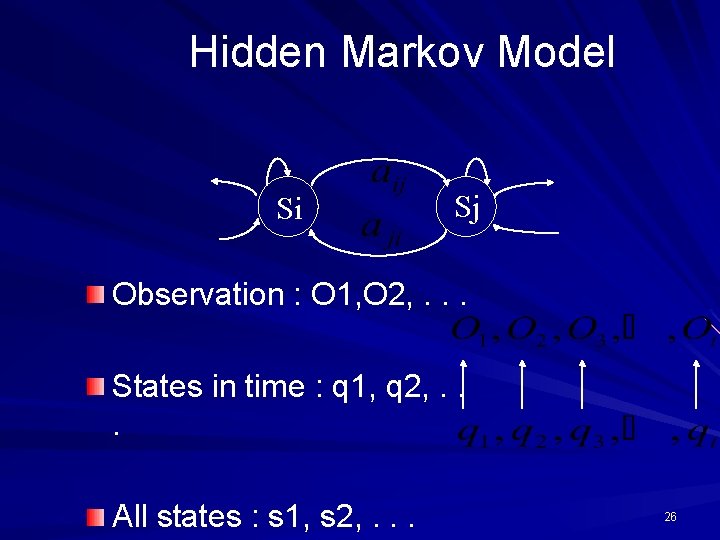

Hidden Markov Model (figure: states $S_i$ and $S_j$):
- Observations: $O_1, O_2, \ldots$
- States in time: $q_1, q_2, \ldots$
- All states: $s_1, s_2, \ldots$

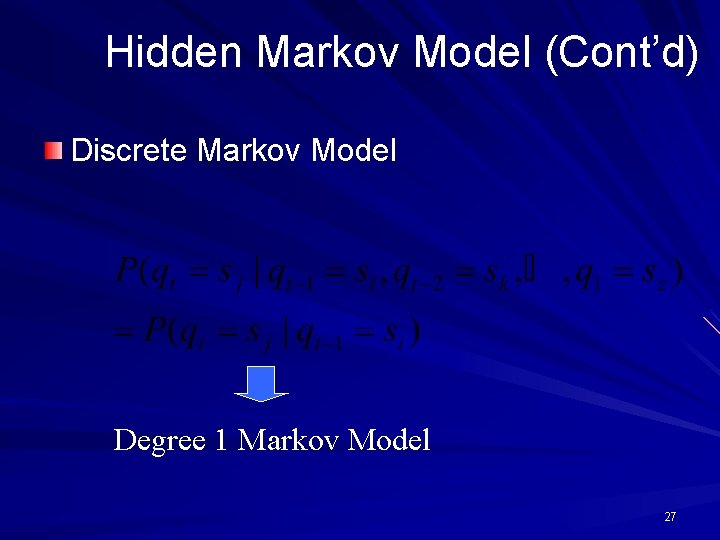

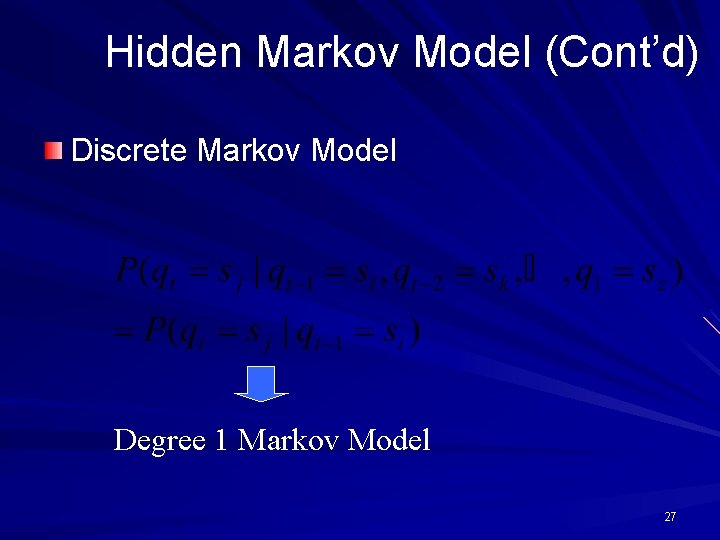

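Hidden Markov Model (Cont’d): discrete Markov model. In a first-order (degree 1) Markov model, the next state depends only on the current state: $P(q_t=S_j\mid q_{t-1}=S_i,\,q_{t-2}=S_k,\ldots)=P(q_t=S_j\mid q_{t-1}=S_i)$.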

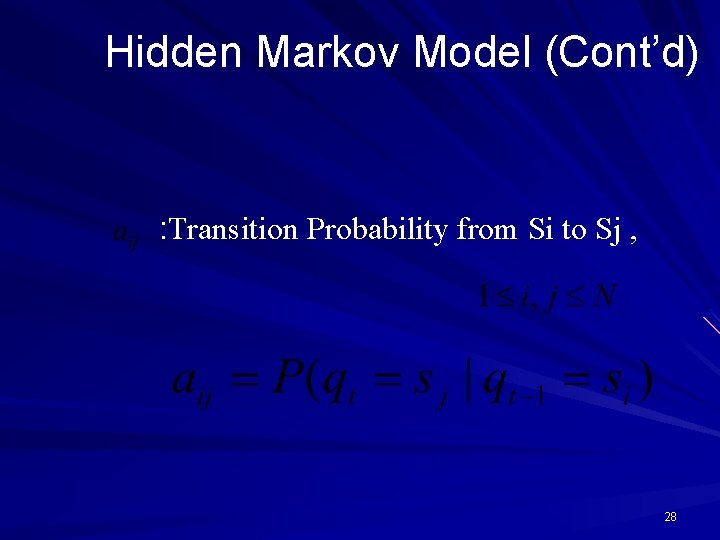

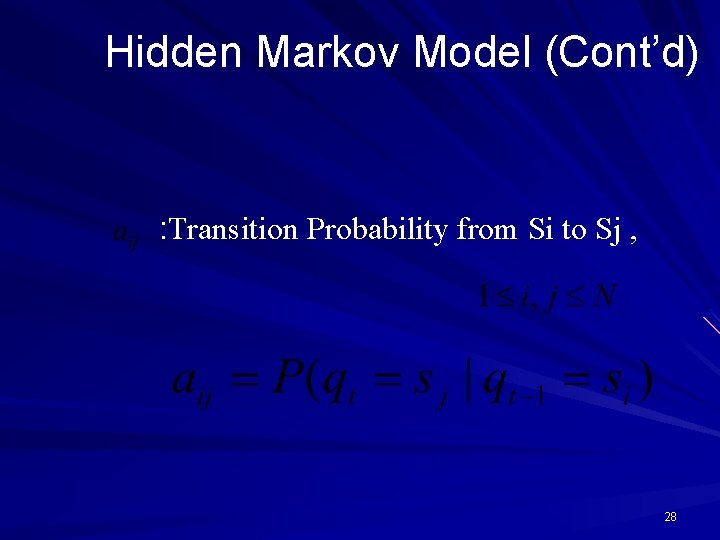

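Hidden Markov Model (Cont’d): $a_{ij}=P(q_t=S_j\mid q_{t-1}=S_i)$, $1\le i,j\le N$, is the transition probability from $S_i$ to $S_j$, with $a_{ij}\ge 0$ and $\sum_{j=1}^{N}a_{ij}=1$.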

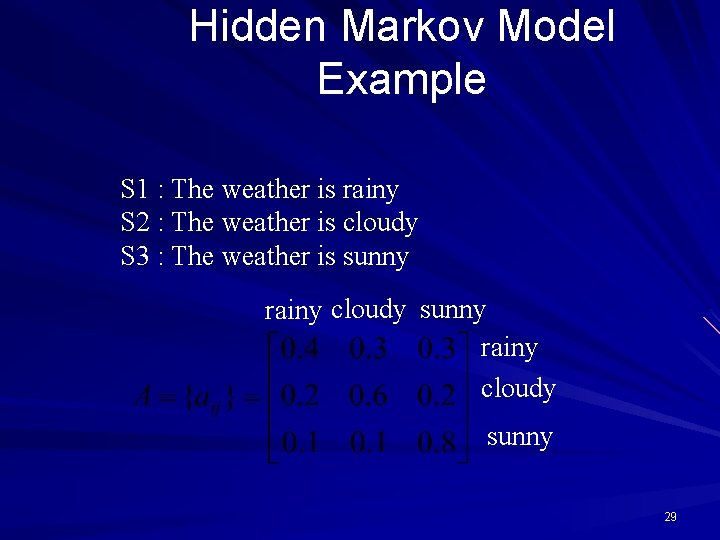

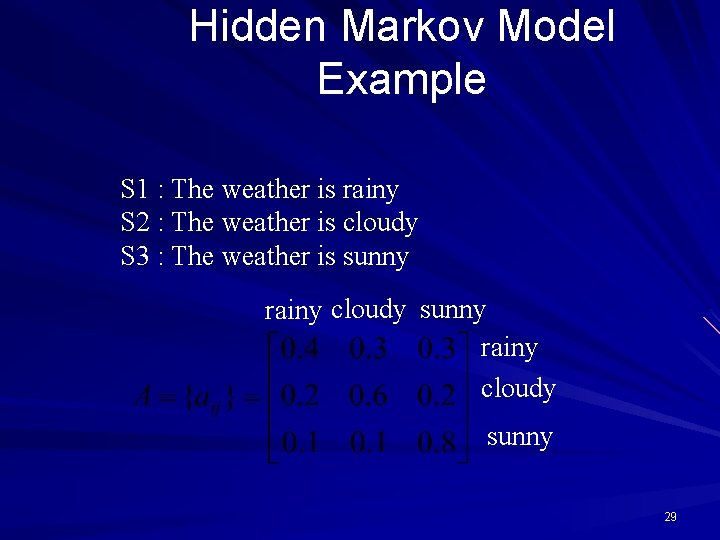

Hidden Markov Model Example (figure: three states, rainy, cloudy, sunny):
- $S_1$: the weather is rainy
- $S_2$: the weather is cloudy
- $S_3$: the weather is sunny

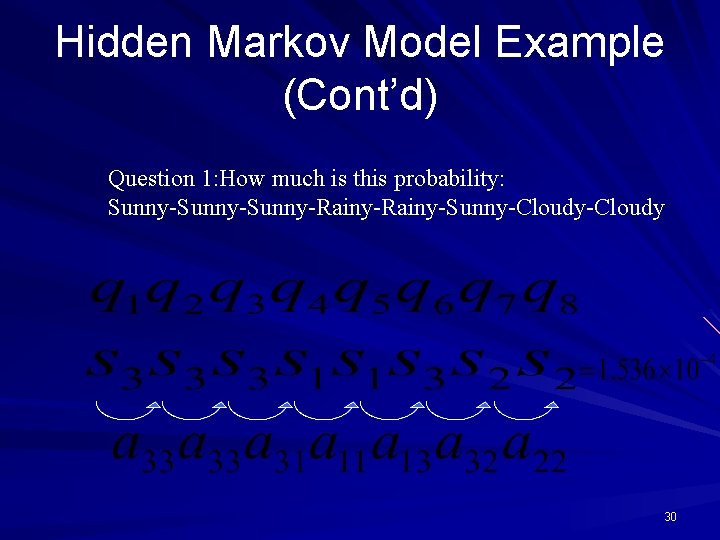

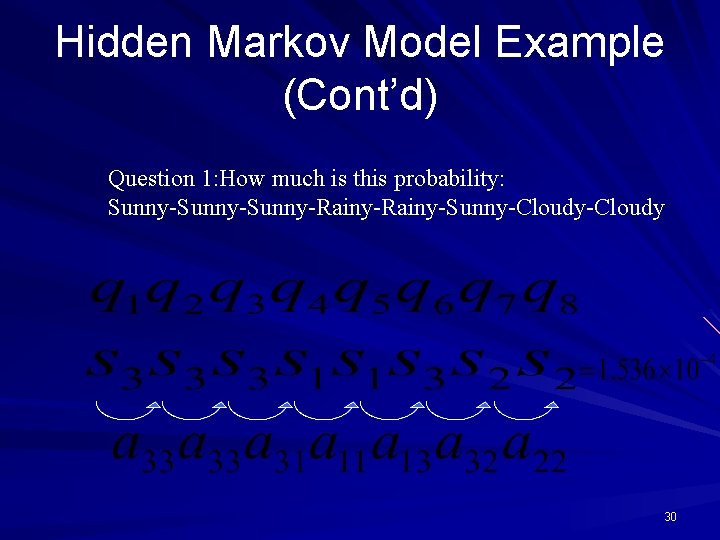

Hidden Markov Model Example (Cont’d): Question 1: what is the probability of the sequence Sunny-Sunny-Rainy-Sunny-Cloudy?

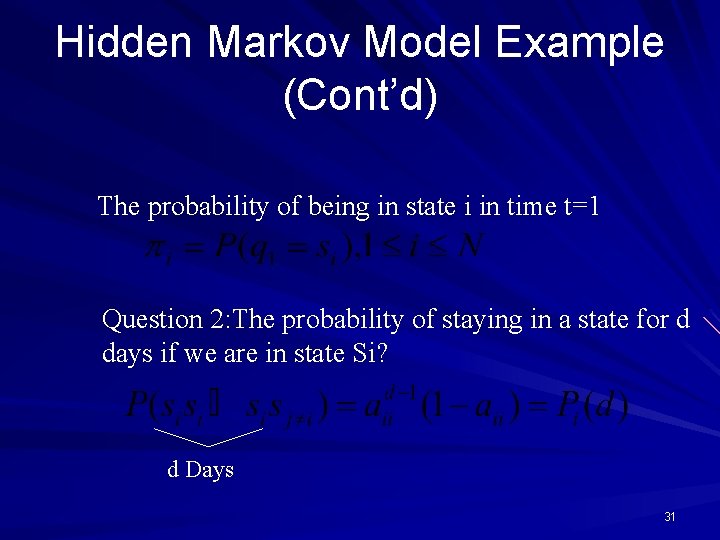

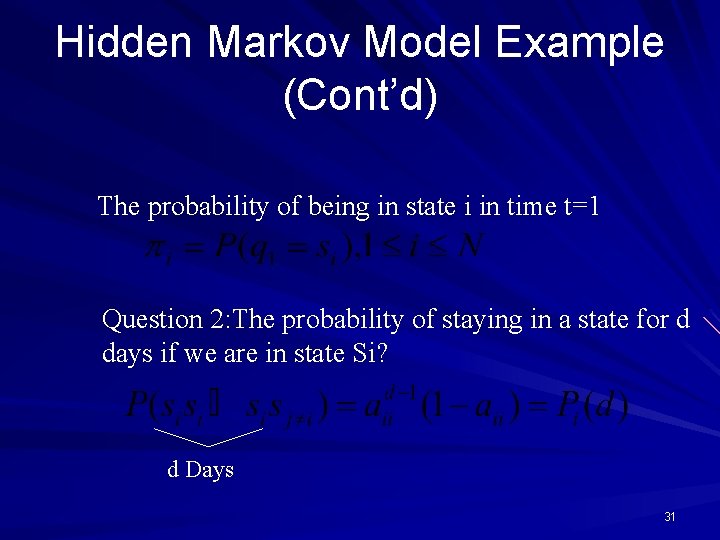
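For an observable Markov chain, the probability of a state sequence is the initial probability times the product of the transition probabilities along the sequence. In the minimal Python sketch below, the transition matrix is hypothetical (the slide's actual values are in the figure). The sketch also assumes that the first day is known to be sunny, so $\pi_{\text{sunny}}=1$.

```python
RAINY, CLOUDY, SUNNY = 0, 1, 2  # S1, S2, S3

# Hypothetical transition matrix: A[i][j] = P(tomorrow = S_j | today = S_i).
A = [
    [0.4, 0.3, 0.3],  # from rainy
    [0.2, 0.6, 0.2],  # from cloudy
    [0.1, 0.1, 0.8],  # from sunny
]


def sequence_probability(states, A, pi):
    """P(q_1 ... q_T) = pi[q_1] * prod_t A[q_{t-1}][q_t]."""
    p = pi[states[0]]
    for prev, cur in zip(states, states[1:]):
        p *= A[prev][cur]
    return p


pi_sunny_start = [0.0, 0.0, 1.0]  # assumption: day 1 is known to be sunny
p = sequence_probability([SUNNY, SUNNY, RAINY, SUNNY, CLOUDY], A, pi_sunny_start)
# p = 1 * a_33 * a_31 * a_13 * a_32 = 1 * 0.8 * 0.1 * 0.3 * 0.1 (about 0.0024)
```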

Hidden Markov Model Example (Cont’d): the probability of being in state $i$ at time $t=1$ is $\pi_i=P(q_1=S_i)$. Question 2: if we are in state $S_i$, what is the probability of staying in that state for exactly $d$ days? (Figure: $d$ days in state $S_i$.)

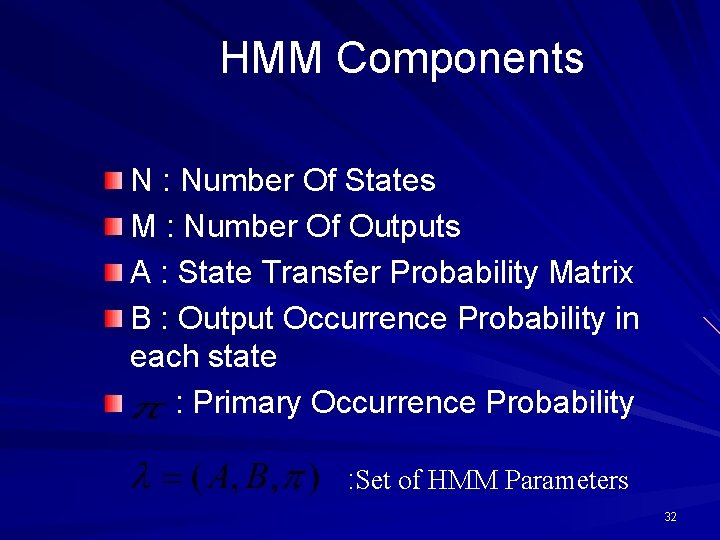

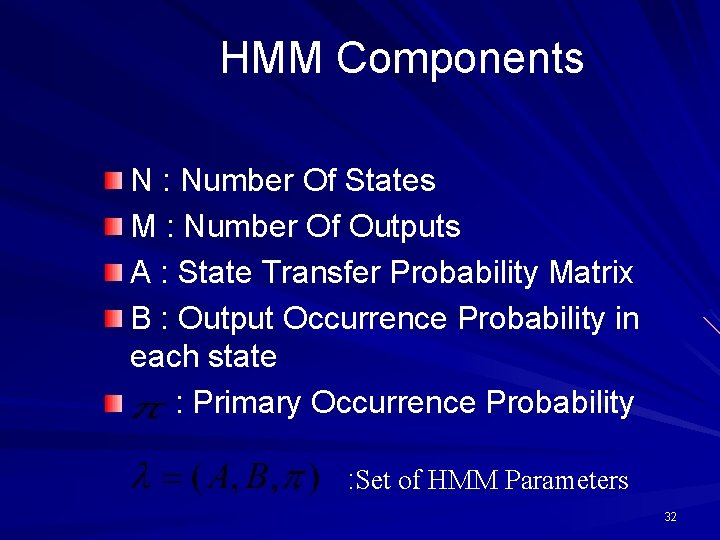
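Staying exactly $d$ days in $S_i$ means taking the self-transition $d-1$ times and then leaving: $p_i(d)=(a_{ii})^{d-1}(1-a_{ii})$. This is a geometric distribution with mean $\sum_d d\,p_i(d)=1/(1-a_{ii})$. The Python sketch below uses a hypothetical self-transition probability of 0.8.

```python
def duration_probability(a_ii, d):
    """Probability of staying exactly d steps in state i: a_ii**(d-1) * (1 - a_ii)."""
    return a_ii ** (d - 1) * (1.0 - a_ii)


def expected_duration(a_ii):
    """Mean of the geometric duration distribution: 1 / (1 - a_ii)."""
    return 1.0 / (1.0 - a_ii)


a_ii = 0.8  # hypothetical self-transition probability
p1 = duration_probability(a_ii, 1)  # leave after one day
mean_days = expected_duration(a_ii)  # about 5 days
```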

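HMM Components:
- $N$: number of states
- $M$: number of distinct outputs (observation symbols)
- $A=\{a_{ij}\}$: state transition probability matrix
- $B=\{b_j(k)\}$: output occurrence probability in each state
- $\pi=\{\pi_i\}$: initial state probability
- $\lambda=(A,B,\pi)$: the set of HMM parameters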

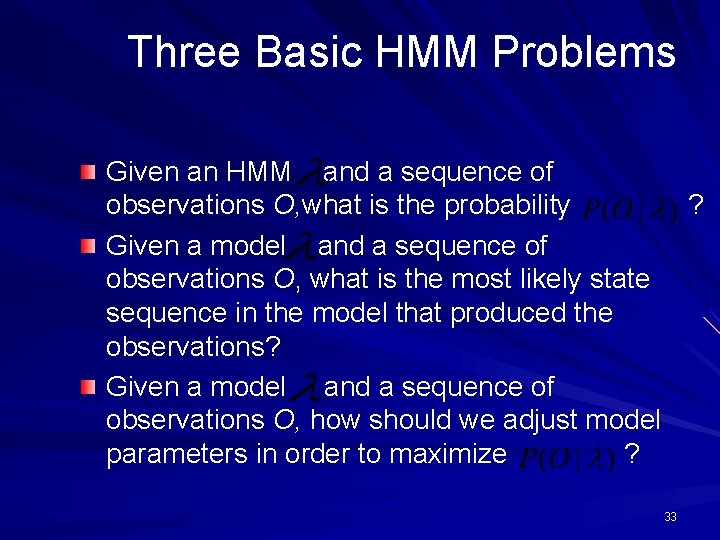

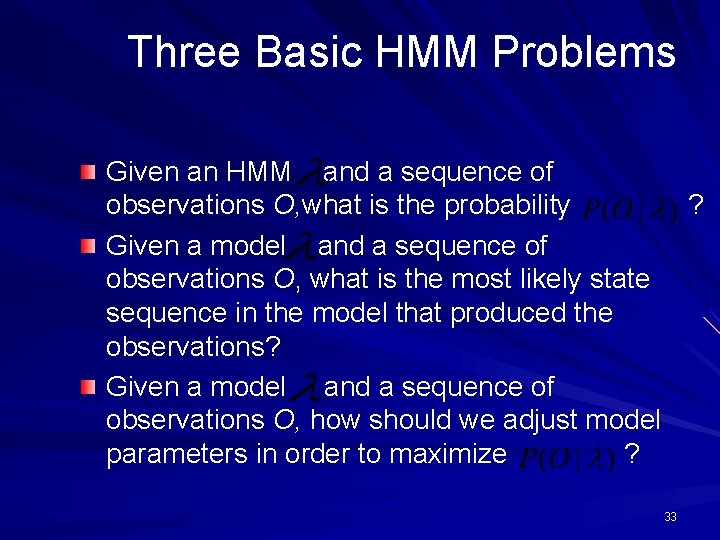

Three Basic HMM Problems:
1. Given an HMM $\lambda$ and a sequence of observations $O$, what is the probability $P(O\mid\lambda)$?
2. Given a model $\lambda$ and a sequence of observations $O$, what is the most likely state sequence in the model that produced the observations?
3. Given a model $\lambda$ and a sequence of observations $O$, how should we adjust the model parameters to maximize $P(O\mid\lambda)$?

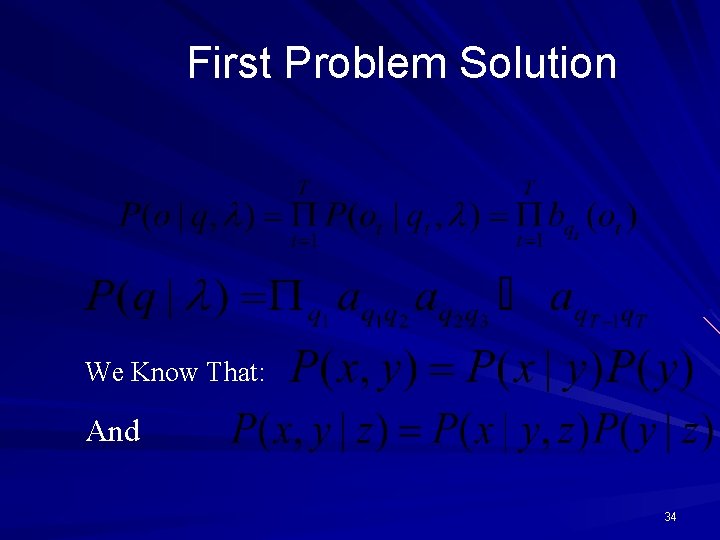

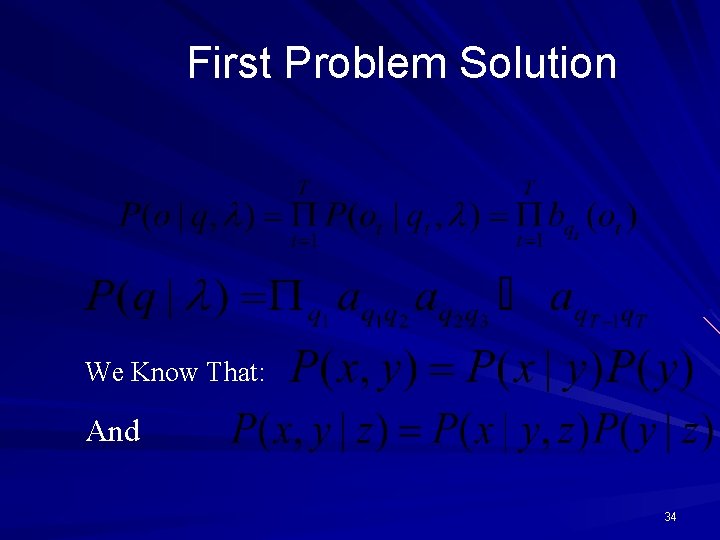

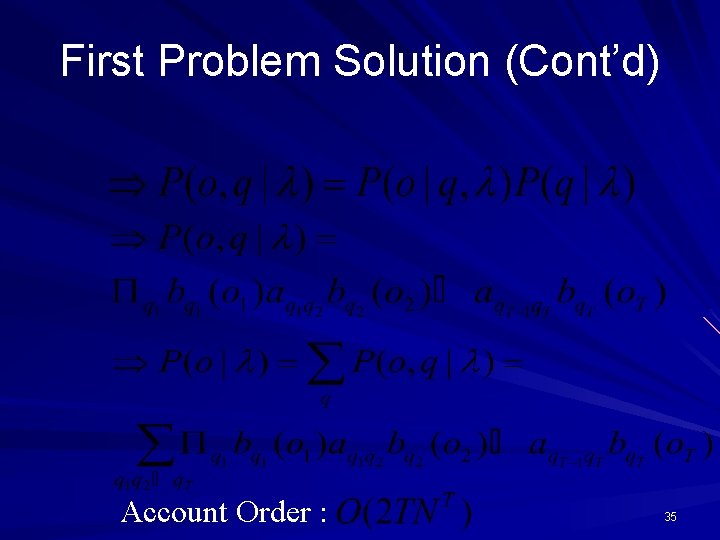

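First Problem Solution: for a fixed state sequence $Q=q_1q_2\cdots q_T$, we know that $P(O\mid Q,\lambda)=\prod_{t=1}^{T}b_{q_t}(O_t)$ and $P(Q\mid\lambda)=\pi_{q_1}a_{q_1q_2}a_{q_2q_3}\cdots a_{q_{T-1}q_T}$.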

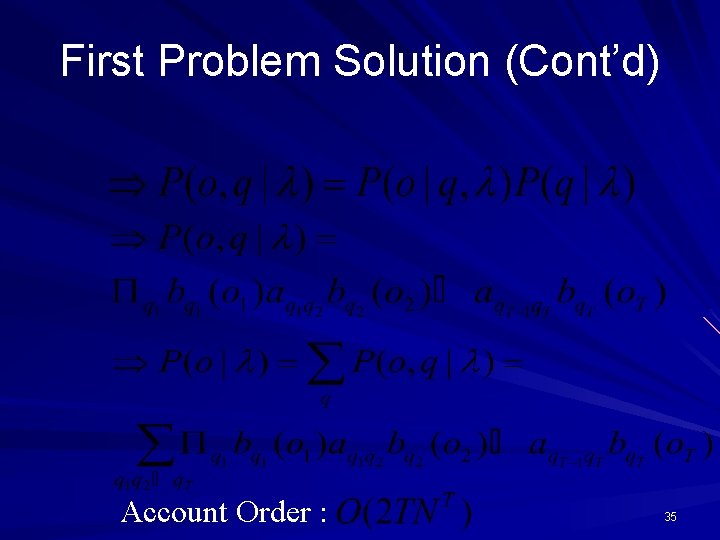

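First Problem Solution (Cont’d): $P(O\mid\lambda)=\sum_{Q}P(O\mid Q,\lambda)\,P(Q\mid\lambda)$. Computational order: there are $N^T$ possible state sequences, so direct evaluation takes on the order of $2T\cdot N^T$ calculations.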

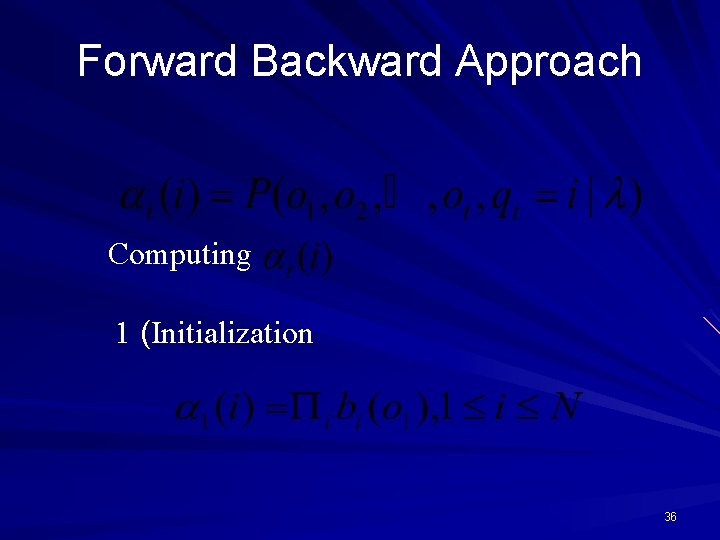

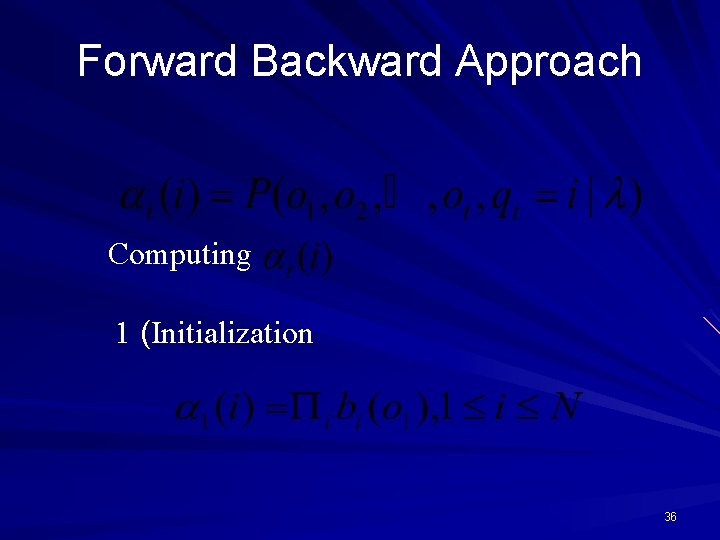
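The direct solution can be written as a brute-force Python sketch. It enumerates all $N^T$ state sequences $Q$ and sums $P(O\mid Q,\lambda)\,P(Q\mid\lambda)$. The two-state, two-symbol model is hypothetical, with observations given as symbol indices. The exponential loop is exactly why the forward-backward procedure is needed.

```python
from itertools import product


def brute_force_likelihood(pi, A, B, O):
    """P(O | lambda) by summing over every state sequence (N**T terms)."""
    N, T = len(pi), len(O)
    total = 0.0
    for Q in product(range(N), repeat=T):
        p_q = pi[Q[0]]                 # P(Q | lambda)
        for t in range(1, T):
            p_q *= A[Q[t - 1]][Q[t]]
        p_o = 1.0                      # P(O | Q, lambda)
        for t in range(T):
            p_o *= B[Q[t]][O[t]]
        total += p_o * p_q
    return total


# Hypothetical model: pi[i], A[i][j], and B[j][k] = P(symbol k | state j).
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
likelihood = brute_force_likelihood(pi, A, B, [0, 1])  # about 0.209
```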

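Forward-Backward Approach: compute the forward variable $\alpha_t(i)=P(O_1O_2\cdots O_t,\,q_t=S_i\mid\lambda)$. 1) Initialization: $\alpha_1(i)=\pi_i\,b_i(O_1)$, $1\le i\le N$.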

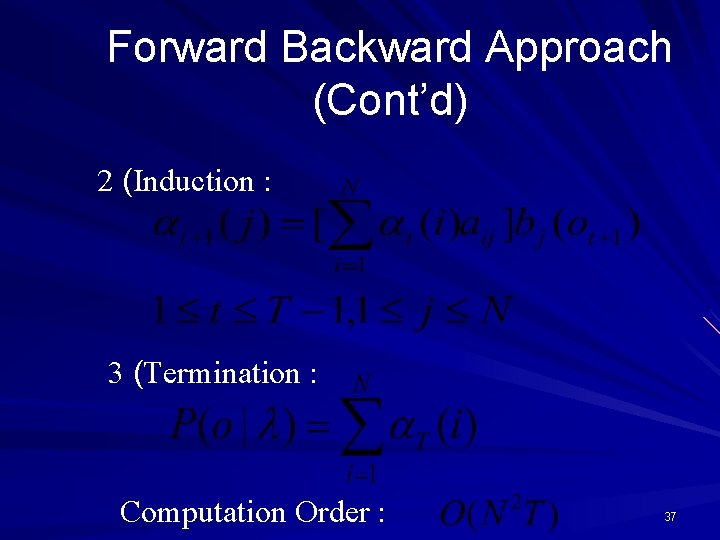

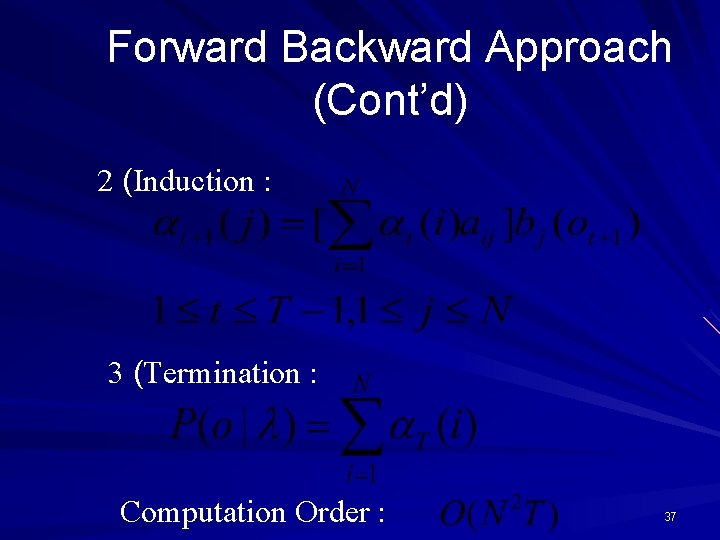

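Forward-Backward Approach (Cont’d): 2) Induction: $\alpha_{t+1}(j)=\left[\sum_{i=1}^{N}\alpha_t(i)\,a_{ij}\right]b_j(O_{t+1})$, $1\le t\le T-1$, $1\le j\le N$. 3) Termination: $P(O\mid\lambda)=\sum_{i=1}^{N}\alpha_T(i)$. Computation order: $N^2T$.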

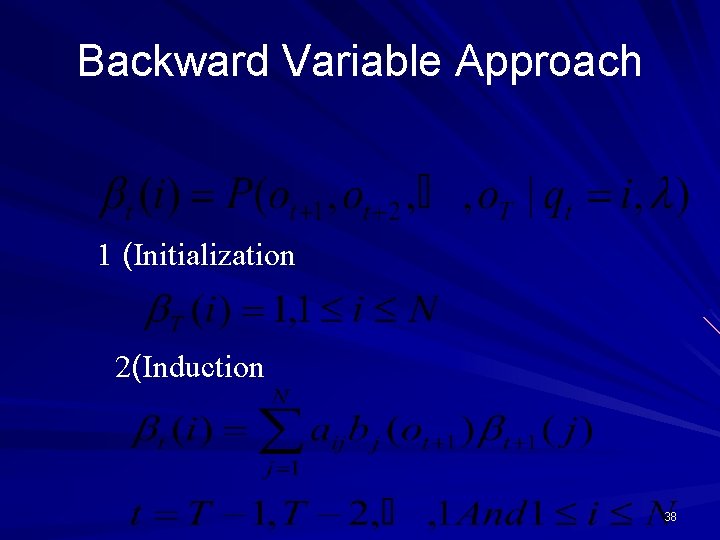

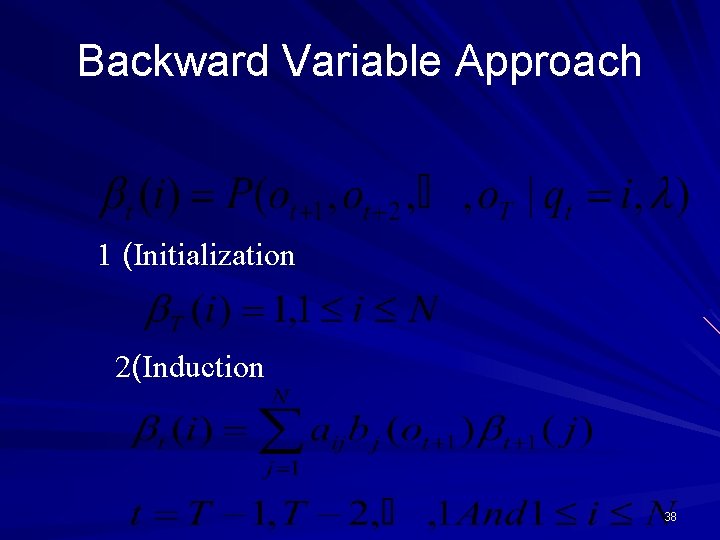
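The three steps of the forward procedure translate directly into the Python sketch below. It reuses a hypothetical two-state, two-symbol model. Each induction step costs $O(N^2)$, so the total is $O(N^2T)$ rather than exponential in $T$.

```python
def forward(pi, A, B, O):
    """Forward algorithm. Returns (P(O | lambda), alpha table).

    pi[i]: initial probability; A[i][j]: transition i -> j;
    B[j][k]: probability of symbol k in state j; O: list of symbol indices.
    """
    N = len(pi)
    # 1) Initialization: alpha_1(i) = pi_i * b_i(O_1)
    alpha = [[pi[i] * B[i][O[0]] for i in range(N)]]
    # 2) Induction: alpha_{t+1}(j) = [sum_i alpha_t(i) * a_ij] * b_j(O_{t+1})
    for t in range(1, len(O)):
        prev = alpha[-1]
        alpha.append([sum(prev[i] * A[i][j] for i in range(N)) * B[j][O[t]]
                      for j in range(N)])
    # 3) Termination: P(O | lambda) = sum_i alpha_T(i)
    return sum(alpha[-1]), alpha


pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
prob, alpha = forward(pi, A, B, [0, 1])  # about 0.209
```

This example has $T=2$, so the result can be checked by hand: $\alpha_1=(0.54,\,0.08)$ and $\alpha_2=(0.041,\,0.168)$.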

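Backward Variable Approach: $\beta_t(i)=P(O_{t+1}O_{t+2}\cdots O_T\mid q_t=S_i,\lambda)$. 1) Initialization: $\beta_T(i)=1$, $1\le i\le N$. 2) Induction: $\beta_t(i)=\sum_{j=1}^{N}a_{ij}\,b_j(O_{t+1})\,\beta_{t+1}(j)$, $t=T-1,T-2,\ldots,1$.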

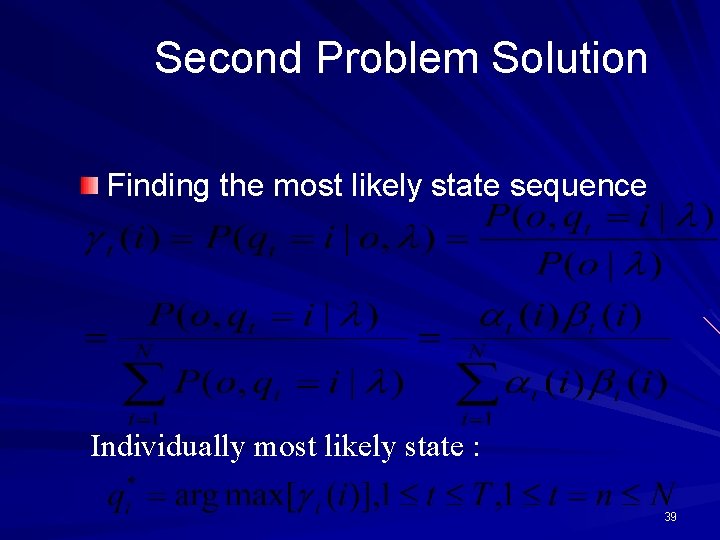

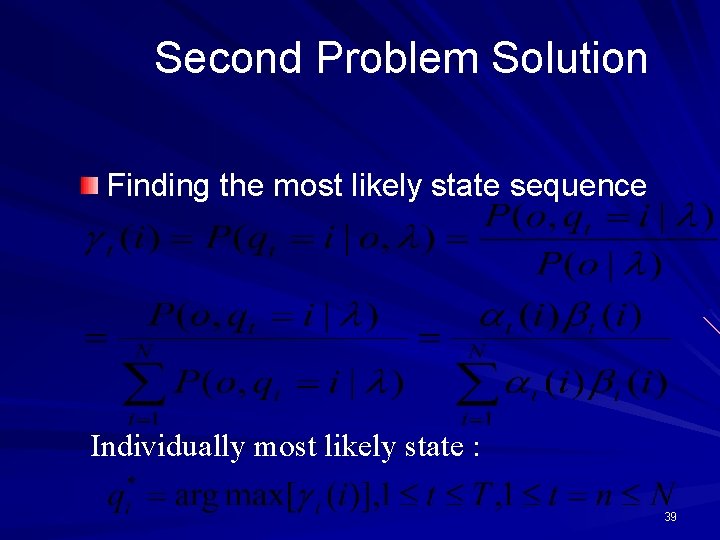
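The backward recursion runs from $t=T$ down to $t=1$. The Python sketch below uses the same hypothetical two-state model as a check. The likelihood recovered from the backward variables, $\sum_i \pi_i\,b_i(O_1)\,\beta_1(i)$, should equal the forward result for the same model and observations, about 0.209.

```python
def backward(A, B, O):
    """Backward algorithm. Returns the beta table, beta[t][i] for t = 0..T-1."""
    N = len(A)
    beta = [[1.0] * N]  # 1) Initialization: beta_T(i) = 1
    # 2) Induction: beta_t(i) = sum_j a_ij * b_j(O_{t+1}) * beta_{t+1}(j)
    for t in range(len(O) - 2, -1, -1):
        nxt = beta[0]
        beta.insert(0, [sum(A[i][j] * B[j][O[t + 1]] * nxt[j] for j in range(N))
                        for i in range(N)])
    return beta


pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
O = [0, 1]
beta = backward(A, B, O)
prob = sum(pi[i] * B[i][O[0]] * beta[0][i] for i in range(len(pi)))
```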

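Second Problem Solution: finding the most likely state sequence. Individually most likely state: $\gamma_t(i)=P(q_t=S_i\mid O,\lambda)=\dfrac{\alpha_t(i)\,\beta_t(i)}{P(O\mid\lambda)}$ and $q_t^*=\arg\max_{1\le i\le N}\gamma_t(i)$, $1\le t\le T$.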

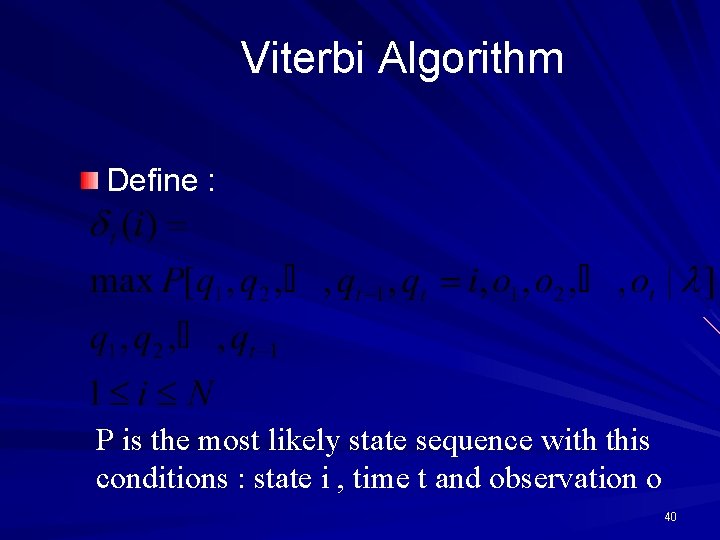

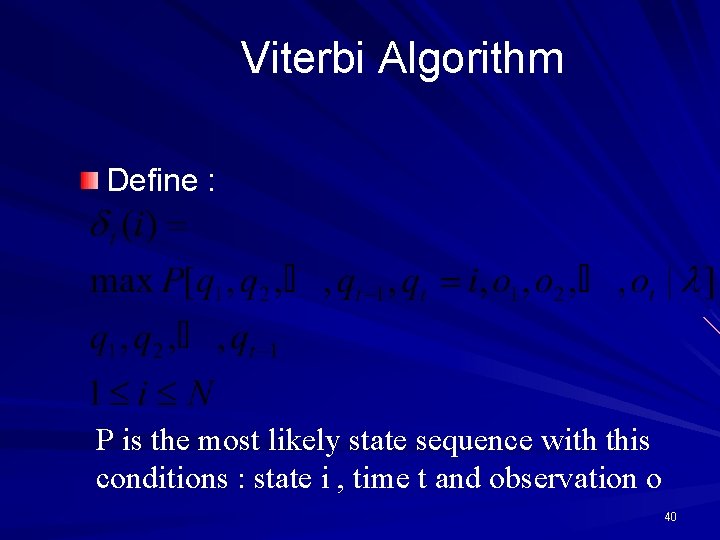
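The "individually most likely state" criterion takes, at each time $t$, the state with the largest $\gamma_t(i)$. The compact Python sketch below recomputes the forward and backward tables for a hypothetical two-state model and then takes the argmax per time step. This criterion maximizes the expected number of correct states. The resulting sequence can still contain a transition with $a_{ij}=0$, which is one reason the Viterbi algorithm is used instead.

```python
def forward(pi, A, B, O):
    N = len(pi)
    alpha = [[pi[i] * B[i][O[0]] for i in range(N)]]
    for t in range(1, len(O)):
        alpha.append([sum(alpha[-1][i] * A[i][j] for i in range(N)) * B[j][O[t]]
                      for j in range(N)])
    return alpha


def backward(A, B, O):
    N = len(A)
    beta = [[1.0] * N]
    for t in range(len(O) - 2, -1, -1):
        beta.insert(0, [sum(A[i][j] * B[j][O[t + 1]] * beta[0][j] for j in range(N))
                        for i in range(N)])
    return beta


def most_likely_states(pi, A, B, O):
    """Per-time argmax of gamma_t(i) = alpha_t(i) * beta_t(i) / P(O | lambda)."""
    alpha, beta = forward(pi, A, B, O), backward(A, B, O)
    prob = sum(alpha[-1])
    gamma = [[a * b / prob for a, b in zip(alpha[t], beta[t])]
             for t in range(len(O))]
    states = [max(range(len(pi)), key=lambda i: g[i]) for g in gamma]
    return states, gamma


pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
states, gamma = most_likely_states(pi, A, B, [0, 1])
```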

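Viterbi Algorithm: define $\delta_t(i)=\max_{q_1,\ldots,q_{t-1}}P(q_1q_2\cdots q_{t-1},\,q_t=S_i,\,O_1O_2\cdots O_t\mid\lambda)$. This is the probability of the most likely state sequence that ends in state $i$ at time $t$ and accounts for the first $t$ observations.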

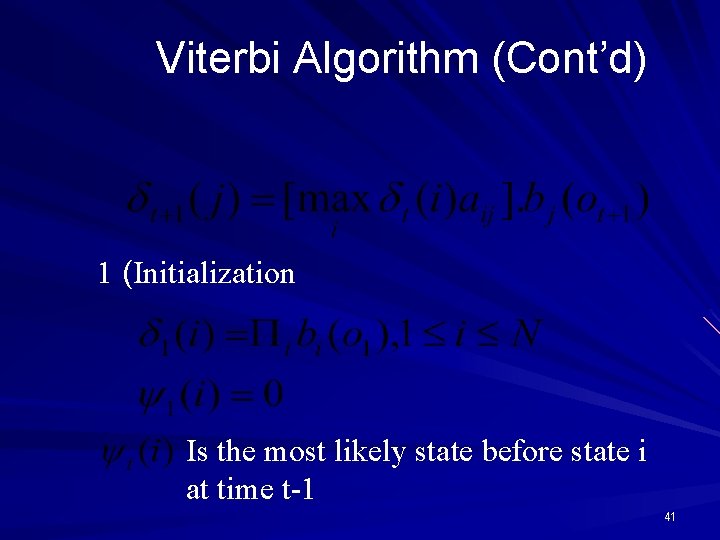

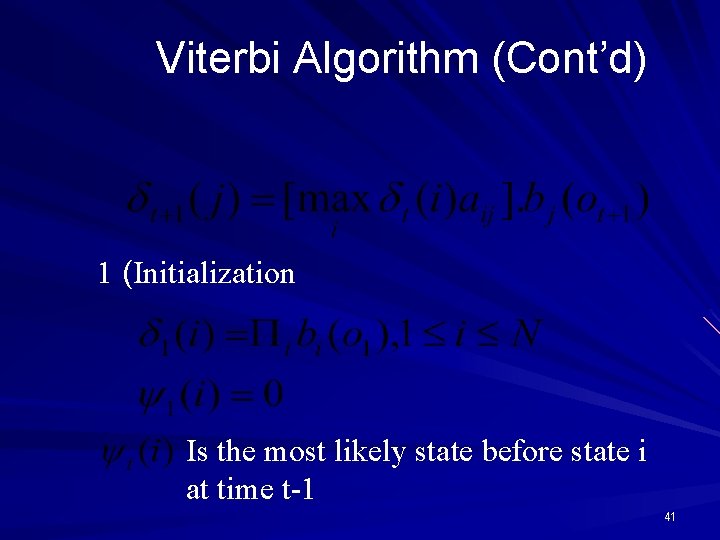

Viterbi Algorithm (Cont’d): 1) Initialization: $\delta_1(i)=\pi_i\,b_i(O_1)$, $\psi_1(i)=0$, $1\le i\le N$. Here $\psi_t(i)$ is the most likely state at time $t-1$ before state $i$ at time $t$.

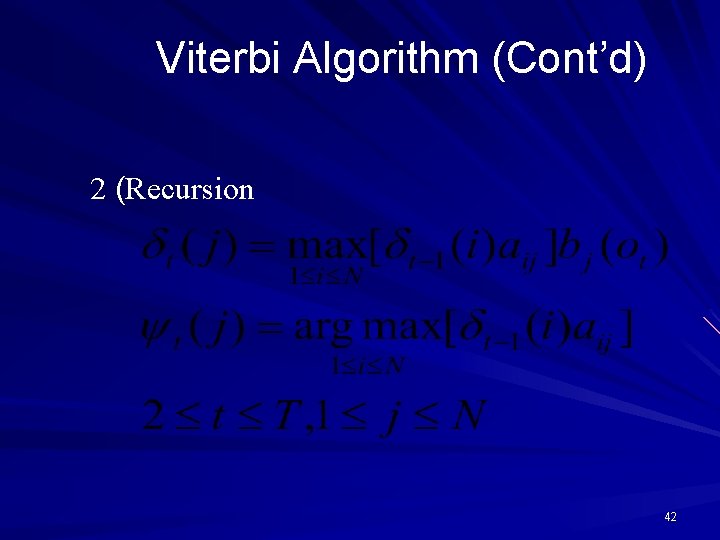

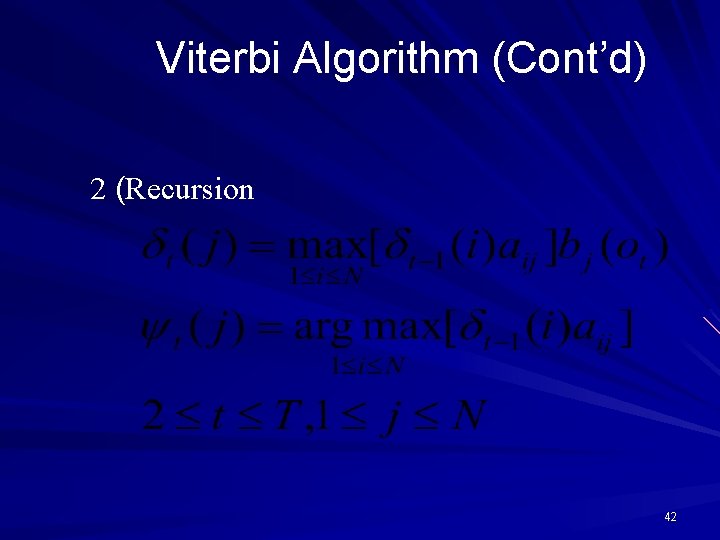

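Viterbi Algorithm (Cont’d): 2) Recursion: $\delta_t(j)=\max_{1\le i\le N}\left[\delta_{t-1}(i)\,a_{ij}\right]b_j(O_t)$ and $\psi_t(j)=\arg\max_{1\le i\le N}\left[\delta_{t-1}(i)\,a_{ij}\right]$, $2\le t\le T$, $1\le j\le N$.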

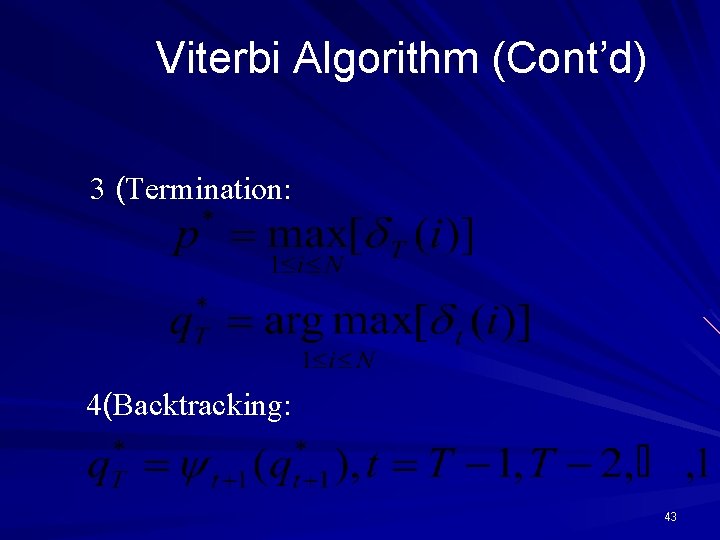

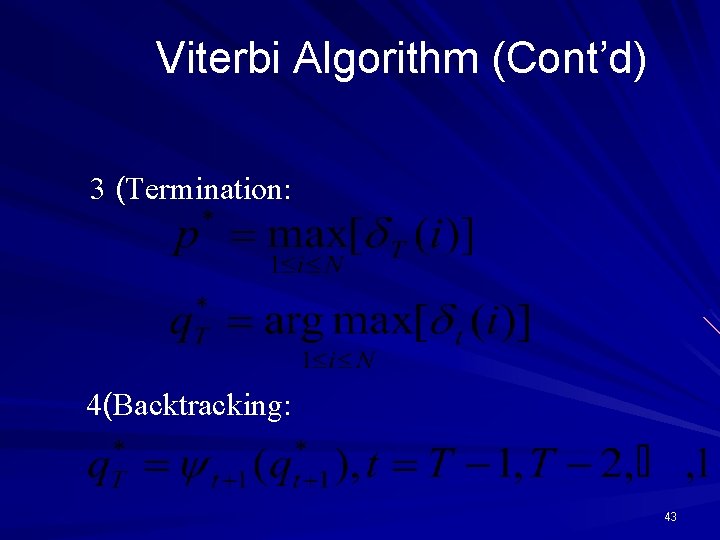

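Viterbi Algorithm (Cont’d): 3) Termination: $P^*=\max_{1\le i\le N}\delta_T(i)$ and $q_T^*=\arg\max_{1\le i\le N}\delta_T(i)$. 4) Backtracking: $q_t^*=\psi_{t+1}(q_{t+1}^*)$, $t=T-1,T-2,\ldots,1$.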

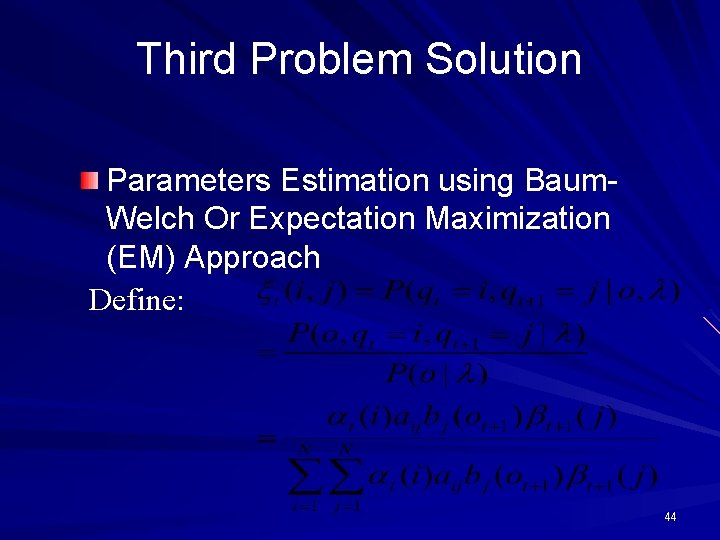

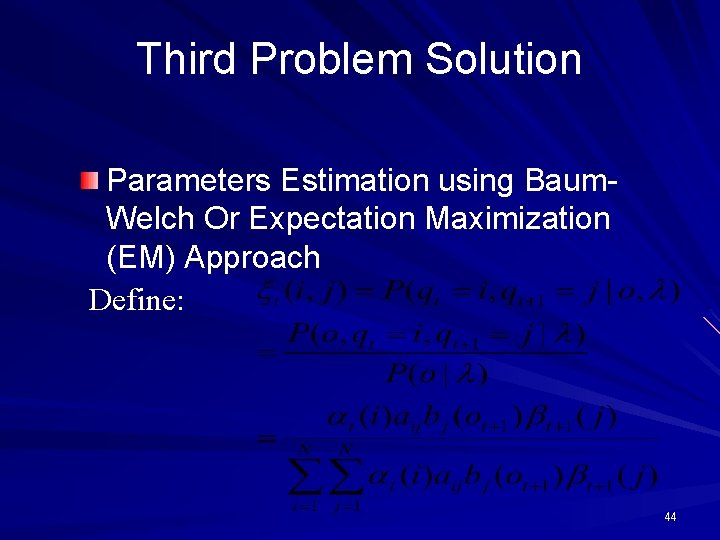
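The four Viterbi steps (initialization, recursion, termination, backtracking) map directly onto the Python sketch below. It uses a hypothetical two-state, two-symbol model. Indices are 0-based in the code, so `psi[t][j]` holds the best predecessor of state `j` at time `t`.

```python
def viterbi(pi, A, B, O):
    """Return (P*, most likely state path) for observation indices O."""
    N, T = len(pi), len(O)
    # 1) Initialization
    delta = [[pi[i] * B[i][O[0]] for i in range(N)]]
    psi = [[0] * N]
    # 2) Recursion
    for t in range(1, T):
        d_t, p_t = [], []
        for j in range(N):
            best_i = max(range(N), key=lambda i: delta[t - 1][i] * A[i][j])
            d_t.append(delta[t - 1][best_i] * A[best_i][j] * B[j][O[t]])
            p_t.append(best_i)
        delta.append(d_t)
        psi.append(p_t)
    # 3) Termination
    last = max(range(N), key=lambda i: delta[T - 1][i])
    p_star = delta[T - 1][last]
    # 4) Backtracking: q_t* = psi_{t+1}(q_{t+1}*)
    path = [last]
    for t in range(T - 1, 0, -1):
        path.insert(0, psi[t][path[0]])
    return p_star, path


pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
p_star, path = viterbi(pi, A, B, [0, 1])  # P* about 0.1296, path [0, 1]
```

For long sequences, $\delta$ underflows quickly. In practice, the same recursion is usually run on log-probabilities, with sums in place of products.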

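Third Problem Solution: parameter estimation using the Baum-Welch, or Expectation-Maximization (EM), approach. Define $\xi_t(i,j)=P(q_t=S_i,\,q_{t+1}=S_j\mid O,\lambda)=\dfrac{\alpha_t(i)\,a_{ij}\,b_j(O_{t+1})\,\beta_{t+1}(j)}{P(O\mid\lambda)}$.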

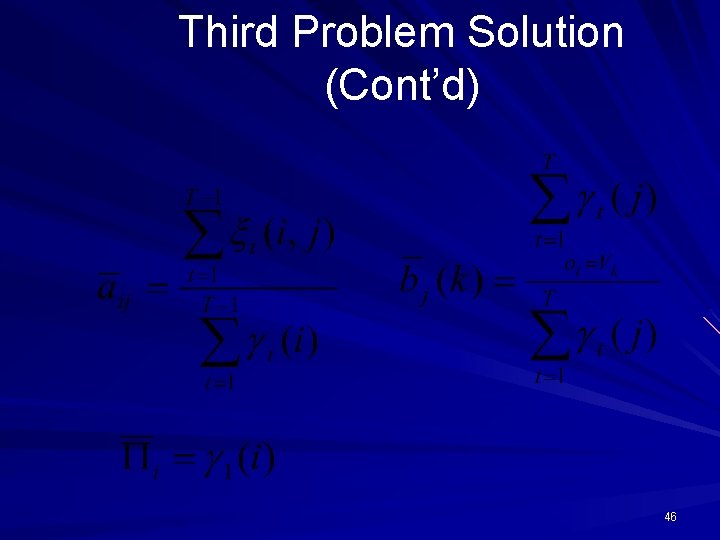

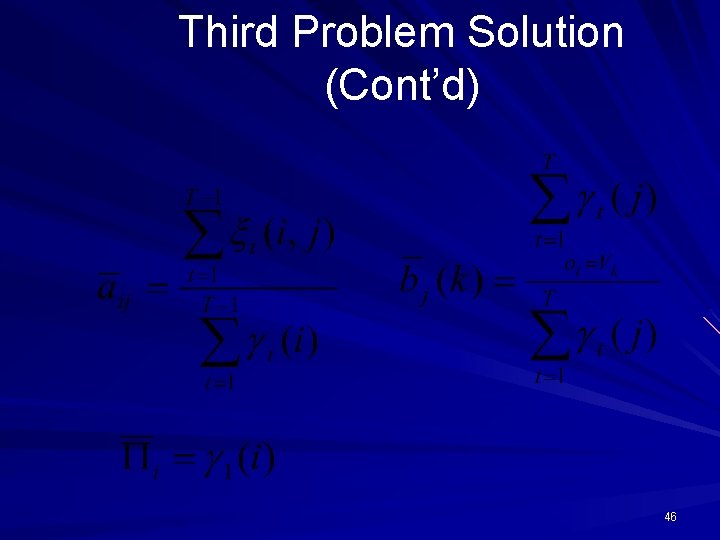

Third Problem Solution (Cont’d): $\sum_{t=1}^{T-1}\xi_t(i,j)$ is the expected number of transitions from state $i$ to state $j$.

Third Problem Solution (Cont’d)

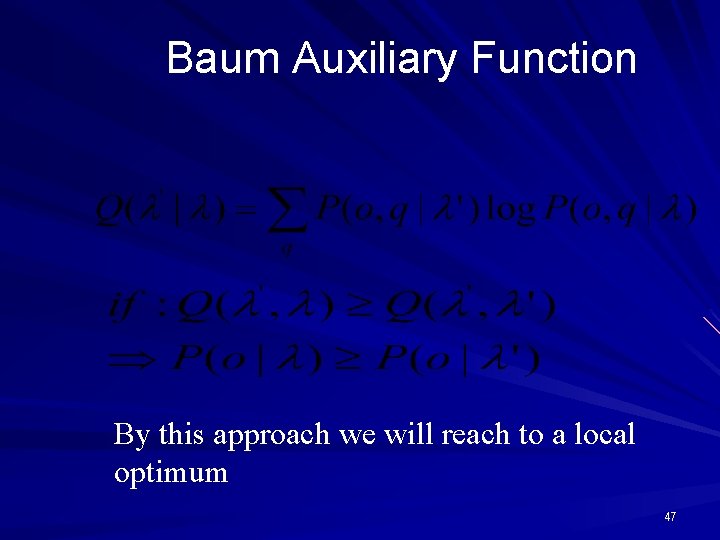

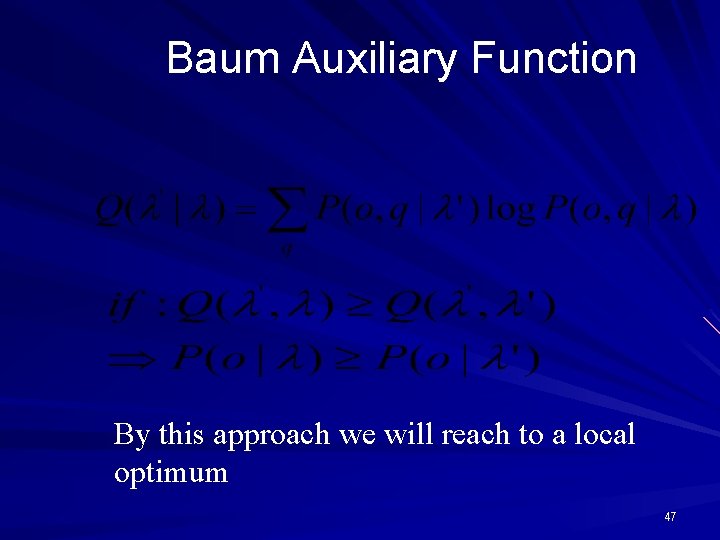
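One Baum-Welch iteration for a discrete HMM and a single observation sequence can be sketched in Python as follows. The sketch uses the standard reestimation formulas: $\bar\pi_i=\gamma_1(i)$, $\bar a_{ij}=\sum_{t=1}^{T-1}\xi_t(i,j)\big/\sum_{t=1}^{T-1}\gamma_t(i)$, and $\bar b_j(k)=\sum_{t:\,O_t=v_k}\gamma_t(j)\big/\sum_{t=1}^{T}\gamma_t(j)$. The starting model and the observation sequence are hypothetical. The step returns the likelihood of the *old* model, and the sequence of returned likelihoods should never decrease.

```python
def forward(pi, A, B, O):
    N = len(pi)
    alpha = [[pi[i] * B[i][O[0]] for i in range(N)]]
    for t in range(1, len(O)):
        alpha.append([sum(alpha[-1][i] * A[i][j] for i in range(N)) * B[j][O[t]]
                      for j in range(N)])
    return alpha


def backward(A, B, O):
    N = len(A)
    beta = [[1.0] * N]
    for t in range(len(O) - 2, -1, -1):
        beta.insert(0, [sum(A[i][j] * B[j][O[t + 1]] * beta[0][j] for j in range(N))
                        for i in range(N)])
    return beta


def baum_welch_step(pi, A, B, O):
    """Return reestimated (pi, A, B) and P(O | old lambda)."""
    N, M, T = len(pi), len(B[0]), len(O)
    alpha, beta = forward(pi, A, B, O), backward(A, B, O)
    P = sum(alpha[-1])
    gamma = [[alpha[t][i] * beta[t][i] / P for i in range(N)] for t in range(T)]
    xi = [[[alpha[t][i] * A[i][j] * B[j][O[t + 1]] * beta[t + 1][j] / P
            for j in range(N)] for i in range(N)] for t in range(T - 1)]
    new_pi = gamma[0][:]
    # Expected i -> j transitions / expected transitions out of i.
    new_A = [[sum(xi[t][i][j] for t in range(T - 1)) /
              sum(gamma[t][i] for t in range(T - 1)) for j in range(N)]
             for i in range(N)]
    # Expected times in j emitting symbol k / expected times in j.
    new_B = [[sum(gamma[t][j] for t in range(T) if O[t] == k) /
              sum(gamma[t][j] for t in range(T)) for k in range(M)]
             for j in range(N)]
    return new_pi, new_A, new_B, P


pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
O = [0, 0, 1, 1, 0, 1]
likelihoods = []
for _ in range(5):
    pi, A, B, p_old = baum_welch_step(pi, A, B, O)
    likelihoods.append(p_old)
```

As the slides note, the iteration converges only to a local optimum, so the result depends on the starting model.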

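Baum Auxiliary Function: $Q(\lambda,\bar\lambda)=\sum_{Q}P(Q\mid O,\lambda)\log P(O,Q\mid\bar\lambda)$. Maximizing it over $\bar\lambda$ never decreases $P(O\mid\lambda)$, and repeating the procedure reaches a local optimum.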

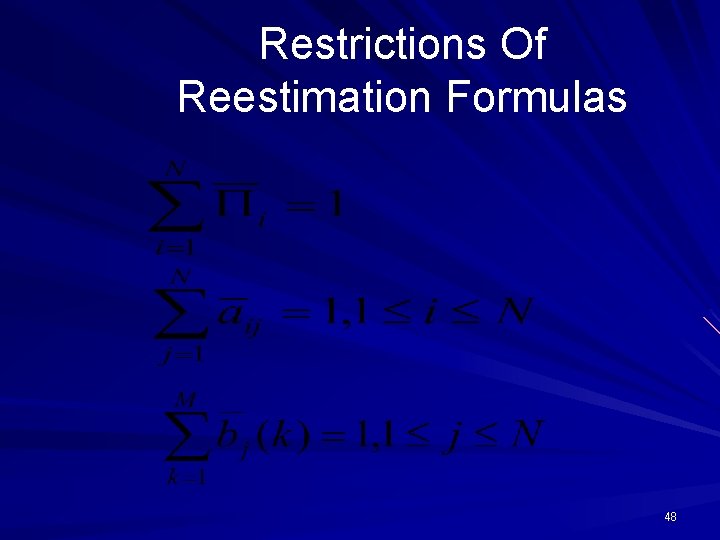

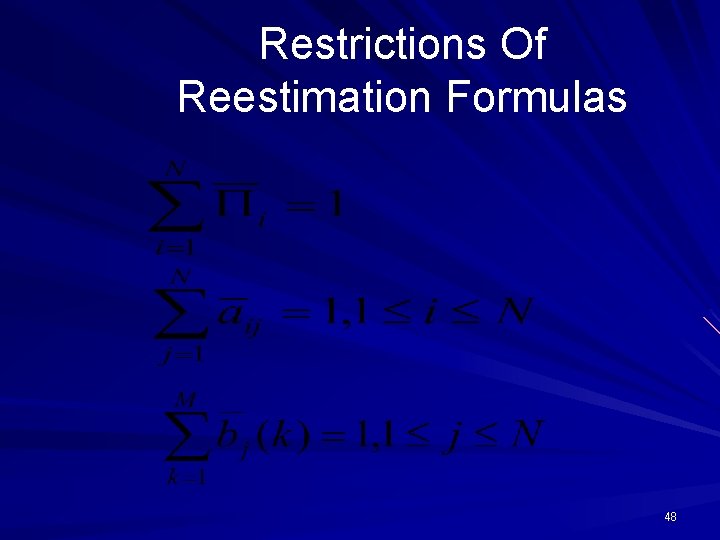

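Restrictions of the Reestimation Formulas: the reestimated parameters remain valid probabilities, $\sum_{i=1}^{N}\bar\pi_i=1$, $\sum_{j=1}^{N}\bar a_{ij}=1$, and $\sum_{k=1}^{M}\bar b_j(k)=1$.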

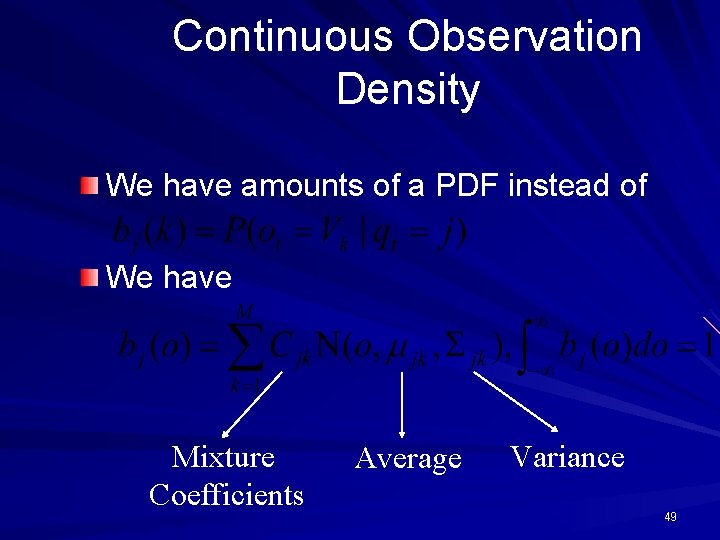

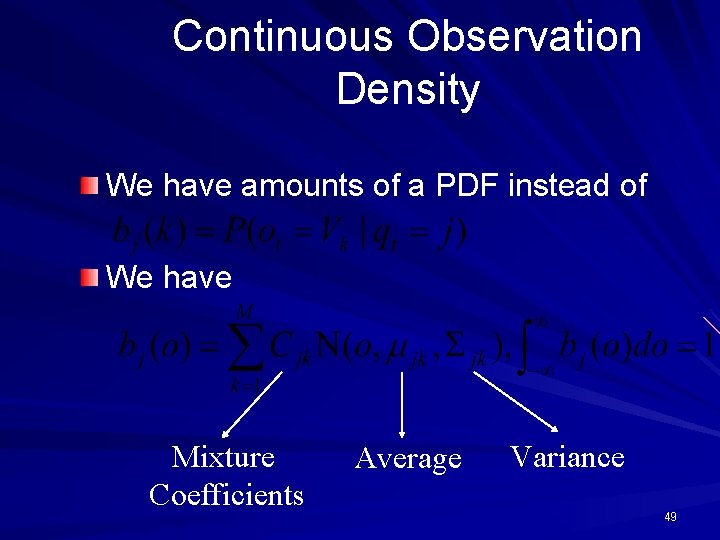

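Continuous Observation Density: instead of the discrete probabilities $b_j(k)$, we have values of a PDF, $b_j(O)=\sum_{m=1}^{M}c_{jm}\,\mathcal{N}(O;\mu_{jm},U_{jm})$, $1\le j\le N$. The parameters are the mixture coefficients $c_{jm}$ (with $\sum_{m}c_{jm}=1$), the means (averages) $\mu_{jm}$, and the variances (covariance matrices) $U_{jm}$.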

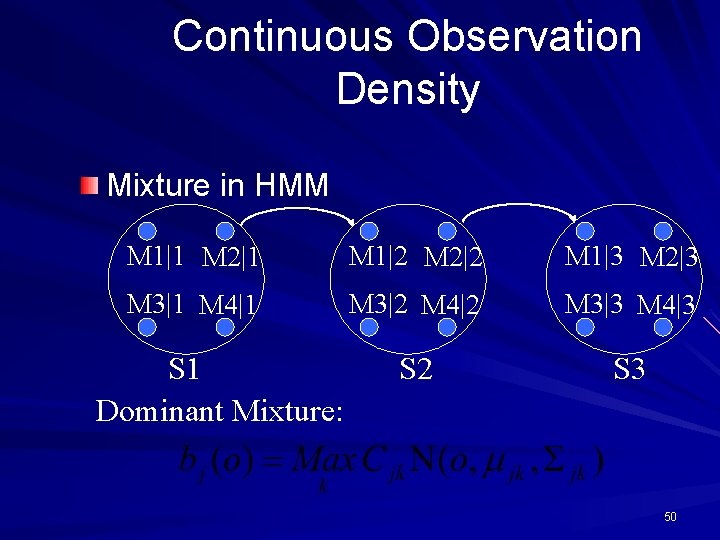

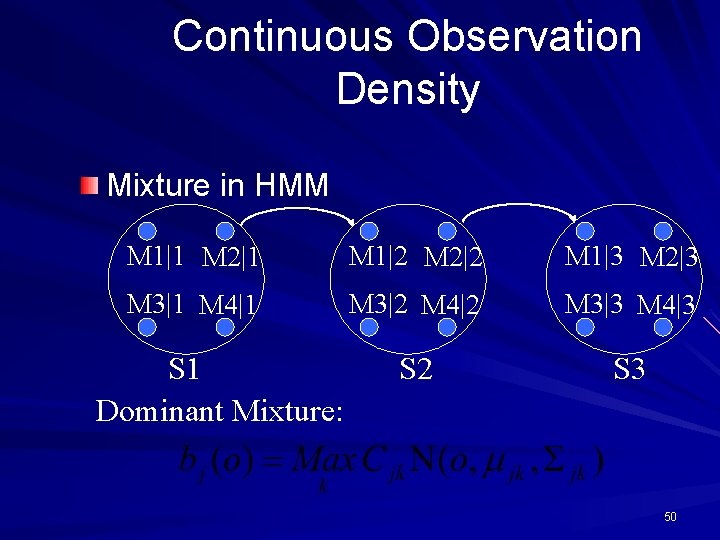

Continuous Observation Density: mixture in HMM (figure: states $S_1$, $S_2$, $S_3$, each with mixture components $M_{1|j}$ to $M_{4|j}$). Dominant mixture:

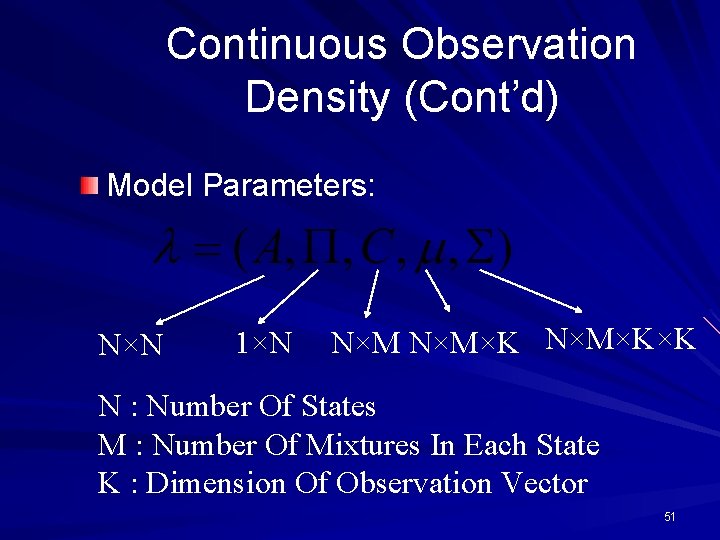

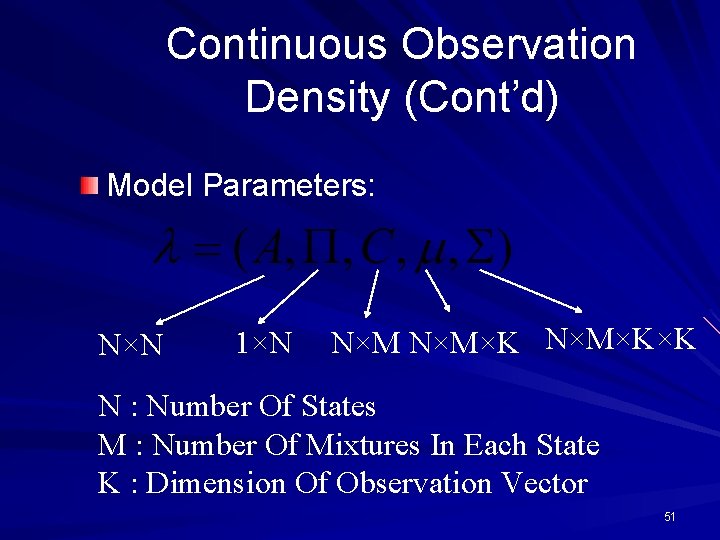

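Continuous Observation Density (Cont’d): model parameters include $A$ ($N\times N$), $\pi$ ($1\times N$), and the covariances $U$ ($N\times M\times K\times K$), where $N$ is the number of states, $M$ the number of mixtures in each state, and $K$ the dimension of the observation vector.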

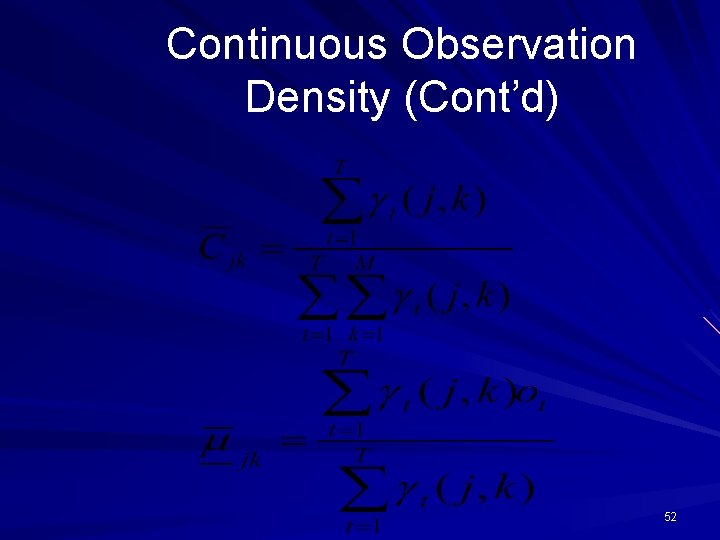

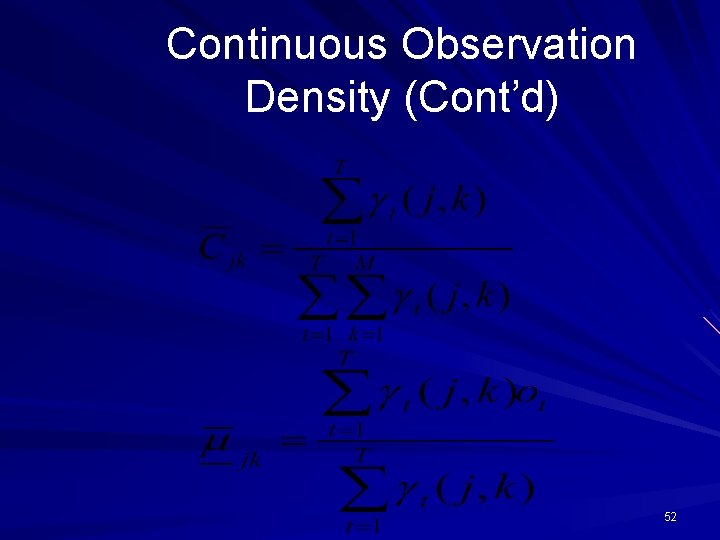

Continuous Observation Density (Cont’d)

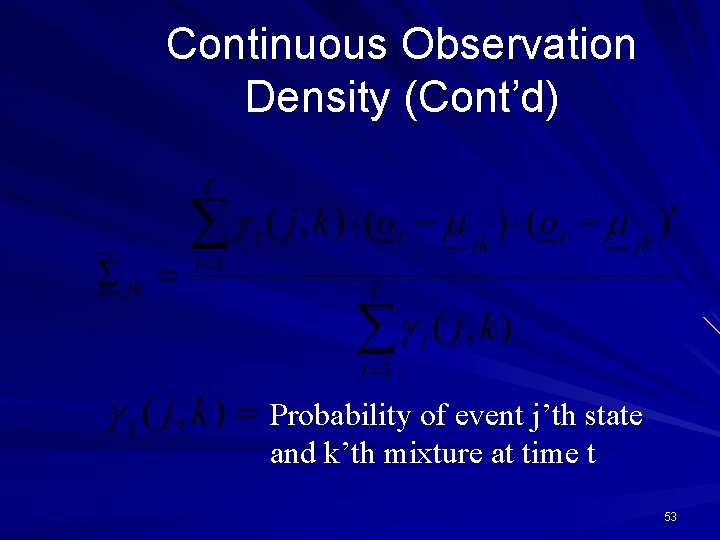

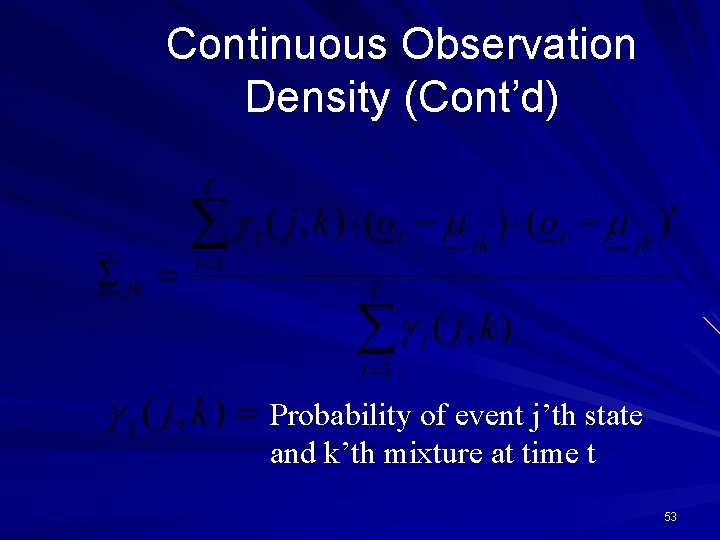
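A state's continuous output density is a weighted sum of Gaussians, $b_j(O)=\sum_m c_{jm}\,\mathcal{N}(O;\mu_{jm},U_{jm})$. To keep the Python sketch short, it assumes *diagonal* covariance matrices, a common simplification. The slides' $N\times M\times K\times K$ parameter count corresponds to full covariances. The mixture weights, means, and variances in the example are hypothetical.

```python
import math


def gaussian_diag(o, mean, var):
    """K-dimensional normal density with diagonal covariance (var = diagonal)."""
    K = len(o)
    log_det = sum(math.log(v) for v in var)
    mahalanobis = sum((x - m) ** 2 / v for x, m, v in zip(o, mean, var))
    return math.exp(-0.5 * (K * math.log(2 * math.pi) + log_det + mahalanobis))


def mixture_density(o, weights, means, variances):
    """b_j(o) = sum_m c_jm * N(o; mu_jm, U_jm) for one state j."""
    return sum(c * gaussian_diag(o, mu, var)
               for c, mu, var in zip(weights, means, variances))


# Hypothetical 2-component, 2-dimensional mixture for one state.
b = mixture_density([0.5, -0.2],
                    weights=[0.3, 0.7],
                    means=[[0.0, 0.0], [1.0, -1.0]],
                    variances=[[1.0, 1.0], [0.5, 2.0]])
```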

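Continuous Observation Density (Cont’d): the probability of being in state $j$ at time $t$ with the $k$-th mixture component accounting for $O_t$ is $\gamma_t(j,k)=\left[\dfrac{\alpha_t(j)\,\beta_t(j)}{\sum_{i=1}^{N}\alpha_t(i)\,\beta_t(i)}\right]\left[\dfrac{c_{jk}\,\mathcal{N}(O_t;\mu_{jk},U_{jk})}{\sum_{m=1}^{M}c_{jm}\,\mathcal{N}(O_t;\mu_{jm},U_{jm})}\right]$.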

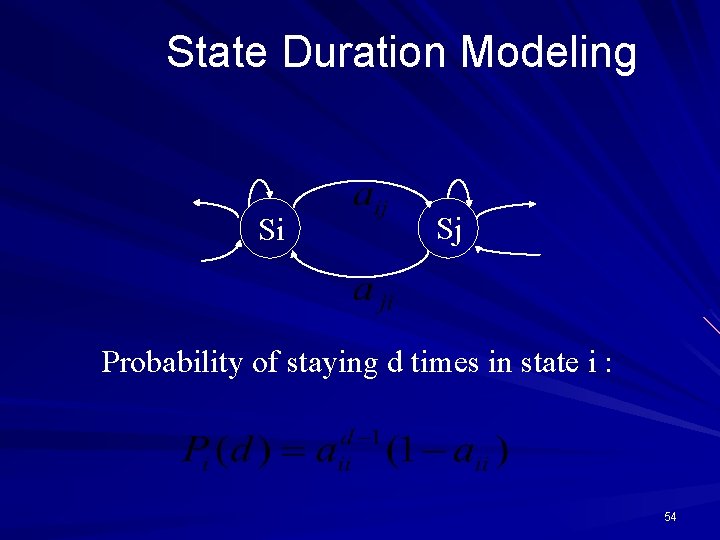

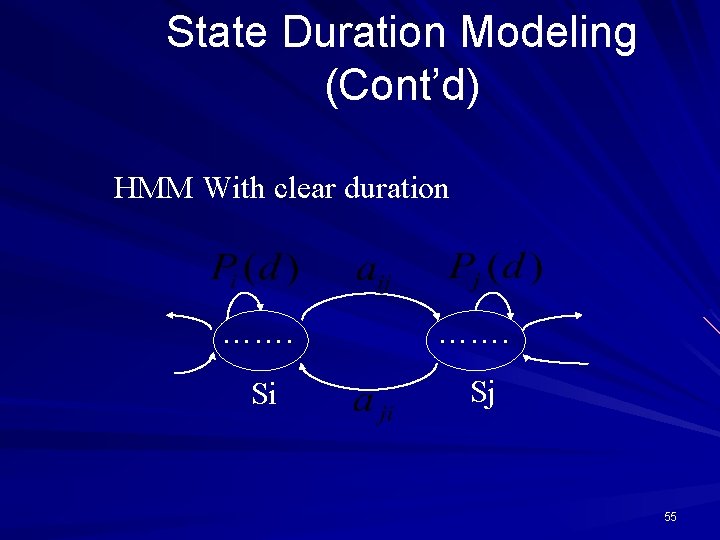

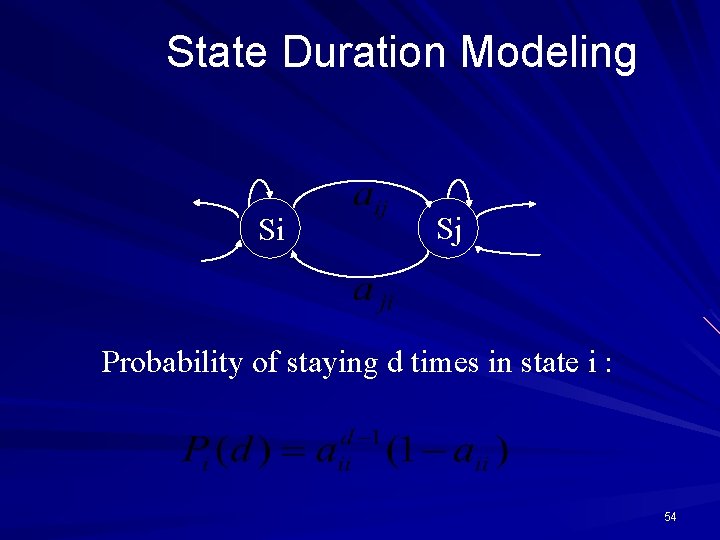

State Duration Modeling (figure: states $S_i$ and $S_j$): the probability of staying exactly $d$ time steps in state $i$ is $p_i(d)=(a_{ii})^{d-1}(1-a_{ii})$.

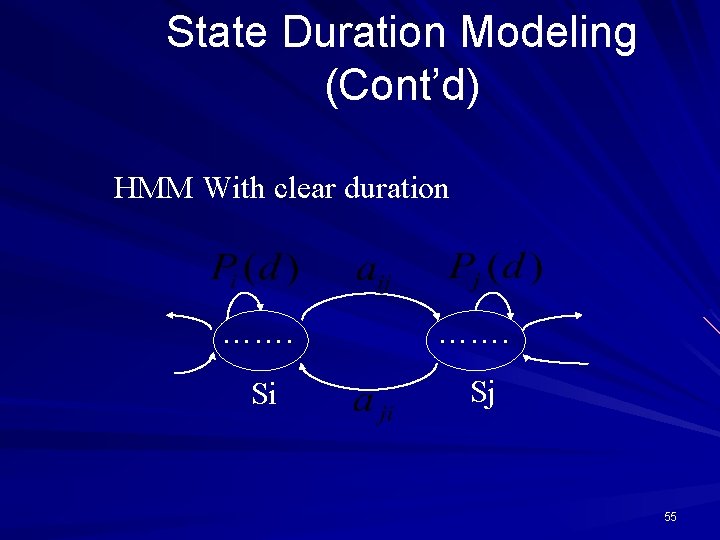

State Duration Modeling (Cont’d): HMM with explicit state duration (figure: states $S_i$ and $S_j$).

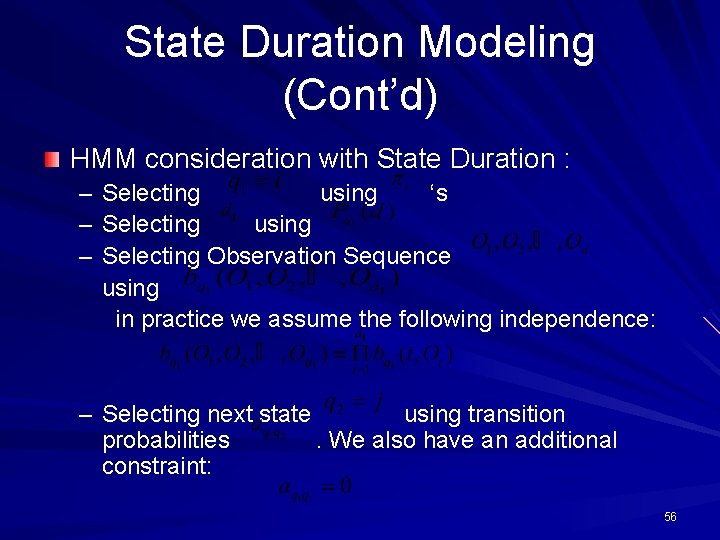

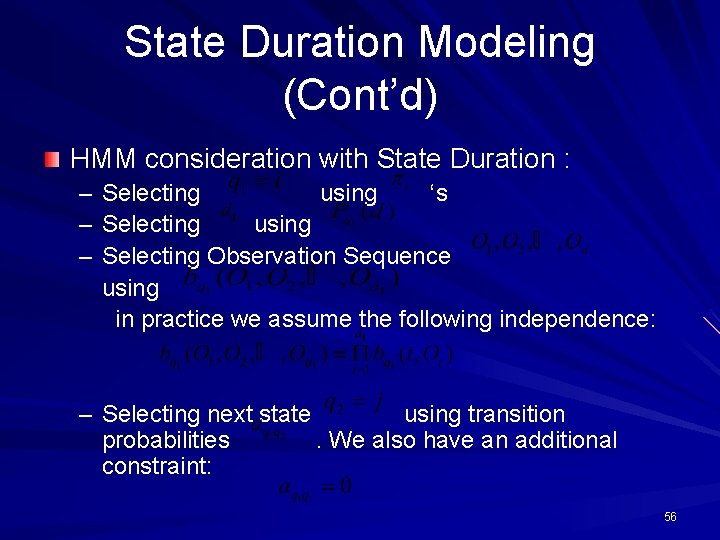

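State Duration Modeling (Cont’d): generating observations from an HMM with explicit state duration:
- Select the initial state $q_1=S_i$ using the $\pi_i$'s.
- Select the duration $d_1$ using $p_{q_1}(d_1)$.
- Select the observation sequence $O_1O_2\cdots O_{d_1}$ using $b_{q_1}(O_1O_2\cdots O_{d_1})$. In practice we assume the independence $b_{q_1}(O_1O_2\cdots O_{d_1})=\prod_{t=1}^{d_1}b_{q_1}(O_t)$.
- Select the next state $q_2=S_j$ using the transition probabilities $a_{q_1q_2}$. We also have an additional constraint: $a_{q_1q_1}=0$, i.e., no self-transitions.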

Training in HMM:
- Maximum Likelihood (ML)
- Maximum Mutual Information (MMI)
- Minimum Discrimination Information (MDI)

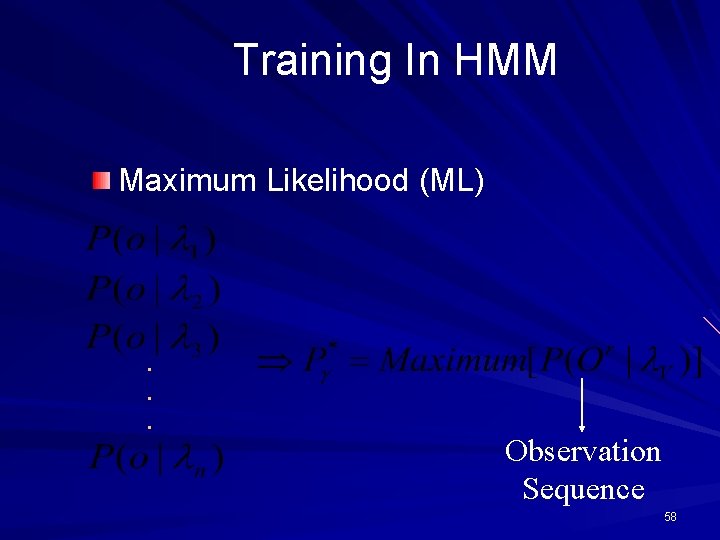

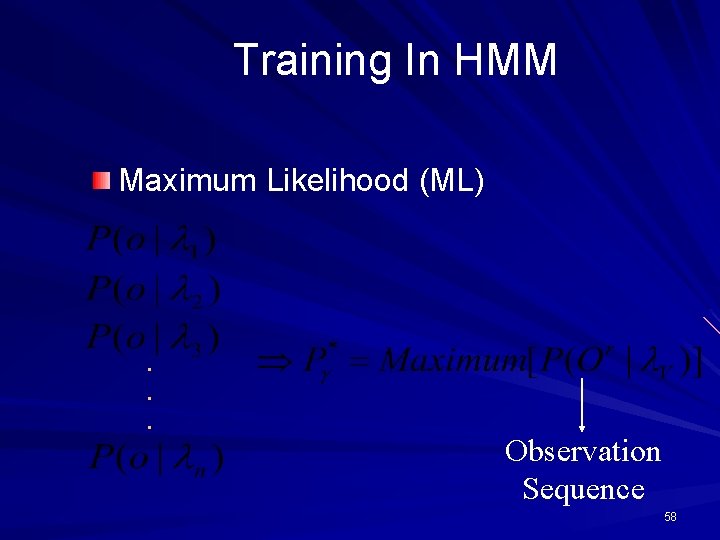

Training in HMM: Maximum Likelihood (ML) (figure: observation sequence).

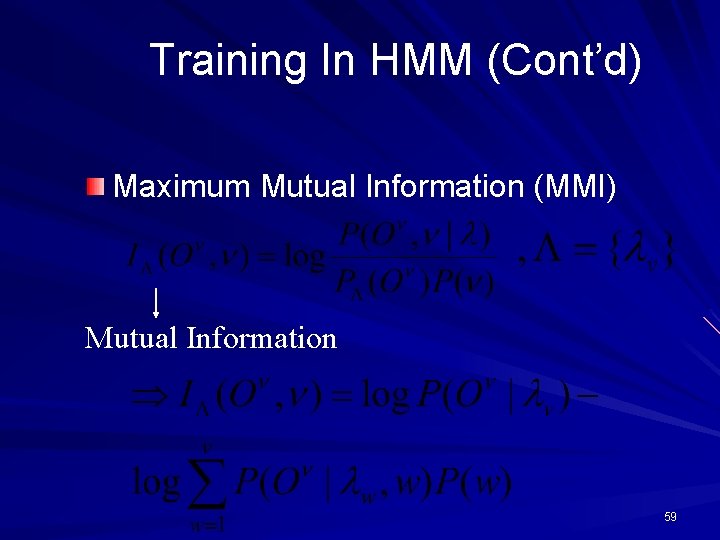

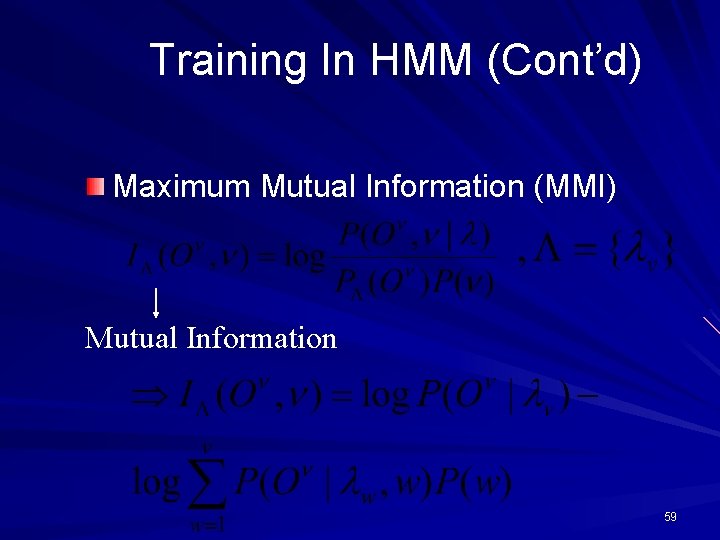

Training in HMM (Cont’d): Maximum Mutual Information (MMI). Mutual information:

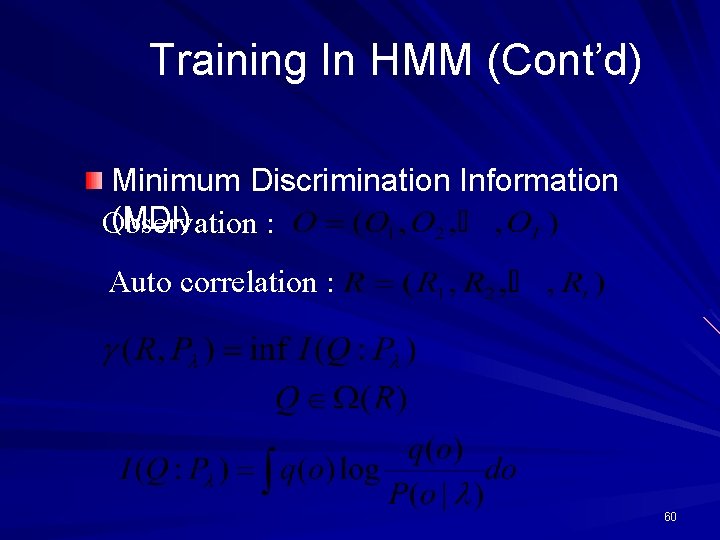

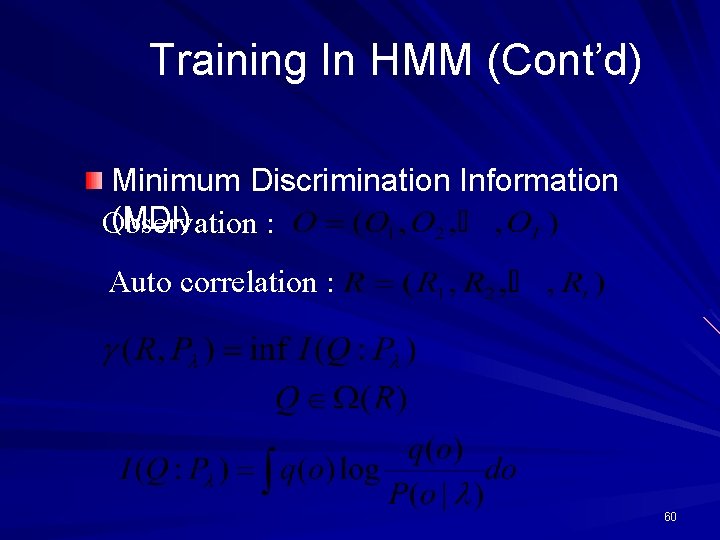

Training in HMM (Cont’d): Minimum Discrimination Information (MDI). Observation: ; autocorrelation: