6306 Advanced Operating Systems, Instructor: Dr. Mohan Kumar


6306 Advanced Operating Systems
- Instructor: Dr. Mohan Kumar
- Room: 315 NH
- Email: kumar@cse.uta.edu
- Class: TTh 7-8:20 PM
- Office Hours: TTh 1-3 PM
- GTA: Byung Sung (sung@cse.uta.edu)

Kumar CSE@UTA

Distributed Systems (R. Chow, Distributed Operating Systems)
- Provide a conceptually simple model
- Efficient, flexible, consistent, and robust
- Goals
  - Efficiency
    - Communication delays?
    - Scheduling, load balancing
  - Flexibility
    - Users decide how, where, and when to use the system
    - Environment for building additional tools and services
    - System's view: scalability, portability, and interoperability
  - Consistency
    - Lack of global information, potential replication and partitioning of data, component failures, and interaction among modules
  - Robustness

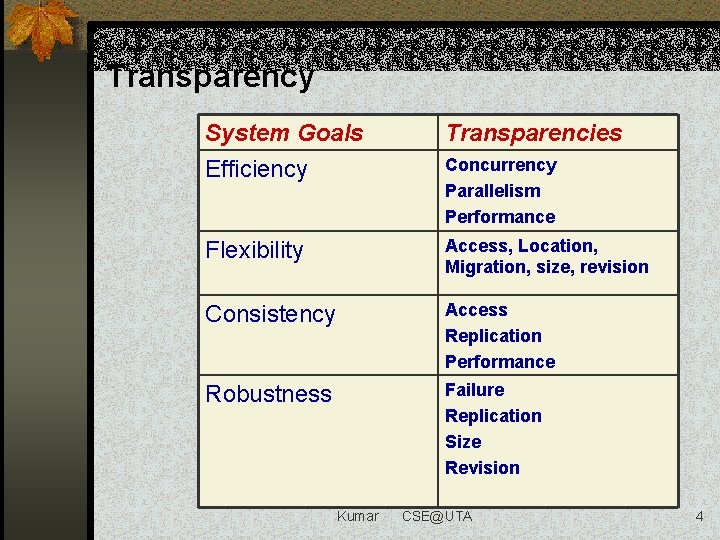

Transparency
- Logical view of a physical system
- Reduce the effect and awareness of the physical system
  - System-dependent information
  - Tradeoff between simplicity and effectiveness
- Access transparency: local and remote objects are accessed alike
- Location transparency: objects are referenced by logical names
- Migration transparency: location independence
- Concurrency transparency
- Replication transparency
- Parallelism, failure, performance, size (modularity and scalability), and revision (software) transparency

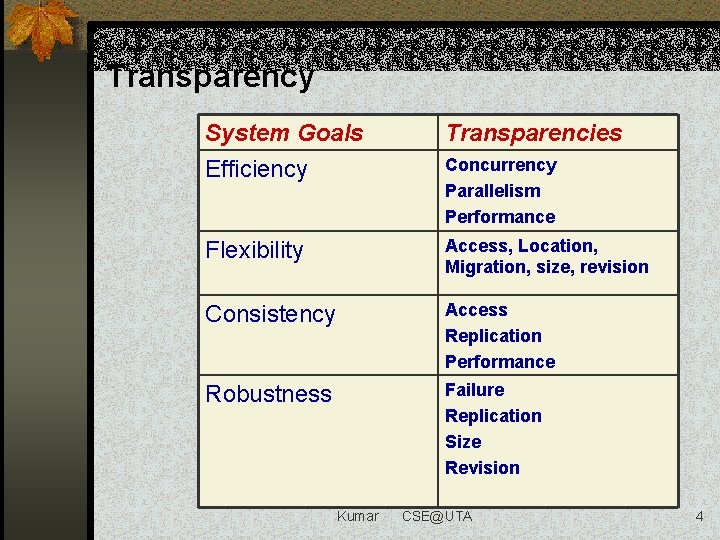

Transparency and System Goals

| System goal | Transparencies |
|---|---|
| Efficiency | Concurrency, Parallelism, Performance |
| Flexibility | Access, Location, Migration, Size, Revision |
| Consistency | Access, Replication, Performance |
| Robustness | Failure, Replication, Size, Revision |

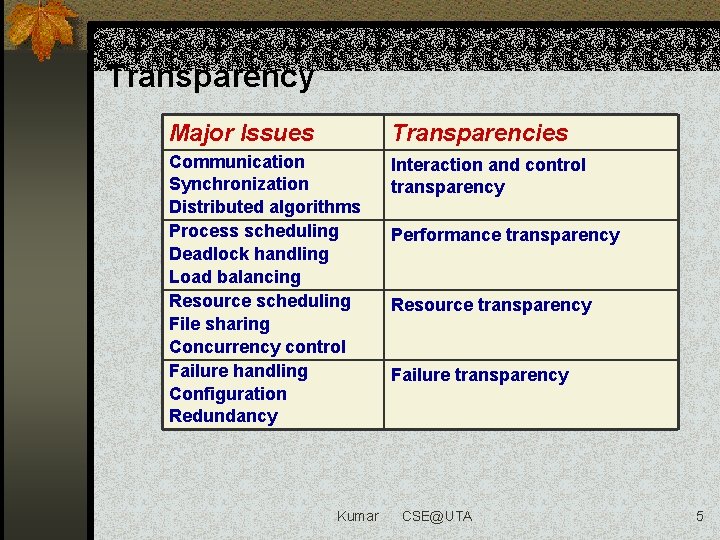

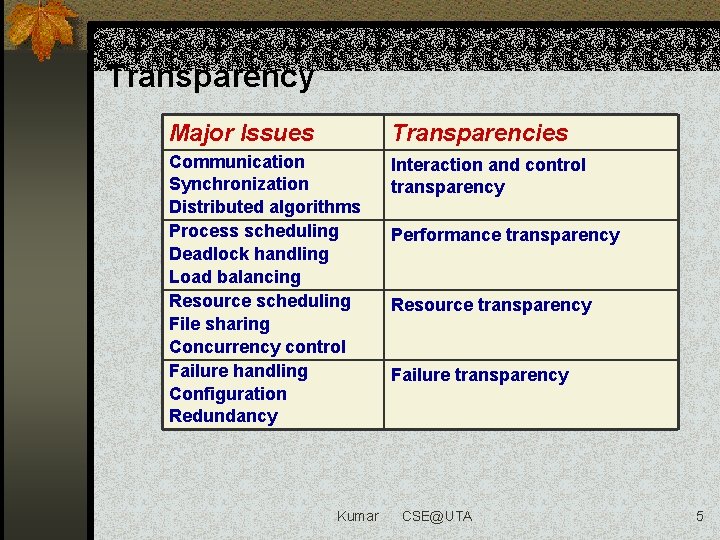

Transparency and Major Issues

| Transparency | Major issues |
|---|---|
| Interaction and control transparency | Communication, Synchronization, Distributed algorithms |
| Performance transparency | Process scheduling, Deadlock handling, Load balancing |
| Resource transparency | Resource scheduling, File sharing, Concurrency control |
| Failure transparency | Failure handling, Configuration, Redundancy |

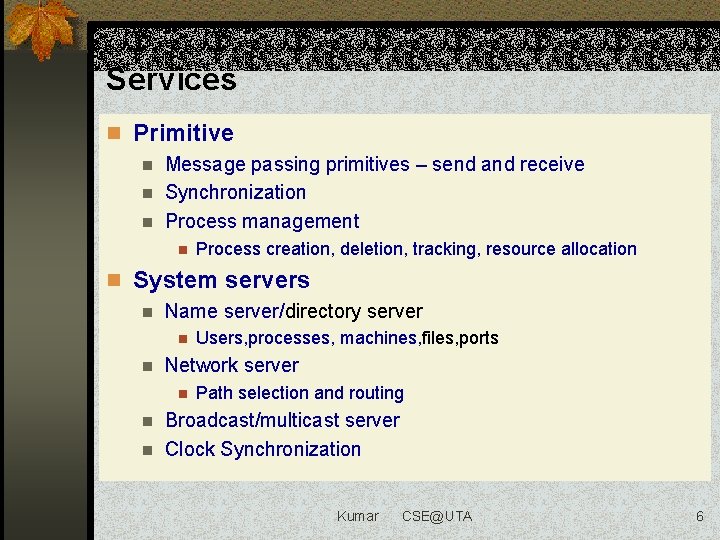

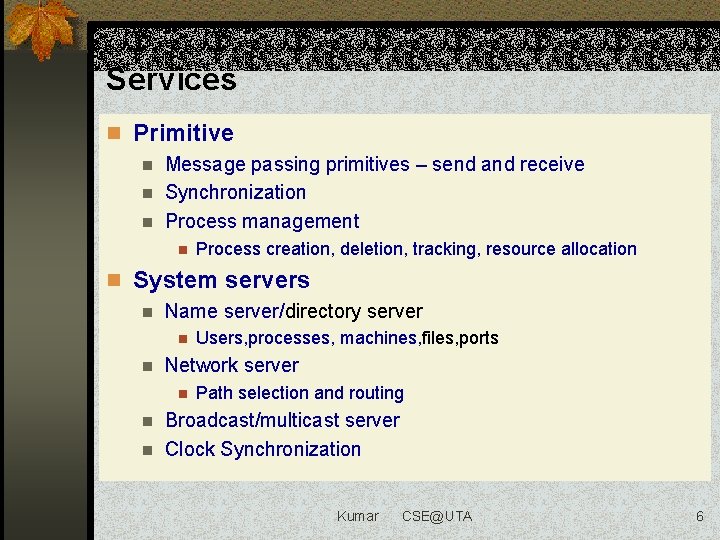

Services
- Primitive
  - Message-passing primitives: send and receive
  - Synchronization
- Process management
  - Process creation, deletion, tracking, resource allocation
- System servers
  - Name server/directory server: users, processes, machines, files, ports
  - Network server: path selection and routing
  - Broadcast/multicast server
  - Clock synchronization
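The send and receive primitives listed above can be illustrated with a minimal single-machine sketch. It assumes mailbox-style addressing (one FIFO queue per process, with the hypothetical names `client` and `server`), a non-blocking `send`, and a blocking `receive`; threads stand in for processes, so this shows only the shape of the interface, not a networked implementation.

```python
import queue
import threading

# Hypothetical mailboxes: one FIFO queue per (simulated) process.
mailboxes: dict[str, queue.Queue] = {"client": queue.Queue(), "server": queue.Queue()}

def send(dest: str, msg: object) -> None:
    """Non-blocking send: deposit the message in the destination's mailbox."""
    mailboxes[dest].put(msg)

def receive(me: str) -> object:
    """Blocking receive: wait until a message arrives in my own mailbox."""
    return mailboxes[me].get()

def server() -> None:
    request = receive("server")          # blocks until the client sends
    send("client", f"echo: {request}")

t = threading.Thread(target=server)
t.start()
send("server", "hello")
reply = receive("client")                # blocks until the server replies
t.join()
print(reply)  # echo: hello
```

A blocking `receive` paired with a non-blocking `send` is one common design choice; a synchronous (rendezvous) `send` would instead wait for the receiver.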

Architecture Models
- Workstation-server model
- Processor-pool model
- Communication network protocols

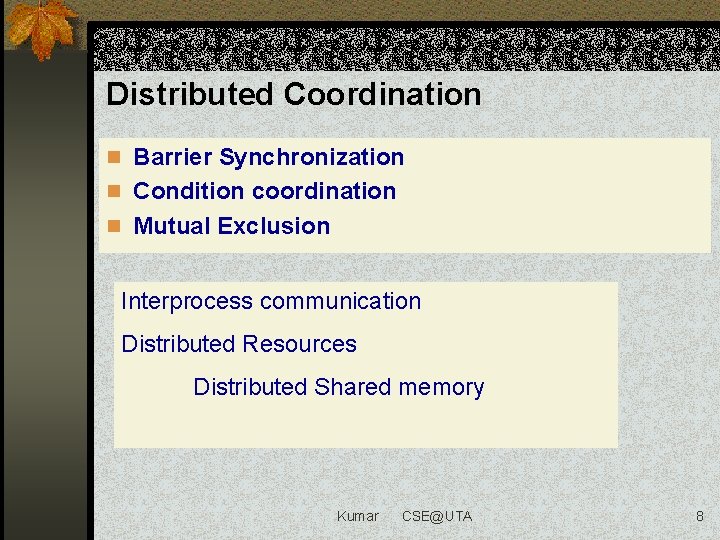

Distributed Coordination
- Barrier synchronization
- Condition coordination
- Mutual exclusion

(Diagram: interprocess communication, distributed resources, distributed shared memory)
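Barrier synchronization, the first item above, can be sketched with Python's `threading.Barrier`. This is a shared-memory analogue chosen for illustration (threads on one machine rather than distributed processes): no worker may start phase 2 until every worker has finished phase 1.

```python
import threading

N_WORKERS = 3
barrier = threading.Barrier(N_WORKERS)   # releases only when all N_WORKERS have arrived
log: list[str] = []
lock = threading.Lock()                  # protects the shared log

def worker(i: int) -> None:
    with lock:
        log.append(f"phase1-{i}")
    barrier.wait()                       # block until every worker reaches this point
    with lock:
        log.append(f"phase2-{i}")

threads = [threading.Thread(target=worker, args=(i,)) for i in range(N_WORKERS)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# Every phase-1 entry precedes every phase-2 entry, whatever the thread interleaving.
print(all(e.startswith("phase1") for e in log[:N_WORKERS]))  # True
```

A distributed barrier needs the same guarantee, but the "all arrived" condition must be established by messages (for example, through a coordinator) instead of shared memory.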

Two-Processor Model (Indurkhya and H. Stone, IEEE Software, 1997)

Consider the execution of an application program that contains M tasks on a two-processor (two-PE) system. We make the following assumptions to simplify the analysis:
1. Each task executes in R units of time; all tasks are of equal complexity.
2. Each task communicates with every other task, at an overhead cost of C units of time when the communicating tasks are on different PEs and at zero cost when they are co-resident.
3. Computation and communication do not overlap.

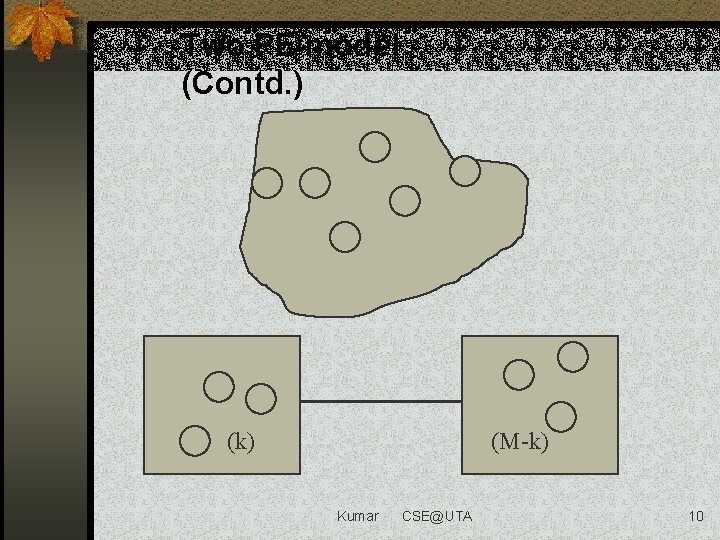

Two PE model (Contd.)

[Figure: k tasks on one PE, M-k tasks on the other]

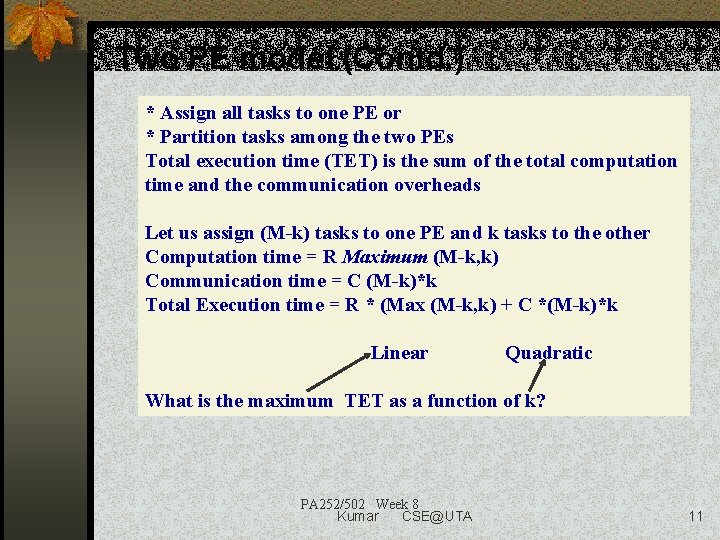

Two PE model (Contd.)
- Assign all tasks to one PE, or
- Partition the tasks between the two PEs

Total execution time (TET) is the sum of the total computation time and the communication overhead. Assign M - k tasks to one PE and k tasks to the other:

$$\text{Computation time} = R \cdot \max(M-k,\; k) \qquad \text{(linear in } k\text{)}$$

$$\text{Communication time} = C\,(M-k)\,k \qquad \text{(quadratic in } k\text{)}$$

$$\text{TET} = R \cdot \max(M-k,\; k) + C\,(M-k)\,k$$

What is the maximum TET as a function of k?

PA 252/502 Week 8
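The TET formula can be evaluated directly for every k. The sketch below uses illustrative values chosen here (M = 50, R = 40, C = 1, so R/C = 40) and integer arithmetic so the results are exact. For k ≤ M/2 the formula factors as (M - k)(R + Ck), a downward parabola whose vertex is at k = (M - R/C)/2, which is one way to answer the slide's question about the maximum.

```python
def tet_two_pe(M: int, k: int, R: float, C: float) -> float:
    """TET with k tasks on one PE and M - k on the other (non-overlapped model)."""
    computation = R * max(M - k, k)   # the more heavily loaded PE sets the pace (linear term)
    communication = C * (M - k) * k   # each of the (M - k) * k cross-PE pairs costs C (quadratic term)
    return computation + communication

# Illustrative values, chosen here: M = 50 tasks, R = 40, C = 1 (so R/C = 40).
M, R, C = 50, 40, 1
times = {k: tet_two_pe(M, k, R, C) for k in range(M + 1)}
k_min = min(times, key=times.get)
k_max = max(times, key=times.get)     # first maximizer; k = 45 ties by symmetry
print(k_min, times[k_min], k_max, times[k_max])  # 25 1625 5 2025
```

With these values the vertex formula gives k = (50 - 40)/2 = 5, matching the brute-force maximum, while the minimum sits at the equal split k = M/2.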

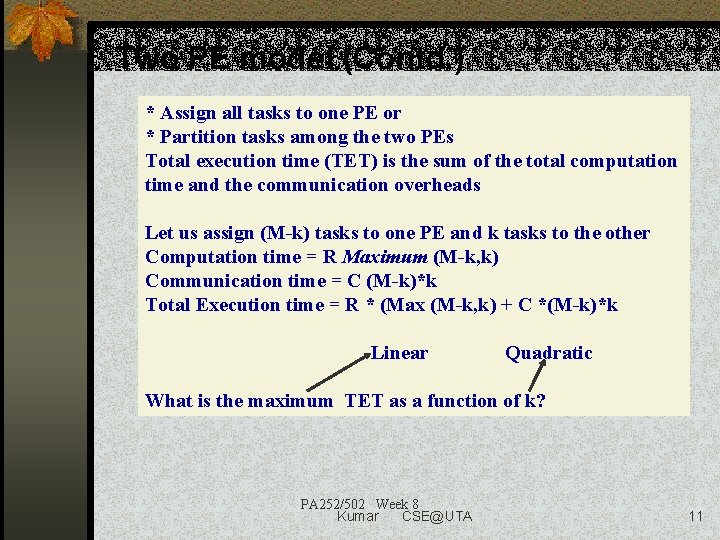

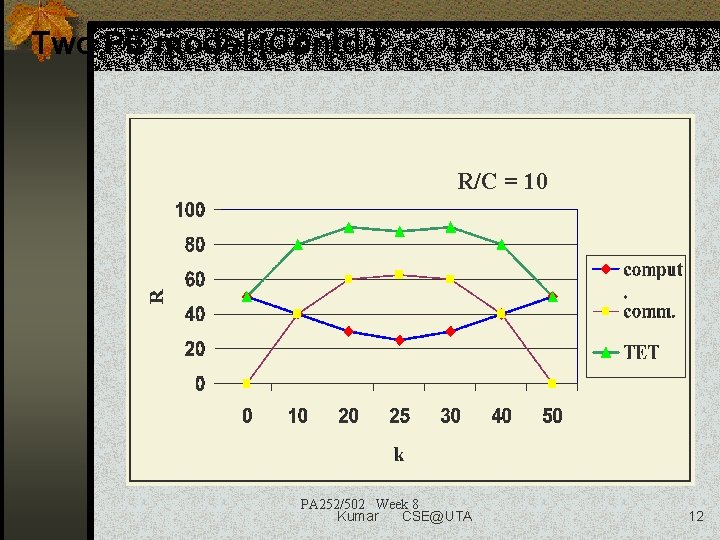

Two PE model (Contd.) R/C = 10

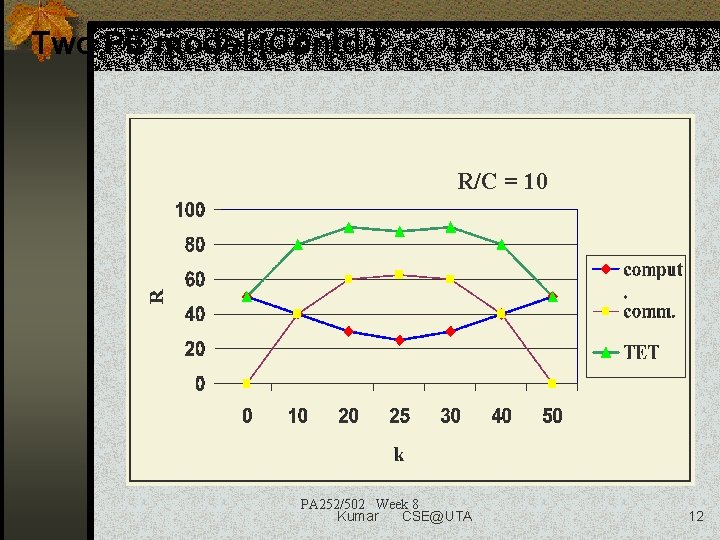

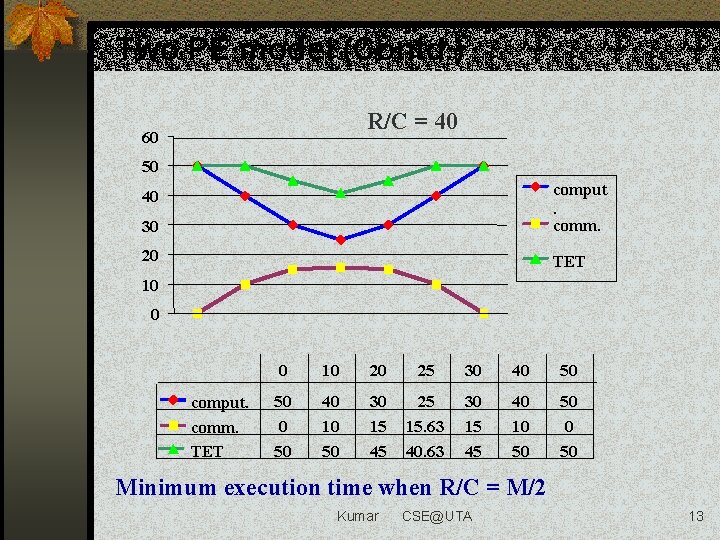

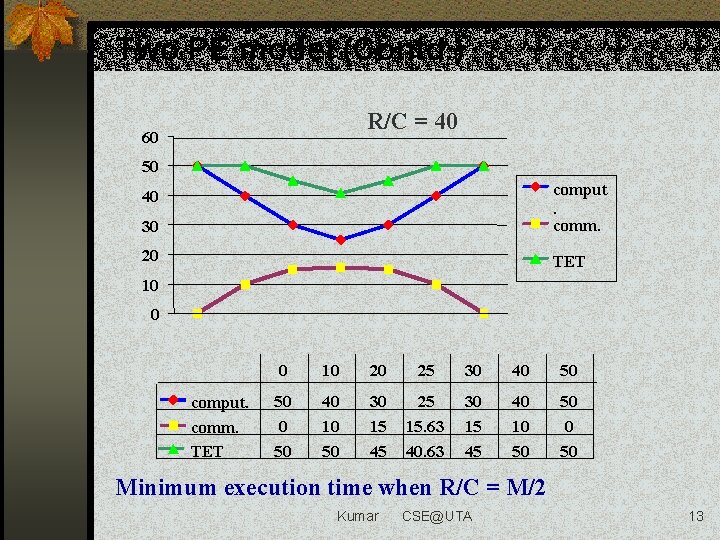

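Two PE model (Contd.) R/C = 40 (M = 50, R = 1)

| k | Computation | Communication | TET |
|---|---|---|---|
| 0 | 50 | 0 | 50 |
| 10 | 40 | 10 | 50 |
| 20 | 30 | 15 | 45 |
| 25 | 25 | 15.63 | 40.63 |
| 30 | 30 | 15 | 45 |
| 40 | 40 | 10 | 50 |
| 50 | 50 | 0 | 50 |

[Plot: computation, communication, and TET versus k]

Since R/C = 40 > M/2 = 25, the minimum execution time (40.63) occurs at the equal split k = M/2 = 25.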

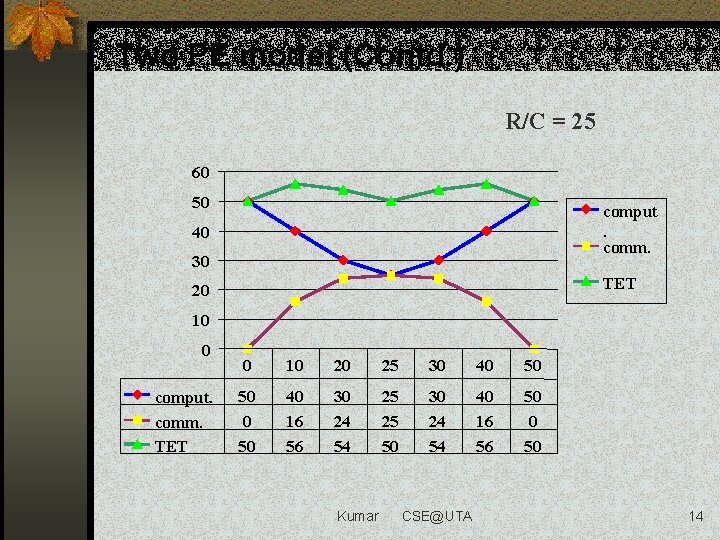

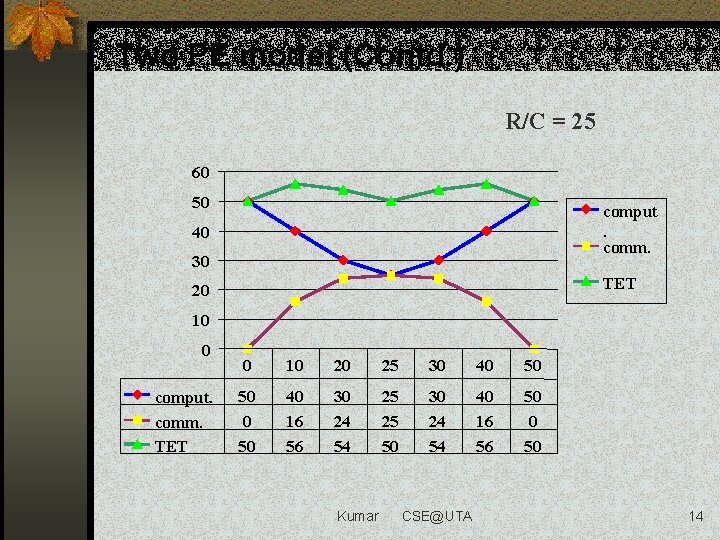

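Two PE model (Contd.) R/C = 25 (M = 50, R = 1)

| k | Computation | Communication | TET |
|---|---|---|---|
| 0 | 50 | 0 | 50 |
| 10 | 40 | 16 | 56 |
| 20 | 30 | 24 | 54 |
| 25 | 25 | 25 | 50 |
| 30 | 30 | 24 | 54 |
| 40 | 40 | 16 | 56 |
| 50 | 50 | 0 | 50 |

[Plot: computation, communication, and TET versus k]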
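The R/C = 40 and R/C = 25 tables can be regenerated from the TET formula. This sketch assumes the normalization implied by the tables (M = 50, R = 1, C = R/(R/C)) and uses exact `Fraction` arithmetic, so 15.625 and 40.625 print unrounded (the tables show them rounded to 15.63 and 40.63).

```python
from fractions import Fraction

def two_pe_table(M: int, r_over_c: int, ks: list[int]) -> list[tuple[int, float, float, float]]:
    """Rows of (k, computation, communication, TET) for the two-PE model."""
    R = Fraction(1)                  # normalize R = 1 time unit, as in the tables
    C = R / r_over_c                 # so that R / C equals the chosen ratio
    rows = []
    for k in ks:
        comp = R * max(M - k, k)
        comm = C * (M - k) * k
        rows.append((k, float(comp), float(comm), float(comp + comm)))
    return rows

ks = [0, 10, 20, 25, 30, 40, 50]
for ratio in (40, 25):
    print(f"R/C = {ratio}")
    for k, comp, comm, tet in two_pe_table(50, ratio, ks):
        print(f"  k={k:2d}  comput={comp:g}  comm={comm:g}  TET={tet:g}")
```

Calling `two_pe_table(50, 10, ks)` evaluates the same model for the R/C = 10 slide.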

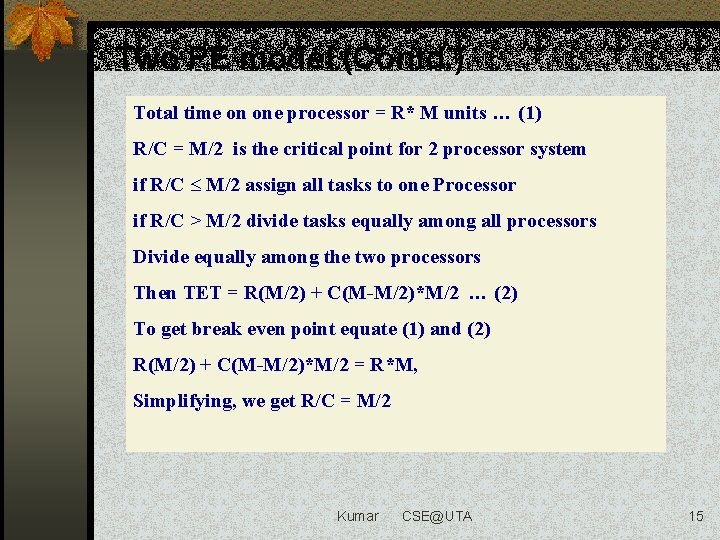

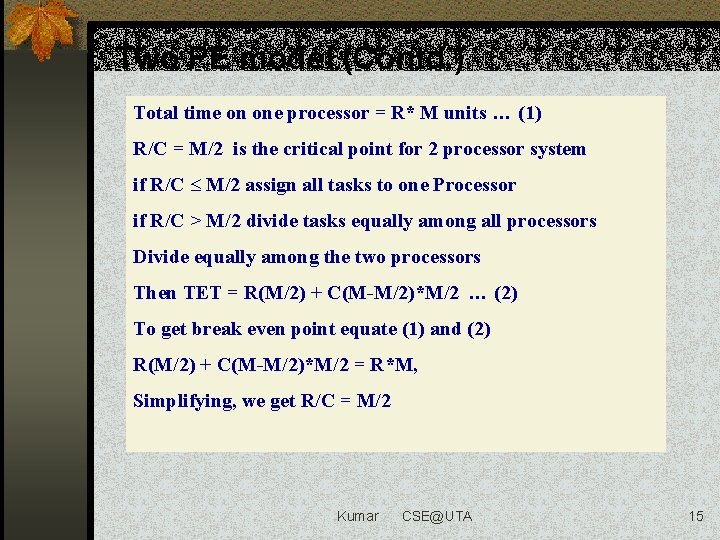

Two PE model (Contd.)

Total time on one processor:

$$T_1 = R\,M \qquad (1)$$

R/C = M/2 is the critical point for a two-processor system:
- if R/C < M/2, assign all tasks to one processor
- if R/C > M/2, divide the tasks equally between the two processors

Dividing the tasks equally between the two processors gives

$$\text{TET} = R\,\frac{M}{2} + C\left(M - \frac{M}{2}\right)\frac{M}{2} = \frac{RM}{2} + \frac{CM^2}{4} \qquad (2)$$

To get the break-even point, equate (1) and (2):

$$\frac{RM}{2} + \frac{CM^2}{4} = RM \;\Longrightarrow\; \frac{CM^2}{4} = \frac{RM}{2} \;\Longrightarrow\; \frac{R}{C} = \frac{M}{2}$$
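The break-even rule can be checked by comparing equations (1) and (2) on either side of R/C = M/2. This is a sketch with illustrative values (M = 50, R = 1); exact `Fraction` arithmetic keeps the tie at R/C = 25 exact instead of depending on floating-point rounding.

```python
from fractions import Fraction

def tet_one_pe(M: int, R: Fraction) -> Fraction:
    return R * M                                   # equation (1)

def tet_equal_split(M: int, R: Fraction, C: Fraction) -> Fraction:
    half = Fraction(M, 2)
    return R * half + C * (M - half) * half        # equation (2)

M, R = 50, Fraction(1)                             # illustrative: M = 50, R = 1
verdicts = {}
for r_over_c in (10, 25, 40):                      # below, at, and above M/2 = 25
    C = R / r_over_c
    one, split = tet_one_pe(M, R), tet_equal_split(M, R, C)
    if split < one:
        verdicts[r_over_c] = "divide equally"
    elif split == one:
        verdicts[r_over_c] = "break-even"
    else:
        verdicts[r_over_c] = "one processor"
print(verdicts)  # {10: 'one processor', 25: 'break-even', 40: 'divide equally'}
```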

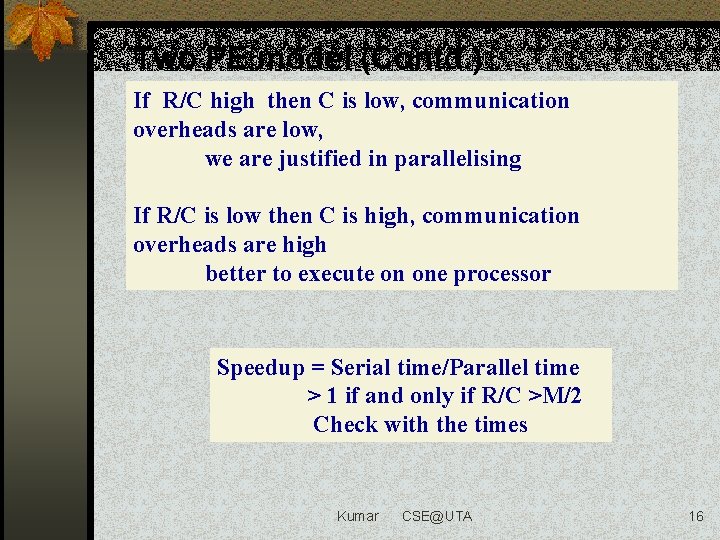

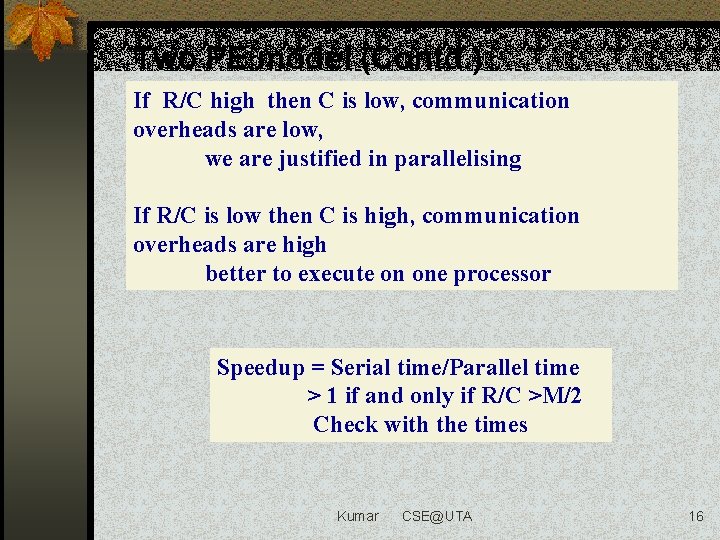

Two PE model (Contd.)
- If R/C is high, C is relatively low: communication overheads are low and we are justified in parallelising.
- If R/C is low, C is relatively high: communication overheads are high and it is better to execute on one processor.
- Speedup = serial time / parallel time > 1 if and only if R/C > M/2. Check this against the times in the tables.
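Dividing (1) by (2) gives the equal-split speedup in closed form, RM / (RM/2 + CM²/4) = 2 / (1 + (M/2)/(R/C)), which exceeds 1 exactly when R/C > M/2 and can never exceed 2. The sketch below checks both forms numerically; R is normalized to 1 and the sample ratios are chosen here for illustration (24 and 26 bracket the critical value M/2 = 25).

```python
def speedup_two_pe(M: int, r_over_c: float) -> float:
    """Serial time / equal-split parallel time, with R normalized to 1."""
    R = 1.0
    C = R / r_over_c
    serial = R * M                              # equation (1)
    parallel = R * M / 2 + C * (M / 2) ** 2     # equation (2)
    return serial / parallel

def speedup_closed_form(M: int, r_over_c: float) -> float:
    """The same quantity written as 2 / (1 + (M/2) / (R/C))."""
    return 2 / (1 + (M / 2) / r_over_c)

M = 50
for r_over_c in (10, 24, 26, 40, 1000):
    s = speedup_two_pe(M, r_over_c)
    print(f"R/C = {r_over_c:4}: speedup = {s:.3f}, parallel wins: {s > 1}")
```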

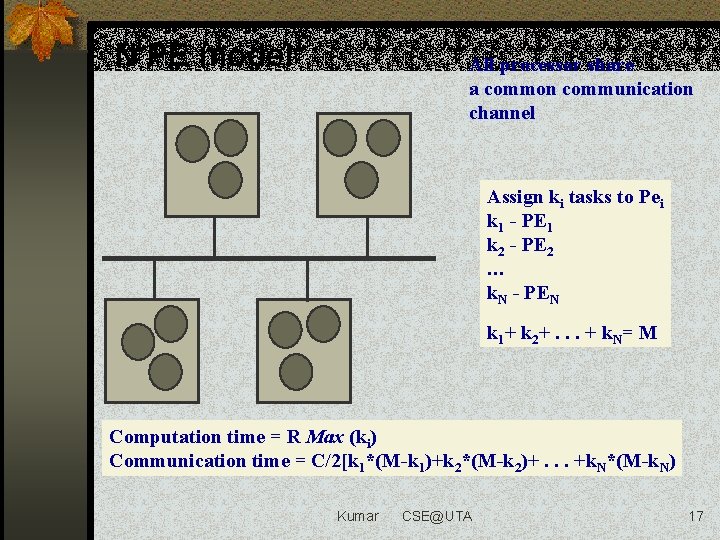

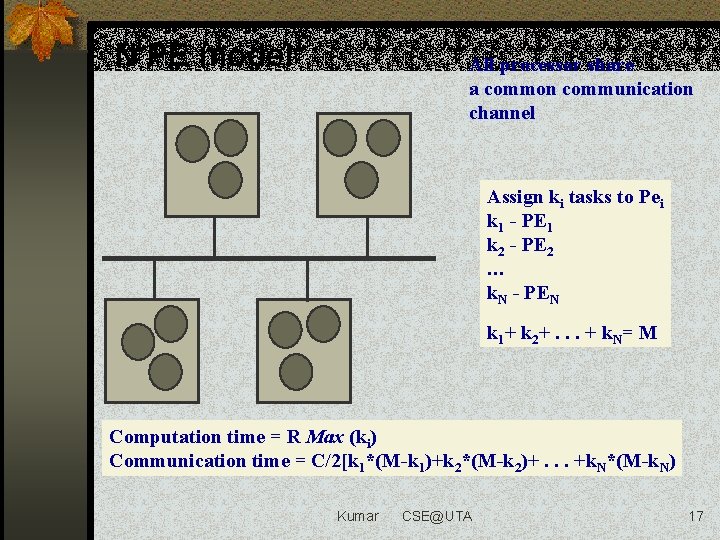

N PE model

All processors share a common communication channel. Assign k_i tasks to PE_i:
- k_1 tasks to PE_1, k_2 tasks to PE_2, ..., k_N tasks to PE_N, with $k_1 + k_2 + \dots + k_N = M$

$$\text{Computation time} = R \cdot \max_i k_i$$

$$\text{Communication time} = \frac{C}{2}\left[k_1(M-k_1) + k_2(M-k_2) + \dots + k_N(M-k_N)\right]$$

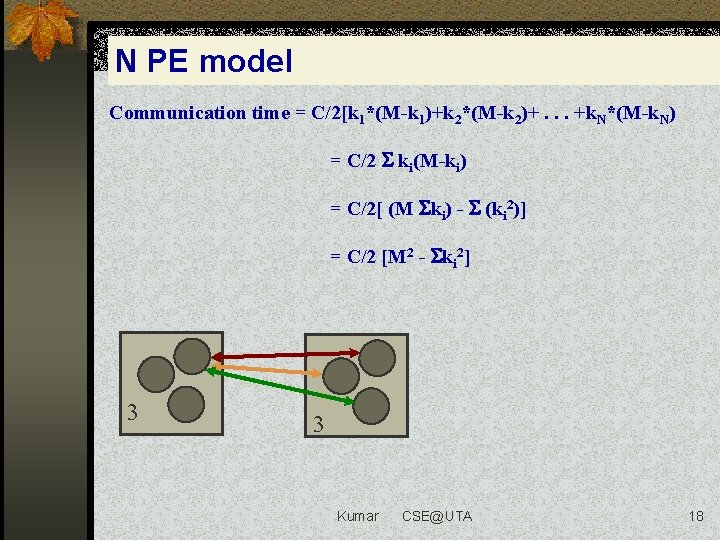

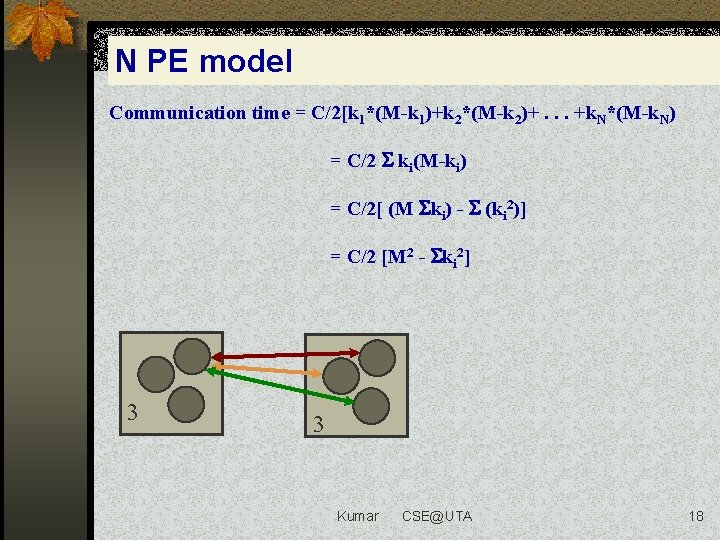

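N PE model

$$\text{Communication time} = \frac{C}{2}\left[k_1(M-k_1) + \dots + k_N(M-k_N)\right] = \frac{C}{2}\sum_{i=1}^{N} k_i(M-k_i) = \frac{C}{2}\left[M\sum_{i=1}^{N} k_i - \sum_{i=1}^{N} k_i^2\right] = \frac{C}{2}\left[M^2 - \sum_{i=1}^{N} k_i^2\right]$$

The last step uses $\sum_{i=1}^{N} k_i = M$.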
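The factor C/2 arises because the sum of k_i(M - k_i) counts every cross-PE task pair twice, once from each side. The sketch below checks, on random splits over a hypothetical N = 4 PEs, that the first and last forms of the derivation agree and that both equal C times a brute-force count of cross-PE pairs. For N = 2 the expression reduces to C k(M - k), the two-PE communication time.

```python
import random

def comm_sum_form(ks: list[int], C: float) -> float:
    """C/2 * sum_i k_i (M - k_i): the first form in the derivation."""
    M = sum(ks)
    return C / 2 * sum(k * (M - k) for k in ks)

def comm_closed_form(ks: list[int], C: float) -> float:
    """C/2 * (M^2 - sum_i k_i^2): the last form in the derivation."""
    M = sum(ks)
    return C / 2 * (M * M - sum(k * k for k in ks))

def cross_pe_pairs(ks: list[int]) -> int:
    """Brute force: count unordered task pairs whose tasks sit on different PEs."""
    owner = [pe for pe, k in enumerate(ks) for _ in range(k)]
    return sum(1 for a in range(len(owner))
                 for b in range(a + 1, len(owner))
                 if owner[a] != owner[b])

random.seed(1)
for _ in range(5):
    ks = [random.randint(0, 12) for _ in range(4)]            # random split over N = 4 PEs
    assert comm_sum_form(ks, 1.0) == comm_closed_form(ks, 1.0)
    assert comm_closed_form(ks, 1.0) == cross_pe_pairs(ks)    # C = 1 per cross-PE pair
print("derivation checks passed")
```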

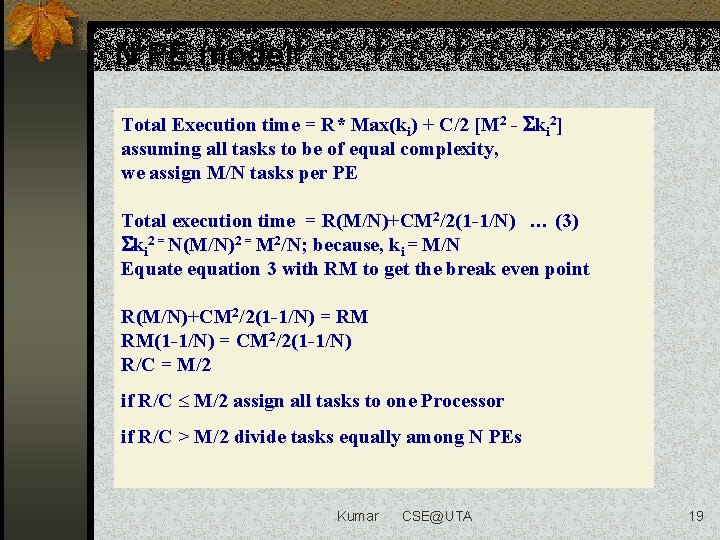

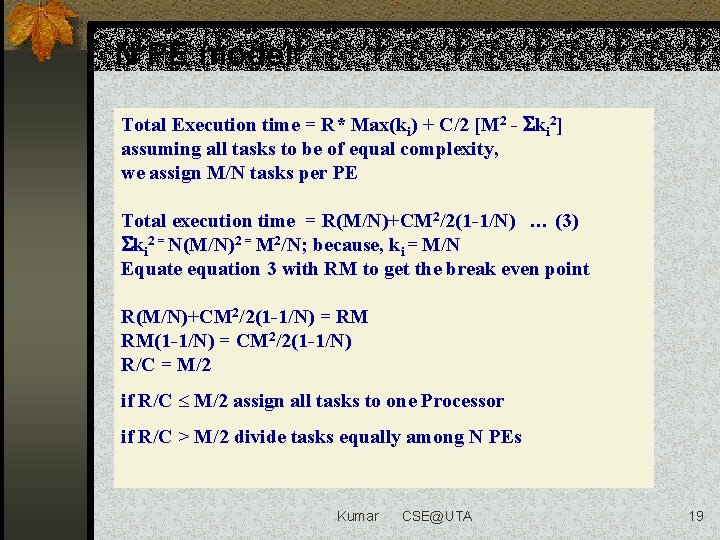

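N PE model

$$\text{TET} = R \cdot \max_i k_i + \frac{C}{2}\left[M^2 - \sum_{i=1}^{N} k_i^2\right]$$

Assuming all tasks are of equal complexity, we assign M/N tasks per PE, so $k_i = M/N$ and $\sum_i k_i^2 = N(M/N)^2 = M^2/N$. Then

$$\text{TET} = R\,\frac{M}{N} + \frac{CM^2}{2}\left(1 - \frac{1}{N}\right) \qquad (3)$$

Equate (3) with RM to get the break-even point:

$$R\,\frac{M}{N} + \frac{CM^2}{2}\left(1 - \frac{1}{N}\right) = RM \;\Longrightarrow\; RM\left(1 - \frac{1}{N}\right) = \frac{CM^2}{2}\left(1 - \frac{1}{N}\right) \;\Longrightarrow\; \frac{R}{C} = \frac{M}{2}$$

- if R/C < M/2, assign all tasks to one processor
- if R/C > M/2, divide the tasks equally among the N PEs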
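Equation (3) can be rearranged as CM²/2 + (M/N)(R - CM/2), which makes the role of R/C = M/2 visible: above it TET falls as N grows, below it TET rises, and at it TET equals RM for every N. The sketch below checks this with exact fractions; M = 50 and R = 1 are illustrative choices.

```python
from fractions import Fraction

def tet_equal_split(M: int, N: int, R: Fraction, C: Fraction) -> Fraction:
    """Equation (3): R(M/N) + (C M^2 / 2)(1 - 1/N), single shared channel."""
    return R * Fraction(M, N) + C * Fraction(M * M, 2) * (1 - Fraction(1, N))

M, R = 50, Fraction(1)

# At R/C = M/2 the equal split ties the single-PE time RM for every N.
C_break_even = R / Fraction(M, 2)
assert all(tet_equal_split(M, N, R, C_break_even) == R * M for N in (2, 5, 10, 50))

# Above break-even (R/C = 40) TET falls with N; below it (R/C = 10) TET rises.
for r_over_c in (40, 10):
    C = R / r_over_c
    times = [tet_equal_split(M, N, R, C) for N in (1, 2, 5, 10)]
    print(f"R/C = {r_over_c}:", [float(t) for t in times])
```

With N = 2 and R/C = 40 this reproduces the 40.625 value of the two-PE table.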

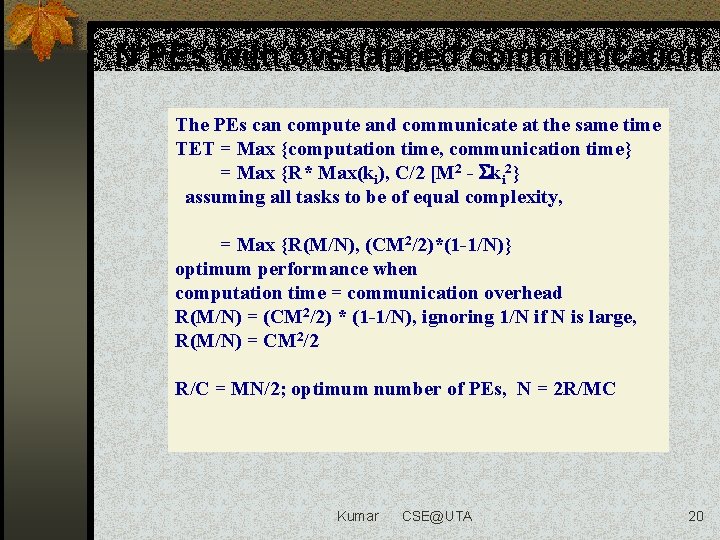

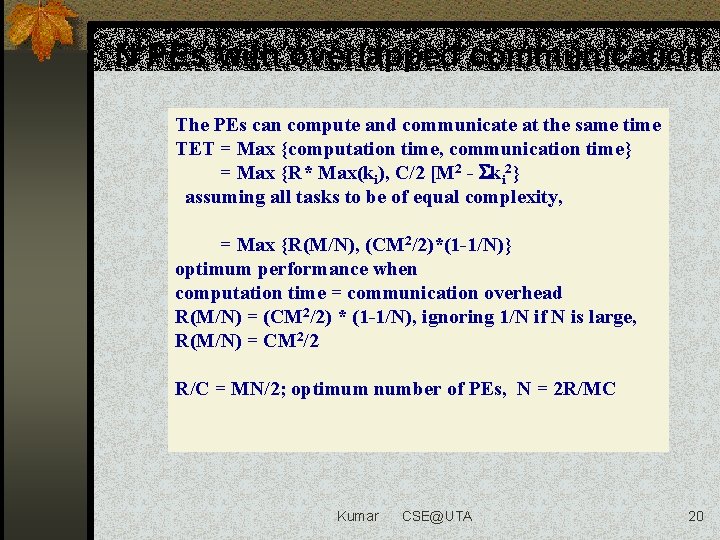

N PEs with overlapped communication

The PEs can compute and communicate at the same time, so

$$\text{TET} = \max\{\text{computation time},\ \text{communication time}\} = \max\left\{R \cdot \max_i k_i,\ \frac{C}{2}\left[M^2 - \sum_i k_i^2\right]\right\}$$

Assuming all tasks are of equal complexity,

$$\text{TET} = \max\left\{R\,\frac{M}{N},\ \frac{CM^2}{2}\left(1 - \frac{1}{N}\right)\right\}$$

Optimum performance is reached when computation time equals communication overhead:

$$R\,\frac{M}{N} = \frac{CM^2}{2}\left(1 - \frac{1}{N}\right)$$

Ignoring 1/N when N is large, $R\,M/N = CM^2/2$, so $R/C = MN/2$ and the optimum number of PEs is

$$N = \frac{2R}{MC}$$
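Solving the optimality condition without dropping 1/N gives R = (CM/2)(N - 1), that is N = 1 + 2R/(MC), so the large-N result 2R/(MC) is off by one PE. The sketch below compares both formulas with a brute-force search over N; the values M = 50, R = 1, C = 0.001 (R/C = 1000) are illustrative assumptions.

```python
def tet_overlapped(M: int, N: int, R: float, C: float) -> float:
    """Overlapped model: TET = max(computation, communication), equal split."""
    computation = R * M / N
    communication = (C * M * M / 2) * (1 - 1 / N)
    return max(computation, communication)

def optimal_n_approx(M: int, R: float, C: float) -> float:
    """Large-N result from the slide: N = 2R / (MC)."""
    return 2 * R / (M * C)

def optimal_n_exact(M: int, R: float, C: float) -> float:
    """Keeping the 1/N term: N = 1 + 2R / (MC)."""
    return 1 + 2 * R / (M * C)

M, R, C = 50, 1.0, 0.001   # illustrative values only: R/C = 1000
best_n = min(range(1, 201), key=lambda n: tet_overlapped(M, n, R, C))
print(optimal_n_approx(M, R, C), optimal_n_exact(M, R, C), best_n)
```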

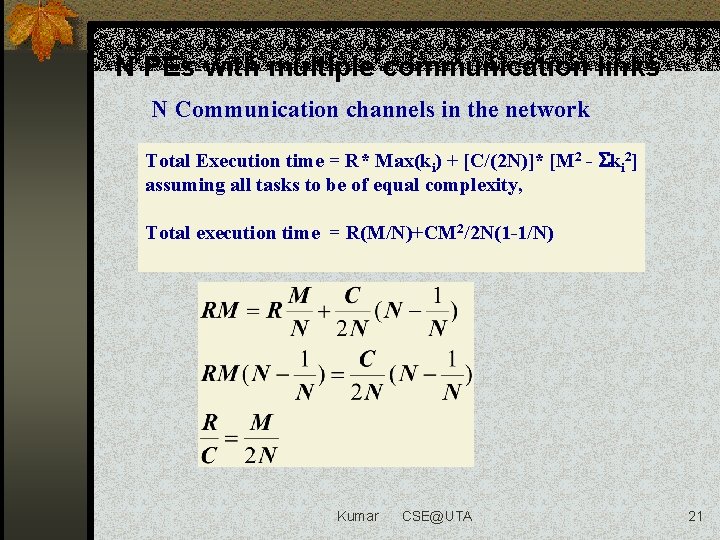

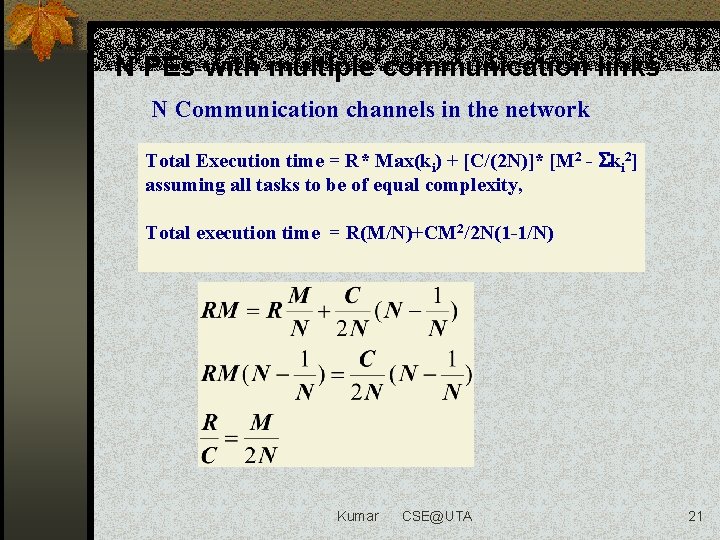

N PEs with multiple communication links

With N communication channels in the network,

$$\text{TET} = R \cdot \max_i k_i + \frac{C}{2N}\left[M^2 - \sum_i k_i^2\right]$$

Assuming all tasks are of equal complexity ($k_i = M/N$),

$$\text{TET} = R\,\frac{M}{N} + \frac{CM^2}{2N}\left(1 - \frac{1}{N}\right)$$

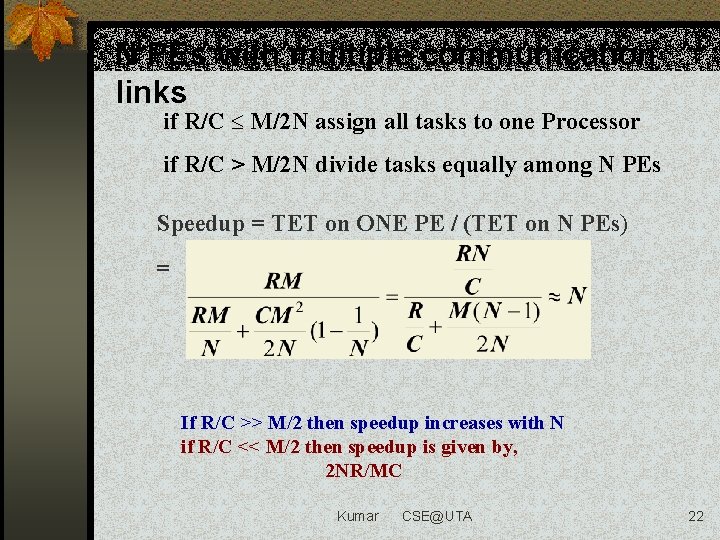

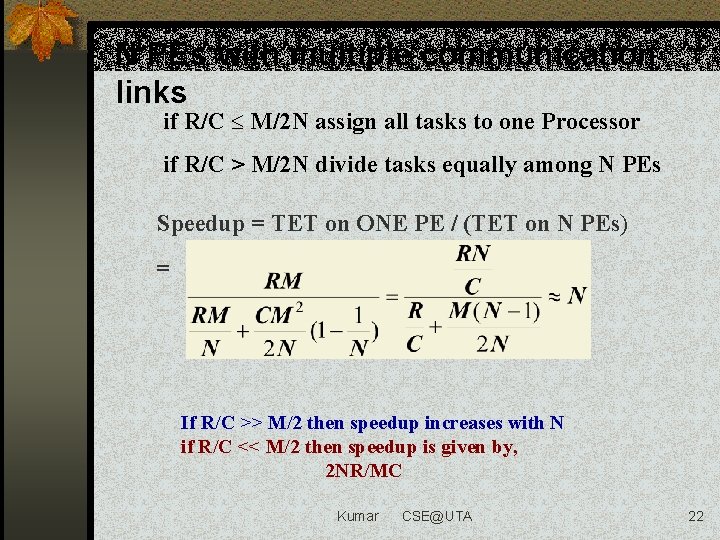

N PEs with multiple communication links
- if R/C < M/(2N), assign all tasks to one processor
- if R/C > M/(2N), divide the tasks equally among the N PEs

$$\text{Speedup} = \frac{\text{TET on one PE}}{\text{TET on } N \text{ PEs}} = \frac{RM}{R\,\frac{M}{N} + \frac{CM^2}{2N}\left(1 - \frac{1}{N}\right)}$$

- If R/C >> M/2, the speedup increases with N.
- If R/C << M/2, the speedup is approximately 2NR/(MC).
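Multiplying numerator and denominator by N/(RM) puts the speedup in the form N / (1 + (M/2)(1 - 1/N)/(R/C)). When R/C >> M/2 the denominator is close to 1, so the speedup is close to N; when R/C << M/2 the second term dominates and, for large N, the speedup approaches N(R/C)/(M/2) = 2NR/(MC). The sketch below checks the break-even point R/C = M/(2N) and both limits; R is normalized to 1 and the sample ratios are chosen here for illustration.

```python
def speedup_multi_link(M: int, N: int, r_over_c: float) -> float:
    """TET on one PE / TET on N PEs with N channels and an equal split (R = 1)."""
    R = 1.0
    C = R / r_over_c
    serial = R * M
    parallel = R * M / N + (C * M * M / (2 * N)) * (1 - 1 / N)
    return serial / parallel

M = 50
# Break-even: speedup is 1 when R/C = M/(2N).
print([round(speedup_multi_link(M, N, M / (2 * N)), 6) for N in (2, 5, 10)])
# R/C >> M/2: speedup is close to N.
print([round(speedup_multi_link(M, N, 1e6), 3) for N in (2, 4, 8, 16)])
# R/C << M/2: compare with the approximation 2NR/(MC) = 2N(R/C)/M.
for N in (100, 1000):
    print(N, speedup_multi_link(M, N, 0.01), 2 * N * 0.01 / M)
```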

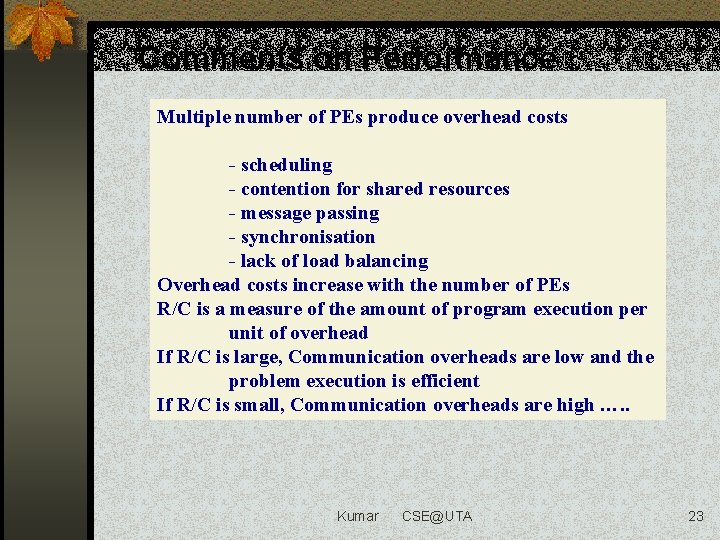

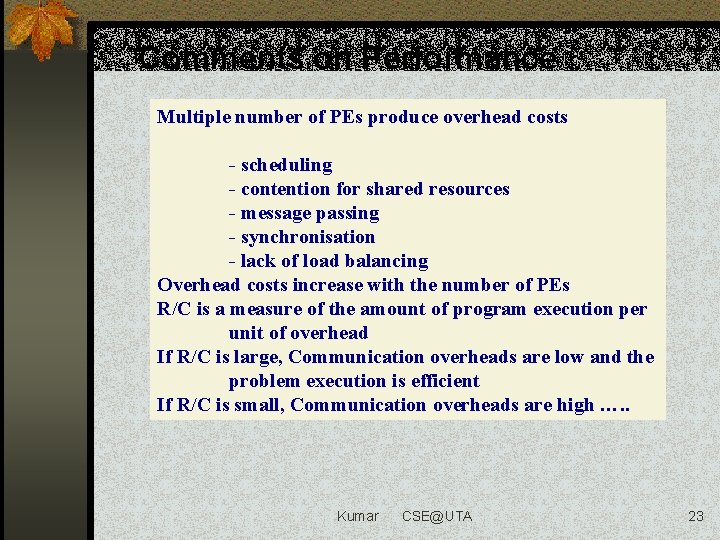

Comments on Performance
- Multiple PEs introduce overhead costs:
  - scheduling
  - contention for shared resources
  - message passing
  - synchronisation
  - lack of load balancing
- Overhead costs increase with the number of PEs.
- R/C is a measure of the amount of program execution per unit of overhead.
  - If R/C is large, communication overheads are low and the problem executes efficiently.
  - If R/C is small, communication overheads are high …

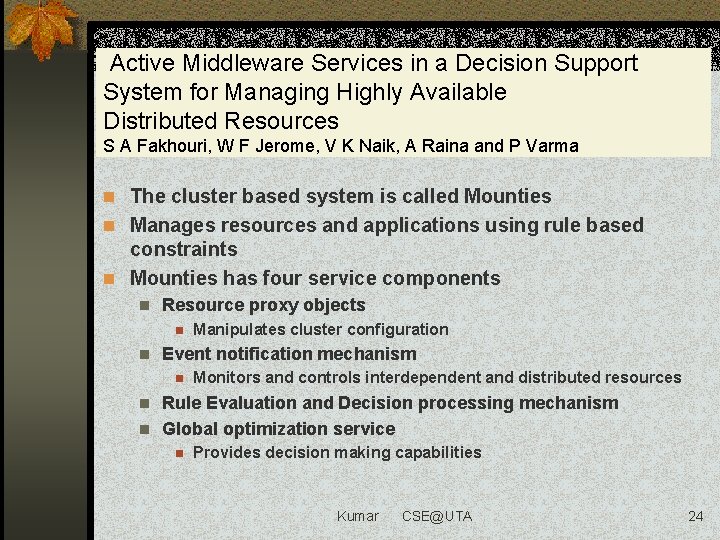

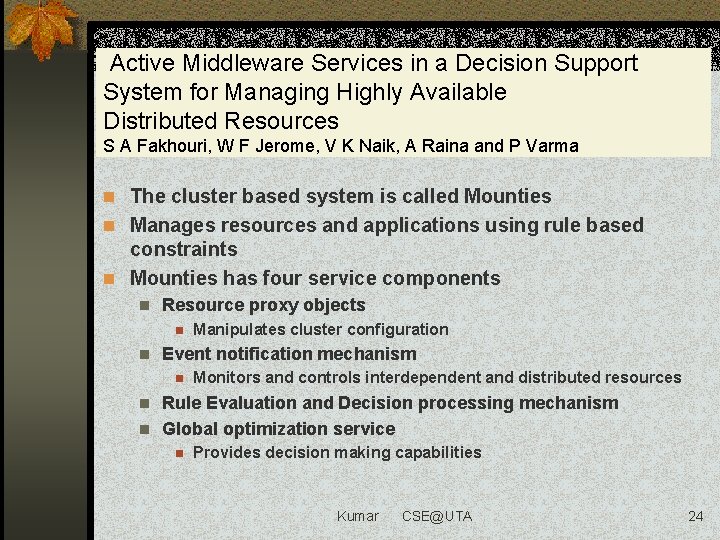

Active Middleware Services in a Decision Support System for Managing Highly Available Distributed Resources (S. A. Fakhouri, W. F. Jerome, V. K. Naik, A. Raina, and P. Varma)
- The cluster-based system is called Mounties.
- It manages resources and applications using rule-based constraints.
- Mounties has four service components:
  - Resource proxy objects
  - Event notification mechanism
  - Rule evaluation and decision processing mechanism
  - Global optimization service
- Together, these components manipulate the cluster configuration, monitor and control interdependent and distributed resources, and provide decision-making capabilities.

What’s in the paper?
- Architecture and design of the service components
- Interaction between the components and the decision-making component
- General programming paradigm

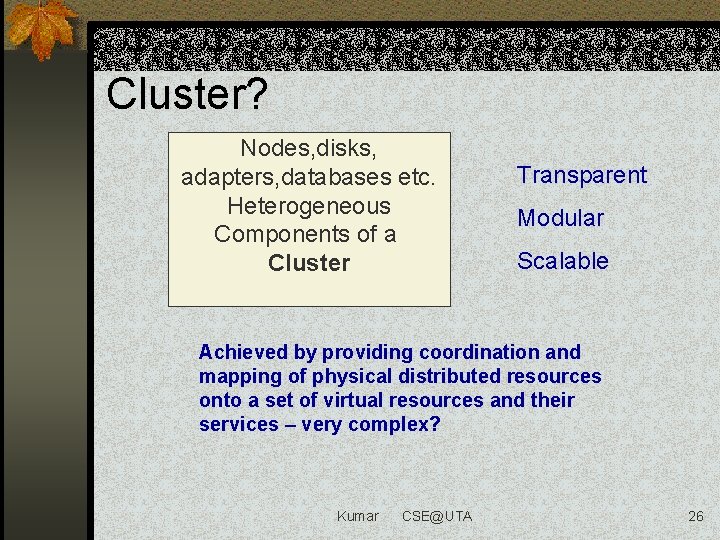

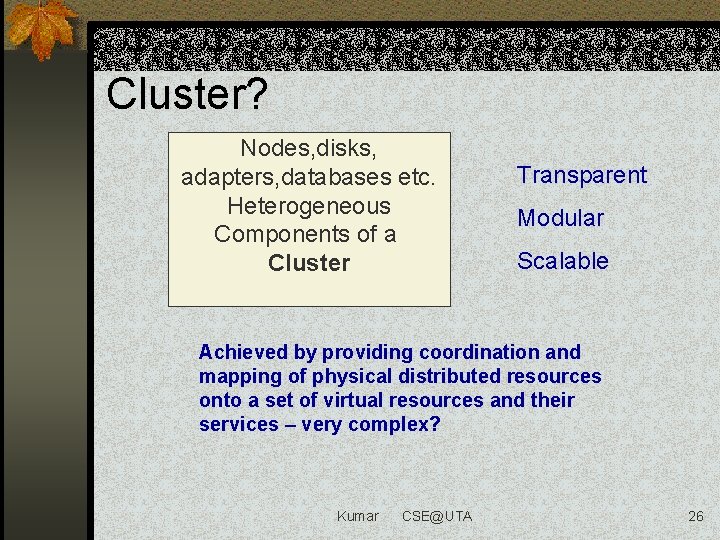

Cluster?
- Components of a cluster: nodes, disks, adapters, databases, etc.
- Heterogeneous
- Transparent, modular, scalable
- Achieved by providing coordination and mapping of physical distributed resources onto a set of virtual resources and their services: very complex?

Authors’ approach
- Clusters and resources: two dimensions
  - Semistatic nature of a resource: the type and quality of supporting services needed to enable its services, formalized as simple rules
  - Dynamic state of the services provided by the cluster, captured by events
- Coordination and mapping: centralized and rule-based
- Events and rules are combined only when necessary

Resources
- Attributes
  - Unique name
  - Type: functionality
  - Capacity: number of dependent resources it can serve
  - Priority: 1 (low) to 10 (high)
- State
  - ONLINE
  - OFFLINE
  - FAILED

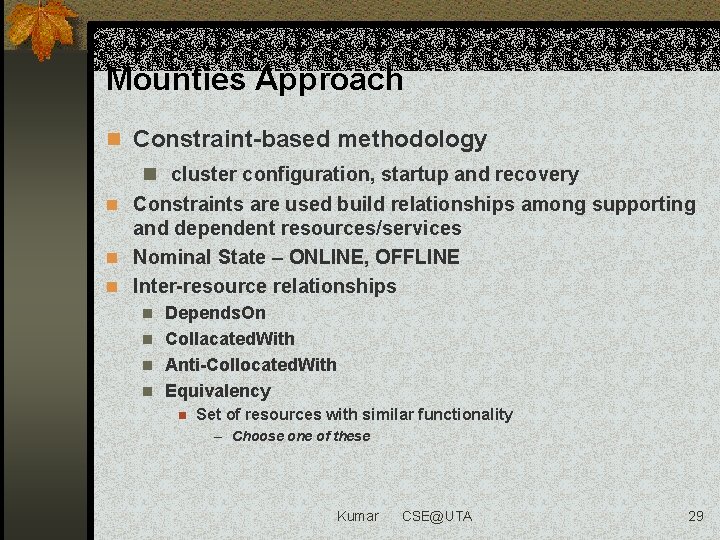

Mounties Approach
- Constraint-based methodology for cluster configuration, startup, and recovery
- Constraints are used to build relationships among supporting and dependent resources/services
- Nominal state: ONLINE, OFFLINE
- Inter-resource relationships
  - DependsOn
  - CollocatedWith
  - AntiCollocatedWith
  - Equivalency: a set of resources with similar functionality; choose one of these
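A hypothetical sketch of how the resource attributes and the DependsOn, CollocatedWith, and AntiCollocatedWith relationships could be checked against a placement of resources on nodes. The class, field, and function names are invented for illustration and are not the Mounties API; capacity and Equivalency are not modeled, and the anti-collocation between the two databases is an assumed example, not taken from the paper.

```python
from dataclasses import dataclass, field
from enum import Enum

class State(Enum):
    ONLINE = "ONLINE"
    OFFLINE = "OFFLINE"
    FAILED = "FAILED"

@dataclass
class Resource:
    """Hypothetical resource record mirroring the attributes listed above."""
    name: str                      # unique name
    kind: str                      # type, i.e. functionality
    capacity: int                  # dependents it can serve (not checked below)
    priority: int                  # 1 (low) to 10 (high)
    state: State = State.OFFLINE
    depends_on: list[str] = field(default_factory=list)
    collocated_with: list[str] = field(default_factory=list)
    anti_collocated_with: list[str] = field(default_factory=list)

def violations(resources: list[Resource], placement: dict[str, str]) -> list[str]:
    """Check DependsOn / CollocatedWith / AntiCollocatedWith for a {name: node} placement."""
    by_name = {r.name: r for r in resources}
    problems = []
    for r in resources:
        for dep in r.depends_on:
            if by_name[dep].state is not State.ONLINE:
                problems.append(f"{r.name} depends on {dep}, which is not ONLINE")
        for other in r.collocated_with:
            if placement[r.name] != placement[other]:
                problems.append(f"{r.name} must be on the same node as {other}")
        for other in r.anti_collocated_with:
            if placement[r.name] == placement[other]:
                problems.append(f"{r.name} must not share a node with {other}")
    return problems

da0 = Resource("DA0", "adapter", capacity=2, priority=5, state=State.ONLINE)
db1 = Resource("Database1", "database", capacity=1, priority=8,
               depends_on=["DA0"], collocated_with=["DA0"])
db2 = Resource("Database2", "database", capacity=1, priority=8,
               anti_collocated_with=["Database1"])

good = {"DA0": "Node0", "Database1": "Node0", "Database2": "Node1"}
bad = {"DA0": "Node0", "Database1": "Node0", "Database2": "Node0"}
print(violations([da0, db1, db2], good))  # []
print(violations([da0, db1, db2], bad))   # ['Database2 must not share a node with Database1']
```

Returning a list of violations, rather than failing on the first, mirrors the idea that a decision component would weigh all unmet constraints before acting.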

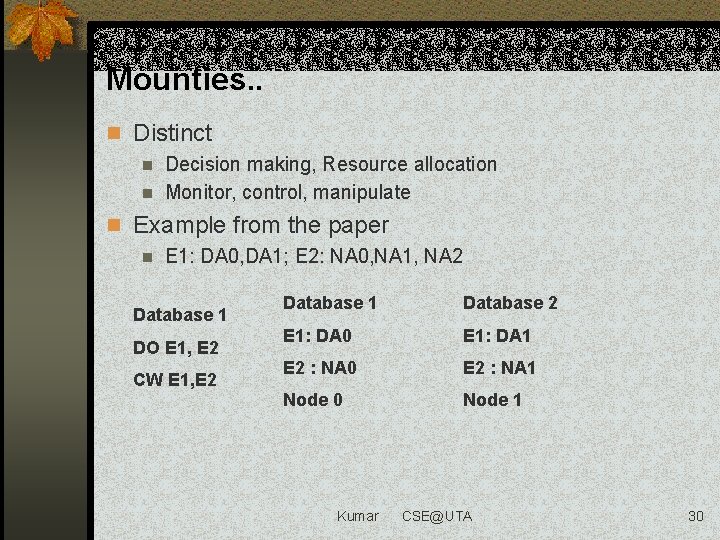

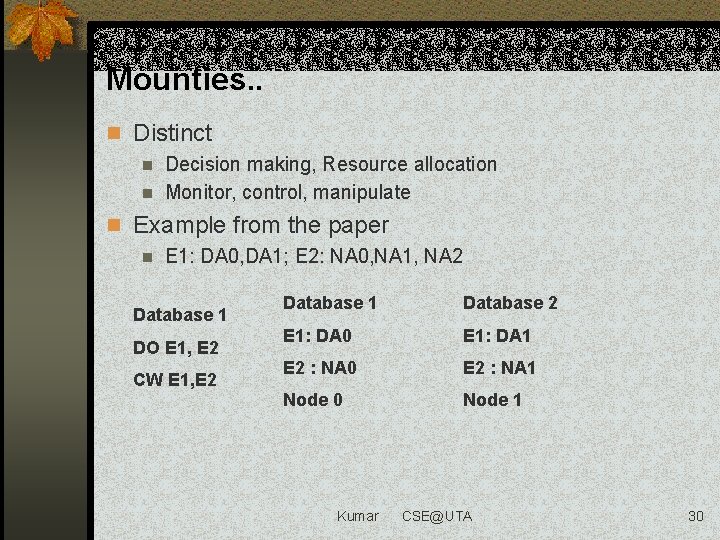

Mounties (Contd.)
- Kept distinct:
  - Decision making, resource allocation
  - Monitoring, control, manipulation
- Example from the paper
  - E1: DA0, DA1; E2: NA0, NA1, NA2
  - Database 1: DependsOn (DO) E1, E2; CollocatedWith (CW) E1, E2

[Figure: Database 1 and Database 2 on Node 0 and Node 1, with E1: DA0, E1: DA1, E2: NA0, E2: NA1]

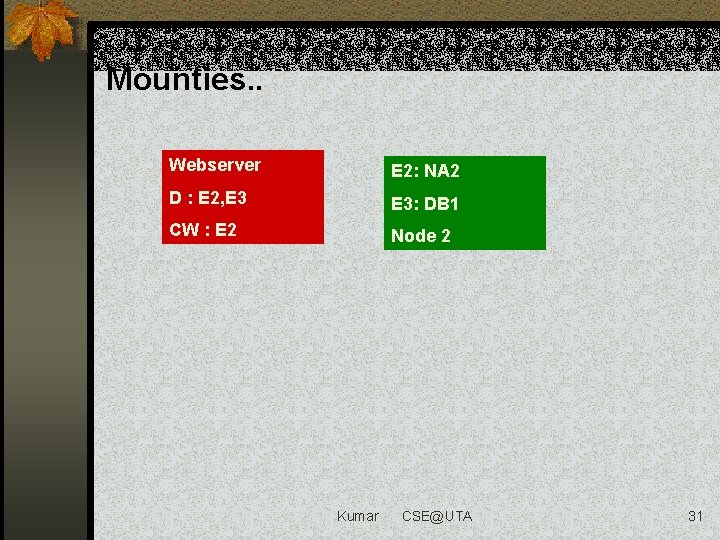

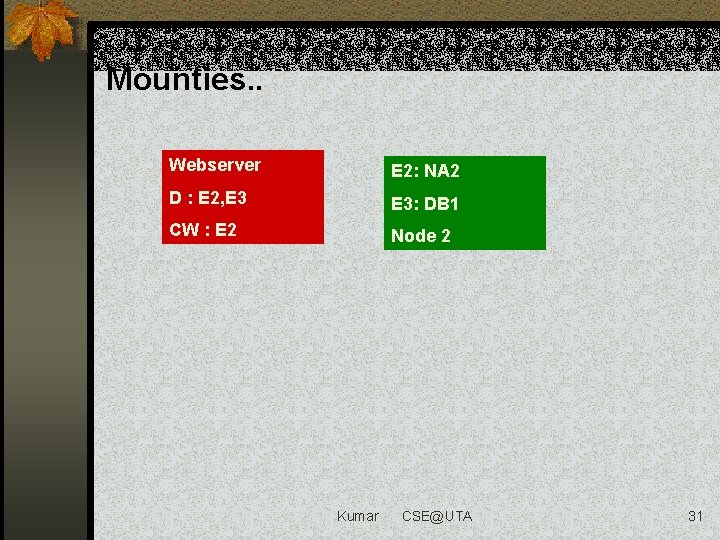

Mounties (Contd.)
- Webserver: DependsOn (D) E2, E3 (E3: DB1); CollocatedWith (CW) E2

[Figure: Webserver on Node 2 with E2: NA2]

Events and Clusters
- Events
  - Resource related
  - Response to a command
  - End-user interactions/directives
  - Alerts/alarms
- Resource-specific constraints: capacity, dependency, location

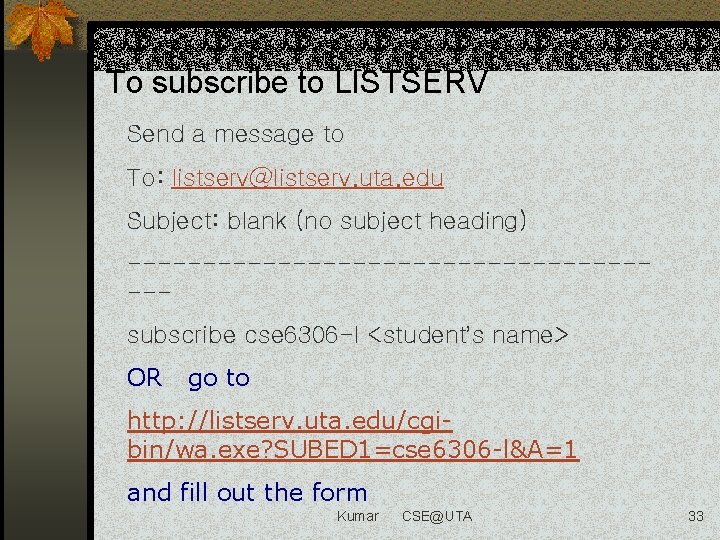

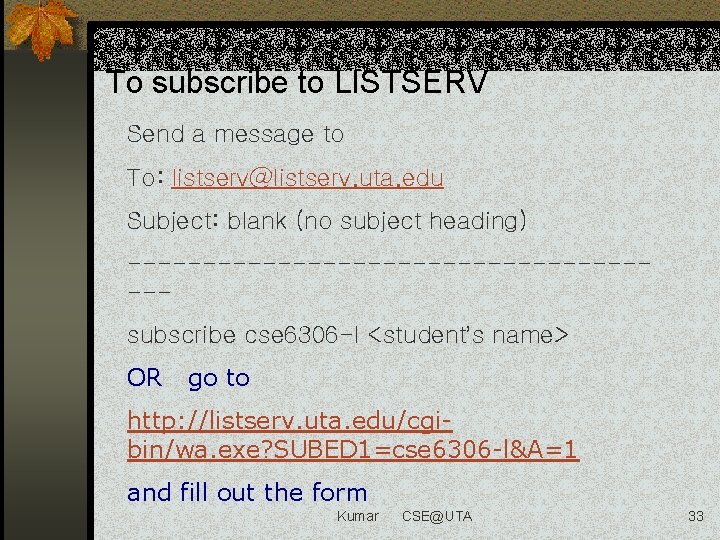

To subscribe to LISTSERV
- Send a message to listserv@listserv.uta.edu with a blank subject (no subject heading) and the body `subscribe cse6306-l <student's name>`
- Or go to http://listserv.uta.edu/cgibin/wa.exe?SUBED1=cse6306-l&A=1 and fill out the form
