
6 January 2011
Preventing Encrypted Traffic Analysis
Nabíl Adam Schear
University of Illinois at Urbana-Champaign, Department of Computer Science
nschear2@illinois.edu
Committee: Nikita Borisov (UIUC-ECE), Karen L. Bintz (Los Alamos National Laboratory), Matthew Caesar (UIUC-CS), Carl A. Gunter (UIUC-CS), David M. Nicol (UIUC-ECE)
For a copy of the slides or dissertation: http://helious.net/
UNCLASSIFIED Open Release LA-UR 11-01317

Encrypted Traffic Analysis
• Encrypted protocols provide strong confidentiality by encrypting content
• But:
  – encryption does not mask packet sizes and timing
  – privacy can be breached by traffic analysis attacks
• Traffic analysis can recover:
  – browsed websites through encrypted proxies
  – keystrokes in real-time systems
  – language and phrases in VoIP
  – identity in anonymity systems
  – embedded protocols in tunnels
• Who needs defense against traffic analysis?
  – Privacy-seeking users and enterprises using VPNs, SSL, VoIP, anonymity networks, etc.

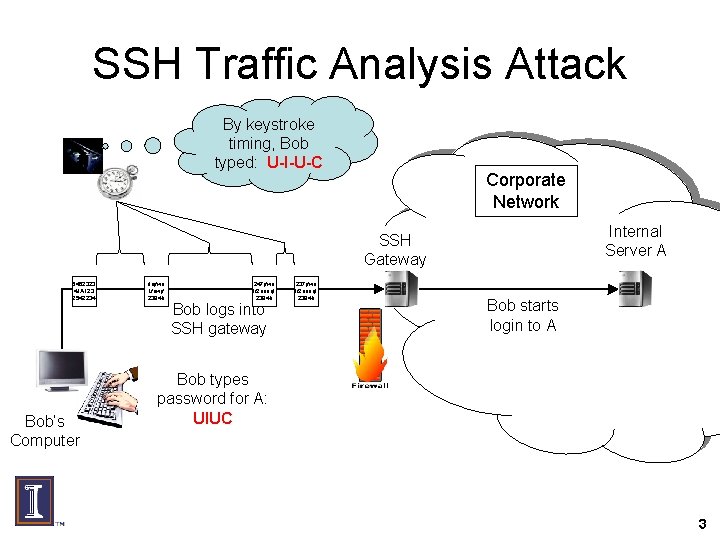

SSH Traffic Analysis Attack
(Figure: Bob's computer connects through an SSH gateway into a corporate network that contains internal server A. Bob logs into the SSH gateway, starts a login to A, and types A's password, UIUC. All traffic is encrypted, yet from keystroke timing the attacker infers that Bob typed U-I-U-C.)

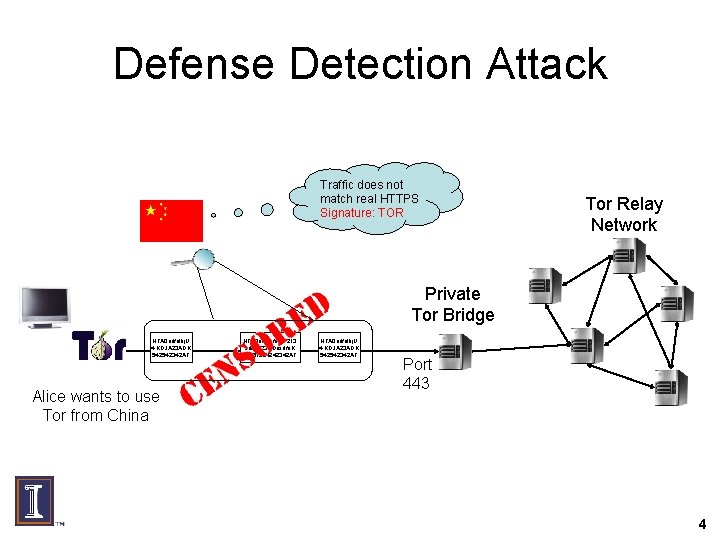

Defense Detection Attack
(Figure: Alice wants to use Tor from China, so she connects on port 443 to a private Tor bridge that leads into the Tor relay network. The censor observes that her traffic does not match real HTTPS and identifies its signature as Tor.)

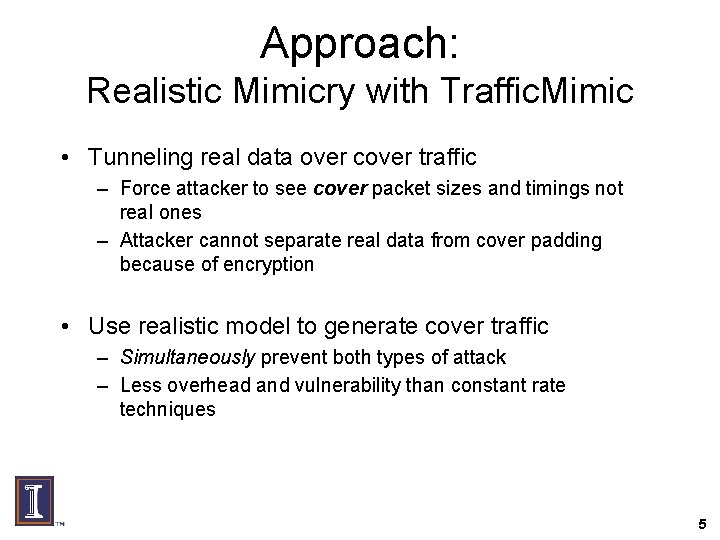

Approach: Realistic Mimicry with TrafficMimic
• Tunnel real data over cover traffic
  – Force the attacker to see cover packet sizes and timings, not the real ones
  – Because of encryption, the attacker cannot separate real data from cover padding
• Use a realistic model to generate cover traffic
  – Simultaneously prevent both types of attack (traffic analysis and defense detection)
  – Less overhead and vulnerability than constant-rate techniques

Thesis Statement
“Tunneling real data through realistic cover traffic models is a robust defense that provides balanced performance and security against powerful traffic analysis attacks.”

TrafficMimic Goals
• Offer the user choices for performance versus security trade-offs
  – Quantify risk and performance gain
• Robustly defend against traffic analysis and defense detection attacks by a powerful adversary
  – Favor the adversary with resources and access
• Retain realism, practicality, and usability in implementation and evaluation
  – Ensure that real users can benefit from TrafficMimic

Outline
• Introduction
• TrafficMimic design and implementation
• Independent cover traffic evaluation
• Simulation and modeling performance study
• Improving performance with biasing
• Conclusions and future work

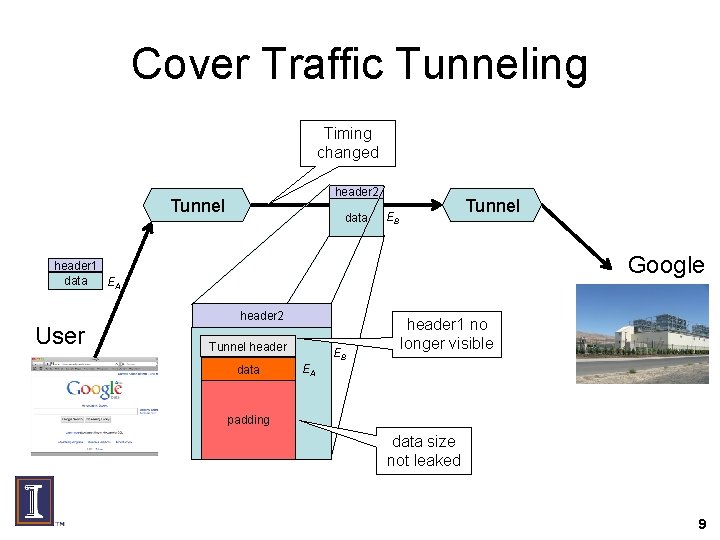

Cover Traffic Tunneling
(Figure: the user's packet to Google, with header 1 and data protected by encryption EA, is wrapped by the tunnel in a packet with header 2 and encryption EB, plus padding. Header 1 is no longer visible, timing is changed, and the data size is not leaked.)

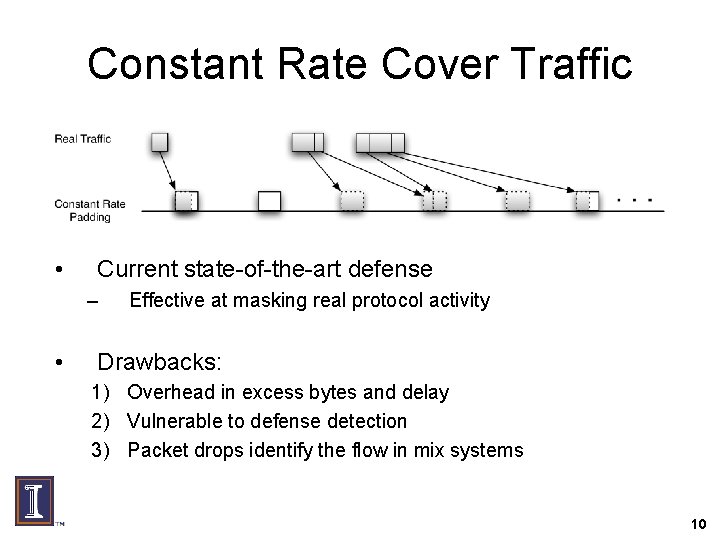

Constant Rate Cover Traffic
• Current state-of-the-art defense
  – Effective at masking real protocol activity
• Drawbacks:
  1) Overhead in excess bytes and delay
  2) Vulnerable to defense detection
  3) Packet drops identify the flow in mix systems

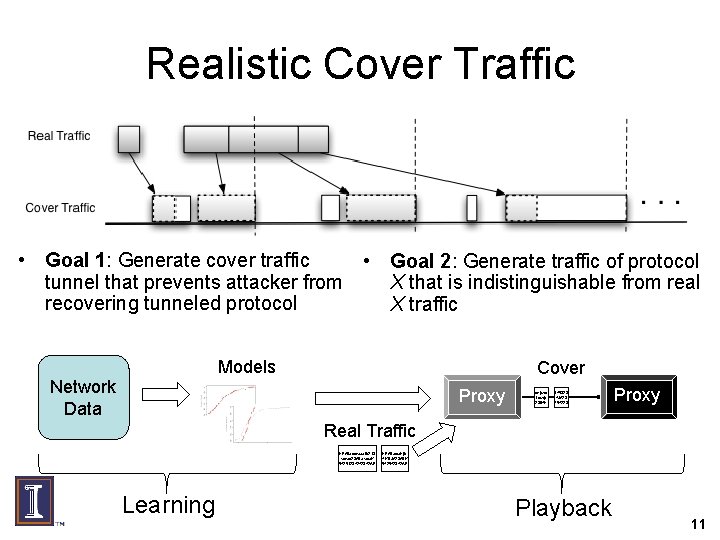

Realistic Cover Traffic
• Goal 1: Generate cover traffic of protocol X that is indistinguishable from real protocol X traffic
• Goal 2: Generate tunnel traffic that prevents the attacker from recovering the tunneled protocol
(Figure: real traffic is used for learning models; playback of those models carries data between proxies over the cover network.)

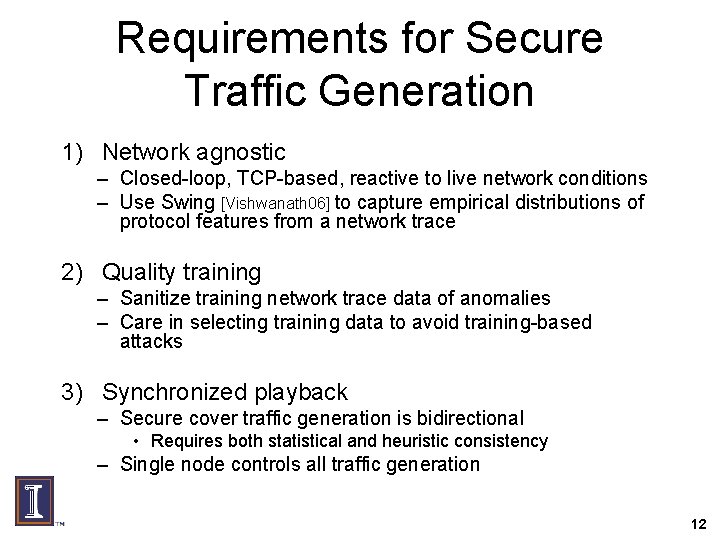

Requirements for Secure Traffic Generation
1) Network agnostic
  – Closed-loop, TCP-based, reactive to live network conditions
  – Use Swing [Vishwanath 06] to capture empirical distributions of protocol features from a network trace
2) Quality training
  – Sanitize training network trace data of anomalies
  – Take care in selecting training data to avoid training-based attacks
3) Synchronized playback
  – Secure cover traffic generation is bidirectional
    • Requires both statistical and heuristic consistency
  – A single node controls all traffic generation

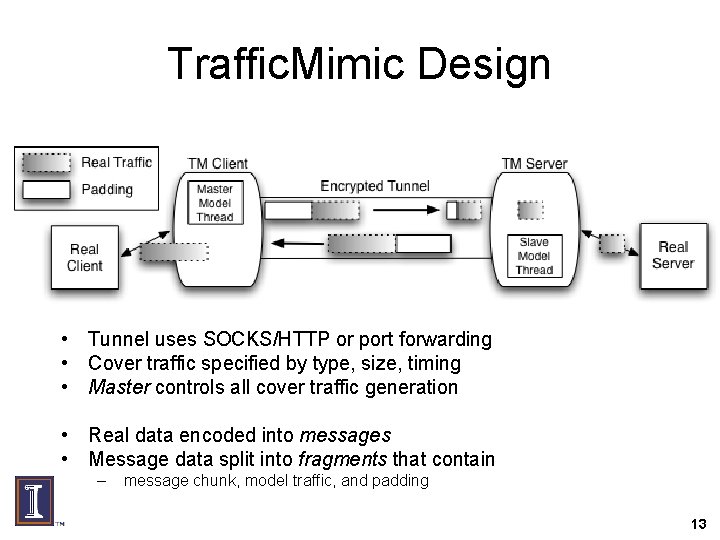

TrafficMimic Design
• The tunnel uses SOCKS/HTTP proxying or port forwarding
• Cover traffic is specified by type, size, and timing
• The master controls all cover traffic generation
• Real data is encoded into messages
• Message data is split into fragments that contain:
  – a message chunk, model traffic, and padding
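As a rough illustration of how message data might be spread over cover-sized fragments, here is a minimal Python sketch. The 4-byte length header, zero-byte padding, and the names `fragment` and `reassemble` are hypothetical choices, not TrafficMimic's actual wire format, and the sketch leaves out the model-traffic portion of each fragment.

```python
import struct

# Hypothetical framing: 4-byte big-endian length of the message chunk in this fragment.
HEADER = struct.Struct("!I")


def fragment(message, cover_sizes):
    """Split `message` into fragments whose sizes follow the cover model.

    Each fragment is exactly one cover size long: header + message chunk + zero padding.
    Raises ValueError if the supplied cover sizes cannot carry the whole message.
    """
    fragments = []
    offset = 0
    for size in cover_sizes:
        if size < HEADER.size:
            raise ValueError("cover size too small to hold the header")
        capacity = size - HEADER.size
        chunk = message[offset:offset + capacity]
        offset += len(chunk)
        # Pad so the on-wire size equals the cover size, not the real data size.
        padding = bytes(capacity - len(chunk))
        fragments.append(HEADER.pack(len(chunk)) + chunk + padding)
        if offset >= len(message):
            break
    if offset < len(message):
        raise ValueError("cover traffic exhausted before the message was sent")
    return fragments


def reassemble(fragments):
    """Recover the message by reading each header and discarding the padding."""
    parts = []
    for frag in fragments:
        (length,) = HEADER.unpack_from(frag)
        parts.append(frag[HEADER.size:HEADER.size + length])
    return b"".join(parts)


frags = fragment(b"hello world", [10, 10, 10, 10])
print([len(f) for f in frags], reassemble(frags))
```

An observer sees only the cover sizes (10 bytes each here); how much of each fragment is real data is hidden inside the encrypted tunnel.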

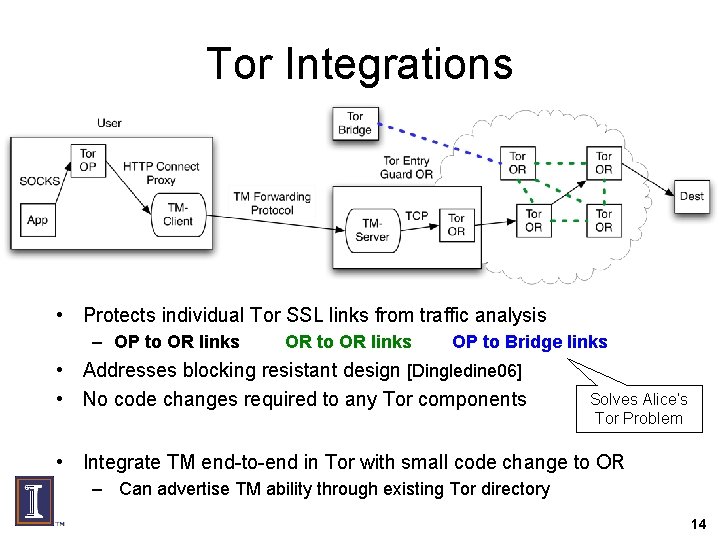

Tor Integrations
• Protects individual Tor SSL links from traffic analysis:
  – OP to OR links
  – OR to OR links
  – OP to bridge links
• Addresses the blocking-resistant design [Dingledine 06]
• No code changes required to any Tor components
• Solves Alice's Tor problem
• Integrate TrafficMimic (TM) end-to-end in Tor with a small code change to the OR
  – Can advertise TM ability through the existing Tor directory

Outline
• Introduction
• TrafficMimic design and implementation
• Independent cover traffic evaluation
• Simulation and modeling performance study
• Improving performance with biasing
• Conclusions and future work

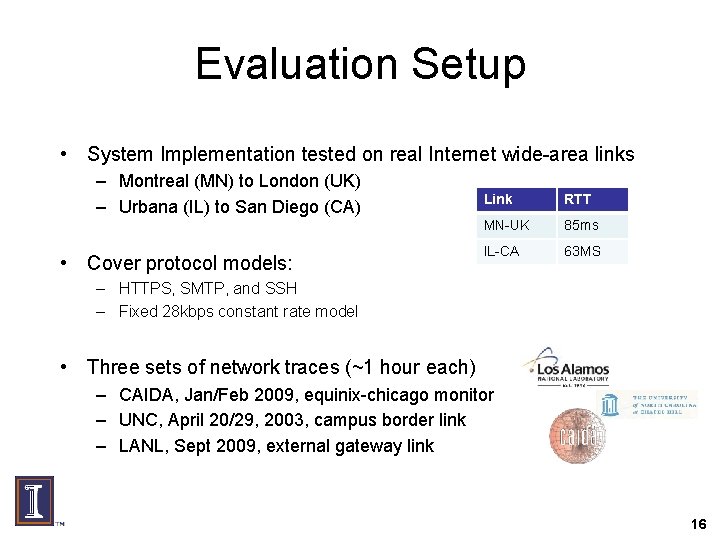

Evaluation Setup
• System implementation tested on real Internet wide-area links:
  – Montreal (MN) to London (UK), RTT 85 ms
  – Urbana (IL) to San Diego (CA), RTT 63 ms
• Cover protocol models:
  – HTTPS, SMTP, and SSH
  – Fixed 28 kbps constant-rate model
• Three sets of network traces (~1 hour each):
  – CAIDA, Jan/Feb 2009, equinix-chicago monitor
  – UNC, April 20/29, 2003, campus border link
  – LANL, Sept 2009, external gateway link

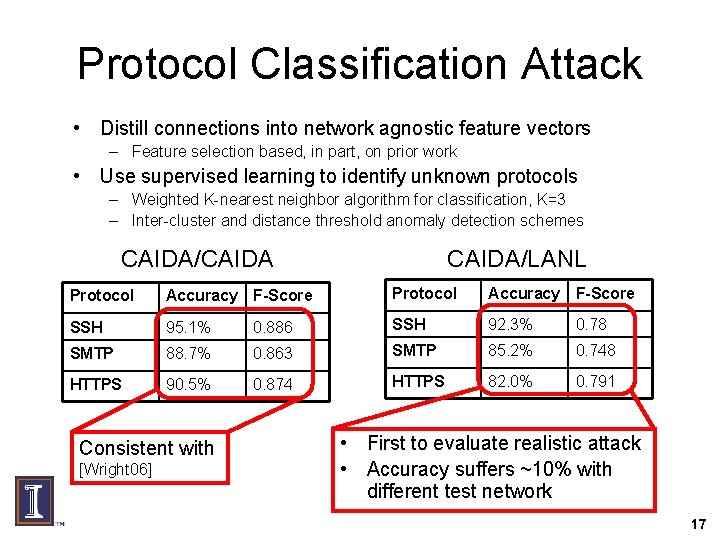

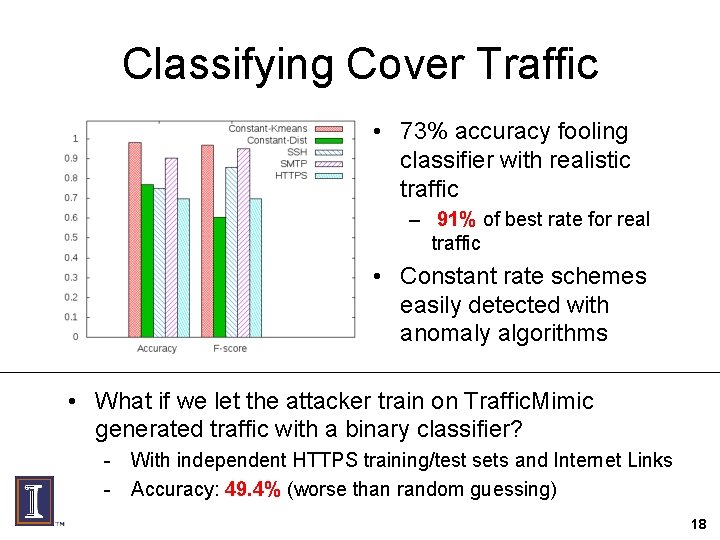

Protocol Classification Attack
• Distill connections into network-agnostic feature vectors
  – Feature selection based, in part, on prior work
• Use supervised learning to identify unknown protocols
  – Weighted K-nearest-neighbor algorithm for classification, K=3
  – Inter-cluster and distance-threshold anomaly detection schemes

| Protocol | Accuracy (CAIDA/CAIDA) | F-Score (CAIDA/CAIDA) | Accuracy (CAIDA/LANL) | F-Score (CAIDA/LANL) |
|---|---|---|---|---|
| SSH | 95.1% | 0.886 | 92.3% | 0.78 |
| SMTP | 88.7% | 0.863 | 85.2% | 0.748 |
| HTTPS | 90.5% | 0.874 | 82.0% | 0.791 |

• Consistent with [Wright 06]
• First to evaluate a realistic attack
• Accuracy suffers ~10% with a different test network
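A weighted K-nearest-neighbor classifier of the kind named above can be sketched in a few lines of Python. The slide does not say how neighbors are weighted, so inverse-distance weighting is an assumption here, as is the simple "nearest neighbor farther than a threshold" anomaly rule; the function name `weighted_knn` and the two-dimensional feature vectors are hypothetical.

```python
import math
from collections import defaultdict


def weighted_knn(train, query, k=3, threshold=None, eps=1e-9):
    """Label `query` by an inverse-distance-weighted vote of its k nearest neighbors.

    train: list of (feature_vector, label) pairs with equal-length numeric vectors.
    threshold: if given and even the nearest neighbor is farther than this,
    return None to flag the connection as anomalous (a simple distance-threshold rule).
    """
    neighbors = sorted(
        ((math.dist(vec, query), label) for vec, label in train),
        key=lambda pair: pair[0],
    )[:k]
    if threshold is not None and neighbors[0][0] > threshold:
        return None
    votes = defaultdict(float)
    for distance, label in neighbors:
        votes[label] += 1.0 / (distance + eps)  # closer neighbors get larger weights
    return max(votes, key=votes.get)


# Hypothetical 2-D feature vectors (e.g. normalized mean packet size, mean inter-arrival time).
train = [
    ((1.0, 1.0), "SSH"), ((1.2, 0.9), "SSH"),
    ((5.0, 5.0), "HTTPS"), ((5.2, 4.8), "HTTPS"),
    ((9.0, 1.0), "SMTP"),
]
print(weighted_knn(train, (1.1, 1.0)))
```

A real feature vector would be much longer and normalized per feature; `math.dist` requires Python 3.8 or later.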

Classifying Cover Traffic
• 73% accuracy in fooling the classifier with realistic cover traffic
  – 91% of the best rate for real traffic
• Constant-rate schemes are easily detected by the anomaly detection algorithms
• What if we let the attacker train a binary classifier on TrafficMimic-generated traffic?
  – With independent HTTPS training/test sets and Internet links
  – Accuracy: 49.4% (worse than random guessing)

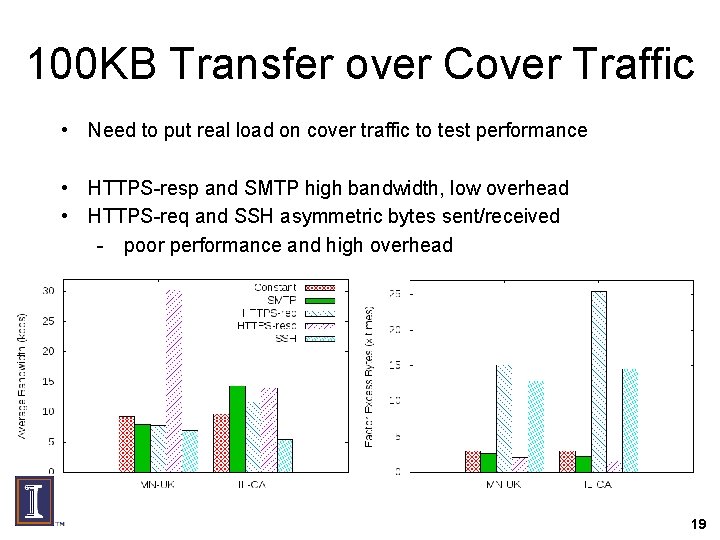

100 KB Transfer over Cover Traffic
• Real load must be placed on the cover traffic to test performance
• HTTPS-resp and SMTP: high bandwidth, low overhead
• HTTPS-req and SSH: asymmetric bytes sent/received, giving poor performance and high overhead

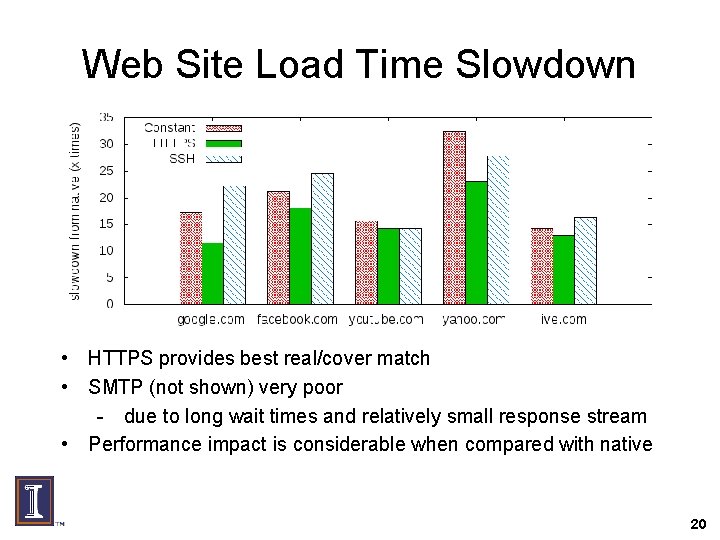

Web Site Load Time Slowdown
• HTTPS provides the best real/cover match
• SMTP (not shown) is very poor, due to long wait times and a relatively small response stream
• The performance impact is considerable compared with native access

Outline
• Introduction
• TrafficMimic design and implementation
• Independent cover traffic evaluation
• Simulation and modeling performance study
• Improving performance with biasing
• Conclusions and future work

Performance Study
• Gain a deeper understanding of performance with simulation/modeling
• Questions:
  – What is the impact of cover traffic tunneling on user experience?
  – What is the overhead compared to transmitting without cover traffic?
  – How does performance depend on the relationship between real and cover traffic?
• We assess these impacts with:
  – tunnel-free network properties derived from simulation
  – real trace-driven protocol models
  – analytic models of cover traffic tunneling

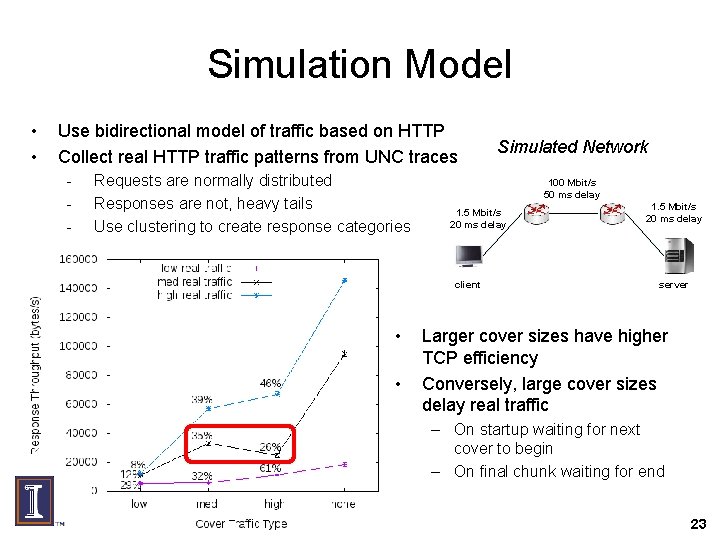

Simulation Model
• Use a bidirectional model of traffic based on HTTP
• Collect real HTTP traffic patterns from UNC traces
  – Requests are normally distributed
  – Responses are not; they have heavy tails
  – Use clustering to create response categories
• Simulated network (figure): client and server, two 1.5 Mbit/s links with 20 ms delay, and one 100 Mbit/s link with 50 ms delay
• Larger cover sizes have higher TCP efficiency
• Conversely, large cover sizes delay real traffic:
  – on startup, while waiting for the next cover to begin
  – on the final chunk, while waiting for the cover to end

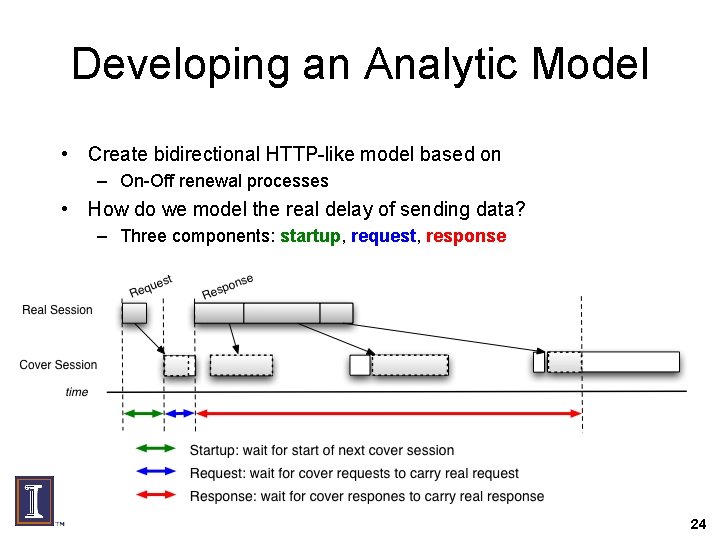

Developing an Analytic Model
• Create a bidirectional HTTP-like model based on:
  – On-Off renewal processes
• How do we model the real delay of sending data?
  – Three components: startup, request, response

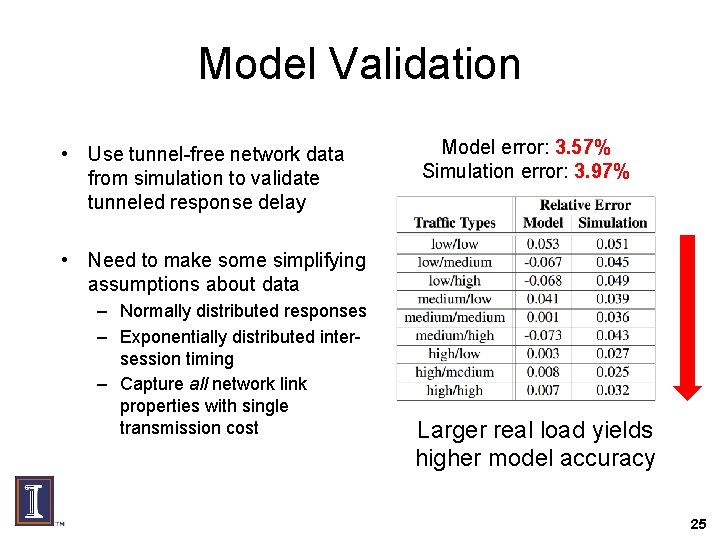

Model Validation
• Use tunnel-free network data from simulation to validate the tunneled response delay
  – Model error: 3.57%; simulation error: 3.97%
• Need to make some simplifying assumptions about the data:
  – Normally distributed responses
  – Exponentially distributed intersession timing
  – Capture all network link properties with a single transmission cost
• Larger real load yields higher model accuracy

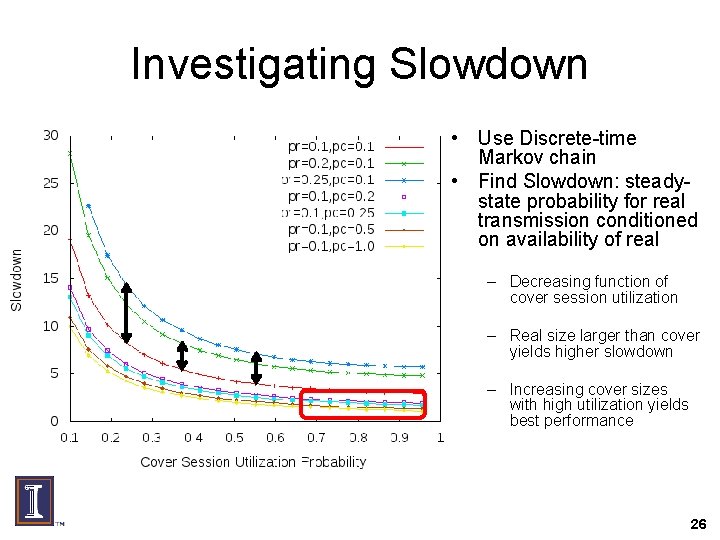

Investigating Slowdown
• Use a discrete-time Markov chain
• Find slowdown: the steady-state probability of real transmission, conditioned on real data being available
  – Decreasing function of cover session utilization
  – Real size larger than cover yields higher slowdown
  – Increasing cover sizes with high utilization yields the best performance
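The steady-state computation can be illustrated with power iteration on a small chain. The three states and their transition probabilities below are hypothetical and are not the thesis's actual Markov chain; the sketch only shows how a steady-state vector and a probability conditioned on real data being available would be computed.

```python
def stationary_distribution(P, tol=1e-12, max_iter=100_000):
    """Steady-state vector pi with pi = pi P, found by power iteration.

    P is a row-stochastic matrix (list of rows); the chain should be irreducible
    and aperiodic, otherwise the iteration may not converge.
    """
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(max_iter):
        nxt = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(nxt, pi)) < tol:
            return nxt
        pi = nxt
    raise RuntimeError("power iteration did not converge")


# Hypothetical 3-state chain: 0 = no real data, 1 = real data waiting for a cover
# session, 2 = real data being transmitted inside a cover session.
P = [
    [0.8, 0.2, 0.0],
    [0.0, 0.6, 0.4],
    [0.3, 0.0, 0.7],
]
pi = stationary_distribution(P)
# Probability of transmitting, conditioned on real data being available (states 1 or 2).
p_tx_given_real = pi[2] / (pi[1] + pi[2])
print(pi, p_tx_given_real)
```

For this toy chain the exact solution is π = (6/13, 3/13, 4/13), so the conditional probability is 4/7.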

Performance Observations
• Best when there is plenty of cover traffic to carry the real traffic
  – Similar to the intuition for constant-rate cover traffic
• But mismatches between real and cover traffic are painful:
  – cover too small: wait time hurts
  – cover too large: real traffic has to wait for the next cover to begin
• Even when the size ratio is favorable, low utilization yields high slowdown
• Waiting times dominate the effects of padding and network transmission

Outline
• Introduction
• TrafficMimic design and implementation
• Independent cover traffic evaluation
• Simulation and modeling performance study
• Improving performance with biasing
• Conclusions and future work

Performance Enhancements
• So far: prevent attacks using independent cover traffic
  – Attacker inference cannot recover information about the real flow
• Can we relax strict independence without sacrificing security?
  – Against both traffic analysis and defense detection
  – Need to quantify the security impact
• Concept: influence the traffic generation process with biasing

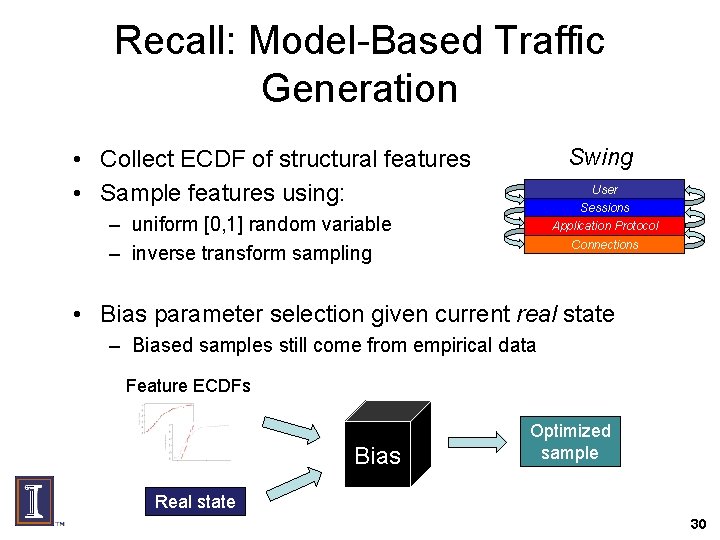

Recall: Model-Based Traffic Generation
• Swing collects ECDFs of structural features (user sessions, connections, application protocol)
• Sample features using:
  – a uniform [0, 1] random variable
  – inverse transform sampling
• Bias parameter selection given the current real state
  – Biased samples still come from the empirical data
(Figure: feature ECDFs, the bias, and the real state combine to produce an optimized sample.)
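Inverse transform sampling from an empirical CDF can be sketched as follows; this is a generic illustration, not Swing's code, and the class name `EmpiricalSampler` and the sample sizes are hypothetical. An unbiased draw feeds a uniform u into the inverse ECDF; a biased draw simply feeds a different u, so every result is still one of the observed values.

```python
import random


class EmpiricalSampler:
    """Inverse transform sampling from the empirical distribution of observed values."""

    def __init__(self, observations):
        if not observations:
            raise ValueError("need at least one observation")
        self.values = sorted(observations)

    def inverse_cdf(self, u):
        """Map u in [0, 1) to an observed value; each observation gets probability mass 1/n."""
        n = len(self.values)
        return self.values[min(int(u * n), n - 1)]

    def sample(self, u=None):
        """Unbiased draw when u is None (u ~ Uniform[0, 1)); a biased u may be supplied instead."""
        if u is None:
            u = random.random()
        return self.inverse_cdf(u)


# Hypothetical observed response sizes in bytes.
sizes = EmpiricalSampler([300, 100, 200, 400])
print(sizes.inverse_cdf(0.26), sizes.sample())
```

Keeping the bias in the choice of u, rather than in the sampled values, is what lets biased samples remain drawn from the empirical data.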

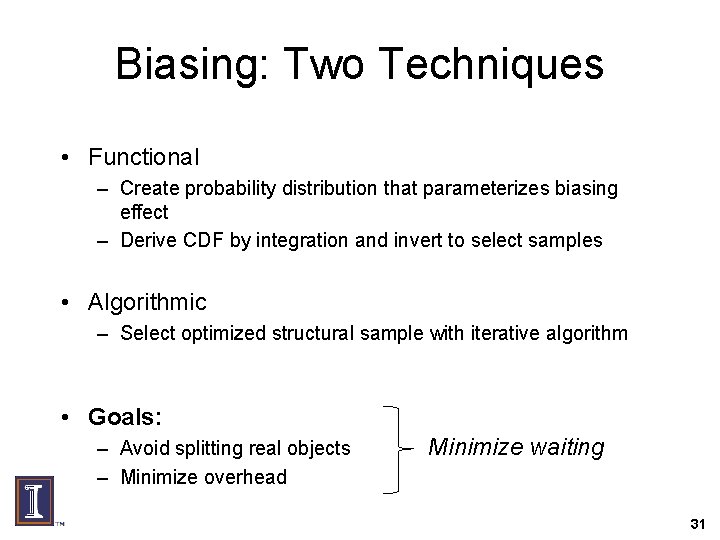

Biasing: Two Techniques
• Functional
  – Create a probability distribution that parameterizes the biasing effect
  – Derive the CDF by integration and invert it to select samples
• Algorithmic
  – Select an optimized structural sample with an iterative algorithm
• Goals:
  – Avoid splitting real objects
  – Minimize overhead
  – Minimize waiting

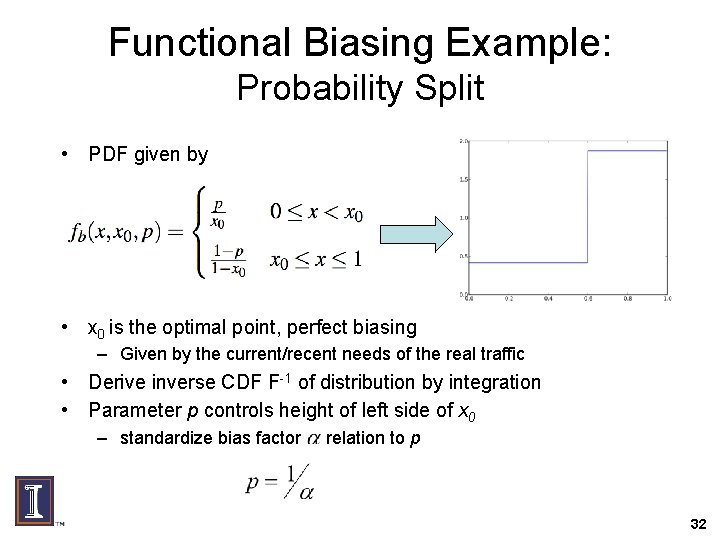

Functional Biasing Example: Probability Split
• PDF given by:
• x0 is the optimal point (perfect biasing)
  – Given by the current/recent needs of the real traffic
• Derive the inverse CDF F⁻¹ of the distribution by integration
• Parameter p controls the height of the distribution to the left of x0
  – Standardize the bias factor's relation to p
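The slide's PDF is not reproduced in this text, so the sketch below uses a hypothetical stand-in with the described shape: a two-piece step density on [0, 1] whose height left of x0 is p, with the right side absorbing the remaining mass. Integrating gives F(u) = p·u for u < x0 and a linear piece above x0, and inverting those pieces gives the sampler. The function name `split_inverse_cdf` is hypothetical.

```python
import random


def split_inverse_cdf(v, x0, p):
    """Inverse CDF of a hypothetical two-piece step density on [0, 1].

    Density: f(u) = p on [0, x0) and f(u) = (1 - p*x0) / (1 - x0) on [x0, 1],
    so p sets the height left of x0 and the right side absorbs the remaining mass.
    Requires 0 < x0 < 1, 0 <= p < 1/x0 and v in [0, 1].
    """
    if not 0 < x0 < 1 or not 0 <= p < 1 / x0 or not 0 <= v <= 1:
        raise ValueError("parameters out of range")
    left_mass = p * x0  # F(x0), from integrating the left piece
    if v < left_mass:
        return v / p  # invert F(u) = p*u on [0, x0)
    right_height = (1 - left_mass) / (1 - x0)
    return x0 + (v - left_mass) / right_height  # invert the right piece of F


# Biased u-values can replace a uniform draw when sampling from an ECDF.
draws = [split_inverse_cdf(random.random(), x0=0.7, p=0.2) for _ in range(10_000)]
# Fraction of draws at or above x0; its expected value is 1 - p*x0 = 0.86.
print(sum(d >= 0.7 for d in draws) / len(draws))
```

With p = 1 the density is uniform and no bias is applied; smaller p pushes samples toward x0 and above.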

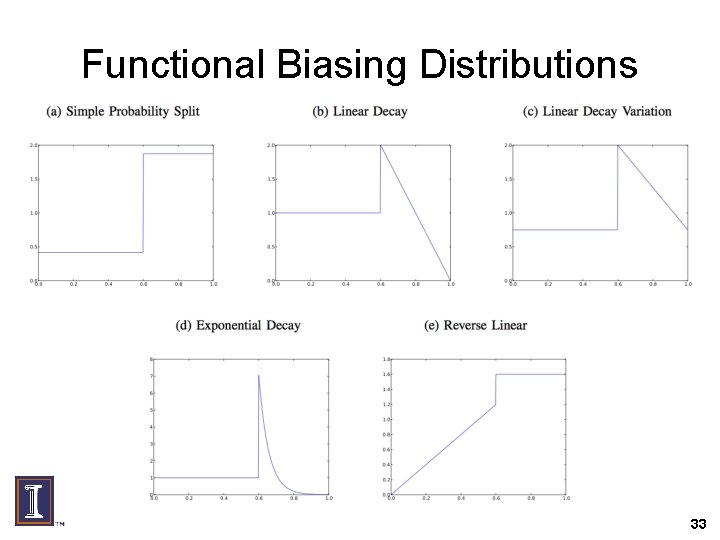

Functional Biasing Distributions

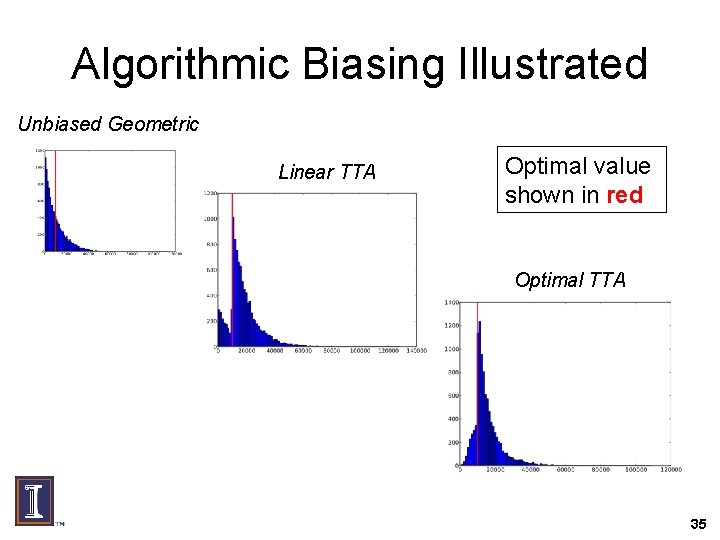

Algorithmic Biasing
• Directly try to achieve the biasing goals with an algorithm
• Try Again (TTA):
  – Select a sample from the empirical CDF
  – If it is greater than the real buffer size, use it
  – If not, repeat up to R times
• The linear algorithm takes the first sample that does not split
• The optimal algorithm finds the minimum of all R samples that does not split
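The two TTA variants can be sketched directly from the steps above. What happens when none of the R samples avoids a split is not stated on the slide; falling back to the largest draw is an assumption here, as are the names `linear_tta`, `optimal_tta`, and `draw`. A sample equal to the buffer size is treated as not splitting.

```python
import random


def linear_tta(draw, buffer_size, R):
    """Linear TTA: return the first of up to R draws that does not split the real buffer."""
    samples = []
    for _ in range(R):
        s = draw()
        if s >= buffer_size:  # cover is large enough to carry the whole buffer
            return s
        samples.append(s)
    return max(samples)  # assumption: if every draw splits, use the largest one


def optimal_tta(draw, buffer_size, R):
    """Optimal TTA: draw R samples and return the smallest one that does not split."""
    samples = [draw() for _ in range(R)]
    fitting = [s for s in samples if s >= buffer_size]
    return min(fitting) if fitting else max(samples)  # same fallback assumption


# Hypothetical empirical cover sizes in bytes; `draw` stands in for an ECDF sample.
covers = [200, 500, 1_000, 4_000, 16_000]


def draw():
    return random.choice(covers)


print(linear_tta(draw, 900, R=5), optimal_tta(draw, 900, R=5))
```

The linear variant can stop early but may accept a much larger cover than needed (more padding); the optimal variant always draws R samples and picks the tightest fit among them.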

Algorithmic Biasing Illustrated
(Figure panels: Unbiased, Geometric, Linear TTA, and Optimal TTA; the optimal value is shown in red.)

Simulator
• Simulate 20,000 real objects being sent over a variable number of covers
• Estimate performance with:
  – the number of cover sessions (num splits)
  – the excess padding needed in the final cover (overage)
• The simulation outputs results as tuples:
  – denote the random variables corresponding to these values Qsim and Csim
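The two performance measures can be computed per object with a short loop, sketched below in Python. The cover-size list and the uniform real-size distribution in the example run are hypothetical and are not the thesis's simulation inputs; `send_object` is an illustrative name.

```python
import random


def send_object(real_size, draw_cover):
    """Carry one real object over successive cover sessions.

    Returns (num_splits, overage): the number of cover sessions used and the
    padding left over in the final cover session.
    """
    sessions = 0
    remaining = real_size
    while True:
        cover = draw_cover()
        if cover <= 0:
            raise ValueError("cover sizes must be positive")
        sessions += 1
        if cover >= remaining:
            return sessions, cover - remaining
        remaining -= cover


# Hypothetical run: 20,000 real objects over independently drawn cover sizes.
random.seed(1)
covers = [500, 2_000, 8_000, 32_000]
results = [send_object(random.randint(1, 20_000), lambda: random.choice(covers))
           for _ in range(20_000)]
mean_splits = sum(s for s, _ in results) / len(results)
mean_overage = sum(o for _, o in results) / len(results)
print(f"mean sessions {mean_splits:.2f}, mean overage {mean_overage:.0f} bytes")
```

Swapping the lambda for a biased selector (for example, one of the TTA functions) is how the effect of biasing on both measures could be compared.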

Attacking Biasing
• Deduce the real size from an observed cover size using:
  – Bayesian inference
  – maximum likelihood estimation
• A real attack would use the history of multiple cover sizes
  – Approximate the real attack by inferring an estimate qest from individual ci observations
• The theoretical attack is used to quantify the security impact of biasing
  – In practice it does not recover actionable information (accuracy ~5%)
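A minimal sketch of both estimators, trained on simulator tuples of binned real and cover sizes, might look like this. The binning, the class name `CoverSizeAttack`, and the toy counts are hypothetical; the point is the difference between maximizing P(q | c), which includes the prior on q, and maximizing P(c | q), which does not.

```python
from collections import Counter


class CoverSizeAttack:
    """Infer a binned real size q from one observed binned cover size c.

    Trained on simulator tuples (q, c). MAP maximizes P(q | c), which is
    proportional to P(c | q) P(q); MLE maximizes P(c | q) alone (no prior on q).
    """

    def __init__(self, pairs):
        pairs = list(pairs)
        self.joint = Counter(pairs)                   # counts of (q, c)
        self.q_counts = Counter(q for q, _ in pairs)  # counts of q

    def _scores(self, c, use_prior):
        scores = {}
        for (q, cc), n in self.joint.items():
            if cc == c:
                # n is proportional to P(q | c); n / count(q) equals P(c | q)
                scores[q] = n if use_prior else n / self.q_counts[q]
        return scores

    def map_estimate(self, c):
        scores = self._scores(c, use_prior=True)
        return max(scores, key=scores.get) if scores else None

    def mle_estimate(self, c):
        scores = self._scores(c, use_prior=False)
        return max(scores, key=scores.get) if scores else None


# Toy training tuples (q bin, c bin): real size 2 is common, real size 1 is rare.
pairs = [(1, 10)] * 2 + [(1, 20)] * 2 + [(2, 10)] * 3 + [(2, 20)] * 13
attack = CoverSizeAttack(pairs)
print(attack.map_estimate(10), attack.mle_estimate(10))
```

On this toy data the two estimators disagree for c = 10: the prior favors the common real size under MAP, while MLE favors the size for which c = 10 is relatively most likely.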

Quantifying Information Leakage
• Use information theory: mutual information (MI)
• Intuitively: given two random variables Qsim and Qest, MI measures how much knowing one variable reduces our uncertainty about the other
  – Measured in bits per sample pair
• Mathematically:
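The slide's own equation is not reproduced in this text. For reference, the standard definition of mutual information for discrete random variables, written with the slide's variable names (q ranging over values of Qsim, q̂ over values of Qest), is given below; the second form restates the "reduces our uncertainty" intuition.

```latex
I(Q_{sim}; Q_{est})
  = \sum_{q} \sum_{\hat{q}} p(q, \hat{q}) \, \log_2 \frac{p(q, \hat{q})}{p(q)\, p(\hat{q})}
  = H(Q_{sim}) - H(Q_{sim} \mid Q_{est})
```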

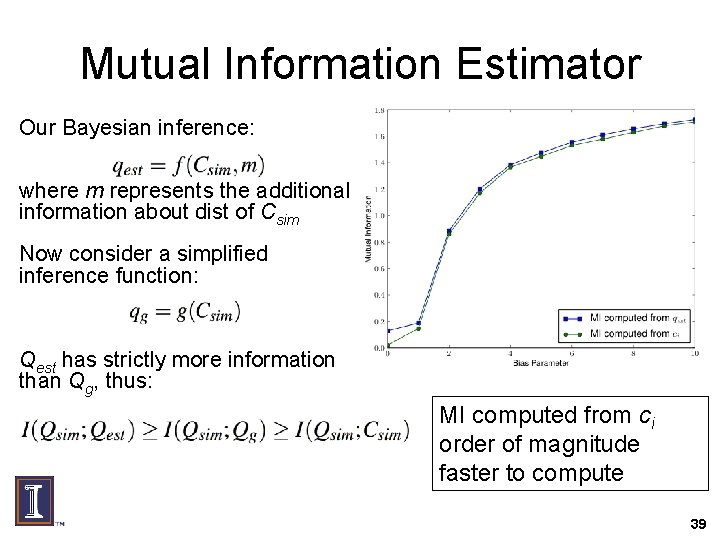

Mutual Information Estimator
• Our Bayesian inference:
  – where m represents the additional information about the distribution of Csim
• Now consider a simplified inference function:
• Qest has strictly more information than Qg, thus:
• MI computed from ci is an order of magnitude faster to compute
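A plug-in MI estimate from paired samples, such as (qsim, qest) or (qsim, ci) pairs, can be sketched as follows. This is a generic estimator, not necessarily the one used in the thesis; the function name is hypothetical, and plug-in estimates are biased upward when there are few samples per bin.

```python
import math
from collections import Counter


def mutual_information(pairs):
    """Plug-in estimate of I(X; Y) in bits from a list of (x, y) sample pairs."""
    n = len(pairs)
    joint = Counter(pairs)
    px = Counter(x for x, _ in pairs)
    py = Counter(y for _, y in pairs)
    mi = 0.0
    for (x, y), nxy in joint.items():
        # p(x,y) * log2(p(x,y) / (p(x) p(y))), with each probability written as count / n
        mi += (nxy / n) * math.log2(nxy * n / (px[x] * py[y]))
    return mi


# Full leakage of a 1-bit quantity: the estimate always equals the real value.
print(mutual_information([(0, 0), (1, 1)] * 50))
```

Independent variables give an estimate near zero, while an estimate that always matches a uniformly distributed binary real value gives one bit per sample pair.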

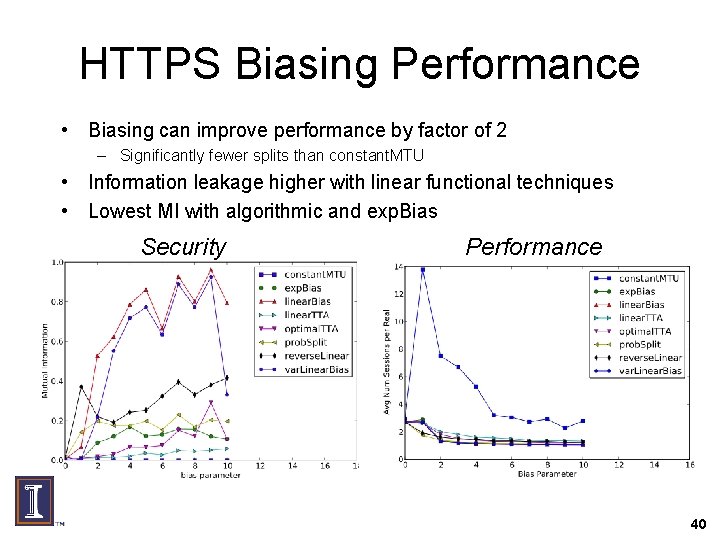

HTTPS Biasing Performance
• Biasing can improve performance by a factor of 2
  – Significantly fewer splits than constantMTU
• Information leakage is higher with linear functional techniques
• Lowest MI with algorithmic and exp. bias
(Figure panels: security and performance.)

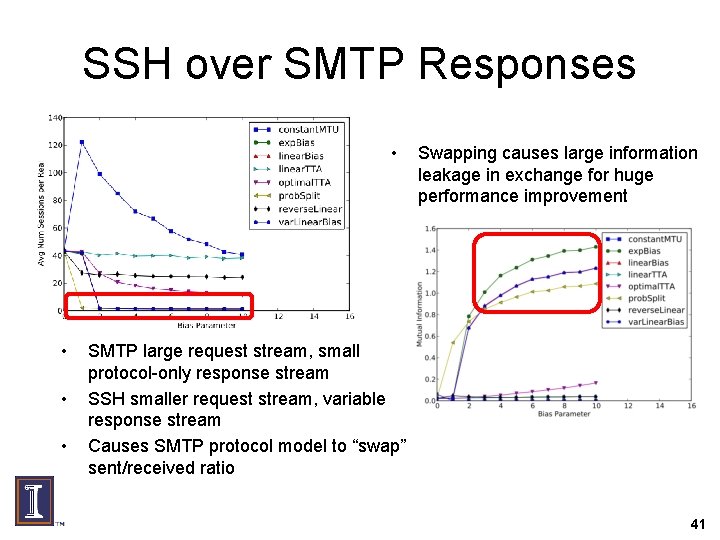

SSH over SMTP Responses
• SMTP: large request stream, small protocol-only response stream
• SSH: smaller request stream, variable response stream
• This causes the SMTP protocol model to “swap” its sent/received ratio
• Swapping causes large information leakage in exchange for a huge performance improvement

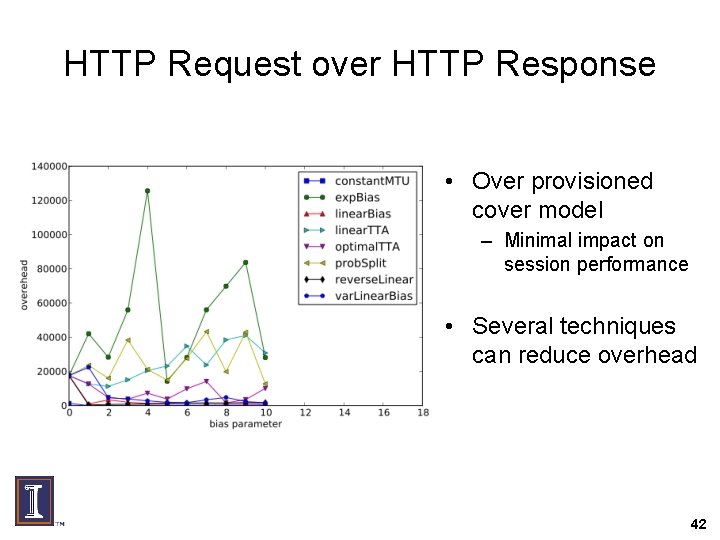

HTTP Request over HTTP Response
• Over-provisioned cover model
  – Minimal impact on session performance
• Several techniques can reduce overhead

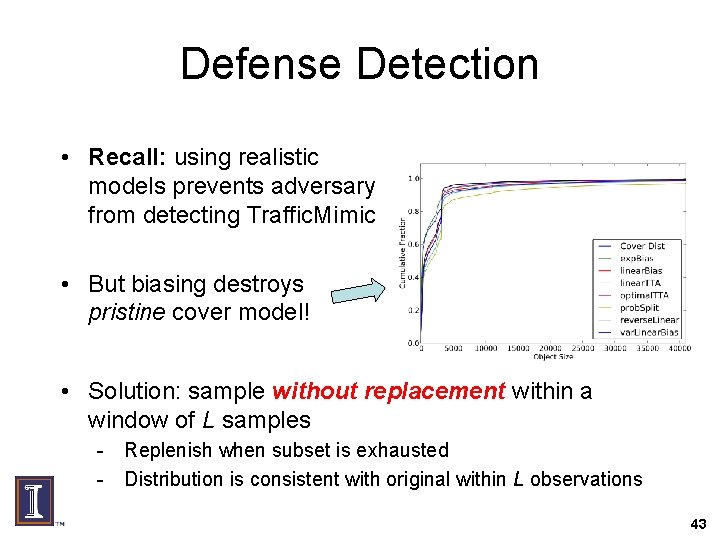

Defense Detection
• Recall: using realistic models prevents the adversary from detecting TrafficMimic
• But biasing destroys the pristine cover model!
• Solution: sample without replacement within a window of L samples
  – Replenish when the subset is exhausted
  – The distribution is consistent with the original within L observations
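The windowed scheme can be sketched in Python: draw L values from the empirical data, let the biasing rule choose among the values still in the window, and only redraw once the window is used up. Using an OptimalTTA-style rule (smallest remaining value that avoids a split) as the selection step is an assumption, as are the class and parameter names.

```python
import random


class WindowedSampler:
    """Biased selection without replacement from a window of L empirical samples.

    Each window of L values is drawn from the empirical data and then used up
    completely, so the L selections between two replenishments are exactly L
    ordinary ECDF draws; biasing only changes the order in which they are emitted.
    """

    def __init__(self, observations, L, rng=None):
        self.observations = list(observations)
        self.L = L
        self.rng = rng if rng is not None else random.Random()
        self.window = []

    def _replenish(self):
        # L independent draws from the empirical distribution (uniform over observations).
        self.window = [self.rng.choice(self.observations) for _ in range(self.L)]

    def select(self, buffer_size):
        """Remove and return the smallest remaining value that avoids a split, else the largest."""
        if not self.window:
            self._replenish()
        fitting = [v for v in self.window if v >= buffer_size]
        choice = min(fitting) if fitting else max(self.window)
        self.window.remove(choice)
        return choice


# Hypothetical cover sizes in bytes and window size L = 100.
sampler = WindowedSampler([500, 2_000, 8_000, 32_000], L=100, rng=random.Random(7))
print([sampler.select(3_000) for _ in range(5)])
```

Larger L gives the biasing rule more candidates to choose from but lets the emitted sequence drift further from the model over short observation windows, which is the trade-off the KS-test attack probes.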

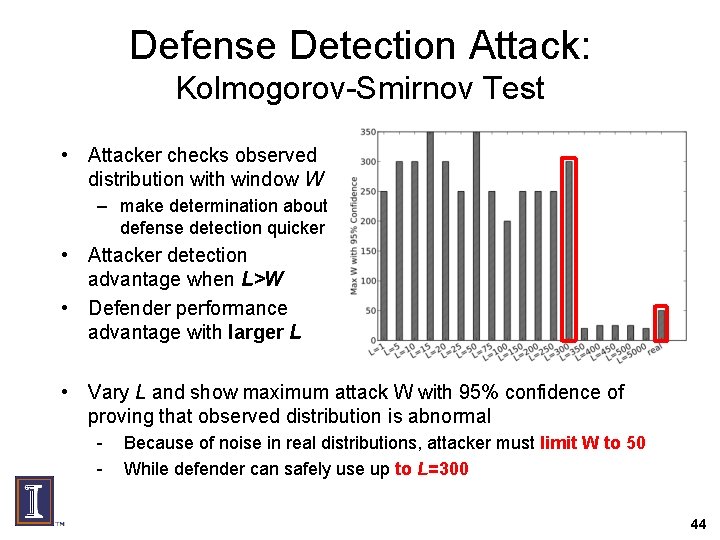

Defense Detection Attack: Kolmogorov-Smirnov Test
• The attacker checks the observed distribution over a window of W observations
  – A smaller W makes the defense detection decision quicker
• The attacker has a detection advantage when L > W
• The defender has a performance advantage with larger L
• Vary L and show the maximum attack window W that gives 95% confidence of proving the observed distribution is abnormal
  – Because of noise in real distributions, the attacker must limit W to 50, while the defender can safely use up to L=300
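A two-sample KS check of the kind described above can be sketched with the standard library. The function names, the exponential reference data, and the choice of a two-sample (rather than one-sample) test are assumptions; the critical value uses the usual large-sample approximation c(α)·sqrt((n+m)/(nm)), which is only rough for small windows.

```python
import bisect
import math
import random


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic D = sup_x |F_a(x) - F_b(x)|."""
    a, b = sorted(sample_a), sorted(sample_b)
    d = 0.0
    for x in a + b:  # both ECDFs are step functions, so the sup occurs at a sample point
        fa = bisect.bisect_right(a, x) / len(a)
        fb = bisect.bisect_right(b, x) / len(b)
        d = max(d, abs(fa - fb))
    return d


def ks_rejects(sample_a, sample_b, alpha=0.05):
    """Large-sample test: reject 'same distribution' if D > c(alpha) * sqrt((n+m)/(n*m))."""
    n, m = len(sample_a), len(sample_b)
    c_alpha = math.sqrt(-math.log(alpha / 2) / 2)  # about 1.358 for alpha = 0.05
    return ks_statistic(sample_a, sample_b) > c_alpha * math.sqrt((n + m) / (n * m))


# Hypothetical check: the last W = 50 observed sizes against a reference sample.
rng = random.Random(3)
reference = [rng.expovariate(1 / 4_000) for _ in range(5_000)]
window = [rng.expovariate(1 / 4_000) for _ in range(50)]
print(ks_rejects(window, reference))
```

Because the critical value shrinks as the window grows, a larger W lets the attacker detect smaller deviations, which is why noise in real traffic forces the attacker to keep W small.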

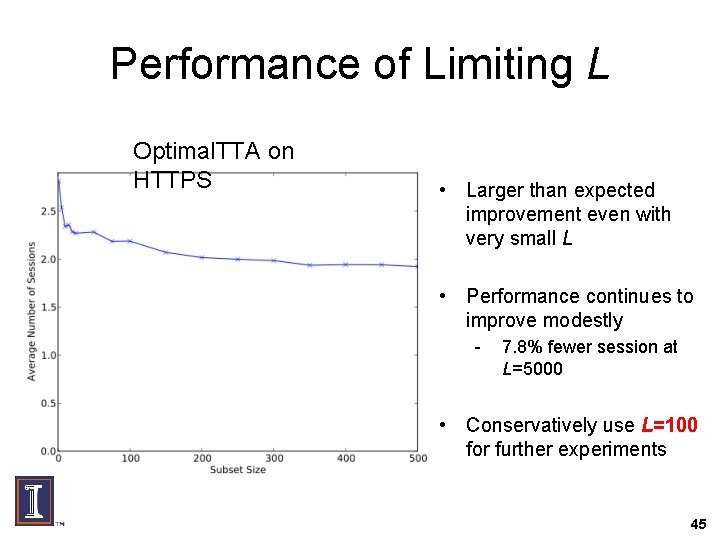

Performance of Limiting L (OptimalTTA on HTTPS)
• Larger than expected improvement even with very small L
• Performance continues to improve modestly
  – 7.8% fewer sessions at L=5000
• Conservatively use L=100 for further experiments

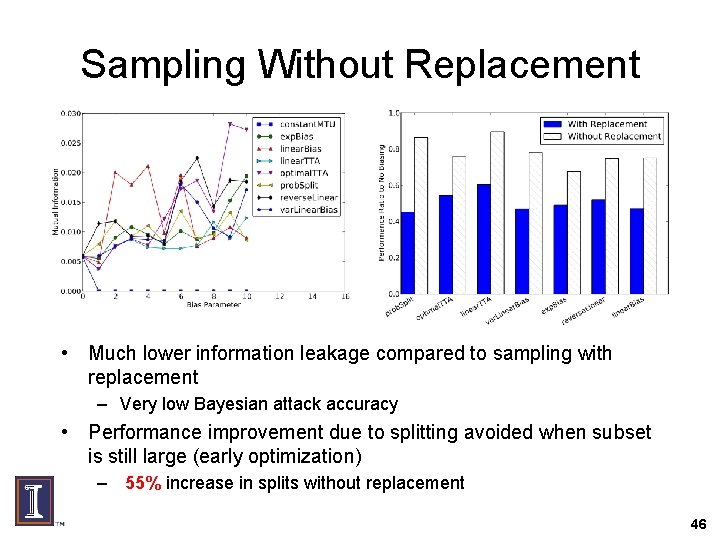

Sampling Without Replacement
• Much lower information leakage compared to sampling with replacement
  – Very low Bayesian attack accuracy
• The performance improvement comes from splits avoided while the subset is still large (early optimization)
  – 55% increase in splits without replacement

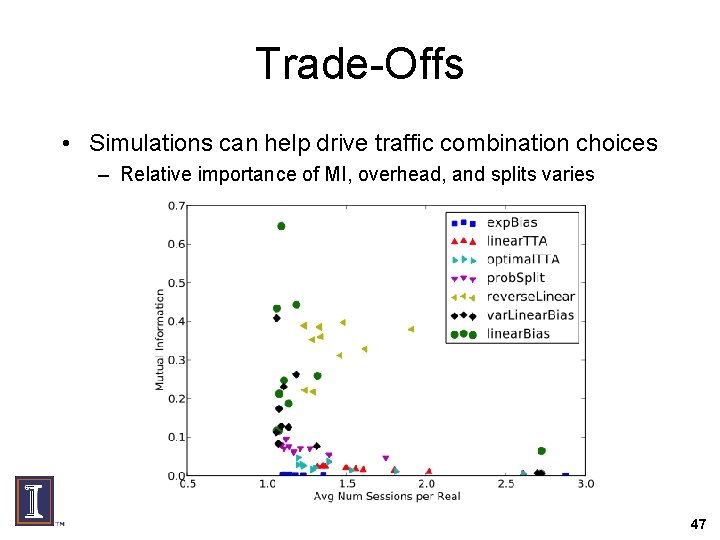

Trade-Offs
• Simulations can help drive traffic combination choices
  – The relative importance of MI, overhead, and splits varies

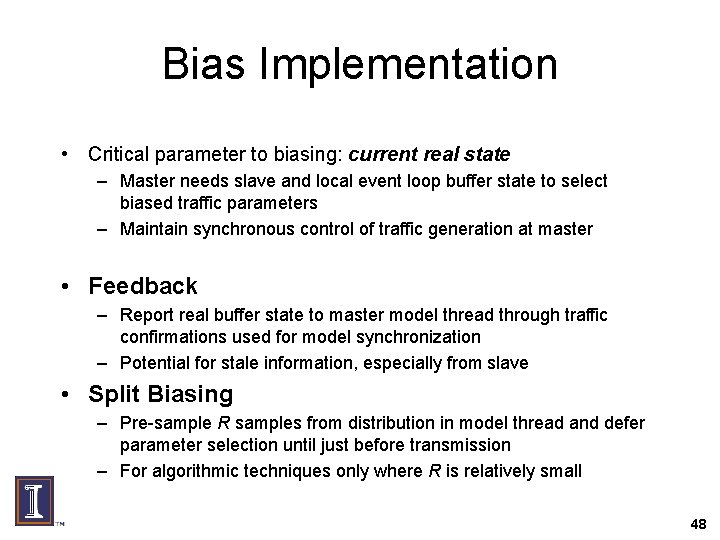

Bias Implementation
• Critical parameter for biasing: the current real state
  – The master needs the slave's and its local event loop's buffer state to select biased traffic parameters
  – Maintain synchronous control of traffic generation at the master
• Feedback
  – Report the real buffer state to the master model thread through the traffic confirmations used for model synchronization
  – Potential for stale information, especially from the slave
• Split Biasing
  – Pre-sample R samples from the distribution in the model thread and defer parameter selection until just before transmission
  – For algorithmic techniques only, where R is relatively small

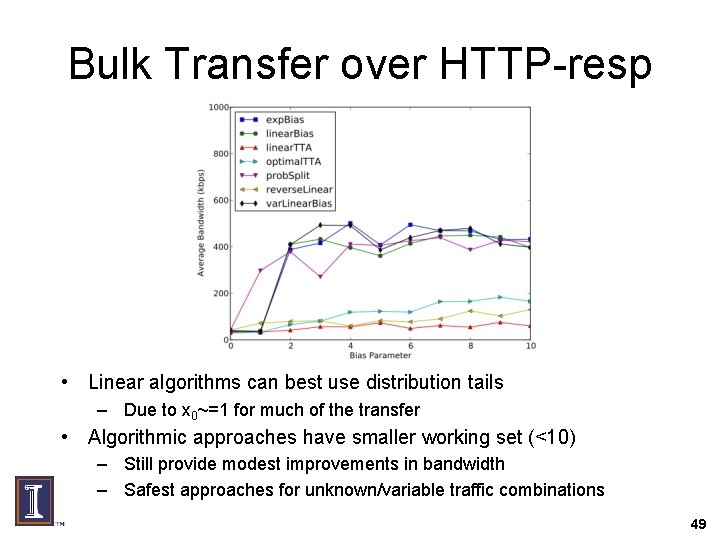

Bulk Transfer over HTTP-resp
• Linear algorithms can best use the distribution tails
  – Due to x0 ≈ 1 for much of the transfer
• Algorithmic approaches have a smaller working set (<10)
  – Still provide modest improvements in bandwidth
  – Safest approaches for unknown/variable traffic combinations

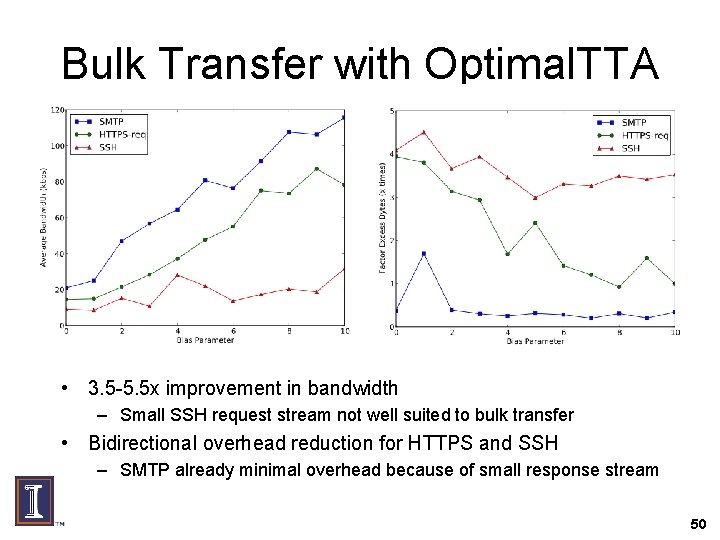

Bulk Transfer with OptimalTTA
• 3.5-5.5x improvement in bandwidth
  – The small SSH request stream is not well suited to bulk transfer
• Bidirectional overhead reduction for HTTPS and SSH
  – SMTP already has minimal overhead because of its small response stream

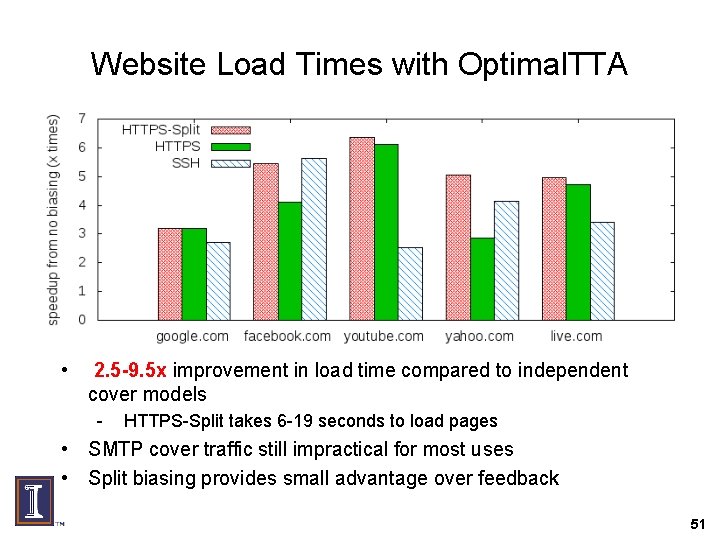

Website Load Times with OptimalTTA
• 2.5-9.5x improvement in load time compared to independent cover models
  – HTTPS-Split takes 6-19 seconds to load pages
• SMTP cover traffic is still impractical for most uses
• Split biasing provides a small advantage over feedback

Outline
• Introduction
• TrafficMimic design and implementation
• Independent cover traffic evaluation
• Simulation and modeling performance study
• Improving performance with biasing
• Conclusions and future work

Recall: Thesis Statement
• Robust defense against powerful attacks
  – Tested against generic protocol classification, Bayesian inference, and KS-test attacks
• Offers users more performance/security trade-offs
  – The biasing study helps users decide how to combine protocols
  – Algorithmic biasing provides the safest performance boost
• Practical design, evaluation, and deployment
  – Used real network data, system implementations, and Internet evaluation to prove effectiveness
  – Provided integration with existing privacy tools to aid adoption

Conclusions
• Traffic analysis is a daunting security challenge with few deployable defenses
  – New and existing attacks are growing in effectiveness
• TrafficMimic addresses the traffic analysis defense gap
  – Mimicked realistic cover traffic, reaching 91% of the classification rate of real traffic against a powerful protocol classification attack
  – Resists defense detection and traffic analysis simultaneously
  – Up to 9x performance increase with biasing

Usable Technology Transition
• Integration with existing services
  – E.g., the Tor anonymity network, VPN clients
• Integrate with the OpenSSL library
  – Allows arbitrary applications to use TrafficMimic without code modification
  – Platform dependent if using library hooking
• Continue development/adoption of TrafficMimic as a stand-alone proxy
  – Could be a jack of all trades, but master of none
