
6.894: Distributed Operating System Engineering

Lecturers: Frans Kaashoek (kaashoek@mit.edu), Robert Morris (rtm@lcs.mit.edu)
TA: Jinyang Li (jinyang@lcs.mit.edu)
www.pdos.lcs.mit.edu/6.894

Operating System
• Software that turns silicon into something useful
  – Provides applications with a programming interface
  – Manages hardware resources on behalf of applications

Distributed Operating System
• The holy grail: transparency
  – Provide applications with a virtual machine consisting of many processors distributed around the network
• Distributed OS engineering is difficult:
  – Failures
  – High degree of concurrency
  – Long latencies
  – New classes of security attacks

Client/Server Architecture
• A modular architecture to structure distributed systems
  – Clients request services from servers
  – Clients and servers communicate with messages
  – Servers are typically trusted
• Other architectures
  – Peer-to-peer (decentralized)
  – Single address space

6.894 topics
• Client-server components
  – Remote procedure call, threads, address spaces, etc.
• Storage
  – File systems, transactions
• Security
  – Confidentiality, authentication, etc.
• Scalable servers

6.894 is an advanced 6.033
• Perform actual systems research
  – Perform a research project
  – Study recent research papers
• Design systems for real workloads
  – New abstractions, protocols, data structures, algorithms, etc.
• Build a real system (lab)
  – Real enough that you can use it

Internet video-on-demand server
• Example to study issues and give an overview of 6.894
• Requirements:
  – Low- and high-quality video
  – Many users, spread around the Internet
  – Last-mile bandwidth may be low
  – Access control

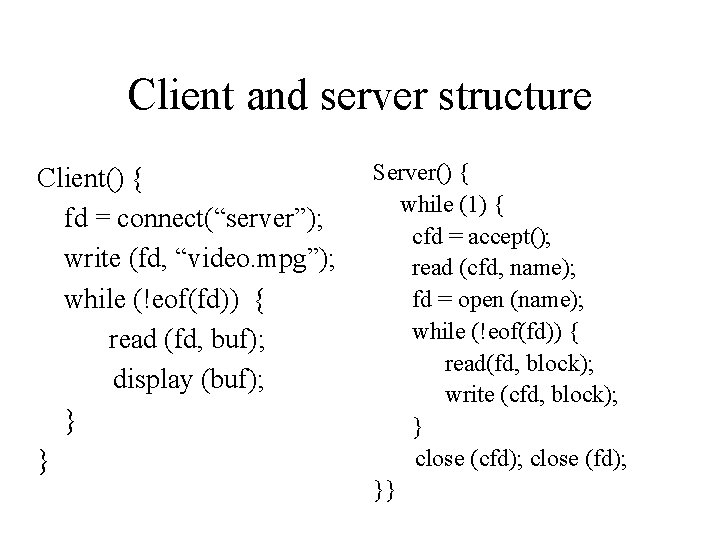

Client and server structure

    Client() {
      fd = connect("server");
      write(fd, "video.mpg");        // request a video by name
      while (!eof(fd)) {
        read(fd, buf);               // receive the next block
        display(buf);
      }
    }

    Server() {
      while (1) {
        cfd = accept();              // one client at a time
        read(cfd, name);
        fd = open(name);
        while (!eof(fd)) {
          read(fd, block);           // blocks on the disk
          write(cfd, block);         // blocks on the network
        }
        close(cfd);
        close(fd);
      }
    }

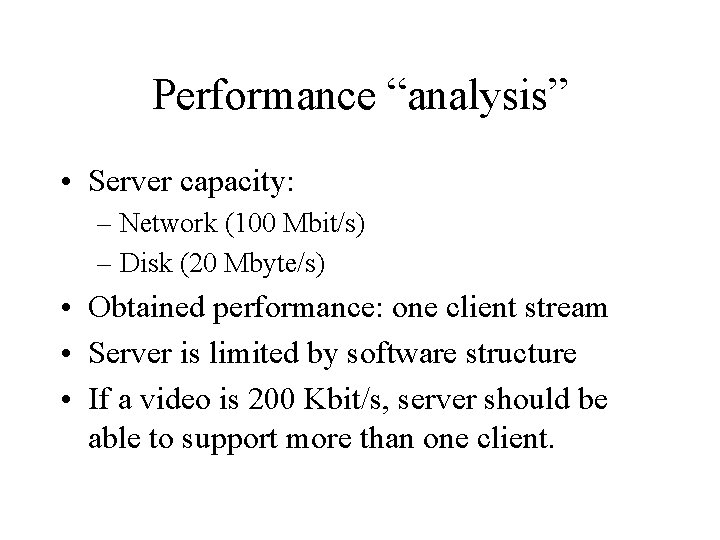

Performance “analysis”
• Server capacity:
  – Network (100 Mbit/s)
  – Disk (20 Mbyte/s)
• Obtained performance: one client stream
• Server is limited by software structure
• If a video is 200 Kbit/s, the server should be able to support more than one client
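
The capacity numbers above can be turned into a rough upper bound on concurrent streams. The sketch below is only back-of-the-envelope arithmetic: it assumes decimal units (1 Mbit/s = 1000 Kbit/s, 1 Mbyte/s = 8000 Kbit/s) and ignores disk seeks and protocol overhead. The function names are illustrative and not part of the course material.

```c
#include <assert.h>

/* Whole streams that fit in a given capacity; both rates in Kbit/s. */
static long max_streams(long capacity_kbps, long stream_kbps) {
    assert(stream_kbps > 0);
    return capacity_kbps / stream_kbps;
}

/* The slower of the two resources bounds the whole server. */
static long server_stream_limit(long net_kbps, long disk_kbps, long stream_kbps) {
    long by_net = max_streams(net_kbps, stream_kbps);
    long by_disk = max_streams(disk_kbps, stream_kbps);
    return by_net < by_disk ? by_net : by_disk;
}
```

With the slide's figures, the network allows `max_streams(100000, 200)` = 500 streams and the disk allows `max_streams(160000, 200)` = 800, so under these assumptions the network is the bottleneck at roughly 500 clients, far more than the single stream the simple server achieves.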

Better single-server performance
• Goal: run at server’s hardware speed
  – Disk or network should be the bottleneck
• Method:
  – Pipeline blocks of each request
  – Multiplex requests from multiple clients
• Two implementation approaches:
  – Multithreaded server
  – Asynchronous I/O

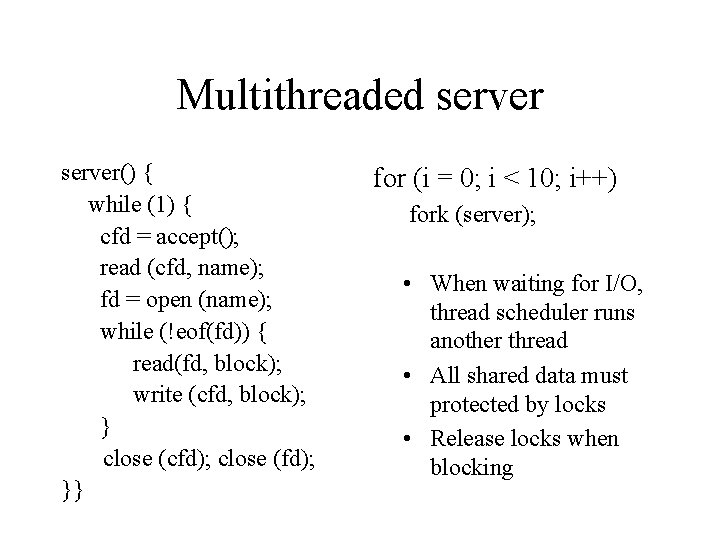

Multithreaded server

    server() {
      while (1) {
        cfd = accept();
        read(cfd, name);
        fd = open(name);
        while (!eof(fd)) {
          read(fd, block);
          write(cfd, block);
        }
        close(cfd);
        close(fd);
      }
    }

    // start ten server threads, each running the loop above
    for (i = 0; i < 10; i++)
      fork(server);

• When waiting for I/O, the thread scheduler runs another thread
• All shared data must be protected by locks
• Release locks when blocking

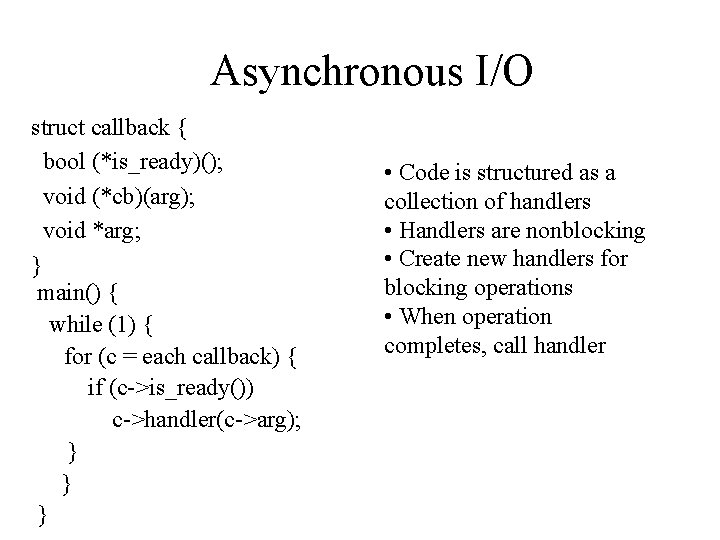

Asynchronous I/O

    struct callback {
      bool (*is_ready)();
      void (*handler)(arg);
      void *arg;
    }

    main() {
      while (1) {
        for (c = each callback) {
          if (c->is_ready())
            c->handler(c->arg);
        }
      }
    }

• Code is structured as a collection of handlers
• Handlers are nonblocking
• Create new handlers for blocking operations
• When operation completes, call handler
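
A compilable version of this dispatch loop might look like the sketch below. It is a minimal illustration, not the course's library: the fixed-size table, the one-shot semantics (a callback is removed before its handler runs and must re-register itself), and the names `add_callback`, `run_once`, and the `counter` demo are all assumptions made for the example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_CALLBACKS 16

struct callback {
    bool (*is_ready)(void *arg);   /* nonblocking readiness test */
    void (*handler)(void *arg);    /* nonblocking handler */
    void *arg;
    bool active;
};

static struct callback callbacks[MAX_CALLBACKS];

/* Register a callback; returns its slot index, or -1 if the table is full. */
static int add_callback(bool (*is_ready)(void *), void (*handler)(void *), void *arg) {
    assert(is_ready != NULL && handler != NULL);
    for (int i = 0; i < MAX_CALLBACKS; i++) {
        if (!callbacks[i].active) {
            callbacks[i] = (struct callback){ is_ready, handler, arg, true };
            return i;
        }
    }
    return -1;
}

/* One pass of the event loop: run every ready handler, return how many ran. */
static int run_once(void) {
    int ran = 0;
    for (int i = 0; i < MAX_CALLBACKS; i++) {
        if (callbacks[i].active && callbacks[i].is_ready(callbacks[i].arg)) {
            struct callback cur = callbacks[i];  /* copy: handler may reuse the slot */
            callbacks[i].active = false;         /* one-shot (assumed policy) */
            cur.handler(cur.arg);
            ran++;
        }
    }
    return ran;
}

/* Demo state: "ready" while work is pending; handler does one unit of work. */
struct counter { int pending; int handled; };

static bool counter_ready(void *arg) {
    return ((struct counter *)arg)->pending > 0;
}

static void counter_handler(void *arg) {
    struct counter *c = arg;
    c->pending--;
    c->handled++;
    if (c->pending > 0)
        add_callback(counter_ready, counter_handler, c);  /* re-register */
}
```

A real server would call `run_once()` in an infinite loop and would typically block in `select` or `poll` instead of spinning over `is_ready` tests; the spinning form is kept here only because it mirrors the slide.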

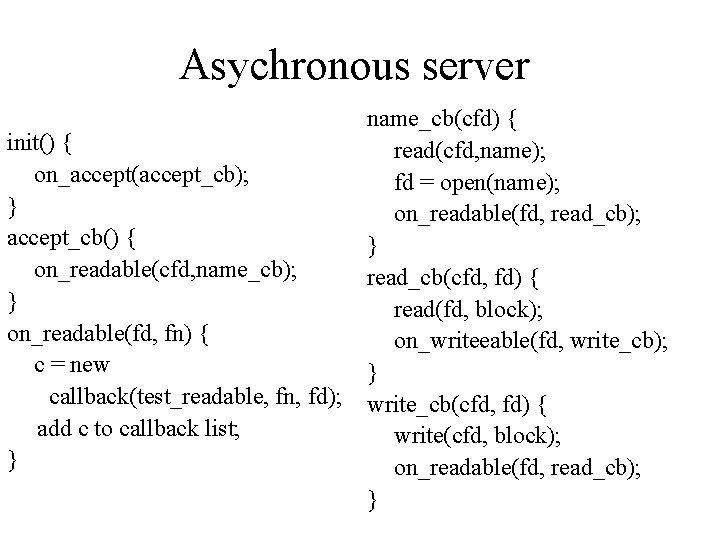

Asynchronous server

    init() {
      on_accept(accept_cb);
    }

    accept_cb() {
      cfd = accept();
      on_readable(cfd, name_cb);
    }

    on_readable(fd, fn) {
      c = new callback(test_readable, fn, fd);
      add c to callback list;
    }

    name_cb(cfd) {
      read(cfd, name);
      fd = open(name);
      on_readable(fd, read_cb);
    }

    read_cb(cfd, fd) {
      read(fd, block);
      on_writable(cfd, write_cb);   // wait until the client socket can take data
    }

    write_cb(cfd, fd) {
      write(cfd, block);
      on_readable(fd, read_cb);     // then fetch the next block from the file
    }

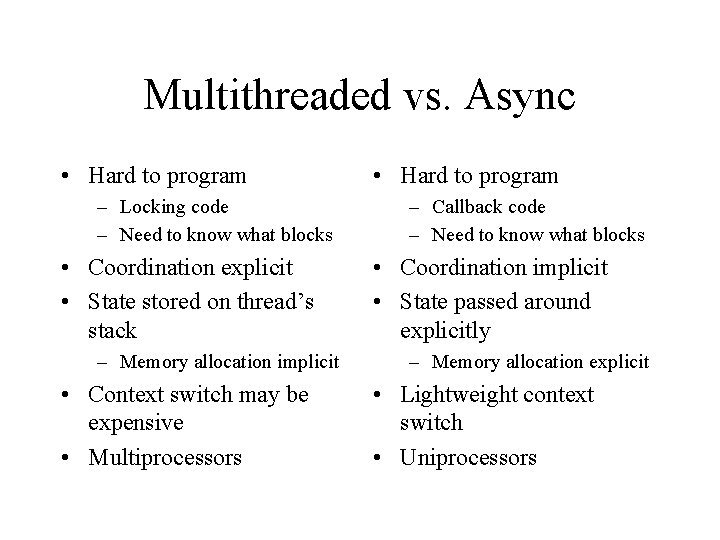

Multithreaded vs. Async

| Multithreaded | Async |
|---|---|
| Hard to program: locking code; need to know what blocks | Hard to program: callback code; need to know what blocks |
| Coordination explicit | Coordination implicit |
| State stored on thread’s stack; memory allocation implicit | State passed around explicitly; memory allocation explicit |
| Context switch may be expensive | Lightweight context switch |
| Multiprocessors | Uniprocessors |

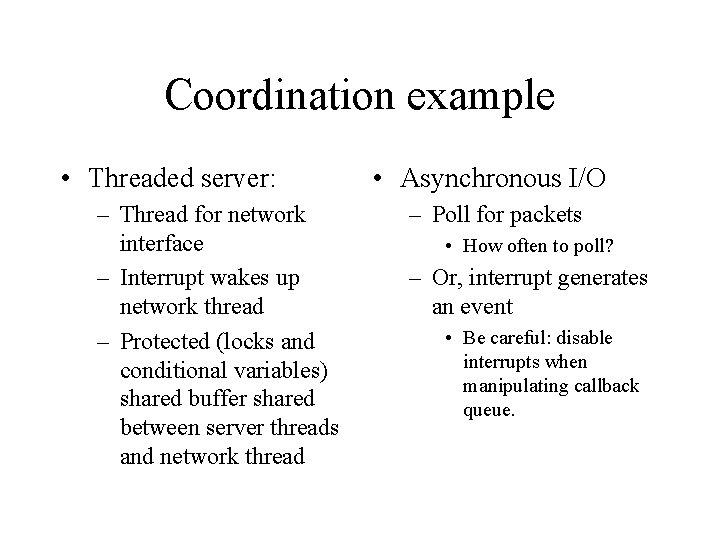

Coordination example
• Threaded server:
  – Thread for network interface
  – Interrupt wakes up network thread
  – Buffer shared between server threads and network thread, protected by locks and condition variables
• Asynchronous I/O
  – Poll for packets
    • How often to poll?
  – Or, interrupt generates an event
    • Be careful: disable interrupts when manipulating the callback queue

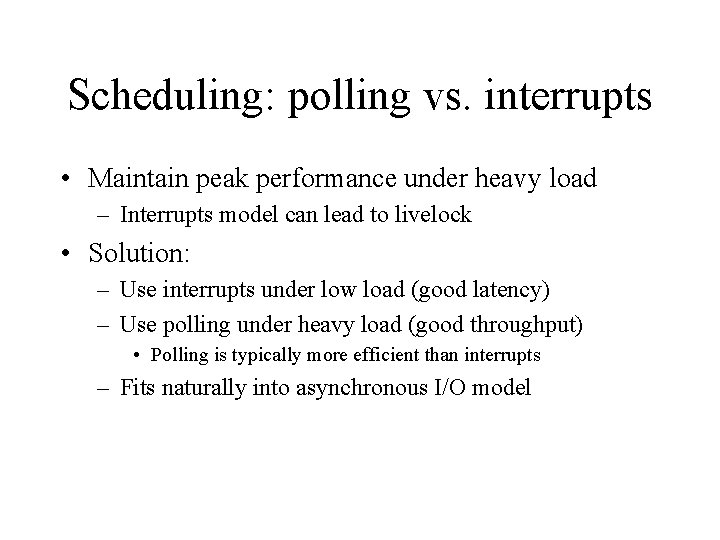

Scheduling: polling vs. interrupts
• Maintain peak performance under heavy load
  – Interrupt model can lead to livelock
• Solution:
  – Use interrupts under low load (good latency)
  – Use polling under heavy load (good throughput)
• Polling is typically more efficient than interrupts
  – Fits naturally into asynchronous I/O model
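
One way to picture the hybrid scheme is a small state machine that switches modes based on the receive backlog. The sketch below is an assumption-laden illustration: the slide does not say how load is measured, so the backlog count, the two thresholds, and the hysteresis between them are choices made for this example, as are all the names.

```c
#include <assert.h>
#include <stddef.h>

enum rx_mode { RX_INTERRUPT, RX_POLLING };

struct rx_sched {
    enum rx_mode mode;
    int high_water;  /* backlog at or above this: switch to polling */
    int low_water;   /* backlog at or below this: switch back to interrupts */
};

/* Update the receive mode from the current backlog of unprocessed packets. */
static enum rx_mode rx_update(struct rx_sched *s, int backlog) {
    assert(s != NULL && s->low_water < s->high_water);
    if (s->mode == RX_INTERRUPT && backlog >= s->high_water)
        s->mode = RX_POLLING;      /* heavy load: favor throughput */
    else if (s->mode == RX_POLLING && backlog <= s->low_water)
        s->mode = RX_INTERRUPT;    /* light load: favor latency */
    return s->mode;
}
```

Using two thresholds rather than one keeps the server from flapping between modes when the load hovers near a single cutoff.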

Other design issues
• Disk scheduling
  – Elevator algorithm
• Memory management
  – File system buffer cache
• Address spaces (VM management)
  – Fault-isolate different servers
• Efficient local communication?
• Efficient transfers between disk and networks
  – Avoid copies
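
As a reminder of what the elevator algorithm does, the sketch below orders a batch of pending block requests for a disk head that is currently moving toward higher block numbers: it serves everything at or above the head in ascending order, then sweeps back down. This is the basic SCAN variant written for illustration; the function names and the in-place array interface are assumptions, and a real disk scheduler would work on a live request queue.

```c
#include <assert.h>
#include <stdlib.h>

static int cmp_int(const void *a, const void *b) {
    int x = *(const int *)a, y = *(const int *)b;
    return (x > y) - (x < y);
}

static void reverse(int *a, size_t n) {
    for (size_t i = 0; i < n / 2; i++) {
        int t = a[i];
        a[i] = a[n - 1 - i];
        a[n - 1 - i] = t;
    }
}

/* Reorder reqs[0..n) into service order for a head at `head` moving upward. */
static void elevator_order(int *reqs, size_t n, int head) {
    assert(reqs != NULL || n == 0);
    if (n == 0)
        return;
    qsort(reqs, n, sizeof reqs[0], cmp_int);   /* [below head | at or above head] */
    size_t split = 0;
    while (split < n && reqs[split] < head)
        split++;
    reverse(reqs, n);                          /* [upper desc | lower desc] */
    reverse(reqs, n - split);                  /* [upper asc  | lower desc] */
}
```

For example, with the head at block 53 and requests 98, 183, 37, 122, 14, 124, 65, 67, the service order becomes 65, 67, 98, 122, 124, 183, 37, 14.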

More than one processor
• Problem: a single machine may not scale to enough clients
• Solutions:
  – Multiprocessors
    • Helps when CPU is the bottleneck
  – Server clusters
    • Helps when bandwidth between server and backbone is high
  – Distributed server clusters
    • Helps when bandwidth between client and distant server is low

Clusters
• Naming transparency
  – Server cluster transparent to client?
• Server selection
  – Metrics: CPU load, presence of data
• Consistency
  – Partition data
• Availability
  – More processors can decrease reliability
  – Replicate data (makes consistency more difficult)
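
The two selection metrics named above, CPU load and presence of data, could be combined in many ways. The sketch below shows one assumed policy for illustration only: prefer a server that already holds the requested data, break ties by lowest CPU load, and fall back to the least-loaded server if none has the data. The `struct server` fields and `select_server` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct server {
    const char *name;
    double cpu_load;   /* 0.0 (idle) to 1.0 (saturated) */
    bool has_data;     /* does this server hold the requested video? */
};

/* Returns the index of the chosen server, or -1 if the cluster is empty. */
static int select_server(const struct server *servers, size_t n) {
    assert(servers != NULL || n == 0);
    int best = -1;
    for (size_t i = 0; i < n; i++) {
        if (best < 0) {
            best = (int)i;
            continue;
        }
        const struct server *b = &servers[best];
        const struct server *c = &servers[i];
        bool better = (c->has_data && !b->has_data) ||
                      (c->has_data == b->has_data && c->cpu_load < b->cpu_load);
        if (better)
            best = (int)i;
    }
    return best;
}
```

A production selector would also need fresh load information, which ties into the slide's point about consistency and the cost of monitoring.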

Distributed clusters
• Replication policies
  – Data distribution
  – Consistency
• Network monitoring and modeling
• Global load balancing
  – Tradeoff between accuracy, latency, and network load

Making it secure: access control
• Redo design: don’t add security on afterwards
  – Firewalls: insecure and break many things
• CPU cycles are an issue
  – A secure HTTP server can do about 10-20 connections a second
• Pulls in other global issues
  – Name-to-key binding
  – Key management infrastructure

Example summary
• Pipelining of disk and network requests
  – Need a lot of sophisticated software infrastructure
• Replication for reliability and performance
  – Need sophisticated protocols
• Difficult: we did it for one application
  – What if data changes rapidly?
  – Lack of abstractions!

6.894 lab: real systems
• Multi-finger (due next week)
  – Asynchronous I/O
• HTTP proxy
  – High-performance proxy
  – Cache, consistency, etc.
• Open-ended file system project
  – Research
