6.829 Computer Networks, Lecture 4: Router-Assisted Congestion Control

- Slides: 33

6.829 Computer Networks, Lecture 4: Router-Assisted Congestion Control

Mohammad Alizadeh, Fall 2016

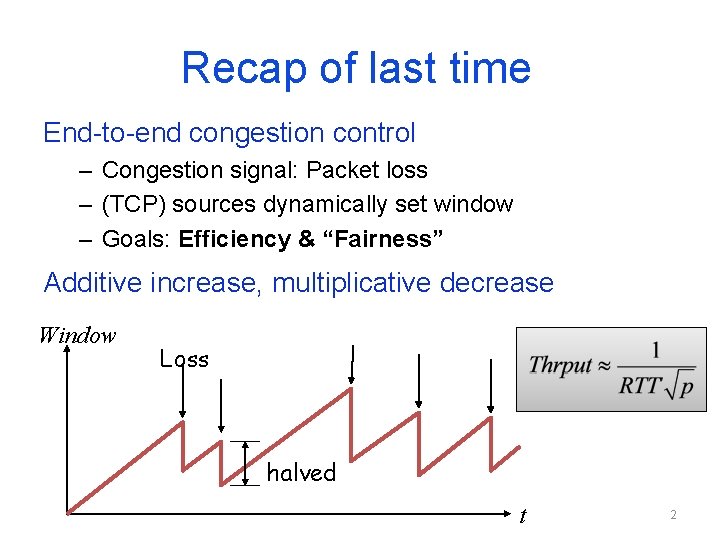

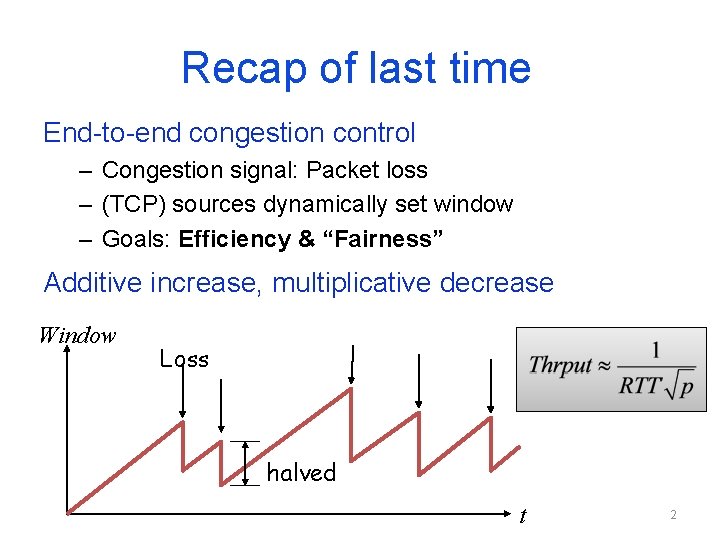

Recap of last time

End-to-end congestion control
- Congestion signal: packet loss
- (TCP) sources dynamically set window
- Goals: efficiency and “fairness”

Additive increase, multiplicative decrease

Figure: window versus time t; the window is halved on each loss.
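A minimal sketch of the additive-increase, multiplicative-decrease rule recapped above. The function name `aimd_trace`, the `capacity` threshold that stands in for "a loss occurred", and the one-packet floor are illustrative assumptions, not part of the slides.

```python
def aimd_trace(capacity, rounds, start=1.0, increase=1.0, decrease=0.5):
    """Return the window after each round trip for a single AIMD source.

    Assumption: a loss is signalled whenever the window exceeds `capacity`
    (a stand-in for buffer overflow at the bottleneck).
    """
    window = start
    trace = []
    for _ in range(rounds):
        if window > capacity:
            # Multiplicative decrease: halve the window on loss.
            window = max(1.0, window * decrease)
        else:
            # Additive increase: grow by a fixed amount per round trip.
            window += increase
        trace.append(window)
    return trace


trace = aimd_trace(capacity=10, rounds=30)
```

Plotting `trace` against the round index gives the window-versus-time shape shown in the recap figure.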

Routers can do more than drop packets

Congestion happens at the routers
- Have a lot of visibility
- Extent of congestion, queue size, which flows are misbehaving, etc.

E2E congestion control cannot enforce isolation
- Where the actions of one flow do not affect others

Protecting against uncooperative (e.g., malicious or buggy) sources needs router support


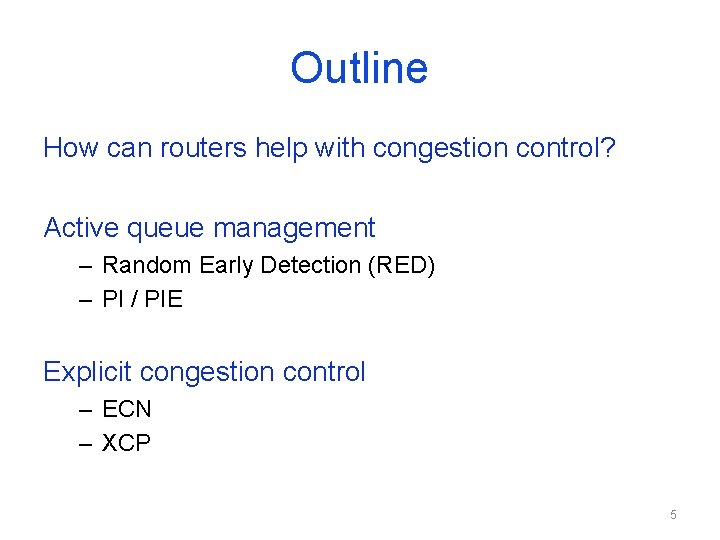

Outline

How can routers help with congestion control?
- Active queue management
  - Random Early Detection (RED)
  - PI / PIE
- Explicit congestion control
  - ECN
  - XCP

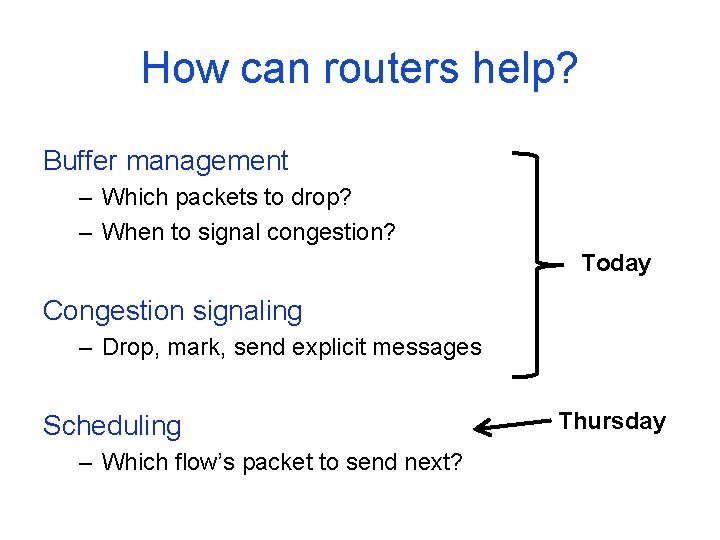

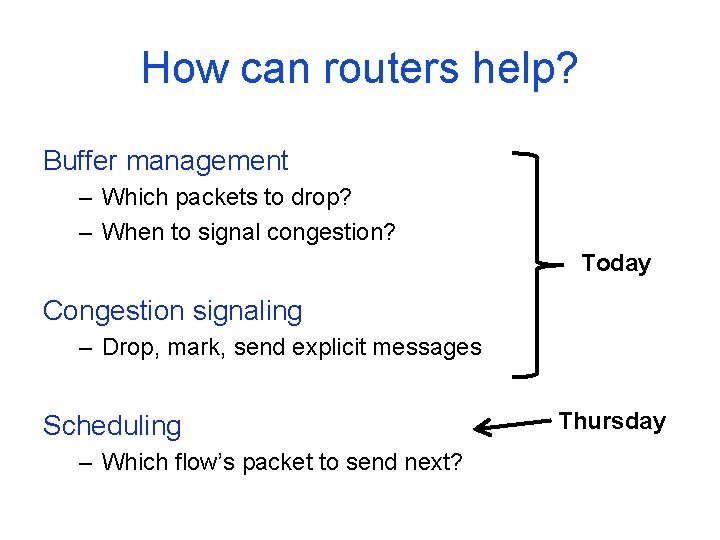

How can routers help?

- Buffer management (today)
  - Which packets to drop?
  - When to signal congestion?
- Congestion signaling (today)
  - Drop, mark, send explicit messages
- Scheduling (Thursday)
  - Which flow’s packet to send next?

Active Queue Management

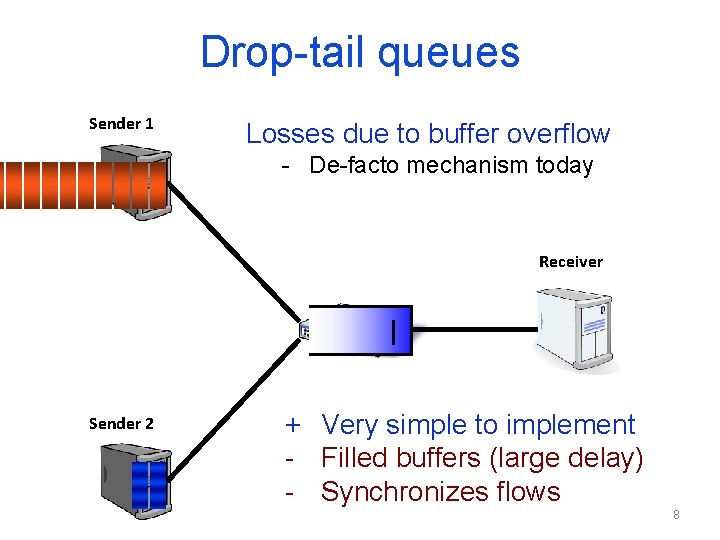

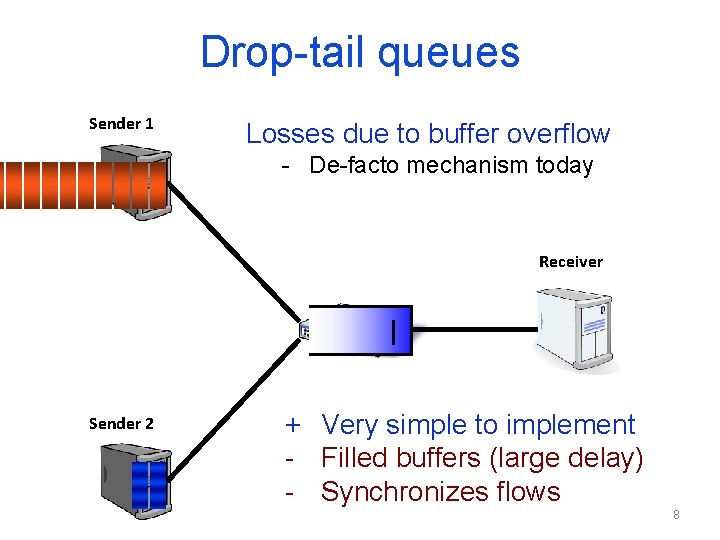

Drop-tail queues

Figure: Sender 1 and Sender 2 share a router queue toward the Receiver; losses are due to buffer overflow.

- De facto mechanism today
- Pro: very simple to implement
- Con: filled buffers (large delay)
- Con: synchronizes flows

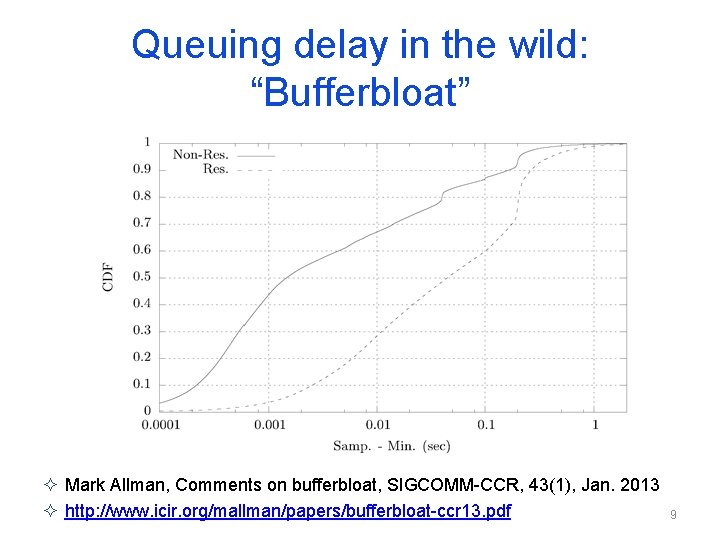

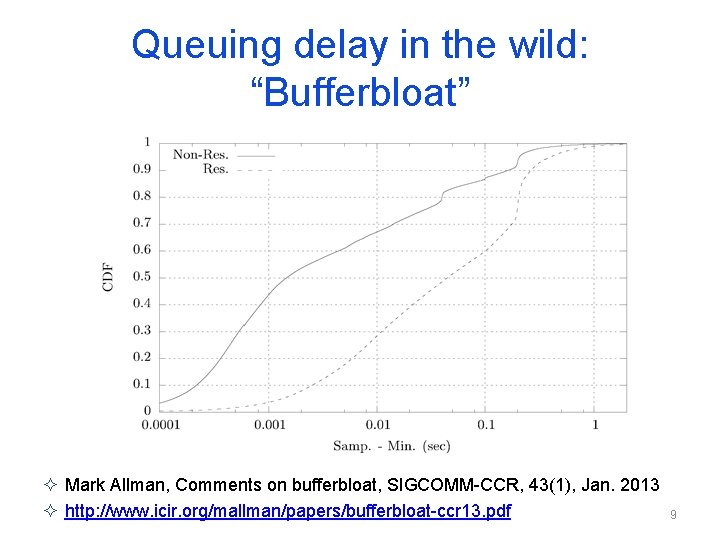

Queuing delay in the wild: “Bufferbloat”

Source: Mark Allman, Comments on bufferbloat, SIGCOMM-CCR, 43(1), Jan. 2013. http://www.icir.org/mallman/papers/bufferbloat-ccr13.pdf

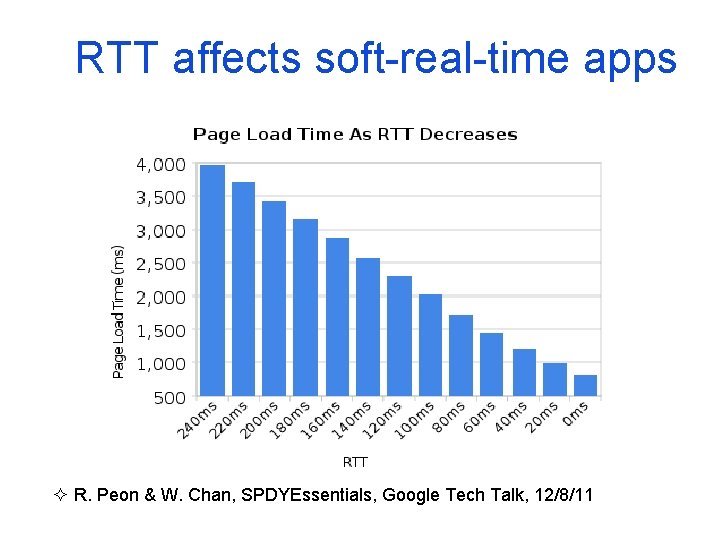

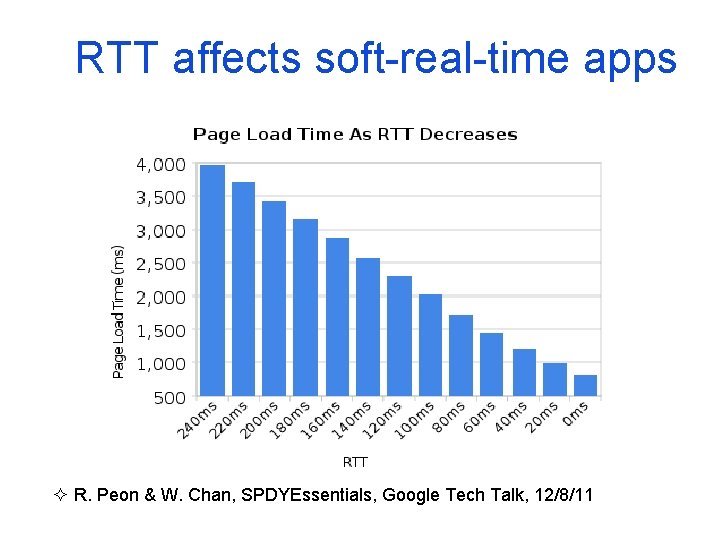

RTT affects soft-real-time apps

Source: R. Peon & W. Chan, SPDY Essentials, Google Tech Talk, 12/8/11

Random Early Detection (RED)

Proposed in 1993

Proactively drop packets probabilistically to
- Prevent onset of congestion by reacting early
- Remove synchronization between flows

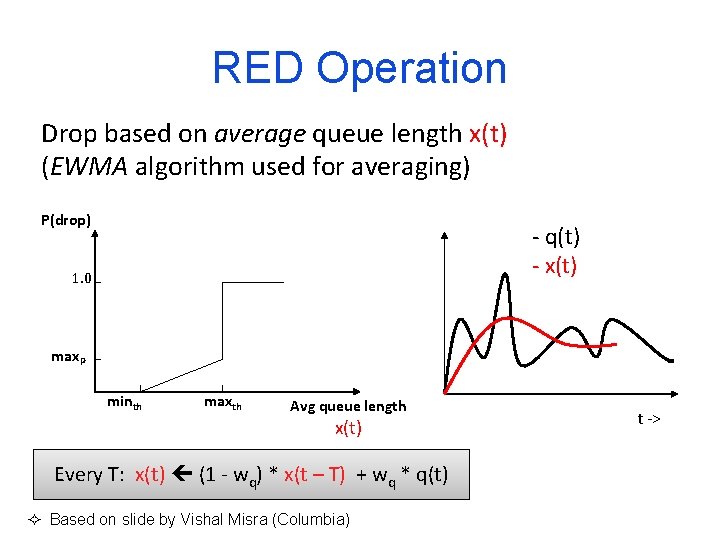

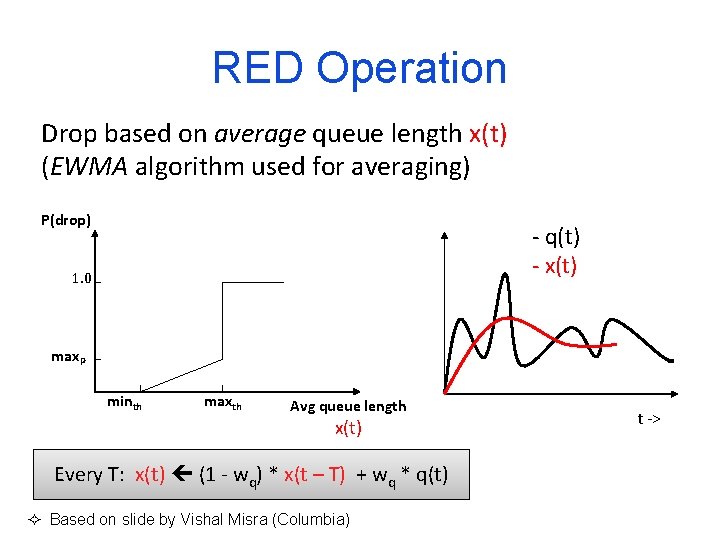

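RED Operation

Drop based on average queue length x(t) (EWMA algorithm used for averaging)

Figure (drop curve): P(drop) versus average queue length x(t); minth and maxth are marked on the x-axis, maxP and 1.0 on the y-axis.

Figure (time series): instantaneous queue q(t) and the smoothed, time-averaged queue x(t) versus t.

Every T: $x(t) \leftarrow (1 - w_q)\,x(t - T) + w_q\,q(t)$

Based on slide by Vishal Misra (Columbia)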
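The RED operation above can be sketched as follows. This is an illustrative sketch, not a router implementation: the class name `RedQueue`, its method names, and the choice to return probability 1.0 once the average reaches `max_th` (read off the drop curve on the slide) are assumptions.

```python
import random


class RedQueue:
    """Sketch of RED's averaging and drop decision (names are hypothetical)."""

    def __init__(self, min_th, max_th, max_p, w_q):
        self.min_th = min_th   # below this average, never drop
        self.max_th = max_th   # at or above this average, always drop
        self.max_p = max_p     # drop probability just below max_th
        self.w_q = w_q         # EWMA weight on the newest sample
        self.avg = 0.0         # x(t), the smoothed queue length

    def update_average(self, q):
        # x(t) <- (1 - w_q) * x(t - T) + w_q * q(t)
        self.avg = (1 - self.w_q) * self.avg + self.w_q * q
        return self.avg

    def drop_probability(self):
        if self.avg < self.min_th:
            return 0.0
        if self.avg >= self.max_th:
            return 1.0
        # Linear ramp from 0 at min_th up to max_p at max_th.
        frac = (self.avg - self.min_th) / (self.max_th - self.min_th)
        return self.max_p * frac

    def should_drop(self, q, rng=random):
        self.update_average(q)
        return rng.random() < self.drop_probability()


red = RedQueue(min_th=5, max_th=15, max_p=0.1, w_q=0.5)
red.update_average(20)          # one sample of instantaneous queue q(t)
p_drop = red.drop_probability()
```

A small `w_q` makes x(t) respond slowly to bursts; a large one makes it track q(t) closely.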

RED Problems

Many parameters
- minth, maxth, wq (EWMA averaging), …

Performance very sensitive to parameters
- Tuning is difficult; control theory provides a framework
- Poorly tuned system can be worse than drop-tail

Implemented in routers, but usually turned off

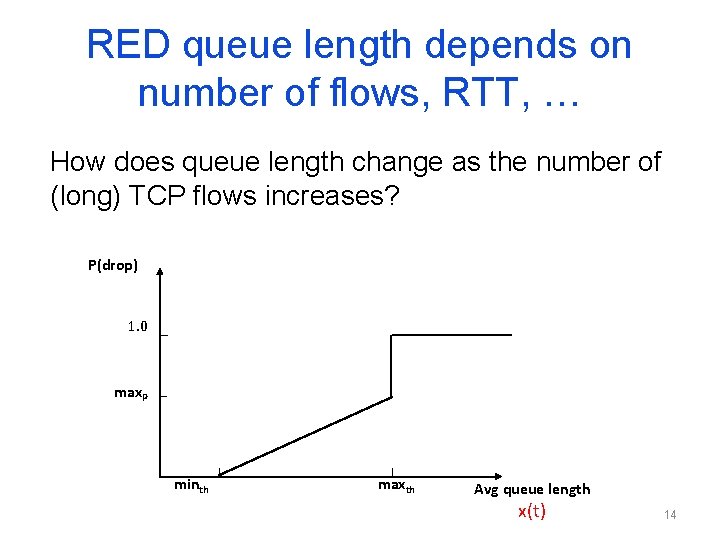

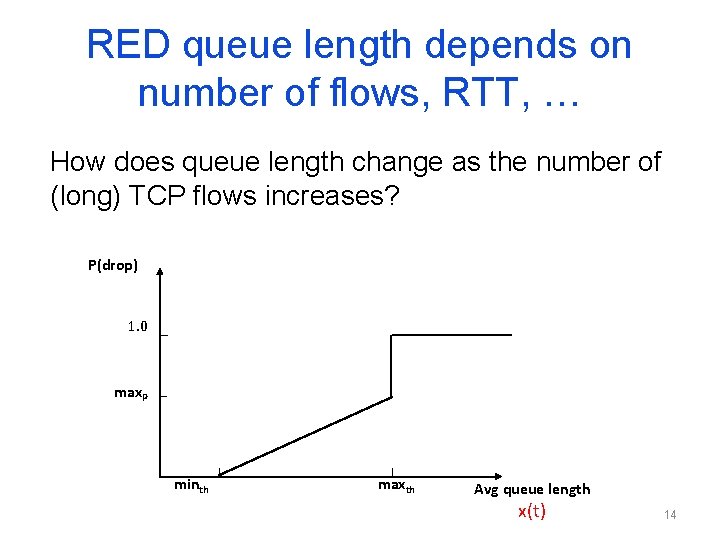

RED queue length depends on number of flows, RTT, …

How does queue length change as the number of (long) TCP flows increases?

Figure: P(drop) versus average queue length x(t); minth and maxth are marked on the x-axis, maxP and 1.0 on the y-axis.
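One way to reason about this question is to assume the standard square-root TCP throughput model (an assumption, not stated on the slide) and N identical long flows sharing a link of capacity C (packets per second), treating RTT as fixed:

$$
\frac{C}{N} \approx \frac{1}{RTT}\sqrt{\frac{3}{2p}}
\quad\Longrightarrow\quad
p \approx \frac{3}{2}\left(\frac{N}{C \cdot RTT}\right)^{2}
$$

In RED's linear region the drop probability is tied to the average queue:

$$
p = max_P \cdot \frac{x - min_{th}}{max_{th} - min_{th}}
\quad\Longrightarrow\quad
x = min_{th} + \frac{p}{max_P}\,(max_{th} - min_{th})
$$

Under these assumptions, more flows require a larger p to keep the link at capacity, and a larger p means a larger average queue x: the operating point moves to the right along the drop curve as N grows.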

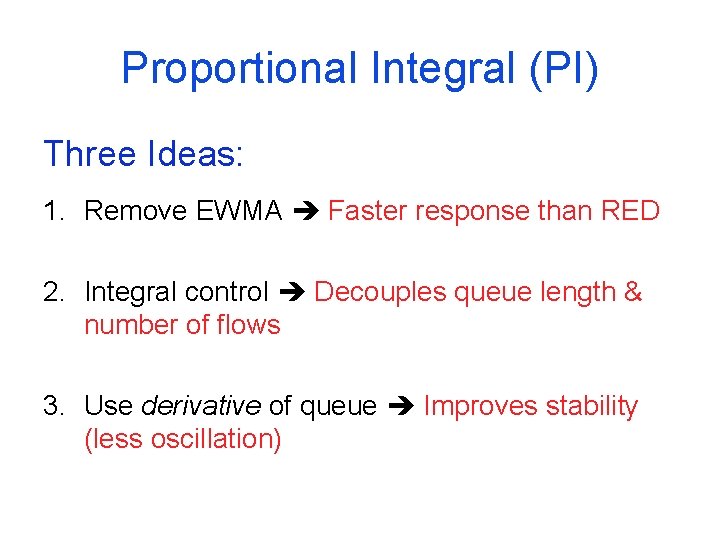

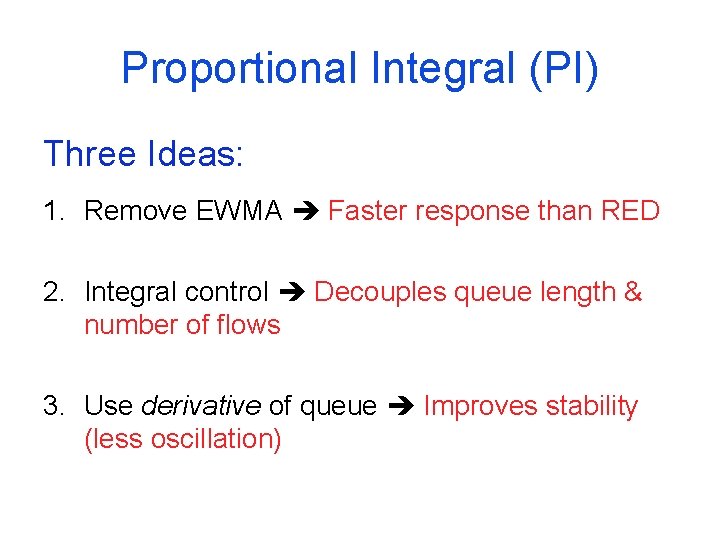

Proportional Integral (PI)

Three ideas:
1. Remove EWMA: faster response than RED
2. Integral control: decouples queue length and number of flows
3. Use derivative of queue: improves stability (less oscillation)

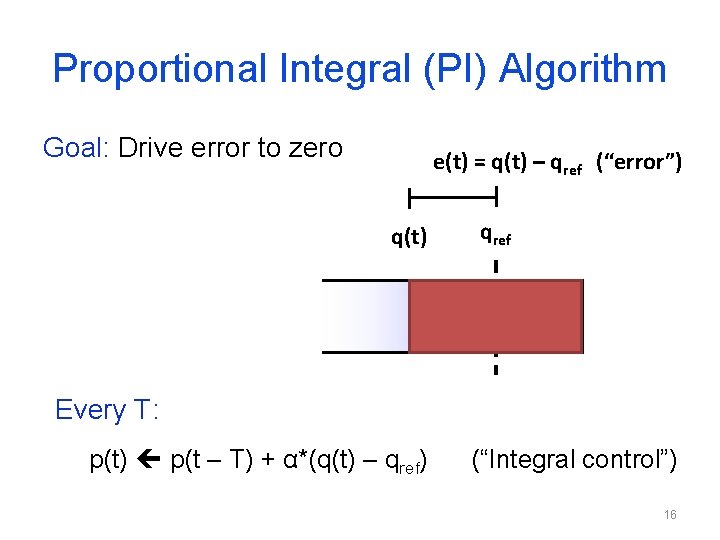

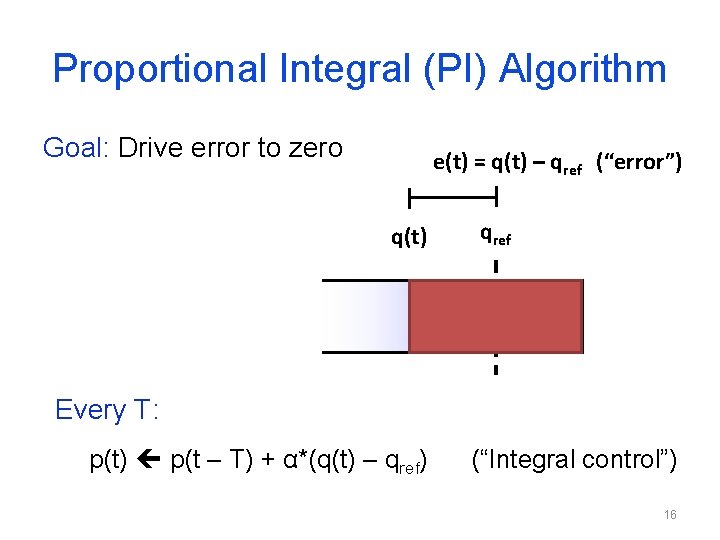

Proportional Integral (PI) Algorithm

Goal: drive the error to zero, where $e(t) = q(t) - q_{ref}$ (“error”)

Figure: q(t) versus t, with the reference level qref marked.

Every T: $p(t) \leftarrow p(t - T) + \alpha\,(q(t) - q_{ref})$ (“integral control”)

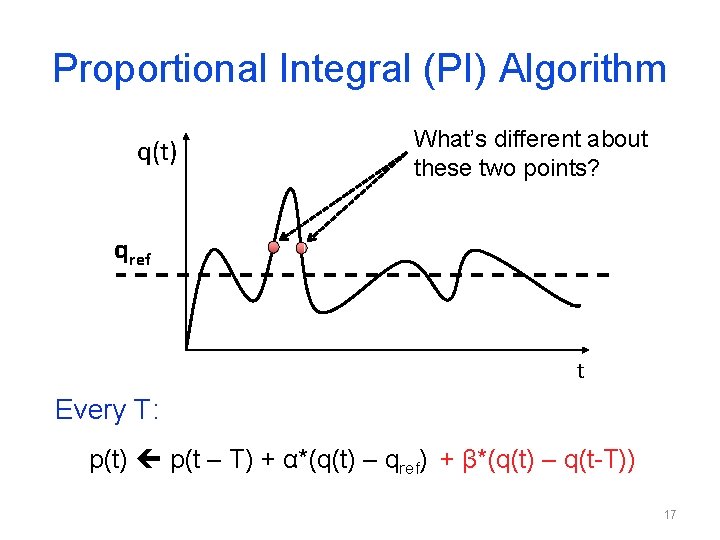

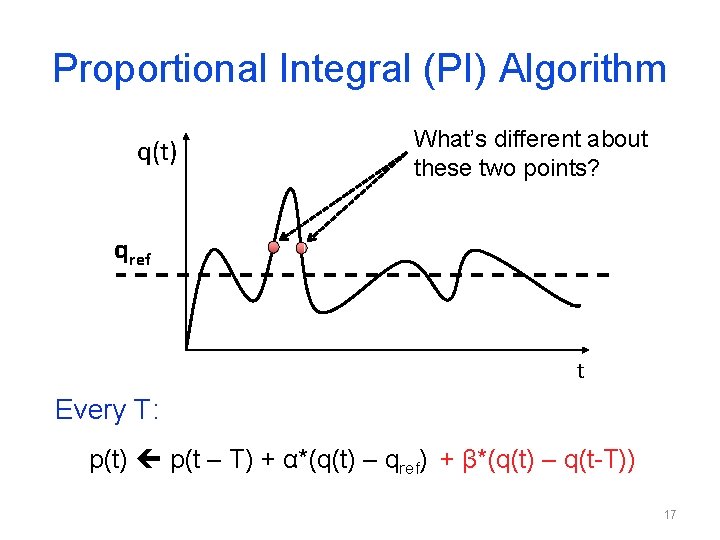

Proportional Integral (PI) Algorithm

Figure: q(t) versus t with the reference level qref and two highlighted points. What’s different about these two points?

Every T: $p(t) \leftarrow p(t - T) + \alpha\,(q(t) - q_{ref}) + \beta\,(q(t) - q(t - T))$
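A minimal sketch of the PI update rule above. The class name `PIController`, the initial values, and the clamp of p to [0, 1] are assumptions added so the result can be used as a drop probability; the gains in the example are arbitrary.

```python
class PIController:
    """Sketch of the PI drop-probability update (names are hypothetical)."""

    def __init__(self, q_ref, alpha, beta):
        self.q_ref = q_ref    # target queue length
        self.alpha = alpha    # gain on the error q(t) - q_ref
        self.beta = beta      # gain on the queue trend q(t) - q(t - T)
        self.p = 0.0          # current drop probability
        self.prev_q = 0.0     # q(t - T)

    def update(self, q):
        # p(t) <- p(t - T) + alpha*(q(t) - q_ref) + beta*(q(t) - q(t - T))
        self.p += self.alpha * (q - self.q_ref) + self.beta * (q - self.prev_q)
        # Assumption: clamp so p stays a valid probability.
        self.p = min(1.0, max(0.0, self.p))
        self.prev_q = q
        return self.p


pi = PIController(q_ref=10, alpha=0.001, beta=0.01)
p_rising = pi.update(20)    # queue above target and rising
p_flat = pi.update(20)      # same queue length, no longer rising
p_falling = pi.update(10)   # queue back at target and falling
```

The first two calls see the same q(t) but add different amounts to p, because the trend term differs. That is one reading of the question the slide poses about the two points.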

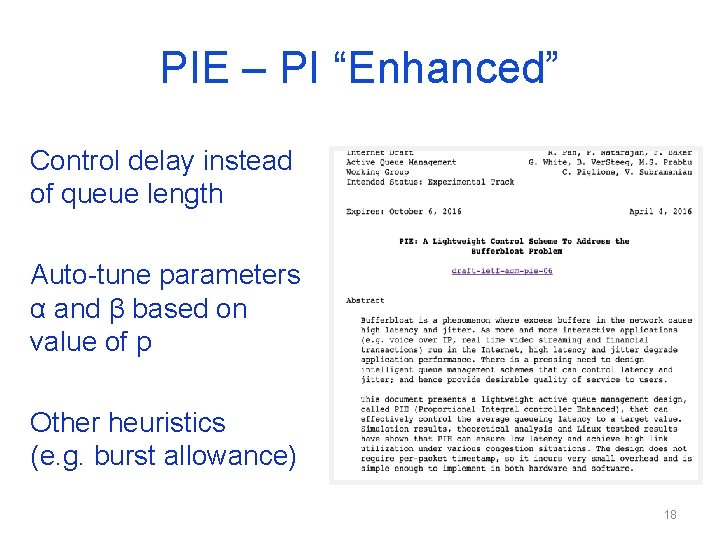

PIE: PI “Enhanced”

- Control delay instead of queue length
- Auto-tune parameters α and β based on the value of p
- Other heuristics (e.g., burst allowance)
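A rough sketch of the first point, controlling delay rather than queue length. It assumes delay is estimated as queue size divided by drain rate and reuses the PI update form from the previous slides. The function names, parameter names, and example numbers are hypothetical, and the auto-tuning and burst-allowance heuristics are not modeled.

```python
def queuing_delay(queue_bytes, drain_rate_bytes_per_s):
    """Estimate queuing delay in seconds (assumption: delay = backlog / drain rate)."""
    return queue_bytes / drain_rate_bytes_per_s


def pie_style_update(p, delay, prev_delay, delay_ref, alpha, beta):
    """Apply a PI-style update to p, using delay in place of queue length."""
    p += alpha * (delay - delay_ref) + beta * (delay - prev_delay)
    # Assumption: clamp so p stays a valid probability.
    return min(1.0, max(0.0, p))


# 125 kB backlog on a 10 Mbps (1.25e6 bytes/s) link.
delay = queuing_delay(125_000, 1_250_000)
p = pie_style_update(0.0, delay, prev_delay=delay, delay_ref=0.02,
                     alpha=0.125, beta=1.25)
```

Working in units of delay means the same target applies regardless of link speed, whereas a queue-length target would need to change with the drain rate.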

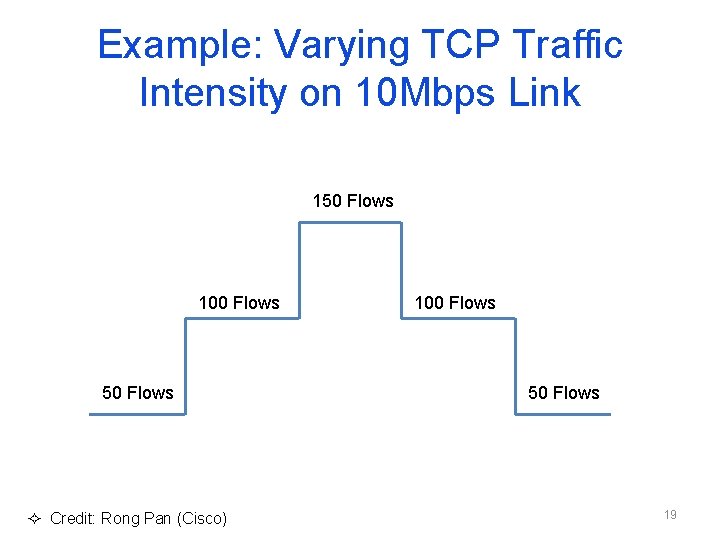

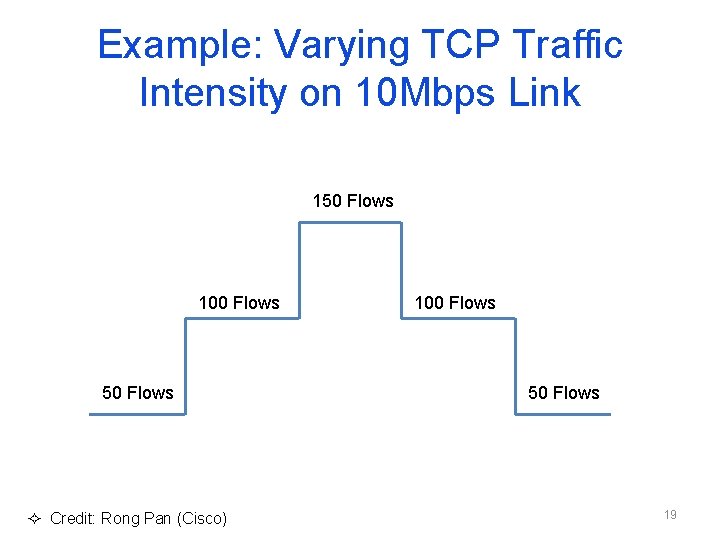

Example: Varying TCP Traffic Intensity on 10 Mbps Link

Figure: plot with regions labeled 50, 100, and 150 flows. Credit: Rong Pan (Cisco)

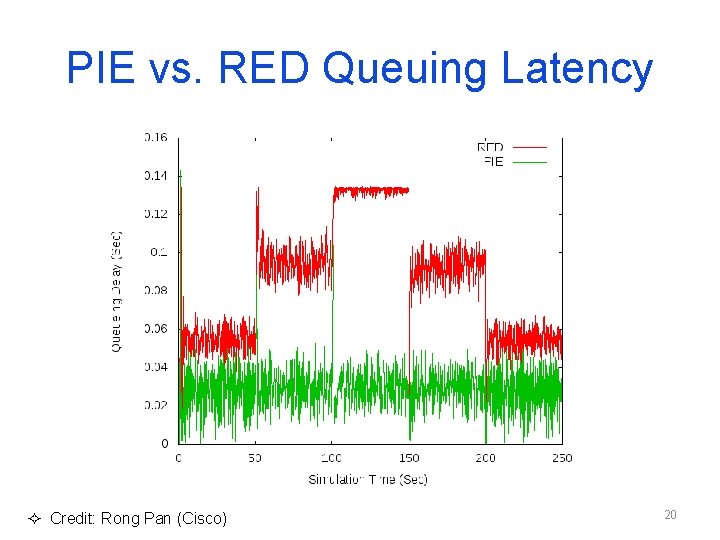

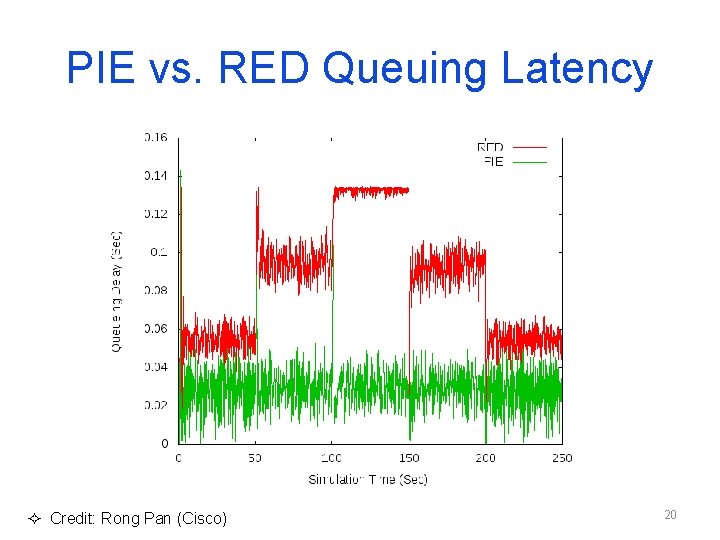

PIE vs. RED Queuing Latency

Credit: Rong Pan (Cisco)

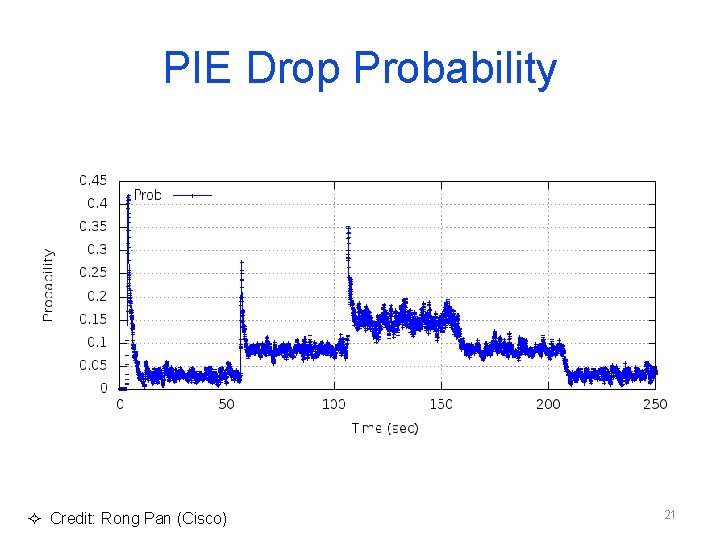

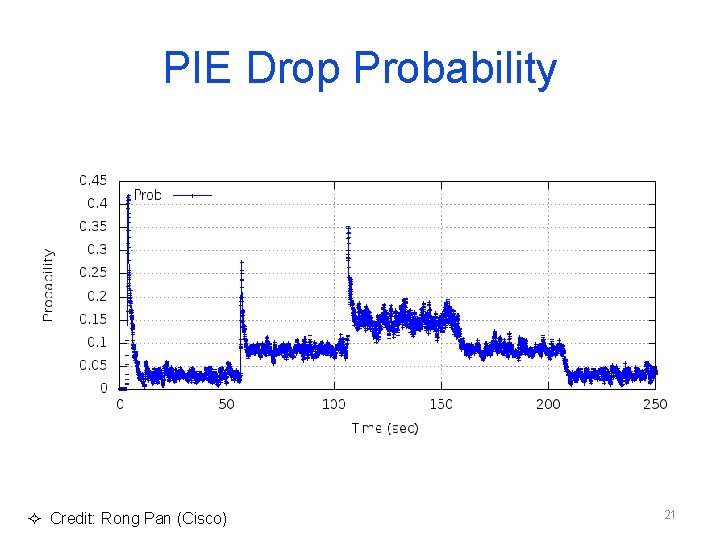

PIE Drop Probability

Credit: Rong Pan (Cisco)

Explicit Congestion Control

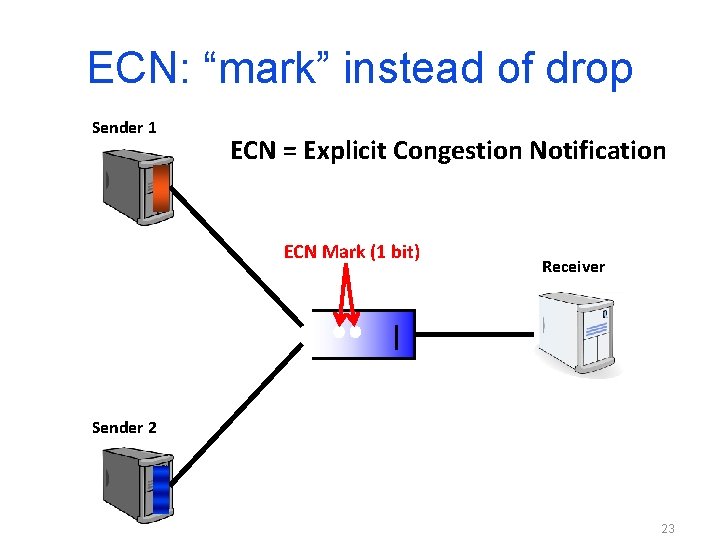

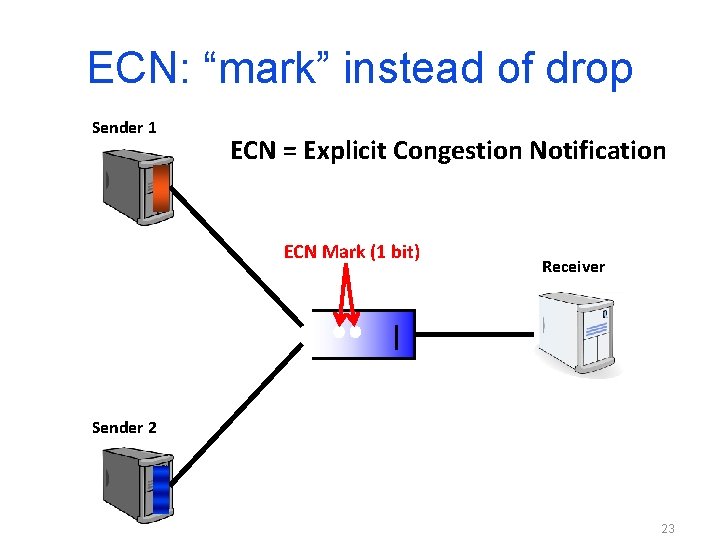

ECN: “mark” instead of drop

ECN = Explicit Congestion Notification

Figure: Sender 1 and Sender 2 send through a router to the Receiver; packets carry an ECN mark (1 bit) instead of being dropped.

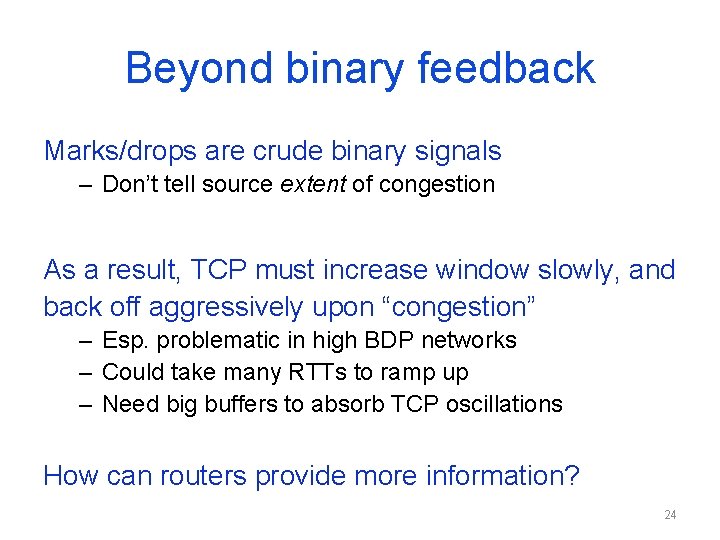

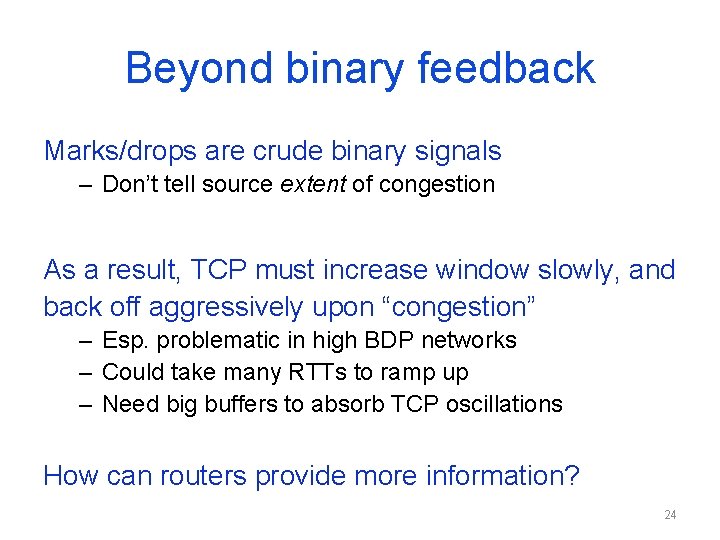

Beyond binary feedback

Marks/drops are crude binary signals
- Don’t tell the source the extent of congestion

As a result, TCP must increase its window slowly and back off aggressively upon “congestion”
- Especially problematic in high-BDP networks
- Could take many RTTs to ramp up
- Need big buffers to absorb TCP oscillations

How can routers provide more information?

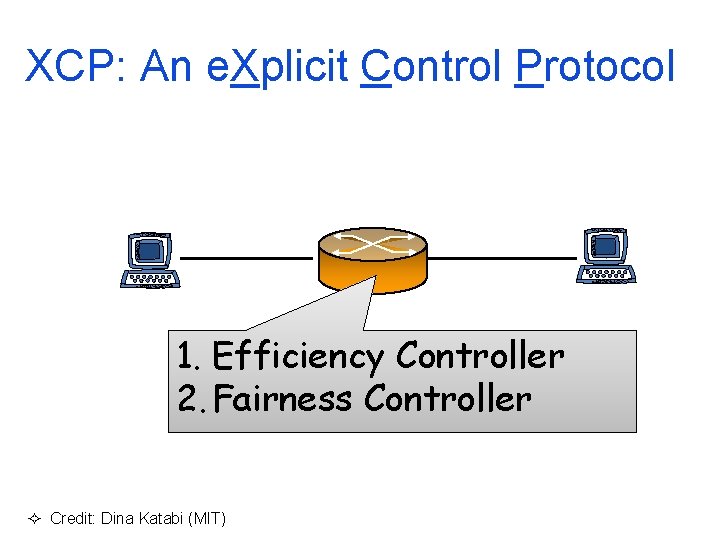

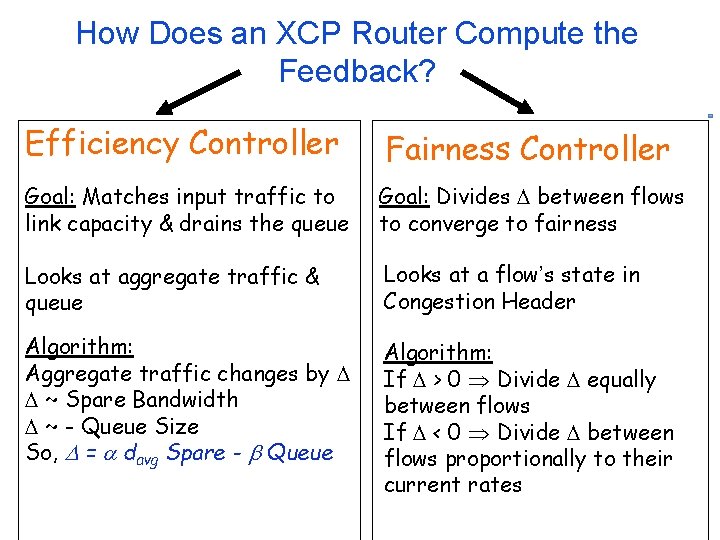

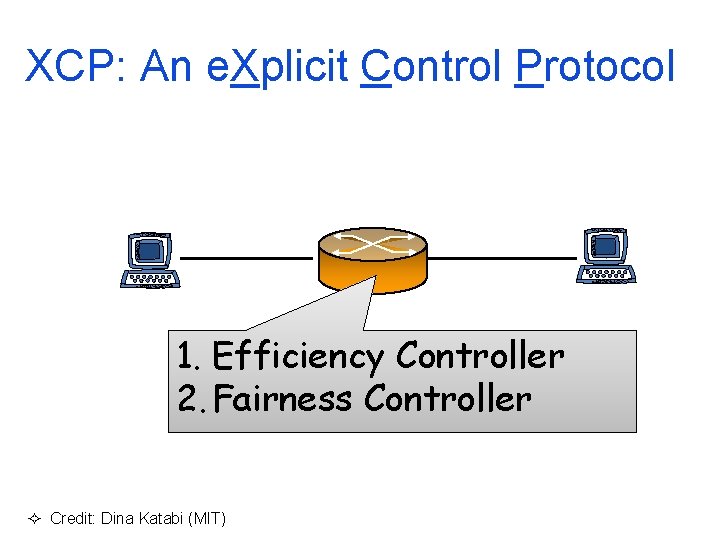

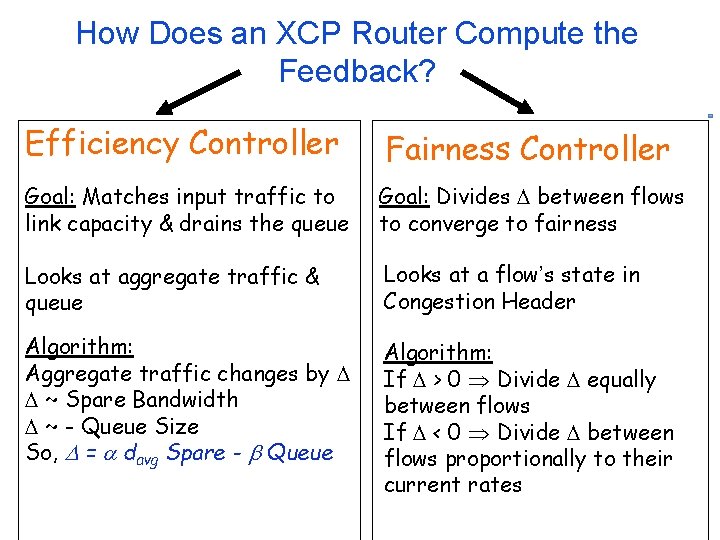

XCP: An eXplicit Control Protocol

1. Efficiency Controller
2. Fairness Controller

Credit: Dina Katabi (MIT)

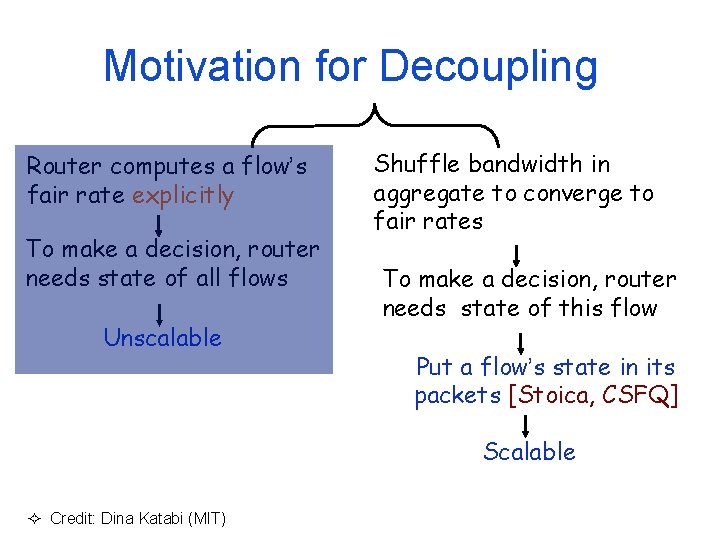

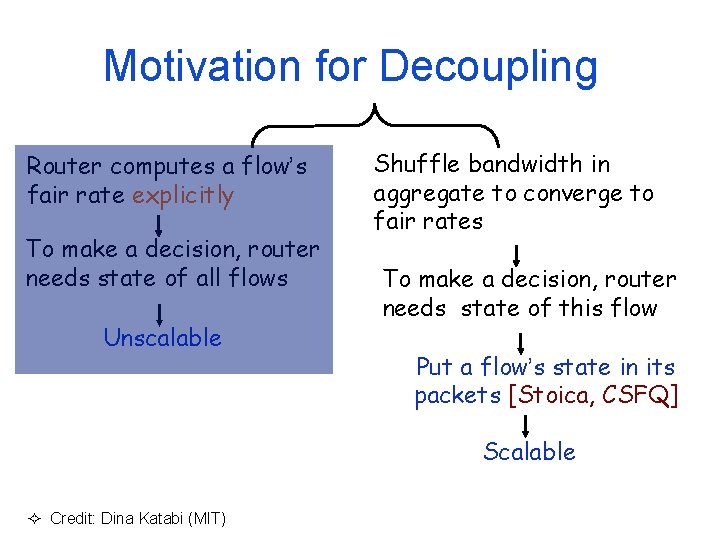

Motivation for Decoupling

Option 1: router computes a flow’s fair rate explicitly
- To make a decision, the router needs the state of all flows
- Unscalable

Option 2: shuffle bandwidth in aggregate to converge to fair rates
- To make a decision, the router needs the state of this flow only
- Put a flow’s state in its packets [Stoica, CSFQ]
- Scalable

Credit: Dina Katabi (MIT)

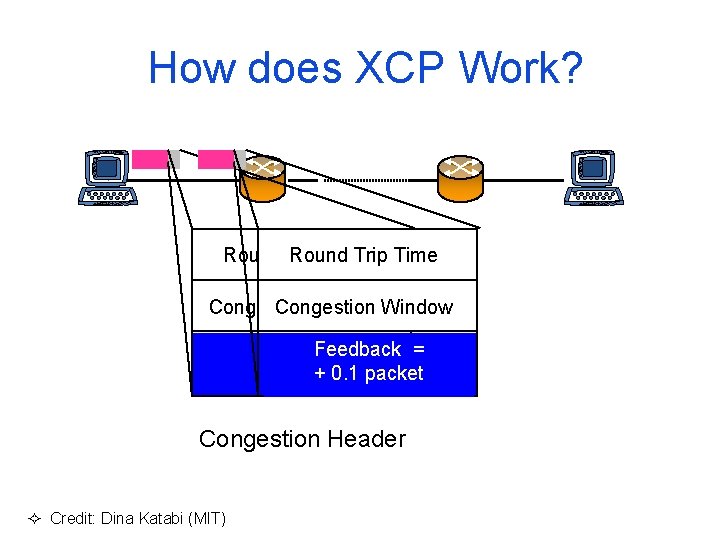

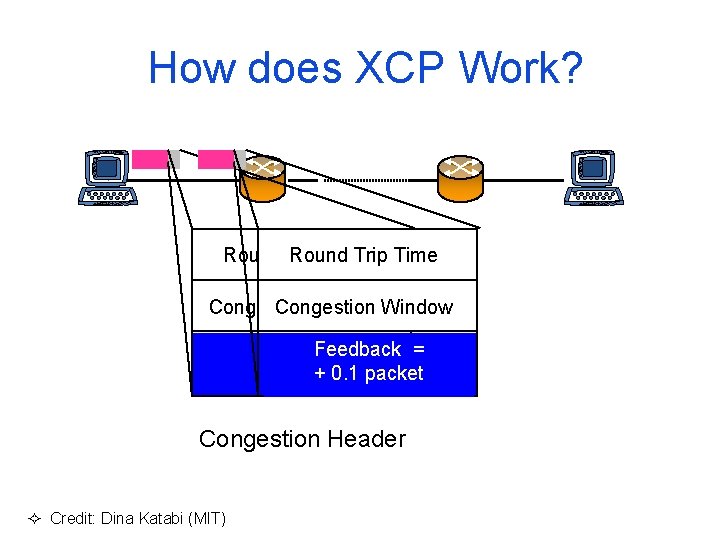

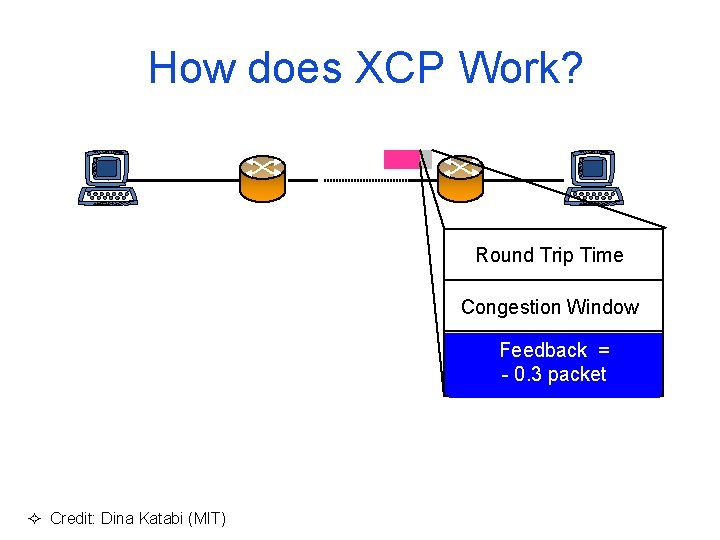

How does XCP Work?

Figure: each packet carries a congestion header with three fields: Round Trip Time, Congestion Window, and Feedback. In the example, Feedback = +0.1 packet.

Credit: Dina Katabi (MIT)

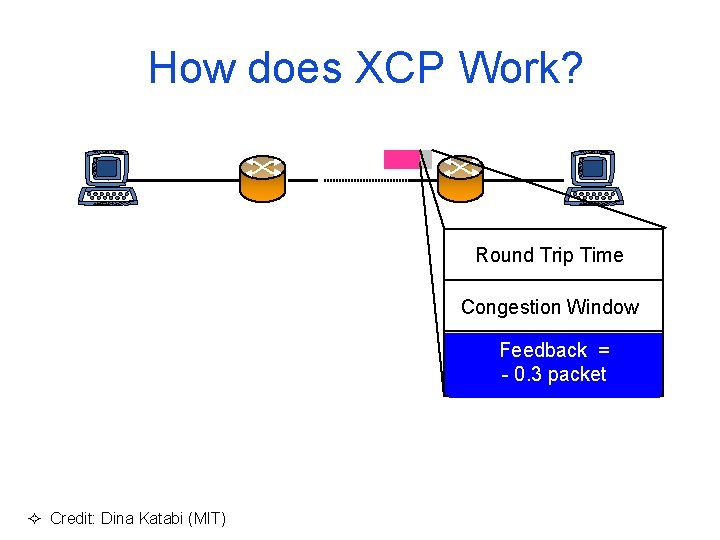

How does XCP Work?

Figure: the congestion header (Round Trip Time, Congestion Window, Feedback) in transit; the Feedback field is shown with two values, +0.1 packet and -0.3 packet.

Credit: Dina Katabi (MIT)

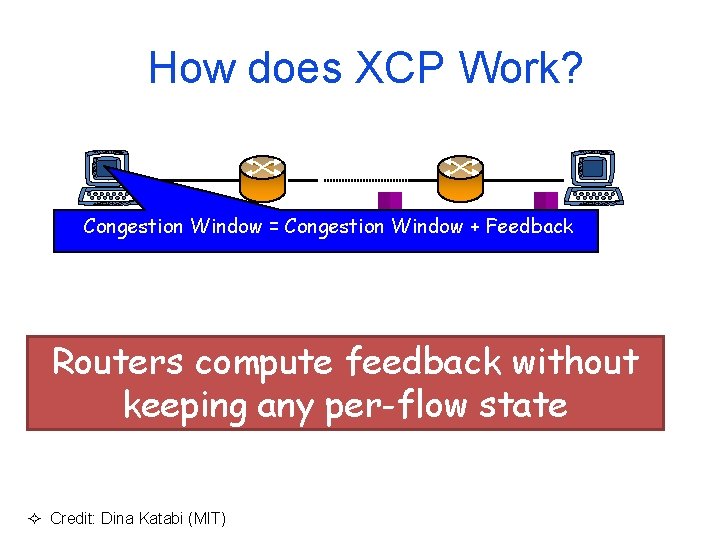

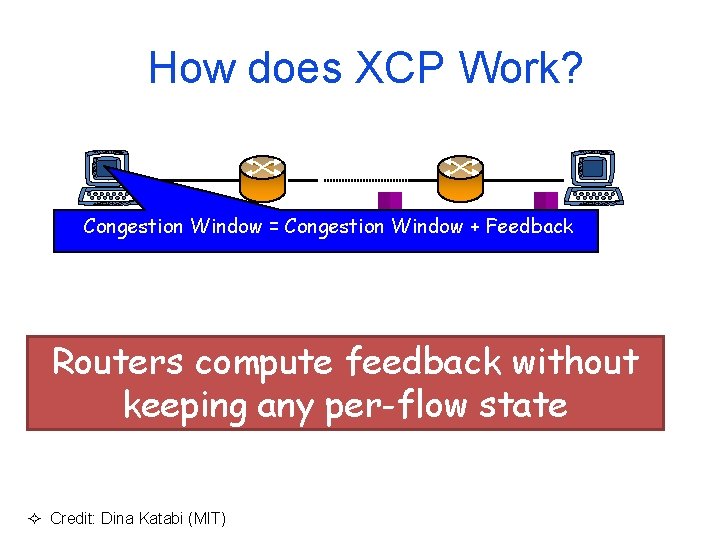

How does XCP Work?

Congestion Window = Congestion Window + Feedback

Routers compute feedback without keeping any per-flow state

Credit: Dina Katabi (MIT)
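The header-and-feedback exchange can be sketched as below. The `CongestionHeader` fields follow the three fields named on the slides; the function names, the rule that a router only ever lowers the feedback already in the header, and the one-packet floor on the window are assumptions of this sketch.

```python
from dataclasses import dataclass


@dataclass
class CongestionHeader:
    rtt: float        # sender's round trip time estimate (seconds)
    cwnd: float       # sender's congestion window (packets)
    feedback: float   # requested or allowed window change (packets)


def router_update(header, router_feedback):
    """Assumption: a router overwrites feedback only with a smaller value,
    so the most congested router on the path decides."""
    header.feedback = min(header.feedback, router_feedback)
    return header


def sender_on_ack(cwnd, header):
    """Congestion Window = Congestion Window + Feedback (floored at 1 packet,
    an assumption of this sketch)."""
    return max(1.0, cwnd + header.feedback)


header = CongestionHeader(rtt=0.1, cwnd=10.0, feedback=0.1)
router_update(header, -0.3)            # a congested router lowers the feedback
new_cwnd = sender_on_ack(10.0, header)
```

The router reads and writes only the header it is handed, which is how feedback can be computed without storing per-flow state.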

How Does an XCP Router Compute the Feedback?

Efficiency Controller
- Goal: match input traffic to link capacity and drain the queue
- Looks at aggregate traffic and queue
- Algorithm: aggregate traffic changes by Δ, where Δ ~ spare bandwidth and Δ ~ -(queue size). So, $\Delta = \alpha\, d_{avg}\, \mathrm{Spare} - \beta\, \mathrm{Queue}$

Fairness Controller
- Goal: divide Δ between flows to converge to fairness
- Looks at a flow’s state in the congestion header
- Algorithm: if Δ > 0, divide Δ equally between flows; if Δ < 0, divide Δ between flows proportionally to their current rates
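A sketch of the two controllers, under stated assumptions: `delta` names the aggregate feedback, `alpha` and `beta` name the two gains, and the example values are illustrative. The fairness split takes an explicit list of flow rates purely for illustration; an XCP router works from the per-packet congestion header and keeps no such list.

```python
def aggregate_feedback(alpha, beta, avg_rtt, spare_bw, queue):
    """Efficiency controller: delta = alpha * d_avg * Spare - beta * Queue.

    Assumed units: avg_rtt in seconds, spare_bw in packets/second,
    queue in packets, so delta is in packets.
    """
    return alpha * avg_rtt * spare_bw - beta * queue


def split_feedback(delta, rates):
    """Fairness controller: share `delta` across flows (illustrative only)."""
    if delta >= 0:
        # Positive feedback: every flow gets an equal share.
        return [delta / len(rates)] * len(rates)
    # Negative feedback: each flow's share is proportional to its rate.
    total = sum(rates)
    return [delta * r / total for r in rates]


delta = aggregate_feedback(alpha=0.4, beta=0.226, avg_rtt=0.1,
                           spare_bw=100, queue=10)
increase = split_feedback(3.0, [10, 20, 30])
decrease = split_feedback(-6.0, [10, 20, 30])
```

Equal increases and rate-proportional decreases are the same additive-increase, multiplicative-decrease pattern that drives flows toward equal shares.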

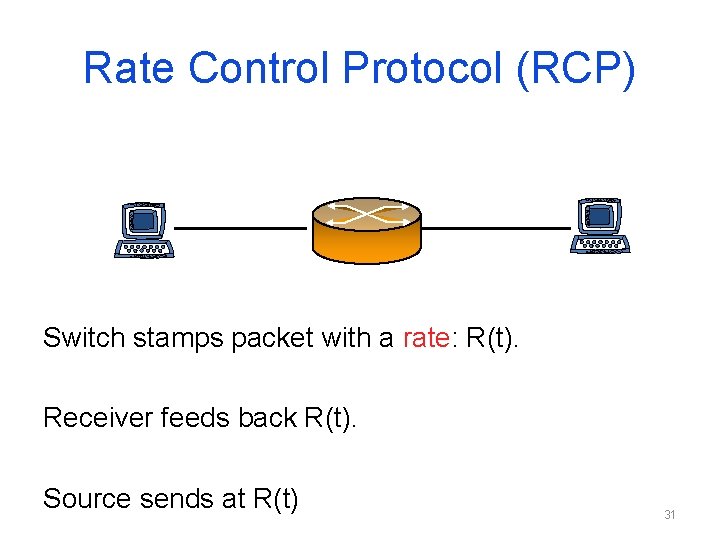

Rate Control Protocol (RCP)

- Switch stamps packet with a rate: R(t)
- Receiver feeds back R(t)
- Source sends at R(t)

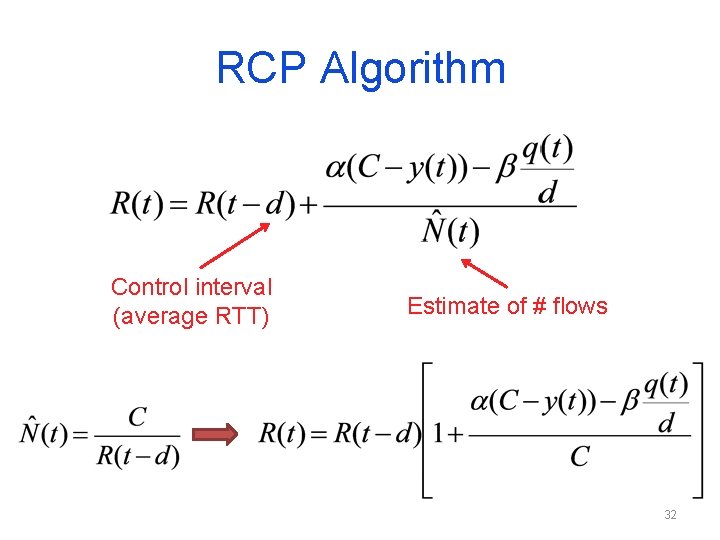

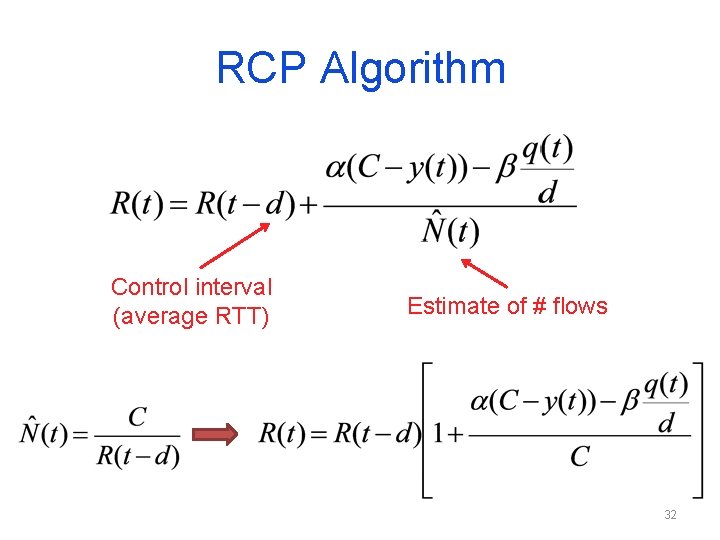

RCP Algorithm

Figure: the RCP rate-update equation, with annotations marking the control interval (average RTT) and the estimate of the number of flows.
